
Introducing MLCommons

By Gregg Barrett

MLCommons was officially launched on December 3rd, 2020. MLPerf, which has been absorbed into MLCommons, successfully collected input from dozens of companies and academic institutions to create the industry-standard benchmarks for machine learning.

Where MLPerf concerned itself with the development and maintenance of machine learning benchmarks for training and inference, MLCommons has a much broader objective and aims to answer the needs of the nascent machine learning industry through open and collaborative engineering in three areas: benchmarking, datasets and best practices.

Datasets

Building machine learning systems requires data, which is not always easy to come by. In addition, an apples-to-apples comparison of one model against another requires using the same test data. The problem, however, is that most public datasets are small, legally restricted, not redistributable and not diverse. To foster machine learning innovation, MLCommons is therefore working to unite disparate companies and organisations in creating large, diverse and redistributable datasets for machine learning training and testing, released under a Creative Commons or similar license.

As an example, ImageNet is commonly used in computer vision, but there is no similar large-scale dataset for speech, where there might be a need to compare speech-to-text accuracy. The first effort in this regard is the People’s Speech dataset, which is approximately 100 times larger than earlier open alternatives and aims to be more diverse. Compiling such a dataset is not an easy task: aside from data engineering issues, there are licensing matters to deal with, and the resulting dataset needs to be state of the art.

The People’s Speech dataset (SOURCE: MLCOMMONS)

For the People’s Speech dataset, 86,000 hours of speech and labels were collected from diverse sources, although primarily in English. Much of the collected data was unaligned, meaning that the audio and the text were both present but not matched to each other. Forced alignment was used to match them: a weak speech recognition system matches each segment of speech to the corresponding text. On a large CPU cluster, it costs around 1,000 dollars to align 100,000 hours of audio, or roughly one cent per hour. On an accelerator cluster, the cost of forced alignment is negligible. The dataset will be released under a Creative Commons (CC-BY) license, allowing for commercial use.
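As a rough illustration of the matching step, the sketch below lines up a known transcript with the timestamped output of a recognizer. It is a simplification and not the pipeline used for the People’s Speech dataset: real forced alignment works at the acoustic level, whereas this example only compares words using Python's standard `difflib` module. The function name `align_transcript`, the sample sentence and the timestamps are all made up for illustration.

```python
from difflib import SequenceMatcher


def align_transcript(reference_words, recognized):
    """Copy timestamps from noisy recognizer output onto the reference text.

    reference_words: list of words from the known transcript.
    recognized: list of (word, start_sec, end_sec) tuples from a weak recognizer.
    Returns (reference_word, start_sec, end_sec) for every word both sides agree on.
    """
    hypothesis_words = [word for word, _, _ in recognized]
    matcher = SequenceMatcher(a=reference_words, b=hypothesis_words, autojunk=False)

    aligned = []
    for block in matcher.get_matching_blocks():
        # Each block is a run of identical words in both sequences.
        for offset in range(block.size):
            _, start, end = recognized[block.b + offset]
            aligned.append((reference_words[block.a + offset], start, end))
    return aligned


# Illustrative data only: the recognizer misheard "brown" and the second "the".
reference = "the quick brown fox jumps over the lazy dog".split()
recognized = [
    ("the", 0.0, 0.2), ("quick", 0.2, 0.5), ("brow", 0.5, 0.8),
    ("fox", 0.8, 1.1), ("jumps", 1.1, 1.5), ("over", 1.5, 1.8),
    ("a", 1.8, 1.9), ("lazy", 1.9, 2.3), ("dog", 2.3, 2.6),
]
aligned = align_transcript(reference, recognized)
for word, start, end in aligned:
    print(f"{word:>6}  {start:.1f}s - {end:.1f}s")
```

Words the recognizer got wrong are simply left without timestamps here. A production system would need a way to fill in or discard those gaps.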

A few reference TPU models trained on the dataset will also be released. We believe that the People’s Speech dataset will accelerate innovation in hardware-algorithm co-design and help MLPerf benchmarks reflect current production systems while incurring a modest cost. The 86,000 hours are the first iteration of the dataset, with more to come: later versions are due to branch out into more languages and accents.

Best Practices

New models emerge on a daily basis, but sharing them remains an ad hoc process. Often, when a researcher wants to use a model produced elsewhere, they must waste hours or days on a frustrating attempt to get the model to work. Similarly, an ML engineer may struggle to port and tune models between development and production environments, which can differ significantly from each other. This challenge is magnified when working with a set of models, such as when reproducing related work, employing a performance benchmark suite like MLPerf, or developing model management infrastructure. Reproducibility, transparency and consistent performance measurement are cornerstones of good science and engineering.

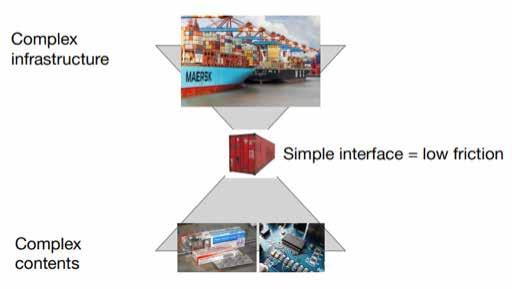

The first effort in this regard is MLCube, a set of common conventions that enables open and frictionless sharing of ML models across different infrastructure and between researchers and developers around the globe. MLCube can be viewed as a consistent interface to machine learning models in containers like Docker. Models published with the MLCube interface can be run on local machines, on a variety of major clouds, or in Kubernetes clusters -- all using the same code.
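To make the idea of a consistent interface concrete, here is a minimal sketch of describing a containerised model task once and turning that description into a command. It is not MLCube's actual API or file format: `ContainerTask`, `docker_command`, the image name and the paths are hypothetical, and only the standard `docker run`, `--rm` and `-v` options are assumed.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContainerTask:
    """Hypothetical description of one task exposed by a model container."""
    image: str                    # container image holding the model code
    task: str                     # task name passed to the container, e.g. "train"
    mounts: Dict[str, str] = field(default_factory=dict)  # host path -> container path


def docker_command(task: ContainerTask) -> List[str]:
    """Build a `docker run` command line for the task (one possible runner)."""
    command = ["docker", "run", "--rm"]
    for host_path, container_path in task.mounts.items():
        command += ["-v", f"{host_path}:{container_path}"]
    command += [task.image, task.task]
    return command


# Illustrative values only; no such image is implied to exist.
train = ContainerTask(
    image="example/mnist-model:latest",
    task="train",
    mounts={"/tmp/data": "/data", "/tmp/output": "/output"},
)
print(" ".join(docker_command(train)))
```

Because the task description is kept separate from the runner, a different runner (for a cloud service or a Kubernetes cluster, say) could consume the same `ContainerTask` without changes to the model code.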

MLCube is currently a pre-alpha project with an active development team. We invite experimentation and feedback, code contributions, and partnerships with ML infrastructure efforts.

MLCube is a shipping container for ML models

Conclusion

Machine learning is completely unlike conventional software -- developers train an application rather than program it, and this requires a whole new set of techniques analogous to the breakthroughs in precision measurement, raw materials, and manufacturing that drove the industrial revolution. The launch of MLCommons in partnership with its founding members will promote global collaboration to build and share best practices across industry and academia, software and hardware, from nascent startups to the largest companies. The People’s Speech dataset and MLCube are the first in a very promising pipeline, more of which will be unveiled during the coming months.

Gregg Barrett serves on the MLCommons Research and Science working groups, is the CEO of Africa’s AI initiative, Cirrus, and a supporter of ELLIS and CLAIRE.