
Research


University’s Student-Built Satellites Blast into Space after Years of Delays


Better three years late than never. After a long-delayed launch, work built by Boston University SATellite (BUSAT) program students has made it into space.

On June 13, a private rocket blasted off from New Zealand’s Mahia Peninsula carrying mini-sensors built by BUSAT students. The sensors will study electric currents in Earth’s magnetic field that can cripple communications and power here on the ground. The launch had been delayed several times, including a planned March liftoff that was halted by the COVID-19 pandemic, from which New Zealand has recently emerged, announcing that there are no new cases.

“Unfortunately, we weren’t able to bring any of the team to the launch site,” says program advisor and Assistant Professor Brian Walsh (ME). “We watched eagerly from home, where many of us gathered on a Zoom meeting.”

Being absent from the liftoff site didn’t diminish the day’s joy for those who had waited so long for the moment. “To say that I felt ecstatic and proud . . . would be an understatement,” says Phillip Teng (ENG’19), who worked for two and a half years on ANDESITE, the sensor network, adding that the launch was “the culmination of all the design choices and testing methodologies we’ve implemented along the way.”

Brian Walsh’s expertise in small-satellite building was essential to ANDESITE, a BU student project that has successfully launched its first satellite into space. Joshua Semeter, right, collaborated with Walsh on ANDESITE.

There is a bittersweet note to the takeoff: it came just weeks after the death of Professor Emeritus Ted Fritz (Astronomy), who, according to Walsh, “founded and really fostered” BUSAT.

Walsh and Professor Joshua Semeter (ECE) joined forces when Walsh came to BU in 2015 with research expertise in small satellites and space technology; Semeter developed BUSAT’s science mission in the program’s early years and was already working on what became the ANDESITE launch.

BUSAT improved the satellites’ hardware and software in the three years since the first aborted launch, continuing to test their resilience to shaking and temperature extremes.

The rocket carrying mini-sensors developed by BU students blasted off from New Zealand on June 13.

“A lot of the research I’m involved in is building types of things like this—small satellites with sensors to measure things in the space environment—so it was a very natural collaboration” for him and his students, Walsh says.

The rocket will eject a canister that will, in turn, spit out the ANDESITE network of eight wireless sensors. The sensors, able to complete an Earth orbit in 90 minutes, will measure changes in electric currents flowing in and out of the Earth’s upper atmosphere along its magnetic field over the course of a few weeks, collecting data for their BU creators to analyze.

Semeter explains that ultimately, they’re “trying to understand this huge source of heat that impinges the outer atmosphere and . . . causes the atmosphere to become ionized, and therefore interferes with GPS signals and [radio] communications.”

“We get a brief period of excitement at launch,” he says, “and then it’s off to the ground station to start receiving science data as it comes in from the spacecraft.” — RICH BARLOW

Robot Reinforcement

A NEW MACHINE-LEARNING FRAMEWORK COULD BE USED TO COMPLETE HIGH-RISK, COMPLEX TASKS

While machine learning can identify potentially cancerous spots on mammograms or understand a spoken command to play music, researchers don’t fully understand exactly how machine-learning algorithms, well, learn. That blind spot makes it difficult to apply the technique to complex, high-risk tasks such as autonomous driving, where safety is a concern.

In a step forward, a team of researchers led by Professor Calin Belta (ME, SE, ECE) has developed a new approach to teaching a robot, or a team of robots, a high-risk, complex task—a framework that could be applied to a host of them.

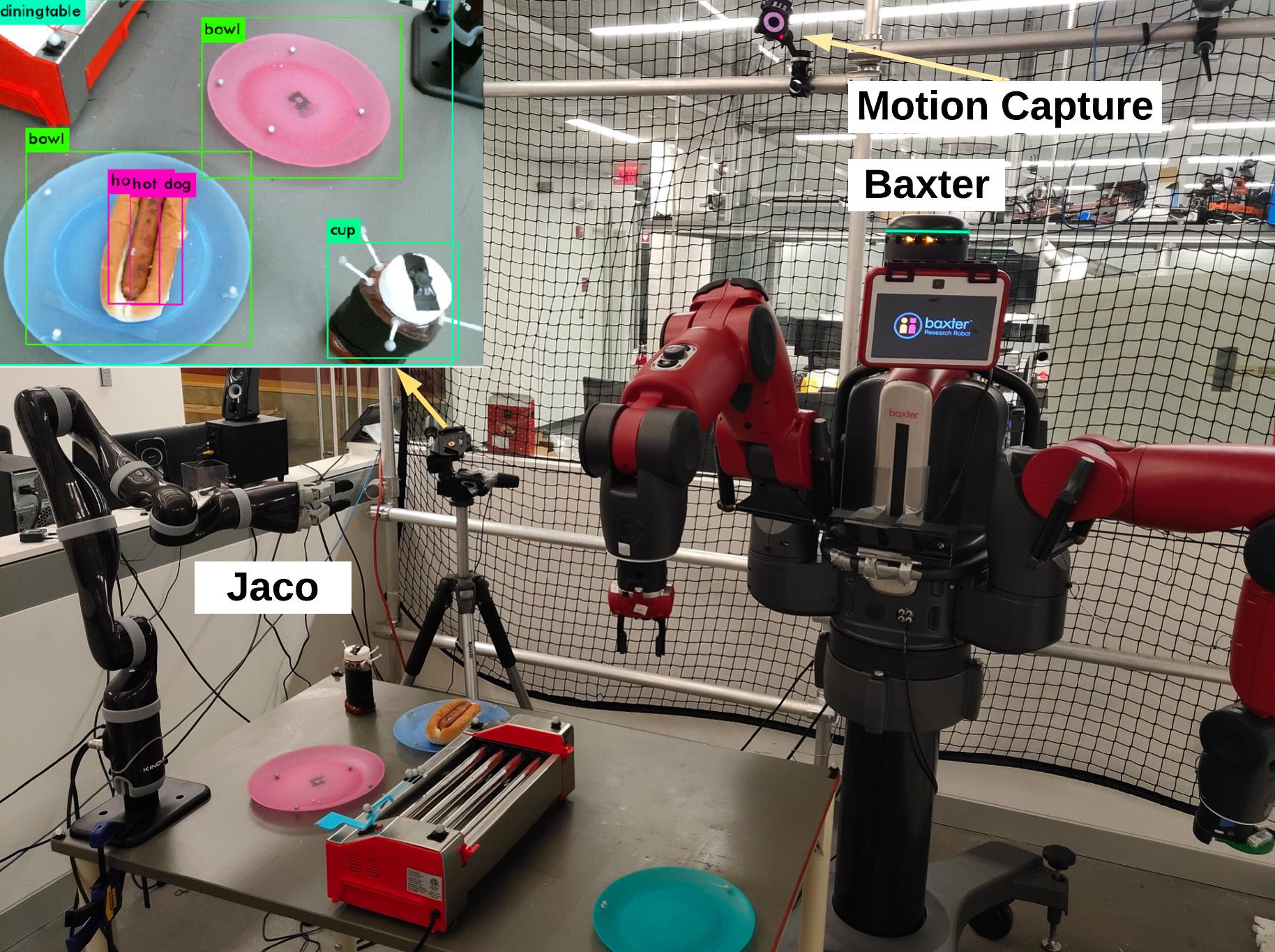

In a paper published in Science Robotics, the team demonstrated this framework with a proof-of-concept task by teaching two robots to cook, assemble and serve hot dogs together.

“We hope that such an architecture can help us impart our knowledge and objectives to the robot, and improve our understanding of what it has learned, thus leading to more capable robotic systems,” says doctoral fellow and first author of the paper Xiao Li (ME).

Utilizing machine learning and formal methods—an area of computer science that is typically used to guarantee safety, most notably in avionics or cybersecurity software—their work involves two disparate techniques that are difficult to combine mathematically and put together into a language a robot will understand.

Belta and his team used a branch of machine learning known as reinforcement learning: when a computer completes a task correctly, it receives a reward that guides its learning process.

Researchers built a simulation of the experiment to train the robots, denoting a safe region where the robots’ movement should be confined, and a “serve” region where the robots were supposed to serve the finished hot dog. The researchers also built what’s known as prior knowledge into the algorithm, information that contained the steps the robots needed to take to successfully cook the hot dogs, like “pick up the hot dog and place it on the grill.” That knowledge also included information that would be obvious to a human, but not to a robot, e.g., if the hot dog was already being held, it didn’t need to be picked up.

When the computer completes a task correctly, it receives a reward.

Although the steps of the task are outlined in the algorithm, exactly how to perform those steps isn’t. When the robot gets better at performing a step, its reward increases, creating a feedback mechanism that pushes the robot to learn the best way to, for example, place a hot dog on a bun.
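As a rough illustration of that reward feedback, here is a minimal sketch in Python. It is not the team’s framework: the candidate placements, the safe-region bounds and the reward function are all hypothetical, and a simple running-average value estimate stands in for the reinforcement learning described in the paper.

```python
# Hypothetical toy: a robot learns which placement offset (in cm) earns the
# highest reward, while never trying offsets outside a predefined safe region.
SAFE_REGION = (-2.0, 2.0)   # assumed safety bounds, not taken from the paper
BUN_CENTER = 0.5            # assumed ideal placement
CANDIDATES = [-3.0, -1.5, 0.0, 0.5, 1.0, 2.5]


def is_safe(offset):
    """Return True if the offset lies inside the allowed safe region."""
    low, high = SAFE_REGION
    return low <= offset <= high


def reward(offset):
    """Higher reward the closer the hot dog lands to the bun center."""
    return 1.0 / (1.0 + abs(offset - BUN_CENTER))


def train(rounds=5):
    """Try every safe action repeatedly and keep a running-average reward."""
    values = {}
    counts = {}
    for _ in range(rounds):
        for action in CANDIDATES:
            if not is_safe(action):
                continue  # the safety constraint removes this option entirely
            r = reward(action)
            counts[action] = counts.get(action, 0) + 1
            old = values.get(action, 0.0)
            values[action] = old + (r - old) / counts[action]
    best = max(values, key=values.get)
    return best, values


best_action, learned_values = train()
print(best_action)
```

The safety check prunes options before any reward is collected, which loosely mirrors how constraints and prior knowledge shrink the space the robots have to explore.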

Integrating prior knowledge with reinforcement learning and formal methods makes this technique novel. By combining these three methods, the team can cut down the number of possibilities the robots have to run through to learn how to cook, assemble and serve a hot dog safely.

To test their theory that combining the three would be the most effective and safe way to teach these robots to cook hot dogs, researchers measured the success rate of each robot when it was trained with any combination of reinforcement learning and the other two techniques; they were able to show that the highest success rate for both robots was when all three techniques were combined. Success here meant task completion while also remaining safe.

Unlike other types of machine learning, this framework allows researchers to analyze the success rate of the task’s stages, allowing them to understand and eliminate bottlenecks in the machine-learning process—something that is extremely difficult to do.

Belta sees this work as a proof-of-concept demonstration of their general framework, and anticipates that moving forward, it can be applied to other complex tasks, such as autonomous driving. — LIZ SHEELEY

The two robots, Jaco and Baxter, must work together to cook, assemble and serve a hot dog. They have to detect and find their supplies, shown in the upper left-hand corner, which are tracked with motion-capture throughout the experiment.


Moths Teach Drones to Fly

When an autonomous drone is deployed for a mission, it flies on a specific, programmed route. If there are any surprises along the way, the drone has a difficult time adapting because it has not been programmed to handle them.

Now, researchers have developed a new paradigm that would allow a drone to fly from point A to point B without a planned route—with the help of moths. Professor Ioannis Paschalidis (ECE, SE, BME), his team and collaborators at the University of Washington have extracted information about how a particular species of moth travels through a forest, and then used that data to create a new control policy for drones. Presented in PLoS Computational Biology, theirs is the first published work that uses data from an animal to improve autonomous drone navigation.

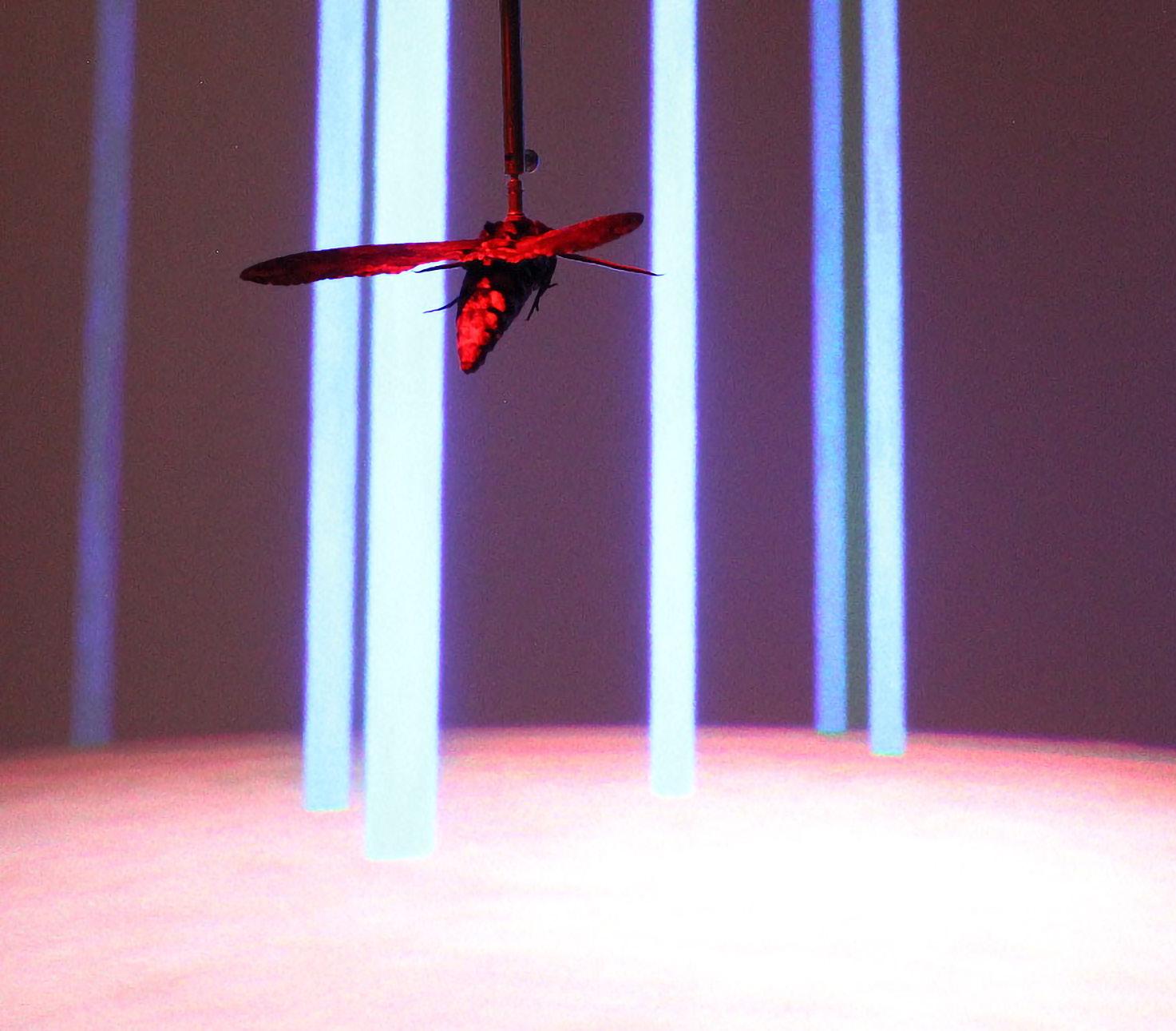

They first had researchers at the University of Washington collect data on how the moths would fly around a virtual forest, taking measurements on things like their flight trajectories, force and speed along the way. Those data were then used to extrapolate what kind of navigation policy the moths employed.

“We discovered that the moths relied heavily on what is known as ‘optical flow,’ ” Paschalidis explains. “That is a pattern of the motion of the various objects in the environment that is caused by your own motion relative to these objects, in this case, the moth’s own motion.”

That perceived motion then allows the moth to react to objects in the appropriate way, as objects that are moving fast are close, and those moving slowly are farther away. This is similar to how humans react while driving; if a car suddenly appears extremely fast in front of your car, you’ll brake, but if a car is far away and speeding around, you don’t have to react quite as quickly.
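The near-fast, far-slow relationship can be sketched with basic geometry. The snippet below is only an illustration, not the control policy from the study: it uses the standard result that a stationary object at a given distance and bearing appears to sweep past a straight-moving observer at an angular speed of speed × sin(bearing) / distance. The flight speed and tree distances are made-up values.

```python
import math


def apparent_angular_speed(observer_speed, distance, bearing_deg):
    """Angular speed (rad/s) at which a stationary object appears to move
    for an observer travelling in a straight line.

    bearing_deg is the angle between the direction of travel and the
    line of sight to the object.
    """
    return observer_speed * math.sin(math.radians(bearing_deg)) / distance


speed = 2.0  # m/s, hypothetical flight speed
near_tree = apparent_angular_speed(speed, distance=1.0, bearing_deg=45)
far_tree = apparent_angular_speed(speed, distance=10.0, bearing_deg=45)

# The nearby tree sweeps across the field of view faster than the distant one.
print(near_tree > far_tree)
```

At the same bearing, a tree ten times closer appears to move ten times faster, which is the cue that tells the flier which obstacles need attention first.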

Understanding how the moths flew helped the researchers develop a drone policy that was tested on virtual drones as they flew through a virtual forest. To see how robust this policy was, they had the drones fly through multiple types of environments, from a complex, heavily wooded forest to a sparse one.

Paschalidis notes the most surprising element of the study was that the policy helped the drone navigate through all of the different environments.

Typically, drone control policies are optimized for a specific mission, which renders them fragile to unexpected events. This research moves one step closer to an adaptive, self-aware autonomous vehicle policy that will allow drones to fly in a dynamic, complex and unknown environment.

As the moth navigates what it thinks are trees (the light rods), the researchers can capture this movement data and extrapolate how the moth flies around different types of forests.

The work is part of a $7.5 million MURI (Multidisciplinary University Research Initiative) grant awarded by the Department of Defense for developing neuro-inspired autonomous robots for land, sea and air.

Paschalidis says that collaborators from various universities will help gather data from other animals and insects, such as mice and ants, to develop more robust autonomous vehicle navigation policies. — LIZ SHEELEY

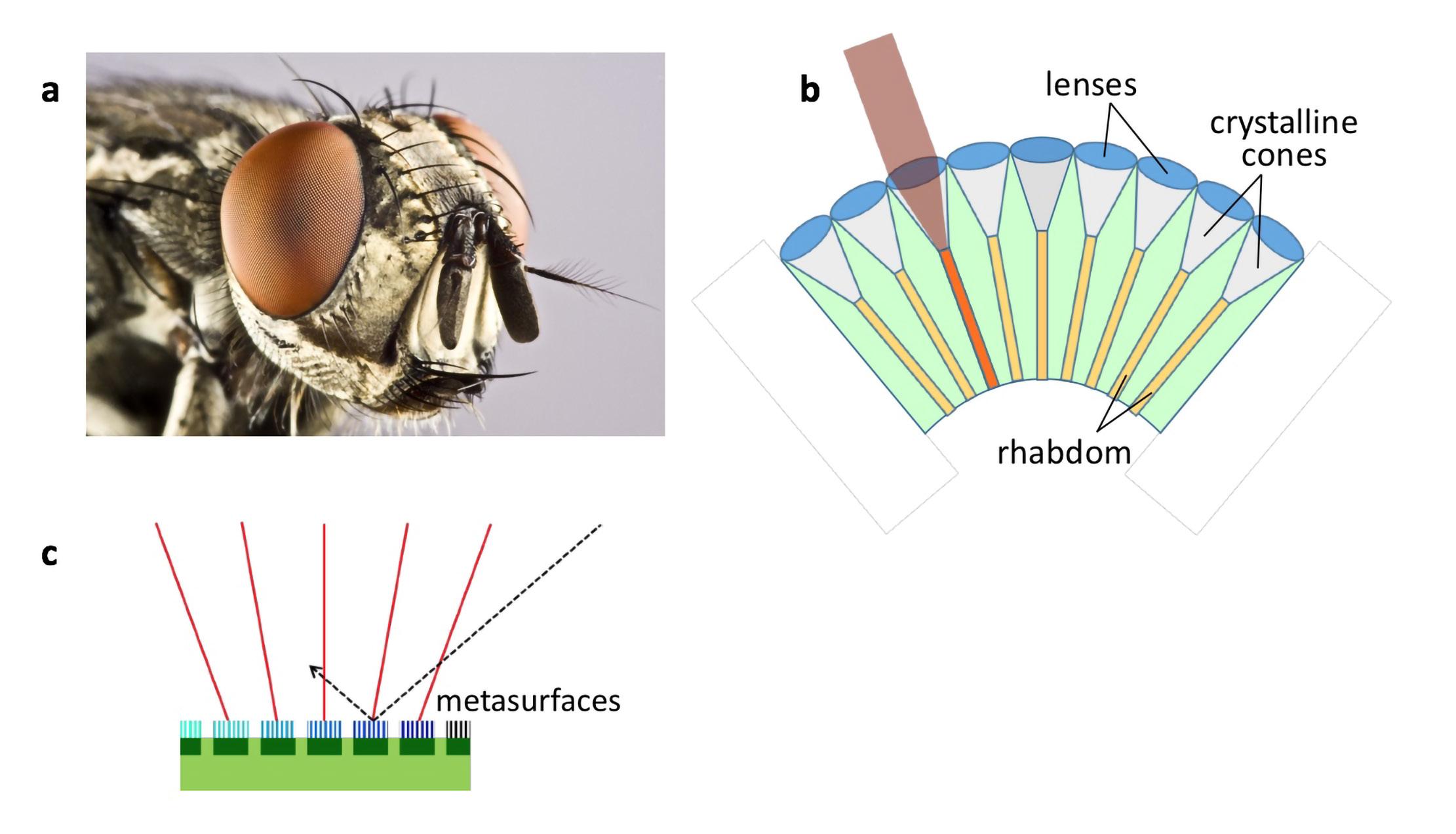

The research team took inspiration from the compound eye of insects, such as the fly (A). A compound eye is composed of multiple lenses that each detect particular angles of light (B); the team took this structure and turned it into angle-sensitive metasurfaces (C).

A Bug’s-Eye View

Although we’ve developed the ability to shrink down a camera to fit on a phone, the underlying principle of the camera itself hasn’t progressed. As a camera gets smaller, there’s a trade-off between field of view and image quality: the larger the field of view, the more distorted an image will be.

Taking inspiration from nature, Professor Roberto Paiella (ECE, MSE) and Assistant Professor Lei Tian (ECE) have developed a new type of camera that mimics the compound eye of insects, such as the fly, to solve this trade-off problem of single-lens, system-based cameras. Unlike compound eyes in nature, however, their device is based on flat, rather than curved, geometry and operates without lenses. Their work has been published in Nature Communications.

“There’s no standard technology to develop a camera with curved geometry, which has complicated the implementation of cameras directly inspired by the compound eyes of insects,” Paiella explains.

This new type of camera mimics the compound eye of insects, enabling a wide field of view.

“Until now, cameras have been flat with a single lens, and all the accompanying technology has been built around that standard. We had to introduce a new approach based on nanophotonics to be able to realize our flat, lensless design.”

Paiella and Tian wanted to develop a new camera and technology that could eventually be commercialized, so they used standard materials and fabrication processes to construct their prototypes.

To build a flat optoelectronic compound-eye camera, they began by developing novel metasurfaces, each of which is designed to only transmit light coming from certain angles and reflect the rest, to replace the traditional camera lens. This means that different metasurfaces are responsible for detecting a combination of points within the field of view. That information is collected and analyzed by a novel algorithm developed by the research team to reconstruct an image.

“We used similar principles to medical imaging analysis for MRI or CT, where the final images are reconstructed from the raw data by a designed transformation,” Tian notes.
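To make the idea of reconstructing an image from raw sensor data concrete, here is a minimal sketch under strong simplifying assumptions. It treats each sensor reading as a weighted sum of light from three directions and recovers the scene by solving the resulting linear system. The response matrix and scene are invented for illustration, and the team’s actual algorithm is not described in this article.

```python
def solve_linear(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


# Hypothetical angular responses: row i says how strongly sensor i responds
# to light arriving from each of three directions.
RESPONSES = [
    [0.8, 0.3, 0.1],
    [0.2, 0.9, 0.2],
    [0.1, 0.3, 0.8],
]


def measure(scene):
    """Simulate the raw readings: each sensor sees a mix of directions."""
    return [sum(w * s for w, s in zip(row, scene)) for row in RESPONSES]


scene = [1.0, 0.0, 0.5]          # made-up brightness per direction
readings = measure(scene)        # what the sensors would record
reconstructed = solve_linear(RESPONSES, readings)
print([round(v, 6) for v in reconstructed])
```

No single reading corresponds to one point in the scene; the image only emerges after the known responses are inverted, which is the sense in which the final picture is computed rather than captured.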

These novel metasurfaces could also be tweaked to expand into other imaging capabilities, such as polarization vision, which is how many insects see, by sensing the direction of the electric field of light.

This new framework is a proof-of-concept design to show the benefits of combining these novel metasurfaces and computational imaging, which together make optoelectronic compound-eye cameras that allow for a wide field of view, highly miniaturized dimensions, and great flexibility in the placement of the different metasurfaces on the final product. These properties are ideally suited for application in medical equipment such as endoscopes or swallowable cameras, or in surveillance and autonomous drones where the camera needs to be particularly small. — LIZ SHEELEY


Mining Bacteria Parts to Build Around-the-Clock Biosensors

Imagine a Fitbit that measures much more than steps, heart rate and calories burned, and continually tracks all of the indicators of physiological health that currently require expensive and time-consuming blood plasma analyses. The device is inexpensive, reliable and powered by the same proteins that our bodies produce all day, every day. Although it sounds like a far-fetched concept by today’s standards, Professor James Galagan (BME) says research conducted in his lab could speed that device along from the drawing board to our daily lives.

A team of researchers from Galagan’s BU lab and the University of Bordeaux was inspired by the one commercially successful biometric device that monitors a physiological function around the clock: the continuous glucose monitor, which performs its central task using a protein, obtained from a microbe, that senses glucose.

“There are potentially millions of similar proteins,” Galagan says. “They can sense just about anything that affects our health. A primary reason we don’t have more sensors like the glucose sensor is that the proteins needed to make those sensors haven’t been identified.” So, his team, which includes Professors Mark Grinstaff, Allison Dennis, and Catherine Klapperich, set out to find a few.

In a paper published in Nature Communications, the team describes using a novel screening approach to identify the first known bacteria-derived sensor for detecting progesterone, a female hormone that plays a critical role in reproduction. The team then developed technology that translated the sensor’s detection capabilities into an optical output, creating the first real-time, optical and reversible progesterone sensor.

The sensor’s reversibility allows it to generate continuous measurements as the hormone’s level rises and falls in the body, similar to the glucose sensor. It also sets the sensor apart from existing antibody-based methods for measuring progesterone, which provide only a measurement from a single point in time.

In a test using artificial urine, the researchers found that the sensor, which can be equipped with an inexpensive and portable electronic reader for point-of-care applications, could detect progesterone with a specificity sufficient for clinical use. This suggests that it could be suitable for home use, replacing many laboratory-based tests for progesterone measurements that are required during the process of in vitro fertilization.

The study’s big takeaway, says Galagan, is that it’s a “first proof of principle that we could take an organism, identify a new sensing protein, isolate this protein from the bacterium, and engineer it into a sensor device that is applicable to point-of-care use. As far as we know, this has never been done before.”

He emphasizes that the newly developed approach does not use the bacteria as a sensor. Rather, it mines the bacteria for protein parts, isolates those parts, and then turns them into sensors that can be used for device engineering.

The paper’s technology provides a foundation for developing many more sensor devices based on the same class of proteins. The researchers are currently building on that foundation, developing technology to immobilize and deploy such sensors and working on ways to convert their detection capabilities into a direct electronic signal. — ART JAHNKE

Could proteins taken from bacteria, like E. coli depicted here, be a new platform for biometric devices that continuously monitor body functions? Boston University biomedical engineers have developed a proof-of-concept that suggests the idea is within reach.

A Gold Standard Gets a Modern Makeover

MEASURING ANTIBIOTIC RESISTANCE IN HOURS

Antibiotic resistance continues to be a difficult problem to solve. One of the most effective ways to curb increasing resistance is to test infectious bacteria’s reaction to several antibiotics before choosing which one to prescribe to a patient, a process that typically takes several days. Now, Professor Kamil Ekinci (ME, MSE) and his group have cut that time down to hours.

Published in Proceedings of the National Academy of Sciences, their work uses a microfluidic device to first capture bacteria within a small channel and then detect the bacteria’s growth before and after exposure to antibiotics.

This new rapid antibiotic susceptibility test works the same way as the tried-and-true method, by measuring bacterial growth in antibiotics, but instead of needing to see the growth, researchers can measure it with electrical currents, allowing them to understand how the bacteria are reacting to a treatment on a microscale.

The current, gold-standard method for antibiotic susceptibility requires scientists to take a sample of the infection, culture the bacteria so they grow, and then test several antibiotics on them—a process that takes two to three days. With this new method, results can be seen within a couple of hours.

The test is simple to implement and, unlike the traditional method, operating the device doesn’t require expensive training. It could easily be utilized in the clinic to test bacteria in the common urinary tract infection, for example.

Building the microfluidic device presented the most difficult challenge, Ekinci says, as it needed to have very small constrictions to trap the tiny bacteria inside. They created the intricate device by pushing current photolithography techniques to their limits.

Results in hours versus days. Tens of bacteria needed versus thousands.

Professor Kamil Ekinci (ME, MSE)

The technology to measure the tiny differences in electrical signals already exists—it’s how a smartphone operates. Ekinci decided to use the concept of electrical resistance as a way to measure the growth rate (whether it was positive or negative) of the bacteria. By doing this, he only needed to wait for tens of bacteria to grow rather than the thousands necessary using the current method.

Instead of needing to see bacterial growth, researchers can measure it with electrical currents and understand how bacteria are reacting to treatment.

The more bacteria present, the greater the resistance to electrical current. Taken over time, multiple resistance measurements can track the growth rate of these bacteria when exposed to different antibiotics; whichever antibiotic inhibits growth the most would be the best one to prescribe to the patient suffering from the infection.
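The comparison described above can be sketched in a few lines. This is a hedged illustration only: the resistance readings are invented, the antibiotic names are placeholders, and a plain least-squares slope stands in for whatever analysis the group actually uses.

```python
def growth_rate(times_h, resistances):
    """Least-squares slope of resistance versus time (ohms per hour).

    A positive slope suggests the bacteria are multiplying; a negative
    slope suggests the population is shrinking.
    """
    n = len(times_h)
    mean_t = sum(times_h) / n
    mean_r = sum(resistances) / n
    num = sum((t - mean_t) * (r - mean_r) for t, r in zip(times_h, resistances))
    den = sum((t - mean_t) ** 2 for t in times_h)
    return num / den


TIMES_H = [0.0, 0.5, 1.0, 1.5, 2.0]

# Made-up resistance readings (ohms) for illustration only.
READINGS = {
    "no antibiotic": [100.0, 104.0, 108.0, 112.0, 116.0],
    "antibiotic A": [100.0, 102.0, 104.0, 106.0, 108.0],
    "antibiotic B": [100.0, 99.0, 98.0, 97.0, 96.0],
}

rates = {name: growth_rate(TIMES_H, r) for name, r in READINGS.items()}

# Pick the treatment with the lowest growth rate, ignoring the control.
treated = {k: v for k, v in rates.items() if k != "no antibiotic"}
best = min(treated, key=treated.get)
print(best, rates[best])
```

In this toy data set, antibiotic A only slows growth while antibiotic B reverses it, so B would be the one to prescribe.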

Because bacteria develop resistance when they are exposed to an antibiotic but survive treatment, tests like these are necessary. Letting any survive allows the bacteria to adapt to the antibiotic, creating a stronger form of those bacteria and leading to superbugs. — LIZ SHEELEY


Better, Simpler Detection

LIDAR-ON-A-CHIP COULD MAKE BUILDING AUTONOMOUS CARS MUCH EASIER

When developing new technologies, engineers are constantly pushing the limits of currently available electronics, but progress can only go so far before parts must be invented that create new, extended limits. Associate Professor Milos Popovic (ECE) and collaborators have built a new type of silicon chip that will help advance technologies, such as sensors, for self-driving cars and smartphone face recognition.

Technologies that need to detect remote objects with fine resolution rely on lidar, the optical version of radar, and use laser beams rather than radio waves. Unlike radar systems, lidar is capable of generating high-resolution 3D images and can more accurately detect the shape of an object. Lidar systems are currently bulky components, but their performance, resolution, cost and complexity could all be improved if they could be realized on a silicon chip— creating lidar-on-a-chip.

To accomplish this task, a tiny silicon photonic chip will require additional electronic components to steer a laser beam emanating from the chip surface, detect its reflections from distant objects, and generate the 3D image. In order to detect an object with high resolution at even moderate distance—say, 300 feet away—the electronics would need to be highly complex, which makes scaling up this type of system extremely difficult. There would be hundreds to thousands of electronic components required just for forming and steering the beam to the correct location.

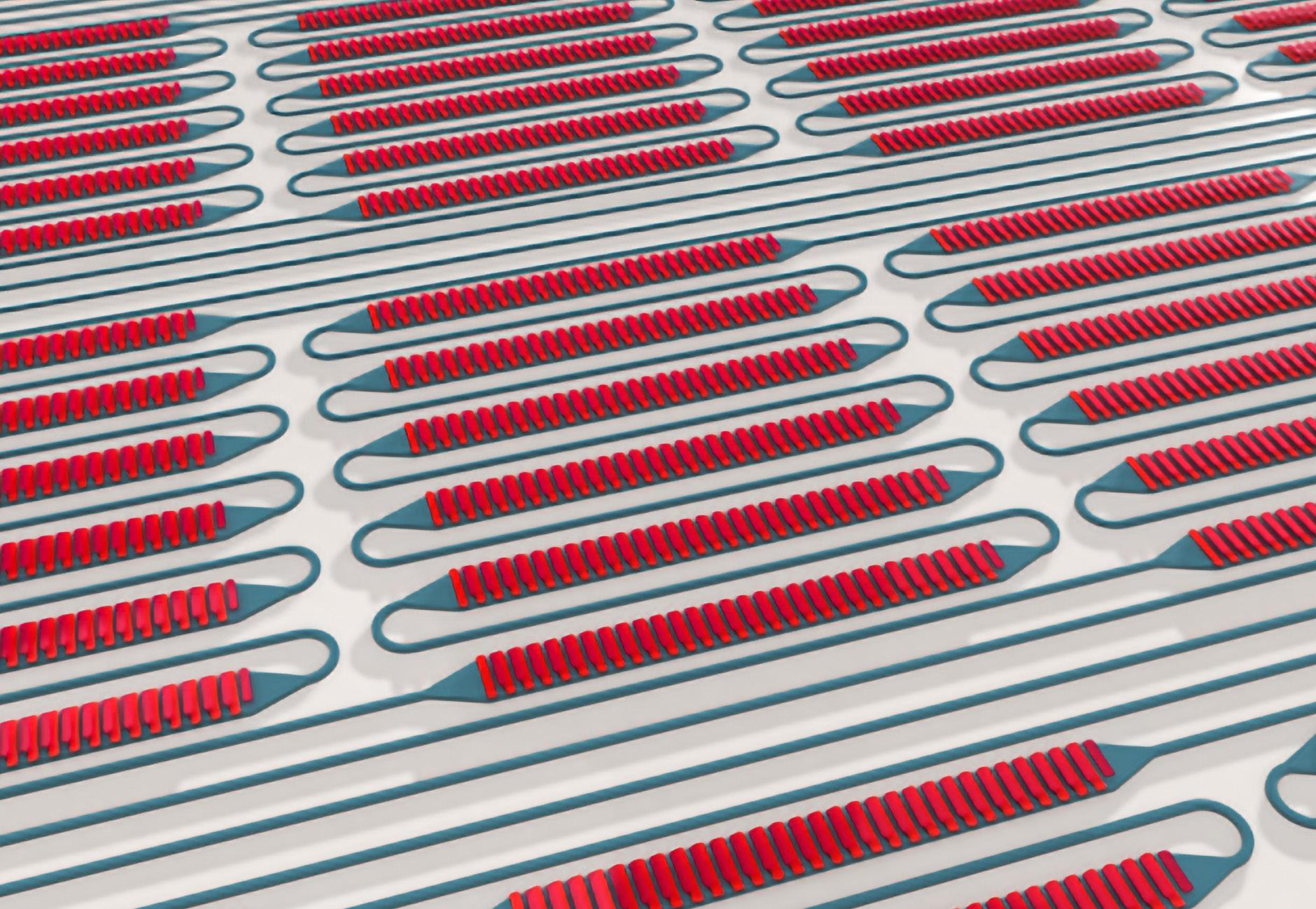

In a paper published in the journal Optica, Popovic’s team describes how they constructed a new chip with an all-optical, electronics-free steering capability in two dimensions—without compromising the lidar performance.

The team included Popovic’s graduate students and co-lead authors Nathan Dostart, a National Science Foundation graduate fellow who is continuing his career at NASA Langley Research Center, and Bohan Zhang, a National Defense Science and Engineering graduate fellow. The group collaborated closely with Professor Kelvin Wagner and his lab at the University of Colorado Boulder.

The chip can be tiled; this rendering shows many serpentine waveguides on a single chip. Guiding light through all the waveguides simultaneously provides enhanced capabilities, such as increasing the range and resolution of the detector.

Instead of using electronic control circuits that would be programmed to generate and steer an optical beam, the new silicon chip uses a serpentine structure designed to distribute light and form and steer the beam in two dimensions in response to the user’s manipulation of only the input laser wavelength. As the laser wavelength changes, the angle of the emitted light also changes, effectively steering the outgoing laser beam to carry out a two-dimensional angular sweep. Instead of needing to control thousands of electronic circuits, this chip requires only the one control variable: the wavelength of light.
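A one-dimensional toy model shows why wavelength alone can act as the steering knob. The sketch below uses the textbook phase-matching relation for first-order emission from a waveguide grating into air, sin(θ) = n_eff − λ/Λ. It is a simplification: the effective index and grating period are illustrative guesses, not values from the paper, and the model does not capture the serpentine layout that gives the real chip its second steering dimension.

```python
import math


def emission_angle_deg(wavelength_um, n_eff=2.8, period_um=0.6):
    """First-order emission angle (degrees from the chip normal) into air.

    Uses sin(theta) = n_eff - wavelength / period. Both n_eff and
    period_um are assumed, illustrative values.
    """
    s = n_eff - wavelength_um / period_um
    if not -1.0 <= s <= 1.0:
        raise ValueError("no radiating first-order beam at this wavelength")
    return math.degrees(math.asin(s))


# Sweep the input laser wavelength and watch the beam angle move.
sweep = [(wl, emission_angle_deg(wl)) for wl in (1.50, 1.52, 1.54, 1.56)]
for wl, angle in sweep:
    print(f"{wl:.2f} um -> {angle:.2f} deg")
```

Each wavelength maps to one emission angle, so tuning the laser sweeps the beam without any per-element electronic control.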

“There’s not really a technology that’s scalable up to large apertures,” Popovic says. “This paper is demonstrating a single optical tile that is the first step of an approach that breaks a lot of these barriers. What’s unique about this thing is basically that it’s an ultra-low complexity, kind of a game-changing design for an optical beam steering chip in the sense that it has basically zero controls.”

The chip can be tiled, meaning the team can create many serpentine waveguides on a single chip or use a series of chips, and guide light through all the waveguides simultaneously to provide enhanced capabilities, such as increasing the range and resolution of the detector. Sensors based on such chips could be placed in different spots around a car so that each array of on-chip serpentines can act as a detector in different directions.

For example, the iPhone has an assembly of small parts, including a laser and lens, that together can detect a face for its facial identification feature. Instead of a complex assembly of parts, Popovic’s team has shown that a key component of such a system, the beam-steering device needed for high-resolution images, can be realized on a single chip—allowing future lidar systems to be much simpler, and potentially be entirely integrated in a single chip or chip package. This could significantly lower the cost of these technologies in many applications such as phones, cars and satellites. These chips can also be made using the same manufacturing processes and factories currently in place, another benefit to rapid transition to application and adoption. — LIZ SHEELEY

When the Robot Becomes the Researcher

NEW AUTONOMOUS RESEARCHER CAN SPEED UP DISCOVERY OF THE BEST 3D-PRINTED MATERIALS

Additive manufacturing—or 3D printing—has vast applications, some of which are in the medical, aerospace and consumer fields. To further advance the area, researchers must study the best way to produce 3D-printed parts that can withstand more rigorous use than a 3D-printed prototype for research would receive.

Assistant Professor Keith Brown (ME, MSE, Physics) and Professor Elise Morgan (ME, MSE, BME) have developed a method of testing the mechanical properties of thousands of 3D-printed structures to catalog and understand them in an extremely efficient way. Their work has been published in Science Advances.

Their collaboration came out of a mutual interest in testing the toughness of materials—Morgan’s expertise lies in the mechanical behavior of biological materials and Brown’s, in nanotechnology and soft materials. Over the past few years, they have built an autonomous robot, or “researcher” (as they call it), to run this toughness experiment on its own, and it has since cataloged thousands of 3D-printed structures.

“I was thinking about a 3D printing system where you can create a structure, test it and then learn from it,” Brown recalls. “If you’ve got an autonomous system that you could give a question, something like, ‘I want to know what kind of structure is going to have the best property,’ then it will automatically test structures, and tell you the answer.”

Known as BEAR (Bayesian experimental autonomous researcher), the automated researcher was designed to perform experiments on its own, designing and testing parts to determine their mechanical properties. The system can print a 3D structure, remove it from the printer, weigh it using a scale and then crush it. It records every detail of the process, creating a vast database of structures and data related to how the materials behave when they are compressed, including whether, and how, they fail.

Brown and Morgan first chose to explore the property of toughness, which directly correlates to failure. Toughness and strength are related, but separate properties—glass, Morgan says, is actually stronger than steel, but only when it has no defects. And defects are almost impossible to prevent during even the most advanced manufacturing.

“Over many centuries of work in materials, and in mechanical engineering more broadly, we have gotten good at designing, fabricating and manufacturing strong materials, but designing tough materials has lagged behind,” she notes. “But it turns out that nature’s pretty good at designing tough materials. Bone, for instance, is surprisingly tough, considering what it’s made of.”

Built to study the mechanics of 3D-printed components, the entire BEAR system contains five printers, a six-axis robotic arm, a scale and a universal testing machine.

They also pulled in another faculty member, Assistant Professor Emily Whiting (Computer Science), to help with the complicated algorithm development. In order for the system to be autonomous, not just automated, it needed to run machine-learning algorithms during the testing and evaluation phases. While the system runs, it learns about failure in 3D-printed parts, and can choose the next design to test based on past results.
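Choosing the next design from past results can be sketched as a small Bayesian optimization loop. The code below is an assumption-heavy illustration, not BEAR’s software: the article only says the system is Bayesian, so the Gaussian-process model, the upper-confidence-bound rule, the single design parameter and the `crush_test` function are all stand-ins chosen for brevity.

```python
import math


def rbf(a, b, length=0.2):
    """Squared-exponential similarity between two design parameters."""
    return math.exp(-((a - b) ** 2) / (2 * length ** 2))


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def posterior(xs, ys, x_new, noise=1e-4):
    """Gaussian-process mean and variance at x_new given past tests."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(k * w for k, w in zip(k_star, solve(K, ys)))
    var = rbf(x_new, x_new) - sum(k * w for k, w in zip(k_star, solve(K, k_star)))
    return mean, max(var, 0.0)


def crush_test(x):
    """Hypothetical stand-in for printing and crushing a part."""
    return 1.0 - (x - 0.7) ** 2


def run_campaign(budget=6):
    """Test two seed designs, then pick each next design by upper confidence bound."""
    grid = [i / 20 for i in range(21)]
    tested = [0.0, 1.0]
    results = [crush_test(x) for x in tested]
    for _ in range(budget):
        def score(x):
            mean, var = posterior(tested, results, x)
            return mean + 2.0 * math.sqrt(var)  # favor promising or uncertain designs
        untested = [x for x in grid if x not in tested]
        nxt = max(untested, key=score)
        tested.append(nxt)
        results.append(crush_test(nxt))
    best_index = max(range(len(tested)), key=lambda i: results[i])
    return tested, results, tested[best_index]


tested, results, best_x = run_campaign()
print(len(tested), best_x)
```

The point of the sketch is the loop structure: every new experiment is chosen using a model fitted to all earlier ones, so the campaign tests far fewer designs than an exhaustive sweep of the grid would.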

This work with BEAR is just one example of how an autonomous researcher can be used to speed up typically slow and monotonous experiments. Although more than 2,500 structures were tested while developing and optimizing BEAR, only 32 were needed to reach the optimal structure design for toughness, which suggests the team can test much more complicated structures than they have so far.

“With advances in additive manufacturing, you make very complicated hierarchical component designs,” Morgan says. “When trying to find the best design, the rate-limiting step is testing them and evaluating the results, but the advances in autonomous systems and robotics have enabled us to open up that rate-limiting step quite a bit.”

Brown and Morgan say that in the future, these new structures could be used for protection—such as pads inside of a helmet—and, potentially, synthetic bone substitutes. — LIZ SHEELEY