
Driving into an autonomous future

from Networking

by DCD Magazine

Sameh Yamany VIAVI

Could Advanced Driver-Assistance Systems (ADAS) be the push needed toward a fully autonomous future?

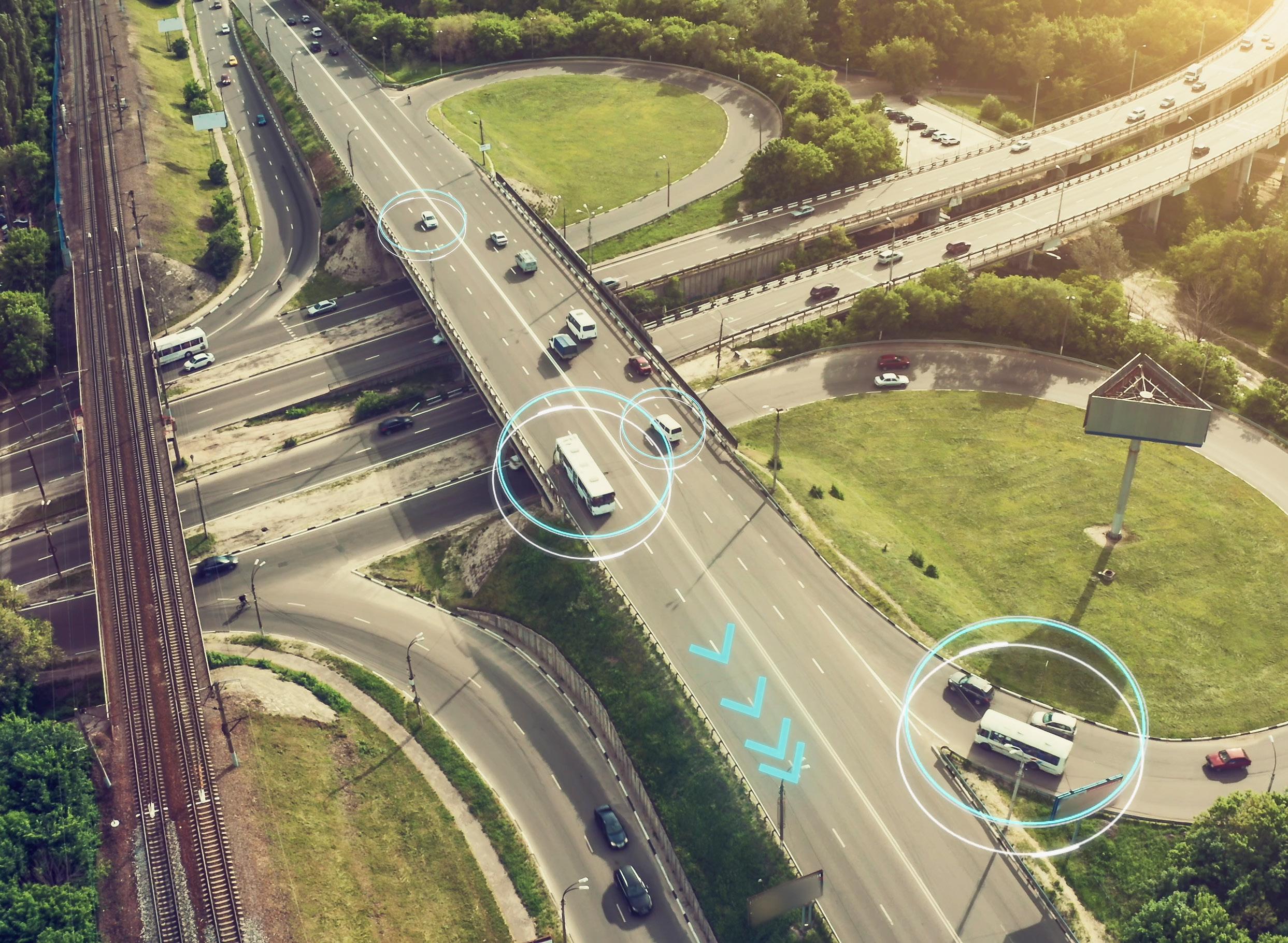

An exciting ultra-reliable, low-latency communication (URLLC) 5G use case that is not always associated with the hyperscale ecosystem is Advanced Driver-Assistance Systems (ADAS).

This oversight is not surprising with self-driving vehicles grabbing headlines and exceeding expectations. The hyperscale data centers anchoring 5G services from distant locations are not as visible, but they still provide essential artificial intelligence (AI) rules, software updates, and big data storage to make ADAS possible.

Moving towards level five automation

The Society of Automotive Engineers (SAE) has defined six levels of automation, from level zero, where drivers perform all functions manually, to level five, which is full automation with no driver needed.

The most advanced vehicles available today are usually classified as level two, where the driver must remain engaged while the car performs computer-guided functions.

Truly autonomous transportation will require a combination of high data capacity, latency as low as 1 ms, and 99.9999% reliability. 5G and Edge computing anchored by hyperscale data centers have the power to turn this utopian vision into reality.

What is ADAS?

ADAS has its origins in the 1950s, and many advanced driver-assistance systems for individual vehicles have been available for decades.

Early ADAS features like anti-lock brakes, adaptive cruise control, and back-up cameras were designed with safety in mind. Built-in navigation systems and handheld devices equipped with GPS have changed driving habits forever.

As LiDAR, cameras, and pattern recognition technologies advance, instant communication and data transfer between vehicles, the cloud, and other objects are the missing ingredients needed to transform ADAS into a global transportation network.

Levels of driving automation

0 No automation: Manual control. The human performs all driving tasks (steering, acceleration, braking, etc.).

1 Driver assistance: The vehicle features a single automated system (e.g. it monitors speed through cruise control).

2 Partial automation: ADAS. The vehicle can perform steering and acceleration. The human still monitors all tasks and can take control at any time.

3 Conditional automation: Environmental detection capabilities. The vehicle can perform most driving tasks, but human override is still required.

4 High automation: The vehicle performs all driving tasks under specific circumstances. Geofencing is required. Human override is still an option.

5 Full automation: The vehicle performs all driving tasks under all conditions. Zero human interaction or attention is required.

ADAS and hyperscale

If fully autonomous transportation is the Holy Grail of ADAS, hyperscale computing may be the unlikely enabler that makes it possible.

High mobility and low latency point to Edge computing as the obvious solution, so hyperscale data centers, with a minimum of five thousand servers on a footprint of ten thousand square feet or more, might seem as outdated as paper road maps and compasses. Big data changes this equation.

ADAS is poised to become the largest IoT use case and automotive data is expected to reach zettascale proportions by 2028.

While many decisions can and will be made by onboard computers and many more functions will be performed at the Edge, there is still an enormous volume of data to be offloaded and analyzed.

Infrastructure for AI, data analysis for traffic optimization, and content storage are obvious functions that do not depend on low latency and can be housed in hyperscale data centers.
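The division of labor described above can be sketched as a simple placement rule: each workload goes to the most centralized tier whose response time it can tolerate. This is a minimal illustration only. The threshold values, the `Workload` type, the `place` function, and the example latency budgets are all hypothetical and are not taken from any standard or vendor specification.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    ONBOARD = "onboard"
    EDGE = "edge"
    HYPERSCALE = "hyperscale"


# Hypothetical latency thresholds in milliseconds (illustrative assumptions).
ONBOARD_MAX_MS = 10.0   # tighter than this: keep the decision in the vehicle
EDGE_MAX_MS = 100.0     # tighter than this: serve from a nearby Edge site


@dataclass(frozen=True)
class Workload:
    name: str
    latency_budget_ms: float  # maximum tolerable response time


def place(workload: Workload) -> Tier:
    """Return the most centralized tier that still fits the latency budget."""
    if workload.latency_budget_ms <= ONBOARD_MAX_MS:
        return Tier.ONBOARD
    if workload.latency_budget_ms <= EDGE_MAX_MS:
        return Tier.EDGE
    return Tier.HYPERSCALE


# Example workloads with assumed (not measured) latency budgets.
workloads = [
    Workload("emergency braking decision", 1.0),
    Workload("intersection hazard warning", 50.0),
    Workload("traffic optimization analysis", 60_000.0),
    Workload("AI model training", 3_600_000.0),
]

placements = {w.name: place(w) for w in workloads}
for name, tier in placements.items():
    print(f"{name}: {tier.value}")
```

Under these assumed thresholds, only the workloads with budgets measured in minutes or hours land in the hyperscale tier, which matches the article's point that AI infrastructure, traffic analysis, and storage are the natural hyperscale candidates.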


ADAS features

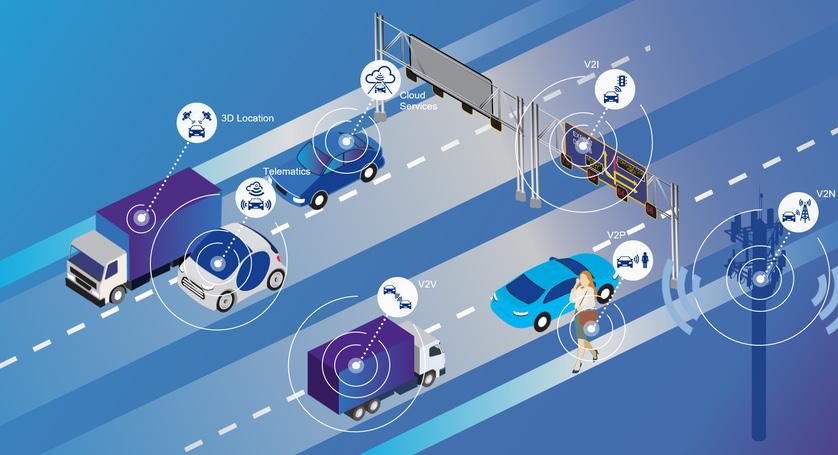

Vehicle to Everything (V2E)

V2E and V2X are common acronyms for “vehicle to everything”. While not quite “everything”, 5G technology does extend communication in many different directions.

Vehicle to Network (V2N) refers to direct vehicle access to cloud-based services. Vehicle to Infrastructure (V2I) typically includes communication with equipment installed on or near the roadside, such as traffic signs and toll booths.

Vehicle to Vehicle (V2V) allows the sensory information from surrounding vehicles to be shared for safety and navigation intelligence, while Vehicle to Pedestrian (V2P) communication can be used to warn both drivers and pedestrians of potential obstacles, including each other.

Infotainment

As ADAS moves closer to level five, more travel time will be available for communication and entertainment. Some aspects of the infotainment experience will depend on the vehicle’s computer hardware and graphics.

5G will also play a role by supplying streaming content and connected gaming services to meet consumer demands. Many of these applications will be bandwidth intensive, although not as exacting for latency and reliability. This presents an opportunity for infotainment content and user preference data storage in the hyperscale cloud.

3D location

When complemented by artificial intelligence, augmented reality, and GPS data, the information provided by V2E can move geolocation services from 2D to 3D.

This improves situational awareness, giving drivers detailed 3D information on the surrounding terrain and potential obstacles. Hyperscale data centers will support the essential AI rules and long-term storage needed to maintain this virtual 3D map of the world.

Telematics

By combining informatics and telecommunications, telematics provides a means to send vehicle information directly to the cloud for storage and analysis. This technology has found a ready-made application in fleet management for trucking companies, taxi operators, and emergency services.

5G is expected to move telematics further into the consumer domain as driving behavior is communicated to insurance companies, auto dealerships, and individuals.

The future of ADAS and hyperscale

Although predictions for ADAS adoption and level five transformation vary, it is virtually certain that the data storage and computing demands will be unprecedented.

This will lead to an ongoing trade-off between onboard vehicle computers, Edge computing locations, and hyperscale data centers to prioritize and balance storage, analysis, and latency.
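To give the latency side of that trade-off some scale, the back-of-the-envelope sketch below works through the 1 ms latency and 99.9999% reliability targets cited earlier in the article. The vehicle speed (100 km/h) and the signal speed in optical fiber (about 200,000 km/s, roughly two-thirds of the speed of light in a vacuum) are assumptions chosen for illustration, and the function names are hypothetical. Processing, queuing, and radio delays are ignored.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

# Targets quoted in the article.
RELIABILITY = 0.999999
LATENCY_S = 0.001  # 1 ms

# Illustrative assumptions (not from the article).
VEHICLE_SPEED_KMH = 100.0
FIBER_SPEED_KM_PER_S = 200_000.0  # approx. two-thirds of c


def allowed_downtime_s_per_year(reliability: float) -> float:
    """Seconds per year a service may be unavailable at a given reliability."""
    return (1.0 - reliability) * SECONDS_PER_YEAR


def distance_travelled_m(speed_kmh: float, seconds: float) -> float:
    """Metres a vehicle covers in the given time at constant speed."""
    return speed_kmh * 1000.0 / 3600.0 * seconds


def max_one_way_fiber_km(round_trip_s: float,
                         speed_km_per_s: float = FIBER_SPEED_KM_PER_S) -> float:
    """Upper bound on fiber distance if the whole budget is propagation delay."""
    return speed_km_per_s * round_trip_s / 2.0


downtime = allowed_downtime_s_per_year(RELIABILITY)
travelled = distance_travelled_m(VEHICLE_SPEED_KMH, LATENCY_S)
radius = max_one_way_fiber_km(LATENCY_S)

print(f"Allowed downtime: {downtime:.1f} s per year")
print(f"Distance covered in 1 ms at 100 km/h: {travelled * 100:.1f} cm")
print(f"Max one-way fiber distance for a 1 ms round trip: {radius:.0f} km")
```

Under these assumptions, 99.9999% reliability allows about 31.5 seconds of downtime per year, a car at 100 km/h moves roughly 2.8 cm in 1 ms, and a 1 ms round trip limits the serving site to within about 100 km of fiber even before any processing time is counted. That bound is why the tightest latency targets point to onboard and Edge computing, while distant hyperscale sites take the work that can wait.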

As data centers become more disaggregated and interoperable, their importance to ADAS advancement will remain unchanged.

The Automotive Edge Computing Consortium (AECC) was founded in 2018 to help vehicle manufacturers, OEMs, and suppliers evolve network architecture and computing infrastructure to meet the challenges of ADAS.

While questions remain as to who will finance, build, and operate the new infrastructure, consumer demand could be the ultimate driver of data center expansion. This will make proactive use case emulation, pre-deployment data center interconnect (DCI) fiber characterization, and high-speed transport testing invaluable.
