Can Technology Be Racist?

Bias In Algorithms

Of the numerous biometrics used in technology, facial recognition could be the most widely used but also the least accurate method of identification. Moreover, these matching algorithms do not classify all faces equally well. A 2019 federal government study found “the systems generally work best on middle-aged white men’s faces, and not so well for people of color, women, children, or the elderly.” Additionally, Buolamwini and Gebru’s 2018 research concluded that some facial analysis algorithms misclassified Black women nearly 35 percent of the time, while nearly always getting it right for white men. Clearly, this technology is far from perfect and needs significant improvement.

Magnified By Misuse

Given the known errors within facial recognition technology, the ways law enforcement uses it become alarmingly racist. Believe it or not, your face is captured by cameras in public venues, and police compare it against mugshot and driver’s license databases to search for potential crime suspects. Far from harmless, this practice compounds racial bias because America’s legal system disproportionately polices and criminalizes Black people. Moreover, some police jurisdictions have used these algorithms on social media to track phrases like “Black Lives Matter”.

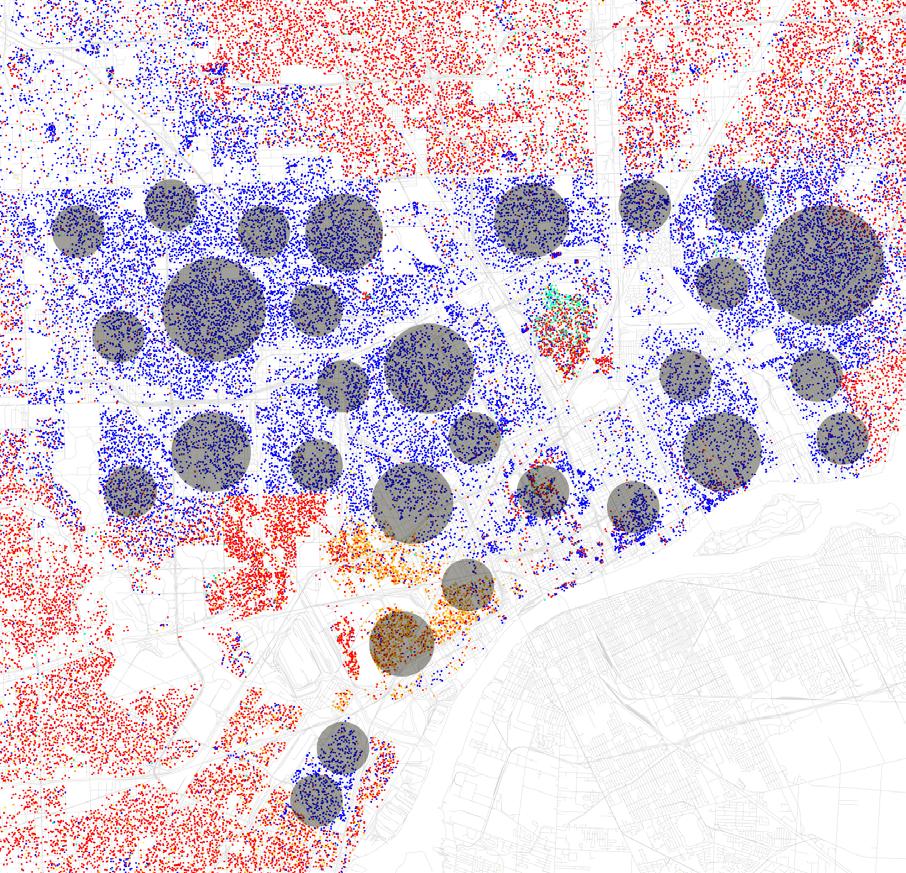

Camera locations shown on a 2010 census map, where red is White, blue is Black, and orange is Hispanic.

Project Green Light Detroit

One example exposing systemic racism and racial bias within facial recognition is Project Green Light. Enacted in 2016, it installed high-definition cameras throughout the city of Detroit that stream data directly to Detroit PD.