
Living With Hearing Loss


Samin lives in Iran. A family friend, Soheila (above, center), accompanied her on the day her replacement cochlear implant processor was switched on. Through a network of cochlear implant families, Samin received a processor donated from Australia; it was older and used, but the same model as the one she lost.

From Dark Days to a Bright Future

Networking among mothers of cochlear implanted children around the world leads to a solution for a young girl.

By Sahar Reiazi

Samin was born in a small village in Razavi Khorasan province, whose capital is Mashhad, in northeast Iran. She is the only person in her family who has a hearing loss. Doctors suggested cochlear implants, and even though her father did not have the money, the family opted for the surgery. Her father borrowed the money he needed, and Samin was unilaterally implanted in Iran when she was 7 years old. Teachers at her school were impressed, as Samin proved to be a fast learner and a bright child.

Samin turned 9 and her speech was progressing well. One day when she was riding the bus with her parents Samin dozed off. When they got off the bus, her parents realized the cochlear implant processor wasn’t on her ear. They were devastated. They searched and searched, contacted the bus company, and even filed a statement with the police saying her cochlear implant device had been lost. But the processor was gone. For the first time in two years, Samin had to experience silence.

Her family was distraught. Samin cried every day for a month, until she fell silent and communicated with her family through lipreading (speechreading). Her brother, two years older, was upset for his sister and asked their mother why Samin wouldn’t talk anymore. “I was so distressed that I would lie down all day, depressed and hopeless,” Samin’s mom says. “I couldn’t muster up the energy to get up and cook for my own children.”

Samin’s mom refers to that time as “the dark days.” They were still paying installments on Samin’s previous device and could not afford to buy a new one. Although they appealed to several organizations, all of them said that, because of the long waitlist, it could be years before Samin was eligible for a new device, a device the family could not afford to begin with.

I heard about Samin’s story through a mutual friend. I told her I would help raise funds for Samin no matter what. Something inside me woke up, a little flame that would not be put out until I saw Samin with a new device. I started making calls and sending out emails. My mind was working nonstop to find someone I could reach out to. Every day counted, because I would wake up thinking how Samin could not hear, and how that wasn’t fair.

So many emails and messages from people across the globe came in, reassuring me that they would do anything within their power to find a device for Samin.

One person I contacted was my friend Hiroko in Japan. She was sympathetic because she had two daughters who wear cochlear implants and she could understand Samin’s situation well.

After just 48 hours, I received a call from Hiroko. When I picked up and said hello, she said excitedly, “Hi, Sahar. A device has been found for Samin from Australia! It will be donated to her, please contact….”

I was crying tears of joy; Hiroko was crying tears of joy. It was one of the best moments of my life.

Australia provides excellent medical care for its citizens. The country supplies the newest hearing devices and latest technologies for free as part of a government program called Medicare. That is why people often donate their older devices. The device donated to Samin was an older model, but exactly the same as the one she had before.

I offered to pay the postage, but the kind donor insisted on paying for it herself. The device was sent to Soheila Naderi, the friend who had introduced Samin to me. She lived close to Samin’s village and had better access to her than I did. Soheila also has a son with cochlear implants. She is a dear soul who helps and informs families of children with hearing loss on her social media page.

The device arrived! Samin’s mom was informed, and Soheila took Samin and her mother to their local audiologist. The audiologist asked, “Samin, can you hear me?” and she replied “Yes.” Soheila recorded it all using her phone and sent the videos to me. We were all overcome by emotion.

I still keep in touch with Samin’s mom to ask how Samin is doing. Samin is attending rehabilitation classes and mainstream school and her future looks bright.

I aspire to create an organization in Iran which focuses on donating used hearing devices—such as hearing aids and cochlear implant speech processors, and spare parts such as batteries—to families who need them. Who knows, maybe we’ll call it “The Samin Foundation.” Samin is an Arabic word that means “precious,” and not only is everyone in this story precious to me, but so is being able to fully hear.

Sahar Reiazi (near left) appeared on the cover of the Spring 2021 issue, at hhf.org/magazine. Hiroko’s story is the cover story of this issue.

Share your story: Tell us your hearing loss journey at editor@hhf.org.

Support our research: hhf.org/donate.

Recent Research by Hearing Health Foundation Scientists, Explained

Increasing Our Understanding of Avian Hair Cell Regeneration

The hearing organ in mammals is the cochlea. In birds, it is the basilar papilla that, in sharp contrast to the cochlea, can regenerate its sensory hair cells and as a result recover from hearing loss within weeks. The mechanisms that trigger, sustain, and terminate the regenerative response in live animals (in vivo) are largely unknown.

Prior research has shown that the mobilization of immune cells or activation of immune genes is essential for aspects of regeneration, such as promoting cell proliferation and wound healing. But while both the avian basilar papilla and the mammalian cochlea mount an immune response after hair cell damage, only in the bird does that response occur in the context of regeneration.

For this study, we built on our previous work, which created a surgical model for the local infusion of the ototoxic drug sisomicin into the chicken ear in vivo. This eliminates the hair cells “all at once,” which not only improves efficiency but also makes better use of single-cell RNA-sequencing technology. Our prior research showed, intriguingly, that damaged chick hair cells communicate with supporting cells before dying themselves, sending signals that may promote the regenerative process. Using single-cell analysis, we set out to determine how these signals affect the surrounding non-hair cells, also called supporting cells.

In addition, we were able to leverage our recently created, comprehensive inventory of tens of thousands of genes activated and deactivated throughout the first 24 hours of hair cell death. This baseline single-cell data set of the avian basilar papilla is in effect a “roadmap” for detecting changes in gene expression after ototoxic insult. We used the same strategy to investigate the regenerative process at 30, 38, and 96 hours after hair cell death, at single-cell resolution.

As published in the journal Development in May 2022, we found that among avian supporting cells at 30 and 38 hours, the most prominent change in gene expression was the upregulation, or activation, of immune-related genes, linked to a common signaling pathway that is known as JAK/STAT. We also showed that the upregulated genes within the supporting cell layer are linked to JAK activation, and that inhibition of JAK/STAT signaling abolishes the upregulation of these genes.

We provide evidence that in the regenerating basilar papilla, the expression of immune-related genes is tightly controlled, such that four days after damage, they are no longer expressed in newly regenerated hair cells. This is important because the JAK/STAT signaling pathway is highly potent, leading to inflammation, cytokine storms, and fibrosis.

Our next steps are to identify the signals that lead to upregulation of immune-related genes in supporting cells and to determine whether these signals are essential for the process of hair cell regeneration.

Building on research from fellow members of the Hearing Restoration Project, this paper contributes mechanistic knowledge about avian hair cell regeneration, finding that newly regenerated avian hair cells display unique combinations of genes that differ from naturally generated hair cells. This unveils differences between avian hair cell development/homeostasis and avian hair cell regeneration. Our collection of gene expression changes during avian hair cell regeneration provides a valuable resource with respect to devising strategies for hair cell regeneration in mammals.

Our use of data analysis methods to order gene activation during hair cell regeneration along a temporal axis is a major technical advance, made possible by support from the Hearing Restoration Project. Each study brings us a step closer to unraveling possible strategies for novel therapies. —Amanda Janesick, Ph.D., and Stefan Heller, Ph.D.

Amanda Janesick, Ph.D., is a former postdoctoral fellow in the laboratory of Stefan Heller, Ph.D., who is a 2001–2002 Emerging Research Grants (ERG) scientist, a member of HHF’s Hearing Restoration Project (HRP), and a professor of otolaryngology–head & neck surgery at Stanford University.

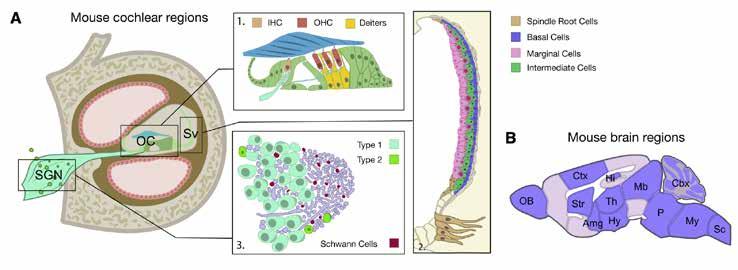

A schematic of the mouse cochlea (A) and the mouse brain (B) regions used for the enrichment analysis, in the American Journal of Human Genetics paper. Abbreviations: Amg, amygdala; Cbx, cerebellum; Ctx, cerebral cortex; Hi, hippocampus; Hy, hypothalamus; IHC, inner hair cells; Mb, midbrain; My, medulla; OB, olfactory bulb; OC, organ of Corti; OHC, outer hair cells; P, pons; Sc, spinal cord; SGN, spiral ganglion neurons; Str, striatum; Sv, stria vascularis; Th, thalamus.

Hope for Treatments Against Hearing Loss as 10 New Genes Identified

Researchers led by King’s College London, Karolinska Institute, and Erasmus University have identified 10 new genes linked with hearing loss and have located the part of the ear affected.

The findings, published in the American Journal of Human Genetics in June 2022, challenge the view that age-related hearing impairment originates mainly in the sensory hair cells. The researchers argue that the stria vascularis, a part of the cochlea, is a new target for treatments to help people with hearing loss.

Many people gradually lose some of their hearing ability as they get older, and an estimated 2.4 billion individuals will have some form of hearing loss by 2050. Age-related hearing impairment is a top contributor to years lived with disability and is also an important risk factor for dementia.

The team studied genetic analyses previously carried out at centers around the world, using samples from 723,266 people in 17 studies with clinically diagnosed or self-reported hearing impairment. This meta-analysis is one of the largest conducted in hearing genetics to date. The researchers identified 48 genes linked to hearing loss, including 10 genes newly linked to hearing.
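The article does not say how the results of the 17 studies were combined. A common approach in genetic meta-analysis is fixed-effect inverse-variance weighting, in which each study's effect estimate for a variant is weighted by the reciprocal of its squared standard error, so larger, more precise studies count for more. The sketch below illustrates only that general technique; the function name is hypothetical, and the effect sizes and standard errors are made-up placeholders, not values from the paper.

```python
import math

def inverse_variance_meta(betas, standard_errors):
    """Fixed-effect inverse-variance meta-analysis for a single genetic variant.

    betas: per-study effect estimates; standard_errors: their standard errors.
    Returns (pooled_effect, pooled_standard_error).
    """
    # Each study is weighted by 1 / SE^2: more precise studies get more weight.
    weights = [1.0 / se ** 2 for se in standard_errors]
    pooled = sum(w * b for w, b in zip(weights, betas)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se

# Hypothetical effects for one variant from two studies (not data from the paper).
beta, se = inverse_variance_meta([0.10, 0.14], [0.05, 0.04])
print(round(beta, 4), round(se, 4))  # 0.1244 0.0312
```

The pooled estimate lands closer to the second study's value because its smaller standard error gives it more weight, and the pooled standard error is smaller than either study's alone.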

Further analysis using mouse genetic data indicated that age-related hearing loss is due to changes in the stria vascularis, which is necessary for hearing. The results provide targets for future research that could improve therapies against hearing loss.

“Our findings identify 10 genes newly linked with hearing loss,” says co-main author Frances Williams, Ph.D., a professor at King’s College London. “This study points to genes we could target for screening purposes, drug development, and even gene therapy in the future. This study provides a solid foundation for ultimately improving therapies against hearing loss.”

Co-main author Christopher R. Cederroth, Ph.D., an associate professor at the Karolinska Institute, adds, “It was hypothesized since the 1970s that the stria vascularis may play a role in hearing loss in humans, but the molecular evidence for this was missing until today.”

This press release originally appeared on EurekAlert. The paper’s coauthors also include 2009–2010 ERG scientist and HRP member Ronna Hertzano, M.D., Ph.D. (pictured), who is a professor in the department of otorhinolaryngology–head & neck surgery at the University of Maryland School of Medicine; Cynthia Morton, Ph.D., a member of HHF’s Council of Scientific Trustees; and 2002–2004 ERG scientist Anna Giersch, Ph.D.

From the American Journal of Cancer Research paper, confocal images (A–D) of the middle turn of the mouse cochlea (Segment 3, frequency range 19.1–36.5 kHz), immunostained for myosin 7A, show hair cell loss after honokiol (HNK) and/or cisplatin treatment. Severe outer hair cell loss induced by cisplatin at 15 mg/kg (C) is largely reduced by pretreatment with HNK at 20 mg/kg (D). Scale bar: 100 µm. Data represent mean ± SEM.

Honokiol Presents a Potential Paradigm Shift for Reducing Cisplatin Ototoxicity

Platinum-based chemotherapeutic drugs are used in about 40 percent of cancer treatments. Potent as they are, they can cause severe side effects, such as damage to the brain, liver, and kidneys.

As a major side effect during chemotherapy, cisplatin ototoxicity (damage to the inner ear) affects an estimated 100,000 to 300,000 cancer patients annually in the U.S. The symptoms include severe hearing loss, tinnitus, and dizziness. Cisplatin ototoxicity (CO) is related to the accumulation of reactive oxygen species (ROS), causing the loss of the outer hair cells (OHC), one of the two sensory hair cell types for hearing function in the inner ear.

To date, no effective Food and Drug Administration–approved treatment is available to protect against CO. The major concern with candidate treatments is that they may interfere with cisplatin’s therapeutic effect, either by deactivating cisplatin or by protecting tumor cells.

Recent work by our team (my lab and that of Claus-Peter Richter, M.D., Ph.D.) shows for the first time that honokiol, a multifunctional small molecule extracted from an herbal medicine (genus Magnolia), prevents cisplatin-induced hearing loss both in vitro, in cultured cells derived from the inner ear, and in vivo, in mice.

Furthermore, this hearing-protective effect of honokiol has also been verified in a transgenic tumor-bearing mouse model, using a chemotherapy regimen that mimics standard clinical treatment. Treatment was given in three cycles; in each cycle, cisplatin was injected at 4 mg/kg/day (milligrams per kilogram of body weight per day) for four days, followed by a 10-day recovery interval.
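Laying the regimen out day by day makes its totals explicit. This is a minimal sketch, assuming each cycle is exactly four consecutive dosing days followed by a 10-day recovery interval, as described above; the constant and function names are illustrative, and the sketch tallies only cisplatin per kilogram of body weight, without modeling the honokiol arm.

```python
# Assumed regimen, taken from the description above.
DOSE_MG_PER_KG_PER_DAY = 4
DOSING_DAYS = 4
RECOVERY_DAYS = 10
CYCLES = 3

def build_schedule():
    """Return one cisplatin dose (mg/kg) per calendar day; 0 on recovery days."""
    schedule = []
    for _ in range(CYCLES):
        schedule += [DOSE_MG_PER_KG_PER_DAY] * DOSING_DAYS
        schedule += [0] * RECOVERY_DAYS
    return schedule

schedule = build_schedule()
dosing_days = [day + 1 for day, dose in enumerate(schedule) if dose > 0]
print(len(schedule))   # 42 days in total
print(sum(schedule))   # 48 mg/kg cumulative cisplatin
print(dosing_days)     # [1, 2, 3, 4, 15, 16, 17, 18, 29, 30, 31, 32]
```

Under these assumptions, the full protocol spans six weeks and delivers a cumulative 48 mg/kg of cisplatin in 12 injections.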

After the three cycles of treatment, cisplatin induced severe hearing loss and OHC loss in the high-frequency region (above 16 kilohertz), which were significantly reduced by parallel honokiol treatment (10 mg/kg/day, injected one hour before cisplatin). Meanwhile, tumor growth was significantly slower, and the animal survival rate higher, in the honokiol-plus-cisplatin group than in the cisplatin-only group.

These results indicate that honokiol is synergistic with cisplatin in tumor suppression, which is consistent with previous publications. Further studies show that the protective mechanism is related to the upregulation of sirtuin 3, a critical mitochondrial enzyme essential for ROS detoxification: sirtuin 3 expression is upregulated in hair cells after honokiol treatment.

Our study indicates that honokiol is a promising candidate for hearing protection against cisplatin ototoxicity. Honokiol is approved by the European Food Safety Authority as a food supplement and has been tested for its anti-asthma and anti-anxiety activities in preclinical trials.

As a result, we expect a paradigm shift in cisplatin chemotherapy based on the current study and future clinical trials, where honokiol is applied to reduce side effects including hearing loss. This paper, published in the American Journal of Cancer Research in December 2020, formed the basis for my National Institutes of Health R01 project titled “Hearing Protection in Cisplatin Chemotherapy,” which was funded in February 2022. —Xiaodong Tan, Ph.D.

A 2017 ERG scientist, Xiaodong Tan, Ph.D., is a research assistant professor in the department of otolaryngology at Northwestern University’s Feinberg School of Medicine.

Clues to How the Auditory Cortex Controls Subcortical Circuits

Undergraduate textbooks typically paint the central auditory system as a linear, hierarchical structure: Sound information enters the brain via the 8th nerve, and electrical signals ascend through subcortical auditory circuits in a “bucket brigade” fashion. Sound is processed along each step of the pathway, and the auditory information reaches the auditory cortex, which supports higher-order perception and behavior.

However, this textbook model is somewhat simplistic. Decades of research show that the auditory cortex gives rise to descending projections that operate in parallel with the ascending auditory system: neurons in the deep layers of the auditory cortex send excitatory nerve fibers back down to most subcortical auditory regions, such as the thalamus, inferior colliculus, and cochlear nucleus.

These “corticofugal” projections likely play a major role in hearing, as they provide an avenue for higher-order signals from the auditory cortex to shape how the early auditory system interprets incoming sounds from the ear. This cortical input conveys “top-down” information, which may provide contextual information to local processing in subcortical regions.

Although the existence of corticofugal pathways is well established, little is known about the cellular and biophysical mechanisms that enable the auditory cortex to transmit top-down signals. Nor do we understand how descending cortical signals are integrated with ascending information.

In our eLife paper published in January 2022, we addressed this knowledge gap by studying the descending projection from the auditory cortex to the inferior colliculus (IC), a midbrain hub important for sound localization and speech perception. We combined optogenetic approaches with in vivo and in vitro patch-clamp electrophysiology in mice to study how the auditory cortex transmits messages to the IC. Our results revealed surprising biophysical properties of auditory cortex-to-IC synapses that allow descending cortical signals to rapidly amplify how individual IC neurons respond to ascending sound information. Interestingly, descending cortical pathways are not unique to the auditory system; they are also a feature of the brain’s visual, tactile, and olfactory pathways. Thus, our results may provide broadly generalizable insight into how the mammalian brain dynamically processes incoming sensory information. —Hannah Oberle and Pierre Apostolides, Ph.D.

This image from eLife shows an example micrograph of an injection site in the left auditory cortex in the mouse model. Dashed lines denote approximate borders of auditory cortical areas.

A 2019 ERG scientist, Pierre Apostolides, Ph.D., is an assistant professor of otolaryngology–head and neck surgery and an assistant professor of molecular and integrative physiology, both at the University of Michigan Medical School.

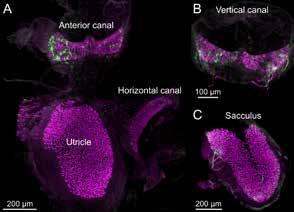

Vestibular end organ whole mounts from the mouse model, imaged with a 25× objective, as shown in Bio-Protocol. The utricular macula, anterior canal crista, and horizontal canal crista can be dissected out of the temporal bone in one piece. Calretinin (magenta) is expressed in Type I hair cells. Single nerve fibers are labeled with green fluorescent protein.

Protocols for Preparing Mouse Balance Organs for Research

The vestibular (balance) sensory apparatus contained in the inner ear is a marvelous evolutionary adaptation for sensing movement in three dimensions and is essential for an animal’s sense of orientation in space, head movement, and balance. Damage to these systems through injury or disease can lead to vertigo, Ménière’s disease, and other disorders that are profoundly debilitating.

One challenge in studying vestibular organs is their small size and their location within the bony inner ear, especially in mice, which have become an advantageous mammalian model. This protocol, published in Bio-Protocol in May 2022, describes how to dissect the five vestibular organs from the inner ear of adult mice.

Immunohistochemical labeling can be used to identify specific hair cell types, such as calretinin-expressing Type I hair cells, or to localize proteins involved in mechanotransduction, cell structure, or vestibular disorders. We also describe how to immunolabel a whole-mount preparation, using antibodies to label endogenous proteins or to amplify genetically expressed fluorescent proteins for confocal microscopic imaging.

Using typical lab equipment and reagents, a patient technician, student, or postdoc can learn to dissect and immunolabel mouse vestibular organs to investigate their structure in health and disease.

This is adapted from a paper published by Timothy Balmer, Ph.D. (far left), and Laurence Trussell, Ph.D. (near left), in Bio-Protocol in May 2022. A 2017 and 2022 ERG scientist, Balmer is an assistant professor in the School of Life Sciences at Arizona State University. His 2017 grant was generously funded by the Les Paul Foundation. A 1991 ERG scientist, Trussell is a professor of otolaryngology–head and neck surgery at Oregon Health & Science University. Balmer and Trussell used this procedure in their April 2019 eLife study, “Selective Targeting of Unipolar Brush Cell Subtypes by Cerebellar Mossy Fibers.”

Clues About the Development and Regeneration of Chick Hair Cells

The auditory system of the chicken has been used extensively in comparative studies of the evolution and development of hearing. The auditory systems of humans and chickens are at comparable stages at birth: partially, but not fully, developed. Both species also start to hear during the second third of the gestation period, making the chick cochlea an attractive model system for studying the developmental mechanisms of hair cells. In addition, avian species can regenerate hearing, an ability lost in mammals.

The avian equivalent of the mammalian organ of Corti is the basilar papilla. The two hair cell types found in the chicken basilar papilla are tall and short hair cells, with tall hair cells similar to mammalian inner hair cells, and short hair cells similar to mammalian outer hair cells.

Calcium ions play fundamental roles in the most important sensory processing mechanisms of vertebrate hair cells, avian and mammalian alike, such as mechanotransduction, synaptic release, and frequency selectivity. Moreover, it is well established that calcium ions are instrumental to hair cell development, for instance by supporting the cells’ ability to produce spontaneous electrical activity (spontaneous action potentials), one of the hallmarks of development.

This developmentally regulated spontaneous electrical activity also reappears in regenerating chick hair cells, suggesting that the process of regeneration may partly mimic developmental processes. This is an important implication to keep in mind when trying to regenerate the sensory epithelium in mammals.

Auditory hair cells fire spontaneous action potentials before the onset of hearing, which may be a major determinant of synapse formation and the ensuing establishment of proper tonotopic maps along auditory axes during development. Once synapses are established between hair cells and spiral ganglion neurons, the spontaneous action potentials cease, highlighting their importance to the development of the auditory inner ear.

Specific ion currents have been identified as major players supporting this electrical activity in developing and regenerating chick hair cells. This research by Snezana Levic, Ph.D., published in Frontiers in Cellular Neuroscience in January 2022, details the role of small-conductance, calcium-activated potassium (SK) channels in developing and regenerating chick hair cells.

Analysis of the functional expression of the SK current showed that the most dramatic changes occurred between embryonic day 8 and embryonic day 16. Further, the SK current is present in developing tall hair cells, where it contributes to the patterning of spontaneous electrical activity, and it reappears in regenerating hair cells. This study provides direct evidence that the functional expression of the SK current may contribute to the patterning of spontaneous action potentials in developing and regenerating chick tall hair cells.

This is adapted from a paper in Frontiers in Cellular Neuroscience by Snezana Levic, Ph.D. A 2008 ERG scientist, Levic is a lecturer in physiology at Brighton and Sussex Medical School in the U.K.

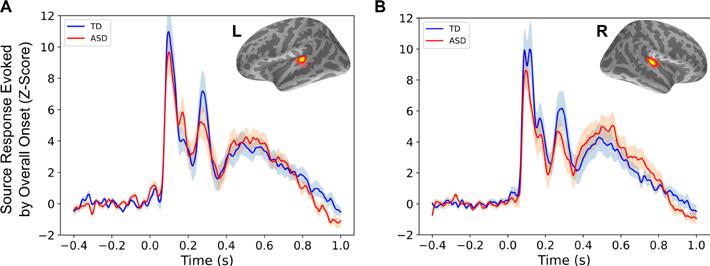

Why Children With Autism May Experience Auditory Sensory Overload

The successful navigation of complex everyday environments with multiple sensory inputs (such as restaurants, busy streets, and other social settings) relies on the brain’s ability to organize the barrage of information into discrete perceptual objects on which cognitive processes, such as selective attention, can act. Failure of this scene-segregation process, in which one sound source stands out as the foreground “figure” and the remaining stimuli form the “background,” can result in an overwhelming sensory experience that makes it difficult to focus attention selectively on one source of interest while suppressing the others.

The experience of sensory overload and difficulty with being able to selectively listen to a foreground sound source of interest are ubiquitous in autism spectrum disorders (ASD). To group sound elements scattered across different frequencies correctly into individual sources (e.g., components of a speaker’s voice vs. components of background traffic noise in a busy street), the brain must carefully analyze the temporal coherence across the different sound elements in a mixture.

A leading hypothesis about sensory processing abnormalities in ASD is that this kind of temporal “synthesis” of sensory information is atypical. This hypothesis stems from behavioral data indicating that individuals with ASD often show impaired processing of analogous dynamic stimuli in the visual domain, such as the coherent motion of visual dots. Although behavioral evidence in ASD is consistent with the impaired-temporal-synthesis hypothesis, direct neural correlates had not been identified.

In our study published in PLOS Biology in February 2022, we used a novel auditory paradigm to investigate whether auditory temporal coherence processing is atypical in ASD. By manipulating temporal coherence in the scene with synthetic sounds, we designed the paradigm so that the acoustic features of the stimulus perceptually bind together into auditory objects with different levels of salience, with the salience of the foreground “figure” object increasing parametrically with temporal coherence.
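The idea that temporal coherence controls how strongly a "figure" pops out can be made concrete with a stimulus in the style of stochastic figure-ground sounds: a sequence of brief chords built from randomly chosen tones, in which a fixed subset of frequencies (the figure) repeats across consecutive chords while the background tones change every chord. This is a minimal sketch, not the exact stimulus used in the PLOS Biology study; the frequency pool, chord counts, and the function name `sfg_chords` are hypothetical choices, and the code generates only frequency lists rather than audio. The `coherence` parameter is the number of repeated figure tones, which is the knob that makes the figure more or less salient.

```python
import random

def sfg_chords(n_chords=40, n_background=10, coherence=4,
               figure_start=15, figure_len=10, seed=0):
    """Return (figure_frequencies, chords) for a figure-ground tone sequence.

    Each chord is a sorted list of tone frequencies in Hz. Background tones are
    redrawn at random for every chord; the `coherence` figure tones stay the same
    in every chord from figure_start to figure_start + figure_len - 1.
    """
    rng = random.Random(seed)  # seeded so the sequence is reproducible
    # Hypothetical pool: 129 tones spaced 1/24 octave apart, 180 Hz to about 7.3 kHz.
    pool = [round(180 * 2 ** (i / 24), 1) for i in range(129)]
    figure = rng.sample(pool, coherence)
    chords = []
    for t in range(n_chords):
        chord = set(rng.sample(pool, n_background))
        if figure_start <= t < figure_start + figure_len:
            chord |= set(figure)  # temporally coherent components repeat across chords
        chords.append(sorted(chord))
    return figure, chords

figure, chords = sfg_chords(coherence=6)
print(sorted(figure))
print(all(set(figure) <= set(chords[t]) for t in range(15, 25)))
```

Raising `coherence` from, say, 2 to 8 adds more tones that change together over time, which in this kind of paradigm makes the figure easier to hear out from the randomly varying background.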

As hypothesized, we found that children with ASD had significantly reduced evoked responses to the pop-out of the foreground figure, alongside a lower magnitude of induced gamma band activity. Importantly, the cortical measures were not correlated with the behaviorally assessed ability to suppress attention to distractors, suggesting that lower-level auditory processes contribute to the observed abnormalities. The cortical measures did, instead, correlate with both ASD severity and abnormality of auditory sensory processing, thus predicting how children within the ASD group would stratify behaviorally.

These results suggest that neural processing of the temporal coherence of the acoustic constituents of an auditory stimulus is atypical in ASD. Given the importance of temporal coherence as a binding and scene-segregation cue in natural sounds, atypical processing of temporal coherence in ASD could contribute to poorer object binding, as has been suggested, and indirectly demonstrated, in the visual domain in ASD.

In scenes with multiple sound sources, the reduced growth of the response with temporal coherence could lead to foreground sounds “standing out” less saliently and a reduced ability to filter out extraneous sounds, contributing to a feeling of sensory overload. This would inevitably also impact speech and language processing, which are highly temporally sensitive, especially in environments with multiple sound sources. Indeed, speech perception impairments in noisy environments in particular have been documented in ASD.

One key advantage of our novel stimuli and the passive design is that the paradigm is translatable to patient populations in which behavioral assessments cannot easily be performed (such as infants and toddlers). The paradigm is also applicable to animal models of ASD and other neurodevelopmental disorders. In sum, our observations of reduced growth of neural responses with increasing temporal coherence of auditory stimuli in ASD provide new insights into the mechanisms that may ultimately contribute to the sensory processing deficits and social-communication challenges that are characteristic of ASD. If borne out in larger-scale studies, our findings also raise the possibility that audiological interventions (such as remote microphones in classroom settings) that help those with central auditory processing challenges may also be beneficial for children with ASD. —Hari Bharadwaj, Ph.D.

Responses evoked by overall stimulus onset, as shown in PLOS Biology. (A) Averaged left hemisphere evoked responses relative to stimulus onset, in source space, based on individually identified regions of interest (inset), for each group. (B) Same as (A), for the right hemisphere. Shaded areas show standard error per group. Abbreviations: ASD, autism spectrum disorder; L, left; R, right; TD, typically developing.

A 2015 ERG scientist generously funded by Royal Arch Research Assistance, Hari Bharadwaj, Ph.D., is an assistant professor at Purdue University with a joint appointment in speech, language, and hearing sciences, and biomedical engineering.

Pinpointing How Older Adults Can Better Hear Speech in Noise

In real-world listening situations, we usually listen to speech in the presence of other sources of masking, or competing sounds. One of the major sources of masking in such situations is speech that the listener is not paying attention to.

Young individuals with typical hearing can tolerate moderate amounts of speech distortion with minimal reduction in speech understanding, while older individuals with and without hearing loss are much more susceptible to distortions of the speech signal and often have more difficulty understanding speech in noisy environments.

The process of understanding target speech in the presence of masking speech partially involves separating out and attending to the acoustic information of the target speech while ignoring the acoustic information of the masking speech. Target speech and masking speech can be segregated based on factors such as the locations of the target and maskers in the environment, differences in fundamental frequencies between the target and maskers, interaural (between the two ears) time differences, interaural level differences, or differences in contextual information between the target and maskers.

Spatial release from masking (SRM) is the reduction in target identification thresholds when the maskers are physically distanced from the target. Results from recent speech masking studies confirmed that an individual’s ability to obtain a better identification threshold when the target and maskers are spatially separated is driven by a combination of better-ear listening, binaural (using both ears) unmasking, and perceived location of the target and the maskers.
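Because SRM is defined as a reduction in threshold, it is simply the difference between two thresholds measured for the same listener. A minimal sketch, assuming thresholds are expressed as target-to-masker ratios in dB (lower is better); the function name and the threshold values are hypothetical, not results from the study.

```python
def spatial_release_from_masking(colocated_db, separated_db):
    """SRM in dB: colocated threshold minus spatially separated threshold.

    Thresholds are target-to-masker ratios (dB) at the identification criterion,
    so a positive SRM means separating the maskers from the target helped.
    """
    return colocated_db - separated_db

# Hypothetical thresholds for one listener (not data from the study).
colocated = 2.0    # target and maskers presented from the same location
separated = -6.5   # maskers moved away from the target
print(spatial_release_from_masking(colocated, separated))  # 8.5
```

Here the listener can identify the target at a 6.5 dB less favorable target-to-masker ratio once the maskers are separated, giving 8.5 dB of spatial release.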

Better-ear listening is an auditory phenomenon created by the head shadow effect, in which the listener uses the interaural level differences between the two ears to better understand speech. Binaural unmasking is the improvement in understanding speech in noise that occurs when there is an interaural time difference between the signals reaching the two ears.

An individual’s temporal acuity is typically described using the gap detection threshold, the shortest silent interval in a stimulus that the listener can detect. While many studies have concluded that older individuals have broader difficulties in temporal and spatial processing tasks, few studies have measured multiple temporal and spatial processing abilities in the same individuals.

We sought to measure the temporal and spatial processing capabilities of older individuals with hearing loss and to use statistical models to identify the individual contributions of gap detection thresholds and localization acuity to SRM. Twenty-five older listeners with varying degrees of hearing loss participated in this experiment, the results of which were published in the International Journal of Audiology in November 2021. We found that spatially separating target and masker sources helps to better identify speech in speech-on-speech masking scenarios, and that difficulties in spatial and temporal processing co-occur in older individuals and individuals with reduced hearing acuity.

However, we did not observe reduced temporal and spatial processing due to aging independent of reduced hearing acuity. Multiple regression models predicting SRM from gap detection thresholds and localization acuity indicated that the listeners’ ability to use interaural time differences between the signals arriving at the two ears is responsible for their better identification thresholds in spatially separated conditions. —Nirmal Kumar Srinivasan, Ph.D.
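For readers unfamiliar with multiple regression, the sketch below fits an ordinary least-squares model of the form SRM ≈ b0 + b1 × (gap detection threshold) + b2 × (localization error) using NumPy. It is an illustration only: the study's exact model specification is not described here, the function name is hypothetical, and the 25 "listeners" are simulated values, not the published data.

```python
import numpy as np


def fit_srm_model(gap_ms, loc_error_deg, srm_db):
    """Ordinary least squares: SRM ~ b0 + b1*gap + b2*localization error.

    Returns the coefficients [b0, b1, b2] and the model's R^2.
    """
    # Design matrix: intercept column plus the two predictors.
    X = np.column_stack([np.ones_like(gap_ms), gap_ms, loc_error_deg])
    coef, *_ = np.linalg.lstsq(X, srm_db, rcond=None)
    pred = X @ coef
    ss_res = np.sum((srm_db - pred) ** 2)
    ss_tot = np.sum((srm_db - srm_db.mean()) ** 2)
    return coef, 1.0 - ss_res / ss_tot


rng = np.random.default_rng(0)
n = 25  # same number of listeners as the study; all values below are simulated
gap = rng.uniform(2.0, 20.0, n)   # hypothetical gap detection thresholds (ms)
loc = rng.uniform(5.0, 30.0, n)   # hypothetical localization errors (degrees)
# Simulated SRM (dB): worse temporal or spatial acuity -> less release from masking.
srm = 12.0 - 0.3 * gap - 0.1 * loc + rng.normal(0.0, 1.0, n)

coef, r2 = fit_srm_model(gap, loc, srm)
print("intercept, gap slope, localization slope:", np.round(coef, 2))
print("R^2:", round(r2, 2))
```

The fitted slopes should land close to the simulated values (-0.3 and -0.1); with real data, the size and significance of each slope indicates how much that ability contributes to SRM.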

A 2016 ERG scientist generously funded by Royal Arch Research Assistance, Nirmal Kumar Srinivasan, Ph.D., is an assistant professor in the audiology program in the speech-language pathology and audiology department at Towson University in Maryland.

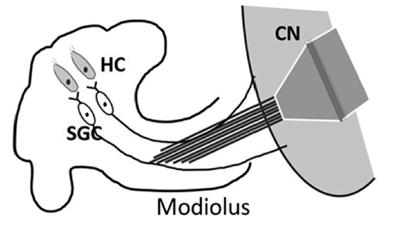

This is an illustration of cochlear implantation via the modiolus approach used in the rat model for this study in Measurement and Control. Abbreviations: HC: hair cell; SGC: spiral ganglion cell; CN: cochlear nucleus.

Using Algorithms to Measure Response Times to Auditory Nerve Stimulation

How neural information travels among multiple areas of the brain has been of great interest in the field of neurocomputation, and several computational methods have been developed and applied to measure this neural information flow.

Measurement of the information flow along the ascending auditory pathway, from the periphery to the auditory cortex (AC), has received much attention in neurocomputation. While the neurophysiological mechanisms of the auditory pathway have been well studied, the temporal resolution of and relationships among the auditory centers are still under investigation, especially after acoustic trauma, which results in peripheral deficits and signal changes in the auditory system.

In our study published in the journal Measurement and Control in April 2022, we chronically implanted electrodes in the rat auditory nerve (AN), using the modiolus approach, and in the auditory cortex. We recorded AC neuron and AN signals in digital format and used two new algorithms to calculate the neural response time of AC neurons to electrical stimulation of the AN, quantifying the neural information flow in the temporal domain.

The proposed algorithms could provide insight into the signal response in the AC and how it shifts during the development of hyperactivity (a neural mechanism of tinnitus), and lead to an in-depth understanding of information flow within the auditory pathway. This will help us better understand the mechanisms of tinnitus.

One algorithm directly compared the time differences between neural spikes, based on the conventional spike-train approach in neurocomputation. The other employed a modified cross-correlation algorithm.
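The two kinds of approach can be illustrated on simulated data. The sketch below assumes spike and stimulus times in seconds, uses a first-spike latency measure for the direct comparison, and uses a plain (unmodified) cross-correlation of binned spike trains, because the paper's modification is not described here. The function names, the 12 ms response delay, and all other parameters are hypothetical.

```python
import numpy as np


def first_spike_latencies(stim_times, spike_times, max_latency):
    """Direct comparison: latency from each AN stimulus to the first AC spike after it."""
    spike_times = np.sort(spike_times)
    latencies = []
    for t in stim_times:
        i = np.searchsorted(spike_times, t, side="right")  # first spike strictly after t
        if i < len(spike_times) and spike_times[i] - t <= max_latency:
            latencies.append(spike_times[i] - t)
    return np.array(latencies)


def xcorr_peak_lag(stim_times, spike_times, duration, bin_s, max_lag_bins):
    """Plain cross-correlation: lag (s) at which the binned AC spike train
    best lines up with the binned stimulus train (AC lagging AN)."""
    n_bins = int(np.ceil(duration / bin_s))
    edges = (0.0, n_bins * bin_s)
    a = np.histogram(stim_times, bins=n_bins, range=edges)[0].astype(float)
    b = np.histogram(spike_times, bins=n_bins, range=edges)[0].astype(float)
    a -= a.mean()
    b -= b.mean()
    lags = np.arange(max_lag_bins + 1)
    cc = [np.dot(a[: n_bins - k], b[k:]) for k in lags]  # b shifted k bins later
    return lags[int(np.argmax(cc))] * bin_s


rng = np.random.default_rng(1)
duration = 60.0
stim = 0.0005 + np.arange(600) * 0.1                         # simulated AN stimulation times (s)
evoked = stim + 0.012 + rng.normal(0.0, 0.001, stim.size)    # assumed ~12 ms AC response
background = rng.uniform(0.0, duration, 300)                 # ~5 spikes/s spontaneous firing
ac_spikes = np.sort(np.concatenate([evoked, background]))

lat = first_spike_latencies(stim, ac_spikes, max_latency=0.05)
print("median first-spike latency (ms):", round(1000 * np.median(lat), 1))
print("cross-correlation peak lag (ms):",
      round(1000 * xcorr_peak_lag(stim, ac_spikes, duration, 0.001, 50), 1))
```

On this simulated recording both estimates should land near the assumed 12 ms delay; agreement between independent methods is the kind of consistency the proof-of-concept relied on.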

To test the effectiveness of the proposed methods, we used both algorithms to calculate the AC-to-AN time difference from the collected raw neural signals.

The animals were measured before and after noise trauma, and one of the animals received intra-modiolus electrical stimulation of the AN.

In this proof-of-concept study, the consistent results from the two algorithms support their use in quantifying AC time delays in response to AN stimulation, which could help interpret temporal information processing along the auditory pathway.

For future work, a larger sample size of animals will be used and multichannel neural signals will be simultaneously collected in the AC and other areas of the brain, allowing us to develop a more complete measurement system to analyze the temporal relations along the auditory pathways.

Together, the temporal relations and correlations would provide a clearer view of the neural information flow and improve our understanding of the mechanisms of tinnitus-related neural activity in auditory brain structures. —Hao Luo, M.D., Ph.D.

A 2019 ERG scientist generously funded by Royal Arch Research Assistance, Hao Luo, M.D., Ph.D., is an instructor at Henry Ford Health System in Detroit.

Speech recognition in noise is typically poorer for older adults than for younger adults, which can reflect a combination of sensory and cognitive-perceptual declines. Considerable variability, with some older adults performing nearly as well as younger adults, may be explained by differences in listening effort or in the cognitive resources that support speech recognition. Neuroimaging evidence suggests that a set of frontal cortex regions is engaged as listening difficulty increases, but it is unclear how this might facilitate speech recognition. Performance on speech-recognition-in-noise tasks has been linked to activity in cingulo-opercular regions of the frontal cortex, in what appears to be a performance-monitoring role. More extensive frontal cortex activity during challenging listening tasks has been shown for older compared with younger adults, which could reflect task difficulty.

Our study, published in the journal NeuroImage in June 2022, examined neural mechanisms that may support speech understanding in noise and other difficult recognition tasks. In relatively quiet acoustic conditions, speech recognition often occurs very rapidly, mapping sensory input (sound) onto stored mental representations. However, when speech information is distorted or limited by background noise, recognition often becomes slow and effortful.

Recognition based on incomplete sensory information (e.g., in noisy conditions) is theorized to involve perceptual decision-making processes that collect evidence until a recognition criterion is met. A perceptual decision-making theoretical framework has been used to account for the speed-accuracy tradeoff, in which fast responses are associated with low accuracy and slow responses with high accuracy.
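The evidence-collection idea is often formalized as a drift-diffusion model, in which noisy evidence accumulates until it reaches one of two decision boundaries. The sketch below is a generic version of that model, not necessarily the model fitted in the NeuroImage paper; the function name and the drift rate (how quickly evidence is collected) and criterion (boundary height) values are arbitrary assumptions. Raising the criterion should make decisions slower but more accurate, reproducing the speed-accuracy tradeoff.

```python
import numpy as np


def simulate_trials(drift, criterion, n_trials=2000, dt=0.001, noise=1.0,
                    max_t=20.0, seed=0):
    """Two-boundary drift-diffusion: evidence starts at 0 and accumulates noisily
    until it reaches +criterion (correct) or -criterion (error).

    Returns (accuracy, mean decision time in s) over trials that finished by max_t.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(n_trials)                 # accumulated evidence per trial
    rt = np.full(n_trials, np.nan)         # decision time per trial
    done = np.zeros(n_trials, dtype=bool)
    correct = np.zeros(n_trials, dtype=bool)
    for step in range(1, int(max_t / dt) + 1):
        active = ~done
        if not active.any():
            break
        # Euler step: deterministic drift plus Gaussian noise scaled by sqrt(dt).
        x[active] += drift * dt + noise * np.sqrt(dt) * rng.standard_normal(active.sum())
        hit = active & (np.abs(x) >= criterion)
        rt[hit] = step * dt
        correct[hit] = x[hit] > 0
        done |= hit
    return correct[done].mean(), np.nanmean(rt)


acc_lo, rt_lo = simulate_trials(drift=1.0, criterion=1.0)
acc_hi, rt_hi = simulate_trials(drift=1.0, criterion=2.0)
print(f"low criterion:  accuracy {acc_lo:.2f}, mean decision time {rt_lo:.2f} s")
print(f"high criterion: accuracy {acc_hi:.2f}, mean decision time {rt_hi:.2f} s")
```

Lowering the drift rate instead, for example `simulate_trials(drift=0.5, criterion=1.0)`, slows evidence collection and reduces accuracy, which is one way to picture a poorer signal-to-noise ratio within this framework.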

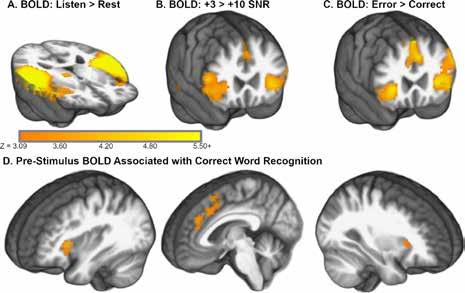

A figure from the NeuroImage paper shows that the word recognition task significantly increased activity throughout superior temporal regions and cingulo-opercular regions of the cortex.

We used an fMRI (functional magnetic resonance imaging) experiment with middle-aged and older participants to examine perceptual decision-making activity, particularly in cingulo-opercular regions across the frontal cortex. Participants were instructed to listen to a word presented in a multi-talker babble environment (e.g., cafeteria noise), and repeat the word aloud. A sparse design with long, quiet gaps between scans (6.5 seconds) allowed speech to be presented without scanner acquisition noise and minimized speech-related head movement during image collection.

We found that word recognition was significantly less accurate with increasing age and in the poorer signal-to-noise ratio condition. A computational model fitted to task response latencies showed that evidence was collected more slowly and decision criteria were lower in the poorer of the two signal-to-noise ratio conditions.

Our fMRI results replicated an association between higher pre-stimulus BOLD contrast (i.e., measured prior to each presentation) in a cingulo-opercular network in the frontal cortex and subsequent correct word recognition in noise. Consistent with neuroimaging studies that linked cingulo-opercular regions in the frontal cortex with decision criteria, our results also suggested that decision criteria were higher and more cautious on trials with elevated activity. The takeaway is that perceptual decision-making accounted for changes in recognition speed and was linked to the frontal cortex activation commonly observed in difficult listening tasks.

In summary, our study indicates that 1) perceptual decision-making is engaged for difficult word recognition conditions, and 2) frontal cortex activity may adjust how much information is collected to benefit word recognition task performance.

These findings potentially connect neuroscientific research on perceptual decision-making, often focused on visual object recognition, with our research on age-related listening difficulties and (nonauditory) frontal cortex function in these tasks. Similar neural mechanisms may help guide how a person drives a car in heavy fog or listens to a friend speaking in a crowded restaurant. In either case, a related set of brain networks may collect evidence until recognition occurs. Additional studies will characterize how evidence collection and decision criteria relate to hearing loss and older age. —Kenneth Vaden, Ph.D.

A 2015 ERG scientist generously funded by Royal Arch Research Assistance, Kenneth Vaden, Ph.D., is an assistant professor in the department of otolaryngology–head and neck surgery at the Medical University of South Carolina, where HHF board member Judy R. Dubno, Ph.D., one of the paper’s coauthors, is a professor.

For references, see hhf.org/summer2022-references.