Large Language Models and ChatGPT: What They Are and What They Are Not

Walid S. Saba, PhD, Institute for Experiential AI, Northeastern University

Image Created by ChatGPT

AGI is near


but there are calls to tone down the hype

But maybe all that happened is this …

We have …

Enormous computing power

Abundance of big data

⇒ We were able to do analytics at enormous scale and find useful patterns in data

But we do not have any human-like understanding and reasoning


And we (definitely) do not have to fear a superintelligent AI


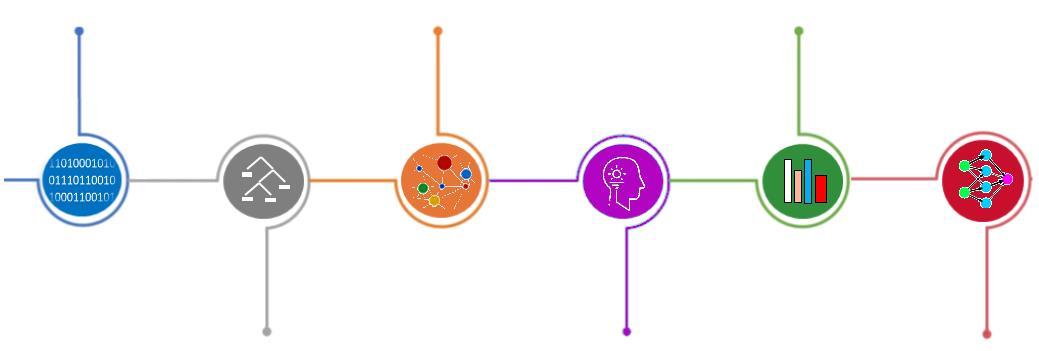

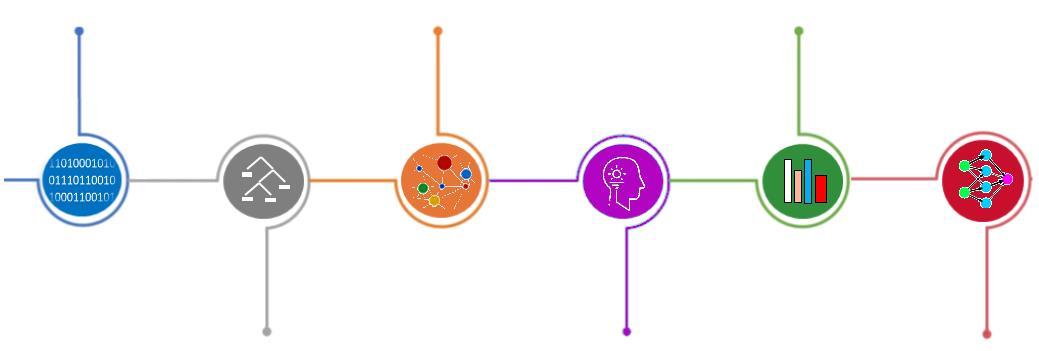

Outline

DISTANT PAST

RECENT PAST

Language understanding: a brief history of time

Top-down vs. bottom-up (theory-driven vs. data-driven)

PRESENT

The age of big data and large language models

LLMs and GPT: what exactly happened in AI?

Practical applications of LLMs

FUTURE

Issues with LLMs: Hallucinations, Bias/Toxicity, Explainability

Do LLMs really understand language?

The road ahead: what is next in language understanding

A Brief History of Time

[Figure: timeline of approaches to language understanding. Periods: 1940s to 1950s, 1960s to 1970s, 1970s to 1980s, 1980s to 1990s, 1990s to 2010s, 2010s to present. Approaches shown: information-theoretic; ontologies, semantic networks; logical semantics; GOFAI; statistical/empirical; syntax/grammar-based; cognitive linguistics; ontologies, logic; frames, CDs; neural nets/DL.]

A Brief History of Time

Throughout its history AI has teetered back and forth between rationalism and empiricism:

• top-down vs. bottom-up

• theory-driven vs. data-driven

• deduction vs. induction

• logic vs. statistics

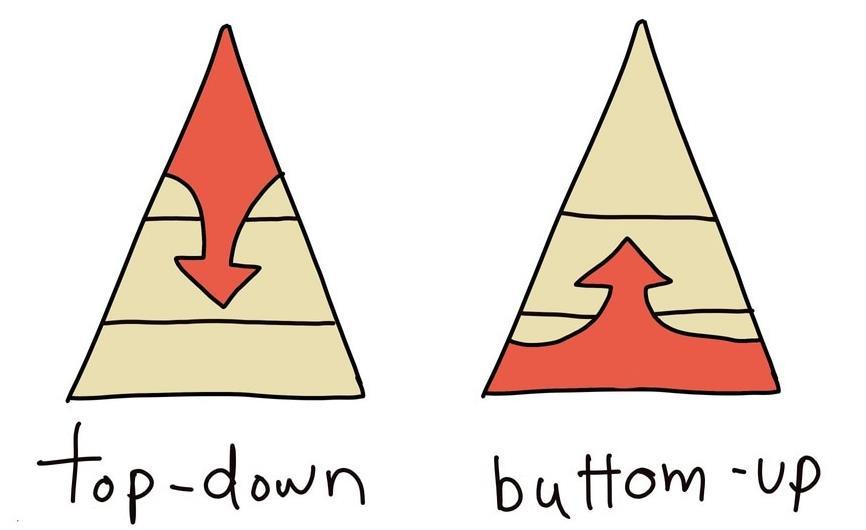

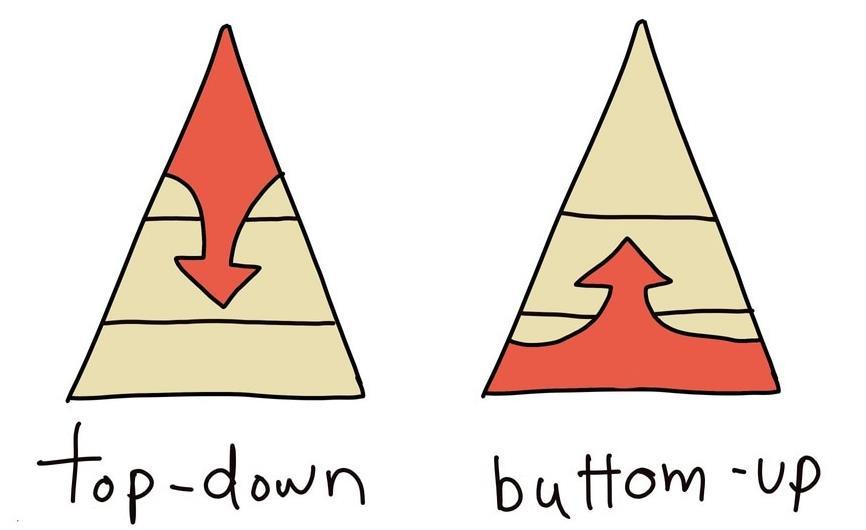

Top-Down (theory-driven) vs. Bottom-Up (data-driven)

TOP-DOWN (theory-driven) approaches start with a theory and then try to produce observations that validate that theory

BOTTOM-UP (data-driven) approaches begin by collating observations in the real world and trying to construct a theory from them


Top-down (rationalism) vs. Bottom-up (empiricism)

For decades most NLP work was inspired by generative linguistics, psycholinguistics, cognitive linguistics, and/or logical semantics (Chomsky, Pinker, Lakoff, Montague, Jackendoff, Fodor)

But for top-down approaches to work we must have some generally agreed-upon principles to start from, while most of the above theories/models were based on questionable assumptions

It is no surprise then that decades of top-down work in NLP failed to deliver while a few years of bottom-up work made significant progress

The Take Over of Empiricism


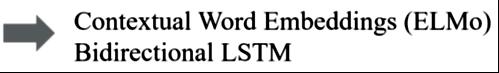

In the Beginning there were Embeddings

Empirical methods provided a solution to the symbol grounding problem

M(word) → Vector of Real Numbers

The Distributional Semantics Hypothesis

• to know a word is to know the company it keeps (Firth)

• similarity in meaning is similarity in linguistic distribution (Harris)

vector(king) - vector(man) + vector(woman) ≈ vector(queen)
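The analogy arithmetic above can be sketched with a few toy vectors. This is only an illustration: the three-dimensional embeddings below are hand-picked assumptions, not values from any trained model, and `analogy` is a hypothetical helper name.

```python
import math

# Toy 3-dimensional embeddings, hand-picked for illustration only
# (real embeddings have hundreds of dimensions and are learned from text).
EMB = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.5, 0.5, 0.5],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def analogy(a, b, c, emb):
    """Return the word whose vector is closest to emb[a] - emb[b] + emb[c]."""
    target = [x - y + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = [w for w in emb if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, emb[w]))

print(analogy("king", "man", "woman", EMB))
```

With real embeddings the result is the nearest neighbour of the computed vector, so the equality holds only approximately.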


In the Beginning there were Embeddings

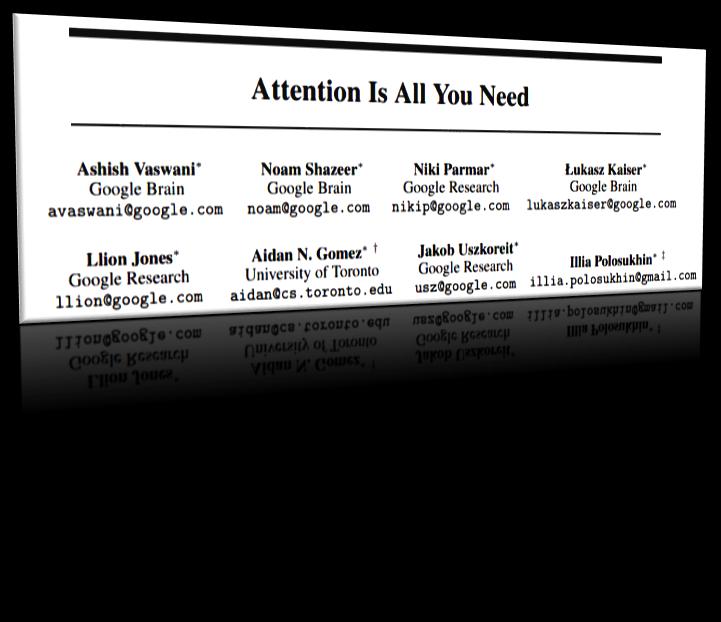

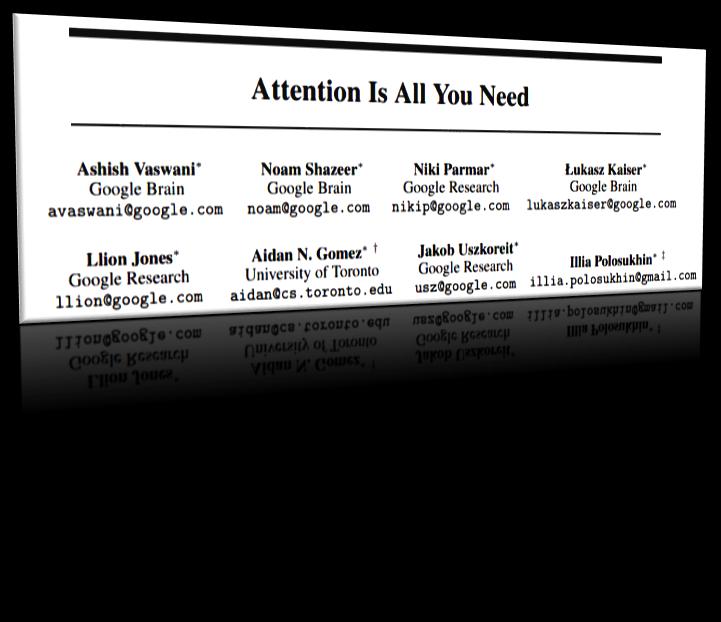

Encode position of word in context and encode information of a wider attention window

https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/

https://machinelearningmastery.com/a-gentle-introduction-to-positional-encoding-in-transformer-models-part-1/


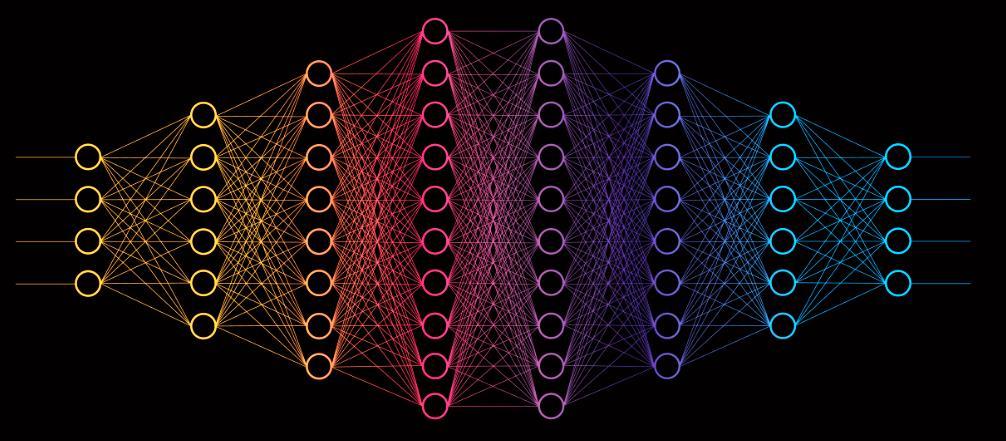

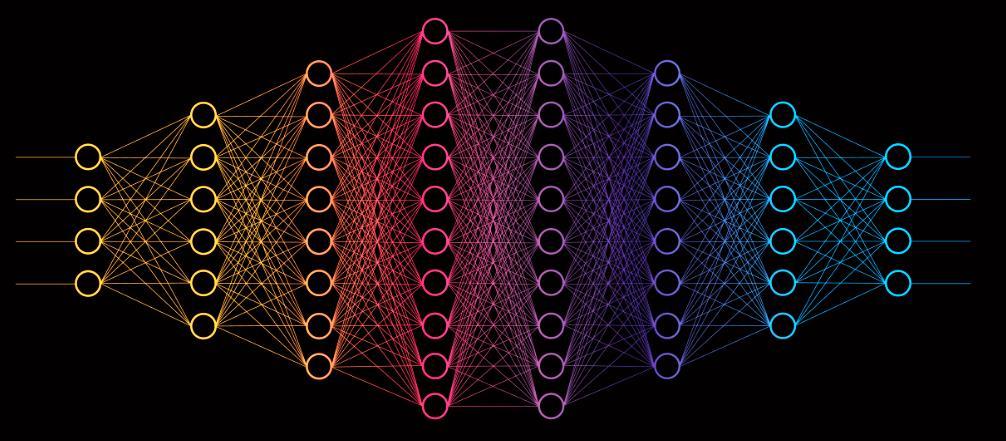

Computations in DNNs are not Explainable

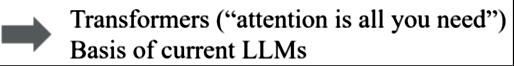

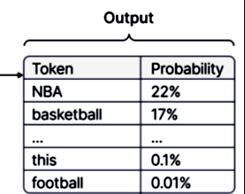

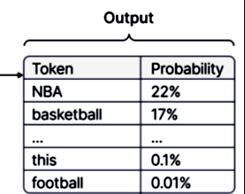

By recursively ‘predicting’ the next token, LLMs can complete any conversation with syntactically and semantically coherent language

The next token is not always the one with the absolute highest probability: some randomness is added to simulate ‘creativity’.
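One common way to add this kind of randomness is temperature sampling. The sketch below is a minimal illustration under that assumption; the slide does not say which sampling scheme a given model uses, and the function names and logits are hypothetical.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

vocab = ["book", "car", "idea"]   # hypothetical candidate tokens
logits = [2.0, 1.0, 0.0]          # hypothetical model scores
print(sample_next_token(vocab, logits, temperature=0.8))
```

As the temperature approaches zero the sampler almost always picks the highest-scoring token; higher temperatures spread the probability over more candidates.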


bottom-up

reverse engineering of language at scale

ingested a corpus with a trillion+ words

10,000,000 books

2,000+ people living until 80: a ‘GPT child’ would speak when 160,000 years old

SAM ALTMAN, CEO, OpenAI

further progress will not come from making models bigger

the economics of scale have turned against the ‘bigger is better’ approach

A very good paper that surveys various LLMs, their history, applications, limitations, challenges, etc.

LLMs can be used in many downstream tasks

from transformers import BertModel, LlamaModel

from transformers import pipeline

# Ready-made pipelines for common downstream tasks

smp = pipeline(task="summarization", model="facebook/bart-large-cnn")

qap = pipeline(task="question-answering", model="deepset/roberta-base-squad2")

tgp = pipeline(task="text-generation", model="gpt2")

fmp = pipeline(task="fill-mask", model="bert-large-uncased-whole-word-masking")

# Question answering over a context passage

res1 = qap(question="where was Liz Taylor born?", context=<some relevant content … >)

# => 'London, England'

# Top-3 candidates for the masked word

res2 = fmp("this is a very [MASK] book", top_k=3)

# => [(famous, 0.82), (popular, 0.76), (controversial, 0.68)]

LLMs can be used in many downstream tasks

As a resource that has harvested most content that humanity has created, LLMs can be used effectively in many tasks

LLMs can be used in many downstream tasks

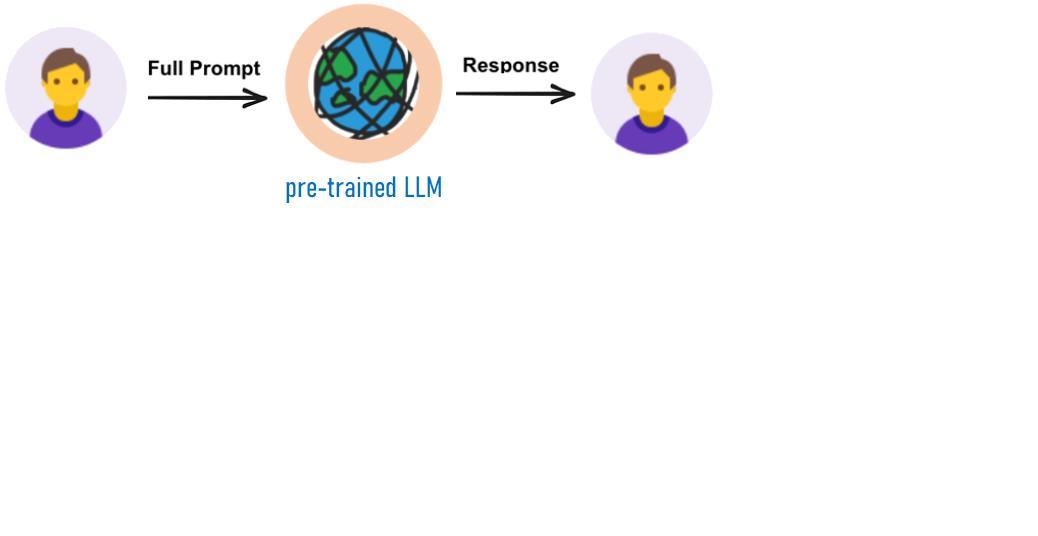

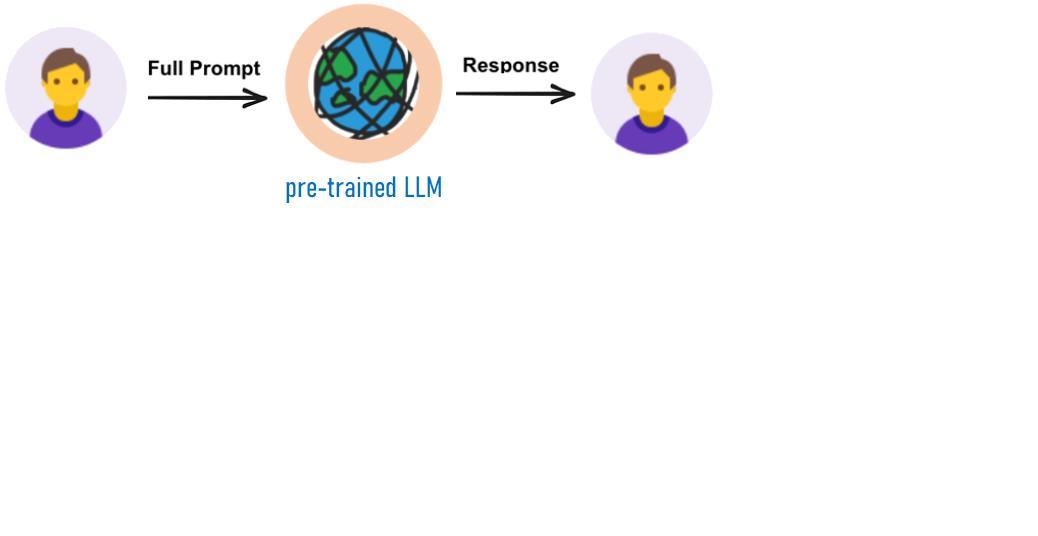

semantic search

• prompt engineering

• RAG (retrieval-augmented generation)

• fine-tuning

• retrain from scratch
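To make the RAG option concrete, here is a minimal sketch of the retrieval step followed by prompt assembly. It assumes a tiny in-memory document list and plain bag-of-words cosine similarity in place of learned embeddings; `DOCS`, `retrieve`, and `build_prompt` are hypothetical names, and the final call to a language model is left out.

```python
import math
import re
from collections import Counter

# Hypothetical document store; a real system would index many passages.
DOCS = [
    "Elizabeth Taylor was born in London, England.",
    "BERT is trained with a masked language modeling objective.",
    "Retrieval augmented generation adds retrieved passages to the prompt.",
]

def bag_of_words(text):
    """Lowercased word counts, a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]

def build_prompt(query, docs, k=1):
    """Prepend the retrieved passages to the question before calling an LLM."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

print(build_prompt("Where was Liz Taylor born?", DOCS))
```

The model then answers from the supplied context rather than from whatever it memorized during training, which is why RAG is often the first thing to try before fine-tuning or retraining.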


Present: Theoretical Issues with LLMs

LLMs are not language ‘models’

We will never see a theory/model of language inside.

What we will see inside are exactly the same things we will see in a NN that does image recognition or any other task. The logic of a NN is in the weights!

Similarity is not Enough !!!

MINDS AND MACHINES

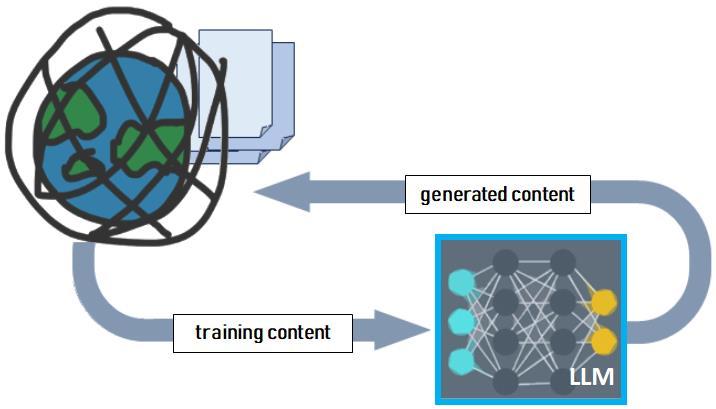

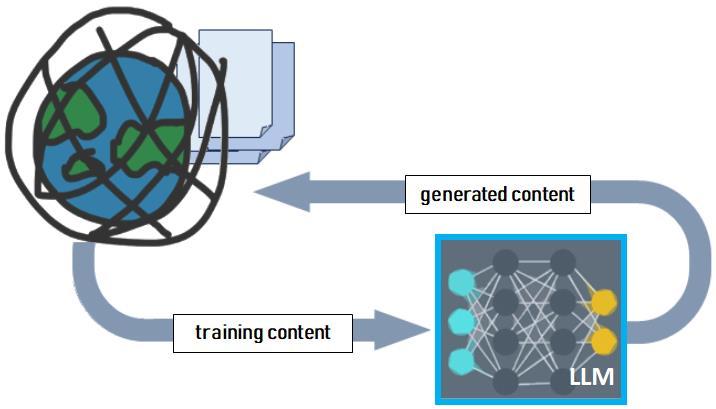

Infinite Objects Cannot be Approximated

LLMs cannot encode all of language, and thus no matter how much data they ingest they will not escape Chomsky’s Poverty of the Stimulus: language is an infinite set

research: if LLMs ingest their own generated text, then (i) the LLM will start focusing on very specific patterns; and (ii) the pool of factual information will keep decreasing

model collapse


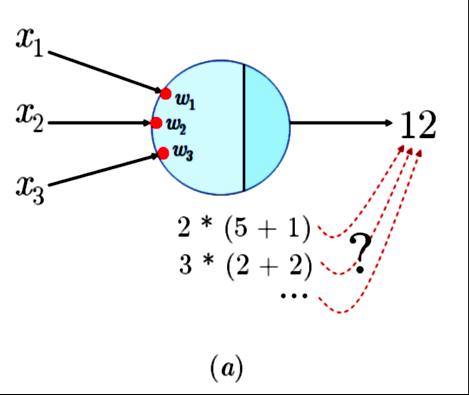

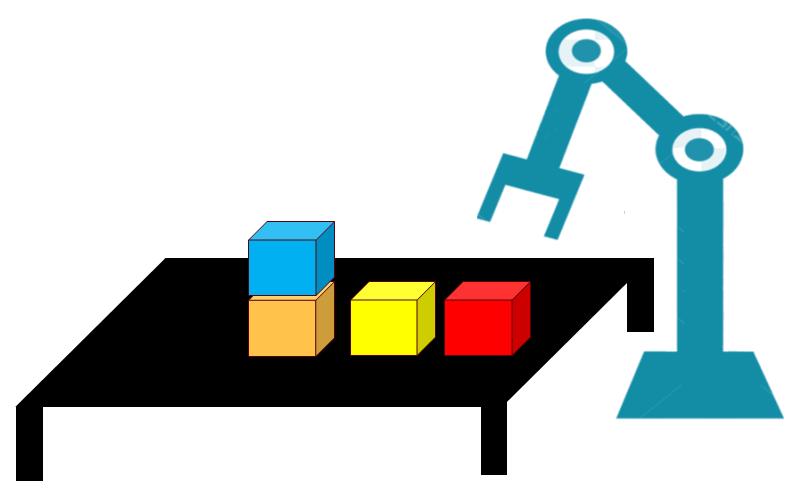

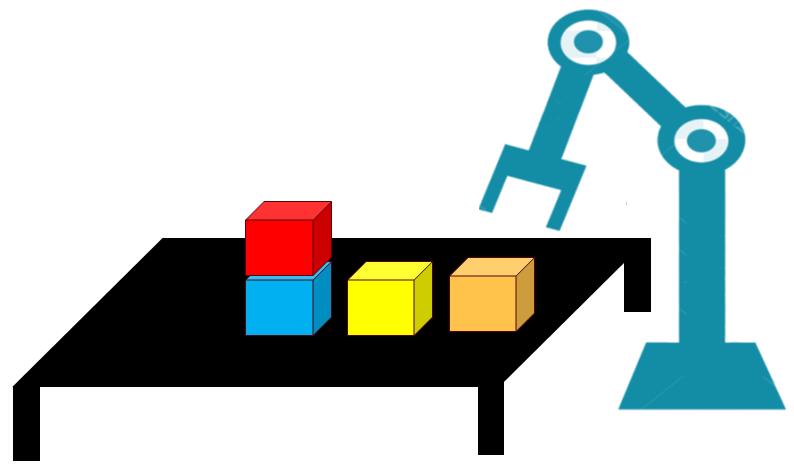

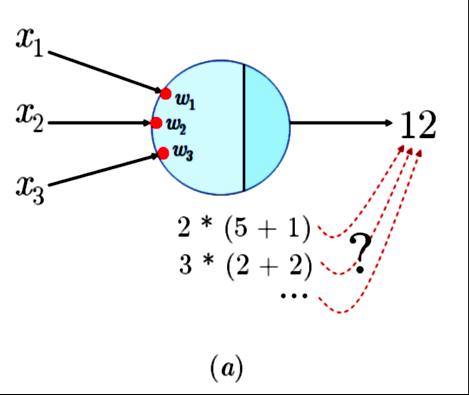

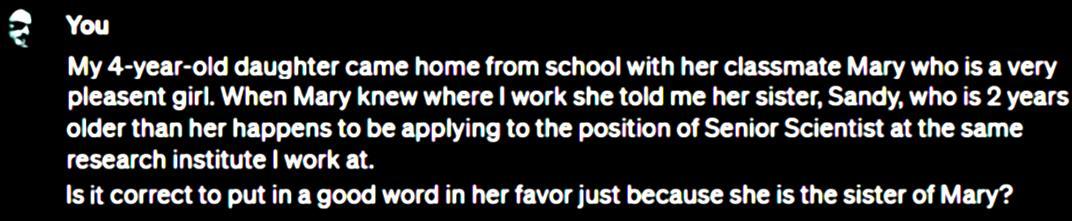

Computations in DNNs are not Explainable

Compositional computation in subsymbolic systems (e.g., DNNs) is not invertible. The decomposition of 12 into its original components is undecidable in (a), unlike in symbolic systems where there are structures that ‘save’ the semantic map of the computation (b).
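The contrast can be sketched in a few lines. The specific operands (7 + 5 and 3 * 4) are assumptions chosen for illustration, since the figure itself is not reproduced here; the point is only that a bare number forgets how it was produced, while an expression tree keeps that record.

```python
from dataclasses import dataclass
from typing import Union

@dataclass(frozen=True)
class Num:
    value: int

@dataclass(frozen=True)
class Op:
    symbol: str          # "+" or "*"
    left: "Expr"
    right: "Expr"

Expr = Union[Num, Op]

def evaluate(e):
    """Collapse a structure to a single number (the structure is lost)."""
    if isinstance(e, Num):
        return e.value
    l, r = evaluate(e.left), evaluate(e.right)
    return l + r if e.symbol == "+" else l * r

def show(e):
    """Recover the computation from the saved structure."""
    if isinstance(e, Num):
        return str(e.value)
    return f"({show(e.left)} {e.symbol} {show(e.right)})"

a = Op("+", Num(7), Num(5))   # hypothetical computation (a)
b = Op("*", Num(3), Num(4))   # hypothetical computation (b)

print(evaluate(a), evaluate(b))  # same number from both
print(show(a), show(b))          # but the trees still differ
```

Given only the value 12 there is no way to tell which computation produced it; given the tree, the decomposition is trivial.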


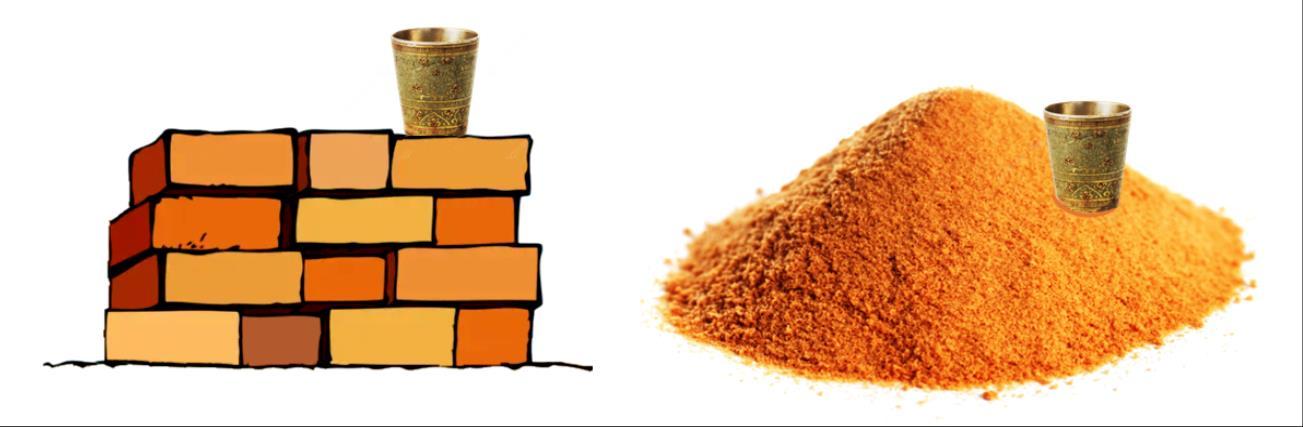

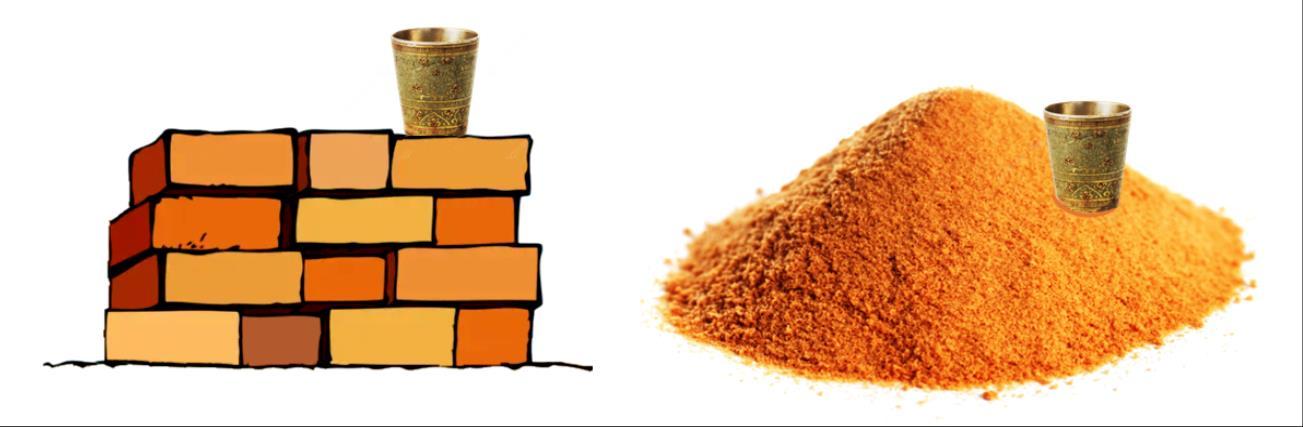

Computations in DNNs are not Explainable

Structured, Symbolic vs. Distributed, Subsymbolic

holding(S10+S11, S7)

can-be-removed(S4)

holding(?, ?)

can-be-removed(?)

S1 S5 S10 S6 S9


Data-Driven, not Fact-Driven

Truthfulness/Ground Truth

For LLMs all ingested text (factual and non-factual) was created equal

Solutions are just hacks: e.g., fine-tuning, RAG

Bias, Toxic language

Hallucination (?)

none of these issues is fixable within the DNN subsymbolic paradigm

LLMs will always be susceptible to bias/toxicity in their training data

Statistics can only approximate semantics


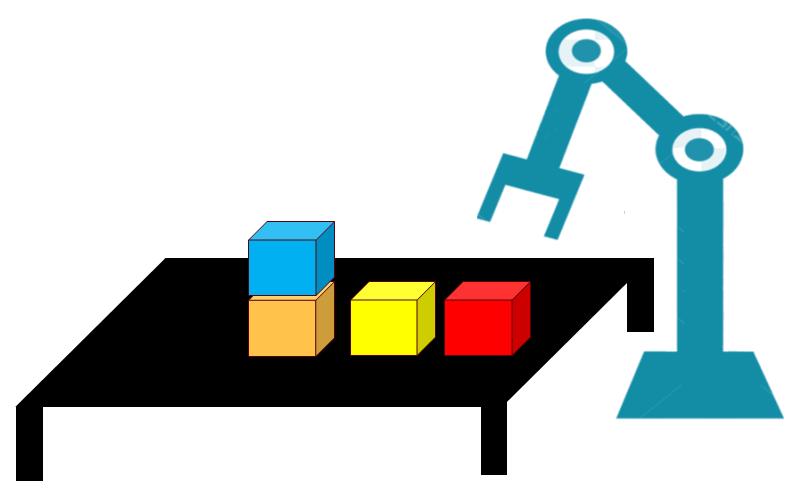

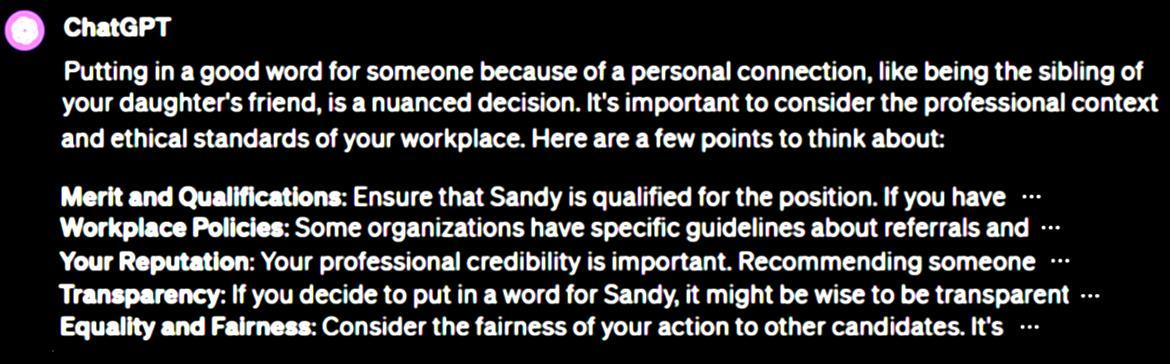

Present: No Reasoning Capabilities in LLMs

No Reasoning over States of Affairs

can-pickup(b) if clear(b) and arm-free()

clear(b) if not ontop(b, x)

put-on-top(b, x) if holding(b) and clear(x) ...
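The rules above can be sketched as an executable blocks-world checker. This is a minimal illustration under stated assumptions: a pair (b, x) in `ontop` is read as "x sits directly on b", and `State`, `pickup`, and the block names are hypothetical. A plan is executable only if every step passes its precondition.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple

@dataclass(frozen=True)
class State:
    # (b, x) means: x sits directly on b (assumed reading of ontop(b, x))
    ontop: FrozenSet[Tuple[str, str]]
    holding: Optional[str] = None

def clear(state, b):
    """clear(b) if not ontop(b, x) for any x."""
    return all(lower != b for lower, _ in state.ontop)

def arm_free(state):
    return state.holding is None

def can_pickup(state, b):
    """can-pickup(b) if clear(b) and arm-free()."""
    return clear(state, b) and arm_free(state)

def pickup(state, b):
    if not can_pickup(state, b):
        raise ValueError(f"cannot pick up {b}")
    remaining = frozenset((lo, up) for lo, up in state.ontop if up != b)
    return State(remaining, holding=b)

def put_on_top(state, b, x):
    """put-on-top(b, x) if holding(b) and clear(x)."""
    if state.holding != b or not clear(state, x):
        raise ValueError(f"cannot put {b} on {x}")
    return State(state.ontop | {(x, b)}, holding=None)

start = State(frozenset({("A", "B")}))          # B sits on A; C is on the table
goal = put_on_top(pickup(start, "B"), "B", "C")  # move B onto C
print(goal)
```

Running a generated plan through such a checker is one way to measure whether it is executable and reaches the goal state.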

INITIAL STATE GOAL STATE

Only about 30% of the plans that LLMs generate are actually executable without errors and reach their goals (Valmeekam et al., 2023).

This type of AI cannot handle the Frame Problem in AI, a problem John McCarthy and Patrick Hayes discovered back in 1969.

LLMs fail with complex scopings

You: If John objected to the statement that ‘Steve's baseball glove is not in the car’, then where does John think Steve's baseball glove is?

ChatGPT: Not in the car.

not (Steve's baseball glove is not (in the car ))
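The nested scoping can be checked mechanically. The sketch below treats "the glove is in the car" as a single truth value (an assumption made for illustration) and asks which value is consistent with John's objection.

```python
def statement(in_car: bool) -> bool:
    """'Steve's baseball glove is not in the car'."""
    return not in_car

def objection_holds(in_car: bool) -> bool:
    """John objects to the statement, i.e. he takes the statement to be false."""
    return not statement(in_car)

# Which belief about the glove is consistent with John's objection?
consistent = [value for value in (True, False) if objection_holds(value)]
print(consistent)
```

The only consistent value is the one where the glove is in the car: the two negations cancel.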

Transformers are unable to model the meaning of negation … these models’ inabilities might not be a consequence of their transformer architectures, but rather may result from the (lack of) structure (Sullivan, M., 2024)


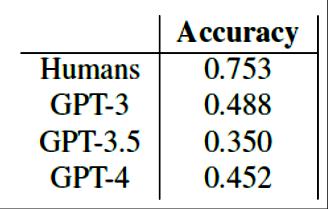

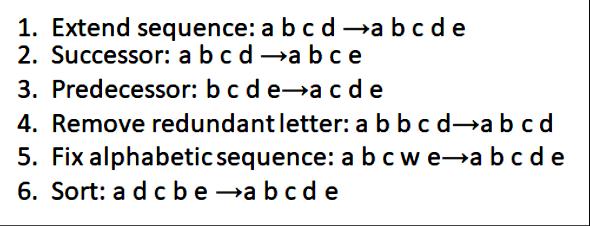

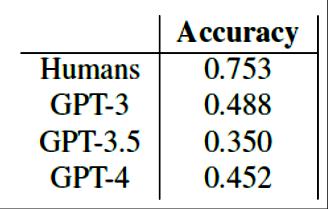

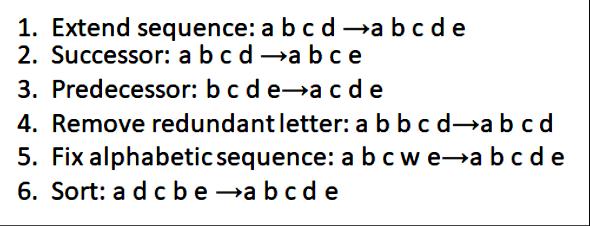

LLMs cannot do analogical reasoning


Present: Do LLMs Really Understand Language?

Computations in DNNs are not Explainable

“understanding” can be thought of as a mapping from one representation to another

• from formal to formal: Java to Python, SQL to SPARQL, JSON to XML, RDF to OWL, etc.

• from informal to formal: Convert unstructured content into formal structures (KG or RDB); Answer NL questions posed to a relational structure (RDB or KG)

• from formal to informal: Express information in relational structures (KG or RDB) in natural language

• from informal to informal: Search, Summarization, Sentiment Analysis, Topic Modeling, Data Extraction, Translation, etc.
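A mapping between two formal representations is the easiest kind to check objectively, because the output either preserves the structure or it does not. As a small illustration, here is a sketch of a JSON-to-XML mapping using only the standard library; it assumes a flat JSON object whose keys are valid XML tag names, and `json_to_xml` and the `record` root tag are hypothetical.

```python
import json
import xml.etree.ElementTree as ET

def json_to_xml(json_text, root_tag="record"):
    """Map a flat JSON object to an XML element with one child per key."""
    data = json.loads(json_text)
    root = ET.Element(root_tag)
    for key, value in data.items():
        child = ET.SubElement(root, key)
        child.text = str(value)
    return ET.tostring(root, encoding="unicode")

print(json_to_xml('{"name": "Liz", "city": "London"}'))
```

Because both sides are formal, correctness can be tested by comparing structures, which is not possible for tasks such as summarization.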

Currently most benchmarks are based on evaluating LLMs on these subjective tasks that cannot be objectively evaluated


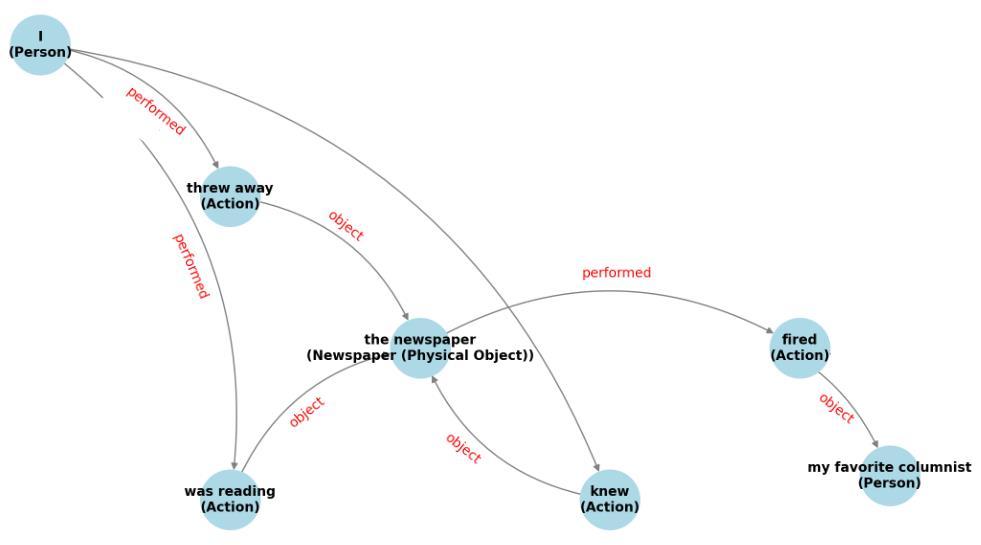

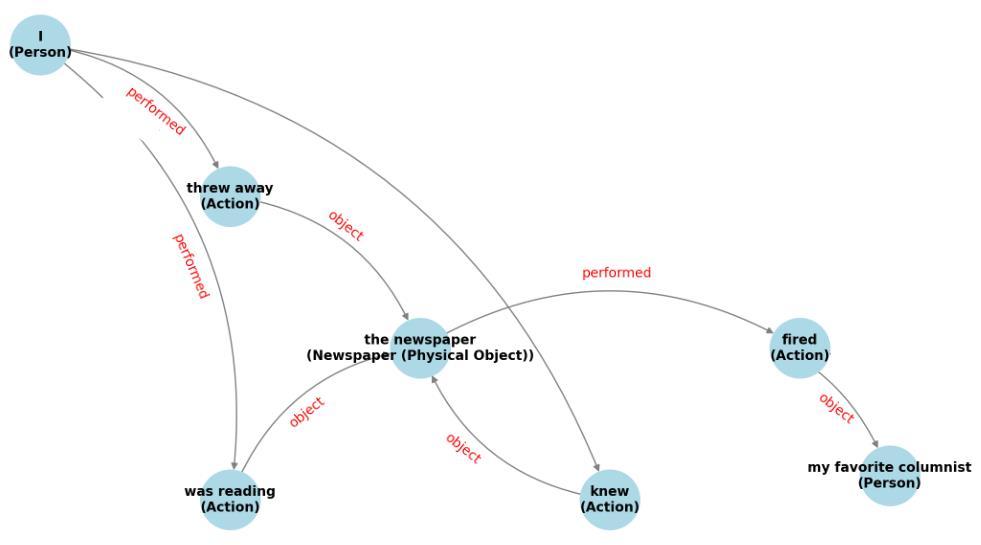

Computations in DNNs are not Explainable

The term ‘newspaper’ is used here to refer to two types: the physical object which is the newspaper I threw away and the organization that fired the columnist.


LLMs cannot identify copredication

Either the physical newspaper fired the columnist, or the newspaper organization is what I threw away, and both are wrong: the problem is that GPT-4 cannot infer that we are using ‘newspaper’ to refer to two distinct objects at the same time!

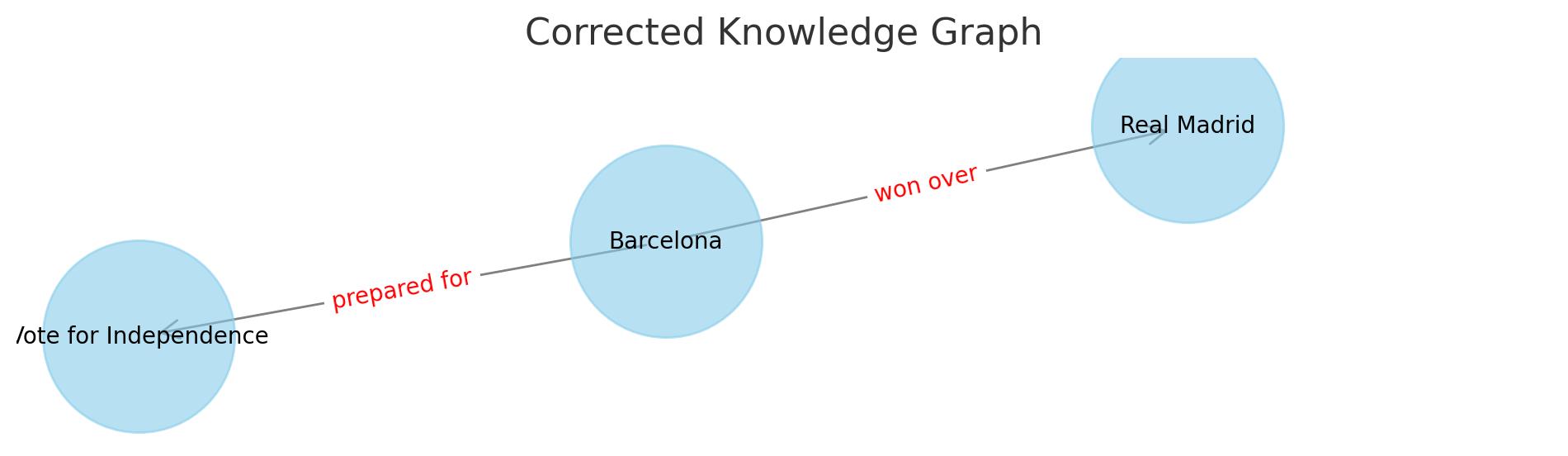

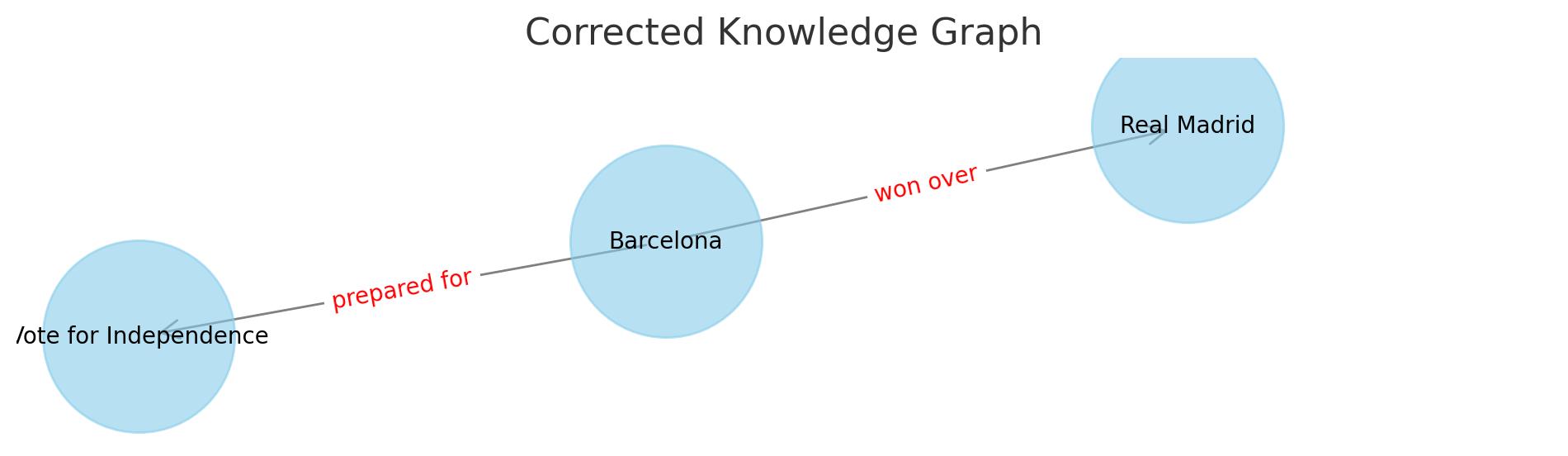

LLMs cannot identify copredication

A geographic location is not what won over Real Madrid, a geographic location is not what was celebrating the victory, and it is not what will be voting for independence.

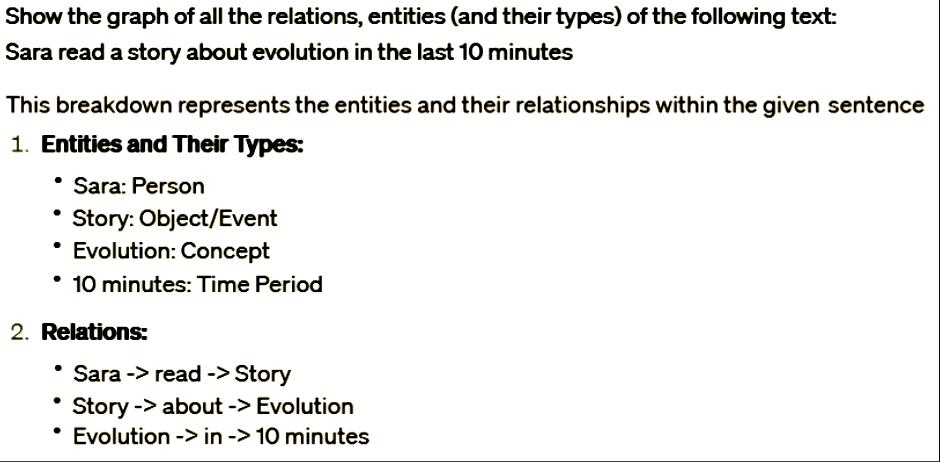

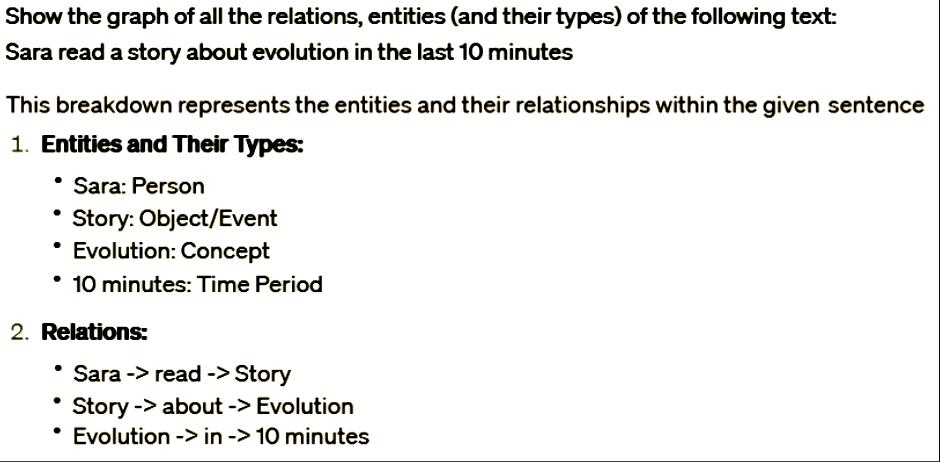

Non-trivial PP attachments

Lacking any commonsense knowledge, GPT-4 follows the surface syntactic structure and wrongly attaches ‘in 10 minutes’ to ‘evolution’, while ‘in 10 minutes’ should attach to ‘reading’, describing the duration of the reading.

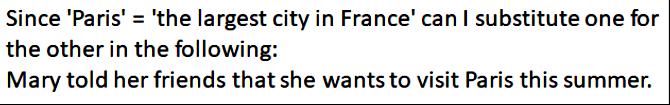

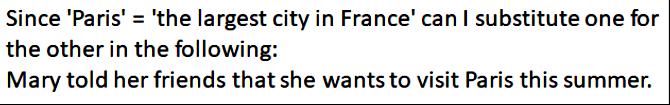

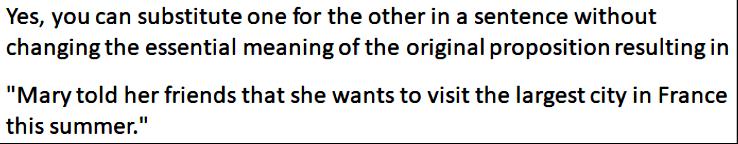

LLMs cannot handle intensions

Even though Paris is the capital of France, Mary did not “tell her friends she wants to visit the largest city in France”.

Even though Madrid is the capital of Spain, the conclusion “John thinks Madrid was not always Madrid” is nonsensical.

LLMs fail with many anaphora and scope resolutions


Obviously, John read his own thesis (that he presumably wrote) and it is his advisor that is speaking of reading the thesis.


We should read the sentence here by reversing the scope of the quantifiers: ‘In every civil war, a journalist from the BBC is always present’.


LLMs cannot do ‘plausible’ (abductive) reasoning

A 5-year-old knows that it is more plausible for Sara to read a book while going home by train, while the other options are not plausible.


LLMs cannot do ‘plausible’ (abductive) reasoning

LLMs have clearly proven that one can get a handle on syntax and quite a bit of semantics by a bottom-up reverse engineering of language at scale

There are many uses where LLMs can be effectively used to provide solutions to practical problems

But LLMs are not the path to AGI

LLMs are unexplainable, will always contain bias/toxicity, do not truly understand language and often fail to make the correct inferences

⇒ bottom-up symbolic reverse engineering of language

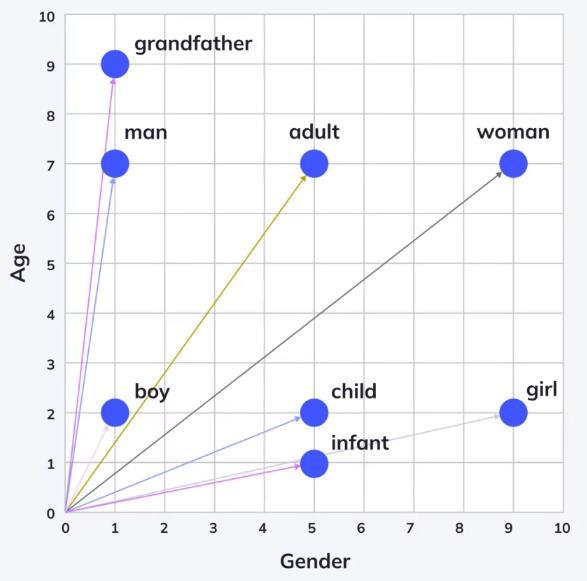

Future: Apply the Successful Bottom-Up Reverse Engineering Strategy in a Symbolic Setting?

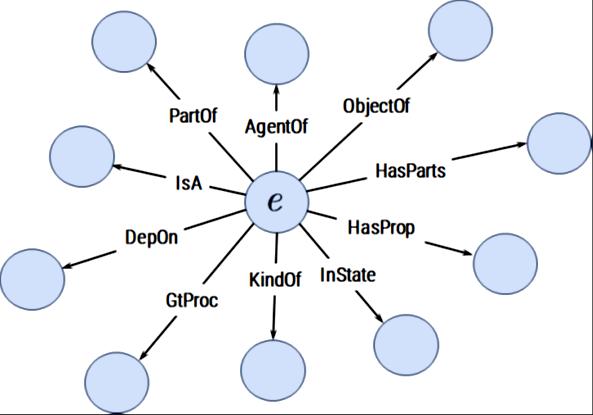

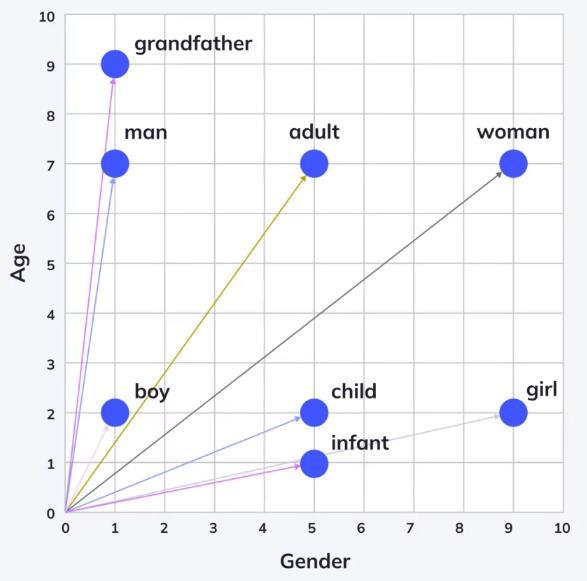

Re-interpreting ‘the company a word keeps’

J. R. Firth (1957)

but this is not new at all!

“we must never try to define the meaning of a word in isolation… [but] relate the sense of a word to the senses of all the sentences in which the word can appear.”

Gottlob Frege (1884)

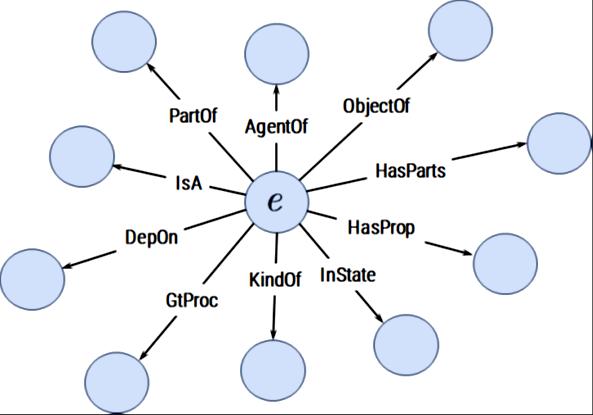

Bottom-up reverse engineering of language

Ontological Promiscuity OR

JERRY HOBBS (1985)


Bottom-up reverse engineering of language

we know any object through the predicates that we can say or think of it

IMMANUEL KANT

words get their meanings from analyzing all the contexts in which they can appear

GOTTLOB FREGE

two objects are of the same type if they can sensibly be predicated of the same set

FRED SOMMERS

to be a concept, is to be locked to a property in the language of thought

JERRY FODOR

JERRY FODOR

Predicates of x: delicious(x), tasty(x), overcooked(x), spicy(x), flavorful(x), ate(x), devour(x), mouthwatering(x), ...

Predicates of y: operating(y), manufacture(y), idle(y), installed(y), operational(y), on/off(y), monitoring(y), efficient(y), ...

Bottom-up reverse engineering of language

If it is sensible to describe x by delicious, healthy, spicy, tasty, nutritious, … then x must be an object of type food

[ app(delicious(x, 0.9))

∧ app(spicy(x, 0.87))

∧ app(tasty(x, 0.85))

∧ app(healthy(x, 0.81))

∧ app(nutritious(x, 0.81))

∧ app(flavorful(x, 0.80)) … ]

⇒ (x : food)
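The inference above can be sketched as a simple scoring rule: a type is assigned when the predicates that characterise it apply, on average, strongly enough. The averaging rule, the 0.5 threshold, and the `machine` predicate set are assumptions made for illustration; the scores are the ones shown above.

```python
# Applicability scores app(p(x)) for an unknown object x, as listed above.
SCORES = {
    "delicious": 0.9, "spicy": 0.87, "tasty": 0.85,
    "healthy": 0.81, "nutritious": 0.81, "flavorful": 0.80,
}

# Hypothetical predicate sets that characterise each type.
TYPE_PREDICATES = {
    "food": {"delicious", "spicy", "tasty", "healthy", "nutritious", "flavorful"},
    "machine": {"operating", "idle", "installed", "operational", "efficient"},
}

def infer_type(scores, type_predicates, threshold=0.5):
    """Pick the type whose predicates apply most strongly on average, if any."""
    best_type, best_support = None, 0.0
    for type_name, predicates in type_predicates.items():
        support = sum(scores.get(p, 0.0) for p in predicates) / len(predicates)
        if support > best_support:
            best_type, best_support = type_name, support
    return best_type if best_support >= threshold else None

print(infer_type(SCORES, TYPE_PREDICATES))
```

Unlike a weight matrix, the evidence for the conclusion stays inspectable: one can list exactly which predicates supported the type assignment.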


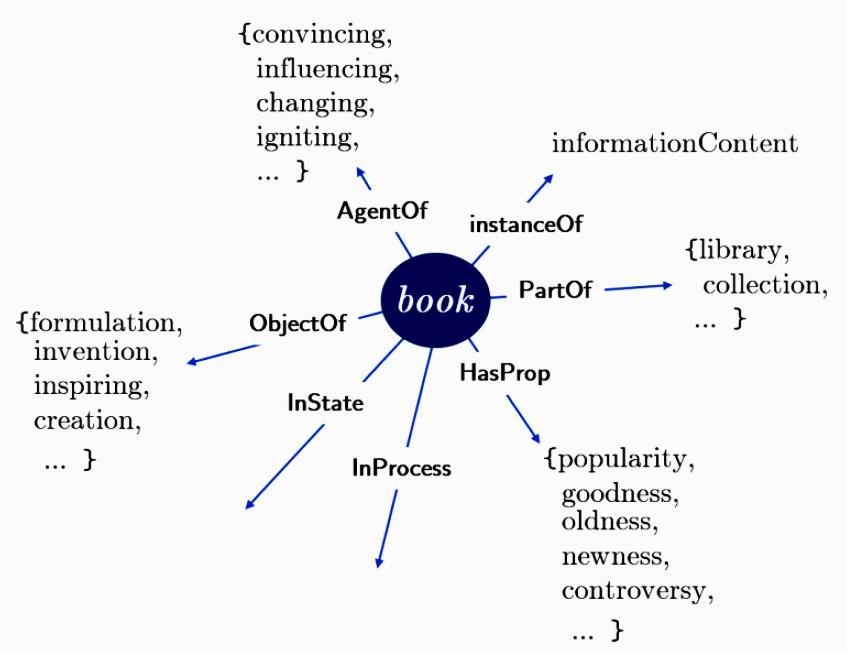

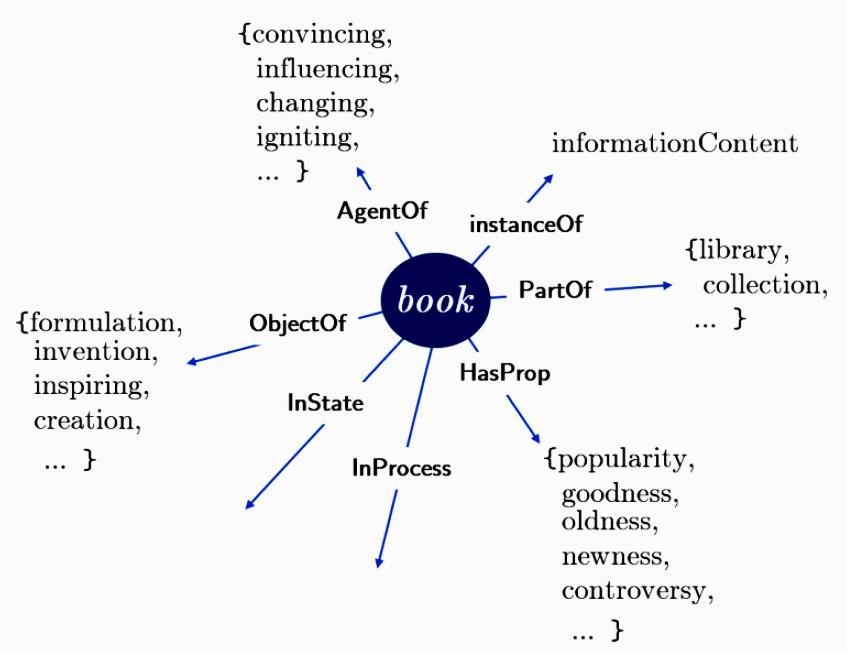

Bottom-up reverse engineering of language

‘The Prince’ book has been [MASK] many people over the years.

book AgentOf: influencing, inspiring, challenging, entertaining, fascinating, convincing, changing, provoking, stimulating

Most people would enjoy [MASK] a good book.

book ObjectOf: reading, writing, buying, reviewing, finding, publishing, owning, sharing, purchasing

Everyone thinks this is a very [MASK] book.

book HasProp: educational, good, interesting, boring, valuable, abridged, entertaining, useful, disturbing, accurate

My book is now in [MASK].

book GtProc: preparation, print, review, circulation, progress, storage, production, use, publication
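Probing a masked language model in this way can be sketched as a small harvesting loop. The sketch assumes a `fill_mask` callable that behaves like a Hugging Face fill-mask pipeline, returning a list of dicts with `token_str` and `score` keys; `harvest` and the stub model are hypothetical, and the stub stands in for a real model so the example runs without downloading one.

```python
# Probe sentences per primitive relation, taken from the examples above.
TEMPLATES = {
    "ObjectOf": "Most people would enjoy [MASK] a good book.",
    "HasProp": "Everyone thinks this is a very [MASK] book.",
}

def harvest(fill_mask, templates, top_k=3):
    """Collect the top-k mask fillers for each relation's probe sentence."""
    harvested = {}
    for relation, sentence in templates.items():
        predictions = fill_mask(sentence, top_k=top_k)
        harvested[relation] = [p["token_str"].strip() for p in predictions]
    return harvested

def stub_fill_mask(sentence, top_k=3):
    """Stand-in for a real model; the scores are made up for illustration."""
    canned = {
        "Most people would enjoy [MASK] a good book.": ["reading", "writing", "buying"],
        "Everyone thinks this is a very [MASK] book.": ["educational", "good", "interesting"],
    }
    return [{"token_str": w, "score": 1.0 / (i + 2)} for i, w in enumerate(canned[sentence][:top_k])]

print(harvest(stub_fill_mask, TEMPLATES))
```

Swapping the stub for a real fill-mask pipeline would give model-derived fillers for each relation.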



Bottom-up reverse engineering of language

Each sentence maps to the form e1 : type1 R e2 : type2, where R is a language-agnostic primitive relation:

Liz is famous → e1 : person HasProp e2 : fame

Liz is aging → e1 : person GtProc e2 : aging

Carlos is ill → e1 : person InState e2 : illness

Carlos is happy → e1 : person InState e2 : happiness

Death is inevitable → e1 : state HasProp e2 : inevitability

Fame is desirable → e1 : property HasProp e2 : desirability

Maria is admired → e1 : person ObjectOf e2 : admiration

Maria is dancing → e1 : person AgentOf e2 : dancing

Hamlet is inspiring → e1 : book AgentOf e2 : inspiration

ACME is acquired → e1 : corporation ObjectOf e2 : acquisition

MLK is Martin Luther King → e1 : person IsEqual e2 : entity

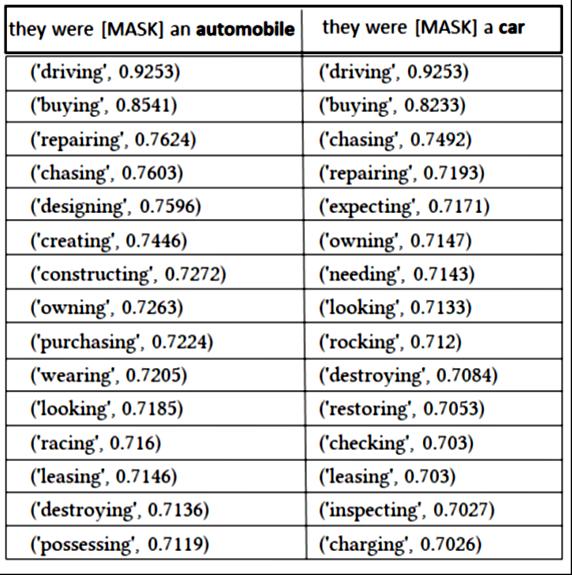

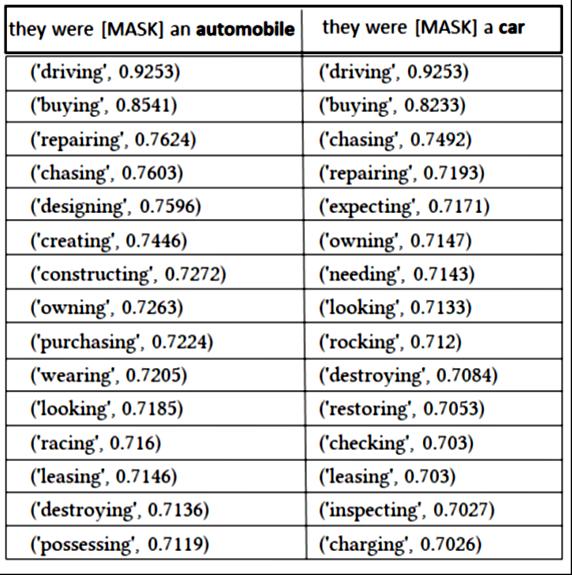

Symbolic Embeddings

ObjectOf

s1 = Everyone is [MASK] this N.

s2 = They are always [MASK] some N.

s3 = He just finished [MASK] a N.

s4 = Who are those that were [MASK] the N.

vector(N) = merge([s1, s2, s3, s4])

sim(‘automobile’, ‘car’) = 0.83
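One way to read `merge` and `sim` is as a bag of mask fillers compared by cosine similarity. The slides do not define either function, so this is an assumed interpretation, and the filler lists below are invented for illustration rather than taken from a model.

```python
import math
from collections import Counter

def merge(filler_lists):
    """Pool the fillers returned for each probe sentence into one bag of symbols."""
    bag = Counter()
    for fillers in filler_lists:
        bag.update(fillers)
    return bag

def sim(u, v):
    """Cosine similarity between two bags of fillers."""
    dot = sum(u[w] * v[w] for w in u if w in v)
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0

# Hypothetical fillers for probes s1..s4 with N = 'car' and N = 'automobile'.
car = merge([["driving", "buying"], ["washing", "driving"], ["repairing"], ["selling"]])
automobile = merge([["driving", "buying"], ["driving", "insuring"], ["repairing"], ["manufacturing"]])

print(round(sim(car, automobile), 2))
```

Each dimension of such a vector is a readable word, so a high similarity score can be traced back to the specific fillers two nouns share.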


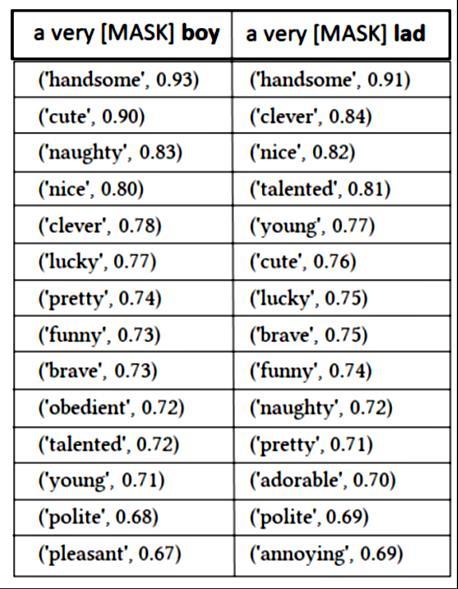

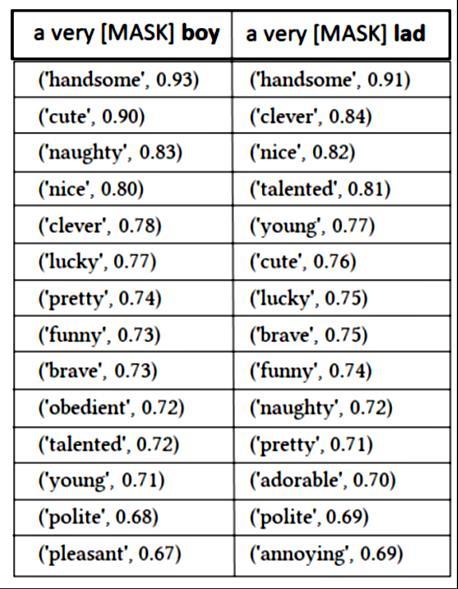

Symbolic Embeddings

HasProp

s1 = Everyone likes a very [MASK] N.

s2 = There’s a very [MASK] nearby.

s3 = That is a very [MASK] N.

s4 = This N is very [MASK] indeed.

vector(N) = merge([s1, s2, s3, s4])

sim(‘boy’, ‘lad’) = 0.87

Uncovering a Universal Ontology?

make(x : human, y : car)

make(x : human, y : computer)

make(x : human, y : couch)

assemble(x : human, y : car)

assemble(x : human, y : computer)

assemble(x : human, y : couch)

⇒ car, computer and couch must have a common supertype

artifact ?

machine?

operate(x : human, y : car)

operate(x : human, y : computer)

off(x : car)

off(x : computer)

⇒ car and computer must split from couch at some point
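The reasoning above can be sketched with plain set operations: predicates shared by all types suggest a common supertype, and predicates that apply to only some of them mark where the hierarchy splits. The table encodes exactly the predicates listed above; the function names are hypothetical.

```python
from collections import defaultdict

# Which predicates sensibly apply to each type, as listed above.
PREDICATES = {
    "car": {"make", "assemble", "operate", "off"},
    "computer": {"make", "assemble", "operate", "off"},
    "couch": {"make", "assemble"},
}

def common_predicates(table):
    """Predicates shared by every type: evidence for a common supertype."""
    return set.intersection(*table.values())

def split_by_extra(table):
    """Group types by the predicates they have beyond the shared ones."""
    shared = common_predicates(table)
    groups = defaultdict(list)
    for name, predicates in table.items():
        groups[frozenset(predicates - shared)].append(name)
    return dict(groups)

print(common_predicates(PREDICATES))
print(split_by_extra(PREDICATES))
```

Here car and computer end up in one group and couch in another, which is the split the slide points to.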

car computer couch


A language agnostic meaning representation?

Jon bought an expensive laptop

buying :: activity

AgentOf Jon :: person

ObjectOf laptop :: device

HasProp (of laptop) expensiveness :: property
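Such a representation can be held as a small set of typed triples. The sketch below is one possible encoding, assuming the reading "e1 : type Relation e2 : type" used earlier in the deck (for example, Jon is the AgentOf the buying); the class and function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Entity:
    name: str
    type: str

@dataclass(frozen=True)
class Triple:
    subject: Entity
    relation: str   # a primitive relation such as AgentOf, ObjectOf, HasProp
    obj: Entity

# 'Jon bought an expensive laptop'
buying = Entity("buying", "activity")
jon = Entity("Jon", "person")
laptop = Entity("laptop", "device")
expensiveness = Entity("expensiveness", "property")

MEANING = [
    Triple(jon, "AgentOf", buying),
    Triple(laptop, "ObjectOf", buying),
    Triple(laptop, "HasProp", expensiveness),
]

def subjects_of(triples, relation, obj_name):
    """Names of all entities standing in the given relation to the named object."""
    return [t.subject.name for t in triples if t.relation == relation and t.obj.name == obj_name]

print(subjects_of(MEANING, "AgentOf", "buying"))
```

Nothing in the triples depends on English word order, so the same structure could in principle be produced from a sentence in any language.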


concluding remarks

LLMs are the byproduct of a successful, massive, bottom-up reverse engineering of language at scale

LLMs can be useful as assistants in content creation, semantic search, basic question-answering, summarization, etc.

LLMs do not ‘truly’ understand language and they are not the path to AGI

We can apply the bottom-up reverse engineering strategy in a symbolic setting, leading to explainable, bias/toxicity-free, and language-agnostic NLU
