Significant FY22 Successes and Collaborations

Generative Design

Ryan McClelland brought Goddard into the world of artificial intelligence-generated design and digital manufacturing. He developed and adapted a process that radically improves spacecraft and instrument structural development, demonstrating that the design, analysis, and fabrication of many spacecraft structures can now be largely automated by AI and robots. Improvements over traditional design processes include reducing development times and costs by a factor of ten, increasing stiffness and strength while reducing mass by two to four times, and reducing the likelihood of failure by lowering stresses and reducing human interface errors. This technology holds the potential to improve all of NASA’s one-off hardware development projects while enabling missions to achieve more science per dollar by reducing development costs and increasing performance.

Distributed Space Missions: Architecture for the Future

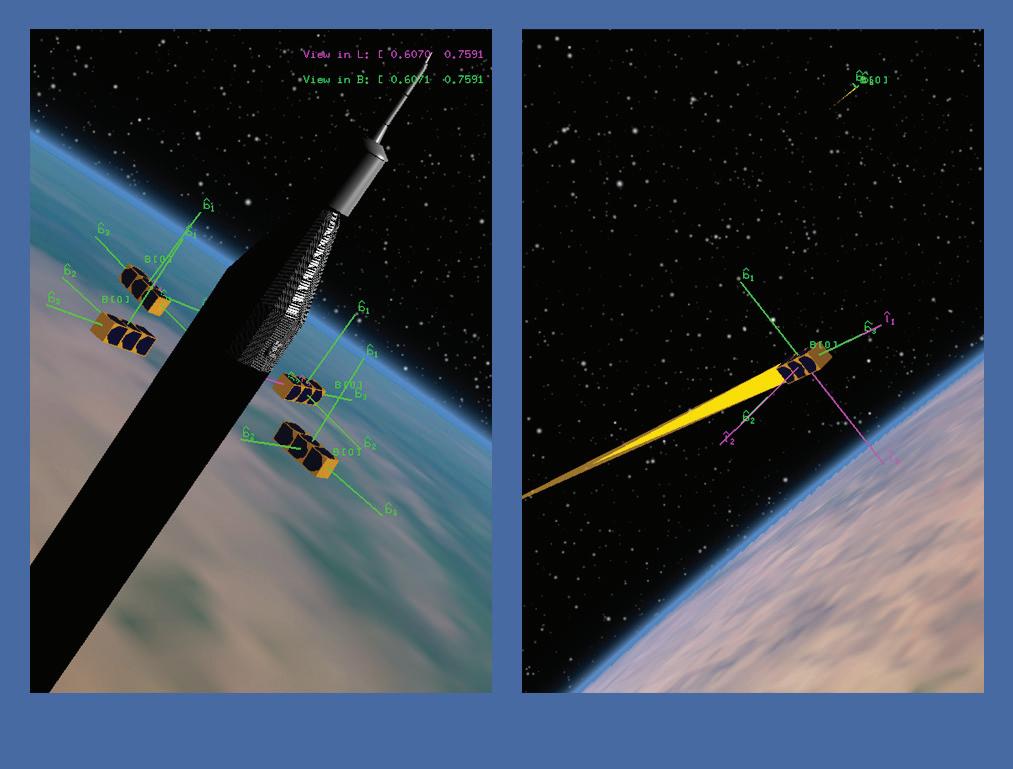

NASA is looking beyond the days focused primarily on large flagship missions flying a single spacecraft or instrument into the void to provide a single view of the universe. Distributed missions will combine perspectives from multiple spacecraft, with a variety of instruments capturing light from more of the spectrum to revolutionize exploration of everything from Earth to the remotest targets. Goddard innovators across disciplines and lines of business are preparing for the challenges of coordinating spacecraft swarms, incorporating disparate sensors, and even visiting multiple destinations.

From the Communications and Navigation line of business, Frederick Naikal is developing new broadband radio receivers to tackle the high-data-rate communications distributed missions will need. In addition to bandwidth, distributed satellites will need to coordinate everything from orbit, trajectory, and pointing to data collection and other swarm functions over a local network. Jonathan Boblitt and Yen Wong are working on disruption-tolerant networking (DTN) protocols to ensure data is not lost between nodes of these distributed networks.

Distributed missions will require coordinated data collection, often observing phenomena from multiple perspectives. How those spacecraft coordinate their trajectories and pointing was the focus of Sabrina Thompson’s Dynamic Intelligent Tasking for Opportunistic Science (DITOS) of SmallSat Constellations. Meanwhile, Pavel Galchenko put considerable effort into software solutions for formation control.

Also helping unite multiple spacecraft, Wayne Yu is looking at how Goddard’s autonomous Navigation Guidance and Control, or autoNGC, can adapt to the demands of distributed space mission (DSM) autonomy in deep space or in orbit around distant worlds. In her Cross Cutting Technologies investigation, Evana Gizzi built on her prior mission resilience work on fault-diagnosing algorithms for spacecraft by extending these programs to accommodate swarms. Other efforts funded in FY22 include mission planning for small satellite swarms and emulation environments that can simulate these missions using a whole-system, or digital twin, engineering approach.

OpNav

Instruments that gather science data can often serve as “eyes” for spacecraft using the Goddard-developed autonomous Navigation Guidance and Control (autoNGC) software system, reducing dependence on ground control. While optical navigation solutions depend on high-data-volume images, enabling spacecraft to conduct those navigation, guidance, and control functions autonomously preserves communications channels for sending critical science data back to Earth.

Andrew Liounis and Chris Gnam are developing a celestial optical navigation capability to allow a spacecraft to track moons, planets, and other bodies against background stars, providing accurate location within the solar system (CuttingEdge, Fall 2022).

Once a spacecraft approaches its destination, optical navigation might give way to lidar scanning and ranging. Guangning Yang and Stephen Mark worked to improve 3D mapping and navigation lidar technologies from the Communications and Navigation and Cross Cutting Technologies lines of business in FY22. Yang developed tools that would use the same lidar for navigation, high-resolution 3D imaging, and laser communications. Mark stepped back to identify hardware, software, and technology features useful for developing the high-fidelity, lightweight systems of the future and worked to establish a self-sustaining product line of 3D mapping lidars for multiple applications.

Terrain images and models, meanwhile, can locate planetary probes to within a few dozen feet for landing, sample collection, and surface navigation. Multiple projects in FY22 built on Goddard’s history with terrain relative navigation (TRN). Alvin Yew developed a system to identify the rocky horizon of the Moon or Mars and improve location resolution on the ground (CuttingEdge, Fall 2022). Michael Shoemaker worked to improve TRN solutions by testing them on data from the MESSENGER mission to Mercury. David Wilson worked on a project to provide accelerated, space-rated hardware for processing the high-data-volume images that TRN uses for navigation.