
Adapted Computer Program Pushes Satellite Navigation Toward Autonomy

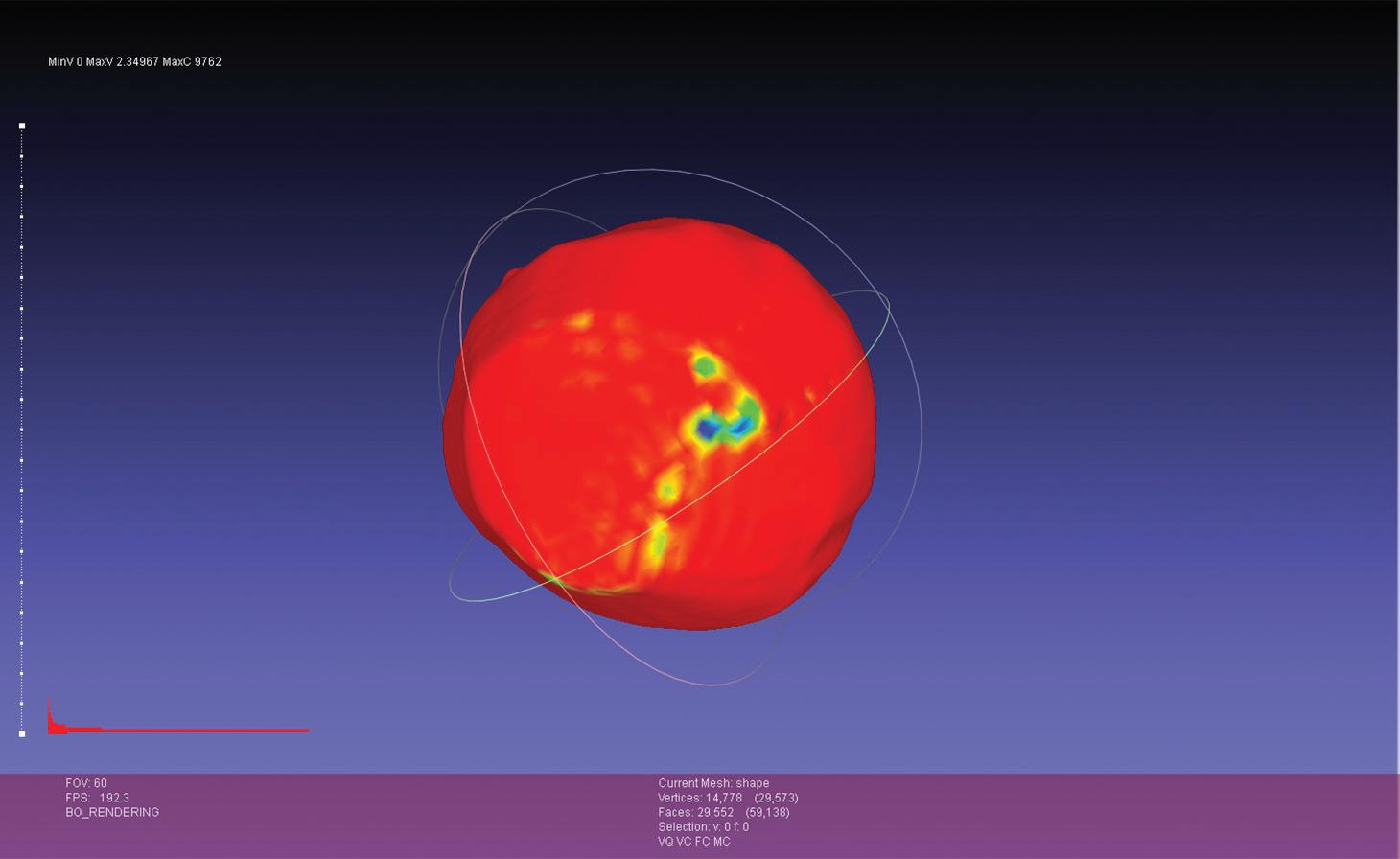

This view of asteroid Bennu ejecting particles from its surface on January 19, 2019, was created by combining two images taken on board NASA’s OSIRIS-REx spacecraft. The OSIRIS-REx Optical Navigation team used images of Bennu like this one to navigate the spacecraft with unprecedented accuracy, proving the technology. Image credits: NASA/Goddard/University of Arizona/Lockheed Martin

As Goddard engineers continue to push the boundaries of navigation technology, one team is working to make navigation easier for smaller satellites with less processing power and bandwidth.

Optical navigation technology adapted from NASA’s Origins, Spectral Interpretation, Resource Identification, Security, Regolith Explorer (OSIRIS-REx) mission would place navigation capabilities onboard satellites rather than on the ground, where other, similar technologies run. The new technology serves three purposes, said aerospace engineer and team lead Andrew Liounis: building a 3-D model of the entire object the satellite is approaching, called a global shape model; modeling small patches of the same object at higher resolution; and estimating the relative brightness of spots on the surface.

With this new technology, satellites would not only be able to navigate better, but scientists and engineers would also get a better sense of the target objects, such as their geographical features and gravity fields.

The team previously worked on navigation for OSIRIS-REx, helping create the high-resolution model of the asteroid Bennu. That mission had ample access to NASA’s Deep Space Network, which relays data back to Earth, as well as a larger ground team to process the images once they arrived. Images were sent back to Earth and compiled into a model in a long, hands-on process that used resources smaller missions might not have.

“With small satellites, you run into a number of limitations, the largest being an issue with downlink bandwidth,” Liounis said. “On OSIRIS-REx we had on the order of 40,000 images. But on a smaller mission downlinking just hundreds of images could be prohibitively expensive.”

This year, Liounis’s team received Internal Research and Development (IRAD) funding to support additional development. The team is developing the project as part of the onboard tool cGIANT, which is based on the Goddard Image Analysis and Navigation Tool (GIANT), itself improved in FY19 and FY20 with IRAD and other funding (see CuttingEdge, Spring 2019, Page 10). cGIANT is part of the automatic Navigation, Guidance and Control (autoNGC) suite of autonomous tools. This funding allowed the team to develop a way to break up the algorithm’s processing steps so that regions of a shape model can be built separately and then combined, rather than processing the entire model at once. Splitting the steps lets the algorithms run even on small satellites with limited computational power.
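The region-by-region idea can be illustrated with a small, hypothetical sketch. It is not cGIANT’s algorithm: it assumes a simple 2-D height grid stands in for a shape model, and the helper names `process_region` and `build_model_by_regions` are invented for illustration. Each tile is processed on its own and the resulting patches are stitched into one global map, so each processing step only works on one tile’s worth of data.

```python
import numpy as np


def process_region(observations: np.ndarray) -> np.ndarray:
    """Hypothetical per-region step: average a stack of noisy height
    observations (shape: n_obs x rows x cols) into a single height patch."""
    return observations.mean(axis=0)


def build_model_by_regions(observations: np.ndarray, tile: int) -> np.ndarray:
    """Process a global grid tile by tile, then stitch the patches together.

    Each call to process_region sees only one tile, which bounds the work
    done per step (the assumed benefit for a small onboard computer)."""
    _, rows, cols = observations.shape
    model = np.empty((rows, cols))
    for r0 in range(0, rows, tile):
        for c0 in range(0, cols, tile):
            # NumPy slicing clips at the array edge, so partial tiles work.
            patch = observations[:, r0:r0 + tile, c0:c0 + tile]
            model[r0:r0 + tile, c0:c0 + tile] = process_region(patch)
    return model


# Usage: the stitched result matches processing the whole grid at once.
rng = np.random.default_rng(0)
truth = rng.normal(size=(8, 8))
obs = truth + rng.normal(scale=0.1, size=(5, 8, 8))
tiled_model = build_model_by_regions(obs, tile=3)
```

Because the averaging step here is independent per cell, tiling gives exactly the same answer as whole-grid processing; a real shape-modeling step would also need to reconcile seams where neighboring regions meet.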

Onboard shape modeling has been both a great challenge and a great success, said team member Joshua Lyzhoft. The team knew that by reducing the number of images initially used to build the models, and then updating the models with newly obtained images, they could cut costs while keeping the program effective. To do this onboard a small satellite, they needed to develop new modeling methods that are more robust and less computationally expensive.
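Updating a model with new images, instead of rebuilding it from every image ever taken, can be sketched with an incremental (running) mean. This is a hypothetical illustration, not the team’s method: the class `IncrementalHeightMap` is invented here, and each "observation" is assumed to be a height grid already derived from one new image.

```python
import numpy as np


class IncrementalHeightMap:
    """Hypothetical running estimate of a height grid.

    Each new observation refines the stored estimate without keeping or
    reprocessing earlier observations, so memory use stays constant."""

    def __init__(self, initial: np.ndarray):
        self.estimate = initial.astype(float)  # astype returns a copy
        self.count = 1

    def update(self, observation: np.ndarray) -> None:
        self.count += 1
        # Incremental mean: new = old + (x - old) / n
        self.estimate += (observation - self.estimate) / self.count


# Usage: three observations of a 2 x 2 patch, fed in one at a time.
frames = [np.full((2, 2), v) for v in (1.0, 2.0, 6.0)]
height_map = IncrementalHeightMap(frames[0])
for frame in frames[1:]:
    height_map.update(frame)
```

After the loop, `height_map.estimate` equals the plain mean of all three frames (3.0 in every cell), even though only the current estimate and a counter were stored between updates.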

These adaptations bring autonomous visual navigation within reach of small-satellite missions, whose limitations stem from a number of factors, particularly the need for radiation-hardened technology. In space, satellites lose the protection from radiation that Earth’s atmosphere provides, and that radiation can cause electronics to behave unpredictably. Scientists and engineers must therefore harden their technologies against space radiation. Hardening protects the onboard electronics but reduces processing power and increases cost.

This is a shape model built from an early prototype of the flight software, using images of Vesta taken by the Dawn mission.

Image credit: Josh Lyzhoft

“With these small projects, we won’t necessarily have the same bandwidth,” Lyzhoft said. “We won’t have the funds to do all the shape modeling on the ground; we won’t have the humans to do all this processing. But we’re reducing these costs by starting to make this autonomous, and it’ll be easier to navigate these small bodies in the future to do more space exploration.”

Right now, the team has a functioning software prototype of this new optical navigation technology. They’re using it to look for bottlenecks, or places where the code slows down. The team can then send it to specialized software engineers to turn the technology into flight code and continue to test and validate the system. Finally, the team will work with other projects to incorporate this technology into a satellite and fly it in space.

The key focus of this optical navigation technology is automation. In the future, only one person might be needed to make sure the system is functioning properly, Lyzhoft said, rather than the team of people required to process this data currently.

“We’re trying to automate a lot of things to not require as much human involvement in the system,” he said. “When it comes to navigating small bodies, it would be really good to have this automation to help reduce mission costs and improve computational requirements.”

CONTACT

Andrew.J.Liounis@nasa.gov or 301-286-2856