
Appointments

Altasciences Clinical Research,

a full-service provider focused on early-stage clinical research with over twenty-five years of experience, announces the addition of Dr. Gaetano Morelli to the Altasciences family. Morelli has over two decades of experience in clinical research, phases I to III, as a principal investigator and medical director. He is the director of gastroenterology/endoscopy at St. Mary’s Hospital, McGill University, in Montreal, Canada, and maintains both private and academic GI practices in the area.


Sequence Bio announces the appointment of Dr. Michael S. Phillips as its new chief scientific officer. The emerging data-driven biotechnology company is based in Newfoundland and Labrador. Phillips comes equipped with over 25 years of experience in large-scale genomic projects, drug target discovery, and leading research teams. His most recent post was VP Genomics at Genomics Medicine Ireland. He has extensive expertise leading large research groups in academia, biotech, pharma and hospital settings for drug target discovery, biomarker research, clinical diagnostics, and technology development. His positions include roles at Merck & Co., Orchid BioSciences, Génome Québec, Université de Montréal, Montreal Heart Institute and Centre Hospitalier de l’Université de Montréal (CHUM) Research Centre. His role as chief scientific officer will entail leading scientific development projects and genomic and precision medicine research for Sequence Bio. Specifically, he will focus on the NL Genome Project – a proposed large-scale genome sequencing project in the Newfoundland and Labrador genetic isolate founder population to drive novel therapeutic discovery and development.

The Michael Smith Foundation for Health Research Board

has announced that, after an executive search process, Dr. Bev Holmes has been appointed president and CEO. With an eight-year history at the Foundation, Holmes is ideally suited for this position and will continue to help it grow. She brings a wealth of experience in health research, practice and policy to this role. Since joining the Foundation in 2010, her commitment to integrating these disciplines has seen her lead the Foundation through the launch of a new suite of funding programs that focus on developing, retaining, and recruiting BC health research talent and on addressing health system priorities. She has served as interim president and CEO of the Foundation since early 2017.

Astellas Pharma announced that Nate Crisel has been promoted to vice president, Real World Informatics and Analytics (RWI). He will report to chief financial officer Chikashi Takeda. Crisel will continue to be responsible for overseeing the RWI division, a central function accountable for expanding efficient, effective and compliant use of healthcare data across the entire Astellas value chain. Prior to joining Astellas in 2008, Crisel served as an analytical chemist and pharmaceutical development project manager at Eli Lilly and Company. After Eli Lilly, he held roles ranging from business development to executive leadership at biotech start-ups, including Embedded Concepts, LLC, and Tracera, LLC. Crisel received a Master of Business Administration in finance and entrepreneurship from Purdue University’s Krannert School of Management and a Bachelor of Science in chemistry from Indiana University.

Invictus MD Strategies Corp.

has announced the appointment of Dylan Easterbrook as chief financial officer. Easterbrook is a seasoned accounting and financial professional who most recently was controller of a publicly traded global fibre optic connectivity solution provider. Prior to that role he was with Ernst & Young, one of the largest professional services firms in the world, where he led audit engagements for both public and privately held companies from a variety of industries, including the emerging markets. Easterbrook is a Canadian Chartered Professional Accountant and holds a Bachelor of Science in Life Sciences degree from the University of British Columbia. “We are excited about Dylan’s appointment as he brings solid experience and capabilities that match our needs as we continue to develop,” said Dan Kriznic, chairman and CEO of Invictus MD. “The timing is ideal as we continue to execute on an aggressive development strategy aimed at creating Canada’s Cannabis Company.”

National Access Cannabis Corp.

announced that Dr. Tyler Wish has joined the company to establish and lead its research and development division. In this executive role, Wish will be responsible for furthering the company’s efforts to advance knowledge of medicinal cannabis as a pharmaceutical product and to enhance its efficacy and safety across multiple patient populations. He is a trained clinical and molecular epidemiologist, as well as an accomplished entrepreneur and life-science executive who was recently recognized and appointed as one of 10 “Canadian Innovation Leaders” by the federal Minister of Innovation, Science and Economic Development. Prior to joining NAC, Wish was a founder and the chief executive officer of Sequence Bio, a privately held biotechnology company that led a large-scale human genome sequencing initiative designed to support genetic-driven drug discovery and precision medicine.

Nuvo Pharmaceutical promotes Jesse Ledger internally, adding the role of chief executive officer to his current title of president.


Ledger assumes this role from John London, who is now the executive chairman of the Mississauga-based company. Ledger has over 15 years of pharmaceutical experience with proven expertise in business development. Prior to joining Nuvo in April 2015, he was vice-president of business development and international business at Tribute Pharmaceuticals Canada. Ledger holds an honours bachelor of business administration degree from Trent University.

Feature

by Dave Rausch

Breathe Deep!

Advanced air controls help ensure safe air in laboratories

When did you last read a material safety data sheet in detail?

A critical part of ensuring safe lab procedures, the writing in these documents typically manages to be both dry and extremely frightening at the same time. Whether from chemical or pathological agents, the range of hazards discussed in material safety data sheets often involves airborne dangers to lab workers.

Regardless of the type of lab you work in – testing, university research, healthcare or manufacturing – ensuring safe air is crucial to avoid health impacts ranging from mild irritation to severe long-term damage and even death. Airflow controls have advanced rapidly in recent years, with specialty venturi valves and digital controls that may eliminate the spread of toxic fumes and pathogens, while reducing maintenance costs and conserving energy – in positive, negative and switchable pressure environments alike.

The number of workers who are potentially exposed to airborne hazards is substantial. Just one subset of Canadian laboratories – testing labs – employs about 20,000 people in an estimated 2,700 facilities throughout the country, according to the Canadian Council of Independent Laboratories.

Airborne hazards in brief

Health & Safety Ontario’s “Laboratory Safety” sheet summarizes the various health and safety hazards from chemical and biological agents. The airborne dangers they list include both short- and long-term health effects, such as:
• Respiratory system damage from inhaling toxic chemicals
• Irritation from acids and bases
• Asphyxia from cryogenic chemicals
• Infection and disease from viruses, bacteria and fungi

…ifying potential airborne hazards in labs. These include various compressed gases:
• Poisonous (chlorine, carbon monoxide)
• Reactive (ammonia, boron trichloride)
• Flammable (acetylene, ethylene)
• Inert (nitrogen, argon)

While the university describes the first three types of gases as being of “particular concern,” it explains that inert compressed gases also pose danger since they can cause asphyxia by displacing oxygen from confined spaces.

Specific hazards often present in hospital and biomedical research laboratories include toxic fumes from pharmaceuticals (such as cancer chemotherapy drugs – “antineoplastics”) and solvents for staining and processing tissues in pathology labs to help determine proper diagnoses for patients.


Clearing the air

One of the most essential components of laboratory safety is the facility’s engineering controls. “These types of controls are preferred over all others because they make permanent changes that reduce exposure to hazards and do not rely on worker behavior,” notes the U.S. Occupational Safety and Health Administration (OSHA).

To clear airborne hazards in laboratories, the engineering controls must fulfill multiple functions, including ensuring adequate ventilation and air exchange rates; filtering airborne contaminants; and maintaining appropriate pressure relationships between the laboratory and adjacent interior and exterior spaces. Notably, the airflow controls must provide negative pressure to contain pathogens in labs where such biohazards are present, while positive pressure is crucial in settings where the sterility of the lab is paramount.

Many laboratory workers are familiar with fume hoods, which exhaust airborne hazards from their immediate work area. Less visible are the room-level air handling systems that transport exhaust fumes outside the building and also ensure appropriate ventilation of the entire lab.

Tenting process for environmental protection during VAV sensor cleaning can disrupt work flows in labs. Credit: Abatement Technologies, Inc.

If you work in an older building, it is a safe bet that hidden in the ceiling are many units known as variable air volume (VAV) terminal units or boxes. Used in commercial and institutional buildings around the world, VAV boxes are calibrated air dampers that control the temperature and humidity of the air delivered to a designated space, and they are still common in new labs being constructed today.

The basic operation of a VAV box involves temperature, pressure and sometimes occupancy sensors within a space sending signals to the VAV box controller. Within the VAV box, an electric actuator positions a butterfly damper to control the volume of air passing into a space from the building’s heating, ventilation and air conditioning (HVAC) system. However, a number of manufacturers offer a higher-performance alternative known as a venturi valve, which provides labs with quicker, more flexible, repeatable and accurate airflow control. Venturi valves do not require any scheduled maintenance and offer higher airflow turndowns, resulting in more flexibility in room pressurization states, lower energy use and reduced maintenance – for operational cost savings.

Advanced airflow control with venturi valves

Venturi valves operate based on the Venturi effect, a fluid mechanical principle named after the Italian physicist Giovanni Battista Venturi. The body of the valve is a tube with a constricted neck, for an overall hourglass-like shape. A cone assembly inside the valve responds immediately to changes in air pressure in the HVAC ducting, automatically adjusting to pressure changes.

Leaving aside a lengthy discussion of the physics of fluid dynamics and valve operation, this configuration provides a number of benefits in labs, as described below. (To see a demonstration of the inner workings of a venturi valve, many videos are available on YouTube, such as this one posted by HVAC consultant Belnor Engineering: https://www.youtube.com/watch?v=_7uhkeRNO-c).

VAV terminal box sensor fouling due to build-up of lint. Credit: Phoenix Controls

Compared to traditional VAV boxes, venturi valves provide high-accuracy airflow control due to:
• High speed of response to both duct pressure and flow setpoint changes, which cannot be matched by valves requiring flow measurement (e.g., VAV boxes and other alternatives) due to inherent signal latency between the flow sensor, controller and actuator.
• Mechanical pressure independence that instantly maintains flow, even with constant changes in static pressure, so that a stable, reliable amount of directional airflow is not compromised. No movement of the actuator is needed, thereby extending the life of the entire assembly.
• Factory-characterized flow metering technology that provides higher turndowns to achieve a number of stable, accurate room pressure states. The cone assembly quickly moves into position to achieve the flow setpoint rather than having to measure and find its position.
• Volumetric offset that guarantees directional airflow. Zone balance controls for some venturi valves track each other, maintaining a design offset between supply and total exhaust to ensure directional pressure in the space.
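The volumetric offset idea in the last bullet can be sketched in a few lines of Python. This is a minimal illustration, not any manufacturer's control logic: the function names and the example flows (a 100 CFM offset and three supply levels) are assumptions chosen for readability. The exhaust setpoint simply tracks supply plus a fixed design offset, so the sign of the offset fixes the direction of airflow whatever the supply flow happens to be.

```python
def exhaust_setpoint(supply_cfm: float, offset_cfm: float) -> float:
    """Total exhaust flow that keeps (exhaust - supply) equal to the design offset."""
    return supply_cfm + offset_cfm


def pressure_state(supply_cfm: float, exhaust_cfm: float) -> str:
    """Classify the room relative to adjacent spaces from its flow balance."""
    if exhaust_cfm > supply_cfm:
        return "negative"  # air is drawn into the room: containment
    if exhaust_cfm < supply_cfm:
        return "positive"  # air is pushed out of the room: sterility
    return "neutral"


# Hypothetical containment lab: exhaust tracks supply with a +100 CFM offset
# across three assumed room states (occupied, unoccupied, setback).
for supply in (900.0, 600.0, 300.0):
    exhaust = exhaust_setpoint(supply, offset_cfm=100.0)
    print(supply, exhaust, pressure_state(supply, exhaust))
```

Passing a negative offset instead models a room that must stay positive, such as a sterile space.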

Higher turndown ratios mean the device has a wider range over which it can accurately provide the correct airflow. With this improved accuracy, labs can better manage ventilation and maintain air pressure relationships for worker safety and research/ testing/production integrity, regardless of room state (occupied, unoccupied or purge condition).

Cost savings

While worker safety is paramount when choosing laboratory airflow controls, many lab operators also select venturi valves for the operational cost savings they provide. Energy savings are based on cost reductions due to better management of conditioned exhaust. Operational savings result from no scheduled maintenance, as venturi valves do not use pressure transducers to measure flow.

Energy savings

To reduce energy consumption in a ventilation system, it is important to consider air flow. For example, to flow 1,000 cubic feet per minute (CFM) of air into a space, the facility designers could specify a 10-inch VAV terminal box or a 10-inch venturi valve. Based on the physics underlying the two valve types, a venturi valve can accurately exhaust as little as 50 CFM, compared to a minimum 250 CFM required from a VAV terminal box. Because a lab typically requires dozens of air control devices or more (depending on its size), and it costs a handful of dollars to vent each CFM, the energy cost difference between venturi valves and VAVs is substantial.
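The arithmetic behind this comparison can be made explicit. The sketch below uses the article's figures (1,000 CFM maximum, 50 CFM and 250 CFM minimums); the device count of 24 and the cost of $5 per CFM are placeholder assumptions standing in for "dozens" and "a handful of dollars", and the savings figure is an upper bound that applies only while every device sits at its minimum flow.

```python
def turndown_ratio(max_cfm: float, min_cfm: float) -> float:
    """Ratio of the largest to the smallest flow a device can control accurately."""
    return max_cfm / min_cfm


def min_flow_savings(n_devices: int, vav_min_cfm: float,
                     venturi_min_cfm: float, cost_per_cfm: float) -> float:
    """Cost avoided when every device can drop to the lower minimum flow."""
    return n_devices * (vav_min_cfm - venturi_min_cfm) * cost_per_cfm


MAX_CFM = 1000.0      # from the article
VAV_MIN = 250.0       # from the article
VENTURI_MIN = 50.0    # from the article

print(turndown_ratio(MAX_CFM, VENTURI_MIN))  # 20.0, i.e. 20:1
print(turndown_ratio(MAX_CFM, VAV_MIN))      # 4.0, i.e. 4:1

# Assumed values: 24 devices and $5 per CFM are illustrative placeholders.
print(min_flow_savings(24, VAV_MIN, VENTURI_MIN, cost_per_cfm=5.0))  # 24000.0
```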

Reduced maintenance

In addition to higher-than-necessary energy consumption costs, a traditional airflow control system using VAVs incurs high maintenance costs. If not properly maintained, the valve will not function as designed and can result in poor airflow control that reduces lab worker safety.

In its discussion of maintenance of lab systems, Health & Safety Ontario’s “Laboratory Safety” sheet notes the importance of “regular inspection and testing for airflow (proper velocities and volumes), duct work (free of corrosion, leaks and dents), and fans (working properly).” Regular maintenance of VAVs is crucial, as the valves’ butterfly dampers are susceptible to gathering lint and dust. In a facility with 500 traditional VAV terminal boxes, annual cleaning costs are on the order of $50,000 - $100,000. The design of venturi valves obviates this problem and the cost and hassle of regular cleaning.

Conclusion

You do not need to be an airflow control expert to play a role in ensuring that plans for lab construction – whether for a new building or a refurbished building – adequately address air safety. With the basic knowledge of airborne hazards and airflow controls discussed above, you have a starting point to ask the facility designers some intelligent questions to help ensure safe air for you and your lab colleagues.

Dave Rausch is the market manager for Phoenix Controls. He has more than 20 years of experience in the building industry, including engineering and product management roles in airflow controls and fire suppression systems. drausch@phoenixcontrols.com



by Professor Palwinder Singh and Dr. Manpreet Singh Bhatti

Design of Experiments Improves Peptide Bond Yield from 20% to 76%

Living matter is primarily made of proteins, so understanding how nonliving matter can form proteins is critical to understanding how life emerged. The peptide bond, which is formed when the carboxyl group of one molecule reacts with the amino group of another molecule, releasing water, is the basic building block of proteins. In all of today’s living cells, the ribosome, a large and complex molecular machine, serves as the site of biological protein synthesis. But as life was emerging, peptides had to be synthesized in a much simpler way. Researchers demonstrated decades ago that amino acids can be formed naturally in conditions believed to have existed on Earth when life was emerging, and they have long tried to gain a better understanding of what conditions might be conducive to the formation of simple peptides. Peptide bond formation also has many important applications in pharmaceutical research and manufacturing, since certain peptides have been shown to be effective in treating cancer, diabetes, infections and other diseases.

Studying peptide bond formation

Researchers Professor Palwinder Singh, organic chemist, and Dr. Manpreet Bhatti, environmental engineer, at Guru Nanak Dev University, Amritsar, India, recently worked to fine-tune the conditions that best promote peptide bond formation in an uncatalyzed aqueous phase reaction. In their first series of experiments, the reaction of an equimolar solution of the amino acids histidine (His) and proline (Pro) in acetonitrile-water was studied as a function of temperature, pH, reaction time and concentration. The four factors of interest were varied one at a time, and the reaction mixture was analyzed by high-resolution mass spectrometry to quantify peptide bond formation by measuring the amounts of the Pro-His dipeptide, diketopiperazine 2, diketopiperazine 3, and tripeptide 4. However, it was difficult to optimize the conditions for the best yield of the dipeptide because the formation of diketopiperazine impedes the quantification of the formation of other peptides.

The researchers changed their approach to using aqueous solutions of carbobenzoxy-derivatized valine (N-Cbz-Val) and glycine methyl ester hydrochloride (Gly(OMe)-HCl), which prevent the formation of diketopiperazine. Gly(OMe) was used in place of glycine for easy isolation of the dipeptide methyl ester from the aqueous solution. However, glycine worked equally well for peptide bond formation. The one-factor-at-a-time (OFAT) approach was again used to investigate the effects of temperature, pH, reactant concentration and reaction time on yield. Over 75 runs, the reaction temperature ranged from 60°C to 120°C, the pH was varied from 3 to 10, the reaction time was between 30 and 300 minutes and the concentration ranged from 0.94 to 4.7 M. Across these runs, the highest yield of peptides was 20%.

Interactions between factors

“We felt that we should be able to obtain a better yield than this,” said Dr. Manpreet Bhatti, Assistant Professor in the Department of Botanical and Environmental Sciences at Guru Nanak Dev University. “I had a hunch that one or more interac-

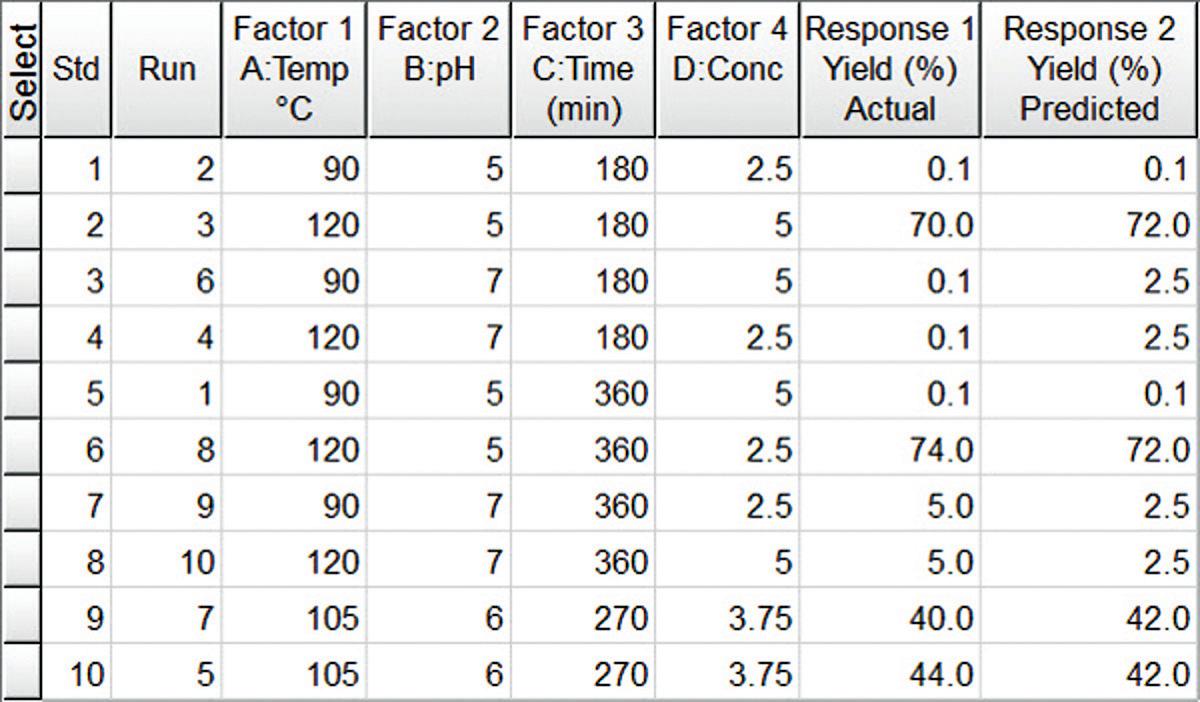

figure 1

tions between variables might be playing a role that was obscured by the OFAT method. A major problem with studying one factor at a time is that you cannot detect interactions between factors. By varying an individual factor you can find the optimal value of each one with all the others held constant. However, when you combine the supposedly-optimized values of each factor the results are often far less than optimal because of the ways that they interact with each other.”
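Bhatti's point about interactions can be illustrated with a toy calculation. The response surface below is invented purely for illustration and is not the study's data: two factors in coded units (-1 for the low level, +1 for the high level) with a strong negative interaction term. Under these assumptions, picking the best level of each factor separately from a baseline and then combining them lands on a worse condition than a search over all combinations.

```python
from itertools import product


def response(temp: int, ph: int) -> float:
    """Made-up yield surface in coded units; the last term is the interaction."""
    return 50 + 10 * temp + 8 * ph - 15 * temp * ph


levels = (-1, 1)
baseline = (-1, -1)  # assumed starting condition: low temperature, low pH

# OFAT: vary each factor alone from the baseline and keep its best level.
best_temp = max(levels, key=lambda t: response(t, baseline[1]))
best_ph = max(levels, key=lambda p: response(baseline[0], p))
ofat_yield = response(best_temp, best_ph)

# Factorial: evaluate every combination of levels.
best_combo = max(product(levels, levels), key=lambda c: response(*c))
factorial_yield = response(*best_combo)

print("OFAT picks", (best_temp, best_ph), "with yield", ofat_yield)
print("Factorial picks", best_combo, "with yield", factorial_yield)
```

In this invented surface each factor looks best at its high level when tested alone, yet the combination of both high levels underperforms the high-temperature, low-pH corner that the factorial search finds.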

“Design of experiments provides a better approach that varies the values of all factors in parallel so it uncovers not just the main effects of each factor but also the interactions between the factors,” Bhatti added. “This approach makes it possible to identify the optimal values for all factors in combination and also requires far fewer experimental runs than one factor at a time.” Bhatti used Design-Expert® software from Stat-Ease, Inc. to develop a highly fractionated two-level factorial screening experiment, called a resolution IV design, that evaluated four variables at a time. The experiment required 10 runs to explore the design space, including investigating and evaluating the main effects of each factor as well as the two-factor interactions.

Designing the experiment

Bhatti used a Design-Expert template to create an experimental design that requires the minimum runs needed for screening at this level of resolution. “We selected Design-Expert software because it is relatively easy to use compared to other statistical software. Design-Expert walks the user through the process of creating a designed experiment. The user simply enters the factors and selects the type of design that fits his or her needs. The software provides feedback on the design, such as the number of runs required and the effects resolution. This makes it easy to quickly evaluate the pros and cons of different designs.”

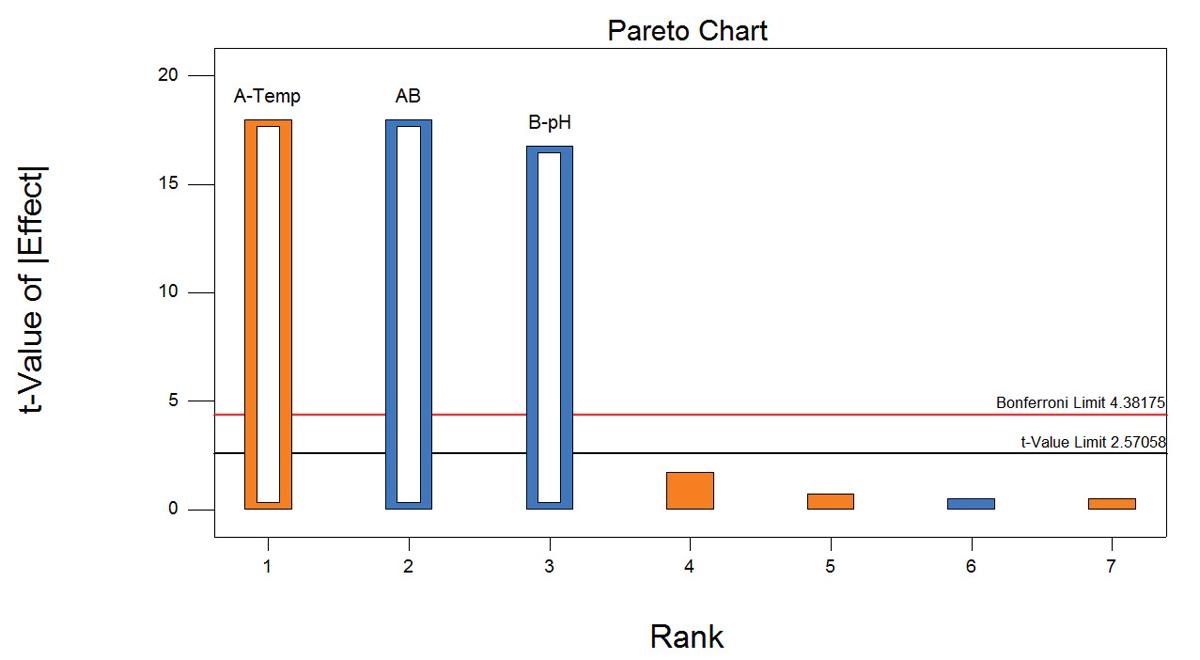

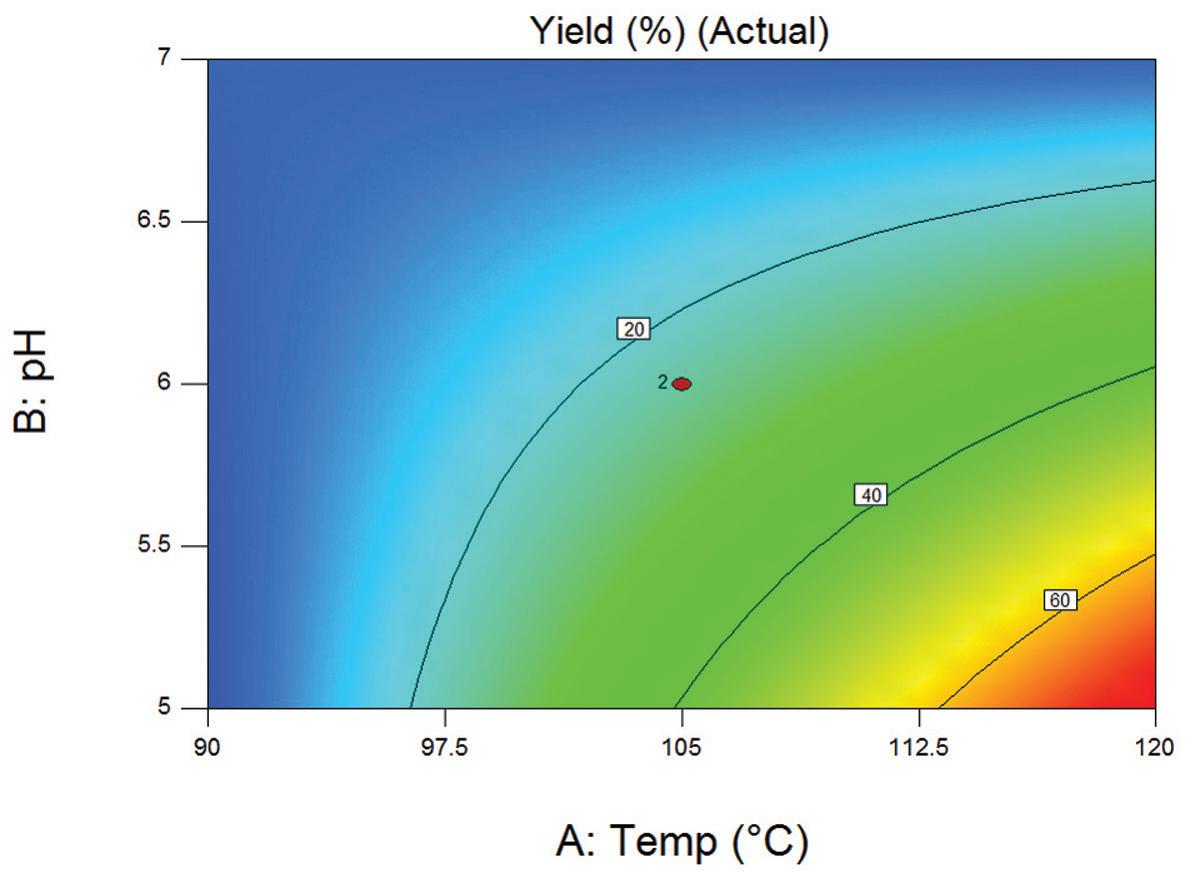

As shown in Figure 1, the factors in the experiment were reaction temperature (90°C to 120°C), pH (5 to 7), reaction time (180 to 360 minutes) and concentration of reactants (2.5 M to 5.0 M). A maximum yield of 74% of N-Cbz-Val-Gly(OMe) was obtained with a reaction temperature of 120°C, a pH of 5, a reaction time of 360 minutes and a reactant concentration of 2.5 M. Figure 2 shows the data analyzed in a Pareto chart, which indicates a strong interaction of pH and temperature that had been masked in the one-factor-at-a-time experiment. The two-dimensional contour plot shown in Figure 3 further shows that the effect of temperature depends dramatically on the pH, which is the key to optimizing the reaction.
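For readers who want to see what a four-factor resolution IV screening design looks like, here is a minimal Python sketch. It builds the standard half-fraction of a two-level, four-factor design using the generator D = ABC and maps coded levels to the factor ranges quoted above. The generator choice, the dictionary keys and the two added center points (to reach 10 runs) are assumptions for illustration; the article does not state how Design-Expert arrived at its 10 runs.

```python
from itertools import product

# Factor ranges from the article: (low, high)
factors = {
    "temp_C": (90.0, 120.0),
    "pH": (5.0, 7.0),
    "time_min": (180.0, 360.0),
    "conc_M": (2.5, 5.0),
}


def half_fraction():
    """2^(4-1) design: full factorial in A, B, C with D set to A*B*C."""
    return [(a, b, c, a * b * c) for a, b, c in product((-1, 1), repeat=3)]


def to_actual(coded_run):
    """Map coded levels (-1, 0, +1) onto each factor's real range."""
    return {
        name: lo + (x + 1) / 2 * (hi - lo)
        for (name, (lo, hi)), x in zip(factors.items(), coded_run)
    }


design = half_fraction()
center = (0, 0, 0, 0)
runs = design + [center, center]  # assumption: two center points, 10 runs total

for run in runs:
    print(run, to_actual(run))

# Resolution IV property: main effects are clear of two-factor interactions,
# but two-factor interactions are aliased in pairs (here AB with CD).
ab_equals_cd = all(a * b == c * d for a, b, c, d in design)
a_equals_bc = all(a == b * c for a, b, c, d in design)
print(ab_equals_cd, a_equals_bc)
```

Under these assumptions the coded run (+1, -1, +1, -1) corresponds to 120°C, pH 5, 360 minutes and 2.5 M, the combination the article reports as giving the maximum yield.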

The results also showed a single peak in the chromatogram indicating a lack of racemization under the optimized reaction conditions. Racemization refers to the conversion of an enantiomerically pure compound (one where only one enantiomer is present) into a mixture of the enantiomers. A lack of racemization is valuable in pharmaceutical manufacturing applications because it eliminates the need for a subsequent operation to separate the enantiomers.

Figure 1

Fractional factorial design showing four independent variables along with actual and predicted yield. The design is shown in standard order for illustration purposes, but was actually run in random order as shown in the Run column.


Figure 2

Pareto chart showing the significant process variables.

Figure 3

Two-dimensional contour plot showing the effect of temperature and pH on compound yield.

Building up the peptide chain

The optimized reaction conditions were used to produce a number of N-Cbz-dipeptides and also for a buildup of the peptide chain. The treatment of N-Cbz-Val-Gly(OMe) with NaOH in acetone–water, followed by the reaction of the resulting N-Cbz-Val-Gly(OH) with L-leucine(OMe)-HCl, resulted in the formation of N-Cbz-Val-Gly-Leu(OMe). Ester hydrolysis and the reaction of N-Cbz-Val-Gly-Leu(OH) with L-Ala(OMe)-HCl at 120°C and pH 5 resulted in N-Cbz-Val-Gly-Leu-Ala(OMe). Although the peptide was elongated in a stepwise fashion, the sequence of amino acids in N-Cbz-Val-Gly-Leu-Ala(OMe) was verified with the help of the fragmentation pattern in the mass spectrum and nuclear magnetic resonance (NMR) spectra. For comparison of the optical rotation, N-Cbz-Val-Gly-Leu-Ala(OMe) was also prepared through a conventional method. These results were used to develop a synthetic protocol for production of peptides. The practicability of the method was also verified by synthesizing a pentapeptide. Starting with N-Cbz-Gly, the stepwise addition of Pro, Val, Ala and Ile resulted in the formation of a pentapeptide with a 55% overall yield.

In conclusion, DOE was used to demonstrate that temperature and pH function synergistically in the process of peptide bond formation. The optimized reaction was used to achieve sequence-specific and non-racemized synthesis of a tetrapeptide and pentapeptide at high yields. This is believed to be the first published report of constructing sequence-specific peptides in a noncatalyzed reaction. Besides demonstrating a possible pathway for the creation of proteins from nonliving matter, it may also prove to be an economical method for commercial peptide synthesis.

Palwinder Singh is Professor, Department of Chemistry, Guru Nanak Dev University

Dr. Manpreet Bhatti is Assistant Professor, Department of Botanical and Environmental Sciences, Guru Nanak Dev University


by Dmitri Petrov and Denis Kourakin

Three Industrial Revolutions Coming from Speedy, Cheap Genome Technology

In just over half a century, humanity has witnessed microwave-sized car phones and refrigerator-sized computers rapidly evolve into a single handheld device that is millions of times more powerful than all of NASA’s combined computing capabilities in 1969. The eye-watering speed of technological advancement for personal devices has been impossible to ignore, but many people are unaware that genome sequencing technology has actually come further, faster - and isn’t slowing down. The results will soon be revolutionizing society as we know it.

Back in 2009, it took several weeks and cost thousands of dollars for a lab to determine the sequence of a genome. Now, it can be done with the same level of scientific accuracy in a matter of days. Home-based ‘spit tests’ can provide limited genetic information for under $125. Today the worldwide sequencing market is estimated at US$14.71 billion, just a decade since the technology was first introduced commercially.

The reduced cost of sequencing has increased researchers’ and hobbyists’ access to genomic information, which has led to a major increase in innovative breakthroughs in the field. These discoveries have the power to change the course of humanity in just the next few years. Here are three industries that’ll be impacted:

Welcome to personalized medicine

Personalized medicine, or genomic medicine, was born out of the Human Genome Project, completed in April 2003, which sequenced the entire human genome. According to the National Human Genome Research Institute (NHGRI), the organization behind the effort, it can be defined as “an emerging medical discipline that involves using genomic information about an individual as part of their clinical care (e.g. for diagnostic or therapeutic decision-making) and the health outcomes and policy implications of that clinical use.”

Since its origin, DNA sequencing applied to personalized medicine has furthered the understanding, diagnosis and treatment of countless diseases, including various forms of cancer, HIV/AIDS, heart disease, rheumatoid arthritis, multiple sclerosis and cystic fibrosis among others. It has also allowed us to better track infectious outbreaks, and will increasingly be involved in sci-fi level preventative medicine based on genetic predispositions for disease expression.

This means that very soon, analysis of your unique genomic data may be used to predict which treatment option is likely to be most effective for you, how you are likely to respond, and how to take preventive action if you carry a genetic mutation that increases the risk of developing a disease. A study from August 2017 demonstrates that whole-genome sequencing can even be beneficial for primary care visits. In the study, researchers found that doctors could more accurately diagnose and prescribe treatment for patients who knew both their family history information and their DNA sequences.

With ‘at home’ DNA testing kits becoming more and more popular, the use cases and the positive outcomes they deliver are only set to grow. In fact, a report from Credence Research forecasts the direct-to-consumer genetic testing market to grow to $340 million by 2022, and AncestryDNA recently announced that it had reached an impressive six million people in its database. But if the popularity of companies like 23andMe and Ancestry still has you unimpressed by the idea of the future of genomic medicine, you may not be aware that you will soon be able to take a census of not just your genome, but of all the living things inside you.

High-throughput next-generation DNA sequencers and bioinformatics already make it possible to identify the bacterial species that live in your gut. Research has only begun to understand the complex effects these microbial communities have on our health, behaviour, and even mental processes. The future of this research is guaranteed to lead to advancements in health and increase the precision and personalization of modern medicine.

Farming with scientific precision

DNA sequencing isn’t just about the bacteria in your gut though, but also what you put into it on a daily basis. Our food system is entirely reliant on the success of billions of fractionable chemical and biological interactions that ultimately result in edible and nutritious food for our ever-growing global population, which the United Nations expects to reach 9.8 billion by 2050.

Organized farming is the backbone of that food system and it requires detailed understanding of soil health and fertility to optimise yields, decide which crops to grow and develop fertiliser and pesticide strategies. In large part, these soil tests are currently limited to the chemical analysis of things like soil pH, phosphorus, nitrogen and potassium.

But soil is much more than simply its chemical composition, as it contains millions of species of bacteria, fungi and archaea - together, these represent the most diverse and intricate ecosystems on earth. This species data is potentially much richer than any possible chemical analysis, and as the genomic understanding of microscopic environments evolves, it will have important implications for food production and environmental stewardship. The information yielded from this genetic sequencing of soil microbiomes could possibly then be used to identify the root causes of disease in crops, and improve treatment decisions.

As the databases of genetic information grow, it will be possible to use genomic information to more accurately identify environmental contaminants, and use strategic species introduction into soil environments to organically metabolise a wide variety of pollutants in soils. Additionally, similar to the previously mentioned genome-based disease identification for humans, it will be possible to identify causes of disease in crops and develop less toxic treatment strategies. This would all contribute to higher crop yields, more sustainable environmental practices, and overall greater food security - which is particularly important considering the estimate that we could run out of food for our growing global population as soon as 2027.

Biological engineering

Considering the impending global food shortage, DNA sequencing has shown promise to save the day in yet another way - through biological engineering, or more specifically, synthetic biology - with the creation of lab-grown meats. But that’s just one example. This field of science encompasses the design and fabrication of all biological components and systems that do not already exist in the natural world. At its core, it’s the process of tinkering with DNA to achieve a wide variety of biologic materials and/or organisms, to apply towards an unimaginable number of uses.

Millions of dollars are currently being invested in companies researching or currently iterating on a wide range of biologic creations, from consumable, bioreactor-brewed “clean” meat that is produced without the slaughter of animals in as little as four weeks, to lab-grown organs that could revolutionize organ replacement. While the industry is still young, an investment report by SynBioBeta showed that over US$1 billion was invested in synthetic biology companies in 2016 alone.

In December 2017, Boston biotech start-up Ginkgo Bioworks raised $275 million to support its vision of bringing synthetic biology to the mainstream, marking it as “perhaps the most heavily funded synthetic bio company ever.” The investment in this company highlights the expectation that practical applications of synthesized biological materials and engineered organisms are set to heavily impact the previously mentioned health care, food and farming industries, as well as the pharmaceutical, biofuel, retail, textile, and several other industries.

The increased speed and reduced cost of DNA sequencing technology for researchers has already given rise to many impressive scientific breakthroughs, but the business models supporting this research are still in their infancy. The current DNA sequencing market reflects mostly early adopters and pioneers, and is expected to grow at a near exponential rate as the technology continues to become more affordable and accessible for broader audiences.

With every passing minute, genetic data banks are storing more genetic information, creating larger libraries of increasingly complete genetic material, and the technologies are becoming more efficient. This means that very soon, these cutting edge scientific discoveries will cease to be novel, and instead, become revolutionary new ways forward for modern society.

Dmitri Petrov is the co-founder of NGX Bio and a lab head at Stanford University.

Denis Kourakin is CEO of NGX Bio - a company which provides access to any sequencing platform globally - fast and cost-effectively.
