
Bringing AI to the Rugged Edge

By Jim Ison, Vice President, One Stop Systems

Bridging the gap between sensors and high-performance compute power is a growing challenge, especially in systems where quick, complex decisions are vital.

The concept of “the edge” in embedded systems has taken on new urgency in the federal space. Handling the burgeoning volume of high-speed, vital data with AI processing powerful enough for immediate, complex decisions and reactions could be likened to stuffing a data center under the seat of a helicopter (if only!). “The edge” can be defined as “where it’s happening,” and in government systems, that’s the field. Traditionally, the problem of linking high-speed sensors and actuators with powerful AI resources has been addressed with high-speed data communications. But that approach has serious limitations in field operations, where package size, speed, mobility, and reliability are paramount.

This compound challenge combines truly enormous processing power with multi-terabyte data storage, internal data routing, high external data acquisition bandwidth, and blazing-fast networking. All of this must then be packaged into a standard rack-mount chassis that can remove the very large amount of heat generated. In addition, the package must be ruggedized against shock and vibration, accept a variety of power source connections, and be easy to set up and install. It literally means making data center hardware available at the rugged edge.

There have, of course, been previous efforts to meet the demands of AI at the edge, but so far none has met all the challenges of a true “under the seat” implementation. Several have incorporated sufficient computing power, mostly in the form of GPUs that can handle the parallel inferencing inherent in AI. However, some have not met small form factor requirements. Those that have, have not been able to package enough hardware or supply the needed cooling. And some designs that meet many of the other needs still lack the ruggedization required for land vehicles and aircraft.

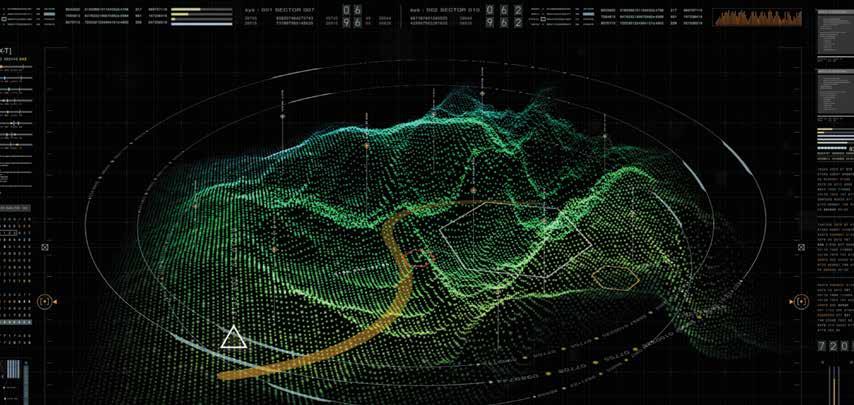

One feature they all seem to agree on and implement is a high-performance graphics processor, or GPU. GPUs excel not only at the floating-point operations needed in graphics but also at the parallel operations required for AI inference. The GPU that almost all AI-at-the-edge implementations focus on is the NVIDIA A100 Tensor Core GPU (Figure 1). The A100 can be partitioned into up to seven GPU instances and offers up to 2 terabytes per second of memory bandwidth.

Figure 1: The NVIDIA A100 Tensor Core GPU provides up to 20X higher performance than the prior generation and can be partitioned into seven GPU instances to dynamically adjust to shifting demands. It is available in 40GB and 80GB memory versions.

Deep learning and AI algorithms depend heavily on parallel processing, and each A100 has 6,912 NVIDIA® CUDA® cores. These cores harness the GPU’s parallel processing power for distributed algorithms in which each unit works independently of the others and the results are combined and coordinated to produce complex decisions and inference results. The A100 is also increasingly the processor of choice in actual data center implementations, which opens up a world of portable and easily adaptable software.
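The “independent units whose results are combined” pattern is easiest to see in miniature. On the A100, thousands of CUDA threads apply it in hardware; the Python sketch below only illustrates the split, process, and combine structure on ordinary CPU threads, and the function names are hypothetical, not part of any NVIDIA or OSS API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Sequence


def partial_sum_of_squares(chunk: Sequence[float]) -> float:
    """Work done by one independent unit: it reads only its own chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: Sequence[float], workers: int = 4) -> float:
    """Split data into chunks, process them independently, then combine."""
    if workers < 1:
        raise ValueError("workers must be >= 1")
    size = -(-len(data) // workers)  # ceiling division so no element is dropped
    chunks = [data[i:i + size] for i in range(0, len(data), size)] if data else []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    # Combine step: the independent partial results are reduced to one answer.
    return sum(partials)
```

For example, `parallel_sum_of_squares([1.0, 2.0, 3.0, 4.0, 5.0], workers=2)` processes `[1, 2, 3]` and `[4, 5]` separately and returns `55.0`. No chunk depends on another, which is what lets the work scale across many cores.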

Enter the OSS Rigel System

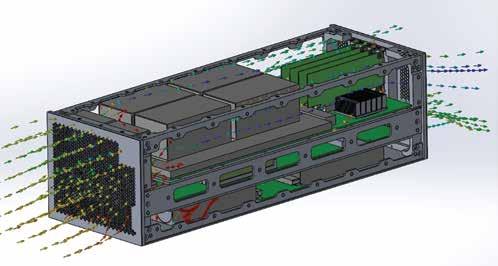

First, the major news: One Stop Systems has managed to fit a fully functional 4-GPU system with networking, storage, and power supply into a 4U, air-cooled, ruggedized package; two of these mounted side by side take up a single rack width (Figure 2). Named the Rigel Edge Supercomputer, the result can be called “AI on the Fly.”

The 4U chassis is divided into three layers. The bottom power layer contains redundant power supplies, two 24 V, 1,300 W units and two 54 V, 2,000 W units, providing 3,300 W (1,300 W + 2,000 W) of redundant supply capacity. Each supply offers several external connection options to accommodate different installation scenarios. The extended vehicular input power range covers 48 to 270 VDC, 110 to 220 VAC, and three-phase power at 400 to 800 Hz. The power layer alone presents its own cooling challenges, which are addressed by the overall fan and fan-control system.
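One way to read the supply arithmetic is as two 1+1 redundant rails, where losing one supply on each rail still leaves 3,300 W. That pairing is an assumption, not something the article spells out. The sketch below models it, along with the current each rail must carry (I = P / V); the `Rail` class is hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Rail:
    volts: float
    watts_per_supply: float
    supplies: int          # supplies installed on this rail
    redundant_spares: int  # supplies held in reserve (1 for a 1+1 pair)

    def usable_watts(self) -> float:
        """Capacity that remains even if every spare supply has failed."""
        return self.watts_per_supply * (self.supplies - self.redundant_spares)

    def max_current_amps(self) -> float:
        """Current the rail delivers at its usable capacity (I = P / V)."""
        return self.usable_watts() / self.volts


def usable_total(rails: Iterable[Rail]) -> float:
    return sum(r.usable_watts() for r in rails)


# Assumed configuration: each rail is a 1+1 redundant pair.
RAILS = [
    Rail(volts=24.0, watts_per_supply=1300.0, supplies=2, redundant_spares=1),
    Rail(volts=54.0, watts_per_supply=2000.0, supplies=2, redundant_spares=1),
]
```

Under this reading, `usable_total(RAILS)` is 3,300 W. The 54 V rail carries about 37 A at full load, while the 24 V rail carries about 54 A, which is one reason higher-voltage rails are favored for high-power loads.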

The middle layer is the server layer, which provides switching and control for the main GPUs and for the PCIe and network interfaces. It uses two Broadcom PEX 88080 switches to form a PCIe Gen 4 ExpressFabric matrix. The switches enable I/O sharing through standard SR-IOV or multifunction capability, allowing multiple hosts to share devices, such as the GPUs here, over a single PCIe fabric topology. Hosts communicate with other standard hosts and endpoints through generic DMA or non-transparent (NT) bridging under application software control.

The PCIe networking interfaces and traffic are controlled by an AMD EPYC 7003 series processor, which offers industry-leading I/O, 7 nm x86 CPU technology, and an integrated on-die security processor. The CPU has access to 512 GB of DDR4 DRAM. EPYC 7003 CPUs provide up to 32 MB of L3 cache per core; 4-, 6-, and 8-channel memory interleaving for better economy and performance in multiple-DIMM configurations; and synchronized fabric and memory clocks, all of which drive faster, more efficient data flow. The 7003 in Rigel offers 82 lanes. The result is massive data acquisition bandwidth for large-scale sensor data flows, up to 32 GB/s via PCIe, along with high-speed network connectivity of up to 800 Gbit/s.
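The 32 GB/s figure matches a single x16 PCIe Gen4 link, which is assumed here since the article does not name the link width. Gen4 signals at 16 GT/s per lane with 128b/130b encoding. The sketch below reproduces that number and converts the 800 Gbit/s network figure to bytes. It ignores packet-protocol overhead, so delivered throughput will be somewhat lower.

```python
def pcie_gen4_gbytes_per_s(lanes: int) -> float:
    """Per-direction data rate of a PCIe Gen4 link after line encoding, in GB/s.

    Gen4 runs at 16 GT/s per lane with 128b/130b encoding. TLP/DLLP protocol
    overhead is not modeled, so real payload throughput is somewhat lower.
    """
    gt_per_s = 16.0
    encoding_efficiency = 128 / 130
    return gt_per_s * encoding_efficiency * lanes / 8  # bits -> bytes


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a link rate in Gbit/s to GB/s."""
    return gbit_per_s / 8
```

`pcie_gen4_gbytes_per_s(16)` gives about 31.5 GB/s, which rounds to the quoted 32 GB/s. `gbit_to_gbyte(800)` gives 100 GB/s, so the network path is roughly three times wider than one x16 acquisition link.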

Figure 2: The Rigel Edge Supercomputer from One Stop Systems combines a GPU layer with a middle server layer and a bottom power layer in a 4U, ½-width, air-cooled chassis.

The four NVIDIA A100 GPUs on a single board represent a monumental concentration of compute power, along with a power and heat dissipation challenge that the Rigel design solves. The integrated server board with four GPUs can accept high-resolution image data from 1,600 sensors at 1 Gbit/s each. It can then process and analyze the data, performing Fast Fourier Transforms for frequency-domain calculations before converting the data back into the time domain, and store up to 264 TB. All this happens at a rate of up to 5 PetaOPS, that is, 5 × 10¹⁵ operations per second!
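The article does not say how Rigel implements its FFT stage. On the A100 this would typically run in a GPU library such as cuFFT. The pure-Python sketch below only illustrates the time-to-frequency-and-back round trip, using a textbook radix-2 Cooley-Tukey FFT. A simple low-pass filter, chosen here purely for illustration, stands in for the unspecified frequency-domain calculation.

```python
import cmath
import math
from typing import List


def fft(x: List[complex]) -> List[complex]:
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a nonzero power of two")
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def ifft(spectrum: List[complex]) -> List[complex]:
    """Inverse FFT via the identity ifft(X) = conj(fft(conj(X))) / N."""
    n = len(spectrum)
    return [v.conjugate() / n for v in fft([s.conjugate() for s in spectrum])]


def low_pass(samples: List[float], cutoff_bin: int) -> List[float]:
    """Time -> frequency, zero every bin above cutoff_bin, frequency -> time."""
    n = len(samples)
    spectrum = fft([complex(s) for s in samples])
    for k in range(n):
        # Bin k and its mirror n - k represent the same physical frequency.
        if min(k, n - k) > cutoff_bin:
            spectrum[k] = 0j
    return [v.real for v in ifft(spectrum)]
```

Feeding `low_pass` a 64-sample mix of a slow sine (bin 2) and a fast sine (bin 20) with `cutoff_bin=5` returns the slow sine alone, to within floating-point error. The same transform, operate, and inverse-transform structure applies whatever the real frequency-domain processing is.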

Each A100 GPU can be partitioned into up to seven Multi-Instance GPU (MIG) instances. Each is a hardware-isolated partition with its own compute cores, memory, and cache, giving a total of 28 across the system. The 27,648 CUDA cores (6,912 per GPU) are directed by the algorithms and fed by the 7003-powered data flow system, which includes the network-connected sensors and brings edge and AI together as never before. CPU-controlled data traffic, PCIe-enabled data acquisition, GPU processing power, and software-directed massive parallelism add up to an awesome mix in a small box. And it’s that small box that marks Rigel’s final achievement in realizing AI on the Fly.
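The system-level totals follow from NVIDIA’s published per-GPU A100 figures. The sketch below assumes that the headline 5 PetaOPS refers to INT8 Tensor Core throughput with structured sparsity (1,248 TOPS per A100). The article does not state the precision, so treat that mapping as an inference.

```python
# Per-GPU NVIDIA A100 datasheet figures.
A100_CUDA_CORES = 6912         # FP32 CUDA cores per GPU
A100_MAX_MIG_INSTANCES = 7     # maximum MIG partitions per GPU
A100_INT8_SPARSE_TOPS = 1248   # INT8 Tensor Core TOPS with structured sparsity


def system_totals(gpus: int) -> dict:
    """Aggregate per-GPU figures for a system with `gpus` A100s."""
    return {
        "cuda_cores": gpus * A100_CUDA_CORES,
        "mig_instances": gpus * A100_MAX_MIG_INSTANCES,
        # 1,000 TOPS = 1 PetaOPS
        "int8_sparse_petaops": gpus * A100_INT8_SPARSE_TOPS / 1000,
    }
```

For Rigel’s four GPUs, `system_totals(4)` gives 27,648 CUDA cores, 28 MIG instances, and about 4.99 PetaOPS. That is consistent with the “up to 5 PetaOPS” figure under the stated assumption.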

That achievement is thermal, because AI couldn’t fly if it overheated. Mechanical and thermal design are vital to meeting the size and performance goals of such an ambitious system, which must work reliably in transportable environments where shock and vibration are a given. These include autonomous vehicles and government aircraft, where units are deployed under a seat or in a utility or trunk area. For mobile use and fixed installations alike, they need to fit standard equipment racks as well as transit cases, all with sufficient power access and cooling (Figure 3).

The fans are 80 × 38 mm and are deliberately offset from the chassis centerline to align with the heat sink inlets. Sheet metal ducting directs the airflow even more effectively. Current design data indicates that delivering 50 CFM or more to the GPU inlet would be sufficient to cool a 500 W GPU at an ambient temperature of 35 °C under constant maximum power draw. Many experts were skeptical that this was possible, but it proved achievable.
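A standard sensible-heat balance, Q = ṁ·c_p·ΔT, shows why 50 CFM is a plausible figure. The sketch assumes that all 500 W leaves in the air passing through that inlet, with no bypass, and treats air as a dry ideal gas. It estimates the rise in exhaust air temperature, not the GPU die temperature, which also depends on heat-sink thermal resistance that the article does not give.

```python
CFM_TO_M3_PER_S = 0.000471947  # 1 cubic foot per minute in m^3/s
R_AIR = 287.05                 # specific gas constant of dry air, J/(kg*K)
CP_AIR = 1006.0                # specific heat of air at constant pressure, J/(kg*K)
SEA_LEVEL_PA = 101325.0        # standard sea-level pressure, Pa


def air_temperature_rise(heat_w: float, flow_cfm: float, inlet_c: float,
                         pressure_pa: float = SEA_LEVEL_PA) -> float:
    """Steady-state air temperature rise in K, assuming all heat goes into the air."""
    density = pressure_pa / (R_AIR * (inlet_c + 273.15))  # ideal-gas density, kg/m^3
    mass_flow = flow_cfm * CFM_TO_M3_PER_S * density      # kg/s
    return heat_w / (mass_flow * CP_AIR)
```

At sea level, `air_temperature_rise(500, 50, 35)` is about 18.4 K, so the exhaust air leaves at roughly 53 °C. Because the rise scales inversely with air density, the same airflow at 70 kPa (a plausible cabin or altitude pressure) gives a rise about 1.45 times larger. This is one reason airborne installations need margin above the 50 CFM minimum.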

The Rigel design is also forward-looking in that it uses widely deployed NVIDIA and ASRock/AMD PCIe Gen4 technology, which makes it readily scalable to Gen5 and Gen6. It also comes ready to run out of the box, with simple setup and a unified management GUI that provides ready access to both hardware and software upgrades. This meets information officers’ demands for a “single pane of glass” monitoring and management system that supports recent APIs such as Redfish, for a truly software-defined data center. AI on the Fly is now ready to take off.

Figure 3: Air cooling in the Rigel includes directed air flow over specially designed multi-level heat sinks for the GPUs as well as directed flow over all three layers.
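Redfish is the DMTF’s RESTful management standard. A management client typically reads temperatures from a chassis’s `Thermal` resource, where each entry in `Temperatures` carries `ReadingCelsius` and, optionally, `UpperThresholdCritical`. Newer schema releases also offer `ThermalSubsystem` and `Sensors` resources. The sketch below parses a hypothetical response body rather than querying a live BMC. The sensor names and thresholds are invented for illustration and are not Rigel’s actual endpoints, and the authenticated HTTPS request is omitted to keep the example offline.

```python
import json
from typing import List, Tuple

# Hypothetical body of GET /redfish/v1/Chassis/<id>/Thermal.
# Real chassis IDs, sensor names and thresholds depend on the vendor's BMC.
SAMPLE_THERMAL = json.loads("""
{
  "Temperatures": [
    {"Name": "GPU0 Temp", "ReadingCelsius": 71, "UpperThresholdCritical": 85},
    {"Name": "GPU1 Temp", "ReadingCelsius": 88, "UpperThresholdCritical": 85},
    {"Name": "Inlet Temp", "ReadingCelsius": 35, "UpperThresholdCritical": null}
  ],
  "Fans": [
    {"Name": "Fan1", "Reading": 9800, "ReadingUnits": "RPM"}
  ]
}
""")


def over_critical(thermal: dict) -> List[Tuple[str, float]]:
    """Return (name, reading) for each sensor at or above its critical threshold."""
    alerts = []
    for sensor in thermal.get("Temperatures", []):
        reading = sensor.get("ReadingCelsius")
        limit = sensor.get("UpperThresholdCritical")
        # Sensors without a published threshold (null) are skipped.
        if reading is not None and limit is not None and reading >= limit:
            alerts.append((sensor.get("Name", "unknown"), reading))
    return alerts
```

With the sample payload, `over_critical(SAMPLE_THERMAL)` flags only `("GPU1 Temp", 88)`. A “single pane of glass” console would run the same kind of check across every chassis it manages.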