In the exciting world of technology, with robots and computers taking center stage, learning how to talk their language is super important! From doctors using AI to diagnose diseases to scientists using robots to explore space—computers are everywhere! To keep up with this amazing age, just knowing how to use a computer isn’t enough. The bustling world of technology thrives on innovation, and that’s where artificial intelligence (AI) comes in.

Upon request from some schools, Uolo introduces the Artificial Intelligence (AI) series for Grades 9 and 10. The objective of this special series is to expose learners to the essential elements of AI and introduce them to its common domains and applications. The series also introduces the fundamentals of AI through engaging activities that can be done in a computer lab or on a computer at home. Thus, this book serves as a gateway to exploring the fascinating depths of AI. Get ready to embark on an intellectual adventure as you unravel the mysteries of AI and its potential impact on your future.

Each book comprises a defined set of chapters with detailed theoretical explanations. The concepts have been explained keeping the context of learners in mind, so that the topics are relatable, engaging, and exciting. The in-chapter activities give students practical experience with various AI concepts and domains, facilitating a deeper understanding.

To reinforce the takeaways of each chapter, the chapter-end summary lists the key points covered, giving an overview of the entire chapter at a single glance. Exercises at the end of each chapter include various question types, like fill in the blanks, true or false, multiple-choice, short answer, long answer, and application-based questions. To further enhance student proficiency, the chapters end with an unsolved activity which provides additional practice of the AI concepts.

We hope this series ignites the learners’ curiosity and empowers them to become informed participants in the ever-evolving world of AI.

You must have seen that while texting a friend using your smartphone, the predictive text fills in the rest of the phrase even before you get to the end of the sentence. Similarly, while shopping online, you get product recommendations based on your shopping history. Or when you are out for a jog, your smartwatch helps you track your fitness goal by giving useful information like the number of steps. All of this is possible because of Artificial Intelligence (AI), a technology that has transformed how we live, work, and interact with machines all around us.

Being intelligent means being good at learning, problem-solving, thinking, remembering, communicating, and making choices. It also involves being creative and managing emotions well.

Here are some examples of how smart people use their intelligence in different ways:

Problem-solving: A person with high intelligence can solve complex problems efficiently and effectively. For example, an engineer might use his/her intelligence to develop innovative solutions to technical problems.

Creativity: People with high intelligence tend to be creative and can come up with unique ideas and solutions. Artists, for instance, may use their intelligence to create thought-provoking pieces of art that challenge viewers’ perspectives.

Invention: People with very high intelligence can invent new things in different areas. For example, the renowned physicist Albert Einstein used his intelligence to make significant contributions to science and technology.

Just as we use our intelligence to do everyday tasks and become better at them, machines are becoming increasingly smart thanks to Artificial Intelligence (AI). AI can be defined as a branch of computer science concerned with creating intelligent machines that can learn from data, solve problems, and make decisions. It involves the study of principles, concepts, and technologies that enable machines to exhibit human-like intelligent behaviour.

AI involves using special programs and techniques that enable computers to learn and perform tasks. AI gives machines the ability to think smartly, solve problems, and perform a variety of activities.

John McCarthy, who is regarded as the father of AI, defined AI as “the science and engineering of making intelligent machines.”

Human intelligence and Artificial intelligence differ in several ways. Let us learn about some of these differences.

| Aspect | Human Intelligence | Artificial Intelligence |
|---|---|---|
| Origin | Human intelligence originates from the complex neural networks and cognitive processes of the human brain. | AI is developed by programming algorithms and computing systems within machines. |
| Learning | Human intelligence is shaped through experiences, education, and interactions with the environment. | AI learns from data fed into algorithms, with limited ability for contextual understanding and human-like cognitive abilities. |
| Adaptability | Human intelligence possesses a special ability to adapt to new situations, learn from mistakes, and apply knowledge creatively. | AI adapts based on its programmed algorithms and the data it processes, but lacks human-like adaptability and creativity. |
| Emotion and Consciousness | Human intelligence is linked to emotions and morality, which influence how we make decisions and act. | AI has no emotions or morality, relying purely on programmed instructions and data processing. |
| Limitations | Human intelligence has constraints such as limited processing power, tiredness, and emotional states. | AI may struggle with tasks that require common sense, intuition, or a nuanced understanding of human behaviour. |
| Evolution | Human intelligence evolved through adaptations to the challenges faced by our early human ancestors in their environments. | AI evolves as technology, research, algorithms, and computing power advance. |

While both human intelligence and artificial intelligence share the goal of solving problems and making decisions, they differ significantly in their origins, learning processes, adaptability, emotional aspects, limitations, and evolution.

Artificial Intelligence deals with the study of the principles, concepts, and technology for building machines that can think, act, and learn like humans. Machines possessing AI should be able to mimic human traits, i.e., making decisions, predicting outcomes based on certain actions, learning, and improving on their own. Self-driving cars are one example. A self-driving car integrates various components, such as sensors, cameras, and GPS, with decision-making algorithms to mimic human-like driving behaviour.

Machine Learning is a subset of AI that enables machines to improve at a task with experience. It enables a computer system to learn from the provided data and build models that make predictions or decisions. In the self-driving car example, machine learning algorithms analyse data collected from the car’s sensors and cameras to learn about traffic behaviour, pedestrian movement, etc. This helps the cars improve their driving capabilities over time through experience.
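To make “learning from data” concrete, here is a minimal Python sketch. It is not how any real self-driving car works: it assumes a small, made-up set of speeds and stopping distances and “learns” a straight line through them using ordinary least squares, one of the simplest machine learning techniques. The names `fit_line` and `predict` are our own.

```python
# Minimal machine-learning sketch: learn y = w*x + b from examples
# using ordinary least squares. The data below is made up for illustration.

def fit_line(xs, ys):
    """Return the slope w and intercept b that best fit the (x, y) examples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = how x and y vary together, divided by how much x varies
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict(w, b, x):
    """Use the learned line to predict y for a new x."""
    return w * x + b


# Hypothetical "experience": speed (km/h) and stopping distance (m)
speeds = [20, 40, 60, 80]
distances = [12, 26, 44, 66]

w, b = fit_line(speeds, distances)
print("learned slope:", round(w, 2), "intercept:", round(b, 2))
print("predicted stopping distance at 50 km/h:", round(predict(w, b, 50), 1))
```

Adding more varied examples to `speeds` and `distances` and calling `fit_line` again is a small-scale version of a model improving with experience.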

Deep Learning is a subset of machine learning in which a machine is trained with vast amounts of data. It is an AI function that mimics the working of the human brain, processing information for tasks, like object detection, speech recognition, language translation, and decision-making. In self-driving cars, deep learning is often used for tasks, such as object detection, lane detection, pedestrian recognition, and traffic sign recognition, enabling safe navigation.
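Deep learning models are built from layers of artificial “neurons”. The sketch below is a hypothetical toy, far smaller than any real deep learning model: it has only two layers, and we picked its weights by hand instead of learning them from data. It shows how each neuron adds up its weighted inputs and passes the result to the next layer. Here the network computes XOR, which outputs 1 only when exactly one input is 1.

```python
def step(z):
    """Activation: the neuron 'fires' (outputs 1) if its weighted input is positive."""
    return 1 if z > 0 else 0


def neuron(inputs, weights, bias):
    # Weighted sum of the inputs plus a bias, passed through the activation
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)


def tiny_network(x1, x2):
    # Hidden layer: one neuron behaves like OR, the other like AND
    h_or = neuron([x1, x2], [1, 1], -0.5)
    h_and = neuron([x1, x2], [1, 1], -1.5)
    # Output layer: "OR but not AND" gives XOR
    return neuron([h_or, h_and], [1, -1], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", tiny_network(a, b))
```

In real deep learning, a network has many more layers and neurons, and training adjusts the weights automatically using large amounts of data.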

AI can help computers play games, recognise faces in pictures, respond to our voice commands, etc. AI is basically an umbrella term that has different domains or areas where it can be used. The three main domains of AI are Data, Computer Vision, and Natural Language Processing (NLP). Let us learn about these domains.

Data is a collection of raw facts that can be transformed into useful information. It can be in the form of text, images, audio, or video.

AI improves a computer’s performance by learning from data. In general, the more relevant, good-quality data a machine learns from, the better it performs. AI can process and analyse data, and identify patterns and trends based on its goals. For example, when you play games online, AI applies what it has learnt to make the game more fun and challenging.
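Here is a minimal Python sketch of a game “learning” from a player’s past moves. It is a hypothetical, very simple model, not how the online Tic Tac Toe game actually works: it just counts how often the player picked each cell of the 3x3 grid (numbered 0 to 8, row by row) and predicts the favourite free cell. The class name `MovePredictor` is our own.

```python
from collections import Counter


class MovePredictor:
    """Guesses a player's next tic-tac-toe move from their past choices."""

    def __init__(self):
        self.history = Counter()  # cell -> number of times the player chose it

    def observe(self, cell):
        """Record one move the player actually made (this is the 'data')."""
        self.history[cell] += 1

    def predict(self, free_cells):
        """Return the free cell the player has chosen most often so far.

        Ties, including the case with no history yet, go to the lowest cell number.
        """
        return max(sorted(free_cells), key=lambda cell: self.history[cell])


predictor = MovePredictor()
for move in [4, 4, 0, 4, 8, 0]:  # made-up moves from earlier games
    predictor.observe(move)

print(predictor.predict(range(9)))                  # 4: the centre is the favourite
print(predictor.predict([0, 1, 2, 3, 5, 6, 7, 8]))  # 0: centre taken, next favourite
```

The more moves the predictor observes, the better its counts reflect the player’s habits, which is a tiny example of a machine improving as it gets more data.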

Let us perform the following experiment to understand how AI learns from data.

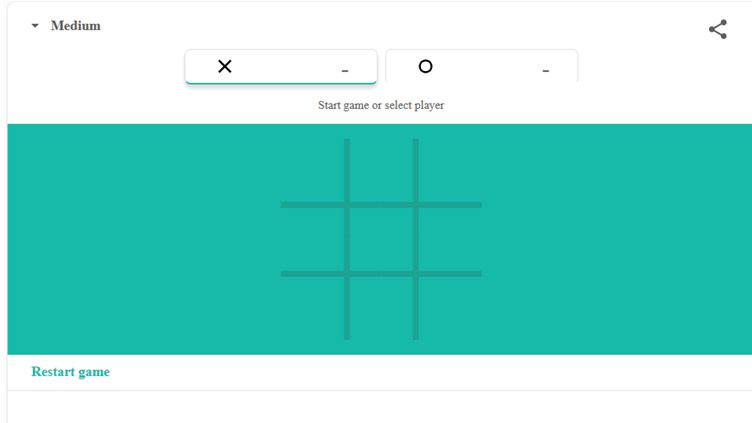

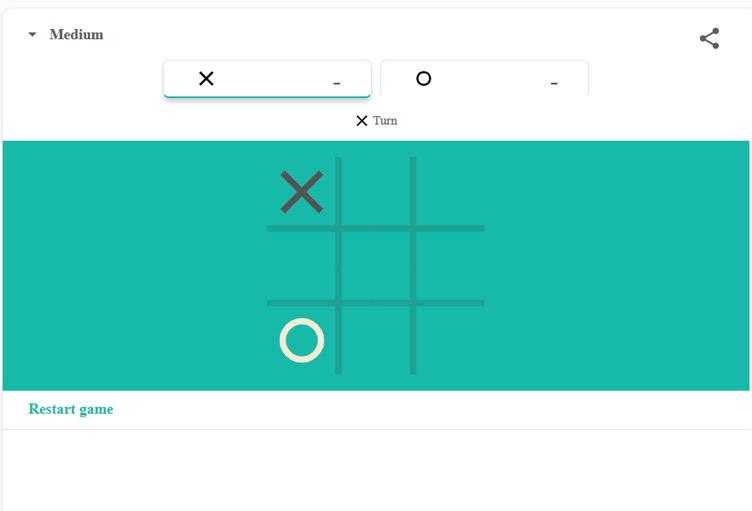

Objective: Tic Tac Toe is an interactive online game in which the AI tries to predict the participant’s next move by learning from their previous moves. It is a digital version of the classic Xs and Os game played on a 3x3 grid. In this game, two players take turns to mark the spaces with either an X or an O. The player who creates a line of three of their symbols horizontally, vertically, or diagonally on the grid wins the game.

Follow the given steps to explore how this application works.

1. Visit the link: https://www.google.com/fbx?fbx=tic_tac_toe

2. The following window appears.

3. Select either ‘X’ or ‘O’ as your input move for the game. Here, we have selected ‘X’, and the AI places an ‘O’ in the grid as its counter move.

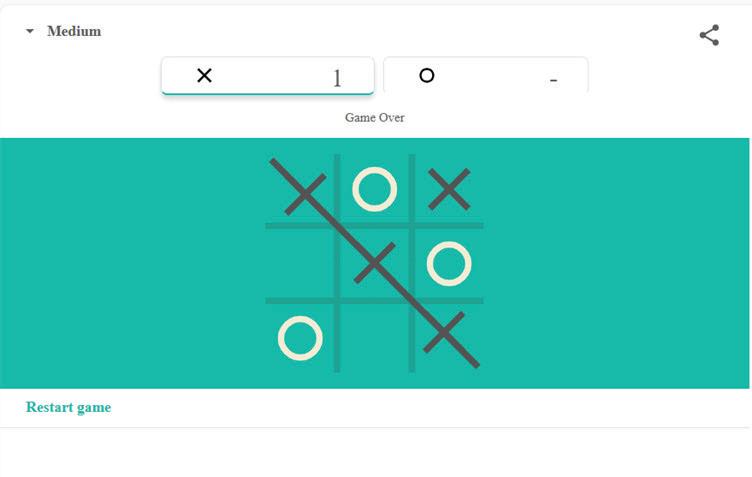

4. Continue the game until three Xs or three Os are drawn in a row, column, or diagonal, or until all nine spaces are filled, which ends the game in a draw.

5. If you win the game, the following screenshot will depict your victory.
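The winning rule described in the objective (three matching symbols in a row, column, or diagonal) can be checked with a short Python sketch. The board layout, a list of 9 cells numbered 0 to 8 row by row, and the function names are our own assumptions for illustration.

```python
# Board: a list of 9 cells, numbered 0-8 row by row, each "X", "O" or "" (empty)
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def winner(board):
    """Return "X" or "O" if that player has three in a line, otherwise None."""
    for a, b, c in WIN_LINES:
        if board[a] and board[a] == board[b] == board[c]:
            return board[a]
    return None


def is_draw(board):
    """A draw: every cell is filled and nobody has three in a line."""
    return all(board) and winner(board) is None


board = ["X", "O", "X",
         "O", "X", "O",
         "", "", "X"]
print(winner(board))  # X wins on the diagonal 0-4-8
```

There are only eight possible winning lines on a 3x3 grid, so listing them all is simpler than computing them.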

Computer Vision is another important domain of AI which uses cameras to see and understand visual information.

A smart home security system makes use of computer vision by using cameras to monitor the environment and keep an eye on activity at the front door. It detects familiar faces to unlock the door for family members and sends alerts for unfamiliar faces.

Let us perform the following experiment to understand how AI uses computer vision to see and understand things.

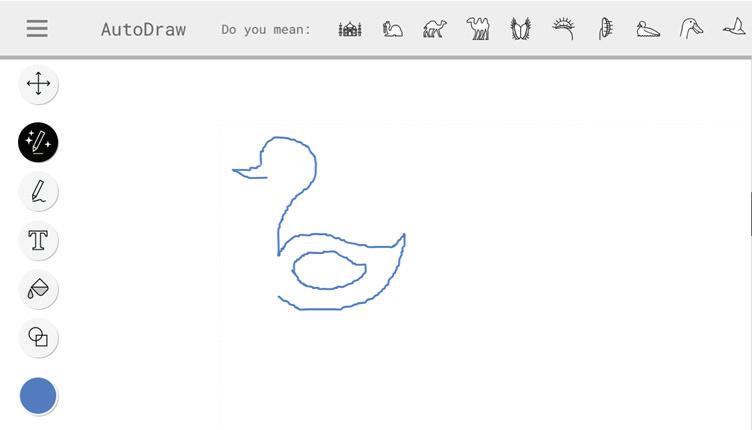

Objective: AutoDraw is a fun and free online drawing tool based on computer vision. It uses AI to guess your drawing and provides suggestions to turn your scribbles into complete drawings.

Follow the given steps to explore how this application works.

1. Visit the link: https://www.autodraw.com/

2. The following window appears.

3. Click on the Start Drawing button.

4. Now, select the AutoDraw tool from the left panel.

5. As soon as you begin to scribble in the drawing area, the AutoDraw tool will make use of computer vision to guess your drawing.

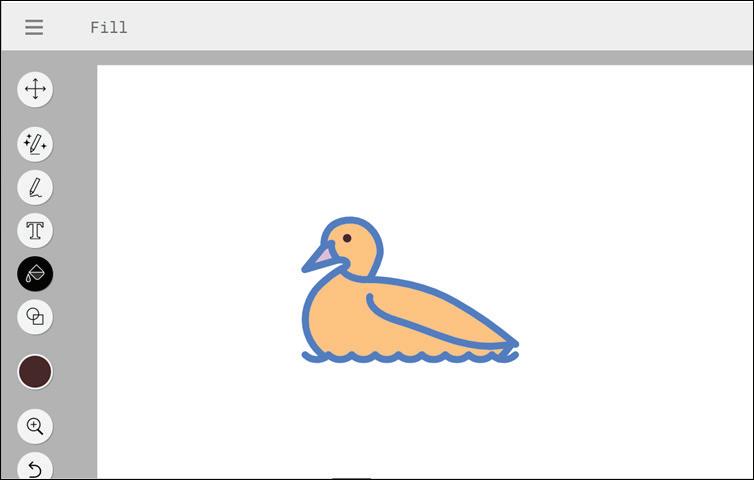

6. Choose the drawing from the list of suggestions provided in the top bar that most closely resembles the one you plan to create.

7. You may further customise your drawing by using the Shape tool to draw a circle for the eyes and fill in colours using the Fill tool.


Natural Language Processing

Natural Language Processing (NLP) is a domain of AI that enables computers to understand human language and generate appropriate responses when we interact with them.

It allows computers to talk to us in a way that feels natural to us. Popular examples of NLP applications include Google Assistant, Siri, Alexa, Google Translate, etc.

Let us perform the following experiment to understand how AI makes use of NLP.

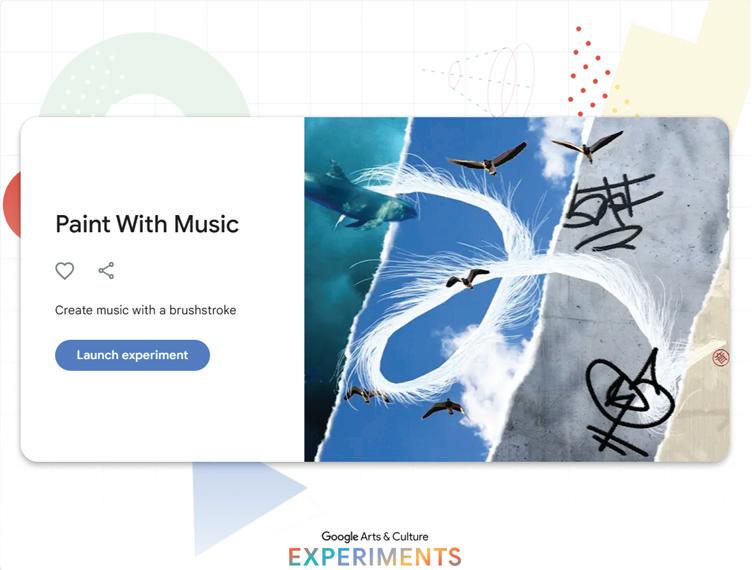

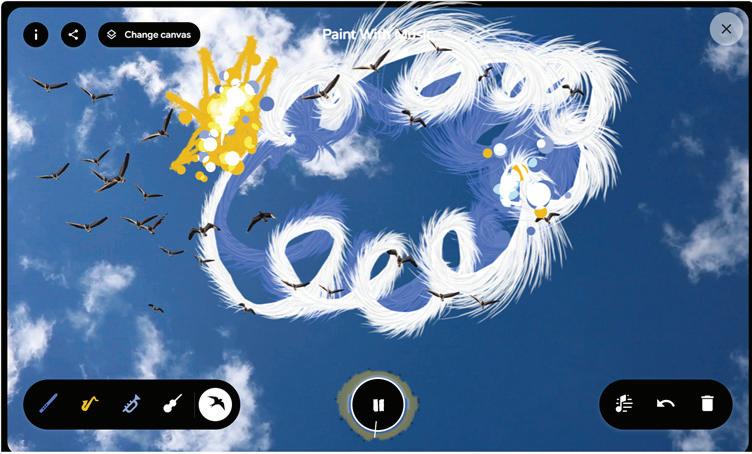

Objective: Paint With Music is an interactive experience that lets you immerse yourself in a fusion of two powerful art forms: painting and musical composition. It translates the movement of your brush strokes into musical notes played by your chosen instrument. You can paint on the given canvases, such as the sky or the ocean.

Follow the given steps to explore how this application works.

1. Visit the link: https://artsandculture.google.com/experiment/YAGuJyDB-XbbWg?cp=eyJhdG1vc3BoZXJlIjoibmF0dXJlIn0

2. The following window appears.

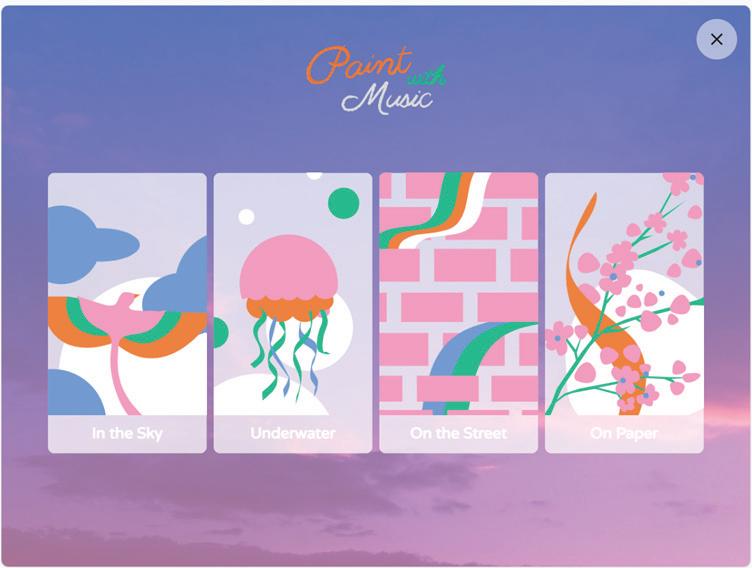

3. Click on the Launch experiment button. It will direct you to a web page, as shown.

4. You can choose any of the given environments for your canvas—sky, underwater, street, or paper. Here, we have selected the sky. After selection, the following screen appears.

5. Choose any one musical instrument to paint on the canvas—flute, saxophone, trumpet, or violin. You can even add an element of nature, such as a bird, to your canvas.

6. You can personalise your artwork by experimenting with various strokes inspired by different instruments.

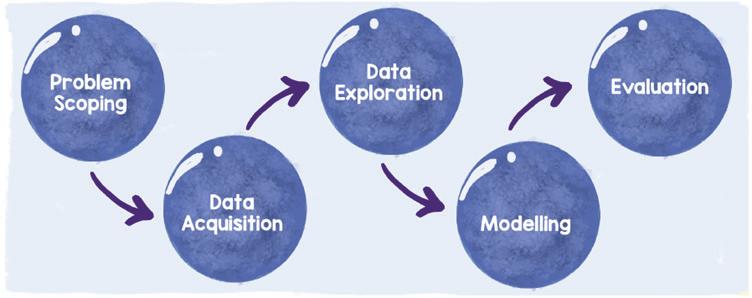

The AI project cycle is a step-by-step method for solving problems using proven scientific approaches. It helps us understand problems better and outlines the necessary steps for making projects successful.

Let us learn about the five important steps involved in an AI Project Cycle.

Problem Scoping: This stage requires defining the problem or goal that the AI project seeks to address. It includes understanding the demands of stakeholders, identifying potential for AI applications, and determining success criteria.

Data Acquisition: This stage identifies appropriate data sources and collects data to assist the AI project. This may involve obtaining data from internal databases, external sources, or gathering new data through sensors, etc.

Data Exploration: Once the data is collected, it is analysed to gain insights into its traits, quality, and appropriateness for analysis. This may involve activities, like data cleaning, data integration, and exploratory data analysis to identify patterns and correlations in the data.

Modelling: During this stage, AI models are developed using machine learning or deep learning techniques. This includes choosing relevant algorithms, using prepared data to train the models, and optimising their performance.

Evaluation: The developed AI models are assessed by testing in real-world scenarios or with validation datasets. Performance metrics are utilised to evaluate the accuracy, dependability, and effectiveness of the models in solving the problem that was defined during the problem scoping stage.

Although these stages cover the essential elements of an AI project cycle, it is crucial to acknowledge that the cycle is often iterative, featuring feedback loops across stages and the possibility of further iterations to enhance the AI solution.
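The five stages can be seen together in a tiny, hypothetical Python project. The problem (predicting whether a student passes from hours studied), the data, the 0.8 accuracy target, and the simple threshold model are all made up for illustration; real AI projects use far more data and richer models.

```python
# 1. Problem scoping: predict pass (1) or fail (0) from hours studied,
#    and call the project a success if validation accuracy reaches the target.
TARGET_ACCURACY = 0.8


# 2. Data acquisition: collect (hours_studied, passed) records
def acquire_data():
    # None marks a missing value, as often happens with real data
    return [(1, 0), (2, 0), (None, 1), (3, 0), (4, 1), (5, 1), (6, 1), (2.5, 0)]


# 3. Data exploration: check the data and clean out incomplete records
def explore(records):
    cleaned = [(h, p) for h, p in records if h is not None and p is not None]
    print(f"kept {len(cleaned)} of {len(records)} records")
    return cleaned


# 4. Modelling: a simple threshold rule (pass if hours >= threshold)
def predict(threshold, hours):
    return 1 if hours >= threshold else 0


def accuracy(rows, threshold):
    """Fraction of rows where the prediction matches the real outcome."""
    correct = sum(predict(threshold, h) == p for h, p in rows)
    return correct / len(rows)


def train(rows):
    # Try each observed number of hours as the threshold; keep the most accurate
    return max((h for h, _ in rows), key=lambda t: accuracy(rows, t))


# 5. Evaluation: test the model on data it did not see during training
rows = explore(acquire_data())
train_rows, validation_rows = rows[:5], rows[5:]
threshold = train(train_rows)
score = accuracy(validation_rows, threshold)
print("learned threshold:", threshold, "hours; validation accuracy:", score)

if score < TARGET_ACCURACY:
    # The cycle is iterative: go back, gather more data or try another model
    print("Below target: revisit earlier stages and try again")
```

Keeping the validation rows separate from the training rows is what makes the evaluation stage meaningful: the model is judged on examples it has not already seen.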

1. Being intelligent means being good at learning, problem-solving, thinking, remembering, communicating, and making choices.

2. Artificial Intelligence (AI) is a concept that gives computers the ability to think like humans.

3. Human intelligence and artificial intelligence differ significantly in their origins, learning processes, adaptability, emotional aspects, limitations, and evolution.

4. Machine learning is a subset of AI that enables machines to improve at a task with experience.

5. Deep learning is a subset of machine learning in which a machine is trained with vast amounts of data.

6. Data is a collection of raw facts that can be transformed into useful information. It can be in the form of text, images, audio, or video.

7. Computer vision is how AI uses cameras to see and understand visual information.

8. Natural Language Processing (NLP) is a domain of AI that enables computers to understand human language and generate appropriate responses when we interact with them.

9. The AI project cycle is a step-by-step method for solving problems using proven scientific approaches.

A. Fill in the Blanks.

Hints: AI project cycle, John McCarthy, Google Lens, Deep Learning, Computer Vision

1. ________ is an AI function that mimics the workings of the human brain in processing data for use in detecting objects.

2. The ________ is a step-by-step method for solving problems using proven scientific approaches.

3. ________ is a domain of AI that uses cameras to see and understand things.

4. ________ is the father of AI.

5. ________ is an image and text recognition app developed by Google.

B. Select the Correct Option.

1. Which of the following is a domain of AI?

a. Computer Vision

b. NLP

c. Data

d. All of these

2. Which among the following is a trait of AI?

a. Common Sense

b. Emotions

c. Computational Power

d. Fatigue

3. ________ is a collection of raw facts that can be transformed into useful information.

a. Data

b. Deep Learning

c. Machine Learning

d. NLP

4. Which among the following is an application of NLP?

a. Siri

b. Alexa

c. Google Translate

d. AutoDraw

5. ________ enables a computer system to learn from experience using the provided data and build models that make predictions.

a. Computer Vision

b. NLP

c. Machine Learning

d. Deep Learning

C. State True or False.

1. AI can help recognise faces in pictures.

2. AI learns from data fed into algorithms.

3. Data acquisition is the first stage in an AI project cycle.

4. Computer vision helps computers understand and respond when we talk to them.

5. People with high intelligence tend to be creative.

D. Answer the Following.

1. What are the differences between human intelligence and artificial intelligence?

2. Define deep learning.

3. Briefly describe the different stages of the AI project cycle.

4. What is computer vision?

5. Define data.

E. Apply Your Learning.

1. Yash recently installed a smart home security system that uses facial recognition technology to enhance home security. Name the domain of AI that this security system uses.

2. Megha is travelling with her father in a self-driving car, and she wonders how the car effortlessly detects lanes, pedestrian crossings, and other vehicles on the road. What should her father explain to her about the technology behind these capabilities?

3. Utkarsh is using Siri, the virtual assistant developed by Apple, to accomplish various tasks on his iPhone. He is amazed at how Siri understands his commands and responds effectively. Name the AI domain that empowers Siri to achieve this level of functionality.

1. Visit the link: https://tenso.rs/demos/fast-neural-style/ to edit digital images by transferring the visual style from the source image to an uploaded image or an image captured through webcam.

2. Visit the link: https://transformer.huggingface.co/doc/distil-gpt2 to see how AI autocompletes your thoughts as you type.

3. Visit the link: https://artsandculture.google.com/experiment/blob-opera/AAHWrq360NcGbw?cp=e30 to create amazing operatic music.

In the previous chapter, we explored the exciting world of artificial intelligence, learning how it works and where it is used. Now, let us dive into another interesting topic, Computer Vision, in this chapter.

You might have used a smartphone with a face lock feature. Have you ever wondered how your phone effortlessly recognises your face every time you unlock it? This is possible because of computer vision. Let us now learn what computer vision is and how it is useful.

Computer vision is a domain of artificial intelligence that enables computers and systems to extract meaningful information from digital images, videos, and other visual inputs, enabling them to take actions or make recommendations based on that information. If AI enables computers to think, then computer vision enables them to see, observe, and recognise. Apart from face locks in smartphones, Google Lens and self-driving cars are some popular examples of computer vision.

Computer vision algorithms can be trained with large amounts of visual data to process visuals with incredible speed and accuracy. Computer vision applications are easily available to everyone today, and they are a priority for many industries. Let us explore some major applications of computer vision:

When you set up a face lock on your smartphone, you are actually teaching the device to recognise your unique facial features. Computer vision technology analyses the distinct details of your face, such as the arrangement of your eyes, nose, and mouth. It then saves these features as digital signatures and refers to them whenever required. So, every time you look at your phone, the computer vision technology compares what it “sees” with the stored facial signature. If the match is successful, the phone unlocks, granting you access. This is how computer vision makes our devices smarter and more user-friendly.
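The comparison step can be sketched in Python under simplifying assumptions. Real phones use face signatures made of many more numbers, produced by trained models (and sometimes depth sensors); here each signature is just three made-up numbers, and the matching threshold of 0.1 is an arbitrary choice for this example.

```python
import math


def distance(a, b):
    """Euclidean (straight-line) distance between two feature lists."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def should_unlock(stored_signature, new_face, threshold=0.1):
    """Unlock only if the new face is close enough to the enrolled signature."""
    return distance(stored_signature, new_face) < threshold


# Hypothetical signatures, e.g. eye spacing, nose length and mouth width
# (scaled to 0-1). Real systems use far more numbers.
enrolled = [0.42, 0.61, 0.35]
owner_again = [0.44, 0.60, 0.37]   # same person, slightly different photo
stranger = [0.70, 0.30, 0.55]

print(should_unlock(enrolled, owner_again))  # True
print(should_unlock(enrolled, stranger))     # False
```

A match never has to be exact, because lighting and angle change slightly every time; the threshold decides how close counts as “the same face”.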

Industries such as banking and finance, travel, property and real estate, law and legal services, and vehicle registration and verification handle extensive documentation. Computer vision can be applied to validate these documents accurately and quickly. It helps in fraud detection, authenticating individuals, verifying ownership of property, and identifying counterfeit currency and forged documents.

Devices and sensors equipped with computer vision technology help with faster and more accurate diagnosis of diseases and health conditions. They can monitor blood loss in patients in real time, including during operations, and scan for tumours, brain haemorrhages, internal infections, cardiovascular ailments, etc., helping to reduce the death rate.

Sensors in a smart shopping cart can use computer vision to identify the items in the cart and match their details with a database. Computer vision can also help take inventory of items in stock for reordering and track the status of items. Quick checkout at the mall exit, locating misplaced items, detecting shoplifting, minimising billing errors, and identifying regular buyers and their preferences are the major benefits of CV technology in retail.

Taking a picture of an item and searching for it on the internet is a common practice today. Computer vision makes finding items, people, and places highly efficient. It also helps in comparing products, finding similar products based on their looks and make, classifying items by their physical features, classifying people for age-specific or gender-specific services, consolidating product catalogues, etc.

Computer Vision (CV) is a new trending innovation in digital marketing that helps users create realistic visual content for their intended audience and customers. Companies like Lenskart and Zara use computer vision for virtual try-on experiences, allowing users to visualise how the merchandise would look on them before making a purchase. This interactive application demonstrates the versatility and widespread adoption of computer vision across different industries.

Augmented reality (AR) and virtual reality (VR) learning experiences heavily rely on computer vision algorithms. These algorithms play a pivotal role in transforming static educational content into dynamic and interactive formats. For instance, computer vision is instrumental in generating 3D visuals from 2D images, facilitating assignment and answer-sheet verification, enabling online classes, and enhancing virtual tour excursions. In essence, computer vision acts as a foundational tool for creators, allowing them to craft immersive educational content that fosters engaging and effective online learning environments.

Self-driving cars, driverless trucks, pilotless planes, and hands-free driving assistance are some examples of CV applications. These involve identifying objects, finding navigational routes, and monitoring the environment at the same time.

CV applications can recognise various symbols, icons, and optical codes. This helps in visual communication (such as self-driving vehicles understanding traffic and road signs), translating text in images with Google Translate, and extracting text from images by scanning their pixels.

Creating sustainable smart homes and cities is possible with the help of CV applications. These applications can be used in various areas, like public services, domestic services, home security, upkeep, hospitals, offices, schools, traffic, and energy consumption. By using CV applications, we can make life better without harming the environment.

Computers only understand data in the form of numbers. An image is an array of thousands of pixels. Pixels, also known as picture elements, are the smallest units of an image on a computer display. Each pixel is tiny, and together the pixels form a smooth, final visual of the scene that our brain perceives as a single image. Because each pixel is stored as numbers, a computer can “see” an image by processing these numbers.
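Here is a minimal Python sketch of an image as numbers. It assumes the common greyscale convention where each pixel is a brightness value from 0 (black) to 255 (white); colour pixels are usually stored as three such numbers, one each for red, green, and blue (RGB). The tiny 5 x 5 image is made up for this example.

```python
# A tiny 5 x 5 greyscale image: a white plus sign on a black background
image = [
    [0, 0, 255, 0, 0],
    [0, 0, 255, 0, 0],
    [255, 255, 255, 255, 255],
    [0, 0, 255, 0, 0],
    [0, 0, 255, 0, 0],
]

height = len(image)
width = len(image[0])
print("size:", width, "x", height, "=", width * height, "pixels")

# Turn the numbers back into a picture: bright pixels as '#', dark as '.'
for row in image:
    print("".join("#" if pixel > 127 else "." for pixel in row))

# A colour pixel is stored as three numbers: red, green and blue (RGB)
orange_pixel = (255, 165, 0)
print("orange pixel as numbers:", orange_pixel)
```

A real photo works the same way, just with millions of pixels instead of 25.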

Image features refer to distinctive characteristics of an image that can be used to identify objects, detect patterns, and perform various tasks related to image analysis.

Some common features include:

• Edges: Edges are abrupt changes in brightness or colour within an image. They can be used to identify boundaries between objects in an image.

• Corners: Corners are the points where two or more edges meet. They can be used to identify specific points of interest in an image, such as the corners of a building.

• Textures: Textures are repeating patterns within an image, such as the grain of wood. They can be used to classify images based on their contents.

• Colour Histogram: A colour histogram is a graphical representation of the distribution of colours in an image. It can be used to identify colour patterns within an image.

Thus, image features play an important role in image recognition, object detection, scene analysis, etc.
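Two of these features, edges and colour histograms, can be sketched in a few lines of Python. This is a simplified illustration: the edge check only compares each pixel with its right-hand neighbour (real edge detectors such as Sobel or Canny look in several directions), and the histogram counts exact colours, whereas real colour histograms usually group similar colours into bins. The function names, threshold, and pixel values are our own.

```python
from collections import Counter


def find_edges(image, threshold=100):
    """Return (row, col) positions where brightness jumps sharply between a
    pixel and its right-hand neighbour, which marks a vertical edge."""
    edges = []
    for r, row in enumerate(image):
        for c in range(len(row) - 1):
            if abs(row[c + 1] - row[c]) > threshold:
                edges.append((r, c))
    return edges


def colour_histogram(pixels):
    """Count how many pixels have each (R, G, B) colour."""
    return Counter(pixels)


# Greyscale image: dark on the left, bright on the right
grey = [
    [10, 12, 200, 210],
    [11, 10, 205, 208],
    [9, 13, 199, 211],
]
print(find_edges(grey))  # [(0, 1), (1, 1), (2, 1)]

RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)
pixels = [RED, RED, GREEN, BLUE, RED, GREEN]
for colour, count in colour_histogram(pixels).most_common():
    print(colour, "#" * count)  # a text bar chart of the colour distribution
```

The edge is found between columns 1 and 2 in every row, which is exactly the boundary between the dark and bright halves of the image.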


1. Computers use cameras to take pictures or videos of the world around them.

2. Software is used to analyse the images or videos to identify objects and patterns.

3. AI is used to make sense of the data collected and understand the information that the computer is ‘seeing’.

Let us perform the following experiment to understand how computer vision works.

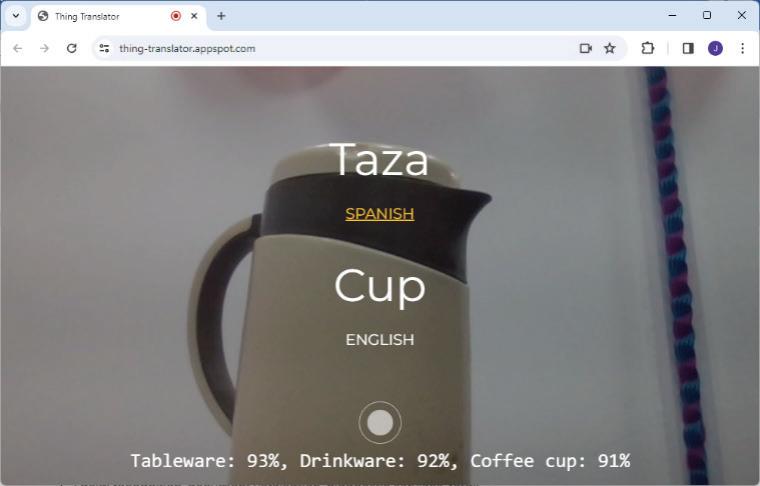

Objective: The Thing Translator application recognises the objects shown in front of the camera. It extracts the features of the object and matches them with the images in its database.

Follow the given steps to explore how this application works.

1. Visit the following link: https://thing-translator.appspot.com/

2. The following window appears.

3. Display an object in front of the camera and click on the round button on the screen.

4. The application will recognise the object and will display its name on the screen, as shown.
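The matching step described in the objective can be sketched in Python as a nearest-neighbour search. This is a hypothetical illustration, not how Thing Translator works internally: each known object is stored as a short list of made-up features, and the new object gets the name of whichever stored object is closest. It uses `math.dist`, available in Python 3.8 and later.

```python
import math

# Hypothetical database of known objects. Each feature list is made up:
# [roundness, redness, size], all scaled between 0 and 1.
DATABASE = {
    "apple": [0.9, 0.8, 0.2],
    "banana": [0.2, 0.1, 0.3],
    "ball": [1.0, 0.3, 0.5],
    "book": [0.0, 0.2, 0.6],
}


def recognise(features):
    """Return the known object whose features are closest (nearest neighbour)."""
    return min(DATABASE, key=lambda name: math.dist(DATABASE[name], features))


print(recognise([0.85, 0.75, 0.25]))  # apple
print(recognise([0.1, 0.2, 0.55]))    # book
```

Adding more objects and better features to the database lets the same simple rule recognise more things.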

1. Computer vision is an AI domain that enables computers to derive meaningful insights from digital images and videos.

2. Facial recognition, document verification, diagnostic services, retail optimisation, image-based search, digital marketing, education, and autonomous vehicles are some of the key applications of computer vision.

3. Edges, corners, textures, and colour histograms are vital for image analysis and recognition.

4. Pixels, the smallest units forming an image, are interpreted by computers as arrays of data.

5. Computer vision enhances efficiency, accuracy, and user experience across various industries.

6. Image features are fundamental in image recognition, object detection, and scene analysis within computer vision applications.


1. Computer vision enables computers to derive meaningful information from ________, videos, and other visual inputs.

2. ________ recognition is commonly used as a security feature in handheld digital communication devices.

3. The application of computer vision in the ________ industry includes performing object identification of items in the shopping cart.

4. Image features, such as ________, can be used to identify boundaries between objects in an image.

5. In the context of image analysis, ________ are graphical representations of the distribution of colours in an image.

1. Computer vision is a subset of artificial intelligence.

2. The application of computer vision in the retail industry does not involve tracking the status of items.

3. Autonomous vehicles routing-and-parking assistance is not an example of computer vision applications.

4. Image features like edges can be used to classify images based on their content.

5. Computer vision is not applicable in the education sector.

C. Select the Correct Option.

1. Which of the following is one of the major applications of computer vision in law enforcement?

a. Inventory management

b. Facial recognition

c. Digital audio-visual marketing

d. Image-based search

2. What does CV stand for in the context of digital audio-visual marketing?

a. Computer Vision

b. Consumer Vision

c. Comprehension Vision

d. Consequences Vision

3. What is the purpose of the colour histogram feature in image analysis?

a. Object recognition and image retrieval

b. Colour pattern identification

c. Edge detection

d. Texture classification

4. How do computers understand data in the context of images?

a. Text

b. Numbers

c. Colours

d. Pixels

5. In the application of computer vision in the retail industry, what can be tracked using sensors in the shopping cart?

a. Employee attendance

b. Status of items

c. Temperature of products

d. Social media engagement

1. Explain one major benefit of using computer vision in the retail industry.

2. How does computer vision help in digital audio-visual marketing?

3. How do computers understand images, considering the form of data they comprehend?

4. Provide an example of how facial recognition is used in law enforcement.

5. List two distinctive characteristics of an image used as features in image analysis.

1. Discuss the impact of computer vision on the healthcare industry. Provide examples.

2. Explain the process by which computers see images and the role of pixels.

3. Explore the applications of computer vision in autonomous vehicles, detailing its benefits and challenges.

1. How can computer vision be applied to enhance the security features of smart homes?

2. Propose a scenario where image-based search using computer vision can be beneficial in everyday life.

3. Imagine you are designing a new application for the retail industry. How can adding computer vision to the application improve the shopping experience for customers?

Visit the link: https://quickdraw.withgoogle.com and let AI identify your drawing.

In the previous chapter, you learnt about an important domain of AI called computer vision. This chapter focuses on a different field of AI called Natural Language Processing (NLP), the ability of computers to understand and process human language. Let us learn about this concept in detail.

Natural Language Processing (NLP) is the domain of Artificial Intelligence (AI) that deals with language-based interactions between a machine and a human, as well as between two machines. The objective of NLP is to enable computers to understand and generate human language in a way that is both meaningful and contextually appropriate. NLP enables humans to interact with computers more naturally, using spoken or written language rather than formal programming languages. Virtual assistants, like Siri, Alexa, and Google Assistant, respond to user queries by utilising the capabilities of NLP. NLP involves a variety of algorithms that process and analyse large amounts of natural language data from users’ interactions, including text and speech.

NLP has numerous applications in our daily lives. Let us learn about some of them.

Chatbots are computer programs designed to imitate human-like conversation using text or speech interfaces. NLP is essential for chatbots to comprehend the user’s input, generate appropriate responses, and engage in meaningful conversations.

Here’s how NLP plays a pivotal role in enhancing chatbot capabilities:

Chatbots are widely used by organisations to provide customer service on websites and social media platforms. These chatbots can answer frequently asked questions, assist with troubleshooting, and transfer complex issues to the right team member when needed. For example, banking chatbots help customers request personal loans and get information on money transfers. If you want to report a lost card, a chatbot is available to assist you 24 × 7.

NLP is used by chatbots to engage in conversations with users, in order to understand their preferences and provide personalised product recommendations. For example, a chatbot for a clothes store would inquire about a customer’s size, budget, and preferred styles in order to suggest items that fit their tastes.

Chatbots are used in the educational field to provide academic support, such as helping students with homework, explaining concepts, quizzing students and offering study tips. For example, ChatGPT can offer personalised learning experiences and adaptive tutoring that cater to individual learning styles.

Sentiment analysis is an NLP technique used to analyse and interpret the sentiment expressed in text data. By comprehending human language, NLP enables computers to detect whether the sentiment conveyed in text is positive, negative, or neutral. Let us learn about some applications of NLP in sentiment analysis.
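One simple way to see how text can be labelled positive, negative or neutral is a lexicon-based classifier, sketched below. This is an illustrative technique chosen for this example, not how any particular product works; the word lists are hypothetical and far smaller than real sentiment lexicons, and modern systems often use trained models instead.

```python
# Hypothetical mini word lists; real systems use large lexicons or trained models.
POSITIVE_WORDS = {"amazing", "great", "love", "happy", "excited", "good"}
NEGATIVE_WORDS = {"bad", "poor", "hate", "slow", "broken", "disappointed"}

def classify_sentiment(text):
    """Label text as positive, negative or neutral by counting lexicon words."""
    # Lowercase, split on spaces and trim punctuation and '#' from each word.
    words = [w.strip("!?.,#") for w in text.lower().split()]
    score = (sum(w in POSITIVE_WORDS for w in words)
             - sum(w in NEGATIVE_WORDS for w in words))
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

comments = [
    "Just received my new phone! It's amazing! #happy #excited",
    "The battery is bad and the charging is slow.",
    "The phone arrived today.",
]
for c in comments:
    print(classify_sentiment(c), "-", c)
```

Counting words ignores context (for example, “not good” would still count “good” as positive), which is one reason practical sentiment analysis relies on more advanced NLP.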

Businesses use sentiment analysis to track their brand’s presence on social media platforms, like Twitter, Facebook, and Instagram. Companies gain insights into public opinion and engage customers more effectively by recognising sentiment or emotion from customers’ comments, posts, likes, shares, emojis, etc.

E-commerce giants like Amazon and Myntra use sentiment analysis to evaluate product reviews and ratings. This enables businesses to identify top-selling products, discover product flaws, and make data-driven decisions regarding product development and marketing strategies.

Sentiment analysis is used to analyse public sentiment towards political candidates, parties and policies. Political campaigns use it to gauge the opinions of voters and to check whether their messaging strategies are resonating positively with voters.

Film and entertainment industry players use sentiment analysis to analyse audience reactions to films, series, TV shows, etc. By assessing the sentiment expressed by audiences in reviews and social media discussions, companies can gauge audience preferences, predict box office or streaming success, and tailor promotional campaigns to achieve commercial success.

NLP can be utilised to auto-generate short and clear summaries of longer texts or documents through AI algorithms and techniques. Let us look at some of the applications of NLP in automatic summarisation.

Websites and apps like Google News, Apple News, and Flipboard use NLP algorithms to automatically produce summaries of news articles from diverse sources. This allows users to stay informed about the latest news topics without the need to read through entire articles.

Google Docs offers a feature that uses NLP to create content summaries of lengthy documents, research papers or reports. Professionals and students can use such tools to extract key information and insights from large volumes of text.

NLP algorithms convert the spoken content of YouTube videos into text through automatic transcription, producing a text-based representation known as a transcript. The algorithms then analyse the transcript to identify key points, topics and important moments within the video. Based on this analysis, they generate a summary that captures the relevant information in the video clip.
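A classic, simple approach to automatic summarisation is extractive summarisation: score each sentence by how frequent its words are in the whole text and keep the top-scoring sentences. The sketch below assumes this frequency-based method; it is not how Google News, Google Docs or YouTube actually summarise, and the stop-word list is a small hypothetical one.

```python
import re
from collections import Counter

# A small, hypothetical stop-word list for this example only.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "is", "in", "it", "on", "for", "will", "be"}

def content_words(text):
    """Lowercase words with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]

def summarise(text, num_sentences=2):
    """Extractive summary: keep the sentences whose words are most frequent overall."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        # Average frequency, so long sentences are not automatically favoured.
        return sum(freq[w] for w in words) / len(words) if words else 0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    top = ranked[:num_sentences]
    # Output the chosen sentences in their original order.
    return " ".join(sentences[i] for i in sorted(top))

article = ("The city council approved a new park. The park will have a playground and a lake. "
           "Local families welcomed the park plan. Construction starts next month.")
print(summarise(article, 2))
```

Extractive summaries only copy existing sentences; many modern tools instead generate new, shorter wording (abstractive summarisation).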

Imagine you are the social media manager of a company, and you want to assess the sentiment expressed in customer remarks and feedback related to the recent launch of a smartphone. You have a dataset of Twitter comments about this new product. Let us now understand how NLP analyses customers’ sentiments.

Tokenisation involves breaking down each comment into individual words or tokens. For example, the comment “Just received my new iPhone 15! It’s amazing! #happy #excited” would be tokenised into the following list of tokens, with the product name kept together as a single token: [“Just”, “received”, “my”, “new”, “iPhone 15”, “!”, “It’s”, “amazing”, “!”, “#happy”, “#excited”].
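The tokenisation step can be sketched in a few lines of Python. This is a minimal regex-based tokeniser written for this example, not the method of any particular NLP library; `KNOWN_PHRASES` is a hypothetical list that keeps the product name “iPhone 15” together as one token, as in the list above.

```python
import re

# Hypothetical list of multi-word names that should stay as single tokens.
KNOWN_PHRASES = ["iPhone 15"]

def tokenise(text):
    """Split text into phrase, hashtag, word and punctuation tokens."""
    phrases = "|".join(re.escape(p) for p in KNOWN_PHRASES)
    # Order matters: known phrases first, then hashtags, then words
    # (allowing one apostrophe, as in "It's"), then single punctuation marks.
    pattern = phrases + r"|#\w+|\w+(?:['’]\w+)?|[^\w\s]"
    return re.findall(pattern, text)

comment = "Just received my new iPhone 15! It's amazing! #happy #excited"
print(tokenise(comment))
```

Without the phrase list, “iPhone” and “15” would come out as two separate tokens.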

Segmentation involves dividing a long comment or thread of comments into smaller segments or phrases for analysis. In this case, the comment could be segmented as follows:

“Just received my new iPhone 15!”

“It’s amazing!”

“#happy”

“#excited”
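The segmentation above can be reproduced with a simple rule: end a segment at sentence-ending punctuation and treat each hashtag as its own segment. This rule-based sketch is an assumption made for illustration; real segmenters use more careful rules or trained models.

```python
import re

def segment(text):
    """Split a comment into sentence-like segments and separate hashtags."""
    # A piece is either a hashtag, or a run of text up to (and including)
    # any sentence-ending punctuation.
    pieces = re.findall(r"#\w+|[^!?.#]+[!?.]*", text)
    # Drop whitespace-only pieces and trim the rest.
    return [p.strip() for p in pieces if p.strip()]

comment = "Just received my new iPhone 15! It's amazing! #happy #excited"
for part in segment(comment):
    print(part)
```

A rule this simple would also split numbers such as “2.5” at the full stop; practical segmenters handle such cases.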

Lemmatisation refers to treating different forms of the same word as equivalent by reducing them to a common base form, called a lemma. For instance, words like “received”, “receives”, and “receiving” could all be lemmatised to the lemma “receive”. This increases the accuracy of the analysis, because all forms of a word are counted as the same word.
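The idea can be sketched with a small lookup table. `LEMMA_TABLE` is hypothetical and covers only a few words; real lemmatisers rely on a full dictionary and usually on each word’s part of speech.

```python
from collections import Counter

# Hypothetical lemma table; real lemmatisers use a full dictionary.
LEMMA_TABLE = {
    "received": "receive",
    "receives": "receive",
    "receiving": "receive",
    "phones": "phone",
}

def lemmatise(tokens):
    """Map each token to its lemma, or to its lowercase form if unknown."""
    return [LEMMA_TABLE.get(t.lower(), t.lower()) for t in tokens]

words = ["received", "receives", "receiving", "phones", "phone"]
lemmas = lemmatise(words)
print(lemmas)
# Counting lemmas groups all forms of a word together.
print(Counter(lemmas))
```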

The tokens may be tagged with their part of speech (POS). POS tagging involves assigning a tag to each token based on its grammatical function in the sentence. For example, the word “received” would be tagged as a verb, “amazing” would be tagged as an adjective, and “iPhone 15” would be tagged as a noun.
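A very simple tagger just looks each token up in a dictionary. The sketch below assumes this lookup approach and a hypothetical `TAG_LOOKUP` table covering only the words discussed above; real POS taggers use trained models that also consider neighbouring words, since the same word can have different parts of speech in different sentences.

```python
# Hypothetical lookup table covering only a few words from the example.
TAG_LOOKUP = {
    "just": "ADVERB",
    "received": "VERB",
    "new": "ADJECTIVE",
    "iphone 15": "NOUN",
    "amazing": "ADJECTIVE",
}

def pos_tag(tokens):
    """Return (token, tag) pairs using a simple dictionary lookup."""
    tagged = []
    for token in tokens:
        if token.startswith("#"):
            tag = "HASHTAG"
        elif not any(ch.isalnum() for ch in token):
            tag = "PUNCTUATION"
        else:
            tag = TAG_LOOKUP.get(token.lower(), "UNKNOWN")
        tagged.append((token, tag))
    return tagged

tokens = ["Just", "received", "my", "new", "iPhone 15", "!", "It's", "amazing", "!", "#happy", "#excited"]
for token, tag in pos_tag(tokens):
    print(token, tag)
```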

Named Entity Recognition (NER) is used to recognise and classify named entities in the text, such as people, places, organisations and products. Identifying the product name or brand mentioned in the comments enables sentiment analysis specific to that entity. For example, identifying “iPhone 15” as a named entity allows sentiment analysis to be customised specifically to this product referenced in the customer comment.
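A simple form of NER uses a gazetteer, a list of known names with their entity types. The sketch below assumes this gazetteer approach; the entries in `GAZETTEER` are hypothetical, and practical NER systems use trained models so they can also find names that are not on any list.

```python
import re

# Hypothetical gazetteer: known names mapped to entity types.
GAZETTEER = {"iPhone 15": "PRODUCT", "Apple": "ORGANISATION", "Mumbai": "PLACE"}

def find_entities(text):
    """Return (name, type) pairs in the order they appear in the text."""
    found = []
    for name, label in GAZETTEER.items():
        # \b ensures whole-word matches only.
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((match.start(), name, label))
    found.sort()
    return [(name, label) for _, name, label in found]

print(find_entities("Just received my new iPhone 15! It's amazing!"))
print(find_entities("I bought an iPhone 15 from the Apple store in Mumbai."))
```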

By processing the data through tokenisation, segmentation, lemmatisation, POS tagging, and NER, valuable information can be extracted from raw text data, offering insights into customer feedback or comments. Based on these insights, informed decisions can be made for marketing strategies, product enhancements, etc.

In NLP, the Bag of Words model plays a role in text classification, sentiment analysis, and other language processing tasks. This model represents a document as a collection of words, ignoring the order and grammatical structure of the words. It only considers how often each word appears in the document.

The Bag of Words algorithm is not concerned with the sequence of words, which is why it is called a ‘bag’. Think of it like a bag of groceries—you care about how many apples or oranges you have, not the order in which you put them in the bag. Similarly, the model focuses on the vocabulary, which is the collection of unique words found in the data, and the frequency, which is how many times each word appears.

Create a Bag of Words model for the following documents:

Document 1: “The game has fun levels and cool challenges.”

Document 2: “Levels offer a variety of challenges.”

Document 3: “Players face a variety of challenges.”

Objective: The objective of the Bag of Words model is to extract the meaningful words from the given text and prepare them for language processing.

To create a Bag of Words model for these documents, we first need to preprocess the text by converting it to lowercase, removing punctuation and removing stop words (common words like “the”, “a”, “of”, and “and” that don’t carry much meaning in document analysis). This helps the model focus on the content-rich words that are more likely to convey the sentiment or meaning of the text.

After preprocessing, the documents would look like this:

Document 1: “game has fun levels cool challenges”

Document 2: “levels offer variety challenges”

Document 3: “players face variety challenges”
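The preprocessing step can be sketched as follows. The stop-word set contains only the four words named in the text (an assumption for this example); real stop-word lists are much longer.

```python
import string

# Only the stop words mentioned in the text; real lists are much longer.
STOP_WORDS = {"the", "a", "of", "and"}

def preprocess(doc):
    """Lowercase a document, strip punctuation and drop stop words."""
    lowered = doc.lower()
    no_punct = lowered.translate(str.maketrans("", "", string.punctuation))
    return " ".join(w for w in no_punct.split() if w not in STOP_WORDS)

documents = [
    "The game has fun levels and cool challenges.",
    "Levels offer a variety of challenges.",
    "Players face a variety of challenges.",
]
for i, doc in enumerate(documents, start=1):
    print(f"Document {i}: {preprocess(doc)}")
```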

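Next, we create a vocabulary of all the unique words in the documents:

game, has, fun, levels, cool, challenges, offer, variety, players, face

Then, we count how many times each vocabulary word appears in each document, which gives its frequency.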

These two features, vocabulary and frequency, help us create a document vector for each document. The vectors are presented in a document vector table, which lists all the terms in the dataset in its header and shows the frequency of each term in the rows below, one row per document.

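For example:

Terms: game, has, fun, levels, cool, challenges, offer, variety, players, face

Document 1: [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]

Document 2: [0, 0, 0, 1, 0, 1, 1, 1, 0, 0]

Document 3: [0, 0, 0, 0, 0, 1, 0, 1, 1, 1]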

In this way, we can represent each document as a vector of word frequencies, or a “bag” of words. This allows us to compare documents and perform various NLP tasks.
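Starting from the preprocessed documents, the vocabulary and document vectors can be built in plain Python as sketched below. This is an illustration, not a library API; libraries such as scikit-learn provide a ready-made version (`CountVectorizer`), which orders its vocabulary alphabetically rather than by first appearance as done here.

```python
# Documents after preprocessing (lowercased, stop words and punctuation removed).
docs = [
    "game has fun levels cool challenges",
    "levels offer variety challenges",
    "players face variety challenges",
]

def build_vocabulary(documents):
    """Unique words in order of first appearance."""
    vocab = []
    for doc in documents:
        for word in doc.split():
            if word not in vocab:
                vocab.append(word)
    return vocab

def to_vector(doc, vocab):
    """Count how often each vocabulary word occurs in one document."""
    words = doc.split()
    return [words.count(term) for term in vocab]

vocab = build_vocabulary(docs)
vectors = [to_vector(d, vocab) for d in docs]
print(vocab)
for i, vec in enumerate(vectors, start=1):
    print(f"Document {i}: {vec}")
```

Since every document is now a list of numbers of the same length, documents can be compared, for example, by checking which terms they share.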

1. NLP stands for Natural Language Processing. NLP is the domain of AI that deals with the language-based interactions between a machine and a human, as well as between two machines.

2. Chatbots are computer programs engineered to imitate human-like conversation using text or speech interfaces.

3. Sentiment analysis is an NLP technique used to analyse and interpret the sentiment expressed in text data.

4. NLP can be used to auto-generate short and clear summaries of longer texts or documents through AI algorithms and techniques.

5. NLP works in different stages, such as tokenisation, segmentation, lemmatisation, Part-of-Speech (POS) tagging, and Named Entity Recognition (NER).

6. Tokenisation involves breaking down each comment into individual words or tokens.

7. Segmentation involves dividing a long thread of comments into smaller segments or phrases for analysis.

8. Lemmatisation refers to treating different forms of the same word as equivalent.

9. POS tagging involves assigning a tag to each token based on its grammatical function in the sentence.

10. Named Entity Recognition (NER) is used to recognise and classify named entities in the text, such as people, places, and organisations.

A. Fill in the Blanks.

Hints: Chatbots, transcript, Google Docs, Part-of-Speech, Sentiment analysis

1. ________ tagging involves assigning a tag to each token based on its grammatical function in the sentence.

2. ________ are computer programs engineered to imitate human-like conversation using text or speech interfaces.

3. The text-based representation of the spoken content of a YouTube video is known as a ________.

4. Professionals can use ________ to create content summaries for lengthy documents, research papers, or reports.

5. ________ is an NLP technique used to analyse and interpret the emotion expressed in text data.

B. Select the Correct Option.

1. Which of the following domains of AI deals with language-based interactions between a machine and a human?

a. NLP

b. Computer Vision

c. Machine Learning

d. None of these

2. ________ is used to recognise and classify named entities in the text, such as people, places, and organisations.

a. POS tagging

b. NER

c. Lemmatisation

d. Tokenisation

3. Which among the following is an application of NLP?

a. Chatbots

b. Sentiment analysis

c. Automatic Summarisation

d. All of these

4. ________ involves breaking down each sentence into individual words.

a. Segmentation

b. Lemmatisation

c. Tokenisation

d. NER

5. Why do e-commerce giants use sentiment analysis?

a. evaluate product reviews

b. identify top-selling products

c. uncover product flaws

d. All of these

C. State True or False.

1. Lemmatisation refers to treating different forms of the same word as equivalent.

2. Political campaigns use sentiment analysis to gauge voter sentiment.

3. ChatGPT uses NLP to provide personalised learning experiences.

4. The full form of NER is Named Entity Recognition.

5. POS tagging is used to extract vocabulary and frequency in the dataset.

D. Answer the Following.

1. What are chatbots?

2. How does NLP contribute to sentiment analysis?

3. Explain tokenisation.

4. How does NLP contribute to educational support?

5. Name the different stages in which NLP works.

E. Apply Your Learning.

1. Seema, a research analyst, often works on tasks such as analysing lengthy reports and extracting key insights. Name any one application that she can use for creating content summaries of these reports.

2. Parth bought household items from an e-commerce platform, which later prompted him to rate the purchased products. How do these product ratings benefit the e-commerce platform he purchased from?

3. Shweta struggles to stay updated on current news due to time constraints, so she relies on Google News, a news aggregation platform. Which domain of AI does Google News utilise to efficiently provide these news summaries?

4. Ishita visits her school’s website, where she encounters a robot that asks her questions like, ‘How may I help you?’ It then assists her with searching for study resources. What is this robot commonly referred to as, and why does it engage Ishita with questions?

5. Mr Prabhav is a market researcher in the film and entertainment industry who specialises in analysing audience sentiment towards various films. Which domain of AI does he use, as a part of his job, to help filmmakers achieve commercial success?

Explore the following link and observe how NLP analyses your text: https://www.ibm.com/demos/live/natural-language-understanding/self-service

The Artificial Intelligence (AI) series for grades 9 and 10 by Uolo aims to expose learners to essential elements in AI and introduce common domains and applications of AI to them. It also introduces the fundamentals of AI to the learners through engaging activities that can be done in a computer lab or on a computer at home. This series paves the way for students to become informed participants in the exciting future shaped by artificial intelligence.

• Engaging In-chapter Activities: Engaging activities within each chapter that offer students practical experience of various AI concepts and domains, thereby facilitating a deeper understanding.

• Let’s Revise: To reinforce the takeaways of each chapter, these chapter-end summaries include key points that recap everything covered in the chapter.

• Chapter Checkup: Comprehensive exercises at the end of each chapter that have various question types, like fill in the blanks, true or false, multiple-choice, short answer, long answer, and application-based questions to provide students a thorough revision of the text.

• Activity Section: To further enhance student proficiency, all the chapters end with an unsolved activity which provides additional practice of the AI concepts.

Uolo partners with K-12 schools to bring technology-based learning programs. We believe pedagogy and technology must come together to deliver scalable learning experiences that generate measurable outcomes. Uolo is trusted by over 10,000 schools across India, South East Asia, and the Middle East. hello@uolo.com