Risks and controls for artificial intelligence and machine learning systems

Report

Version 1.0

May 27, 2024

D-16-432

Project leads: Liina Kamm (Cybernetica AS)

Hendrik Pillmann (RIA)

Authors: Dan Bogdanov

Paula Etti

Liina Kamm

Andre Ostrak

Tanel Pern

Fedor Stomakhin

Maria Toomsalu

Sandhra-Mirella Valdma

Anto Veldre

Cybernetica AS, Mäealuse 2/1, 12618 Tallinn, Estonia.

E-mail: info@cyber.ee, Website: https://www.cyber.ee, Phone: +3726397991

Co-funded by the European Union. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or the European Cybersecurity Competence Centre. Neither the European Union nor the European Cybersecurity Competence Centre can be held responsible for them.

© Estonian Information System Authority, 2024

6.1 Informationsecuritycontrols

8.1 DescribeyourAIsystem

8.1.1 Howtogoevenfurther?

8.2 Findadeploymentmodelsuitingyoursystem

8.3 Identifyapplicablelegalnorms

8.3.1

8.3.2 DM2:systemusinganexternally-trainedAImodel

8.3.4 Howtogoevenfurther?

8.4 Evaluatethreatstousers,society,andenvironment.

8.4.1 DM1:systemusingAIasaservice

8.4.3

8.4.4

8.5.4 Howtogoevenfurther?

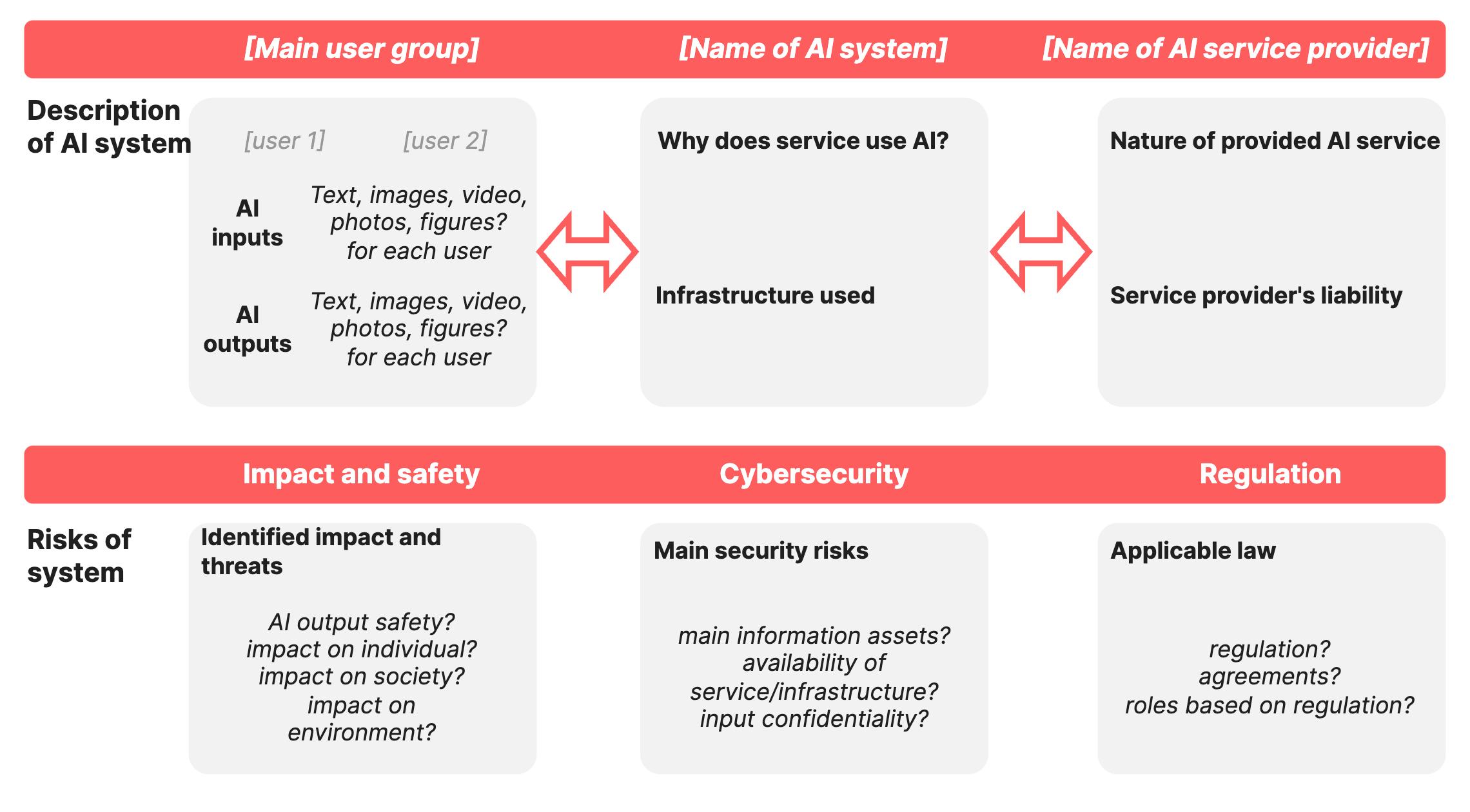

8.6 AIsysteminasingleslide

1 Introduction

1.1 Purpose

Estonian society has adopted digital services to improve work efficiency. Our digital state is renowned for its low administrative overhead. Transactions between state agencies take place over the X-Road data exchange layer. Both the public and the private sector have adopted digital identity solutions. For Estonia, the digital society is an object of constant development.

Rapid advances in computing power have taken the development of artificial intelligence technology to a qualitatively new level. Artificial intelligence systems can generate text, images, sounds, music, and video from a natural language description. These systems have made the technology accessible to a wide population. This has fuelled a growing belief that information technology will enable a new generation of systems capable of performing such tasks better than humans.

Artificial intelligence systems are being developed in Estonia and the rest of the world by both public and private sector institutions. The purpose of this report is to support the implementation of this technology. It provides guidance on ensuring cybersecurity, fulfilling legal requirements, and maintaining societal safety.

The report is written for a broad audience. It will be most useful for small and medium-sized organisations and private individuals who may not have legal, information security, or artificial intelligence experts on their staff. These users can use the quick-reference guide at the end of the report for AI system risk assessment and for choosing measures. Our goal is for everyone to use AI lawfully, safely, and without harming society and the environment.

More mature organisations employing quality management systems and more labour-intensive risk management processes will also find guidance on the application of artificial intelligence. This includes recommendations on which standards and reports to follow to ensure an adequate level of maturity.

1.2 Definitions and abbreviations

AGI: Artificial general intelligence.

AI: Artificial intelligence.

AI HLEG: EU High-Level Expert Group on AI.

AI system: Artificial intelligence system.

AI technology: The study and development of artificial intelligence.

API: Application programming interface.

ASI: Artificial superintelligence.

BERT: Bidirectional Encoder Representations from Transformers.

CaaS: Compute as a service.

CNN: Convolutional neural network. A model architecture used in image recognition.

CPU: Central processing unit.

CUDA: Compute Unified Device Architecture, a toolkit developed by the Nvidia Corporation for accelerated general-purpose computing.

DPO: Direct preference optimisation. Fine-tuning method.

FLOP: Floating-point operation. The computational resources required for model training are measured in floating-point operations.

GAN: Generative adversarial network. Model architecture used in image synthesis.

GPT: Generative pre-trained transformer. AI model architecture.

GPU: Graphics processing unit.

IaaS: Infrastructure as a service.

IPO: Identity preference optimisation. Fine-tuning method.

LLM: Large language model. Artificial intelligence model used for natural language processing, distinguished by the large number of parameters involved.

LSTM: Long short-term memory. Model architecture widely used in language models before the adoption of transformers.

ML: Machine learning.

MoE: Mixture of experts. Model architecture.

NPU: Neural processing unit. Artificial intelligence accelerator mainly used in phones.

OWASP: Open Worldwide Application Security Project. Web community aggregating and producing web application and software security resources.

PaaS: Platform as a service.

RAG: Retrieval-augmented generation. A method for deploying artificial intelligence applications. To improve response quality, the language model receives additional context retrieved from a database or another external source based on the user prompt.

RLHF: Reinforcement learning from human feedback. Fine-tuning technique utilising reinforcement learning.

RNN: Recurrent neural network. Model architecture widely used in language models before the adoption of transformers and LSTM.

SaaS: Software as a service.

SFT: Supervised fine-tuning. AI model training method that, unlike pre-training, is supervised and is used to further steer the model's behaviour.

TPU: Tensor processing unit. AI accelerator developed by Google.

VAE: Variational autoencoder. Model architecture used in image synthesis.

XAI: Explainable AI. Collection of methods for explaining, interpreting, and validating the work of AI models and its results.

1.3 Structure of the report

We begin our report with an overview of the history of AI and the main AI technologies (Section 2). We then move on to their applications, presenting examples of areas of life in which AI is expected to add value. The field itself has developed rapidly during the writing of this report, so we also include an overview of current trends.

Countries all across the world have begun to regulate artificial intelligence by law. Section 3 reviews the current state of this legislation. Section 4 focuses on the architecture of AI systems and presents three general models for the deployment of AI applications. These three deployment models form a good basis for organisations applying their risk assessment methodologies.

Alongside legal considerations, applications of AI technology must also take into account cybersecurity and societal safety requirements. Guidelines for the relevant risk assessment are presented in Section 5. Because risks exist, mitigating measures must also be applied; these are reviewed in Section 6.

Section 7 summarises the recommendations developed in the course of this study for promoting the application of AI systems in Estonia.

The last part of the report is the most practical. It is mainly targeted at those looking for quick solutions for analysing the risks of AI systems. It presents specific, easy-to-follow guidance for identifying and dealing with the main risks in the creation or development of an AI system. The relevant guidelines, with supporting figures, can be found in Section 8.

2 Overview and use cases of AI applications

2.1 History of artificial intelligence technology

Artificial intelligence (AI) is understood herein as any system capable of performing tasks that seemingly require human-level intelligence. Figure 1 presents an overview of important milestones in the history of AI. AI as a field grew out of cybernetics, which studied feedback systems, including biological, technological, and social systems. The idea and structure of artificial neurons had already been proposed in the 1940s. Even so, the history of artificial intelligence is traced to a summer seminar held at Dartmouth in 1956, where the term was first proposed.

The participants of the seminar concluded that machines can be made to perform all tasks tied to human intelligence. Indeed, they considered computers capable of independent learning, language use, and creativity. No big breakthroughs were made during the two-month seminar. Even so, over the next 20 years its participants figured among the main promoters of AI technology. The AI systems developed in this period were capable of solving mathematical problems, playing checkers, and translating texts from one language to another.

Figure 1. History of AI development

1958 saw the birth of the high-level Lisp programming language, which became the main language of AI software for the next three decades. In reality, however, the seemingly major advances and solutions developed in this period fell rather short. Translation programs employed literal translation and thus remained unable to relate the meaning of phrases. Programs for proving mathematical theorems or playing checkers could only review a limited number of states and failed to solve more complex problems.

Problem-solving was demonstrated in small play environments called microworlds. Perhaps the most famous of the microworlds were virtual blocks worlds that the user could manipulate using English-language commands, e.g. via the SHRDLU language parser. Genetic algorithms and the basic principles of artificial neural networks had already been proposed by the late 1960s. However, little progress was made with these algorithms due to their low level of optimisation and insufficient computational power.

The hopes raised by the emergence of the first AI systems led many researchers to make promises that could not be fulfilled. This led to disappointment among the backers of AI research and a decline in AI research and development in the 1970s. Both the UK and the US significantly cut AI funding for universities. The US Defense Advanced Research Projects Agency (DARPA) stopped funding AI projects altogether. This era, from 1974 to 1980, is called the first AI winter.

In spite of the funding cuts, the development of AI continued. Instead of solving large and complex problems, however, the focus turned to systems that concentrated knowledge provided by experts in different fields and used it to solve narrower problems. Such expert systems were used, e.g., in medicine and analytical chemistry. Expert systems were also successfully studied by Estonian researchers (including Enn Tõugu and Leo Võhandu).

The success of expert systems led to renewed public interest in artificial intelligence in the early 1980s. One of the first commercial rule-based systems was R1, which assisted clients in configuring computers in accordance with their requirements. In 1981, the Fifth Generation Computer Systems project was announced in Japan. The project involved a decade-long plan for the development of intelligent computers. This also created renewed interest in artificial intelligence in the US and the UK.

The new AI boom peaked in the second half of the 1980s. Large American corporations created working groups focusing on AI systems. The focus once again turned to artificial neural networks and their training using backpropagation algorithms. Mathematical and statistical optimisation methods, as well as specialised languages and software, were increasingly employed for the development of AI algorithms. The best-known AI-specific languages were all part of the Lisp family of programming languages. Special computers, Lisp machines, were developed to run programs written in these languages more efficiently.

In spite of the large advances made, 1987 marked the beginning of a second AI winter. Specialised AI systems were complicated to maintain and update. They were also unable to independently handle previously unfamiliar inputs, so they quickly became obsolete. IBM and Apple produced ever higher-performance general-purpose desktop computers, and special-purpose machines (including Lisp machines) lost their usefulness. The Fifth Generation Computer Systems project failed to yield the hoped-for results. For example, by 1991 it was supposed to have produced artificial intelligence capable of holding everyday conversations with the user, a goal that would take decades to reach. Disappointed in the limited capabilities of expert systems, DARPA again drastically reduced funding for AI systems research.

Subsequent development of AI technology was increasingly founded on exact mathematical methods developed in the past. The focus once again moved to rigorous logic, and solutions were sought from control theory, a subfield of cybernetics. At the same time, researchers also began to utilise probability models and fuzzy logic. These enabled them to describe relationships and conditional probabilities of features. Unlike pure logic, they could also express lack of knowledge and uncertainty in forecasts.

The 1990s saw the rise of data mining and machine learning algorithms. Systems were no longer described only by programmers and experts: computers became capable of independent learning through the analysis of large datasets. AI technology and probability methods were tied together by Bayesian networks, which express the conditional probabilities linking different variables in the form of directed graphs. A new paradigm emerged in AI that saw artificial intelligences as agents. Such agents receive signals from the environment and attempt to optimise their behaviour to achieve certain goals. The greatest achievement of AI technology in the 1990s could be considered the victory of the chess-playing system Deep Blue over the reigning world chess champion Garry Kasparov on May 11th, 1997. By this point, AI systems had also begun to be utilised in everyday services, especially web-based solutions. For example, the Google PageRank algorithm, also created in 1997, ranked the pages displayed in response to user queries. This is considered one of the critical pieces of functionality that set Google apart from other search engines of the time.

Natural language processing was employed in speech synthesis models. Examples include DECtalk, used by Stephen Hawking as his speech synthesiser, and the somewhat more complex Bell Labs TTS (text-to-speech) system, capable of synthesising speech in several languages. For nearly 20 years, starting from the early 1990s, machine translation as a field was dominated by statistical models developed at IBM. Meanwhile, hidden Markov models became predominant in speech recognition. The main approach to face recognition in the 1990s was the use of eigenface algorithms, which employ linear algebra methods to analyse facial features.

In spite of the advances made by artificial intelligence systems, the term AI was still frowned upon at the end of the 1990s. Researchers avoided the term, preferring to speak of statistical methods, machine learning, and control theory instead. The end of the second AI winter is not clearly defined. It is generally agreed to have ended by 2005, when the Stanford-built self-driving car Stanley covered the 212 km DARPA Grand Challenge trail in the Nevada desert in less than seven hours. This was a major step forward: during the previous year's ten-hour event, none of the competing vehicles managed to cover more than 12 km. Two years later, DARPA repeated the competition in an urban setting. The winner of this challenge was Carnegie Mellon University's robot Boss, which covered 96 km in these conditions in less than six hours.

In 2011, IBM demonstrated its question-answering system Watson on the US TV show Jeopardy! (also popular in Estonia under the name Kuldvillak). In two consecutive games, Watson competed against two human players, winning both by a good margin. One of the players was Ken Jennings, regarded as one of the best Jeopardy! players in history. Watson's success was founded on ideas derived from a variety of language models and on large computing power, which enabled the system to be trained on large datasets. Error analysis was carried out continuously throughout the training, and the program was constantly improved. Nevertheless, Watson's performance was not completely flawless. For instance, during the Final Jeopardy! round of the first game, Watson gave the answer 'Toronto' to a question about US cities.

One of the greatest breakthroughs of the artificial intelligence era came in 2012, when the AlexNet convolutional neural network won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) by a large margin. AlexNet was not the first convolutional neural network; the architecture was first proposed by Yann LeCun back in 1989. The breakthrough was catalysed by training algorithms optimised for specialised graphics processing units. These made it possible to train larger and deeper neural networks than ever before. The ImageNet database contained 15 million images from more than 22,000 categories. In the following ImageNet contests, all winning entries were based on convolutional neural networks, and AlexNet's result was improved multiple times. Today, the ImageNet challenge is considered to have been solved.

After the AlexNet breakthrough, neural networks came under active development. Alongside convolutional neural networks, significant attention was also garnered by large language models, recurrent neural networks, and long short-term memory models. This, in turn, led to the rapid development of speech recognition, speech synthesis, and translation models. Artificial intelligence was widely adopted in medicine, industry, and finance. Recurrent networks began to see use in time series analysis, robotics, and games. Notably, the AlphaGo system received great attention after defeating a professional human Go player in 2015.

At the time of writing this report, the main public attention is directed at generative AI models. These models are capable of communicating in human language, answering questions, performing seemingly logical reasoning, generating images and music, and assisting programmers in writing code. The concept of generative machine learning models is hardly new. However, the main achievements related to deep generative neural networks date to the previous decade. Generative adversarial networks and variational autoencoders were introduced in 2014; both are important tools for image synthesis. Generative adversarial networks made it possible, for the first time, to synthesise high-resolution images of human faces.

In 2015, it was demonstrated that the methods of statistical physics can be used for training generative diffusion models. Perhaps the biggest step forward, however, came in the form of transformers, an architecture built around the attention mechanism and proposed by Google in 2017. Transformers are at the core of a number of well-known language models, such as GPT and BERT, as well as the GitHub Copilot code completion tool.

Transformers enable the construction of parallelisable models with long context windows that can be trained unsupervised on large datasets. Such models can then be retrained for specific tasks through transfer learning. This is a vital feature: the time- and resource-consuming universal model only has to be trained once. It can then be adapted to a specific problem using a much smaller dataset and far fewer resources.

Image synthesis models, or more specifically text-to-image models, also use transformers, but their architecture is generally more complex. DALL-E 3 and Stable Diffusion use an autoencoder for encoding images. The encoded data are used for training diffusion models, which in turn are made up of convolutional neural networks.

2.2 Artificial intelligence algorithms and taxonomies

The term 'artificial intelligence' is very broad. It encompasses methods that differ greatly in complexity, explanatory power and depth, areas of use, and training algorithms. At a high level, artificial intelligence algorithms are divided into rule-based systems, traditional machine learning algorithms, and neural networks.

2.2.1 Rule-based systems

Rule-based systems are the simplest artificial intelligence systems. In general, these systems consist of rules created by human experts. The computer can then follow these rules to solve problems seemingly requiring human intellect. For example, rule-based systems are good at solving certain types of logical thinking exercises and puzzles (e.g. so-called Einstein's puzzles and zebra puzzles).
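To make the idea concrete, here is a minimal forward-chaining sketch in Python. It assumes rules are written as (conditions, conclusion) pairs. The rule format, the function name `forward_chain`, and the animal-identification rules are hypothetical illustrations, not part of any particular expert-system product.

```python
def forward_chain(facts: set[str], rules: list[tuple[set[str], str]]) -> set[str]:
    """Fire every rule whose conditions are all known facts, repeating until
    no new conclusions can be derived (forward chaining)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical expert rules for a toy animal-identification task.
rules = [
    ({"has_feathers"}, "bird"),
    ({"bird", "cannot_fly", "swims"}, "penguin"),
]
derived = forward_chain({"has_feathers", "cannot_fly", "swims"}, rules)
print(sorted(derived))
```

All of the "expertise" lives in the rules; the engine only applies them. This is why such systems are transparent but struggle with situations their authors did not anticipate.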

2.2.2 Machine learning

Machine learning means that the computer learns to solve a task based on existing data. Such data could include machine-readable representations of sensor readings, previous events, etc. Machine learning relies on mathematical optimisation methods, which the program uses to find a maximally accurate solution to the initial problem. This allows the system to solve tasks where the solution algorithm is difficult for a human to describe using precise instructions.

Machine learning methods can be categorised in various ways. For example, from the perspective of applications and training data, machine learning can be divided into supervised machine learning, unsupervised machine learning, and reinforcement learning.

2.2.2.1 Supervised and unsupervised machine learning, reinforcement learning

In supervised machine learning, the goal of the training algorithm is to create a model that predicts values or vectors, also known as labels, from the input it receives. To achieve this, the model being trained is presented with training data that includes both inputs and the corresponding labels. The model can continuously compare its predictions with the correct labels and use the comparison results to improve its predictive capacity. Supervised machine learning is used in almost all fields where machine learning is utilised. Examples include medical research; image, text, and voice recognition or processing; and the training of search engines and spam filters.

Supervised machine learning tasks are divided into classification and regression tasks. The goal of classification models is to predict which of two or more classes a given record belongs to. Regression models try to provide a maximally accurate prediction of the numerical value corresponding to the record.

In unsupervised machine learning, labels corresponding to the records either do not exist or the model cannot see them. The goal of the algorithm in such cases is to identify relationships or structure within the data without the aid of training labels. Unsupervised algorithms permit the dimensionality reduction of the base data (principal component analysis) or the grouping of similar records (clustering). Unsupervised machine learning methods are used, e.g., in genetics for identifying sub-populations and for training generative models such as autoencoders. Unsupervised methods are also often applied before supervised machine learning is used.

Another class of methods, alongside supervised and unsupervised machine learning algorithms, is reinforcement learning. In reinforcement learning, inputs are not each paired with a correct output. Instead, the algorithm learns to select actions based on the state of the environment so that the reward for these actions is maximised. For example, reinforcement learning can be used for speech processing or for teaching the computer to play games; AlphaGo, for instance, was trained using reinforcement learning.
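The following is a minimal tabular Q-learning sketch, illustrating learning from rewards rather than labels. Q-learning is one common reinforcement learning algorithm, chosen here for brevity; systems such as AlphaGo used far more elaborate methods. The five-state corridor environment, its reward, and all hyperparameter values are hypothetical.

```python
import random

# Hypothetical toy environment: states 0..4 on a line. Action 0 moves left,
# action 1 moves right; reaching state 4 ends the episode with reward 1.
N_STATES, GOAL = 5, 4


def step(state: int, action: int) -> tuple[int, float, bool]:
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]: estimated future reward
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore
            # (ties are also broken at random).
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, action)
            # Move the estimate towards reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


q = q_learning()
policy = [0 if left > right else 1 for left, right in q[:GOAL]]
print(policy)  # greedy action learned for each non-terminal state
```

No state is ever labelled "go right". The agent discovers this policy purely from the delayed reward at the goal.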

Transfer learning is a machine learning technique where information acquired while learning to perform one task is also used for performing other tasks. For example, trained general-purpose language models can be used to perform different linguistic tasks without any additional fine-tuning of the model (see Section 2.2.4.1).

2.2.2.2 Machine learning algorithms

Linear regression (Figure 2) is one of the simplest supervised machine learning models. As a statistical model, it has actually been in use for centuries. The model is used to predict a real-valued output from input data. As the name suggests, linear regression models a linear relationship between an input and an output. The trained model is thus easily explainable: the model itself shows how a change in the input value will influence the prediction.
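A minimal sketch of fitting a one-feature linear regression by ordinary least squares, using the closed-form formulas for slope and intercept. The function name and toy data are illustrative assumptions. The fitted slope is exactly the explainable quantity mentioned above: the change in the prediction per unit change in the input.

```python
def fit_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept (one input feature)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x              # change in prediction per unit change in x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy data generated from y = 2x + 1 without noise, so the fit recovers it exactly.
slope, intercept = fit_linear_regression([0, 1, 2, 3], [1, 3, 5, 7])
print(slope, intercept)  # 2.0 1.0
```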

Logistic regression (Figure 2) is very similar in nature to linear regression; in spite of its name, however, it is mainly used for classification. In binary logistic regression, the prediction algorithm first applies a linear function, whose output can be interpreted as the logarithm of the odds (log-odds) of a label. The output is then passed to a sigmoid function that transforms it into a probability in the range (0, 1). Logistic regression can also easily be adapted to situations with more than two output classes.
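The following sketch trains a one-feature binary logistic regression with plain batch gradient descent on the cross-entropy loss. The learning rate, number of epochs, and toy data are arbitrary assumptions; production libraries typically use more sophisticated optimisers. The linear part `w * x + b` is the log-odds, and the sigmoid turns it into a probability.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(xs, ys, lr=0.5, epochs=2000):
    """Binary logistic regression with one feature, trained by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            # Gradient of the cross-entropy loss: (predicted probability - label) * input.
            error = sigmoid(w * x + b) - y
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Toy data that is not perfectly separable, so the weights stay finite.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]
w, b = fit_logistic_regression(xs, ys)
log_odds = w * 1.5 + b          # linear part: log-odds of class 1 at x = 1.5
print(sigmoid(log_odds))        # probability of class 1 at x = 1.5
```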

Support vector machines are supervised machine learning methods initially developed for classification tasks. The simplest support vector machine is a linear classifier. Its task is to find a hyperplane that separates records of different classes with the largest possible margin. Linear classifiers presume that the data classes are linearly separable, which, however, is generally not the case. Over time, a number of adaptations have therefore been developed. These enable support vector machines to be trained for non-linear classification, regression analysis, outlier detection, and dimensionality reduction.

Support vector machines are used in image and text classification, but also in, e.g., biology. The main weaknesses of support vector machines are their poor explainability and the higher computational complexity of training.
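A minimal sketch of training a linear support vector machine, assuming two numeric features and labels encoded as -1 and +1. For brevity, it minimises the regularised hinge loss with sub-gradient descent. Most SVM libraries instead use quadratic-programming solvers. The hyperparameters and data are illustrative.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Minimise lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) by sub-gradient descent.
    points: list of (x1, x2); labels: -1 or +1."""
    w1 = w2 = b = 0.0
    n = len(points)
    for _ in range(epochs):
        g1, g2, gb = lam * w1, lam * w2, 0.0
        for (x1, x2), y in zip(points, labels):
            if y * (w1 * x1 + w2 * x2 + b) < 1:  # inside the margin or misclassified
                g1 -= y * x1 / n
                g2 -= y * x2 / n
                gb -= y / n
        w1, w2, b = w1 - lr * g1, w2 - lr * g2, b - lr * gb
    return (w1, w2), b


def predict(w, b, point):
    # The sign of w.x + b says on which side of the separating hyperplane the point lies.
    return 1 if w[0] * point[0] + w[1] * point[1] + b >= 0 else -1


points = [(2, 2), (3, 1), (1, 3), (-2, -2), (-3, -1), (-1, -3)]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(points, labels)
print(w, b)
```

The learned weights `w` define the hyperplane, but on real high-dimensional or kernelised problems they are much harder to interpret. This is one reason for the explainability concern mentioned above.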

Decision trees (Figure 3) are supervised models based on a hierarchical data structure. They perform regression and classification analysis as a series of recursive decisions. The tree consists of test nodes and end nodes, or leaves. In the test nodes, the input is subjected to tests that determine the next branch. Leaves return the output corresponding to the input based on the tests performed.

Decision-making can be envisioned as a series of yes/no questions. Each new question depends on the previous one, and the final predicted value depends on every answer along the way. Decision trees are easily explainable and intuitively understandable models, which has made them historically extremely popular.
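Growing a tree requires choosing the test at each node. A common criterion, used for example in CART-style algorithms, is to pick the threshold that minimises the weighted Gini impurity of the resulting branches. The sketch below shows only this single-node step for one numeric feature. The car-price data is hypothetical, and a full tree would apply the step recursively to each branch.

```python
def gini(labels):
    """Gini impurity: 0 for a pure node, larger when classes are mixed."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return (threshold, impurity) for the test 'value <= threshold' that
    minimises the size-weighted Gini impurity of the two branches."""
    best_t, best_score = None, float("inf")
    for t in sorted(set(values))[:-1]:  # the largest value would leave one branch empty
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


# Hypothetical data: car price (thousands of euros) and whether it was bought.
prices = [8, 12, 15, 25, 30, 40]
decisions = ["buy", "buy", "buy", "skip", "skip", "skip"]
print(best_split(prices, decisions))  # (15, 0.0)
```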

Figure 2. Linear and logistic regression

The naive Bayes method is a classification algorithm that uses Bayes' theorem to predict the most probable label based on an input. The method presumes that the input features used for training the model are conditionally independent of each other given the label. This strong assumption rarely holds exactly. Nevertheless, the naive Bayes method has been historically popular due to its adequate predictive power as well as its simple explainability and trainability. Unlike many other machine learning algorithms, the naive Bayes method does not need to find its solution in iterative steps. The formula for the highest probability can be presented in explicit form.
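The following sketch implements a categorical naive Bayes classifier. It assumes add-one (Laplace) smoothing, a common choice for avoiding zero probabilities. Training is just counting, and prediction evaluates the explicit formula log P(label) + Σ log P(feature | label). The spam-filter features and records are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(records, labels):
    """Count label frequencies and, per label, how often each feature value occurs."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (label, feature index) -> Counter of values
    seen_values = defaultdict(set)       # feature index -> distinct values seen
    for record, label in zip(records, labels):
        for i, value in enumerate(record):
            value_counts[(label, i)][value] += 1
            seen_values[i].add(value)
    return class_counts, value_counts, seen_values


def predict_naive_bayes(model, record, alpha=1.0):
    class_counts, value_counts, seen_values = model
    total = sum(class_counts.values())
    scores = {}
    for label, n_label in class_counts.items():
        score = math.log(n_label / total)  # log prior P(label)
        for i, value in enumerate(record):
            # Features are treated as independent given the label, so their
            # smoothed log-likelihoods simply add up.
            count = value_counts[(label, i)][value]
            score += math.log((count + alpha) / (n_label + alpha * len(seen_values[i])))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical features: (contains a link, written in capitals).
records = [("yes", "yes"), ("yes", "no"), ("yes", "yes"),
           ("no", "no"), ("no", "no"), ("no", "yes")]
labels = ["spam", "spam", "spam", "ham", "ham", "ham"]
model = train_naive_bayes(records, labels)
print(predict_naive_bayes(model, ("yes", "yes")))  # spam
```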

The k-nearest neighbour algorithm (Figure 4) is a supervised algorithm that can be used for solving both regression and classification tasks. As the name suggests, predictions are made based on the k nearest neighbours, where k is a positive integer. In classification tasks, the algorithm determines which class is most represented among the k nearest neighbours. In regression, the predicted value is the average of the values of the k nearest neighbours. The predictions can be modified by weighting the neighbours based on their distance from the record being predicted. Distances between points can be measured using different metrics, depending on the problem.

The nearest-neighbour method is popular, as there is no separate training phase: predictions are made directly from the training data. The model is also easily explainable. The main drawback of the method is that it is a local one. Predictions are based on a few individual records, while the rest of the training dataset is ignored.
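A minimal k-nearest neighbour sketch for both task types, assuming Euclidean distance (`math.dist`, Python 3.8+) and unweighted neighbours. The function names and data are illustrative. Note that there is no training step: the stored data is the model.

```python
import math
from collections import Counter


def nearest(train_points, targets, query, k):
    """Return the targets of the k training points closest to the query."""
    ranked = sorted(zip(train_points, targets), key=lambda pair: math.dist(pair[0], query))
    return [target for _, target in ranked[:k]]


def knn_classify(train_points, labels, query, k=3):
    # Majority vote among the k nearest neighbours.
    return Counter(nearest(train_points, labels, query, k)).most_common(1)[0][0]


def knn_regress(train_points, values, query, k=3):
    # Average of the k nearest neighbours' values.
    return sum(nearest(train_points, values, query, k)) / k


points = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
labels = ["A", "A", "A", "B", "B", "B"]
values = [1.0, 1.0, 1.0, 10.0, 10.0, 10.0]
print(knn_classify(points, labels, (0.5, 0.5)))  # A
print(knn_regress(points, values, (5.5, 5.5)))   # 10.0
```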

Figure 3. Decision tree for a car purchase

Figure 4. The k-nearest neighbour algorithm analyses the nearest neighbours of the unlabelled record

Principal component analysis is an unsupervised algorithm that uses a linear transformation to translate data into a coordinate system that is easier to interpret. Principal component analysis is often used for dimensionality reduction of a dataset. This is especially useful in situations where many features in the dataset are strongly correlated with each other. The first principal components are the directions along which the variance of the projected data is largest. Projecting the data onto the first principal components also enables the clustering of the data to be studied visually.
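A compact PCA sketch using NumPy's singular value decomposition of the centred data, one standard way to compute principal components. The synthetic two-feature dataset is an assumption: its second feature is roughly twice the first. It was chosen to show how strongly correlated features collapse onto one component.

```python
import numpy as np


def pca(data: np.ndarray, n_components: int):
    """Project data onto its first n_components principal components."""
    centred = data - data.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, singular_values, vt = np.linalg.svd(centred, full_matrices=False)
    components = vt[:n_components]
    projected = centred @ components.T
    explained_variance = singular_values[:n_components] ** 2 / (len(data) - 1)
    return projected, components, explained_variance


rng = np.random.default_rng(0)
x = rng.normal(size=200)
data = np.column_stack([x, 2 * x + 0.1 * rng.normal(size=200)])  # strongly correlated features
projected, components, variance = pca(data, n_components=1)
print(projected.shape)  # (200, 1)
print(components)       # approximately +/-[0.447, 0.894], the direction (1, 2) normalised
```

A single component keeps almost all of the variance here. This is exactly the situation in which dimensionality reduction pays off.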

The k-means method, or k-means clustering, is an unsupervised machine learning algorithm that divides the data records into k different clusters, where k is a positive integer. The k-means method should not be confused with the k-nearest neighbour method, which is a supervised method. In the k-nearest neighbour method, predictions can be made by looking only at the points nearest to the record. The k-means method, by contrast, looks for an optimal clustering of all points. This makes training much more difficult, and interpreting the output requires identifying all the records that were clustered together. Clusters can be used to identify relationships within the dataset. Clustering yields the centre of each cluster, which can serve as a representative point of the cluster, e.g. in signal processing. The method can also be used for automatic feature learning, which translates input data into a form suitable for other machine learning methods.
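The standard way to compute k-means is Lloyd's algorithm. It alternates between assigning points to their nearest centre and moving each centre to the mean of its points. The sketch below assumes 2-D tuples, random initialisation from the data, and Euclidean distance. The data is illustrative, and in practice the result can depend on the initial centres.

```python
import math
import random


def k_means(points, k, max_iterations=100, seed=0):
    rng = random.Random(seed)
    centres = rng.sample(points, k)  # start from k distinct data points
    for _ in range(max_iterations):
        # Assignment step: each point joins the cluster of its nearest centre.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centres[i]))
            clusters[nearest].append(p)
        # Update step: move each centre to the mean of its cluster (keep it if empty).
        new_centres = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centres[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centres == centres:  # assignments no longer change
            break
        centres = new_centres
    return centres, clusters


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centres, clusters = k_means(points, k=2)
print(sorted(centres))
```

Unlike the k-nearest neighbour sketch, every iteration touches all points. This is the global optimisation that makes k-means training harder.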

Hidden Markov models (Figure 5) are statistical algorithms modelling Markov processes. A Markov process is a series of possible events in which the probability of each subsequent event depends only on the state of the process after the previous event. In a hidden Markov model, the states of the Markov process are not observable. Only the events directly influenced by the hidden states can be observed. The goal is to use the observable events to study the hidden states and events.

Figure 5. Example of a hidden Markov model of activities for different weather conditions
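One common question for a hidden Markov model is which sequence of hidden states most probably produced the observed events. The Viterbi algorithm answers it with dynamic programming. The sketch below uses a weather/activity model in the spirit of Figure 5. All probabilities are illustrative assumptions, not values from this report.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most probable hidden-state sequence for the given observations."""
    # best[t][s] = (probability of the best path ending in state s at step t, previous state)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        prev = best[-1]
        best.append({
            s: max((prev[p][0] * trans_p[p][s] * emit_p[s][obs], p) for p in states)
            for s in states
        })
    # Backtrack from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for layer in reversed(best[1:]):
        state = layer[state][1]
        path.append(state)
    return path[::-1]


states = ["Rainy", "Sunny"]
start_p = {"Rainy": 0.6, "Sunny": 0.4}
trans_p = {"Rainy": {"Rainy": 0.7, "Sunny": 0.3}, "Sunny": {"Rainy": 0.4, "Sunny": 0.6}}
emit_p = {"Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
          "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1}}
print(viterbi(["walk", "shop", "clean"], states, start_p, trans_p, emit_p))
# ['Sunny', 'Rainy', 'Rainy']
```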

Ensemble methods (Figure 6) are techniques that combine different machine learning models. Combined models are often better and more stable than the individual models by themselves. Various methods exist for combining models: bootstrap aggregating (bagging), stacking, and boosting. The best-known ensemble methods, such as decision forests and gradient-boosted trees, combine different decision trees. Diffusion models have also been used for the generation of neural network parameters [1]. Ensemble learning is sometimes also referred to as meta-learning.
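Bagging can be sketched in a few lines. Each member model is trained on a bootstrap sample (records drawn with replacement), and the ensemble predicts by majority vote. The sketch assumes one-level decision trees ("decision stumps") as members, which makes it a simplified relative of decision forests. The stump learner, the number of models, and the data are illustrative.

```python
import random
from collections import Counter


def train_stump(xs, ys):
    """Weak learner: the single threshold rule with the fewest training errors."""
    best = None
    for t in sorted(set(xs)):
        for low, high in [(0, 1), (1, 0)]:
            errors = sum((low if x <= t else high) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, low, high)
    _, t, low, high = best
    return lambda x: low if x <= t else high


def bagging(xs, ys, n_models=25, seed=0):
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw len(xs) training records with replacement.
        idx = [rng.randrange(len(xs)) for _ in xs]
        models.append(train_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    # The ensemble predicts by majority vote of its members.
    return lambda x: Counter(m(x) for m in models).most_common(1)[0][0]


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
ensemble = bagging(xs, ys)
print(ensemble(1.5), ensemble(7.5))
```

Individual stumps trained on unlucky bootstrap samples may place their threshold badly. The vote smooths out such errors, which is why combined models tend to be more stable.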

2.2.3 Artificial neural networks

Artificial neural networks are machine learning models that attempt to imitate the operation of the human brain. Neural networks consist of layers of nodes whose behaviour is meant to resemble that of the neurons found in the brain. The first neural networks were built as early as the 1950s. However, they only saw real success about a decade ago, when convolutional neural networks began to achieve better results in image processing and face recognition than any other existing algorithm.

Increases in computing power and reductions in related costs have created the conditions for training large and complex neural networks. This has led to a kind of race in both the research and the implementation of such systems. Today, models based on neural networks are capable of solving tasks that were considered impossible a mere few years ago. Neural networks are generally difficult to explain, and trained models are seen as black boxes. As a result, more easily explainable machine learning models of similar predictive capacity are often preferred to neural networks. The explainability of neural networks is an active field of research.

2.2.3.1 Neural network architectures

Fully connected neural networks (Figure 7) are one of the first neural network architectures ever developed. A fully connected network is made up of a series of fully connected layers. These layers in turn consist of linear nodes whose outputs are passed through non-linear activation functions.
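A forward pass through a small fully connected network, sketched with NumPy. The ReLU activation, the layer sizes, and the random weights are assumptions for illustration; training (e.g. by backpropagation) is omitted.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def mlp_forward(x, layers):
    """layers: list of (W, b). Every node in a layer receives every output of the previous layer."""
    h = x
    for i, (W, b) in enumerate(layers):
        z = h @ W + b                                # linear part of every node
        h = relu(z) if i < len(layers) - 1 else z    # non-linear activation on hidden layers
    return h


rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(4, 8)), np.zeros(8)),  # 4 input features -> 8 hidden nodes
    (rng.normal(size=(8, 3)), np.zeros(3)),  # 8 hidden nodes -> 3 outputs
]
out = mlp_forward(rng.normal(size=(5, 4)), layers)  # a batch of 5 records
print(out.shape)  # (5, 3)
```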

Figure 6. Ensemble methods combine different machine learning models

Figure 7. Artificial neural networks consist of different layers and nodes.

Convolutional neural networks are neural networks comprising one or several hidden convolutional layers. A fully connected layer comprises linear nodes with a weight for each input value. A convolutional layer, in contrast, is made up of small kernels (filters) that are slid across the input. This makes the layers smaller and enables them to be used to build deeper (i.e. involving more layers) neural networks.
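A naive "valid" 2-D convolution in NumPy shows why convolutional layers need so few weights. One 2×2 kernel (four weights) is slid over the whole image. As in most deep learning libraries, the operation is technically cross-correlation (the kernel is not flipped). The edge-detecting kernel and the tiny image are illustrative.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation): the same small kernel is applied
    at every position where it fits entirely inside the image."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# Vertical-edge detector applied to an image that is dark on the left, bright on the right.
image = np.array([[0, 0, 1, 1]] * 4, dtype=float)
kernel = np.array([[-1, 1], [-1, 1]], dtype=float)
print(conv2d(image, kernel))  # each row is [0, 2, 0]: the response peaks at the edge
```

The kernel stays at four weights whatever the image size, whereas a fully connected layer would need a weight for every input pixel of every node.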

The best-known application of convolutional neural networks is artificial vision. In face recognition, a convolutional network can identify different features layer by layer. It begins with lines and angles, moves on to eyes and the mouth, and ends with the complete human face. Convolutional networks had a real breakthrough in 2012 with AlexNet, which beat the other contestants in the ImageNet Large Scale Visual Recognition Challenge by a huge margin. From then on, convolutional networks have been the main tool of artificial vision. Convolutional networks are also successfully used in text processing and, to a lesser extent, in other specialty tasks.

Both fully connected and convolutional neural networks are examples of feed-forward networks. In these networks, the output of a hidden layer is the input for the next layer, i.e. information only flows in a single direction through the network layers. Information can also flow cyclically within a neural network, i.e. a layer's output is fed back into the network and can influence later inputs to the same layer. Such a network is called a recurrent neural network. Recurrent neural networks are mainly used for analysing data series, as they can keep track of the preceding inputs within the same series when processing an input. Recurrent neural networks are widely used in, e.g., language models, text generation, speech recognition, artificial vision, and video labelling.
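A minimal sketch of a simple (Elman-style) recurrent layer in NumPy. The hidden state `h` is fed back at every step, which is how the network keeps track of earlier inputs in the series. The tanh activation and the parameter shapes are assumptions; gating, as in LSTMs, is not included.

```python
import numpy as np


def rnn_forward(inputs, W_x, W_h, b):
    """inputs: (T, D) series; W_x: (H, D); W_h: (H, H); b: (H,). Returns all T hidden states."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in inputs:                          # process the series one element at a time
        h = np.tanh(W_x @ x_t + W_h @ h + b)    # new state depends on the input and the previous state
        states.append(h)
    return np.array(states)


# With W_h = 1, the second state still reflects the first input.
states = rnn_forward(np.array([[0.5], [1.0]]), np.array([[1.0]]), np.array([[1.0]]), np.zeros(1))
print(states)
```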

The training of recurrent neural networks can be rendered unstable by 'exploding' or 'vanishing' gradients during backpropagation. To mitigate this problem, long short-term memory (LSTM) networks have been adopted as a subclass of recurrent networks. At the core of an LSTM are cells with input, output, and forget gates that control the flow of information through the cell. This mitigates vanishing (and, to some extent, exploding) gradients during backpropagation.
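The gating described above can be sketched as a single LSTM time step in NumPy. The stacking order of the gate parameters (input, forget, output, candidate) is an assumption, since libraries differ. The usage example holds the forget gate open and the input gate closed to show the cell state being carried over unchanged.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step. W: (4H, D), U: (4H, H), b: (4H,) stack the parameters of the
    input gate, forget gate, output gate and candidate values (this order is an assumption)."""
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])           # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])       # forget gate: how much of the old cell state to keep
    o = sigmoid(z[2 * H:3 * H])   # output gate: how much of the cell state to expose
    g = np.tanh(z[3 * H:4 * H])   # candidate values
    c = f * c_prev + i * g        # additive update of the cell state
    h = o * np.tanh(c)
    return h, c


# Forget gate held open and input gate closed: the cell state is carried over unchanged.
D, H = 2, 3
b = np.zeros(4 * H)
b[0:H], b[H:2 * H] = -20.0, 20.0
h, c = lstm_step(np.ones(D), np.zeros(H), np.array([1.0, -2.0, 0.5]),
                 np.zeros((4 * H, D)), np.zeros((4 * H, H)), b)
print(c)
```

Because the cell state is updated additively rather than by repeated multiplication with weights, information (and gradient signal) can pass through many steps largely intact.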

Transformers are deep learning models that use attention mechanisms to analyse sequential data. Transformers came into the limelight in 2017, when they were applied to natural language processing. They were shown to identify the context corresponding to a token from the preceding sequence without processing that sequence iteratively. An input of a certain length is analysed as a whole. An attention mechanism identifies, for each token, the most relevant signals in the preceding sequence of tokens. This enables the models to be trained in parallel, thus reducing computing costs compared to, e.g., LSTMs. Unlike LSTMs, however, transformers cannot keep track of the entire preceding series for long inputs. They can only attend to a segment of fixed maximum length (the context window), which can prove problematic when analysing long texts. Transformers generally consist of an encoder and a decoder: the encoder analyses the input and the decoder generates the output step by step. The encoder and decoder can be used both together and separately. For instance, GPT is a purely decoder-based model and BERT a purely encoder-based one. There are, however, models such as T5 that employ both an encoder and a decoder.
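The core computation can be sketched as single-head scaled dot-product self-attention in NumPy. The sketch uses a causal mask of the kind found in decoder-only models such as GPT. Every token's attention weights over the preceding tokens are computed in one matrix product, which is what makes training parallelisable. The projection matrices and sizes below are random placeholders. Multi-head attention, positional information and the rest of a transformer block are omitted.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def causal_self_attention(X, W_q, W_k, W_v):
    """X: (n_tokens, d_model). Each token attends only to itself and earlier tokens."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])               # relevance of every token to every other
    n = X.shape[0]
    future = np.triu(np.ones((n, n), dtype=bool), k=1)    # True where the key is a later token
    weights = softmax(np.where(future, -np.inf, scores))  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))                               # 4 tokens, 8-dimensional embeddings
W_q, W_k, W_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = causal_self_attention(X, W_q, W_k, W_v)
print(np.round(weights, 2))
```

The size of `weights` grows with the square of the number of tokens. This is one practical reason why transformers work with a bounded context window.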

Transformers are used for training supervised, unsupervised, and hybrid models. Large language models such as BERT and GPT are first trained unsupervised on a large set of texts. The model is then trained on a smaller, labelled dataset for a specific task. In recent years, transformer-based models have achieved almost complete dominance among language models. In other fields, however, no similar success has been observed. For instance, in artificial vision, convolutional neural networks are often still preferred to transformers, even though attention mechanisms are already employed in them.

An autoencoder is an unsupervised neural network comprising both an encoder and a decoder. Input is received by the encoder and transformed into another form, while the decoder attempts to reconstruct the original input from the transformed input. The trained encoder can then be used for dimensionality reduction of the input data, and the decoder for the generation of new data. In most cases, the generative capacity of an autoencoder is limited, as decoder inputs that lie close to each other are not guaranteed to produce similar outputs.

Variational autoencoders (VAE) have been proposed for use in data generation, e.g. image synthesis. VAEs differ from ordinary autoencoders in that the encoder maps an input to a distribution rather than a single point, e.g. by outputting the mean and covariance matrix of a normal distribution. The decoder is then given a random vector drawn from this distribution as its input, which it tries to use to reconstruct the encoder's original input. Unlike those of ordinary autoencoders, trained VAE decoders will usually generate similar outputs for proximate inputs.
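The sampling step can be sketched in Python using the so-called reparameterisation trick. The sketch assumes a normal distribution with a diagonal covariance matrix, encoded as per-dimension log-variances (a common but not mandatory convention); the mean and log-variance values are hypothetical encoder outputs:

```python
import math
import random

def sample_latent(mean, log_var, rng=random):
    """Draw z ~ N(mean, diag(exp(log_var))) via the reparameterisation trick.

    mean and log_var stand in for the encoder's outputs for one input.
    The randomness is isolated in eps, so z stays a differentiable
    function of mean and log_var during training.
    """
    z = []
    for mu, lv in zip(mean, log_var):
        eps = rng.gauss(0.0, 1.0)                 # noise from a standard normal
        z.append(mu + math.exp(0.5 * lv) * eps)   # shift and scale it
    return z

rng = random.Random(0)
print(sample_latent([0.2, -1.0], [-2.0, -2.0], rng))  # vector passed to the decoder
```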

A generative adversarial network (GAN) is a generative model in which two neural networks, a generative and a discriminative one, contest with each other during training. Both neural networks are trained simultaneously. The generative model receives an input from a simple distribution and attempts to use it to generate an output that follows a complex target distribution, whereas the discriminative model attempts to distinguish the outputs of the generative model from real data, whose distribution the generative model is attempting to imitate. GANs can be used in, e.g., image synthesis, where the generative model generates images of humans while the discriminative model attempts to distinguish the real images from the generated ones. Generative adversarial networks are also used in speech and text synthesis.
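The contest between the two networks is usually expressed as two opposing loss functions. The Python sketch below assumes the common binary cross-entropy formulation with the 'non-saturating' generator loss; the discriminator outputs are given as plain probabilities instead of being produced by real networks:

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator's probabilities that real samples are real.
    d_fake: its probabilities that generated samples are real.
    The discriminator wants d_real -> 1 and d_fake -> 0.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that is easily fooled gives the generator a low loss
# and itself a high loss.
print(discriminator_loss([0.9, 0.8], [0.7, 0.9]), generator_loss([0.7, 0.9]))
```

In training, the two losses are minimised alternately with respect to the discriminator's and the generator's parameters, respectively.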

Diffusion models are generative models based on Markov processes. Diffusion models are somewhat similar to autoencoders in that they comprise a forward process, where noise is added to real data step by step, and a reverse process, which attempts to recreate the original input by gradually removing the noise. In general, the noise used for training diffusion models is drawn from a normal distribution; once a sufficient amount of noise has been added, the original input disappears completely and the output consists only of random noise.

If a lot of noise is added to the input at once, it is extremely difficult to predict the original input, but it turns out that when noise is added in sufficiently small increments, the most recent addition of noise can be predicted and removed using, e.g., a neural network. The trained model can then be applied sequentially to a completely random input to generate an output similar to real data.

The efficiency of such training stems from the fact that if noise is drawn from a normal distribution and added sequentially, the sum of all the added noise also follows a normal distribution. The combined noise of several increments can thus be added to the original input in a single step during training, and the neural network can be asked to predict only the small amount of noise added in the latest step.
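The property used above can be checked numerically. The Python sketch below assumes a simplified scheme in which each step adds independent normal noise with a fixed standard deviation sigma to a scalar; practical diffusion models such as DDPM also rescale the signal at each step, but they rely on the same additivity of normal noise:

```python
import math
import random

def noise_stepwise(x0, sigma, steps, rng):
    """Add N(0, sigma^2) noise to a scalar x0 one step at a time."""
    x = x0
    for _ in range(steps):
        x += rng.gauss(0.0, sigma)
    return x

def noise_in_one_jump(x0, sigma, steps, rng):
    """Same distribution in a single draw: the sum of `steps` independent
    N(0, sigma^2) terms is N(0, steps * sigma^2)."""
    return x0 + rng.gauss(0.0, sigma * math.sqrt(steps))

def sample_std(xs):
    m = sum(xs) / len(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / (len(xs) - 1))

rng = random.Random(42)
a = [noise_stepwise(1.0, 0.1, 100, rng) for _ in range(5000)]
b = [noise_in_one_jump(1.0, 0.1, 100, rng) for _ in range(5000)]
print(sample_std(a), sample_std(b))  # both close to 0.1 * sqrt(100) = 1.0
```

During training, the one-jump form lets a noisy sample for any step be generated directly, without simulating all the preceding steps.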

Diffusion models originate from statistical physics. In 2015, it was demonstrated that they can also be used for image synthesis. Subsequent study of these models has led to the realisation that diffusion models are more powerful and stable, yet less resource-intensive, than, for example, generative adversarial networks, which were previously the best image-generating models. Today, diffusion models and transformers are the main components of text-to-image models such as DALL-E 3 and Stable Diffusion.

2.2.4 Large language models

Large language models (LLMs) are generally transformer-based text synthesis models, distinguished by the large number of parameters and the amount of training data used. Non-transformer-based language models also exist: various architectures, such as RetNet [2], RWKV [3], and Mamba [4], have been developed that can also be used to create language models, offering solutions to the weaknesses of the transformer architecture. A large part of recent innovation in machine learning and artificial intelligence has been related to the development of LLMs and the adoption of products built on LLMs (such as ChatGPT).

According to one hypothesis, should artificial general intelligence (AGI) prove possible at all, it can only be developed on the basis of multimodal large language models [5]. Demis Hassabis from the AI developer DeepMind has opined that 'multimodal foundational models are going to be [a] key component of AGI'¹. Conceptions and definitions of AGI vary, however, and some claim that the necessary level of technology has already been reached [6].

2.2.4.1 Training

As with all other artificial intelligence models, the model architecture needs to be trained after it has been established. The training of LLMs usually involves several steps, none of which, however, is strictly required. The training process of LLMs and the choices made in the process are closely tied to the deployment models of the AI applications built upon the LLM.

Pre-training is the first, unsupervised stage of training, where the model is fed text sequences containing masked elements and is instructed to predict these elements. The masked elements are selected automatically. Pre-training is the most compute-intensive stage, involving huge amounts (on the order of trillions of tokens) of unlabelled, low-quality data, usually acquired through web crawling. Pre-training yields a pre-trained model that can generate a continuation of an input based on what it has learned from the training data. This continuation may not necessarily be useful: when the pre-trained model is asked a question, it may generate an answer to the question, or it may generate a continuation or follow-up questions.
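A minimal sketch of how training examples can be produced automatically from raw text, without human labels. The masking probability, the `[MASK]` token string and whitespace tokenisation are illustrative assumptions (BERT-style training, for instance, sometimes also replaces selected tokens with random ones instead of a mask token); decoder models such as GPT instead learn to predict each next token from the preceding ones:

```python
import random

MASK = "[MASK]"  # placeholder string; real tokenizers define their own

def masked_lm_example(tokens, mask_prob, rng):
    """Encoder-style (BERT-like) objective: hide random tokens.

    Returns the model input and a dict {position: original token}
    that serves as the training target.
    """
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets[i] = tok
        else:
            inputs.append(tok)
    return inputs, targets

def next_token_examples(tokens):
    """Decoder-style (GPT-like) objective: predict each token from its prefix."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

text = "the cat sat on the mat".split()
print(masked_lm_example(text, 0.3, random.Random(1)))
print(next_token_examples(text)[:2])
# [(['the'], 'cat'), (['the', 'cat'], 'sat')]
```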

Supervised fine-tuning (SFT) is the second stage of training, meant to tune the model for a specific purpose. For instance, in the case of chatbots, it is preferred that the system generate answers rather than other kinds of output. The training data used for fine-tuning are often, although not always, assembled and labelled by humans. Their quality is higher and their quantity much lower (on the order of tens of thousands of sample pairs) compared to pre-training data.

1 The Guardian: 'Google says new AI model Gemini outperforms ChatGPT in most tests'. https://www.theguardian.com/technology/2023/dec/06/google-new-ai-model-gemini-bard-upgrade Visited December 11th, 2023

Reinforcement learning with human feedback (RLHF) is the third, reinforcement-based phase of training, where the model is tuned to human preferences. A reward model is created for this purpose and then applied to the fine-tuned model to evaluate its outputs. The reward model is trained using a dataset created with human assistance, where each query is mapped to one or more (good answer, bad answer) pairs, and the goal is to maximise, for each pair, the difference between the reward model's evaluations of the good and the bad answer. After the reward model has learned to distinguish desirable answers from undesirable ones, it is employed during reinforcement learning to further fine-tune the model that has already undergone SFT.
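The goal of maximising the difference between the scores of the good and the bad answer is often implemented with a pairwise logistic (Bradley-Terry) loss. The Python sketch below assumes that formulation; the reward scores are passed in directly rather than produced by a real reward model:

```python
import math

def pairwise_reward_loss(r_good, r_bad):
    """Loss for one (good answer, bad answer) pair.

    r_good and r_bad are the reward model's scalar scores for two answers
    to the same query. The loss is -log(sigmoid(r_good - r_bad)), which
    shrinks as the margin between the two scores grows.
    """
    margin = r_good - r_bad
    return math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))

def mean_loss(scored_pairs):
    """Average loss over a preference dataset of (r_good, r_bad) scores."""
    return sum(pairwise_reward_loss(g, b) for g, b in scored_pairs) / len(scored_pairs)

print(pairwise_reward_loss(2.0, -1.0))  # small: pair ranked correctly
print(pairwise_reward_loss(-1.0, 2.0))  # large: pair ranked the wrong way
```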

Direct preference optimisation and identity preference optimisation (DPO, IPO) are alternative approaches to fine-tuning where, similarly to RLHF, a dataset of human preferences is used for preference learning. Unlike RLHF, DPO and IPO do not require a separate reward model, because the LLM itself can fulfil the role of the reward model [7, 8], with the difference between its evaluations of the good and the bad answers used in the loss function. Whereas a model that has only been pre-trained can give irrelevant or dangerous answers, SFT and RLHF/DPO/IPO as parts of the training process enable using human supervision to train the model to be more secure and more compatible with user and business requirements.
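The core of the DPO objective can be sketched in Python for a single preference pair. The sketch assumes that the log-probabilities of each complete answer have already been computed by the model being trained and by a frozen reference model (typically the SFT model); the value of beta is a hypothetical setting. IPO modifies this objective and is not shown:

```python
import math

def dpo_loss(logp_good, logp_bad, ref_logp_good, ref_logp_bad, beta=0.1):
    """DPO loss for one preference pair.

    logp_*: log-probability the trained model assigns to each answer.
    ref_logp_*: the same for a frozen reference model.
    beta: strength of the pull towards the reference model.

    The implicit 'reward' of an answer is beta * (logp - ref_logp), so the
    language model itself plays the role of the reward model.
    """
    implicit_good = beta * (logp_good - ref_logp_good)
    implicit_bad = beta * (logp_bad - ref_logp_bad)
    margin = implicit_good - implicit_bad
    return math.log1p(math.exp(-margin))  # -log(sigmoid(margin))

# A model that has shifted probability towards the good answer -> lower loss.
print(dpo_loss(-10.0, -14.0, -12.0, -12.0))
print(dpo_loss(-12.0, -12.0, -12.0, -12.0))  # log(2): no preference learned yet
```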

2.2.4.2 Inference and context learning

A prompt is the user input that a generative image or language model uses to generate an output. This process is called inference. A prompt usually consists of natural-language text. The prompts used by LLM-based chatbots are combined with a pre-prompt containing additional information on the context of the conversation, the user, and the language model. Among other things, this is crucial for ensuring that the chatbot's output reflects its role as a chatbot responding to questions, rather than being a continuation of the user input. A pre-prompt can also be used to provide information about the outside world, such as the date, time, user name, the contents of a document or text file, and other features of the user or the environment.

Models are unable to distinguish a prompt from a pre-prompt, a fact exploited by numerous prompt injection techniques. As the pre-prompt is easy for the user to acquire through a well-crafted prompt, it should not contain information that the user should not have access to. In the case of the transformer architecture, the prompt together with the pre-prompt must fit into the model's context window, which is measured in tokens and contains the (pre-)information necessary for generating an output. Another, more complex form of this approach is retrieval-augmented generation (RAG), where the language model creates a database query based on the user prompt and the API information found in the pre-prompt, and uses the results of this query to generate a response. This also solves the problem of user-provided data being too large to insert into the context window via a prompt. Model architectures with unlimited prompt length also exist, e.g. Mamba [4] and RWKV [3].
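The following Python sketch shows how a chatbot back end might assemble the model input. The 'User:'/'Assistant:' template, the whitespace token count and the keyword-overlap retrieval are all simplifying assumptions; production systems use model-specific chat templates, subword tokenizers and vector or database search:

```python
def count_tokens(text):
    # Crude stand-in: real models use subword tokenizers, so the true
    # count is usually higher than the number of whitespace-separated words.
    return len(text.split())

def build_model_input(pre_prompt, user_prompt, context_window):
    """Concatenate the pre-prompt and prompt into one token stream.

    The model sees a single sequence, so it cannot reliably tell the
    operator's instructions from the user's text (hence prompt injection).
    """
    full = pre_prompt + "\n\nUser: " + user_prompt + "\nAssistant:"
    if count_tokens(full) > context_window:
        raise ValueError("pre-prompt + prompt exceed the context window")
    return full

def retrieve(query, documents, k=1):
    """Toy RAG retrieval step: rank documents by words shared with the query."""
    q = set(query.lower().split())
    ranked = sorted(documents, key=lambda d: len(q & set(d.lower().split())),
                    reverse=True)
    return ranked[:k]

docs = ["Office hours are 9 to 17 on weekdays.",
        "The parking lot is behind the building."]
question = "When are office hours?"
context = "\n".join(retrieve(question, docs))
pre = "You are a helpful assistant. Answer using this context:\n" + context
print(build_model_input(pre, question, context_window=512))
```

Only the retrieved document is placed in the pre-prompt, so the full document collection never has to fit into the context window.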

Whereas simpler language models require retraining or fine-tuning for each new task, the language knowledge and generalisation capacity of LLMs mean that, in many cases, articulating the task and adding a few examples to the prompt is all it takes [9]. Given that the information related to the task is fed into the model's context window, this approach is called in-context learning.

In-context learning is divided into several sub-methods: few-shot learning, where the prompt is supplemented with several examples alongside the instructions; one-shot learning, where a single example is provided; and zero-shot learning, where the query is made without providing any examples. The more parameters a language model contains, the fewer examples normally need to be added to the prompt for the task to be completed successfully.
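The difference between the sub-methods lies only in how many worked examples the prompt contains. A minimal Python sketch, assuming a hypothetical 'Input:'/'Output:' layout and a sentiment classification task:

```python
def build_prompt(instruction, examples, query):
    """Build a zero-, one- or few-shot prompt.

    examples: list of (input, output) pairs shown to the model in the
    prompt; an empty list gives a zero-shot prompt. No model weights are
    changed: the 'learning' happens only inside the context window.
    """
    parts = [instruction]
    for inp, out in examples:
        parts.append(f"Input: {inp}\nOutput: {out}")
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

instruction = "Classify the sentiment of the review as positive or negative."
shots = [("Great service, will come again!", "positive"),
         ("The food was cold.", "negative")]
print(build_prompt(instruction, shots, "Lovely staff and quick delivery."))  # few-shot
print(build_prompt(instruction, [], "Lovely staff and quick delivery."))     # zero-shot
```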

2.3 Applications of artificial intelligence

Image synthesis means the automatic generation of an image with predetermined features, e.g. based on a verbal description (or on another image and a verbal description). Subfields of image synthesis include, in order of increasing granularity, inpainting, outpainting, style transfer, deep learning-based noise removal, video synthesis, and refinement. These days, image synthesis generally employs generative adversarial networks [10] and, increasingly, diffusion models [11, 12].

The purpose of artificial vision is the automated extraction of information from images. This comprises class segmentation and instance segmentation, labelling, and object recognition. Artificial vision generally utilises convolutional neural network (CNN) and transformer-based deep learning models [13, 14]. Common use cases include the monitoring of cattle and agricultural equipment, the monitoring of road conditions and surroundings by self-driving cars or delivery bots, face recognition, and augmented reality.

The purpose of speech synthesis is to generate human-understandable speech from a given text. Primitive speech synthesis models operated by sequentially linking pre-recorded phonemes or words, but today, transformer-based neural networks are generally used for this task [15, 16]. Speech synthesis is used in chatbots, automated message delivery, screen readers, computer game localisation, and dubbing. Subfields of speech synthesis include speech style transfer, i.e. the imitation of the tone and patterns of sample speech.

In contrast to speech synthesis, the purpose of speech recognition is the extraction of information from human speech. Speech recognition includes speech transcription, in which textual information is extracted from the speech. Whereas past speech recognition models employed statistical methods, today's systems are mainly built upon neural networks based on CNNs and transformers [17]. Speech recognition is used in smart homes and hands-free devices for voice instructions and dictation.

Natural language processing is a broad field comprising the generation and classification, as well as the interpretation, of texts. Text generation generally means predicting the next token, with the previous tokens providing the context for the prediction. Text classification and interpretation are used in semantic search, where candidate phrases found in a document or text excerpt are compared based not on keyword matching but on semantic proximity. Deep learning networks comprising recurrent neural networks (RNN) and long short-term memory (LSTM) were previously used for these tasks. A major breakthrough in the field came with the emergence of large language models (LLMs), whose architecture is generally transformer-based [18, 19, 20]. LLMs are used in, e.g., copywriting, chatbots, neural machine translation, emotion analysis, and code generation.
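Semantic search can be illustrated by comparing embedding vectors with cosine similarity. The three-dimensional embeddings in the Python sketch below are made-up values; a real system would obtain much longer vectors from a trained text-embedding model:

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def semantic_search(query_vec, phrase_vecs):
    """Rank candidate phrases by cosine similarity of their embeddings."""
    scored = [(cosine_similarity(query_vec, v), phrase)
              for phrase, v in phrase_vecs.items()]
    return [phrase for score, phrase in sorted(scored, reverse=True)]

# Hypothetical embeddings for illustration only.
phrases = {
    "car repair shop": [0.9, 0.1, 0.0],
    "automobile mechanic": [0.85, 0.15, 0.05],
    "cake recipe": [0.0, 0.2, 0.95],
}
query = [0.88, 0.12, 0.02]  # stands in for the embedding of "fix my vehicle"
print(semantic_search(query, phrases))
```

With these vectors, "automobile mechanic" ranks near the top even though it shares no keywords with the query "fix my vehicle" that the query vector stands in for.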

General data processing and analysis. Machine learning methods are also used for data analysis in other applications. These include various classification, cluster analysis, and discrete or continuous feature prediction tasks, such as predicting stock price movements, processing brain signals collected by a brain-computer interface, or cluster analysis based on clients' consumption habits. Depending on the nature of the task, both deep learning neural networks and statistical machine learning methods can be used.

2.4 Areas of use of artificial intelligence

The technologies discussed above have found use in many walks of life: e-governance, the private sector, education and research, healthcare, and various personal uses. We will next take a look at some of these fields and applications.

E-state and e-governance. The AI strategies published by the Estonian Ministry of Economic Affairs and Communications foresee widespread adoption of AI in the public sector. The natural language processing-based virtual assistant Bürokratt enables communication with public sector services via a chat window. The Estonian Parliament's digital stenographer Hans uses speech recognition to transcribe speeches made in the plenary hall. Several ministries have used the Texta text analysis toolkit for auditing their documentation. Ilme, a service provided by the National Archives of Estonia, uses artificial vision to find people in historical photos who resemble those in user-uploaded images.

Education. Artificial intelligence has numerous uses in education; consider, e.g., the Education technology compass published by the Estonian Education and Youth Board (HARNO)². The educational non-profit Khan Academy uses a chatbot based on GPT-4 to personalise studies. The Duolingo language learning application contains a similar GPT-4-based interactive chatbot; natural language processing methods are also employed by the Lingvist language learning application.

Research. Artificial intelligence and machine learning have been used by researchers both for discovering new knowledge and for finding and systematising existing information³. The Semantic Scholar search portal uses natural language processing and artificial vision for summarising, indexing, and searching scientific publications, whereas the AlphaFold AI system developed by Alphabet has made it possible to predict the shapes of proteins with previously unknown structures. Models based on machine learning and artificial intelligence have been adopted in particle physics for data analysis and simulation design, and in biomedicine for the development of new pharmaceuticals.

Healthcare. Artificial intelligence has been successfully employed in personalised medicine, clinical research, and drug development⁴. Machine learning-based big data analysis methods allow using the patient's genetic data to provide better treatment. Artificial vision is helpful in interpreting medical images and diagnosing the patient. Natural language processing and text analysis methods enable finding and organising patient data. Machine learning methods are used in drug development, e.g. in molecular simulations, the prediction of therapeutic properties, and the generation of molecular structures and synthesis paths.

Private sector. Machine learning-based audio processing, noise removal, and audio and video stream compression techniques (Skype) are utilised in telecommunications. Artificial vision is used in, e.g., robotics (Milrem, Cleveron), agriculture, and identity verification (Veriff). Chatbots based on natural language processing are increasingly common in customer support.

2 Education and Youth Board. Education technology compass. https://kompass.harno.ee/tehisintellekt Visited August 10th, 2023

3 OECD, Artificial Intelligence in Science. https://www.oecd.org/publications/artificial-intelligence-in-science-a8d820bd-en.htm Visited August 10th, 2023

4 National Institute for Health Development. Artificial intelligence as the foundation for personal medicine in oncology. https://www.tai.ee/et/personaalmeditsiini-uudiskirjad/tehisintellekt-kui-personaalmeditsiini-alus-onkoloogias Visited August 11th, 2023

Personal use. AI-based personal assistants, such as Google Assistant, Amazon Alexa, and Siri, were common even before the emergence of LLMs and diffusion-based image synthesis models. The proliferation and increased accessibility of LLMs and diffusion-based image synthesis models have led to an evolutionary leap in this area, including the widespread adoption of the AIaaS (artificial intelligence as a service) business model. Models developed for personal use, and the plugins and applications built upon them, can analyze code (GitHub Copilot), read documents or web pages and extract the necessary information (Bing Chat), and generate texts ranging from birthday invitations to marketing materials (ChatGPT).

Image synthesis models can be used by individuals to create illustrations in the desired style, generate interior design ideas, or increase the resolution of images or photos (Stable Diffusion, Midjourney), and image-based AI models can even help identify certain species of mushrooms in the woods.

2.5 Explainability in machine learning

The emergence of deep learning methods and increases in the complexity of machine learning models have given rise to questions regarding the explainability of the models. The explainability of a model means the ability to provide a human-understandable explanation of the relationship between the model's output and its input. EU data protection regulations consider the transparency of the artificial intelligence technology used vital in situations where automated decisions are made using machine learning models [21]. This can be achieved through the explainability of the model.

Explainable AI (XAI) has been proposed as a solution that facilitates movement towards more transparent artificial intelligence and thus avoids limitations on the adoption of AI in critical areas [22]. At the time of writing this report, there is as yet no global consensus regarding the desirable threshold of algorithmic explainability [23].

Explainability is closely tied to the issues of transparency and trustworthiness of AI systems. The systematic definition of explainability requirements is thus a vital step in the development of transparent and trustworthy artificial intelligence systems [24]. The OECD has found [25] that, in order to ensure transparency and explainability, AI actors should provide meaningful information, appropriate to the context and consistent with the state of the art:

• to foster a general understanding of AI systems;

• to make stakeholders aware of their interactions with AI systems;

• to enable those affected by an AI system to understand the outcome; and

• to enable those adversely affected by an AI system to challenge its outcome based on plain and easy-to-understand information on the factors and the logic that served as the basis for the result.

Real-world interpretations of the explainability requirements have also been studied [24]. These studies have led to the finding that the explainability of AI is, inter alia, fostered by the establishment of systematic definitions and the formalisation and quantification of explanations and performance indicators [22]. Four components of explainability have been proposed [24]:

• addressees: to whom to explain?

• aspects: what to explain?

• context: in what kind of situation to explain?

• explainers: who explains?

An explainable model is more trustworthy and easier to develop, test, and audit; it is also easier to detect any biases and explain abnormal behaviour. Explainability is vital in medicine where, e.g., an image model designed to detect tumours is more trustworthy if the prediction is accompanied by an explanation of which features of the image (contrast, shape) led to the detection of the presence (or absence) of a tumour. Likewise, someone receiving a negative response to their loan application from a bank might be interested to learn what they need to do for the bank to give them a loan (a so-called counterfactual explanation). A profanity filter that highlights the input words contributing the most to the classification of a message as obscene will be easier to develop and test than one that does not.

Explainability is not always required. Where the risks are low and the problem itself has already been studied in depth, it can prove superfluous. As a rule, there is also a trade-off between the capabilities and the explainability of a model [26]. Whereas in the case of a linear regression model the relationship between the output and the input can be gleaned from merely looking at the regression coefficients, more complex and more powerful models, such as deep neural networks, are a kind of 'black box' [27] for humans, where the model's prediction or decision principles can no longer be identified on the basis of the model's structure and parameters.

Explainability can be divided into intrinsic and post-hoc explainability. In the case of intrinsic explainability (also known as transparency), the model's complexity is limited from the start in order to prevent it from becoming a black box and to keep its parameters explainable across the entire model. Models with a simple structure, such as decision trees and simple regression models, are considered self-explainable. Where the task at hand calls for a more complex model, post-hoc methods are used to increase its transparency.

Post-hoc methods are generally model-agnostic: they do not depend on the architecture of the model, nor do they presume an overview of its internal components. Post-hoc explanations treat all models, including those that are self-explainable due to their simplicity, as black boxes. So-called local post-hoc explainability methods demonstrate how much and in which direction small individual changes in input features will shift the model's output, or what the smallest changes in input features are that would make the model predict another class. Global post-hoc explainability methods allow understanding the intermediate layers of an already-trained model: for example, OpenAI has created Microscope⁵, a collection of visualisations that can be used to acquire an overview of the intermediate layers of different image models, the neurons contained therein, and their properties. It also allows studying which pictures within the input dataset activate a given neuron the most.
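Both kinds of local post-hoc explanation can be sketched in a model-agnostic way, since they only need to call the model. In the Python sketch below, the loan-scoring function, its coefficients, the feature units and the approval threshold are all hypothetical. The counterfactual search varies a single feature in fixed steps, whereas practical counterfactual methods search over several features at once:

```python
def black_box_score(x):
    """Hypothetical model: the explanation code only calls it."""
    return 0.04 * x["income"] - 0.1 * x["debt"]

APPROVAL_THRESHOLD = 1.0  # hypothetical decision threshold

def local_sensitivity(model, x, delta=1.0):
    """How much, and in which direction, a small change in each feature
    shifts the model output (a finite-difference, model-agnostic probe)."""
    base = model(x)
    effects = {}
    for name in x:
        changed = dict(x)
        changed[name] += delta
        effects[name] = (model(changed) - base) / delta
    return effects

def counterfactual(model, x, feature, step, threshold, max_steps=1000):
    """Smallest change (in multiples of `step`) to one feature that flips
    the decision to approval; None if not found within max_steps."""
    candidate = dict(x)
    for _ in range(max_steps):
        if model(candidate) >= threshold:
            return candidate[feature] - x[feature]
        candidate[feature] += step
    return None

applicant = {"income": 30.0, "debt": 5.0}  # income and debt in thousand EUR
print(local_sensitivity(black_box_score, applicant))
print(counterfactual(black_box_score, applicant, "income", 1.0, APPROVAL_THRESHOLD))
```

Here the applicant is rejected (a score of 0.7 against a threshold of 1.0); the sensitivities show that income raises the score and debt lowers it, and the counterfactual reports that an income higher by 8 thousand EUR would flip the decision.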

2.6 Global trends

2.6.1 Faster and larger

Increasing model sizes. Just like computing power, the size of neural networks has also grown exponentially. In 1989, Yann LeCun's team used a convolutional neural network to identify digits in images. The network consisted of two convolutional layers and one fully connected layer, for a total of fewer than ten thousand trainable parameters. The AlexNet model introduced in 2012 comprised five convolutional and three fully connected layers, with as many as 61 million parameters.

With the spread of the transformer architecture, the number of trainable parameters kept increasing (Figure 8): the BERT-base and GPT-1 language models (2018) already contained ~110 million, GPT-2 (2019) 1.5 billion, and GPT-3 (2020) 175 billion trainable parameters. The number of parameters used in GPT-4 has not been made public, but it has been speculated that it is a so-called 'mixture of experts' (MoE) model with ~1.76 trillion parameters. The increase in the number of parameters also means increased demands for the computing power and memory required both for training a model and for applying a trained model (inference). Efficient training of a model also requires ever-larger amounts of training data.

Figure 8. Growth in the number of model parameters has been exponential.

5 OpenAI Microscope https://microscope.openai.com Visited December 10th, 2023

As the number of parameters has increased, language models have begun to exhibit emergent abilities, generally understood as capabilities that exist in models with larger numbers of parameters but are lacking in smaller ones [28]. For instance, larger language models are capable of summarising and translating texts, generating code, finding patterns in a text, and understanding humour, while smaller models are limited to answering simpler questions or generating seemingly grammatically correct text. Some have also attempted to explain such ostensibly emergent abilities by the better memorisation capacity and improved steerability via prompts characteristic of larger models [29]. Until the adoption of model weight quantisation and model pruning, such features were thought to appear in a language model from ~7 billion parameters upwards, even though certain emergent properties had been observed in the 1.5-billion-parameter GPT-2. Today, however, it has become clear that smaller or compressed models may also possess such abilities to a certain extent.

A language model with a higher number of parameters requires a larger training dataset to utilise these parameters efficiently. Larger English-language training sets comprise trillions of tokens, whereas Estonian datasets do not exceed several billion tokens. This means that a language model trained on Estonian will generally be smaller and less capable. The share of Estonian in multilingual datasets is very small, meaning that a model trained on such data may not always be capable of mastering the language. One strategy for mitigating this disparity is fine-tuning models trained on an English-language dataset using Estonian-language data.

Increasing hardware requirements. Computer GPUs use the SIMD (single instruction, multiple data) architecture, which allows the same operation to be performed simultaneously on several pieces of data. This significantly speeds up rendering workflows and other graphics-related tasks where a certain operation needs to be repeated on each buffer element. This feature did not go unnoticed by researchers of deep learning neural networks, who proposed in 2009 that matrix operations frequently used in neural networks could be sped up using graphics processing units [30].

For each new token being generated, transformer-based large language models must access all the weights and attention vectors (q, k, v) employed by the model and move them from RAM to GPU registers. A sufficiently large number of sufficiently large weight matrices results in increased loading times. This makes memory capacity and throughput comparable in importance to raw FLOPS⁶.
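Because every weight has to be moved for each generated token, memory bandwidth sets an upper bound on generation speed. The back-of-the-envelope Python sketch below makes that arithmetic explicit; the model size and bandwidth figures are hypothetical inputs, not measurements:

```python
def max_tokens_per_second(n_params, bytes_per_param, bandwidth_gb_s):
    """Rough upper bound on single-stream generation speed.

    Assumes every weight is read from memory once per generated token
    and that memory bandwidth, not arithmetic, is the bottleneck.
    Ignores the key/value cache, batching and on-chip caching.
    """
    model_bytes = n_params * bytes_per_param
    return bandwidth_gb_s * 1e9 / model_bytes

# Hypothetical example: a 7-billion-parameter model stored in 16-bit
# precision on hardware with 1000 GB/s of memory bandwidth.
print(round(max_tokens_per_second(7e9, 2, 1000), 1))  # 71.4
```

Halving the bytes per parameter doubles this bound, which is one reason why reducing the memory footprint of the weights matters as much as raw compute.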

Unlike fine-tuning, in-context learning does not require the computationally expensive updating of model weights alongside inference (prediction). The in-context learning functionality of certain LLMs can also be run on a high-performance personal computer⁷. Quantisation [31], i.e. reducing the precision and memory requirements of model parameters, is used to fit the model weights into the PC's GPU memory. For example, 16-bit floating-point numbers are used in place of 32-bit ones; the most powerful quantisation methods re-encode the parameters so that a single parameter requires only slightly more than 2 bits of memory [32]. On the downside, the model's abilities may suffer from quantisation.
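The simplest form of the idea is sketched below in Python: a per-tensor 'absmax' mapping of floating-point weights to 8-bit integers plus one shared scale, together with the resulting memory footprint for a hypothetical 7-billion-parameter model. The quantisation methods cited in [31, 32] are considerably more elaborate, and 2.5 bits is used only as a stand-in for 'slightly more than 2 bits':

```python
def quantise_absmax_int8(weights):
    """Symmetric 8-bit quantisation of a list of floats.

    The largest absolute weight is mapped to 127; every weight is then
    stored as an integer in [-127, 127] plus one shared float scale.
    """
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid /0 for all-zero input
    q = [round(w / scale) for w in weights]
    return q, scale

def dequantise(q, scale):
    return [v * scale for v in q]

def weight_memory_gb(n_params, bits_per_param):
    return n_params * bits_per_param / 8 / 1e9

w = [0.12, -0.5, 0.33, 0.0, 0.49]
q, s = quantise_absmax_int8(w)
restored = dequantise(q, s)
print(q)  # small integers, 1 byte each instead of 4
print(max(abs(a - b) for a, b in zip(w, restored)))  # rounding error, at most s / 2

for bits in (32, 16, 8, 2.5):  # hypothetical 7B-parameter model
    print(bits, "bits:", weight_memory_gb(7e9, bits), "GB")
```

The rounding error in `restored` is the precision loss that can degrade the model's abilities.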

The expansion of fields employing parallel processing (machine learning, simulations, scientific modelling, cryptocurrency mining) has increased demand for both hardware and software suitable for the task. Nvidia has thus developed the CUDA platform, comprising both hardware components and a software framework for utilising GPUs in parallel processing tasks. Apple had developed the OpenCL parallel processing standard, which, unlike CUDA, was not tied to a specific type of hardware, but today Apple, too, has switched to its own hardware-specific framework, called Metal.

Classic server architectures are no longer adequate for offering AI as a cloud service. Extremely large volumes of data also mean that specialised data centres or cloud services are used for data storage and processing. When scaling a service, cloud infrastructure and specialised hardware are recommended for both inference and training. Meanwhile, specialised hardware no longer means only GPUs: it also covers solutions even more specific to neural networks, such as the tensor processing unit (TPU) developed by Google, or the neural processing unit (NPU) used in smartphones and Internet of Things (IoT) devices.

2.6.2 From general-purpose to special-purpose

From foundation models to applications. Foundation models are often mentioned in the context of LLMs. These are general-purpose models that can be used to perform many different tasks. Chatbots are one of the most basic applications of foundation models, as they only require command of natural language and general knowledge that can be derived from the model weights, and do not require a separate database interface. Non-deterministic model output is also acceptable in chatbots. In domain-specific applications, the generalisation ability and knowledge of the foundation model may not always be adequate for the task. Specialised solutions and models have therefore been developed alongside, and on the basis of, large foundation models. These are especially good at processing medical and legal text, summarising large documents⁸, handling programming languages and patterns⁹, and image recognition¹⁰, and can evaluate the likelihood of an image or text having been created by a generative model¹¹.

6 FLOPS (floating-point operations per second) is a measure of computer performance.

7 llama.cpp is an open-source application that facilitates running inference on LLaMA, LLaMA 2, and other language models using quantisation.

Simpler solutions have also emerged that connect to an existing AI model via its API, e.g. for interacting with and summarising documents in the form of PDF files. The business risk involved in such 'thin' solutions is that the providers of the APIs and models can easily implement such functionality in their own products, just as OpenAI has done with the analysis of PDF files in ChatGPT¹².

From the synthesis of a single type of content to the creation of heterogeneous content. When a model interacts with different input or output modalities, it can be classed as multimodal. In other words, even a simple image classifier could be considered multimodal, in that it receives an image as input and outputs a text label. In practice, the term is mainly used for models where inputs with different modalities are mapped to the same embedding space, such as OpenAI CLIP¹³ and GPT-4V¹⁴. Multimodal text-to-video models also exist that generate an image sequence corresponding to the prompt, either relying on reference images [33] or without them [34, 35].

Whereas multimodal inputs have so far been simple to process, generating an output comprising different modalities is more difficult. The most common (and easiest) solution so far is to chain multiple models, feeding the outputs of one model into the inputs of another. Thus, ChatGPT comprises an image generation functionality where textual instructions, generated by the GPT-4 language model based on a user prompt, are fed to the DALL-E 3 image synthesis model, which then returns the generated images to the user. The Invideo AI service¹⁵ (alongside several other similar services) composes videos based on input text: it generates a script based on a user prompt and searches a database for clips, which are then assembled into a video, after which it also generates a soundtrack.

One option for combining AI services is an AI agent (in some cases a generative agent) capable of interfacing with different services, e.g. making Internet queries to perform the task it has been given. AI agents are characterised by a continuous feedback cycle between making queries (interfacing with the outside environment) and updating their internal state. For this reason, it is vital for AI agents to be capable of planning their next steps while also keeping track of the results of the previous steps, their internal state, and the broader content and purpose of the task [36]. A self-driving car can be considered an AI agent.

These days, AI agents generally mean solutions based on large language models that help automate multi-step actions requiring the division of tasks into subtasks, additional planning, and constant feedback based on natural language instructions. Some of the currently popular (as of writing this report) frameworks for creating and managing AI agents include AutoGPT, BabyAGI, and AiAgent.App.
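The feedback cycle of an agent can be reduced to a loop of planning, acting and recording observations. In the Python sketch below, the planner is a hard-coded stand-in for an LLM call, and the two tools and the population figure are hypothetical placeholders; the step limit is a design choice that prevents an agent from looping indefinitely:

```python
def run_agent(task, planner, tools, max_steps=10):
    """Plan-act-observe loop: the agent repeatedly picks an action, calls a
    tool (the outside environment) and records the observation in its state."""
    history = []  # internal state: (action, argument, observation) triples
    for _ in range(max_steps):
        action, arg = planner(task, history)
        if action == "finish":
            return arg, history
        observation = tools[action](arg)
        history.append((action, arg, observation))
    return None, history  # gave up: step budget exhausted

def scripted_planner(task, history):
    # Stand-in for an LLM that reads the task and the history so far and
    # returns the next (action, argument) pair.
    if not history:
        return "lookup", "Tallinn"
    if history[-1][0] == "lookup":
        return "double", history[-1][2]
    return "finish", history[-1][2]

TOOLS = {
    # Fake database; the figure is illustrative, not an authoritative statistic.
    "lookup": lambda city: {"Tallinn": "450000"}.get(city, "unknown"),
    "double": lambda n: str(2 * int(n)),
}

answer, trace = run_agent("What is twice the population of Tallinn?",
                          scripted_planner, TOOLS)
print(answer)  # 900000
for step in trace:
    print(step)
```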

8 Claude 2: https://www.anthropic.com/index/claude-2

9 GitHub Copilot X: https://github.com/features/preview/copilot-x

10 GPT-4 Vision: https://openai.com/research/gpt-4v-system-card

11 Stable Signature: https://ai.meta.com/blog/stable-signature-watermarking-generative-ai/

12 ChatGPT Plus members can upload and analyze files in the latest beta. https://www.theverge.com/2023/10/29/23937497/chatgpt-plus-new-beta-all-tools-update-pdf-data-analysis Visited February 25th, 2024

13 CLIP: Connecting text and images. https://openai.com/research/clip

14 GPT-4V(ision) system card. https://openai.com/research/gpt-4v-system-card

15 Invideo AI. https://invideo.io/

2.6.3 From closed to open

Models for providing access to closed models. The larger AI models became, the more expensive their training, management, and deployment became. The more powerful they became, the greater the risks of their generative capabilities being exploited. OpenAI was founded in 2015 as a non-profit with the goal of researching artificial intelligence, with a main focus on deep learning neural networks¹⁶. In the early days, the organisation put a stress on openness and on creating value for society as a whole.

On 8 April 2019, a few months after the announcement and unveiling of the GPT-2 language model, the decision was made to split the company into a 'limited profit' company (OpenAI LP) and a non-profit (the existing OpenAI Nonprofit), with the board of the latter remaining the governing body of the two new partner organisations¹⁷. This step was purportedly taken because of the high maintenance expenses of modern AI systems: training these systems is compute-intensive, maintaining the big data infrastructure used for training is costly, and a company's opportunities for raising capital far exceed those of an NGO. This was followed by a partnership with Microsoft, which invested one billion US dollars in the company, and another 10 billion dollars in 2023.

GPT-2 was OpenAI's last completely open language model. In 2020, OpenAI released GPT-3, but the parameters of the trained model were not made accessible to the public: access to the model was limited to the OpenAI API¹⁸, and GPT-3 itself was licensed to Microsoft¹⁹ under the cooperation agreement signed earlier. The decision to create an API was motivated by security requirements as well as financial considerations. As the maintainer of the API, OpenAI retains the right to restrict access to the model for those who misuse it; the API was also the first commercial product of OpenAI LP and helped fund further research and maintain the expensive server infrastructure.

Emergence of public models. In February 2023, Meta announced its own series of language models, LLaMA²⁰, and shared the model weights with researchers under a non-commercial licence. The LLaMA 2 model series released a few months later surprised the world by being publicly available even for commercial use; its licence excluded only companies with more than 700 million monthly active users, in order to protect Meta from its biggest competitors. The source code and parameters of stability.ai's generative image model, Stable Diffusion²¹, had already been released in 2022. The emergence of models far surpassing GPT-2 in their capabilities, such as LLaMA 2, has unleashed an avalanche of smaller but, in some ways, more powerful AI models fine-tuned for specific areas of use. The performance of these models is only marginally inferior to that of foundation models with a much higher number of parameters. Mistral-7B²² and SSD-1B²³ are good examples of such models.

16 OpenAI. https://openai.com/blog/introducing-openai Visited October 20th, 2023

17 OpenAI LP. https://openai.com/blog/openai-lp Visited October 23rd, 2023

18 OpenAI API. https://openai.com/blog/openai-api Visited October 23rd, 2023

19 OpenAI licenses GPT-3 technology to Microsoft. https://openai.com/blog/openai-licenses-gpt-3-technology-to-microsoft Visited October 23rd, 2023

20 Introducing LLaMA: A foundational, 65-billion-parameter large language model. https://ai.meta.com/blog/large-language-model-llama-meta-ai/ Visited October 24th, 2023

21 Stable Diffusion Public Release. https://stability.ai/blog/stable-diffusion-public-release Visited October 24th, 2023

22 Mistral AI. https://mistral.ai/ Visited October 24th, 2023

23 Announcing SSD-1B: A Leap in Efficient T2I Generation. https://blog.segmind.com/introducing-segmind-ssd-1b/ Visited October 24th, 2023

Hobbyists, small enterprises, and research institutions can hardly afford the information infrastructure or training budgets of the likes of OpenAI, Google, or Meta, which has caused a shift in focus from the number of parameters to their efficient use, the quality of training data, and alternative model architectures. As Google's leaked "We Have No Moat"²⁴ memo demonstrates, the success of the open-source community has become a cause for concern for large corporations. The emergence of more efficient and cheaper fine-tuning methods, such as LoRA [37], has allowed hobbyists to keep up with large technology companies in spite of the gap in investment capacity.
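LoRA's saving comes from training only a low-rank correction to each frozen weight matrix. The minimal pure-Python sketch below is an illustration under stated assumptions, not the reference implementation from [37]: it keeps a pretrained weight matrix W0 (d × k) frozen and learns two small matrices B (d × r) and A (r × k), so the layer computes h = W0·x + (α/r)·B·A·x. Following the usual LoRA initialisation, B starts at zero, so the adapted layer initially behaves exactly like the pretrained one. The class and function names, the rank r, the scaling factor α, and the matrix sizes in the usage note are hypothetical choices for illustration, and the training loop is omitted.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_param_counts(d: int, k: int, r: int) -> Tuple[int, int]:
    """Trainable parameters for one d x k weight: full fine-tuning vs. LoRA of rank r."""
    return d * k, r * (d + k)


class LoRALinear:
    """Hypothetical illustration: frozen weight W0 plus a trainable rank-r update B @ A."""

    def __init__(self, w0: Matrix, r: int, alpha: float = 1.0, seed: int = 0):
        rng = random.Random(seed)
        d, k = len(w0), len(w0[0])
        self.w0 = w0  # pretrained weights, kept frozen during fine-tuning
        # Only A and B would be updated by training.
        # A (r x k) gets small random values; B (d x r) starts at zero,
        # so B @ A is the zero matrix before any training step.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(d)]
        self.scale = alpha / r

    def effective_weight(self) -> Matrix:
        """W0 + (alpha / r) * B @ A; the update has rank at most r."""
        delta = matmul(self.b, self.a)
        return [[w + self.scale * dw for w, dw in zip(w_row, d_row)]
                for w_row, d_row in zip(self.w0, delta)]

    def forward(self, x: List[float]) -> List[float]:
        """Apply the adapted layer to an input vector of length k."""
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.effective_weight()]
```

For a single 4096 × 4096 weight matrix and rank r = 8, `lora_param_counts(4096, 4096, 8)` returns `(16777216, 65536)`: LoRA trains 256 times fewer parameters for that matrix, which is one reason such fine-tuning can fit on modest hardware.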

Motivated, on the one hand, by the technology industry's desire to use AI on portable devices and, on the other hand, by the limited resources of small enterprises and the open-source community, a number of "small language models" (SLMs) with fewer parameters have now emerged, such as Microsoft's Phi-1.5 [38] and Phi-2, Google's Gemini Nano²⁵ and Gemma [39], as well as Mistral 7B [40] and the Qwen1.5 family of SLMs [41], which are only slightly inferior in performance to much larger models.

2.6.3.1 Developments in deployment models

An AI model in itself is not sufficient for performing business tasks. The model must have access to input data and must be capable of producing properly formatted, high-quality output data. Deployment models refer to the structure of AI apps, the interfaces between the AI model and other components of the app, and the flows of data between these components (including users' personal data).

The first, more primitive AI models (e.g. linear regression, perceptrons, rule-based expert systems) were not compute-intensive, making the information infrastructure for running the model less critical than the data storage infrastructure. AI application deployment models only became relevant with the widespread adoption of AI in the 2010s, accompanied by growing datasets, the proliferation of neural networks, and the resulting need to accelerate training and inference using GPUs that were not always physically accessible to the trainers or users of AI models. Alongside data storage and networking, cloud infrastructure providers began to offer hardware and cloud computing environments for AI models (e.g. Google Colab, Amazon SageMaker), but the users were still responsible for the development, training, and use of their models.

The general-purpose nature of subsequent large text and image synthesis models meant that, for certain tasks, the model no longer needed to be trained from the ground up. This gave rise to AIaaS, or AI as a service, allowing companies and individuals to use large AI models even without investing in hardware, training, and other information infrastructure.

The emergence of ChatGPT and AI APIs has triggered a deluge of thin "API wrapper apps" that use the generalisation ability of ChatGPT or another AI text synthesis solution to solve domain-specific tasks. Some of these applications provide little besides a convenient user experience and a carefully crafted pre-prompt; meanwhile, the ease of reproducing such solutions creates significant business risks for the creators of wrapper apps. This risk materialised at OpenAI DevDay, where OpenAI introduced a "custom GPT" service allowing users to build special-purpose chatbots without writing a single line of code²⁶.
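To make the point about thin wrapper apps concrete, the sketch below shows how little logic such an app may contain: it prepends a fixed pre-prompt to each user request and would forward the result to a hosted model. The request shape (a `model` name plus a list of `messages` with `role` and `content` fields) follows the convention of chat-style APIs such as OpenAI's; the pre-prompt text, the model name `example-model`, and the function name `build_request` are hypothetical, and the HTTP call is deliberately left out. Because the entire "product" here is a short string and a few lines of glue code, a competitor, or the model provider itself, can reproduce it with little effort.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]

# Hypothetical pre-prompt. In a thin wrapper app, this hidden instruction is
# often the main thing that distinguishes the product from the general model.
PRE_PROMPT = (
    "You are a contract-review assistant. Answer only questions about the "
    "contract supplied by the user and cite the clause numbers you rely on."
)


def build_request(user_input: str,
                  history: Optional[List[Message]] = None,
                  model: str = "example-model") -> Dict[str, object]:
    """Assemble a chat request: hidden pre-prompt, earlier turns, new user turn."""
    messages: List[Message] = [{"role": "system", "content": PRE_PROMPT}]
    messages.extend(history or [])  # previous user/assistant turns, if any
    messages.append({"role": "user", "content": user_input})
    # A real wrapper would send this payload to the provider's API; the network
    # call, authentication, and response handling are omitted from this sketch.
    return {"model": model, "messages": messages}
```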

24 Google: "We Have No Moat, And Neither Does OpenAI". https://www.semianalysis.com/p/google-we-have-no-moat-and-neither Visited October 26th, 2023

25 Google Blog: Introducing Gemini. https://blog.google/technology/ai/google-gemini-ai/ Visited December 14th, 2023

26 Introducing GPTs. https://openai.com/blog/introducing-gpts Visited November 20th, 2023

The business niche of AI service providers is generally founded not on innovative model architecture, as these are usually public, but on the information infrastructure built around the model, the user experience provided by the solution, and the quantity and quality of domain-specific training data. The X (formerly Twitter) AI service Grok has real-time access to the database of user posts, and Microsoft's Copilot X coding assistant would not be nearly as efficient without the constantly updated repositories on GitHub. ChatGPT, meanwhile, allows users to give feedback on all of the chatbot's answers, which has enabled OpenAI to collect large amounts of valuable data on users' interactions with the chatbot to further improve the quality of its language models.

Training data quality management is vital not only because it can significantly reduce the amount of data required to train an equivalent model [42], but also because the proportion of synthetic content on the Internet has risen sharply of late and, according to experts, might reach 90 percent by 2026 [43].

2.6.4 From unregulated to regulated

2.6.4.1 AI ethics

The ethics of computer science is a multifaceted field, comprising both moral and ethical considerations related to the development, deployment, and use of computing technologies such as AI. It is vital to ensure that these technologies are developed and used in ways that mirror human values and promote social well-being [44]. Ethical principles are dynamic, meaning that they can change over time, adapting to developments in science and society [45].

The use of AI technologies is on the rise: by 2027, the market size of the field is expected to reach 407 billion dollars [46]. Estonian companies are also increasingly using AI technologies: as of Q1 2023, the market had seen a 2% increase compared to 2021. According to Statistics Estonia, AI technologies are most frequently used in Estonia by enterprises in the finance and insurance, information and communication, and energy sectors [47].

Even though artificial intelligence technologies demonstrate enormous potential, the use of AI also gives rise to numerous questions and fears. For example, a survey carried out in England in 2023 showed that people are most worried about self-driving cars and autonomous weapons. They also fear that if AI is used for professional decision-making, it may prove unable to account for individual real-world circumstances, and that decision-making may suffer from a lack of transparency and accountability [48].

In 2018–2021, a scandal broke out in the Netherlands when it was found that the national tax office had used a flawed risk analysis algorithm in decision-making, resulting in thousands of childcare benefit recipients being baselessly accused of fraud [49]. This led to tens of thousands of families, often from lower income brackets or ethnic minorities, falling into poverty. Some of the victims died by suicide, and over a thousand children were placed in foster care [50].

Professional decisions of this kind may also include court rulings. This raises the questions of whether a ruling made by an artificial intelligence is equivalent in quality to one made by a human judge, whether the system in question has been trained on high-quality data, and whether care has been taken to rule out discrimination on any grounds, such as gender, race, or income. Researchers have pointed out that AI models based on information derived from older input data are more likely to follow conservative practices and may not be capable of adapting to significant political changes over time [51]. It has also been found that using AI to make court rulings may threaten the integrity of data which, due to their very nature, would require the highest level of security [52].

It has been found that LLMs may tend to reinforce incorrect legal assumptions and beliefs, which in turn gives rise to significant concerns over the reliability of their results in a legal context [53, 54]. The transparency and accuracy of the AI model also become critical in the context of trials [55].

Ethical issues emerging in the development, deployment, and use of AI are the subject of AI ethics, which is considered one of the subdomains of applied ethics. The goal of AI ethics is to determine how an artificial intelligence system can increase or decrease human well-being through changes in quality of life or in autonomy and independence. Different AI ethics frameworks are generally built around fundamental rights [45].

On April 8th, 2019, the EU High-Level Expert Group on AI (hereinafter AI HLEG) presented its ethics guidelines for trustworthy AI [45, 56] with the goal of providing guidance for promoting and supporting ethical and robust artificial intelligence; less attention is paid to the legal aspects of such systems. The document presents a preliminary framework for trustworthy AI while also discussing issues related to the implementation and evaluation of AI systems [45].

2.6.4.2 AI regulation in the EU

In April 2021, the European Commission proposed the first legal framework regulating AI [57]. The proposal was built around a risk-based approach, asserting that artificial intelligence systems should be analysed and classified based on the threat they pose to users [58]. Negotiations over the AI Act ended on December 8th, 2023. In early 2024, the AI Act is expected to be published in the Official Journal of the European Union.

Neither should one overlook the existing legal framework. More specifically, the General Data Protection Regulation (GDPR) of 2016 [59] stresses the importance of protecting natural persons in the automated processing of personal data²⁷. In addition to the above, the development, implementation, and use of artificial intelligence must also account for other requirements, such as intellectual property rights. For more details on the legal aspects of artificial intelligence, see Section 3 of the report.

27 GDPR regulates the automated processing of personal data, including profiling, and confers on the data subject the right to object to individual decisions based on such processing (see GDPR Articles 2, 21, and 22, and Recitals 15 and 71).

3 Legal aspects

3.1 International legal initiatives

3.1.1 Regulation

Experience from recent years indicates that AI regulation is developing rapidly all over the world. The examples presented below pertain to just some of the states regulating AI systems.

On October 30th, 2023, the President of the United States, Joe Biden, issued an executive order to ensure that the US maintains a leading position in the world in AI systems. The Executive Order establishes new standards for AI safety and security, protects Americans' privacy, advances equity and civil rights, stands up for consumers and workers, promotes innovation and competition, advances American leadership around the world, and more [60].

The UK Parliament has published a bill to regulate the use of AI technologies in the workplace and make provision about workers' and trade union rights in relation to the use of artificial intelligence technologies. The first reading of the bill took place on May 17th, 2023 [61, 62]. In September 2023, the UK government published a white paper on a pro-innovation approach to AI regulation. This framework is underpinned by five principles [63]:

1. safety, security and robustness;

2. transparency and explainability;

3. fairness;

4. accountability and governance;

5. contestability and redress.

Discussions over the regulation of artificial intelligence are also underway in Australia [64]. In 2022, the Australian government published a consultation on the rules for artificial intelligence and automated decision-making. The consultation was driven by the Australian government's digital economy strategy, which lays out an ambitious vision of Australia becoming one of the top 10 digital economies and societies by 2030 [65, 66]. According to the new draft law on search engines presented on September 8th, 2023, the Australian government requires Internet search service providers to review and regularly update their artificial intelligence tools in order to ensure that class 1A materials (e.g., materials related to the sexual abuse of children, support of terrorism, and extreme violence) are not returned in search results. The draft act also mandates that users must be able to identify whether an image accessible through a search engine is a deepfake [67, 68, 69].

In September 2023, Canada published a voluntary code of conduct on the responsible development and management of generative AI systems [70]. Work is also underway on the Artificial Intelligence and Data Act (AIDA), which would set the foundation for the responsible design, development, and deployment of AI systems that impact the lives of Canadians [70]. The act would ensure that AI systems deployed in Canada are safe and non-discriminatory and would hold businesses accountable for how they develop and use these technologies. In addition to the above, on October 12th, 2023, the Canadian government announced a public consultation on the effects of generative artificial intelligence on copyright [71].

Alongside the above-listed states, legal initiatives related to AI systems have also been undertaken in Israel, Japan, China, Chile, Mexico, Peru, Singapore, and other places [72]. EU legal acts on artificial intelligence systems are covered in Section 3.3 of the report.

3.1.2 Standards

Turning next to approaches to AI found in international soft law, we note that various non-binding recommendations and guidelines have been published to promote the development and adoption of ethical, responsible, and trustworthy AI. These are generally founded on principles such as privacy, explainability, impartiality, security, and human-centredness.