The impact of artificial intelligence on Romani Integration

“AI is good at describing the world as it is today, with all of its biases, but it does not know how the world should be” - Joanne Chen (Partner, Foundation Capital, at SXSW 2018)

by Svenja Kesseler

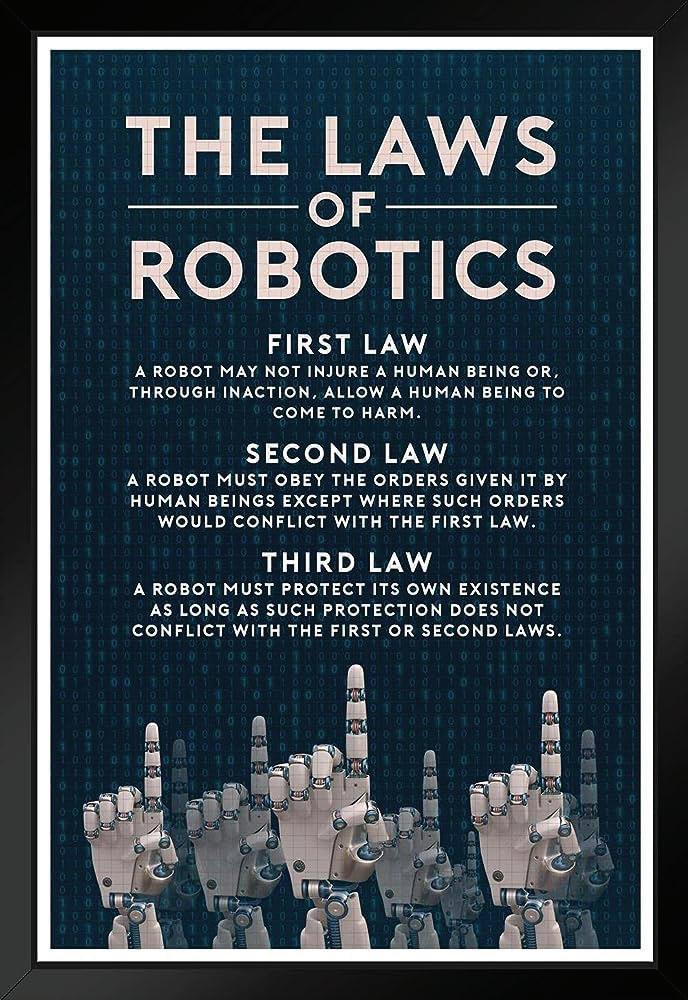

The word “robotics” was coined by the American writer Isaac Asimov in the early 1940s. In his short story “Runaround”, published in 1942, the author sets out the Three Laws of Robotics, highlighting his thoughts on our future relationship with robots and artificial intelligence:

Law number one, the most important law, states: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.”

Nowadays, 81 years later, AI is on the rise. The use of algorithms for decision making in many sectors of society, the economy and daily life is increasing rapidly. One of the best-known examples is ChatGPT, an AI-powered language model that was introduced in November 2022. Although its potential and capabilities are impressive, the increasing use of AI systems may entail risks for marginalized groups, and these risks have to be addressed.

AI’s Flawed Reality: Biases in Big Data

AI systems use highly complex algorithms that are trained on so-called big data. Much of this data comes from the internet, including online news, websites, forums and, of course, social media. This data is neither unbiased nor objective: it can carry gender and ethnic biases as well as the stereotypes and prejudices present in society.

Although the name suggests it, artificial intelligence is not actually intelligent. It blindly connects patterns in its data without the ability to understand or question them. For example, an algorithm may find lots of news articles about crime and violence associated with the word “Roma” and conclude that the Roma community is dangerous and criminal, although that may not reflect actual crime rates.
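The pattern-matching described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the six headlines are invented, `group_a` and `group_b` are placeholder names, and real language models are far more complex than a word count. The point is only that a score computed from news coverage reflects what was written, not what actually happened.

```python
# Invented toy headlines; "group_a" and "group_b" are placeholder names.
HEADLINES = [
    "group_a suspect arrested after theft",
    "police report crime linked to group_a",
    "group_a family opens new bakery",
    "group_b team wins local football cup",
    "group_b school celebrates anniversary",
    "theft reported near group_b market",
]

# Hypothetical list of words treated as crime-related.
CRIME_WORDS = {"crime", "theft", "arrested", "suspect"}


def crime_association(headlines, group):
    """Share of headlines mentioning `group` that also contain a crime word."""
    mentions = [h.split() for h in headlines if group in h.split()]
    if not mentions:
        return 0.0
    flagged = sum(1 for words in mentions if CRIME_WORDS & set(words))
    return flagged / len(mentions)


score_a = crime_association(HEADLINES, "group_a")
score_b = crime_association(HEADLINES, "group_b")
print(f"group_a: {score_a:.2f}, group_b: {score_b:.2f}")
```

In this toy corpus `group_a` ends up with the higher score only because more of the invented headlines pair it with crime words. The score says nothing about how often either group actually commits crimes.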

We expect AI to provide an objective analysis based on scientific data, but instead the data used may reinforce systemic flaws and injustices. Predictions are based on past events, which can be problematic considering what is commonly known as historical bias.

Therefore, the rise of artificial intelligence can have a lasting impact on the rights of the Roma community and on the barriers its members face, now and in the future.

Unseen and Unheard: Roma Marginalization in Algorithmic Bias

The Roma community is a marginalized minority, and much of its culture is passed down by word of mouth rather than written down. As a result, it is underrepresented in the data sets that algorithms are trained on and refer to. This leads to poorer results and a greater risk of errors and false conclusions. Majority groups make up a larger share of a data set, which lowers their risk of being misrepresented: inaccurate data can be balanced out by accurate data, which keeps the AI from learning false patterns. Additionally, the AI may give majority groups more weight simply because they are better represented in the data.
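The balancing effect described above can be made concrete with a standard statistical formula. The sketch below is a simplification under stated assumptions: it treats every record as an independent yes/no observation, and the sample sizes (10,000 and 100) and the rate of 0.5 are invented for illustration. Under those assumptions, the typical error of an estimated rate shrinks with the square root of the number of records.

```python
import math


def standard_error(rate, sample_size):
    """Typical error of a rate estimated from `sample_size` independent records."""
    return math.sqrt(rate * (1 - rate) / sample_size)


# Hypothetical numbers: the same underlying rate, very different amounts of data.
majority_se = standard_error(0.5, 10_000)
minority_se = standard_error(0.5, 100)

print(f"majority group: +/- {majority_se:.3f}")
print(f"minority group: +/- {minority_se:.3f}")
print(f"ratio: {minority_se / majority_se:.1f}")
```

With 100 times fewer records, the estimate for the smaller group is about 10 times less precise, so a handful of inaccurate records can shift it much further.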

Social media and content recommendations are based on algorithms. The algorithm is supposed to predict the kind of content a certain user likes or wants to engage with. This can create an environment in which users only encounter content they agree with. Consequently, it prevents a differentiated culture of discussion, which is very important for a functioning democracy.

Combined with the anonymous spreading of fake news, this environment tends to amplify stereotypes and discriminatory patterns, and it can lead to more cultural and societal division.

With which tasks should AI be trusted?

AI-based systems are also becoming more popular among the police. They are used, for instance, to predict where a new crime is going to happen or how likely it is that a specific person will reoffend.

But what police data captures is not repeat offending but repeat arrests and convictions. This is crucial to understand, because which crimes lead to a conviction can be tied to biases in the police and judicial system. Crimes that end in a conviction and crimes that are actually committed are not always identical.
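The gap between committed and recorded crime can be shown with simple arithmetic. The sketch below rests entirely on invented numbers: two hypothetical districts with the same number of actual offences, where one is patrolled more heavily and therefore has a higher detection rate. It is not a model of any real policing system.

```python
def recorded_arrests(true_offences, detection_rate):
    """Number of offences that end up in police records."""
    return round(true_offences * detection_rate)


TRUE_OFFENCES = 100  # assumed identical in both hypothetical districts

# Assumed detection rates: the second district is patrolled more heavily.
arrests_low_patrol = recorded_arrests(TRUE_OFFENCES, 0.10)
arrests_high_patrol = recorded_arrests(TRUE_OFFENCES, 0.30)

# A system trained only on arrest records sees this ratio, not the true one.
apparent_ratio = arrests_high_patrol / arrests_low_patrol
print(arrests_low_patrol, arrests_high_patrol, apparent_ratio)
```

An algorithm that only sees the arrest records would rate the heavily patrolled district as three times more criminal, even though the underlying behavior is the same in both. If that rating then justifies even more patrols, the gap in the records grows further.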

If the police use these algorithms without questioning them, the result may be excessive surveillance of, and pressure on, communities that already face discrimination, such as the Roma community.

Algorithmic Prejudice in Border Control and Urban Planning

In June 2021 the European Parliament released a paper called “Artificial intelligence at EU borders”, which stated that the EU is planning to use automated facial recognition technology at EU borders in the near future, in order to mitigate security risks related to cross-border terrorism and serious crime.

Border agencies use facial recognition algorithms to compare the face of a person wanting to cross a border with a database of known criminals and terrorists. The problem arises when these algorithms struggle to tell non-white faces apart because people of color are underrepresented in their data sets. Consequently, they might frequently link people from Roma communities to criminality, although they did not commit a crime.

Lastly, AI systems used for urban planning may lead to the decision not to invest in mostly Romani neighborhoods because the algorithm determines that a neighborhood has too much crime and vandalism, when in reality this conclusion is influenced by prejudices and underrepresentation in its database.

If a local government decides to base its decision on the AI's assessment, this can prevent much-needed public investment in these neighborhoods.

The use of technological tools in areas such as border control, security, and access to social services and public investment can potentially deepen the exclusion of and discrimination against Roma people and other marginalized groups.

Navigating the Ethical Landscape of Artificial Intelligence

Artificial intelligence offers society progress and opportunities, but also dangers and threats. It cannot be ignored that it offers opportunities to some and entails risks for others. For a sustainable technological future in which the rights of everyone are protected, it is necessary to take certain steps.

One positive example could be the “Artificial Intelligence Act” that the European Commission is currently working on, which will set standards within a legal framework to make sure AI is used fairly, transparently and responsibly.

For AI to be integrated safely into society, the impact of a technology and its ethical aspects have to be considered in its design. Transparency is an important first step. It is necessary to know which data sets were used to train an AI model and which players were involved in its creation. Furthermore, AI algorithms have to be trained with diverse and representative data.

That is not an easy task, because the complexity of the algorithms makes it difficult to identify and remove biases. One of the greatest threats therefore lies in disproportionate confidence in an AI system's recommendations and determinations. One thing everyone can do right now is to recognize that AI is not always right and that it is important to check the information it provides.

The Power of Choice: Shaping AI for a Better World

But if we manage to contain the negative impacts and threats AI poses, it has the potential to actually lower cultural barriers. Translation algorithms, for example, can help overcome language barriers and improve intercultural communication. Social media algorithms could be used intentionally to connect people of different backgrounds, who can share their unique experiences with each other and thereby gain a better understanding of different living realities.

AI has the potential to play an important role in bridging cultural division and creating a more inclusive and tolerant world, if it is created with the benefit of everyone in mind. The rules Isaac Asimov set out in his 1942 short story can only be upheld if not only the AI but also humans follow certain standards and rules. It is already becoming apparent that individuals can be harmed by artificial intelligence, which is why it is important that the law, politics and society keep up with this fast-evolving technology and also treat it with care. So far AI is just a tool: we must intentionally choose to use it properly and fairly.