AI and ML: Generative AI fuelling data quality revolution

AI Applications: AI-powered biometrics fighting fraud

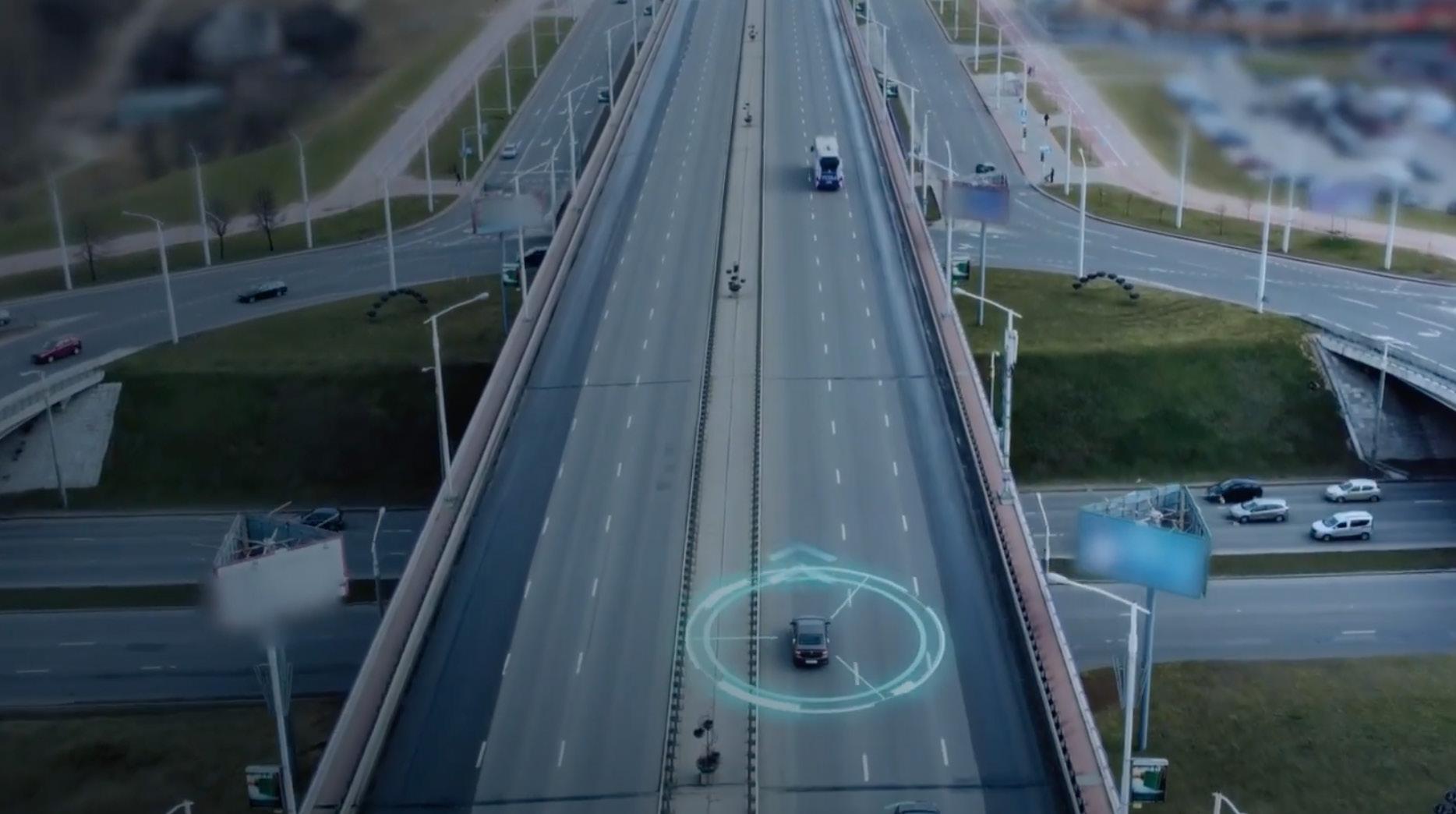

Technology: Powering the next generation of driving aids

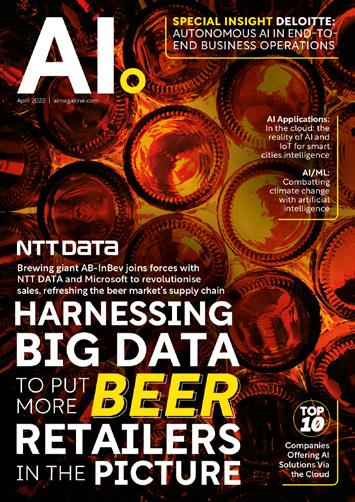

AI Magazine is an established and trusted voice with an engaged and highly targeted audience of 33,000 global executives

Digital Magazine

Website Newsletters

Industry Data & Demand Generation

Webinars: Creation & Promotion

White Papers & Research Reports

Lists: Top 10s & Top 100s

Events: Virtual & In-Person

WORK WITH US

EDITOR-IN-CHIEF

MARCUS LAW

CHIEF CONTENT OFFICER

SCOTT BIRCH

MANAGING EDITOR

NEIL PERRY

PROOF READER

JESS GIBSON

CHIEF DESIGN OFFICER

MATT JOHNSON

HEAD OF DESIGN

ANDY WOOLLACOTT

LEAD DESIGNER

HECTOR PENROSE

FEATURE DESIGNERS

MIMI GUNN

SOPHIE-ANN PINNELL

HECTOR PENROSE

SAM HUBBARD

JUSTIN SMITH

REBEKAH BIRLESON

ADVERT DESIGNERS

JORDAN WOOD

DANILO CARDOSO

CALLUM HOOD

VIDEO PRODUCTION MANAGER

KIERAN WAITE

SENIOR VIDEOGRAPHER

HUDSON MELDRUM

DIGITAL VIDEO PRODUCERS

MARTA EUGENIO

ERNEST DE NEVE

THOMAS EASTERFORD

DREW HARDMAN

JOSEPH HANNA

SALLY MOUSTAFA

JINGXI WANG

PRODUCTION DIRECTORS

GEORGIA ALLEN

DANIELA KIANICKOVÁ

PRODUCTION MANAGERS

JANE ARNETA

MARIA GONZALEZ

CHARLIE KING

YEVHENIIA SUBBOTINA

MARKETING MANAGER

INDIA BERRY

PROJECT DIRECTORS

KRIS PALMER

TOM VENTURO

MEDIA SALES DIRECTOR

JAMES WHITE

MEDIA SALES

JASON WESTGATE

MANAGING DIRECTOR

LEWIS VAUGHAN

CEO

GLEN WHITE

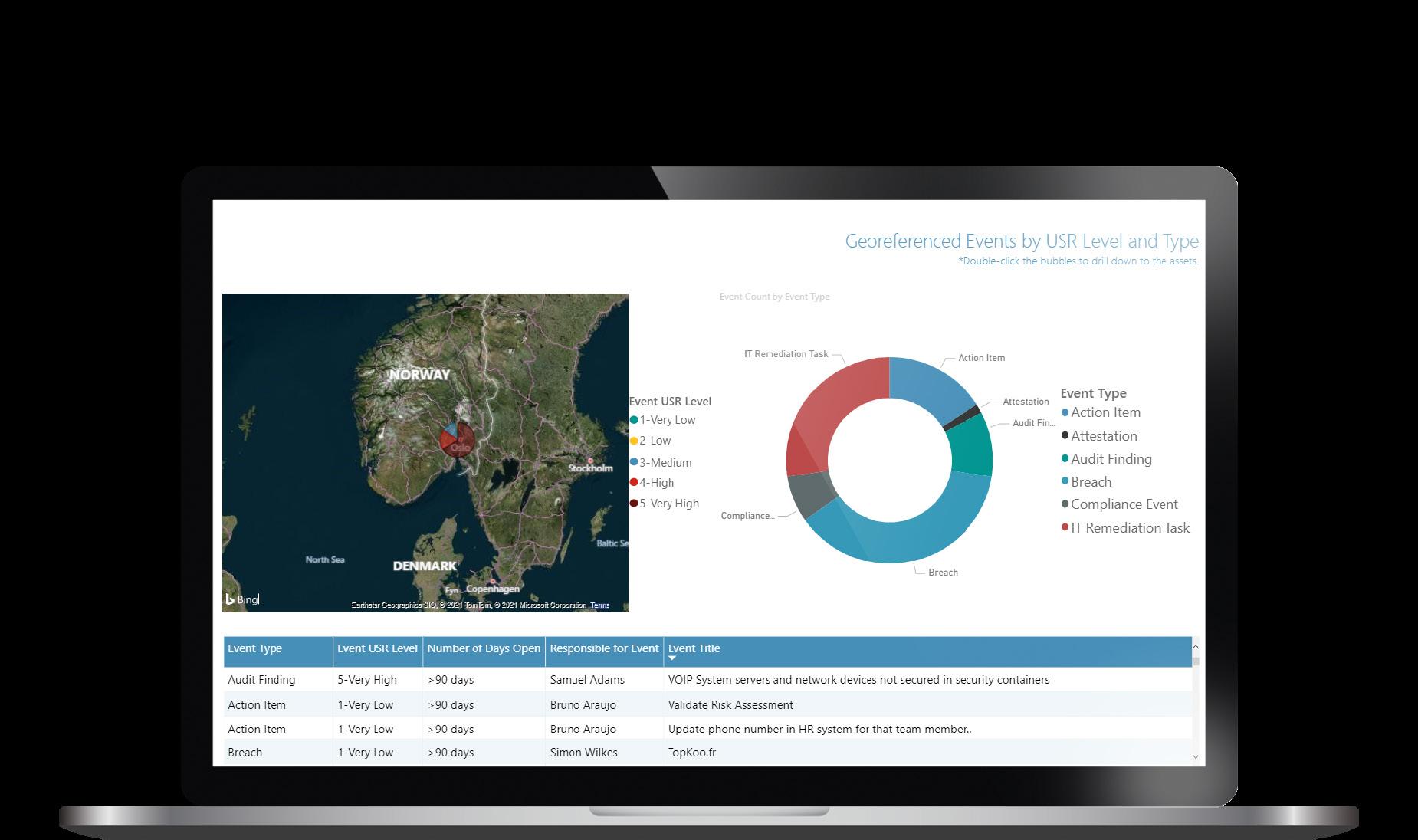

Data is the lifeblood of the world’s future smart cities. But as connected devices increase, so too will security threats

By 2050, two-thirds of people globally are expected to live in cities. As urbanisation continues to rise, cities are facing new challenges that require innovative solutions.

At the heart of one of these possible solutions, the smart city, is the smart sensor. Distributed in the tens of thousands across smart city test neighbourhoods, or even entire boroughs, IoT sensors monitor everything from traffic patterns and footfall to utility usage and emissions.

One example is the city of Barcelona. The Catalan capital’s smart city pilot demonstrates how the smart application of data can transform everything from shopping to transportation, enabling services from autonomous driving to augmented reality.

But as the number of connected devices increases, so too will security threats.

The use of both AI and ML in smart city systems will improve the efficiency of those systems and expand their advantages. However, it will also create new avenues for attack through the cloud, as well as make malfunctioning behaviour more difficult to detect.

marcus.law@bizclikmedia.com

“The smart application of data can transform everything from shopping to transportation, enabling services from autonomous driving to augmented reality”

Innovators are paving the way for a more resilient, sustainable and efficient future. The rules have changed. It’s time for DISRUPTION.

Tech LIVE Virtual returns to highlight the innovators changing the industry through expert keynote speakers, interactive fireside and panel discussions. This exclusive 1-day virtual event will bring together the greatest voices in the industry for an essential deep dive into the future of Technology, AI and Cyber.

Brought to you by BizClik, Technology, AI and Cyber Magazines, the event will shine a light on essential topics such as the AI revolution, quantum computing, the virtual workplace, technology’s place in sustainability and much more.

It’s time for DISRUPTION.

Position your business as a pioneer in Technology and showcase your values, products and services at Tech LIVE Virtual.

This is your chance to share your innovations with the technology community by making an impact in front of fellow decision-makers and influencers as well as accessing potential partners via an active and engaged audience.

See you on the 8th June 2023.

BIG PICTURE: Giving black hole researchers a clearer view

LIFETIME ACHIEVEMENT: Kevin Kennedy, SVP Products

FIVE MINS WITH: Demis Hassabis, DeepMind Technologies

6 - 7 September 2023

Business Design Centre, London


Researchers have developed a new machine-learning technique, known as PRIMO, to enhance the fidelity and sharpness of radio interferometry images. The purpose? Creating a new, high-fidelity version of the Event Horizon Telescope's iconic image of the supermassive black hole at the centre of Messier 87, a giant elliptical galaxy located 55 million light-years from Earth.

Building on the image taken in 2017 by the Event Horizon Telescope, PRIMO relies on a branch of machine learning known as dictionary learning, which teaches computers certain rules by exposing them to thousands of examples.

“With our new machine-learning technique, PRIMO, we were able to achieve the maximum resolution of the current array,” said lead author Lia Medeiros.

With more than 27 years in technology product management, and more than half of those spent in security, Vectra’s Senior Vice President of Products Kevin Kennedy has seen it all.

Today leading the Threat Detection and Response product vision and strategy for Vectra, Kennedy launched his career in threat intel at IronPort and has also held roles at Juniper, Cisco, and Agari Data.

“At Vectra, the thing that we do best in the world and why our customers invest, is we give real-time attack signal intelligence using AI,” Kennedy told AI Magazine. “Most organisations are in a digital transformation: they are moving to the cloud, and they will be hybrid forever. We give them a consistent view of detection and response across that, and really the clarity of signal that we give is why they choose Vectra.”

As Kennedy describes, Applied AI is central to Vectra’s approach. “The company's a little over 10 years old and our technical North Star, from day one, has been using AI, and we've had to do a lot of innovation to figure out how to apply it best to this problem set.

“While there's ChatGPT, most of the AI that is used today is applied AI, so you really have to understand your domain.”

“If you look at the threat landscape today, there are a few things going on,” Kennedy says. “One is that there is more of it. So if you think about the typical enterprise going through a digital transformation, they've got their data centre, public cloud identity, workers outside, and it's very difficult.

“With things like the cloud, the understanding of how that will be attacked is really nascent. You can think all the way back to Windows XP days. We're in those days for the cloud, because we're just discovering how people will use the cloud to attack itself.”

2015: Kevin Kennedy joined Vectra AI

Another issue, Kennedy explains, is the increasing accessibility of certain tools that used to be reserved for nation states.

“If you think about that threat landscape, tools that used to be reserved for nation-states are now available in tool kits that anyone can use. They're automated. On the AI front, I’ve seen some interesting things about ChatGPT, and people asking questions about how to hack. Anyone can have access to it. That's what defenders are up against.”

As Kennedy describes, solving problems with legacy tech signatures will still have a role to play, but utilising AI is key. “You're never going to solve the problem though, because we're just burning out defenders. AI is the only way to get a good signal, both in individual attack surfaces and then pulling the narrative together.

“It’s one of our guiding principles that you've got to use AI. But it's just a tool, especially in applied AI, and it's all about how you use it. The methodology for using it is what defines success versus failure from the customer outcome standpoint. So that's been our focus: it's not just AI, but how you use AI in the right ways to solve the problem.”

When it comes to detecting threats with AI there are a couple of philosophies, Kennedy explains.

“We've definitely placed our bet, and we believe it gives better outcomes,” he describes. “There's one school of thought that says, ‘You learn about an environment, and you do lots of counts and metrics, and then you flag what's unusual’; the bet is that in the unusual you'll find the threat.

“The challenges with that are that when you look at especially large enterprise environments, there's so much happening every day that lead to a lot of noise and alerts for the security teams to deal with.”
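The "count, then flag what's unusual" school of thought that Kennedy describes can be sketched as a simple baseline-deviation check. This is an illustrative sketch only and is not Vectra's method (Kennedy says the company placed its bet elsewhere); the function name, the z-score threshold of 3 and the sample counts are all assumptions made for the example.

```python
from statistics import mean, stdev

def flag_unusual(history, today, threshold=3.0):
    """Return the metrics whose value today sits more than `threshold`
    standard deviations away from their historical baseline."""
    flagged = []
    for name, past in history.items():
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            # Flat baseline: treat any change at all as unusual.
            if today[name] != mu:
                flagged.append(name)
            continue
        z_score = abs(today[name] - mu) / sigma
        if z_score > threshold:
            flagged.append(name)
    return flagged

# Hypothetical daily counts for two metrics (assumed data).
history = {
    "logins": [100, 104, 98, 101, 97],
    "dns_queries": [5000, 5100, 4900, 5050, 4950],
}

loud_day = {"logins": 180, "dns_queries": 5020}
quiet_attacker = {"logins": 103, "dns_queries": 5020}

print(flag_unusual(history, loud_day))        # a large spike is flagged
print(flag_unusual(history, quiet_attacker))  # near-normal activity is not
```

The second call shows the weakness raised in the interview: activity that stays close to the baseline produces no flag. In a large environment with many metrics, the first kind of alert also fires often enough to create noise.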

The other problem, Kennedy describes, is that attackers are good at blending in. “They're good at making themself look pretty close to normal,” he says. “So you actually miss a lot of the relevant signals.”

“So we said, ‘That doesn't really work well’. Rather than thinking about it as more of a pure data science problem, it's in reality a security problem.

“We are constantly pushing the envelope of data science techniques. Four or five years ago, there were lots of breakthroughs around Google Translate, and the use of recurrent neural networks, and long short-term memory (LSTM) models. And we said, ‘Okay, that works really well for translation. It's actually the right technical approach for command and control tunnels’, and so we then applied that. We took the latest learnings from that domain and applied them to security, and we were the first to do that. And so we're always keeping up on data science.

“We're always keeping up with breakthroughs in security research,” Kennedy concludes. “We have to continue doing that work, and then bringing them together to deliver the best outcomes for our customers.”

As CEO and Co-Founder of Google DeepMind, Demis Hassabis is one of the most influential experts in the field of AI.

A child chess prodigy, at the age of 17 Hassabis joined the computer game company Bullfrog Productions, where he worked as a designer on science fiction game Syndicate. Here, Hassabis was the lead programmer for the highly influential Theme Park, released in 1994, which won the industry’s Golden Joystick Award.

In 2010, Hassabis founded the AI company DeepMind Technologies with Shane Legg and Mustafa Suleyman. The trio began working on AI technology by teaching it how to play old games from the 1970s and 80s, with a goal to create a general-purpose AI that can be useful for almost anything.

Hassabis attended Cambridge University, where he studied for his computer science undergraduate degree, earning double first-class honours.

At the start of 2014, Google announced that it had acquired DeepMind Technologies for $500m. With Google, DeepMind established an artificial intelligence ethics board.

Later that same year, DeepMind received the ‘Company of the Year’ award from Cambridge Computer Laboratory. It also published research on computer systems that are able to play the board game ‘Go’.

AlphaGo, a computer program developed by DeepMind, beat the European Go champion Fan Hui in 2015. This was the first time an AI defeated a professional Go player. Go is considered much more difficult for computers to win than games such as chess: the number of possible positions is so high that traditional AI methods such as brute-force search are prohibitively expensive.

With Amazon, Google, Facebook, IBM and Microsoft, in 2016 DeepMind became a founding member of Partnership on AI, an organisation dedicated to the society-AI interface.

Last year DeepMind made headlines with AlphaFold, which is capable of deciphering the structure of almost all proteins known to science.

The company partnered with the European Molecular Biology Laboratory (EMBL) to make the most complete and accurate database yet of predicted protein structure models for the human proteome.

In 2010, Demis Hassabis co-founded the artificial intelligence company DeepMind Technologies, which has been credited as bringing AI to the mainstream

DeepMind announced the data would be made freely available to the scientific community, allowing scientists to carry out work that would have previously taken years in just a few days.

“Our goal at DeepMind has always been to build AI and then use it as a tool to help accelerate the pace of scientific discovery itself, thereby advancing our understanding of the world around us,” said Hassabis at the time.

“For our team, AlphaFold’s success was especially rewarding, both because it was the most complex AI system we’d ever built, requiring multiple critical innovations, and because it has had the most meaningful downstream impact.”

In April, Hassabis announced that DeepMind, which was acquired by Google in 2014, would henceforth be known as Google DeepMind, with the organisation joining forces with the Google Brain team to ‘accelerate progress towards a world in which AI helps solve the biggest challenges facing humanity’.

“When Shane Legg and I launched DeepMind back in 2010, many people thought general AI was a far-fetched science fiction technology that was decades away from being a reality,” Hassabis said in an announcement to DeepMind employees.

“Now, we live in a time in which AI research and technology is advancing exponentially. In the coming years, AI – and ultimately AGI – has the potential to drive one of the greatest social, economic and scientific transformations in history.

“Through Google DeepMind, we are bringing together our world-class talent in AI with the computing power, infrastructure and resources to create the next generation of AI breakthroughs and products across Google and Alphabet, and to do this in a bold and responsible way. The research advances from the phenomenal Brain and DeepMind teams laid much of the foundations of the current AI industry, from Deep Reinforcement Learning to Transformers, and the work we are going to be doing now as part of this new combined unit will create the next wave of world-changing breakthroughs.”

SAP is a global software provider and a leader in enterprise business process software, including solutions to manage supply chains. SAP provides technologies, supports the cloud and cloud platform environments, as well as artificial intelligence/machine learning (AI/ML) libraries, robotic process automation (RPA) and in-memory technology for high-end computers. SAP’s solutions for manufacturing execution and insights are part of a portfolio of products for supply chain management and leverage these technologies.

“We're an enterprise business software and a technologies company,” says Sam Castro, Senior Director, Solution Management, LoB Digital Manufacturing.

Castro is a Senior Director at SAP and a part of the line of business manufacturing solution management team. The line of business covers the 27 manufacturing industries for which SAP provides software solutions.

“All of those industrial companies have needs around operations visibility, control and reporting,” Castro explains. “The different industries have different targets that they're after. Some are heavier on the asset side, some of them are heavier on product quality and yields, others are all about logistics and moving products around on-time through the supply chain.”

SAP is met with a diverse set of requirements and needs from its customers. Solution management takes these industry needs and applies them to market direction and invests them in the portfolio.

“We provide guidance on where to focus and the emphasis for development, and that strategy big picture where we want to take the products,” Castro explains.

In college, Castro completed a Bachelor's in computer engineering and a Master's in computer science at the Rochester Institute of Technology (RIT).

“I came from the hardware bridge to the software bridge very naturally after graduating,” says Castro. “I was dropped into the manufacturing floor because that is exactly where the hardware automation side bridges over into the software.”

He was faced with a great deal of information and digital signals from the automation layer and was tasked to turn it into information — how does SAP make that translation?

“I started at the very lowest level and moved my way through Lighthammer Software, which was acquired by SAP back in July 2005,” says Castro. “I worked my way through SAP into the role that I'm in today.”

Today’s supply chain comes with constant uncertainty. So how are you preparing for whatever comes next?

Discover how Deloitte can help you enable a built-to-evolve digital supply chain that allows you to pivot quickly no matter what surprises the future brings.

Learn more about our Kinetic Supply Chain offerings for embedding flexible capabilities, intelligent insights, and sustainability from end to end.

Contact SAP@deloitte.com to get the conversation started.

Digitally driven business is an imperative in today’s uncertain landscape, but to thrive organizations will need digital supply chains that can anticipate disruption and evolve in lockstep with change all while prioritizing sustainability and responsible practices.

At Deloitte, such capabilities are part of our vision for the Kinetic Supply Chain, a built-to-evolve supply chain enabled by a clean-core ERP, intelligent technologies, responsive cloud solutions, and an inclusive ecosystem of capabilities. Kinetic Supply Chain capabilities can allow you to see issues and opportunities before they arise and to take action sooner.

Ultimately, it’s about flexibility, which can be elusive for many organizations. While optimizing supply chains over the years, some companies may have gained efficiencies without gaining the flexibility that they need in today’s environment. For example, they may have gotten down to one or two key suppliers instead of 20, but they may not have the ability to sense the need for new suppliers, in the event of a regional fuel or material shortage, for example. They also may lack the ability to seamlessly and quickly integrate any new suppliers into their digital ecosystem. And at the same time, they may lack visibility into what those suppliers will mean for their carbon footprint, their sustainability goals, and their impact on the planet.

With a Kinetic Supply Chain covering planning, procurement, distribution, and operations, you can proactively address many of those challenges and become “disruption ready.” More than a vision, the Kinetic Supply Chain is real, and you can see it in action at The Smart Factory @ Wichita. At this full-fledged manufacturing facility, housed in a net-zero-impact building, Deloitte, SAP, and others are collaborating to solve real business needs and understand what it takes to build and scale flexible digital supply chains. Contact SAP@deloitte.com to schedule a visit or to get more insights on enabling a built-to-evolve digital supply chain.

When you talk about risk resilience at SAP, it’s about how to handle the real world, not setting up a plan and adhering to it day in and day out.

“You would like it to be like clockwork, for sure,” says Castro. “Where everything always aligns and meshes the way that it's supposed to all the time, every second. But we know that's not always the case.”

Weather events, pandemics, labour shortages or large sporting events can cause supply chain issues. For Castro, resiliency is the byproduct of having to handle these off-topic or out-of-sync scenarios, and of the ability to detect that you're out of sync with the original plan and react to it in a coordinated manner.

“The faster you can do that, the faster you can correct that problem,” says Castro. “Then you’re able to identify how often those deviations occur — that frequency of occurrence, that is your opportunity.”

Being able to quantify that opportunity and understand what those little deviations actually add up to, and how that impacts the business financially, is one of the key topics around what customers will hear about resiliency from SAP, says Castro.

“Sustainability is an overlay to that, sustainability is a byproduct of efficiency,” says Castro. “Being a sustainable enterprise means that you're an efficient enterprise. If things are running effectively, things are running safely, and in a very energy-friendly manner as well.”

TITLE: GLOBAL VICE PRESIDENT, CENTRE OF EXCELLENCE

INDUSTRY: MANUFACTURING

LOCATION: PENNSYLVANIA, US

Sam Castro joined SAP in July of 2005 with the acquisition of a small company called Lighthammer. He was responsible for implementation consulting, field enablement, custom development, and training for the core products (Illuminator, Xacute, UDS, CMS). These products have since evolved into the core SAP Connected Manufacturing products (Mfg. Integration & Intelligence or MII and Plant connectivity or PCo) that you see today.

Sam is now part of SAP LoB Manufacturing Solution Management group, which is directly responsible for strategy, direction, and customer adoption of all of the manufacturing products at SAP. He is specifically responsible for Industrial Analytics, that is SAP MII, Digital Manufacturing for insights, and Digital Manufacturing for execution, and he is the solution owner for Process MES products. In this role, he is actively working on mid- and long-term features and deliverables and how they are positioned with the broader SAP portfolio; he also provides guidance for product development investment.

Castro views the impact of the cloud on manufacturing as a positive one.

“There are benefits for the IT team from a maintenance perspective and a continuous update and management of that software package,” he explains.

Cloud users are not dealing with out-of-sync or outdated documentation, and they’re not dealing with security issues that creep into the environment over time. Updates and patches are handled in real time by the SaaS provider that hosts and supplies the software in the cloud environment. Castro views offloading that burden from the manufacturing layer and the IT teams that support them centrally and locally as a big deal for organisations and businesses.

“It keeps that barrier to entry for managing efficient production and tracking off of those teams, and it puts it firmly on the shoulders of the software provider. What does that mean for the business? It means that the end users aren't working with stale software. You're not working with software that has a UI from 15 years ago. You're not working with an ad-hoc analytical environment that used to be cool but now uses plug-ins and stuff that your browser doesn't support and ultimately causes it to have problems,” Castro explains.

As businesses are not dealing with these issues from the end user perspective, they're able to take advantage of a very modern, easy to consume and use software experience and focus on their core business functions.

“Despite not directly interacting with it, the work around you is what's driving that environment for you,” says Castro. “You're not putting that burden of three or four extra clicks on somebody, this is just software that's being driven from digital signals; from integration, automation, and the tasks that the operator is performing.”

This newer approach to software design is how SAP leverages the industry investment companies have made and it is what's ultimately reducing the impact that end users have on that environment themselves.

There are different pillars within organisations, which have their own priorities. CEOs, CIOs, CTOs and CFOs are all working together and have overlapping needs that drive different business cases. But they need to have the right information at the top layer to make the right decision for the lowest layers within the organisation. This doesn't happen unless there is a framework in place for the distribution and analysis of the data that is generated, from the very edges of the manufacturing and supply chain processes to the shop floor.

“If you don't have a way for that information to work its way up to the top, organisations really struggle to understand where the priority needs to be,” says Castro.

For manufacturers to focus on business value versus technology, Castro believes that they need to intelligently manage profitability and investments. As a result of that additional profitability, they also need to protect that inflow of money and profitable behaviour for the company.

“Is that a CapEx investment? Is it an OpEx investment? Is it better granularity on product quality and an emphasis on quality for certain products or certain areas within a process that are very tricky and cumbersome?” asks Castro. “Maybe it's a new product that you're introducing and as a result, that process isn't fully stable yet. What is the emphasis in how much we put into that project to stabilise it? Those are the goals that are very coveted from the C-suite down, but they really are reliant from all edges of the supply chain and having that information roll all the way up.”

Enterprise-led manufacturing follows in tune with this exactly.

“The enterprise has to provide guidance to the manufacturing and supply chain teams as a whole,” says Castro. “Where they want to see improvements and how much they're willing to invest in those improvements, what's it worth? How do you build that community up?”

To understand the role that manufacturing plays in an organisation’s reinvestment strategy, you must first understand where it matches up with other locales in the manufacturing environment.

112.6K+ employees worldwide (Sept. 30, 2022)

160 number of countries

22K+ partner companies

245mn+ Subscribers in SAP’s cloud-based user base

“Manufacturing isn't just a single-faceted environment. It's often made up of plants that have been around for a long time, some that were built up by your own organisation, some that came into the organisation through acquisition,” says Castro. “So you see different heritages and mentalities. They have this communal approach for how the plant manager wants to lead that group in the business forward.”

At SAP, being able to take advantage of AI standardisation in a universal way is important.

“You can take and apply these very technical algorithms in order to get information off them. Here's the technology, here are the enablers of data, here are the enablers of AI- and ML-type algorithms that you can use and put together how you see fit,” says Castro. “Then that carries over into the application side, which says, we know we have these technologies, we know that this data is being generated from our transacting processes, so we have our own structured analytics pieces and now we can use these structures to drive our own models to influence our execution process.”

SAP has global partners, as well as local partners, who rely on its technology. When Castro talks about partnerships, he does not put one partner over another.

“We try to keep the community as open as possible,” he says. “We try not to promote one partner over another, because they're all very important to us.”

The openness of SAP and the openness of its software is for its customers to take advantage of, but also for their partners to put their own industry expertise behind.

“It is what gives SAP the power that we have to leverage in our own technologies to leverage partner-led innovation using those technologies to intelligently power our applications.”

“You want it to be like clockwork, where everything always aligns. But we know that that's not always the case”

SAM CASTRO SENIOR DIRECTOR, SOLUTION MANAGEMENT, LOB DIGITAL MANUFACTURING, SAP

By automating and simplifying data management tasks like never before, generative AI technologies will revolutionise how organisations handle their data

WRITTEN BY: MARCUS LAW

A new data quality revolution is underway, powered by models such as ChatGPT that use generative AI and machine learning techniques. Although the use of AI in the data industry has, until now, mainly focused on predictive analytics, today we are entering an era of creative generative AI, where a powerful tool for NLP, data analysis, and automation will shape the future of data management and data quality.

Used in the data industry since the 1950s and 1960s – when they were developed to process and analyse data – early AI programs used rule-based systems, symbolic reasoning, and expert systems to make inferences and generate insights from data. Today, use of AI has accelerated dramatically: according to the Data and Analytics Leadership Annual Executive Survey 2023, 80.5% of data executives indicate that AI/ML will be an area of increased data and analytics investment during 2023 and it will be the highest investment priority for 16.3% of them, followed by data quality for 10.6% of organisations.

“Data quality is a make-or-break aspect of data management,” explains Davide Pelosi, Manager, Solutions Engineering at data integration and data integrity leader Talend. “It ensures that businesses can make informed decisions based on accurate, complete, and consistent information. When data quality is poor, it can lead to errors in decision-making, loss of revenue, and damage to a brand's reputation.

“Fortunately, software solutions providers are leading the charge in innovative data quality tools and techniques that help businesses identify and fix data quality problems quickly and efficiently,” he says. “However, there's still much work to be done. In a recent survey, 97% of the people Talend surveyed indicated they face challenges in using data, and their top concern is ensuring data quality, coming in first with almost half of all respondents (49%). That means there's a massive opportunity for improvement - and the rewards can be huge for businesses that get it right.”

According to a report by Gartner, by 2025, at least 50% of all data management tasks will be automated. Most will be completed using AI/ML-powered automation, such as generative language models, so it's time for old-fashioned data management techniques to move aside, as ChatGPT and other generative language applications promise to shake the market up.

From content creation to development task automation, these technologies are already making waves in the business world – and their impact on data management and data quality initiatives is, quite frankly, exciting.

“By automating and simplifying data management tasks like never before, these technologies promise to revolutionise how organisations handle their data,” Pelosi comments. “The prospect of next-level automation and efficiency means it's easier than ever for businesses to ensure their data's accuracy, completeness, and consistency. Let's take the example of a data quality workflow.


50%: GARTNER PREDICTS OVER HALF OF ALL DATA MANAGEMENT TASKS WILL BE AUTOMATED BY 2025

“BUSINESSES CAN CREATE BUSINESS RULES SIMPLY BY ASKING IN NATURAL LANGUAGE, WITHOUT NEEDING DEVELOPMENT OR COMPLEX UIS”

DAVIDE PELOSI MANAGER, SOLUTIONS ENGINEERING, TALEND

Large language models like GPT-4 or Google’s LaMDA use Natural Language Processing (NLP) to understand and respond to human-generated text inputs in a conversational manner. NLP is a subfield of AI that focuses on enabling computers to process and understand human language, and these models utilise NLP techniques to analyse and interpret the text input they receive – including tasks such as part-of-speech tagging, named entity recognition, sentiment analysis, and language modelling. These NLP techniques help related tools understand the meaning, context, and intent behind the text input, allowing them to generate relevant, coherent responses in a conversational style.

quantify the severity of the issues. Then, based on the assessment results, generative language models can be used to suggest data quality rules and transformations in natural language text that business stakeholders can easily understand.”

From there, these proposed rules can be reviewed and validated by data quality experts and business stakeholders, who may accept or reject them or suggest modifications to better align with their business requirements.

“Businesses can also create additional Business Rules simply by asking in natural language, without needing development or complex UIs,” Pelosi adds. “For example, a business user might ask, ‘Please raise the acceptable age to drink alcohol to 18 and mark all the people not following the rule as not being targeted for the spring marketing campaign’, like we do today with Alexa. Once the rules are accepted, they can be converted into executable code, such as Python or SQL, using a similar, template-based approach.

“Of course, before deploying the code to production, it will need to be tested and validated using a sample of data to ensure the rules are working as expected and the data quality metrics are being met. But, once done, the cleaned data can be used for various downstream tasks, from data analysis and visualisation to machine learning and business intelligence.
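The rule-to-code step described above can be sketched in a few lines. This is a minimal illustration, not Talend's implementation: the `Rule` schema, the `SQL_TEMPLATE` string, and the column names `age` and `spring_campaign_target` are all hypothetical, and the natural-language parsing step is assumed to have already produced the structured rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Structured form of an accepted business rule (hypothetical schema)."""
    field: str    # column the rule checks
    minimum: int  # smallest acceptable value
    flag: str     # column set to False when the rule is violated


# Hypothetical template for rendering an accepted rule as SQL.
# Plain string formatting is used for illustration only; it is not
# safe for untrusted input.
SQL_TEMPLATE = "UPDATE {table} SET {flag} = FALSE WHERE {field} < {minimum};"


def to_sql(rule: Rule, table: str) -> str:
    """Render the rule as a SQL statement using the template."""
    return SQL_TEMPLATE.format(
        table=table, flag=rule.flag, field=rule.field, minimum=rule.minimum
    )


def apply_rule(rule: Rule, records: list[dict]) -> list[dict]:
    """Return copies of the records with the flag cleared for violators."""
    return [
        {**r, rule.flag: r.get(rule.flag, True) and r[rule.field] >= rule.minimum}
        for r in records
    ]


# The rule from the example: minimum age 18, violators excluded from the campaign.
drinking_age = Rule(field="age", minimum=18, flag="spring_campaign_target")

# Validate against a small sample before any production deployment.
sample = [
    {"name": "Ana", "age": 17},
    {"name": "Ben", "age": 18},
    {"name": "Cy", "age": 42},
]
cleaned = apply_rule(drinking_age, sample)
print(to_sql(drinking_age, "customers"))
print(cleaned)
```

Running the rule on a small sample first mirrors the testing step Pelosi describes: the output can be checked by a data quality expert before the generated SQL touches real data.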

“Picture this: the world of data management and quality is about to undergo a significant transformation, and we've got a sneak peek at what's coming. Although the use of generative language models in this field is still in its infancy and is being researched by industry experts, there are already some jaw-dropping research projects and prototypes out there that show the mind-boggling potential of this technology.”

“GENERATIVE LANGUAGE MODELS CAN BE USED TO SUGGEST DATA QUALITY RULES AND TRANSFORMATIONS IN NATURAL LANGUAGE TEXT THAT BUSINESS STAKEHOLDERS CAN EASILY UNDERSTAND”

DAVIDE PELOSI MANAGER, SOLUTIONS ENGINEERING, TALEND

With human-level performance on various professional and academic benchmarks, the latest version of OpenAI’s GPT technology, GPT-4, is highly impressive.

Despite its capabilities, however, GPT-4 has similar limitations to earlier GPT models. “Most importantly, it still is not fully reliable,” the OpenAI team says. “Great care should be taken when using language model outputs, particularly in high-stakes contexts, with the exact protocol (such as human review, grounding with additional context, or avoiding high-stakes uses altogether) matching the needs of a specific use-case.”

Aaron Kalb, Chief Strategy Officer and Co-Founder at Alation, reiterates this final point: tools like GPT should not yet be trusted to advise on important decisions.

“That’s because it’s designed to generate content that simply looks correct with great flexibility and fluency, which creates a false sense of credibility and can result in so-called AI ‘hallucinations’.”

As Kalb – who, when working at Apple, was part of the founding team behind its groundbreaking Siri voice assistant – explains, the authenticity and ease of use that make GPT so alluring are also its most glaring limitation: “Only if and when a GPT model is fed knowledge with metadata context – so essentially contextual data about where it’s located, how trustworthy it is, and whether it is of high quality – can these hallucinations or inaccurate responses be fixed and GPT trusted as an AI advisor.”

“GPT is incredibly impressive in its ability to sound smart. The problem is that it still has no idea what it’s saying. It doesn’t have the knowledge it tries to put into words. It’s just really good at knowing which words ‘feel right’ to come after the words before, since it has effectively read and memorised the whole internet. It often gets the right answer since, for many questions, humanity has collectively posted the answer repeatedly online.”


WRITTEN BY: MARCUS LAW

PRODUCED BY: LEWIS VAUGHAN


With more than 460 million ‘Pinners’ using its platform each month, Pinterest is powered by its data architecture, ML and experimentation platforms

Pinterest is the visual inspiration platform people around the world use to shop for products personalised to their taste, find ideas and crafts to do offline, and discover the most inspiring creators.

Beginning as a tool to help people collect the things they were passionate about online, today more than 460 million people flock to Pinterest’s platform every month to explore and experience billions of ideas.

Central to powering this platform is data engineering on a vast scale – as Dr. Dave Burgess, VP of Data Engineering at Pinterest, explains.

“In Data Engineering we create and run reliable and efficient planet-scale data platforms and services to accelerate innovation at Pinterest and sustain our business,” he says. “We do everything from online data systems to logging data, big data and stream processing platforms, analytics and experimentation platforms, machine learning (ML) platforms, and the Pinterest Developer Platform for external developers to build applications using Pinterest APIs.”

As Burgess explains, one of the biggest challenges in data engineering is improving Pinterest’s developer productivity, which is measured through surveys and the time taken to complete tasks: “For example, the time it takes to train and deploy a new machine learning model or run an experiment.”

From the survey results, a developer productivity NPS (Net Promoter Score) is calculated, from +100 to -100. “When I first started at Pinterest four years ago, our developer productivity NPS was -5 and now it’s +65.”
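As a worked example of the arithmetic behind a score on this scale, the sketch below uses the standard Net Promoter Score definition: respondents rate on a 0 to 10 scale, those answering 9 or 10 count as promoters, those answering 0 to 6 count as detractors, and the score is the percentage of promoters minus the percentage of detractors. The article does not say how Pinterest words its survey or buckets responses, so the ratings here are invented purely for illustration.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """Compute NPS from 0-10 survey ratings (standard definition)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 to 6
    # Percentage of promoters minus percentage of detractors.
    return 100 * (promoters - detractors) / len(ratings)


# Illustrative ratings only: 6 promoters, 2 passives, 2 detractors.
ratings = [10, 9, 9, 8, 7, 6, 10, 9, 3, 10]
score = net_promoter_score(ratings)
print(score)  # 40.0
```

The extremes follow directly: a survey where every respondent is a promoter scores +100, and one where every respondent is a detractor scores -100.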

Since joining the business four years ago, Burgess has overseen the replacement of many of Pinterest’s data engineering systems with the latest in open-source software. “We’ve also built machine learning and experimentation platforms on top of our data platform, increased ML Engineering velocity by 10x and run hundreds of new experiments every week,” he adds. “We’ve also democratised our data so that everyone in the company can use data to make decisions, build applications and experiment. All of this has significantly improved our agility, developer productivity, and the products for our customers.”

2010 Year founded

$2.8bn Revenue in 2022

4K+ Employees around the globe

460m+ Monthly users

‘Under the covers’, according to Burgess, Pinterest is a ‘massive ML machine’: “We use ML to generate recommendations for our home feed, search results, related products, advertising, and also have augmented reality for our Pinners (the affectionate name we call our users) to see makeup on their face.”

Central to Pinterest’s success is its ML platform. Used to power everything from product recommendations and image categorisation to online advertising and spam filtering, Burgess explains that it enables Pinterest’s engineers to be significantly more productive.

“Our ML engineers can iterate much more quickly, building and deploying new ML models in a day, performing offline training to iterate and improve their models offline before testing them with real production traffic, and have production ML systems be automatically monitored and self-healed,” he comments.

“In data engineering, we create and run reliable and efficient planet-scale data platforms and services to accelerate innovation at Pinterest, sustaining our business”

DR. DAVE BURGESS VP DATA ENGINEERING, PINTEREST

How data engineering is powering Pinterest’s global platform

One such tool is Pinterest Lens, a visual search tool allowing users to search for ideas and products using images. The tech trick behind this feature is computer vision, which identifies objects in photos to suggest related content, allowing users to find similar items on Pinterest. These innovations, Burgess explains, are powered by open-source and internal advancements in ML technology.

“Our ML platform is built with a combination of open source ML technologies, like PyTorch, TensorFlow and MLflow, and tech that integrates with our own big data and online systems,” he explains. “That enables us to train ML models and automatically deploy them into serving systems for ML inference.”

Pinterest is an organisation defined by a culture of experimentation. As Burgess describes, its Experimentation Platform encourages experimentation and data-driven decision-making throughout the whole organisation, while also enabling the organisation to test thousands of new ideas.

“Our Experimentation Platform is designed to support rapid iteration and the continuous improvement of our products, and allow us to quickly test and refine new features, user interfaces, and other elements of the user experience. By using data to guide our product development decisions, Pinterest is able to better meet the needs and preferences of our users, as well as increase inspiration.”

Pinterest had long recognised the need to optimise its data storage system. Using HBase, the image sharing platform was carrying a large footprint, with more than 50 clusters and data totalling one petabyte. Enter PingCAP, an enterprise company launched in 2015 by seasoned infrastructure engineers frustrated with the way databases were managed, scaled and maintained.

Seeing no capable solutions on the market, they built TiDB, an advanced, open-source, distributed SQL database for powering modern applications with elastic scaling, real-time analytics and continuous access to data.

“Pinterest’s storage and caching team wanted to find their next-generation, unifying storage system,” explains Liquan Pei, Principal Technologist at PingCAP. “As a NoSQL database, HBase offers a very simple key value interface, but the business logistics are complex. To add new features, Pinterest had to build additional layers on top of HBase, which incurs a very high maintenance workload.” With those motivations in mind, Pinterest evaluated more than 15 solutions and settled on TiDB in 2020. Pei says the reason for Pinterest choosing PingCAP came down to TiDB’s robust technical capabilities and PingCAP’s high-quality enterprise support.

TiDB is set to bring a host of benefits to Pinterest’s day-to-day operations. When carrying out the project, PingCAP evaluated Pinterest’s secondary index services system and, using TiDB, achieved better performance and 80% cost reduction.

“Because of TiDB’s capabilities, we were able to reduce the system from six components to one, greatly reducing the maintenance burden,” adds Pei. In the long run, TiDB’s expressiveness and scalability should also help Pinterest’s IT teams from a practical perspective. Pei continues: “People from Pinterest will enjoy peace of mind because a lot of work is handled by TiDB, so they can focus instead on more impactful work.”

As Pinterest went in search of a next-generation, unifying data storage system, the company found the perfect solution in PingCAP’s TiDB

One of a number of changes made to Pinterest’s data systems involves the building of a next-generation data warehouse and the transition to a Data Mesh: an emerging approach to data architecture that aims to address the challenges of managing large and complex data environments, which was first introduced by Zhamak Dehghani – a software architect at ThoughtWorks – in 2019.

“At a high level, Data Mesh is a decentralised data architecture that emphasises data ownership and autonomy,” Burgess explains. “Rather than having a central data team manage all the data for an organisation, Data Mesh encourages each business unit or team to take ownership of their own data domains, managing their data in a way that is best suited to their needs.”

This approach involves breaking down data into smaller, more manageable domains that can be owned and managed by individual teams. Each team is responsible for the data within their domain, including defining the schema, ensuring data quality, and providing access to other teams that need to use the data.

To enable collaboration and sharing across domains, Pinterest has a catalogue of schemas and metadata stored in Apache DataHub, has standardised its data vocabularies and metrics, has tiered the quality of its data, and has integrated its open-sourced Querybook platform to collaborate and share SQL queries.

“Querybook is an open-source data collaboration platform developed by Pinterest,” Burgess explains. “It has a user-friendly interface for data analysts and engineers to collaborate on data analysis tasks, allowing them to share queries, datasets, and insights with one another. It’s the most popular and highly-rated internal tooling platform at Pinterest.”

As Burgess describes, Querybook also benefits from advanced data analysis capabilities for ad-hoc data analysis, generating visualisations, and even building machine learning models: “We’ve also built a ChatGPT-like interface to automatically generate and execute queries from a text business statement. For example, you could ask it how many daily active users there are on Pinterest over the past month and it will generate a SQL query with the right tables and fields.”
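To make the daily-active-users example concrete, the sketch below shows the kind of SQL such an interface might produce and runs it against a tiny in-memory SQLite database. Everything here is an assumption for illustration: the `user_activity` table, its columns, and the sample rows are hypothetical, the query is hand-written rather than model-generated, and none of it reflects Pinterest's actual schema or Querybook's output.

```python
import sqlite3

# The kind of query a text-to-SQL assistant might generate for
# "how many daily active users were there since a given date?"
GENERATED_SQL = """
SELECT activity_date, COUNT(DISTINCT user_id) AS daily_active_users
FROM user_activity
WHERE activity_date >= :start_date
GROUP BY activity_date
ORDER BY activity_date
"""

# Hypothetical table and sample rows, held in memory for the demo.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE user_activity (user_id INTEGER, activity_date TEXT)")
conn.executemany(
    "INSERT INTO user_activity VALUES (?, ?)",
    [
        (1, "2023-05-01"),
        (2, "2023-05-01"),
        (1, "2023-05-01"),  # same user twice in one day counts once
        (1, "2023-05-02"),
        (3, "2023-04-01"),  # before the start date, so excluded
    ],
)

# ISO-formatted date strings compare correctly as text.
rows = conn.execute(GENERATED_SQL, {"start_date": "2023-05-01"}).fetchall()
conn.close()
print(rows)  # [('2023-05-01', 2), ('2023-05-02', 1)]
```

The hard part of such a tool is choosing "the right tables and fields", which is where the catalogue of schemas and metadata mentioned earlier would come in; the sketch simply assumes that choice has been made.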

“Overall,” Burgess asserts, “Data Mesh represents a new way of thinking about data architecture that helps us to manage our large and complex data environment more effectively, while also fostering greater collaboration and innovation.”

DR. DAVE BURGESS VP DATA ENGINEERING, PINTEREST

“By using data to guide our product development decisions, Pinterest is able to better meet the needs and preferences of our users, while increasing inspiration”

Building a successful partner ecosystem

Pinterest’s Data Engineering department works with a number of third-party partners, including AWS for cloud infrastructure and Percona for MySQL support, along with a number of other companies on open source software such as Netflix, Lyft, Airbnb, AWS, Starburst (for Presto/Trino), StarRocks Technologies, and Preset (for Superset), as well as close collaborations with the open source community.

Another of Pinterest’s partners, PingCAP, has assisted with the deployment of its TiDB system: a distributed SQL database engine that provided users with better data consistency, reducing tail latencies by 30-90% while reducing hardware instance costs by more than 50%.

“We had been using an older version of HBase for many years, which is a scalable open-source, distributed, column-oriented NoSQL database,” Burgess explains. “We’ve made many fixes to HBase over the years to make it fault-tolerant at our scale on AWS, used it for different kinds of use cases, and added a lot of functionality on top.”

“The biggest pain points with this older version of HBase were: the total cost of ownership to maintain and run this; limited functionality, which led to lower engineering productivity and increased application complexity; the lack of data consistency across tables, affecting our users’ experience; and the scalability requirements our internal users wanted to run at.”

This partnership with PingCAP to use TiDB is already reaping benefits, providing better data consistency, a lower total cost of ownership, and more powerful features than the previous solution, HBase.

“As a NewSQL database, TiDB provides a scalable solution in a huge problem space for use cases that need stronger consistency or richer functionalities,” Burgess explains. “It fills in the gap between our existing SQL and NoSQL systems, allowing developers to build storage applications faster without making painful tradeoffs.”

“All these factors combined enable us to more easily build and scale business-critical applications including shopping catalogues, advertising index systems, trust and safety systems and many more.”

“Data Mesh represents a new way of thinking about data architecture that helps us to manage our large and complex data environment more effectively, while also fostering greater collaboration and innovation”

DR. DAVE BURGESS VP DATA ENGINEERING, PINTEREST

“We will make it easier for Pinners to shop for the things they love. Pinners will be able to go from being inspired to making this a reality in their lives”

DR. DAVE BURGESS VP DATA ENGINEERING, PINTEREST

What are Pinterest’s main aims for the next five years?

As Burgess describes, central to Pinterest’s plans for the future is innovating and creating new technologies and products that put Pinners first. “This means enhancing the user experience and driving growth internationally.”

The organisation will also look to improve its advertising products and expand its advertising partnerships with businesses of all sizes, while becoming a more sustainable and socially responsible company. Reducing its environmental impact is part of the latter, as is promoting diversity and inclusion, in addition to supporting causes related to social and environmental issues.

“We will make it easier for Pinners to shop for the things they love. They’ll be able to go from being inspired to making this a reality in their lives,” Burgess adds. “We will also be a more sustainable company, with almost 100% renewable energy for our operations. This includes renewable energy for our offices and data centres.”

With the space moving quickly, making the most of the opportunities presented by developments in ML and AI will also be central to Pinterest’s success going forward.

“This space is changing quickly with the recent advances in Large Language Models, Stable Diffusion, and Transformer models,” Burgess concludes. “We have the ability to generate images and text answers, augment ML models with more data, recognise objects in images, and create an augmented reality. We can also significantly improve our productivity with AI-assisted bots that generate code and answers.”

“There are many applications of this and it’s going to be a game changer.”

AI-powered tools such as behavioural biometrics prevent fraud by detecting high-risk scenarios and helping institutions make better decisions

WRITTEN BY: MARCUS LAW


Already a familiar sight in technology from smartphones to laptops, biometric capabilities are increasingly embedded in everyday life.

With fraud on the rise, biometric technology has become increasingly popular in recent years, with the global biometric market's revenue projected to hit US$83bn by 2027.

Banks worldwide are expected to spend an additional US$31bn on AI embedded in existing systems by 2025 to reduce fraud, according to an IDC report, which also said fraud management featured strongly as a priority for banking executives.

Mitek brings the future to business with patented solutions and intuitive technologies that bridge the physical and digital worlds. The company’s leadership in identity verification, including facial biometrics, image capture technology and ID card verification enables customers to confidently onboard users, verify identities within seconds and strengthen security against cybercrimes.

As Chris Briggs, Global SVP of Identity at Mitek, explains, AI-powered behavioural biometrics prevent fraud by detecting high-risk scenarios and helping institutions make better decisions: “For example, if a customer who logs in twice each month suddenly starts logging in more frequently or if a client who always types their password in copies and pastes the password from a different location, those pattern anomalies signal that these logins carry additional risk.”
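The login-frequency example can be illustrated with a very simple statistical check. This is only a sketch of the general idea of flagging deviations from a customer's own baseline, not Mitek's method: the function name `is_unusual`, the z-score approach, and the threshold of 3 standard deviations are all assumptions, and production systems would combine many more signals.

```python
from statistics import mean, pstdev


def is_unusual(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag a login count that deviates sharply from the customer's baseline.

    history   -- past logins per month for this customer
    current   -- logins observed in the current month
    threshold -- how many standard deviations count as anomalous (assumed)
    """
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # Perfectly regular history: any change at all is a deviation.
        return current != baseline
    return abs(current - baseline) / spread > threshold


# A customer who normally logs in about twice a month.
monthly_logins = [2, 2, 2, 3, 2, 2]

print(is_unusual(monthly_logins, 2))   # False: matches the usual pattern
print(is_unusual(monthly_logins, 15))  # True: sudden spike in logins
```

A flag like this would not block a login on its own; in the framing Briggs gives, it marks the session as carrying additional risk so that the institution can decide how to respond.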

Research released by ID R&D, a provider of AI-based voice and face biometrics and liveness detection technologies, found that humans have greater difficulty identifying images of biometric spoofing attacks compared to computers performing the same task, with machines as much as 10x faster than humans at detecting fake faces.

“Furthermore, AI-powered liveness detection is better than humans at recognising what is real and what is not. It ensures the integrity of a biometric match by distinguishing both identity and liveness, through AI. For example, fraudsters are no longer able to bypass screening processes with photos of a printed image. Also, fraudsters cannot work around liveness, mitigating the threat of identity fraud.”

With Verizon’s 2022 Data Breach Investigations Report finding that stolen password credentials were involved in 61% of all company data breaches last year, password technology is clearly no longer sufficient.

Enter biometric technology, where facial recognition or voice verification is used to verify a customer’s identity. “The software measures the capture to create a baseline data point template or the ‘lock’ that will be the determining data point for future uses,” comments Briggs. “This means that only the matching biometrics, whether it's physiological or behavioural characteristics, provided will confirm a person's identity and unlock the service or account.”

“AI-powered liveness detection is better than humans in recognising what is real and what is not”

While biometric bias can be part of an identity lifecycle, biometric technology itself is not inherently biased – it is the design of biometric technology that can introduce discrimination, explains Mitek CTO Stephen Ritter.

“Biometric systems analyse the physiological or behavioural traits of an individual for the purposes of identity verification and authentication. This is often conducted through fingerprint and facial recognition technology built on machine learning and AI – all powered by algorithms. Bias occurs when the algorithm operates in a discriminatory fashion, which often stems from how the algorithm is built, designed or tested.”

First solution: Testing standards

“First,” Ritter says, “we need a way to evaluate biometric bias. There is currently no standardised, third-party measurement for evaluating demographic bias in biometric technologies.

“The industry needs a way to evaluate the equity and inclusion of biometric technologies. This would give service providers a way to ensure that their solution is equitable, regardless of whether it was built in-house or based on third-party technology from a vendor. This benchmark would provide the public with the information they need to select a service provider that’s more equitable.”

Second solution: Global AI guidelines

“Determining ‘what is right’ goes beyond creating accuracy benchmarks – we also need to create ethical guidelines,” Ritter explains. “Until there are ethical guidelines for the use of this technology, there is no way to understand what is ‘right.’

“AI ethical guidelines would serve to solidify the rights and freedoms of individuals using or subject to data-driven biometric technologies. Until we define what is and is not an ethical use of biometric technology, there is no metric or benchmark that will exist to gauge the quality of technology.”

As Adam Desmond, Sales Director EMEA at OCR Labs, explains, biometric authentication is leading the charge in the growing fight against identity fraud: “Banks are already using AI-powered facial biometrics in conjunction with liveness detection to verify faces and documents.

“But voice technology offers the next level up in powerful and convenient biometrics, with a critical role to play in improving anti-fraud defences. In fact, when combined with face biometrics, voice is one hundred times more powerful than face alone. In our experience, the combination of both voice and face biometrics makes the verification process almost impenetrable by fraudsters.”

Going a step further, behavioural biometrics can detect unusual patterns of behaviour to improve security, using what Briggs describes as a ‘behavioural signature’.

“Behavioural biometrics uses customers’ digital breadcrumb trails, as well as how customers approach online logins, to effectively create a behavioural signature that fraudsters are hard-pressed to emulate. Implementing behavioural pattern analysis into continuous verification frameworks adds an additional layer of security that is difficult even for the most sophisticated fraudsters to crack.”

“When combined with face biometrics, voice is one hundred times more powerful than face alone”

ADAM DESMOND SALES DIRECTOR EMEA, OCR LABS

Biometric technology itself is not inherently biased – it is the design of biometric technology that can introduce discrimination.

But as Briggs explains, to tackle AI bias in identity verification, we first need a way to evaluate biometric bias: “There is currently no standardised, third-party measurement for evaluating demographic bias in biometric technologies.

“The industry needs a way to evaluate the equity and inclusion of biometric technologies. This would give service providers a way to ensure that their solution is equitable, regardless of whether it was built in-house or based on third-party technology from a vendor. This benchmark would provide the public with the information they need to select a service provider that’s more equitable.”

Determining ‘what is right’ goes beyond creating accuracy benchmarks – creating ethical guidelines is essential.

“AI ethical guidelines would solidify the rights and freedoms of individuals using or subject to data-driven biometric technologies,” Briggs explains. “Until we define what is and is not an ethical use of biometric technology, there is no way to understand what is ‘right’.”

As Ricardo Amper, CEO of digital identity company Incode, told Cyber Magazine, trust is critical to the success of AI-powered biometrics.

“But trust is crucial for its success; the impact that biometrics can have can be substantially limited if there is a lack of trust in how the technology is used,” he explained. “Users need to be sure that their data is being used for the purpose it was given and nothing else. This trust is hard to come by, but if gained, then there will be no limit to the impact biometrics can have on our lives.”

As Briggs explains, the rise of transparency will be an important component of maintaining consumer trust in biometrics: “Consumers are becoming increasingly aware of exactly who and/or what technologies they are interacting with and how the data they are providing is being used. Over the next year, we can expect the leading technology innovators to lead by example – setting rules of engagement and standards for collecting and managing data.

“Separately,” Briggs concludes, “to reduce the risk of new cyberthreats, regulators should begin to define a clear private-public framework to address these problems within the private sector. Consumers should believe the regulators have their backs, but fully understand they themselves are responsible for all aspects of their data and identities.”

Barely a decade into the popularisation of electric vehicles, Faraday Future is launching the first ultra-tech luxury electric vehicle: meet the FF 91

Prashant Gulati is the esteemed Vice President of Strategy at Faraday Future. Now in his seventh year with the company, he handles the entirety of its strategic roadmap, which includes overseeing the business plan, mobility initiatives, manufacturing strategy, and directing fundraising efforts.

Fundraising is a key part of Gulati’s role: “My proudest professional achievement has been co-leading the public offering, which helped the company raise more than $1bn through a listing on NASDAQ,” he says.

With over 20 years of success in the technology and automotive industries, Gulati is an accomplished executive with a track record of scaling businesses. He has successfully led a public offering and held leadership roles to drive growth and expansion at several companies globally. In recognition of his industry contributions, Gulati was selected for the coveted Business Insider list of EV Industry Power Players.

Gulati holds a bachelor's degree in computer science and an MBA from the Indian School of Business.

Having grown up in India, Gulati has always had a deep affinity for the environment. This draw of environmental stewardship was heightened when Gulati and his wife were expecting their first child. “I had a sense of urgency to contribute to technology that could help slow climate change and create a better world for our children to grow up in,” he explained.

“I explored numerous fields, including renewable energy, smart grids and energy storage, before focusing on EVs and finding Faraday Future. I immediately clicked with the company as it had such a bold vision and fit the environmentally-focused technological approach I was seeking. My journey into the auto industry has been quite unusual – almost accidental.”

Faraday Future: driving the EV industry

“Climate change is the defining challenge of our time, and transportation is one of the largest contributors to it,” says Gulati. “At the same time, the world cannot slow down. We need more growth, more productivity, more time to commit to our passions. So, at Faraday Future, we've been working on the intersection of these problems – of clean mobility and climate change on one hand, and helping people lead productive, connected lives on the other.”

“We were founded with the mission to help people live, move, and breathe more freely – and that's one of the things that drives a lot of us”

PRASHANT GULATI VICE PRESIDENT OF STRATEGY, FARADAY FUTURE

Faraday Future has taken the first step towards achieving that mission by building the FF 91, which Gulati believes is the most connected, comfortable, and technologically advanced electric car in the world. The company achieved start of production of the FF 91 in California and plans to sell it through a direct sales model in its dual home bases of the US and China.

“The vision of the company is much more than building and selling electric cars, though,” Gulati tells us. “We want to engage our users, build a community, and offer internet and AI services throughout the vehicle lifecycle.”

TITLE: VICE PRESIDENT OF STRATEGY

INDUSTRY: MOTOR VEHICLE MANUFACTURING

LOCATION: UNITED STATES



In an exclusive interview, Jason Murar, President and CEO of JVIS USA, discusses how JVIS is pioneering the EV industry while supporting Faraday Future

JVIS USA is a world-renowned manufacturing leader boasting unrivalled proficiency across both design and engineering. Blending state-of-the-art technologies with vertically-integrated processes, the company guides a concept from the initial sketch to the final product launch, and everything in between.

By prioritising innovation, JVIS has established itself as a leader in the continuous shift towards electric vehicles (EVs). It specialises in delivering technology and components uniquely optimised for EVs, continuously seeking out novel concepts, technologies, and procedures.

JVIS has also played a vital role in supporting Faraday Future (FF) to unveil their vehicles to the market. Jason Murar, President and CEO of JVIS USA, explains:

“We’ve assisted FF in almost every facet, from the initial product development – where we identified key product innovations for their consumers – all the way through to the actual testing and validation, in compliance with automotive standards for those components and the continuous supply of critical components used in their vehicles.”

Armed with impressive electrical solutions, JVIS employs the latest technology, such as capacitive touch surfaces and smart panels.

Murar says: “In the EV market, you see a lot of change with smart panels, which are now highly integrated into both the exterior and interior of vehicles.”

JVIS is committed to leading the development of the EV industry, particularly as it plays a large part in the company’s growth. “Our customers see how we’re taking static panels and turning them into functional panels, giving expanded use of the vehicle,” Murar explains. “These components include sensors and software to increase the user’s experience, such as soft-close doors and ambient lighting.

“JVIS’s continued investment in innovation and growth will enable us to make significant contributions to this dynamic field. If you are looking to turn your product ideas into reality, contact us today to learn more about how JVIS technology can help.”

Faraday Future started from scratch, taking a “clean sheet approach” to building electric cars. Faraday Future’s technology innovations include its proprietary Variable Platform Architecture (VPA), propulsion system, and Internet, Autonomous Driving, and Intelligence (I.A.I.) systems. The company has approximately 660 patents across these areas.

“So far, the company has invested billions of dollars in creating industry-leading product and technology,” recounts Gulati. “Building cars is a capital-intensive business, and we've had our ups and downs.

“The way I would describe the ethos of the company is one of perseverance and tenacity; one of never, ever giving up in the service of our mission.”

The FF 91 has been designed as an all-ability car, possessing the handling of a sedan, the space, reliability, and comfort of an SUV, and the top-level performance and driving dynamics of a sports car.

“A lot of people liken it to a Rolls-Royce, with increased comfort, connectivity, and performance,” Gulati tells us – and the statistics certainly speak for themselves.

“There's a lot that has gone into developing and thinking about the design, driving experience, and the overall user experience,” says Gulati, who is incredibly passionate about the vehicle.

“There is no electric car in our segment right now – competing with Rolls-Royce, Bentley, Maybach – so we're quite excited about being the first EV of our kind, and we think it's going to redefine industry standards. The first ultra-tech luxury electric vehicle.”

Faraday Future: the leader in luxury electric vehicles WATCH NOW


“We are building what we believe is the most connected, most comfortable, most technologically advanced electric car in the world: we call it the FF 91”

PRASHANT GULATI VICE PRESIDENT OF STRATEGY, FARADAY FUTURE

The technology under the bonnet

Faraday Future describes the technology behind the FF 91 within three pillars:

1. Variable Platform Architecture

“Think of it like a Lego,” Gulati explains: “You can change the size of the platform and build different vehicles of different sizes for different purposes. You can put different motor and powertrain configurations. This skateboard-like platform approach enables us to build multiple vehicles on the same platform, reduce time to cost, time to market, and more.”

2. In-house Propulsion Technology

Faraday Future has developed a proprietary inverter design and propulsion system. The drive units are fully integrated with the inverter, transmission, and control unit to enable leading horsepower, efficiency, and acceleration.

3. Internet, Autonomous Driving, and Intelligence (I.A.I.)

“Our software, internet, and AI development is the most important of these pillars,” says Gulati. “That's where the company shines compared to all of our competition.” Faraday Future’s commitment to these technologies supports a seamless user experience in the car, in both practical and luxury terms. One example is advanced voice control, which manages complex queries without distracting the driver or passengers.

• 3 motors

• 1,050 horsepower

• 0 to 60 in 2.27 seconds

• Class-leading EPA- and CARB-certified range of 381 miles

• Charge Time (20-80%): 25 min @ 200kW DC Fast Charge

• Overall length: 5,250mm / 206.7in

• Up to three 5G modems and a newly developed operating system, allowing customers to use apps and stay fully connected

• Industry-leading 49 inches of rear seat legroom

• 60-degree rear seat recline in NASA-inspired, zero-gravity seats

Faraday Future has chosen to develop its own technology rather than rely on off-the-shelf parts. “Some companies basically source parts and build a car, essentially becoming assemblers,” explains Gulati. “At Faraday Future, we've invested a lot of time and capital on creating our own technology.”

Faraday Future has strong partnerships with, and relies on, key suppliers to ensure the FF 91 is completed in a timely manner and with the high quality its users will demand. The company was affected to a lesser degree by supply chain issues during the pandemic because of planned low volumes at launch, and because many of the parts are created by suppliers uniquely for the FF 91, so the company does not compete with others for them. Notwithstanding, the company has taken lessons from that period and become even more diligent about planning and ordering across the supply chain.

In terms of scale, Faraday Future employs 590 people across the US and China, and with its production plant in California the company will ultimately be capable of producing approximately 10,000 vehicles per year. The current manufacturing setup is asset-light, and the supply chain is built with expansion in mind: the company has a contract manufacturing partner it can call on if needed for this anticipated expansion, allowing early-stage flexibility.

“The company's DNA is completely global,” Gulati says: “We've designed and defined the product with an abundance of cutting-edge technology to cater to a global audience.”

The company is headquartered in Los Angeles, where much of the engineering and manufacturing takes place, but it also has roots in China – home to a Faraday Future engineering centre. The FF 91 will be initially launched in the US, followed by China, before being launched globally.

“Looking to the next 18 months, we are very focused on the FF 91 production, sales, and deliveries,” Gulati explains. “We're going to be scaling our operation, ramping up supply chain – that's the key focus area making the FF 91 successful.”

“Climate change is the defining challenge of our time, and transportation is one of the largest contributors to it”

PRASHANT GULATI VICE PRESIDENT OF STRATEGY, FARADAY FUTURE

Gulati believes that the value chain disruption we’re seeing now is going to continue, not only accelerating on the product and technology front, but on the sales side, too, as industry sales volumes increase.

“In the distribution model, quite a few OEMs have expressed a desire to sell directly to customers,” Gulati explains.

“For the industry, this transition away from ICE (Internal Combustion Engine) vehicles is going to impact sales economics. Today, auto dealers mostly earn margins from selling auto parts and services, and financial services, and that's going to change. Maintenance and parts and services replacement in EVs is far less than ICE vehicles. So, we expect to see changes on the business side as well as on the product and technology side.”

Despite this value chain disruption and an anticipated global economic slowdown, Gulati doesn’t forecast a slowdown for Faraday Future, citing research from McKinsey that shows the luxury vehicle market (vehicles priced at $150,000 and above) is projected to grow significantly over the next 10 years.

Looking to the longer-term future, Faraday Future has ensured built-in capability to add further models to its Variable Platform Architecture.

“We have plans for our next model – we call it the FF 81 – which will share 60% commonality of parts with the FF 91,” Gulati says. “Although, of course, such future developments are dependent on a number of things, including fundraising.”

To promote long-term growth and success within the electric vehicle industry, Gulati says the focus is – and should remain – on batteries, reducing cost of materials, recycling, and developing new chemistries.

“Since 2010, the price of batteries has dropped significantly – until about 2019,” Gulati recounts. “In the last few years, battery and raw material prices have risen again because of supply chain disruptions, due to the pandemic.”

The second area Gulati expects the industry to focus on is EV infrastructure.

“We are seeing significant regulatory support to promote the transition to electric vehicles,” he says. “Governments are allocating a lot of capital and policy support towards education, charging infrastructure, and local sourcing of electric cars, so we anticipate that will continue.”

The future is on its way – and it looks electric.

“We need more growth, more productivity, and more time to commit to our passions”

PRASHANT GULATI VICE PRESIDENT OF STRATEGY, FARADAY FUTURE

Data is the lifeblood of smart cities like Barcelona, transforming everything from shopping and transportation to autonomous driving and augmented reality

WRITTEN BY: MARCUS LAW

By 2050, two-thirds of people globally are expected to live in cities. As urbanisation continues to rise, cities are facing new challenges that require innovative solutions.

Enter the Internet of Things (IoT), a network of interconnected devices that collect, analyse and share data. IoT is the backbone of smart cities, enabling the seamless integration of technology into urban infrastructure and services.

A modern network must be able to respond easily, quickly and flexibly to the growing needs of today’s digital business. It must provide visibility and control of applications, users and devices on and off the network, and intelligently direct traffic across the WAN. It must be scalable and automate processes to provide new, innovative services. It must support IoT devices and utilize state-of-the-art technologies such as real-time analytics, ML and AI. And all of this must be provided with maximum security and minimum cost.

This is the power that the integration of two cloud-managed platforms, Cisco Meraki and Cisco Umbrella, brings. This integration binds together the best of breed in cloud-managed networking and security. cisco.com

And just what are smart cities? Think about your phone and its capabilities, but on a city-wide connectivity scale. In essence, they are crucial for addressing urban challenges, with the IoT playing a pivotal role in their success – from water and electricity, traffic flow, and parking to refuse removal and sewerage – creating more effective, efficient management.

At the heart of the smart city is the smart sensor. Distributed in the tens of thousands across smart city test neighbourhoods, or even entire boroughs, IoT sensors monitor everything from traffic patterns and footfall to utility usage and emissions.

If local governments are curiously eyeing smart city capabilities in their own backyard, they need to look at deploying sensors while making use of those that already exist, harnessing the vital data required to catapult their cities into the future.

In smart city environments with IoT, everything from vehicles to hand-held devices is constantly interacting with one another, generating not only a large volume of data, but also different variants of datasets.

As Tikiri Wanduragala, Senior Consultant for Infrastructure Solutions at Lenovo, explains, this data is the lifeblood of smart cities. “It flows directly between the cameras, sensors and meters that connect our community services, from transport systems to water networks.

Smart cities are powered by cutting-edge technologies that transform urban infrastructure and services. These include Internet of Things (IoT) devices that collect and analyse data to optimise resource allocation, improve transportation systems, and enhance public safety.