GABRIEL STRAUB

THE SMARTEST MINDS IN DATA SCIENCE & AI

Expect smart thinking and insights from leaders and academics in data science and AI as they explore how their research can scale into broader industry applications.

Key Principles for Scaling AI in Enterprise: Leadership Lessons with Walid Mehanna

Maximising the Impact of your Data and AI Consulting Projects by Christoph Sporleder

How AI is Reshaping Startup Dynamics and VC Strategies by KP Reddy

Helping you to expand your knowledge and enhance your career.

Hear the latest podcast over on

KP REDDY

WALID MEHANNA

CHRISTOPH SPORLEDER

CONTRIBUTORS

Gabriel Straub

Christoph Sporleder

Shahin Shahkarami

Guillaume Desachy

Ben Taylor

Nicole Janeway Bills

Francesco Gadaleta

Jhanani Ramesh

Rohan Sharma

Gustavo Polleti

Anthony Alcaraz

Philipp Diesinger

Gabriell Fritsche-Máté

Andreas Thomik

Stefan Stefanov

Martin Musiol

Charmaine Baker

Glenn Hapgood

EDITOR

Damien Deighan

DESIGN

Imtiaz Deighan imtiaz@datasciencetalent.co.uk

Data & AI Magazine is published quarterly by Data Science Talent Ltd, Whitebridge Estate, Whitebridge Lane, Stone, Staffordshire, ST15 8LQ, UK. Access a digital copy of the magazine at datasciencetalent.co.uk/media.

DISCLAIMER

The views and content expressed in Data & AI Magazine reflect the opinions of the author(s) and do not necessarily reflect the views of the magazine, Data Science Talent Ltd, or its staff. All published material is done so in good faith. All rights reserved, product, logo, brands and any other trademarks featured within Data & AI Magazine are the property of their respective trademark holders. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form by means of mechanical, electronic, photocopying, recording or otherwise without prior written permission. Data Science Talent Ltd cannot guarantee and accepts no liability for any loss or damage of any kind caused by this magazine for the accuracy of claims made by the advertisers.

WELCOME TO ISSUE 9 OF DATA & AI MAGAZINE

YOUR QUARTERLY INTO THE EVOLVING WORLD OF ENTERPRISE DATA SCIENCE AND ARTIFICIAL INTELLIGENCE

IN THIS ISSUE

Our articles discuss both the challenges and innovations within the data and AI space, highlighted by our cover story featuring Gabriel Straub of Ocado Technology. Gabriel provides an in-depth look at why advanced technology and AI are pivotal for the future of online grocery shopping. His insights show how AI continues to reshape industries far and wide.

Alongside our cover story, we feature a diverse lineup of thoughtprovoking articles. Chris Sporleder from Rewire discusses the impact of strategic data and AI consulting on transforming businesses. Shahin Shahkarami at IKEA explores the company’s journey through AI transformation, emphasising practical applications in retail.

For those looking to deepen their leadership and knowledge base, Nicole Janeway Bills offers a curated list of five essential reads for digital leaders, empowering our readers with the tools to lead in a digitised economy.

On the governance front, Rohan Sharma provides a practical guide for investors navigating the complex landscape of AI governance, a mustread for stakeholders aiming to balance innovation with responsibility. Meanwhile, Martin Musiol engages us with a conversation about the evolution of generative AI – from generative adversarial networks (GANs) to multi-agent systems – offering a glimpse into the future of AI technology.

Each article, while unique in its focus, threads a common narrative about the transformative power of AI and the critical need for an informed approach to technology adoption and integration in various sectors.

AN ESSENTIAL ENTERPRISE AI EVENT: REWIRE LIVE (FRANKFURT)

Thursday 10th April 2025 – Dealing with AI’s Last Mile Problem

We are thrilled to co-promote an event with Rewire, designed to connect industry leaders, innovators, and thinkers in the field of data & AI. This must-attend gathering promises to be at the forefront of solving AI’s last mile problem. It will be an invaluable opportunity to network, share ideas, and learn from some of the brightest minds in the industry.

Rewire LIVE 2025 is set to be a pivotal day for corporate executives and business leaders who want to address critical aspects of AI implementation in the corporate sphere. Focusing particularly on ‘the last mile’ of driving scale and adoption to truly harness the transformative potential of data & AI.

Why attend?

The landscape of data & AI is fast-evolving, and despite the substantial promise it holds, the actual deployment and scaling of AI within organisations presents numerous challenges. From technical integration hurdles to ethical and regulatory considerations, the path to effective AI implementation is complex.

Rewire LIVE aims to cut through the AI hype to provide clear guidance on these challenges, offering a mix of technical expertise and strategic guidance from a panel of distinguished speakers and experts from both corporate and academic backgrounds.

Who should attend?

This event is specifically designed for senior executives: CEOs, CTOs, heads of departments, and directors across technology, strategy, analytics, and data-intensive sectors like supply chain and marketing. There will be the chance to network with peers, gain insights from industry leaders, and bring back knowledge that can shape your organisation’s future.

Registration is simple:

Visit Rewire LIVE 2025 registration to express your interest.

Our events team will review your application and confirm your place. Given the strategic importance of this event, ensuring your seat is crucial for those who wish to lead in the AI-driven corporate world.

Thank you for your continued engagement with Data & AI Magazine. We hope this issue enlightens and inspires you, and we look forward to meeting you in Frankfurt for what promises to be a pivotal gathering.

Damien Deighan Editor

WHY ONLINE GROCERY NEEDS ADVANCED TECHNOLOGY

When Open AI launched Chat GPT in November 2022, artificial intelligence suddenly shot to the top of the agenda in every boardroom. LinkedIn was flooded with hot takes about the hundreds of ways that AI would change the way we live, work and interact. Many media outlets portrayed AI as a brand-new technology that had come out of nowhere and taken us all by surprise.

But in the online grocery space, AI isn’t as new as you may think. In fact, at Ocado Technology, it’s a crucial element of the e-commerce, automation and logistics technology that we develop and deploy for retailers all over the world. Shoppers have been experiencing the benefits of AI working behind the scenes for many years without even realising.

GABRIEL STRAUB joined Ocado Technology in 2020, bringing over 10 years’ experience in leading data teams and helping organisations realise the value of their data. At Ocado Technology, his role as Chief Data Officer is to help the organisation take advantage of data and machine learning so that we can best serve our retail partners and their customers.

Gabriel is also a guest lecturer at London Business School and an Independent Commissioner at the UK’s Geospatial Commission. He has advised startups and VCs on data and machine learning strategies. Before Ocado, Gabriel held senior data roles at the BBC, Not On The High Street and Tesco.

Gabriel was named AI Expert of the Year by Digital Leaders and one of the top people in data by both DataIQ and HotTopics. Gabriel has an MA in Mathematics from Cambridge and an MBA from London Business School.

So why the hype now?

Wide-scale and free access to generative AI tools such as ChatGPT is definitely an exciting development. If your job involves content creation or processing large amounts of documents, I’m sure you will have experienced a change in how you do things.

But for the online grocery space, generative AI is just one tool in our toolbox. We use a vast array of AI techniques to solve business problems for our clients. From deep reinforcement learning to train robot arms to pick and pack thousands of different items, to optimisation algorithms which can make sure deliveries arrive on time using the fewest number of vans.

Wait, why do you need AI and robotics to deliver my tomatoes?

When Ocado was founded, many believed that the vision of building a feasible online grocery service was an almost unsolvable challenge because of its complexity.

Handling tens of thousands of orders that contain over 50 items every day is complex enough as it stands. When you combine this with the fact that the products need to be in and out of the warehouse very fast for freshness, and you need to arrive perfectly on time so that your customer can refrigerate their groceries, the logistics challenge gets bigger and bigger.

Without getting smart with automation, data and AI early on, we were never going to achieve a viable model. We quickly found that technology from companies who specialise in the less complex general merchandise space could not be repurposed for the online grocery use case. The tech simply couldn’t handle the need for managing different temperature regimes and perishable goods. There was no template for what we wanted, and no off-the-shelf product that would help us, so we built the technology ourselves.

A technology-powered online grocery operation creates a lot of data. Shoppers log millions of data points as they interact with the webshop, and every shipment that comes in from the supply chain, every movement of goods around the warehouse, and every move our logistics vehicles make create flows of valuable information.

they can offer a much wider range of products to their customers. For example, Ocado.com in the UK has a range of around 50,000 items. This means, we need to make it simple to navigate that large range for customers. We don’t want shoppers to ‘get lost’ in the virtual aisles.

On average, 70% of shoppers abandon their shop after adding products to their baskets (source: baymard.com/lists/cart-abandonment-rate). AI is critical to overcoming the friction that leads shoppers to abandon. We need to make their lives easy.

For example, many shoppers use our AI-based ‘instant shop’ that automatically populates a whole order with items based on their data and history. It means a shopper can go from login to checkout in just a few clicks.

We also use AI to inspire shoppers with ML features that recreate the fun of spontaneously grabbing something new in the supermarket with personalised recommendations.

An example of this is flash sales, where AI technology triggers price drop sales of products with a risk of purge advertised to shoppers. This not only helps us reduce waste, but helps inspire our customers to try (and continue with!) new products. It turns out new-to-you products bought through flash sales have a significant chance of being repurchased in following shops so clearly it’s a proposition that shoppers benefit from for inspiration.

We also use AI in our search function, where our models are designed to improve the accuracy of matching, which massively increases the regularity of preferred brands and products appearing at the top of customers’ search results. AI models can suggest search terms that closely align with a customer’s search history, enabling them to find what they’re looking for without needing to type a letter.

Thanks to AI, every single customer has a different experience and interacts with a unique storefront tailored to their needs.

Thanks to AI, every single customer has a different experience and interacts with a unique storefront tailored to their needs.

We use artificial intelligence to find patterns, insights and optimisations in this data that allow us to improve the customer experience and make efficiency gains.

How does AI help shoppers fill baskets quickly and inspire customers to try new products?

Using large centralised fulfilment centres that store products in giant grid structures, means that the retailers we work with don’t have the same capacity constraints that a supermarket store has. This means

What other factors aside from the unique storefront play an important part in the customer experience?

Customers want their shop to arrive in full, at the right time and all for a competitive price. One of the consumer frustrations with online grocery shopping is that when their order arrives, the retailer has made substitutions that they are not happy with.

For us, this isn’t an acceptable customer experience. We set up data flows through our platform so that we know exactly what’s in our warehouse, when new stock will be arriving, and which supplier orders can still be modified. That means we only show customers products on the webshop that will be in stock at the time that their order is prepared so we rarely need to make substitutions.

But we can only offer a top-level service if the model is operationally efficient. AI plays a crucial role in eliminating waste and driving down cost.

Can you explain how AI allows retailers to order exactly the right amount of stock?

Deep learning models are able to forecast what customers will buy. Retailers have found that our deep learning models are up to 50% more accurate than their traditional forecasting systems. The AI recommends the optimal amount of stock to buy from suppliers to guarantee availability of products for shoppers without creating waste from unsold products. For our partners using our supply chain AI, over 95% of stock is ordered automatically without the need for any manual intervention.

The models benefit from the latest developments in transformer models, which are at the heart of the current Iarge language model and generative AI phenomenon. This innovation means that in the UK, only around 0.7% of stock we order goes unsold compared to an industry average of 2-3%.

But once we have inbounded our stock from the supply chain, we need to get it in and out of the warehouse in the most efficient manner.

Can you talk about how Ocado’s AI enables highly efficient picking and packing of orders?

In our automated customer fulfilment centres that we provide for retailers worldwide, hundreds of retrieval robots whizz around giant storage grids to fetch containers containing the items that people have ordered.

These ‘bots’ are orchestrated by an AI ‘air traffic control’, which controls every move the bots make. AI helps make decisions like: which stock storage box to use for the next order, how to efficiently path the bots around each other, and where to put boxes down once we’ve finished picking from them.

Compared to a typical manual warehouse, the storage and retrieval bot system and advanced automation

Compared to a typical manual warehouse, the storage and retrieval bot system and advanced automation reduces the end-to-end labour time to complete one order from over an hour to under 10 mins.

reduces the end-to-end labour time to complete one order from over an hour to under 10 mins.

What role does computer vision play in improving your engineering operations?

Our fleets of bots travel tens of millions of km every year, and occasional mechanical wear can mean there is a failure. AI plays a crucial role in minimising the impact. If a bot ceases to provide updates to the air traffic control system, we use computer vision on the 360-degree fisheye cameras above the grid to locate the bot for maintenance attention. This makes our engineering operations more efficient.

Can you give an overview of how Ocado’s robotic packing of shopping bags works without the need for human touch?

The bots deposit stock next to robot arms to be picked into customer bags. Our retail partners can stock up to 50,000 different products each. Robot arms need to be

able to deal with a huge variety of weight, size, shape, surface, fragility and packaging.

We use the latest AI and machine vision algorithms to solve this challenge. The system takes inputs from the cameras and sensors on the robot and outputs the motor-control instructions to move the robotic arm and the end effector.

We used imitation learning techniques where human pilots demonstrate how picking and packing should be done with a controller and the robot arms learn from this data, generalise, and improve over time.

Now that robotic pick is live in operations, it’s supervised by remote teleoperation crews who can assist the arm in identifying grasp points for unfamiliar products or scenarios. If the AI gets ‘stuck’ it calls for a person to assist remotely. Every time we use teleoperation we gather data which, combined with the information of the failed pick attempt, helps train the AI to handle similar scenarios in the future.

How are you using technology to optimise the delivery journey on the roads?

Unlike more traditional courier services, grocery orders cross multiple temperature regimes, take up significant space, and are expected within

narrow time windows. As a result, a grocery delivery van may deliver 20 or so orders in a shift, whereas a van delivering parcels might deliver hundreds. This makes the labour cost in the last mile far more significant on a per-order basis.

Minimising delivery costs is therefore vital to achieve the margins we want. Without AI, it would be difficult for us to deliver groceries to your door on time for the right cost.

Firstly, AI helps us minimise the number of bags we use, and therefore vans and routes. 3D packing algorithms mean we can densely fill shopping bags so they fit into a lower number of vans, whilst ensuring bags aren’t too full so fragile items might be damaged.

Our AI delivery optimiser calculates the optimal distribution of all of our orders onto available vans taking into account the order volume, weight, availability of vans and drivers and much more. It also plans the optimum path for all of these vans to take in order to minimise fuel consumption, number of drivers needed, and ensure everything gets delivered on time.

It takes into account traffic conditions, and learns from past drive time and stop time data for every road and every customer address.

Data from vans is used both for the real-time monitoring of the routes and for feeding into our routing systems so that the routes we drive tomorrow will be even better than the ones we drove today – e.g. the best place to park on a Wednesday afternoon during school term time may be very different to the best location on a Sunday morning.

How important is human critical thinking in your approach to deploying AI?

AI is embedded throughout our tech estate. We have over 130 distinct AI use cases across Ocado. Many of our robotics products deal with highly unstructured environments. We can’t pre-programme a robot to deal with the many millions of scenarios it could be faced with. Due to the high number of unknown factors and almost infinitely variable environments, we must have AI systems based on data that can learn, adapt and generalise.

But any AI we use is always augmented with great engineering in other crafts. ML engineers and data scientists work alongside mechanical engineers, controls, mechatronics, electronics and software engineering. Our data team is embedded in our product streams, rather than being a totally separate function.

Despite our wide-scale use of AI, our mantra is to always choose the right tools for the job. We need to remain critical when we use this technology. AI is not the right approach for every product domain. There are many areas where we will always have hardcoded software systems.

We take a similar approach with the latest generative AI tools. Generative AI, or any sophisticated AI

approach, can be very exciting, but there is only a small subset of business problems that are best solved by very sophisticated techniques.

More often, the most important thing is good data and an understanding of what it means. It might be very trendy to develop complex algorithms to achieve certain tasks, but often there’s a simpler maths approach which will be just as effective. And likewise, small, faster and cheap-to-run machine learning models beat massive parameter large language models at a lot of tasks.

What is Ocado’s approach to ensuring responsible decisions are made with AI technology?

With so much AI technology embedded across our platform, it’s critical that we develop and deploy these technologies as robustly as possible. We want our partners and their users to feel confident about using our AI and robotics products and services. They want to know we’ve thought about performance, safety and privacy. This is even more important as we scale internationally and support new businesses beyond the online grocery space.

This is why we have committed to design and deploy AI systems responsibly – always considering fairness, accountability, transparency and explicability throughout the AI lifecycle. This will ensure systems function as intended and prevent mistakes, meaning responsible AI is essentially successful AI.

CERTAIN

If you could build precise and explainable AI models without risk of mistakes, what would you build? Rainbird solves the limitations of generative AI for high-stakes applications.

CHRISTOPH SPORLEDER

CHRIS is a managing partner at Rewire, a data and AI consulting firm with offices in Amsterdam and Heidelberg. He is responsible for developing client relationships in Germany, Austria and Switzerland. Rewire’s DACH operations provide end-to-end data and AI transformation, from strategy through implementation and change management.

MAXIMISING THE IMPACT OF YOUR DATA & AI CONSULTING PROJECTS

FROM STRATEGY TO EXECUTION: A GUIDE FOR ENTERPRISE LEADERS

Could you share how you got into consulting?

My journey started in data and analytics. My first role was with a utility company where I analysed mainframe data and even helped calculate the company’s first wind park. I was fascinated by analytics, and that passion led me to SAS, a leading data analytics company, where I was involved in building out their professional services division, which ultimately led me into consulting.

With the advent of cloud computing, the consulting landscape shifted dramatically. Previously, we focused on explaining the value of data analytics, but cloud technology has enabled us to operationalise insights in ways that weren’t previously possible. This evolution has been incredibly exciting.

How did you communicate the value of data back when it was relatively new?

The internet was just emerging then, but many industries were already familiar with using data, such as insurance actuarial work. For those in other sectors, we emphasised modernisation and the potential of new algorithms to enhance insights. But with today’s GenAI advancements, there’s far less emphasis on convincing people of data’s value – now the focus is on how to execute and achieve impact.

How did you experience the shift from local to cloud computing?

Cloud computing was a major leap, especially in

handling and integrating data from diverse sources. Thistransformation was also driven by IoT developments, connecting millions of devices and greatly expanding the data we could analyse, from customer behaviours to complex production processes.

In the past two decades, what aspects of enterprise data functions haven’t changed much?

Data management remains a challenge. We’ve evolved from data warehouses – low-tech but high-governance – to data lakes, which brought high-tech but low-governance, often creating data chaos. Now, with concepts like data mesh, we’re seeing a more balanced approach that combines technology with governance. Yet, engaging business leaders in data management is still an ongoing challenge.

How do you see the role of consulting in the data and AI space?

You have to differentiate between types of consulting services – there isn’t just one type, but many different flavours and shapes. It’s a spectrum:

On one end , you have the ‘body leasers’ – you order three units of data scientists, and that’s exactly what you get, but then you have to direct their work. On the other end , you have strategy consulting firms – the peak of the mountain, high-performing and fastmoving consulting firms.

In between , you have system integrators who lean more toward the first category, and boutique firms that position themselves in the middle or closer to the strategy end.

You need to identify the right type of consulting for your current state and engage with the appropriate firm. It’s rarely one provider from start to finish – you’ll likely need different types of services at different points in your maturity journey.

Choosing the right consulting partner is crucial. For instance, strategy consultants excel at high-speed, impactful short-term engagements but may require substantial internal resources to keep pace. In contrast, system integrators operate within very specific project parameters, and body leasing can lead to dependency on individual freelancers. Each approach has its place, but the fit depends on the project’s goals.

Do you view strategy as the starting point for data and AI initiatives?

Yes, I do. However, you need to be aware that data and AI initiatives can originate from different points in organisations:

Sometimes you have an enthusiastic board member who believes in it unconditionally. That’s a brilliant starting point, and your strategy becomes more operational since you don’t need to convince anyone at the board level. You can focus on implementation and achieving impact. If you don’t have that convinced board member, you first need to:

● Analyse your value pools.

● Determine the potential commercial impact.

● Calculate the transformation costs. This requires a different type of consulting service, typically a shorter engagement, but it’s necessary work to gain board-level commitment.

How often does misalignment occur between the developed solution and business goals?

Yes, that does happen and it’s not so much that the overall objectives fall apart, but rather the specific scoping of the solution that can be problematic.

What often happens is that there’s a complex challenge, like developing a ‘copilot’ based on generative AI. A copilot is typically not a single use case, but rather multiple use cases bundled together. Deciding how to slice and scope that effort requires very conscious choices:

● Do I go for maximum immediate impact?

● Do I prioritise speed of deployment?

● Do I aim for breadth in terms of how many functions, processes, or assets the copilot should address?

● Or do I take a more strategic, scaled approach – build the first use case to help develop the necessary infrastructure and data products, which then accelerates subsequent use cases?

These design choices are critical, and sometimes the solution being developed can end up not fully aligned with the organisation’s strategic direction. It’s very important to identify and fix any such misalignment very early on.

With today’s GenAI advancements, there’s far less emphasis on convincing people of data’s value – now the focus is on how to execute and achieve impact.

While less common in data and AI engagements compared to other types of consulting work, this challenge can still arise. The key is to maintain tight alignment between the solution being built and the overarching business goals throughout the project.

What common challenges do organisations face early in their data and AI journeys?

There are very common challenges, particularly around the expectation versus reality gap. The first step in capability building is understanding the art of the possible. There are a lot of expectations, especially now with GenAI, about what it can and cannot do. Spending time understanding the possibilities is crucial to avoid mismatched expectations.

A second major challenge is that organisations frequently underestimate the infrastructure and data management requirements needed to produce an AI use case. Transformations can fail in different directions: Some organisations overshoot by investing heavily in technology and data first. I’ve seen programs spend three years working on data without deploying a single use case. On the other extreme, some programs deploy three use cases without considering how to build a common data foundation or infrastructure.

Both extremes should be avoided. The right approach is to think about all dimensions and develop your infrastructure, data foundation, and organisational capabilities alongside the use cases that create impact. Recently, I posted about the end of the ‘lighthouses’ phenomenon. We’ve seen many organisations building exciting lighthouse projects by different groups, but with no common foundation or connection between them. While individual lighthouses may be stable, they don’t help the organisation scale data and AI because there’s no scaling plan behind them.

Once the partner is chosen, what can be done to ensure effective collaboration between the consulting company and the organisation?

There are several key considerations:

1. When choosing your partner, think about common objectives. We increasingly see performance-based coupling between organisations and consulting partners, working toward shared goals with remuneration tied to reaching these objectives.

2. Establish proper engagement governance with regular feedback loops between the client and consulting organisations.

3. Understand that consulting organisations also have an obligation to develop their people. On longerterm engagements, there will be some rotation of

personnel that needs careful planning. Friction can arise when consultants need to move on after 4-6 months to avoid task repetition and continue their professional growth. This requires open conversations and planning.

Are there procurement or RFP process improvements that would benefit AI consulting projects to ensure they are set up correctly? This is specifically about data and AI rather than general procurement. Data and AI projects have significant dynamics across different dimensions, similar to the early days of online marketing – you often end up somewhere different from where you initially planned. This doesn’t mean you’re not creating impact; you may just take a different path to achieve it.

For procurement, this means you shouldn’t engage data and AI consultancies in the traditional way you would work with a system integrator. Strict, tight statements of work can actually hinder your success.

What other factors are crucial for a successful consulting-client collaboration?

You need alignment across three domains: business, leadership, and technology. Business leaders provide insight into core needs, leadership drives organisational changes, and the tech team delivers solutions. These elements together form a strong foundation for impactful AI projects.

How important is leadership involvement in AI projects?

Essential. Successful AI initiatives integrate AI into processes, often involving workflow changes, capability-building, and sometimes even role redefinitions. Leadership commitment is key to driving these changes. You cannot implement data and AI at scale without senior leadership commitment. Without it, initiatives will remain siloed or isolated in specific departments. Everyone says AI will change the world and transform every process, but that will only happen if your most senior leadership not only supports it, but is convinced that this is a new enterprise capability.

Everyone says AI will change the world and transform every process, but that will only happen if your most senior leadership not only supports it, but is convinced that this is a new enterprise capability.

Looking forward, what trends do you see in the data and AI field?

I see a few key trends emerging:

INFRASTRUCTURE AND TECHNOLOGY:

● The hyperscalers are dominating the infrastructure space, but architectures are becoming more componentised. There’s rapid innovation in data and AI tools, so organisations need the ability to easily swap out components rather than being locked into specific solutions.

INTEGRATING CONVENTIONAL AI AND GENERATIVE AI (AGENT NETWORKS):

There will be a lot of discussion around how to integrate conventional AI models (e.g., for risk scoring) with the new wave of generative AI models (language, image, video). Creating end-to-end processes that leverage both will be an important next step.

DATA MESH:

● The focus is shifting from AI as a separate tool on top of processes to AI becoming an embedded, natural step within processes. This allows for more seamless integration and automation of simple operational decisions.

What major pitfalls should organisations avoid in this space?

Many still underestimate the infrastructure needed to support AI. Additionally, trying to shortcut foundational work in data management or infrastructure will hinder sustainable AI growth.

Why should data & AI practitioners consider consulting as a career?

Consulting offers unparalleled learning opportunities. Every client and project is unique, exposing you to varied business challenges and technologies. It’s an excellent choice for anyone seeking continuous growth and diverse experiences.

While a full data mesh implementation can be highly complex and time-consuming, organisations can take a more pragmatic approach. Focusing on data product-centric thinking and selectively adopting certain data mesh components that can be implemented quickly, is a smart way to progress.

Can you explain the Rewire philosophy and approach?

Rewire not only help clients identify impactful areas but also support them in capability building and integrating AI into business processes. The Rewire approach is a hybrid between strategy consulting and implementation. Our aim is to drive impact collaboratively with clients, adapting engagement models as projects evolve. Our team’s strength is in balancing high-level strategy with hands-on execution and capability building.

FROM PERSONALISATION TO INSPIRATION : HOW IKEA RETAIL (INGKA GROUP) IS PIONEERING THE USE OF AI IN HOME RETAIL

& DESIGN

Can you give us an overview of how data and AI is set up at IKEA?

I work for IKEA Retail (Ingka Group), IKEA’s largest franchise. My work covers approximately 31 countries where IKEA operates throughout Europe, North America, parts of Asia, and South America. The Data & Analytics Team oversees AI within the company’s product teams, and deals with data foundation, governance, management, and insights for IKEA Retail, as a whole. We work alongside the product teams, or sometimes we work independently to build data platforms and other tech that the different product teams can enjoy and reuse. Recently, we opened an AI lab internally, which focuses on GenAI and applied AI functionality. Our goal is to have an R&D function across IKEA as we explore how new technologies can give new experiences to our customers.

Within my team, we have several specialist teams working on different aspects of innovation. One team is dedicated to what we call ‘retail data machinery,’ focusing on personalised recommendations, personalisation engines, and search optimisation. Another team specialises in insights, analysing the broader

SHAHIN SHAHKARAMI leads a data and AI engineering team at IKEA, driving transformative changes for one billion customers across the globe. His focus is on recommendation systems, multi-modal search and NLP to personalise the omnichannel shopping experience. His team is focused on building end-to-end ML applications. Prior to IKEA, Shahin delivered analytics solutions in the telecommunications industry, enhancing customer satisfaction at scale.

customer journey – both online and across our omnichannel platforms – looking at how people transition between stores, apps, and the web. We’re also investing heavily in building generative AI capabilities that will shape IKEA for years to come.

What are the main areas of the business that your team work with?

We focus on growth, or as we call it, ‘growth and range’, which includes how we present IKEA products to our customers online. That’s an essential part of IKEA – our products and how we personalise them. We’re here to make sure every customer finds the most relevant products.

Another key focus is ensuring IKEA remains a truly unique omnichannel retailer, where the IKEA experience feels seamless and consistent, whether you’re looking at our website, our app or you’re in an IKEA store – the

goal is that these channels work cohesively, while staying true to the IKEA brand.

I also work on home imagination capabilities, which is about helping online customers imagine and better design their homes. We want customers to feel inspired and translate their home furnishing needs online in an interactive and playful way.

What’s the long-term strategy for data and AI at IKEA?

Our vision in IKEA is to develop high-quality data and AI products that enable accurate actionable insight and on the other hand infuses automate intelligence to enhance efficiency and performance. Specifically within AI, we are actively investing to build capabilities to maintain IKEA’s leadership within the home furnishing space.

How do you decide what data and AI use cases to work on? It’s always about having a clear

SHAHIN SHAHKARAMI

strategy of what we want to achieve. Where do we want to be in a few years’ time? Are there areas that we need to address as an online retailer, and also as a leader of life at home within the furnishing industry? The use cases and projects typically stem from two different angles. First, we consider our product with an overall view together with UX and engineering, to then understanding the product’s impact on the business, and the business goals we want to achieve. Then we consider how AI or data can help us attain that goal. That’s how we choose which projects to take on. And then there is an additional angle, which is to consider new capabilities. For example, with the GenAI situation, which opened up a lot of doors for us. We need to understand the tech and assess if it can solve our customer problems more efficiently or create new experiences for us. In those cases we start by experiementing the tech without having a use case in mind to begin with, then we see a realm of possibilities from there.

We do some opportunity sizing from there, and then see if we can embark on these projects moving forward.

How do you ensure that the data and AI teams collaborate well with business stakeholders and other teams in the organisation?

sometimes we take more of a hybrid approach: having a central team that supports the larger product teams.

There’s value to having a standalone function in the organisation; in being able to connect the dots between different product teams that might be operating in silos, due to the company size. We can take a more holistic view, and then collaborate between different teams, acting as the glue – and the voice of reason –for the organisation.

When you’re working with the teams on a specific project, who’s doing the execution? Each product team consists of four parts, as we call them: PDEX (which is product), engineering, data, and UX. These four work together from the start until the end of the project.

Sometimes there are different flavours in the organisation. For example in PDEX there are products that don’t need a data person because you’re building a front-end tooling, but some products are more AI-heavy. And in this instance we’re involved from the ideation to execution.

causal effect testing.

This has helped us to understand the value of the work of AI and our data scientists as well, because when you make a feature, you can always visualise the impact in that moment. But you can also do it holistically, at a larger level.

We also evaluate opportunity sizing – essentially, what the potential impact would be if we scaled a solution across all our global operations or removed certain organisational barriers. From there, we assess the return on investment (ROI). But it’s all about finding the right balance. For example, even if the ROI on a specific product’s search function is low, it’s still essential to have that feature on the website.

But for our large investments, we always ask: does it reduce costs by improving productivity or efficiency? Does it generate revenue, or enhance customer satisfaction? The initiative needs to deliver on at least one of these in the medium or long term. From there, we work backwards to identify specific use cases.

There should be a good balance between projects you know are going to generate revenue, save costs and improve customer satisfaction, whilst at the same time investing in projects that are more exploratory and innovative; asking open-ended questions that could pay off in the future.

The secret to good collaboration is ensuring we all share one goal. And within that common business goal, we have different specialists who work on achieving it.

We have our data and analytic coworkers embedded in different product teams. Sometimes we have our specialists fully embedded in certain product teams, because we know that will ensure the goal alignment and also the delivery of a certain data analytics, inside the product, or AI product. And

How do you measure the return on investment of data and AI initiatives at IKEA?

That’s one of the favourite questions of any C-suite or board member: how do we actually measure the impact of data and AI? Because it can all become a bit abstract. Over the last few years, we’ve built a great experimentation and measurement culture within IKEA, meaning that everything we have for our customers, always gets A/B tests and different types of

A great example of something that’s hard to quantify, is the fact that many customers who visit IKEA don’t use a shopping list, but they’re there for inspiration. They’re not just looking for a list of items to buy, they are seeking an experience. Because of this, we aim to have all these tools available to help customers get inspired, and then measure the impact of that inspiration – for example, if the customer is coming back or if they’re adding things directly. In these instances the ROI isn’t as tangible as you want it to be, but sometimes you need to address it in order to be an industry leader in this area. You want customers to come to you for inspiration as well.

Can you give us an example of a successful data and AI initiative at IKEA? I think one of the most successful

examples has been our journey in recommendation and personalisation. Our product range is at the heart of IKEA: the stores, the website, everything revolves around it. Our vision to help every customer access the most relevant range, the most relevant content, the most relevant services – this has been really important. So, we built an in-house recommendation engine.

This engine addresses a really complex set of recommendation problems, because we’ve got so many different journeys, different channels, different experiences, and you want to build something that’s effective and reusable, and also cost-conscious and scalable at the same time.

Essentially, our recommendation system consists of more than 15 AI models that work together to power different recommendation panels that we have on the website, and in other locations you might not associate with recommendation, like a chat or a 3D setting. The engine can offer the right product for a customer in all these contexts. And today approximately 40% of online visitors to IKEA are using it and it’s generating both direct and indirect revenue across the website.

In the past, we used to buy these recommendations from an external company. It’s been a strategic direction for us to build them all in-house and in a way that IKEA can generate the right content and products for different types of customers.

What prompted you to build these technologies in-house?

The way I see it is this: if the capability we’re developing requires complete control and a clear understanding of everything that goes in and out, then it’s something we need to build in-house. For example, IKEA is different from other retailers because it sells its own product online in many countries (compared to a retailer like Amazon, which has an open-

ended number of products). So, the complexity and challenge is thereby different, and we need to build these things internally to offer the best service to our customers.

What were the challenges you experienced when building this tech, and how did you overcome them?

The main challenges were twofold. First, there were technical challenges: we wanted to build personalisation for our customers, but did we have real-time data for our customers to personalise things in real-time settings? Could we serve these models in a scalable but cost-conscious way? And from an algorithmic perspective, how should we design this system to address all these journeys and channels in a way that’s reusable and functional?

We solved these technical challenges by having really smart people that work endlessly to solve these problems. We focussed on our data foundation, for example, by ensuring we understand our customers and customer data, setting up goods and the ops setup structures. And from an algorithmic perspective we gained a lot of knowledge, whilst solving these problems in an innovative way.

The second challenge was to overcome the organisational issues, in particular the change management side of things. Moving technology is easy because it’s logical: you move from point A to B. But organisationally, it’s always challenging when you’re coming up with new ideas that are changing parts of the company, fundamentally.

But thankfully, we’ve achieved a high level of collaboration within the organisation. We have a clear vision of where we want to go and we can help guide everyone towards that.

What impact has your background and experience had in the way you approach

productionalising tools of this complex nature?

I have a deep technical background in the space, and I’ve been really interested in AI since my academic experience. But when it comes to these complex systems, I always look at how they can be of practical benefit. And that’s where maybe my product owner side comes into play: really looking from the customer perspective. Does this really improve customer satisfaction? Does it really help our customer?

I sometimes feel the role I have is more of a translator: taking some really complex technical concept that might involve, say, the cost function of neural networks, and translating this for a senior leader or different department within the company towards business priorities. And then conversely, explaining some business idea which might sound really abstract to someone who works in data science because there are so many ways of doing it, and translating it back into technical language.

Can you tell us about the next potential use case for the project?

A next potential case is how we can help our customers better imagine their homes. Because the essential problem we’re trying to solve is this: if people buy product X or product Y, does it really fit in their room? Does it fit in with their style? Does it fit within their budget?

That translation is really difficult to achieve, because people’s homes are complex. People are complex as well. And that’s where we’re utilising AI to help customers imagine how the products will look, and how they will match their personal style.

There are different types of capabilities such as scaling designs, which give customers these tools to help them place products in their rooms, or to re-design the room, and to give them different options so they can compare styles. Our ultimate aim is to simplify

the process of designing a room. Interior design might be easy for a group of our customers, but not for most people. On the other hand, people do have a feeling for what they want and what they like, based on their needs and desires.

So in summary, that’s the use case: interior design, toolings, and 3D settings that are very, very interesting. And that’s what the team is focusing on at the moment. We’re still in the early stages of investing in and understanding these capabilities. We’re committed to doing this responsibly, ensuring that everything we create prioritises the safety and well-being of both our customers and our business.

As a GenAI use case the project has huge potential. Why should you buy this pillow that’s white and square versus the other pillow that is also white and square? There’s this whole angle of better storytelling and answering customers’ questions, helping them when they’re deciding and choosing products.

And for certain types of products, you need to help people visualise the end design. Let’s say if you’re buying a kitchen and you’re spending a significant amount of money. Or if you just bought a house and it’s completely empty and you don’t know where to start. It’s all about removing the fear of design for the customer, by helping them imagine what the room could look like with these different styles and sets of products.

So, with these capabilities and technologies we’re building, we are using GenAI to scale that design. But on the other hand, we are also doing a lot of engineering and tech work to create these toolings as well.

What are you most excited about for the future of data and AI at IKEA?

This might sound cliché, but IKEA’s

SHAHIN SHAHKARAMI

mission of creating a better life for many, is honestly something really iconic. And I think AI can help with that. AI has huge potential for helping every single individual from different budgets, from different backgrounds,

I believe we’re on the verge of a revolution in e-commerce and retail. In five years, we may be embracing experiences we can’t even imagine today – experiences that will soon feel as natural as the ones we rely on now.

different stages of life. You could be a student, you could be a young professional, you could be retired and use these capabilities. That’s really exciting!

And more specifically within my own domain, what really excites me is that there’s a lot of movement in spatial computing, spatial understanding, of the interior space. GenAI capabilities are getting a lot better at that. When exploring that area of spatial computing for IKEA, there are a lot of the models that have become cheaper and better. There’s the option to offer hyper personalisation at scale and give new experiences to our customers. And storytelling our range to our customers is another topic that really excites me.

What future trends in AI and data could impact large enterprises like IKEA?

There are three main trends. One is, of course, AI acts. the EU AI Act, for example. How that impacts an organisation. IKEA has an immense focus on that; we’ve been investing in responsible AI, and we’ve got responsible AI teams organised within the company to become a leader in this space.

The second is the future trends of productivity at work. I think all these productivity tools can really enhance the day-to-day work of a lot of our coworkers in large organisations. At IKEA, for example, even if you move productivity by a few percentages, it will have a massive impact. If your coworkers are more productive in answering customer calls, in answering customer questions in the stores, or maybe even if software engineers are more efficient in the way that they write code – that will drive meaningful transformation.

And the third trend is GenAI. It now has better multimodal capabilities that are cheaper, safer and more secure. It can be used on your device and not in the central clouds. These models are evolving into having better reasoning, and getting better at solving complex tasks. There are a lot of new applications that will come with GenAI. I believe we’re on the verge of a revolution in e-commerce and retail. In five years, we may be embracing experiences we can’t even imagine today – experiences that will soon feel as natural as the ones we rely on now.

HOW TO BUILD AN OPEN-SOURCE COMMUNITY IN BIG PHARMA

PROGRAMMING LANGUAGES:

A TRUE PARADIGM SHIFT IN THE PHARMACEUTICAL INDUSTRY

In recent years, the pharmaceutical industry has experienced a significant paradigm shift in its adoption of opensource programming languages. Up until recently, each young graduate had to choose between open-source and proprietary programming languages. However, the landscape has evolved, with a majority of pharmaceutical companies now embracing a multilingual programming strategy. The goal is to use the right tool for the right job.

GUILLAUME DESACHY is dedicated to helping bring the right medicine to the right patient by leveraging the power of biometrics.

As Head of Biometrics at Pierre Fabre, he leads a department of 15+ experts in data management, programming and statistics, working collaboratively to drive the success of both clinical trials and real-world evidence studies. The Biometrics Department supports all drugs developed and commercialised by Pierre Fabre. Since his graduation from the French National School of Statistics (ENSAI) in 2011, he has had the privilege of working across all stages of drug development (from pre-clinical research to launch) across diverse landscapes, including academia (UCSF - U.S.), biotech ventures (Enterome - France) and global pharmaceutical industry leaders (BMS, Servier, AstraZeneca & Pierre Fabre - France & Sweden).

AUTHOR : Guillaume Desachy

WORK DONE AS : Statistical Science Director, Biometrics, Late-stage Development, Respiratory and Immunology (R&I), BioPharmaceuticals R&D, AstraZeneca, Gothenburg, Sweden

CURRENT EMAIL ADDRESS : guillaume.desachy@pierre-fabre.com

In early 2021, a group of us at a major pharmaceutical company convened to discuss the growing importance of the open-source programming language R within our organisation and the industry at large. R was not a new thing at AstraZeneca, nor was it a fleeting trend. Despite its established presence, we had limited insight into who the R users were within this large organisation, and there were no formal channels for them to connect, share knowledge, or inspire one another. No forums, gatherings, or events existed to facilitate the collaboration and growth of this community.

OUR OBJECTIVE?

Federate all collaborators using the open-source programming language R, enhancing cross-team collaboration and knowledge-sharing.

We decided it was time to establish a community of R users within AstraZeneca. Since 2016, the company has utilised an internal social media platform called Workplace, akin to Facebook for companies, as the primary communication tool among groups. It was immediately clear that this platform would serve as the foundation for developing our R user community.

HOW TO KICKSTART AN OPEN-SOURCE COMMUNITY IN A BIG PHARMA COMPANY

We decided to steal with pride a successful concept from the external world: the data visualisation contest known as TidyTuesday * , which utilises opensource data. However, we didn’t just want to replicate

it; we aimed to make it uniquely our own. The core principle would remain the same as TidyTuesday, but with a distinctive twist. Each time we promoted a dataset, we aligned it with our corporate values and current global events. And that’s how #azTidyTuesday was born!

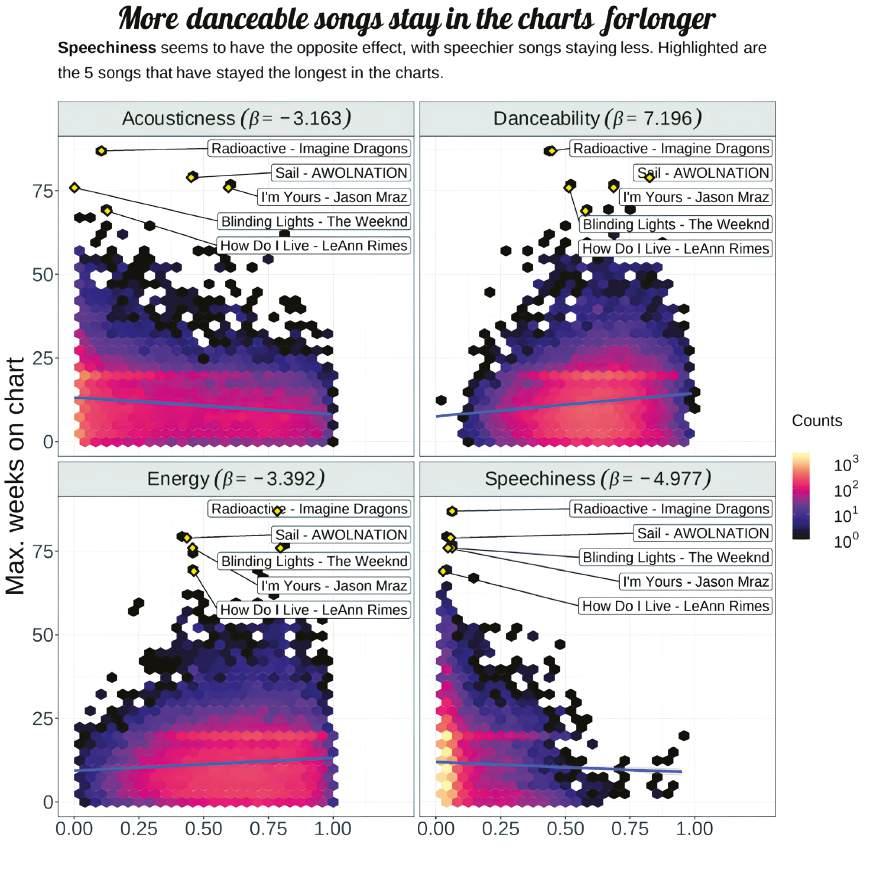

FIGURE 1

Featured entry for the final edition of #azTidyTuesday Dataset: Billboard Hot 100

Scientific question: Is there anything that can explain that a song is going to make it to the top of the charts? Could it be explained by its genre, its danceability, its energy, its loudness, its speechiness, its acousticness, its instrumentalness, its liveliness, its valence, its tempo, its duration?

Graph credit: Alvaro Mendoza Alcala

* TidyTuesday is a weekly initiative by the global R community for practising data analysis skills. Every Tuesday, a new dataset is posted on GitHub, and participants are invited to explore, visualise, and share their analyses using the R programming language and its packages, especially those from the tidyverse. It’s a great opportunity to learn, practise, and improve your R skills, as well as to see how others approach the same dataset.

#azTidyTuesday was just the beginning and proved to be a very popular way to promote and grow the community. It also underscored the need to develop additional initiatives to meet the diverse needs of our community members.

Shortly thereafter, we launched a monthly

initiative showcasing publicly available R packages or functions. This was done through blog posts that showcased both well-known and lesser-known packages and functions. Each blog post included a few lines of code that readers could try out at their own convenience and pace.

It quickly became apparent that we needed to federate this community of R users, and an internal R conference seemed like the perfect way to achieve this. Such an event would send a strong message both internally and externally.

The very first AstraZeneca R Conference, #AZRC2022, was scheduled for February 2022. At the time, our community was growing but still in its early stages, so we were uncertain about the level of interest, the topics that would resonate, and who would be

willing to help organise it.

To our amazement, more than 500 collaborators from 22 countries attended the inaugural AZ R Conference! This conference was a fully virtual, halfday conference featuring Max Kuhn as the keynote speaker, over 30 speakers and nearly 100 poster authors!

The conference was structured into three parallel tracks: governance, machine learning, and reproducibility.

500+ participants from 22 countries!

FIGURE 2

Countries highlighted in orange show countries from which AZ collaborators connected from for the AstraZeneca R Conference 2022. Graph credit: Tom Marlow

The overwhelming response to the conference sent a clear message: the community of R users within AstraZeneca was getting definite traction.

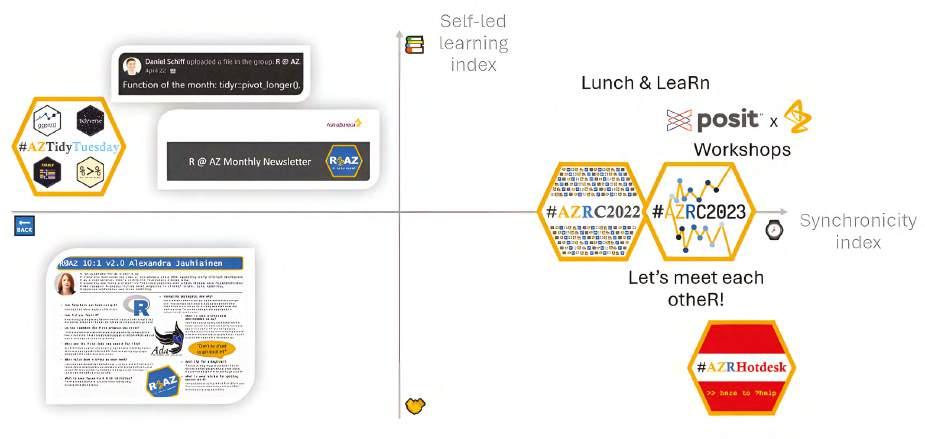

Rather than resting on our laurels after the success of this internal conference, we recognised the need to improve inclusion and diversity in addressing the community's needs and learning styles. We decided to evaluate all future initiatives based on two indices: the self-led learning index and the synchronicity index. The self-led learning index differentiates between self-directed learning and structured instruction, while the synchronicity index distinguishes between synchronous and asynchronous initiatives.

For example, #azTidyTuesday and the ‘Function of

the Month’ initiatives were asynchronous by nature, allowing community members to engage at their own pace. In contrast, the AstraZeneca R Conference 2022 was highly synchronous: although all talks were recorded for later viewing, real-time interaction with speakers required live attendance.

Following the inaugural internal R Conference, we knew we needed to continue fostering a sense of belonging and strengthening connections across departments.

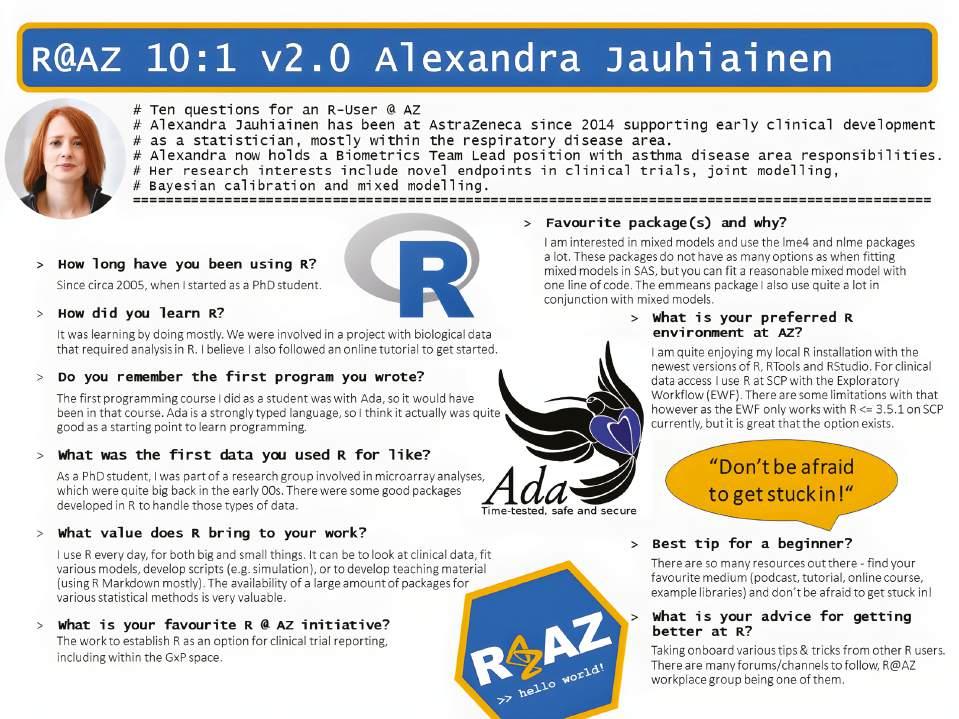

We then launched ‘R @ AZ 10:1.’ The idea was to showcase an R user within the organisation and ask them 10 questions, further promoting engagement and collaboration within our growing community.

FIGURE 3

Example of R@AZ 10:1, featuring Alexandra Jauhiainen

Some of the feedback we received from the community after #AZRC2022 was a desire for more frequent, conference-like events. We heard this loud and clear, but we also recognised that everyone has busy schedules.

This led to the idea of hosting ‘Lunch & LeaRns’. The concept was simple: invite a member of our community to showcase something cool they did with R, all within a 30-minute session. The only rule was that each Lunch & LeaRn would last exactly 30 minutes, including Q&A.

And again, we kept inclusion & diversity in mind by scheduling these sessions at lunchtime, alternating between Europe and the U.S. to accommodate different time zones.

As more collaborators began returning to office sites, we saw an opportunity to further nurture these virtual connections in real life. This inspired the launch of the ‘R Hot Desk’ at the AstraZeneca Gothenburg site.

The principle is straightforward: once a month, experts in the open-source programming language R meet in an open area of the Gothenburg offices and dedicate two hours to answering R-related questions. This initiative allows for face-to-face interaction and real-time problem-solving, further strengthening the community.

The interest in our face-to-face help desk did not meet our expectations. While there was some definite interest, the format may not have been ideal. Therefore, we decided to

rejuvenate the concept by transitioning to an online help desk instead of a face-to-face one.

WHAT IS THE VALUE OF THIS COMMUNITY? WHAT IS A KEY PERFORMANCE INDICATOR? WHAT IS ITS RETURN ON INVESTMENT?

All of this was based on voluntary involvement: there was no hierarchy between Guillaume Desachy (R@AZ Lead) and the members of the Steering Committee. Given the preciousness of everyone’s time, it’s reasonable to question the time spent building such a community. What is the value of this community? What are the key performance indicators (KPIs) for this community? What is the return on investment (ROI) of this community?

At first, these questions can be puzzling and extremely difficult to answer.

When considering the value of this community, we initially looked at the number of members in our internal social media group. However, this was not a true KPI, as it is not a metric we can directly influence. No matter how hard we worked, our initiatives could only nudge collaborators to join the group. Joining a social media group was never the end goal for this community. The true objective was to foster the community and encourage meaningful connections.

One metric that seems to be a more accurate KPI is the number of ongoing initiatives within the community. The Steering Committee has direct influence over this, as it decides which initiatives to start and run on a regular basis. This metric better reflects our efforts to actively engage and support the diverse needs of the community.

FIGURE 4

This KPI was used to gauge the vitality of this community. Regularly, we assessed additional KPIs for each initiative led by the R@AZ Steering Committee: Is the initiative well-attended? Is it well-received? Is it meeting the needs of the community?

When momentum around an initiative began to ebb, the Steering Committee would pause, reflect, and sometimes decide to retire the initiative. This was the case with #azTidyTuesday, which we decided to retire after 25 editions.

Key performance indicators are one thing, but they still don't fully address the question of return on investment (ROI). This question was particularly challenging to answer until one day when an Executive Director at AstraZeneca reached out to Guillaume Desachy and one of his teammates with a simple query:

‘Have either of you used this R package?’

Neither Guillaume nor his teammate had ever used this R package. However, we knew we could leverage the community. We posted a message in the Hot Desk Teams channel, and within 40 minutes, we had identified someone who had experience with the package.

Reflecting on this, we realised that without the existence of this community, it would have likely taken much longer to identify this subject matter expert. This highlighted the ROI of having a wellconnected and active community.

HAS THIS BENEFITTED THE BROADER ORGANISATION?

From the very beginning, our objective has been to federate all collaborators using the open-source programming language R, thereby strengthening collaboration across teams and enhancing knowledge-sharing. In less than three years, we have built a thriving open-source community of 1,600+ collaborators within a large pharmaceutical company. If we consider that all collaborators in this community are connected, it means there are a total of 1.4 million * connections within this community. By this measure alone, we have certainly met our objective of uniting all collaborators using this opensource programming language in AstraZeneca.

As decision-makers, we value tangible KPIs and a clear ROI. One achievement the Steering Committee is particularly proud of is the creation of a psychologically safe space. The once nascent Hot Desk where community members were hesitant to ask questions, has evolved into a place where collaborators of all skill levels can quickly find solid answers. This community has also enabled collaborators to take on leadership roles, whether leading an initiative, heading the organising committee for the yearly conference (20+ collaborators), or even leading the community’s Steering Committee (15+ collaborators). For those looking to develop professionally, this community has been a platform for honing skills such as leadership, communication, and networking.

This open-source community has been repeatedly recognised as a model for community-building within a company in the pharmaceutical industry and showcased in various forums: useR! 2022, R/ Pharma 2022, Posit blog post [2022], Posit MeetUp [2022], Posit customer story [2023], Boehringer-Ingelheim’s guest blog post [2023], The Effective Statistician Podcast [2023], R-Ladies Paris MeetUp [2024]

Over a three-year period, this once-emerging community evolved into a hub able to attract worldleading experts for exclusive gatherings.

Although the Lead of the R@AZ Steering Committee has now embraced a new career opportunity as Head of Biometrics at Pierre Fabre Laboratories, the R community he leaves behind at AstraZeneca lives on. A new Lead of the R@AZ Steering Committee has since taken over, and the community continues to grow, meet the needs of its members, and challenge itself. This ongoing effort consistently promotes the use of open-source within a large pharmaceutical company.

A true advocate for the importance of building communities and strong networks to enhance collaboration and knowledge-sharing across teams, Guillaume Desachy leverages his experience with R@AZ to foster collaboration across different departments at Pierre Fabre Laboratories. Striving to be as inclusive as possible, he remains mindful of diverse learning styles in all his initiatives and day-today work as Department Head.

HOW TO GET THIS STARTED IN YOUR OWN ORGANISATION

Whether your organisation is large, medium or small, building strong communities in a work environment is crucial. In this article, we have discussed the clear return on investment of such ventures. You too can start your own community of subject matter experts within your organisation. Here are five tips and tricks we’ve learned along the way:

● Start with the end in mind: Clearly define your objectives and what you hope to achieve with the community.

● Put a structure in place: Establish a framework for how the community will operate, including roles, responsibilities, and communication channels.

● Surround yourself with passionate people: Engage individuals who are enthusiastic and committed to the community’s success.

● Ask yourself: Is this initiative sustainable in the long run? Consider not just the first few editions but the next 20. Plan for longevity and continuous engagement.

● Doing things well is important and talking about what you do is equally important as it will help build momentum.

SPECIAL THANKS TO ALL WHO CONTRIBUTED TO BUILDING THIS COMMUNITY, INCLUDING Abhijit Das Gupta, Anna Samsel, Anna Strzeszewska-Potyrała, Antal Martinecz, Bartosz Górnikiewicz, Chuyu Deng, Daniel Schiff, Ewa Klimkowska, Gabriella Rustici, Gustav Söderström, Jamie MacKay, Jasia King, Jasmine Sadler, Kartik Patel, Lindsey Jung, Monika Eliasz-Kowalska, Monika Huhn, Parth Shah, Per-Arne Stahl, Rachel Evans, Sara Nozohouri, Theodosia Salika, Tom Marlow, Tomasz Lebitko, Vera Hazelwood, Yerka Larsson, and Zach Dorman.

For further insights into Guillaume’s career and publications, you can follow him on LinkedIn www.linkedin.com/in/guillaume-desachy.

FROM PROBABILITY TO PRECI SION:

RETHINKING AI FOR CRITICAL APPLICATIONS

BEN TAYLOR is a pioneer of the causal decision intelligence movement. Before co-founding Rainbird in 2013, he was a computer scientist at Adobe and went on to lead the technical development of an award-winning AI system that revolutionised the insurance industry. Ben is a frequent speaker on the importance of trust in AI and is a member of the All-Party Parliamentary Group on Artificial Intelligence.

In high-stakes AI applications, precision and explainability are paramount, qualities that large language models (LLMs) inherently lack, regardless of prompting strategy or architecture. While LLMs are powerful tools for processing language, their predictive, probabilistic nature makes them unsuitable for tasks requiring deterministic, explainable decision-making.

This article explores the limitations of LLMs, particularly their inability to provide precise, causal reasoning. It introduces the role of causal reasoning platforms, such as Rainbird, to ensure accuracy and accountability in critical decisions.

By combining the strengths of LLMs in knowledge extraction with the deterministic nature of causal reasoning, organisations can achieve transparent and reliable decision intelligence in areas like tax and audit, credit decisioning, and health administration.

By combining the strengths of LLMs in knowledge extraction with the deterministic nature of causal reasoning, organisations can achieve transparent and reliable decision intelligence in areas like tax and audit, credit decisioning, and health administration.

BEN TAYLOR

BEN TAYLOR

THE PRECISION CHALLENGE OF LANGUAGE MODELS

Imagine asking an LLM like GPT4 or Sonnet 3.5, a simple arithmetic question: What is 1 + 1?

You will get the correct answer, not because the LLM is performing a calculation, but because it’s been wellexposed to the question in its training data.

Now, consider a slightly more complex question: What is 1 + 2 × 2?

The correct answer requires an understanding of order of precedence. Again, an LLM will likely give you the correct answer, but not because it understands the order it needs to perform the calculation. It’s not performing a calculation at all, it’s predicting the likely best output based on its training data, which for most LLMs, is the public internet.

But what happens when we scale up the complexity?

Let’s pose a larger number calculation directly to an LLM:

Calculate 5674328923 / 993

The response might surprise you. Instead of computing the exact answer, the LLM will generate an approximation and most likely, an incorrect result. If you ask the same question five times, you’ll likely get five different answers.

This is an inherent consequence of the way LLMs are designed. They predict the next word in a sequence based on patterns learned during training, not through precise mathematical computation.

This limitation highlights a fundamental challenge. LLMs are predictive, not deterministic. In high-stakes applications where precision is paramount, relying solely on predictive models will lead to errors that, depending on the use case, may have significant ramifications.

PRECISION IN HIGH-STAKES DOMAINS

Precision isn’t just crucial in calculating arithmetic; it’s vital across any high-stakes domain where decisions are of high consequence.

For example:

● Tax and Audit: Ensuring compliance with tax regulations requires precise identification of appropriate tax treatments. Auditors must meticulously evaluate financial statements to detect discrepancies or fraud.

● Credit Decisioning: Banks must accurately assess the creditworthiness of applicants to minimise defaults and remain on the right side of financial regulations.

● Healthcare Administration: Accurate management of patient records and billing is crucial for ensuring proper treatment, reimbursement and regulatory compliance. Health administrators need precision when tracking healthcare quality metrics, to improve patient outcomes and meet accreditation standards.

In these areas, decisions aren’t just about numbers –they’re about understanding cause and effect in realworld situations. They require reasoning over knowledge while ensuring compliance with regulations, providing transparent and logical explanations for each outcome.

ADDRESSING SYMPTOMS, NOT ROOT CAUSES

When discussing the shortcomings of LLMs, the conversation often centres around avoiding hallucinations – the term used to describe the tendency of these models to generate plausible-sounding but factually incorrect or nonsensical information.

There are many approaches you might take to ground an LLM and limit the impact of hallucinations. You might, for example, use retrieval augmented generation (RAG) to ground the LLM into referencing your own documented sources. Techniques like RAG and other prompting strategies, such as chain-of-thought (CoT), improve an LLM’s recall and may reduce hallucinations, but don’t address the core issue.

Hallucinations are symptomatic of a broader and more fundamental issue – an inherent lack of precision in LLMs. This is because fundamentally, LLMs operate on probability distributions, not logical reasoning.

While RAG and other similar processing architectures may improve factual accuracy, they cannot turn an LLM into a precise, deterministic reasoning engine. They can never be precise.

All of the strategies implemented to mitigate hallucinations are addressing symptoms rather than root causes. The result is an imprecise attempt to simulate logical reasoning without the benefit of actually being logical. OpenAI’s GPT4 o1 model, previously known as Project Strawberry, is a good example of this.

No grounding or prompting strategies can transform a probabilistic text generator into a deterministic reasoner.

WHEN DETERMINISM MATTERS

Deterministic systems are essential when:

● Precision is required: In fields like finance or healthcare where small errors can have significant consequences.

● Explanations are necessary: When decision-makers need to understand the causal rationale behind outcomes if they are to trust and act upon them.

● Regulatory compliance is mandatory: Where transparent decision-making is crucial for meeting legal and ethical standards.

What’s required to close this gap is a companion technology that can perform the equivalent of a mathematical calculation, only over logical expressions of knowledge.

This encoding approach serves two key purposes: it enables us to derive precise, reproducible answers from

BEN TAYLOR

knowledge representations, and it allows us to trace the reasoning behind the system’s conclusions.

Renowned computer scientist Judea Pearl, a pioneer in the field of causal reasoning argues that, while data can show us correlations, it can never truly tell us why something happens.

After more than two decades of AI being synonymous with data-centric machine learning, this is a critical distinction. In high-stakes decisions, understanding the causal relationships, the why behind an outcome, is just as important as the outcome itself.

Consider a medical diagnosis scenario. An LLM might correctly correlate a condition based on a list of symptoms, but without understanding the causal relationships between a disease and the resulting symptoms. It can’t provide the kind of explanatory power that a human doctor, or a causal reasoning system, could.

For high-stakes decisions, knowing that two variables are linked isn’t enough. We need to comprehend the underlying mechanisms and causes behind their relationship. Understanding the pattern alone is insufficient, so grasping the causal connections is crucial for making informed, reliable decisions that we can trust.

ENTER CAUSAL REASONING

To address these challenges, we must turn to causal reasoning and symbolic models. Unlike LLMs, which generate outputs based on patterns from their training data, symbolic AI operates on explicit rules and logic. In Rainbird’s case, these are structured as weighted knowledge graphs.

This approach enables nuanced but ultimately deterministic outputs. Given the same inputs, a deterministic system will always produce the same results and is able to explain why.

A brief historical perspective helps us to understand the foundations of this approach.

The field of knowledge representation and reasoning (KR&R) has been a cornerstone of AI research since the discipline’s inception. Early expert systems demonstrated the power of encoding domain knowledge in a form that machines could reason over. While these systems had limitations, they laid the groundwork for modern causal reasoning platforms that have now overcome those limitations.

Causal reasoning powered AI systems consist of two parts:

1. Knowledge Representation: Experts encode domain knowledge into the system using logical rules and relationships.

2. Reasoning Engine: The system applies this knowledge to specific data, processing it through logical inference to arrive at evidenced conclusions. Importantly, this approach doesn’t require that all

knowledge be binary or perfectly defined. Where there’s uncertainty or ambiguity in the domain knowledge, this can also be encoded as part of the system. The key is that the reasoning process itself remains deterministic.