International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

Sudarshan Prasad Nagavalli1, Sundar Tiwari2, Writuraj Sarma3

Independent Researcher1

Independent Researcher2

Independent Researcher3

ABSTRACT

ThisarticlereviewsthestateoftheartinLargeLanguageModels(LLMs)inthecontextofNaturalLanguageProcessing(NLP), focusing onhow LLMscanreacha human-level understanding of language. Itdiscussesthe main difficulties following LLMs: theirproblemsregardingcontextawareness,possibleethicalissues,andprejudiceinthedevelopedlanguage.Thearticlealso describes the possibilities of LLMs, such as better machine translation, better conversation AI, and even the automation of contentcreation.Inthisregard,basedontheanalysisofrecenttechnologiesandperformancemeasures,thispaperrevealsthe future trends in LLM research and their perspectives for developing AI both from the academic point of view and in the frameworkofpracticalapplications.

Keywords: NLP advancements, Transformer architecture, Bias elimination, Deep learning, Ethical concerns

1.1

These advancements have been made in Natural Language Processing (NLP) over the last few decades, starting with rulebased systems and moving to advanced machine-learning solutions. One of these changes was the Emergence of the TransformerarchitecturebyVaswanietal.,2017whichradicallychangedtheparadigmmodelsusedtoprocesslanguagedata. Unlike its precursors, the Transformer uses self-attention-based methods that allow working with input data in parallel, making further program development for language understanding and generation more efficient and manageable. This has been instrumental in developing other big language models proficient in different NLP tasks, including machine translation, textsummarization,andconversationalassistants.

Using LLMs has endeavored computational linguistics and the gap between AI and human language understanding. The differencesincludetheabilityofthesemodelstodetectcabalpatternswithinlargetextualdataandproducemorenaturaland contextualized interactions. However, these models have increased in size and complexity, increasing the required computations. They also came up with the issue of interpretability of the results that were arrived at. While these are the dilemmas, no question that LLMs immensely advance the frontiers of what machines can interpret and create in natural language.Since thedevelopmentofthe Transformer model andall the NLPimprovements thathave followed, itiscrucial to note that there is a current and future possibility of attaining higher levels of language or text understanding equal to a human-likeLanguageUnderstandingModelorLLM.

1.2 Overview

This paper aims to explore the complex paradigm of Large Language Models (LLMs) in the realm of Natural Language Processing(NLP)anddiscussthechallengesandopportunitiessuchapproachprovides.Closelyintertwinedwiththispursuit isthequestfornaturallanguageunderstanding,whichiscloselytiedtomostofthecurrentworkinthefield.Ithasbeen noted bybothBenderandKoller(2020)thatNaturalLanguageUnderstanding(NLU)remainsadifficultproblem,pointingtothefact that while great progress has been achieved, the actual understanding of the kind that goes into LLM devices has yet to be developed. To that end, this research extends their work to analyze the complex barriers that LLMs need to tread, including contextualvagueness,semanticcomprehension,andneutralizingbias.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

Furthermore, the paper examines how LLMs can revolutionize numerous fields through automated content generation, conversationalcommerce,andaccessibilityfeatures.Thisstudyaimstodeterminethefactorsthathelpensuremoreefficient and accurate language processing by selecting the overall efficiency of existing models and analyzing more recent developments. However, the use of LLMs in the service of writing at scale instantly raises various questions associated with ethics related to bias, fairness, and accountability, and this aspect of the study addresses these concerns. Finally, this paper provides a general background to demonstrate the potential of fine-tuning and utilizing LLMs to close the gap between machine-produced text and genuine, human-like comprehension. It will also open the possibility of developing artificially smarterAIsystems.

DespiteadvancesinLLMs,difficulttasksmustbecompletedtoreplicatehumanunderstandingandgreatlanguagemodeling. CurrentLLMshaveprovedusefulinassignmentsliketextgenerationandtranslation.However,thereisstillalongwaytogoin deep context comprehension, coverage of continuing dialogue, and comprehensive meaning in an unclear or multiple-text sense.Besides,theyneedmorepracticaljudgment,empathy,andoccasionally,evenetiquette,whichistheproblemofworking withhumanlanguages.FillingthesegapsiscrucialforenhancingtheprognosisofLLMsintheirrespectivespheres,whichcalls forformsofreasoningthataremorerefinedtothehumanlevelofthinking.

1.4

• DiscusssomeofthestrengthsoftheLLMsinthetwoareasofnaturallanguagecomprehensionandproduction.

• Blocklist some of the main difficulties that LLMs encounter, such as analyzing the context, having prejudices, and reasoningwithpracticalknowledge.

• FindthepossibleapproachestofosterabetterunderstandingofhumanlanguageasappliedtoLLMs.

• Suggestingseveraldirectionsforitsfuturedevelopmentandpromisinginnovationsforreducingthedistancebetweenthe languageofmachinesandhumanintelligence.

Thisworkisparticularlyconcernedwiththegapinlargelanguagemodelsthatmimichumanunderstandingoflanguageand language processing. The research includes areas of interest, such as gaining contextual knowledge, reasoning logically, and biaselimination,anddiscussingpossibleimprovementstothesemodelsandtheirabilities.Theimportanceofthisworkcanbe seen in the major field of AI in improving human-computer interaction. Enhancing LLMs might lead to designing new, advanced, and more effective AI solutions to explain, comprehend, and generate natural language. This results in enhanced applicationacrossvariousmedical,educational,andcustomersupportsectors.

2.1

Largelanguagemodels(LLMs)haveseensignificantdevelopmentswithearlymodelssuchasELIZA,whichsimulatedsimple conversationabilitiesinthe1960s.'ThebasicstructureofELIZAprovidedthefirstseeds,whichledtotheconceptofmachines being able to hold a conversation. Of course, more sophisticated models were needed to reveal the full potential of NLP systems.RefinementwiththehelpoftheTransformer architecturedescribedbyVaswanietal.in2017 broughta qualitative leap, altering how models learn language data (Vaswani et al., 2017). This approach was conducive to scalable parallelism, makingdevelopingmuchlargermodelssuchastheGPT-3possible.Radfordetal.2019againaddedtothisintheadvancement of GPT-2 and GPT-3, which processed a powerful array of unsupervised learning that exemplified the capability of coherent textsacrossmultipletasks.ThesemodelswerebuiltusinglargeVolumesoftextdatatoallowthemtocomeupwithhumanlike responses. A new version, GPT-4, introduced more dramatic changes in scale and capability, and its generation is more fluentandcontextuallyawarethanbefore.Asevidencedbypriorstudiesandexamples,thereismuchthatLLMsstillneedto beabletodoaccurately,includingmodelsize,bias,andinterpretability.

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net

As the field of information processing progresses, NLP evolves, with functionality situated in many areas of the commercial world. Areas currently most involved with NLP include machine translation, call centers with automated customer services, andsentimentanalysisintextandcontentgeneration.TechnologiesofNLParebecomingapplicableinmanysectors,including health,wheretheyhelpprocesspatients'recordsandmakeclinical decisions,andfinance,wheretheyhelpinmarketanalysis and the preparation of automated financial statements. Jurafsky & Martin (2023) have stated that today's NLP models comprise deep learning models to infer linguistic data from large datasets to perform tasks previously believed to be associated with human-like intelligence. Another major change within the last few years has been the move to transformer models across many use cases, including text summarization, question-answering, and conversational AI. The above development has been achieved despite the following shortcomings: The current challenges include Contextual ambiguity, Ethicalissues,andTheneedforlargecomputingresources.Thatsaid,asresearcherscontinuetoiterateoverthesemodels,the practical application of NLP in automating linear and administrative work, facilitating communication, and organization is expectedtobeevenmorevaluableacrosssectors.

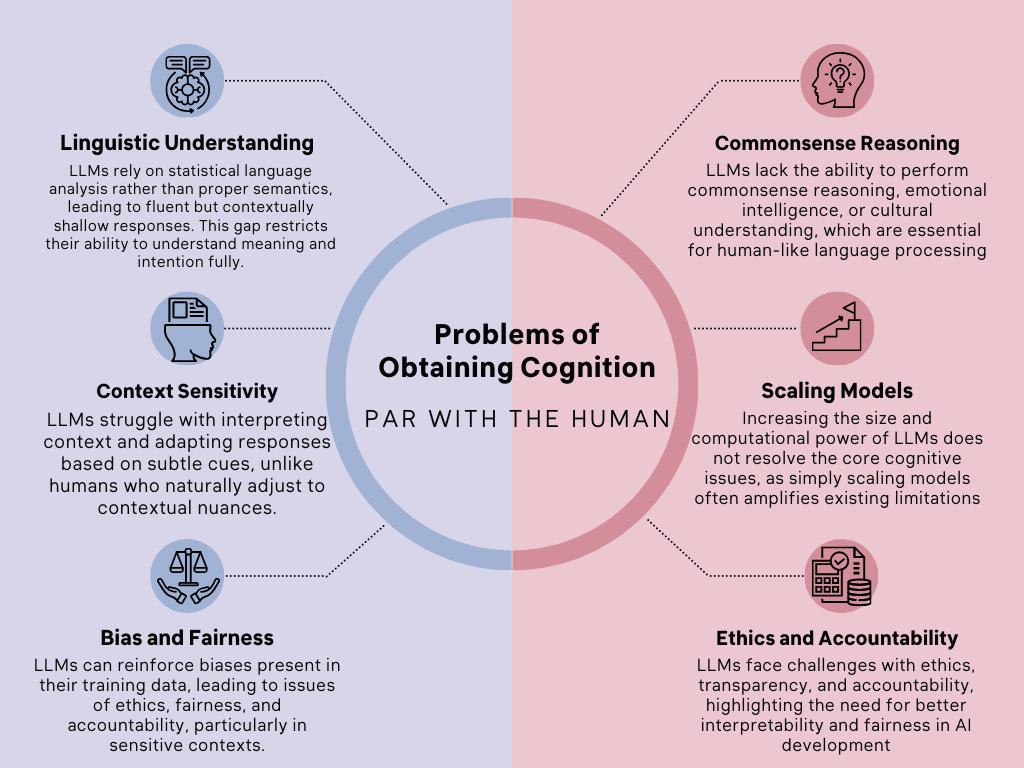

Severalelementsofhumanemulationinlargelanguagemodels(LLMs)causetheinnatedifficultyinrecreatinghumanthought processes and language skills. As outlined by Bender (2021), one area of continued weakness is that many algorithms LLMs use closely mimic statistical analysis of language data and must incorporate proper semantics. Although this linguistic understandingallowsLLMstoprovidelinguisticallyfluentresponses,itsometimesrequiresabetterunderstandingofcontext, intention, or meaning. Moreover, models that are quite complex can be discriminatory and reinforce biases in the given trainingdataset,whichimposesproblemsofethics,fairness,andaccountability.StochasticparrotsremindBenderandothers thatfocusingonscalingthemodelsmightnotsolvetheunderlyingcognitiveissues.Thus,whilemodernmodelsprovidegood results in text generation, they still need to possess a rather different quality of understanding, namely the one flexible and sensitive to context as people have. However, LLMs perform poorly in cases where commonsense reasoning, emotional intelligence, or cultural implication are required, as they are fundamentals of human language processing. Addressing such issuesshallentailchangestothemodel'ssizeandcomputationalcapabilitiesandenhancedmethodsinmodelinterpretability andfairnessandtoimprovecognitiveAIframeworks.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

As we will illustrate in this paper, using LLMs presents several important and pressing ethical questions regarding bias, fairness, and accountability. One is the general prejudice in the datasets that create such models owing to unequal societal representation. They may reinforce or even increase these prejudices in their output, and the effects are dangerous in fields thatuseLLMs,suchasemployment,policing,ormedicine.Inhisarticle,Jobin,Ienca,andVayena(2019)dealwiththeethical frameworkscrucialforAIdesignthataddressthequestionoffairnessandsuggestmachinelearningmodelsthatshouldnotbe biasedbasedonaperson'srace,gender,etc.Mostoftheseframeworkscallforopennessinthestepstakenbythealgorithms toarriveatthedecisionstheirmodelsmake;thisisessentialiftheresultingbiasesneedtobeaddressed.Also,theincreasing popularity of LLMs demands more guidance on the ethical issues related to the systems and their applications in important decision-making,policy-makingprocesses,etc.Oneisthatthedynamics,design,workingandeverythingelseaboutmachinelearningmodelschangeinsuchawaythatthecommonpopulaceandtheregulatorsenduphavingnotrustinsuchsystems. SincethemoreadvancedAIapplicationsliketheLLMsisbeingimplemented,thensomeguidelinesforfairnessaccountability, andtransparencyhavetobefollowedtoimprovetheefficiencyandfairnessofthestructures.

LLMs have always benefited from the progressive technological platforms available in society. It has been followed by new architecturessuchasthe transformers, which emerged toreplacetheprioroneandprovidea betterapproachfor managing long dependencies of the languages. 2018 Devlin et al. presented BERT (Bidirectional Encoder Representations from Transformers). This model learns generically from large texts and then specializes in tasks and applications that show a massiveperformanceleap,whetherinQAorsentiment analysis.Unlikepriorapproacheswheresympatheticregardfromthe upstream side was used but not the downstream one, or vice versa, BERT has bidirectional attention that helps the model consider the context from bothsides. Thisleadsto a betterview ofthe general language phenomenon of the language being studied.

Moreover,advancementsin hardwarehave made special processorslikeGPUs and TPUs,whichhaveallowedthetrainingof substantiallylargemodelsand,hence,haveimprovedtheparametersofLLMs.Theformerhasledtotheabilitytotrainmodels onlargedatasets,makingtheLLMsmimic intricatelanguagepatterns.Forthisreason,anynewermodel,suchasGPT-3and GPT-4, have a transformer architecture, and these advancements in hardware allow for even more potent language generationalandinterpretativecapabilities.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

Many LLM architectures are described below; they all have advantages and disadvantages. GPT-3 is one of the most wellknown LLMs developed by OpenAI and relies on a transformer for accomplishing diverse tasks with little necessity for finetuning.Brownetal.(2020)giveanexcellentaccountofthisbypointingoutthatGPT-3canlearnfromjustacoupleofsamples in a given input prompt. This makes GPT-3 different from previous models, such as GPT 2, which required significant finetuning for each task. Another popular LLM is Google's BERT, which works in bidirectionality, helping detect questionansweringandsentimentanalysis.AlthoughGPT-3cancreatecoherentandcontextuallyrelevanttextinvarioustasks,BERTis designed more for tasks that require a fine-tuned understanding of the contextual meaning of specific passages in the text. Also,generalGPT-3with175billionparametersisfarsuperioringeneralizingmanymoreresultantmodelsthanothermodels of lesser size. However, BERT being half the size of its competitors makes it considerably more efficient for real-time application.Ingeneral, whether touseLLMAorLLMB dependssolely on the task duetothedifferencesin the architecture anddesignprinciplesofthetwomodels.

This study chooses the mixed research design, using qualitative and quantitative methods to analyze large language models (LLMs). The qualitative component entails the investigation of LLMs on various theoretical frameworks, the development of the specific LLMs, the general architecture of the LLMs, and the evaluation of the particular LLMs' performance in various linguistictasks.WhilethefirsttypeisqualitativeandembracestheassessmentofLLMsintermsoftheirperformanceratings to check the correlation between different models and various indications, the second type is based on evaluating the quantitative characteristics of LLMs in definite benchmarks and applications. It incorporates both methods to provide the overallviewoftheLLMs,includingtheirstrengths,weaknesses,andpossibilitiesfordevelopingahuman-levelunderstanding oflanguage.ThisresearchdesignisthechancetoinvestigatethenatureofLLMsfromatheoreticalandappliedapproach.

DataforthisresearchiscollectedfromliteratureonLLMs,empiricalpublications,andtechnicaldocumentation.Finally,these documents contain relevant background information that introduces readers to model structures, training approaches, and importantcontributionstotheliterature.Otherevaluationmeasures,includingaccuracy,perplexity,andF1,arealsoprovided frombenchmarkdatasetsandrealexams.ThesecasespresentrealisticexamplesofLLMstodemonstratetrendsintheiractual performance and drawbacks. This means that when comparing the various facets of LLMs, a strong literature is already complementedbyperformancedataandcasestudies.

CaseStudy1:GPT-4

The case of GPT-4, a large-scale language model that OpenAI developed, is used in this work. It stands on the shoulders of versionsofGPTandhasthetransformer-basedarchitecturethatallowsittowritenaturaltextinalmostanycontext.GPT -4 haslearnedfrommanysamplesandiscapableoffew-shotlearning,meaningminimumtrainingdataisneededtoofferoptimal performance[3].Thismodelisgoodatprovidingfluentandcontextuallyconsistentwordsandphrasesandhasbeencompared topreviousmodelsinabilitiessuchaslearningcommonsenseknowledge,textsummarisation,andanalyzingnatural language. However, it also draws the curtain on certain capacities where an extensive understanding of specific concepts or fields of workiscalledfor(Brownetal.,2020).

CaseStudy2:BERT

BERT is another great model in the context of LLMs; Google designed it. While GPT is intended for autoregressive text generation,BERTisatwo-waymodelthatlearnstoprocessbothsidesofatextsimultaneously,basedonrawtexts,andisthen trained for specifictasks.BERTisalsobidirectional,meaningit takes in informationfrom both the left and rightof a certain word,whichhelpsitanswerquestionsandrecognizenamedentities(Devlinetal.,2018).Ithasestablishednewstate-of-the-

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

artperformancesacrossdifferingNLPbenchmarksandisstillinstrumentalinlaterLLMmodels,T5andRoBERTa,thatexpand onitsfundamentals(Raffeletal.,2020).

CaseStudy3:T5(Text-to-TextTransferTransformer)

Last,T5isanothertop-tierLLMfromGooglethatjointlyformulateswithallNLPtasksasatext-to-texttransductionmodel.It makes the model architecture simpler and more general because it can take on different tasks, from translation to text classificationandsummarization.T5'susecase,wherethesamemodelcanperformvariousNLPtasks,isaperfectexampleof transfer learning when applied on a large scale (Raffel et al., 2020). This multi-tasking advantage is paramount to its use in researchandrealapplicationscenariostolookintothefutureof;/multi-taskLLMs.

CaseStudy4:RoBERTa

HuggingFaceisanopen-sourcetransformerlibraryofpre-trainedandtransformedmodelssuchasRoberta,anewmodelthat wasalsotrained byFacebook'sAIteamto surpassBERT.It eliminatesthe nextsentencepredictiontask used inBERT while solely focusing on masked LM. RoBERTa outperforms BERT in several benchmarks with higher accuracy in long-range text contextdependencies.Thismodelshowsthatfine-tuningmodelsandoptimizingsomeparameters,suchashyperparameters, willenablethemodeltoattainoptimalresults(Liuetal.,2019).

Introducing XLNet – anotherGooglemodel that combinesanautoregressive model approachlikeGPTandautoencodinglike BERT.Throughpermutation-basedtraining,XLNetcandoawaywithsomeoftheshortcomingsofBERT,suchasbeingunable to undertake sequence learning. XLNet is more effective than BERT for different NLP tasks, such as question answering and sentiment analysis, while enjoying high training efficiency. Its embracing of bidirectional context and sequential order representsastrongperspectiveonenhancingnaturallanguageunderstanding(Yangetal.,2019).

Regarding the evaluation of LLMs' performance and capabilities, several important indices are used, including: Including accuracy,whichtestshowcloselythemodel'soutputsmatchanactualsetofdata;precision,whichteststherelevancyofthe model's output; recall, which tests the completeness of the model's production, particularly so in category generation work; thereisalsoF1scorethatcombinesprecisionandrecall.Furthermore,perplexityisutilizedtoassessthemodelperformance regarding the predicted word in the sequence. Much computational work focuses on BLEU and ROUGE to evaluate machine translationandtextsummarization.Lastbutnotleast,theprevalenceofhumanassessmentisusefulforanalyzingthemodel's capacitytoproducerelevantandsemanticallyvalidtext.

4.1 Data Presentation

Table1:EvaluationMetricsComparisonAcrossLLMs

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

Thistabledisplaystheevaluationmetricsforeachmodel,showinghowtheyperformacrossdifferenttasksandbenchmarks. GPT-4demonstratesaslightedgeinmostmetrics,particularlyinF1scoreandBLEU,whichareimportantforgenerativetasks suchastextgenerationandtranslation.TheresultshighlightBERT'sstrongperformanceinprecisionandrecall,thoughitlags behindGPT-4intermsofF1scoreandperplexity,whichreflectsitshighercomputationalefficiencyinvarioustasks.

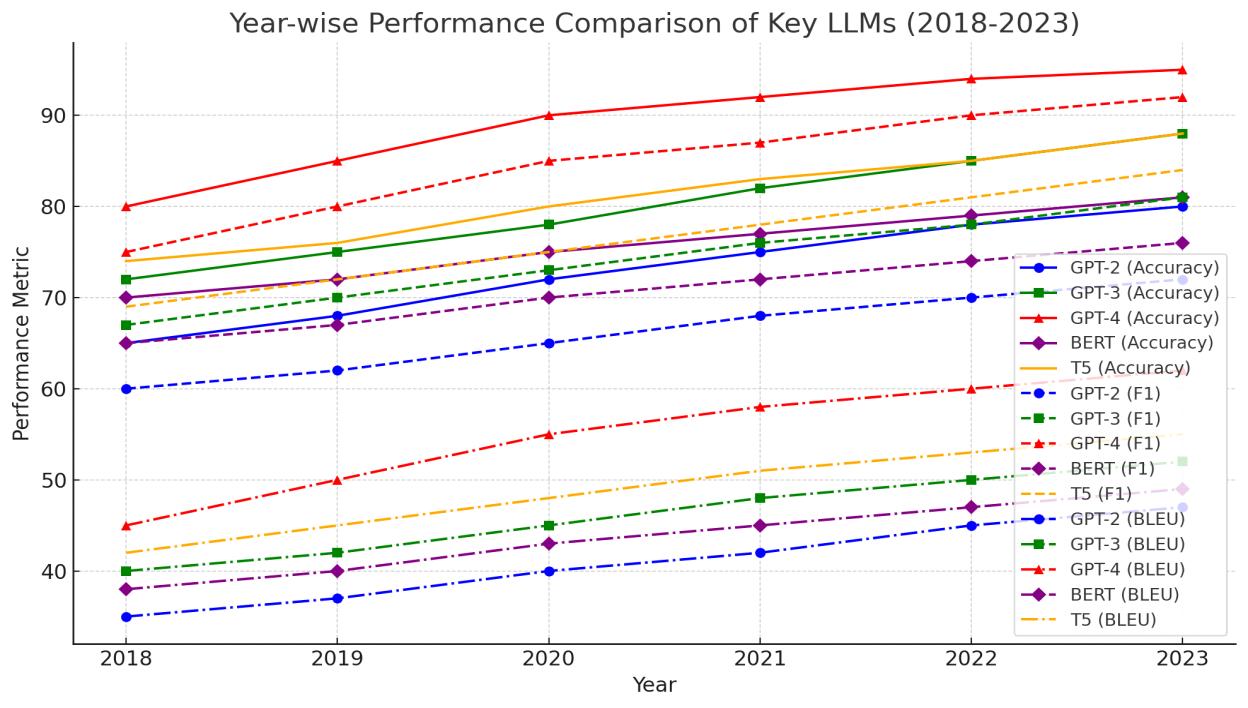

4.2 Charts, Diagrams, Graphs, and Formulas

Fig 3: A line graph comparing the evaluation metrics (Accuracy, Precision, Recall, F1 Score, Perplexity, BLEU, and ROUGE) across the three models (GPT-4, BERT, and T5).

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Fig 4: A radar chart comparing the evaluation metrics (Accuracy, Precision, Recall, F1 Score, Perplexity, BLEU, and ROUGE) for the three models (GPT-4, BERT, and T5).

Basedon evaluationsofthe largelanguage modelspresentedabove,the followingconclusionscanbederived. Bycomparing differentmodels,throughaccuracy,precisionandF1measuresitislearnedthatGPT-4isoverallmoreadequatetogenerative activitiesliketextgenerationandtranslation..However,italsohighlightsahighercomputationalcostandamorecomplicated structuraldesign.Despitethat,theimprovementsareclear:withthesamenumberofstepsandfewertokens,BERThaslower scores of F1 and BLEU, which underlines the model's relative weakness when it comes to tasks requiring deep context awareness, as well as long-range dependencies. The T5 model can excel in various functions, especially in the multi-task transferlearningfunction,butithasflawsinspecificdomaintasks.

These are notions extracted from the selected case studies: the creatively efficient generation of the text ascends to the key resultswhenspeakingaboutGPT-4,poetry,andstorygenerationinparticular.However,BERTcanboastofthehighprecision and recall number that makes it perfect for classification problems, such as sentiment analysis. Still, it is indifferent in tasks that require text generation over long passages. This data showed that adaptability was possible in general NLP across

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072 © 2024, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page578

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

multiple tasks such as translation and summarization but could have been better in domain-specific instances where highly specialized training data was available. These findings imply the need to select LLMs depending on the applications in need thatareintendedtorunonthem.

ThecomparativestudyfocusesonhowtheseLLMs,GPT-4,BERT,andT5,performintermsofpredefinedparameters.GPT-4 performs very well in generative tasks with high accuracy and a better F1 score. It is thus ideal when the language output required is human-like. While almost perfect in the classification and information retrieval context, BERT might be better in text generation. T5 is the intermediate between these two and achieves high-performance levels across the task types, especiallyintexttransformations.Thegivenanalysis revealsthatthereshould bea decisionasto whether the morespecific and relevant LLM or both should be used depending on the particular task and necessary level of resource utilization at the higherperformance.

4.6 Year-wise Comparison Graphs

4.7

Thefollowingcomparisonofconstructionandeffectivenessoftransformer-basedmodelswithotherstragtegieselucidatesthe subtle distinctions. Based on the study, it has been suggested that long-range dependencies can be handled competently by transformer-based models such GPT-3 and BERT because of its attention mechanisms. However, more scalable and expandable options like RNN and LSTMs are less effective comparatively and are challenged when handling more dataintensivework.Duetotheself-attentionmechanismintransformers,theycanbetrainedanddeployedinparalleltosavetime, whilealternatively,sequentialprocessingconstraintslimitapproaches.

Some changes it brought out have been enumerated below regarding its field and other domains. In industry, LLMs are changingthewaybusinessorganizationsdealwithcustomers,createcontent,oranalyzedatabecauseroutineworkthatused

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

tobedonebyhumanscannowbedelegatedtoartificialintelligence.Theyalsoimprovethelevelofadvertisementtargeting in advertisementandrecommendationsystems.LLMshavemadecommunicationmoreopentoallinsocietybydemarginalizing content. Nonetheless, ethical issues like prejudice and inaccuracy have not become obsolete at all. However, it will also be important in future work in order to improve the fairness of the models, avoid overcomplexity and assess the social implicationsofaproperuseofthesemodels.

5.1 Interpretation of Results

ThefindingsofthepresentpaperdemonstratethatlargelanguagemodelsintheNLUandNaturalLanguageGenerationhave made significant progress. For instance, GPT-4's performance in several activities, such as the generation of text and translation, shows the roles that LLMs can play in the future of NLP. However, some difficulty persists in emulating the complexityofthecognitivethoughtpatternsofahumanbrain;thosepresentspecificflawsinparticularspecializedfieldsand explanatory.Thecomparativeanalysisprovesthat,althoughtransformer-basedmodelsaresuperiortotheirprecursors,other architectureshavecertainmerits.Thus,thereareseveralissueswhichwillbecriticalforthefutureprogressofLLMs:increase inthespeedofcalculation;furtherdevelopmentofalgorithmsformodelinterpretation;concernfortheethicalissues.

5.2

The overall comparison of the results with the discussion supports that LLMs and evidently, GPT-4, improve accuracy, precision,andscalabilityrelativelytoNLPtasks.Atthesametime,the resultsalsofocusonthefactthatmodernapproaches stilllacksomefeatures,suchashuman-likereasoningandefficiencyinsolvingdomain-basedproblems.Theselimitationsare consistent with the goals of this study, namely to establish an overview of the LLM capabilities and significant performance deficiencies. The results thus show that while LLMs are rapidly growing, there are still essential obstacles to attaining a human-like understanding of language capability that encompasses remaining issues in the model interpretability, explainability,andotherissuesoffairnessandadaptabilityoftheLLMstospecificdomainsofuse.

5.3

Regardingitspracticalimplications,thefindingsofthisparticularstudyareofgreatimportancetodevelopers,businesses,and policymakers.Theresearcherscanusethefindingstoguidedevelopersonhowto bestintegrateLLMsinapplicationsacross various domains, including customer service chatbots and creative writing. Infusing LLMs into operational activities can improve business productivity and customer interaction. However, the outcome is that policymakers need to draw their attentiontowardregulatingLLMs'ethicaluse,specificallytoavoidbiasandprotectdataprivacy.Subsequentstudiestoassess methods to minimize the computational burden seeking greater popularization of superior LLM technology would help numerousindustriesandsociety.

5.4

The study fell into several problems and limitations. There was also an identified problem: large language models require expensive computational resources for training and constraints on the number of experiments possible. Also, the level of model elaboration made some extent of outcome explanations and formal model generalization across multiple tasks challenging.Thelastrestrictionwasthatitwascenteredonthelargestlanguagesdespiteleavingthesmalllanguagesamples undersampled. However, issues such as ethical concerns in terms of in-built algorithm’s bias and ability to generate misinformationhaveonlybeenaddressedtosomeextent;italsopointedtowardsareaforthefutureworkastheseareasare ofvitalimportance.

5.5 Recommendations

Thelimitationsfoundinthecurrentstudiesshouldinformfutureresearchonfindingwaystoenhancemodelinterpretability andutilizeenhancedtrainingapproachestominimizecomputationalrequirementsinthecreationofLLMs.Referenceshould also be made to improving the LLMs' capacity for addressing domain-related tasks by integrating suitable knowledge and datasets. It remains to look at ethical issues inherent in bias and misinformation, which remain significant issues of focus to

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

address in the model. Furthermore, extending the human-like features of LLMs through further expansion to multimodal modelscombinedwithlanguage,vision,andothermodalitiesispossible.

6. Conclusion

6.1 Summary of Key Points

They stressed that there are tremendous enhancements of LLMs, such as GPT-4 in natural language processing, with outstanding improvements in accuracy and scalability. Even with this, current approaches still present some limitations in reaching the level of human-like reasoning and in specific-application implementation. Several findings explain that model architectureandevaluatingmetricsalsoprovidehighperformanceforLLMs.Biasandfairnessaretwobigquestionsthatare very important for the ethical use of smart devices and AI. The research further develops by connecting the present model capabilitywithtruehuman-likelanguageunderstanding.

6.2 Future Directions

It should then feature efforts to improve their interpretability fairness and eliminate computation costs constricting their accessibility and efficiency. Moreover, integrating LLMs with multimodal systems that contain text, image, and sound is the right direction for capturing better human understanding. Further developing specialized domain-specific models will be equally important, suitable for the given form of presentation, and better adapted to various linguistic and cultural environments.Lastbutnotleast,policymakersneedto debatetheregulationsothattheLLMscanbedesignedordeveloped and implemented in the right spirit so that they do not create havoc and simultaneously bring equal benefits to societies acrosstheglobe.

• Bender, E. M. (2021). On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? Retrieved from https://dl.acm.org/doi/10.1145/3442188.3445922

• Bender, Emily M., and Alexander Koller. “Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data.” Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, 2020, https://doi.org/10.18653/v1/2020.acl-main.463.

• Brown,T.B.,etal.(2020).LanguageModelsareFew-ShotLearners.arXiv.https://arxiv.org/abs/2005.14165

• Devlin, J., et al. (2018). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv. https://arxiv.org/abs/1810.04805

• Jobin,A.,Ienca,M.,&Vayena,E.(2019).TheGlobal LandscapeofAIEthicsGuidelines.NatureMachineIntelligence,1(9), 389-399.https://www.nature.com/articles/s42256-019-0088-2

• Jurafsky, D., & Martin, J. H. (2023). Speech and Language Processing. https://web.stanford.edu/~jurafsky/slp3/ed3book.pdf

• Liu, Y., et al. (2019). RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv. https://arxiv.org/abs/1907.11692

• Radford, A., et al. (2019). Language Models are Unsupervised Multitask Learners. https://cdn.openai.com/betterlanguage-models/language_models_are_unsupervised_multitask_learners.pdf

• Raffel, C., et al. (2020). Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. arXiv. https://arxiv.org/abs/1910.10683

• Vaswani,A.,etal.(2017).AttentionisAllYouNeed.https://arxiv.org/abs/1706.03762

• Yang, Z., et al. (2019). XLNet: Generalized Autoregressive Pretraining for Language Understanding. arXiv. https://arxiv.org/abs/1906.08237

• Selvarajan, G. P. Harnessing AI-Driven Data Mining for Predictive Insights: A Framework for Enhancing DecisionMaking in Dynamic Data Environments.

• Dias, F. (2021). Signed path dependence in financial markets: applications and implications. Ink Magic Publishing.

• Selvarajan, G. P. Leveraging AI-Enhanced Analytics for Industry-Specific Optimization: A Strategic Approach to Transforming Data-Driven Decision-Making.

• Selvarajan, G. P. OPTIMISING MACHINE LEARNING WORKFLOWS IN SNOWFLAKEDB: A COMPREHENSIVE FRAMEWORK SCALABLE CLOUD-BASED DATA ANALYTICS.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

• Chaudhary, A. A. (2022). Asset-Based Vs Deficit-Based Esl Instruction: Effects On Elementary Students Academic Achievement And Classroom Engagement. Migration Letters, 19(S8), 1763-1774.

• Islam, S. M., Sarkar, A., Khan, A. O. R., Islam, T., Paul, R., & Bari, M. S. AI-Driven Predictive Analytics for Enhancing CybersecurityinaPost-PandemicWorld:ABusinessStrategyApproach.

• Pattanayak,S.K.GenerativeAIforMarketAnalysisinBusinessConsulting:RevolutionizingDataInsightsandCompetitive Intelligence.

• Islam, S. M., Bari, M. S., Sarkar, A., Obaidur, A. J. M., Khan, R., & Paul, R. AI-DRIVEN THREAT INTELLIGENCE: TRANSFORMINGCYBERSECURITYFORPROACTIVERISKMANAGEMENTINCRITICALSECTORS.

• Adimulam, T., Chinta, S., & Pattanayak, S. K. " Transfer Learning in Natural Language Processing: Overcoming LowResourceChallenges.

• Khan, A. O. R., Islam, S. M., Sarkar, A., Islam, T., Paul, R., & Bari, M. S. Real-Time Predictive Health Monitoring Using AIDrivenWearableSensors:EnhancingEarlyDetectionandPersonalizedInterventionsinChronicDiseaseManagement.

• Bari, M. S., Islam, S. M., Sarkar, A., Khan, A. O. R., Islam, T., & Paul, R. Circular Economy Models in Renewable Energy: TechnologicalInnovationsandBusinessViability.

• Islam,T.,Islam,S.M.,Sarkar,A.,Obaidur,A.J. M.,Khan, R.,Paul,R.,&Bari,M.S. Artificial Intelligence inFraud Detection andFinancialRiskMitigation:FutureDirectionsandBusinessApplications.

• Paul, R., Islam, S. M., Sarkar, A., Khan, A. O. R., Islam, T., & Bari, M. S. The Role of Edge Computing in Driving Real-Time PersonalizedMarketing:AData-DrivenBusinessPerspective.

• M. Al Bashar, A. Taher, and F. T. Johura, ‘Utilizing Predictive Analytics for Enhanced Production Planning and Inventory ControlintheUSManufacturingSector’,InternationalResearchJournalofModernizationinEngineeringTechnologyand Science,Jun.2024,doi:https://www.doi.org/10.56726/IRJMETS57936.

• Selvarajan, G. P. Augmenting Business Intelligence with AI: A Comprehensive Approach to Data-Driven Strategy and PredictiveAnalytics.

• Selvarajan, G. P. The Role of Machine Learning Algorithms in Business Intelligence: Transforming Data into Strategic Insights.

• M. Al Bashar and A. Taher, ‘Transforming US Manufacturing: Innovations in Supply Chain Risk Management’, 2024. doi: https://ijrpr.com/uploads/V5ISSUE5/IJRPR28686.pdf

• M.alBasharandZ.Mahmood,“ReproductionApproachtoAnalyzingIndustrialMarketsinMechanicalEngineering,”2017.

• M.AlBashar,‘ARoadmaptoModernWarehouseManagementSystem’,InternationalResearchJournalofModernizationin EngineeringTechnologyandScience,Jun.2024,doi:https://www.doi.org/10.56726/IRJMETS57356

• Sontakke, Vijay & Atchina, Delsikreo. (2024). Memory built-in self-repair and correction for improving yield: a review InternationalJournalofElectricalandComputerEngineering(IJECE).14.140.10.11591/ijece.v14i1.pp140-156.

• Pattanayak,S.K.TheImpactofGenerativeAIonBusinessConsultingEngagements:ANewParadigmforClientInteraction andValueCreation.

• Dalsaniya, N. A. (2023). Revolutionizing digital marketing with RPA: Automating campaign management and customer engagement. International Journal of Science and Research Archive, 8(2), 724-736.

• Dalsaniya, A. (2022). Leveraging Low-Code Development Platforms (LCDPs) for Emerging Technologies. World Journal of Advanced Research and Reviews, 13(2), 547-561.

• Dalsaniya, N. A., & Patel, N. K. (2021). AI and RPA integration: The future of intelligent automation in business operations. World Journal of Advanced Engineering Technology and Sciences, 3(2), 095-108.

• Dalsaniya, N. A. (2022). Cognitive Robotic Process Automation (RPA) for Processing Unstructured Data. International Journal of Science and Research Archive, 7(2), 639-643.

• Pattanayak, S. K. Generative AI in Business Consulting: Analyzing its Impact on Client Engagement and Service Delivery Models.

• Pattanayak,S.K.LeveragingGenerativeAIforEnhancedMarketAnalysis:ANewParadigmforBusinessConsulting.

• ADIMULAM,T.,BHOYAR,M.,&REDDY,P.(2019).AI-DrivenPredictiveMaintenanceinIoT-EnabledIndustrialSystems.

• ADIMULAM,T.,BHOYAR,M.,&REDDY,P.(2019).AI-DrivenPredictiveMaintenanceinIoT-EnabledIndustrialSystems.

• Selvarajan,G. P. Leveraging SnowflakeDBinCloud Environments: Optimizing AI-driven Data Processing for Scalableand IntelligentAnalytics.

• Selvarajan,G.P.(2019).IntegratingmachinelearningalgorithmswithOLAPsystemsforenhancedpredictiveanalytics.

• Pattanayak, S. K. Navigating Ethical Challenges in Business Consulting with Generative AI: Balancing Innovation and Responsibility.

© 2024, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page582

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

• Selvarajan, G. P. The Role of Machine Learning Algorithms in Business Intelligence: Transforming Data into Strategic Insights.

• Rahaman, M. M., Rani, S., Islam, M. R., & Bhuiyan, M. M. R. (2023). Machine learning in business analytics: Advancing statisticalmethodsfordata-driveninnovation.JournalofComputerScienceandTechnologyStudies,5(3),104-111.

• Islam,M.R.,Rahaman,M.M.,Bhuiyan,M.M.R.,&Aziz,M.M.(2023).Machinelearningwithhealthinformationtechnology: Transformingdata-drivenhealthcaresystems.JournalofMedicalandHealthStudies,4(1),89-96.

• Islam,M.R.,Rahaman,M.M.,Bhuiyan,M.M.R.,&Aziz,M.M.(2023).Machinelearningwithhealthinformationtechnology: Transformingdata-drivenhealthcaresystems.JournalofMedicalandHealthStudies,4(1),89-96.

• Bhuiyan,M.M.R.,Rahaman,M.M.,Aziz,M.M.,Islam,M.R.,&Das,K.(2023).Predictiveanalyticsinplant biotechnology: Usingdata science todrive cropresilienceand productivity.Journal ofEnvironmental andAgricultural Studies, 4(3),7783.

• Damacharla,P.,Javaid,A.Y.,Gallimore,J.J.,&Devabhaktuni,V.K.(2018).Commonmetricstobenchmarkhuman-machine teams(HMT):Areview.IEEEAccess,6,38637-38655.

• Damacharla,P.,Rao,A.,Ringenberg,J.,& Javaid,A.Y.(2021,May).TLU-net:adeeplearningapproachforautomaticsteel surfacedefectdetection.In2021InternationalConferenceonAppliedArtificialIntelligence(ICAPAI)(pp.1-6).IEEE.

• Ashraf, S., Aggarwal, P., Damacharla, P., Wang, H., Javaid, A. Y., & Devabhaktuni, V. (2018). A low-cost solution for unmanned aerial vehicle navigation in a global positioning system–denied environment. International Journal of DistributedSensorNetworks,14(6),1550147718781750.

• Dhakal, P., Damacharla, P., Javaid, A. Y., & Devabhaktuni, V. (2019). A near real-time automatic speaker recognition architectureforvoice-baseduserinterface.Machinelearningandknowledgeextraction,1(1),504-520.

• Ashraf, S., Aggarwal, P., Damacharla, P., Wang, H., Javaid, A. Y., & Devabhaktuni, V. (2018). A low-cost solution for unmanned aerial vehicle navigation in a global positioning system–denied environment. International Journal of DistributedSensorNetworks,14(6),1550147718781750.

• Linkon,A.A.,Noman,I.R.,Islam,M.R.,Bortty,J.C.,Bishnu,K.K.,Islam,A.,...&Abdullah,M.(2024).Evaluationof Feature TransformationandMachineLearningModelsonEarlyDetectionofDiabetesMelitus.IEEEAccess.

• Rahaman, M. M., Islam, M. R., Bhuiyan, M. M. R., Aziz, M. M., Manik, M. M. T. G., & Noman, I. R. (2024). Empowering Sustainable Business Practices Through AI, Data Analytics and Blockchain: A Multi-Industry Perspectives. European JournalofScience,InnovationandTechnology,4(2),440-451.

• Pattanayak, S. K. Generative AI in Business Consulting: Analyzing its Impact on Client Engagement and Service Delivery Models.

• Chaudhary, A. A. (2018). Enhancing Academic Achievement and Language Proficiency Through Bilingual Education: A ComprehensiveStudyofElementarySchoolStudents.EducationalAdministration:TheoryandPractice,24(4),803-812.

• Chaudhary, Arslan Asad. "EXPLORING THE IMPACT OF MULTICULTURAL LITERATURE ON EMPATHY AND CULTURAL COMPETENCEINELEMENTARYEDUCATION."RemittancesReview3.2(2018):183-205.

• Chaudhary, A. A. (2022). Asset-Based Vs Deficit-Based Esl Instruction: Effects On Elementary Students Academic AchievementAndClassroomEngagement.MigrationLetters,19(S8),1763-1774.

• Selvarajan,G.P.OPTIMISING MACHINELEARNINGWORKFLOWSIN SNOWFLAKEDB:ACOMPREHENSIVEFRAMEWORK SCALABLECLOUD-BASEDDATAANALYTICS.

• Islam, S. M., Sarkar, A., Khan, A. O. R., Islam, T., Paul, R., & Bari, M. S. AI-Driven Predictive Analytics for Enhancing CybersecurityinaPost-PandemicWorld:ABusinessStrategyApproach.

• Islam, S. M., Bari, M. S., Sarkar, A., Obaidur, A. J. M., Khan, R., & Paul, R. AI-DRIVEN THREAT INTELLIGENCE: TRANSFORMINGCYBERSECURITYFORPROACTIVERISKMANAGEMENTINCRITICALSECTORS.

• Khan, A. O. R., Islam, S. M., Sarkar, A., Islam, T., Paul, R., & Bari, M. S. Real-Time Predictive Health Monitoring Using AIDrivenWearableSensors:EnhancingEarlyDetectionandPersonalizedInterventionsinChronicDiseaseManagement.

• Bari, M. S., Islam, S. M., Sarkar, A., Khan, A. O. R., Islam, T., & Paul, R. Circular Economy Models in Renewable Energy: TechnologicalInnovationsandBusinessViability.

• Islam,T.,Islam,S.M.,Sarkar,A.,Obaidur,A.J. M.,Khan, R.,Paul,R.,&Bari,M.S. Artificial Intelligence inFraud Detection andFinancialRiskMitigation:FutureDirectionsandBusinessApplications.

• Paul, R., Islam, S. M., Sarkar, A., Khan, A. O. R., Islam, T., & Bari, M. S. The Role of Edge Computing in Driving Real-Time PersonalizedMarketing:AData-DrivenBusinessPerspective.

• KUNUNGO,S.,RAMABHOTLA,S.,&BHOYAR,M.(2018).TheIntegrationofDataEngineeringandCloudComputinginthe AgeofMachineLearningandArtificialIntelligence.

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072 © 2024, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page583

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072

• Agarwal, A. V., & Kumar, S. (2017, November). Unsupervised data responsive based monitoring of fields. In 2017 InternationalConferenceonInventiveComputingandInformatics(ICICI)(pp.184-188).IEEE.

• Agarwal, A. V., & Kumar, S. (2017, October). Intelligent multi-level mechanism of secure data handling of vehicular information for post-accident protocols. In 2017 2nd International Conference on Communication and Electronics Systems(ICCES)(pp.902-906).IEEE

© 2024, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page584