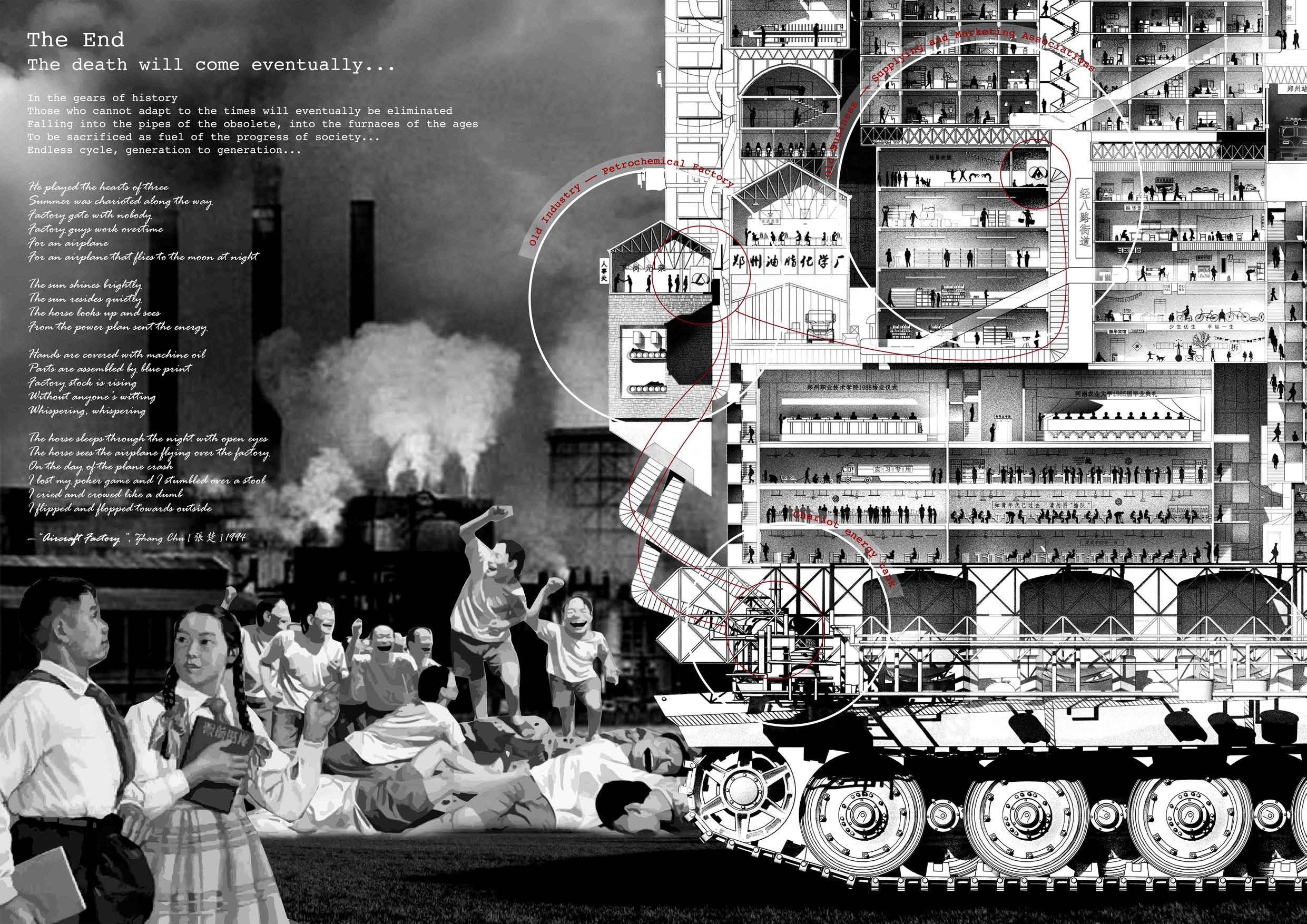

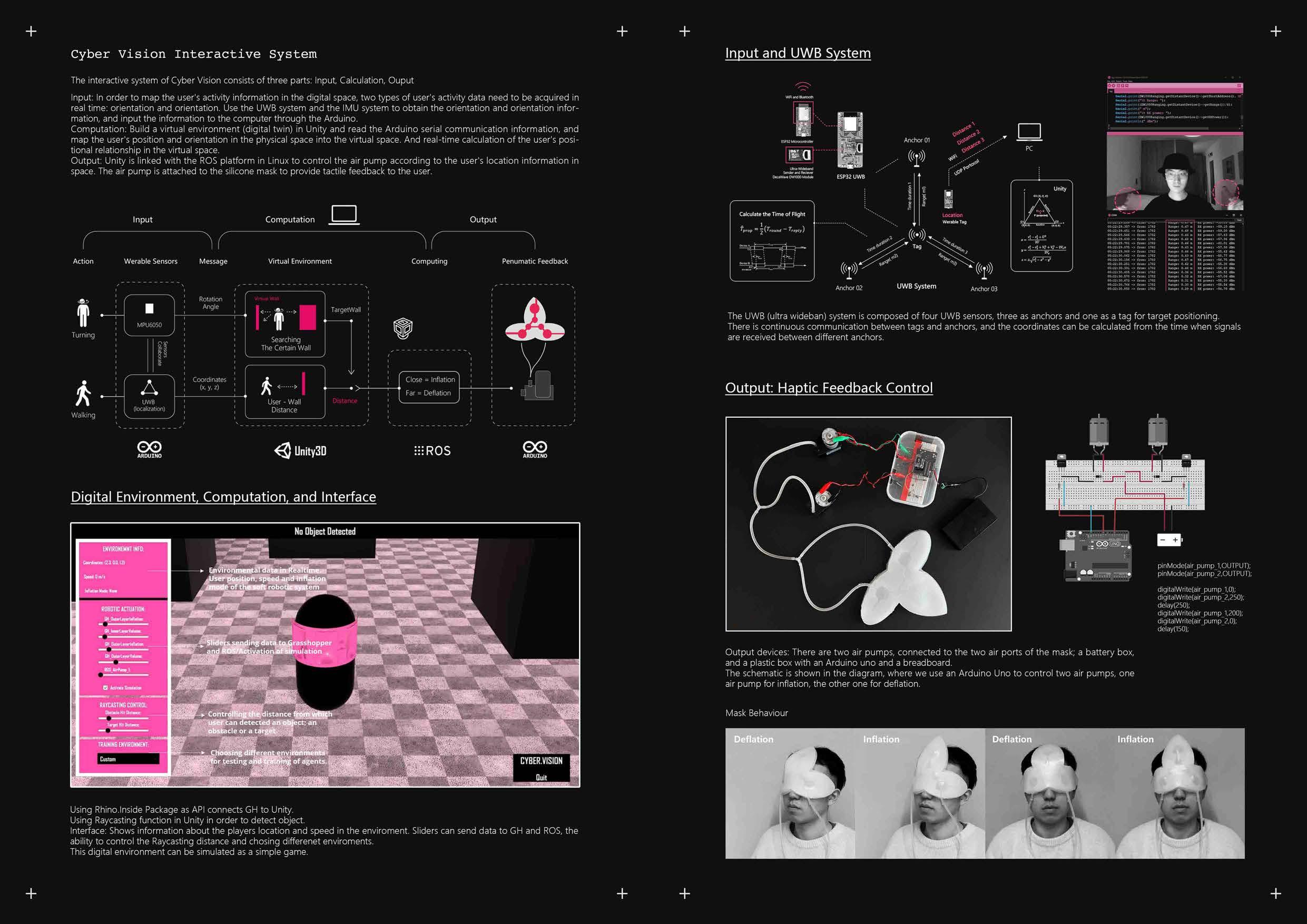

Cyber Vision Interactive System

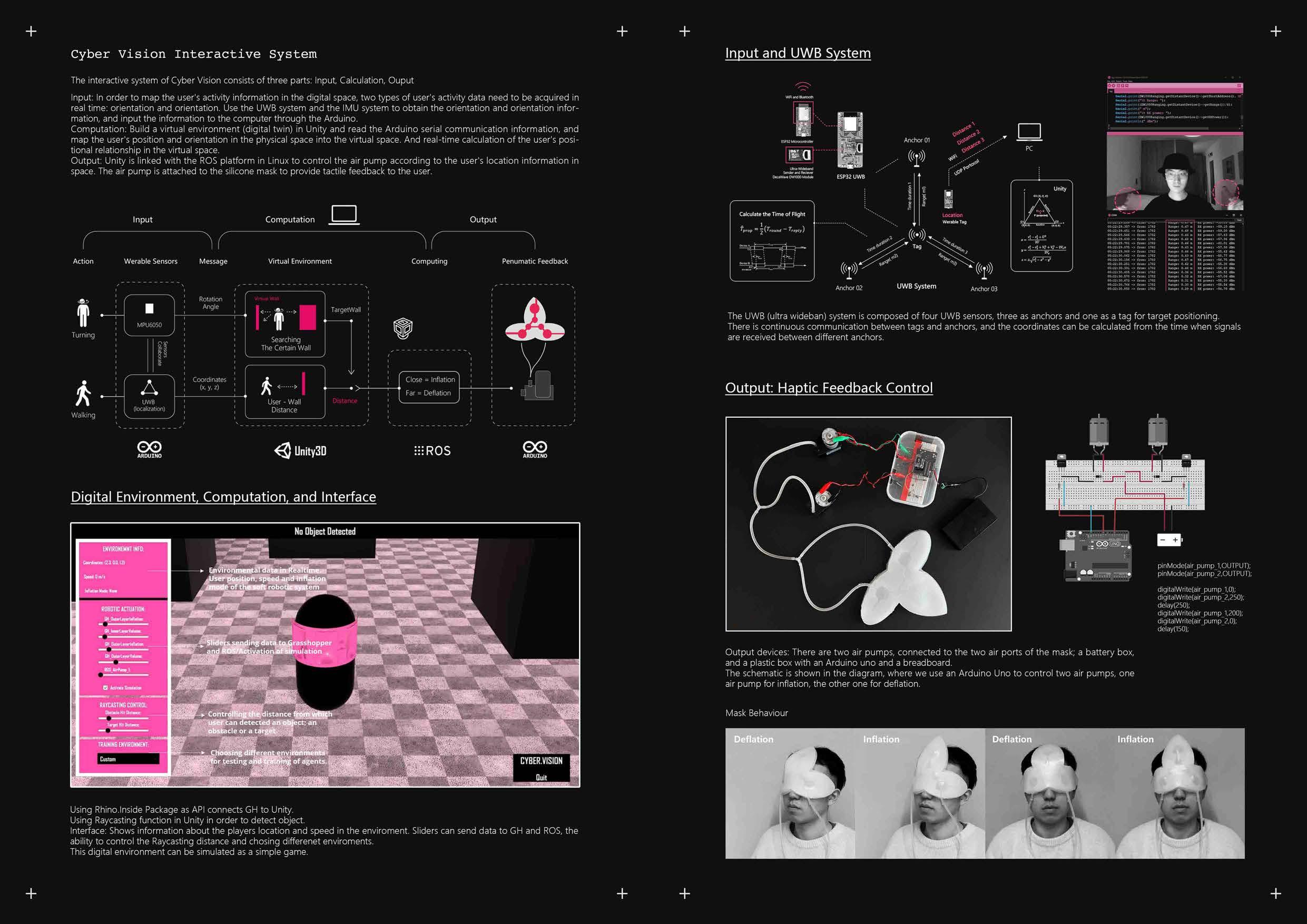

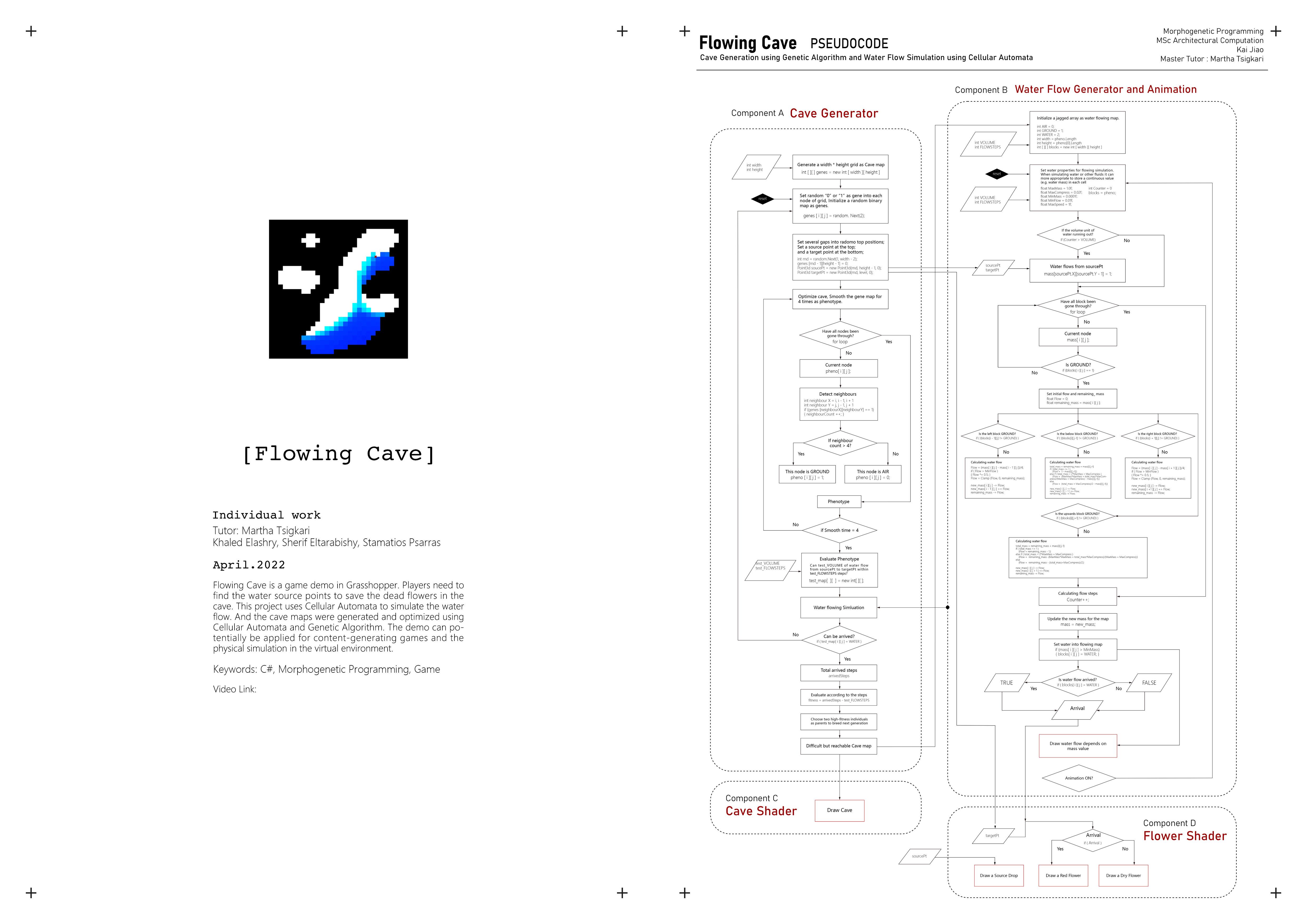

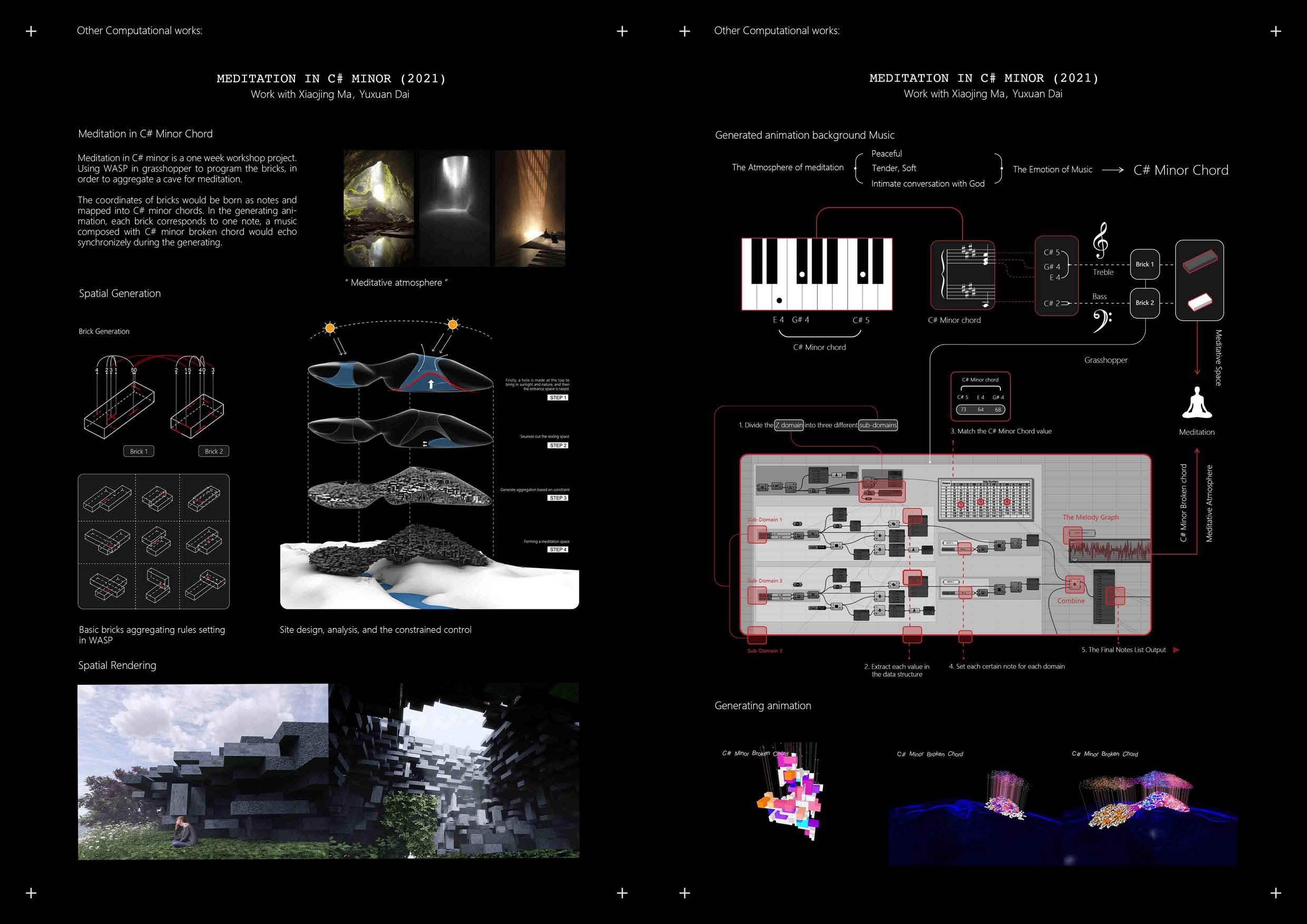

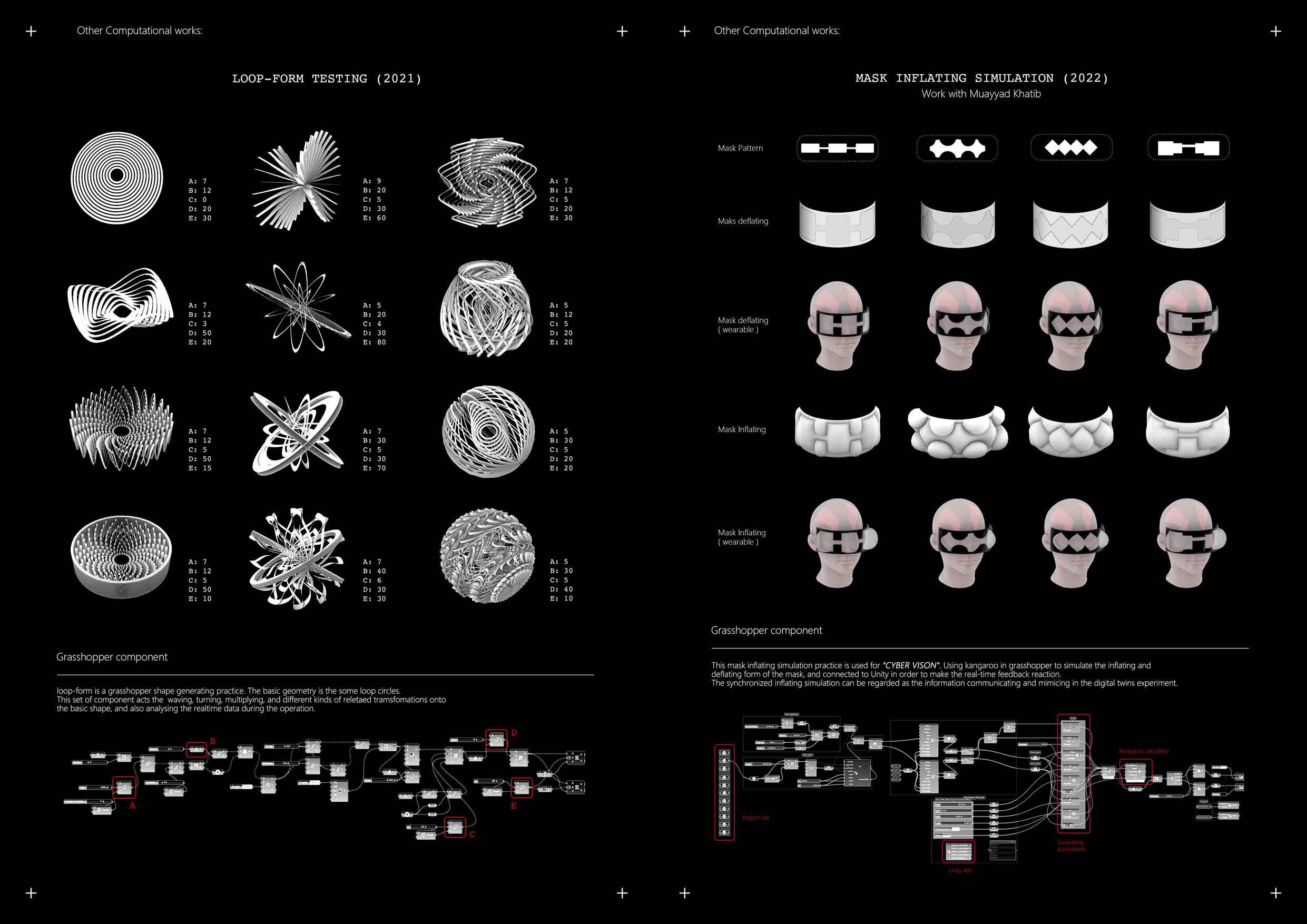

The interactive system of Cyber Vision consists of three parts: Input, Computation, and Output.

Input: To map the user's activity into the digital space, two types of user data need to be acquired in real time: position and orientation. The UWB system and the IMU system provide the position and orientation information, which is sent to the computer through the Arduino.

Computation: A virtual environment (digital twin) is built in Unity. Unity reads the Arduino serial data and maps the user's position and orientation in the physical space into the virtual space, then calculates the user's positional relationship to the virtual space in real time.

Output: Unity is linked with the ROS platform on Linux to control the air pumps according to the user's location in space. The air pumps are attached to the silicone mask to provide tactile feedback to the user.

[Diagram: system workflow]

Input: The user's actions (turning, walking) are captured by wearable sensors, an MPU6050 for the rotation angle and UWB (localization) for the coordinates (X, Y, Z), and sent as messages through the Arduino.

Computation: In the Unity3D digital environment, the system searches for a certain wall and computes the user-wall distance to the target wall, or reports that no object is detected. The Rhino.Inside package is used as the API that connects GH to Unity, and the raycasting function in Unity is used to detect objects.

Output: Pneumatic feedback is computed and sent through ROS and the Arduino. Close: inflation. Far: deflation.

Interface: Shows information about the player's location and speed in the environment. Sliders can send data to GH and ROS, giving control over the raycasting distance and the ability to change between different environments. This digital environment can be simulated as a simple game.

Input and UWB System

Calculate the Time of Flight

[Screenshot: serial monitor log of timestamped UWB ranging messages, each reporting the range and the RX power in dBm; labels: Anchor 02, UWB System]

The UWB (ultra-wideband) system is composed of four UWB sensors, three as anchors and one as a tag for target positioning. The tag and the anchors communicate continuously, and the coordinates can be calculated from the times at which signals are received between the tag and the different anchors.

Output: Haptic Feedback Control

[Diagram: control circuit schematic]

Output devices: There are two air pumps, connected to the two air ports of the mask; a battery box; and a plastic box with an Arduino Uno and a breadboard. The schematic is shown in the diagram, where we use an Arduino Uno to control two air pumps, one air pump for inflation and the other for deflation.

Mask Behaviour: Inflation

```cpp
// Pin numbers are placeholders; the source does not state which pins drive the pumps.
const int air_pump_1 = 5;
const int air_pump_2 = 6;

void setup() {
  pinMode(air_pump_1, OUTPUT);
  pinMode(air_pump_2, OUTPUT);
}

void loop() {
  // digitalWrite() treats any nonzero value as HIGH; PWM speed control would need analogWrite().
  digitalWrite(air_pump_1, 0);    // pump 1 off
  digitalWrite(air_pump_2, 250);  // pump 2 on
  delay(250);
  digitalWrite(air_pump_1, 200);  // pump 1 on
  digitalWrite(air_pump_2, 0);    // pump 2 off
  delay(150);
}
```
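As a rough illustration of how the output stage could choose between the two pump actions, the sketch below follows the workflow's rule of inflating when the target wall is close and deflating when it is far. It is plain Python, not the project's Unity, ROS, or Arduino code: the 2D ray-segment test only stands in for Unity's raycasting, and the function names, the 1.0 m threshold, the 5.0 m maximum ray length, and the "idle" result for the "no object detected" case are all assumptions made for this example.

```python
import math


def ray_wall_distance(origin, heading_rad, wall, max_distance=5.0):
    """Distance along a 2D ray to a wall segment, or None if nothing is hit.

    origin: (x, y) of the user; heading_rad: facing direction in radians;
    wall: ((ax, ay), (bx, by)) segment end points (hypothetical layout).
    """
    ox, oy = origin
    (ax, ay), (bx, by) = wall
    dx, dy = math.cos(heading_rad), math.sin(heading_rad)  # ray direction
    sx, sy = bx - ax, by - ay                              # wall direction
    denom = dx * sy - dy * sx                              # cross(d, s)
    if abs(denom) < 1e-12:
        return None  # ray is parallel to the wall
    aox, aoy = ax - ox, ay - oy
    t = (aox * sy - aoy * sx) / denom  # distance along the ray
    u = (aox * dy - aoy * dx) / denom  # position along the wall, 0..1
    if t < 0.0 or t > max_distance or not 0.0 <= u <= 1.0:
        return None
    return t


def pump_command(distance_m, threshold_m=1.0):
    """Map a user-wall distance to a pump action (threshold is an assumption)."""
    if distance_m is None:
        return "idle"      # assumed behaviour when no object is detected
    if distance_m <= threshold_m:
        return "inflate"   # wall is close
    return "deflate"       # wall is far


if __name__ == "__main__":
    wall = ((2.0, -1.0), (2.0, 1.0))
    distance = ray_wall_distance((0.0, 0.0), 0.0, wall)
    print(distance, pump_command(distance))
```

In a setup like the one described, the resulting command would be what Unity passes on through ROS to the Arduino; how that message is encoded is not stated in the source.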

https://www.youtube.com/watch?v=-UgTmG7Ff3Q

https://bpro2022.bartlettarchucl.com/architectural-computation-22-1/year1-kai-jiao
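For the UWB positioning step of Cyber Vision, the following sketch shows one common way coordinates can be recovered from signal timing between a tag and three anchors. It first turns a two-way time of flight into a range, then solves for a 2D position by subtracting one circle equation from the other two, which leaves a 2x2 linear system. The simplified two-way exchange, the restriction to 2D, the anchor coordinates, and all function names are assumptions for illustration; the source does not describe the exact ranging scheme or solver used.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_range(round_trip_s, reply_delay_s=0.0):
    """Range from a simplified two-way exchange.

    The signal travels tag -> anchor -> tag, so the one-way flight time is
    half of the round trip once the responder's reply delay is removed.
    """
    return SPEED_OF_LIGHT * (round_trip_s - reply_delay_s) / 2.0


def trilaterate_2d(anchors, ranges):
    """Tag position (x, y) from three anchor positions and three ranges."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    # Circle 1 minus circle 2, and circle 1 minus circle 3, are linear in x, y.
    a11, a12 = 2.0 * (x2 - x1), 2.0 * (y2 - y1)
    a21, a22 = 2.0 * (x3 - x1), 2.0 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not unique")
    x = (b1 * a22 - b2 * a12) / det  # Cramer's rule
    y = (a11 * b2 - a21 * b1) / det
    return x, y


if __name__ == "__main__":
    anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]       # hypothetical layout
    ranges = [math.sqrt(2.0), math.sqrt(10.0), math.sqrt(5.0)]
    print(trilaterate_2d(anchors, ranges))
```

With noisy ranges the three circles rarely meet in a single point, so a real system would typically filter the ranges or use a least-squares fit rather than this exact solve.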

Meta Presence

"Meta Presence" Experiment

Personal Presence

vi

space

meta

have t he nature

boundar

of space changed?

how should human ex -

space

defined?

w does vi rtual space affect human percept ion of presence

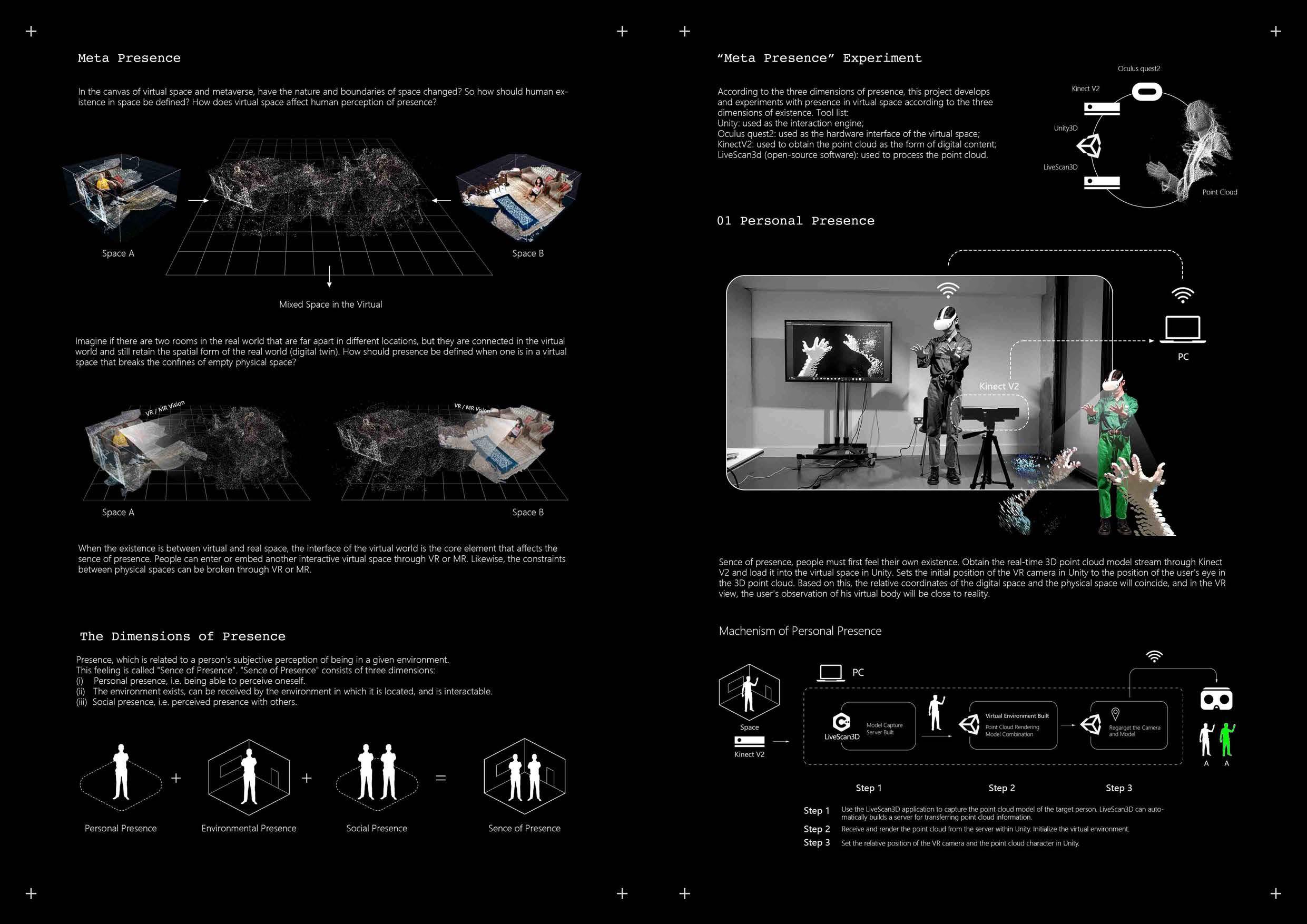

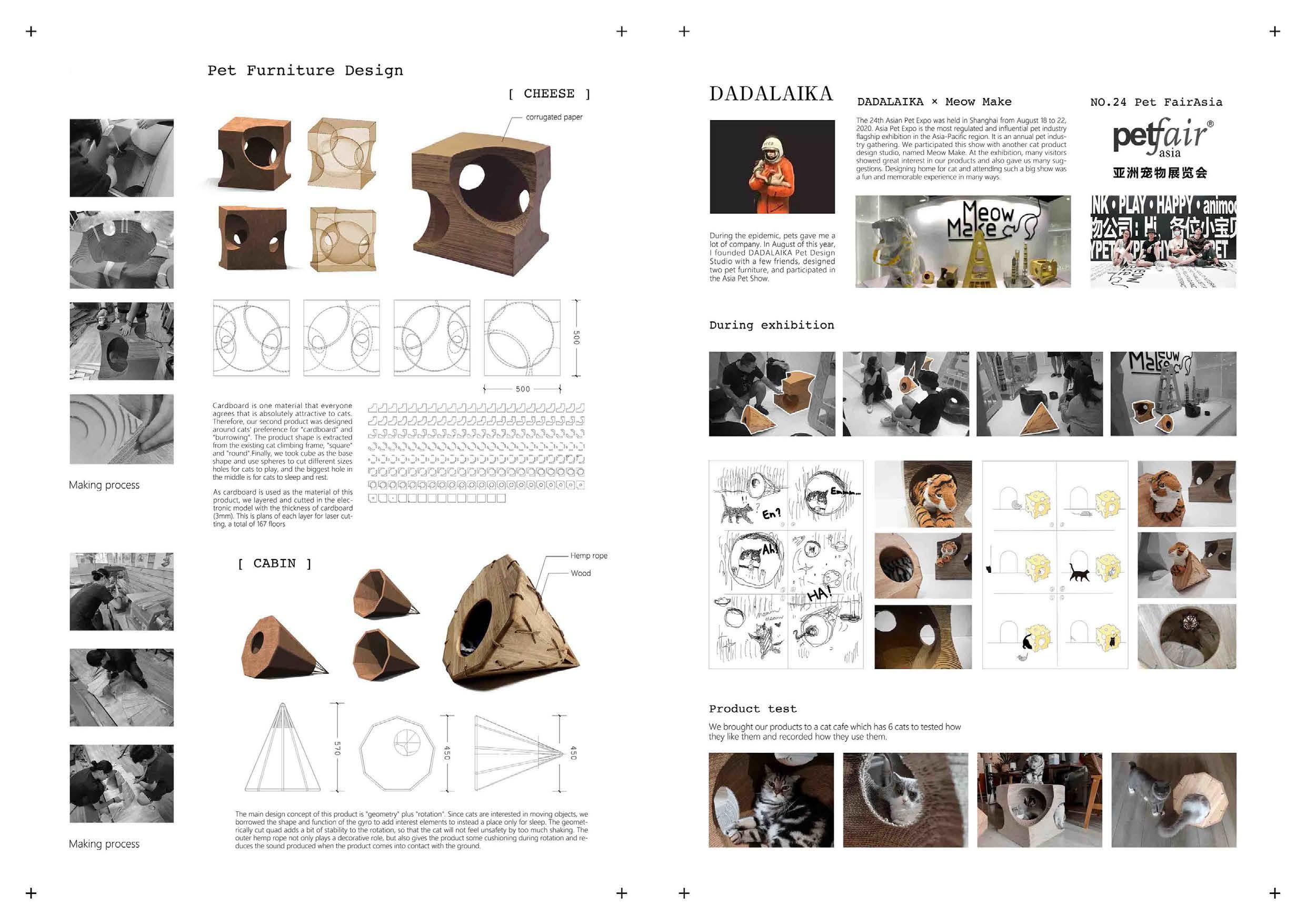

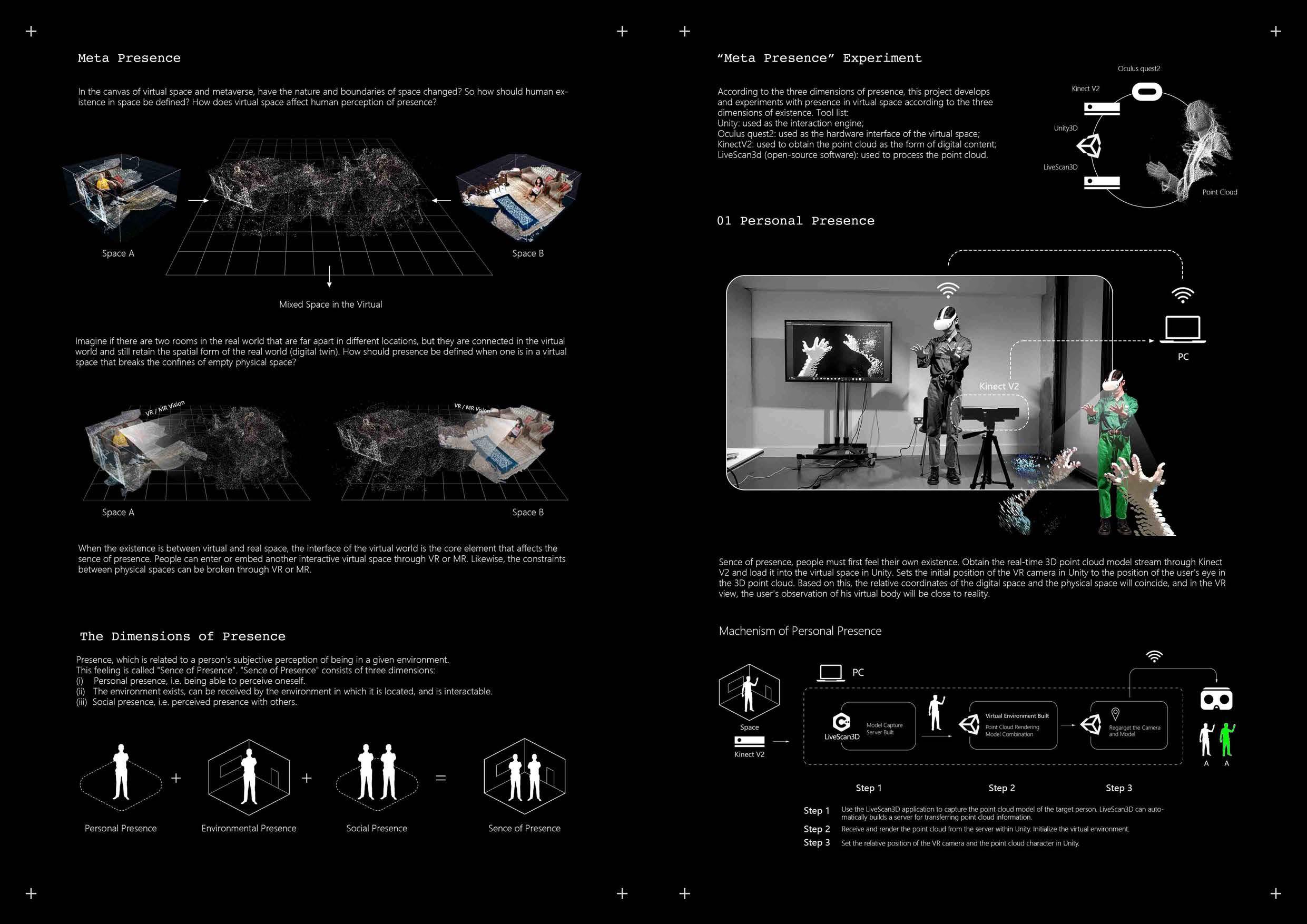

Space A Space B Mixed Space in the Vi rtual Imagine if there are t wo rooms in the real wo rld that are far apa rt in different locations, but they are connected in the vi rtual wo r ld and still retain the spatial form of the rea l wo rld (digi tal twin). Ho w should pre se nce be defined whe n one is in a vi rtual space that brea ks the confines of empty ph ysical space? Space A Space B Wh en the existence is bet ween vi rtual and real space, the in terface of the vi rtual wo rld is the core e ement that affects the sence of presence. People can enter or embed another interactive vi rtual space through VR or MR. Likewise, the const raints bet ween ph ysical spaces can be bro ken through VR or MR. The Dimensions of Presence Prese nc e, which is relate d to a person's subjective perception of be ing in a given en vironmen t. Th is eeling is called "Sence of Presence" "Sence of Presence" consists o f three dimensions: (i) Persona l presence i.e. be ing able to perce ive onesel f (ii) The en vironmen t exis ts , can be received by the environment in which it is loca ted , and is interactable. (iii) Social presence, i.e. perce ived presence wi th others.

In the can vas of

rtual

and

ve rse ,

and

ies

So

is tence in

be

Ho

?

wi th

vi

to the three dimensions of existence. Tool list: Unity: used as the interac ion engine; Oculus quest2: used as the hardware interface of the vi rtual space; Kinec t V2: used to obtain the point cloud as the form of digital content; LiveScan3d (open-source software): used to process t he po in t cloud.

Oculus quest2 LiveScan3D ,,,-, ' I' • , I I Sence of presence, peop le must first feel their own existence. Obtain the real-t me 3D po in t cloud model stream t h rough Kinect V2 and load it into the vi rtual space in Unity. Sets the initial pos ition of the V R camera in Unity to t he pos ion of the user's eye in the 3D point cloud. Based on th is, t he relati ve coordinates of the digital space and the ph ysical space will coincide, and in the VR view, the user's observation of his vi rtua bod y will be close to reality. Machenism of Personal Presence Step 1 Model Capture Server Built Step 1 Vi tual Env ronment Bu ilt Point Cloud Rende ri ng Mode! Combination Step 2 Regarget the Camera and Model Step 3 Use the Li veScan3D application to capture the point cloud model of the target person. LiveScan3D ca n automatically builds a server for transferring point cloud in ormation Step 2 Receive and render the point cloud from the server within Unity Initialize the virtual e nviro nment. Step 3 Set the relative position of the VR camera and the point cloud character in Unity. JK JK JK JK JK JK JK JK JK JK JK JK JK JK JK JK

According to the three dimensions of presence, this project develops and experimen t s

presence in

rtual space according

01
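The camera retargeting step can be pictured with a small calculation. The sketch below is an assumption-laden illustration in plain Python, not the project's Unity code: it guesses an eye position from a point cloud with a crude heuristic (a fixed drop below the top of the head), then computes where the camera rig would need to sit so that the headset's tracked position lands on that eye position. The heuristic, its default values, the y-up axis convention, and all function names are hypothetical; the source only states that the VR camera is placed at the user's eye position in the point cloud.

```python
def estimate_eye_position(points, eye_drop_m=0.11, head_band_m=0.20):
    """Heuristic eye position for a standing person's point cloud.

    points: list of (x, y, z) tuples in metres with y pointing up.
    Assumption: the eyes sit eye_drop_m below the highest point, centred
    horizontally on the points within head_band_m of the top.
    """
    top_y = max(p[1] for p in points)
    head = [p for p in points if p[1] >= top_y - head_band_m]
    centre_x = sum(p[0] for p in head) / len(head)
    centre_z = sum(p[2] for p in head) / len(head)
    return (centre_x, top_y - eye_drop_m, centre_z)


def rig_position_for(eye_in_cloud, headset_local_position):
    """Rig position such that rig + headset_local_position == eye_in_cloud.

    A tracked headset's pose is driven by the tracking system, so the usual
    way to place it is to move its parent rig by the remaining difference.
    """
    return tuple(e - h for e, h in zip(eye_in_cloud, headset_local_position))


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (0.0, 1.7, 0.0), (0.1, 1.6, 0.0), (-0.1, 1.6, 0.0)]
    eye = estimate_eye_position(cloud)
    print(eye, rig_position_for(eye, (0.0, 1.6, 0.0)))
```

Once the rig is placed this way, the point cloud body and the user's viewpoint share one coordinate frame, which is the condition the text describes for the virtual body to appear close to reality.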

https://www.youtube.com/watch?v=yLVoUVoo8Hg

https://bpro2022.bartlettarchucl.com/architectural-computation-22-1/year1-kai-jiao-1

https://www.youtube.com/watch?v=-1dkKZ1agas&t=40s
