MSI Smart Cobotics Flipbook

Smart cobotics technology lowers the barrier to entry for robotic innovation and wider adoption. The mission of the Smart Cobotics Centre was to unlock productivity, growth, and healthier workplaces by addressing some of the key barriers holding industry back. Seven universities collaborated closely to 1) advance intuitive interaction and collaboration between people and robots, 2) enable robots to use more human-like dexterous manipulation skills, 3) reduce the effort of designing, deploying, verifying and validating human-robot collaborative systems, and 4) explore the wider social, regulatory, and legal challenges holding back the deployment of smart collaborative robotic technology in a manufacturing context.

Each of the posters within this interactive book represents an Industrial Digital Technology (IDT) or Feasibility Project developed within the Smart Cobotics Centre.

The Centre is built on strong relationships with 50+ industrial and research partners across core UK industrial sectors. Our engagement with these industrial partners included the undertaking of Feasibility Projects to address the challenges which impede the adoption of collaborative robotics into their manufacturing processes.

This Flipbook illustrates how the Centre has fulfilled its Mission to unlock the full potential of UK industry to improve productivity and maximise growth by enabling people to achieve high quality results through collaborative automation and robotics.

Intelligent Human-Robot Collaboration for Future Advanced Healthcare Applications

Mohamed Adlan Ait Ameur (adlan.ait-ameur.2020@uni.strath.ac.uk), Dr Erfu Yang, Dr Scott Brady

Design, Manufacturing and Engineering Management (DMEM), University of Strathclyde, Glasgow

National Manufacturing Institute Scotland (NMIS), University of Strathclyde, Glasgow

Research background and Aim

In Healthcare 4.0, Human-Robot Collaboration (HRC) is crucial for improving patient care. Robots assist healthcare professionals in diagnostics and surgery, leading to more personalised healthcare. The research aims to develop a framework to enhance collaboration between caregivers and patients, improving healthcare delivery.

Design

HRC Modalities

This PhD project is funded by a Global Research Scholarship from the University of Strathclyde, the Department of Design, Manufacturing and Engineering Management (DMEM), and the National Manufacturing Institute Scotland (NMIS).

Robots in Healthcare

We aim to construct a comprehensive framework optimising Human-Robot Collaboration (HRC) in healthcare.

This framework will consider caregivers’ and patients’ physiological signals, speech, gestures, body language, and facial expressions.

By integrating these components, the designed interfaces will promote seamless communication, trust-building, and empathy between humans and robots.

Rapid Design, Development, Validation of Smart Human-Robot Collaborative Systems

Professor Anja Maier (PI), Professor Jorn Mehnen (CoI) (jorn.mehnen@strath.ac.uk), Professor Xiu Yan (CoI), Dr Baixiang Zhao (PDRA), Dr Amr Ahmed (PDRA), Mohamad Adlan Ait Ameur (RA)

Design, Manufacturing and Engineering Management (DMEM), University of Strathclyde, Glasgow

DMEM’s Contributions:

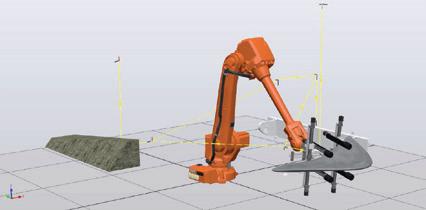

• The design and implementation of a high-fidelity Digital Twin in Nvidia Isaac Sim as a central component for the simulation of challenging industrial environments such as factory shop floors or farms and vineyards.

• The design of a robotic gripper, equipped with tactile sensory systems that can safely grasp soft fruit for quality evaluation, facilitating close interaction between humans and cobots.

• Applied studies of human-robot collaboration to minimise stress and fatigue of people.

• The development and validation of a design for human-robot collaborative systems based on realistic simulation of human-involved manufacturing or drone-based inspection processes.

Advancing smart manufacturing to enhance UK industry productivity, quality, and adaptability through widespread adoption of smart collaborative robotics technology, achieving at least a 35% increase in productivity, near zero-defects in quality, and near zero changeover and re-configuration times.

Human-robot collaboration battery pick and place experiment.

Photorealistic drone flying simulation showing the third person view (left) and the perspective as seen by the drone (right).

Farm simulation for human-robot collaborative harvesting, with the farm and the robot perspective in the farm field.

Gripper models for grasping different object geometries

Configuration of UR10e (left), smart Robotiq gripper 2F-85 (middle), and grasping a soft fruit (right).

Autonomously Picking Food from Bins: A Case Study & Test Bed

• Pick and place food items from bins onto kitchenware on a tray for people.

• The system can autonomously navigate a fixed complex environment & delicately pick up a single target from a bin containing multiple objects (clutter).

1) RGB-D camera on wrist: Object detection via YOLOv5.

2) Grasp pose generation through the Fast Graspability Evaluation (FGE) algorithm using a depth map of clutter.

3) Visual servo to grasp pose via computation of the position and orientation of the target.

4) Motion planning for collision avoidance with MoveIt. Virtual scene in RViz.

Wrist F/T sensor verifies grasps for task planner to sequence picking.
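Step 3 above can be illustrated with a proportional, position-based servo loop. This is only a minimal sketch under stated assumptions, not the project's controller: the names `servo_step` and `servo_to`, the gain, and the per-tick motion limit are hypothetical, orientation is left out for brevity, and a real system would re-estimate the target from the camera on every tick.

```python
import numpy as np

def servo_step(current, target, gain=0.5, max_step=0.02):
    """Return the next end-effector position (metres), one servo tick closer to target.

    Hypothetical helper: the step is proportional to the remaining error and
    clipped to max_step so the arm never moves far in a single tick.
    """
    current = np.asarray(current, dtype=float)
    error = np.asarray(target, dtype=float) - current
    step = gain * error
    norm = np.linalg.norm(step)
    if norm > max_step:
        step *= max_step / norm  # limit the per-tick motion
    return current + step

def servo_to(current, target, tol=1e-3, max_ticks=200):
    """Repeat servo_step until within tol of target; returns (position, ticks used)."""
    pos = np.asarray(current, dtype=float)
    target = np.asarray(target, dtype=float)
    for tick in range(max_ticks):
        if np.linalg.norm(target - pos) < tol:
            return pos, tick
        pos = servo_step(pos, target)
    return pos, max_ticks
```

Clipping the step keeps the approach gentle near delicate items, at the cost of a slower first phase when the gripper starts far from the grasp pose.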

“The intersection of bin picking & organic items brings a flurry of difficult-to-predict behaviors – which may require robots to have a softer touch.”

Project Webpage: Loong Yi Lee

Tactile Tool Manipulation: A feasibility study in paste spreading

• Vision-based tactile sensors such as TacTip can be trained to measure the pose and forces on a tool like a spreading spatula.

• Force control enables delicate spreading of pastes on bread or scraping unknown surfaces.
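The force-control idea in the bullet above can be sketched as a proportional controller that adjusts tool height until a target normal force is reached. This is an illustration under assumptions, not the project's control policy: the linear-spring contact model, the stiffness value, the gain, and the function names are all hypothetical, and in the real system the force estimate would come from the tactile sensor rather than from a model.

```python
def contact_force(z, surface_z=0.0, stiffness=2000.0):
    """Assumed linear-spring contact: force (N) grows with penetration depth (m)."""
    return max(0.0, stiffness * (surface_z - z))

def regulate_force(z0, target_force, gain=1e-4, steps=200):
    """Proportional force control on tool height z (metres).

    Too little force lowers the tool; too much force raises it.
    Returns the final height and the force at that height.
    """
    z = z0
    for _ in range(steps):
        error = target_force - contact_force(z)
        z -= gain * error  # positive error (force too low) moves the tool down
    return z, contact_force(z)
```

With these assumed values the product of gain and stiffness is 0.2, so each step removes a fifth of the remaining force error once the tool is in contact; a stiffer surface would need a smaller gain to stay stable.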

TacTip tactile images from different contact conditions

Example of tactile tool “servoing”, following an unknown surface.

Tactile sensing and deep learning enable higher dexterity in tool manipulation. This project develops a system that delicately spreads pastes on bread, handling uncertainties in materials and contact interactions. The work can be expanded to other tools.

Outcomes

Modular tactile tool holder & robot control policy.

Study in pastes and spreading interaction for spreading path planning.

Demonstrator for spreading different pastes on bread.

Contact (@bristol.ac.uk): Ly.lee, Silvia.Terrile, Bowen.Deng, Nathan.Lepora, Jonathan.Rossiter

TacTip

Gaussian Density Network

Distributed Multi-Camera Networks for Markerless Body Tracking in Complex Human-Robot Workspaces

Problem Statement

Traditional human-robot collaboration (HRC) systems often struggle with accurately and reliably tracking human movements, resulting in inefficiencies, safety risks, and limited interaction between humans and robots. Current technologies, such as marker-based systems and IMU-based body tracking, are not practical for HRC as they require special suits to be worn.

Markerless tracking would be much less intrusive and safer when deployed as a passive observer continuously tracking body movements. The challenge is integrating feeds from distributed cameras to reconstruct complex workspaces.
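One common way to combine detections of the same body joint from several calibrated cameras is linear (DLT) triangulation. The sketch below shows that general technique only; it is not claimed to be the method used in this project, and it assumes each camera's 3x4 projection matrix is already known from calibration. The function names are illustrative.

```python
import numpy as np

def triangulate(projections, pixels):
    """Linear (DLT) triangulation of one 3D point from two or more cameras.

    projections: list of 3x4 projection matrices (assumed known from calibration).
    pixels: list of (u, v) detections of the same joint, one per camera.
    """
    rows = []
    for P, (u, v) in zip(projections, pixels):
        P = np.asarray(P, dtype=float)
        rows.append(u * P[2] - P[0])  # from u = (P0 . X) / (P2 . X)
        rows.append(v * P[2] - P[1])  # from v = (P1 . X) / (P2 . X)
    # The homogeneous solution is the right singular vector with the
    # smallest singular value.
    _, _, vt = np.linalg.svd(np.vstack(rows))
    X = vt[-1]
    return X[:3] / X[3]

def project(P, point):
    """Project a 3D point to pixel coordinates with projection matrix P."""
    x = np.asarray(P, dtype=float) @ np.append(point, 1.0)
    return x[:2] / x[2]
```

Because every extra camera simply adds two rows to the system, a joint that is occluded in one view can still be reconstructed from the remaining views.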

Key Results

• Enhanced Accuracy: Improved detection and tracking of human movements.

• Real-Time Performance: Reduced latency through decentralisation.

• Robustness in Dynamic Environments: Maintains performance in various lighting conditions and occlusions.

• User-Friendly GUI: Simplified configuration and control for easy accessibility.

End Users

• Manufacturing and Industrial Automation

• Healthcare and Rehabilitation

• Logistics and Warehousing

• Construction and Maintenance

• Entertainment and Service Industries

• Research and Development

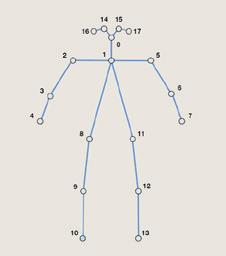

Body Tracking

Overlays Skeleton: Projects a skeleton image onto the person, representing joints and basic facial structure.

Adjustable Detail: Skeleton detail can vary from 18 to 38 points, impacting speed and processing power.

Expected Impacts

• 3D Motion Capture: Accurate reconstruction of body part positions.

• Redundancy: Reduced error likelihood due to sensor malfunctions or environmental conditions.

• Occlusion Handling: Continuous tracking despite occlusions.

• Healthcare and Assistive Technologies: Monitor patient movements for diagnostics and elderly care.

• Physical Therapy: Assess recovery and tailor rehabilitation programs.

• Ergonomic Assessments: Identify harmful postures and movements for safer workspaces.

The system is a significant advancement in accurately capturing human movement across larger and more complex spaces, including those with static and dynamic occlusions. It can provide comprehensive coverage and detailed analysis, making it suitable for various applications beyond HRC, e.g. understanding and analysing human behaviour in workspaces.

Contacts: Dr Masoud S. Bahraini – Senior Research Fellow, The University of Birmingham s.m.sotoodehbahraini@bham.ac.uk

Emotional Recognition System for Safe and Responsive Human-Robot Collaboration

INTRODUCTION: Advancements in robotics necessitate systems capable of understanding and responding to human emotions in real time. This project aims to develop a model for real-time emotion recognition using multimodal data to enhance the safety and responsiveness of human-robot collaboration in industrial settings.

AIM: Develop an integrated framework for robotic systems that enables adaptive and safe interactions by identifying human emotional states via facial and physiological data.

OBJECTIVES

Integrate sensors to capture facial and physiological data

Combine multimodal data streams using sensor fusion.

Integrate facial expression data with

IMPACT

Provide data-driven insights to improve safety in HRC.

Minimise downtime by detecting operator fatigue for timely breaks.

Increase productivity and reduce errors by optimising robot responses to human emotions.

Provide open-access machine learning models and datasets.

Groundwork for future innovations in emotionally aware robotics.

The ISCF smart collaborative industrial robotics project faces barriers of responsiveness, acceptance, skill, capability, and cost. PA1 focuses on effective, natural, and safe human-robot collaboration.

Develop algorithms to detect emotions using ML algorithms on facial data and physiological data (EEG, ECG, EDA)

Validate adaptive robot control system based on emotional feedback.
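One simple way to combine the facial and physiological streams named in the objectives is late fusion: each modality produces its own class probabilities and these are averaged with a weight. The sketch below is an assumption-laden illustration, not the project's fusion method; the label set, the weight, and the function names are hypothetical.

```python
import numpy as np

# Illustrative label set only; the project's actual classes are not stated.
EMOTIONS = ["neutral", "happy", "stressed", "fatigued"]

def late_fusion(face_probs, physio_probs, face_weight=0.6):
    """Weighted average of per-modality class probabilities, renormalised to sum to 1."""
    face = np.asarray(face_probs, dtype=float)
    physio = np.asarray(physio_probs, dtype=float)
    fused = face_weight * face + (1.0 - face_weight) * physio
    return fused / fused.sum()

def fused_label(face_probs, physio_probs, face_weight=0.6):
    """Return the most probable emotion after fusion."""
    fused = late_fusion(face_probs, physio_probs, face_weight)
    return EMOTIONS[int(np.argmax(fused))]
```

Late fusion keeps the two classifiers independent, so one modality can drop out (for example, an occluded face) without retraining the other.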

Facial Emotion Recognition System for Safe and Responsive Human-Robot Collaboration

INTRODUCTION: Advancements in robotics necessitate systems capable of understanding and responding to human emotions in real time. This project aims to develop a model for real-time emotion recognition using facial data to enhance the safety and responsiveness of human-robot collaboration in industrial settings.

AIM: Develop an integrated framework for robotic systems that enables adaptive and safe interactions by identifying human emotional states via facial expression.

OBJECTIVES

Achieve high accuracy in real-time facial emotion recognition

Prepare & process dataset (benchmarked datasets – CK+, JAFFE, FER2013 & AffectNet).

Develop algorithms to detect emotions using ML algorithms on facial data

Integrate emotion recognition capabilities with robot control systems.

Validate adaptive robot control system based on emotional feedback

Datasets & Algorithms

Datasets: CK+, JAFFE, FER2013, AffectNet

Algorithms: Haar cascade, CNN, MTCNN, SVM, and AlexNet

Facial Recognition

Smart cameras to capture facial expressions. Employ MTCNNs for feature extraction and emotion classification.

Adaptive Robot Control

Control framework to dynamically adjust robot behaviour based on recognised emotions.

Feedback loops to continuously adapt to the human's emotional state.
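A feedback loop of this kind can be sketched as a smoothed stress estimate that scales the robot's speed. This is a minimal illustration, not the project's control framework: the stress score in [0, 1], the smoothing factor, the minimum speed scale, and all function names are assumptions.

```python
def smooth(previous, observation, alpha=0.3):
    """Exponential moving average, so one misclassified frame does not jerk the robot."""
    return (1.0 - alpha) * previous + alpha * observation

def speed_scale(stress, min_scale=0.2):
    """Map a stress estimate in [0, 1] to a speed multiplier in [min_scale, 1]."""
    stress = min(1.0, max(0.0, stress))
    return 1.0 - (1.0 - min_scale) * stress

def run_feedback_loop(stress_observations, alpha=0.3):
    """Return the speed multiplier commanded after each new stress observation."""
    estimate, commands = 0.0, []
    for obs in stress_observations:
        estimate = smooth(estimate, obs, alpha)
        commands.append(speed_scale(estimate))
    return commands
```

A smaller `alpha` makes the robot slower to react but less sensitive to classifier noise, which is the trade-off any such loop has to tune.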

IMPACT

Improved emotion recognition accuracy from 40% to 78.1%

Improves safety in collaborative environments

Improved efficiency in manufacturing operations

Enhances the robot's response to human emotional states

Intelligent, Adaptive Flexible Gripper for Handling Large Heavy Irregular Shaped Objects

Motivation

Handling large, heavy, and irregularly shaped objects remains a significant challenge in many manufacturing industries. Current gripping systems often lack the adaptability and precision required to handle diverse object geometries, weights, and materials safely and efficiently. As industries push towards higher automation and flexibility, the demand for intelligent, adaptive systems that can securely handle these objects grows. Therefore, this project seeks to address this critical gap by developing an advanced gripping mechanism that integrates smart sensing, control systems, and adaptive algorithms to manage irregularly shaped objects safely.

Robust Failsafe Systems

Create algorithms that adjust grip dynamically based on object properties

+ Real-time adaptability

- High complexity

Challenge: Ensuring stability and precision across varied object shapes

Sensor Fusion Integration:

Combine force, vision, and proximity sensors for real-time object monitoring.

+ Improved precision

- Data complexity

Adaptive Hardware Design

Develop a modular, flexible gripper capable of adapting to different object sizes, shapes, and weights.

+ High adaptability

- Increased complexity

Goals

Develop an intelligent, adaptive gripper system with failsafe mechanisms for handling large, heavy, and irregular objects by:

Integrating real-time sensor fusion for precise and responsive object manipulation

Developing adaptive algorithms to adjust grip dynamically based on object properties

Implementing robust failsafe systems to ensure operational safety and prevent system malfunctions

Methods

Developing Adaptive Algorithms

Automatic system disengagement upon detecting overloads or anomalies.

+ Ensures safety

Challenge: Ensuring durability while maintaining flexibility across diverse object types.

Balancing mechanical complexity with reliable performance and cost-effectiveness.

- Potential delays

Challenge: Balancing sensitivity and minimizing unnecessary system shutdowns.
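The trade-off between sensitivity and unnecessary shutdowns can be illustrated with a debounced overload monitor that trips only after several consecutive over-limit samples. This is a sketch under assumptions, not the project's failsafe: the class name, the force limit, and the `patience` parameter are hypothetical.

```python
class OverloadMonitor:
    """Trips only after `patience` consecutive force samples exceed `limit`."""

    def __init__(self, limit, patience=3):
        self.limit = limit        # force threshold in newtons (assumed unit)
        self.patience = patience  # consecutive over-limit samples needed to trip
        self.count = 0
        self.tripped = False

    def update(self, force):
        """Feed one force sample; returns True once the failsafe should act."""
        if self.tripped:
            return True  # latched until the monitor is replaced or reset by an operator
        # A single sample below the limit resets the streak.
        self.count = self.count + 1 if force > self.limit else 0
        if self.count >= self.patience:
            self.tripped = True
        return self.tripped
```

A larger `patience` filters out short spikes and so reduces false shutdowns, but delays the response to a genuine overload by the same number of samples.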

Sensor Fusion Integration

Challenge: Ensuring real-time integration without lag or data inconsistency

Managing synchronization across multiple sensors for accurate control.

Impact

•Increases efficiency in handling large, heavy, and irregular objects.

•Enhances automation flexibility in diverse manufacturing environments.

•Reduces operational downtime with reliable failsafe mechanisms.

•Lowers manual labor costs through advanced adaptive systems.

Bahadir Tarhan, Fahad Khan, Daniel Oakley, James Fowler, Dr Seemal Asif s.asif@cranfield.ac.uk

Robot-Assisted Lifting and Assembling of Unwieldy and Heavy Objects

Problem Statement

- Repetitive handling of heavy objects (>10 kg) is common in many industrial tasks, often leading to worker injuries, fatigue, and reduced productivity.

- Current solutions, such as manual handling or fully automated systems, have limitations in flexibility, safety, and efficiency.

- The challenge lies in developing a system where humans and robots can effectively collaborate to manipulate heavy and unwieldy objects, leveraging their respective strengths to enhance safety, precision, and efficiency.

Key Results

• Enhanced Safety: Reducing the risk of injuries by ensuring safe interactions between humans and robots.

• Increased Efficiency: Reducing the physical strain on workers and speeding up processes.

• Improved Precision: More accurate and adaptable object manipulation through human-robot collaboration.

End Users

• Manufacturing Workers: Assists workers in lifting and positioning heavy components.

• Construction Workers: Aids in handling heavy materials and tools.

• Logistics and Warehouse Staff: Streamlines movement and organization of heavy packages.

This human-robot co-manipulation system offers a transformative solution for industries that require the handling of heavy objects. By combining human dexterity and decision-making with robotic strength and precision, it improves safety, efficiency, and precision in various applications.

Barriers and limitations

1. Interpreting Position and Orientation Changes: Difficulty in understanding intended steering inputs.

2. Recognising and Interpreting Changing Contact Conditions: Current admittance and impedance controllers rely on single force/torque sensors, limiting effectiveness in scenarios with multiple contacts from operators and the environment, e.g. during assembly.

3. Safety: Challenges in distinguishing intended from unintended inputs.
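For readers unfamiliar with the admittance controllers mentioned in item 2, the basic single-axis form turns a measured operator force F into a commanded velocity v through a virtual mass M and damping D, with M·dv/dt + D·v = F. The sketch below integrates that textbook equation with an Euler step; the parameter values and function names are assumptions, and it does not represent this project's controller.

```python
def admittance_step(velocity, force, mass=10.0, damping=40.0, dt=0.01):
    """One Euler step of M*dv/dt + D*v = F for a single axis.

    velocity in m/s, force in N, mass in kg, damping in N*s/m, dt in s.
    """
    accel = (force - damping * velocity) / mass
    return velocity + accel * dt

def simulate(forces, **params):
    """Return the commanded velocity after each force sample, starting from rest."""
    v, out = 0.0, []
    for f in forces:
        v = admittance_step(v, f, **params)
        out.append(v)
    return out
```

With a single force/torque reading, a push from the operator and a contact force from the environment are indistinguishable in F, which is the limitation item 2 describes.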

Expected Impacts

• Service Enhancement: Improved health and safety and more intuitive support for workers in handling heavy objects.

• Production Enhancement: Improved performance and more consistent quality resulting from robot support of human operations.

Contacts: Dr Masoud S. Bahraini – Senior Research Fellow, The University of Birmingham s.m.sotoodehbahraini@bham.ac.uk

Made Smarter Innovation - Research Centre for Smart, Collaborative Industrial Robotics

Prediction and analyses of thermal-induced distortion

PA3: Rapid design, validation and deployment of smart HRC systems

WHAT IT IS: A modular CAE simulation toolkit for optimising robotic multi-stage assembly systems. It accurately models fixturing, process sequences, and heat application, considering real non-rigid, compliant parts with thermo-mechanical errors. This toolkit predicts thermal-induced distortion based on welding parameters, geometry, weld sequence, stitch length, cooling time, clamping, and fixturing. It's validated for various component configurations and sizes, serving prototyping needs effectively.

Framework: Thermo-mechanical model development for

WHAT IT CAN DO:

1) Scenario generation to develop process window and support system optimisation

2) To optimise fixturing and clamping system design

3) Reduce dimensional variation and improve the structural integrity of the components

KEY MODELLING CAPABILITIES:

1) Physics-based model predicting interaction between process parameters and the materials’ behaviour

2) Simulate the effect of heat introduced during welding on the mechanical behaviour of the clamps and the nonlinear distribution of heat, which leads to mechanical distortion

3) Simulate single station assembly system involving placing, clamping, fastening and releasing operations (PCFR cycle)

4) To support fixturing and clamping for multi-stage assembly

5) Support prototype development

6) Support overlaying defect information for AR/VR systems (Digital Twins) to enable cobotic-human repair/rework

WMG Team Contact:

Dr Anand Mohan (anand.mohan@warwick.ac.uk)

Duc Tam Hong Nguyen (duc.t.h.nguyen@warwick.ac.uk)

Dr Dan Dai (dan.dai.1@warwick.ac.uk)

Dr Pasquale Franciosa (p.franciosa@warwick.ac.uk)

Professor Darek Ceglarek (d.j.ceglarek@warwick.ac.uk)

AI-Enabled Digital Twin System for Optimized Manufacturing Processes

PA3: Rapid design, validation and deployment of smart HRC systems

An AI-enabled digital twin system leverages real-time data and advanced AI analytics to simulate, monitor, and optimize manufacturing processes, enhancing efficiency, reducing downtime, and enabling smarter decision-making.

Key Challenges:

• Data silos

• Data privacy/security

• Limited data availability

• Difficulty in labelling

• Costly CAD modelling

• Real-time defect control

• Complex 3D dynamic scene construction

User-defined upload of local data (signal, video…)

• Comprehensive Data/AI Model Integration

3D Object Model, Quality Analysis Report, Performance Optimization Suggestions, Predictive Maintenance Alerts, …

AI-Enabled Digital Twin:

• Real-Time Quality Control

• Advanced Root Cause Analysis

• 3D Virtual Environment Construction

• Predictive Maintenance

• Optimized Performance and Efficiency

M1: Deep Generative Network for Enhanced Welding Time Series Generation and Classification

M2: Transfer Learning for Welding Video Defect Detection

M3: Enhanced Welding Defect Recognition Using Unsupervised Continuous Learning

M4: Advanced Video-Based 3D Generation Techniques for Precision Shape Inspection

AI-enabled Corrective Actions for Cobotic-Human Repair/Rework

WMG Team Contact:

Industrial Benefits: Improving efficiency, quality, and decision-making while reducing costs and downtime through real-time monitoring and predictive insights (Aerospace, Shipbuilding, Agriculture, etc.)

Defect Recognition with Unlabelled

Dr Dan Dai (dan.dai.1@warwick.ac.uk)

Duc Nguyen (duc.t.h.nguyen@warwick.ac.uk)

Dr Pasquale Franciosa (p.franciosa@warwick.ac.uk)

Professor Darek Ceglarek (d.j.ceglarek@warwick.ac.uk)

3D Model Accuracy with CAD (mm)

Car Door (Video by M3T Drone)

In-situ Visual Inspection Conducted by Humans, Cobots and Drones in Factory Environments

PA3: Rapid design, validation and deployment of smart HRC systems

WHAT IT IS: In manufacturing, inspection ensures high quality of products (towards a zero-defect strategy). Standard inspection approaches such as (I) manual inspection, (II) fixed sensor inspection systems, and (III) industrial robot inspection systems are spatially constrained for (A) distributed manufacturing stations and (B) large assembly structures. Drone technology provides an emerging solution for scenarios (A) and (B) by delivering versatile sensor(s) as payloads on ‘flying robots.’ Suitable sensors can be flexibly delivered or positioned at any required locations during the inspection process.

Benefits of Drone Implementation for Quality Inspection in Factories

Duc Nguyen (duc.t.h.nguyen@warwick.ac.uk)

Dr Anand Mohan (anand.mohan@warwick.ac.uk)

Dr Dan Dai (dan.dai.1@warwick.ac.uk)

Dr Pasquale Franciosa (p.franciosa@warwick.ac.uk)

Professor Darek Ceglarek (d.j.ceglarek@warwick.ac.uk)

Situational Awareness for Advanced Human-Robot Collaboration using Multi Modal Sensors on Soldering Cell

INTRODUCTION: Advancements in robotics and automation require both emotional and situational awareness. This project leverages multimodal sensory data to enhance collaboration, using a soldering cell setup as a test bed for advanced human-robot interaction.

AIM: Integrate emotional and situational awareness using multi-modal sensing and develop a framework for improved efficiency and safety in human-robot collaboration, using a soldering cell as a test bed.

OBJECTIVES

Develop a system combining facial emotion, physiological, skeleton and gaze monitoring using multimodal sensory data.

Implement a GUI for user task selection and monitoring, developed using Qt framework.

Data collection of facial expression, physiological signals, eye gaze and pose estimation data (skeleton & ArUco) using a multimodal sensor fusion.

Develop a collaborative robot system capable of executing dynamic tasks.

Develop a control framework to dynamically adjust robot behaviour based on recognised emotions and situational context.

Measure the trust, stress, fatigue and comfort levels of an operator.

Facial Recognition:

• Use smart cameras to capture facial expressions.

• Employ MTCNNs for feature extraction and emotion classification.

Physiological Monitoring:

• Use Empatica E4 wristbands for HR, EDA, and skin conductance data.

• Use Emotiv Flex for EEG brain activity monitoring.

• Employ RGB-D cameras for pose estimation of robots, humans, and objects.

• Use ZED stereo cameras to track human body joints (18 points) for pose estimation.

• Use Tobii Pro Glasses 3 for eye gaze data.

Sensor Fusion and Data Integration:

• Capture data from physiological sensors & pose estimation sensors.

• Integrate emotional expression data with physiological signals and pose estimation data using a multi-layer perceptron.

• Develop a control framework to dynamically adjust robot behaviour based on recognised emotions and situational context.

• Implement a GUI for user task selection and monitoring, developed using Qt framework.
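The feature-level fusion described in the bullets above can be sketched as concatenating per-modality feature vectors and passing them through a small multi-layer perceptron. This is an illustration only: the layer sizes, feature dimensions, and function names are assumptions, and the weights in any example call would be random or untrained rather than the project's model.

```python
import numpy as np

def fuse(face, physio, pose):
    """Feature-level fusion: concatenate per-modality feature vectors into one input."""
    return np.concatenate([face, physio, pose])

def mlp_forward(features, weights, biases):
    """Forward pass of a small MLP with ReLU hidden layers and a softmax output.

    weights and biases are lists with one entry per layer; how they are
    trained is outside the scope of this sketch.
    """
    x = np.asarray(features, dtype=float)
    for W, b in zip(weights[:-1], biases[:-1]):
        x = np.maximum(0.0, W @ x + b)  # hidden layer with ReLU
    logits = weights[-1] @ x + biases[-1]
    exp = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return exp / exp.sum()
```

For example, hypothetical feature vectors of length 7 (face), 4 (physiological) and 6 (pose) give a 17-dimensional input, so the first weight matrix must have 17 columns.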

Combine emotional recognition with situational awareness models using machine learning and sensor fusion techniques.

Integrate the emotion recognition system with situational awareness data (pose estimation & gaze) with robotic system for real-time robot cell control and test the collaborative soldering cell setup.

Conduct experiments to collect data for further development of ML algorithms and the evaluation of system performance and user experience.

IMPACT

Open access to datasets and resources of physiological, situational and facial data

Real-time situational and emotional awareness enables robots to react to unexpected situations

Improves the robot's response to human emotional states, ensuring safer and more natural interactions

Situational Awareness:

Adaptive Robot Control:

Fahad Khan, Jamie Rice, Daniel Oakley, Dr Seemal Asif

An Ethical Framework for Human-Robot Collaboration in Manufacturing

WHY an Ethical Framework?

• Collaboration between humans and robots in mixed teams on the shop floor holds the potential to unlock many opportunities, but also unforeseen challenges that could affect worker wellbeing and limit successful outcomes.

• Designing future H-R collaborations raises a series of technical, social, and human factors concerns, which require careful consideration.

• Potential ethical issues and responsibilities that result from H-R collaboration need to be identified to inform ethical frameworks and these can then serve as the foundation for the responsible design and innovation of future manufacturing processes, practices, and artefacts.

BUILDING the Ethical Framework

• N=32 European philosophers, ethicists, and legal experts were engaged in a one-year-long research study.

• Three iterative rounds of data collection and analysis were employed through a qualitative Delphi approach.

BENEFITS for research and practice

In manufacturing contexts:

• Ethical design guidance for anybody involved in H-R systems to help prioritise human safety, wellbeing and dignity in environments where future collaborative robots will operate

In academic contexts:

• Integration of human-centric considerations into university curricula to apply ethical decision-making approaches to robot design requirements

KEY RESULTS: an overview of the proposed structure

• Ethical principles pertain to the direct work dynamic between human workers and collaborative robots on the shop floor, while, at organisational level, they refer to the governance of processes, procedures, and practices that organisations will implement within H-R collaboration.

• The framework suggests a hierarchical relationship between humans and collaborative robots, with robots serving as ‘aids’ to human workers. Critically, human workers will continue to play a crucial role in overseeing and performing tasks in varying situations and scenarios occurring during their interaction with collaborative robots.

Join us in shaping a responsible future integrating collaborative robots into safe and effective H-R/mixed teams.

Scan the QR code to share your valuable insights.

This framework outlines ethical considerations and implications for responsibly shaping the future of human-centric industry towards organisational (and societal) governance. The framework identifies a set of principles and design goals targeting the different layers of an organisation, with H-R collaboration scenarios on the shop floor and the ethical governing role and practices of the management.

Smart Cobotics Centre Contact Information

If there is a poster in which you are particularly interested and you would like to find out more, please get in touch with the relevant Academic lead.

The University will be indicated on the poster itself, and the individual contacts are then as follows:

University of Birmingham (Project Lead)

Professor Niels Lohse - n.lohse@bham.ac.uk

Cranfield University

Professor Phil Webb - p.f.webb@cranfield.ac.uk

Dr. Seemal Asif - s.asif@cranfield.ac.uk

Dr. Sarah Fletcher - sarah.fletcher@cranfield.ac.uk

Loughborough University

Professor Peter Kinnell - p.kinnell@lboro.ac.uk

University of Bristol

Professor Jonathan Rossiter - jonathan.rossiter@bristol.ac.uk

Professor Nathan Lepora - n.lepora@bristol.ac.uk

University of Dundee

Professor Angela Daly - adaly001@dundee.ac.uk

University of Strathclyde

Professor Jorn Mehnen - jorn.mehnen@strath.ac.uk

Professor Anja Maier - anja.maier@strath.ac.uk

University of Warwick

Professor Dariusz Ceglarek - d.j.ceglarek@warwick.ac.uk