
Solutions

The main solution that many people suggest is to build ML models on unbiased data. In the example of racist models in the medical field, this would mean building a model on data that does not rely solely on the healthcare spending history of white and black Americans, but instead includes other factors that do not misrepresent black Americans. Because less money is spent on black patients than on white patients with the same level of need, a model that uses spending as a stand-in for need underestimates how sick black patients are. This matters because many ML models make predictions for a new, unlabeled data point based on the labeled data points that are most similar to it. If a model is built on data that is inaccurate or unrepresentative of a population, its predictions inherit those flaws, and we see these kinds of problems.

For issues involving the labeling of LGBTQ+ individuals, building a model on unbiased data is likely not enough. Groups that are constantly evolving will continue to be hurt by algorithms that are based on previously seen data. While these predictions may be accurate at times, they may not hold up in the future, so it is likely that AI will never succeed at classifying LGBTQ+ individuals.

Overall, it is difficult to determine the exact solution to the issues raised by ML and AI. While these technologies bring countless benefits to our daily lives, we must be aware of the social issues that come with them, as well as why those issues exist in the first place. From there, we can work towards rectifying these problems and preventing them from recurring.

Sources


Grant, Crystal. “Algorithms Are Making Decisions About Health Care, Which May Only Worsen Medical Racism.” American Civil Liberties Union, 24 Feb 2023, www.aclu.org/news/privacy-technology/algorithms-in-health-care-may-worsen-medical-racism

Keyes, Os. “The Misgendering Machines: Trans/HCI Implications of Automatic Gender Recognition.” ironholds.org/resources/papers/agr_paper.pdf

Obermeyer, Ziad, et al. “Dissecting Racial Bias in an Algorithm Used to Manage the Health of Populations.” Science, 25 Oct 2019, www.science.org/doi/10.1126/science.aax2342

Rangnekar, Priti. “Decoding the ‘Encoding’ of Ableism in Technology and Artificial Intelligence.” Medium, 27 July 2021, medium.com/@scienceconnectorg/decoding-the-encoding-of-ableism-in-technology-and-artificial-intelligence-a4f33bcccc85

Wareham, Jamie. “Why Artificial Intelligence Is Set up to Fail LGBTQ People.” Forbes, 26 Mar 2021, www.forbes.com/sites/jamiewareham/2021/03/21/why-artificial-intelligence-will-always-fail-lgbtq-people/?sh=3e81a6d2301e

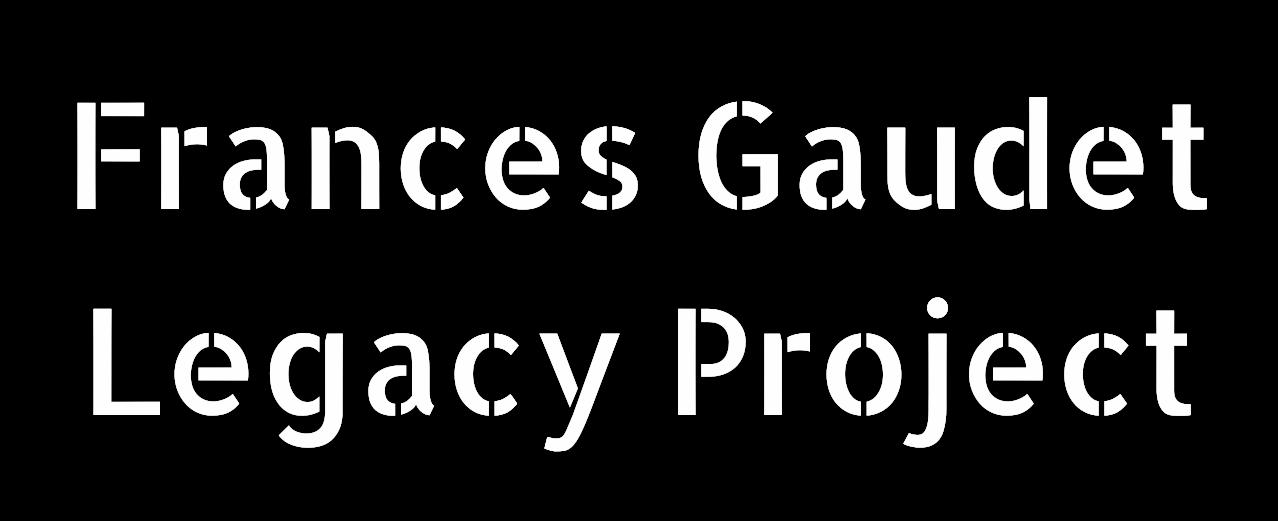

This paid internship provides students with tangible experience in technology and feminist leadership while working on the digital scholarship projects of Tulane faculty in the humanities, social sciences, and sciences. Technical areas include: digital media, digital archiving, web design, database development, coding, design thinking, UX/UI, and audio/visual editing.

A resource used to document the life of Frances Joseph-Gaudet, an American activist, educator, and social worker who focused on causes such as prison reform. The goal of this project is to guide and inspire similar work in the present day.

Project Partners: Nell Bolton and Rosanne Adderley, Ph.D.

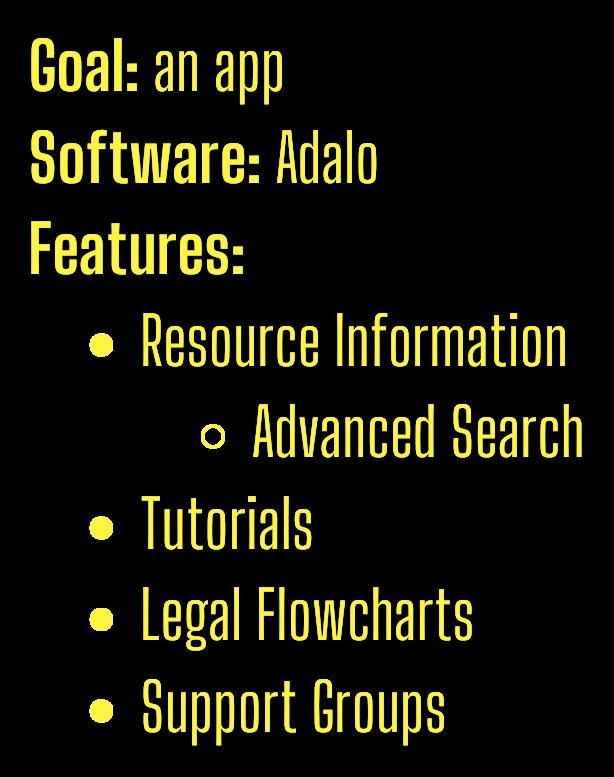

Required: Website and Digital Archive

Software: Omeka

Contributions:

Building the website infrastructure

Website maintenance

Digital archiving of resources

Goals: A website and an active social media presence

Software: WordPress, LinkedIn, Twitter, Canva

Project: An online collection whose purpose is to maintain the legacy of Congo Square, an area in the southern corner of Armstrong Park where both enslaved and free people of color would gather throughout the 19th century.

Congo Square Interview


Project Promotion, Social Media Maintenance, Website Development