
NEWS

ArcelorMittal Launches 5G Steel Initiative

By David Miller

Senior Technical Writer

As the need to remotely monitor and manage large numbers of connected assets increases, wireless connectivity in industry continues to grow in importance. However, many current wireless and cellular networks lack the data transmission rates, reliability, and low latency required for mission-critical industrial applications. In the case of technologies such as centrally controlled autonomous mobile robots, even small amounts of latency cannot be tolerated.

Faster data transmission rates also allow large quantities of data to be collected from intelligent field devices, and they make it possible to share live audio-visual remote assistance with frontline workers through augmented reality (AR) headsets. 5G, the successor to 4G LTE (Long-Term Evolution) cellular technology, may help to surmount these issues. Earlier attempts to use other wireless technologies, such as Wi-Fi, Bluetooth, and Zigbee, for industrial use cases have often been hindered by reliability and security concerns. They have also been limited in the number of devices they support and in their communication range. By contrast, 5G offers high data transfer rates, low latency, and broad reach, while promising to be both secure and nearly universally supported.

Still, new technologies like 5G often face adoption headwinds. Many companies are slow to adopt them until real-world applications have been demonstrated. As a result, jointly funded pilot projects and collaborations between private and public sector stakeholders often prove vital to accelerating technological innovation.

One such endeavor is a recent partnership between steel provider ArcelorMittal France, telecommunications company Orange Business Services, and 5G supplier Ericsson. Supported by the French government in connection with its 2021 French Recovery Plan, this 5G Steel initiative will address industrial use cases of 5G adapted to ArcelorMittal France’s specific challenges and business requirements. Ericsson will provide 4G and 5G network technologies and Orange Business Services will contribute its expertise in integration and support.

Specifically, the 5G network will provide:
• Extensive coverage for all of ArcelorMittal France’s industrial sites, both indoors and outdoors.
• High data throughput for all connected devices.
• Low latency to support the use of autonomous vehicles and other remote-controlled machinery.
• Network slicing to accommodate each business’s unique processes.
• Data security to ensure sensitive data remains protected.

The 5G Steel initiative will begin at ArcelorMittal’s Dunkirk plant, followed by Mardyck in Hauts-de-France and Florange in the Grand Est area. Two key applications the initiative seeks to bolster are remote maintenance and autonomous vehicle control. In the case of remote maintenance, workers equipped with tablets will more easily be able to validate and record information directly to the company's information systems. In addition, AR will allow them to access documents, images, or live experts remotely. Regarding its use of autonomous vehicles, ArcelorMittal will be launching the first autonomous train on its private 5G network with the goal of putting it into service in 2023. This train will be used to transport products between various stages of manufacturing at the company’s largest sites.


OPC Foundation Updates Field Level Communication Specs and Launches Cloud Library

By David Miller

Senior Technical Writer

Interoperability is the challenge at the heart of Industry 4.0. The Industrial Internet of Things (IIoT) is built on networking previously isolated systems together, so standardized communication methods are vital to industry’s digital transformation.

With its wide adoption by technology vendors, the OPC Foundation's Unified Architecture (OPC UA) has proven to be a core element of industrial interoperability. OPC UA is a machine-to-machine communication architecture compatible with multiple operating systems, programming languages, and platforms. It eases the integration of disparate systems and equipment, often from various vendors, by using semantics to provide a common structural framework for various types of data exchange.

At the 2021 OPC General Assembly Meeting, developments pertaining to these goals were discussed, including the release of new specifications for OPC's Field Level Communication (FLC) initiative, as well as the announcement of a globally available OPC UA Cloud Library.

The purpose of the FLC initiative is to develop a general model of OPC UA architecture for use by technology suppliers in their automated field devices. The first set of OPC UA Field eXchange (OPC UA FX) specifications was announced at the SPS event in 2019. The newly released second set of specifications consists of four parts and focuses on enabling communication between automation components using both OPC UA client/server and publish/subscribe methodologies.

Those four parts:
• Provide an overview and introduce the basic concepts of using OPC UA for field level communications.
• Specify the base information model and communication concepts to meet the various use cases and requirements of factory and process automation.
• Describe networking services, such as topology discovery and time synchronization.
• Describe the data structures for sharing information required for offline engineering using descriptors and descriptor packages.

The OPC Foundation created a multivendor interoperability demo that uses components from 20 different manufacturers to illustrate the functionality of the new specifications. Focusing on horizontal forms of communication, such as controller-to-controller, the demo shows how process data is exchanged via UDP/IP using wired Ethernet or TSN (time-sensitive networking), as well as in combination with a 5G wireless connection.

Cloud library

The OPC UA cloud library, developed in partnership with the Clean Energy and Smart Manufacturing Innovation Institute (CESMII), was also announced at the event. Drawing on contributions from all major cloud vendors, the cloud library contains 65 OPC UA information models.

According to the OPC Foundation, this library allows applications to access OPC UA information models more easily, and it increases overall ease of use for end users by making the entire collection of OPC UA companion specifications more widely available. Assets at the operational technology (OT) level can already access these information models from other devices, but security concerns have limited the degree to which the models can be delivered via the cloud. The OPC UA cloud library is intended to overcome this limitation.

Erich Barnstedt, chief architect for standards and consortia at Microsoft and chair of the OPC UA cloud library working group, explained how this new cloud library helps address security concerns. "The OPC UA cloud library is the missing link that makes OPC UA information models available in the cloud on a global scale without requiring a connection to physical machines," he said. "It enables OPC UA information models that are used as blueprints for industrial digital twins to be looked up and matched against time-series machine telemetry data provided by cloud-based analytics software, which is a common requirement in IIoT projects."
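The article does not describe the cloud library's own service interface, so what follows is only a hypothetical sketch of the matching step Barnstedt mentions. Given the field names found in a stream of cloud telemetry, it finds the information model whose variables overlap them best, using a simple Jaccard score. The model identifiers, variable sets, and scoring rule are all invented for illustration. Real matching would draw on the models' full node structure and semantics, not just their names.

```python
from __future__ import annotations

# Hypothetical stand-in for models retrieved from a cloud library:
# model identifier -> names of the variables that model defines.
MODEL_LIBRARY: dict[str, set[str]] = {
    "example.org/InjectionMoldingMachine": {"CycleTime", "MoldTemperature", "InjectionPressure"},
    "example.org/Pump": {"FlowRate", "DischargePressure", "MotorSpeed"},
}


def overlap(model_vars: set[str], telemetry_fields: set[str]) -> float:
    """Jaccard similarity between a model's variables and the observed telemetry fields."""
    union = model_vars | telemetry_fields
    return len(model_vars & telemetry_fields) / len(union) if union else 0.0


def match_model(telemetry_fields: set[str], library: dict[str, set[str]]) -> tuple[str, float]:
    """Return the library model that best explains the telemetry, with its score."""
    best = max(library, key=lambda m: overlap(library[m], telemetry_fields))
    return best, overlap(library[best], telemetry_fields)


if __name__ == "__main__":
    fields = {"FlowRate", "DischargePressure", "MotorSpeed", "Vibration"}
    print(match_model(fields, MODEL_LIBRARY))
```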

PACK EXPO East 2022 Nears Record Size with Numerous Highlights

By Sean Riley

Senior Director, Media and Industry Communications, PMMI

With just a few weeks remaining before the event, current exhibitor numbers position PACK EXPO East (March 21-23, 2022, Pennsylvania Convention Center, Philadelphia) as one of the best ways to see technology in action from more than 400 exhibiting companies. This year’s event is expected to show at least a 9% increase in the number of exhibitors—including 21 new exhibitors signing up in the past two months—according to show producer PMMI, The Association for Packaging and Processing Technologies.

This show is already larger than the last two PACK EXPO East events, held in 2018 and in 2020 (just before the pandemic). Registration is also keeping pace with the 2020 show.

“Things have changed dramatically since 2020 and our industry has been working to keep up during this pandemic,” says Laura Thompson, vice president, trade shows, PMMI. “There is a need to meet face-to-face to find solutions to keep up with demand and our numbers indicate that the industry is excited to come together and see the latest technologies first-hand.”

The biennial event gives professionals from the Northeast corridor, a region rich in consumer packaged goods (CPG) and life sciences companies, a convenient and rewarding opportunity to explore the latest packaging and processing technology in person, connect with suppliers, and find applicable solutions in a changing marketplace.

After a highly successful PACK EXPO Las Vegas 2021 led the way in bringing the industry together again, PMMI has proven that PACK EXPO events provide unmatched networking and educational opportunities.

Attendees and exhibitors alike will have access to several must-see highlights at this year’s show, including:
• Free, supplier-driven 30-minute seminars on the Innovation Stage to learn from experts.
• The Forum at PACK EXPO will feature free, interactive 45-minute open sessions followed by small group discussions and Q&A sessions on the latest industry trends.
• Networking high points include the PACK EXPO East Opening Reception, the Young Professionals Reception hosted by PMMI’s Emerging Leaders Network, and The Packaging & Processing Women’s Leadership Network networking reception.
• The Workforce Development Pavilion will feature PMMI Partner Schools presenting mechatronics, packaging and processing offerings, and provide an opportunity to meet students interested in careers in the industry.
• The Association Partner Pavilion features associations dedicated to advancing the packaging and processing industry, including the Reusable Packaging Association, Institute of Packaging Professionals, AIM (automatic identification), The Association for Contract Packagers and Manufacturers, Flexible Packaging Association, Adhesive and Sealant Council, Organization for Machine Automation & Control, and the Foundation For Supply Chain Solutions, with more to come.

Located within a drive or train trip of most of the East Coast, PACK EXPO East will offer a wider range of state-of-the-art packaging and processing solutions than any other event. For more information and to register, visit packexpoeast.com. To learn about the PACK Ready Health and Safety plan and Philadelphia’s recent vaccination mandate, visit packexpoeast.com/packready.

Manufacturers on a journey to lower carbon emissions and optimize energy use need to measure consumption from the supply chain to the line, the cell, and the product being produced.

By Stephanie Neil, Senior Editor

In 2020, Schneider Electric’s Lexington, Ky., facility, a 60-year-old brownfield site where safety switches and circuit breakers are produced, was recognized as a “Lighthouse Factory” by the World Economic Forum (WEF). Together with McKinsey & Company, the WEF created the Global Lighthouse Network initiative in 2018. “Lighthouse Factories” are manufacturing sites that effectively use Industry 4.0 technologies to drive digital transformation, and they are recognized as beacons for others to follow.

The Lexington factory’s digital energy management strategy combines IIoT (Industrial Internet of Things) connectivity with power meters and predictive analytics. This captures energy consumption at a finer granularity and helps optimize energy costs. As a result, the facility has been able to reduce energy use by 26%, net CO2 by 30%, and water use by 20%. Based on that progress, the WEF selected the Schneider Electric factory in 2021 as one of only three facilities in the world to be named a “Sustainability Lighthouse.”

“It’s a testament to the work we’ve been doing in Lexington, and a reflection of the broader Schneider mission,” said Luke Durcan, director of Schneider Electric’s EcoStruxure platform. “We have been on the decarbonization sustainability path for many years.”

Decarbonization is part of the company’s commitment to Sustainable Development Goals (SDGs), a universal call to action by the United Nations which outlines a collection of 17 interlinked global goals designed to be a "blueprint to achieve a better and more sustainable future for all." Schneider Electric is engaged in efforts to accomplish all 17 SDGs, as well as five sustainability megatrends related to climate, the circular economy, ethics, health and equity, and development.

For its core business, Schneider is transitioning to more electric, digital, decarbonized, and decentralized energy. The company notes that it is committed to carbon neutrality at its sites and in its ecosystem by 2030. “As a manufacturer of things, this is aggressive,” Durcan said.

As aggressive as Schneider Electric’s actions around sustainability are, more companies are following a similar path. According to a Capgemini Research Institute report on sustainable operations, of the 960 executives surveyed, 91% aim to achieve 100% renewable electricity and 87% plan to be carbon neutral by 2040.

Achieving this, however, takes intent and technology. “We have a decarbonization pathway which is a four-stage, 12-point plan to understand the baseline and set targets,” Durcan said. And Schneider Electric uses some of its own technology to understand the actual base level in the plant. This technology includes its PowerLogic power meters, the EcoStruxure Power Monitoring Expert energy visualization and analysis tools that collect metering and machine data at the control layer, and Aveva Insight—a cloud platform that uses artificial intelligence to create actionable information to improve asset reliability and operational performance from enterprise systems, MES (manufacturing execution systems), time series, and non-sequential energy data.

Schneider Electric says its manufacturing customers are using the same technologies for their own sustainability initiatives. “To say there’s been an explosion in the last 12 months is an understatement,” Durcan said. “There’s been a seismic shift in people’s decarbonization efforts.”

But this move to energy efficiency and carbon neutrality does not come naturally, and manufacturers need a lot of help when it comes to getting started.

Letting off steam

A common question is: “Where do I start?” And a common answer is, “Start by doing an assessment on where the greatest gains can come from with the least amount of effort,” said Nathan Hedrick, national product manager at Endress+Hauser. “Typically, I find that some of the biggest energy consumers are compressed air and steam where small changes can lead to big savings.”

Indeed, industry experts agree that steam, compressed air, and electromechanical systems are the biggest culprits when it comes to wasted energy. Fortunately, relatively easy fixes exist for these issues as long as you can measure energy use in these areas first.

Endress+Hauser makes field instruments to measure and monitor the flow, level, pressure, and temperature of liquids. “These instruments are the eyes and ears into the process and are important to sustainability efforts because they can generate baselines that can be monitored, measured, and reported on to see trends,” said Mark Thomas, Endress+Hauser’s industry marketing group manager.

Sean Silvey, product application specialist with Fluke Corp., a supplier of electrical test and measurement tools, agrees that an energy assessment is a good first step. “But in energy, there isn’t a body of research for an industrial plant manager to use to set baselines for what ‘reasonable’ energy usage looks like in a manufacturing facility. So how do you assess what portion of current energy usage is reasonable and what is wasteful? Or, of that wasteful portion, what provides high enough ROI (return on investment) to address? The ROI under discussion here is the cost per kilowatt hour as charged by the utility.”

At the Schneider Electric Lexington smart factory, engineers and plant managers use Aveva Insight software to view and optimize energy consumption across the factory floor.

Fluke’s power quality and energy analyzers troubleshoot power quality issues and discover the cost of wasted energy. Multiple parameters are measured simultaneously and displayed in formats that quickly describe overall power quality health. And understanding energy waste points is key. “Every system and operation has the potential to be a point of waste that can be mitigated or remedied,” Silvey said. “The goal is to map the energy use of specific equipment and processes to look at where energy is being wasted to quantify the waste and prioritize improvements or replacements based on life of the equipment, as well as which modifications can deliver the best return on investment.”
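Silvey's approach of quantifying waste and then prioritizing fixes by return can be made concrete with a minimal Python sketch. It assumes that each candidate fix comes with an estimated annual kWh of waste it would eliminate, an up-front cost, and a single flat utility rate per kWh. The `Candidate` type, its fields, and every figure are hypothetical. Real tariffs with demand charges or time-of-use pricing would need a richer model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Candidate:
    """A hypothetical energy-saving project identified during an assessment."""
    name: str
    wasted_kwh_per_year: float  # waste the fix is expected to eliminate
    cost: float                 # up-front cost of the fix, in dollars


def annual_savings(c: Candidate, rate_per_kwh: float) -> float:
    """Dollar value of the eliminated waste at a flat utility rate ($/kWh)."""
    return c.wasted_kwh_per_year * rate_per_kwh


def simple_payback_years(c: Candidate, rate_per_kwh: float) -> float:
    """Years until savings repay the up-front cost (ignores financing and inflation)."""
    savings = annual_savings(c, rate_per_kwh)
    return float("inf") if savings == 0 else c.cost / savings


def prioritize(candidates: list[Candidate], rate_per_kwh: float) -> list[Candidate]:
    """Order candidates so that the quickest payback comes first."""
    return sorted(candidates, key=lambda c: simple_payback_years(c, rate_per_kwh))


if __name__ == "__main__":
    rate = 0.10  # hypothetical flat utility rate, $/kWh
    projects = [
        Candidate("Fix compressed-air leaks", wasted_kwh_per_year=50_000, cost=2_000),
        Candidate("Replace oversized pump motor", wasted_kwh_per_year=30_000, cost=12_000),
        Candidate("Insulate steam lines", wasted_kwh_per_year=20_000, cost=3_000),
    ]
    for p in prioritize(projects, rate):
        print(f"{p.name}: ${annual_savings(p, rate):,.0f}/yr saved, "
              f"payback {simple_payback_years(p, rate):.1f} yr")
```

Simple payback is only one possible ranking criterion. Silvey also mentions weighing the remaining life of the equipment, which this sketch does not model.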

Silvey’s “energy efficiency checklist” starts with a profile of energy use and then traces the energy consumption to understand energy waste points. He warns that manufacturers should not try to manage every kilowatt consumed by the facility, but instead divide the facility by electrical infrastructure and key systems. “The understanding of basic energy components enables an electrician to set up energy logging equipment to measure overall levels and quality of consumption and then trace when energy is consumed by what. The biggest power savings come from determining when power usage peaks, evaluating overall power consumption compared to utility invoices, and possibly rebalancing loads.”

By power logging each major system and mapping those costs against utility bills to quantify where and when consumption occurs, companies can often realize savings by simple operational and schedule changes, Silvey said.
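The power-logging approach Silvey describes can be sketched as follows. The code assumes a logger that reports average kW for each fixed 15-minute interval for each major system. The interval length, system names, and readings are all hypothetical. The sketch totals kWh per system, finds the interval with the highest combined demand, and reports how much of the utility-billed kWh the logged systems do not account for.

```python
from __future__ import annotations

INTERVAL_HOURS = 0.25  # assumed 15-minute logging interval


def energy_kwh(kw_readings: list[float]) -> float:
    """Energy (kWh) from a series of average-kW readings, one per interval."""
    return sum(kw * INTERVAL_HOURS for kw in kw_readings)


def peak_interval(logs: dict[str, list[float]]) -> tuple[int, float]:
    """Index and value of the interval with the highest combined kW demand.

    Assumes every system's log covers the same intervals in the same order.
    """
    combined = [sum(values) for values in zip(*logs.values())]
    idx = max(range(len(combined)), key=combined.__getitem__)
    return idx, combined[idx]


def unaccounted_kwh(logs: dict[str, list[float]], billed_kwh: float) -> float:
    """Billed energy not explained by the logged systems."""
    logged = sum(energy_kwh(values) for values in logs.values())
    return billed_kwh - logged


if __name__ == "__main__":
    logs = {  # hypothetical average kW per 15-minute interval
        "compressed_air": [40.0, 42.0, 41.0, 60.0],
        "line_1": [80.0, 85.0, 90.0, 120.0],
        "hvac": [30.0, 30.0, 35.0, 35.0],
    }
    for system, values in logs.items():
        print(f"{system}: {energy_kwh(values):.2f} kWh")
    idx, kw = peak_interval(logs)
    print(f"Peak combined demand: {kw:.0f} kW in interval {idx}")
    print(f"Not accounted for by logged systems: {unaccounted_kwh(logs, 200.0):.2f} kWh")
```

Some utility tariffs include a demand charge. Under such a tariff, moving a load out of the peak interval lowers the bill without changing total kWh, which is one way the simple schedule changes mentioned above can pay off.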

Measuring for more output

There are other ways to measure energy use beyond checking the utility bill, and it starts with collecting the data from an energy meter connected to an equipment component—a conveyor or a pump, for example—and putting it into a historian as a way to maintain the history of the equipment to look for optimizations. “As you monitor it over time, using a dashboard, you then have a data set and can use analytics to get efficiency,” said Gimmi Filice, senior product manager at GE Digital.
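Here is a minimal sketch of the historian-plus-analytics idea Filice describes. It assumes historian records of (timestamp, kWh consumed, units produced) for one asset. From these it computes energy intensity in kWh per unit, uses the median intensity as a baseline, and flags periods that drift well above it. The record layout and the 20% threshold are hypothetical choices for illustration. They are not features of GE Digital's software.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import median


@dataclass
class HistorianRecord:
    """One hypothetical historian sample for a single asset (e.g., a pump)."""
    timestamp: str
    kwh: float
    units: int


def intensity(rec: HistorianRecord) -> float:
    """Energy intensity in kWh per unit produced.

    Periods that drew power but produced nothing count as infinitely intensive.
    """
    if rec.units == 0:
        return float("inf") if rec.kwh > 0 else 0.0
    return rec.kwh / rec.units


def flag_drift(records: list[HistorianRecord], threshold: float = 0.20) -> list[str]:
    """Timestamps whose kWh/unit exceeds the median baseline by more than `threshold`."""
    baseline = median(intensity(r) for r in records if r.units > 0)
    return [r.timestamp for r in records if intensity(r) > baseline * (1 + threshold)]


if __name__ == "__main__":
    history = [
        HistorianRecord("2022-01-03", 500.0, 1000),
        HistorianRecord("2022-01-04", 510.0, 1000),
        HistorianRecord("2022-01-05", 495.0, 990),
        HistorianRecord("2022-01-06", 700.0, 1000),
        HistorianRecord("2022-01-07", 120.0, 0),
    ]
    print("Periods to investigate:", flag_drift(history))
```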

In addition to historians, MES software can be tied to facility management software to determine when different lines are idle and can be powered down. A large automotive manufacturer using GE Digital’s Proficy MES software saved 10-15% on energy usage just by looking at what lines were not running during certain hours in the evening, dimming the lights, and shutting off high energy equipment, Filice said.
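The automotive example comes down to cross-referencing production state with metered energy. The sketch below assumes hourly MES run-status flags and hourly kWh readings for a single line; both data layouts are hypothetical. It totals the energy consumed during hours when the line was not running. That idle consumption is what dimming lights or shutting off equipment would target.

```python
from __future__ import annotations


def idle_energy_kwh(running: list[bool], kwh: list[float]) -> float:
    """kWh drawn during hours when the MES reports the line as not running."""
    if len(running) != len(kwh):
        raise ValueError("status and meter series must cover the same hours")
    return sum(energy for is_running, energy in zip(running, kwh) if not is_running)


def idle_hours(running: list[bool]) -> list[int]:
    """Hour indices in which the line was idle and is a candidate for power-down."""
    return [hour for hour, is_running in enumerate(running) if not is_running]


if __name__ == "__main__":
    # Hypothetical six-hour evening window for one line
    running = [True, True, False, False, False, True]
    kwh = [95.0, 97.0, 40.0, 38.0, 41.0, 96.0]
    print("Idle hours:", idle_hours(running))
    print("Energy drawn while idle:", idle_energy_kwh(running, kwh), "kWh")
```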

Other customers are taking digital transformation tools to another level, using artificial intelligence and digital twin technology to predict how equipment will perform. According to GE Digital, a steel, chemicals, and cement conglomerate in Southwest China turned to Proficy CSense (analytics software that improves asset performance using a process digital twin) to optimize control of its energy-intensive cement cooler process. Analyzing the data yielded new insights into the variation in the cement cooler’s performance, and a digital twin model enabled the company to predict how changes to process inputs would affect that performance.

Fluke’s three-phase power quality analyzers capture hundreds of power quality parameters to ensure critical power quality events are never missed.
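The following is a greatly simplified illustration of the predictive idea. It is not a description of how Proficy CSense works. The sketch fits an ordinary least-squares line to historical data relating one process input to one cooler performance measure, then uses that line to predict the effect of a proposed input change. The variable names and data are hypothetical. A real process digital twin would model many interacting inputs, not one.

```python
from __future__ import annotations

from statistics import mean


def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares slope and intercept for y ~ slope * x + intercept."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("input variable never changes; slope is undefined")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def predict(model: tuple[float, float], x_new: float) -> float:
    """Predicted output for a proposed input setting."""
    slope, intercept = model
    return slope * x_new + intercept


if __name__ == "__main__":
    # Hypothetical history: a process input vs. a cooler performance measure
    feed_rate = [100.0, 110.0, 120.0, 130.0]
    outlet_temp = [150.0, 160.0, 170.0, 180.0]
    model = fit_line(feed_rate, outlet_temp)
    print("Predicted outlet temperature at feed rate 125:", predict(model, 125.0))
```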

Though looking at a portion of data may help tweak a machine or a line, it does not provide the holistic view needed to truly optimize energy use. “I’ve often found that our own instrumentation has a lot more unlocked potential in the form of unused data that users do not fully leverage to their benefit,” said Endress+Hauser’s Hedrick.

That is where new kinds of manufacturing data-capturing technologies come into play. One example is Sight Machine, a platform that converts unstructured plant data into a standardized data foundation.

“A manufacturer should know how many units it’s producing and how much energy it’s using, but they need to dial down to see where it’s being used to get to the level of not just plant or line efficiency, but asset efficiency,” said Matt Smith, senior vice president, digital transformation for Sight Machine.

Sight Machine features tools, called “cookbooks,” that contain recipes for products. Using this information, Sight Machine can determine the most efficient way to make these products using statistical weighing. “Cookbooks look through all of the historical data and, based on conditions—be it humidity, raw materials, etc.—give you your best set of running conditions using as little water as possible, for example,” says Smith.
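Sight Machine's cookbooks are proprietary, and the article does not describe the statistics behind them. The following is therefore only a hypothetical sketch of the general idea. It looks through historical runs made under conditions similar to today's: humidity within a tolerance, and the same raw-material lot. From those runs it picks the settings that used the least water per unit. The field names, tolerance, and data are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Run:
    """One hypothetical historical production run."""
    humidity_pct: float
    material: str
    settings: dict[str, float]
    water_l_per_unit: float


def best_settings(history: list[Run], humidity_pct: float, material: str,
                  tolerance: float = 5.0) -> dict[str, float] | None:
    """Settings from the most water-efficient run under comparable conditions."""
    similar = [r for r in history
               if r.material == material and abs(r.humidity_pct - humidity_pct) <= tolerance]
    if not similar:
        return None  # no comparable history; fall back to standard settings
    return min(similar, key=lambda r: r.water_l_per_unit).settings


if __name__ == "__main__":
    history = [
        Run(40.0, "lot_A", {"temp_c": 80.0}, 2.0),
        Run(42.0, "lot_A", {"temp_c": 85.0}, 1.6),
        Run(60.0, "lot_A", {"temp_c": 90.0}, 1.2),  # much more humid: not comparable
        Run(41.0, "lot_B", {"temp_c": 75.0}, 1.0),  # different raw material
    ]
    print(best_settings(history, humidity_pct=43.0, material="lot_A"))
```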

Schneider Electric’s Durcan agrees that the ability to track resource and energy use, not just at the plant level but at the product level, is important. The Lexington plant, for example, is a high-volume, low-mix facility, and when you are producing products the same way every day, managing the resources is straightforward. But that’s rarely the case. “You need to associate the resources with the actual product flowing through the facility. We have to think not just about what a plant does on a week-to-week or year-to-year basis, but how to optimize them,” he said.
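Durcan's point about associating resources with the product flowing through the facility can be illustrated with a simple allocation. The sketch assumes metered kWh per machine and a production log of how many machine-hours each product used on each machine. Both structures and all names ("press", "breaker", and so on) are hypothetical. Each machine's energy is split across products in proportion to machine-hours, then divided by the units produced. This is only one of several possible allocation rules.

```python
from __future__ import annotations

from collections import defaultdict


def allocate_energy(machine_kwh: dict[str, float],
                    machine_hours: dict[str, dict[str, float]]) -> dict[str, float]:
    """Split each machine's metered kWh across products in proportion to machine-hours.

    Energy from machines with no logged production hours is left unallocated
    (it is idle load, which deserves separate attention).
    """
    per_product: dict[str, float] = defaultdict(float)
    for machine, kwh in machine_kwh.items():
        usage = machine_hours.get(machine, {})
        total_hours = sum(usage.values())
        if total_hours == 0:
            continue
        for product, hours in usage.items():
            per_product[product] += kwh * hours / total_hours
    return dict(per_product)


def kwh_per_unit(allocated: dict[str, float], units: dict[str, int]) -> dict[str, float]:
    """Energy intensity per product; products with no units produced are skipped."""
    return {p: kwh / units[p] for p, kwh in allocated.items() if units.get(p)}


if __name__ == "__main__":
    machine_kwh = {"press": 300.0, "oven": 500.0}  # hypothetical metered energy
    machine_hours = {
        "press": {"breaker": 6.0, "switch": 4.0},
        "oven": {"breaker": 5.0, "switch": 5.0},
    }
    allocated = allocate_energy(machine_kwh, machine_hours)
    print(allocated)
    print(kwh_per_unit(allocated, {"breaker": 1000, "switch": 500}))
```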

That means broadening the scope beyond what is happening in the plant to include the extended supply chain. It can be difficult to collect all that data, but it is an important aspect of measuring energy use. “In the past we’d go to the ERP system to see what standard and variable costs were and see where it is cheaper to produce product, which was all based on cost. Now, we are genuinely looking at our supply chain based on carbon and resources, and that is a different proposition,” Durcan said. “Cost is not a reflection of carbon and energy produced.”

There are numerous aspects to understanding energy use, and there is no one approach that works for all cases. To address this, many manufacturing technology suppliers, like Schneider Electric, have formed consulting groups to help companies establish baselines and measurements. But to be successful, there needs to be “strategic corporate intent,” Durcan said. “Everyone is at a different point on the journey; make sure you are strategic about what the objectives are first.”

WHY THE MQTT PROTOCOL IS SO POPULAR

This first installment in a four-part series on key industrial network technologies explains how an ultra-lightweight data transfer protocol became a widely used data gathering tool for Internet of Things applications.

By James R. Koelsch Contributing Writer

The message queuing telemetry transport (MQTT) protocol is a key contender for the most favored method of data transfer. The main reason is that MQTT’s open-source design and lightweight footprint make it well suited to connecting disparate devices to supervisory control and data acquisition (SCADA) systems as well as other industrial networks.

As Omer Qadri, product marketing manager for edge and HMI products at Aveva, explains, MQTT uses a publish/subscribe architecture that reduces bandwidth utilization by 95% compared to traditional polled communications and client/server communications using the hypertext transfer protocol (HTTP). “An HTTP header is typically around 8,000 bytes,” he says, “but the MQTT protocol uses only two bytes and a few lines of code.” This is key in an era where millions of IIoT (Industrial Internet of Things) devices have been deployed, many with low internal memory and processing power.
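The "two bytes" figure comes from MQTT's fixed header. Every MQTT control packet starts with one type/flags byte followed by a variable-length "remaining length" field, so the smallest packets are only 2 bytes long. The sketch below hand-encodes a QoS 0 PUBLISH packet according to the MQTT 3.1.1 framing rules, purely to show where the bytes go. Production code would use a client library, such as those from the Eclipse Paho project, rather than building packets by hand. The topic and payload are hypothetical.

```python
from __future__ import annotations


def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 bits per byte, high bit set if more bytes follow."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte, n = n % 128, n // 128
        if n > 0:
            byte |= 0x80  # continuation bit
        out.append(byte)
        if n == 0:
            return bytes(out)


def publish_qos0(topic: str, payload: bytes) -> bytes:
    """Build an MQTT 3.1.1 PUBLISH packet with QoS 0 (so no packet identifier)."""
    topic_bytes = topic.encode("utf-8")
    variable_header = len(topic_bytes).to_bytes(2, "big") + topic_bytes
    body = variable_header + payload
    # 0x30 = packet type 3 (PUBLISH) with DUP, QoS, and RETAIN flags all zero
    fixed_header = bytes([0x30]) + encode_remaining_length(len(body))
    return fixed_header + body


PINGREQ = bytes([0xC0, 0x00])  # a complete MQTT control packet: just the 2-byte fixed header

if __name__ == "__main__":
    packet = publish_qos0("plant1/line2/temp", b"21.5")
    print(len(packet), "bytes on the wire for a 4-byte payload")
    print(packet.hex(" "))
```

In this example most of the 21 bytes of overhead are the topic string. The fixed header itself is only 2 bytes, and TCP/IP adds its own headers underneath.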

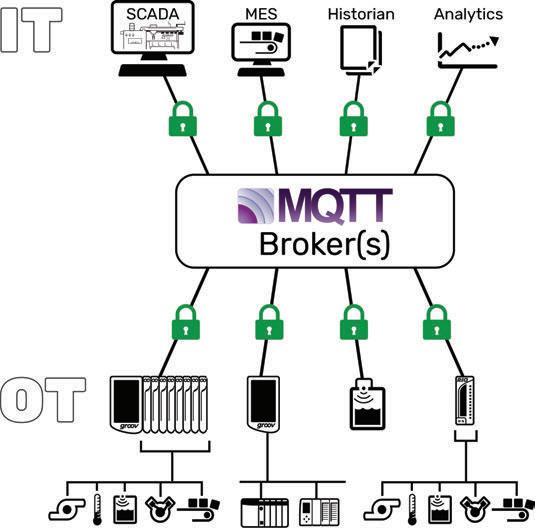

Besides having a much smaller footprint on the network, MQTT’s publish/subscribe architecture is also flatter than the architecture used by traditional industrial automation protocols, such as Modbus, EtherNet/IP, and Profinet. “This [MQTT] architecture replaces the traditional automation pyramid,” observes Garrett Schmidt, senior product manager for communication interfaces at Phoenix Contact USA.

Whereas clients in a client/server architecture communicate directly with an endpoint or server, publishers and subscribers (message senders and recipients, respectively) never talk directly to each other in a publish/subscribe architecture. Instead, they communicate through an intermediary called a broker; the publisher supplies the broker with data and the subscribers consume the data.

“The broker can reside anywhere—on the cloud, on a private server, or just running on a PC somewhere,” says Schmidt. “It filters the incoming messages and distributes them to the appropriate subscribers.”

He adds that this decoupling of publishers and subscribers enhances flexibility in IIoT applications in at least three ways: “First, publishers and subscribers only need to know how to contact the broker, not each other. Second, a broker can store messages for clients that are not online and deliver them when the resource is available. And third, operations do not have to be interrupted when waiting to receive or publish a message to coincide with the asynchronous nature of most client libraries.”
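The decoupling Schmidt describes can be sketched as a toy, in-memory broker. Publishers and subscribers only ever call the broker. The broker matches each message's topic against the subscription filters, using MQTT's `+` single-level and `#` multi-level wildcards. It also queues messages for subscribers that are offline, roughly as a persistent session would. This is an illustrative model only. It has no networking, QoS handling, retained messages, `$`-topic rules, or security, and the class and method names are hypothetical rather than any real broker's API.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Handler = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT-style matching: '+' matches one level, '#' matches the rest (incl. parent)."""
    f_levels, t_levels = topic_filter.split("/"), topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True
        if i >= len(t_levels) or (level != "+" and level != t_levels[i]):
            return False
    return len(f_levels) == len(t_levels)


class ToyBroker:
    def __init__(self) -> None:
        self._subs: dict[str, list[str]] = defaultdict(list)  # client -> topic filters
        self._handlers: dict[str, Handler] = {}                # online clients only
        self._queued: dict[str, list[tuple[str, bytes]]] = defaultdict(list)

    def subscribe(self, client: str, topic_filter: str) -> None:
        self._subs[client].append(topic_filter)

    def connect(self, client: str, handler: Handler) -> None:
        """Bring a client online and deliver anything queued while it was away."""
        self._handlers[client] = handler
        for topic, payload in self._queued.pop(client, []):
            handler(topic, payload)

    def disconnect(self, client: str) -> None:
        self._handlers.pop(client, None)

    def publish(self, topic: str, payload: bytes) -> None:
        """The publisher knows only the broker; the broker finds matching subscribers."""
        for client, filters in self._subs.items():
            if any(topic_matches(f, topic) for f in filters):
                handler = self._handlers.get(client)
                if handler is not None:
                    handler(topic, payload)
                else:
                    self._queued[client].append((topic, payload))


if __name__ == "__main__":
    broker = ToyBroker()
    broker.subscribe("historian", "plant1/+/temp")
    broker.subscribe("dashboard", "plant1/#")
    broker.connect("dashboard", lambda t, p: print("dashboard got", t, p))
    broker.publish("plant1/line2/temp", b"21.5")  # historian is offline: message queued
    broker.connect("historian", lambda t, p: print("historian got", t, p))  # queue delivered
```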

MQTT also has the advantage of being an open-source protocol built upon TCP/IP (transmission control protocol and internet protocol). In essence, MQTT permits users to send TCP/IP messages back and forth, according to Arlen Nipper, a co-creator of MQTT and president and chief technology officer at Cirrus Link Solutions.

Like HTTP, MQTT defines only a transport protocol. It does not encrypt traffic itself; for that it relies on the underlying transport, typically TLS running over TCP/IP. Also like HTTP, MQTT doesn’t define a payload specification. Being payload agnostic offers the flexibility to transfer any payload, including those from legacy systems, but it can complicate the connection of some devices. In these cases, a programmer must translate the data.
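MQTT carries payloads as opaque bytes, so a subscriber has to know the encoding in advance. In the hypothetical example below, a legacy device publishes a packed binary record. The assumed layout is a big-endian 16-bit status word followed by a 32-bit float temperature. The example then shows the translation step a programmer would write to turn that record into self-describing JSON. Without the layout definition, the 6 bytes are meaningless to the receiver.

```python
from __future__ import annotations

import json
import struct

# Hypothetical legacy layout: big-endian uint16 status word, then float32 temperature.
LEGACY_FORMAT = ">Hf"


def encode_legacy(status: int, temp_c: float) -> bytes:
    """What the hypothetical legacy device puts in its MQTT payload."""
    return struct.pack(LEGACY_FORMAT, status, temp_c)


def translate_to_json(payload: bytes) -> str:
    """Translation layer: decode the binary record using the known layout."""
    status, temp_c = struct.unpack(LEGACY_FORMAT, payload)
    return json.dumps({"status": status, "temperature_c": round(temp_c, 2)})


if __name__ == "__main__":
    raw = encode_legacy(status=3, temp_c=21.5)
    print(raw.hex(), "->", translate_to_json(raw))
```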

To eliminate this translation work and streamline implementation, the open-source Sparkplug payload specification was released in 2016. “It marked the first attempt to standardize on an interoperable format for MQTT in industrial applications,” says Josh Eastburn, director of technical marketing at Opto 22.

The MQTT protocol relies on a publish/subscribe architecture, rather than the more traditional poll/response architecture, for gathering data from field devices and tracking the state of the connected devices. At the center of this publish/subscribe architecture is a broker. Connected devices (publishers) send data to the broker, which makes that data available to various subscribers that have permission to consume the data. Source: Opto 22
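Part of what Sparkplug standardizes is the topic namespace. As we understand the Sparkplug B specification, topics follow the pattern `spBv1.0/<group_id>/<message_type>/<edge_node_id>[/<device_id>]`. Node-level message types include NBIRTH and NDATA, device-level types include DBIRTH and DDATA, and payloads are encoded with Google Protocol Buffers. The sketch below only builds and parses topic strings under those assumptions. It does not handle STATE topics or payload encoding, and the group, node, and device names are hypothetical. Check the current Eclipse Sparkplug specification before relying on these details.

```python
from typing import NamedTuple, Optional

NAMESPACE = "spBv1.0"
NODE_TYPES = {"NBIRTH", "NDEATH", "NDATA", "NCMD"}    # edge-node level messages
DEVICE_TYPES = {"DBIRTH", "DDEATH", "DDATA", "DCMD"}  # device level messages


class SparkplugTopic(NamedTuple):
    group_id: str
    message_type: str
    edge_node_id: str
    device_id: Optional[str] = None


def build_topic(t: SparkplugTopic) -> str:
    """Join the fields into a topic, checking that device_id matches the message level."""
    if t.message_type in NODE_TYPES and t.device_id is None:
        parts = [NAMESPACE, t.group_id, t.message_type, t.edge_node_id]
    elif t.message_type in DEVICE_TYPES and t.device_id:
        parts = [NAMESPACE, t.group_id, t.message_type, t.edge_node_id, t.device_id]
    else:
        raise ValueError(f"invalid message type/device_id combination: {t}")
    return "/".join(parts)


def parse_topic(topic: str) -> SparkplugTopic:
    """Split a node- or device-level topic back into its fields (STATE topics not handled)."""
    parts = topic.split("/")
    if parts[0] != NAMESPACE or len(parts) not in (4, 5):
        raise ValueError(f"not a Sparkplug B node/device topic: {topic}")
    t = SparkplugTopic(parts[1], parts[2], parts[3], parts[4] if len(parts) == 5 else None)
    build_topic(t)  # reuse the same validation
    return t


if __name__ == "__main__":
    t = SparkplugTopic("PlantA", "DDATA", "Gateway1", "Press7")
    print(build_topic(t))
    print(parse_topic("spBv1.0/PlantA/NBIRTH/Gateway1"))
```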

In 2018, the Eclipse Foundation sponsored the Tahu Project, which collected reference implementations of Sparkplug. The result has been the emergence of plug-and-play IIoT devices using MQTT.

Nipper says Sparkplug does for IIoT what the hypertext markup language (HTML) did for the Internet of People. Consequently, he is expecting IIoT applications to explode, as the Internet of People did once both HTTP and HTML were defined.

Explosive growth expected

MQTT is already making significant inroads in industrial automation, as well as enjoying widespread use in other applications. Facebook, for example, adopted it as the transport layer for its Messenger app back in 2011. “Literally overnight, 800 million people were using MQTT,” notes Andy Stanford-Clark, MQTT’s other co-creator and distinguished engineer and master inventor at IBM UK.

Since then, other Big Tech companies have followed suit. Amazon’s AWS, Microsoft’s Azure, IBM’s Watson, and Google IoT platforms, for example, all are using MQTT. With such broad uptake, MQTT overtook HTTP in 2018 as the transport protocol of choice for the Internet of Things, reports Stanford-Clark.

Many automation suppliers expect MQTT to eventually dominate the industrial networking space. “We believe MQTT will become the de facto industrial standard in the next 10 years,” predicts Qadri. “It will enjoy widespread adoption as industry replaces legacy Modbus, OPC, and other telemetry protocols that are still predominant in SCADA applications.”

Key milestones

The success of MQTT in the consumer space has obscured some fundamental facts about its origins: the protocol has been around for 23 years and was originally developed for industrial automation, specifically for Phillips 66.

The development of MQTT occurred after AT&T had been broken up and a number of vendors began offering their own SCADA systems to deliver data in real time by satellite. “Every one of those companies had a proprietary transport layer,” recalls Nipper, who, at the time, was with Arcom Control Systems Inc., a company he had co-founded and which is now part of Eurotech.

The one exception was AT&T, which designed its new SCADA offering to run natively on TCP/IP. Phillips 66 had installed one of these systems and asked Nipper for help with increasing the efficiency of real-time data flows between field devices and multiple data consumers. “Polling over a VSAT [very small aperture terminal] is slow,” explains Nipper. “And it was very expensive if you had hundreds of sites, like we did at Phillips 66.” Other constraints included the use of devices reliant on 8-bit embedded microprocessors and 300-baud communications.
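Some quick arithmetic shows why every byte mattered on such links. Assume one bit per symbol, so 300 baud means 300 bits per second, and conventional asynchronous serial framing of 10 bits per byte (a start bit, 8 data bits, and a stop bit). Under those assumptions, a 300-baud line moves only about 30 bytes per second. The sketch compares the time needed to send one reading with a compact header against a verbose one. The 2-byte and 8,000-byte header sizes are taken from Qadri's comparison earlier in this article, and the 10-byte reading is hypothetical.

```python
BITS_PER_BYTE_ON_WIRE = 10  # assumed async serial framing: start + 8 data + stop


def transfer_seconds(num_bytes: int, baud: int = 300) -> float:
    """Time to send num_bytes at `baud` bits per second (assumes 1 bit per symbol)."""
    return num_bytes * BITS_PER_BYTE_ON_WIRE / baud


if __name__ == "__main__":
    reading = 10  # hypothetical reading size in bytes
    for label, header in [("2-byte header", 2), ("8,000-byte header", 8_000)]:
        print(f"{label}: {transfer_seconds(header + reading):.1f} s per message")
```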

Because the SCADA manager at Phillips 66 wanted to replicate the success the IT department had been having with message-oriented middleware (MOM) from IBM, he introduced Nipper to IBM’s Stanford-Clark. In 1999, the pair developed MQTT for MOM-based SCADA.

Despite being an efficient, open-source protocol, MQTT would not gain much momentum for nearly a decade. “It wasn’t until the protocol became available in a royalty-free license that it began to catch on outside of IBM,” explains Eastburn. “In 2010, Mosquitto, the first open-source MQTT broker, was released, proving that MQTT had a life outside of IBM and marking a turning point in its adoption.”

Two other milestones in industry’s adoption of the protocol occurred in 2011. First was the Eclipse Foundation initiating the Paho Project, which collected MQTT clients implemented in various languages. “In 2011, IBM and Eurotech donated MQTT client implementations in C and Java to the foundation, allowing for a complete MQTT system to be built from open-source components,” says Eastburn.

That same year, IBM also began the process of standardizing MQTT with the Organization for the Advancement of Structured Information Standards (OASIS), which ultimately adopted version 3.1.1 as a standard in 2014. Then, in 2016, the International Organization for Standardization (ISO) and the Geneva-based International Electrotechnical Commission (IEC) also approved it as ISO/IEC 20922:2016.

To keep up with advances in related technologies, OASIS released version 5 of MQTT in March 2019. This version allows users to do new things with MQTT via the cloud, large distributed infrastructures, and clusters of multiple brokers. “We were careful not to let too many things creep into it, as we have to stick to the founding principles of keeping the protocol easy to understand and not very chatty on the wire,” says Stanford-Clark. ISO is currently considering adoption of version 5 as well.

Arlen Nipper, MQTT co-creator, president and CTO at Cirrus Link Solutions

Potential application concerns

Despite the success of MQTT and its publish/subscribe architecture, the protocol is not optimal for every application, according to Kenneth Tran, founder and CEO of Koidra Inc., a supplier of artificial intelligence-driven IoT technologies. “We find the pub/sub model is often not the best solution for higher-level applications, in part because they must be configured to consider asynchronous data availability,” he says. “In a factory, it’s typical to have many sensors connected to a controller, server, or sensor hub in the field.”
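To make Tran’s point about asynchronous data availability concrete, here is a minimal sketch. `TinyBroker` is a hypothetical in-process stand-in for an MQTT broker (not a real MQTT library), and the topic names and values are invented. Because each sensor publishes on its own schedule, the subscribing application has to cache the latest values and check whether everything it needs has arrived before it can compute anything.

```python
from typing import Callable, Dict, List

Callback = Callable[[str, float], None]

# Hypothetical in-process stand-in for an MQTT broker: it routes each
# published message to every callback subscribed to that exact topic.
class TinyBroker:
    def __init__(self) -> None:
        self._subs: Dict[str, List[Callback]] = {}

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subs.setdefault(topic, []).append(callback)

    def publish(self, topic: str, value: float) -> None:
        for callback in self._subs.get(topic, []):
            callback(topic, value)


class LatestValueCache:
    """Higher-level application that must cope with values arriving at any time."""

    def __init__(self, topics: List[str]) -> None:
        self.topics = topics
        self.latest: Dict[str, float] = {}

    def on_message(self, topic: str, value: float) -> None:
        self.latest[topic] = value

    def ready(self) -> bool:
        # A calculation that needs every sensor can only run once all have reported.
        return all(t in self.latest for t in self.topics)


broker = TinyBroker()
topics = ["line1/temp", "line1/pressure"]
app = LatestValueCache(topics)
for t in topics:
    broker.subscribe(t, app.on_message)

broker.publish("line1/temp", 71.5)
print(app.ready())  # False: pressure has not been published yet
broker.publish("line1/pressure", 2.3)
print(app.ready())  # True
```

This bookkeeping is the extra configuration Tran describes; a system that simply pulls every reading on a fixed cycle does not need it.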

In the IoT systems that Koidra offers, an on-premises IoT hub aggregates data pulled from a factory’s sensors via smaller, local sensor hubs. “These IoT hubs perform lightweight data cleansing, processing, and compression—and then push the resulting information to the cloud,” explains Tran. In this case, “because there is only one consumer, the central cloud, the pub/sub framework would be overkill.”
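The cleanse, process, and compress steps Tran describes can be sketched as follows. This is not Koidra’s implementation. The valid sensor range, the sensor names, and the summary fields are assumptions chosen for illustration, and the actual upload to the cloud is left out.

```python
import json
import zlib
from statistics import mean
from typing import Dict, List, Optional

# Hypothetical plausible range for raw readings; real limits depend on the sensor.
VALID_RANGE = (-40.0, 150.0)

def cleanse(readings: List[Optional[float]]) -> List[float]:
    """Drop missing values and readings outside the plausible range."""
    lo, hi = VALID_RANGE
    return [r for r in readings if r is not None and lo <= r <= hi]

def summarize(batch: Dict[str, List[Optional[float]]]) -> Dict[str, dict]:
    """Reduce each sensor's raw samples to a compact summary."""
    summary = {}
    for sensor, readings in batch.items():
        clean = cleanse(readings)
        if clean:  # sensors with no usable data are omitted
            summary[sensor] = {
                "n": len(clean),
                "mean": round(mean(clean), 2),
                "min": min(clean),
                "max": max(clean),
            }
    return summary

def package_for_cloud(batch: Dict[str, List[Optional[float]]]) -> bytes:
    """Serialize and compress the summary for a single upload to one consumer."""
    payload = json.dumps(summarize(batch), sort_keys=True).encode("utf-8")
    return zlib.compress(payload)

batch = {
    "hub-a/temp": [20.1, 20.4, None, 999.0, 20.7],  # one gap, one outlier
    "hub-b/temp": [None, None],                      # no usable data
}
blob = package_for_cloud(batch)
print(json.loads(zlib.decompress(blob)))
```

With a single destination, the hub can push this blob directly; there is no set of independent subscribers for a broker to fan out to.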

Another potential pitfall is getting locked into a particular vendor’s proprietary IoT platform. Despite MQTT’s open-source origins, this can happen when data are sent to the vendor’s cloud services. In these instances, users buy the vendor’s edge device and software and connect them using MQTT.

“But you have no access to the data if it all remains within the vendor’s cloud environment,” explains Travis Cox, co-director of sales engineering at Inductive Automation.

Consequently, Cox urges users to make sure the configuration of these cloud-based systems allows them access to their data. “You can send the data to their cloud,” he says, “but ultimately you should be able to send that data to your systems too.”

A second way to get locked into proprietary technology, despite the use of MQTT, is through the payload format. This can occur because MQTT can transfer payloads in any format, including a vendor’s proprietary binary format.

“If you don’t understand what’s being sent, then it’s going to be very hard for you to take advantage of it,” Cox points out. To avoid this pitfall, insist on either a definition that tells you what the data look like or the open-source Sparkplug payload specification.
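To show why a payload definition matters, here is a minimal sketch of a binary payload that is opaque bytes until you have its layout. The layout itself (field names, order, and types) is entirely hypothetical and does not come from any real vendor. This sketch also does not implement Sparkplug; it only illustrates the general problem Cox describes.

```python
import json
import struct

# Hypothetical vendor payload: without documentation it is just 12 opaque bytes.
raw = struct.pack("<Ifhh", 1700000000, 72.5, 3, 1)
print(raw.hex())

# Hypothetical published definition: little-endian uint32 timestamp,
# float32 temperature (deg C), int16 alarm code, int16 status flag.
PAYLOAD_FORMAT = "<Ifhh"
FIELDS = ("timestamp", "temperature_c", "alarm_code", "status")

def decode(payload: bytes) -> dict:
    """Decode a binary payload using its documented layout."""
    return dict(zip(FIELDS, struct.unpack(PAYLOAD_FORMAT, payload)))

decoded = decode(raw)
# Re-publish in a self-describing form that any consumer can read.
print(json.dumps(decoded))
```

Without `PAYLOAD_FORMAT` and `FIELDS`, a consumer sees only the hex string; with them, the same bytes become named, typed values.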

Cox also recommends building a resilient architecture. “If you were to lose a connection or access to your central broker, then your applications would be blind,” he says. One way he suggests building resiliency against such interruptions is to store data in a local cache so it can be forwarded when the connection is re-established. Another is to run two brokers, so that one can keep working if the other fails.
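Both of Cox’s suggestions, a local cache and a second broker, can be combined in a short store-and-forward sketch. The "send functions" here are hypothetical stand-ins for a real MQTT client’s publish call: each returns `True` on success and `False` when its broker is unreachable. `FakeBroker` exists only to simulate outages.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

Message = Tuple[str, bytes]          # (topic, payload)
SendFn = Callable[[Message], bool]   # hypothetical stand-in for a client publish

class StoreAndForwardPublisher:
    def __init__(self, brokers: List[SendFn], max_cached: int = 10_000) -> None:
        self.brokers = brokers  # primary first, then backup(s)
        # Bounded local cache; when full, the oldest message is dropped.
        self.cache: Deque[Message] = deque(maxlen=max_cached)

    def _try_send(self, msg: Message) -> bool:
        # Fail over: try each broker in order until one accepts the message.
        return any(send(msg) for send in self.brokers)

    def publish(self, topic: str, payload: bytes) -> None:
        self.cache.append((topic, payload))
        self.flush()

    def flush(self) -> None:
        # Forward cached messages in order; stop at the first failure so
        # messages stay queued and in order while every broker is down.
        while self.cache:
            if not self._try_send(self.cache[0]):
                return
            self.cache.popleft()


class FakeBroker:
    """Simulated broker connection that can be switched off."""
    def __init__(self) -> None:
        self.online = True
        self.received: List[Message] = []

    def send(self, msg: Message) -> bool:
        if self.online:
            self.received.append(msg)
        return self.online


primary, backup = FakeBroker(), FakeBroker()
pub = StoreAndForwardPublisher([primary.send, backup.send])
pub.publish("line1/temp", b"71.5")  # delivered to the primary
primary.online = False
pub.publish("line1/temp", b"71.9")  # primary down: fails over to the backup
backup.online = False
pub.publish("line1/temp", b"72.4")  # both down: held in the local cache
print(len(pub.cache))  # 1
primary.online = True
pub.flush()                         # connection restored: cache forwarded
```

In a real deployment, `flush()` would typically be triggered by the client’s reconnect event rather than called by hand.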

Andy Stanford-Clark, MQTT co-creator, master inventor at IBM UK


Wearable Technology Bolsters Worker Safety

PepsiCo’s use of Kinetic’s Reflex wearable device has had a definite impact on both worker safety and company culture.

As the Industrial Internet of Things (IIoT) has become more ubiquitous, intelligent sensors have transformed everything from machine vision systems to conveyor belts into sources of valuable data for upstream analytics. Soon, those sensors could find their way onto workers themselves. Wearable technology is already a fast-growing field that should not be overlooked. Augmented reality (AR) headsets have allowed field service professionals working in dangerous conditions to access hands-free schematics, instruction manuals, and even real-time audio-video feeds for remote assistance from potentially distant subject matter experts. Now, new types of wearables are allowing end users to generate useful data insights as well. What’s more, the collaboration they foster between operational technology (OT) and information technology (IT) professionals is helping to bridge the OT/IT divide.

Food, beverage, and snack company PepsiCo, which recently outfitted its workers with Reflex wearable devices from Kinetic, has seen those benefits clearly. The Reflex device is a belt-mounted wearable sensor that automatically detects when a worker assumes a high-risk posture that may lead to repetitive strain injuries on the job, such as bending, overreaching, or twisting. The device then responds in two ways. First, the worker receives real-time feedback in the form of a gentle vibration signaling the high-risk posture, which helps them adjust their behavior. Second, the data are sent upstream to a cloud-based web dashboard that delivers actionable insights to management on how to improve workplace ergonomics.
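The two-step behavior described above (an immediate vibration, then data sent to a dashboard) can be illustrated with a minimal sketch. The article does not describe how the Reflex device detects postures, so the trunk-angle inputs and the thresholds below are purely hypothetical, overreaching is not modeled, and `vibrate` and `upload_queue` are placeholders for the device’s motor and its cloud link.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical thresholds; not taken from Kinetic's device.
BEND_LIMIT_DEG = 45.0   # forward trunk flexion
TWIST_LIMIT_DEG = 30.0  # trunk rotation, either direction

@dataclass
class Sample:
    t: float          # seconds since start of shift
    bend_deg: float
    twist_deg: float

def classify(s: Sample) -> Optional[str]:
    """Return the high-risk posture type for one sample, if any."""
    if s.bend_deg > BEND_LIMIT_DEG:
        return "bend"
    if abs(s.twist_deg) > TWIST_LIMIT_DEG:
        return "twist"
    return None

def process(samples: List[Sample], vibrate: Callable[[], None],
            upload_queue: List[dict]) -> None:
    """Step 1: alert the wearer at once. Step 2: queue an event for the dashboard."""
    in_risk = False
    for s in samples:
        posture = classify(s)
        if posture and not in_risk:
            vibrate()  # one buzz at the start of each risky episode
            upload_queue.append({"t": s.t, "posture": posture})
        in_risk = posture is not None

buzzes: List[int] = []
events: List[dict] = []
samples = [Sample(0.0, 10, 0), Sample(0.5, 60, 0), Sample(1.0, 62, 0),
           Sample(1.5, 5, 0), Sample(2.0, 5, 40)]
process(samples, lambda: buzzes.append(1), events)
print(len(buzzes), events)
```

Alerting only at the start of each episode, rather than on every sample, is a design choice meant to keep the feedback gentle instead of continuous.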

Not only does this improve worker health by averting injuries, it also saves PepsiCo money by reducing workers’ compensation costs related to repetitive strain and other workplace-related impairments. According to Kinetic, claim costs can be reduced by up to 54% using the Reflex device.

“At a company like PepsiCo, there’s a lot of lifting and a lot of manual handling of materials—twisting, turning, and high-risk postures. We’ve always tried to do ergonomic work to improve conditions as much as we could, but there was always something missing—a behavioral element,” said Cormac Gilligan, global vice president of environment, health, and safety at PepsiCo. “We never really tapped into that to create a sense of ownership in the mind of the individual employee, and help them to behave and move in a different way. The impact [Kinetic’s wearables] have had in that regard has been massive.”

Moreover, Kinetic’s Reflex wearable has brought PepsiCo other benefits beyond labor optimization and cost-cutting. The devices have also facilitated greater interaction between OT and IT personnel, which Gilligan said has made a meaningful difference in PepsiCo’s company culture.

In many cases, employees have been more willing to change their behavior when they receive and act on data insights themselves, rather than merely taking instructions from supervisors. Beyond that, because OT personnel now produce the data, they have gained a larger voice in discussions on how to improve workplace ergonomics.

“It started off for us as a way of simply trying to eliminate ergonomic risks that drive our worker compensation costs, but it’s become more of an employee engagement exercise. They like the device, and they want to wear the device. This allows us to engage with the employees in a way we haven’t before,” Gilligan said. “The employees are having conversations with us that are helping us to understand why, at a particular time or in a particular place, they have to adopt a high-risk posture, which helps us to redesign the work area or process. We were telling them what to do, and now they’re telling us what to do—that’s extremely powerful.”