Emerging Technologies 1

1.1 OBJECTIVE

Understand the concept of emerging technologies.

Discuss various aspects of emerging technologies.

Understand how to deal with different types of data.

Understand the application of data and analytics principles.

Understand various data analytics and visualization techniques to make better business decisions for Return On Investment (ROI).

Understand various visualization techniques such as Bubble charts, Geo-maps, Gauge charts, Tree maps, Heat maps, and Motion charts.

1.2 INTRODUCTION

Banking and Financial services in India have witnessed tremendous transformation in the era of “Digital India,” and the trend is continuing. “Digital India” is an ambitious initiative launched by the Government of India with the vision to transform India into a digitally empowered society and knowledge economy. The “Digital India” program was announced by Prime Minister Narendra Modi on July 1, 2015, with the aim of leveraging digital technologies to drive inclusive growth, empower citizens, improve governance, and bridge the digital divide across the country. Various factors, such as new governmental policies and customer expectations, have contributed to the shift. However, technological innovation is the main factor that has had the maximum impact on this sector.

The development and advancement of several technological innovations have helped the Banks to grow and meet increasing customer expectations. Products and Services offered in the banking sector have undergone many changes with the large-scale adoption of new technologies. The partnership between new technologies in the financial sector and banking services has revolutionized traditional money-handling methods. This collaboration is expected to grow, paving the way for further shifts in the financial services sector in the days to come.

Fintech business solutions and Internet/Mobile banking services offered by Banks are by far the most well-known examples of growing technological trends in the banking and financial services space. They herald the beginning of a journey towards a cashless society, total digital transformation, and the continued rise of Fintech in the country. The only constant thing in this era of change is change itself.

Emerging technologies are those whose development, practical applications, or both are still largely unrealised, to the point that they are figuratively emerging into prominence from obscurity. Although they include older technologies with untapped promise, such as deep learning, they are primarily new. Emerging technologies are often portrayed as able to alter the status quo.

Emerging technologies are characterized by radical novelty, rapid growth, coherence, significant effect, as well as uncertainty and ambiguity. Not every new technology can be considered emerging; a technology qualifies only if it is seen as emerging in its area of knowledge, geographical setting, use, or implementation. Such technologies will have a significant impact on both the financial and social settings as well as user experience.

1.3 EMERGING TECHNOLOGY TRENDS IN BANKS

Globally, technology is changing at a great pace, driving many organizations, especially Banks, to rush to embrace state-of-the-art technologies. For the past few years, Banks in India have been aggressively investing in technologies to promote digital banking, offering innovative, robust, and secure solutions optimized to meet the expectations of tech-savvy customers and deliver enhanced customer experience. Digitalization led to branchless banking through Internet banking channels, Digital wallets, Mobile banking, Chatbots, etc. While some technologies enhance the customer experience, others intelligently analyze customer behaviour and requirements for the banks, enabling the focused development of both business and products. The term emerging is relative, as technology considered emerging in one field may be well established in other fields.

While emerging technologies can be disruptive during the emergence phase, they are often uncertain and ambiguous. In summary, Emerging technology or processes are innovative technologies that can be broadly characterized by major innovation, rapid growth, huge impact, coherence, uncertainty, and ambiguity.

1.4 SOME KEY EMERGING TECHNOLOGIES AND THEIR USES IN BANKING

The requirements and expectations of customers’ Banking experience vary depending on the touchpoints they use to transact. Banks have an Omnichannel presence and should ensure that customers have a seamless experience across various channels/ touchpoints.

Such an exercise begins with Data analytics. Data analytics involves analyzing raw data to help in decision-making. Since raw data is voluminous, processing such vast data is done through automated processes using algorithms. Use cases show that Data Analytics helps organisations to significantly enhance their efficiency levels and helps in achieving the ultimate goal of customer delight.

Some of the emerging technologies/products will be described briefly in this chapter, followed by a detailed discussion of the important ones in the ensuing chapters of this module.

Some emerging technologies in India are:

1. Artificial intelligence/machine learning/deep learning

2. Blockchain

3. Big Data

4. Robotic Process Automation

5. 5G Networks

6. Virtual reality (VR)/Augmented reality (AR)

7. IoT (Internet of Things)

8. Voice Assistants, Chatbots

9. ChatGPT

10. Wearables

11. Quantum Computing

1.4.1 Artificial Intelligence (AI)

Artificial intelligence is an important branch of computer science that emphasizes the creation of intelligent machines and software that work and react like humans. Some of the focused areas in artificial intelligence are speech recognition, cognitive analytics, deep learning, planning, and problem-solving.

AI has the potential to transform customer experiences and bring entirely new business models in banking. Tapping this potential requires good collaboration between humans and machines to offer a humanized experience customized to individual customers. Artificial Intelligence can learn from customer behaviour and offer personalized services for all customers focused on their specific requirements. The key components of AI are machine learning, computer vision, Natural Language Processing (NLP), and Natural Language Generation (NLG).

AI is being used in Banks for predictive analysis, voice recognition, Risk management, fraud prevention, Wealth management, etc. AI can also enhance data security, decision-making speed and accuracy, and employee output. Banks can also use machine learning to re-engineer back-office processes. AI-driven banking mobile apps are used to meet users’ expectations with personal, contextual, and predictive services.

1.4.2 Blockchain

A Blockchain is based on Distributed Ledger Technology (DLT) that stores transactions in groups known as “blocks” and then links and sequences the blocks using cryptography. DLTs provide a path to access irrefutable information in real time from any point in the network. Blockchain is distributed across a peer-to-peer network, with block redundancies and consensus mechanisms that make it extremely difficult for anyone to manipulate the transactions. With blockchain, every transaction is trackable, and relationships are established with a network of peers rather than one-to-one.

Blockchain technology involves cryptographically signed, immutable/irrevocable transactional records shared by all participants in a network using distributed ledger technology with shared control.
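The linking of blocks through cryptography can be illustrated with a minimal sketch. It assumes a simplified block layout (index, transactions, previous hash) and uses SHA-256 from Python's standard library; the function names and sample transactions are hypothetical, and real blockchains add consensus, digital signatures, and peer-to-peer replication that are not shown here.

```python
import hashlib
import json


def block_hash(index, transactions, prev_hash):
    """Return the SHA-256 digest of a block's contents."""
    payload = json.dumps(
        {"index": index, "transactions": transactions, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def make_block(index, transactions, prev_hash):
    """Build a block that records the hash of its predecessor."""
    return {
        "index": index,
        "transactions": transactions,
        "prev_hash": prev_hash,
        "hash": block_hash(index, transactions, prev_hash),
    }


def is_chain_valid(chain):
    """Check every block's own hash and its link to the previous block."""
    for i, block in enumerate(chain):
        expected = block_hash(block["index"], block["transactions"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        if i > 0 and block["prev_hash"] != chain[i - 1]["hash"]:
            return False
    return True


# Hypothetical transactions, for illustration only.
genesis = make_block(0, ["A pays B 100"], "0" * 64)
second = make_block(1, ["B pays C 40"], genesis["hash"])
chain = [genesis, second]
```

Because each block stores the hash of the one before it, altering any earlier transaction changes that block's hash and breaks the check for the rest of the chain, which is what makes tampering detectable.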

Banks are exploring and implementing blockchain to address the increased need to secure and manage transactions across the Internet. Blockchain technology can be used in banking activities like secure document management, reporting, payments, treasury & securities, and trade finance. The banking industry can benefit from Blockchain technology as it helps in fraud prevention, increases the Bank’s IT infrastructure’s resilience, and increases the integrity and transparency of processes.

1.4.3 Big Data

Big data is a collection of larger, more complex data sets, especially from new data sources. These data sets are so voluminous that traditional data processing software just can’t manage them. But these massive volumes of data can be used to address business problems we wouldn’t have been able to tackle before. Big data can be characterized by 5Vs viz. Volume, Velocity, Variety, Veracity, and Value.

Banks have been tapping the potential of big data for the last few years. Big-data analytics empowers banks in various areas of banking, starting from optimizing cash management to unravelling customer behaviour and improving the bottom line. Using various machine learning algorithms, such as clustering techniques, banks can make critical business decisions.
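As an illustration of a clustering technique, the sketch below runs a plain k-means loop on a handful of made-up customers. The data, the two features, and the starting centroids are assumptions chosen for readability; a production system would normally use a tested library and scale the features first.

```python
def kmeans(points, centroids, iterations=10):
    """Plain k-means on 2-D points; returns (centroids, labels)."""
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        for i, (x, y) in enumerate(points):
            distances = [(x - cx) ** 2 + (y - cy) ** 2 for cx, cy in centroids]
            labels[i] = distances.index(min(distances))
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for k, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                mean_x = sum(p[0] for p in members) / len(members)
                mean_y = sum(p[1] for p in members) / len(members)
                new_centroids.append((mean_x, mean_y))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        centroids = new_centroids
    return centroids, labels


# Hypothetical customers: (monthly transactions, average balance in thousands).
customers = [(5, 20), (6, 25), (4, 22), (40, 300), (42, 310), (38, 290)]
centroids, labels = kmeans(customers, centroids=[(0, 0), (50, 350)])
```

Here the first three customers end up in one segment and the last three in another, which a bank could then treat as low-activity and high-activity groups for targeted offers.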

Big Data allows banks to comprehensively understand customers, products/services, markets, industry regulations, competitors, etc. One of the significant areas of application of big data in the banking space is improving customer insight and engagement to the ultimate level.

Digitization of financial products and services enables customers to easily interact with banks using digital platforms conveniently. Big Data technologies can analyze the digital data received from various digital channels to enhance the quality of their products/services as they can gain insights into the customers’ problems, preferences, and needs. Such customer insights are critical for improving the customer experience and staying ahead of the competition in the market.

Enhanced employee engagement

Big Data can help enhance the employee experience through the use of numerous Big Data tools and algorithms. Banks can track, monitor, and assess their employees’ performance to identify the best performers and the weak or unhappy performers. Banks can reward the top performers and take initiatives to improve the skill sets/motivation of non-performing employees, paving the way for increased output and good employee culture.

1.4.4 Robotic Process Automation (RPA)

Robotic process automation is automating routine business practices with software robots. Tasks performed by software robots include transaction processing, IT management, and automated online assistants. Robotic process automation leverages artificial intelligence to build software robots to automate tasks that once required human intervention, such as customer service and IT management.

Robotic Process Automation (RPA) is often applied to repetitive and mundane tasks so that employees can focus on more complex banking operations that require human interaction and decision-making. For example, we can train a robot by building rules to read mails and send routine replies or generate purchase orders.
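The idea of training a software robot with rules can be sketched as a small rule table. The patterns, action names, and reply texts below are hypothetical and only show the shape of such a rule set; commercial RPA tools provide their own rule designers and mailbox connectors.

```python
import re

# Hypothetical rules: (pattern to look for, action name, reply template).
RULES = [
    (re.compile(r"\bbalance\b", re.I), "send_reply",
     "Your balance is available in the mobile app under Accounts."),
    (re.compile(r"\bpurchase order\b", re.I), "generate_purchase_order",
     "A purchase order has been raised and sent for approval."),
]


def process_mail(subject, body):
    """Return (action, reply) for the first matching rule, else escalate to a person."""
    text = f"{subject}\n{body}"
    for pattern, action, reply in RULES:
        if pattern.search(text):
            return action, reply
    return "escalate_to_human", None
```

Mails that match no rule are escalated rather than answered, which reflects the division of labour described above: the robot handles the routine cases and employees handle the rest.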

Some major advantages of RPA are 24 x 7 working without fatigue, time and cost savings, zero typos or copy/paste mistakes, and compliance. Banks can build a Virtual Workforce by adopting RPA integrated with artificial intelligence to automate processes by deploying custom applications. AI is about leveraging intelligent algorithms to resolve inefficiencies and drive enhanced customer experience at every point of interaction. RPA is being used in banking activities like KYC, customer onboarding, Loan origination, etc.

1.4.5 5G Technologies

5G is the fifth-generation cellular network technology. 5G networks are digital cellular networks in which providers’ service areas are separated into small geographical areas known as cells. They can use millimetre waves together with multiple-input multiple-output (MIMO) technology. Ideally, the new 5G wireless devices also have 4G LTE compatibility. In locations where 5G access is not available, the new networks use 4G to establish the connection with the cell. 5G networks drastically enhance our ability to move, manipulate, and analyze data on the fly.

5G technology is characterized by low latency, high data capacity, and reliability. 5G will eventually help Banks create new ways to deliver mobile, online, and in-branch services. Businesses can personalize their networks for optimal performance by combining 5G with new technologies such as edge computing and software-defined networking. This becomes relevant in the Work-From-Home (WFH) scenario. Some areas where 5G can be useful are Gigabit broadband to the home, Next-generation mobile user experience, IoT, facial recognition for identity authentication, Video Chatbots, Remote troubleshooting for IT personnel, Real-time fraud detection/analytics, and Customer profiling.

1.4.6 Virtual Reality (VR)/Augmented Reality (AR)

Augmented reality (AR) is an enhanced version of the real physical world achieved with digital visual elements, sound, or other sensory stimuli delivered via technology. The real-world objects are enhanced by computer-generated perceptual information using multiple sensory modalities like visual, auditory, haptic, somatosensory, and olfactory. This experience is so seamlessly linked with the physical world that it is regarded as an integral part of it. It is a growing trend among companies involved in mobile computing and business applications in particular.

Virtual reality replaces the user’s real-world environment with a simulated one, whereas augmented reality alters one’s ongoing perception of a real-world environment. Mixed reality and computer-mediated reality are two phrases that are nearly synonymous with augmented reality.

Banks can improve their operational efficiency and employee productivity using Virtual reality, Augmented Reality, and Mixed Reality. To create new, winning business models, Banks need to create a strong application programming interfaces (API) strategy to deliver relevant data in real-time to the various service layers that connect ecosystem players.

1.4.7 Internet of Things (IoT)

The Internet of things (IoT) is the world of physical devices connected through the Internet. IoT devices are embedded with electronics, Internet connectivity, and other forms of hardware like sensors. These devices communicate and interact with other devices over the Internet and are remotely monitored and controlled.

The Internet of things (IoT) links billions of devices to the Internet. It uses billions of data points, all of which must be protected. IoT security and privacy are identified as important problems due to their larger attack surface.

The following are some examples of how IoT can be used in a bank’s existing processes and products:

Visa has enabled the use of real-time geographical location data from a customer’s mobile device as an extra factor in its predictive fraud analysis. A simple example is matching a cardholder’s mobile location with the transaction location, enabling the issuer bank to make better decisions with respect to transaction approval.

Groceries by MasterCard: MasterCard has partnered with Samsung on an IoT-enabled refrigerator application that enables the easy purchase of groceries with connected refrigerators. Users can order groceries based on current stock through e-commerce platforms, and the payment is made using NFC technology.

Alfa-Bank Sense: The Bank uses customer data for predictive services based on customer preferences, location, financial behaviour, etc. Customer data is gathered to offer a personalized experience, and the Bank also offers a user-friendly chat-like interface.

bPay by Barclaycard: Barclays’ contactless wearable payment solutions enable customers to make payments using their bands or keys. The customer can opt for bPay-enabled watches, wristbands, stickers, key fobs, etc., which can be used to pay small amounts using contactless payments. The potential of IoT, in combination with other technologies, is immense.
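The location-matching idea in the Visa example above can be sketched with the haversine formula for the distance between two coordinates. The 50 km threshold and the function names are assumptions made for illustration and do not describe Visa's actual rules or parameters.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * radius_km * math.asin(math.sqrt(a))


def location_mismatch(phone, merchant, threshold_km=50.0):
    """Flag a transaction when the phone is far from the merchant.

    The 50 km threshold is an arbitrary illustration, not a Visa parameter.
    """
    return haversine_km(*phone, *merchant) > threshold_km


# Approximate (latitude, longitude) pairs for two Indian cities.
mumbai = (19.0760, 72.8777)
pune = (18.5204, 73.8567)
```

A card swiped in Pune while the cardholder's phone reports Mumbai would be flagged by `location_mismatch(mumbai, pune)`. In practice such a flag would be one input among many to a fraud model rather than a decision on its own.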

1.4.8 Voice Assistants, Chatbots

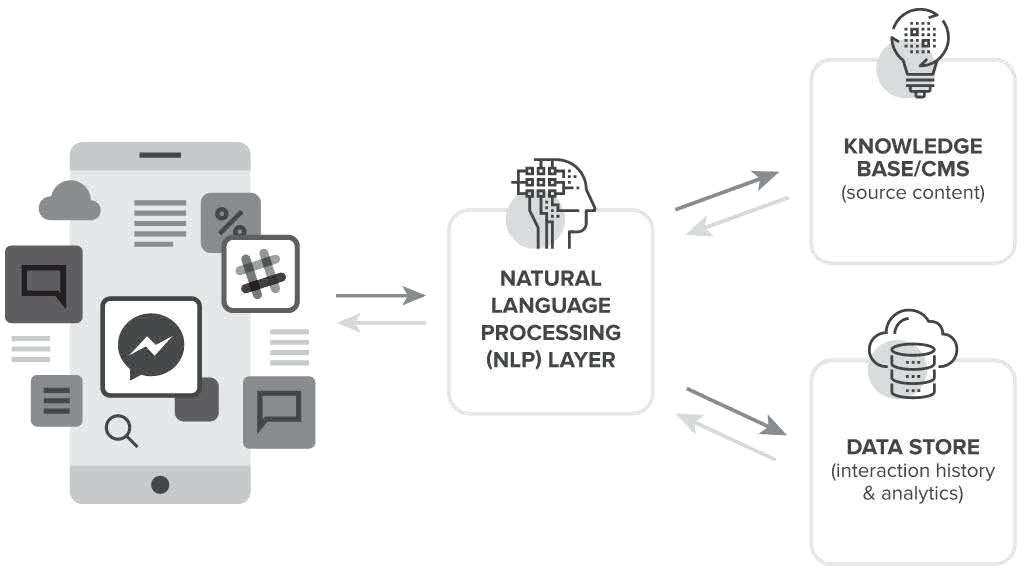

Chatbot technologies are being explored by banks and financial institutions to provide new interfaces to their existing service channels and to enable the appearance of 24/7 customer care capabilities. Financial institutions are experimenting with using chatbots to conduct day-to-day banking operations to entice millennials and the unbanked. Messaging apps have gained popularity in the last few years as a data-centric alternative to expensive SMS texting choices, with enhanced multimedia and group chat capabilities.

A chatbot (derived from “chat robot”) is a computer program that simulates human conversation, either via voice or text communication. Organizations use chatbots to engage with customers alongside the traditional customer service channels of phone, email, and social media. Visitors can access chatbots in one of two ways: through web-based or standalone applications. Today, chatbots are most frequently utilized in customer support, replacing tasks previously done by humans, such as help desk operators and customer relationship executives.

Chatbots or conversational agents are software applications that simulate a conversation or engagement with a real person by imitating written or spoken human speech. A Chatbot can be deployed for customer service, as a standalone application, or on a website. Conversational agents are becoming more popular in the area of Banking. Some real-life examples are virtual assistants like Google Now, Apple’s Siri, Amazon’s Alexa and Microsoft’s Cortana.

Organizations are gainfully using Chatbot capabilities to:

(1) Automate and scale routine support functions

(2) Provide education and information in a friendly, digestible style

(3) Guide shoppers to boost e-commerce sales

(4) Generate and qualify leads

(5) Engage users in an exciting new way

Source: https://www.wordstream.com/

While Chatbots can process the voice/text of the user and decipher what the user says, they still struggle to parse human speech with its inherent complexities, such as metaphors and similes.

Despite these significant limitations, Chatbots are becoming increasingly sophisticated, responsive, and natural.
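A very simple text chatbot can be sketched as keyword-based intent detection. The intents and keywords below are hypothetical, and modern chatbots typically rely on trained language models rather than fixed keyword lists; the sketch is only meant to show why figurative language is hard for a literal matcher.

```python
import re

# Hypothetical intents and keywords, for illustration only.
INTENTS = {
    "check_balance": {"balance", "funds"},
    "block_card": {"block", "lost", "stolen", "card"},
    "branch_hours": {"branch", "hours", "open", "timings"},
}


def detect_intent(message):
    """Pick the intent whose keywords overlap most with the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    best_intent, best_score = None, 0
    for intent, keywords in INTENTS.items():
        score = len(words & keywords)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent or "fallback_to_agent"
```

A literal request such as "I lost my card, please block it" maps cleanly to `block_card`, whereas a metaphor such as "My wallet is running on fumes" matches no keyword and falls back to a human agent.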

1.4.9 ChatGPT

ChatGPT is a language model developed by OpenAI, based on the GPT-3.5 architecture. It is designed to generate human-like text based on the input it receives. Here are some key features and characteristics of ChatGPT:

Generative Model: ChatGPT is a generative language model, meaning it can generate coherent and contextually relevant text in response to user prompts.

Large-Scale Training: It has been trained on a diverse range of internet text, allowing it to have a broad understanding of various topics and domains.

Context-Aware Responses: ChatGPT considers the context of the conversation, making its responses contextually appropriate. It can understand and generate responses based on the preceding conversation history.

Conversational AI: The primary purpose of ChatGPT is to facilitate natural language conversations. Users can engage in a dialogue by providing prompts or asking questions, and ChatGPT responds accordingly.

Limitations: While ChatGPT is powerful, it has limitations. It may sometimes generate incorrect or nonsensical answers. It can be sensitive to the phrasing of input, and it might exhibit biased behaviour based on the training data.

Prompt Engineering: Users can influence the output by crafting their prompts carefully. The choice of words and the structure of the prompt can impact the nature of the response.

No Memory Across Conversations: The underlying model does not, by itself, remember past conversations. Within a single conversation, the earlier messages are supplied to it as context, up to a length limit, but information from one conversation is not automatically carried into a new one.

Application Areas: ChatGPT can be used for a variety of applications, including content creation, brainstorming ideas, writing assistance, programming help, language translation, and more.

API Access: OpenAI has provided API access to developers, allowing them to integrate ChatGPT into their applications and services.

Usage Guidelines: OpenAI provides guidelines on the responsible use of ChatGPT to ensure that it is used ethically and does not produce content that violates policies or promotes harmful behaviours.

Please note that while ChatGPT is advanced and versatile, it may not always provide accurate or reliable information, and users should exercise critical judgment when interacting with it. The model is continually evolving, and updates may be made to enhance its capabilities and address limitations.

Similar products like ChatGPT

There are several similar products and language models developed by various organizations. While none may be an exact equivalent to ChatGPT, they share the general concept of natural language processing and generation. Here are some notable alternatives:

GPT Models by OpenAI: OpenAI has developed various versions of the Generative Pre-trained Transformer (GPT) models. These include GPT-2 and GPT-3, with the GPT-3.5 series, derived from GPT-3, being the basis for ChatGPT.

BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, BERT is a pre-trained natural language processing model that excels in understanding the context of words in a sentence. It is commonly used for tasks like sentiment analysis and question answering.

XLNet: XLNet is another transformer-based language model, developed by researchers at Carnegie Mellon University and Google. It builds upon ideas from BERT and Transformer-XL and is designed to capture bidirectional context and long-term dependencies.

T5 (Text-To-Text Transfer Transformer): Developed by Google, T5 is a versatile language model that frames all NLP tasks as converting input text to output text. It achieved state-of-the-art results on multiple benchmarks.

BART (Bidirectional and Auto-Regressive Transformers): Developed by Facebook AI, BART is a sequence-to-sequence model that has shown effectiveness in various natural language generation tasks.

ERNIE (Enhanced Representation through kNowledge Integration): Developed by Baidu, ERNIE is designed to understand entities and their relationships in a given context. It incorporates knowledge graph information during pre-training.

DALL-E: Also developed by OpenAI, DALL-E is a variant of GPT that is specifically focused on generating images from textual descriptions. It demonstrates the application of language models beyond text.

Turing-NLG: Developed by Microsoft, Turing-NLG is a large-scale language model designed for natural language understanding and generation tasks.

RoBERTa (Robustly optimized BERT approach): RoBERTa is a variant of BERT developed by Facebook AI. It modifies key hyperparameters to improve performance on certain tasks.

DistilBERT: DistilBERT is a smaller, distilled version of BERT developed by Hugging Face. It retains much of BERT’s performance while being computationally more efficient.

Bard: Bard is a conversational generative artificial intelligence chatbot created by Google. It was initially built on the LaMDA family of large language models (LLMs) and was later moved to PaLM and then Gemini.

The effectiveness of these models can vary depending on the specific task or application. Each model may have its strengths and weaknesses, and the choice often depends on the specific requirements of a given project.

1.4.10 Wearable technology

Wearable technology, popularly referred to as “wearables,” covers electronic devices that can be worn as accessories, embedded into clothing, implanted into the user’s body, or even tattooed on the user’s skin. Due to their increasing popularity, wearables have risen to the forefront of the Internet of Things (IoT).

The wearables business is producing a plethora of innovative and inventive products. Nowadays, many of the gadgets we use daily are “smart” devices, such as smartwatches and smart glasses. These devices assist in collecting and analysing real-time personal data, which provides us with information about everything from our health to our workouts.

Wearable technology involves the usage of wearable gadgets that a person can comfortably wear on the body to record and track information for personal or business usage related to health and fitness. Other wearable tech gadgets include small motion sensors to take photos and sync with your mobile devices. They’ve grown in popularity as instruments for keeping us informed and connected to ourselves, to the point where one in every three people in the United States now owns a wearable device. Wearable technology could become the next game-changer in retail banking, allowing customers to access key banking services with a single click on a user-friendly interface on their wearable device.

Wearable types

Some of the most common types of wearable technology are:

Smartwatches: Connected wristwatches, such as the Apple Watch or Samsung Galaxy Watch, enable the wearer to answer calls and track fitness and sleep, among other functions.

Smart rings: Wearable technology that integrates the features of a smartwatch into a ring. While rings have not yet reached their full potential, there are some decent options available.

Smart clothing: Clothing interwoven with technology can track your biometric data or shuffle your audio.

Advanced medical technology: wearable electrocardiograms (ECGs) transmit a patient’s heartbeat to a cardiologist, as well as other potentially life-saving on-body devices.

Head-mounted displays (HMDs): Virtual reality headsets and other displays enhance the immersive experience of gaming or online browsing.

Fig: Wearables source: https://builtin.com

Wearable services can help banks and financial institutions deliver enhanced value and more engaging technology to customers. Banks can provide banking services through Wearables like smartwatches, Google Glass, Wrist Bands, etc.

1.4.11 Quantum Computing

Quantum computing is a field of computing that leverages the principles of quantum mechanics to perform certain types of computations much more efficiently than classical computers. Unlike classical bits, which can be in a state of 0 or 1, quantum bits or qubits can exist in a superposition of both states simultaneously. This property, along with entanglement and quantum interference, enables quantum computers to process information in ways that classical computers cannot.
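Superposition and interference can be illustrated by simulating a single qubit on a classical computer. The sketch below represents the qubit as a pair of amplitudes and applies the standard Hadamard gate; it is a toy simulation with assumed function names, not a program for real quantum hardware.

```python
import math


def apply_hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp0, amp1)."""
    amp0, amp1 = state
    s = 1 / math.sqrt(2)
    return (s * (amp0 + amp1), s * (amp0 - amp1))


def measurement_probabilities(state):
    """Born rule: probability of each outcome is the squared amplitude magnitude."""
    return tuple(abs(amp) ** 2 for amp in state)


zero = (1.0, 0.0)                        # the state |0>, analogous to a classical 0
superposed = apply_hadamard(zero)        # equal superposition of |0> and |1>
back_again = apply_hadamard(superposed)  # interference returns the qubit to |0>
```

After one Hadamard gate the qubit would be measured as 0 or 1 with equal probability. Applying the gate a second time makes the two paths to outcome 1 cancel, so the qubit returns to 0 with certainty, which is a small example of quantum interference.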

Quantum computing has the potential to bring transformative changes to the field of finance and banking. While practical, large-scale quantum computers are still in the early stages of development, several potential applications are being explored. Here are some ways quantum computing could impact the banking sector:

1. Optimization Problems: Quantum algorithms are being explored for complex optimization problems, such as portfolio optimization and risk management. Banks could leverage them to optimize investment portfolios, allocate assets more efficiently, and manage risk more effectively.

2. Quantum-Safe Cryptography: As quantum computers advance, they pose a threat to widely used classical cryptographic algorithms. Banks are exploring post-quantum cryptographic techniques that can withstand attacks from quantum computers. Implementing quantum-resistant cryptographic solutions is crucial to ensuring the security of financial transactions.

3. Credit Scoring and Risk Assessment: Quantum computing could enhance credit scoring models by processing large datasets and complex relationships more efficiently. This could lead to more accurate risk assessments, allowing banks to make better-informed lending decisions.

4. Fraud Detection: Quantum algorithms may improve fraud detection capabilities by quickly analysing patterns and anomalies within vast datasets. This could enhance the ability to detect fraudulent activities in real time, reducing financial losses for banks.

5. Simulation of Financial Markets: Quantum computers may be able to simulate complex financial systems and market behaviour more efficiently than classical computers. This could aid in the development of advanced financial models, pricing derivatives, and predicting market trends.

6. Supply Chain Finance: Quantum computing could be applied to optimize supply chain finance by solving intricate logistical problems. This includes efficient routing, inventory management, and supply chain risk assessment, which can benefit both banks and their corporate clients.

7. Quantum Machine Learning: Quantum machine learning algorithms could enhance predictive analytics and pattern recognition in banking. This can be applied to customer behaviour analysis, credit scoring, and identifying potential investment opportunities.

8. Blockchain and Cryptocurrencies: Quantum computers could potentially impact the security of existing blockchain and cryptocurrency systems. Banks involved in blockchain-based transactions may need to adapt their cryptographic protocols to be quantum-resistant.

9. Derivative Pricing: Quantum computers could improve the efficiency of pricing complex financial derivatives, reducing the computational time required for these calculations.

10. Enhanced Monte Carlo Simulations: Quantum computing could accelerate Monte Carlo simulations, which are widely used in risk management, pricing financial instruments, and assessing market volatility.

11. Customer Authentication: Quantum-resistant cryptographic techniques may be crucial for securing customer authentication processes, ensuring that sensitive customer information remains secure even in the face of quantum attacks.

It is important to note that practical, large-scale quantum computers are still in the experimental stage, and widespread adoption in the banking sector may take some time. Additionally, addressing the challenges of quantum error correction, system stability, and scalability is essential for realizing the full potential of quantum computing in the financial industry. As the field continues to evolve, ongoing research and collaboration between the quantum and financial communities will be critical.
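To make the Monte Carlo item in the list above concrete, the following sketch prices a European call option with a classical Monte Carlo simulation under the usual lognormal price model. The contract terms are hypothetical, and the code is entirely classical. Its statistical error shrinks roughly as one over the square root of the number of paths, which is the cost that proposed quantum methods such as amplitude estimation aim to reduce.

```python
import math
import random


def monte_carlo_call_price(spot, strike, rate, volatility, maturity, paths, seed=0):
    """Estimate a European call price by averaging discounted simulated payoffs."""
    rng = random.Random(seed)
    drift = (rate - 0.5 * volatility ** 2) * maturity
    diffusion = volatility * math.sqrt(maturity)
    total_payoff = 0.0
    for _ in range(paths):
        z = rng.gauss(0.0, 1.0)  # one standard normal shock per path
        terminal_price = spot * math.exp(drift + diffusion * z)
        total_payoff += max(terminal_price - strike, 0.0)
    return math.exp(-rate * maturity) * total_payoff / paths


# Hypothetical contract terms, for illustration only.
price = monte_carlo_call_price(100.0, 100.0, 0.05, 0.2, 1.0, paths=100_000)
```

For these inputs the estimate should land close to the Black-Scholes value of about 10.45. Halving the error of such an estimate classically requires about four times as many paths.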

Use of Quantum Computing in Banking

Quantum computing has the potential to revolutionize many industries, including banking and finance. Here’s how quantum computing could be utilized in the banking sector:

1.Optimization: Quantum computing can significantly enhance optimization problems that are prevalent in banking, such as portfolio optimization, risk management, and fraud detection. Quantum algorithms can analyze vast amounts of data and provide more accurate and efficient solutions to complex optimization problems.

2.Cryptography: Quantum computing poses a potential threat to current cryptographic methods, particularly those based on factoring large prime numbers, such as RSA encryption. However, it also offers the opportunity to develop quantumresistant cryptographic techniques, such as quantum key distribution (QKD) and post-quantum cryptography, which can provide enhanced security for banking transactions and data protection.

3.Quantitative Analysis: Quantum computers can perform complex calculations and simulations much faster than classical computers. In banking, this capability can be utilized for quantitative analysis, including pricing derivatives, assessing market risk, and conducting scenario analysis, leading to more accurate and timely decision-making.

4.Machine Learning and AI: Quantum computing can accelerate machine learning algorithms, enabling banks to extract valuable insights from large datasets more efficiently. Quantum machine learning algorithms can improve credit scoring, customer segmentation, and fraud detection, leading to more personalized and secure banking services.

5.Quantum Blockchain: Quantum computing could also impact blockchain technology, which underlies cryptocurrencies and distributed ledger systems. Quantum computers may be able to break some of the cryptographic mechanisms that currently secure blockchain networks. However, researchers are exploring

quantum-resistant blockchain solutions to mitigate this risk and ensure the security and integrity of decentralized financial systems.

6.Quantum Secure Communication: Quantum technology enables highly secure communication through quantum key distribution (QKD), which leverages the principles of quantum mechanics to share encryption keys in such a way that any eavesdropping can be detected. Banks can use QKD to establish tamper-evident communication channels for sensitive financial transactions and data exchange.

7.Financial Modeling and Forecasting: Quantum computing can enhance financial modeling and forecasting by enabling more accurate simulations of complex economic systems and market dynamics. Banks can leverage quantum algorithms to develop predictive models for asset pricing, trading strategies, and economic forecasting, leading to better risk management and investment decisions.
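The cryptography point above rests on the fact that the security of RSA depends on how hard it is to factor the public modulus. The following is a minimal sketch in Python, assuming textbook-sized primes chosen purely for illustration (real keys use moduli of 2048 bits or more). Classical trial division stands in here for a quantum factoring algorithm such as Shor's, and all function names are illustrative.

```python
from math import isqrt

def make_keys(p: int, q: int, e: int = 17):
    """Build a toy RSA key pair from two primes (illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)          # modular inverse of e (Python 3.8+)
    return (n, e), (n, d)

def encrypt(message: int, public_key) -> int:
    n, e = public_key
    return pow(message, e, n)

def decrypt(cipher: int, private_key) -> int:
    n, d = private_key
    return pow(cipher, d, n)

def factor(n: int):
    """Classical trial division; feasible only because n is tiny."""
    for candidate in range(2, isqrt(n) + 1):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n is prime")

def recover_private_key(public_key):
    """Anyone who can factor n can recompute d from public data alone."""
    n, e = public_key
    p, q = factor(n)
    return n, pow(e, -1, (p - 1) * (q - 1))

public, private = make_keys(61, 53)
cipher = encrypt(65, public)
stolen = recover_private_key(public)

# Both the legitimate key and the recovered key decrypt the message.
print(decrypt(cipher, private), decrypt(cipher, stolen))
```

With realistic key sizes the `factor` step is infeasible on classical hardware, which is exactly the assumption a sufficiently large quantum computer would undermine.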

Overall, while quantum computing is still in its early stages of development, its potential applications in banking and finance are promising. As the technology matures and becomes more accessible, banks and financial institutions are likely to explore and implement quantum computing solutions to gain a competitive edge and address complex challenges in the rapidly evolving financial landscape.

Summary:

These are some of the emerging technological trends in India’s banking and financial sector, and each one of them has the potential to change the way business is done at present. With historical changes in old paradigms, the future looks exciting and challenging at the same time. There are few impediments in the way of this massive transformation. Customers are open to banking innovations, and the government is keen on encouraging the digitisation of products and services. This dual push compels all players in the ecosystem, like regulators, PSU and Private Banks and other financial institutions & intermediaries, to use modern technology to stay competitive and relevant.

All PSBs now adhere to the EASE Reforms, which are overseen by the Indian Banks’ Association’s EASE Steering Committee. From EASE 1.0 to the present EASE 6.0, the emphasis has shifted radically towards digital customer experience, analytics-driven business optimisation, tech and data-enabled capability building, and improved HR operations.

1.5 ADOPTION OF EMERGING TECHNOLOGIES IN THE BANKING INDUSTRY

The adoption of emerging technologies has recently gained substantial momentum in India’s banking and financial sector. Banks are geared up for a transformational phase by implementing advanced technologies such as Blockchain, RPA, Artificial Intelligence (AI), Machine Learning (ML), and AR & VR. Over the years, the speed of adoption of these new technologies has picked up. They are now more reliable and capable, improving customer relationships and business benefits.

Banks are now placing more emphasis on utilizing advanced technologies to increase productivity and reach.

If we segregate emerging technologies into clusters, AI and Cloud sit in the core cluster that unlocks the other technologies. AI and the Cloud are critical because of their ability to access and analyze data generated, stored, and sent by other technologies. 5G, IoT, task-specific hardware (TSH), AR/VR, AI, and Cloud are creating new ways of generating and accessing data by bridging the gap between the physical and digital financial worlds.

Emerging technologies are helping the development of new financial products and services, which, in turn, impact individuals and businesses in important ways.

1.6 INTRODUCTION TO DATA ANALYTICS

Data has become extremely important in the Banking and financial services sector. In 2006, Clive Humby coined the phrase, “Data is the new oil.” Michael Palmer expanded on Humby’s quote by saying that, like oil, data is “valuable, but it cannot be used if it is unrefined”. Hence, merely having data is not enough. Many financial institutions face the challenge of utilizing data to make informed decisions. When they explore data and identify the underlying and hidden patterns and meanings from the analyzed data, financial institutions will be better placed to compete with their peers for better business and bottom line. Good visualization approaches, tools, and projects can accelerate the journey.

1.6.1 Information Value Chain

Banks deal with huge volumes of data, which is produced, analyzed, exchanged, and delivered to the end-users in various departments, facilitating informed decision-making. An organisation’s success depends on understanding the value of data and utilizing it to run the business effectively and efficiently.

Information Value Chain can be defined as the insight and value created by data after going through a series of steps, resulting in action back in the real world.

The Information Value Chain begins with collecting data, followed by tuning data, transforming data into information, and ultimately into actionable decisions. Any business needs to ensure steady profitability and proper asset & resource management in the long run for its survival. Information and data are the resources that can empower decision-making and help measure the company’s performance. Delivery of required data and its transformation into meaningful information forms the data and information value chain. Data management empowers the effective operation of the data and information value chain. Even small volumes of data can be difficult to understand and turn into action; dealing with voluminous data is more challenging still.

Data → Information → Decision → Action → Value

Diagrammatic representation of the Information Value Chain
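The chain of steps described above (collect, tune, transform into information, decide) can be sketched in a few lines of Python. The transaction records, the field names, and the 50,000 threshold are all hypothetical, chosen only to make the flow concrete.

```python
from statistics import mean

# Step 1: collect raw data (hypothetical branch transaction records).
raw = [
    {"branch": "A", "amount": "42000"},
    {"branch": "A", "amount": "51000"},
    {"branch": "B", "amount": None},      # unusable record
    {"branch": "B", "amount": "75000"},
    {"branch": "B", "amount": "58000"},
]

def tune(records):
    """Step 2: clean the data by dropping incomplete rows and fixing types."""
    return [
        {"branch": r["branch"], "amount": float(r["amount"])}
        for r in records
        if r["amount"] is not None
    ]

def to_information(records):
    """Step 3: summarise the data into average ticket size per branch."""
    by_branch = {}
    for r in records:
        by_branch.setdefault(r["branch"], []).append(r["amount"])
    return {branch: mean(amounts) for branch, amounts in by_branch.items()}

def decide(information, threshold=50_000):
    """Step 4: turn information into a decision for each branch."""
    return {
        branch: "review pricing" if avg > threshold else "no action"
        for branch, avg in information.items()
    }

information = to_information(tune(raw))
decisions = decide(information)
for branch, action in decisions.items():
    print(branch, round(information[branch]), action)
```

The value in the chain only materialises when someone acts on the output of `decide`; the earlier steps merely make that action better informed.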

With an over-emphasis on data collection, organizations may create Data Lake structures that appear impressive but are inert entities with no inherent value for the organization. Action taken can go wrong, money squandered, and opportunities lost if other relevant factors are not considered.

The Data Value Chain is described as a set of steps that must be taken to extract value from data. A value chain models the sequence of actions that an organization performs in delivering a valuable product or service to the market (Porter 1985). The value chain can be used as an analytical tool to understand how data technology creates value. As stated by the European Commission, the Data value chain is the “core of the new knowledge economy, delivering opportunities from digital advancements to the more traditional sectors”.

1.6.2 Data sources and data types

Sources of data

Data for a business can come from various sources, including client feedback, sales numbers, and demand for a product or service. The following are some of the options for businesses looking for information:

Social Media: Platforms such as LinkedIn, Twitter, and Facebook offer cost-effective ways to survey consumer satisfaction with products or services and to learn client preferences.

Reporting on Online Engagement: You may learn a lot about your website’s visitors using Google Analytics or Crazy Egg.

Transactional Information: Data gathered from sales reports, ledgers, and online payment transactions is included in this category. CRM systems allow companies to collect information on how customers spend money on their products.

Primary Data:

Observations or factual information that is formatted in a way that aids further processing or storage can be called data. Primary data is the true connection between the numerous data elements that make up information, which is considered while connecting them. In most cases, data lacks meaning, but it can be interpreted when the context is given. The context in which data is presented can yield meaning. Knowledge, on the other hand, is the next step in this progression. Researchers obtain primary data through interviews, surveys, tests, and other means. In research, primary data is considered the most valuable because it is acquired directly from the source.

Typically, primary data sources are chosen to match the specific needs of a research project. It is therefore critical to understand the subject and purpose of the research before selecting a data collection source.

For example, in the case of a market survey, the purpose of the survey and the sample population must be established before the survey can begin. A survey conducted offline is better suited to a population living in distant places without access to the Internet than one conducted online.

Interviews, surveys, questionnaires, observations, experiments, etc., are examples of primary data.

Secondary Data:

Secondary data is information gathered by someone other than the researcher, such as administrative data from programmes, geodata from specialist sources, and government census or demographic data. Secondary data adds context to any investigation, and in some circumstances, it’s the only source that covers the entire population needed to complete a study.

Compared to primary data, secondary data is more readily available. Examples of secondary data sources include books, personal sources, journals, newspapers, websites, and government documents. Using these sources requires relatively less study or manpower.

Primary data collection methods, on the other hand, are designed to meet the specific research goals of the project. First-hand information is preferred in many circumstances, even though the procedure can be lengthy.

Access to reliable data is critical to the success of any research project.

1.6.3 Types of data

Data is created from various sources, and how data is organised differs. While some data is structured, the majority of data is unstructured. Data is traced, collected, and scaled in different ways, and each is stored in different databases. Structured as well as unstructured data are used widely in data analysis, but they operate quite differently. Structured data is well organized and can be easily searched in relational databases. Unstructured data has no predefined format or organization, which makes it difficult to collect, process, and analyze. Let us understand these data formats and the differences between them.

1.6.4 Structured Data

Structured data refers to data in a fixed field within a file or record. Structured data is typically stored in relational databases. Structured data can consist of numbers and text, and sourcing can happen automatically or manually as long as it’s within an RDBMS structure. It depends on creating a data model, defining what types of data to include and how to store and process it. Structured data — typically categorized as quantitative data — is highly organized and easily decipherable by machine learning algorithms.

Examples of structured data include names, dates, addresses, credit card numbers, stock information, geolocation etc.

Sample structured data format: Borrower
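As an illustration of structured data, the sketch below creates a small Borrower table in an in-memory SQLite database using Python's standard `sqlite3` module. The column names and rows are hypothetical and are not taken from the original sample; the point is only that every record fits the same predefined fields and can be queried directly.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE borrower (
        borrower_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        loan_amount REAL NOT NULL,
        sanctioned  TEXT NOT NULL   -- ISO date string
    )
    """
)
conn.executemany(
    "INSERT INTO borrower VALUES (?, ?, ?, ?)",
    [
        (1, "Asha", 250000.0, "2023-04-10"),
        (2, "Ravi", 900000.0, "2023-06-02"),
        (3, "Meena", 120000.0, "2024-01-15"),
    ],
)

# Because the schema is fixed, a query can filter and sort on any field.
large_loans = conn.execute(
    "SELECT name, loan_amount FROM borrower "
    "WHERE loan_amount > ? ORDER BY loan_amount DESC",
    (200000,),
).fetchall()
print(large_loans)
```

The data model (column names and types) is declared before any row is stored, which is what distinguishes structured data from the formats discussed next.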

1.6.5 Unstructured Data

Unstructured data lacks a data model and is not structured in a predefined manner. It may, however, have a native internal structure. Unstructured data is generally defined as qualitative data that cannot be processed or analyzed using traditional tools and methods. Non-relational databases are used to process unstructured data because there is no predefined data model. Another method for managing unstructured data is to store it in its raw form in data lakes.

Typical examples of unstructured human-generated data are:

1. Text files: Word processing documents, presentations, and logs.

2. Email: Email has some internal structure due to its metadata and is sometimes semi-structured. Its message field, however, is unstructured, and traditional analytics tools cannot process it.

3. Social media: Data gathered from Facebook, Twitter, and LinkedIn.

4. Websites: YouTube, Instagram, and other photo-sharing sites.

5. Mobile data: Text messages and GPS coordinates.

6. Communications: Chat, instant messaging, phone recordings, and collaboration software.

7. Media: MP3, digital photos, audio, and video files.

8. Business applications: Microsoft Office documents and productivity software.
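The email item in the list above can be made concrete with Python's standard `email` module. In this sketch the message text is invented for illustration: the headers parse into named fields, while the body comes back as a single free-text string that would need further text analysis.

```python
from email import message_from_string

raw_message = """\
From: customer@example.com
To: support@example.com
Subject: Card blocked after travel

Hello, my debit card stopped working after I returned from abroad.
Please help me unblock it as soon as possible.
"""

msg = message_from_string(raw_message)

# Structured part: metadata with well-defined field names.
headers = {key: msg[key] for key in ("From", "To", "Subject")}

# Unstructured part: free text with no fields to query.
body = msg.get_payload()

print(headers)
print(body)
```

A query such as "all emails from this sender" can be answered from the headers alone, whereas "all emails complaining about blocked cards" requires analyzing the body text.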

Typical examples of machine-generated unstructured data include:

Satellite imagery: Weather data, landforms, and military movements.

Scientific data: Oil and gas exploration, space exploration, seismic imagery, and atmospheric data.

Digital surveillance: Surveillance photos and video.

Sensor data: Traffic, weather, and oceanographic sensors.

The most comprehensive Big Data analysis incorporates both structured and unstructured data. Unstructured data accounts for 80 percent or more of all enterprise data, and the percentage is increasing. This means that businesses that do not consider unstructured data are missing a lot of valuable business intelligence.

1.6.6 Semi-structured data

Semi-structured data is a combination of structured and unstructured data that shares both characteristics. It also follows a certain schema and consistency. A typical example of semi-structured data is photos stored in a smartphone, comprising unstructured images, tagged time, location, and other identifiable and structured data. Examples of Semi-structured data formats include JSON, CSV, and XML file types.

Semi-structured data is the “bridge” between structured and unstructured data. It lacks a predefined data model and is more complex than structured data while being easier to store than unstructured data. Semi-structured data uses “metadata” (for example, tags and semantic markers) to identify specific data characteristics and scale data into records and preset fields.

The three main data formats used to transfer data between a web server and a client (e.g., computers, smartphones, etc.) are CSV, XML, and JSON.
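To see how the three formats carry the same information, the sketch below encodes one hypothetical customer record in JSON, XML, and CSV and reads each back with Python's standard library. The field names and values are illustrative.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

json_text = '{"id": "C001", "name": "Asha", "city": "Pune"}'
xml_text = "<customer><id>C001</id><name>Asha</name><city>Pune</city></customer>"
csv_text = "id,name,city\nC001,Asha,Pune\n"

# JSON: keys travel with the values.
from_json = json.loads(json_text)

# XML: tags act as metadata describing each value.
from_xml = {child.tag: child.text for child in ET.fromstring(xml_text)}

# CSV: a header row names the columns for every record that follows.
from_csv = dict(next(csv.DictReader(io.StringIO(csv_text))))

# All three parse to the same field/value mapping.
print(from_json == from_xml == from_csv)
```

In each case the tags, keys, or header row are the metadata that give the values their meaning, without a database schema having been defined in advance.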

Examples of semi-structured data

Semi-structured data exists in a multitude of formats, some of which are given below.

1. CSV files (comma-separated values)

2. Electronic mail

3. HTML or “HyperText Markup Language”

4. Web pages

5. Tools that can be used to maintain non-relational data

6. EDI (Electronic Data Interchange) – A collection of standards used for business information exchange.

Examples of Structured data tools

a) OLAP: High-performance, multidimensional data analysis from centralized, unified data stores.

b) SQLite: A self-contained, serverless transactional relational database engine that requires no configuration.

c) MySQL: Integrates data into widely used software, including mission-critical, high-volume production systems.

d) PostgreSQL: SQL and JSON querying are supported, as are high-level programming languages (C/C++, Java, Python, and so on).

Use cases for structured data

Customer relationship management (CRM): CRM software uses analytical tools to process structured data to generate data revealing customer behaviour patterns and trends.

Online booking: Hotel and ticket reservation data (e.g., dates, prices, destinations, etc.) fits the “rows and columns” format of the predefined data model.

Accounting: Accounting firms or departments process and record financial transactions using structured data.

Unstructured data tools

MongoDB: Uses flexible documents to process data for cross-platform applications and services.

DynamoDB: With built-in security, in-memory caching, and backup and restore, DynamoDB delivers single-digit millisecond performance at any scale.

Hadoop: A framework that allows for the distributed processing of large data sets using simple programming models that do not require formatting.

Azure: Azure is a cloud platform that hosts solutions as a collection of highly secure services that can be consumed for various computing purposes, including storage, processing and consumption of structured as well as unstructured datasets at scale.

Use cases for unstructured data

Data mining: Enables businesses to identify consumer behaviour, product sentiment, and purchasing patterns to serve their customers better using unstructured data.

Predictive data analytics: Alerts businesses ahead of time to significant activity so that they can properly plan for and adjust to significant market shifts.

Chatbots: Perform text analysis to route customers’ questions to the appropriate answer sources.

Differentiating structured and unstructured data:

(1)Structured data is stored in a structure with clearly defined types, whereas unstructured data is typically stored in its native format. Structured data is organized in rows and columns that map to predefined fields, which makes it easy to access in relational databases. Unstructured data does not have a predefined data model.

(2)Structured data is often quantitative, consisting of hard numbers or things that can be counted or categorized. It can be analyzed using regression, which predicts relationships between variables; classification, which estimates the probability of an event; and clustering, which groups data based on different attributes. Unstructured data is usually characterized as qualitative data, which cannot be processed and analyzed using conventional tools and methods. It can be analyzed by extracting insights using advanced analytics techniques like data mining and stacking.

(3)Structured data is often stored in data warehouses, while unstructured data is stored in data lakes. Structured data requires less storage space as compared to unstructured data. When it comes to storing data, structured data is usually stored in a relational database (RDBMS). In contrast, the best fit for unstructured data is instead so-called non-relational, or NoSQL, databases.

(4)Without a predefined structure, data mining becomes complicated. Structured data is easy to find, whereas unstructured data is more difficult and requires processing to become understandable. Hence, there is a necessity to develop best practices for handling data like rich media, blogs, social media data, and customer communication.

(5)Structured data is stored in a predefined format, while unstructured data comes in multiple formats, shapes, and sizes. There is no data model for unstructured data; it is stored natively or in a data lake with no transformation required.

(6)Generally, Structured data is stored in predefined models and formats. In contrast, unstructured data is kept in its original format until it is extracted for analysis.
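As a small illustration of the regression technique mentioned in point (2), the sketch below fits a straight line to structured numeric data by ordinary least squares in plain Python. The figures are made up so that the arithmetic is easy to follow, and the variable names are illustrative.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor: y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical structured data: years as a customer vs. number of products held.
years = [1, 2, 3, 4]
products = [3, 5, 7, 9]

slope, intercept = fit_line(years, products)
predicted = intercept + slope * 5     # estimate for a five-year customer
print(slope, intercept, predicted)
```

This works directly because each column holds clean numbers; unstructured inputs such as call recordings or emails would first have to be converted into numeric features before a technique like this could be applied.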

| Structured data | Unstructured data |
| --- | --- |
| Quantitative data that consists of numbers and values | Qualitative data that consists of audio, video, sensors, descriptions, and more |
| Methods of analysis include classification, regression, and clustering | Advanced analytics like data stacking and mining are generally used |
| Stored in tabular formats like Excel sheets or SQL databases | Stored as audio files, video files, or NoSQL databases |
| Has a predefined data model | Does not have a predefined data model |
| Stored in data warehouses | Stored in data lakes |
| Requires less storage space | Requires large storage |
| Tools generally scale vertically (adding processing power, memory, storage, etc. to a single node) | Tools generally scale horizontally (adding more nodes) |
| Easy to search and understand | Difficult to search and understand |

1.6.7 Summary

Semi-structured data is a category that falls between structured and unstructured data. It is the data that has some kind of tagging structure but still doesn’t fit into the formal structure of a relational database.

Even though Big Data algorithms can analyze structured data much more easily, it’s critical not to overlook unstructured and semi-structured data, since over 80% of all enterprise data falls into these categories. Unstructured data is more difficult to analyze, but omitting it will leave enormous blind spots. Fortunately, as technology progresses, the hidden insights in unstructured data are becoming more accessible.

Unstructured data, though difficult to manage, provides immense value to any organization for improving its customer experience and the bottom line.

Leveraging the data collected in structured and unstructured formats ensures “systematic listening,” maximizing the value of unique customer insights. Many insights about customer requirements and feelings are hidden in unstructured data. Apart from analyzing this data, listening to customers’ feelings through social media, community forums, emails, chats, surveys, reviews, and calls is very important.

Structured data is traditionally easier for Big Data applications to process. Still, current data analytics solutions are more focused on exploring unstructured data.

| Structured data | Unstructured data |
| --- | --- |
| Has a predefined data model; is formatted to a set data structure before being stored. | Is not structured in a predefined way; is stored in its native formats. |
| Consists of numbers, values and strings. | Consists of images, sensors, audio, video, text files and more. |
| Sourced from online forms, network logs, web server logs, GPS sensors. | Sourced from word processing documents, PDF files, email messages, etc. |
| Requires less storage space. Can be stored in tabular formats (Excel sheets, SQL databases) and data warehouses. | Requires more storage space. Can be stored as media files, or in NoSQL databases or data lakes. |
| Examples include dates, social security numbers, customer names and addresses, credit card numbers, etc. | Examples include emails, text files, reports, audio and video files, images, scanned PDFs, etc. |
| Makes up an estimated 20% of enterprise data. | Makes up an estimated 80% of enterprise data. |

Structured data v. Unstructured data. Source: https://blog.collabware.com

Best practices to keep your analytics clean and healthy

Roughly 80% of corporate data under management falls under the category of unstructured data. Following best practices will help you to improve the governance of these data.

1. Use only trusted data sources

The data that companies have directly created and accumulated is generally trustworthy, but most organizations also acquire data from external sources as they build an aggregated data repository for analytics.

To be confident of the reliability of such data, one should vet the data provider, understand the data source and know how the provider has prepared and secured the data.

Checking vendor governance standards to ensure they align with your standards should be routine before selecting a vendor. Before entering into an agreement, you should also examine the vendor’s latest IT audit report, focusing on governance and security performance.

2. Put in place unstructured data guidelines for user access and permissions

Unstructured data may not normally have a system of firm rules in place for user access and permissions. Access to unstructured data should follow the same rules that apply to structured data.

Access to unstructured data should be limited to those users who require the data. Access matrix/privileges should be defined depending on job function or role. Access controls should be periodically reviewed as per corporate guidelines.
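A role-based access matrix of the kind described above can be sketched as a simple lookup. The roles, data stores, and permissions below are hypothetical examples, not a recommended policy.

```python
# Hypothetical access matrix: role -> data store -> permitted actions.
ACCESS_MATRIX = {
    "relationship_manager": {"customer_emails": {"read"}},
    "compliance_officer": {
        "customer_emails": {"read"},
        "call_recordings": {"read"},
    },
    "data_steward": {
        "customer_emails": {"read", "delete"},
        "call_recordings": {"read", "delete"},
    },
}

def is_allowed(role: str, store: str, action: str) -> bool:
    """Deny by default; allow only what the matrix grants explicitly."""
    return action in ACCESS_MATRIX.get(role, {}).get(store, set())

print(is_allowed("relationship_manager", "customer_emails", "read"))
print(is_allowed("relationship_manager", "call_recordings", "read"))
```

Keeping the matrix as data rather than scattering checks through application code makes the periodic review mentioned above easier, since the whole policy can be read in one place.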

3. Secure your data

Secure unstructured data with the same level of security as structured data, using measures such as:

Trusted networks

Strong access control protocols

User access methods/monitoring

Perimeter defence that monitors/checks for vulnerabilities and potential breaches

Data Leakage Prevention measures

Physical protection – hardware should be physically caged and secured with strict access control

Cloud security protocols

Wherever possible, ensure that only authorized users can gain access.

4. Robust tracking of User logging and traceability

Tight monitoring of who or what is accessing the data, when, and from where is of great importance for the governance of unstructured data.

5. Disposal of bad data

Bad data should be eliminated as a continuous process so that it never burdens the storage and processing power. Big data repositories should also be regularly refreshed and revisited, and data that is no longer needed should be discarded.
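One way to make disposal a continuous process is a small purge routine that runs on a schedule. The sketch below is only an illustration: it treats incomplete records, exact duplicates, and records older than a hypothetical seven-year retention period as "bad data", and the field names are assumptions.

```python
from datetime import date, timedelta

RETENTION = timedelta(days=365 * 7)  # hypothetical seven-year retention rule

def purge(records, today):
    """Keep only complete, unique records that are still within retention."""
    seen = set()
    kept = []
    for rec in records:
        if not rec.get("customer_id") or not rec.get("text"):
            continue                      # incomplete record
        if today - rec["created"] > RETENTION:
            continue                      # past the retention period
        key = (rec["customer_id"], rec["text"])
        if key in seen:
            continue                      # exact duplicate
        seen.add(key)
        kept.append(rec)
    return kept

records = [
    {"customer_id": "C001", "text": "Card blocked", "created": date(2024, 3, 1)},
    {"customer_id": "C001", "text": "Card blocked", "created": date(2024, 3, 1)},
    {"customer_id": "", "text": "No id", "created": date(2024, 3, 2)},
    {"customer_id": "C002", "text": "Old complaint", "created": date(2010, 1, 5)},
]
clean = purge(records, today=date(2025, 1, 1))
print(len(clean))
```

In practice the definition of bad data and the retention period would come from the organization's own governance and regulatory requirements rather than from fixed constants like these.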

Data Storage

The digital recording of information and documents and their retention on a storage medium for future use is referred to as data storage. Storage systems may use electromagnetic, optical, or other media to preserve and restore data as needed. In the event of a computer disaster or cyberattack, data storage makes it simple to back up files for safekeeping and speedy recovery.

Data can be physically saved on hard drives, disc drives, USB drives, or on the cloud. It is vital that your files are frequently backed up and available in the event that your systems fail. Reliability, comprehensive security features, and the cost to deploy and maintain the infrastructure are the most critical factors to consider when purchasing any data storage device. Examining various data storage options and applications will assist you in making the right decision for your company’s needs.