Domain 1: Understanding the Foundations of Artificial Intelligence

Understand the basic elements of AI and ML

● Understand common elements of AI/ML definitions under new and emerging law:

○ 1. Technology (engineered or machine-based system; or logic, knowledge, or learning algorithm)

■ AI is “An engineered or machine-based system that can, for a given set of objectives, generate outputs such as predictions, recommendations, or decisions influencing real or virtual environments. AI systems are designed to operate with varying levels of autonomy.” - NIST

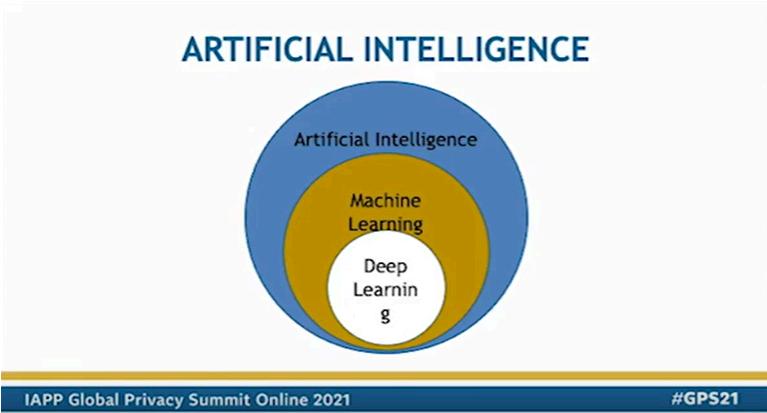

■ “Machine learning (ML) is a branch of artificial intelligence (AI) and computer science that focuses on using data and algorithms to enable AI to imitate the way that humans learn, gradually improving its accuracy.” (source)

■ Knowledge-based systems are a form of artificial intelligence (AI) designed to capture the knowledge of human experts to support decision-making. An expert system is an example of a knowledge-based system because it relies on human expertise. (source)

○ 2. Automation (elements of varying levels)

■ Automation is any technology that reduces human labor, especially for predictable or routine tasks.

■ AI simulates human intelligence with machines that can learn, reason, and act independently.

■ Essentially, automation is about setting up machines to follow commands. AI is about setting up machines to mimic humans and think for themselves. (source)

○ 3. Role of humans (define objectives or provide data)

■ AI can enhance the work of humans; it is meant to make their work more efficient and of better quality.

■ Humans may define the objective for an AI, or may provide the data to train the AI to perform a certain objective (analyze data, create something, etc.)

■ “Humans decide which datasets are discoverable, linked and analyzed” (source)

■ Humans determine whether data, algorithms, algorithmic methods, code and people, as brought together and operating within technical, operational and legal controls, safeguards and guide rails specified by the same or different humans, are reliable and safe to produce appropriate outputs that lead to appropriate outcomes on humans or the environment. Humans, directly or indirectly, consciously or otherwise, determine what is appropriate. (source)

○ 4. Output (content, predictions, recommendations, or decisions)

■ Predictive AI - offers predictions, can be used to inform recommendations or decisions (source)

■ Generative AI - generates new content, such as text, images, and video

| Parameters | Generative AI | Predictive AI |
| --- | --- | --- |
| Objective | Generates new, original content or data | Predicts and analyzes existing patterns or outcomes |
| Function | Creates new information or content | Makes predictions based on existing data |
| Training data | Requires diverse and comprehensive data | Requires historical data for learning and prediction |
| Examples | Text generation, image synthesis | Forecasting, classification, regression |
| Learning process | Learns patterns and relationships in data | Learns from historical data to make predictions |
| Use cases | Creative tasks, content creation | Business analytics, financial forecasting |
| Challenges | May lack specificity in output | Limited to existing patterns, may miss novel scenarios |
| Training complexity | Generally more complex and resource-intensive | Requires less complex training compared to generative models |
| Creativity | Generative AI is creative and produces things that have never existed before | Predictive AI lacks the element of content creation |
| Different algorithms | Generative AI uses complex algorithms and deep learning to generate new content based on the data it is trained on | Predictive AI generally relies on statistical algorithms and machine learning to analyze data and make predictions |

(source)

● Understand what it means that an AI system is a socio-technical system.

○ A socio-technical system is a type of system in which social and technical elements are intertwined with each other. AI systems are considered socio-technical because they are not just technical tools but also have a social impact on the people who use them and are affected by them. (source)

● Understand the need for cross-disciplinary collaboration (ensure UX, anthropology, sociology, linguistics experts are involved and valued)

○ “allows complex problems to be solved that a single discipline alone could not handle” (source) (reference)

● Knowledge of the OECD framework for the classification of AI systems

○ https://oecd.ai/en/classification

○ The Framework is used to:

■ Promote a common understanding of AI: Identify features of AI systems that matter most, to help governments and others tailor policies to specific AI applications and help identify or develop metrics to assess more subjective criteria (such as well-being impact).

■ Inform registries or inventories: Help describe systems and their basic characteristics in inventories or registries of algorithms or automated decision systems.

■ Support sector-specific frameworks: Provide the basis for more detailed application or domain-specific catalogs of criteria, in sectors such as healthcare or in finance.

■ Support risk assessment: Provide the basis for related work to develop a risk assessment framework to help with de-risking and mitigation and to develop a common framework for reporting about AI incidents that facilitates global consistency and interoperability in incident reporting.

■ Support risk management: Help inform related work on mitigation, compliance and enforcement along the AI system lifecycle, including as it pertains to corporate governance.

○ The framework classifies AI systems and applications along the following dimensions:

■ People & Planet- This considers the potential of applied AI systems to promote human-centric, trustworthy AI that benefits people and planet. In each context, it identifies individuals and groups that interact with or are affected by an applied AI system. Core characteristics include users and impacted stakeholders, as well as the application’s optionality and how it impacts human rights, the environment, well-being, society and the world of work.

■ Economic Context- This describes the economic and sectoral environment in which an applied AI system is implemented. It usually pertains to an applied AI application rather than to a generic AI system, and describes the type of organization and functional area for which an AI system is developed. Characteristics include the sector in which the system is deployed (e.g., healthcare, finance, manufacturing), its business function and model; its critical (or non-critical) nature; its deployment, impact and scale; and its technological maturity.

■ Data & Input- This describes the data and/or expert input with which an AI model builds a representation of the environment. Characteristics include the provenance of data and inputs, machine and/or human collection method, data structure and format, and data properties. Data & Input characteristics can pertain to data used to train an AI system (“in the lab”) and data used in production (“in the field”)

■ AI Model- This is a computational representation of all or part of the external environment of an AI system – encompassing, for example, processes, objects, ideas, people and/or interactions that take place in that environment. Core characteristics include technical type, how the model is built (using expert knowledge, machine learning or both) and how the model is used (for what objectives and using what performance measures).

■ Task & Output- This refers to the tasks the system performs, e.g., personalisation, recognition, forecasting or goal-driven optimisation; its outputs; and the resulting action(s) that influence the overall context. Characteristics of this dimension include system task(s); action autonomy; systems that combine tasks and actions like autonomous vehicles; core application areas like computer vision; and evaluation methods.
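The five dimensions above can double as the fields of an inventory or registry entry. The following is a minimal sketch under that assumption: the `AISystemRecord` class, its field names, and the sample loan-screening entry are hypothetical illustrations and are not defined by the OECD framework itself.

```python
from dataclasses import dataclass, asdict
from typing import List


# Hypothetical inventory record: one free-text field per OECD dimension.
@dataclass
class AISystemRecord:
    name: str
    people_and_planet: str   # users, impacted stakeholders, optionality
    economic_context: str    # sector, business function, criticality
    data_and_input: str      # provenance, collection method, format
    ai_model: str            # technical type, how it is built and used
    task_and_output: str     # task(s), outputs, action autonomy


def missing_dimensions(record: AISystemRecord) -> List[str]:
    """Return the names of the dimensions left blank in a record."""
    return [
        field
        for field, value in asdict(record).items()
        if field != "name" and not value.strip()
    ]


# Made-up example entry with one dimension not yet documented.
record = AISystemRecord(
    name="loan-screening-model",
    people_and_planet="Loan applicants; use is not optional for them",
    economic_context="Finance; credit underwriting; critical function",
    data_and_input="Historical applications, structured, human-collected",
    ai_model="",
    task_and_output="Forecasting; recommendation reviewed by a human",
)
print(missing_dimensions(record))
```

A check like `missing_dimensions` is one simple way to spot systems whose classification is incomplete before they are entered into a registry.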

● Understand the use cases and benefits of AI (recognition, event detection, forecasting, personalization, interaction support, goal-driven optimization, recommendation)

○ “By producing fresh content, generative AI is used to augment but not replace the work of writers, graphic designers, artists, and musicians. It is particularly useful in the business realm in areas like product descriptions, variations to existing designs, or helping commercial artists explore different concepts. Among its most common use cases, generative AI can:

■ Text: Generate credible text on various topics. It can compose business letters, provide rough drafts of articles, and compose annual reports.

■ Images: Output realistic images from text prompts, create new scenes, and simulate a new painting.

■ Video: Compile video content from text automatically and put together short videos using existing images.

■ Music: Compile new musical content by analyzing a music catalog and rendering a new composition.

■ Product design: Can be fed inputs from previous versions of a product and produce several possible changes that can be considered in a new version.

■ Personalization: Personalize experiences for users such as product recommendations, tailored experiences, and new material that closely matches their preferences.” (source)

○ “Predictive AI is finding innumerable use cases across a wide range of industries. If managers knew the future, they would always take appropriate steps to capitalize on how things were going to turn out. Anything that improves the likelihood of knowing the future has high value in business. Predictive AI use cases include financial forecasting, fraud detection, healthcare, and marketing. Predictive AI can:

■ Financial services: Enhances financial forecasts. By pulling data from a wider data set and correlating financial information with other forward-looking business data, forecasting accuracy can be greatly improved.

■ Fraud detection: Spot potential fraud by sensing anomalous behavior. In banking and e-commerce, there might be an unusual device, location, or request that doesn’t fit with the normal behavior of a specific user. A login from a suspicious IP address, for example, is an obvious red flag.

■ Healthcare: Find use cases such as predicting disease outbreaks, identifying higher-risk patients, and spotting the most successful treatments.

■ Marketing: More closely define the most appropriate channels and messages to use in marketing. It can provide marketing strategists with the data they need to write impactful campaigns and thereby bring about greater success.” (source)
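The fraud detection use case above comes down to flagging behavior that does not fit a user's normal pattern. A minimal sketch of that idea, assuming a simple z-score rule: the `is_anomalous` function, the threshold of 3 standard deviations, and the transaction amounts are all illustrative assumptions, and production systems typically use far richer signals (device, location, request type).

```python
from statistics import mean, stdev


def is_anomalous(history, amount, threshold=3.0):
    """Flag an amount that lies more than `threshold` standard
    deviations away from the user's historical average."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # No variation in the history: anything different is unusual.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Made-up purchase history for one user.
history = [20.0, 22.0, 19.0, 21.0, 23.0, 20.0]

print(is_anomalous(history, 500.0))  # far outside the usual range
print(is_anomalous(history, 22.0))   # consistent with past behavior
```

The baseline is computed from past behavior only, so a single extreme new value cannot inflate the standard deviation it is being compared against.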

Understand the differences among types of AI systems

● Understand the differences between strong/broad and weak/narrow AI.

○ “Weak AI, often known as narrow AI, is a category of artificial intelligence confined to a singular or limited domain. Weak AI mimics human thought processes. By performing time-consuming operations and conducting data analysis through methods that people can’t always use, this technology can be advantageous to society.” “It cannot break the rules; it just adheres to them and is constrained by them. Weak AI helps convert enormous amounts of data into useful information by identifying patterns and generating predictions.” (source)

○ “Strong AI, also known as artificial general intelligence (AGI) or deep AI, is a computer system with a comprehensive intellect capable of learning and employing its intellectual ability to solve any problem.” “It can understand, work and have a thought process different from humans in certain situations. Strong AI uses an understanding of mind AI framework to understand the goals, motivations, standards, and cognitive processes that govern other intelligent beings.” (source)

| Weak AI | Strong AI |
| --- | --- |
| Limited to performing specific tasks | Performs intelligent, human-level activities |
| Programmed for a fixed function | Has the ability to learn, think and perform new activities like humans |
| Has no consciousness or awareness of its own | Possesses creativity, common sense and logic like humans |
| Its goal is to complete a task with creative and accurate solutions | Its goal is to solve problems at a faster pace |
| Examples of weak AI include Alexa, Siri and Google Assistant, NLP, Google Maps (suggesting alternative routes), ChatGPT, and email spam filters | There are no real examples of strong AI because it is a hypothetical theory. Some fictional examples are Wall-E and Big Hero 6. Useful for: cyber security, robots with high intellect, integration of strong AI in IoT (Internet of Things), language translation machines, image recognition systems |

(source)

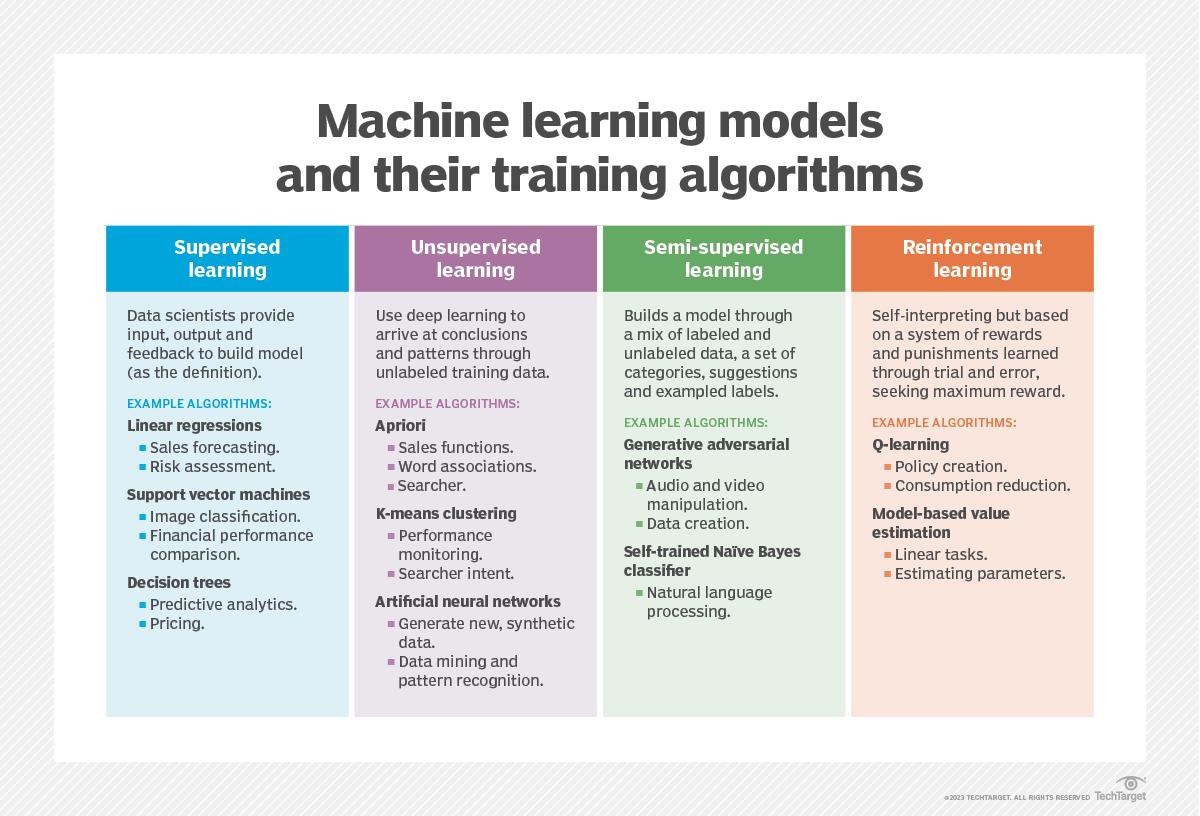

● Understand the basics of machine learning and its training methods (supervised, unsupervised, semi-supervised, reinforcement)

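○ Supervised- Trains a model on labeled examples. Classification and regression are two common supervised learning algorithmic types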

■ “Classification algorithms decide the category of an entity, object or event as represented in the data. The simplest classification algorithms answer binary questions such as yes/no, sales/not-sales or cat/not-cat. More complicated algorithms lump things into multiple categories like cat, dog or mouse. Popular classification algorithms include decision trees, logistic regression, random forest and support vector machines.” (source)

■ “Regression algorithms identify relationships within multiple variables represented in a data set. This approach is useful when analyzing how a specific variable such as product sales correlates with changing variables like price, temperature, day of week or shelf location. Popular regression algorithms include linear regression, multivariate regression, decision tree and least absolute shrinkage and selection operator (lasso) regression.” (source)
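To make the two supervised task types concrete, here is a minimal sketch in plain Python. It uses a nearest-centroid rule for classification and ordinary least squares for regression; both are swapped-in simple techniques chosen for brevity, not the algorithms named in the quotes above, and the animal weights and price data are made up.

```python
from statistics import mean


def fit_centroids(points, labels):
    """Classification: average the labeled examples of each class."""
    return {
        c: mean(p for p, y in zip(points, labels) if y == c)
        for c in sorted(set(labels))
    }


def classify(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda c: abs(centroids[c] - x))


def fit_line(xs, ys):
    """Regression: least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    variance = sum((x - x_bar) ** 2 for x in xs)
    slope = covariance / variance
    return slope, y_bar - slope * x_bar


# Labeled training data (made up): animal weight in kg -> category.
weights = [3.5, 4.0, 4.5, 20.0, 25.0, 30.0]
labels = ["cat", "cat", "cat", "dog", "dog", "dog"]
centroids = fit_centroids(weights, labels)
print(classify(centroids, 5.0), classify(centroids, 22.0))

# Labeled training data (made up): price -> units sold.
prices = [1.0, 2.0, 3.0, 4.0]
units = [90.0, 80.0, 70.0, 60.0]
slope, intercept = fit_line(prices, units)
print(slope, intercept)
```

In both cases the model learns from examples that already carry the right answer (a category or a number), which is what makes the learning supervised.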

○ Unsupervised- Automates the process of finding patterns in a dataset

Clustering and dimensionality reduction are two common unsupervised learning algorithmic types

■ “Clustering algorithms help group similar sets of data together based on various criteria. Practitioners can segment data into different groups to identify patterns within each group.” (source)

■ “Dimension reduction algorithms explore ways to compact multiple variables efficiently for a specific problem.” (source)
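Clustering can be illustrated with a small k-means sketch on one-dimensional data. This is an assumption-laden toy: k-means is one clustering algorithm among many, the starting centers are fixed by hand rather than chosen randomly, and the customer spend values are made up.

```python
def kmeans_1d(values, centers, iterations=10):
    """Repeat two steps: assign each value to its nearest center,
    then move each center to the mean of its assigned values."""
    centers = list(centers)
    groups = [[] for _ in centers]
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Keep the old center if no value was assigned to it.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


# Unlabeled data (made up): monthly spend per customer.
spend = [10, 12, 11, 95, 100, 105]
centers, groups = kmeans_1d(spend, centers=[0, 50])
print(centers, groups)
```

No labels are supplied; the low-spend and high-spend segments emerge from the data alone, with centers settling at 11.0 and 100.0 for this input.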

○ Semi-supervised- Characterizes processes that use unsupervised learning algorithms to automatically generate labels for data that can be consumed by supervised techniques.

○ Reinforcement- Learns by trial and error from reward feedback; often used to improve models after they’ve been deployed. The most common reinforcement learning algorithms use various neural networks. (source)
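Reinforcement learning is easiest to see in a tiny trial-and-error loop. The sketch below is an epsilon-greedy multi-armed bandit, a deliberately simple stand-in that uses a table of estimates instead of the neural networks mentioned above. The reward values, noise range, and `run_bandit` function are illustrative assumptions.

```python
import random


def run_bandit(mean_rewards, steps=500, epsilon=0.1, seed=0):
    """Learn which action pays best purely from observed rewards."""
    rng = random.Random(seed)
    n = len(mean_rewards)
    estimates = [0.0] * n   # running average reward per action
    counts = [0] * n
    for step in range(steps):
        if step < n:
            arm = step                       # try every action once
        elif rng.random() < epsilon:
            arm = rng.randrange(n)           # explore a random action
        else:
            arm = max(range(n), key=lambda a: estimates[a])  # exploit
        # The environment returns a noisy reward; the agent never
        # sees mean_rewards directly.
        reward = mean_rewards[arm] + rng.uniform(-0.1, 0.1)
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates


estimates = run_bandit([0.2, 0.8, 0.5])
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_arm)
```

The agent is never told which action is correct. It only receives a reward after acting, and its estimates improve as it balances exploring new actions against exploiting the best one found so far.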

● Understand deep learning, generative AI, multi-modal models, transformer models, and the major providers.

○ Deep learning

■ “Deep learning is a type of machine learning and artificial intelligence (AI) that imitates the way humans gain certain types of knowledge. Deep learning models can be taught to perform classification tasks and recognize patterns in photos, text, audio and other various data. It is also used to automate tasks that would normally need human intelligence, such as describing images or transcribing audio files.” (Source)

■ Enables a computer to learn by example

■ Can be used for digital assistants, fraud detection, and facial recognition

■ Is able to create accurate predictive models from large amounts of unlabeled, unstructured data

(source)

○ Generative AI

■ Generates content, like images and text. It is a newer form of AI than predictive AI, which recognizes patterns across time. It is a type of deep learning that generates content resembling existing data.

○ Multi-modal models

■ A subset of deep learning that deals with the fusion and analysis of data from multiple modalities, such as text, images, video, audio, and sensor data. Combines the strengths of different modalities to create a more complete representation of the data, leading to better performance on various machine learning tasks. (source)

■ Multimodal learning presents two primary benefits (source):

● Multiple sensors observing the same data can make more robust predictions, because detecting changes in it may only be possible when both modalities are present.

● The fusion of multiple sensors can facilitate the capture of complementary information or trends that may not be captured by individual modalities

■ In general, multimodal architectures consist of three parts (source):

● Unimodal encoders that encode individual modalities, usually one for each input modality.

● A fusion network that combines the features extracted from each input modality during the encoding phase.

● A classifier that accepts the fused data and makes predictions.
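The three-part architecture above can be sketched with toy functions standing in for each part. Everything here is a hypothetical illustration: the hand-written encoders, fusion by simple concatenation, and the fixed classifier weights replace what would be trained neural networks in a real multimodal model.

```python
def encode_text(text):
    """Toy text encoder: [word count, number of exclamation marks]."""
    return [float(len(text.split())), float(text.count("!"))]


def encode_image(pixels):
    """Toy image encoder: [mean brightness, max brightness]."""
    return [sum(pixels) / len(pixels), float(max(pixels))]


def fuse(*feature_vectors):
    """Fusion step: concatenate the per-modality feature vectors."""
    return [x for vec in feature_vectors for x in vec]


def classify(fused, weights, bias):
    """Linear classifier over the fused representation."""
    score = sum(w * x for w, x in zip(weights, fused)) + bias
    return "alert" if score > 0 else "normal"


# Hand-picked weights (an assumption; normally learned from data).
WEIGHTS = [0.0, 1.0, 1.0, 0.0]
BIAS = -1.5

fused = fuse(encode_text("Fire in the lobby!"), encode_image([0.9, 0.8, 1.0]))
label = classify(fused, WEIGHTS, BIAS)

quiet = fuse(encode_text("All quiet"), encode_image([0.1, 0.2, 0.1]))
quiet_label = classify(quiet, WEIGHTS, BIAS)
print(label, quiet_label)
```

With these weights, neither the exclamation mark nor the bright image alone pushes the score above zero; only the combination does, which mirrors the point that fused modalities can capture what a single modality misses.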

○ Transformer models

■ A type of deep learning model used for natural language processing (NLP). These models can translate text and speech in near-real-time. Transformer models work by processing input data, which can be sequences of tokens or other structured data, through a series of layers that contain self-attention mechanisms and feedforward neural networks. Transformer models are trained using supervised learning, where they learn to minimize a loss function that quantifies the difference between the model's predictions and the ground truth for the given task. (source)
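The self-attention mechanism mentioned above can be sketched in a few lines. This is a simplified version of scaled dot-product attention, assuming the token vectors serve directly as queries, keys, and values; real transformer layers apply learned projection matrices first, and the three token vectors here are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Each token's output is a weighted mix of all token vectors,
    weighted by how similar (dot product) they are to that token."""
    d = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in tokens
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * value[i] for w, value in zip(weights, tokens))
            for i in range(d)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one token attends to every token in the sequence, which is how the model relates tokens to each other regardless of their distance.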

● Understand natural language processing: text as input and output.

○ “the input is a block of text (either written text or text converted from speech), and the output is some desired characteristic of the text, such as its purpose (search query, command, review, etc.) and meaning or tone (positive, negative, angry, biased).” (source)
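The text-in, characteristic-out pattern described above can be shown with a toy tone detector. This is a minimal sketch assuming a hand-made word list; the `POSITIVE` and `NEGATIVE` sets and the `tone` function are hypothetical, and modern NLP systems learn such associations from data rather than from fixed lexicons.

```python
import re

# Tiny hand-made lexicons (illustrative only).
POSITIVE = {"great", "good", "love", "excellent"}
NEGATIVE = {"bad", "terrible", "hate", "awful"}


def tone(text):
    """Input: a block of text. Output: a label describing its tone."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(tone("I love this great product"))
print(tone("Terrible service, I hate it"))
print(tone("The box arrived on Tuesday"))
```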

● Understand the difference between robotics and robotic process automation (RPA)

○ “The term 'robotics' specifically relates to machines that can see, sense, actuate and, with varying degrees of autonomy, make decisions.” (source)

○ “RPA is a software robot that mimics human actions, whereas artificial intelligence is the simulation of human intelligence using computer software.” (source)

Understand the AI technology stack

● “The AI stack is a structural framework comprising interdependent layers, each serving a critical function to ensure the system’s efficiency and effectiveness. Unlike a monolithic architecture, where each component is tightly coupled and entangled, the AI stack’s layered approach allows for modularity, scalability, and easy troubleshooting. This architecture comprises critical components such as data ingestion, data storage, data processing, machine learning algorithms, APIs, and user interfaces. These layers act as the foundational pillars that support the intricate web of algorithms, data pipelines, and application interfaces in a typical AI system. Let’s understand these layers in depth.” (source)

● “The Generative AI tech stack comprises infrastructure, ML models (e.g., GANs, transformers), programming languages, and deployment tools. It's structured in three layers (Applications, Model, and Infrastructure), guiding tech choices for efficient development, cost reduction, and tailored outputs.” (source)

● Platforms and applications

○ “A well-architected AI tech stack fundamentally comprises multifaceted application frameworks that offer an optimized programming paradigm, readily adaptable to emerging technological evolutions. Such frameworks, including LangChain, Fixie, Microsoft’s Semantic Kernel, and Vertex AI by Google Cloud, equip engineers to build applications equipped for autonomous content creation, semantic comprehension for natural language search queries, and task execution through intelligent agents.”

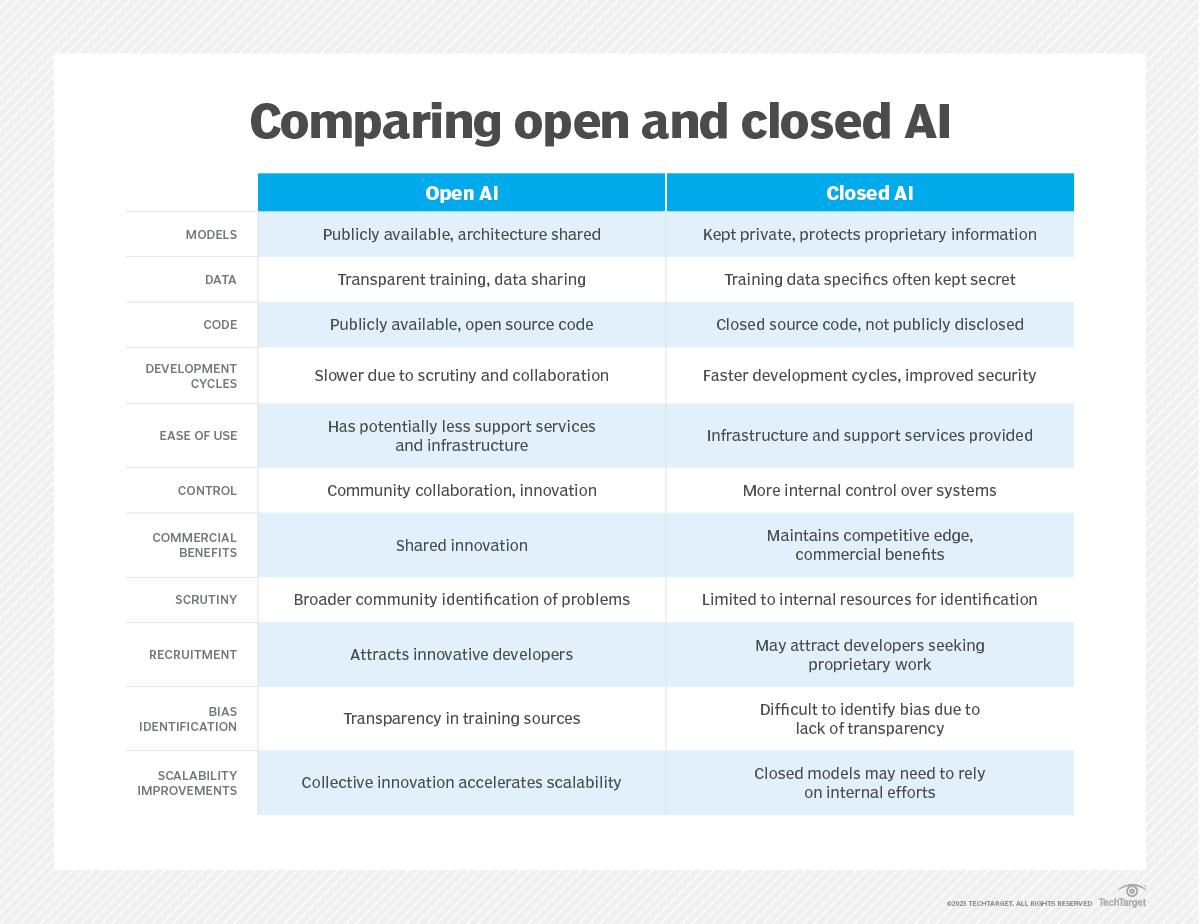

● Model types.

○ Foundation Models (FMs)- large-scale, pre-trained models that essentially serve as the cognitive layer enabling complex decision-making and logical reasoning.

○ Closed-Source Foundation Models- obscure or protect the AI models, provenance of training data, and/or the underlying code. They tend to be faster and can be used via various cloud services. (source)

○ Open-Source Foundation Models- openly share AI models, the provenance of training data and the underlying code. While not as fast, they enable greater scrutiny of underlying code, models and data, which often results in improved explainability and security. (source)

○ Software

■ “Your AI infrastructure will need a software stack that includes machine learning libraries and frameworks (like TensorFlow, PyTorch, or Scikit-learn), a programming language (like Python), and possibly a distributed computing platform (like Apache Spark or Hadoop). You'll also need tools for data preparation and cleaning, as well as for monitoring and managing your AI workloads.” (source)

○ Hardware

■ “Machine learning and AI tasks are often computationally intensive and may require specialized hardware such as GPUs or TPUs. These resources can be in-house, but increasingly, organizations leverage cloud-based resources which can be scaled up or down as needed, providing flexibility and cost-effectiveness.” (source)

■ “Accelerator chips optimized for model training and inference workloads” (source)

Understand the history of AI and the evolution of data science

● 1956 Dartmouth summer research project on AI (source)

○ Birth of AI as a field of research

○ The conference was “to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

● Summers, winters and key milestones.

○ “The peaks, or AI summers, see innovation and investment. The troughs, or AI winters, experience reduced interest and funding.” (source)

○ 1956–1974: THE GOLDEN YEARS

■ During the Golden Years of AI, the programs – including computers solving algebra word problems and learning to speak English – seem "astonishing" to most people.

○ 1974–1980: 20TH CENTURY AI WINTER

■ The first AI winter occurs as the capabilities of AI programs remain limited, mostly due to the lack of computing power at the time. They can still only handle trivial versions of the problems they were supposed to solve.

○ 1987–1993: A RENEWED INTEREST

■ The business community's fascination with and expectations of AI, particularly expert systems, rise. But they are quickly confronted by the reality of their limitations.

● Understand how the current environment is fueled by exponential growth in computing infrastructure and tech megatrends (cloud, mobile, social, IoT, PETs, blockchain, computer vision, AR/VR, metaverse)

○ Increasing computing and storage capacities (source)

○ Enormous growth in the amount of data available for learning and analysis (source)

○ The development of learning machines based on artificial neural networks. (source)

Domain 2: Understanding AI Impacts and Responsible AI Principles

Understand the core risks and harms posed by AI systems

● Understand the potential harms to an individual (civil rights, economic opportunity, safety).

○ “An inaccurate system will implicate people for crimes they did not commit. And it will shift the burden onto defendants to show they are not who the system says they are.” (Source)

○ “Face recognition uniquely impacts civil liberties. The accumulation of identifiable photographs threatens important free speech and freedom of association rights under the First Amendment, especially because such data can be captured without individuals’ knowledge.” (Source)

○ “The collection and retention of face recognition data poses special security risks. All collected data is at risk of breach or misuse by external and internal actors, and there are many examples of misuse of law enforcement data in other contexts. Face recognition poses additional risks because, unlike a social security number or driver’s license number, we can’t change our faces. Law enforcement must do more to explain why it needs to collect so much sensitive biometric and biographic data, why it needs to maintain it for so long, and how it will safeguard it from breaches.” (Source)

○ “Our biometrics are unique to each of us, can’t be changed, and often are easily accessible. Face recognition, though, takes the risks inherent in other biometrics to a new level because it is much more difficult to prevent the collection of an image of your face. We expose our faces to public view every time we go outside, and many of us share images of our faces online with almost no restrictions on who may access them. Face recognition therefore allows for covert, remote, and mass capture and identification of images. The photos that may end up in a database could include not just a person’s face but also how she is dressed and possibly whom she is with.” (Source)

○ “Government surveillance like this can have a real chilling effect on Americans’ willingness to engage in public debate and to associate with others whose values, religion, or political views may be considered different from their own. For example, researchers have long studied the “spiral of silence,” the significant chilling effect on an individual’s willingness to publicly disclose political views when they believe their views differ from the majority.” (Source)

● Understand the potential harms to a group (discrimination towards sub-groups).

○ “Face recognition disproportionately impacts people of color. Face recognition misidentifies African Americans and ethnic minorities, young people, and women at higher rates than whites, older people, and men, respectively. Due to years of well-documented, racially biased police practices, all criminal databases including mugshot databases include a disproportionate number of African Americans, Latinos, and immigrants. These two facts mean people of color will likely shoulder significantly more of the burden of face recognition systems’ inaccuracies than whites.” (Source)

○ “Due to years of well-documented racially-biased police practices, all criminal databases including mugshot databases include a disproportionate number of African Americans, Latinos, and immigrants. These two facts mean people of color will likely shoulder exponentially more of the burden of face recognition inaccuracies than whites.”

○ “If job seekers’ faces are matched mistakenly to mug shots in the criminal database, they could be denied employment through no fault of their own. Even if job seekers are properly matched to a criminal mug shot, minority job seekers will be disproportionately impacted due to the notorious unreliability of FBI records as a whole.”

● Understand the potential harms to society (democratic process, public trust in governmental institutions, educational access, jobs redistribution)

○ Emily Bender claims the real risks and harms are more “about concentration of power in the hands of people, about reproducing systems of oppression, about damage to the information ecosystem, and about damage to the natural ecosystem (through profligate use of energy resources).”

● Understand the potential harms to a company or institution (reputational, cultural, economic, acceleration risks). (source)

○ Sensitive Data Exposure: Handling large datasets can lead to unintended exposure of confidential information, including customer and business data, posing risks of identity theft, financial fraud, and loss of public trust. Protection of privacy and data security have been prioritized and will be subject to closer scrutiny as a result of the recent executive order.

○ Cybersecurity Vulnerabilities: Integration of AI with entities’ institutional platforms can create entry points for hackers, risking not just data theft but also potential disruption of operations, particularly supply chains.

○ Data Control Concerns: Relying on external AI solutions can lead to issues with data control and governance, and can potentially expose companies to additional risks if vendors do not meet ESG or cybersecurity standards.

○ Opaque Decision Processes: The complexity of AI algorithms, especially in deep learning, often results in a lack of transparency and explainability, making it difficult for stakeholders to understand how decisions are made. This “black box” nature of AI can hinder accountability and trust in AI-driven ESG initiatives.

○ Accountability Challenges: In cases where AI-driven decisions lead to adverse ESG outcomes, it can be difficult to attribute responsibility, complicating legal and ethical accountability.

○ Compliance Complexity: AI systems used to enhance ESG performance may not account for or keep up with the rapidly expanding number of ESG-related laws, regulations and standards developing across different regions, increasing the risk of inadvertent non-compliance.

○ Legal Uncertainties: Rapidly evolving AI technologies can outpace existing legal frameworks, creating uncertainties about liability for collection, maintenance and use of data, intellectual property rights, and other legal issues.

● Understand the potential harms to an ecosystem (natural resources, environment, supply chain). (source)

○ High Energy Consumption: The computation-intensive nature of training and running AI, particularly large models, can lead to high energy consumption and significant carbon footprints, potentially contradicting environmental sustainability efforts.

○ Life Cycle Impact of AI Hardware: The production, operation, and disposal of the hardware necessary for AI (e.g., servers, data centers) contribute to environmental concerns such as electronic waste and resource depletion.

Understand the characteristics of trustworthy AI systems

● Understand what it means for an AI system to be “human-centric.”

○ Creating AI systems that amplify and augment rather than displace human abilities. Human-centered AI (HCAI) seeks to preserve human control in a way that ensures artificial intelligence meets our needs while also operating transparently, delivering equitable outcomes, and respecting privacy. (Source)

● Understand the characteristics of an accountable AI system (safe, secure and resilient, valid and reliable, fair).

○ The obligation and responsibility of the creators, operators and regulators of an AI system to ensure the system operates in a manner that is ethical, fair, transparent and compliant with applicable rules and regulations (see fairness and transparency).

○ Accountability ensures the actions, decisions and outcomes of an AI system can be traced back to the entity responsible for it.

● Understand what it means for an AI system to be transparent.

○ The extent to which information regarding an AI system is made available to stakeholders, including disclosing whether AI is used and explaining how the model works

○ It implies openness, comprehensibility and accountability in the way AI algorithms function and make decisions

● Understand what it means for an AI system to be explainable (XAI)

○ The ability to describe or provide sufficient information about how an AI system generates a specific output or arrives at a decision in a specific context to a predetermined addressee.

○ XAI is important in maintaining transparency and trust in AI.

● Understand what it means for an AI system to be privacy-enhanced (Reference) (Reference)

○ Privacy-enhancing technologies (PETs) are a collection of digital technologies and approaches that allow for the collection, processing, analysis, and sharing of information while safeguarding the privacy of personal data. They enable a relatively high level of utility from data while minimizing the need for extensive data collection and processing

○ Embody fundamental data protection principles by minimizing personal data use, maximizing information security, and/or empowering individuals

○ Federated Learning

■ Federated learning is a type of machine learning that enables the training of machine learning models across multiple decentralized devices without transferring the raw data to a central server.

■ Each device trains the model using its local data, and only aggregated updates get shared with the central server. This helps preserve data privacy as the raw data remains on the devices and is never shared.
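The federated learning flow above can be sketched with a one-parameter model. This is a minimal illustration assuming a federated-averaging style of aggregation: the `local_update` and `federated_average` functions, the learning rate, and the two clients' data are hypothetical, and real deployments add secure aggregation, client sampling, and much larger models.

```python
def local_update(w, xs, ys, lr=0.05, steps=1):
    """Run gradient descent on one device for the model y = w * x.
    The raw (xs, ys) data never leaves this function."""
    for _ in range(steps):
        grad = 2 * sum(x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


def federated_average(clients, rounds=20, w=0.0):
    """Server loop: send the current weight out, collect each device's
    updated weight, and average them weighted by local data size."""
    total = sum(len(xs) for xs, _ in clients)
    for _ in range(rounds):
        updates = [(local_update(w, xs, ys), len(xs)) for xs, ys in clients]
        w = sum(w_i * n for w_i, n in updates) / total
    return w


# Two devices holding private data that follows y = 2 * x (made up).
clients = [
    ([1.0, 2.0], [2.0, 4.0]),
    ([3.0], [6.0]),
]
w = federated_average(clients)
print(round(w, 4))
```

The server only ever sees model weights and sample counts. In this toy case the shared model converges toward the true slope of 2 even though no device reveals its data points.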

○ AI-generated synthetic data

■ This approach uses advanced AI algorithms to generate synthetic data with the same statistical properties and correlations as real data but without including personally identifiable information
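The federated learning idea described above can be sketched in a few lines. This is a minimal illustration, not a production protocol: the one-parameter model `y = w * x`, the function names, the learning rate, and the toy client datasets are all assumptions made for the example. Each simulated device trains on its own samples, and the server only ever sees the resulting weight and a sample count.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair held on one device


def local_update(w: float, data: List[Sample], lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps for y = w * x on one device's local data."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(updates: List[Tuple[float, int]]) -> float:
    """Combine client weights, weighting each by its number of local samples."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def train(clients: List[List[Sample]], rounds: int = 50) -> float:
    """Server loop: broadcast w, collect locally trained weights, average them."""
    w = 0.0
    for _ in range(rounds):
        # Only (weight, sample count) leaves each device; raw samples stay local.
        updates = [(local_update(w, data), len(data)) for data in clients]
        w = federated_average(updates)
    return w


# Hypothetical local datasets, all generated from y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]
global_w = train(clients)
print(round(global_w, 3))
```

Weighting by sample count is one common design choice; real deployments typically add protections such as secure aggregation, which this sketch omits.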

Understand the similarities and differences among existing and emerging ethical guidance on AI

● Understand how the ethical guidance is rooted in Fair Information Practices (FIPPs), European Court of Human Rights, and Organization for Economic Cooperation and Development principles

○ Fair Information Practices (FIPPs)- a collection of widely accepted principles that agencies use when evaluating information systems, processes, programs, and activities that affect individual privacy. The FIPPs are not requirements; rather, they are principles that should be applied by each agency according to the agency’s particular mission and privacy program requirements (source)

■ Access and Amendment- Agencies should provide individuals with appropriate access to PII and appropriate opportunity to correct or amend PII

■ Accountability- Agencies should be accountable for complying with these principles and applicable privacy requirements, and should appropriately monitor, audit, and document compliance. Agencies should also clearly define the roles and responsibilities with respect to PII for all employees and contractors, and should provide appropriate training to all employees and contractors who have access to PII

■ Authority- Agencies should only create, collect, use, process, store, maintain, disseminate, or disclose PII if they have authority to do so, and should identify this authority in the appropriate notice.

■ Minimization- Agencies should only create, collect, use, process, store, maintain, disseminate, or disclose PII that is directly relevant and necessary to accomplish a legally authorized purpose, and should only maintain PII for as long as is necessary to accomplish the purpose

■ Quality and Integrity- Agencies should create, collect, use, process, store, maintain, disseminate, or disclose PII with such accuracy, relevance, timeliness, and completeness as is reasonably necessary to ensure fairness to the individual

■ Individual Participation- Agencies should involve the individual in the process of using PII and, to the extent practicable, seek individual consent for the creation, collection, use, processing, storage, maintenance, dissemination, or disclosure of PII. Agencies should also establish procedures to receive and address individuals’ privacy-related complaints and inquiries.

■ Purpose Specification and Use Limitation- Agencies should provide notice of the specific purpose for which PII is collected and should only use, process, store, maintain, disseminate, or disclose PII for a purpose that is explained in the notice and is compatible with the purpose for which the PII was collected, or that is otherwise legally authorized

■ Security- Agencies should establish administrative, technical, and physical safeguards to protect PII commensurate with the risk and magnitude of the harm that would result from its unauthorized access, use, modification, loss, destruction, dissemination, or disclosure.

■ Transparency- Agencies should be transparent about information policies and practices with respect to PII, and should provide clear and accessible notice regarding creation, collection, use, processing, storage, maintenance, dissemination, and disclosure of PII

○ European Court of Human Rights (source)

■ Rules on individual or State applications alleging violations of the civil and political rights set out in the European Convention on Human Rights

○ Organization for Economic Cooperation and Development principles (source)

■ Ensuring the basis of an effective corporate governance framework

● The corporate governance framework should promote transparent and efficient markets, be consistent with the rule of law and clearly articulate the division of responsibilities among different supervisory, regulatory and enforcement authorities

■ The rights and equitable treatment of shareholders and key ownership functions

● ‘The corporate governance framework should protect and facilitate the exercise of shareholders’ rights and ensure the equitable treatment of all shareholders, including minority and foreign shareholders. All shareholders should have the opportunity to obtain effective redress for violation of their rights.’

● Basic shareholder rights should include the right to:

○ Secure methods of ownership registration;

○ Convey or transfer shares;

○ Obtain relevant and material information on the corporation on a timely and regular basis;

○ Participate and vote in general shareholder meetings;

○ Elect and remove members of the board; and

○ Share in the profits of the corporation

■ Institutional investors, stock markets, and other intermediaries

● ‘The corporate governance framework should provide sound incentives throughout the investment chain and provide for stock markets to function in a way that contributes to good corporate governance.’

○ All shareholders of the same series of a class should be treated equally

○ Insider trading and abusive self-dealing should be prohibited

○ Members of the board and key executives should be required to disclose to the board whether they, directly, indirectly or on behalf of third parties, have a material interest in any transaction or matter directly affecting the corporation

■ The role of stakeholders in corporate governance

● The corporate governance framework should recognize the rights of stakeholders established by law or through mutual agreements and encourage active co-operation between corporations and stakeholders in creating wealth, jobs, and the sustainability of financially sound enterprises

■ Disclosure and transparency

● The corporate governance framework should ensure that timely and accurate disclosure is made on all material matters regarding the corporation, including the financial situation, performance, ownership, and governance of the company

■ The responsibilities of the board

● The corporate governance framework should ensure the strategic guidance of the company, the effective monitoring of management by the board, and the board’s accountability to the company and the shareholders

● OECD AI Principles (source)

○ Promotes AI that is innovative and trustworthy and that respects human rights and democratic values. Value-based principles:

■ Inclusive growth, sustainable development and well-being

● Stakeholders should proactively engage in responsible stewardship of trustworthy AI in pursuit of beneficial outcomes for people and the planet, such as augmenting human capabilities and enhancing creativity, advancing inclusion of underrepresented populations, reducing economic, social, gender and other inequalities, and protecting natural environments, thus invigorating inclusive growth, sustainable development and well-being

■ Human-centred values and fairness

● AI actors should respect the rule of law, human rights and democratic values, throughout the AI system lifecycle. These include freedom, dignity and autonomy, privacy and data protection, non-discrimination and equality, diversity, fairness, social justice, and internationally recognised labor rights

● To this end, AI actors should implement mechanisms and safeguards, such as capacity for human determination, that are appropriate to the context and consistent with the state of art

■ Transparency and explainability

● AI actors should commit to transparency and responsible disclosure regarding AI systems. To this end, they should provide meaningful information, appropriate to the context, and consistent with the state of art:

○ to foster a general understanding of AI systems,

○ to make stakeholders aware of their interactions with AI systems, including in the workplace,

○ to enable those affected by an AI system to understand the outcome, and,

○ to enable those adversely affected by an AI system to challenge its outcome based on plain and easy-to-understand information on the factors, and the logic that served as the basis for the prediction, recommendation or decision.

■ Robustness, security and safety

● AI systems should be robust, secure and safe throughout their entire lifecycle so that, in conditions of normal use, foreseeable use or misuse, or other adverse conditions, they function appropriately and do not pose unreasonable safety risk.

● To this end, AI actors should ensure traceability, including in relation to datasets, processes and decisions made during the AI system lifecycle, to enable analysis of the AI system’s outcomes and responses to inquiry, appropriate to the context and consistent with the state of art

● AI actors should, based on their roles, the context, and their ability to act, apply a systematic risk management approach to each phase of the AI system lifecycle on a continuous basis to address risks related to AI systems, including privacy, digital security, safety and bias

■ Accountability

● AI actors should be accountable for the proper functioning of AI systems and for the respect of the above principles, based on their roles, the context, and consistent with the state of art.

● White House Office of Science and Technology Policy Blueprint for an AI Bill of Rights (source)

○ 5 principles:

■ Safe and Effective Systems

● You should be protected from unsafe or ineffective systems

■ Algorithmic Discrimination Protections

● You should not face discrimination by algorithms and systems should be used and designed in an equitable way

■ Data Privacy

● You should be protected from abusive data practices via built-in protections and you should have agency over how data about you is used

■ Notice and Explanation

● You should know that an automated system is being used and understand how and why it contributes to outcomes that impact you

■ Human Alternatives, Considerations, and Fallback

● You should be able to opt out, where appropriate, and have access to a person who can quickly consider and remedy problems you encounter

○ From Principles to Practice: a handbook for anyone seeking to incorporate these protections into policy and practice

● High-level Expert Group AI (source)

○ European Commission appointed a group of experts to provide advice on its artificial intelligence strategy

○ Deliverable 1: Ethics Guidelines for Trustworthy AI

■ The document puts forward a human-centric approach to AI and lists 7 key requirements that AI systems should meet in order to be trustworthy

○ Deliverable 2: Policy and Investment Recommendations for Trustworthy AI

■ Building on its first deliverable, the group put forward 33 recommendations to guide trustworthy AI towards sustainability, growth, competitiveness, and inclusion. At the same time, the recommendations will empower, benefit and protect European citizens

○ Deliverable 3: The final Assessment List for Trustworthy AI (ALTAI)

■ A practical tool that translates the Ethics Guidelines into an accessible and dynamic self-assessment checklist. The checklist can be used by developers and deployers of AI who want to implement the key requirements. This new list is available as a prototype web-based tool and in PDF format

○ Deliverable 4: Sectoral Considerations on the Policy and Investment Recommendations

■ The document explores the possible implementation of the recommendations, previously published by the group, in three specific areas of application: Public Sector, Healthcare and Manufacturing & the Internet of Things.

● UNESCO Principles (source)

○ “provide a basis to make AI systems work for the good of humanity, individuals, societies and the environment and ecosystems, and to prevent harm. It also aims at stimulating the peaceful use of AI systems.”

○ “In addition to the existing ethical frameworks regarding AI around the world, this Recommendation aims to bring a globally accepted normative instrument that focuses not only on the articulation of values and principles, but also on their practical realization, via concrete policy recommendations, with a strong emphasis on inclusion issues of gender equality and protection of the environment and ecosystems.”

○ “protect, promote and respect human rights and fundamental freedoms, human dignity and equality, including gender equality; to safeguard the interests of present and future generations; to preserve the environment, biodiversity and ecosystems; and to respect cultural diversity in all stages of the AI system life cycle”

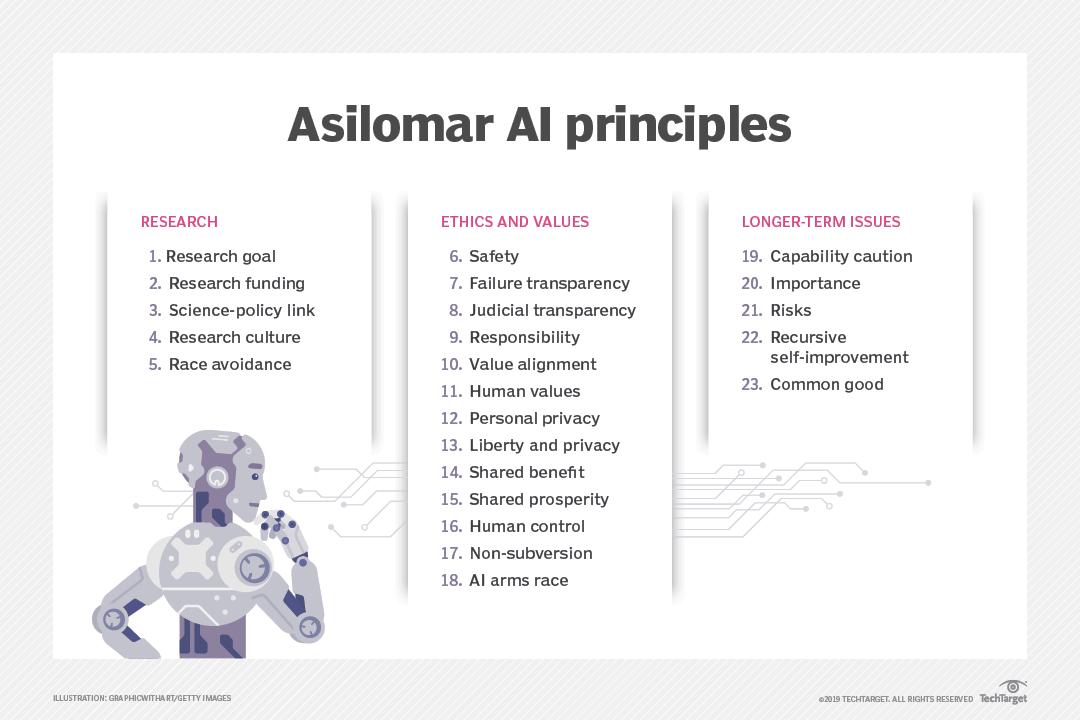

● Asilomar AI Principles

○ “To ensure every stakeholder involved in the design and development of autonomous and intelligent systems is educated, trained, and empowered to prioritize ethical considerations so that these technologies are advanced for the benefit of humanity” (source)

○ Eight general principles: human rights and well-being, transparency, accountability, effectiveness, competence and “awareness of misuse” in addition to “data agency,” giving individuals control over their data

○ Ethics-by-design approach

● CNIL AI Action Plan. (source) (reference)

○ Understanding the functioning of AI systems and their impacts on people: Through its new artificial intelligence service, the CNIL will focus on addressing key data protection issues relevant to the design and operation of AI applications. These issues include the protection of publicly available data on the web against scraping, the protection of data transmitted by users of AI systems, and consequences for the rights of individuals to their data with respect to data collected for training AI applications and the outputs produced by AI systems, among other issues.

○ Guiding the development of AI that respects personal data: To support organizations innovating in the field of AI and to prepare for the potential passage of the EU AI Act, the CNIL will publish guidance and best practices on several AI topics, including a guide on rules applicable to the sharing and re-use of data as well as recommendations for the design of generative AI systems.

○ Supporting innovative players in the AI ecosystem in France and Europe: The CNIL will support actors in the AI ecosystem to innovate in a way that ensures protection of French and European fundamental rights and freedoms. In particular, the CNIL will soon open a new call for projects for participation in its 2023 regulatory sandbox and plans to engage in increased dialogue with research teams, R&D centers and organizations developing AI systems.

○ Auditing and controlling AI systems: The CNIL plans to develop a tool to audit AI systems and will continue to investigate complaints lodged with its office related to AI, including generative AI

Domain 3: Understanding How Current Laws Apply to AI Systems

Understand the existing laws that interact with AI use

● Know the laws that address unfair and deceptive practices.

○ Federal Trade Commission (FTC) Act (US) (Wheeler-Lea Act of 1938)

○ EU Directive on unfair commercial practices from 2005 (source)

○ The Children's Online Privacy Protection Act (COPPA), which governs the collection of information about minors

○ The Gramm Leach Bliley Act (GLBA), which governs personal information collected by banks and financial institutions

○ Telemarketing Sales Rule (TSR), Telephone Consumer Protection Act of 1991, and the Do-Not-Call Registry

○ Junk Fax Protection Act of 2005 (JFPA)

○ Controlling the Assault of Non-Solicited Pornography and Marketing Act of 2003 (CAN-SPAM) and the Wireless Domain Registry

○ Telecommunications Act of 1996 and Customer Proprietary Network Information (CPNI)

○ Cable Communications Policy Act of 1984

○ Video Privacy Protection Act of 1988 (VPPA) and Video Privacy Protection Act Amendments of 2012

○ Driver's Privacy Protection Act (DPPA)

● Know relevant non-discrimination laws (credit, employment, insurance, housing, etc.).

○ “The FCRA regulates "consumer reporting agencies" and people who use the reports generated by consumer reporting agencies. Crucially, a generative AI service potentially could meet the definition of "consumer reporting agency" if the service regularly produces reports about individuals' "character, general reputation, personal characteristics, or mode of living" and these reports are used for employment purposes ” (Source)

○ Confidentiality of Substance Use Disorder Patient Records Rule

■ Prohibits patient information from being used to initiate criminal charges or as a predicate to conduct a criminal investigation of the patient

○ The Fair Credit Reporting Act (FCRA), which regulates the collection and use of credit information

○ Fair and Accurate Credit Transactions Act of 2003 (FACTA)

■ contains protections against identity theft, “red flags” rules

○ Privacy Protection Act of 1980 (PPA)

■ The PPA requires law enforcement to obtain a subpoena in order to obtain First Amendment-protected materials

○ Title VII of the Civil Rights Act of 1964 (“CRA”) prohibits employment discrimination on the basis of race, color, religion, sex, or national origin

○ Title I of the Americans With Disabilities Act (“ADA”) prohibits employment discrimination against “qualified” individuals with disabilities

○ Genetic Information Nondiscrimination Act of 2008 (GINA)

○ Illinois Artificial Intelligence Video Interview Act – Requires that any employer relying on AI technology to analyze a screening interview must provide information to candidates and obtain consent; must also report demographic data to the state to analyze bias

○ Maryland HB 1202 – Prohibits the use of facial recognition technology in the hiring process without consent of applicant

○ NYC Regulation – A bias audit must be conducted on any automated employment decision tool in use; notice must be provided to applicants, and an alternative selection process must be provided

○ The Wiretap Act

● Know relevant product safety laws. Know relevant IP law

○ Consumer Product Safety Act (CPSA), enacted in 1972 for the purposes of protecting consumers against the risk of injury due to consumer products, enabling consumers to evaluate product safety, establishing consistent safety standards, and promoting research into the causes and prevention of injuries and deaths associated with unsafe products (source)

○ The General Product Safety Regulation (GPSR) requires that all consumer products on the EU market are safe, and it establishes specific obligations for businesses to ensure this. It applies to non-food products and to all sales channels (source)

○ “U.S. Patent and Trademark Office (USPTO), U.S. Copyright Office, and courts have yet to fully establish clear guidelines concerning AI-created content or inventions. However, they generally recognize rights only for human authors and inventors. In Europe, the European Patent Office (EPO) and European Union Intellectual Property Office (EUIPO) have similar stances, though discussions are ongoing about potential changes.” (source)

● Understand the basic requirements of the EU Digital Services Act (transparency of recommender systems). (source)

○ The DSA imposes obligations on all information society services that offer an intermediary service to recipients who are located or established in the EU, regardless of whether that intermediary service provider is incorporated or located within the EU

○ Transparency obligations: Advertising, user profiling, and recommender systems

■ Under Article 26, providers of online platforms must supply users with information relating to any online advertisements on their platforms so that the recipients of the services can clearly identify that such information constitutes an advertisement. Providers of online platforms are prohibited from presenting targeted advertisements based on profiling using either the personal data of minors or special category data (as defined in the GDPR)

■ Article 27 requires providers of online platforms that use recommendation systems to set out in their T&Cs the main parameters they use for such systems, including any available options for recipients to modify or influence them. Under Article 38, VLOPs and VLOSEs must provide at least one option (not based on profiling) for users to modify the parameters used.

● Know relevant privacy laws concerning the use of data

○ The Federal (US) Privacy Act of 1974 and the E-Government Act of 2002 require agencies to address the privacy implications of any system that collects identifiable information on the public

○ The Health Insurance Portability and Accountability Act (HIPAA), which governs the collection of health information

○ Health Information Technology for Economic and Clinical Health Act of 2009 (HITECH), which increased penalties under HIPAA and provided greater access rights to individuals

○ The Family Educational Rights and Privacy Act (FERPA), which protects the privacy of student education records

○ Protection of Pupil Rights Amendment of 1978 (PPRA), which prevents the sale of student information for commercial purposes

○ CCPA/CPRA, Virginia Consumer Data Protection Act (VCDPA), CPA, CTDPA, Montana’s Consumer Data Privacy Act, Delaware Personal Data Privacy Act, Utah Consumer Privacy Act (UCPA), Oregon Consumer Privacy Act (OCPA), Iowa’s Consumer Data Protection Act (ICDPA), New Jersey Data Privacy Act (NJDPA), Indiana Consumer Data Protection Act, Tennessee Information Protection Act, Texas Data Privacy and Security Act (TDPSA)

Understanding key GDPR intersections

● Understand automated decision making, data protection impact assessments, anonymization, and how they relate to AI systems

○ “The data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her” (source)

○ “the data controller shall implement suitable measures to safeguard the data subject’s rights and freedoms and legitimate interests, at least the right to obtain human intervention on the part of the controller, to express his or her point of view and to contest the decision.”(source)

○ Creating a dataset to train an AI system may involve the use of PII. This can lead to “high risks” to people’s rights and freedoms. A data protection impact assessment is mandatory in this scenario. This is different from an AI conformity assessment

○ Anonymizing the training data can help to mitigate concerns by separating the information from the person
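As a rough illustration of separating information from the person before training, the sketch below drops direct identifiers and coarsens quasi-identifiers. The field names (`name`, `email`, `age`, `postcode`), the ten-year age bands, and the two-character postcode prefix are hypothetical choices for the example. Whether the result actually counts as anonymous under the GDPR depends on re-identification risk, which this sketch does not assess.

```python
from typing import Any, Dict

# Hypothetical set of fields treated as direct identifiers in this example.
DIRECT_IDENTIFIERS = {"name", "email"}


def generalize_age(age: int) -> str:
    """Replace an exact age with a ten-year band, e.g. 37 -> '30-39'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


def strip_and_generalize(record: Dict[str, Any]) -> Dict[str, Any]:
    """Drop direct identifiers and coarsen quasi-identifiers in one record."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "age" in out:
        out["age"] = generalize_age(out["age"])
    if "postcode" in out:
        # Keep only a coarse area prefix (assumed: first two characters).
        out["postcode"] = str(out["postcode"])[:2] + "***"
    return out


record = {
    "name": "Ada Example",
    "email": "ada@example.com",
    "age": 37,
    "postcode": "75011",
    "loan_approved": True,
}
cleaned = strip_and_generalize(record)
print(cleaned)
```

Removing names alone is closer to pseudonymization; combinations of the remaining fields can still single a person out, which is why the generalization step is included.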

● Understand the intersection between requirements for AI conformity assessments and DPIAs.

○ “Before a high-risk AI system can be brought into the market, a Conformity Assessment (CA) should be made to ensure compliance with the AIA. Additionally, in the AIA, the GDPR is explicitly mentioned when it comes to processing personal data[2], and when performing a Data Protection Impact Assessment[3] (DPIA) is required. This means there will be an overlap between these two assessments as a high-risk AI system would almost automatically include high risk processing under the GDPR” (source)

○ “A CA is focused on ensuring compliance with specific legal requirements, which are considered mitigation measures for high-risk systems. The main goal of CA is to guarantee adherence to the mitigation measures or requirements mandated by the law. An approved CA is required to enter the market.” (source)

○ “A DPIA has a slightly different purpose, it serves as a tool for accountability by requiring controllers to assess and make decisions based on risks. It also mandates reporting on the decision-making process. The primary objective of DPIA is to hold controllers accountable for their actions and ensure more effective protection of individuals’ rights. The controller is ‘free’ to decide if and how it will mitigate risk.” (source)

○ “Whenever a high-risk AI system involves the processing of personal data, a DPIA will almost certainly be required. Both processes involve assessing risks related to specific systems and have distinct sets of requirements. To prevent redundant work or conflicting conclusions, it is probable that the CA can form the foundation for the DPIA of the controller. If the provider also operates as a controller under the GDPR, then both the DPIA and CA will be carried out by the same entity, reinforcing each other” (source)

● Understand the requirements for human supervision of algorithmic systems.

○ Users have the right to:

■ Know about the automated decision;

■ Understand the decision-making logic;

■ Challenge the decision and share their perspective; and

■ Request human intervention for decision review.

○ This is why data controllers must plan for human intervention, allowing individuals to review their situation, understand the decision, and contest it. (source)

● Understand an individual’s right to meaningful information about the logic of AI systems

○ The existence of automated decision making, including profiling

○ “Meaningful information about the logic involved.”

■ “‘Meaningful information about the logic involved’ in relation to Article 22 of the GDPR should be understood as information around the algorithmic method used rather than an explanation about the rationale of an automated decision. For example, if a loan application is refused, Article 22 may require the controller to provide information about the input data related to the individual and the general parameters set in the algorithm that enabled the automated decision. But Article 22 would not require an explanation around the source code, or how and why that specific decision was made.” (source)

○ “The significance and the envisaged consequences of such processing” for the individual.

Understanding liability reform

● Awareness of the reform of EU product liability law. (source)

○ Article 4 of the proposed Directive brings software into the scope of EU product liability laws. Operating systems, firmware, computer programs and applications and AI systems are all expressly included (by Recital 12)

○ Article 7 extends liability to manufacturers of defective components, distributors, fulfilment service providers and online platforms.

○ Articles 8 and 9 provide a disclosure regime and set of rebuttable presumptions designed to assist claimants

● Understand the basics of the AI Product Liability Directive.

○ “Complements the Artificial Intelligence Act by introducing a new liability regime that ensures legal certainty, enhances consumer trust in AI, and assists consumers’ liability claims for damage caused by AI-enabled products and services It applies to AI systems that are available on the EU market, or operating within the EU market.” (source)

● Awareness of U.S. federal agency involvement (EO 14091) (source)

○ Further Advancing Racial Equity and Support for Underserved Communities Through the Federal Government

○ requires federal agencies to integrate equity into planning and decision-making

○ “When designing, developing, acquiring, and using artificial intelligence and automated systems in the Federal Government, agencies shall do so, consistent with applicable law, in a manner that advances equity”

○ “[Agencies shall consider opportunities to:] ensure that their respective civil rights offices are consulted on decisions regarding the design, development, acquisition, and use of artificial intelligence and automated system”

○ The term “algorithmic discrimination” refers to instances when automated systems contribute to unjustified different treatment or impacts disfavoring people based on their actual or perceived race, color, ethnicity, sex (including based on pregnancy, childbirth, and related conditions; gender identity; intersex status; and sexual orientation), religion, age, national origin, limited English proficiency, disability, veteran status, genetic information, or any other classification protected by law.

Domain 4: Understanding the Existing and Emerging AI Laws and Standards

Understanding the requirements of the EU AI Act

● Understand the classification framework of AI systems (prohibited, high-risk, limited risk, low risk). (source)

○ Prohibited- considered a significant threat to fundamental rights, democratic processes, and societal values. Such systems are likely to compromise the integrity of critical infrastructures and engage in activities that could lead to serious incidents

○ High Risk- primarily used in critical sectors such as healthcare, transportation, and law enforcement. These systems undergo strict conformity assessments to ensure their accuracy, robustness, and cybersecurity, and their deployment is heavily regulated to mitigate potential risks associated with their use. The Act also mandates human oversight in deploying high-risk AI, ensuring accountability and providing an additional safety and security layer

○ Limited Risk- considered less risky than their high-risk counterparts and thus face fewer regulatory constraints. However, while they do not require the same level of scrutiny, they must still adhere to specific transparency obligations to maintain accountability and trustworthiness in their deployment. This means that the developers and operators of these systems must be able to provide clear explanations of how the system works, what data it uses, and how it makes decisions.

○ Low Risk- Some examples of such applications include AI-powered video games and spam filters. The key aspect of this proposal is that it seeks to minimize regulatory burdens placed on such systems, thereby promoting innovation and development in areas where risks associated with the use of AI are deemed negligible or non-existent.

● Understand requirements for high-risk systems and foundation models.

○ Demonstrating that the tech and its use does not pose a significant threat to health, safety and fundamental rights. These include a comprehensive set of risk management, data governance, monitoring and record-keeping practices, detailed documentation alongside transparency and human oversight obligations, and standards for accuracy, robustness and cybersecurity. (Source)

● Understand notification requirements (customers and national authorities).

○ “The AI Act requires developers of high-risk AI to set up a reporting system for serious incidents as part of wider post-market monitoring. A serious incident is defined as an incident or a malfunction that led to, might have led or might lead to serious damage to a person’s health or their death, serious damage to property or the environment, the disruption of critical infrastructure or the violation of fundamental rights under EU law. Developers and, in some cases, deployers must notify the relevant authorities and maintain records and logs of the AI system’s operation at the time of the incident to demonstrate compliance with the AI Act in case of ex-post audits of incidents.” (Source)

● Understand the enforcement framework and penalties for noncompliance

○ Penalties for non-compliance follow a three-tiered system, with more severe violations of obligations and requirements carrying heftier penalties (source)

○ The heftiest fines are imposed for violations related to prohibited systems: up to €35,000,000 or 7% of worldwide annual turnover for the preceding financial year, whichever is higher

○ The lowest penalties for AI operators are for providing incorrect, incomplete, or misleading information, up to €7,500,000 or 1% of total worldwide annual turnover for the preceding financial year, whichever is higher

○ Penalties for non-compliance can be issued to providers, deployers, importers, distributors, and notified bodies.

(Source)
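The "whichever is higher" rule in the penalty bullets above is simple arithmetic. The sketch below covers only the two tiers quoted in these notes (taken here as €35 million / 7% and €7.5 million / 1%); the tier labels and function name are made up for the example, and it illustrates the calculation of the upper bound, not legal advice on any actual fine.

```python
from typing import Dict, Tuple

# (fixed cap in euros, percentage of worldwide annual turnover).
# Labels are illustrative; only the two tiers quoted in the notes are included.
TIERS: Dict[str, Tuple[int, int]] = {
    "prohibited_practice": (35_000_000, 7),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, annual_turnover_eur: int) -> int:
    """Upper bound of the fine: the higher of the fixed cap and the turnover share."""
    fixed_cap, pct = TIERS[tier]
    turnover_share = annual_turnover_eur * pct // 100  # integer euros
    return max(fixed_cap, turnover_share)


# A company with EUR 1 billion turnover: 7% (EUR 70 million) exceeds the fixed cap.
print(max_fine_eur("prohibited_practice", 1_000_000_000))
# A company with EUR 100 million turnover: the EUR 35 million fixed cap is higher.
print(max_fine_eur("prohibited_practice", 100_000_000))
```

For large companies the percentage usually governs, while for smaller ones the fixed amount sets the ceiling.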

● Understand procedures for testing innovative AI and exemptions for research.

○ An exception within the AI Act to process special categories of personal data to detect and correct bias within AI applies to providers of AI systems

■ “the AI Act's exception applies to developers and entities outsourcing the development of AI systems, for non-private use. The exception does not seem to apply to organizations renting a fully developed AI system as a service, for example.” (source)

● Understand transparency requirements, i.e., registration database

○ High-risk AI systems must be registered in an EU-wide public database

○ Obligation to inform people that they are interacting with an AI system.

Understand other emerging global laws

● Understand the key components of Canada’s Artificial Intelligence and Data Act (C-27). (source)

○ “AIDA provides a definition of “person” that includes trusts, partnerships, unincorporated associations and any other legal entity, and further clarifies when such a “person” will be considered responsible for an AI system. A person becomes a “person responsible” for an AI system if they design, develop, make available for use, or manage the operation of an AI system in the course of international or interprovincial trade and commerce.”

○ Responsibilities include:

■ ensuring the anonymization of data

■ conducting assessments to determine whether an AI system is “high-impact,”

■ establishing measures related to risks

■ monitoring and keeping records on risk mitigation

■ publishing a plain-language description of all high-impact AI systems on a public website.

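○ If adopted, Bill C-27 would replace PIPEDA's private-sector privacy provisions through its Consumer Privacy Protection Act (CPPA); AIDA is the part of the bill that addresses AI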

● Understand the key components of U.S. state laws that govern the use of AI. (source)

○ Algorithmic discrimination: an automated decision tool's differential treatment of an individual or group based on their protected class. Bills that address this place the burden on AI developers and on businesses using AI (often referred to as deployers) to proactively ensure that the technologies are not creating discriminatory outcomes in the consumer and employment contexts.

■ “Provisions found in most of these bills require regular impact assessments of AI tools to ensure against discrimination; disclosure of such assessments to government agencies; internal policies, programs and safeguards to prevent foreseeable risks from AI; accommodating requests to opt-out of being subject to AI tools; disclosure of the AI's use to affected persons; and an explanation of how the AI tool uses personal information and how risks of discrimination are being minimized”

■ California, Connecticut, Vermont, Hawaii, Illinois, New York, Oklahoma, Rhode Island (lost steam), and Washington (lost steam)

○ Automated employment decision tools (AEDTs): "predictive data analytics" used by employers to make employment decisions about hiring, firing, promotion, and compensation

■ “require employers to provide advance notice to and obtain consent from job applicants and employees who are subject to AEDTs, explain the qualifications and characteristics that AI will assess to candidates, and conduct and disclose regular impact assessments or bias audits of AI tools. Most of these bills, however, include carveouts for the use of AI when promoting diversity or affirmative action initiatives.”

■ Illinois, Massachusetts, New Jersey, New York, Vermont

○ AI Bill of Rights

■ “provide state residents the rights to know when they are interacting with AI, to know when their data is being used to inform AI, not to be discriminated against by the use of AI, to have agency over their personal data; to understand the outcomes of an AI system impacting them and to opt out of an AI system”

■ Oklahoma and New York

○ Working Group Bills

■ “creating government commissions, agencies or working groups to study the implementation of AI technologies and develop recommendations for future regulation”

■ Utah, Florida, Hawaii, Massachusetts
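
Several of the bills summarized above call for impact assessments or bias audits of AI tools, without the notes saying how such an audit is computed. One common metric, assumed here for illustration and not taken from the text, is the impact ratio: each group's selection rate divided by the highest group's selection rate. The 0.8 threshold below reflects the "four-fifths" rule of thumb from U.S. employment practice. The group names and counts are invented, and the function names are hypothetical.

```python
def selection_rates(outcomes):
    """outcomes maps a group name to (number selected, number of applicants)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes):
    """Divide each group's selection rate by the highest selection rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {group: rate / best for group, rate in rates.items()}


# Invented audit data for two hypothetical applicant groups.
audit = {"group_a": (50, 100), "group_b": (30, 100)}
ratios = impact_ratios(audit)

# Assumed threshold: flag groups whose impact ratio falls below four-fifths.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios, flagged)
```

A real audit would follow whatever methodology the applicable law or regulator specifies; this sketch only shows the shape of the calculation.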

● Understand the Cyberspace Administration of China’s draft regulations on generative AI. (source)

○ “The requirements of this regulation will apply to domestic companies and to overseas generative AI service providers offering generative AI services to general public in China. It is important to note also that the Generative AI Measures apply to services offered to the public and not the use of generative AI services by enterprises.”

○ In the development and use of generative AI services, generative AI service providers must:

■ not generate illegal content such as false or harmful information;

■ take effective measures to prevent the generation of discriminatory content;

■ not use advantages in algorithms, data, or platforms where this leads to monopoly and unfair competitive behaviors;

■ not infringe on others’ portrait rights, reputation rights, honor rights, privacy rights and personal information rights; and

■ take effective measures based on service types to increase the transparency of generative AI services and the accuracy and reliability of generative AI content.

○ In respect of the training data, generative AI service providers must:

■ use data and foundation models from legitimate sources;

■ not infringe others’ legally owned intellectual property;

■ obtain personal data with consent or under situations prescribed by the law or administrative measures; and

■ take effective measures to increase the quality of training data, their truthfulness, accuracy, objectivity and diversity.

○ When providing generative AI services, generative AI service providers bear cybersecurity obligations as online information content producers and personal information protection obligations as personal information handlers and must:

■ enter into service agreements with registered generative AI service users which specify the rights and obligations of both parties;

■ guide users on the legal use of generative AI technology and take effective measures to prevent users from over-reliance on or “addiction to” the generated AI service;

■ not collect non-essential personal information, not illegally retain input information and usage records which can be used to identify a user and not illegally provide users’ input information and usage records to others;

■ receive and settle data subjects’ requests;

■ tag generated content such as photos and video pursuant to the Administrative Provisions on Deep Synthesis of Internet-based Information Services (Deep Synthesis Provisions);

■ if illegal content is discovered, take measures to stop the generation and transmission of and delete illegal content, take rectification measures such as model improvement, and report to the relevant competent authorities;

■ where users are found to use generative AI services to conduct illegal activities, take measures to warn the user, or restrict, suspend or terminate the service, retain the records, and report to the relevant competent authorities; and

■ establish a mechanism for receiving and handling users’ complaints.

○ In relation to other legal obligations and enforcement supervision, generative AI service providers shall:

■ if the generative AI service comes with a public opinion attribute or social mobilization ability, carry out a safety assessment obligation and (within ten working days from the date of provision of services) go through record-filing formalities pursuant to the Administrative Provisions on Algorithm Recommendation for Internet Information Services (Algorithm Provisions); and

■ when the relevant competent authorities (e.g., the CAC) commence supervisory checks on the generative AI service, cooperate with them, explain the source, size and types of the training data, tagging rules and the mechanisms and principles of the algorithm and provide necessary technology and data, etc., for support and assistance.

Understand the similarities and differences among the major risk management frameworks and standards

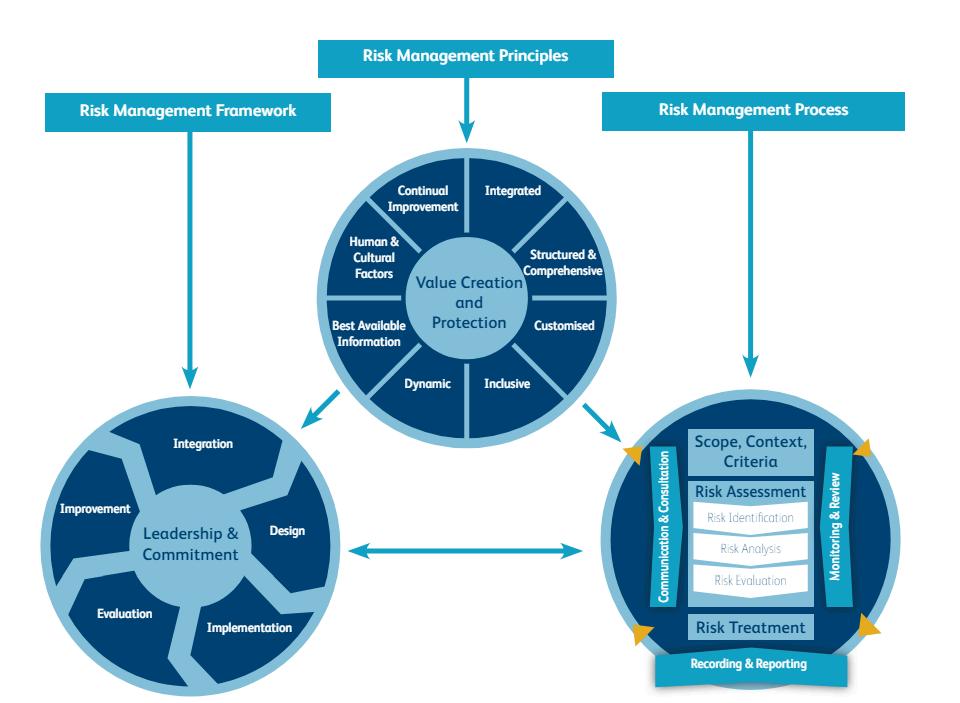

● ISO 31000:2018 Risk Management – Guidelines.

○ “A management system is the framework of policies, processes and procedures employed by an organization to ensure that it can fulfill the tasks required to achieve its purpose and objectives.” (source)

○ Governance and culture; strategy and objective-setting; performance; information, communications and reporting; and the review and revision of practices to enhance the performance of the organization

○ Emphasizes leadership endorsement and engagement, organizational governance, and the iterative nature of risk management (regularly updating processes and policies in response to new industry developments)

● United States National Institute of Standards and Technology, AI Risk Management Framework (NIST AI RMF).

○ Voluntarily used to improve the ability to incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products, services, and systems.

○ The framework breaks down the AI risk management process into four core functions: "govern," "map," "measure," and "manage." (source)

○ Defines seven “characteristics of trustworthy AI”: valid and reliable; safe; secure and resilient; accountable and transparent; explainable and interpretable; privacy-enhanced; and fair with harmful biases managed. (source)

● European Union proposal for a regulation laying down harmonized rules on AI (EU AIA) (source)

○ A proposed European law on artificial intelligence (AI) – the first comprehensive law on AI by a major regulator anywhere

○ The majority of obligations fall on providers (developers) of high-risk AI systems.

○ Users are natural or legal persons that deploy an AI system in a professional capacity, not affected end-users

○ All providers of general-purpose AI (GPAI) models must provide technical documentation and instructions for use, comply with the Copyright Directive, and publish a summary of the content used for training

○ Providers of GPAI models released under a free and open licence only need to comply with copyright and publish the training data summary, unless the model presents a systemic risk

○ All providers of GPAI models that present a systemic risk (open or closed) must also conduct model evaluations and adversarial testing, track and report serious incidents, and ensure cybersecurity protections

○ Outlines “prohibited” AI systems

● Council of Europe Human Rights, Democracy, and the Rule of Law Assurance Framework for AI Systems (HUDERIA).

○ Human Rights, Democracy, and the Rule of Law Impact Assessment (HUDERIA)

○ “define a methodology to carry out impact assessments of Artificial Intelligence (AI) applications from the perspective of human rights, democracy, and the rule of law, based on relevant Council of Europe (CoE) standards and the work already undertaken in this field at the international and national level, and to develop an impact assessment model.” (source)

● IEEE 7000-21 Standard Model Process for Addressing Ethical Concerns during System Design

○ “integrates ethical and functional requirements in systems engineering design and development in order to mitigate risk and increase innovation” (source)

○ “A set of processes by which organizations can include consideration of ethical values throughout the stages of concept exploration and development is established by this standard. Management and engineering in transparent communication with selected stakeholders for ethical values elicitation and prioritization is supported by this standard, involving traceability of ethical values through an operational concept, value propositions, and value dispositions in the system design. Processes that provide for traceability of ethical values in the concept of operations, ethical requirements, and ethical risk-based design are described in the standard. All sizes and types of organizations using their own life cycle models are relevant to this standard.” (source)

● ISO/IEC Guide 51 Safety aspects – guidelines for their inclusion in standards.

○ “reducing risk that can arise in the use of products or systems, including use by vulnerable consumers. This Guide aims to reduce the risk arising from the design, production, distribution, use (including maintenance) and destruction or disposal of products or systems.” (source)

● Singapore Model AI Governance Framework. (source)

○ Provides detailed and readily-implementable guidance to private sector organizations to address key ethical and governance issues when deploying AI solutions

○ Decisions made by AI should be: EXPLAINABLE, TRANSPARENT & FAIR

○ AI systems should be HUMAN-CENTRIC

Domain 5: Understanding the AI Development Life Cycle

Understand the key steps in the AI system planning phase

● Determine the business objectives and requirements

● Determine the scope of the project.

● Determine the governance structure and responsibilities.

Understand the key steps in the AI system design phase

● Implement a data strategy that includes:

○ Data gathering, wrangling, cleansing, labeling

○ Applying privacy-enhancing technologies (PETs) such as anonymization, minimization, differential privacy, and federated learning.

● Determine AI system architecture and model selection (choose the algorithm according to the desired level of accuracy and interpretability)
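
Of the privacy-enhancing techniques listed in the data strategy above, differential privacy is the one most easily shown in a few lines. The sketch below applies the Laplace mechanism to a count query: noise scaled to sensitivity divided by epsilon is added to the true count. The records, the `epsilon` value, and the function names are assumptions made for illustration, and a production system would normally rely on a vetted library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two exponential draws with mean `scale`
    # follows a Laplace distribution centred on 0 with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of records matching predicate (Laplace mechanism)."""
    true_count = sum(1 for record in records if predicate(record))
    sensitivity = 1  # adding or removing one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)                   # fixed seed so the sketch is repeatable
ages = [23, 35, 41, 29, 52, 67, 38, 45]   # made-up records
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40 or over: {noisy:.2f}")
```

A smaller `epsilon` means more noise and stronger privacy; a larger one means answers closer to the true count. Choosing that trade-off is part of the data strategy, not something the code decides.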

Understand the key steps in the AI system development phase

● Build the model

● Perform feature engineering.

● Perform model training

● Perform model testing and validation
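
The development steps above can be sketched end to end on toy data. This is a minimal illustration under stated assumptions, not a recommended pipeline: the data is synthetic, the "feature engineering" is a single standardization step, and the model is a nearest-centroid classifier picked only because it fits in a few lines of standard-library Python. All function names are hypothetical.

```python
import random
import statistics


def make_dataset(n, seed):
    """Synthetic data: one raw feature and a binary label (illustrative only)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        raw = rng.gauss(60 if label else 40, 5)  # arbitrary class centres
        rows.append((raw, label))
    return rows


def fit_scaler(values):
    """Feature engineering: learn mean and spread from training data only."""
    return statistics.mean(values), statistics.pstdev(values)


def scale(value, scaler):
    mean, std = scaler
    return (value - mean) / std


def train(features, labels):
    """Model training: store one centroid (mean feature value) per class."""
    return {
        cls: statistics.mean(f for f, y in zip(features, labels) if y == cls)
        for cls in set(labels)
    }


def predict(model, feature):
    return min(model, key=lambda cls: abs(feature - model[cls]))


def accuracy(model, features, labels):
    hits = sum(predict(model, f) == y for f, y in zip(features, labels))
    return hits / len(labels)


data = make_dataset(400, seed=0)
train_rows, test_rows = data[:300], data[300:]  # hold-out split for validation

scaler = fit_scaler([x for x, _ in train_rows])
X_train = [scale(x, scaler) for x, _ in train_rows]
y_train = [y for _, y in train_rows]
X_test = [scale(x, scaler) for x, _ in test_rows]
y_test = [y for _, y in test_rows]

model = train(X_train, y_train)
test_accuracy = accuracy(model, X_test, y_test)
print(f"hold-out accuracy: {test_accuracy:.2f}")
```

The point to notice is the ordering: the scaler and the model are fitted on the training rows only, and the held-out rows are used solely for testing and validation.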

Understand the key steps in the AI system implementation phase

● Perform readiness assessments

● Deploy the model into production

● Monitor and validate the model.

● Maintain the model
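
For the monitoring step above, one widely used check is whether production inputs still resemble the training data. The sketch below computes a population stability index (PSI) over shared bins. The bin edges, the sample values, and the 0.25 alert threshold (a common rule of thumb) are assumptions made for illustration, not requirements from any framework discussed in these notes.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two samples over shared bin edges."""

    def shares(values):
        counts = [0] * (len(edges) + 1)
        for value in values:
            index = sum(value >= edge for edge in edges)  # which bin the value falls in
            counts[index] += 1
        # Floor empty bins at eps so the logarithm below is always defined.
        return [max(count / len(values), eps) for count in counts]

    expected_shares, actual_shares = shares(expected), shares(actual)
    return sum(
        (a - e) * math.log(a / e) for e, a in zip(expected_shares, actual_shares)
    )


edges = [0.25, 0.5, 0.75]
baseline = [i / 100 for i in range(100)]         # stand-in for training-time scores
production = [0.5 + i / 200 for i in range(100)]  # stand-in for shifted live scores

drift = psi(baseline, production, edges)
needs_review = drift > 0.25  # assumed alert threshold
print(f"PSI = {drift:.2f}, review needed: {needs_review}")
```

A PSI of zero means the two distributions match bin for bin. A flagged result would typically trigger the validation and maintenance steps, such as investigating the data source or retraining.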

Domain 6: Implementing Responsible AI Governance and Risk Management

Ensure interoperability of AI risk management with other operational risk strategies

● E.g., security risk, privacy risk, business risk