Today’s electronic engineers are challenged by multiple factors. Research over the years has illustrated common trends that you, as engineers, deal with, including keeping your skills up to date, shrinking time-to-market windows, fewer engineers and smaller design teams for large projects, and evolving technological trends. The bottom line is that you must continually update your engineering knowledge base to be successful in your work.

Throughout 2023, we are presenting a series of online educational days where you can learn how to address specific design challenges, learn about new techniques, or just brush up your engineering skills. We are offering eight different Training Days. Each day will focus on helping you address a specific design challenge or problem. These are not company sales pitches! The focus is on helping you with your work.

For more information and to register for these webinars, go to:

EETRAININGDAYS.COM

AVAILABLE ON DEMAND THERMAL MANAGEMENT

JUNE 14TH DESIGNING FOR SUSTAINABILITY

JULY 12TH EMI/RFI/EMC

AUGUST 9TH BATTERY MANAGEMENT

SEPTEMBER 13TH MOTOR DRIVES DESIGN

OCTOBER 11TH IOT DESIGN / WIRELESS

NOVEMBER 8TH ELECTRIC VEHICLE DESIGN

DECEMBER 6TH 5G / RF DESIGN

Welcome to EE World’s 5G, Wireless, and Wired Communications Handbook. What’s this? A longer name compared to 2021 and 2022? In truth, we at EE World have for years covered many forms of electrical communications. It’s time the handbook name better describes what we do.

5G is much more than cellular radio. There’s an entire network behind it. The electrical and optical links that form these networks continue to gain speed and capacity. Research is now underway to bring 224 Gb/sec data lanes over copper. At the same time, silicon photonics and co-packaged optics have moved into manufacturing. We’ll investigate these trends later in 2023 and beyond.

People who build networks, operate them, and develop applications demand more throughput, which falls on engineers to deliver. After all, network architects, software developers, IT people, telecom operators, and everyone else assume that the bits arrive at their destinations on time and intact. We don’t. We know bits don’t magically traverse networks. They need transmitters, receivers, signal processing, timers, and layers of protocols. As new network configurations such as Open RAN appear, they come with new wired connections. Disaggregating radio-access networks into open systems means more opportunities for interoperability problems. One such connection is the E2 interface, explained in “How does 5G’s O-RAN E2 interface work?”

Bits must arrive on time and in sync. For the third year, we’re delivering two articles on network timing in this handbook. We’ve also published other timing articles in between handbooks. You can find them all, plus timing products, at http://www.5gtechnologyworld.com/category/timing.

Wireless and wired networks deliver connectivity to the world, but what’s connectivity without connectors and cables? “Connectors and cables modernize data centers” delves into space constraints, signal integrity, cabling, and thermal-management issues in data centers. It’s up to EEs to solve those problems.

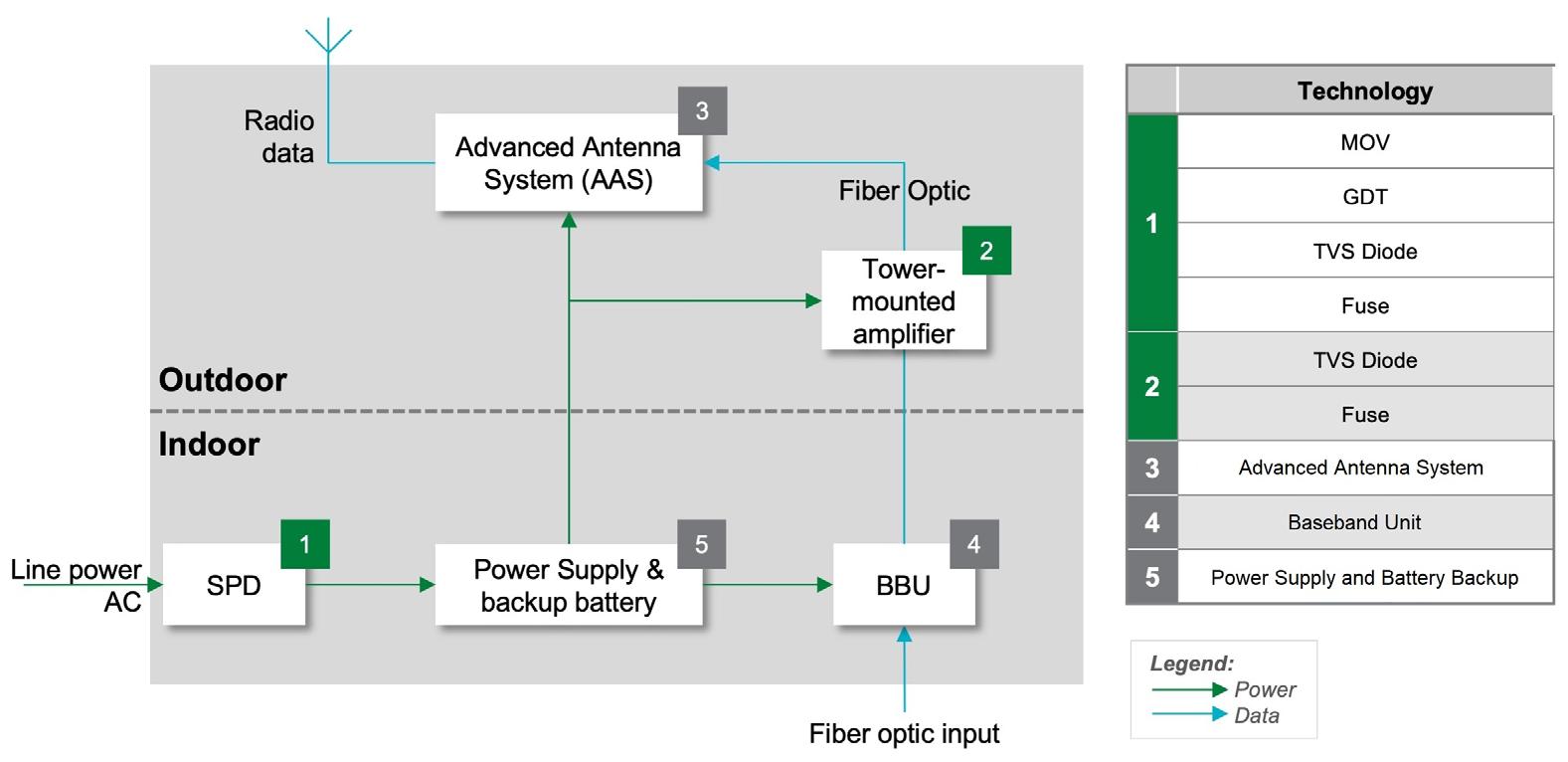

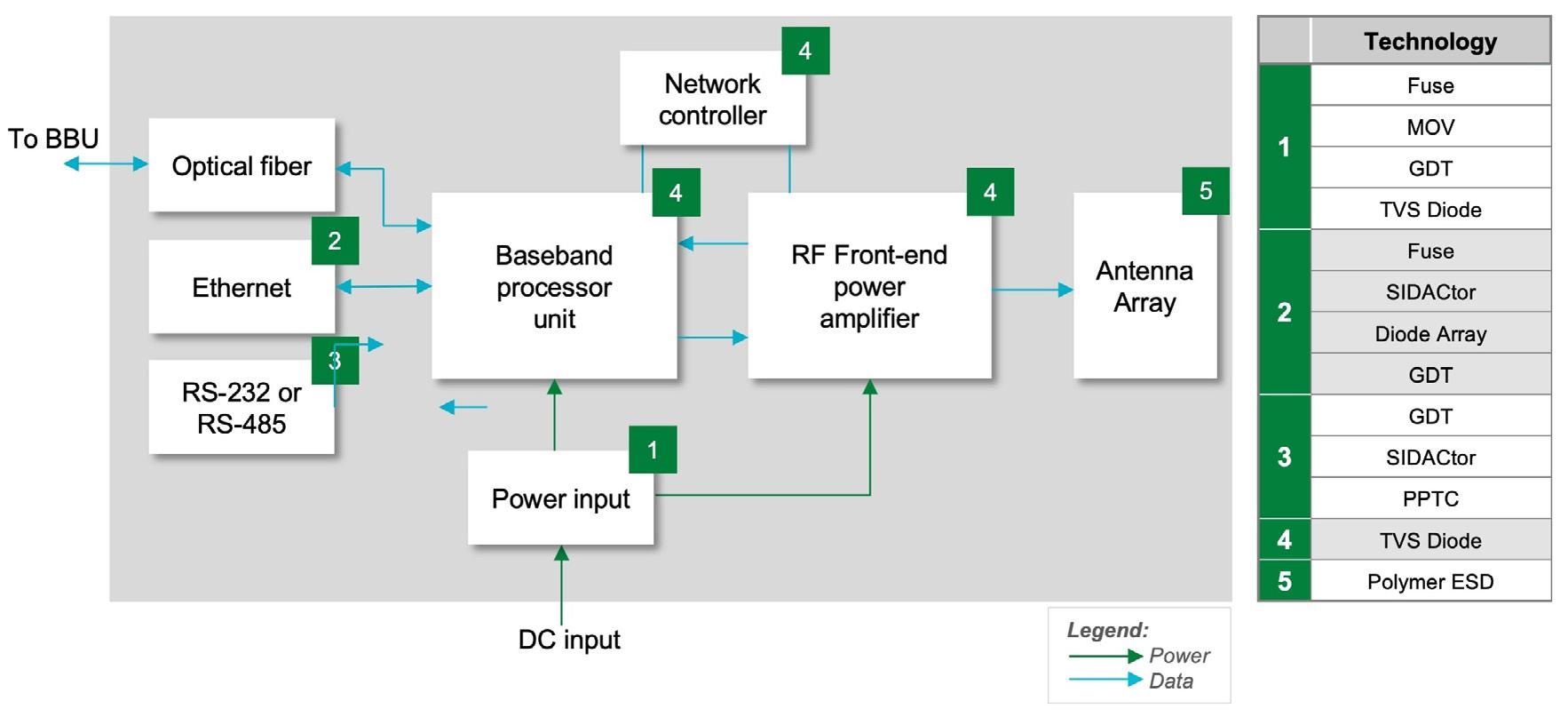

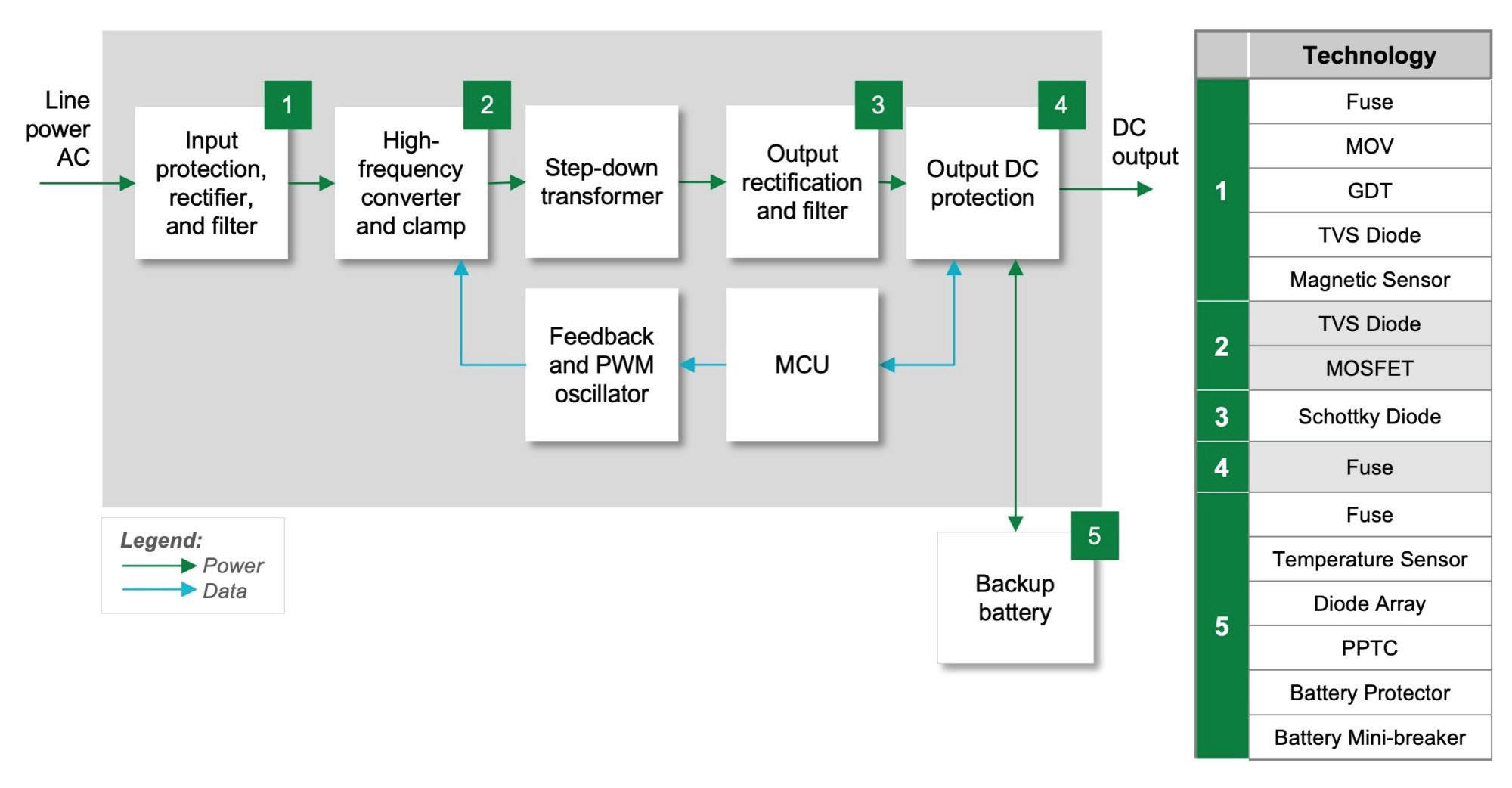

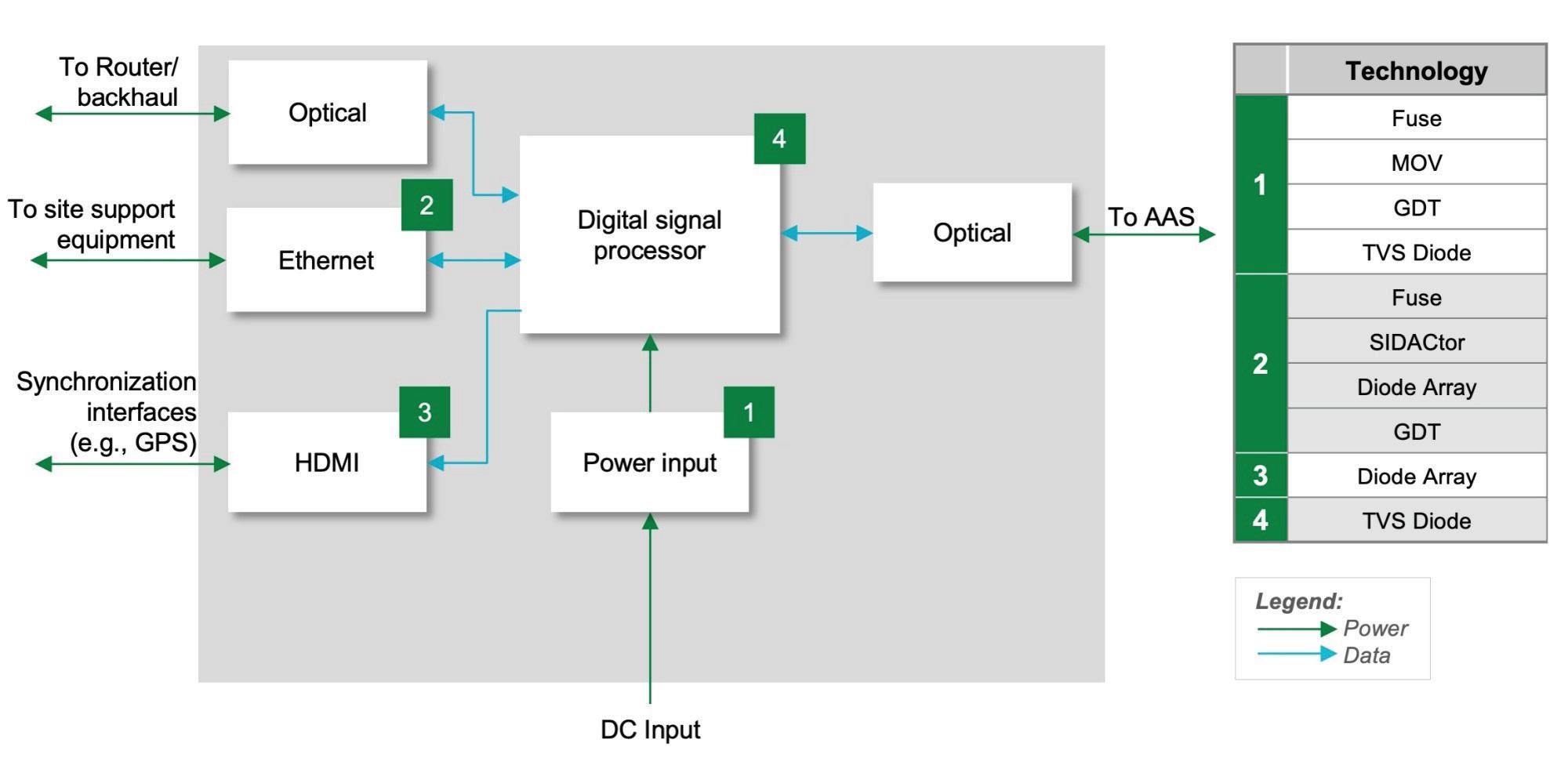

In this issue, you’ll also learn that just having a functioning network isn’t enough. 5G, like all networks, needs protection from electrical hazards. “How to safeguard cellular base stations from five electrical hazards” shows you how to use circuit-protection devices such as fuses and TVS diodes to keep circuits safe.

As if protection from electrical hazards wasn’t enough, networks can suffer from RF interference as well. One form is passive intermodulation (PIM), caused by nonlinearities in connectors, cables, and so on. Locating PIM problems in the field requires test equipment and expertise.

Even as 5G continues to deploy, researchers are looking into how to make marketers’ dreams come true. Having attended 6G conferences for a few years now, I can report that people already want more — and less — from wireless networks. They want a better user experience for consumers, lower latency for machines, more computing power for digital transformation, and the “metaverse,” whatever that is. Of course, we all want more while using less energy. Two articles in this handbook look at 6G. One covers channel sounding at frequencies above 100 GHz. Another looks at how 6G networks will need to operate more sustainably than does 5G.

As engineers, it falls on us to provide the infrastructure that makes it all happen.

Measuring just 0.47 x 0.28 mm, with an ultra-low height of 0.35 mm, our new 016008C Series Ceramic Chip Inductors offer up to 40% higher Q than all thin film types: up to 62 at 2.4 GHz.

High Q helps minimize insertion loss in RF antenna impedance matching circuits, making the 016008C ideal for high-frequency applications such as cell phones, wearable devices, and LTE or 5G IoT networks.

The 016008C Series is available in 36 carefully selected inductance values ranging from 0.45 nH to 24 nH, with lower DCR than all thin film counterparts.

Find out why this small part is such a big deal. Download the datasheet and order your free evaluation samples today at www.coilcraft.com.

Once again, Coilcraft leads the way with another major size reduction in High-Q wirewound chip inductors

Open RAN networks pass the time

Network elements must meet certain frequency, phase, and time requirements to ensure proper end-to-end network operation. Synchronization architectures defined by the O-RAN Alliance dictate how Open RAN equipment can meet these requirements.

How timing propagates in a 5G network

5G’s high speeds place extreme demands on the components that maintain accurate time.

Compliance with industry timing standards calls for accuracy in the face of temperature changes, shock, and vibration.

Connectors and cables modernize data centers

Demands for bandwidth-intensive, data-driven services are fueling a rise in compute, data storage, and networking capabilities. These rises put pressure on connectors and cable to deliver data at higher speeds with better signals and less heat.

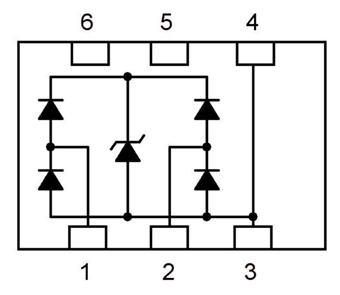

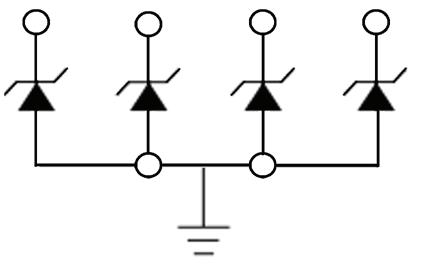

How to safeguard cellular base stations from five electrical hazards

Circuit-protection components such as fuses and TVS diodes protect power and data circuits from damage. Here’s where and how to insert them into your circuits.

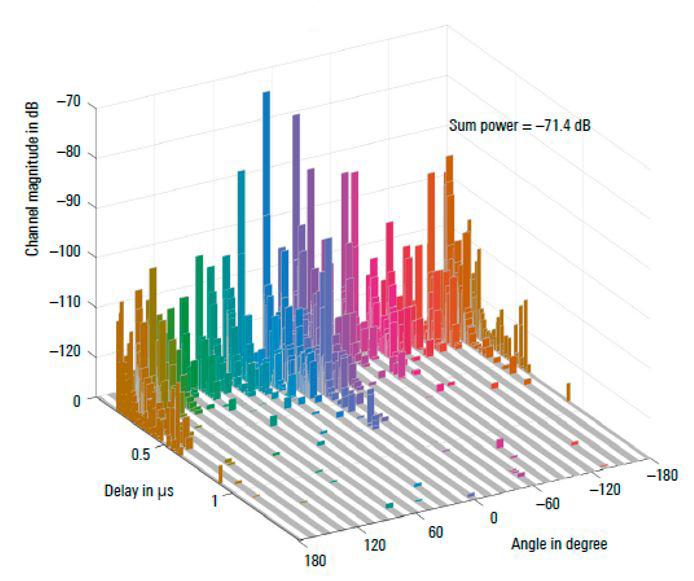

Experiments bring hope for 6G above 100 GHz

Efforts to explore and “unlock” this frequency region require an interdisciplinary approach with high-frequency RF semiconductor technology. The THz region also shows great promise for many application areas ranging from imaging to spectroscopy to sensing.

5G mmWave test builds on RF best practices

The high level of integration in today’s mmWave phone means traditional test methods no longer apply.

6G promises to bring sustainability to telecom

Sustainability is at the heart of 6G research, not just in telecom networks and equipment, but throughout the supply chain.

How does 5G’s O-RAN E2 interface work?

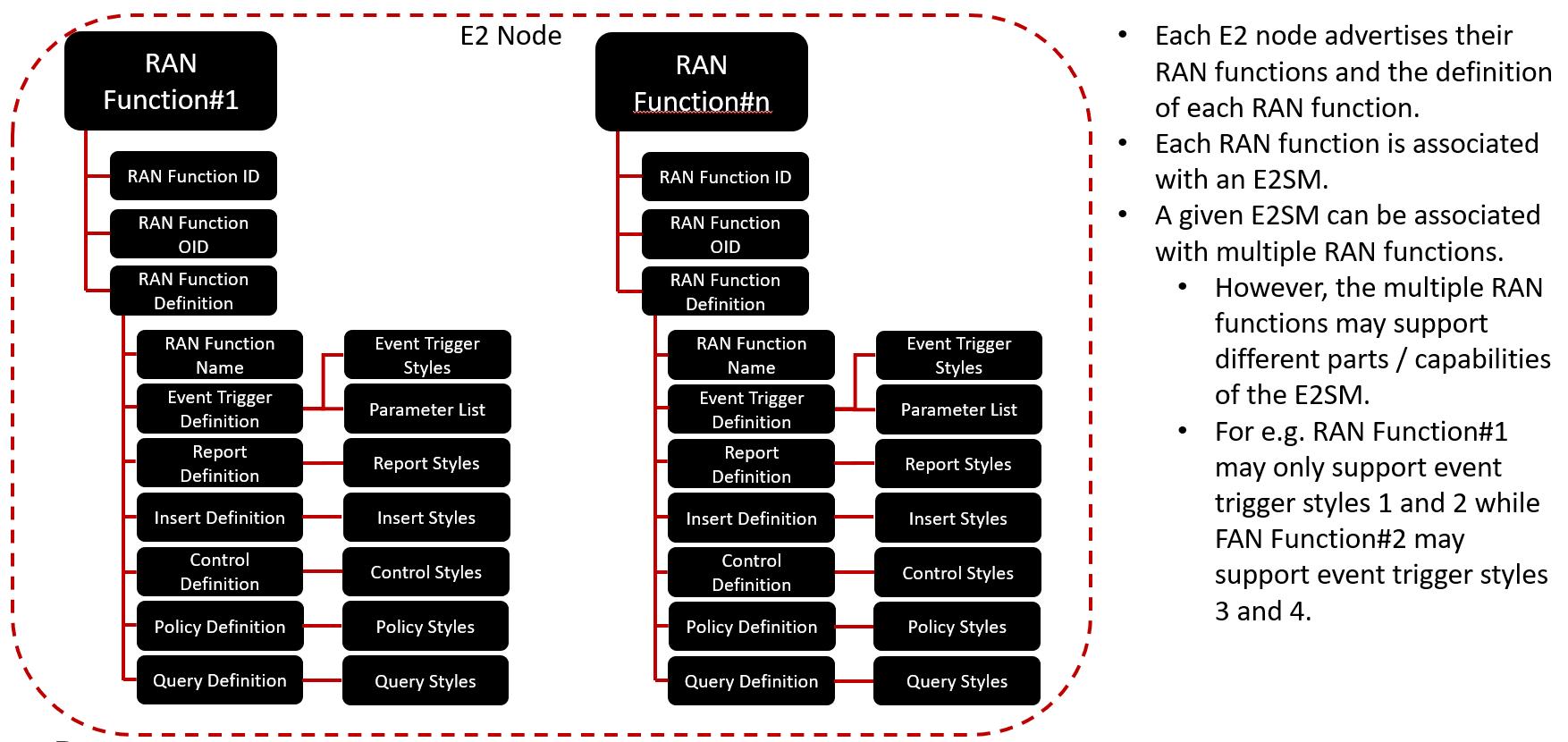

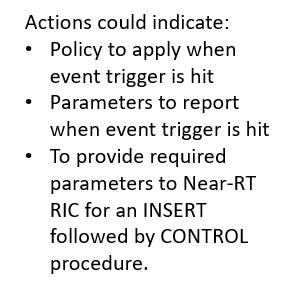

RAN intelligent controllers, as defined by the O-RAN Alliance, let cellular operators deploy intelligent RAN optimization applications. E2 is a key interface defined by the O-RAN Alliance, but using it in practice presents challenges.

EDITORIAL

VP, Editorial Director

Paul J. Heney pheney@wtwhmedia.com @wtwh_paulheney

Editor-in-Chief

Aimee Kalnoskas akalnoskas@wtwhmedia.com @eeworld_aimee

Senior Technical Editor Martin Rowe mrowe@wtwhmedia.com @measurementblue

Associate Editor Emma Lutjen elutjen@wtwhmedia.com

Executive Editor Lisa Eitel leitel@wtwhmedia.com @dw_LisaEitel

Senior Editor Miles Budimir mbudimir@wtwhmedia.com @dw_Motion

Senior Editor Mary Gannon mgannon@wtwhmedia.com @dw_MaryGannon

Managing Editor Mike Santora msantora@wtwhmedia.com @dw_MikeSantora

VIDEOGRAPHY SERVICES

Videographer Garrett McCafferty gmccafferty@wtwhmedia.com

Videographer Kara Singleton ksingleton@wtwhmedia.com

WTWH Media, LLC

1111 Superior Ave., Suite 2600 Cleveland, OH 44114

Ph: 888.543.2447

FAX: 888.543.2447

CREATIVE SERVICES & PRINT PRODUCTION

VP, Creative Services Mark Rook mrook@wtwhmedia.com @wtwh_graphics

VP, Creative Services Matthew Claney mclaney@wtwhmedia.com @wtwh_designer

Art Director Allison Washko awashko@wtwhmedia.com @wtwh_allison

Senior Graphic Designer Mariel Evans mevans@wtwhmedia.com @wtwh_mariel

Director, Audience Development Bruce Sprague bsprague@wtwhmedia.com

PRODUCTION SERVICES

Customer Service Manager Stephanie Hulett shulett@wtwhmedia.com

Customer Service Representative Tracy Powers tpowers@wtwhmedia.com

Customer Service Representative JoAnn Martin jmartin@wtwhmedia.com

Customer Service Representative

Renee Massey-Linston renee@wtwhmedia.com

Customer Service Representative Trinidy Longgood tlonggood@wtwhmedia.com

MARKETING

VP, Digital Marketing Virginia Goulding vgoulding@wtwhmedia.com @wtwh_virginia

Digital Marketing Coordinator

Francesca Barrett fbarrett@wtwhmedia.com @Francesca_WTWH

Digital Design Manager Samantha King sking@wtwhmedia.com

Marketing Graphic Designer Hannah Bragg hbragg@wtwhmedia.com

Webinar Manager Matt Boblett mboblett@wtwhmedia.com

Webinar Coordinator

Emira Wininger emira@wtwhmedia.com

Events Manager

Jen Osborne jkolasky@wtwhmedia.com @wtwh_Jen

Events Manager

Brittany Belko bbelko@wtwhmedia.com

Event Marketing Specialist Olivia Zemanek ozemanek@wtwhmedia.com

Event Coordinator Alexis Ferenczy aferenczy@wtwhmedia.com

Web Development Manager B. David Miyares dmiyares@wtwhmedia.com @wtwh_WebDave

Senior Digital Media Manager Patrick Curran pcurran@wtwhmedia.com @wtwhseopatrick

Front End Developer Melissa Annand mannand@wtwhmedia.com

Software Engineer David Bozentka dbozentka@wtwhmedia.com

Digital Production Manager Reggie Hall rhall@wtwhmedia.com

Digital Production Specialist Nicole Lender nlender@wtwhmedia.com

Digital Production Specialist Elise Ondak eondak@wtwhmedia.com

Digital Production Specialist Nicole Johnson njohnson@wtwhmedia.com

FINANCE

Controller Brian Korsberg bkorsberg@wtwhmedia.com

Accounts Receivable Specialist Jamila Milton jmilton@wtwhmedia.com

DESIGN WORLD does not pass judgment on subjects of controversy nor enter into dispute with or between any individuals or organizations. DESIGN WORLD is also an independent forum for the expression of opinions relevant to industry issues. Letters to the editor and by-lined articles express the views of the author and not necessarily of the publisher or the publication. Every effort is made to provide accurate information; however, publisher assumes no responsibility for accuracy of submitted advertising and editorial information. Non-commissioned articles and news releases cannot be acknowledged. Unsolicited materials cannot be returned nor will this organization assume responsibility for their care.

DESIGN WORLD does not endorse any products, programs or services of advertisers or editorial contributors. Copyright© 2023 by WTWH Media, LLC. No part of this publication may be reproduced in any form or by any means, electronic or mechanical, or by recording, or by any information storage or retrieval system, without written permission from the publisher.

SUBSCRIPTION RATES: Free and controlled circulation to qualified subscribers. Non-qualified persons may subscribe at the following rates: U.S. and possessions: 1 year: $125; 2 years: $200; 3 years: $275; Canadian and foreign, 1 year: $195; only US funds are accepted. Single copies $15 each. Subscriptions are prepaid, and check or money orders only.

SUBSCRIBER SERVICES: To order a subscription or change your address, please email: designworld@omeda.com, or visit our web site at www.designworldonline.com

POSTMASTER: Send address changes to: Design World, 1111 Superior Ave., Suite 2600, Cleveland, OH 44114

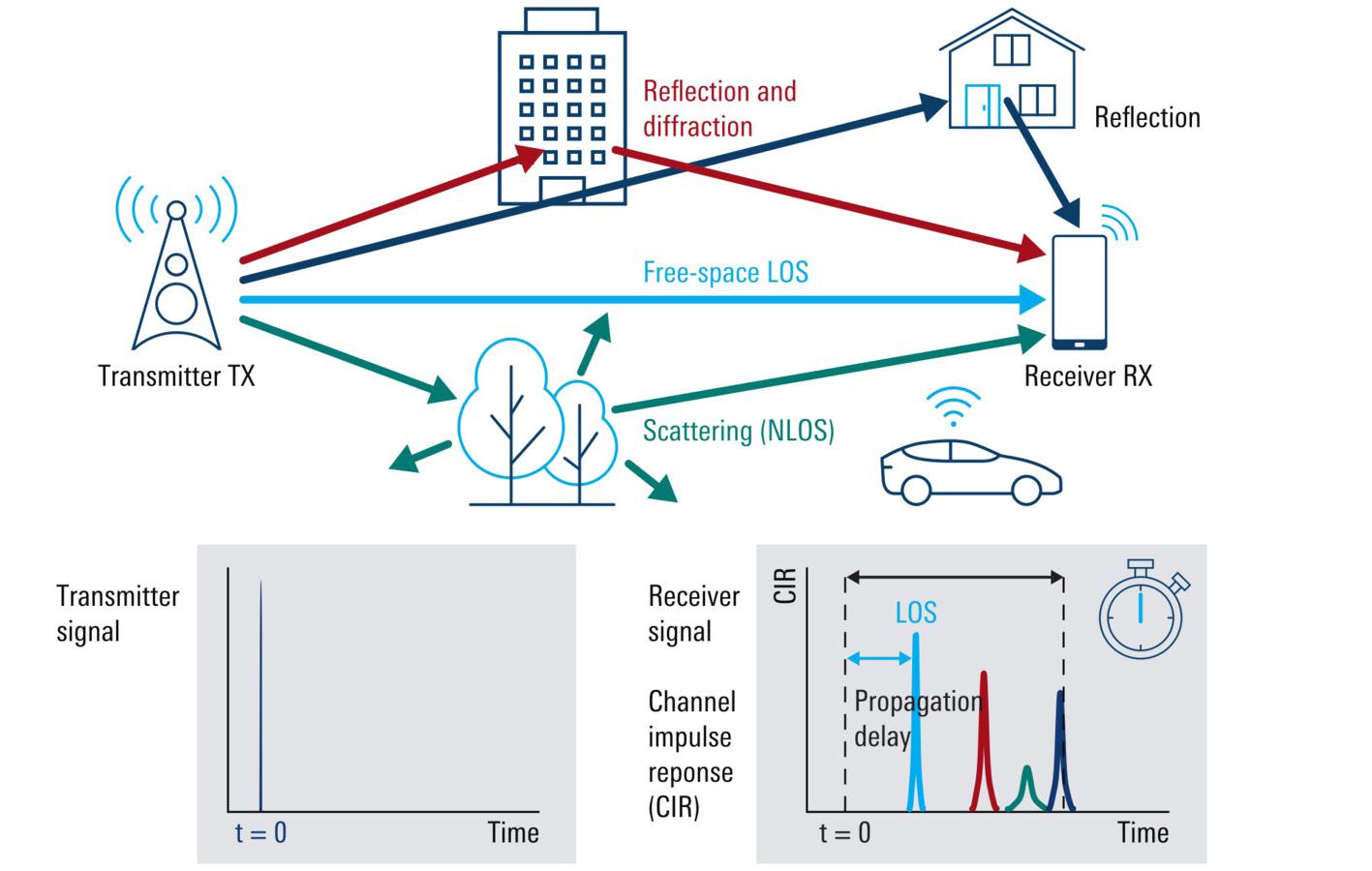

Wireless system design complexity keeps increasing, from mobile wireless technology moving from 3G and 4G to the expansive use cases of 5G, and the introduction of Industry 4.0. Driven by the need to optimally manage the sharing of valuable resources to an expanding set of users, a growing number of engineers are turning to artificial intelligence (AI) to solve the challenges introduced by modern systems.

From optimizing call performance through resource allocation to managing vehicle-to-vehicle (V2V) and vehicle-to-everything (V2X) communication between autonomous cars, AI has brought the sophistication necessary for today’s wireless applications. As the number and capabilities of the devices connected to networks expand, so too will the role of AI in wireless. For the future success of the technology, engineers should be aware of the key benefits and applications of AI in today’s wireless systems, as well as the best practices necessary for optimal implementation.

Three distinct use-cases have defined mobile networks’ transition to 5G and have acted as the driving forces in engineers’ adoption of AI. These include:

• The optimization of speed and quality of mobile broadband networks,

• The need for ultra-reliable, low-latency communication, and

• Massive machine-type communication for time-sensitive connections between Industry 4.0 devices.

An expanding set of devices competing for the resources of the same network and an ever-increasing pool of users also leads to the increasing complexity of wireless systems. Formerly linear patterns of designs once understood by human-based rules and human processing of data are no longer sufficient. AI techniques, however, can overcome non-linear problems by extracting patterns automatically and efficiently. These techniques can do so beyond the ability of human-based approaches.

Integrating AI in a wireless environment enables machine learning and deep learning systems to recognize patterns within communications channels. These systems then optimize the resources given to each link to improve performance. Without AI methodologies, managing a modern network whose applications compete for the same resources becomes a nearly impossible task.

The sophistication of AI also enables more efficient project management through techniques such as reduced-order modelling. By estimating the behaviour of a source environment and incorporating that estimate into an algorithmic model, engineers can quickly study a system’s dominant effects using minimal computational resources. Additional benefits in this context include more time to explore designs and faster iterations, which cut production-cycle time and the associated costs.


MathWorks

Data quality is vital for the successful and effective deployment of AI. AI models need to be trained with a comprehensive range of data to adequately deal with real-world scenarios. Applications provide the data variability necessary for 5G network designers to train AI robustly by synthesizing new data based on primitives or by extracting them from over-the-air signals. Failure to explore a large training data set and iterate on different algorithms based on that data could result in a narrow local optimization instead of an overall global one, compromising the reliability of AI in real-world scenarios.

A robust approach to testing AI models in the field is similarly critical to success. If signals to test AI are captured only in a narrow and localized geography, the lack of variability in that training data may negatively impact how an engineer may approach and optimize their system design. Without comprehensive field iterations, the parameters of individual cases cannot be used to optimize AI for specific locations, adversely impacting call performance.

Digital transformation has been embraced in industries across the spectrum, from telecommunications to automotive. This increased adoption of transformation has necessitated the widescale adoption of AI and is the primary driver for its application.

Placing electronic communications in areas once mechanically orientated generates large amounts of data as applications that include smart homes, telecommunication networks, and autonomous vehicles (AV) connect. The large quantities of data generated by these applications facilitate the development of future-looking AI techniques to accelerate the process of digital transformation, yet also stretch the resources of the joining network.

In telecommunications, AI is deployed at two levels—at the physical layer (PHY) and above PHY. The application of AI for improving call performance between two users is referred to as operating at PHY. Applications of AI techniques to physical layers include digital pre-distortion, channel estimation, and channel resource optimization. Additional applications include autoencoder design that spans automatic adjustments to transceiver parameters during a call. Figure 1 shows a roadmap for using data and AI to train models.

Channel optimization is the enhancement of the connection between two devices, principally network infrastructure and user equipment such as handsets. Using AI helps to overcome signal variability in localized environments through processes such as fingerprinting and channel state information compression.

With fingerprinting, AI can optimize positioning and localization for wireless networks by mapping disruptions to propagation patterns in indoor environments, caused by individuals entering and disrupting the environment. AI then estimates, based on these individualized 5G signal variations, the position of the user. In so doing, AI overcomes traditional obstacles associated with localization methods using comparisons between received signal strength indication (RSSI) and the RSS in providers’ databases. Channel state information compression, on the other hand, is the use of AI to compress feedback data from user equipment to a base station. This ensures that the feedback loop informing the station’s attempt to improve call performance does not exceed the available bandwidth, leading to a dropped call.
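For contrast, the conventional database comparison mentioned above can be sketched in a few lines. This is only an illustration of that baseline, not the AI-based fingerprinting the article describes: a measured RSSI vector is matched against stored entries by nearest-neighbor search. The database contents, the three-access-point layout, and the function name `locate` are all assumptions made for the example.

```python
import math

# Hypothetical fingerprint database: (x, y) position in metres mapped to the
# RSSI in dBm seen from three access points. All values are invented.
FINGERPRINTS = {
    (0.0, 0.0): (-40.0, -70.0, -65.0),
    (5.0, 0.0): (-55.0, -60.0, -70.0),
    (0.0, 5.0): (-60.0, -72.0, -50.0),
    (5.0, 5.0): (-68.0, -58.0, -55.0),
}

def locate(rssi, k=1):
    """Estimate position as the mean of the k closest stored fingerprints."""
    ranked = sorted(FINGERPRINTS.items(),
                    key=lambda item: math.dist(item[1], rssi))
    nearest = [pos for pos, _ in ranked[:k]]
    x = sum(p[0] for p in nearest) / k
    y = sum(p[1] for p in nearest) / k
    return (x, y)

print(locate((-56.0, -61.0, -69.0)))       # closest single fingerprint
print(locate((-62.0, -65.0, -60.0), k=4))  # average of all four entries
```

A lookup like this works only as long as the stored RSS values still describe the environment, which is the limitation that motivates learning the signal variations instead.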

Above-PHY uses are primarily in resource allocation and network management. As the number of users and use cases on the network exponentially increases, network designers are looking to AI techniques to respond to allocation demands in real time. Applications such as beam management, spectrum allocation, and scheduling functions are used to optimize the management of a core system’s resources for the competing users and use cases of the network.

In the automotive industry, using AI for wireless connectivity makes safer autonomous driving possible. Autonomous vehicles and V2V/V2X vehicular communications rely on data from multiple sources, including LiDAR, radar, and wireless sensors, to interpret the environment. The hardware present in AVs must handle data from these competing sources to function effectively. AI enables sensor fusion (fusing competing signals to allow the vehicle’s software to make sense of its location and establish how it will interact with its environment by understanding omnidirectional messages).

This approach to communications allows the vehicle to establish a 360-degree field of “awareness” of other vehicles (Figure 2) and potential crash threats within its proximity. Whether by informing the driver of the vehicle or by driving autonomously, the use of AI is improving road traffic safety and reducing the number of crashes at intersections.

As the use cases for wireless technology expand, so too does the need to implement AI within those systems. From 5G, to AV, to IoT, these applications would not have the sophistication necessary to function effectively without the use of AI. AI’s place in the engineering landscape, particularly wireless system design, has been growing exponentially in recent years and this pace of change can be expected to continue rising – and faster – as the use cases and the number of network users expand in the modern age.

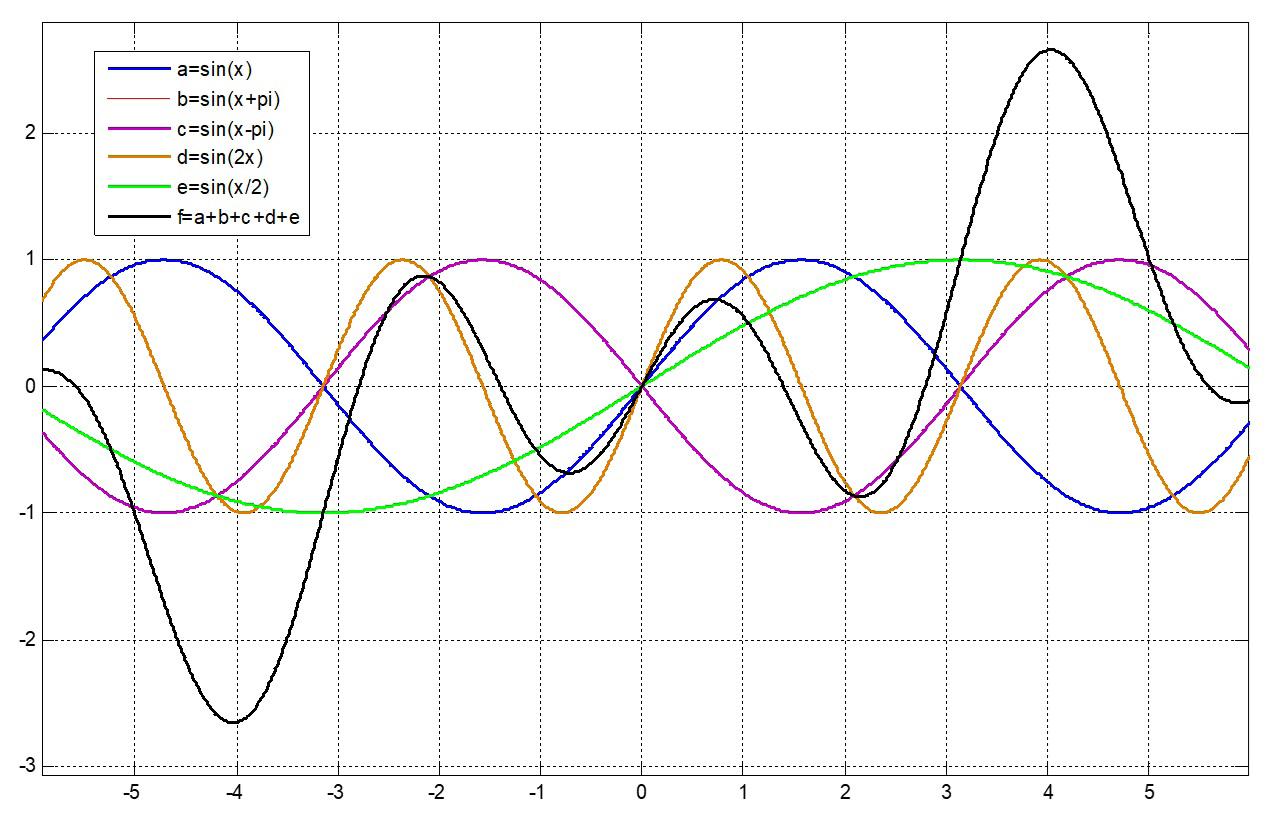

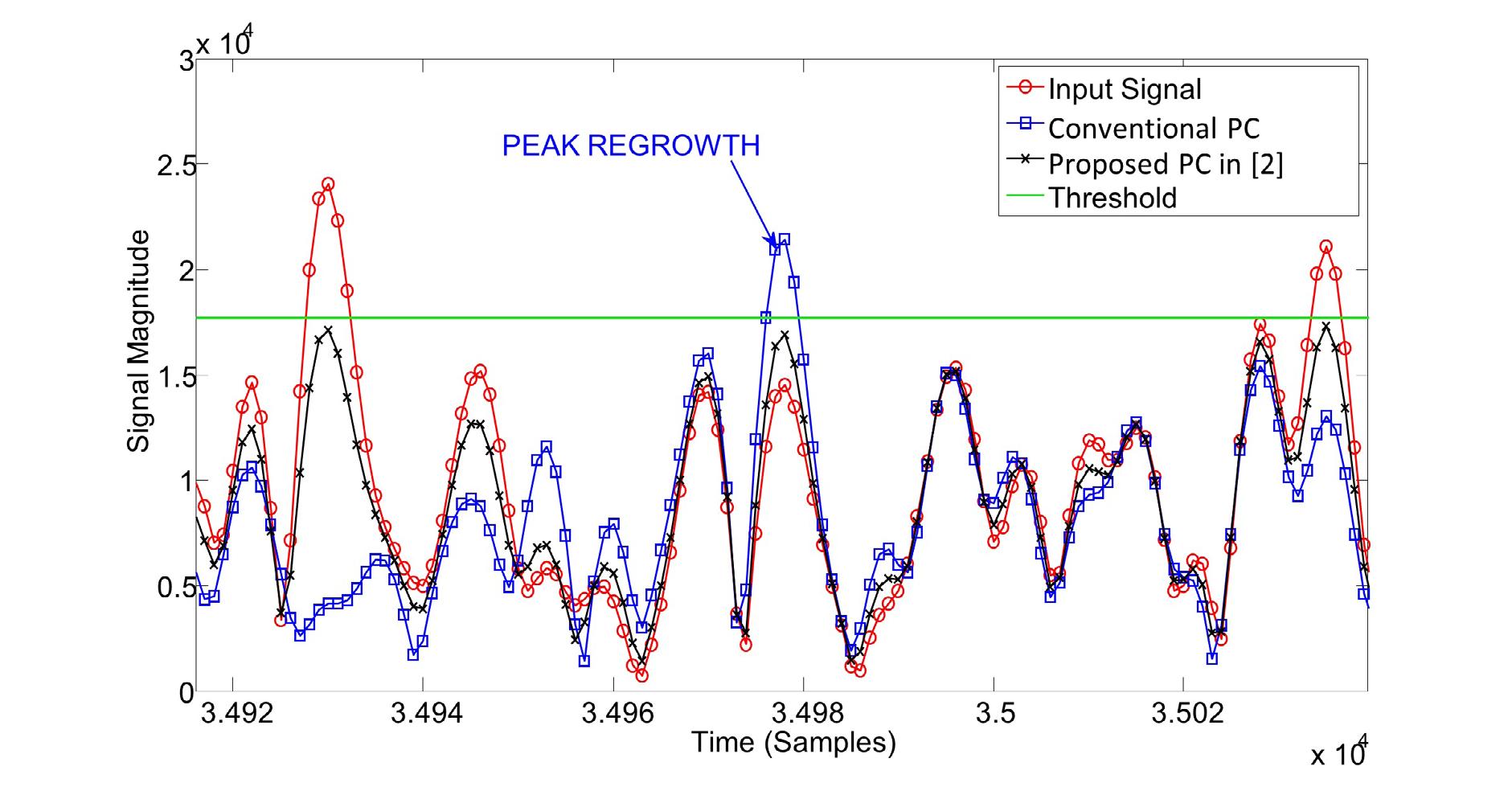

5G’s orthogonal frequency division multiplexing (OFDM) brings with it a major design challenge, namely an inherently wide dynamic variation between a signal’s peak and average power. This high peak-to-average power ratio (PAPR) leads to inefficient transmission performance. That’s because the OFDM waveform is created by the sum of multiple sinusoidal signals that can exhibit constructive and destructive behaviour. As a result, at some time instants, the ratio of the maximum signal power to its average will become high. A signal with high PAPR will cause a power amplifier (PA) to operate in its non-linear region.

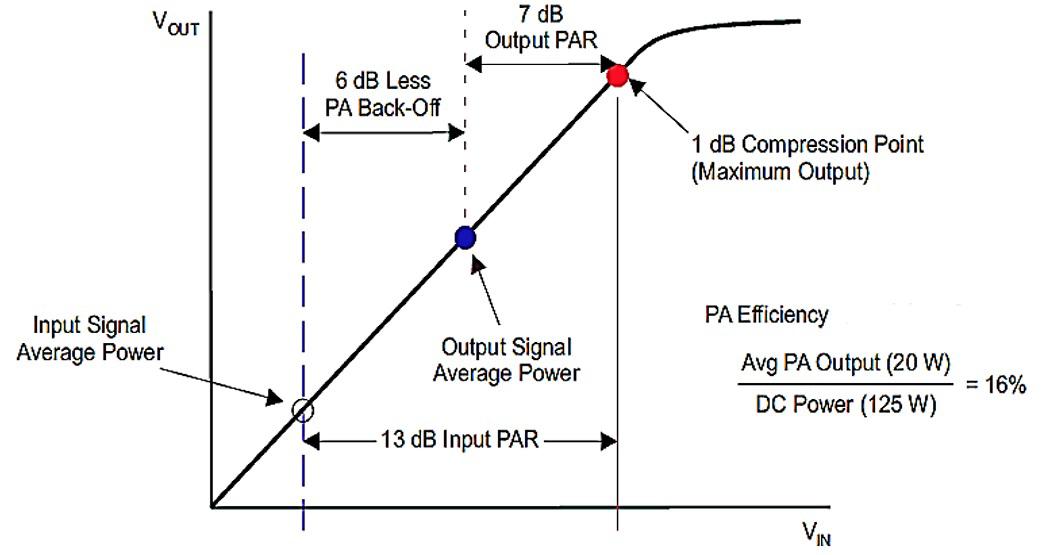

A high PAPR creates two problems. First, out-of-band distortion leads to adjacent-channel interference and spectral emission mask (SEM) violations. Second, in-band distortion degrades the throughput performance. To tackle these problems, a radio’s power amplifier (PA) needs to operate below its 1 dB compression point (P1dB). That backing off improves linearity at the cost of reduced PA efficiency. We need a PAPR reduction technique to let the PA operate at high efficiency while maintaining good linearity.

Several techniques that reduce the PAPR of the OFDM signal have been proposed. There are two main categories for these techniques: distortion-based methods, which result in out-of-band distortion, and distortionless methods. This article defines PAPR in more detail and introduces the techniques to reduce PAPR. The article will also discuss some of the challenges of reducing the PAPR in multi-operator network use cases.

An OFDM waveform is created by the summation of multiple sinusoidal signals. Figure 1 shows that constructive interference between the sinusoids occurs when the individual sinusoids reach their maximum values at the same time. This constructive interference will cause the output envelope of the OFDM waveform to suddenly rise, producing high peaks. At other times, destructive interference will occur. In that case, the individual sinusoids will cancel out one another, resulting in troughs in the OFDM waveform output envelope. Furthermore, in the example of Figure 1, the sinusoids have constant amplitude. In practice, the amplitudes of the sinusoids will vary dynamically. This amplitude variation, combined with the destructive interference, results in a low average output envelope. Hence, the OFDM waveform features high PAPR.
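The effect is easy to reproduce numerically. The sketch below is an illustration under stated assumptions rather than the setup behind Figure 1: it models each subcarrier as a complex sinusoid at an integer multiple of 1/T, uses 64 subcarriers with 4x oversampling, and compares fully aligned carriers with randomly chosen QPSK symbols. The function names and parameter values are invented for the example.

```python
import cmath
import math
import random

def ofdm_envelope(symbols, oversample=4):
    """Sample x(t) = (1/sqrt(N)) * sum_i A_i * exp(j*2*pi*i*t/T) over one symbol."""
    n = len(symbols)
    total = n * oversample  # number of time samples across the symbol period T
    return [
        sum(a * cmath.exp(2j * math.pi * i * k / total)
            for i, a in enumerate(symbols)) / math.sqrt(n)
        for k in range(total)
    ]

def papr_db(samples):
    """Peak power divided by mean power, expressed in dB."""
    powers = [abs(s) ** 2 for s in samples]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))

N = 64                      # number of subcarriers (assumed value)
aligned = [1 + 0j] * N      # every sinusoid reaches its maximum at t = 0
random.seed(1)
qpsk = [random.choice([1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]) / math.sqrt(2)
        for _ in range(N)]

print(f"aligned carriers: {papr_db(ofdm_envelope(aligned)):.2f} dB")
print(f"random QPSK:      {papr_db(ofdm_envelope(qpsk)):.2f} dB")
```

With every carrier aligned, the peak power is N times the average, so the PAPR is 10·log10(N), about 18.06 dB for N = 64. Random data rarely lines up that perfectly, but the peaks it does produce are still well above the average.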

As Figure 2 shows, when a multicarrier signal with a very wide dynamic range and high PAPR, such as an OFDM signal, is fed into a PA, the PA operates in its nonlinear region. Doing so results in out-of-band distortion, which violates the spectrum emission mask (SEM), disrupts adjacent channels, and creates unwanted radiation. At the cellular system level, this causes poor coverage, dropped calls, and low quality of service. Backing off the power to avoid PA compression will result in lower power efficiency and cell coverage. A promising solution would reduce PAPR. In Figure 2, the PAPR of the input signal is 13 dB. A PAPR reduction technique can reduce this to 7 dB, allowing 6 dB less back-off, which results in a significant PA efficiency improvement.
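To put the 6 dB figure in linear terms, here is a small back-of-the-envelope sketch. It assumes signal peaks are held at a fixed ceiling, so average output power equals that ceiling minus the PAPR. The 46 dBm ceiling is a made-up value, not a figure from the article.

```python
def db_to_ratio(db):
    """Convert a power ratio in dB to a linear ratio."""
    return 10 ** (db / 10)

PEAK_LIMIT_DBM = 46.0  # hypothetical ceiling for signal peaks, not from the article

def average_power_dbm(papr_db):
    """Average output power when peaks are held at PEAK_LIMIT_DBM."""
    return PEAK_LIMIT_DBM - papr_db

before = average_power_dbm(13.0)  # PAPR of the input signal in Figure 2
after = average_power_dbm(7.0)    # PAPR after reduction
gain = db_to_ratio(after - before)
print(f"{after - before:.1f} dB less back-off = {gain:.2f}x average power")
```

Under that assumption, 6 dB less back-off corresponds to roughly four times the average output power for the same peak level.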

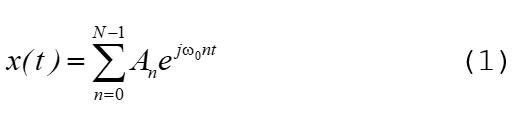

High PAPR is a major practical problem involving multi-carrier signals such as an OFDM signal. If A = (A0,A1, ...,A(N-1)) is a modulated data sequence of length N in time interval (0,t), where Ai is a symbol from a signal constellation and t is symbol duration, then the N carrier OFDM envelope is given by

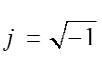

Figure 3. The peakcancellation technique uses peak detection and filtering to reduce peaks created when peaks in OFDM sinusoids align.

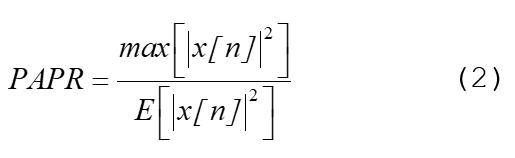

where and . The PAPR of a transmitted signal, , is the ratio of the maximum power and the average power of the signal and can be defined by:

called scrambling techniques, require some side information sent to the receiver device, adding complexity to the system. Moreover, this protocol is not defined by the 3GPP for user equipment. Techniques such as Partial Transmit Sequence (PTS) and Selected Mapping (SLM) fall into this category. These techniques are, however, not practical in 4G and 5G mobile systems. Hence, the distortion-based techniques are more attractive [1]. Here we review the peak cancellation technique used widely in wireless communication systems.

amplitude. Each PCE is occupied for the duration of the filter length and the incoming peaks should wait until the process of the PCE is completed. Finally, all the PCE outputs are accumulated and subtracted from the delayed input signal.

The PC’s main drawback is that it requires a minimum of two stages to achieve the required performance mandated in 3GPP. Hence, the complexity and latency will increase. The number of stages will increase for larger bandwidth and in multi carrier applications.

where E denotes the expectation operator, which calculates the signal’s average amplitude.

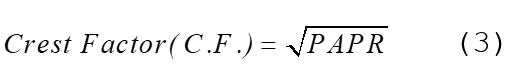

The other parameter to consider is crest factor, which is widely used in the literature, and is defined as the square root of the PAPR.

Until now, many techniques have been proposed to reduce high PAPR in communication systems. In general, these techniques can be separated into two categories, distortion-based and distortionless. In the former category, some distortion that violates the spectral emission mask (SEM) will occur. Techniques such as clipping, noise shaping, peak windowing, and peak cancellation fall into this category. As the aim in the system design is to maximise efficiency, it can tolerate some distortion.

Distortionless techniques, sometimes

Figure 3 shows the most promising PAPR reduction technique: peak cancellation (PC). Following the peak detection, the selected peaks are allocated to a peak cancellation engine (PCE). Each PCE gets the amplitude of the peaks and creates a scaling pulse by multiplying the peak phase by the filtered

Modern 5G systems present several challenges for PAPR reduction implementation.

Increasing the number of component carriers (CCs) creates more peaks in the selected window in the time domain signal envelope.

Those peaks cannot be compensated in one or two stages, requiring additional stages in the PAPR implementation. Especially in the inter-band carrier aggregation scenarios, where there is a large gap between the CCs, there will be several contiguous peaks in each selected window above the threshold. Applying the PC technique on these signals results in high error-vector magnitude (EVM). Selecting these peaks and applying filters requires more careful consideration to meet the EVM requirements. One technique to tackle this issue is proposed in [2].
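For readers who want to see what the EVM number measures, the following sketch computes it for a handful of constellation points. It uses one common normalisation, RMS error divided by RMS reference power. The symbol values are invented for illustration and the function name `evm_percent` is not from any standard library.

```python
import math

def evm_percent(reference, received):
    """RMS error vector magnitude, normalised to the RMS reference power."""
    err = sum(abs(r - s) ** 2 for s, r in zip(reference, received))
    ref = sum(abs(s) ** 2 for s in reference)
    return 100 * math.sqrt(err / ref)

# Four ideal QPSK points and made-up received points, each displaced by 0.1.
ideal = [1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]
measured = [1.1 + 1j, 1 - 0.9j, -1 + 1.1j, -0.9 - 1j]

print(f"EVM = {evm_percent(ideal, measured):.2f}%")
```

Any distortion that peak cancellation adds inside the signal band shows up as a larger error vector, which is why the peak selection and filtering have to be chosen with the EVM limit in mind.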

Figure 4 shows the result of a clipped signal with conventional PC and with the proposed PC. Clearly, when there is no spacing between contiguous peaks in the selected window, as is the case in the conventional PC, peak regrowth occurs that can exceed the threshold. This results in spectral-emission violation and EVM degradation. The proposed PC results in suppressed peak regrowth because the spacing removes the possibility of overlapping in the finite impulse response (FIR) filter sidelobes.

End-to-end latency is one of the important factors in providing users with a quality experience, especially in 5G services such as ultra-Reliable Low Latency Communication (uRLLC). The peak cancellation technique requires several stages to reduce the high peaks in the signal. The latency contribution of the peak cancellation technique can be as high as 10 µs. That’s roughly 10% of the total latency specified in 3GPP Release 15.

Increasing the number of stages in the peak cancellation can increase the complexity and the power consumption of the system. Particularly when implemented in an FPGA, the complexity of this implementation increases the system cost.

EVM is one of the key metrics for measuring the quality of the transmitted signal in a base station. Implementing the peak cancellation results in a tradeoff between the PAPR reduction capability and EVM performance. This matters because greater PAPR reduction translates to better power amplifier efficiency. While achieving higher PAPR reduction is desirable, the EVM performance requirement must still be met. Increasing the PAPR reduction means that the threshold level in the peak cancellation needs to be lower, which results in a much larger number of peaks detected in a search window. To avoid EVM degradation and peak regrowth, only contiguous peaks at some distance from each other can be selected and passed through the peak engines. It is important to know that peak regrowth is created from the contiguous peaks. That’s because the input signal of the PC is an up-sampled signal and hence the contiguous peaks can exceed the threshold at the same time. The peak regrowth causes spectral leakage, or out-of-band radiation, and EVM deterioration.
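A single stage of this process can be sketched as follows. It is a deliberately simplified illustration, not the implementation discussed in the article or in [2]: a Hann-shaped pulse stands in for the band-limited FIR cancellation filter, peaks are plain local maxima above the threshold, and the parallel peak engines are not modelled. The names `hann_pulse`, `peak_cancel`, and `min_spacing` are assumptions made for the example.

```python
import math

def hann_pulse(length):
    """Symmetric cancellation pulse with a unit centre tap (odd length)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / (length - 1))
            for k in range(length)]

def peak_cancel(x, threshold, pulse, min_spacing):
    """One peak-cancellation stage applied to a list of complex samples."""
    half = len(pulse) // 2
    y = list(x)
    last = -min_spacing  # index of the previously selected peak
    for n in range(1, len(x) - 1):
        mag = abs(x[n])
        is_peak = (mag > threshold
                   and mag >= abs(x[n - 1]) and mag >= abs(x[n + 1]))
        if not is_peak or n - last < min_spacing:
            continue  # not a peak, or too close to the previous selection
        last = n
        # Excess above the threshold, carrying the phase of the peak sample.
        scale = (mag - threshold) * x[n] / mag
        for k, p in enumerate(pulse):
            idx = n - half + k
            if 0 <= idx < len(y):
                y[idx] -= scale * p  # subtract the scaled pulse
    return y

# Made-up test signal: a flat 0.5 level with two isolated peaks.
x = [0.5 + 0j] * 50
x[10] = 2.0 + 0j
x[30] = -1.8j
y = peak_cancel(x, threshold=1.0, pulse=hann_pulse(7), min_spacing=7)
print(abs(y[10]), abs(y[30]))
```

Because `min_spacing` is at least the pulse length, the subtracted pulses never overlap, and each selected peak is pulled down to the threshold. Choosing a smaller spacing lets the pulses overlap, which is where regrowth becomes a concern.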

Most communication systems require PAPR reduction. A high-PAPR signal, once transmitted to a power amplifier, creates spectral regrowth that violates the spectral emission mask and deteriorates a network’s throughput performance. PAPR reduction techniques are therefore required to overcome this issue and increase power amplifier efficiency. Implementing PAPR reduction techniques in modern wireless systems requires careful consideration and some tradeoffs.

[1] P. Varahram et al., Power Efficiency in Broadband Wireless Communications, CRC Press, 2014.

[2] P. Varahram et al., Power amplifiers, US Patent, Patent number 20210184630, 2021.

Pooria Varahram is Research and Development Principal Engineer at Benetel. He holds a PhD in wireless communication systems from University of Putra Malaysia. His main expertise is in digital front-end and signal processing techniques.

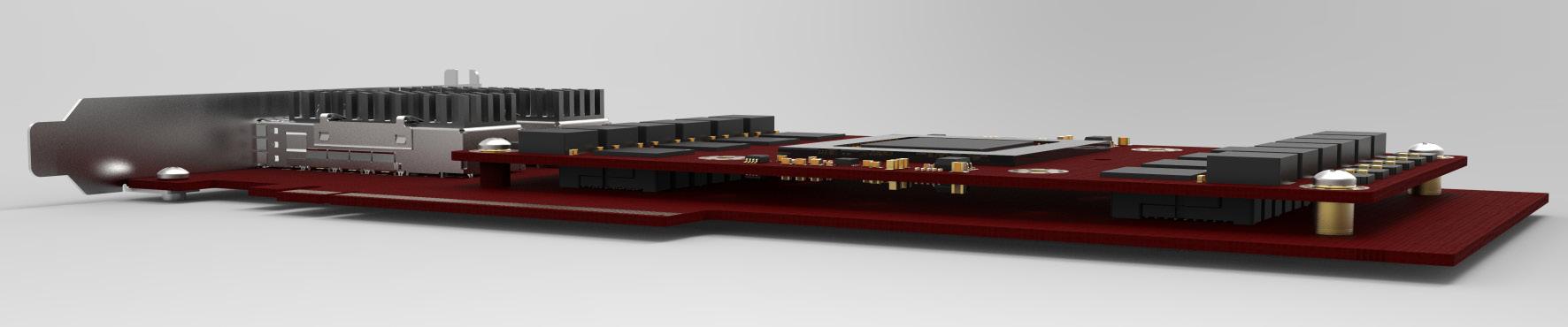

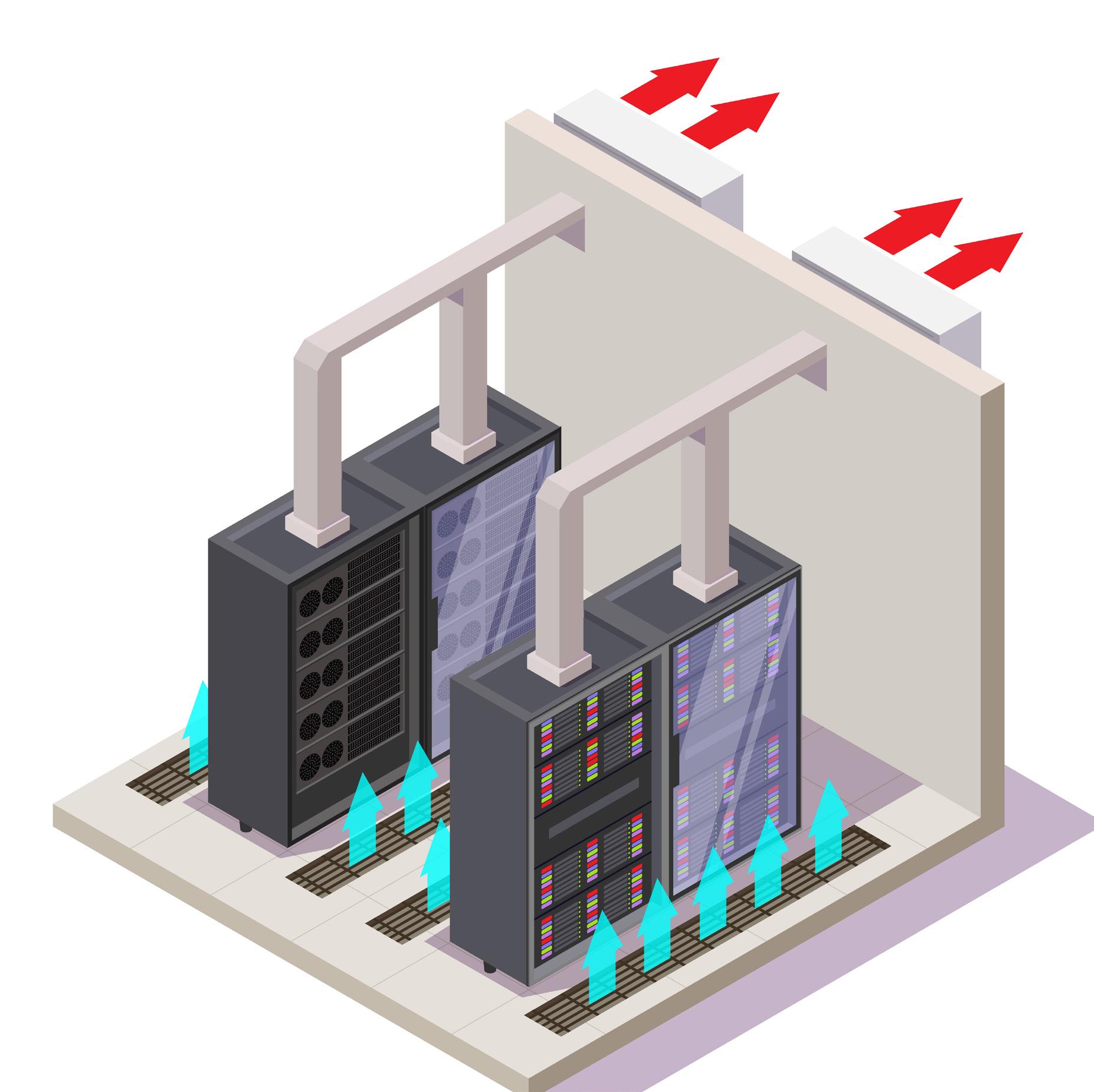

In today’s connectivity-driven world, the data center has risen to a place of unprecedented importance. At the same time, technologies such as machine learning have placed intense computational demands on the data center, while platforms such as 5G require faster data access. These conditions place an unsustainable strain on data center infrastructure.

By Scott Schweitzer, Achronix

To address this issue, engineers are re-imagining the very hardware on which the data center is built. Out of this effort, one of the most important technologies that has emerged is the SmartNIC. Dell’Oro Group predicts that the SmartNIC market will become a $2B market by 2027 [1].

In this article, we explore the SmartNIC: what it is, how it rose to prominence, and how it can be designed into a data center system to unlock future benefits.

Before understanding the SmartNIC, we must first discuss the fundamentals of its predecessor: the network interface card (NIC).

From a functional perspective, NICs have historically been an essential board or chip in computing systems, enabling connectivity in internet-connected systems [2]. Also known as network adapters, NICs are used in computer systems, such as personal computers or servers, to establish a dedicated communications channel between the system and a data network. NICs act as translators, taking host memory buffers and formatting them for transmission over a network as structured packets, typically Ethernet. NICs also receive data packets from the network and turn them into memory buffers for applications on the host. Interestingly, all data transiting a network is always represented as character data, regardless of how complex the data structure is or the number of significant digits in a decimal number. This is because the earliest data networks, like ARPANET (1969), were built using standards that had been carried forward from earlier technologies like Telex (1933), which used a 5-bit code created in the 1870s by Emile Baudot [3]. To be clear, ARPANET used 7-bit ASCII (1963); today, we use UTF-8 (8-bit), which is backward compatible with ASCII.
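As a rough picture of that translation step, the sketch below wraps a small host buffer in an Ethernet II header. A real NIC and its driver do this in hardware and firmware and also add the preamble and frame check sequence, which are omitted here. The MAC addresses are made up, and the EtherType 0x88B5 is one of the values IEEE reserves for local experimental use. The last line checks the ASCII compatibility of UTF-8 mentioned above.

```python
import struct

ETHERTYPE_EXPERIMENTAL = 0x88B5  # IEEE local experimental EtherType
MIN_PAYLOAD = 46                 # minimum Ethernet II payload in bytes

def build_frame(dst_mac, src_mac, ethertype, payload):
    """Pack an Ethernet II frame (no preamble, no frame check sequence)."""
    if len(payload) < MIN_PAYLOAD:
        payload = payload + bytes(MIN_PAYLOAD - len(payload))  # zero padding
    header = struct.pack("!6s6sH", dst_mac, src_mac, ethertype)
    return header + payload

dst = bytes.fromhex("ffffffffffff")  # broadcast address
src = bytes.fromhex("020000000001")  # locally administered, made-up address
buffer = "hello".encode("utf-8")     # stand-in for a host memory buffer
frame = build_frame(dst, src, ETHERTYPE_EXPERIMENTAL, buffer)

print(len(frame), frame[:14].hex())
print("hello".encode("utf-8") == "hello".encode("ascii"))
```

The 14-byte header plus the padded 46-byte payload gives a 60-byte frame, and the final comparison prints `True` because plain ASCII text has the same byte encoding in UTF-8.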

From a hardware perspective, a NIC consists of several key circuit blocks [4]. Some of the most important blocks include a controller for processing received data and ports for a bus connection (e.g., PCIe) within the larger system. With this hardware, the NIC implements the physical layer circuitry necessary to communicate with a data-link-layer standard, such as Ethernet. Operating as an interface, NICs transmit signals at the physical layer and work to deliver the data packets at the network layer.

Today, servers rely on NICs to establish network connectivity, but ongoing changes in the industry will render basic NICs obsolete for server use.

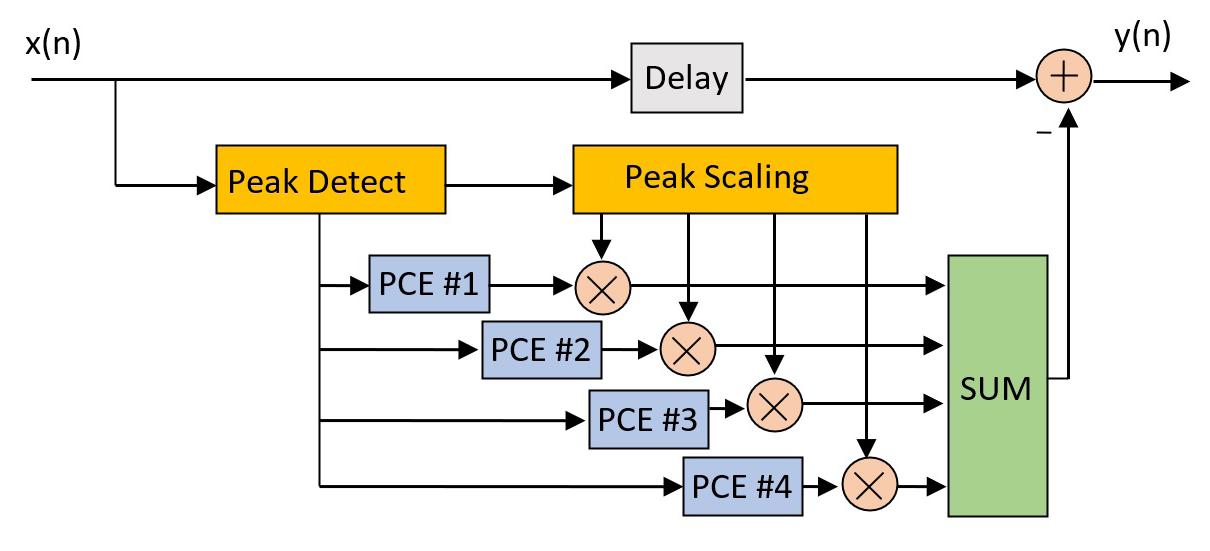

Learn the basics about SmartNICs, their design, and why they’ll be a crucial element in future networks.

Figure 1. Compared to a traditional NIC, a SmartNIC adds storage, security, and networking features, removing them from the host CPU.

The proliferation of cloud computing and a general increase in internet traffic inundate data centers with growing data and computational tasks. Meanwhile, hardware is getting bogged down by the rise of data and compute-intensive applications such as machine learning, which are placing a greater strain on the computing hardware within the data center. To further confound the matter, the industry is simultaneously pushing towards faster data rates with technologies such as 5G, and soon 6G.

The result: existing data center hardware can no longer keep up with these demands. Workloads accelerate faster than CPUs can handle; the virtualization and microservices running in the data center are quickly becoming unmanageable.

Data center architects have realized that the processing requirements needed to serve the network have become too great for conventional NIC-based architectures. Instead, to achieve high performance without sacrificing power or complexity, we need to offload complex networking tasks from the server CPUs to dedicated accelerator blocks.

As many in the industry see it, the answer to these problems is the SmartNIC.

At a high level, SmartNICs are NICs augmented with a programmable data processing unit (DPU) for network data acceleration and offloading. The names SmartNIC and DPU are often used interchangeably. The SmartNIC adds extra computing resources to the NIC to offload tasks from the host CPU, freeing up the host CPU for more important tasks.

Early SmartNIC implementations used register-driven ASIC logic. These designs tended to deliver high performance in terms of extremely low latency, high packet throughput, and low power consumption, the latter ranging from 15 W to 35 W. Despite the performance benefits, however, they lacked the programmability and flexibility required, often relying on esoteric command-line tools to set registers. They lacked any meaningful way to programmatically manage packet and flow complexity.

SmartNICs are used in many different deployments, including storage, security, and network processing. Some specific tasks that a SmartNIC may be responsible for include offloading overlay tunneling protocols such as VXLAN and complex virtual switching from server processors [5]. As shown in Figure 1, the eventual goal is to have a solution that consumes fewer host CPU processor cores and, at the same time, offers higher performance at a reduced cost.
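As an illustration of the tunneling work that can be offloaded, the sketch below builds and strips the 8-byte VXLAN header (a flags byte with the VNI-valid bit, reserved bits, and a 24-bit VXLAN network identifier). The function names are hypothetical, and the outer Ethernet, IP, and UDP headers that a complete encapsulation also needs are omitted.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid identifier


def vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit network identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: flags (8 bits) + reserved (24 bits); word 1: VNI (24 bits) + reserved (8 bits)
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def vxlan_encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header."""
    return vxlan_header(vni) + inner_frame


def vxlan_decapsulate(packet: bytes):
    """Return (vni, inner_frame), rejecting packets without the VNI-valid flag."""
    word0, word1 = struct.unpack("!II", packet[:8])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag not set")
    return word1 >> 8, packet[8:]


tunneled = vxlan_encapsulate(5000, b"inner-frame-bytes")
vni, inner = vxlan_decapsulate(tunneled)
```

Performing this per packet on host cores consumes cycles at high line rates, which is why it is a common candidate for offload.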

Taking a deeper look at a SmartNIC, we find that the programmable packet pipeline consists of many individual hardware accelerator blocks, all optimized for high-performance and low-power networking tasks. Depending on variables such as application and cost, there are many different SmartNIC implementations, each with its own benefits and tradeoffs.

One popular method for designing SmartNICs is to use a cluster of Arm cores. One of the major benefits of Arm core-based designs is the huge proliferation of existing tools, languages, and libraries. On top of this, these designs shine with respect to flexibility, featuring the best packet and flow complexity compared to other options.

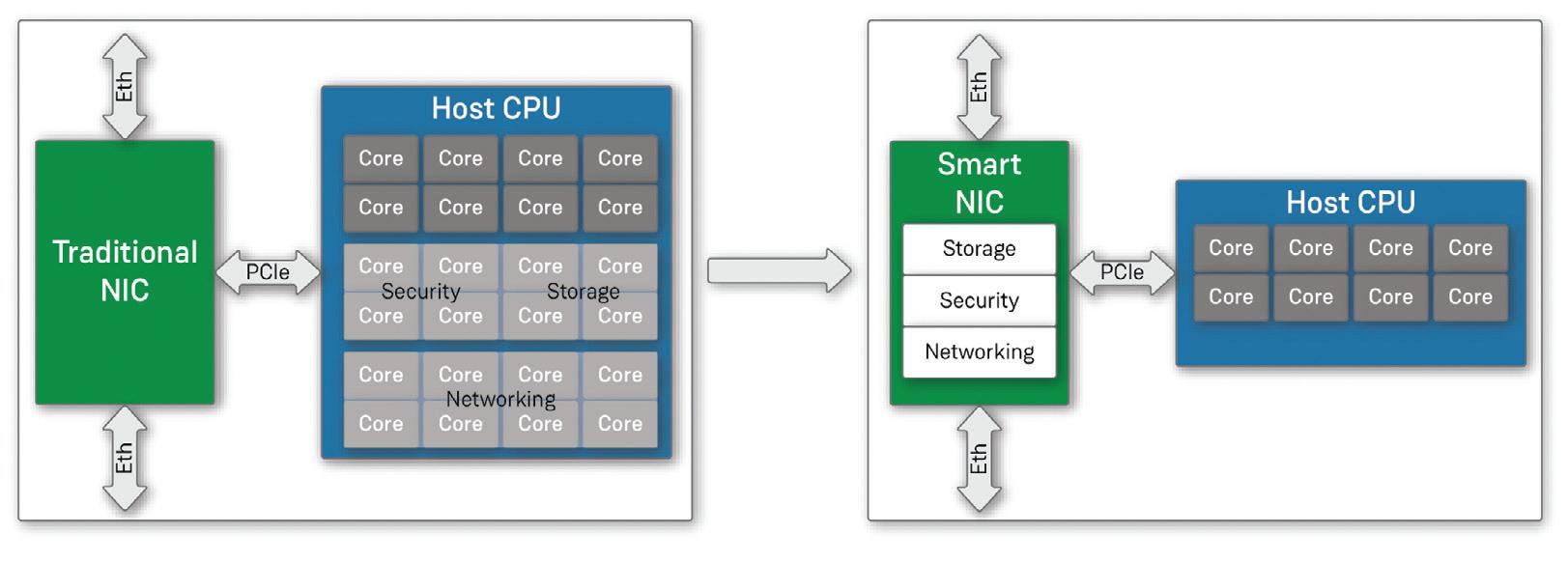

Many newer SmartNICs contain FPGAs, operating as an FPGA-based SmartNIC or an FPGA-augmented SmartNIC. An FPGA-based SmartNIC, shown in Figure 2, is a design that employs the expanded hardware programmability of FPGAs to build any data-plane functions required by the tasks offloaded to the SmartNIC. Because you can program an FPGA, you can tear down and reconfigure the FPGA’s data-plane functions at will and in real time.

FPGAs can operate at hardware speeds as opposed to being limited by software, often offering several orders of magnitude in performance improvements. The large inherent parallelism in FPGAs leads to SmartNIC designs that exhibit high performance, high bandwidth, and high throughput.

The FPGA-based SmartNIC utilizes both hard logic for basic system input and output functions (blue boxes) and programmable soft-logic blocks (orange boxes) for advanced packet and network flow processing tasks. A huge region of programmable logic can handle custom functions loaded on demand or written and loaded in the field. These optional logic blocks might include wire-rate packet deduplication within a half-second window, load balancing of packets and even flows, or advanced security functions, including unanchored string searches through the entire packet. Such searches might look for potentially thousands of strings in parallel.
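To make the deduplication function concrete, here is a minimal software model of dropping repeated packets within a half-second window. It is only a behavioral sketch: the class name is hypothetical, hashing with SHA-256 is an assumption, and an FPGA would implement the lookup in parallel hardware rather than with a dictionary.

```python
import hashlib


class PacketDeduplicator:
    """Flags a packet as a duplicate if identical bytes were seen within the window."""

    def __init__(self, window_s: float = 0.5):
        self.window_s = window_s
        self._first_seen = {}  # packet digest -> timestamp of first sighting

    def is_duplicate(self, packet: bytes, now: float) -> bool:
        # Forget digests whose window has elapsed (linear scan: fine for a model).
        cutoff = now - self.window_s
        for digest in [d for d, t in self._first_seen.items() if t < cutoff]:
            del self._first_seen[digest]

        digest = hashlib.sha256(packet).digest()
        if digest in self._first_seen:
            return True            # same bytes seen inside the window
        self._first_seen[digest] = now
        return False


dedup = PacketDeduplicator(window_s=0.5)
results = [
    dedup.is_duplicate(b"packet-A", now=0.0),  # first sighting
    dedup.is_duplicate(b"packet-A", now=0.4),  # repeat inside the window
    dedup.is_duplicate(b"packet-A", now=0.8),  # window measured from first sighting has expired
]
```

The window here runs from the first sighting of a packet; refreshing it on every repeat is an equally valid design choice.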

FPGA-augmented SmartNICs, on the other hand, are systems that simply add an FPGA to an existing NIC complex. Based on the design, the NIC can be either an existing multicore SmartNIC or just a simple NIC ASIC. The FPGA can reside either behind or in front of the NIC chip complex or even out-of-band using a secondary PCIe bus.

Overall, SmartNICs that leverage FPGA technology can reap the benefits of good programmability and flexibility, as well as excellent latency and outstanding throughput. However, nothing is free; greater throughput and flexibility mean the FPGA often draws more power than similar ASICs, which deliver substantially less performance.

Clearly, you have numerous choices and tradeoffs to balance when it comes to designing a SmartNIC.

Ultimately, a successful SmartNIC design must:

• Implement complex data-plane functions, including multiple match action processing, tunnel termination and origination, traffic metering, and traffic shaping.

• Provide the host processor with per-flow statistics to inform network-tuning algorithms that optimize traffic flow.

• Include a high-speed data plane that is programmable through either downloadable logic or code blocks to create a flexible architecture that can easily adapt to changing data plane requirements.

• Work seamlessly with existing data center ecosystems.
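Two of the requirements above, match-action processing and per-flow statistics, can be sketched together in a few lines. Everything here (class names, the rule format, the action strings) is hypothetical and stands in for what would be a hardware pipeline on a real SmartNIC.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowKey:
    src_ip: str
    dst_ip: str
    dst_port: int


@dataclass
class FlowStats:
    packets: int = 0
    byte_count: int = 0


class MatchActionTable:
    """First matching rule wins; every packet updates its flow's counters."""

    def __init__(self, default_action: str = "drop"):
        self.rules = []                      # list of (match fields, action)
        self.default_action = default_action
        self.stats = defaultdict(FlowStats)  # per-flow counters for the host

    def add_rule(self, match: dict, action: str) -> None:
        self.rules.append((match, action))

    def process(self, key: FlowKey, length: int) -> str:
        flow = self.stats[key]
        flow.packets += 1
        flow.byte_count += length
        for match, action in self.rules:
            if all(getattr(key, field) == value for field, value in match.items()):
                return action
        return self.default_action


table = MatchActionTable()
table.add_rule({"dst_port": 4789}, "decap_vxlan")
table.add_rule({"dst_ip": "10.0.0.5"}, "forward")

tunnel = FlowKey("10.0.0.1", "10.0.0.9", 4789)
actions = [table.process(tunnel, 1500), table.process(tunnel, 900)]
other = table.process(FlowKey("10.0.0.1", "10.0.0.7", 80), 64)
```

The host can then read `table.stats` to feed whatever tuning algorithm it runs.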

To achieve these, it is highly unlikely that any one technology (i.e., ASIC, FPGA, or CPU) will lead to a “passable” SmartNIC. Instead, you should choose at least two technologies such as a combination of ASIC and FPGA. In practice, the best design will likely be one that marries all three design options along with a very high-performance network-on-chip to tie them together.

The SmartNIC, a network interface card with an integrated data processing unit (DPU), is gaining importance for use in data centers. Engineers can design SmartNICs with ASICs, Arm cores, and/or FPGAs. Design choices come with several tradeoffs. All things considered, a successful SmartNIC design should be programmable, performant, and fit seamlessly within the rest of the system.

References

[1] “Smart NIC Market to Approach $2 Billion by 2027, According to Dell’Oro Group,” Feb. 23, 2023. https://www.delloro.com/news/smartnic-market-to-approach-2-billion-by-2027

[2] Network Interface Card, https://www.sciencedirect.com/topics/computer-science/network-interface-card

[3] “Émile Baudot Invents the Baudot Code, the First Means of Digital,” Jeremy Norman’s History of Information. https://www.historyofinformation.com/detail.php?id=3058

[4] Christopher Trick, “What is a NIC Card (Network Interface Card)?” Trenton Systems, April 12, 2022. https://www.trentonsystems.com/blog/nic-card

[5] David Smith, “A Network Engineer’s Perspective of Virtual Extensible LAN (VXLAN),” Connected, December 17, 2018. https://community.connection.com/network-engineers-perspective-virtualextensible-lan-vxlan/

Engineers have several mmWave over-the-air test methods available for evaluating phased-array antennas used in antenna-in-package designs. Each has pros and cons.

By Su-Wei Chang, Ethan Lin, Andrew Wu, and Jackrose Kuo, TMYTEK

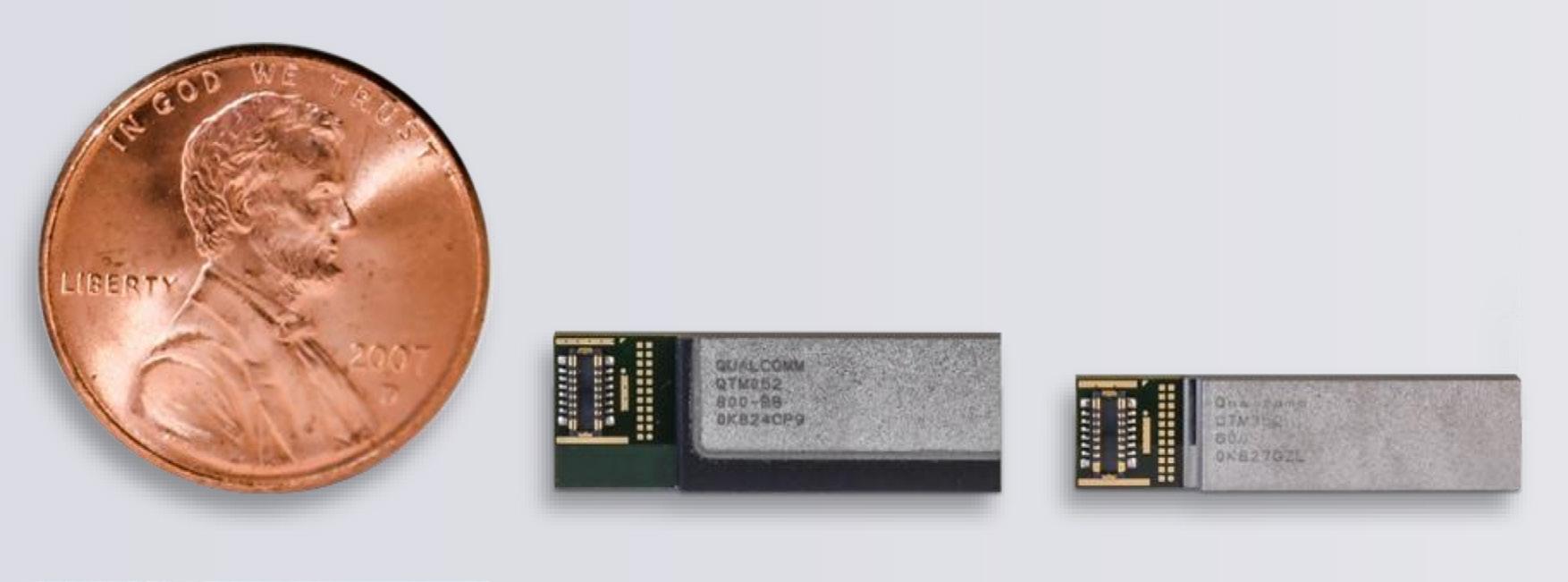

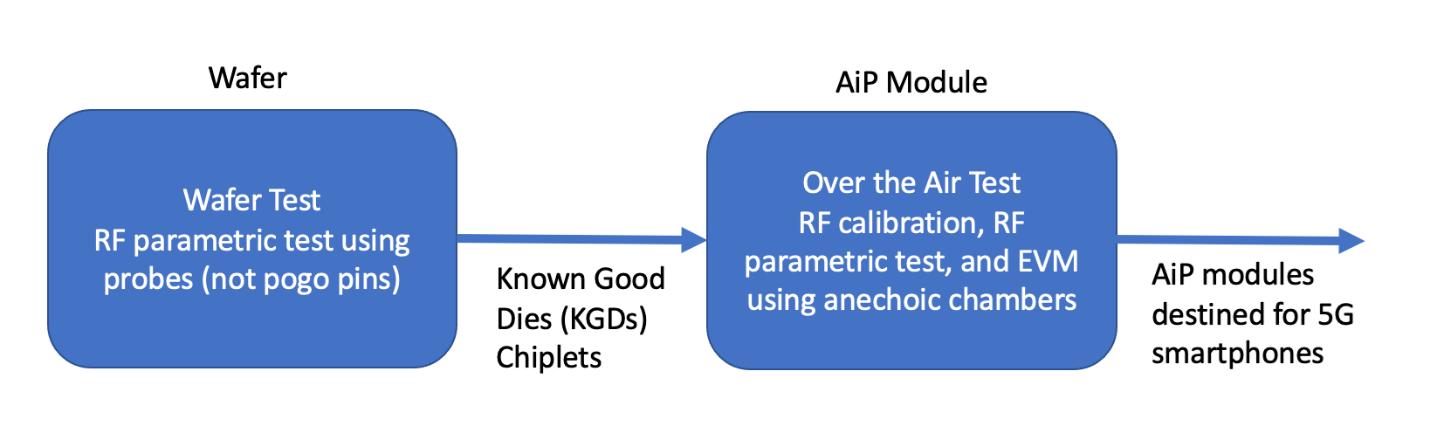

5G brought mmWave frequencies from 24 GHz to roughly 50 GHz to cellular networks. Those frequencies provide wide bandwidths that enable high data rates. Unfortunately, signals at these frequencies are susceptible to atmospheric absorption, scattering, and blocking. To offset these problems, mmWave depends on phased-array antennas and beam steering to direct energy to the target. Antenna-in-package (AiP) designs combine antennas with modems and mmWave components. That saves space but eliminates test points, making wired testing impossible. Testing AiP designs requires over-the-air (OTA) techniques.

Evaluating antenna characteristics such as gain, phase, and radiation patterns through OTA radiation testing is crucial to verifying beam-steering performance and ensuring that these systems meet the required standards for performance, coverage, and reliability.

OTA measurement methods include phased-array, far-field, indirect far-field, and horn-antenna techniques. Testing AiP RF front-end systems emphasizes the importance of calibrating and measuring gain loss and phase error, which directly affect beamforming performance.

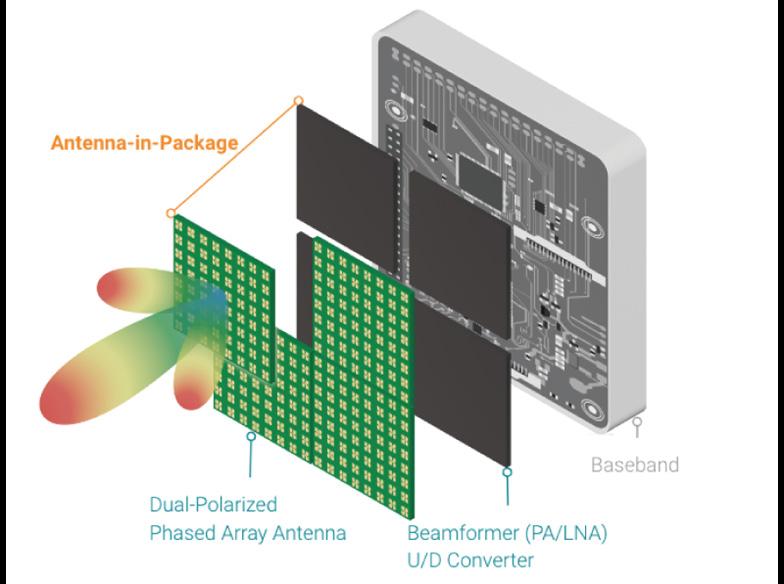

Figure 1 shows an RF front-end module with an integrated antenna array and beamformer ICs that form an active phased-array beamforming subsystem consisting of:

• phase shifters,

• a power amplifier (PA),

• a low-noise amplifier (LNA), and

• optional integrated frequency up/down converters, power management, and control.

Phased arrays control a signal’s gain and phase, creating constructive and destructive interference that enables beamforming and beam steering, as shown in Figure 2. AiPs are used in 5G/SATCOM applications.
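A short sketch can show how per-element phase settings produce constructive interference in one direction and destructive interference in others. It assumes an idealized uniform linear array of isotropic elements with half-wavelength spacing; the function names and the 30-degree steering angle are illustrative choices, not values from the article.

```python
import cmath
import math


def steering_phases_deg(n_elements: int, spacing_wavelengths: float, steer_deg: float):
    """Phase-shifter setting for each element so the beam points at steer_deg."""
    step = -360.0 * spacing_wavelengths * math.sin(math.radians(steer_deg))
    return [(n * step) % 360.0 for n in range(n_elements)]


def array_gain_db(phases_deg, spacing_wavelengths: float, theta_deg: float) -> float:
    """Normalized array response (dB) toward theta_deg for the given phase settings."""
    total = 0j
    for n, phase in enumerate(phases_deg):
        # Phase due to the extra path length from element n toward theta_deg
        path = 360.0 * spacing_wavelengths * n * math.sin(math.radians(theta_deg))
        total += cmath.exp(1j * math.radians(path + phase))
    magnitude = abs(total) / len(phases_deg)
    return 20.0 * math.log10(max(magnitude, 1e-6))  # floor avoids log10(0) in nulls


phases = steering_phases_deg(n_elements=4, spacing_wavelengths=0.5, steer_deg=30.0)
pattern = {angle: array_gain_db(phases, 0.5, angle) for angle in range(-90, 91)}
beam_peak_deg = max(pattern, key=pattern.get)
```

With these settings the element signals add in phase toward the steering angle and cancel toward broadside, which is the mechanism behind the beam steering described above.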

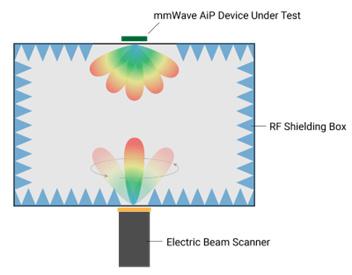

Figure 3 shows a test bed for evaluating beamforming characteristics, algorithms, and other advanced experiments such as MIMO and channel sounding.

Far-field testing is a commonly used method for 5G mmWave OTA testing. It involves measuring the electromagnetic radiation emitted by the device under test (DUT) at a distance greater than one-tenth of the wavelength of the signal being transmitted; refer to Figure 4. The far-field region is typically defined as the region beyond the Fresnel zone, which is the region surrounding the device where the phase of the electromagnetic waves is not uniform. Far-field testing is typically performed using an anechoic chamber, a specially designed room that absorbs electromagnetic waves to eliminate reflections from the walls and floor. The main advantage

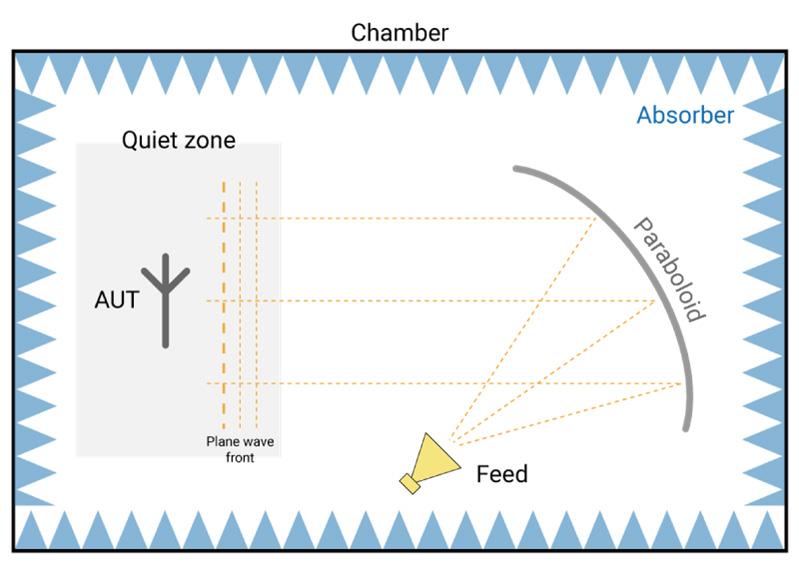

of far-field testing is that it provides a true representation of the antenna’s radiation pattern. Another form of testing called compact antenna test range (CATR) is a type of indirect far-field testing; a measurement method used to evaluate an antenna’s performance in a compact and controlled environment. It involves measuring the electromagnetic radiation emitted by the DUT at a distance greater than one-tenth of the wavelength of the signal being

transmitted but not in the farfield region. See Figure 5 for the environment setup. Indirect far-field testing is typically performed using a reflector or lens to focus the electromagnetic waves emitted by the DUT onto a detector. This method can be less expensive and easier to set up than traditional far-field testing, but it may not be as accurate. CATR is typically used to measure the radiation pattern and gain of an antenna, as well as to evaluate the performance of beamforming algorithms.

Horn-antenna testing evaluates the radiation pattern of an antenna by measuring the field strength at the aperture of a horn antenna, as shown in Figure 6. This method is useful for testing arrays of antennas because the horn can individually illuminate each element of the array. Unfortunately, you need several horn antennas, resulting in increased cabling complexity. The main advantage of horn testing is that it allows for easy isolation of individual elements in an array. Horn testing may not, however, represent the antenna’s far-field radiation pattern as accurately as far-field or indirect far-field testing.

When testing the performance of AiP RF front-end systems, you must calibrate the test bed and measure gain loss and phase error to ensure that the beamforming performance is up to standard. Gain loss is the difference between the expected and actual gain of an RF system, and likewise, the phase error is the difference between the expected and actual phase of an RF signal. Calibrating and measuring these parameters is crucial to ensure that the beamforming performance of an AiP RF front-end system is consistent and reliable.
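The two definitions above translate directly into a pair of helper functions. This is a minimal sketch: the function names and the tolerance values in the pass/fail check are hypothetical, and the phase error is wrapped so that, for example, 350° versus 10° reads as 20° rather than 340°.

```python
def gain_loss_db(expected_gain_db: float, measured_gain_db: float) -> float:
    """Gain loss: expected gain minus measured gain, in dB."""
    return expected_gain_db - measured_gain_db


def phase_error_deg(expected_deg: float, measured_deg: float) -> float:
    """Phase error: measured minus expected phase, wrapped to [-180, 180) degrees."""
    return (measured_deg - expected_deg + 180.0) % 360.0 - 180.0


def channel_ok(expected_gain_db, measured_gain_db, expected_deg, measured_deg,
               max_gain_loss_db=1.0, max_phase_error_deg=5.0) -> bool:
    """Pass/fail against tolerances (the default limits are illustrative only)."""
    return (abs(gain_loss_db(expected_gain_db, measured_gain_db)) <= max_gain_loss_db
            and abs(phase_error_deg(expected_deg, measured_deg)) <= max_phase_error_deg)


loss = gain_loss_db(20.0, 18.5)             # 1.5 dB below the expected gain
error = phase_error_deg(350.0, 10.0)        # wraps around 360 degrees
passed = channel_ok(20.0, 19.6, 90.0, 93.0)
```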

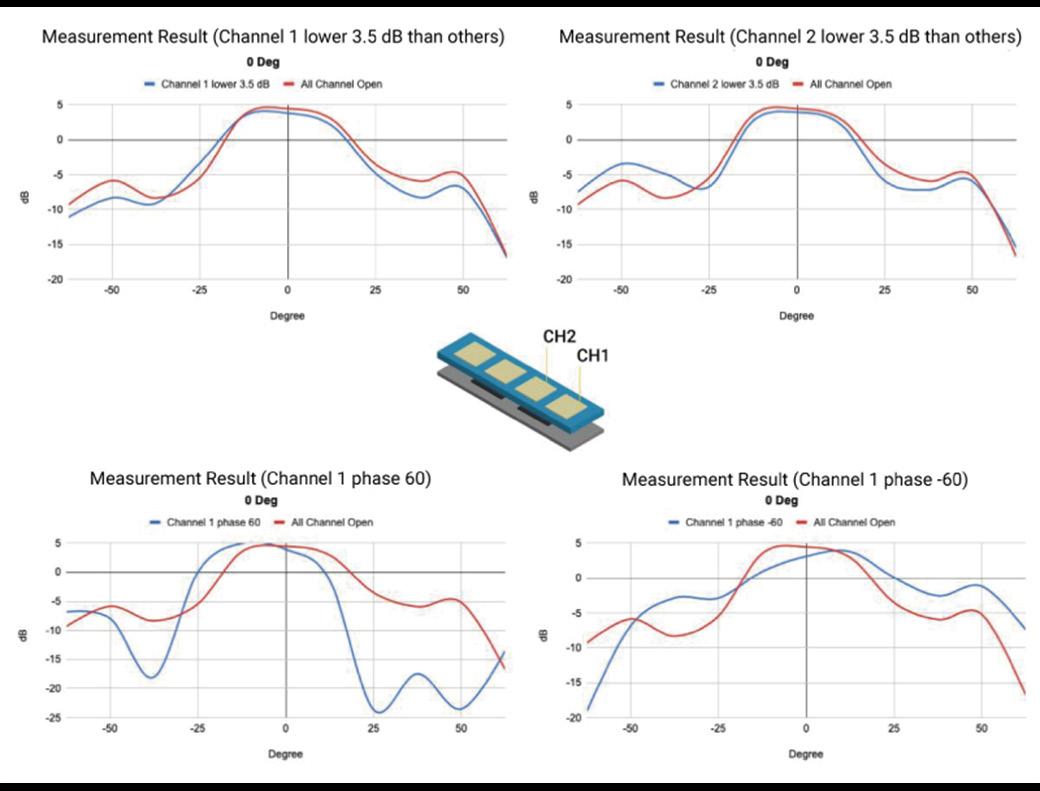

Figure 7 shows an experiment on a 1x4 AiP module in which the gain of individual channels is turned off or turned down and the phase difference between channels is adjusted. What if the test measures only the peak gain (gain at zero degrees)? The difference could fall within the test tolerance, so the defect could not be positively identified, which could result in extra troubleshooting effort. By instead comparing the beam pattern in red (all channels open, a good sample) with the one in blue (a defective sample), the differences are clear, making it easy to identify defects by the beam pattern.
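The argument for pattern-based testing can be reproduced numerically with an idealized model. The sketch below assumes a 1x4 array of isotropic elements at half-wavelength spacing and injects a hypothetical 45° phase fault on one channel; it is not the measurement in Figure 7, only an illustration of why a peak-only check can miss what a pattern comparison reveals.

```python
import cmath
import math


def pattern_db(weights, theta_deg: float, spacing_wavelengths: float = 0.5) -> float:
    """Normalized response (dB) of a linear array with complex element weights."""
    psi = 2.0 * math.pi * spacing_wavelengths * math.sin(math.radians(theta_deg))
    total = sum(w * cmath.exp(1j * n * psi) for n, w in enumerate(weights))
    return 20.0 * math.log10(max(abs(total) / len(weights), 1e-3))  # -60 dB floor


good = [1, 1, 1, 1]                                       # all four channels nominal
defect = [1, cmath.exp(1j * math.radians(45.0)), 1, 1]    # one channel 45 degrees off

# Peak-only view: compare the gain at boresight (zero degrees).
peak_delta_db = pattern_db(defect, 0.0) - pattern_db(good, 0.0)

# Pattern view: largest difference anywhere across the scan.
angles = range(-90, 91)
worst_delta_db = max(abs(pattern_db(defect, a) - pattern_db(good, a)) for a in angles)
```

In this model the peak gain changes by only about half a decibel, which could sit inside a test tolerance, while the nulls of the pattern fill in by tens of decibels.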

Table 1 compares industry standard measurement methods.

| Method | Pros | Cons | Summary |
|---|---|---|---|
| Far-field | • Reliable method for measuring the radiation pattern of an array antenna. • Most commonly used method for testing array antennas. • Allows for accurate gain and directivity measurements for the antenna. • 3D radiation pattern measurements. | • Requires a large distance between the antenna and test equipment, which can be challenging in certain test environments. • Not well suited for testing array antennas that are close to each other. • Slow measurement speeds due to mechanical rotator. • High CAPEX and OPEX. | Market proven measurement method for antenna gain and directivity, but requires larger testing space and is costly. |
| CATR (Compact Antenna Test Range) | • Allows for testing array antennas in a compact environment. • Eliminates the need for a large distance between the antenna and test equipment. • 3D radiation pattern measurement. | • Less reliable than direct far-field testing. • More complex to set up and requires more equipment. • Slow measurement speed due to mechanical rotator. • High CAPEX and OPEX. | Alternative measurement method with compact testing space, but slow measurement speed and is costly. |
| Horn antenna | • Reliable method for measuring the radiation gain of an array antenna. • Accurate gain and directivity measurements of the antenna when multiple horns are used. • Less expensive solution. | • Not well suited for testing array antennas that are close to each other. • Requires a large distance between the antenna and test equipment. • Only peak gain measurements. | Ultra cost-effective measurement method that is widely produced, but less testing coverage. |

In summary, existing chamber-rotator-based measurement solutions provide complete measurement parameters, but they take up significant space and are expensive. In addition, turntable and rotator pattern measurement mechanisms are slow to generate results. Furthermore, although horn testing requires minimal investment for production testing, it evaluates peak only, making it not ideal for identifying defects in phased-array antennas.

As the mmWave market grows and the demand for phased-array antenna simulation and measurement validation increases, there are various methodologies proposed from both academic research and industry in-house experimentation. Note that pattern-based measurements should be crucial to guarantee beamforming performance from a phased-array antenna.

Gain and phase are key factors in beamforming and beam steering. The only test case to guarantee phased-array antenna performance is a pattern-based measurement. That means the measurement system should contain multiple detecting probes to detect gain power at specific angles. But how to define resolution versus cost is a design topic based on usage needs.

Because peak gain is detected only by a single horn antenna, what if the system incorporates multiple horn antennas and is placed as the sector to detect the gain from different angles? It is thus possible to redraw the gain chart into a “pattern” map. The consequence of horn array methodologies is the beam pattern resolution corresponds to the quantity of horn detection sources. Higher pattern resolutions mean larger horn arrays, which increases cost. When there are many horn detectors in the same measurement system, calibrating each antenna for consistency is also challenging.

As an alternative to using horn antennas, a well-calibrated AiP system could be used as an electronic beam scanner, making beam-to-beam measurements to detect each beam angle from the DUT. The entire system would ideally be contained inside a chamber box to avoid interference (see Figure 8 for the system concept). Because software controls beam switching and the time delay when switching is mere microseconds, in theory, a full 3D-pattern measurement could be generated within 10 sec. This 3D beam-pattern measurement methodology is worth continued development and validation.
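A back-of-the-envelope estimate shows how a scan time of this order can arise. All numbers below are assumptions chosen for illustration (a ±60° scan in both axes at 1° steps, 10 µs to switch beams, 500 µs to take each reading); none of them come from the article or from a specific product.

```python
def scan_points(az_span_deg: float, el_span_deg: float, step_deg: float) -> int:
    """Number of beam positions in a rectangular azimuth/elevation grid."""
    az_points = round(az_span_deg / step_deg) + 1
    el_points = round(el_span_deg / step_deg) + 1
    return az_points * el_points


def scan_time_s(points: int, switch_time_s: float, dwell_time_s: float) -> float:
    """Total time if every position costs one beam switch plus one measurement."""
    return points * (switch_time_s + dwell_time_s)


points = scan_points(az_span_deg=120.0, el_span_deg=120.0, step_deg=1.0)  # 121 x 121 grid
total_s = scan_time_s(points, switch_time_s=10e-6, dwell_time_s=500e-6)
print(f"{points} beam positions, about {total_s:.1f} s")  # 14641 beam positions, about 7.5 s
```

Under these assumptions the measurement dwell, not the beam switching, dominates the total, so the achievable time depends mostly on how fast each reading can be taken.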

Measurement space correlates to the frequency and diameter of the antenna, and the only way to minimize space is with near-field testing. The problem: there is no radiation behavior associated with the near-field region, hence the essential need for NF-FF (near-field to far-field) transformations.
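The dependence of measurement space on frequency and antenna size can be made concrete with the commonly used Fraunhofer far-field criterion, R = 2D²/λ, where D is the largest antenna dimension and λ is the wavelength. The sketch below applies it to hypothetical aperture sizes; the function name and example values are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def far_field_distance_m(aperture_m: float, frequency_hz: float) -> float:
    """Fraunhofer distance 2*D^2/lambda for an aperture of largest dimension D."""
    wavelength_m = SPEED_OF_LIGHT_M_S / frequency_hz
    return 2.0 * aperture_m ** 2 / wavelength_m


small = far_field_distance_m(0.05, 28e9)   # 5 cm array at 28 GHz
large = far_field_distance_m(0.15, 28e9)   # 15 cm array at 28 GHz
print(f"{small:.2f} m")  # 0.47 m
```

Because the distance grows with the square of the aperture, tripling the array size in this example multiplies the required range by nine, which is what pushes larger arrays toward CATR or near-field methods.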

NF-FF calculations should be calibrated and defined for a specific test configuration and chamber environment. Several approaches to the transformation equations produce proven results, and ample research and experimentation is available for review online, so they will not be discussed here in depth.

To accelerate failure detection, you can use AI software to process large amounts of test data into a characteristic model for pattern recognition. You should analyze beam patterns in real-time to

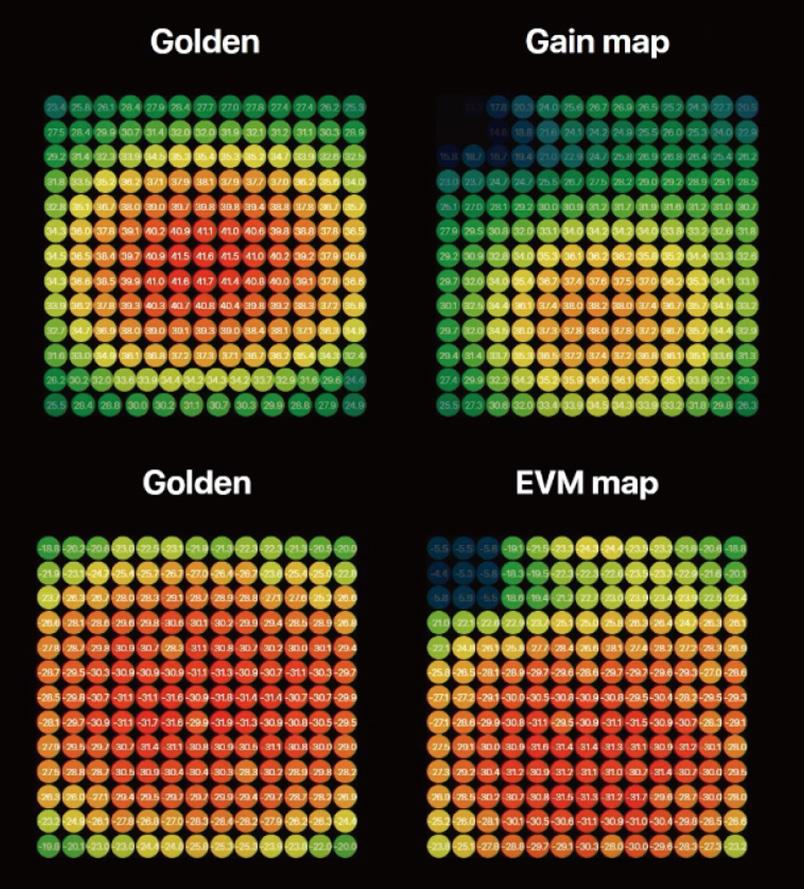

identify failures where defective components could be dispatched using conditional configurations. With such high-speed testing, you can collect measurement results quickly. Doing so lets you build a comprehensive database rich enough to develop AI modeling for advanced intelligent analysis, such as the characteristic comparison of gain and errorvector magnitude (EVM) maps in Figure 9. This approach lets you correlate production batches with characteristics of array elements to significantly improve production efficiency while providing valuable feedback to design engineering teams.

Applying outside-the-box thinking and combining all the above testing methodologies could create a useful OTA testing method that addresses testing speed as well as increases test coverage. As cost is always a key investment consideration, total cost of ownership (TCO) optimization could incorporate a simplified setup with electric scan and AI automation.

5G mmWave OTA testing is an essential process that plays a critical role in the development and deployment of 5G mmWave systems, which operate at frequencies above 24 GHz and offer higher data rates and lower latencies compared to lower frequency bands. OTA testing lets engineers evaluate the performance of mmWave systems under real-world conditions, including the effects of multipath, reflections, and penetration loss. The most important factors are beamforming performance, which is identified by the beam pattern through gain and phase measurements, and testing speed, which helps move mmWave products from production to commercialization.

A loose connection; a metal roof; power lines. Even a rusty bolt. It’s estimated that mobile operators will spend $1.1 trillion on capital expenditure between 2020 and 2025, much of it allocated to creating, improving, and maintaining their advanced networks. Yet a little corrosion can result in decreased data rates or dropped calls.

Passive intermodulation (PIM) interference is not a new problem for the mobile industry, but it is growing. PIM has become a pressing problem due to the rapid deployment of new technologies, the use of frequency bands located close to each other that are particularly susceptible to this issue, and the increasing number of subscribers. These factors have combined to create a challenging environment where PIM can cause significant disruption and degradation in the performance of wireless networks.

PIM is the generation of interfering signals caused by nonlinearities in a wireless network’s passive components. The interaction of mechanical components (such as loose cables, dirty or corroded connections, or metal-on-metal connections such as fasteners) can produce PIM. When two signals pass through these components, the signals can mix, creating intermodulation products that can fall directly into the uplink band.

Networks can transmit and receive 4G/5G signals without ever seeing an issue. In busy networks with multiple frequency bands transmitted, however, the chances of causing RF interference increase.

Mobile networks aim to make the best use of the frequencies they license, meaning this is a problem — especially at the crowded lower-band 450 MHz to 1 GHz and mid-spectrum 1 GHz to 6 GHz bands used by many operators for 5G services. Higher frequencies and less crowded networks may be less prone to PIM interference.

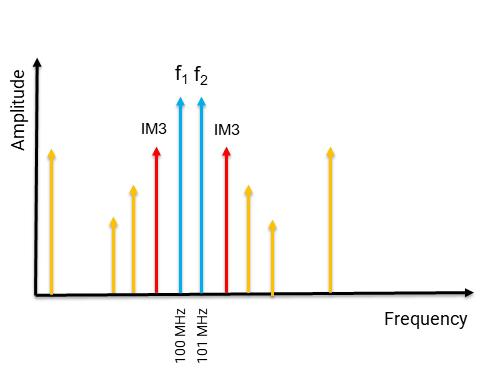

The third-order intermodulation product is often the strongest in PIM. It results from third-order nonlinear mixing of two input signals at frequencies f1 and f2, which generates products at 2f1 − f2 and 2f2 − f1 that lie close to the original carriers and can land in the receive band (see Figure 1 and Table 1). The result is an increase in the noise level affecting desired signals, leading to dropped calls and decreased capacity. To compensate, the power levels of the cellular site will increase so that the signal can be separated from the noise. This spike in power is a big flag to the network that a problem exists, but it also creates its own issues.
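Whether a pair of carriers can produce PIM in a receive band is a matter of simple arithmetic: the third-order intermodulation products of two carriers f1 and f2 fall at 2f1 − f2 and 2f2 − f1. The sketch below checks an illustrative pair at the edges of the 1930 MHz to 1990 MHz PCS downlink against the 1850 MHz to 1910 MHz uplink; the function names are hypothetical.

```python
def im3_products_mhz(f1_mhz: float, f2_mhz: float):
    """Third-order intermodulation products of two carriers."""
    return (2 * f1_mhz - f2_mhz, 2 * f2_mhz - f1_mhz)


def in_band(freq_mhz: float, low_mhz: float, high_mhz: float) -> bool:
    """True if the frequency lies inside the band, edges included."""
    return low_mhz <= freq_mhz <= high_mhz


UPLINK_MHZ = (1850.0, 1910.0)              # example receive band
products = im3_products_mhz(1930.0, 1990.0)
hits = [f for f in products if in_band(f, *UPLINK_MHZ)]
print(products, hits)  # (1870.0, 2050.0) [1870.0]
```

In this example the lower product lands at 1870 MHz, inside the uplink band, where it raises the noise floor seen by the receiver.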

PIM becomes more apparent when cell sites experience high levels of activity with numerous user devices connected to the network. As the site attempts to overcome the noise and interference within the network, it may need to increase power levels, which can further exacerbate PIM. An example of compensating for noise in a crowded environment is speaking louder so that the listener can hear your message. In situations where the noise level is high, we naturally adjust our vocal volume to ensure that our message is effectively communicated. Similarly, cell sites trying to overcome noise interference increase their power levels to compensate, which can affect nearby cell sites.

Knowing that an interference problem exists in a network can be straightforward. The previously mentioned increased power use is a big clue, and many networks have fault-detection software that can point to where interference exists. Unfortunately, identifying the sites where PIM interference exists and knowing what is causing the interference at these sites are very different challenges. The origins of PIM interference can be extremely difficult to pinpoint.

5G brought passive intermodulation problems into the spotlight. Now it’s up to engineers and technicians to identify and mitigate signal degradation to minimize dropped calls and other issues.

Danny Sleiman, EXFO

Figure 1. Intermodulation (IMD) creates distortion that degrades signal quality, causing PIM. The third-order product is usually the worst offender.

First, there are two types of PIM. Internal PIM is caused by the internal RF elements in the infrastructure such as loose connectors, damaged cables and connectors, and faulty elements in the antennas. These issues typically occur between the transmitter and the antenna, and the most common culprit is a damaged or faulty coaxial cable. External PIM is caused by objects located near cell sites. Examples include metallic objects (usually rusty) close to the antenna, metal roofs, or even digital billboards. Interference from either of these domains is difficult to identify.

Dealing with internal PIM can often require a specialist crew climbing a cell tower or accessing a rooftop to look for what might be causing the problem. Those situations entail expense and risk, especially carrying a bulky PIM analyzer. Even then, there’s no guarantee of identifying the problem the first time, so multiple truck rolls may be necessary to find and detect every potential source of PIM. While a corroded coaxial connector is the most likely suspect, this is not guaranteed; the entire process of finding and resolving an internal PIM issue can take weeks to complete. This is due in part to the required analysis, which can be a mostly manual effort rather than using automated technology.

External PIM can be even more difficult to locate. Technicians need to hunt down and pinpoint the source (or sources) causing the interference, and the solution may not be easily apparent. A spectrum analyzer can help pinpoint the source, but the process of interference hunting is highly manual and typically requires RF expertise. In extreme circumstances, the only solution may be to move the site.

Making this bad situation even worse, it’s not immediately obvious when the problem is external or when it is internal. Analysis can mean disconnecting the site from the network for assessment, which is far from ideal for an operator striving to keep customers connected. Technicians have a short window to try and resolve issues so that customers don’t suffer too much disruption.

The nature of PIM interference makes it a particular headache for operators, given that it’s common, hard to pin down, and resolving it can be expensive. Plus, an extended hunt for PIM interference can cause more problems than it solves. As engineers search for the problem and change connections and hardware, they can end up introducing elements that make the PIM interference worse. Fortunately, there are ways to mitigate these issues.

It may seem counterintuitive in a mobile network that diagnosing PIM issues can involve both fiber and over-the-air RF testing. In fact, by using RF spectrum analysis over the fiber or CPRI protocol, technicians can isolate whether the issue is internal or external PIM. To perform the RF spectrum analysis over the fiber (or CPRI), technicians attach an optical splitter near the baseband unit at the bottom of an antenna tower to diagnose the PIM issue. Performing this analysis from the ground avoids the time and effort of a technician scaling a tower to address interference.

A major benefit is that RF spectrum analysis over the fiber is a passive test application, so the baseband unit and remote radio head will continue to process calls normally, allowing technicians to analyze the uplink spectrum during normal site operation — and during busy periods when PIM is most active.

This type of analysis requires specialist equipment because it must be attuned to different network components, and network equipment from different or multiple vendors means that the encrypted signals along fiber are not simple to analyze. Processes are available to auto-detect vendors’ proprietary signals, which reduces the configuration effort by applying intelligence and automation.

One way to detect if a particular fastener or bolt is causing external PIM interference is to fix or replace it. This isn’t always ideal: if the problem remains, an engineer or technician has spent valuable time and resources repairing something that hasn’t solved the issue. One way around this is quite simple: throw a PIM blanket over the offending item (Figure 2). The blanket suppresses the RF signal, meaning the item no longer interferes with the site. This way the process of elimination is faster and repairs can be carried out on items that are actually worth fixing.

For situations where the internal or external PIM is not readily diagnosed at a particular site, over-the-air spectrum analyzers with interference hunting connected to a PIM probe or a directional antenna can enable anyone to hunt down the causes of ongoing PIM interference (Figure 3). Many solutions to PIM problems use multiple tools and are too complex to use without advanced expertise, but investing in intelligent equipment means field technicians can detect problems more quickly.

Given that PIM interference causes slower data rates and dropped calls, any customer making regular use of a site is likely to already be suffering from issues. Extended downtime while the problem is located will only make this worse, so ensuring quick resolution is important.

Wherever we have wireless technology, we have PIM because it’s impossible to eliminate entirely. Thus, it’s a consideration that operators need to take seriously. Networks are only going to become more crowded as new technologies increase data throughput and more cell sites are built to cope with demand.

Operators need both a proactive and a reactive approach. The reactive approach will always be necessary, of course. Many sites were built to support older technologies and were upgraded for LTE and now 5G. Once these upgrades were in place, problems with PIM became apparent. As PIM is often a result of infrastructure degradation, dealing with PIM is simply part of ongoing maintenance.

But there are steps that operators can take to prevent PIM issues. As metal-on-metal connections are often a big problem, sites can use plastic fasteners and connections so that these issues don’t arise; stainless steel might be durable, but that doesn’t matter if it’s causing interference. Operators can take other steps to prevent the corrosion of materials. Potential PIM sources can be identified and mitigated before they become an issue. All network planning, building, and maintenance needs to be carried out with PIM mitigation in mind, not just taking today’s technology into consideration but tomorrow’s as well. The shift to 5G revealed some big PIM issues, so what about 6G? Or even further? What about when new bands are made available? Completely future-proofing against PIM interference is impossible, but the negative impact of PIM can be lessened with the right approach.

By Darrin Gile, Microchip Technology

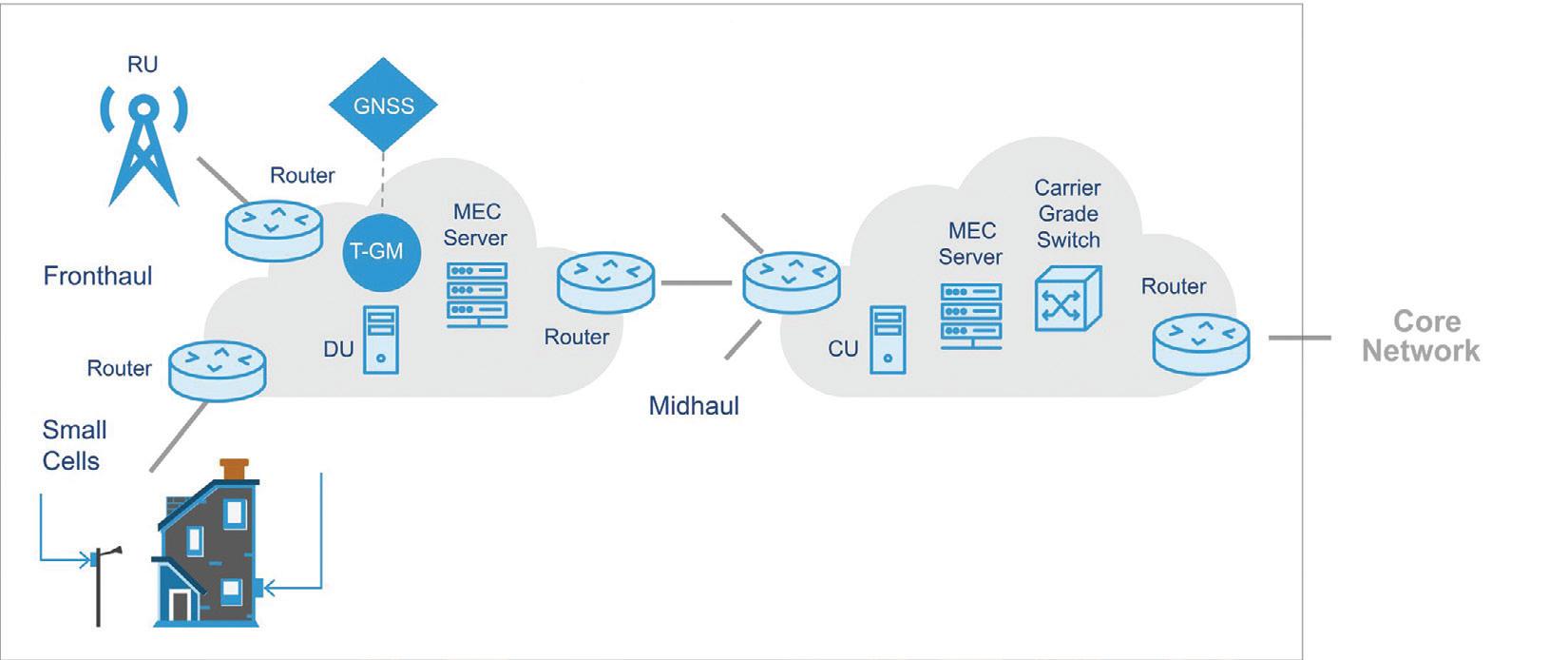

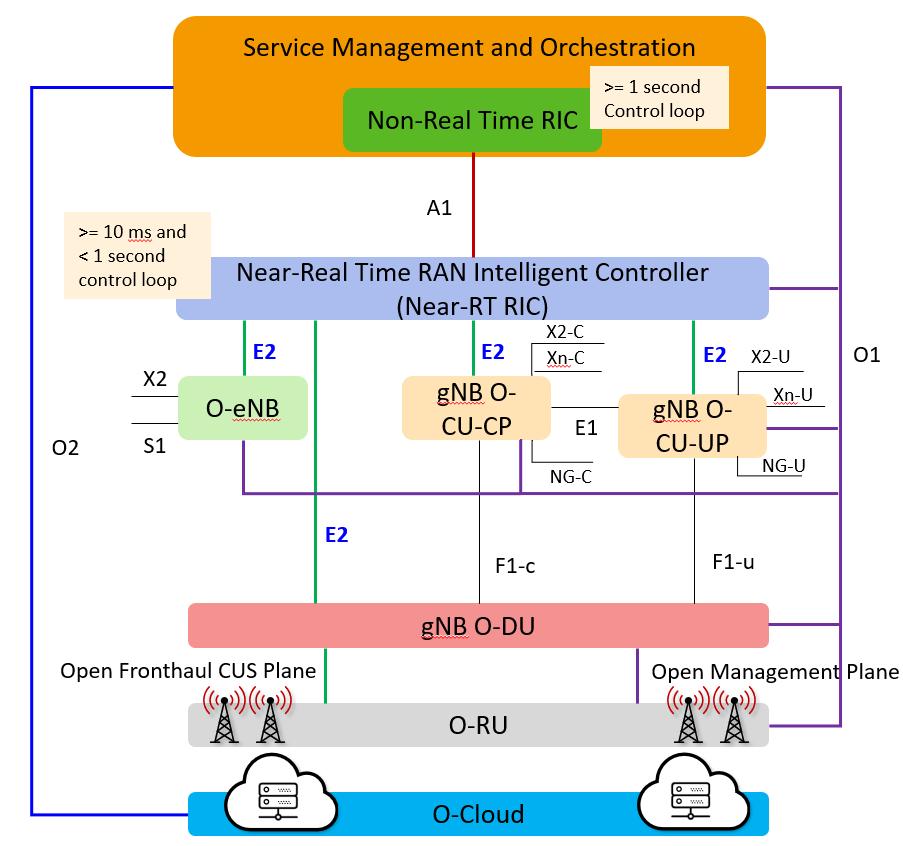

Network elements must meet certain frequency, phase, and time requirements to ensure proper end-to-end network operation. Synchronization architectures defined by the O-RAN alliance dictate how Open RAN equipment can meet these requirements.

Open RAN continues to attract interest from service providers looking to reduce cost, improve competition, and drive technology innovation. The desire for a disaggregated and virtual RAN architecture has introduced more flexibility, competition, and openness to 5G networks.

The O-RAN Alliance was formed in 2018 to standardize hardware and define open interfaces that ensure interoperability between vendor equipment. Protocols, architectures, and requirements for the control, user, and synchronization planes are defined in O-RAN.WG4.CUS.0-v10.00.

The synchronization plane (S-Plane) addresses network topologies and timing accuracy limits for the fronthaul network connection between the O-RAN radio unit (RU) and distributed unit (DU). The requirements for frequency, phase, and time synchronization follow the 3GPP recommendations and align with the ITU-T network and equipment limits. For time-division duplex (TDD) cellular networks, the base requirement is 3 µsec between base stations, or ±1.5 µsec (G.8271) between the end application and a common point. More stringent accuracy requirements exist for equipment used with advanced radio technologies such as coordinated multipoint or MIMO.

To meet these tighter network limits, equipment will need to meet the Class C (30 nsec) maximum absolute time error defined in G.8273.2.
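The relationship between the two TDD figures can be checked with a few lines of arithmetic. The sketch below assumes the 3 µsec relative limit is split evenly around a common reference, which is how the ±1.5 µsec value arises; the function names are illustrative and do not come from any specification.

```python
# Limits quoted in the text, in nanoseconds.
RELATIVE_LIMIT_NS = 3_000   # between two base stations
PER_SITE_LIMIT_NS = 1_500   # each site against the common reference (±1.5 µsec)


def relative_error_ns(te_a_ns: float, te_b_ns: float) -> float:
    """Time error between two sites, each measured against the same reference."""
    return abs(te_a_ns - te_b_ns)


def site_in_limit(te_ns: float) -> bool:
    """True if one site's time error stays within ±1.5 µsec of the reference."""
    return abs(te_ns) <= PER_SITE_LIMIT_NS


# Worst case: one site is 1.5 µsec early and the other is 1.5 µsec late.
worst = relative_error_ns(+1_500, -1_500)
print(worst, worst <= RELATIVE_LIMIT_NS)
```

Two sites that each sit at opposite edges of the per-site limit land exactly on the 3 µsec relative limit, so holding every site to ±1.5 µsec is sufficient to satisfy the base-station-to-base-station requirement.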

The S-Plane consists of four topologies for distributing timing through the fronthaul network (RU to DU). These configurations rely on a combination of time-based and frequency-based synchronization techniques. A primary reference time clock (PRTC or ePRTC) located in the network will provide a base time for each network element. The use of GNSS, precision time protocol (PTP), and a physical layer frequency source, most commonly Synchronous Ethernet (SyncE), ensures the RU reliably receives the frequency and, more importantly, the phase and time synchronization required to operate the network. Figures 1 and 2 show the four defined configurations for supporting network synchronization in the Open RAN fronthaul network.

Configuration LLS-C1

Synchronization for the first configuration occurs through a direct connection between the DU and RU. The DU will receive network time from a primary reference time clock/telecom grandmaster (PRTC/T-GM) that is either co-located with the DU or located further back in the network.

Configuration LLS-C2

For configuration LLS-C2, the DU still receives network time from a co-located PRTC or one further upstream in the network. Network time passes from the DU through additional switches that reside in the fronthaul network. For best performance, these switches should form a fully aware (G.8275.1) network where each node acts as a telecom boundary clock (T-BC). Partially aware networks, where one or more switches don’t participate in the filtering of PTP, are also allowed. Depending on the type of fronthaul network, the type and number of hops limit the network’s overall performance. For example, a fully aware network composed of Class C (30 nsec) T-BCs can support more hops than a fully aware network composed of Class B (70 nsec) T-BCs.
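The Class C versus Class B comparison can be illustrated with a rough hop count. This is a minimal sketch under two stated assumptions: every T-BC contributes its full class limit, and the errors add linearly. Real network planning treats constant and dynamic error components separately, so treat the result as a pessimistic trend indicator only. The 420 nsec chain budget is a hypothetical figure chosen for illustration.

```python
# Maximum absolute time error per T-BC, in nsec, as quoted in the text.
TBC_MAX_TE_NS = {"B": 70, "C": 30}


def max_hops(chain_budget_ns: int, tbc_class: str) -> int:
    """Largest number of T-BC hops whose summed worst-case error fits the budget.

    Assumes each hop contributes its full class limit and that errors add
    linearly, which is a pessimistic simplification.
    """
    return chain_budget_ns // TBC_MAX_TE_NS[tbc_class]


# Hypothetical budget for the switch chain; not taken from any standard.
CHAIN_BUDGET_NS = 420

for tbc_class in ("B", "C"):
    print(f"Class {tbc_class}: up to {max_hops(CHAIN_BUDGET_NS, tbc_class)} hops")
```

Under these assumptions the same budget accommodates more than twice as many Class C hops as Class B hops, which is the effect the paragraph describes.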

Configuration LLS-C3

For the third configuration, both the DU and RU will receive network time from a PRTC located in the fronthaul network.

As with LLS-C2, network time can propagate through the fronthaul network via fully-aware or partially-aware switches. In some cases, the DU may participate as a T-BC in passing time to the RU.

Configuration LLS-C4

The fourth configuration is the most preferred and easiest to implement, but potentially the costliest, of the four topologies. In this configuration, the RU gets time from GNSS as a pulse per second (PPS) clock or from a co-located PRTC/T-GM. The sheer number of 5G NR sites and the location requirements of the GNSS antenna can make this a costly or impractical configuration to deploy. GNSS at the radio sites may also be more susceptible to spoofing or jamming, which can disrupt proper operation.

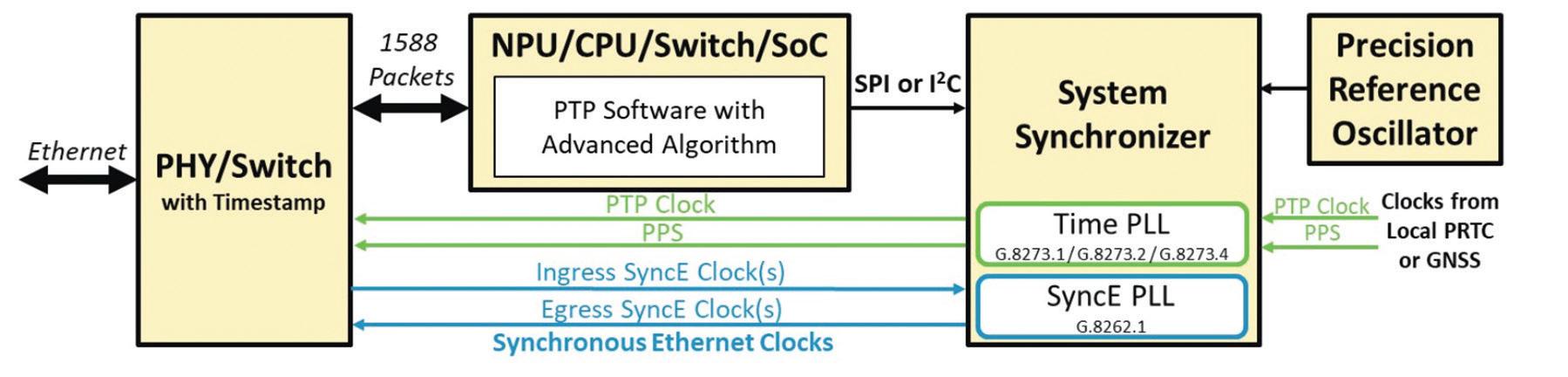

Like network deployments, the synchronization design of network equipment requires proper planning. To satisfy network synchronization limits, equipment will use a combination of timestampers, advanced phase-locked loops (PLLs), robust software for PTP support, and precision oscillators (Figure 3).

The first key piece of the design is the system synchronizer, which consists of several advanced PLLs. The synchronizer provides jitter and wander filtering for SyncE clocks, input reference-clock monitoring, hitless reference switching, and a numerically controlled oscillator for fine PPS/PTP clock control. The PLLs also provide bandwidths capable of locking directly to PPS clock sources.

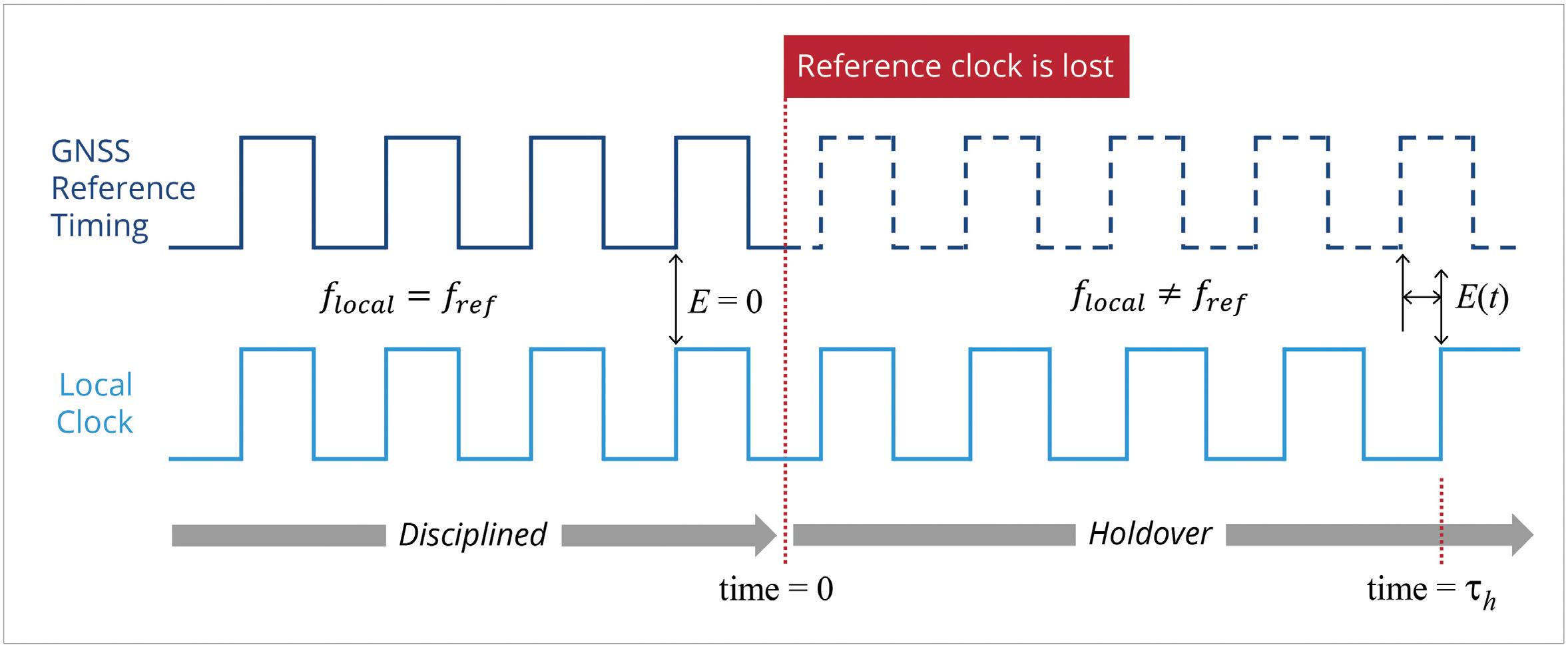

Accurate timestampers, PTP software, and an advanced algorithm will manage PTP traffic and provide the tuning calculations needed to accurately track T-GM phase and time. Finally, the precision oscillator is critical to ensure proper holdover and overall performance parameters.

These building blocks are the same for the DU, RU, and any switch participating in timing distribution. The actual implementation of the functional blocks may differ depending on the use case. For example, the precision oscillator may vary depending on the holdover requirements for each network element. A DU needs more stability and must support longer holdover times than an RU. Because of this, RU designs may be able to use higher-end temperature-compensated crystal oscillators (TCXOs) or mini oven-controlled crystal oscillators (OCXOs), while a DU may use a more expensive OCXO.
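A simple drift model shows why oscillator stability drives holdover time. The sketch below assumes a constant initial frequency offset plus a linear frequency drift, and it ignores temperature effects and noise. The oscillator figures are hypothetical values chosen only to show the trend, and the 1.5 µsec limit is used purely as an example target.

```python
import math


def holdover_error_ns(offset_ppb: float, drift_ppb_per_day: float, seconds: float) -> float:
    """Accumulated time error after running on the local oscillator alone.

    A constant offset of 1 ppb accumulates 1 nsec of error per second; a linear
    frequency drift adds a term that grows with the square of elapsed time.
    """
    drift_ppb_per_s = drift_ppb_per_day / 86_400
    return offset_ppb * seconds + 0.5 * drift_ppb_per_s * seconds ** 2


def holdover_seconds(limit_ns: float, offset_ppb: float, drift_ppb_per_day: float) -> float:
    """Time until the accumulated error reaches limit_ns (inputs non-negative)."""
    a = drift_ppb_per_day / 86_400
    if a == 0:
        return math.inf if offset_ppb == 0 else limit_ns / offset_ppb
    # Positive root of 0.5*a*t^2 + offset*t - limit = 0.
    return (-offset_ppb + math.sqrt(offset_ppb ** 2 + 2 * a * limit_ns)) / a


# Hypothetical oscillator figures, not taken from any datasheet.
for name, offset, drift in (("TCXO-like", 10.0, 50.0), ("OCXO-like", 0.5, 1.0)):
    t = holdover_seconds(1_500, offset, drift)
    print(f"{name}: about {t:.0f} s to reach 1.5 µsec")
```

With these illustrative numbers, the more stable oscillator holds the example limit for far longer, which is why an element that must ride through longer outages typically needs the better oscillator.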

You can employ any of several techniques to improve overall time accuracy within a piece of equipment. These techniques range from basic design items such as placing the timestamper as close to the edge of the equipment as possible, to more complex system calibration for phase management within the system. The use of SyncE and more specifically the Enhanced Synchronous Equipment Slave Clock (eEEC), as defined in G.8262.1, provides a stable frequency reference that greatly improves overall phase performance for hybrid configurations.

When using security protocols such as MACsec, take care to ensure that the encryption/decryption adds little or no delay to the timestamping function. Select the advanced algorithm and the precision oscillator so that their performance and stability meet the design’s requirements. For more complex designs, it is critical that all timing components involved in distributing the PPS clock minimize input-to-output delay variation and output-to-output skew to satisfy even the tightest equipment limits. Some synchronizers offer calibration functionality that provides fine phase-control measurements and adjustments. Phase error caused by temperature changes and aging of the precision oscillator can also be compensated. You can use some or all of these methods to ensure that equipment meets time accuracy limits.

Rural and private greenfield networks have been good initial launching points for deploying Open RAN. As more macro deployments come online, providing high-accuracy network synchronization will be critical for delivering the performance that ultra-low-latency applications and advanced radio technologies demand.

5G networks rely on the distribution of packets at high speed between the backhaul network and the air interface. Packets must travel through switches, routers, and network-processing units. Reliable packet distribution depends on highly accurate time signals that maintain precise synchronization of network equipment from end to end.

Clocks and oscillators throughout the 5G radio access network (RAN) propagate time signals among network equipment. According to the ITU-T’s recommendations for building a transport network, a timing signal can sustain a maximum error of just ±1.5 µsec in its journey between the backhaul and the radio.

Such a small margin imposes strict demands on the systems and oscillators that maintain stable and accurate timing. At certain points in the network, the strain on these components becomes acute because environmental factors weaken frequency stability. The choice of timing components is also, in some cases, constrained by the host equipment’s physical attributes.

This article offers guidance on choosing the right criteria for evaluating timing components for a 5G RAN, particularly in the critical locations where timing accuracy is most at risk of exceeding its error budget.

5G network technology came in response to market demands for faster downloads, stronger security, more data bandwidth, and connectivity to many more devices, user terminals and IoT nodes. The increasing application of artificial intelligence (AI) in mobile-networked devices, backed by the introduction of multi-access edge computing (MEC), also calls for the lower latency that 5G networks deliver compared to 4G.

Two important features of 5G arise from the use of higher frequencies: the mmWave bands and a shift from frequency-division duplex (FDD) to time-division duplex (TDD) operation. Both changes narrow the margin of error allowed in the timing signals synchronizing 5G payloads and network operations; specifications for jitter and frequency stability must be set much tighter than in the 4G world.

Equipment manufacturers and network operators have learned that timing components have newfound importance in their world; timing has become a fundamental enabler of the new features and capabilities that underpin 5G networks’ revenue models.

As Figure 1 shows, a packet passes through multiple nodes in a 5G RAN on its journey from the core network to the radio. Data gets routed through the core and RAN, passing through switches and routers. Mid-haul and front-haul networks can employ MEC servers to provide cloud-computing functions, such as AI, close to the end user. This minimizes latency, tightens security, and improves IoT device performance.

By Gary Giust, Deepak Tripathi, and Carl Arft, SiTime

5G base stations that employ Open RAN technology have a new structure composed of three functional units: the central unit (CU), distributed unit (DU), and radio unit (RU). The CU mainly implements non-real-time functions in the higher layers of the protocol stack and can attach to one or more DUs. The DU supports the lower layers of the protocol stack, including part of the physical layer. The RU includes hardware to convert radio signals into a digital stream for transmission over a packet network.

A notable innovation of 5G technology is the proliferation of small cells offering short-range, high-bandwidth connectivity at the network edge. A small cell may be a femtocell, picocell, or microcell with a range of 10 m, 200 m, or 2000 m, respectively.

5G’s high speeds place extreme demands on the components that maintain accurate time. Compliance with industry timing standards calls for accuracy in the face of temperature changes, shock, and vibration.

Figure 1. Equipment in a 5G RAN transports data from a radio unit or small cell to a core network.

Industry standards govern time

Synchronization requires a reference for time. In normal operation, this is derived from a Global Navigation Satellite System (GNSS) radio signal, which is traceable to ultra-accurate atomic clocks running in government laboratories. Distributed throughout the network, this master time signal provides a reliable basis for synchronization.

The methods used to synchronize timing signals that propagate through the network are governed by industry standards. These standards are typically applied to equipment to make it comply with the 3GPP’s 5G specifications:

• The total end-to-end time error must be less than ±1.5 μs.

• The frequency error at the base-station air interface must be less than ±50 ppb.

• At the Ethernet physical layer, which supports packet transfer in the backhaul and mid-haul networks, Synchronous Ethernet (SyncE) provides frequency synchronization in compliance with the ITU-T G.8262 and G.8262.1 specifications.
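To put the first two limits in concrete terms, the sketch below converts a parts-per-billion limit into hertz at a given carrier and checks a pair of measurements against the ±1.5 μs and ±50 ppb figures listed above. The 3.5 GHz carrier and the measured values are hypothetical examples, and the function names are illustrative.

```python
TIME_ERROR_LIMIT_NS = 1_500   # ±1.5 µs end to end, from the list above
FREQ_ERROR_LIMIT_PPB = 50     # ±50 ppb at the air interface, from the list above


def ppb_to_hz(carrier_hz: float, ppb: float) -> float:
    """Absolute frequency offset that corresponds to a ppb figure at a carrier."""
    return carrier_hz * ppb * 1e-9


def frequency_error_ppb(measured_hz: float, nominal_hz: float) -> float:
    """Fractional frequency error of a measurement, expressed in ppb."""
    return (measured_hz - nominal_hz) / nominal_hz * 1e9


def meets_limits(time_error_ns: float, freq_error_ppb: float) -> bool:
    """True if both the time error and the frequency error are inside the limits."""
    return (abs(time_error_ns) < TIME_ERROR_LIMIT_NS
            and abs(freq_error_ppb) < FREQ_ERROR_LIMIT_PPB)


carrier = 3.5e9  # hypothetical carrier frequency in Hz
print(f"±50 ppb at 3.5 GHz is about ±{ppb_to_hz(carrier, 50):.0f} Hz")

error_ppb = frequency_error_ppb(carrier + 100, carrier)  # carrier measured 100 Hz high
print(f"{error_ppb:.1f} ppb, compliant: {meets_limits(900, error_ppb)}")
```

At this example carrier, the 50 ppb limit leaves only a few hundred hertz of allowable offset.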

Synchronization lets the equipment in a 5G network time-stamp individual packets and lets downstream equipment extract reliable time measures from these time stamps. The IEEE 1588 Precision Time Protocol (PTP) provides a standard protocol for time-stamping data in a computer network.
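The standard PTP delay request-response exchange yields four time stamps, from which the downstream clock estimates its offset and the mean path delay. The sketch below shows that arithmetic with hypothetical nanosecond values. It relies on the usual PTP assumption that the forward and reverse path delays are equal.

```python
def ptp_offset_and_delay(t1: int, t2: int, t3: int, t4: int) -> tuple[float, float]:
    """Estimate clock offset and mean path delay from four PTP time stamps.

    t1: upstream clock sends Sync          (upstream time)
    t2: downstream clock receives Sync     (downstream time)
    t3: downstream clock sends Delay_Req   (downstream time)
    t4: upstream clock receives Delay_Req  (upstream time)
    """
    forward = t2 - t1   # path delay plus offset
    reverse = t4 - t3   # path delay minus offset
    offset = (forward - reverse) / 2
    mean_path_delay = (forward + reverse) / 2
    return offset, mean_path_delay


# Hypothetical case: downstream clock is 500 ns ahead, 1000 ns delay each way.
print(ptp_offset_and_delay(0, 1_500, 2_000, 2_500))

# Same clocks, but 1100 ns forward and 900 ns back: the estimate is off by
# half the asymmetry, because the protocol cannot see unequal path delays.
print(ptp_offset_and_delay(0, 1_600, 2_000, 2_400))
```

In the symmetric case the function recovers the true 500 ns offset. In the asymmetric case it reports 600 ns, an error equal to half the 200 ns difference between the two directions, which is why delay asymmetry matters so much to time accuracy.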

To minimize time error, SyncE may be used to synchronize frequency between items of Ethernet networking equipment and, in combination with PTP, to synchronize the network to the GNSS time signal. For mainstream 5G RAN systems, a combination of PTP and SyncE offers the most accurate way to implement time synchronization. This timing setup, for example, lets equipment operating in ITU-T Class D mode keep time errors to under ±5 ns.

These systems normally depend on the unbroken availability of a GNSS signal to provide a reference time signal. Unfortunately, a CU or DU cannot always get access to upstream GNSS timing. In this case, they must rely on a local oscillator within a telecom grandmaster (T-GM) to maintain timing downstream for proper PTP operation.

When SyncE synchronizes to a reference time signal, timing is handed on from one node to the next. Each piece of network equipment recovers a clock signal from the data passing through it. It cleans the jitter from this recovered clock, then uses this clean clock signal to time data back out onto the line.

This operation repeats down the line, from where the time signal is directly derived from the GNSS clock all the way downstream to the network edge. This recover, clean, and retransmit process ensures that downstream nodes are frequency synchronized to upstream nodes.

Such frequency synchronization uses jitter attenuators to clean the recovered clock signal. They feature a low-bandwidth phase-locked loop (PLL) operating between 1 mHz and 10 Hz to filter jitter and wander.
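The filtering effect of a low-bandwidth PLL can be approximated with a first-order low-pass response. This is a simplification, since real jitter attenuators are higher-order loops and may show some peaking, but it illustrates how the loop bandwidth sets which jitter and wander frequencies pass through. The 100 Hz jitter tone is a hypothetical input.

```python
import math


def jitter_gain(jitter_hz: float, loop_bandwidth_hz: float) -> float:
    """Fraction of input jitter that reaches the output (first-order model)."""
    return 1.0 / math.sqrt(1.0 + (jitter_hz / loop_bandwidth_hz) ** 2)


def jitter_gain_db(jitter_hz: float, loop_bandwidth_hz: float) -> float:
    """Same transfer expressed in decibels; negative values mean attenuation."""
    return 20.0 * math.log10(jitter_gain(jitter_hz, loop_bandwidth_hz))


# Loop bandwidths at the two ends of the range quoted in the text.
for bandwidth_hz in (0.001, 10.0):
    gain_db = jitter_gain_db(100.0, bandwidth_hz)  # hypothetical 100 Hz jitter tone
    print(f"{bandwidth_hz} Hz loop: {gain_db:.1f} dB at 100 Hz")
```

In this model, narrowing the loop bandwidth attenuates the same jitter tone far more strongly. A narrower loop, however, also follows its input more slowly and so leans more heavily on the stability of the local oscillator.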

This attenuator also benefits from a local oscillator, providing redundancy and fault tolerance using input monitoring and hitless switching. The role of the local oscillator is to maintain an accurate time signal for a limited period if the upstream connection is temporarily lost. This holdover function is particularly important for routers, CUs, and DUs to keep the downstream network running.