ROBOTICS HANDBOOK

Lights-Out Manufacturing

Tools for Future Growth

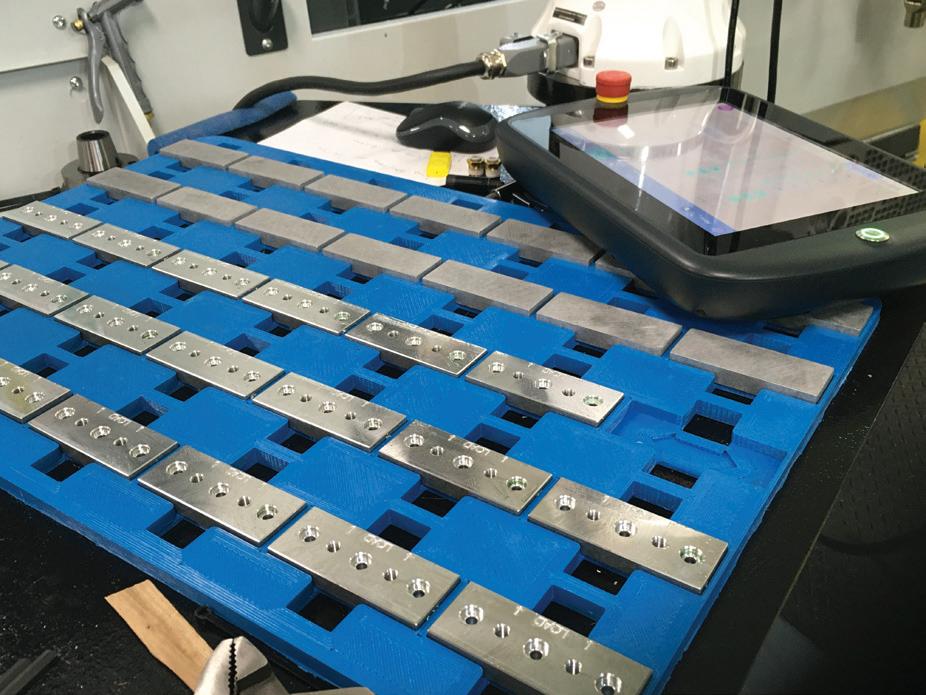

The Cobot Feeder Manufacturing Automation WorkSeries 300

Large Format 3D Printing

The Cobot Feeder is built to continuously feed parts to a cobot. This machine solves cobot downtime. Without some sort of automated loading and unloading system, cobots will inevitably sit idle, falling far short of their potential.

Traditional desktop 3D printers are fine for prototyping parts but can be costly for volume production. The WorkSeries 300 (1000 x 1000 x 700 mm build area) can produce near-net-shape parts and offers greater customization, resulting in lower costs and reduced time-to-market for industrial manufacturers.

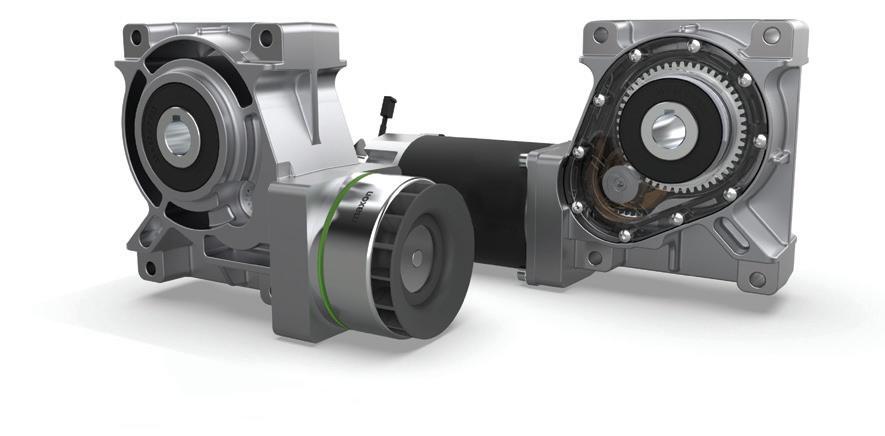

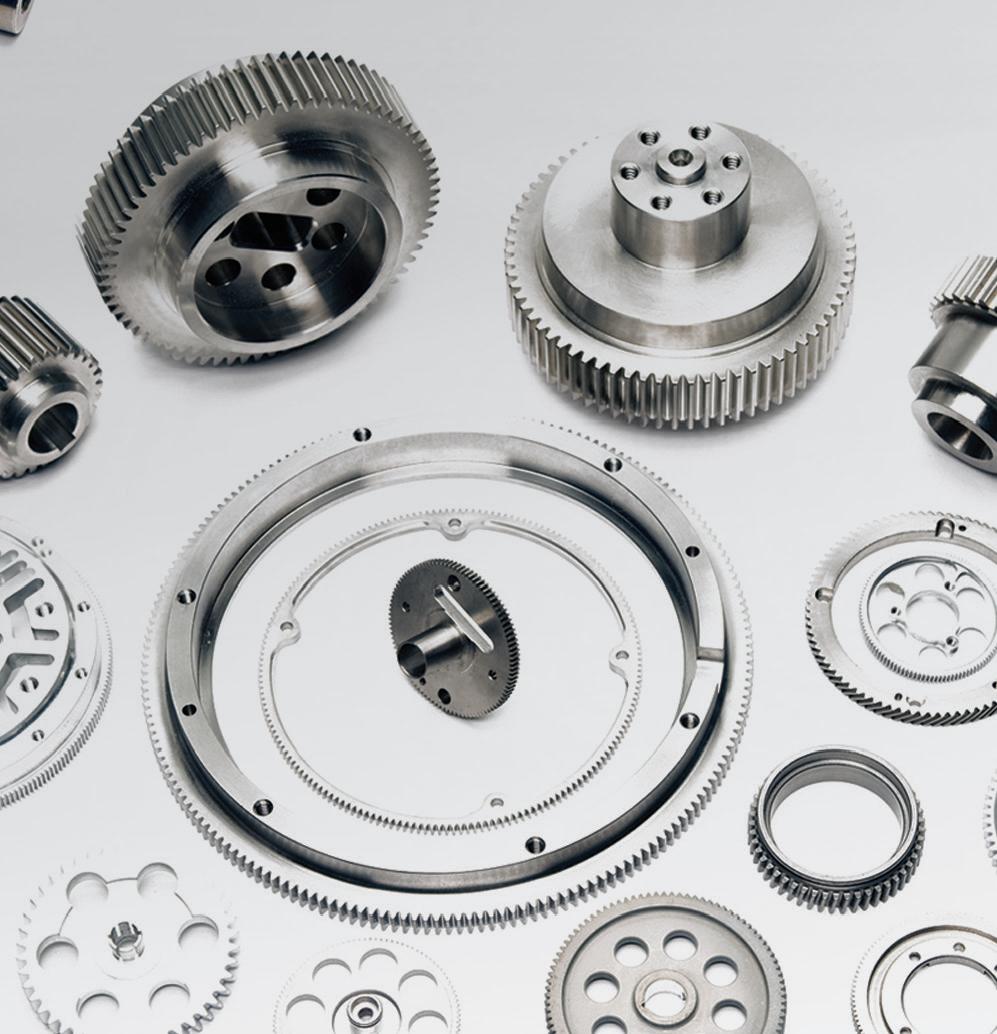

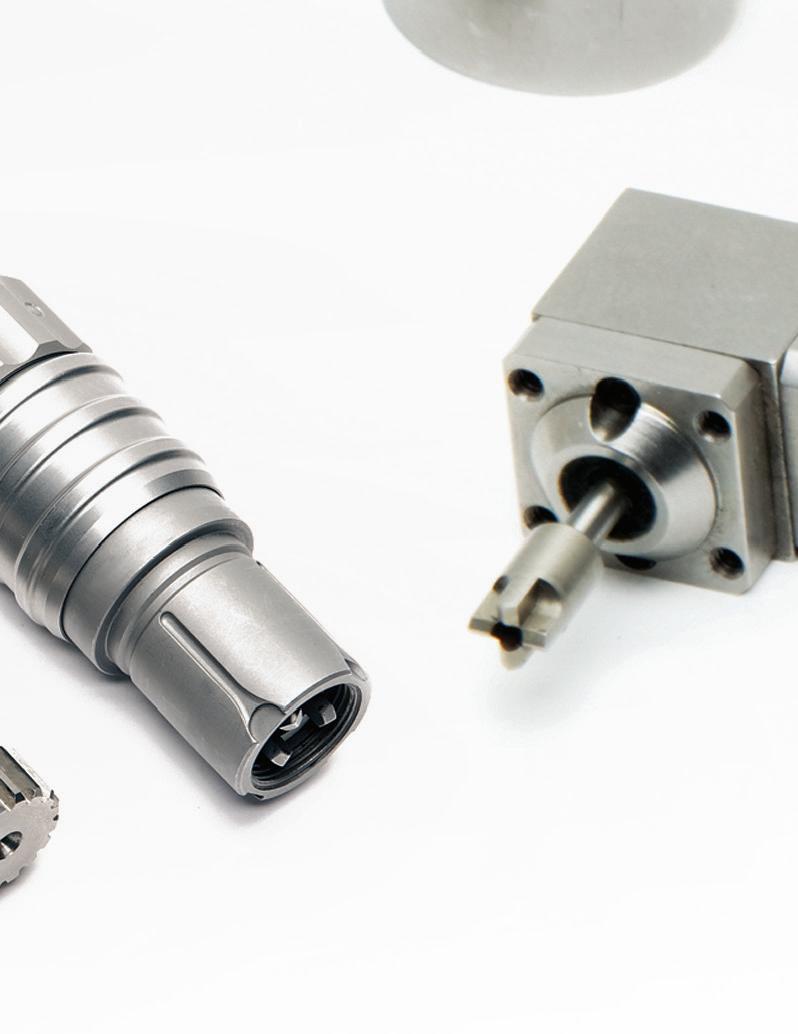

Ultra-Lightweight, Compact Gear Units

Introducing a new series of ultra-lightweight gear units featuring a newly engineered lightweight structure with an ultra-compact shape. Ideal for use on end of arm axes for small industrial and collaborative robots, the CSF-ULW is also well suited for general industrial machinery where weight is a critical factor. The two smallest sizes, 8 and 11, are available today. Other sizes coming soon!

• Zero Backlash

• High Accuracy

• Ultra Lightweight

• Ultra Compact

• Reduction Ratios 30:1~100:1

• Super Flat Configuration, 19mm (size 8); 21.5mm (size 11)

• Outer Diameter: φ 42.5mm (size 8); φ 50.5mm (size 11)

• Weight: 90g (size 8), 150g (size 11)

on the cover

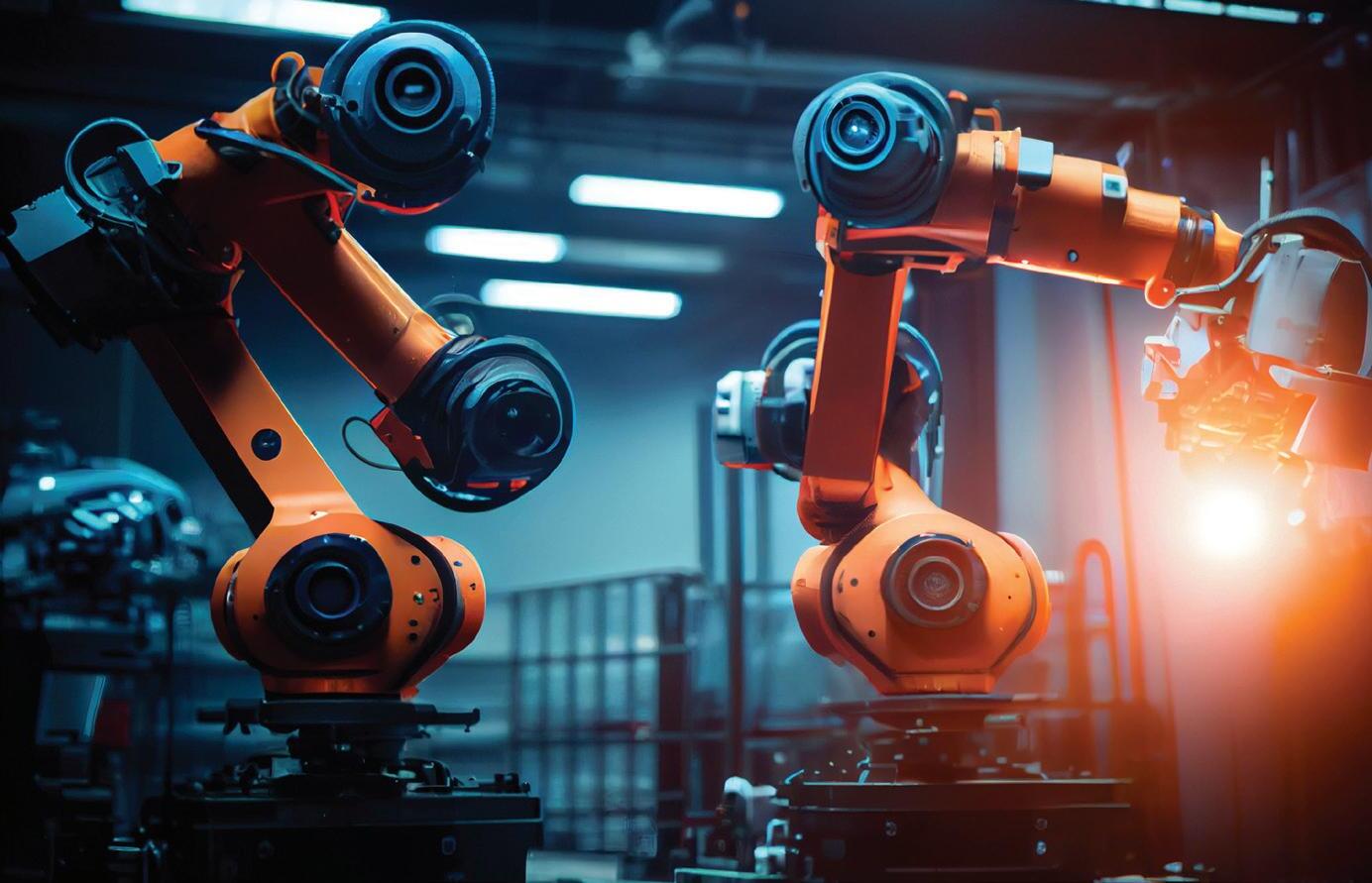

HUMANOID ROBOTICS DEVELOPERS MUST PICK WHICH PROBLEMS TO SOLVE

KAWASAKI WORKS WITH PARTNERS ON CL SERIES

COBOT APPLICATIONS

Kawasaki Robotics has focused on making its collaborative robots easy to program for applications such as arc welding.

PLUS ONE INTRODUCES DUAL ARMED INDUCTONE; PITNEY BOWES AUTOMATES PARCEL INDUCTION

InductOne builds on Plus One Robotics’ relationships with customers such as Pitney Bowes to increase picking throughput.

HUMANOID ROBOTICS DEVELOPERS MUST PICK WHICH PROBLEMS TO SOLVE

NVIDIA, which provides tools to accelerate design, simulation, and testing of humanoids, explains what we should expect from developers and AI.

The DeepMind team said that for robots to be more useful, they need to get better at making contact with objects in dynamic environments.

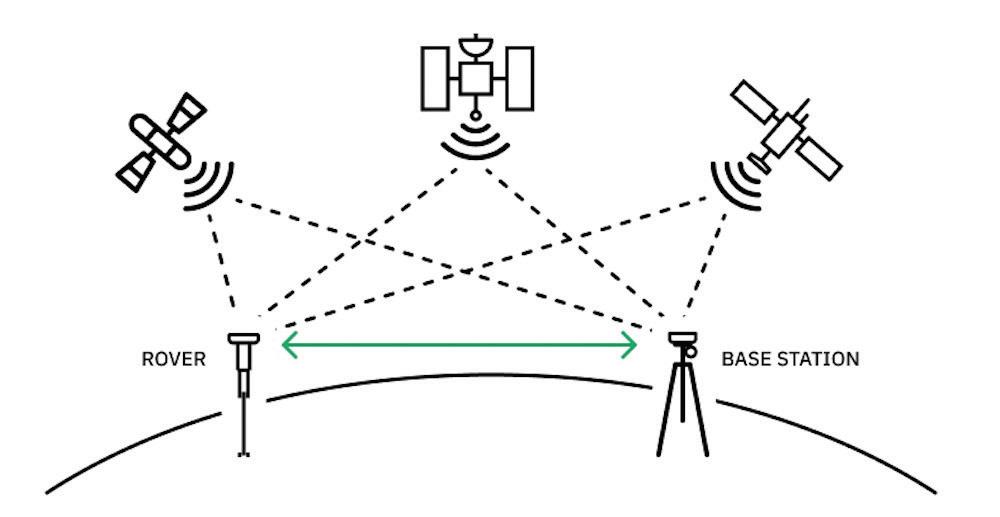

NAVIGATING POSITIONING TECHNOLOGY FOR OUTDOOR MOBILE ROBOTS

Absolute and relative positioning, as well as the data from multiple sensors, are important for a robot’s localization and navigation.

YOUR CUSTOM SOLUTIONS ARE CGI STANDARD PRODUCTS

Advanced Products for Robotics and Automation

CGI Motion standard products are designed with customization in mind. Our team of experts will work with you on selecting the optimal base product and craft a unique solution to help differentiate your product or application. So when you think customization, think standard CGI assemblies.

Connect with us today to explore what CGI Motion can do for you.

DESIGN WORLD

FOLLOW THE WHOLE TEAM @DESIGNWORLD

EDITORIAL

VP, Editorial Director Paul J. Heney pheney@wtwhmedia.com @wtwh_paulheney

Editor-in-Chief Rachael Pasini rpasini@wtwhmedia.com @WTWH_Rachael

Director, Audience Development Bruce Sprague bsprague@wtwhmedia.com

WEB DEV / DIGITAL OPERATIONS

Web Development Manager B. David Miyares dmiyares@wtwhmedia.com @wtwh_webdave

FINANCE

Controller Brian Korsberg bkorsberg@wtwhmedia.com

Accounts Receivable Specialist Jamila Milton jmilton@wtwhmedia.com

VIDEO SERVICES

DESIGN GUIDES

Key technology content produced by the editors of Design World

Available for FREE download in individual PDFs.

Find these technical design guides on the Design World Design Guide Digital Library: www.designworldonline.com/design-guide-library

EDITORIAL

Executive Editor Steve Crowe scrowe@wtwhmedia.com @SteveCrowe

Editorial Director Eugene Demaitre edemaitre@wtwhmedia.com @GeneD5

Senior Editor Mike Oitzman moitzman@wtwhmedia.com @MikeOitzman

Associate Editor Brianna Wessling bwessling@wtwhmedia.com

Managing Editor Mike Santora msantora@wtwhmedia.com @dw_mikesantora

Executive Editor Lisa Eitel leitel@wtwhmedia.com @dw_lisaeitel

Senior Editor Miles Budimir mbudimir@wtwhmedia.com @dw_motion

Senior Editor Mary Gannon mgannon@wtwhmedia.com @dw_marygannon

Associate Editor Heather Hall hhall@wtwhmedia.com @wtwh_heathhall

CREATIVE SERVICES

VP, Creative Director Matthew Claney mclaney@wtwhmedia.com @wtwh_designer

Art Director Allison Washko awashko@wtwhmedia.com

WTWH Media, LLC 1111 Superior Ave. 26th Floor Cleveland, OH 44114 Ph: 888.543.2447

Senior Digital Media Manager Patrick Curran pcurran@wtwhmedia.com @wtwhseopatrick

Front End Developer Melissa Annand mannand@wtwhmedia.com

Software Engineer David Bozentka dbozentka@wtwhmedia.com

DIGITAL MARKETING

VP, Digital Marketing Virginia Goulding vgoulding@wtwhmedia.com @wtwh_virginia

Digital Marketing Manager Taylor Meade tmeade@wtwhmedia.com @WTWH_Taylor

Digital Marketing Coordinator Meagan Konvalin mkonvalin@wtwhmedia.com

Webinar Coordinator Emira Wininger ewininger@wtwhmedia.com

Webinar Coordinator Dan Santarelli dsantarelli@wtwhmedia.com

EVENTS

Events Manager Jen Osborne josborne@wtwhmedia.com @wtwh_jen

Events Manager Brittany Belko bbelko@wtwhmedia.com

Event Coordinator Alexis Ferenczy aferenczy@wtwhmedia.com

Videographer Cole Kistler cole@wtwhmedia.com

PRODUCTION SERVICES

Customer Service Manager Stephanie Hulett shulett@wtwhmedia.com

Customer Service Representative Tracy Powers tpowers@wtwhmedia.com

Customer Service Representative JoAnn Martin jmartin@wtwhmedia.com

Customer Service Representative Renee Massey-Linston renee@wtwhmedia.com

Customer Service Representative Trinidy Longgood tlonggood@wtwhmedia.com

Digital Production Manager Reggie Hall rhall@wtwhmedia.com

Digital Production Specialist Nicole Johnson njohnson@wtwhmedia.com

Digital Design Manager Samantha King sking@wtwhmedia.com

Marketing Graphic Designer Hannah Bragg hbragg@wtwhmedia.com

Digital Production Specialist Elise Ondak eondak@wtwhmedia.com

HUMAN RESOURCES

Vice President of Human Resources Edith Tarter etarter@wtwhmedia.com

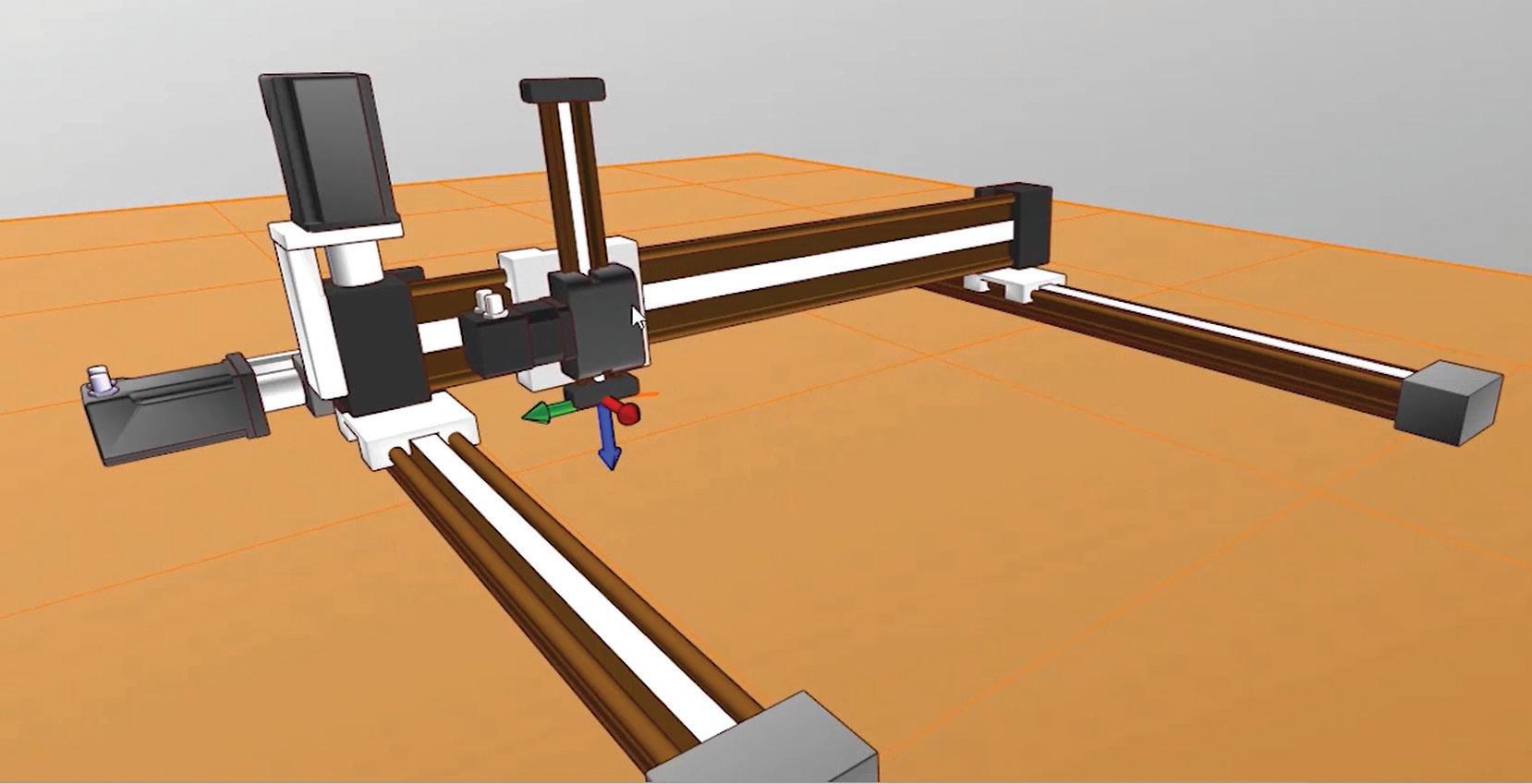

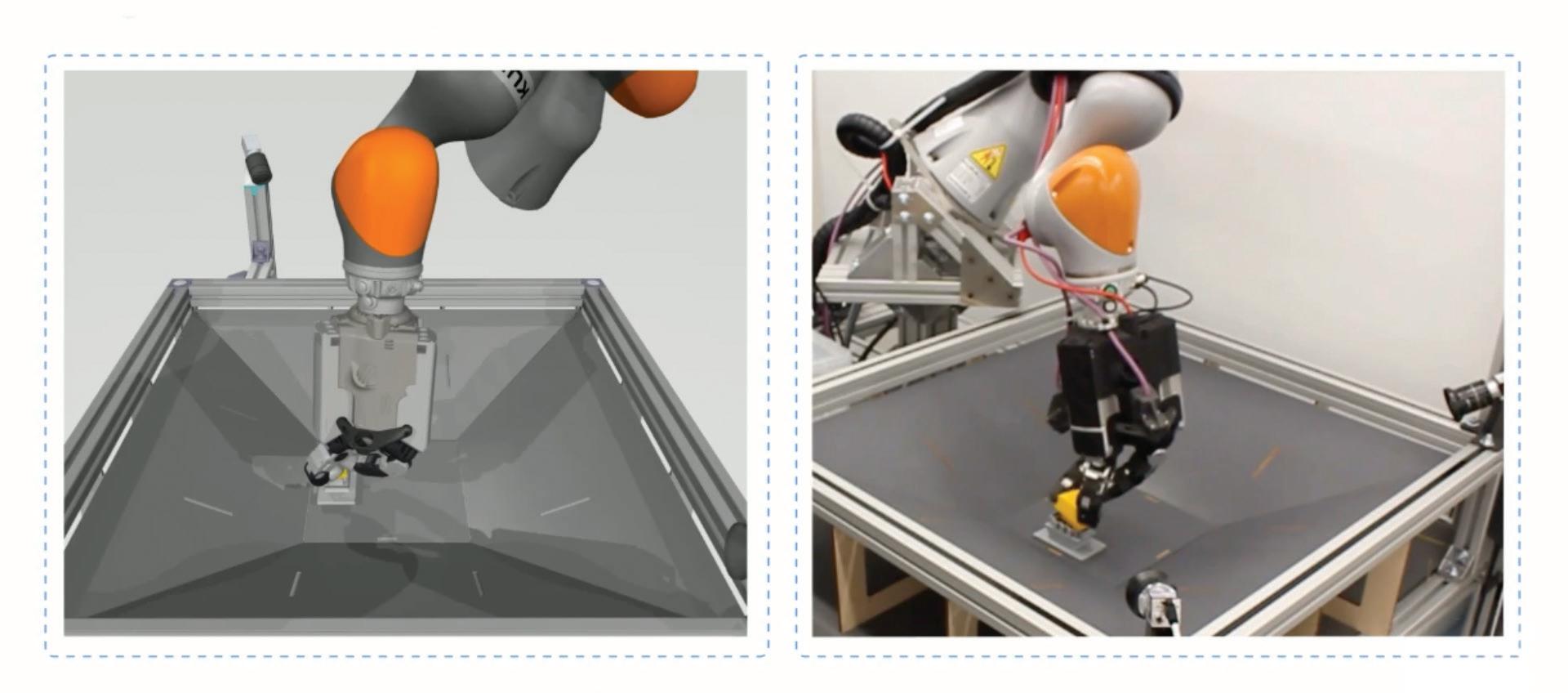

How to Use Digital Twins and Virtual Reality to Explore and Deploy Robotic Solutions Rapidly

Robots and collaborative robots (cobots) are on the leading edge of factory automation technologies. Digital twins and virtual reality (VR) are on the leading edge of design and development tools. Combined, they can be leveraged to create an industrial metaverse that delivers higher productivity faster, even for small- to medium-sized enterprises (SMEs).

Designers at SMEs can benefit from a simple and intuitive interface that combines a digital twin (a highly detailed virtual model of a physical object such as a delta, linear, or multi-axis robot) with a three-dimensional (3D) VR environment to enable direct execution and checking of the robot’s movement sequences.

Using these features supports fine-tuning and optimization of the automation system even without any physical hardware and enables rapid exploration of multiple solution possibilities.

This article first reviews the distinction between a mathematical, data-described digital twin and a visual digital twin (VR twin) and how both are needed to create the industrial metaverse. It then presents a robot control system and related software from igus that can be used to simulate a robot on a 3D interface (visual digital twin) without using any physical hardware, along with compatible delta, linear, and multi-axis robots that can be used to realize the optimized solution.

Digital twins and VR are complementary technologies using different visualization forms, interactions, and hardware. Digital twins are data-based models of physical objects, systems, or processes. They are designed to be used over the entire lifecycle of the item being modeled from initial conception to decommissioning and recycling.

VR is a related technology that also uses digital models. In a VR environment, it’s possible to simulate the relationships and interactions between objects, like a robot performing a task. So, while both technologies can be used for design and simulation, digital twin technology is focused on overall lifecycle considerations, and VR focuses on interactions between physical objects.

A metaverse combines digital twins and VR into a purpose-built virtual environment that supports real-time interactions between the digital objects and people. It’s often associated with gaming but is increasingly applied to business and industrial activities.

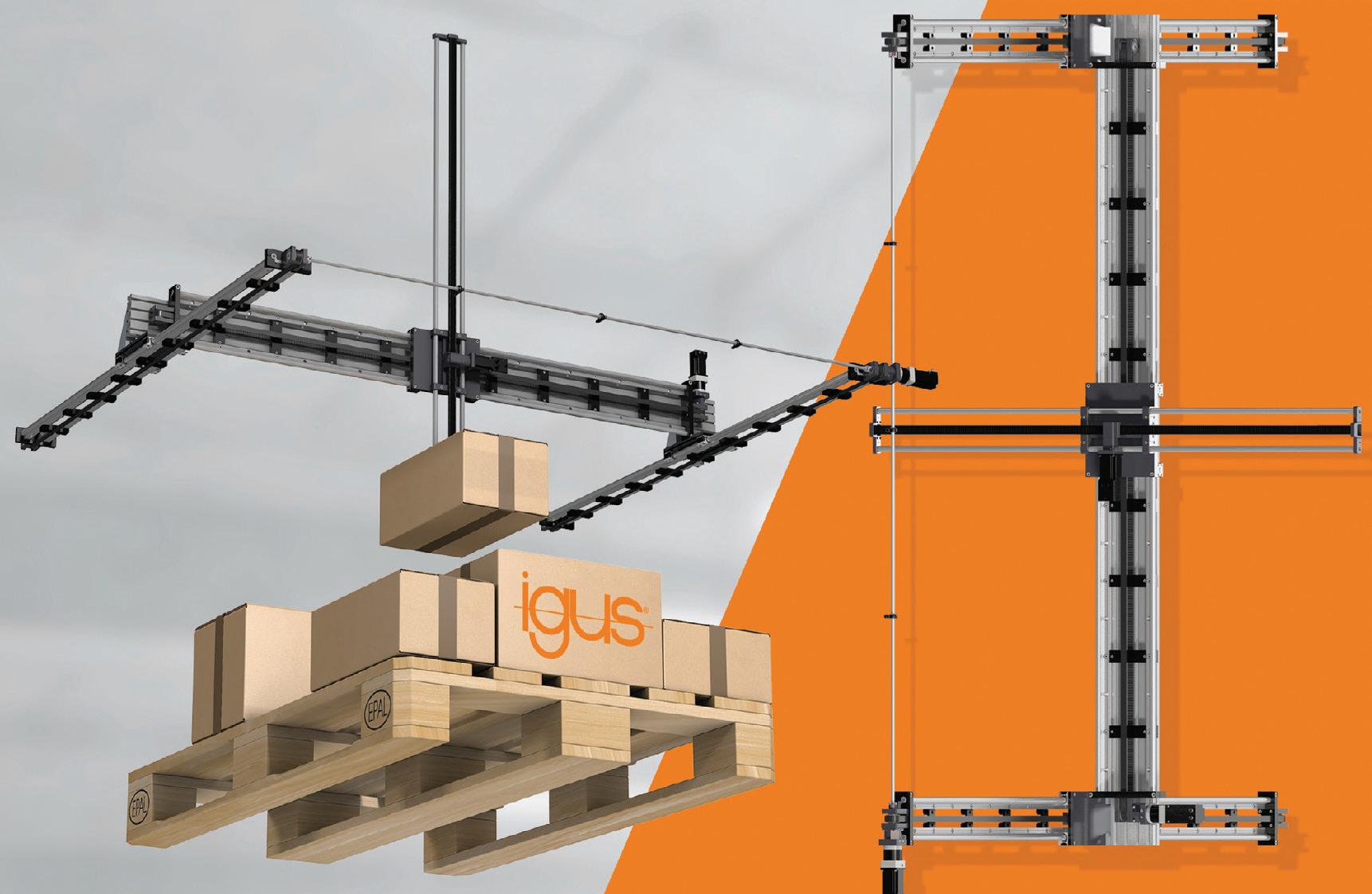

Figure 1: Example of a 3D VR digital twin of a three-axis gantry robot in the iguverse. (Image source: igus)

Welcome to the iguverse

Igus has developed the iguverse metaverse to support engineering interactions in industrial environments, such as developing and deploying robotic systems. The iguverse can be implemented through igus Robot Control (iRC) software. This free and license-free application enables users to control various types of robots, including delta robots, cobots (robot arms), and gantry robots.

It provides users with a 3D interface and over 100 sample programs. System requirements to implement iRC include a PC (minimum of an Intel i5 CPU) running 64-bit Windows 10 or 11, 500 MB of free disk space, and Ethernet or wireless networking connectivity.

The software's core is a 3D digital twin of the robot being programmed. An example of this is a three-axis linear gantry robot like model DLE-RG-0001-AC-500-500-100 with a workspace of 500 x 500 x 100 mm or a two-axis xy actuator like model DLE-LG-0012-AC-800-500 with an 800 x 500 mm workspace (Figure 1). Designers can define movements with a few mouse clicks and use the 3D model to ensure the required movements are feasible, even before purchasing the robot.
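The feasibility check described above can be illustrated with a minimal sketch. It assumes each workspace is an axis-aligned box measured in millimetres from one corner of the travel range. The `Workspace` class and `unreachable_points` helper are hypothetical names and are not part of the iRC software. The target points are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workspace:
    """Box-shaped working space in mm (assumption: origin at one corner of travel)."""
    x_mm: float
    y_mm: float
    z_mm: float = 0.0  # 0 for two-axis units with no Z travel

    def contains(self, x: float, y: float, z: float = 0.0) -> bool:
        """True if the point lies inside the travel range on every axis."""
        return (0.0 <= x <= self.x_mm
                and 0.0 <= y <= self.y_mm
                and 0.0 <= z <= self.z_mm)


def unreachable_points(ws: Workspace, points):
    """Return the taught target points that fall outside the workspace."""
    return [p for p in points if not ws.contains(*p)]


# Workspace sizes taken from the model numbers in the text.
gantry_3axis = Workspace(500, 500, 100)  # DLE-RG-0001-AC-500-500-100
xy_actuator = Workspace(800, 500)        # DLE-LG-0012-AC-800-500

# Hypothetical pick-and-place targets (x, y, z) in mm.
targets = [(100, 200, 50), (450, 480, 90), (520, 100, 10)]
print(unreachable_points(gantry_3axis, targets))  # [(520, 100, 10)]
```

A real digital twin also checks collisions and the reachability of the path between points. This sketch only flags end positions that lie beyond the published travel.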

In addition to the iRC software, the robot controller is a key element in the iguverse development environment. For example, the model IRCLG12-02000 is designed for 48 V motors, has seven inputs and seven outputs, and has a 10 m cable for connecting to the robot. The IRC controllers include motor drive modules for various sizes of bipolar stepper motors and are available in configurable or preconfigured versions. They also have several interfaces for system integration, including:

• Programmable logic controller (PLC) interface for control via the digital inputs and outputs, especially for easy starting and stopping of programs via a PLC or pushbutton

• Modbus TCP interface for control via a PLC or PC

• Common Robotic Interface (CRI) Ethernet for control and configuration using a PLC or PC

• Robot Operating System (ROS) interface for operating the robot using ROS

• Interface for object detection cameras

• Cloud interface for remotely monitoring the robot’s state
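The sketch below makes the Modbus TCP interface more concrete. It shows how a PC-side script might frame a standard Modbus TCP "Read Holding Registers" request (function code 0x03) and decode the reply. The framing follows the public Modbus TCP specification. The unit ID and register address are placeholders, because the article does not describe the iRC register map. Consult igus documentation for the actual addresses.

```python
import struct

READ_HOLDING_REGISTERS = 0x03  # standard Modbus function code


def build_read_request(transaction_id: int, unit_id: int,
                       start_address: int, count: int) -> bytes:
    """Frame a Modbus TCP 'Read Holding Registers' request (MBAP header + PDU)."""
    if not 1 <= count <= 125:
        raise ValueError("Modbus allows 1 to 125 registers per read")
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_address, count)
    # MBAP header: transaction ID, protocol ID (always 0 for Modbus),
    # length of the remaining bytes (unit ID + PDU), unit ID.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_response(frame: bytes) -> list[int]:
    """Decode the register values from a 'Read Holding Registers' response."""
    _tid, protocol_id, _length, _unit = struct.unpack(">HHHB", frame[:7])
    if protocol_id != 0:
        raise ValueError("not a Modbus TCP frame")
    function_code, byte_count = struct.unpack(">BB", frame[7:9])
    if function_code & 0x80:
        # Exception responses set the high bit; the next byte is the exception code.
        raise RuntimeError(f"Modbus exception code {byte_count}")
    data = frame[9:9 + byte_count]
    return list(struct.unpack(f">{byte_count // 2}H", data))


# Placeholder values: unit ID 1, starting register 0, two registers.
request = build_read_request(transaction_id=1, unit_id=1, start_address=0, count=2)
print(request.hex())  # 000100000006010300000002
```

In practice, the script would send the request bytes over a TCP socket to the controller, for example with Python's `socket.create_connection`. Modbus TCP conventionally uses port 502. Verify the controller's IP address and whether it listens on the default port for your installation.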

Supported kinematics

A variety of kinematics (basic motions) that define the controlled movement of the robot are supported in the iguverse. In addition to the preconfigured kinematics, up to three more kinematically independent axes can be configured in IRC. Preconfigured kinematics include:

• 2-axis and 3-axis delta robots

• Gantry robots:

  • 2-axis (X and Y axis)

  • 2-axis (Y and Z axis)

  • 3-axis (X, Y, and Z axis)

• Robot arms (cobots):

  • 3-axis (axis 1, 2, 3)

  • 3-axis (axis 2, 3, 4)

  • 4-axis (axis 1, 2, 3, 4)

  • 4-axis (axis 2, 3, 4, 5)

  • 5-axis (axis 1 to 5)

  • 6-axis (axis 1 to 6)

• 4-axis SCARA robot
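The sketch below shows what a "kinematics" definition computes. It takes the last entry in the list and applies textbook forward kinematics for a generic 4-axis SCARA, mapping joint values to a tool position. The link lengths are hypothetical placeholders, and the sign and zero-position conventions are simplified. This is not the model used inside iRC.

```python
import math


def scara_forward(theta1_deg: float, theta2_deg: float, z_mm: float,
                  theta4_deg: float, l1_mm: float = 200.0, l2_mm: float = 150.0):
    """Generic 4-axis SCARA forward kinematics.

    Axes 1 and 2 rotate in the horizontal plane, axis 3 moves vertically,
    and axis 4 rotates the tool. l1_mm and l2_mm are hypothetical link
    lengths, not data for any igus robot.
    """
    a1 = math.radians(theta1_deg)
    a12 = a1 + math.radians(theta2_deg)  # absolute angle of the second link
    x = l1_mm * math.cos(a1) + l2_mm * math.cos(a12)
    y = l1_mm * math.sin(a1) + l2_mm * math.sin(a12)
    tool_yaw_deg = theta1_deg + theta2_deg + theta4_deg  # tool orientation about Z
    return x, y, z_mm, tool_yaw_deg  # axis 3 position used directly as Z (simplified)


x, y, z, yaw = scara_forward(0, 90, 20, 0)
print(round(x, 3), round(y, 3), z, yaw)  # 200.0 150.0 20 90
```

The same pattern applies to the other preconfigured kinematics. A Cartesian gantry maps axes to X, Y, and Z almost one-to-one, while articulated arms chain one rotation per joint.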


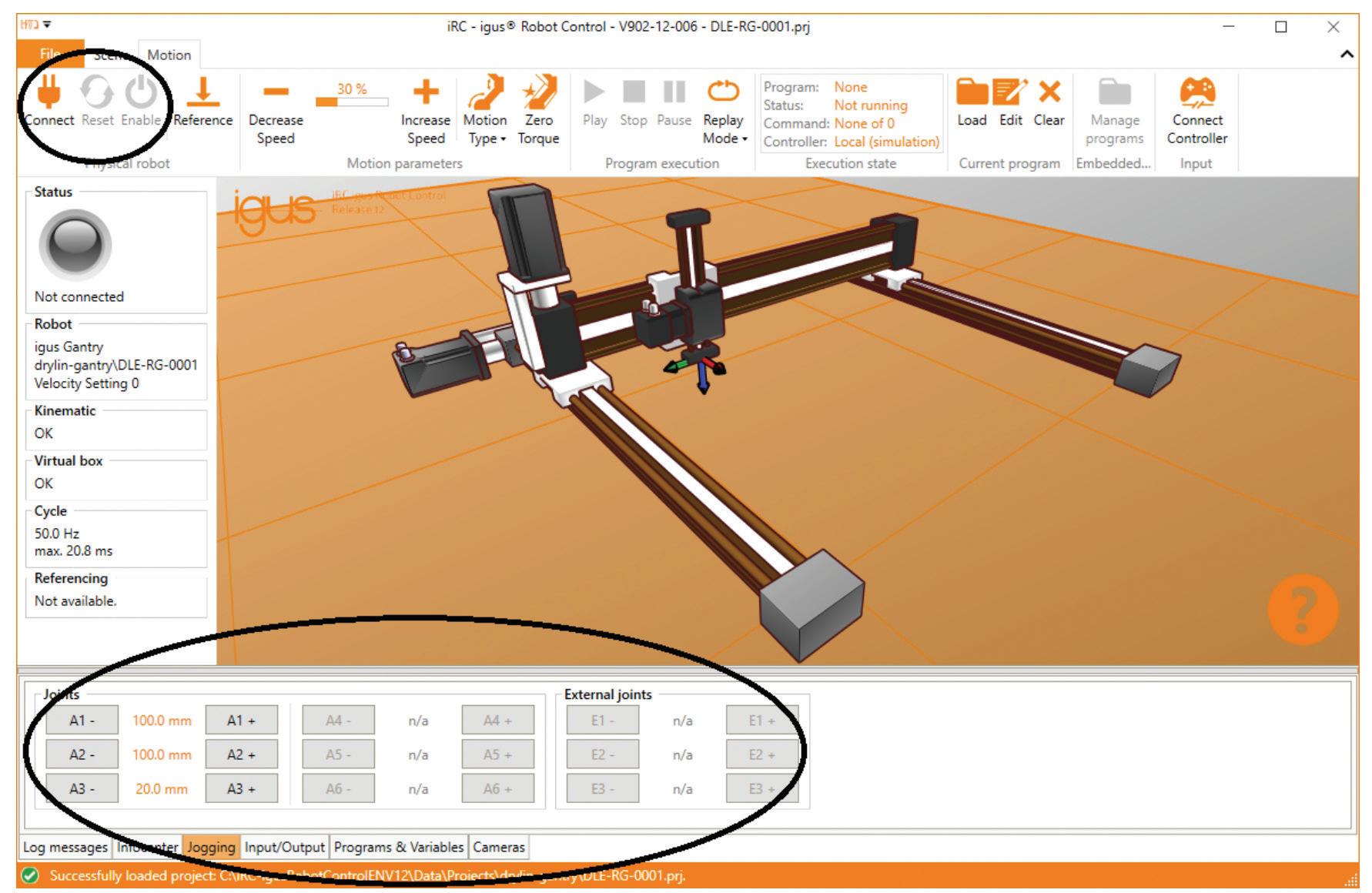

Figure 2: The “Jogging” tab (bottom left) in the iguverse immersive development environment can be used to enter motion profiles. (Image source: igus)

Easy programming for low-cost automation

Igus robots and the IRC are designed to support low-cost automation. That would not be possible without an easy-to-use programming interface. A 3-button mouse or a gamepad can move and position a robot in the iguverse. With the IRC software, a user can freely move all axes of the digital twin in the 3D interface. A teach-in function supports the development of robot control software, even without a physical robot being connected.

To implement teach-in, the user manually moves the virtual robot to the required position and defines how it moves there. The process is repeated until the complete motion profile has been created. The tool center in the IRC software lets users easily add matching end effectors, such as grippers, and automatically adjusts the tool center point on the robot. In addition, a connection to a higher-level industrial control system can be added.
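Adjusting the tool center point (TCP) amounts to adding the end effector's offset, expressed in the flange frame, to the flange position. The sketch below shows this under a simplifying assumption: the flange rotates only about the vertical Z-axis, as on a gantry or SCARA. A 6-axis arm would need a full 3D rotation. The function name and the gripper dimensions are hypothetical.

```python
import math


def tcp_position(flange_xyz, flange_yaw_deg, tool_offset_xyz):
    """Tool center point in world coordinates.

    Simplification (assumption): the flange only rotates about the vertical
    Z-axis; a 6-axis arm would need a full 3D rotation.
    tool_offset_xyz is the TCP position measured in the flange frame.
    """
    fx, fy, fz = flange_xyz
    ox, oy, oz = tool_offset_xyz
    c = math.cos(math.radians(flange_yaw_deg))
    s = math.sin(math.radians(flange_yaw_deg))
    # Rotate the XY part of the offset into the world frame, then translate.
    return (fx + c * ox - s * oy, fy + s * ox + c * oy, fz + oz)


# Hypothetical gripper: fingertips 120 mm below the flange, 30 mm off-centre in X.
print(tcp_position((300.0, 100.0, 250.0), 90.0, (30.0, 0.0, -120.0)))
```

With the flange turned 90°, the 30 mm X offset of the gripper ends up along world Y. Control software has to apply this correction whenever the end effector changes.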

The process begins by activating the robot using the “connect,” “reset,” and “enable” buttons as needed in the interface. The status LED on the IRC should become green, and the status should indicate “No Error.” The motion profile can now be entered using the “Jogging” tab (Figure 2).
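A taught motion profile is essentially an ordered list of positions with a move speed for each. The sketch below assumes a hypothetical waypoint format, not the iRC program format. It stores taught points, samples straight-line moves between them, and estimates the cycle time at constant speed. Acceleration is ignored, so real cycle times will be longer.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Waypoint:
    """One taught position in mm plus the speed used to move there (hypothetical format)."""
    x: float
    y: float
    z: float
    speed_mm_s: float = 100.0

    def xyz(self):
        return (self.x, self.y, self.z)


def sample_path(path, step_mm):
    """Linearly interpolate between taught waypoints at roughly step_mm spacing."""
    samples = [path[0].xyz()]
    for a, b in zip(path, path[1:]):
        n = max(1, math.ceil(math.dist(a.xyz(), b.xyz()) / step_mm))
        for i in range(1, n + 1):
            t = i / n
            samples.append(tuple(pa + t * (pb - pa) for pa, pb in zip(a.xyz(), b.xyz())))
    return samples


def profile_duration_s(path):
    """Time for the whole profile at constant speed (ignores acceleration)."""
    return sum(math.dist(a.xyz(), b.xyz()) / b.speed_mm_s for a, b in zip(path, path[1:]))


# A hypothetical three-point teach-in: start, above the part, down to the part.
taught = [Waypoint(0, 0, 100), Waypoint(200, 0, 100, 50), Waypoint(200, 0, 0, 25)]
print(len(sample_path(taught, 50)), profile_duration_s(taught))  # 7 8.0
```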

Gantry robots

Gantry robots, like those included in the preceding examples of the iguverse, consist of two base X-axes, a Y-axis, and an optional Z-axis. The Y-axis is attached to the two parallel X-axes and moves back and forth in two-dimensional space. The optional Z-axis supports a third dimension of movement.

Gantry robots from igus have self-lubricating plastic liners that slide and roll more smoothly and quietly than traditional ball-bearing-based designs. The new design is lighter, corrosion-resistant, and maintenance-free, which are important qualities for SMEs. Also crucial for SMEs, these robots cost up to 40% less than traditional gantry robots, providing a quicker return on investment (ROI).

These robots are suited for two classes of applications: low speeds with high loads or high speeds with low loads. Representative applications include packaging, pick and place, labeling, material handling, and assembly operations.

They are offered in a range of sizes. Available accessories include couplings, end effectors, and motor flanges. Examples of medium-sized gantry robots include:

• DLE-FG-0006-AC-650-650 is a two-dimensional flat gantry with a 650 x 650 mm workspace. This robot can handle payloads up to 8 kg and has a dynamic rate of up to 20 picks per minute.

• DLE-RG-0012-AC-800-800-500 is a three-dimensional gantry with an 800 x 800 x 500 mm workspace. It can handle payloads up to 10 kg with a dynamic rate of up to 20 picks per minute.

Figure 3: Palletizing is a common and important activity in manufacturing and logistics operations and can be automated using a gantry robot. (Image source: igus)

Figure 4: Example of a three-axis delta robot next to an igus iRC (left). (Image source: DigiKey)

Palletizing prowess

Palletizing products for shipment is an everyday activity in manufacturing and logistics operations. The newest and largest member of the iguverse is the XXL large gantry robot with a working space of 2,000 x 2,000 x 1,500 mm, well-suited for palletizing applications up to 10 kg. Custom designs with working spaces up to 6,000 x 6,000 x 1,500 mm are available.

These gantry robots can pick parts weighing up to 10 kg, transport them at a speed of up to 500 mm/s, and place them on a pallet with a repeatability of 0.8 mm (Figure 3). The igus palletizing robot solution costs up to 60% less than comparable systems.
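A top speed of 500 mm/s does not by itself give the time for a long move, because the axis must also accelerate and decelerate. The sketch below uses a standard symmetric trapezoidal velocity profile to estimate point-to-point move time. The acceleration value is a hypothetical placeholder, since the article gives no acceleration figure for the XXL gantry.

```python
import math


def move_time_s(distance_mm: float, v_max_mm_s: float, accel_mm_s2: float) -> float:
    """Point-to-point move time for a symmetric trapezoidal velocity profile."""
    d_ramp = v_max_mm_s ** 2 / accel_mm_s2  # distance used to accelerate plus decelerate
    if distance_mm >= d_ramp:
        # Accelerate, cruise at v_max, decelerate.
        return distance_mm / v_max_mm_s + v_max_mm_s / accel_mm_s2
    # Too short to reach v_max: triangular profile.
    return 2.0 * math.sqrt(distance_mm / accel_mm_s2)


V_MAX = 500.0    # mm/s, from the text
ACCEL = 1000.0   # mm/s^2, hypothetical; the article gives no acceleration figure

print(move_time_s(2000.0, V_MAX, ACCEL))  # 4.5  (full 2,000 mm X travel)
print(move_time_s(100.0, V_MAX, ACCEL))   # short move, never reaches 500 mm/s
```

Under these assumptions, a full-length traverse takes about 4.5 s each way, which bounds how many palletizing cycles per minute the largest working space can support.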

Delta robots

Like gantry robots, delta robots are available with two or three axes. Delta robots are mounted above the workspace and have a dome-shaped work envelope. They have exceptionally high speeds and are often used for material handling and parts placement. Examples of igus’ delta robots include:

• RBTX-IGUS-0047 is a three-axis design with a workspace diameter of 660 mm. It has an accuracy of ±0.5 mm, a maximum payload of 5 kg, and a maximum speed of 0.7 m/s, and it can perform up to 30 picks per minute (Figure 4).

• RBTX-IGUS-0059 is a 2-axis design with a workspace diameter of 700 mm. It also has an accuracy of ±0.5 mm. Its maximum payload is 5 kg, its maximum speed is 2 m/s, and it can perform up to 50 picks per minute.
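Pick rates in picks per minute are easier to compare once they are converted into a time budget per pick and an output per shift. The short sketch below does this conversion for the gantry and delta examples above. The shift figure is an upper bound: it assumes each robot sustains its rated maximum for a full 8-hour shift, which real cells rarely do.

```python
def seconds_per_pick(picks_per_minute: float) -> float:
    return 60.0 / picks_per_minute


def picks_per_shift(picks_per_minute: float, shift_hours: float = 8.0) -> float:
    """Upper bound: assumes the rated maximum is sustained for the whole shift."""
    return picks_per_minute * 60.0 * shift_hours


rated_ppm = {
    "DLE-FG-0006-AC-650-650 (flat gantry)": 20,
    "DLE-RG-0012-AC-800-800-500 (3D gantry)": 20,
    "RBTX-IGUS-0047 (3-axis delta)": 30,
    "RBTX-IGUS-0059 (2-axis delta)": 50,
}

for model, ppm in rated_ppm.items():
    print(f"{model}: {seconds_per_pick(ppm):.1f} s/pick, "
          f"{picks_per_shift(ppm):,.0f} picks per 8 h shift")
```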

Articulated arm cobots

The iguverse also supports articulated arm cobots. Cobots can have from two to 10 or more axes, also called degrees of freedom (DOF). They generally have large work envelopes and can perform complex tasks in collaboration with a person. Igus model REBEL-6DOF-02 has 6 DOF and model REBEL-4DOF-02 has 4 DOF. Both have an accuracy of ±1 mm, a nominal working range of 400 mm and can perform a minimum of 7 picks per minute with a linear speed of 200 mm/s.

The 6 DOF model has a maximum payload of 2 kg and a maximum reach of 664 mm. The 4 DOF model has a maximum payload of 3 kg and a maximum reach of 495 mm (Figure 5).

Summary

The iguverse immersive industrial metaverse combines digital twins and VR to provide tools that enable rapid development and deployment of robotic solutions. It’s free, license-free, and designed to run locally on a PC without a cloud connection. It can be used to develop and test robotic solutions without a robot being present.

It supports a wide range of kinematics in delta robots, gantry robots, robot arms (cobots), and SCARA robots. The IRC includes an array of interfaces to support automation and operational needs, including PLC interface, Modbus TCP/IP, CRI Ethernet, ROS interface, an interface for object detection cameras, and a Cloud interface. The iguverse, the iRC, and related robots from igus have been optimized to support the low-cost automation needs of SMEs. RR


Figure 5: Articulated arm cobots with 4 DOF (left) and 6 DOF (right). (Image source: igus)


That system was designed to keep up with customer demand for high-mix, low-volume parts manufacturing without the need to learn how to program a robot.

“We want to provide turnkey machine tending out of the box,” asserted Thoma. “By partnering with premium machining providers, we want to provide premier support and reliability without premier costs.”

In addition, an interactive CL108 cobot display showed how the CL Series Zero-G mode allows for hand guiding and recording programs with a light touch, said the unit of Minato City, Japan-based Kawasaki Heavy Industries. Saved parameters improve setup consistency and reduce setup errors, it said.

“The hands-on demo will allow people to feel the sensitivity of Zero-G hand guidance and the ease of teaching points,” Renard said. “They will have a feel for an industrial robot in a collaborative space.”

Other Kawasaki robots to watch

Kawasaki also recently released its BA013N and BA013L industrial robots with built-in intelligence, adaptive arc welding, and real-time path modulation (RTPM) capabilities. Also for welding, K-Positioners can help automate workpiece movement to optimize the position of welding torches and reduce cycle times.

The company developed the RoboFin system with AMT and NEFF Automation for material removal and finishing. It can handle diverse materials and surface types, increase abrasive life up to 3X, and improve consistency and quality, said Kawasaki.

The K-Track linear axes are intended to simplify reconfiguration of production lines by reducing the number of robots needed for a particular task.

In addition, Kawasaki Robotics has developed the ASTORINO educational robot.

“The cobot space is competitive,” acknowledged Thoma. “But our robot has precise repeatability along the same lines as an industrial robot, and the robust nature of our robot is part of Kawasaki’s DNA. What sets us apart in the end is the company and the people who take a personal interest in making sure that customers get the right tool for the job.” RR

Hurco and Kawasaki have collaborated on robotic machine tending with the CL Series. Kawasaki

Kawasaki worked on cobot machine tending with Wauseon. Kawasaki

Eugene Demaitre • Editorial Director • The Robot Report

Plus One introduces dual armed InductOne; Pitney Bowes automates parcel induction

As they pass through warehouses and other facilities, many items are handled as packages rather than as eaches. Plus One Robotics in May launched InductOne, a two-armed robot designed to optimize parcel singulation and induction in high-volume fulfillment and distribution centers.

“Parcel variability is a significant challenge of automation within the warehouse,” stated Erik Nieves, CEO of Plus One Robotics. “That’s why InductOne is equipped with our innovative individual cup control [ICC] gripper, which can precisely handle a wide range of parcel sizes and shapes.”

“But it’s not just about what InductOne picks, it’s also about what it doesn’t pick,” he added. “The system avoids picking non-conveyable items, allowing them to automatically convey to a designated exception path and preventing the robots from wasting precious cycles handling items which should not be inducted.”

“We’ve doubled down on parcel handling; we’re not an each picking company,” Nieves told Automated Warehouse. “Vision and grasping for materials handling is hard, and Plus One continues to focus on packaged goods, which spend most of their time as parcels and can be picked by vacuum grippers.”

Plus One Robotics applies picking experience

Founded in 2016 by computer vision and robotics industry experts, Plus One Robotics said it combines computer vision, artificial intelligence, and supervised autonomy to pick parcels for leading logistics and e-commerce organizations. The San Antonio, Texas-based company has offices in Boulder, Colo., and the Netherlands.

Plus One said it applied its experience from more than 1 billion picks to develop InductOne. The company said it has learned from handling over 1 million picks per day, and achieving the reliability required for such high-volume operations led to its new parcel-handling machine.

“ICC and InductOne are the culmination of our learnings from these picks,” said Nieves at Automate. “It’s not just vision but also grasping and conveyance. I push back against those who say, ‘Data, data, data,’ because we also need to appreciate the things above and below our system. We’re applying our expertise to the problem, but we’re not trying to be an integrator or just a hardware maker.”

InductOne includes vision and grasping refined by millions of picks of experience. Plus One Robotics

Nieves noted that the value of robotics-as-a-service (RaaS) models is not recurring payments but the option they give users to scale deployments up or down as needed.

“As Pitney Bowes’ Stephanie Cannon said, it’s important [for automation providers] to get to 70% confidence and then work with customers who trust that you’ll work with them to get the rest of the way,” he said. “Our relationships with FedEx and Home Depot for palletization and parcel handling are built on that trust.”

Pitney Bowes automates parcel induction

Pitney Bowes wanted to automate parcel induction into scan tunnels and sorters at its e-commerce fulfillment hub at Baltimore/Washington International Thurgood Marshall Airport (BWI).

The company added Plus One’s PickOne AI and Yaskawa robot arms to handle a wide range of parcel sizes with consistent speed. Pitney Bowes reported that adding the systems to its distribution centers will improve services to its customers and network.

InductOne engineered for ease of deployment, efficiency

InductOne’s dual-arm design “significantly outperforms single-arm solutions,” claimed Plus One. “While a single-arm system typically tops out at around 1,600 picks per hour, the coordinated motion of InductOne’s two arms can achieve sustained pick rates of 2,200 to 2,300 per hour. InductOne’s peak rate maxes out at a rate of 3,300 picks per hour, 10% faster than the leading competition.”

Plus One Robotics said its engineering team designed InductOne to be capable but as small as possible for easy integration into brownfield facilities and to minimize the need for costly site modifications.

“The engineering approach behind InductOne has been focused on efficiency and flexibility,” said Nieves. “We designed the system to be as compact and lightweight as possible, making it easier to deploy in limited spaces, including on existing mezzanines. The modular and configurable nature of InductOne also allows it to seamlessly integrate into a variety of fulfillment center layouts.”

InductOne includes the PickOne vision system, the Yonder remote supervision software, pick-and-place conveyors, integrated safety features, analytics, and training and ongoing support. The modular system also offers configurable layouts for cross-belt or tray sorters.

It can handle parcels weighing up to 15 lb. (6.8 kg) and up to 27 in. (69 cm) in length, 19 in. (48 cm) in width, and 17 in. (43 cm) in height. InductOne supports boxes, clear and opaque polybags, shipping envelopes, and padded and paper mailers. RR
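The published handling envelope can be expressed as a simple routing rule. Parcels inside the limits are inducted, and everything else goes to the exception path described earlier. The sketch below is hypothetical: InductOne makes this decision with its vision system, and the function, type names, and the any-orientation assumption are illustrative only.

```python
from dataclasses import dataclass

LB_TO_KG = 0.45359237
MAX_WEIGHT_LB = 15.0
MAX_DIMS_IN = (27.0, 19.0, 17.0)  # length, width, height limits from the text
SUPPORTED_KINDS = {"box", "clear polybag", "opaque polybag",
                   "envelope", "padded mailer", "paper mailer"}


@dataclass(frozen=True)
class Parcel:
    kind: str
    weight_lb: float
    dims_in: tuple  # (a, b, c) in inches, any order


def route(parcel: Parcel) -> str:
    """Return 'induct' or 'exception' using only the published envelope (hypothetical rule)."""
    if parcel.kind not in SUPPORTED_KINDS or parcel.weight_lb > MAX_WEIGHT_LB:
        return "exception"
    # Assumption: the parcel can be presented in any orientation,
    # so compare its largest dimension with the largest limit, and so on.
    dims = sorted(parcel.dims_in, reverse=True)
    fits = all(d <= limit for d, limit in zip(dims, MAX_DIMS_IN))
    return "induct" if fits else "exception"


print(round(MAX_WEIGHT_LB * LB_TO_KG, 1))                   # 6.8
print(route(Parcel("padded mailer", 1.2, (12, 9, 1))))      # induct
print(route(Parcel("box", 18.0, (20, 14, 10))))             # exception (too heavy)
```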

InductOne is designed to handle a wide range of parcels.

Plus One Robotics

Eugene Demaitre • Editorial Director • The Robot Report

Humanoid robotics developers must pick which problems to solve

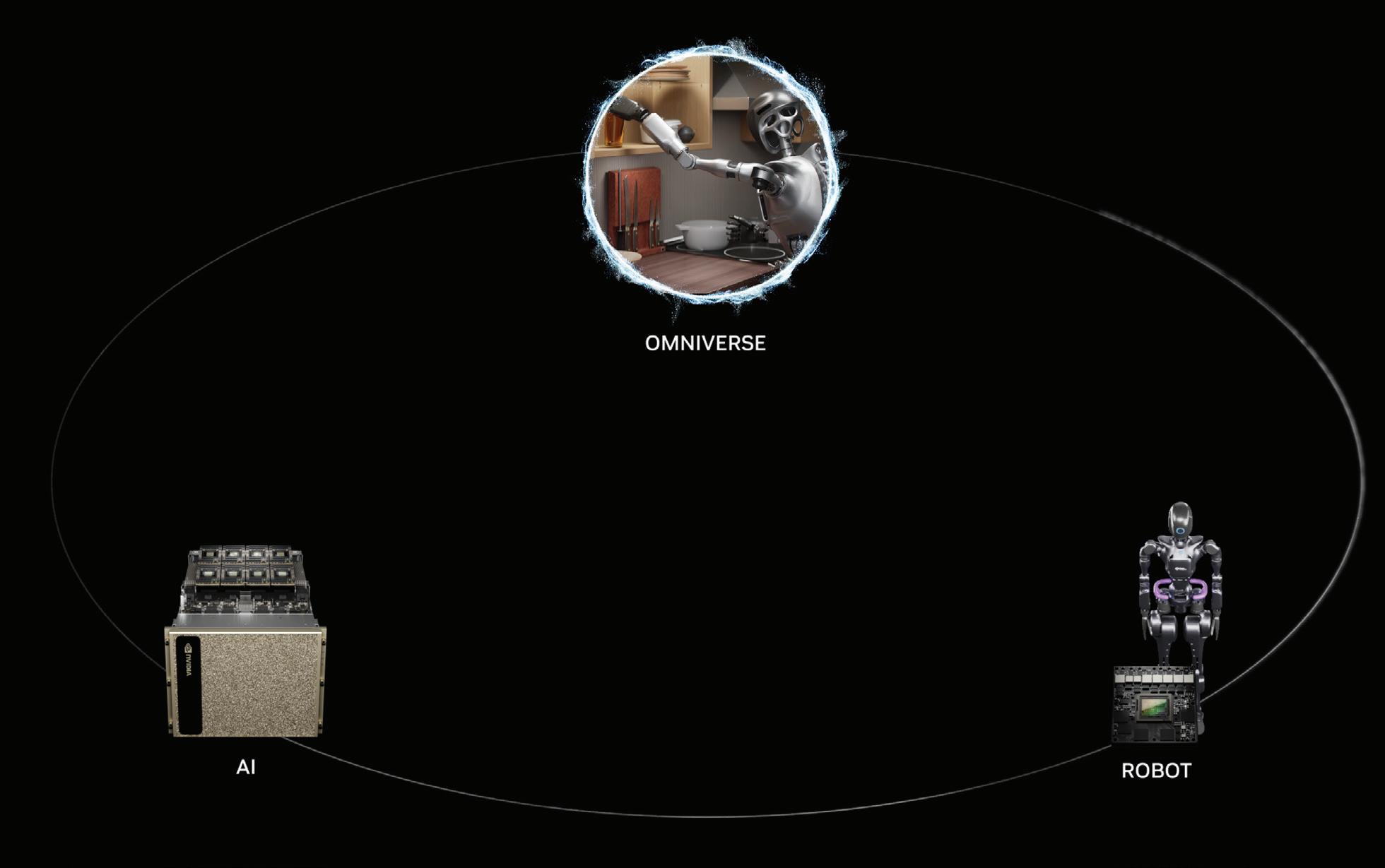

To be effective and commercially viable, humanoid robots will need a full stack of technologies for everything from locomotion and perception to manipulation. Developers of artificial intelligence and humanoids are using NVIDIA tools, from the edge to the cloud.

At NVIDIA’s GPU Technology Conference (GTC) in March, CEO Jensen Huang appeared on stage with several humanoids in development using the company’s technology. For instance, Figure AI in August unveiled its Figure 02 robot, which used NVIDIA graphics processing units (GPUs) and Omniverse to autonomously conduct tasks in a trial at BMW.

“Developing autonomous humanoid robots requires the fusion of three computers: NVIDIA DGX for AI training, NVIDIA Omniverse for simulation, and NVIDIA Jetson in the robot,” explained Deepu Talla, vice president of robotics and edge computing at NVIDIA, which participated in RoboBusiness 2024.

Talla shared his perspective on the race to build humanoids and how developers can benefit from NVIDIA’s offerings with The Robot Report.

Demand and AI create inflection points for humanoid robots

What do you think of the potential for humanoids, and why have they captured so much attention?

Talla: There’s the market need: everyone understands the current labor shortages and the need to automate jobs that are dangerous. In fact, if you look at the trajectory of humanoids, we’ve moved away from a lot of people trying to solve just mechatronics projects into general-purpose robot intelligence.

There are also two inflection points. The first is that generative AI and the new way of training algorithms hold a lot of promise. From CNNs [convolutional neural networks] to deep learning, the slope is going up.

The second inflection point is the work on digital twins and the industrial metaverse. We’ve been working on Omniverse for well over 15 years, and in the past year or so, it has reached reasonable maturity.

The journey over the next several years is to create digital twins faster, use ray tracing and reinforcement learning, and bridge the sim-to-real gap. NVIDIA is a platform company – we’re not building robots, but we’re enabling thousands of companies building robots, simulation, and software.


Is NVIDIA working directly with developers of humanoids?

Talla: We have the good fortune of engaging with every robotics and AI company on the planet. When we first started talking about robotics a decade ago, it was in the context of the computer brain and NVIDIA Jetson.

Today, robots need the three computers, starting with that brain for functional safety, able to run AI on low power, and featuring more and more acceleration.

There’s also the computer for training the AI, with the DGX infrastructure. Then, there’s the computer in the middle. We’re seeing use grow exponentially for OVX and Omniverse for simulation, robot learning and virtual worlds.

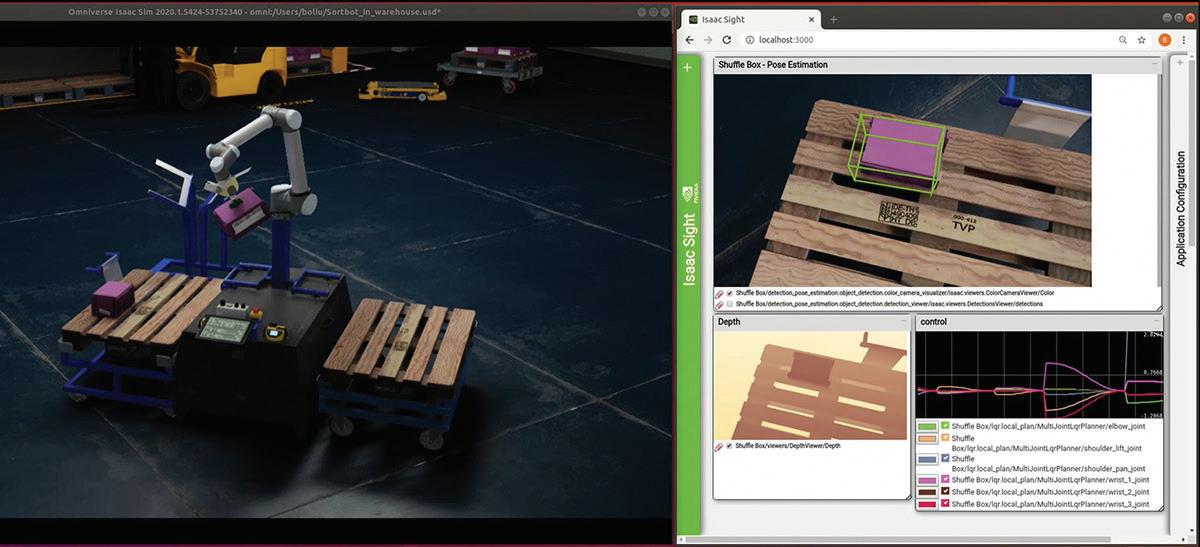

Simulation a necessary step to general-purpose AI, robots

Why is simulation so important for training humanoid robots?

Talla: It’s faster, cheaper, and safer for any task. In the past, the main challenge was accuracy. We’re starting to see its application in humanoids for perception, navigation, actuation, and gripping, in addition to locomotion and functional safety.

The one thing everyone says they’re working on – general-purpose intelligence – hasn’t been solved, but we now have a chance to enable progress.

Isn’t that a lot of problems to solve at once? How do you help tie perception to motion?

Talla: Going back a year or two, we were focusing on perception for anything that needs to move, from industrial robot arms to mobile robots and, ultimately, humanoids.

With Isaac Perceptor, NVIDIA made continuous progress with ecosystem partners.

We’ve also worked on motion planning for industrial arms, providing cuMotion and foundation models for pose and grasping. All of those technologies are needed for humanoids.

Speaking of foundation models, how do the latest AI models support humanoid developers?

Talla: At GTC this year, we talked about Project GR00T, a general-purpose foundation model for cognition. Think of it like Llama 3 for humanoid robots.

NVIDIA is partnering with many humanoid companies so they can fine-tune their systems for their environments.

At SIGGRAPH, we discussed how to generate the data needed to build this general-purpose model.


The Isaac robot simulator is designed to simplify the training of intelligent machines. NVIDIA

It’s a big challenge. ChatGPT has the Internet as its source for language, but how do you do this for humanoids?

As we embarked on this model, we recognized the need to create more tools. Developers can use our simulation environment and fine-tune it, or they can train their own robot models.

Everyone needs to be able to easily generate synthetic data to augment real-world data. It’s all about training and testing.

With its experience in simulation, what kind of boost does NVIDIA offer developers?

Talla: We’ve created assets for different environments, such as kitchens or warehouses. The RoboCasa NIM makes it easy to import different objects into these generated environments.

Companies must train their robots to act in these environments, so they can make the algorithms watch human demonstrations. But they want much more data on angles and trajectories.

Another method for training humanoids is with teleoperation. NVIDIA is building developer tooling for this, and we have another for actuation with multiple digits. Many robot grippers have only two fingers or suction cups, but humanoids need more dexterity to be useful for households or elder care.

3 COMPUTERS

Talla has described NVIDIA’s solution to the three-computer challenge for humanoid developers. NVIDIA

We bring all these tools together in Isaac Sim to make them easier to use.

As developers build their robot models, they can pick whatever makes sense.

Domain-specific tasks can be built on foundational models

You mention NIMs – what are they?

Talla: NVIDIA Inference Microservices, or NIM, are easier to consume and already performance-optimized with the necessary runtime libraries.

Since each developer might focus on something different, such as perception or locomotion, we help them with workflows for each of the three computers for humanoids.

How does NVIDIA determine what capabilities to build itself and what to leave for developers?

Talla: Our first principle is to do only as much as necessary. We looked at the whole industry and asked, “What is a fundamental problem?”

For manipulation, we studied motion and found it was cumbersome. We created CUDA parallel processing and cuMotion to accelerate motion planning.

We’re doing a lot, but there are so many domain-specific things that we’re not doing, such as picking. We want to let the ecosystem innovate on top of that.


Some companies want to build their own models. Others might have something that solves a specific problem in a better way.

What has NVIDIA learned from its robotics customers?

Talla: There are so many problems to solve, and we can’t boil the ocean. We sit down with our partners to determine what’s the most urgent problem to solve.

For some, it could be AI for perception or manipulation, while others might want an environment to train algorithms with synthetic data generation.

We want people to be more aware of the three-computer model, and NVIDIA works with all the other tools in the industry. We’re not trying to replace ROS, MuJoCo, Drake, or other physics engines or Gazebo for simulation.

We’re also adding more workflows to Isaac Lab and Omniverse to simplify robotic workflows.

Demand builds as humanoid innovators race to meet it

We’ve heard a lot of promises on the imminent arrival of humanoid robots in industrial and other settings. What timeframes do you think are realistic?

Talla: The market needs it to accelerate significantly. Developers are not solving problems for automotive or semiconductor manufacturing, which are already heavily automated.

I’m talking about all of the midlevel industries, where it has been too complicated to deploy robots. Young people don’t want to do those tasks, just as people have migrated from farms to cities.

Now that NVIDIA is providing the tools for success with our Humanoid Robot Developer Program, innovation is only going to accelerate. But deployments will be in a phased manner.

It’s obvious why big factories and warehouses are the first places where we’ll see humanoids. They’re controlled environments where they can be functionally safe, but the market opportunity is much greater.

It’s an inside-out approach versus an outside-in approach. There are 100 million cars and billions of phones; if robots become safe and affordable, the pace of adoption will grow.

At the same time, skepticism is healthy. Our experience with autonomous vehicles is that if they’re 99.999% trustworthy, that’s not enough. If anything, because they move slower, humanoids in the home don’t have to get to that level to be useful and safe. RR

The Isaac platform supports developers in building varied workflows. NVIDIA

Brianna Wessling • Associate Editor • The Robot Report

INSIDE GOOGLE DEEPMIND’S ADVANCES IN ROBOT DEXTERITY

The DeepMind team said that for robots to be more useful, they need to get better at making contact with objects in dynamic environments.

Google DeepMind recently gave insight into two artificial intelligence systems it has created: ALOHA Unleashed and DemoStart. The company said that both of these systems aim to help robots perform complex tasks that require dexterous movement.

Dexterity is a deceptively difficult skill to acquire. There are many tasks that we do every day without thinking twice, like tying our shoelaces or tightening a screw, that could take weeks of training for a robot to do reliably.

The DeepMind team asserted that for robots to be more useful in people’s lives, they need to get better at making contact with physical objects in dynamic environments.

The Alphabet unit’s ALOHA Unleashed is aimed at helping robots learn to perform complex and novel two-armed manipulation tasks. DemoStart uses simulations to improve real-world performance on a multifingered robotic hand.

By helping robots learn from human demonstrations and translate images to action, these systems are paving the way for robots that can perform a variety of helpful tasks, said DeepMind.

ALOHA Unleashed enables manipulation with 2 robotic arms

Until now, most advanced AI robots have only been able to pick up and place objects using a single arm.

ALOHA Unleashed achieves a high level of dexterity in bi-arm manipulation, according to Google DeepMind.

The researchers said that with this new method, Google’s robot learned to tie a shoelace, hang a shirt, repair another robot, insert a gear, and even clean a kitchen.

Google DeepMind’s ALOHA Unleashed trains a transformer encoder-decoder architecture with a diffusion loss to learn highly dexterous bimanual manipulation tasks like tying shoelaces. Google DeepMind

Hanging T-shirts on a coat hanger has traditionally been very difficult for robots. It’s challenging because it involves deformable objects, requires many manipulation steps to solve the task, and involves the coordination of high-dimensional robotic manipulators. Google DeepMind

ALOHA Unleashed builds on DeepMind’s ALOHA 2 platform, which was based on the original ALOHA low-cost, open-source hardware for bimanual teleoperation from Stanford University. ALOHA 2 is more dexterous than prior systems because it has two hands that can be teleoperated for training and data-collection purposes. It also allows robots to learn how to perform new tasks with fewer demonstrations.

Google also said it has improved upon the robotic hardware’s ergonomics and enhanced the learning process in its latest system. First, it collected demonstration data by teleoperating the robot through difficult tasks such as tying shoelaces and hanging T-shirts.

Next, it applied a diffusion method, predicting robot actions from random noise, similar to how the Imagen model generates images. This helps the robot learn from the data, so it can perform the same tasks on its own, said DeepMind.
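To make the diffusion idea concrete, here is a minimal sketch of a DDPM-style sampling loop that turns random noise into an action vector. It is not DeepMind’s model. The linear noise schedule, the step count, and the `oracle_noise` predictor are all assumptions for illustration. The predictor stands in for a trained transformer and only works because the toy "demonstration" is a single fixed action.

```python
import numpy as np

def make_schedule(num_steps=50, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an assumed choice; real systems tune this)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)  # cumulative signal retained up to each step
    return betas, alphas, alpha_bars

def sample_action(predict_noise, action_dim, betas, alphas, alpha_bars, rng):
    """Reverse diffusion: start from pure noise and iteratively denoise it."""
    x = rng.standard_normal(action_dim)  # x_T ~ N(0, I)
    for t in reversed(range(len(betas))):
        eps_hat = predict_noise(x, t)  # a trained network in a real policy
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(action_dim)
        else:
            x = mean  # the final step adds no noise
    return x

betas, alphas, alpha_bars = make_schedule()

# Hypothetical demonstrated action (e.g., three joint targets in radians).
target = np.array([0.3, -0.1, 0.5])

def oracle_noise(x_t, t):
    """Hypothetical stand-in for a learned noise predictor; exact when every demo equals `target`."""
    return (x_t - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])

action = sample_action(oracle_noise, 3, betas, alphas, alpha_bars, np.random.default_rng(0))
print(action)  # recovers `target` up to floating-point error
```

In a real diffusion policy, the predictor is trained on many noisy copies of demonstrated actions and conditioned on camera images. The sampling loop itself keeps this general shape.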

Using reinforcement learning to teach dexterity

Controlling a dexterous, robotic hand is a complex task. It becomes even more complex with each additional finger, joint, and sensor. This is a challenge Google DeepMind is hoping to tackle with DemoStart, which it presented in a new paper. DemoStart uses a reinforcement learning algorithm to help new robots acquire dexterous behaviors in simulation.

These learned behaviors can be especially useful for complex embodiments, like multi-fingered hands. DemoStart begins learning from easy states, and, over time, the researchers add in more complex states until it masters a task to the best of its ability.
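The easy-to-hard progression described above resembles what is often called a reverse curriculum. The sketch below is a generic version, not DemoStart’s published algorithm. Episodes start from a state late in a demonstration (close to success), and the start point moves earlier once the recent success rate clears a threshold. The class name, step size, window, and threshold are hypothetical.

```python
from collections import deque

class ReverseDemoCurriculum:
    """Hypothetical helper: pick episode start states from a demo, moving from easy (near the goal) to hard."""

    def __init__(self, demo_length, step=5, window=20, threshold=0.8):
        self.step = step
        self.threshold = threshold
        self.results = deque(maxlen=window)
        # Start a few states before the demo's final (goal) state.
        self.start_index = max(demo_length - step, 0)

    def record(self, success):
        """Log one episode outcome and promote to a harder start if warranted."""
        self.results.append(bool(success))
        if len(self.results) == self.results.maxlen:
            rate = sum(self.results) / len(self.results)
            if rate >= self.threshold and self.start_index > 0:
                self.start_index = max(self.start_index - self.step, 0)
                self.results.clear()  # re-measure success at the new difficulty

    @property
    def at_full_task(self):
        """True once episodes start from the demo's first state (the full task)."""
        return self.start_index == 0

# Usage: a 20-state demonstration, promoting after 4 episodes at >= 75% success.
curriculum = ReverseDemoCurriculum(demo_length=20, step=5, window=4, threshold=0.75)
for outcome in [True, True, True, True] * 3:
    curriculum.record(outcome)
print(curriculum.start_index, curriculum.at_full_task)  # 0 True
```

Clearing the window after each promotion forces the agent to prove itself again at the harder start before moving on.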

This system requires 100x fewer simulated demonstrations to learn how to solve a task in simulation than what’s usually needed when learning from real-world examples for the same purpose, said DeepMind.

After training, the research robot achieved a success rate of over 98% on a number of different tasks in simulation. These include reorienting cubes with a certain color showing, tightening a nut and bolt, and tidying up tools.

DemoStart is a novel auto-curriculum reinforcement learning method. Google DeepMind said it is capable of learning complex manipulation behaviors on an arm equipped with a three-fingered robotic hand from only a sparse reward and a handful of demos in simulation. Google DeepMind

In the real-world setup, it achieved a 97% success rate on cube reorientation and lifting, and 64% on a plug-socket insertion task that required a high degree of finger coordination and precision.

Training in simulation offers benefits, challenges

Google says it developed DemoStart with MuJoCo, its open-source physics simulator. After mastering a range of tasks in simulation and using standard techniques to reduce the sim-to-real gap, like domain randomization, its approach was able to transfer nearly zero-shot to the physical world.
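Domain randomization, mentioned above, typically means re-sampling simulator parameters every episode so that a policy cannot overfit to one exact physics setup. A minimal sketch follows. The parameter names and ranges are hypothetical placeholders, not DemoStart’s settings, and the step of writing them into a MuJoCo model is left out.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class RandomizationRange:
    low: float
    high: float

# Hypothetical ranges; real values depend on the robot and task.
RANGES = {
    "friction_scale": RandomizationRange(0.5, 1.5),      # multiplier on nominal friction
    "object_mass_kg": RandomizationRange(0.05, 0.3),
    "actuator_gain_scale": RandomizationRange(0.8, 1.2), # multiplier on nominal gain
    "camera_offset_m": RandomizationRange(-0.01, 0.01),
}

def sample_episode_params(rng):
    """Draw one set of physics/sensor parameters for the next training episode."""
    return {name: rng.uniform(r.low, r.high) for name, r in RANGES.items()}

rng = random.Random(42)
for episode in range(3):
    params = sample_episode_params(rng)
    # A real pipeline would write these values into the simulator before reset;
    # this sketch only prints them.
    print(episode, {k: round(v, 3) for k, v in params.items()})
```

Because every episode sees slightly different physics, the real robot ideally looks like just one more sample from the training distribution.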

Robotic learning in simulation can reduce the cost and time needed to run actual, physical experiments. However, Google said these simulations are difficult to design, and they don’t always translate successfully into real-world performance.

By combining reinforcement learning with learning from a few demonstrations, DemoStart’s progressive learning automatically generates a curriculum that bridges the sim-to-real gap, making it easier to transfer knowledge from a simulation into a physical robot and reducing the cost and time needed for running physical experiments.

To enable more advanced robot learning through intensive experimentation, Google tested this new approach on a three-fingered robotic hand, called DEX-EE, which was developed in collaboration with Shadow Robot.

Google said that while it still has a long way to go before robots can grasp and handle objects with the ease and precision of people, it is making significant progress. RR

AUTOMATED WAREHOUSE

From mobile robots and automated storage to picking, palletizing, and sortation systems, warehouse operators have a wide range of options to choose from. To get started or scale up with automation, end users need to evaluate their own processes and environments, find the best fit for their applications, and deploy and manage multiple systems.

Automated Warehouse Week will provide guidance, with expert insights into the evolving technologies, use cases, and business best practices.

ENGINEERING

Robotics Engineering Week features keynotes and panels, delivered by the leading minds in robotics and automation, addressing the most critical issues facing the commercial robotics developers of today.

Aaron Nathan • founder and CEO • Point One Navigation

NAVIGATING POSITIONING TECHNOLOGY FOR OUTDOOR MOBILE ROBOTS

Absolute and relative positioning, and the data from multiple sensors, are important for a robot’s localization and navigation.

As robots become more self-sufficient, they have to navigate their surroundings with greater independence and reliability.

Autonomous tractors, agricultural harvesters, and seeding machines must carefully make their way through crop fields while self-driving delivery vehicles must safely traverse the streets to place packages in the correct spot. Across a wide range of outdoor applications, autonomous mobile robots (AMRs) require highly accurate sources of positioning to safely and successfully complete the jobs for which they are designed.

Accomplishing such precision requires two sets of location capabilities. One is the ability to understand the robot’s position relative to other objects. This provides critical input to understand the world around it and, in the most obvious case, avoid obstacles that are either stationary or moving. This dynamic maneuvering requires an extensive stack of navigational sensors like cameras, radar, and lidar, and the supporting software to process these signals and give real-time direction to the AMR.

In high-precision agriculture, mobile robots travel down the same narrow path over many months to plant, irrigate, and harvest crops. Each pass requires the robot to reference the same spot each time. PointOne Nav

The second set of capabilities is for the AMR to understand its precise physical location (or absolute location) in the world so it can precisely and repeatedly navigate a path that was programmed into the device. An obvious use case here is high-precision agriculture, where various AMRs need to travel down the same narrow path over many months to plant, irrigate, and harvest crops, with every pass requiring the AMR to reference the same spot each time.

This requires a different set of navigational capabilities, starting with Global Navigation Satellite Systems (GNSS), which the entire ecosystem of sensors and software leverages. GNSS is augmented by correction capabilities like RTK and SSR, which help deliver 100x higher precision than GNSS alone for open-sky applications. It is also augmented by inertial measurement units (IMUs) combined with sensor fusion software, for navigating where GNSS is not available (dead reckoning).

Before we dive into these technologies, here’s a look at use cases where both relative and absolute locations are required for an AMR to do its job.

Robotics applications requiring relative and absolute positioning

Agricultural Automation: In agriculture, AMRs are becoming increasingly common for tasks like planting, harvesting, and crop monitoring. These robots utilize absolute positioning, typically through GPS, to navigate large and often uneven fields with precision. This ensures that they can cover vast areas systematically and return to specific locations as needed. However, once in the proximity of crops or within a designated area, AMRs rely on relative positioning for tasks that demand a higher level of accuracy, such as picking fruit that may have grown or changed position since the AMR last visited it. By combining both positioning methods, these robots can operate efficiently in the challenging and variable environments typical of agricultural fields.

Last-Mile Delivery in Urban Settings: AMRs are transforming last-mile delivery in urban environments by autonomously transporting goods from distribution centers to final destinations. These robots use absolute positioning to navigate city streets, alleys, and complex urban layouts, ensuring they follow optimized routes while avoiding traffic and adhering to delivery schedules. Upon reaching the vicinity of the delivery location, the AMRs will also use relative positioning to maneuver around variable or unexpected obstacles, such as a vehicle that is double parked on the street. This dual approach enables the AMRs to handle the intricacies of urban landscapes and make precise deliveries directly to customers’ doorsteps.

Construction Site Automation: On construction sites, AMRs are employed to ensure the project is built to the exact specifications that were designated by the engineers. They also help with tasks like transportation of materials and mapping or surveying of environments. These sites often span large areas with constantly changing environments, requiring AMRs to use absolute positioning to navigate and maintain orientation within the overall project site. Relative positioning comes into play when AMRs perform tasks that require interaction with dynamic elements, such as avoiding other equipment or even personnel on the site. The combination of both positioning systems allows AMRs to effectively contribute to the complex and dynamic nature of construction projects, enhancing efficiency and safety.

Environmental Monitoring and Conservation: In outdoor environments, AMRs are often deployed for environmental monitoring and conservation efforts such as wildlife tracking, pollution detection, and habitat mapping. These robots leverage absolute positioning to navigate vast natural areas, from forests to coastal regions, ensuring comprehensive coverage of the terrain and allowing for the capture of detailed site surveys and mapping. AMRs can perform tasks like capturing high-resolution images, collecting samples, or tracking animal movements with pinpoint accuracy and can overlay these samples over time in a cohesive way.

Technology for relative positioning

AMRs leverage several sensors to locate themselves in relation to other objects in their environment. These include:


Cameras: Cameras function as the visual sensors of autonomous mobile robots, providing them with an immediate picture of their surroundings similar to the way human eyes work. These devices capture rich visual information that robots can use for object detection, obstacle avoidance, and environment mapping. However, cameras depend on adequate lighting and can be hampered by darkness or by adverse weather conditions like fog and rain. To address these limitations, cameras are often paired with near-infrared sensors or equipped with night vision capabilities, which allow the robots to see in low-light conditions. Cameras are a key component in visual odometry, a process where changes in position over time are calculated by analyzing sequential camera images. Cameras also require significant processing to convert their 2-D images into 3-D structures.

Radar Sensors: Radar sensors operate by emitting pulsating radio waves that reflect off objects, providing information about the object’s speed, distance, and relative position. This technology is robust and can function effectively in various environmental conditions, including rain, fog, and dust, where cameras and lidar might struggle. However, radar sensors typically offer sparser data and lower resolution compared to other sensor types. Despite this, they are invaluable for their reliability in detecting the velocity of moving objects, making them particularly useful in dynamic environments where understanding the movement of other entities is critical.

Lidar Sensors: Lidar, or Light Detection and Ranging, is a sensor technology that uses laser pulses to measure distances by timing the reflection of light off objects. By scanning the environment with rapid laser pulses, lidar creates highly accurate, detailed 3D maps of the surroundings. This makes it an essential tool for simultaneous localization and mapping (SLAM), where the robot builds a map of an unknown environment while keeping track of its location within that map. Lidar is known for its precision and ability to function well in various lighting conditions, though it can be less effective in rain, snow, or fog, where water droplets can scatter the laser beams. Despite being an expensive technology, lidar is favored in autonomous navigation due to its accuracy and reliability in complex environments.

Faction’s self-driving delivery cars rely on a complex array of sensors, including GNSS and Point One’s RTK network, to safely navigate their routes. PointOne Nav


Real-time kinematic (RTK) relies on known base stations with fixed positions to correct any errors in GNSS receiver positioning estimates. PointOne Nav

Ultrasonic Sensors: Ultrasonic sensors function by emitting high-frequency sound waves that bounce off nearby objects, with the sensor measuring the time it takes for the echo to return. This allows the robot to calculate the distance to objects and obstacles in its path. These sensors are particularly useful for short-range detection and are often employed in slow, close-range activities such as navigating within tight spaces like warehouse aisles, or for precise maneuvers like docking or backing up. Ultrasonic sensors are cost-effective and work well in a variety of conditions, but their limited range and slower response time compared to lidar and cameras mean they are best suited for specific, controlled environments where high precision at close proximity is required.
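Several of the sensors above reduce to simple physics. The sketch below covers three of them:

- Time-of-flight ranging. Lidar uses the speed of light; ultrasonic sensors use the speed of sound.
- Radar’s Doppler relation between frequency shift and radial velocity.
- Pinhole back-projection of a camera pixel with known depth into a 3-D point.

The function names, the 77 GHz carrier, and the camera intrinsics are illustrative assumptions. The speed-of-sound formula is a common linear approximation for dry air.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s) near room temperature."""
    return 331.3 + 0.606 * temp_c

def tof_range(round_trip_s, wave_speed):
    """Time-of-flight range: the pulse travels out and back, so halve the path."""
    return wave_speed * round_trip_s / 2.0

def radar_radial_velocity(doppler_shift_hz, carrier_hz):
    """Radial velocity from a Doppler shift (positive shift = closing target)."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)

def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole model: pixel (u, v) at a known depth -> 3-D point in the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)

# Lidar: a 100 ns round trip is roughly 15 m.
print(round(tof_range(100e-9, SPEED_OF_LIGHT), 2))              # 14.99
# Ultrasonic at 20 C: a 5.83 ms echo is about 1 m.
print(round(tof_range(5.83e-3, speed_of_sound(20.0)), 2))       # 1.0
# 77 GHz radar: a shift of about 5.1 kHz corresponds to roughly 10 m/s.
print(round(radar_radial_velocity(5137.0, 77e9), 1))            # 10.0
# Camera with hypothetical intrinsics fx = fy = 600 px, principal point (320, 240).
print(back_project(920, 240, 2.0, 600.0, 600.0, 320.0, 240.0))  # (2.0, 0.0, 2.0)
```

The camera case also shows why cameras need so much processing. The math is trivial once depth is known, but recovering depth from 2-D images is the hard part.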

The baseline technology used for absolute positioning starts with GNSS (the term that includes GPS and other satellite systems like GLONASS, Galileo, and BeiDou). Given that GNSS is affected by atmospheric conditions and satellite inconsistencies, it can give a position solution that is off by many meters. For AMRs that require more precise navigation, this is not good enough. This has driven the emergence of GNSS corrections, a technology that narrows this error to as little as one centimeter.

RTK: Real-time kinematic (RTK) uses a network of base stations with known positions as reference points for correcting GNSS receiver location estimates. As long as the AMR is within 50 kilometers of a base station and has a reliable communication link, RTK can reliably provide 1–2-centimeter accuracy.

SSR or PPP-RTK: State Space Representation (SSR), also sometimes called Precise Point Positioning RTK (PPP-RTK), uses information from the base station network. Instead of sending corrections directly from a local base station, however, it models the errors across a wide geographical area. The result is broader coverage that extends far beyond 50 km from a base station, but accuracy drops to 3-10 centimeters or more depending on the density and quality of the network.
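The core idea behind base-station corrections can be shown with a deliberately simplified, position-domain differential correction. The base station compares its GNSS solution to its surveyed position, and the rover subtracts the same error. Real RTK works on per-satellite carrier-phase measurements and is far more involved, so treat this only as an illustration of why a nearby base helps. The model assumes both receivers share the same errors, and that assumption holds less well with distance. Coordinates are local east/north/up in meters, the `Vec3` type and `correct_rover` helper are hypothetical, and the 50 km cutoff mirrors the RTK figure quoted above.

```python
import math
from dataclasses import dataclass

RTK_MAX_BASELINE_M = 50_000.0  # rule-of-thumb RTK range quoted in the text

@dataclass
class Vec3:
    """Hypothetical local east/north/up position in meters."""
    e: float
    n: float
    u: float

    def __sub__(self, other):
        return Vec3(self.e - other.e, self.n - other.n, self.u - other.u)

    def norm(self):
        return math.sqrt(self.e ** 2 + self.n ** 2 + self.u ** 2)

def correct_rover(rover_measured, base_measured, base_surveyed):
    """Remove the error observed at the base from the rover's GNSS solution.
    Assumes both receivers see the same atmospheric and satellite errors."""
    if (rover_measured - base_measured).norm() > RTK_MAX_BASELINE_M:
        raise ValueError("baseline too long for this simple correction model")
    error = base_measured - base_surveyed  # what GNSS got wrong at the base
    return rover_measured - error

# Hypothetical example: both receivers share the same bias.
base_surveyed = Vec3(0.0, 0.0, 0.0)
base_measured = Vec3(2.4, 1.1, -0.5)
rover_measured = Vec3(1002.4, 501.1, 9.5)
corrected = correct_rover(rover_measured, base_measured, base_surveyed)
print(round(corrected.e, 3), round(corrected.n, 3), round(corrected.u, 3))  # 1000.0 500.0 10.0
```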

While these two approaches work exceptionally well where GNSS signals are available (generally open sky), many AMRs will travel away from the open sky, where there is an obstruction between the GNSS receiver on the AMR and the sky. This can happen in tunnels, parking garages, orchards, and urban environments. This is where Inertial Navigation Systems (INS) come into play with their IMUs and sensor fusion software.

IMU: An inertial measurement unit combines accelerometers, gyroscopes, and sometimes magnetometers to measure a system’s linear acceleration, angular velocity, and magnetic field strength, respectively. This is crucial data that enables an INS to determine the position, velocity, and orientation of an object relative to a starting point in real time.

The history of the IMU dates back to the early 20th century, with its roots in the development of gyroscopic devices used in navigation systems for ships and aircraft. The first practical IMUs were developed during World War II, primarily for use in missile guidance systems and later in the space program. The Apollo missions, for example, relied heavily on IMUs for navigation in space, where traditional navigation methods were not feasible. Over the decades, IMU technology has advanced significantly, driven by the miniaturization of electronic components and the advent of Micro-Electro-Mechanical Systems (MEMS) technology in the late 20th century. This evolution has led to more compact, affordable, and accurate IMUs, enabling their integration into a wide range of consumer electronics, automotive systems, and industrial applications today.
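Dead reckoning from an IMU boils down to integrating acceleration twice. That is also why it drifts: any small, uncorrected accelerometer bias grows roughly quadratically in position. The 1-D sketch below uses simple semi-implicit Euler integration with a hypothetical 0.1 m/s² bias. A real INS also tracks orientation from the gyroscopes and removes gravity, which this sketch omits.

```python
def dead_reckon(accels, dt, p0=0.0, v0=0.0):
    """Integrate 1-D acceleration samples into velocity and position."""
    p, v = p0, v0
    for a in accels:
        v += a * dt  # acceleration -> velocity
        p += v * dt  # velocity -> position
    return p, v

dt = 0.01                    # 100 Hz IMU
true_accels = [0.0] * 1000   # robot actually stationary for 10 s
bias = 0.1                   # hypothetical uncorrected accelerometer bias, m/s^2
measured = [a + bias for a in true_accels]

p_true, _ = dead_reckon(true_accels, dt)
p_est, _ = dead_reckon(measured, dt)
# Error is roughly 0.5 * bias * t^2, about 5 m after 10 s.
print(f"position error after 10 s: {p_est - p_true:.1f} m")
```

This growing error is exactly what sensor fusion and periodic GNSS fixes are meant to bound.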

Sensor Fusion: Sensor fusion software is responsible for combining data from the IMU and other sensors to create a cohesive and accurate understanding of an AMR’s absolute location when GNSS is not available. The most basic implementations “fill in the gaps” in real time, between when the GNSS signal is dropped and when it is picked back up again by the AMR. The accuracy of sensor fusion software depends on several factors, including the quality and calibration of the sensors involved, the algorithms used for fusion, and the specific application or environment in which it is deployed. More sophisticated sensor fusion software can cross-correlate different sensor modalities, achieving greater positional accuracy than any one of the sensors could achieve working alone.
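One common way to “fill in the gaps” is a Kalman filter that predicts with IMU data and corrects with GNSS whenever a fix is available. The 1-D sketch below is illustrative only. The noise values, the 10 Hz rate, and the synthetic 2-second outage are assumptions. Production sensor fusion runs in 3-D with orientation, sensor biases, and many more states.

```python
import numpy as np

def fuse(accels, fixes, dt, accel_std=0.05, gnss_std=0.02):
    """1-D Kalman filter: IMU acceleration drives prediction, GNSS fixes correct it.
    `fixes` holds a position per step, or None during a GNSS outage."""
    x = np.zeros(2)                        # state: [position, velocity]
    P = np.eye(2)                          # state covariance (uncertain start)
    F = np.array([[1.0, dt], [0.0, 1.0]])  # constant-velocity transition
    B = np.array([0.5 * dt ** 2, dt])      # how acceleration enters the state
    Q = np.outer(B, B) * accel_std ** 2    # process noise from IMU noise
    H = np.array([[1.0, 0.0]])             # GNSS observes position only
    R = np.array([[gnss_std ** 2]])
    history = []
    for a, z in zip(accels, fixes):
        # Predict (dead reckoning with the IMU).
        x = F @ x + B * a
        P = F @ P @ F.T + Q
        if z is not None:
            # Correct with the GNSS fix.
            y = np.array([z]) - H @ x
            S = H @ P @ H.T + R
            K = P @ H.T @ np.linalg.inv(S)
            x = x + K @ y
            P = (np.eye(2) - K @ H) @ P
        history.append((x[0], P[0, 0]))    # position estimate and its variance
    return history

# Synthetic run: robot moving at 1 m/s, GNSS lost for steps 50 through 69.
dt, steps = 0.1, 100
accels = [0.0] * steps
fixes = [None if 50 <= k < 70 else (k + 1) * dt for k in range(steps)]
history = fuse(accels, fixes, dt)
print(f"variance before outage {history[49][1]:.2e}, end of outage {history[69][1]:.2e}")
print(f"final position {history[-1][0]:.3f} m (truth {steps * dt:.1f} m)")
```

During the outage, the position variance grows with each prediction step. Once fixes return, the filter pulls the estimate back toward the GNSS solution.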

Choosing the best RTK network for your autonomous robots

RTK for GNSS provides a highly accurate source of absolute location for autonomous robots. Without RTK, many robotics applications simply are not possible or practical. From construction survey rovers to autonomous delivery drones and autonomous agriculture tools, numerous AMRs depend on the centimeter-accurate absolute positioning that only RTK can provide. That said, an RTK solution is only as good as the network behind it. Consistently reliable corrections require a highly dense network of base stations so that receivers are always within close enough range for accurate error corrections. The larger the network, the easier it is to get corrections for AMRs from anywhere. Density is not the only factor, however. Networks are highly complicated real-time systems and require professional monitoring, surveying, and integrity checking to ensure the data being sent to the AMR is accurate and reliable.
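To illustrate why network density matters, the sketch below picks the nearest base station by great-circle (haversine) distance. It falls back to an SSR-style correction when no base is within the roughly 50 km RTK range cited earlier. The station IDs, coordinates, and the `choose_correction` helper are hypothetical. A real corrections client would also weigh station health and integrity data, not distance alone.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two lat/lon points, in kilometers."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def choose_correction(robot, stations, rtk_limit_km=50.0):
    """Return ("RTK", id, km) if the nearest base is in range, else ("SSR", id, km)."""
    best_id, best_km = None, math.inf
    for station_id, (lat, lon) in stations.items():
        d = haversine_km(robot[0], robot[1], lat, lon)
        if d < best_km:
            best_id, best_km = station_id, d
    mode = "RTK" if best_km <= rtk_limit_km else "SSR"
    return (mode, best_id, best_km)

# Hypothetical base stations (lat, lon) and robot positions.
stations = {"BASE_A": (37.00, -122.00), "BASE_B": (37.50, -121.50)}
print(choose_correction((37.10, -122.05), stations))  # nearest is BASE_A, about 12 km -> RTK
print(choose_correction((39.00, -120.00), stations))  # more than 50 km from both -> SSR
```

The denser the network, the more often the first branch applies.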

What does all of this mean for the developers of autonomous robots?

At least where outdoor applications are concerned, no AMR is complete without an RTK-powered GNSS receiver. For the most accurate solution possible, developers should rely on the densest and most reliable RTK network. And where robots must move frequently in and out of ideal GNSS signal environments, such as for a self-driving delivery vehicle, RTK combined with an IMU provides the most comprehensive source of absolute positioning available.

No two autonomous robotics applications are the same, and each unique setup requires its own mix of relative and absolute positioning information. For the outdoor AMRs of tomorrow, however, GNSS with a robust RTK corrections network is an essential component of the sensor stack. RR

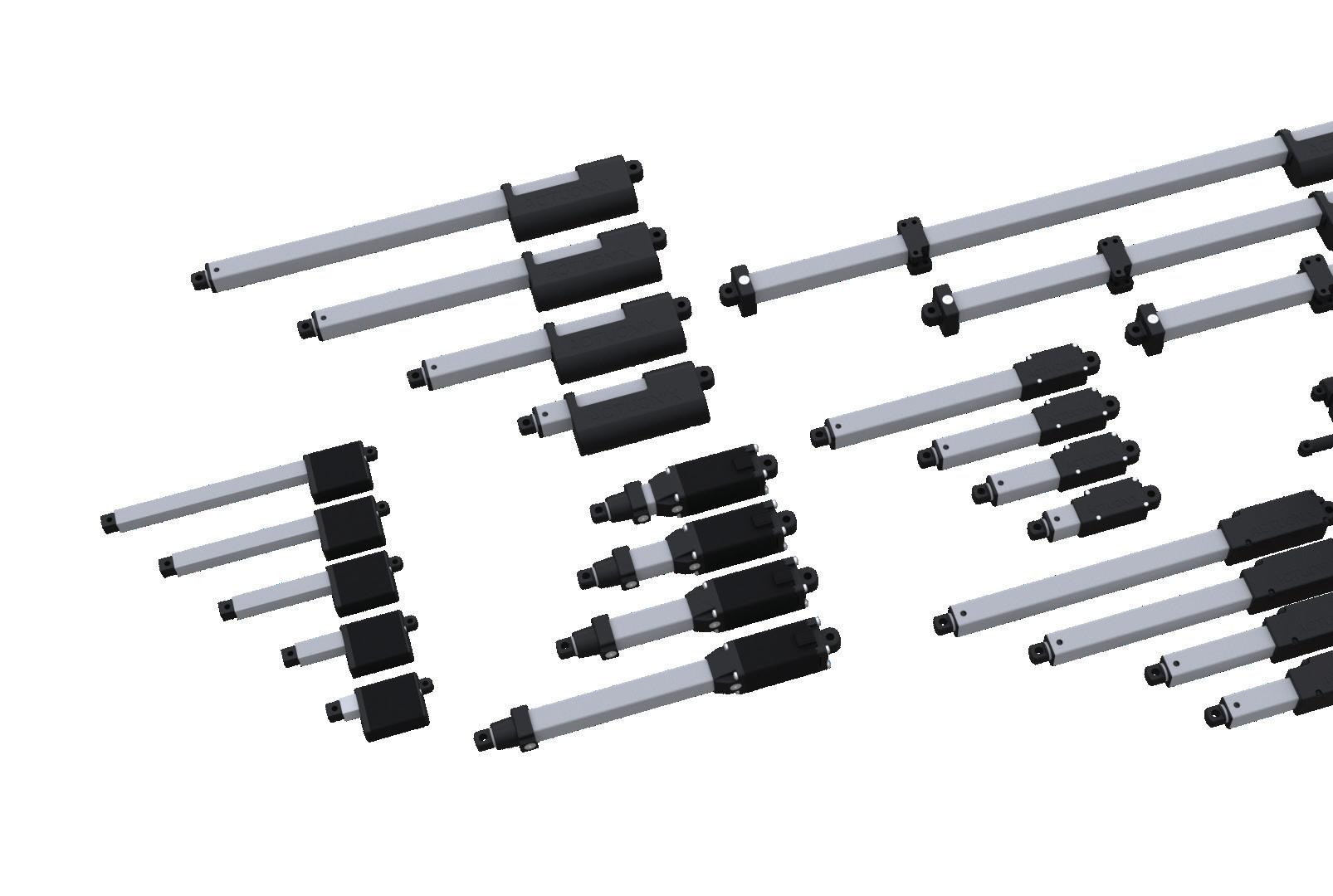

Actuonix Motion Devices offers a diverse range of micro linear actuators and linear servos, ideal for precision motion in tight spaces. Their products are used across sectors such as robotics, automotive, aerospace, and medical, providing reliable and customizable solutions. With a focus on innovation, Actuonix excels at in-house design and engineering, creating unique actuator models that meet specific application requirements.

In addition to their extensive selection of stroke lengths, force ratings, and control options, Actuonix is dedicated to excellent customer support. They offer valuable resources and technical expertise to help with seamless product integration. This commitment to quality and service makes Actuonix a trusted partner for motion control solutions in a wide variety of industries.

Unit 201-1753 Sean Heights Saanichton, BC Canada V8M 0B3

www.actuonix.com | 1-888-225-9198

Actuonix Motion Devices

Selecting the Optimal Washer

• Flat: Generally used for load distribution

• Tab/Lock: Designed to effectively lock an assembly into place

• Finishing: Often found on consumer products

• Wave: For obtaining loads when the load is static or the working range is small

• Belleville: Delivers the highest load capacity of all the spring washers

• Fender: Distributes a load evenly across a large surface area

• Shim Stacks: Ideal for simple and complex applications

Boker’s Inc. 3104 Snelling Avenue Minneapolis, MN 55406-1937

Phone: 612-729-9365

TOLL-FREE: 800-927-4377

(in the US & Canada) bokers.com

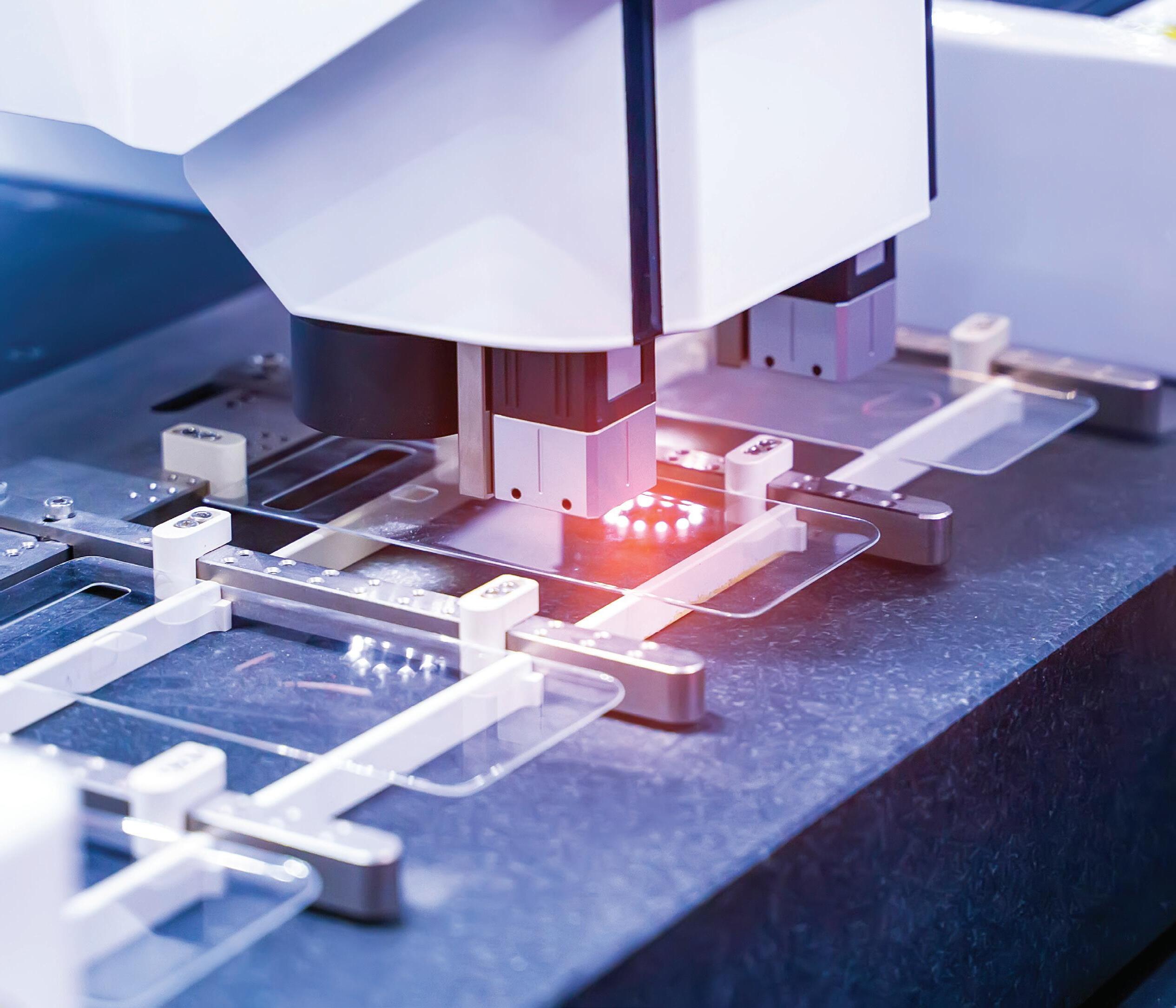

Canon U.S.A., Inc.

DC Brushless Servo Motors

With today’s increasing demand for automation and robotics across industries, engineers are challenged to design unique and innovative machines that differentiate them from their competitors. Within motion control systems, flexible integration, space savings, and light weight are the key requirements for designing a successful mechanism.

Canon’s new high-torque-density, compact, and lightweight DC brushless servo motors are designed to enable innovative designs. Our custom capabilities can help optimize your next design.

We are committed to providing technological advantages for your success.

Canon U.S.A., Inc.

Motion Control Products

3300 North First Street San Jose, CA, 95134

408-468-2320 www.usa.canon.com


CGI Inc.

Advanced Products for Robotics and Automation

At CGI we serve a wide array of industries including medical, robotics, aerospace, defense, semiconductor, industrial automation, motion control, and many others. Our core business is manufacturing precision motion control solutions.

CGI’s diverse customer base and wide range of applications have earned us a reputation for quality, reliability, and flexibility. One of the distinct competitive advantages we are able to provide our customers is an engineering team that is knowledgeable and easy to work with. CGI is certified to ISO 9001 and ISO 13485 quality management systems. In addition, we are FDA and AS9100 compliant. Our unique quality control environment is woven into the fabric of our manufacturing facility. We work daily with customers who demand both precision and rapid turnarounds.

ISO QUALITY MANAGEMENT SYSTEMS: ISO 9001 • ISO 13485 • AS9100 • ITAR

SIX SIGMA AND LEAN PRACTICES ARE EMBRACED DAILY WITHIN THE CULTURE


Low Friction Material Fabrication For Industrial Automation

Low-friction polymers are important for automation applications as they can reduce wear and energy consumption, improve efficiency, and extend the lifespan of equipment. PTFE, UHMW, POM, PEEK, Nylon, and Polyimide films are often used in various components of automated systems, including insulators, bearing liners, slides, gears, and other moving parts. CS Hyde supplies various material types, thicknesses, adhesive options, and specialized converting capabilities to create material solutions for friction-, impact-, or abrasion-related issues that arise in the fast-moving environment of industrial automation. Common applications include wear strips, conveyor liners, bumper plates, and wire and cable wrapping.

CS Hyde Company www.cshyde.com

39655 N. IL Rt. 83 Lake Villa, IL 60046 800-461-4161


DigiKey www.digikey.com

701 Brooks Avenue South, Thief River Falls, MN 56701 USA

sales@digikey.com • 1-800-344-4539

Omron’s TM Collaborative Robots

Increase production and reduce worker fatigue with Omron Automation’s TM Collaborative Robots. Repetitive tasks such as pick-and-place, inspection, machine tending, assembly, and more are easily handled by the 6-axis TM cobot. Utilizing graphical programming with the TMFlow software, hand-guided position recording, and a built-in vision system, users can set up simple applications in mere minutes. Omron’s TM Collaborative Robots are ISO 10218-1:2011 and ISO/TS 15066 compliant and include features like rapid changeover using TMVision and Landmark, advanced collaborative control, and external camera support.

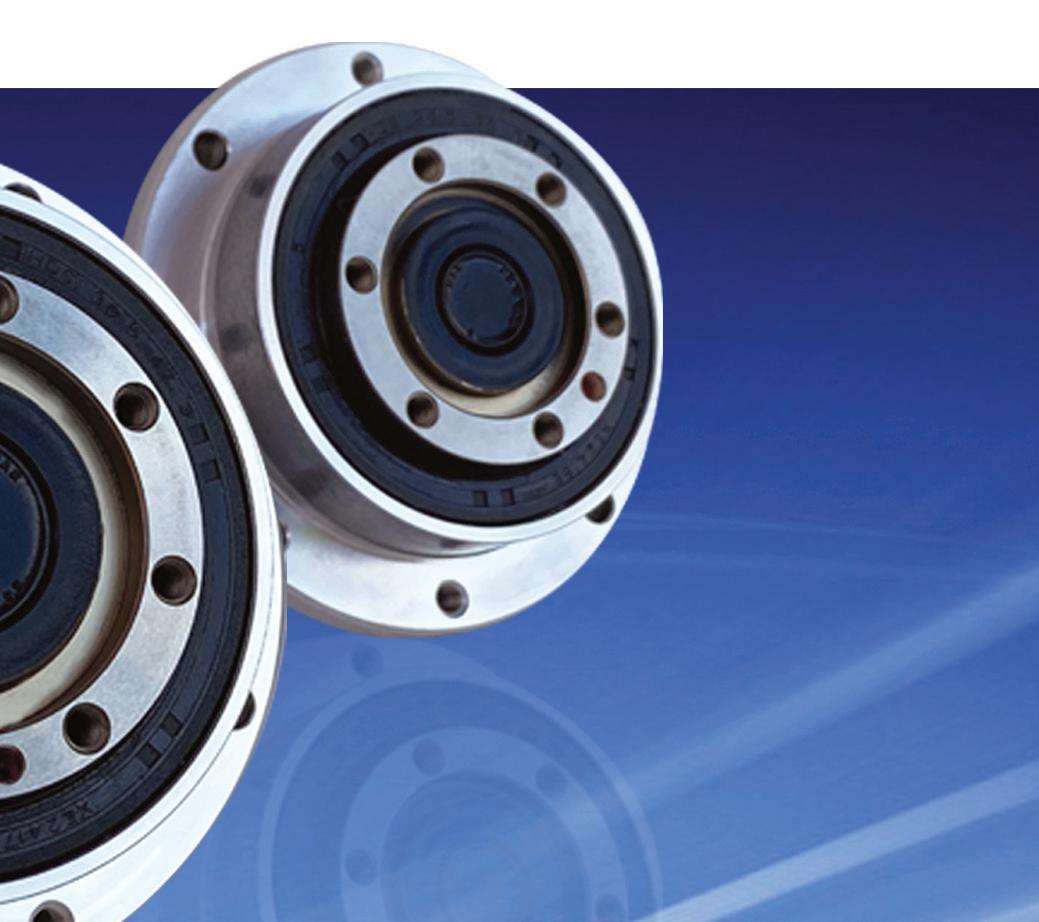

Extra Large, Hollow Shaft Speed Reducer

The new FBS Series gear unit features an extra-large hollow shaft with a compact outer diameter that is ideal for robots and machines requiring complex cabling to pass through the axis of rotation. It includes large cross-roller bearings, enabling the load to be mounted directly to the output, and is available in two sizes (25, 32) and three reduction ratios (30:1, 50:1, 100:1).

Scan the QR code for more information on the FBS Series Hollow-Shaft Gear Unit.


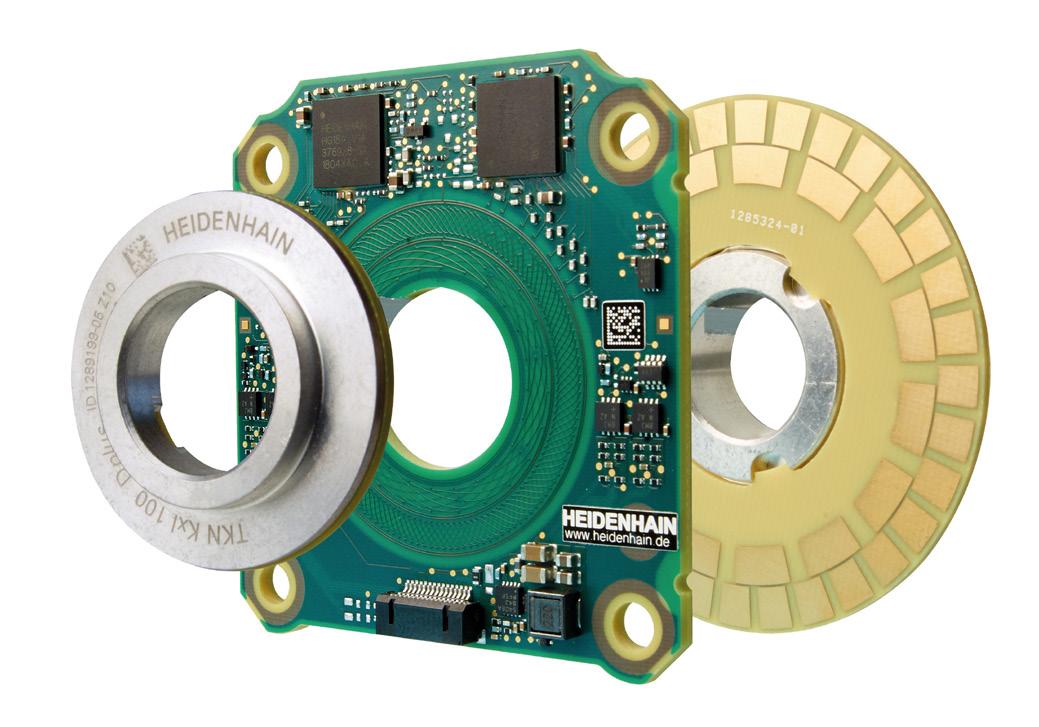

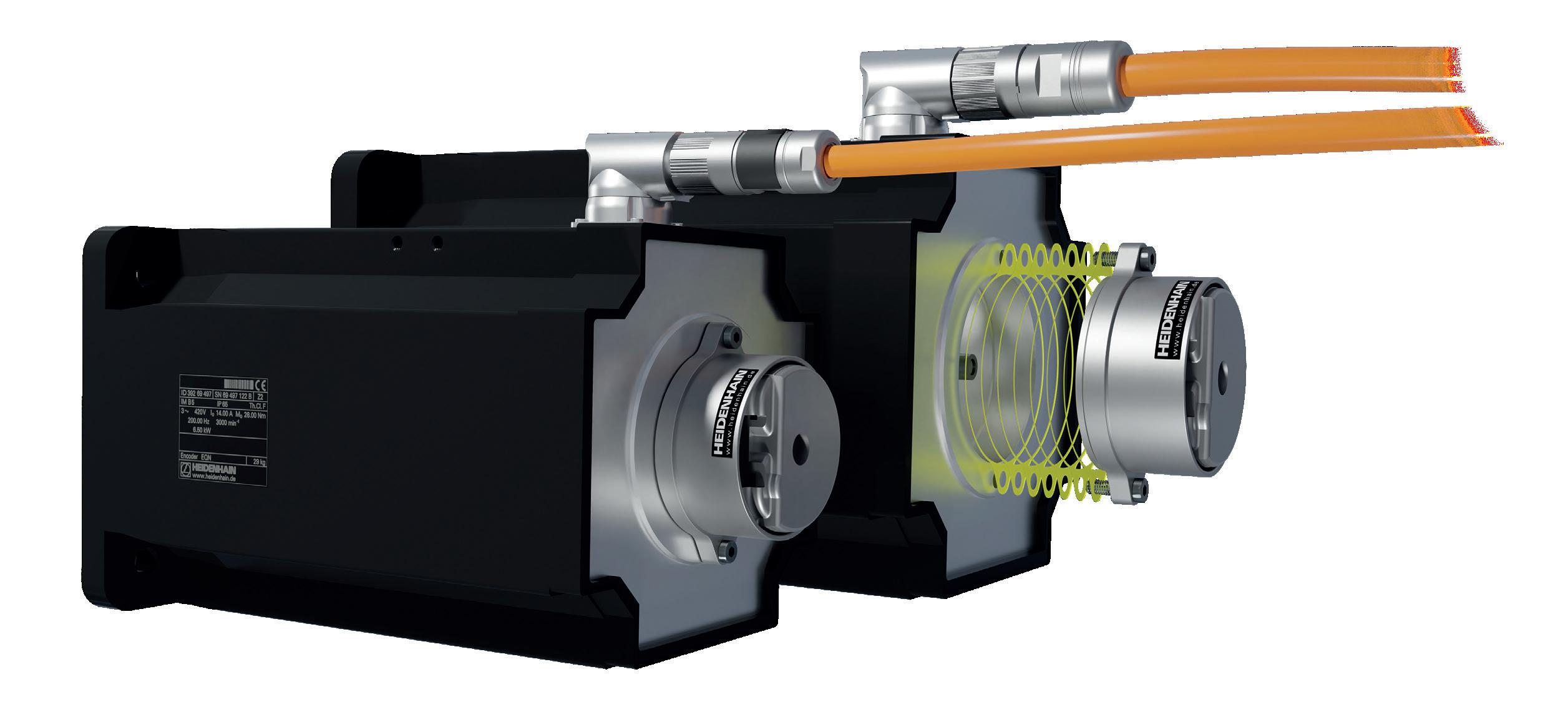

Absolute rotary encoders without integral bearing

The KCI 120 Dplus unites two rotary encoders in a single device, offering high reliability in a rugged and extremely compact design, and its low 20 mm profile is well suited to tight installation spaces. The inductive scanning method is particularly resistant to contamination and magnetic fields. The design permits high vibration loads of up to 400 m/s² on the stator and 600 m/s² on the rotor. The device offers a mounting tolerance of ±0.3 mm for Encoder A and ±0.5 mm for Encoder B, and it is simply press-fit onto the motor shaft and output shaft for fast and easy installation.

The available functional safety (SIL 3) minimizes malfunctions and supports the safe operation of machines and automated systems.

HEIDENHAIN CORPORATION

333 East State Parkway Schaumburg, IL 60173

847-490-1191

www.heidenhain.us marketing01@heidenhain.com

Interconnect Solutions

LEMO® is the industry leader in the design and production of precise custom interconnect systems. LEMO® products are designed and manufactured according to rigorous and controlled processes. Inspection and traceability of products are systematically ensured in compliance with our standards. High-quality LEMO® Push-Pull connectors are used in a wide range of challenging application environments, such as medical, test & measurement, research, defense & military, information systems, aerospace & autonomous vehicles, robotics, automotive, industrial control, nuclear, broadcast & audio-video, and communications.

LEMO® has been designing precision connectors for over seven decades. With more than 90,000 product combinations, a range that continues to grow through customer-specific designs, LEMO® and its brands REDEL®, NORTHWIRE®, and COELVER® currently serve more than 100,000 customers in over 80 countries around the world.

Ph: 707.578.8811

E-mail: info_us@lemo.com Website: www.lemo.com/en


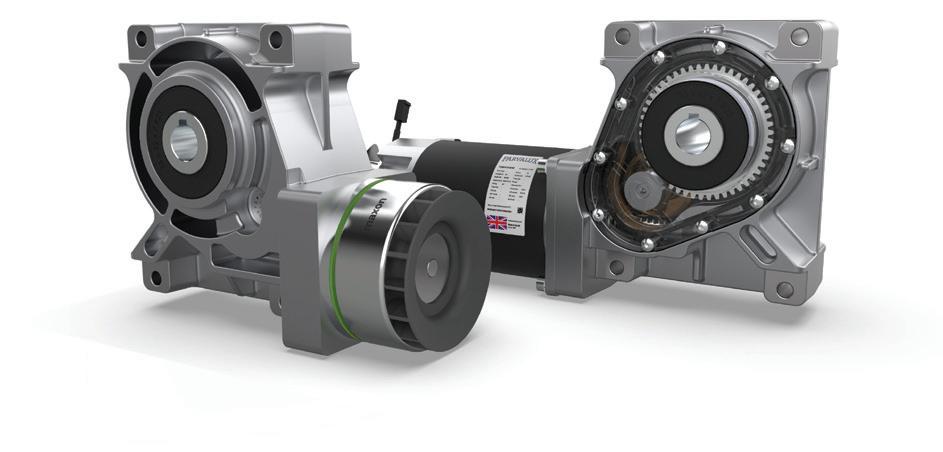

Why Do AGVs Need Reliable Motors?

Any automated system is only as good as its reliability. An automated guided vehicle (AGV) needs to do the job it is programmed to do quickly, efficiently, and without error. Because AGVs play an essential role in materials handling, their reliability matters, and it depends on the components used to build them, including robust motors. Motors for AGVs must be reliable and low-maintenance, and they must be able to operate 24/7 without loss of functionality.

The type of motor your AGV needs depends on the type and size of the vehicle and on how much weight it is expected to carry at any one time. A motor that is too small will not last long under the load, while one that is too big and powerful can cause functional problems of its own.
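To make the link between load and motor size concrete, here is a minimal back-of-the-envelope sketch. It assumes a simple model in which the drive motors must overcome rolling resistance, any floor incline, and the force needed to accelerate the vehicle, and it ignores drag and bearing losses. The function name and every number in the example (500 kg total mass, 0.015 rolling coefficient, 0.1 m wheels, 20:1 gearbox at 90% efficiency, 1.5 m/s top speed) are hypothetical, not Parvalux data.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def agv_motor_requirements(total_mass_kg, accel_mps2, incline_deg, rolling_coeff,
                           wheel_radius_m, gear_ratio, gear_efficiency,
                           n_drive_motors, top_speed_mps):
    """Estimate the torque (N·m) and speed (rpm) each drive motor must provide.

    Simple model: rolling resistance + grade resistance + force to accelerate.
    Ignores aerodynamic drag, bearing losses, and uneven load sharing.
    """
    theta = math.radians(incline_deg)
    tractive_force_n = (total_mass_kg * G * (rolling_coeff * math.cos(theta) + math.sin(theta))
                        + total_mass_kg * accel_mps2)
    wheel_torque_nm = tractive_force_n * wheel_radius_m  # total torque at the wheels
    torque_per_motor_nm = wheel_torque_nm / (gear_ratio * gear_efficiency * n_drive_motors)
    wheel_rpm = top_speed_mps / (2 * math.pi * wheel_radius_m) * 60
    motor_rpm = wheel_rpm * gear_ratio
    return torque_per_motor_nm, motor_rpm


# Hypothetical AGV: 500 kg including payload, two driven wheels, flat floor.
torque, rpm = agv_motor_requirements(
    total_mass_kg=500, accel_mps2=0.5, incline_deg=0, rolling_coeff=0.015,
    wheel_radius_m=0.1, gear_ratio=20, gear_efficiency=0.9,
    n_drive_motors=2, top_speed_mps=1.5)
print(f"Each motor: {torque:.2f} N·m at {rpm:.0f} rpm")
```

In this model the required torque scales linearly with total mass, which is why the expected payload drives motor choice. A common practice is to compare the steady-state load against a motor’s continuous rating and the acceleration case against its peak rating.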

Brushless DC motors are the recommended choice for AGVs. Compared with standard brushed DC motors, they are more powerful, quieter, and lower-maintenance, and they suffer less wear and tear. Using the appropriate motor for the equipment can reduce maintenance costs and lost revenue from downtime.

Parvalux offers an expanded selection of highly efficient brushless motors with high starting torque. If you need something more specific, our expert team can design and build custom brushless DC motors for AGVs used in industries all over the world.

www.parvalux.com sales.us@parvalux.com

Fully Automate Your Automation

The factory of the future demands higher output with increased flexibility, and cobots can’t do it alone. The Cobot Feeder from Applied Cobotics addresses these demands with high-mix, high-volume production while eliminating cobot downtime and alleviating staffing issues. The bottom-line result is increased output without increased labor.

The Cobot Feeder presents a worktable that adjusts vertically, synchronizing with each custom tray on the rack tower. A horizontal loader/unloader provides accurate and repeatable motion while positioning trays in front of the cobot. These features, along with the portable dunnage tray cart, make the Cobot Feeder from Applied Cobotics an essential complement to any collaborative automation system. With a 90-day ROI, small to medium-sized enterprises will quickly achieve lights-out manufacturing, increasing safety, quality, and profits.


Encoders You Can Count On

Motion control applications use encoders to translate the position or motion of a shaft or axle into analog or digital output signals. Applications that use a brushless DC (BLDC) motor also require an external system to commutate the motor, switching current between the stator windings according to rotor position. Quantum Devices’ encoders offer an all-in-one solution. BLDC motors are commonly used in applications such as industrial automation, transportation, aerospace, medical/healthcare, and consumer electronics. As BLDC motors have grown in popularity, so has the need for real-time position feedback.

Incremental and commutation encoders such as our QM35, QML35, and QM22 are ideal solutions for accurate position information. These commutation encoders allow engineers to simplify motor design and save their OEMs money by reducing assembly costs in high-volume manufacturing. Brushless motor manufacturers looking for commutating encoders can contact Quantum Devices’ engineers about customizing line counts, commutation, or bore sizes.
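To illustrate what a commutation encoder gives the motor drive, here is a minimal sketch of six-step (trapezoidal) commutation driven by three digital commutation signals spaced 120° apart, called U, V, and W here. The state sequence, the phase pair assigned to each state, and all names are hypothetical; the real mapping depends on the motor’s windings and on how the encoder is aligned to the rotor, and it is not taken from QM35, QML35, or QM22 documentation.

```python
# Valid commutation states in rotation order, packed as (U << 2) | (V << 1) | W.
# Exactly one bit changes between neighbouring states; 0b000 and 0b111 never occur.
HALL_SEQUENCE = [0b001, 0b011, 0b010, 0b110, 0b100, 0b101]

# Phase pair energized at each step: (phase driven high, phase driven low).
# This assignment is illustrative; a real motor needs its own aligned table.
PHASE_PAIRS = [("U", "V"), ("U", "W"), ("V", "W"), ("V", "U"), ("W", "U"), ("W", "V")]

COMMUTATION_TABLE = dict(zip(HALL_SEQUENCE, PHASE_PAIRS))


def commutation_state(u, v, w):
    """Pack three digital commutation signals (0 or 1) into one state value."""
    return (u << 2) | (v << 1) | w


def commutate(u, v, w, reverse=False):
    """Return (high_phase, low_phase) for the current commutation signals."""
    state = commutation_state(u, v, w)
    if state not in COMMUTATION_TABLE:
        raise ValueError(f"invalid commutation state {state:03b}")  # wiring or sensor fault
    high, low = COMMUTATION_TABLE[state]
    # Swapping which phase is driven high reverses the torque direction.
    return (low, high) if reverse else (high, low)


def step_direction(prev_state, curr_state):
    """+1 for one step forward in the sequence, -1 for one step back, 0 otherwise.

    `prev_state` must be a valid state; list.index raises ValueError otherwise.
    """
    i = HALL_SEQUENCE.index(prev_state)
    if HALL_SEQUENCE[(i + 1) % 6] == curr_state:
        return 1
    if HALL_SEQUENCE[(i - 1) % 6] == curr_state:
        return -1
    return 0
```

The same three signals serve two purposes in this sketch: `commutate` picks which windings to energize, and `step_direction` gives coarse speed and direction feedback from the order in which states change.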

Find your Renishaw motion control solution.

Encoders can measure linear, rotary, and partial arc motion by reporting incremental or absolute position. Position feedback from an encoder allows industrial machines to reliably control their moving axes. This control contributes to lower manufacturing time and cost. Discover how Renishaw’s different types of encoders can meet your industrial automation needs. Our high-accuracy, high-quality encoders offer superior reliability and easy installation, and they are suitable for even the harshest environments. Renishaw encoders feature a zero-wear, non-contact design. We offer incremental and absolute scales in a variety of materials. Our readheads provide position signals with low electrical noise and high resistance to vibration.

Renishaw, Inc.

1001 Wesemann Dr. West Dundee, IL, 60118

Phone: 847-286-9953

email: usa@renishaw.com website: www.renishaw.com
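The Renishaw text mentions incremental position reporting. As a generic illustration, not a description of Renishaw’s interface, a common form of incremental output is two square waves, A and B, 90° out of phase: the controller counts every edge and uses the order of the edges to determine direction. The sketch below is a minimal 4x quadrature decoder in software, assuming ideal digital A/B signals; the class name, the 1000-line resolution, and the direction convention are hypothetical. In practice, counter hardware in the motion controller does this decoding, and absolute encoders report position directly instead.

```python
# Step for each (previous AB, current AB) pair, indexed as (prev << 2) | curr,
# where AB = (A << 1) | B. "Forward" in this sketch is 00 -> 01 -> 11 -> 10 -> 00.
_QUAD_STEP = [
     0, +1, -1,  0,
    -1,  0,  0, +1,
    +1,  0,  0, -1,
     0, -1, +1,  0,
]


class QuadratureDecoder:
    """4x decoder for incremental A/B signals (a hypothetical software sketch)."""

    def __init__(self, lines_per_rev, a=0, b=0):
        self.counts_per_rev = 4 * lines_per_rev  # four edges per line with 4x decoding
        self.count = 0
        self.errors = 0  # transitions where A and B changed at once (a missed edge)
        self._prev = (a << 1) | b

    def update(self, a, b):
        """Feed one new sample of the A and B signals (each 0 or 1)."""
        curr = (a << 1) | b
        if (self._prev ^ curr) == 0b11:
            self.errors += 1  # direction is unknown, so the count is left unchanged
        self.count += _QUAD_STEP[(self._prev << 2) | curr]
        self._prev = curr

    @property
    def angle_deg(self):
        """Shaft angle implied by the current count, in degrees."""
        return 360.0 * self.count / self.counts_per_rev


# Usage: one full forward A/B cycle on a hypothetical 1000-line encoder.
dec = QuadratureDecoder(lines_per_rev=1000)
for a, b in [(0, 1), (1, 1), (1, 0), (0, 0)]:
    dec.update(a, b)
print(dec.count, dec.angle_deg)
```

Counting all four edges per line quadruples the resolution of the raw line count, which is why a decoder like this reports 4000 counts per revolution for 1000 lines.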

Compact, rugged motion sensing for any task

Explore Silicon Sensing’s range of gyroscopes, accelerometers and inertial systems delivering precise, compact and affordable solutions for any robotics environment.

Our products include:

• DMU11 - a proven, low cost, compact, six-degrees-of-freedom (6-DOF) inertial measurement unit delivering market-leading performance that is calibrated over its full rated temperature range.

• PinPoint® - a tiny gyro measuring only 5 mm x 6 mm yet delivering on performance, reliability, and price. Available in both flat and orthogonal mounts.

• CMS300 - a robust, compact gyro and dual-axis accelerometer combining precise performance with low power consumption. Available in both flat and orthogonal mount packages.

Silicon Sensing - Redefining inertial sensing.

AUTOMATED WAREHOUSE

From mobile robots and automated storage to picking, palletizing, and sortation systems, warehouse operators have a wide range of options to choose from. To get started or scale up with automation, end users need to evaluate their own processes and environments, find the best fit for their applications, and deploy and manage multiple systems.

Automated Warehouse Week will provide guidance, with expert insights into the evolving technologies, use cases, and business best practices.

REGISTER TODAY roboweeks.com

ROBOTICS ENGINEERING

Robotics Engineering Week features keynotes and panels, delivered by the leading minds in robotics and automation, addressing the most critical issues facing the commercial robotics developers of today.

ad index

Ryan Ashdown rashdown@wtwhmedia.com 216.316.6691

Jami Brownlee jbrownlee@wtwhmedia.com 224.760.1055

Mary Ann Cooke mcooke@wtwhmedia.com 781.710.4659

Jim Dempsey jdempsey@wtwhmedia.com 216.387.1916

Mike Francesconi mfrancesconi@wtwhmedia.com 630.488.9029

Jim Powers jpowers@wtwhmedia.com 312.925.7793

LEADERSHIP TEAM

Publisher Courtney Nagle cseel@wtwhmedia.com 440.523.1685

CEO Scott McCafferty smccafferty@wtwhmedia.com 310.279.3844 @SMMcCafferty

EVP Marshall Matheson mmatheson@wtwhmedia.com 805.895.3609 @mmatheson

CFO Ken Gradman kgradman@wtwhmedia.com 773-680-5955

Live & OnDemand Webinars

From mobile robots and automated storage to picking, palletizing, and sortation systems, warehouse operators have a wide range of options to choose from. Automated Warehouse Week will provide guidance, with expert insights into the evolving technologies, use cases, and business best practices.

HMC 2, EnDat 3 and Rotary Encoders

Get ready for digital manufacturing

Enhance your motors and drives with space-saving, economical, and high-performance position feedback. The future of digital manufacturing awaits you with new HEIDENHAIN products featuring the EnDat interface, such as EnDat 3 inductive and optical rotary encoders and the HMC 2 single-cable solution. You’ll enjoy reduced cabling, field-proven connectivity, higher bandwidth, and greater configurability through send lists and access levels.