Today’s electronic engineers are challenged by multiple factors. Research over the years has illustrated common trends that you, as engineers, deal with, including keeping your skills up-to-date, shrinking time-to-market windows, fewer engineers and smaller design teams for large projects, and evolving technological trends. The bottom line is that you must continually update your engineering knowledge base to be successful in your work.

Throughout 2023, we are presenting a series of online educational days where you can learn how to address specific design challenges, learn about new techniques, or just brush up your engineering skills. We are offering eight different Training Days. Each day will focus on helping you address a specific design challenge or problem. These are not company sales pitches! The focus is on helping you with your work.

AVAILABLE ON DEMAND

THERMAL MANAGEMENT

MAY 3RD

EMI/RFI/EMC

JUNE 14TH

DESIGNING FOR SUSTAINABILITY

AUGUST 9TH

BATTERY MANAGEMENT

SEPTEMBER 13TH

MOTOR DRIVES DESIGN

OCTOBER 11TH

IOT DESIGN / WIRELESS

NOVEMBER 8TH

ELECTRIC VEHICLE DESIGN

DECEMBER 6TH

5G / RF DESIGN

For more information and to register for these webinars, go to:

Perhaps you see the connection between origami and the IoT right away. It only occurred to me while watching a documentary the other night, opening my mind further to the symbiotic relationship between art and science.

The film “Between the Folds, The Science of Art, The Art of Science,” a 2008 documentary about origami, seems as far removed from 27 billion connected IoT devices (by 2025) as that 27 billion number appears to be from reality. The paper points connected the lines with such elegant purpose. While maybe not necessarily as elegant, I was reminded of the iconic graphic representations of the IoT — electrified lines moving from point to point around the globe.

In addition to the professional origami master artists profiled, the director also treated us to several scientists and mathematicians who had taken up the art form. One hyperrealist walked away from a successful physics career to challenge the physics of a folded piece of paper. I read another profile of an advanced mathematician and scientist who received a MacArthur Genius Award for computational origami research. I was fascinated by the mathematical tools resulting in folds fashioned into elaborate sculptures. Additionally, origami’s physical structure has consistently inspired innovation in engineering, from better heart stents to foldable equipment at NASA to the Kresling-Inspired Mechanical Switch (KIMS) that has the potential to introduce a new class of memory devices.

After watching the film, I asked my Japanese friend, an executive with a company providing AI operations tools for IT and DevOps teams, what is it about those billions of connected devices of the IoT that makes me think about origami?

She took about two minutes to nail it.

“IoT made previously invisible and unmeasurable things datapoints, just like you create points with previously flat paper,” she said. “And these data points and paper points connect different dimensions, and something new emerges, whether a new insight or a new three-dimensional shape.”

It’s true. I learned that origami evolved and became more complex with more folds, points, and creases, elevating to an art form along the way. Rather than following instructions to create the same things, origami artists are creating new, one-of-a-kind pieces. Now think of how products are traditionally made — with uniform configurations, which the user then adapts to the design and intent of the product. We shaped ourselves into a pre-defined piece. With IoT and the added layer of AI, we can now create one-of-a-kind pieces, just like that art origami. We aren’t limited to the original intent of a product. We make it our own. Whether it is quantum computing giving artists a new way to envision the world or the origami of the Internet of Things, art and science are more innovative together than apart. Those billions of connected devices may indeed make every “thing” smarter. What we can create, envision, and dream of because of the massive amount of data communicated between the points is what will allow us to sculpt our world intelligently.

Aimee Kalnoskas Editor-in-Chief

02 The Origami of the Internet of Things

06 What are emerging sensor technologies for Agriculture 4.0? Agriculture 4.0 is emerging and requires an expanded sensor suite.

09 Edge computing security: challenges and techniques

Addressing security for edge devices from the beginning must be an integral part of the design process.

12 How does the PICMG IoT.1 specification for smart IoT sensors work?

The recently released IoT.1 specification defines a firmware interface and low-level data model providing vendor-independent configuration of smart sensors and effectors.

17 Private 5G networks unlock IoT applications

As more industries rely on wireless devices, sensors, and artificial intelligence to connect people and machines, private networks bring the potential to enable more advanced IoT applications while increasing security, bandwidth, and speed.

20 Flexible connectivity lets IoT flourish

Designers have choices when it comes to creating new IoT designs; connectivity choices depend on the design’s constraints. As with any engineering decision, the learning curve and time-to-market constraints can dictate OEMing an existing solution to the problem.

24 Single-Pair Ethernet: simplifying your Ethernet connectivity from sensor to cloud

A physical layer for Industrial Ethernet, Single-Pair Ethernet combines high data rates with a lightweight cable design, streamlining communication across automation levels.

28 Test tool simplifies and automates LoRaWAN certification

LoRaWAN certification is the solution for successfully scaling long-range, low-power end devices for IoT. The LoRaWAN certification test tool (LCTT) accelerates time to market through test automation.

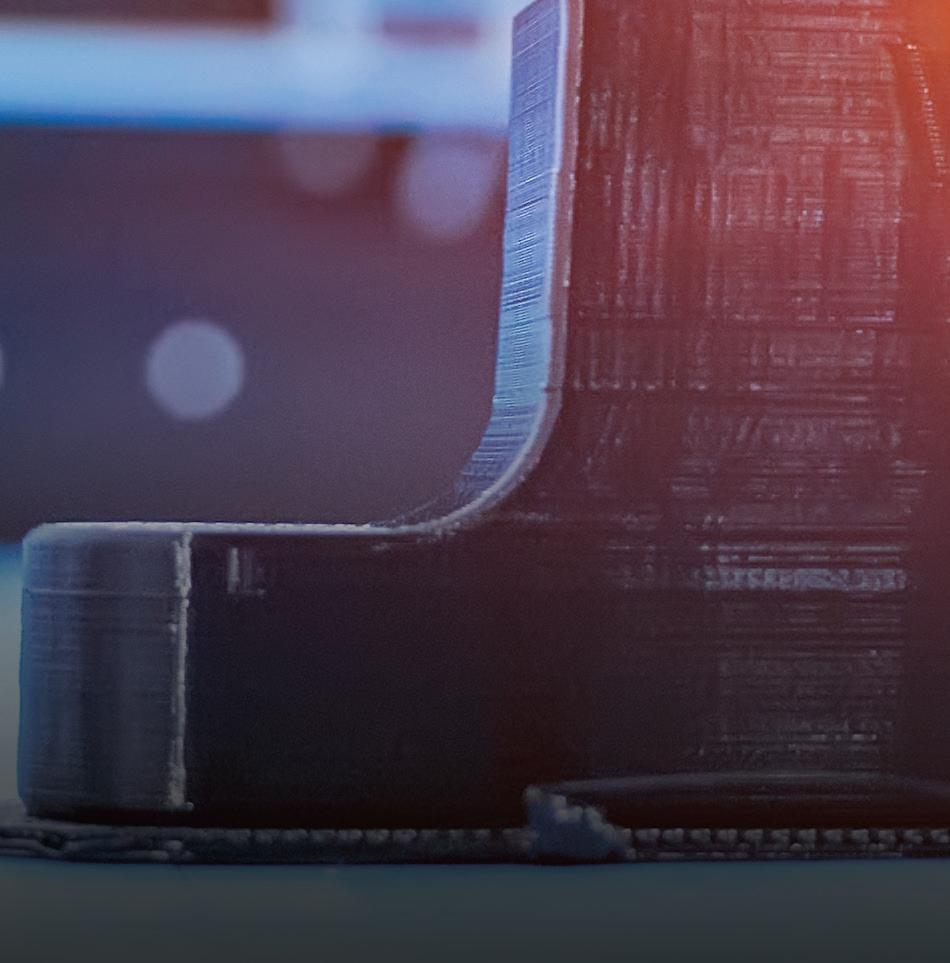

32 The future of PCBs in the world driven by IoT

The traditional PCB design methods may not support the functionalities offered by an IoT product.

34 How does Matter work as middleware for IoT devices?

Matter has been called an interoperable application layer solution. The Connectivity Standards Alliance, the same source that oversees the development of Matter, refers to it as a protocol.

37 IoT: how 5G differs from LTE

5G extends its scope beyond consumer to many new vertical and enterprise markets. Thanks to its flexibility and improved performance, 5G opens the door to many industrial applications.

EDITORIAL

VP, Editorial Director

Paul J. Heney pheney@wtwhmedia.com @wtwh_paulheney

Editor-in-Chief

Aimee Kalnoskas akalnoskas@wtwhmedia.com @eeworld_aimee

Senior Technical Editor Martin Rowe mrowe@wtwhmedia.com @measurementblue

Associate Editor Emma Lutjen elutjen@wtwhmedia.com

Executive Editor Lisa Eitel leitel@wtwhmedia.com @dw_LisaEitel

Senior Editor Miles Budimir mbudimir@wtwhmedia.com @dw_Motion

Senior Editor Mary Gannon mgannon@wtwhmedia.com @dw_MaryGannon

Managing Editor Mike Santora msantora@wtwhmedia.com @dw_MikeSantora

VIDEOGRAPHY SERVICES

Videographer Garrett McCafferty gmccafferty@wtwhmedia.com

Videographer Kara Singleton ksingleton@wtwhmedia.com

CREATIVE SERVICES & PRINT PRODUCTION

VP, Creative Services Mark Rook mrook@wtwhmedia.com @wtwh_graphics

Senior Art Director Matthew Claney mclaney@wtwhmedia.com @wtwh_designer

Senior Graphic Designer Allison Washko awashko@wtwhmedia.com @wtwh_allison

Graphic Designer Mariel Evans mevans@wtwhmedia.com @wtwh_mariel

Director, Audience Development Bruce Sprague bsprague@wtwhmedia.com

PRODUCTION SERVICES

Customer Service Manager Stephanie Hulett shulett@wtwhmedia.com

Customer Service Representative Tracy Powers tpowers@wtwhmedia.com

Customer Service Representative JoAnn Martin jmartin@wtwhmedia.com

Customer Service Representative

Renee Massey-Linston renee@wtwhmedia.com

Customer Service Representative Trinidy Longgood tlonggood@wtwhmedia.com

MARKETING

VP, Digital Marketing Virginia Goulding vgoulding@wtwhmedia.com @wtwh_virginia

Digital Marketing Coordinator

Francesca Barrett fbarrett@wtwhmedia.com @Francesca_WTWH

Digital Design Manager Samantha King sking@wtwhmedia.com

Marketing Graphic Designer Hannah Bragg hbragg@wtwhmedia.com

Webinar Manager Matt Boblett mboblett@wtwhmedia.com

Webinar Coordinator

Halle Kirsh hkirsh@wtwhmedia.com

Events Manager

Jen Osborne jkolasky@wtwhmedia.com @wtwh_Jen

Events Manager

Brittany Belko bbelko@wtwhmedia.com

Event Marketing Specialist Olivia Zemanek ozemanek@wtwhmedia.com

Event Coordinator Alexis Ferenczy aferenczy@wtwhmedia.com

Web Development Manager B. David Miyares dmiyares@wtwhmedia.com @wtwh_WebDave

Senior Digital Media Manager Patrick Curran pcurran@wtwhmedia.com @wtwhseopatrick

Front End Developer Melissa Annand mannand@wtwhmedia.com

Software Engineer David Bozentka dbozentka@wtwhmedia.com

Digital Production Manager Reggie Hall rhall@wtwhmedia.com

Digital Production Specialist Nicole Lender nlender@wtwhmedia.com

Digital Production Specialist Elise Ondak eondak@wtwhmedia.com

Digital Production Specialist Nicole Johnson njohnson@wtwhmedia.com

FINANCE

Controller Brian Korsberg bkorsberg@wtwhmedia.com

Accounts Receivable Specialist Jamila Milton jmilton@wtwhmedia.com

WTWH Media, LLC

1111 Superior Ave., Suite 2600 Cleveland, OH 44114

Ph: 888.543.2447

FAX: 888.543.2447

DESIGN WORLD does not pass judgment on subjects of controversy nor enter into dispute with or between any individuals or organizations. DESIGN WORLD is also an independent forum for the expression of opinions relevant to industry issues. Letters to the editor and by-lined articles express the views of the author and not necessarily of the publisher or the publication. Every effort is made to provide accurate information; however, publisher assumes no responsibility for accuracy of submitted advertising and editorial information. Non-commissioned articles and news releases cannot be acknowledged. Unsolicited materials cannot be returned nor will this organization assume responsibility for their care.

DESIGN WORLD does not endorse any products, programs or services of advertisers or editorial contributors. Copyright© 2023 by WTWH Media, LLC. No part of this publication may be reproduced in any form or by any means, electronic or mechanical, or by recording, or by any information storage or retrieval system, without written permission from the publisher.

SUBSCRIPTION RATES: Free and controlled circulation to qualified subscribers. Non-qualified persons may subscribe at the following rates: U.S. and possessions: 1 year: $125; 2 years: $200; 3 years: $275; Canadian and foreign, 1 year: $195; only US funds are accepted. Single copies $15 each. Subscriptions are prepaid, and check or money orders only.

SUBSCRIBER SERVICES: To order a subscription or change your address, please email: designworld@omeda.com, or visit our web site at www.designworldonline.com

POSTMASTER: Send address changes to: Design World, 1111 Superior Ave., Suite 2600, Cleveland, OH 44114

By Jeff Shepard

Agriculture 4.0 refers to systems that employ drones, robotics, the Internet of Things (IoT), vertical farms, artificial intelligence (AI), renewable energy, and advanced sensor methodologies. Agriculture 4.0 is similar to Industry 4.0 in some ways: where Industry 4.0 is designed to support automation and mass customization of production processes, Agriculture 4.0 is expected to support autonomous operations and mass customization of farming practices across microenvironments.

By integrating digital technologies into farming, agricultural operations can target the resources needed to increase yields, reduce costs, and minimize crop damage as well as water, fuel, and fertilizer usage. This FAQ looks at sensor technologies under development for Agriculture 4.0, including wearables for plants and hyperspectral imaging, the EU’s Agricultural Interoperability and Analysis System program, and the security challenges related to wireless sensor networks and the Internet of Things in Agriculture 4.0.

Graphene and fiber optics are two technologies used to develop wearable sensors for plants. Graphene sensors can measure the time it takes for different crops to move water from the roots to the lower and upper leaves. Initially, researchers are using these sensors to help develop plants that use water more efficiently. In the longer term, these graphene sensors on tape (also referred to as ‘plant tattoos’) are expected to support the design of inexpensive, high-performance sensors for Agriculture 4.0 applications (Figure 1) and help improve the efficiency of irrigation systems. The process used to make the sensors can produce devices that are several millimeters across with features as small as 5 μm. The small feature sizes increase the sensitivity of the sensors. The conductivity of the graphene oxide in these sensors changes in the presence of water vapor, enabling the measurement of transpiration (the release of water vapor) from a leaf.

Fiber Bragg grating (FBG) sensing technology is also being developed for agricultural applications. An FBG acts as a notch filter that reflects a narrow portion of light centered around the Bragg wavelength (λB) when illuminated by a broad light spectrum. It’s fabricated as a microstructure inscribed into the core of an optical fiber. Unlike graphene sensors, which are an emerging technology, FBG sensors are already in use in several areas, including aerospace, civil engineering, and human health monitoring. FBG sensors can be fabricated with high sensitivity, small size, and low weight. In the case of agricultural sensors, the intrinsic sensitivity to strain (ε) and temperature variations (ΔT) of FBG technology is being combined with a moisture-activated polymer to detect relative humidity changes (ΔRH) in the surrounding air. In addition, FBG sensors can be multiplexed to support the monitoring of both plant growth and environmental conditions in a single device. The FBG sensor designed for agricultural applications consists of three segments: one for ε sensing, one for ΔRH monitoring, and a third optimized for ΔT measurements. It was fabricated using a commercial FBG with a grating length of 10 mm and a λB of 1533 nm, with a stretchable acrylate coating. The coating protects the FBG and improves its adherence to the plant’s stem.
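To make the strain and temperature sensitivity concrete, the following Python sketch uses the common first-order textbook model ΔλB/λB = (1 − p_e)·ε + (α + ξ)·ΔT. Only the 1533 nm Bragg wavelength comes from the text above; the photo-elastic, thermal expansion, and thermo-optic coefficients are assumed typical values for silica fiber, not the calibration of the sensor described here, and the function names are hypothetical.

```python
# First-order FBG response model (textbook form, not the cited sensor's calibration).
LAMBDA_B_NM = 1533.0   # Bragg wavelength quoted in the article
P_E = 0.22             # assumed effective photo-elastic coefficient of silica
ALPHA = 0.55e-6        # assumed thermal expansion coefficient, 1/degC
XI = 6.7e-6            # assumed thermo-optic coefficient, 1/degC


def wavelength_shift_nm(strain, delta_t_c):
    """Bragg wavelength shift (nm) for a strain (dimensionless) and temperature change (degC)."""
    return LAMBDA_B_NM * ((1.0 - P_E) * strain + (ALPHA + XI) * delta_t_c)


def strain_from_shift(shift_nm, delta_t_c):
    """Invert the model: subtract the thermal term, then solve for strain."""
    thermal_nm = LAMBDA_B_NM * (ALPHA + XI) * delta_t_c
    return (shift_nm - thermal_nm) / (LAMBDA_B_NM * (1.0 - P_E))


if __name__ == "__main__":
    # Hypothetical operating point: 100 microstrain of stem growth, 2 degC warming.
    shift = wavelength_shift_nm(strain=100e-6, delta_t_c=2.0)
    print(f"shift = {shift * 1000:.1f} pm")
    print(f"recovered strain = {strain_from_shift(shift, 2.0) * 1e6:.1f} microstrain")
```

The inversion step illustrates why a separate temperature-optimized segment is useful: without an independent ΔT reading, the thermal term cannot be separated from the strain term in a single wavelength shift.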

Multispectral imaging is an established agricultural sensing technology. It can detect subtle changes in plant health before visible symptoms are apparent. For example, a drop in a plant’s chlorophyll content can be detected before the leaves are visibly yellow. Multispectral sensors use the 712 to 722 nm wavelengths (the red edge band), where indications of stress are most easily identified. Multispectral imaging can be implemented using fixed installations where the sensors travel back and forth on a track system in a greenhouse or across an open field. The sensors are also well suited to be carried aloft on a drone. For example, in one configuration, a drone-based multispectral imaging system can scan a 100-acre field (at 400 feet above the ground with a 70% overlap) in less than 30 minutes (Figure 2). Some of the benefits of multispectral imaging include:

• Early disease detection

• Improved irrigation and water management

• Quicker and more accurate plant counting to optimize fertilizer application and pest control

• Cost reductions from the automation of activities previously performed by walking the fields
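As an illustration of how red edge data can be turned into an early stress indicator, the sketch below computes the Normalized Difference Red Edge index, NDRE = (NIR − RE) / (NIR + RE), per pixel. NDRE is a widely used vegetation index but is not named in this article, so treat it as one possible approach. The reflectance values and the 0.2 threshold are hypothetical and would need calibration per crop and sensor.

```python
def ndre(nir, red_edge):
    """Normalized Difference Red Edge index for one pixel's reflectances (0..1)."""
    total = nir + red_edge
    if total == 0:
        return 0.0  # avoid division by zero on completely dark pixels
    return (nir - red_edge) / total


def flag_stressed(pixels, threshold=0.2):
    """Return indices of pixels whose NDRE falls below a (hypothetical) threshold."""
    return [i for i, (nir, edge) in enumerate(pixels) if ndre(nir, edge) < threshold]


if __name__ == "__main__":
    # Hypothetical (NIR, red edge) reflectance pairs, not measured data.
    field = [(0.50, 0.20), (0.45, 0.35), (0.40, 0.38)]
    print([round(ndre(n, e), 3) for n, e in field])
    print(flag_stressed(field))
```

In practice the same calculation would run over whole image arrays from the drone survey rather than a short list of pixels.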

The primary difference between today’s multispectral sensors and emerging hyperspectral sensors is the bandwidth (the number of bands and how narrow the bands are) used to represent the data of the electromagnetic spectrum. Multispectral imagery generally uses 3 to 10 bands to cover the relevant spectrum. Hyperspectral imagery consists of hundreds or thousands of narrower bands (10 to 20 nm), providing greater resolution and covering a broader spectrum range. Spectral resolution, or the ability to capture a large number of narrow spectral bands, is an important feature of hyperspectral imaging compared with multispectral imaging.

Other advantages of hyperspectral imaging include:

• Higher spatial resolution and the ability to discriminate smaller features

• Higher temporal resolution and the ability to quickly sense important environmental changes such as the need for irrigation

• Higher radiometric sensitivity and the ability to discern small differences in radiated energy

Hyperspectral imaging sensors provide a highly detailed electromagnetic spectrum of agricultural fields, making them useful tools for detecting smaller and more localized variations in important soil attributes and degradation, as well as changes in crop health and fitness. The increasing use of sensors in Agriculture 4.0 and the addition of higher-resolution sensors such as hyperspectral imagers are driving the use of big data and raising concerns related to data security, data integrity, and privacy. Addressing those concerns is a major emphasis for the EU’s ATLAS program.

The EU-funded Agricultural Interoperability and Analysis System (ATLAS) project aims to develop an open platform to support innovation and Agriculture 4.0. ATLAS is one of the EU’s Horizon 2020 research and innovation programs. The Fraunhofer Society is managing the project. It addresses the current lack of data interoperability in agriculture by combining agricultural equipment with sensor systems and data analysis. The resulting platform is expected to support the integration of hardware and software interoperability from a wide variety of sensor systems and amplify the benefits of digital agriculture. ATLAS aims to develop an open interoperability network for agricultural applications and build a sustainable ecosystem for innovative data-driven agriculture (Figure 3). ATLAS is building on networks of in-the-field sensors and multisensor systems to provide the big data needed to realize Agriculture 4.0.

The ATLAS platform is expected to support the flexible combination of agricultural machinery, sensor systems, and data analysis tools to overcome the current lack of interoperability and enable farmers to sustainably increase their productivity by using the most advanced digital technology and data. ATLAS will also define layers of hardware and software to allow the acquisition and sharing of data from a multitude of sensors, as well as the analysis of this data using a variety of dedicated analysis approaches. The program will demonstrate the benefits of Agriculture 4.0 through a series of pilot studies across the agricultural value chain and end by defining the next generation of standards needed to continue the growing adoption of data-driven architecture.

Wireless sensor networks and security

Wireless Sensor Networks (WSNs) and Internet of Things (IoT) solutions are extensively used in Agriculture 4.0, providing numerous benefits to farmers. The interconnected sensors and network devices, however, can contain unpatched or outdated firmware or software, which creates opportunities for network insecurities and opens various attack vectors, including device attacks, data attacks, privacy attacks, and network attacks.

The increasing use of automation and even autonomous operations to improve yields also raises safety concerns. In addition to ATLAS, the European Union’s Horizon 2020 research and innovation programs focus on developing network traffic monitoring and classification tools for use in Agriculture 4.0 systems. Effective traffic monitoring is expected to play an essential role in protecting assets and users from the impacts of network attacks. Network traffic analysis and classification tools are being developed for Agriculture 4.0 based on Machine Learning (ML) methodologies to help mitigate the threats to WSNs and other IoT-connected assets.

Summary

The deployment of Agriculture 4.0 relies on the increasing use of WSNs to improve yields and reduce costs for farmers. It also requires the development of new sensor modalities, such as plant wearables using graphene-based and FBG sensors, and the expansion of existing sensor modalities, such as the move from multispectral to hyperspectral imaging. The EU’s ATLAS program is designed to improve interoperability and realize the maximum benefit from the growing diversity of sensor and data analysis technologies. Improvements in network security will also be essential to ensure data security, integrity, and privacy in Agriculture 4.0.

In today’s connected world, a growing number of applications depend on embedded devices that collect and act upon data from a wide range of physical processes. As the computing power of the Systems-on-Chip (SoC) at the heart of these devices continues to grow, more decision-making is devolved from the cloud to the edge, turning this rapidly increasing number of edge devices into attractive targets for attackers.

The vulnerability of the IoT is, therefore, a growing concern. Data transmitted to the cloud needs protection, and commands received from remote services need validation. Additionally, while services deployed in the cloud benefit from high-security data center infrastructure, edge devices are installed in many different locations, where physical protection methods can be limited.

Security is, consequently, a fundamental property of the edge device, and developers must design security in from the outset. The fundamental network security concepts of data in transit, data at rest, and access control mechanisms apply equally to the security of edge devices. However, additional protection measures and a level of intrusion detection capability are required because of their physical locations. These requirements must be built into MCU designs through hardware capabilities such as root of trust (RoT), tamper detection, secure boot, and secure enclaves, coupled with advanced software mitigation techniques.

Both functional and platform security primitives are deployed in the security of edge devices. Functional security measures keep sensitive data secure and private, for example, by encrypting messages passed between two edge devices. Platform security primitives protect the implementation of functional security measures from remote or local attackers. The protection of the secret key used to encrypt and decrypt the messages passing between edge devices is an example of platform security.

Both types of security measures employ cryptographic features to provide one or more of the following capabilities in a system:

• Integrity — the data received is identical to the data sent

• Confidentiality — a third party cannot understand the message being communicated

• Authenticity — the authenticity of the message can be verified by the recipient

• Nonrepudiation — the sender cannot deny having sent the message

Cryptographic features and key management techniques enable the protection of in-transit and at-rest data and ensure that the booting edge device is running authentic, authorized firmware and software. A unique identity can also be created for the edge device, enabling a corrupt device to be identified and isolated.
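As a minimal sketch of two of the capabilities listed above, integrity and authenticity, the following Python example tags a message with HMAC-SHA256 from the standard library and verifies it on receipt. It assumes both edge devices already share a secret key; the sensor name and payload are hypothetical. A shared-key tag does not provide confidentiality or nonrepudiation, which would require encryption and asymmetric signatures respectively.

```python
import hashlib
import hmac
import secrets


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check the tag in constant time; False means tampering or a wrong key."""
    return hmac.compare_digest(sign(key, message), tag)


if __name__ == "__main__":
    # In a real device the key would be provisioned and protected by
    # platform security features, not generated ad hoc like this.
    key = secrets.token_bytes(32)
    msg = b'{"sensor": "soil-moisture-01", "value": 0.31}'  # hypothetical payload
    tag = sign(key, msg)
    print(verify(key, msg, tag))                             # untouched message
    print(verify(key, msg.replace(b"0.31", b"0.99"), tag))   # altered message
```

Protecting the key itself is the platform security problem: the tag is only as trustworthy as the storage that holds the secret.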

The most commonly used cryptographic features and their applications are illustrated in Table 1.

Advanced security measures require significant processing capabilities; SoC developers employ various hardware and software techniques to optimize chip performance while managing power consumption.

Microprocessor units (MPUs) with hardware acceleration and hardware random number generation capabilities (Figure 1) offload the computational overhead of the security features, either to an extension of the main processor or to a purpose-built co-processor or core. NXP’s i.MX product family, as an example, includes specific hardware for cryptographic computation acceleration: the Cryptographic Accelerator and Assurance Module (CAAM). The CAAM also offers a unique key-wrapping mechanism that protects sensitive keys from being exposed to attackers.

As separate processors within the MPU, hardware accelerators are also effective isolation devices. The isolation of storage and processing of sensitive information is a common secure mitigation technique, and multicore MPUs offer effective isolation capabilities. With its own processing unit and local memory, the accelerator’s resources cannot be accessed by the other cores in the MPU, providing isolation capabilities to the system while not compromising the overall system performance.

The architectures of many modern edge devices enable the implementation of public key infrastructures (PKI) for edge computing. A third party certifies the binding between public keys, entities, individuals, and/or organizations using a digital certificate in a PKI. The security features of the more advanced SoCs found in edge devices enable them to act as the third party in a PKI.

Other key security implementation techniques used in edge devices include:

Secure boot: chain of trust

A secure boot implementation guarantees that genuine, trusted binaries run on the system by starting from an immutable memory within the SoC. The immutable part of the SoC and its features are also referred to as a root of trust (RoT), and specialized hardware security modules (HSMs) are often used to manage and protect the private key associated with the RoT. An example of a secure boot implementation can be seen in many of the NXP i.MX range of applications processors, which use a mechanism called high assurance boot (HAB), shown in Figure 2.
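The chain-of-trust idea can be sketched in a few lines of Python. This is a simplified stand-in, not how HAB or any particular SoC works: real secure boot verifies digital signatures against a key anchored in immutable hardware, whereas this sketch assumes each boot image simply embeds the SHA-256 digest of the next stage and that the first digest is held by the root of trust. All names are hypothetical.

```python
import hashlib

DIGEST_LEN = 64  # length of a SHA-256 hex digest


def digest(image: bytes) -> str:
    """SHA-256 hex digest of a boot image."""
    return hashlib.sha256(image).hexdigest()


def build_chain(payloads):
    """Build images back to front so each one embeds the digest of the next stage.

    Returns (root_digest, images); root_digest plays the role of the value
    stored in immutable memory.
    """
    images = []
    next_digest = "0" * DIGEST_LEN  # sentinel: the last stage has no successor
    for payload in reversed(payloads):
        image = payload + next_digest.encode("ascii")
        images.insert(0, image)
        next_digest = digest(image)
    return next_digest, images


def verify_chain(root_digest, images):
    """Verify each stage before trusting the digest it carries for the next one."""
    expected = root_digest
    for image in images:
        if digest(image) != expected:
            return False  # refuse to boot: image differs from what was trusted
        expected = image[-DIGEST_LEN:].decode("ascii")
    return True


if __name__ == "__main__":
    root, images = build_chain([b"bootloader", b"kernel", b"rootfs"])
    print(verify_chain(root, images))
    images[1] = b"evil" + images[1][4:]  # tamper with the second stage
    print(verify_chain(root, images))
```

Because every stage is checked before the digest it carries is used, a modification anywhere in the chain is caught at the stage that was altered, as long as the root value itself cannot be changed.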

Trusted execution environment

A trusted execution environment (TEE) is a safe zone within an application processor that separates the execution of trusted software from software more likely to contain vulnerabilities, such as large operating systems. Hardware vendors support TEEs by including hardware extensions in their MPU designs; examples include Intel SGX [9], AMD Secure Encrypted Virtualization (SEV) [10], and Arm® TrustZone® [11].

Virtualization groups the applications run by the edge device into virtual machines (VMs) executed by a hypervisor that also manages resource sharing among the VMs. If an attack compromises a VM, the other VMs will be unaffected, provided that the hypervisor is not compromised. There are several methods of implementing virtualization, including using a Xen hypervisor or a Docker implementation. Additionally, modern SoCs provide hardware support for virtualization, the most common being memory management, which ensures secure memory partitioning.

Software architectures unlock the power of hardware in the implementation of security measures. Figure 3 shows an example of an edge device software stack that uses Arm TrustZone to provide two execution environments. The non-secure environment runs the rich Linux OS, while the secure environment runs the open, portable OP-TEE software. Trusted applications in the open software offer cryptographic features to the Linux OS by using API access to core functionalities such as cryptographic, key, and storage operations. Those functions can be accelerated in hardware using the CAAM hardware on NXP i.MX devices.

Security should not be an afterthought

Security must be considered from day zero of an edge device’s development. Although the correct implementation of security is a complex and challenging task, the sooner it is addressed, the higher the chances that the end device will be fit for purpose — at a cost that does not threaten project viability.

The recently released IoT.1 specification defines a firmware interface and low-level data model providing vendor-independent configuration of smart sensors and effectors.

The PCI Industrial Computer Manufacturers Group (PICMG) is developing a series of specifications to enable plug-and-play interoperability of smart sensors and effectors. The effort includes the large installed base of legacy non-IoT (not IP-enabled) devices and newer IoT-enabled smart sensors. The PICMG program includes the development of a family of three IoT specifications. PICMG IoT.1 is the second of those standards.

This FAQ presents an overview of PICMG IoT.1, looks at the previously released PICMG IoT.0 standard, and reviews the progress toward the third and final standard in the series, PICMG IoT.2.

IoT.1 supports sensing and motion control needed in many emerging Industry 4.0 applications. It defines a firmware interface and low-level data model providing vendor-independent configuration of smart sensors and effectors, as well as plug-and-play interoperability with higher levels of the installation. Before IoT.1, PICMG addressed the lowest level of the hierarchy where the physical sensors and actuators of the factory equipment exist. Each of these devices may or may not be IoT-enabled, so PICMG developed IoT.0 (also called MicroSAM) to address the issue of hardware interoperability (Figure 1). The IoT.1 specification is a joint effort between PICMG and the Distributed Management Task Force (DMTF) organization. To more thoroughly address the needs of Industry 4.0, IoT.1 leverages and extends DMTF’s Platform Level Data Model (PLDM) specification. PLDM is a low-level messaging system that supports topologies, eventing, and discovery, and runs over a variety of system-level buses such as I2C/SMBus and PCIe Vendor-Defined Message (VDM) over Management Component Transport Protocol (MCTP) or Reduced Media Independent Interface (RMII) based transport (RBT) over Network Controller Sideband Interface (NC-SI), and others. Benefits of IoT.1 include:

• Enabling the production of smart sensors based on control circuits and software from PICMG-compliant suppliers.

• Enabling the interoperability of smart sensors and smart sensor components from various makers.

• Enabling integrators of sensors and smart effectors to use devices and controllers from multiple suppliers.

• Speeding the adoption of smart sensor technologies in Industry 4.0 through open specifications that ensure interoperability.

The Micro Sensor Adapter Module (MicroSAM) is a compact computing module developed specifically to meet the needs of sensor-domain control in the Industrial Internet of Things (IIoT) and Industry 4.0. MicroSAM was the first IoT specification from PICMG; it was developed to solve the challenge of integrating non-IoT devices. MicroSAM is a 32 mm x 32 mm low-power microcontroller board that allows non-IIoT-enabled devices to interact with an IIoT gateway in a plug-and-play manner. The introduction of MicroSAM filled a need not addressed by other PICMG specifications: a module designed for use with MCUs in the sensor domain. It’s optimized for processing performance and I/O connectivity for that purpose. Additionally, MicroSAM can be used with other PICMG devices such as MicroTCA, COM Express, or CompactPCI Serial, which provide higher layers of control, while MicroSAM provides sensor-optimized connectivity.

Figure 2. MicroSAM modules are designed to support the PICMG sensor domain data model and network architecture. Image courtesy of CNX Software

MicroSAM co-exists with and extends the existing ecosystem by offering a standards-based solution designed specifically for embedded use in Industry 4.0 applications. The basic features offered by MicroSAM are:

• Industrial operating temperature range from -40 to +85 °C

• Industrial-grade power filtering and signal conditioning for embedded installations

• RS422 communications

• Direct connectivity to analog voltage, analog current, and digital sensors

• Secure connectivity using latching connectors

• Pulse width modulation (PWM) output for motion control applications

• Synchronization using hardware interlock and trigger signals

• Compact (32 mm x 32 mm)

When combined with the PICMG sensor-domain data model and network architecture, sensors connected to MicroSAM modules seamlessly integrate into the network with plug-and-play interoperability (Figure 2).

Coming soon: PICMG IoT.2

The PICMG IoT.2 network architecture specification is expected to be ratified later this year. It defines the integration of smart sensors and effectors, as well as their data, into larger Industry 4.0 systems of systems. IoT.2 is based on DMTF’s Redfish API and outlines an abstraction layer and transactional model so that sensor and effecter endpoints can be monitored and managed in the context of job models similar to those available from cloud service providers. When used together, IoT.1 and IoT.2 are intended to support the analytics required for higher productivity levels and throughput across a factory environment (Figure 3).

• IoT.1 provides low-level visibility of physical device parameters that can directly impact the quality and efficiency of your production line.

• IoT.2 provides an IT-like interface for managing both machines and jobs at a high level of abstraction.

To support collaboration, the PICMG IoT.2 standard will enable existing IoT communications protocols and models to be converted to maintain compliance with the new specification. The strategic alliance between PICMG and DMTF enables IoT.2 to use Redfish APIs. Redfish is a RESTful (Representational State Transfer) interface for the remote management of a platform. REST is simple, well-established, and easily facilitates client and server interactions. Redfish framework benefits include:

• Easy for machines to parse and generate, and for people to use

• Scalable for use in Industry 4.0 systems of systems

• Re-uses established principles in well-organized formats

• Secure, extensible, and interoperable

The Redfish interface definition includes JavaScript Object Notation (JSON) and the Open Data Protocol (OData). OData is an ISO/IEC-approved OASIS standard that defines a set of best practices for building and consuming RESTful APIs. The Redfish interface leverages common Internet and web services standards, and its hardware management concept is very similar to PICMG’s Hardware Platform Management (HPM). Building on the Redfish communication protocol, PICMG expects to add capabilities for motion control such as multicast capability and network time protocol (NTP) clocking. Additional security features will be included before the final release of the PICMG IoT.2 network architecture specification.
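To make the "easy for machines to parse" point concrete, here is a minimal Python sketch that parses a Redfish-style JSON sensor resource. The resource URI, the sensor name, and the values are hypothetical, and the property names are only modeled on common Redfish sensor resources; because IoT.2 is not yet ratified, the actual PICMG schema may differ.

```python
import json

# Hypothetical Redfish-style sensor resource. The URI, name, and values are
# invented for illustration and are not taken from the PICMG IoT.2 draft.
SAMPLE_RESPONSE = """
{
  "@odata.id": "/redfish/v1/Chassis/1/Sensors/Temp1",
  "Id": "Temp1",
  "Name": "Spindle temperature",
  "Reading": 41.5,
  "ReadingUnits": "Cel"
}
"""


def summarize_sensor(payload: str) -> str:
    """Parse a JSON sensor resource and return a one-line summary."""
    resource = json.loads(payload)
    return (
        f'{resource["Name"]}: {resource["Reading"]} '
        f'{resource["ReadingUnits"]} ({resource["@odata.id"]})'
    )


print(summarize_sensor(SAMPLE_RESPONSE))
```

In a real installation the payload would come from an HTTP GET against the gateway rather than from a string literal; only the parsing step is shown here.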

Summary

PICMG is developing a series of three specifications to enable plug-and-play interoperability of smart sensors and effectors. The specifications cover the large installed base of legacy non-IP-enabled devices and newer IoT-enabled smart sensors. The initial PICMG IoT.0 specification was developed to solve the challenge of integrating non-IoT devices. The recently released IoT.1 specification defines a firmware interface and low-level data model providing vendor-independent configuration of smart sensors and effectors designed to support sensing and profiled motion control needed in many emerging Industry 4.0 applications. The still-to-be-released PICMG IoT.2 will complete the effort and define the integration of smart sensors and effectors, as well as their data, into larger Industry 4.0 systems of systems.

References:

Industrial IoT Overview, PICMG. https://www.picmg.org/industrialiot-overview/

MicroSAM System-on-Module Standard Targets Microcontrollers, IIoT Sensors, CNX Software. https://www.cnx-software.com/2020/10/14/microsam-system-on-modulestandard-targets-microcontrollersiiot-sensors/

PICMG Ratifies IoT.1 Firmware Specification for Smart IoT-connected Sensors and Effecters, PICMG. https://www.picmg.org/picmg-ratifies-iot-1-firmwarespecification-for-smart-iotconnected-sensors-and-effecters/


This article is based on an interview with Phil Ware and Sandro Sestan, u-blox.

By Martin Rowe

When designing an IoT device using a cellular wireless module, there are many factors to consider in minimizing power consumption. Data sheets can provide guidance on power supply design, module placement, and connections. That will get you through the hardware design of your product, but it’s not the whole story. How you use the module makes a difference as well.

“How you use the module” means that your choice of protocols affects power consumption. Your goal is to keep the module operating at the lowest possible power level for the maximum amount of time while still achieving the desired performance.

The protocols you use and how you implement them at the application level make a difference in power use. Some power consumption is inevitable regardless of module or protocol because all modules must first connect to the cellular network; they all need to go through the cellular protocol stack. All modules have a boot time and must physically scan for a network in the bands of interest. That time is generally fixed. Once a module is registered on the network, you’re in the application space where your choices make a difference.

Suppose you’ve designed a module into a utility meter. You might use a “fire and forget” protocol that minimizes power consumption by simply sending a meter reading and shutting down the module. That’s the lowest power (and cost) way to send a message. If a reading gets lost, there’s always tomorrow. To accomplish this exchange, simply send a User Datagram Protocol (UDP), Message Queue Telemetry Transport for sensor networks (MQTT-SN), or Constrained Application Protocol (CoAP) message. The application can either shut down the module or use the 3GPP power-saving mode (PSM).
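A minimal Python sketch of the "fire and forget" pattern over plain UDP follows. The function name, payload format, and the local loopback listener standing in for the utility's server are all assumptions for illustration; a real device would hand the datagram to the cellular module and then power it down or enter PSM.

```python
import socket


def send_reading(host: str, port: int, reading: bytes) -> int:
    """Send one UDP datagram and return at once; no acknowledgment is awaited."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(reading, (host, port))


# Local stand-in for the server so the sketch can run on one machine.
with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as server:
    server.bind(("127.0.0.1", 0))       # let the OS pick a free port
    server.settimeout(2.0)
    port = server.getsockname()[1]

    payload = b"meter=12345;kWh=678.9"  # hypothetical reading format
    sent = send_reading("127.0.0.1", port, payload)
    received, _ = server.recvfrom(1024)
    print(sent, received)
```

The sender never waits for a reply, which is what keeps the radio-on time short. The trade-off is the one described above: a lost datagram is simply lost.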

When the application requires an acknowledgment from a server, the module needs to wait for a downlink message. During this time, the radio is still connected, waiting for the ACK message. Some modules use NB-IoT because of low power, but then use “chatty” protocols that take time and use unnecessary power.

As an experiment, we tried using Cat-M1 and NB-IoT modules to send messages of 150 bytes. When sending that amount of data, the NB-IoT module used less power than the Cat-M1 module. Cat-M1 gets more efficient as the number of bytes increases. At 500 bytes and up, low-data-rate protocols mean your transmitter is on for longer. Cat-M1 modules support ten times the data rate of NB-IoT, and thus are on for less time for a given amount of data.

With the NB-IoT protocol stack, when the module re-attaches to the network, the attach message and the customer’s message are all part of the same transmission. Cat-M1 does not yet have these optimizations, and the application messages must travel over the User Data Plane. This is less efficient than sending messages over the Control Data Plane for shorter messages, say less than 200 bytes. The power efficiency crossover seems to occur somewhere between 150 and 200 bytes.
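The crossover can be reasoned about with a simple linear model: each message costs a fixed signaling overhead plus transmit power multiplied by time on air. The Python sketch below is only that model; the function names are hypothetical and every number is an arbitrary placeholder, not a measurement from the experiment described above. Real values have to come from measuring your own module and network.

```python
def message_energy_mj(payload_bytes, fixed_overhead_mj, data_rate_bps, tx_power_mw):
    """Energy for one message: fixed signaling cost plus power * time on air."""
    airtime_s = payload_bytes * 8 / data_rate_bps
    return fixed_overhead_mj + airtime_s * tx_power_mw  # mW * s = mJ


def crossover_bytes(slow, fast):
    """Payload size at which the two linear energy models cost the same."""
    per_byte_slow = 8 * slow["tx_power_mw"] / slow["data_rate_bps"]
    per_byte_fast = 8 * fast["tx_power_mw"] / fast["data_rate_bps"]
    overhead_gap = fast["fixed_overhead_mj"] - slow["fixed_overhead_mj"]
    return overhead_gap / (per_byte_slow - per_byte_fast)


# Placeholder parameters (assumptions): a slower link with low overhead and
# a link with ten times the data rate but a higher fixed cost per message.
slow_link = {"fixed_overhead_mj": 10.0, "data_rate_bps": 20_000, "tx_power_mw": 500.0}
fast_link = {"fixed_overhead_mj": 28.0, "data_rate_bps": 200_000, "tx_power_mw": 500.0}

print(crossover_bytes(slow_link, fast_link))
for size in (50, 500):
    print(size, message_energy_mj(size, **slow_link), message_energy_mj(size, **fast_link))
```

Below the crossover size the low-overhead link wins, and above it the faster link wins because its transmitter is on for less time.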

If you look at Lightweight M2M (LwM2M), you’ll see that its protocol is message heavy. Modules must negotiate lots of information with the host. The good news is it has lots of features. The day-to-day operation of an IoT device might not need LwM2M. It can use MQTT-SN or CoAP instead. Figure 1 shows the typical CoAP protocol sequence. Module users can change protocols depending on the application and should take advantage of that option. Keep that in mind when developing application software for your device. Protocol stack libraries are available for that purpose. Clients can be built into modules; you don’t need to develop your own client. There’s no cost in switching protocols. It’s just a matter of sending a few TCP or UDP packets.

3GPP power saving

3GPP specifies power-saving modes. For example, with Extended Discontinuous Reception (eDRX), a module goes into listening mode for a specified amount of time before going back into sleep mode. A module’s power-saving features depend on its chipset, although most implement Release 13/Release 14 features. In listening mode, a chipset might only consume microamps of current. Some modules don’t need to fully boot when they wake up from sleep mode. Other modules need to boot, which can take four or five seconds during which the module is powered but not sending useful data.

The difference in power consumption between transmit and receive may depend on distance. A short distance to a base station means less transmit power. Receive current could be less than 100 mA, but transmit current could be much higher, depending on the distance to the base station. The network defines the transmit power needed by the remote device. For the receiver, the network determines how often (duty cycle/C-DRX) the module listens to the network’s signaling. Figure 2 compares typical transmit and receive currents for a wireless module.
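To see how duty cycle dominates battery life, the Python sketch below computes a time-weighted average current and a rough battery-life estimate. The function names and all numeric values (active current, active time, sleep current, reporting period, battery capacity) are assumptions chosen for illustration; the model also ignores battery self-discharge and temperature effects.

```python
def average_current_ma(active_ma, active_s, sleep_ma, period_s):
    """Time-weighted average current over one wake/sleep cycle."""
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_years(capacity_mah, avg_ma):
    """Idealized battery life; ignores self-discharge and derating."""
    hours = capacity_mah / avg_ma
    return hours / (24 * 365)


# Assumed example: 100 mA for 5 s each hour, 5 uA sleep current otherwise.
avg = average_current_ma(active_ma=100.0, active_s=5.0, sleep_ma=0.005, period_s=3600.0)
print(avg, battery_life_years(capacity_mah=3000.0, avg_ma=avg))
```

Halving the active time per cycle roughly halves the average current in this example, which is why avoiding a full boot or a chatty exchange matters more than small changes in sleep current.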

As you can see, the protocols you use can make a difference in your IoT device’s power consumption. Choose your protocols based on data transfer rates and size. The right choices result in lower power use and lower power costs for the final device user.

Figure 2 note: the consumption value depends on TX power and actual antenna load.

While major U.S. telecom operators build their 5G networks, many organizations develop their own networks to take advantage of the next generation of connectivity. Private networks provide complete control of data and critical processes. Typically deployed in one or more specific locations owned or occupied by the end-user organization, private networks reside on premises. Only devices and users that the organization authorizes obtain network access. Figure 1 highlights what private networks can bring to organizations.

By Marco Contento, Telit and Xiaoxia Zhang, Qualcomm

Unlike a public network, a private 5G network lets companies define the network’s capability, deployment timetable, and coverage quality. Configurations can vary by site, depending on the type of work undertaken in each venue, and can be customized to an organization’s specific location and operational needs. Elements include but are not limited to:

Spectrum: organizations can use shared spectrum such as the general authorized access (GAA) tier of the Citizens Broadband Radio Service (CBRS) band, use local-licensed spectrum dedicated to private networks in some countries, reuse licensed spectrum bands from mobile network operators, or use 5G NR in unlicensed bands, which has been standardized and is pending commercialization. The GSMA expects mobile network operators (MNOs) to use spectrum from three principal ranges: (1) low-frequency bands (under 1 GHz), (2) mid-frequency bands (in the core 3.3 GHz to 3.8 GHz range), and (3) high-frequency bands known as “millimeter wave” (in the 26 GHz, 28 GHz, and 40 GHz range).

As more industries rely on wireless devices, sensors, and artificial intelligence to connect people and machines, private networks bring the potential to enable more advanced IoT applications while increasing security, bandwidth, and speed.

Figure 1. Private 5G networks promise to connect people, sensors, and machines within factories, universities, ports, farms, and other facilities. Image courtesy of Qualcomm

A rapidly growing number of countries in Asia, Europe, and North America have spectrum initiatives underway for private networks. Three examples are:

• Germany allocated 100 MHz in the 3.7 GHz to 3.8 GHz band for local and regional 5G networks that can be used for agriculture, forestry, and industrial applications.

• Japan set aside 28.2 GHz to 28.3 GHz for local 5G services and allows private LTE networks to use LTE Band 39 on a shared basis. It’s also considering private 5G allocations in the 4.6 GHz band and between 28.3 GHz to 29.1 GHz.

• The U.S. auctioned 70 MHz of licenses within the 3.55 GHz to 3.65 GHz band.

Core: in addition to spectrum, private 5G wireless networks will require a core, which includes software and hardware that can be acquired from a single or multiple vendor(s), or built with commodity hardware and open-source software. The core is responsible for a range of functions such as traffic shaping and rules for quality of service, billing, and data plans, as well as parameters for network monitoring.

Radios and antennas: private networks need radios and antennas for the CBRS spectrum in the U.S. These Citizens Broadband Radio Service Devices (CBSDs) need certification from a CBSD test lab.

Identity management: central to security, the core contains a database of subscribers and manages the subscriber identity module (SIM) cards activated for connection to the private network.

Enterprises can deploy a virtual private mobile network (dedicated network), which typically uses 5G network slicing over the public mobile network. This affords the enterprise some of the advantages of a private network, minus the upfront cost and complexity involved in installing and operating an on-site wireless infrastructure (Figure 2).

Deloitte predicts that hundreds of thousands of companies in sectors such as retail, healthcare, education, utilities, manufacturing, and transportation will deploy private 5G networks over the next ten years. While these networks have been taking hold in Europe for some time, the 2020 Federal Communications Commission (FCC) auction finally freed up the necessary spectrum for U.S. enterprises, which can now build their own network in-house and run it themselves, or outsource it to a mobile network operator (MNO) or systems integrator (SI).

In 2020, a wide range of organizations deployed these networks to address specific challenges and explore new opportunities:

• Germany-based Rohde & Schwarz partnered with Nokia to install a private 5G network at its plant in Teisnach, Germany to test new industrial applications and discover how to best optimize 5G for smart factories.

• Ford and Vodafone partnered to develop a private 5G network in the automaker’s new Electrified Powertrain in Manufacturing Engineering (E:PriME) facility located in Essex, England, with a goal to close the connectivity gap in critical automated processes on the factory floor — like welding, which is used extensively for parts that comprise an electric vehicle — and enable secure connectivity across the entire Ford campus.

• British multinational energy and services company Centrica partnered with Vodafone to build the first 5G-ready mobile private network (MPN) for the oil and gas sector at its Easington facility, with a goal to create a digitized ecosystem that enables mission-critical monitoring, control, and communications in real time to improve environmental and worker safety, among other things.

• The Belgian Port of Zeebrugge partnered with Nokia to deploy an end-to-end private 5G network to track, analyze and manage connected devices across multiple port-based applications in real time and support the use of autonomous vehicles, augmented reality, and drones.

• AT&T and Samsung deployed a 5G testbed at Samsung’s Austin, Texas manufacturing facility, providing a private 5G network that uses millimeter-wave (mmWave) spectrum and leverages LTE and Wi-Fi. The network also uses multiaccess edge computing (MEC) to keep data processing close to the end user.

• Lufthansa Technik and Vodafone Business built a standalone private 5G campus network at the Lufthansa base at Hamburg Airport in Germany to give the company the freedom to configure the network according to its needs. For example, technicians can now use high-resolution virtual and augmented reality technologies to work even more precisely and securely on aircraft fuselages.

According to an independent study from IHS Markit commissioned by Qualcomm, 5G will expand the mobile ecosystem to new industries, generating $13.1 trillion in global economic value by 2035. With the support of dedicated private networks, it will power the digital economy and fulfill targeted needs of organizations in a range of sectors:

• $416B — Precision agriculture

• $984B — Construction and mining

• $264B — Digitized education

• $1,083B — Connected healthcare

• $2,224B — Richer mobile experiences

• $4,771B — Smart manufacturing

• $1,144B — Intelligent retail

• $2,213B — Connected smart cities

Private 5G networks for enterprises bring the potential to exploit new capabilities available in the latest phase of the 5G standard (3GPP Release 16), with enterprises likely to deploy in stages, and an initial focus on seamless mobility. Private 5G networks bring faster and safer operations, new capabilities, and efficiencies in industrial processes. Private networks will also require expertise in network design and management to meet industrial demands on network performance. Industry 4.0 applications have specific demands on communications technology concerning latency, reliability, and security. Thus, we suggest that you begin with test phases that can identify network infrastructure, coverage, and performance issues before a complete rollout.

According to Deloitte, 5G has the potential to become the world’s predominant local area network (LAN) and wide area network (WAN) technology over the next 10 to 20 years, especially in greenfield builds. For industrial users, the ability to design mobile networks to meet the coverage, performance, and security requirements of production-critical applications is fundamental to the new wave of cyber-physical systems ushering in Industry 4.0.

Industrial and enterprise operations will benefit from the rich capabilities of 5G by cutting the data-collection wires, leading to more sensors for greater observability. By adding edge computing, 5G networks will process that data with low latency. Compute-intensive machine learning will efficiently extract valuable insights, thereby shortening the Observe, Orient, Decide, and Act (OODA) loop for industrial processes. Industry 4.0 leaders stand to improve their operational efficiencies, their responsiveness to shifts in supply chains or customer demand, and their resilience to disruptive events, all to sharpen their competitive edge with the power of 5G.


Design engineers have choices on every level. Do we buy modules, or boards, or design the functions in-house? Do we use digital or analog signal processing? What microprocessor/microcontroller and architecture do we employ? Every decision has benefits and consequences.

Companies have core competencies in their specialty but often must make decisions regarding a device’s connectivity. As the technological players reshuffle the board, connectivity comes to the front, especially along the network edge. For example, do we use the newest 5G chipsets and go with 5G connectivity, or do we employ a lower-power wireless LAN technology capable of wide-area coverage that can reach all the devices at the edge?

Why all the focus on connectivity?

In a word: money. Wireless carriers charge a fee for every connected device, and the number of IoT devices is growing rapidly. Global wireless carriers needed three decades to reach the current 2.3 billion subscribers.

In the few years that IoT services have been available, more than 8.4 billion IoT devices have been connected. Now, at least 20 billion are connected. Even though not all IoT devices directly connect to the internet, 10 billion of them still create immense annual revenues for service providers. This influx of connectivity can be a huge revenue opportunity. IoT applications, however, have broad differences. The current capabilities of cellular-based and low-power wide-area network (LPWAN) applications differ such that no single standard will satisfy every need.

The choice of connectivity will depend on the constraints of the design. These include, but are not limited to:

• Cost

• Time to market

• Size

• Power

• Range

• Data rates

• Reliability

• Security

• Latency

• Ruggedness

• Expandability

Another factor is the cost burden to customers. If you require every IoT device in a small area to have a cellular connection, the cost of use can skyrocket. By the same token, if you need multiple aggregators and base stations to make your wide-area network function, that can also dissuade customers. Right off the bat, the constraints that your IoT-connected devices are sensitive to will steer your choices.

The cellular industry has unique advantages for IoT. Carriers already have almost ubiquitous LTE coverage in the US delivered by several hundred thousand macro base stations and perhaps three times that many small cells. In most cases, updating this infrastructure to accommodate communication with IoT devices requires just a software upgrade rather than a significant investment in hardware. In addition, even before IoT became the next big thing, wireless carriers provided connectivity to wireless-enabled sensors using 2G technology. Although 2G and 3G services are disappearing, 4G and 5G are available.

The industry has worked for years to accommodate IoT. 3GPP has included substantial specifications dedicated to IoT. Now at Release 17, the protocols and standards under its umbrella include GSM 2G and 2.5G standards, UMTS 3G standards, LTE Advanced and LTE Pro 4G standards, 5G NR, and an evolving IP Multimedia Subsystem (IMS) as an access-independent medium.

In contrast, low-power wide-area network (LPWAN) providers started with no such advantages. Because they were new entities, they needed to build every system in every area where coverage is desired. They have also had limited time to deploy these networks in key (typically urban) areas, as the cellular industry is rapidly rolling out its IoT-centric data plans. Fortunately, LPWAN systems are less expensive to build and deploy than cellular networks, do not always require leasing space on a tower, and cover wide geographical areas with fewer base stations. They also have the advantage of allowing proprietary, branded solutions that operate in the unlicensed RF bands.

Designers have choices when it comes to creating new IoT designs; connectivity choices depend on the design’s constraints. As with any engineering decision, the learning curve and time-to-market constraints can dictate OEMing an existing solution to the problem.

Figure 1. The LPWAN standards vary from continent to continent and from country to country. In any case, aggregators and access points will allow internet connectivity regardless of the LPWAN standard used in a specific location. Image courtesy of GSMA

The question persists of whether LPWAN providers can survive in a cellular-dominated world. Most analysts believe they will offer similar capabilities to cellular networks, such as carrier-grade security and other mandatory features. They can become cost-competitive for customers as well. Analysts also suggest that at least half of IoT use cases can be served by LPWANs. It’s a relatively safe bet that, while the cellular industry will have a commanding presence in delivering IoT connectivity, LPWAN providers will still find room in what is likely to become a price war within individual markets.

Three standards seem to be emerging as viable choices for the engineering community at large. The market leaders so far are Long-Range Wide-Area Network (LoRaWAN), Narrowband Internet of Things (NB-IoT), and Long Term Evolution for Machines (LTE-M) standards. Others are, however, clawing their way into the visible ecosystem. LTE-M and NB-IoT have gained a foothold in North America, South America, parts of Europe, and Australia. NB-IoT has gained acceptance in Russia, China, and South Africa. LTE-M is establishing itself in Mexico and parts of Europe. But all are in play anywhere (Figure 1).

For large-scale deployment of relatively simple distributed sensor systems, LPWAN features low costs, long-range transfers, low power (coin cells can last for years), and sufficient throughput for simple and tokenized applications. For example, an array of wind speed, direction, temperature, and humidity sensors deployed over large continental areas might not have cellular coverage, especially in sparsely populated areas. This non-data-intensive information can be optimized to use time slots or polled operations. Each sensor can measure and log data at predetermined intervals. Because data values are simple short numeric values, low data rates over long distances are desirable, with low-power operations, so batteries will last for years.

A lot will hinge on whether cellular coverage is available in a remote area. Although cellular services can provide longer ranges than Bluetooth, Bluetooth Low Energy (BLE), ZigBee, Wi-Fi, and other short-range protocols, LPWAN still has the advantage of the longest range in remote areas where there’s no cellular coverage.

A good choice for this application could be LoRaWAN. The narrow 125 kHz bandwidth supports 50 kb/sec data rates, which are sufficient to transfer low-power bursts of data over long distances. The 915 MHz frequency band offers many competitive component suppliers to help lower costs. Battery estimates of five to ten years are feasible. The Chirp Spread Spectrum (CSS) modulation technique is robust and relatively immune to noise, especially if channel selections can be made by measuring the best choices at specific locations. No cellular support is needed to implement this standard. LoRaWAN does not require license fees and uses unlicensed spectrum.

Applications that need higher data rates can use LPWANs that link to cellular services if available at specific locations. NB-IoT and LTE-M LPWAN standards, which operate in a subset of the LTE bands, can have very long ranges because they link to cellular and even cloud services. NB-IoT features 100 kb/sec data rates in a 180 kHz bandwidth using QPSK and BPSK modulation. Battery life can potentially be ten or so years. The LTE-M standard increases the data rate to 370 kb/sec using a 1.08 MHz channel bandwidth and quadrature amplitude modulation (QAM). Note that LTE-M channels can support voice. This can be a nice feature when debugging a sensor in a remote area. Figure 2 shows an NB-IoT board that uses a module to add connectivity.
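As a rough comparison, the Python sketch below converts the data rates quoted in this article (50 kb/sec for LoRaWAN, 100 kb/sec for NB-IoT, 370 kb/sec for LTE-M) into an idealized transfer time for one payload. The function name and the 100-byte payload are assumptions, and the model ignores protocol headers, retransmissions, and regional duty-cycle limits, so real airtime will be longer.

```python
# Data rates as quoted in the article, in bits per second.
RATES_BPS = {
    "LoRaWAN": 50_000,
    "NB-IoT": 100_000,
    "LTE-M": 370_000,
}


def transfer_time_ms(payload_bytes: int, rate_bps: float) -> float:
    """Idealized time on air for a payload, ignoring all protocol overhead."""
    return payload_bytes * 8 / rate_bps * 1000


PAYLOAD_BYTES = 100  # hypothetical sensor report size
for name, rate in RATES_BPS.items():
    print(f"{name}: {transfer_time_ms(PAYLOAD_BYTES, rate):.2f} ms")
```

For short sensor reports all three finish in milliseconds at these rates, which is why range, coverage, and cost tend to drive the choice more than raw throughput.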

The cellular industry is developing technologies for IoT connectivity based on LTE. The industry’s overall roadmap is to build on LTE’s current versions and continue to refine them, including reducing their complexity and cost. As this process unfolds, cellular technology will become better suited to a wider variety of IoT applications, ultimately leading to the introduction of 5G, the fifth generation of cellular technology.

To achieve this goal, the industry consensus appears to be based on three standards primarily introduced in 3GPP Release 13, ultimately resulting in what is included in the 5G standards. These solutions should ideally be implemented at frequencies below 1 GHz, where propagation conditions are more conducive to longer range and building penetration:

• LTE-M: stands for long-term evolution for machines, also called enhanced machine type communication (eMTC). This is a cellular-based low-power wide-area network developed by 3GPP. Several releases are under this umbrella ranging from 1 Mb/sec to 10 Mb/sec download rates and 1 Mb/sec to 7 Mb/sec upload rates. Operating in half duplex or full duplex, the newer releases feature 10 ms to 15 ms of latency compared to 50 ms to 100 ms latency for older technology.

• NB-IoT: a narrowband version of LTE for IoT also developed by 3GPP. It uses the same sub-6 GHz spectrum as 4G LTE and was designed with IoT in mind. It is claimed that IoT devices using the half-duplex NB-IoT can run for decades on battery power, but this will depend on your design. It does include power-saving features such as power-saving mode (PSM) and extended discontinuous reception (eDRX). It uses adaptive modulation and a retransmission scheme called hybrid automatic repeat request (HARQ) to take advantage of narrowband transmission signaling. Up to 1 million NB-IoT devices can connect within a square kilometer. Data rates are up to 127 kb/sec download and 159 kb/sec upload.

• EC-GSM-IoT: Extended Coverage GSM for IoT is an extended-coverage variation of Global System for Mobile Communications technology that was optimized for IoT in Release 13 and can be deployed along with a GSM carrier. It can optimally achieve 2 Mb/sec upload and download rates but can drop to 474 kb/sec along the edge. The half-duplex protocol standards have long latencies, 700 ms to 2 sec, so real-time reporting and control are not recommended.

• 5G: chipsets for 5G are evolving now. Large-scale, high-volume manufacturers such as Samsung and Apple have grabbed these parts and will get application support and mindshare from device makers. Still, this technology will find its way to the bulk of the engineering community and onto PC boards designed for IoT functions. Although blazing speeds (peak 20 Gb/sec) and low latency (1 ms compared to 200 ms with 4G) are features of the 5G embedded designs, connectivity service charges are a part of this solution’s requirements. This adds up quickly as many 5G-based IoT devices come into service.
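One way to use the figures in the list above is to filter the options against a design's constraints. The Python sketch below does this for peak download rate and latency only. The `Option` type and `candidates` function are hypothetical names, the numbers are the headline figures quoted above (best case for LTE-M and 5G, worst case for EC-GSM-IoT latency), and NB-IoT's latency is left as `None` because the text gives no figure for it.

```python
from typing import List, NamedTuple, Optional


class Option(NamedTuple):
    name: str
    peak_down_kbps: float
    latency_ms: Optional[float]  # None where the article gives no figure


# Headline figures taken from the list above; treat them as rough guides.
OPTIONS = [
    Option("LTE-M", 10_000, 15),
    Option("NB-IoT", 127, None),
    Option("EC-GSM-IoT", 2_000, 2_000),
    Option("5G", 20_000_000, 1),
]


def candidates(min_down_kbps: float, max_latency_ms: float) -> List[str]:
    """Return options that meet both limits; unknown latency is excluded."""
    return [
        o.name
        for o in OPTIONS
        if o.peak_down_kbps >= min_down_kbps
        and o.latency_ms is not None
        and o.latency_ms <= max_latency_ms
    ]


print(candidates(min_down_kbps=500, max_latency_ms=100))
print(candidates(min_down_kbps=100, max_latency_ms=5_000))
```

A fuller version would add the other constraints discussed earlier (cost, power, range, coverage) as further fields, but the filtering pattern stays the same.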

The unlicensed LPWAN technologies have an advantage over licensed versions, and the two leading the pack are LoRaWAN and Sigfox. Sigfox provides end-to-end connectivity using its proprietary architecture and ecosystem, but it lets endpoint devices use its technology for free if device manufacturers adhere to the Sigfox rules. Figure 3 shows a diagram of the Semtech SX1275 architecture. Figure 4 shows a Sigfox USB dongle.

It’s important to differentiate LoRa, LoRaWAN, and offerings by Link Labs, as it can be a bit confusing. LoRa is the physical layer of the open standard administered by the LoRa Alliance, while LoRaWAN is the Media Access Control (MAC) layer that provides networking functionality. Link Labs is a member of the LoRa Alliance that uses the Semtech LoRa chipset (Figure 5) and provides Symphony Link connectivity that has features unique to the company, such as operating without a network server. Symphony Link uses an eight-channel base station operating in the 433 MHz or 915 MHz industrial, scientific, and medical (ISM) bands, and the 868 MHz band used in Europe. It can transmit over a range of at least 10 miles and backhauls data using Wi-Fi, a cellular network, or Ethernet through a cloud server to handle message routing, provisioning, and network management.

Newer players such as Symphony Link and Ingenu RPMA are overcoming some of the limitations of Sigfox and LoRaWAN. Expect to see more players in this space and cloud and infrastructure services emerging from cellular and non-cellular solutions.

Weightless is an anomaly among IoT connectivity solutions: it was developed as a truly open standard managed by the Weightless Special Interest Group (SIG). It gets its name from its lightweight protocol that typically requires only a few bytes per transmission. This makes it an excellent choice for IoT devices that communicate very little information, such as some types of industrial and medical equipment, as well as electric and water meters.

Unlike many other standards, Weightless operates in the so-called TV white spaces below 1 GHz that were vacated by over-the-air broadcasters when they transitioned from analog to digital transmission. As these frequencies are in the sub-1 GHz spectrum, they have the advantages of wide coverage with low transmit power from the base station and the ability to penetrate buildings and other RF-challenged structures.
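One way to put a number on the sub-1 GHz coverage advantage is the standard free-space path loss formula. The sketch below is illustrative only: the 10 km distance and the choice of 868 MHz and 2.4 GHz as comparison points are assumptions taken from bands mentioned elsewhere in this article, and the free-space model ignores terrain, building penetration, and antenna gain.

```python
import math

def free_space_path_loss_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB for a distance in km and a frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44

# Assumed comparison: the same 10 km link at 868 MHz and at 2,400 MHz.
DISTANCE_KM = 10.0
for freq_mhz in (868.0, 2400.0):
    loss_db = free_space_path_loss_db(DISTANCE_KM, freq_mhz)
    print(f"{freq_mhz:6.0f} MHz over {DISTANCE_KM:.0f} km: {loss_db:.1f} dB")

# The difference depends only on the frequency ratio, not on the distance.
extra_loss_db = (free_space_path_loss_db(DISTANCE_KM, 2400.0)
                 - free_space_path_loss_db(DISTANCE_KM, 868.0))
print(f"Extra loss at 2.4 GHz: {extra_loss_db:.1f} dB")
```

Under these assumptions the higher band loses roughly 9 dB more over the same path, which the transmitter has to make up with more power, more antenna gain, or a shorter range.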

Weightless comes in two versions:

• Weightless-N is an ultra-narrowband, unidirectional technology.

• Weightless-P is the SIG’s flagship bidirectional offering that provides carrier-grade performance and security with extremely low power consumption and other features.

Another interesting player to watch is Nwave, an ultra-narrowband technology based on software-defined radio (SDR) techniques that can operate in licensed and unlicensed frequency bands. The base station can accommodate up to 1 million IoT devices over a range of 10 km with RF output power of 100 mW or less and a data rate of 100 b/sec. The company claims that battery-operated devices can operate for up to 10 years. When operating in bands below 1 GHz, it takes advantage of the desirable propagation characteristics in this region.
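To get a feel for what a 100 b/sec data rate and a 100 mW output mean in practice, the figures can be turned into airtime and dBm. This is a rough sketch: the 12-byte payload is a hypothetical value chosen for illustration, not an Nwave specification, and protocol overhead is ignored.

```python
import math

DATA_RATE_BPS = 100    # data rate quoted in the text
TX_POWER_MW = 100      # upper RF output power quoted in the text
PAYLOAD_BYTES = 12     # assumption: hypothetical payload size, not an Nwave figure

def airtime_seconds(payload_bytes: int, data_rate_bps: float) -> float:
    """Time on air for the payload alone, ignoring headers and coding overhead."""
    return payload_bytes * 8 / data_rate_bps

def mw_to_dbm(power_mw: float) -> float:
    """Convert a power level in milliwatts to dBm."""
    return 10 * math.log10(power_mw)

print(f"Airtime for {PAYLOAD_BYTES} bytes: "
      f"{airtime_seconds(PAYLOAD_BYTES, DATA_RATE_BPS):.2f} s")
print(f"{TX_POWER_MW} mW = {mw_to_dbm(TX_POWER_MW):.0f} dBm")
```

A message of this assumed size occupies the channel for about a second, which is why ultra-narrowband links suit devices that report only a few bytes at a time.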

Also, be aware of Ingenu. Formerly called On-Ramp Wireless, Ingenu has developed a bidirectional solution based on many years of research that resulted in a proprietary direct-sequence spread spectrum modulation technique called random phase multiple access (RPMA). Designed to provide a secure wide-area footprint with high capacity, RPMA operates in the 2.4 GHz band.

A single RPMA access point covers 176 mi² in the US, significantly greater than either Sigfox or LoRa. It has minimal overhead, low latency, and a broadcast capability that allows it to send commands simultaneously to many devices. Hardware, software, and other capabilities are limited to those provided by the company, and the company builds its public and private networks dedicated to machine-to-machine communications.
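Because other technologies in this article are described by range rather than by area, it can help to convert the 176 mi² figure into an equivalent radius. The sketch below assumes an idealized circular coverage area, which real deployments will not match exactly.

```python
import math

COVERAGE_SQ_MI = 176.0     # coverage area quoted in the text
KM_PER_MILE = 1.609344     # exact definition of the international mile

def equivalent_radius(area: float) -> float:
    """Radius of a circle with the given area (idealized coverage shape)."""
    return math.sqrt(area / math.pi)

radius_mi = equivalent_radius(COVERAGE_SQ_MI)
radius_km = radius_mi * KM_PER_MILE
print(f"Equivalent radius: {radius_mi:.1f} mi ({radius_km:.1f} km)")
```

Under the circular assumption, the access point reaches roughly 7.5 miles, or about 12 km, in every direction.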

As more devices and services connect to the internet, refinements and enhancements will emerge. As with any engineering decision, the learning curve and time-to-market constraints can dictate OEMing an existing solution. As the number of nodes increases, the prices should drop.

A parallel approach allows OEMing a canned solution while developing your own. Already, antennas, filter networks, and assorted components for hardware are available. The sheer number of expected IoT connections makes this technology one that will be around for a long time. Climbing the learning curve can be desirable, even for smaller, narrowly focused companies.

Ransomware attackers are disrupting normal operations in every kind of organization. So, think twice about how to manage security across the worldwide distribution of your IoT designs. Leave room for a backup plan.

Lastly, remember that various sources plan to put 262,000 Wi-Fi satellites around the globe. Although this might provide connectivity everywhere, it will still be a pay-per-connection technology.

A physical layer for Industrial Ethernet, Single-Pair Ethernet combines high data rates with a lightweight cable design, streamlining communication across automation levels.

By Sagar Patel, Product Manager at Lapp North America

With the Industrial Internet of Things (IIoT), the goal for many manufacturers is to connect all the machines, controls, actuators, and sensors in their plants. That’s easier said than done, however. There are many system inconsistencies at play, making communication between the field device, control, and enterprise levels anything but easy.

To reap the benefits of the IIoT, manufacturers need smooth, seamless communication across all automation levels. Although Ethernet has long been the communication standard at the control level, bus systems still dominate the field level, limiting potential data flow. The solution is to implement a cost-effective and space-saving infrastructure, enabling non-Ethernet field devices to communicate with the Ethernet networks above them.

Single Pair Ethernet (SPE) has emerged as this critical bridge, making the field level smarter while ensuring consistent, reliable network communication across the entire automation pyramid. SPE is a cable design that uses a single pair of twisted copper wires to transmit data at speeds up to 1 gigabit per second (Gbps), allowing real-time, continuous data transfer to and from the field level. Additionally, it offers the advantages of supporting cable lengths up to 1,000 meters (at 10 Mbps), minimizing space requirements, and easing installation.

The following white paper takes a deep dive into this cable construction; it looks at features, benefits, and standardization practices, empowering you to quickly and effectively use IIoT communication technology to improve your manufacturing facility.

Until now, manufacturers have typically relied on bus networks to enable the flow of data from field devices. One of the most widespread bus communication methods for automation technology is PROFIBUS, a serial fieldbus technology established over 30 years ago that uses one pair of cores in a bus cable. Later, as the industry expanded from buses to Industrial Ethernet, particularly at the control and enterprise levels, PROFIBUS and PROFINET International (PI) developed PROFINET. This Industrial Ethernet protocol uses two pairs of cores to transmit Ethernet at a rate of 100 megabits per second (Mbps). Some manufacturing operations even use Gigabit Ethernet, a transmission technology that requires four pairs of cores and provides data rates up to 1 billion bits per second, or 1 Gbps.
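The pair counts and data rates above, together with the SPE figures quoted earlier, can be laid side by side. The sketch below is only a bookkeeping aid: it uses the numbers from this article, and the bits-to-bytes conversion is shown because gigabits and gigabytes are easy to confuse.

```python
# Pair counts and peak rates as quoted in this article.
LINKS = {
    "PROFINET": {"pairs": 2, "rate_mbps": 100},
    "Gigabit Ethernet": {"pairs": 4, "rate_mbps": 1000},
    "Single Pair Ethernet (peak)": {"pairs": 1, "rate_mbps": 1000},
}

def megabytes_per_second(rate_mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return rate_mbps / 8

for name, link in LINKS.items():
    conductors = link["pairs"] * 2  # each pair consists of two cores
    print(f"{name:28s} {conductors} cores, {link['rate_mbps']:5d} Mbps "
          f"= {megabytes_per_second(link['rate_mbps']):6.1f} MB/s")
```

Note that the 1 Gbps peak for SPE applies to short links; the article quotes 10 Mbps for the 1,000 m case.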

With the rise of the connected factory, bus systems can no longer handle the large data transmission rates required by the ever-increasing number of sensors in production facilities. Despite the benefits of Industrial Ethernet solutions, Ethernet is not always suitable for connecting devices at the lowest field level. Cat.5 and Cat.7 cables can be too large to efficiently connect hundreds, possibly thousands, of individual sensors spread throughout production environments.

Another challenge is connection complexity. In order to bridge the communication gap between the field device level (bus) and everything above it (Ethernet), manufacturers must install translators and gateways. In addition to driving up system costs and complexity, the fieldwork required to install this additional hardware increases the risk of installation errors.

A streamlined cable solution, SPE simplifies Ethernet connectivity from the sensors up through the control and company levels, as well as with the cloud — opening new application areas for Industrial Ethernet. A lightweight, efficient cable design, SPE reduces the number of cores to two, enabling 1-Gb Ethernet communication over a single pair of cores. It also eliminates the need for costly gateways between Ethernet and bus networks, reducing installation work and minimizing the chance of errors. Because the cabling itself requires less space, SPE significantly reduces component costs.

In addition to high data transmission speeds via Ethernet, SPE can supply power to end devices via Power over Data Line (PoDL), a standard that specifies the power distribution method for use over a single twisted-pair link. PoDL can provide 0.5 to 50 W of power over a twisted pair to power devices like cameras, sensors, and remote input/output (I/O). It further reduces costs by eliminating the need for additional cabling to power devices.

Benefits of SPE include the following:

• supports data rates up to 1 Gbps

• cables can be deployed up to 1,000 m

• more secure, with end-to-end protection down to the edge

• an open communication tool, with no dependence on one manufacturer or software

• a cleaner network topology, eliminating the need for costly, complex gateways

• cables feature a small form factor

• a hybrid solution for data and power

By enabling continuous, long-length communication between the sensor level and cloud, as well as providing power supply via PoDL, SPE offers powerful applications in many industries.

SPE can bridge long distances in large chemical plants, where analog cables or bus networks, some with data transmission rates of just 31.25 kilobits per second (kbit/s), are still the norm. The Ethernet Advanced Physical Layer (Ethernet-APL), an offshoot of the IEEE 802.3cg (10BASE-T1L) standard, defines additional properties for these applications, taking into account the use of SPE cabling in explosive areas.

LAPP has contributed to SPE cable development with the introduction of our ETHERLINE T1 product family, consisting of SPE cables for use in industrial machinery and plants:

• ETHERLINE T1 FD P is a shielded 26AWG cable for use in cable chains.

• ETHERLINE T1 FLEX is a 22AWG cable for occasional movement.

• ETHERLINE T1 P FLEX is an 18AWG cable suitable for distances up to 1,000 m.
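The conductor gauges listed for the ETHERLINE T1 family suggest why the thickest cable is the one rated for 1,000 m: DC loop resistance grows quickly as the wire gets thinner. The following sketch estimates it from the standard AWG diameter formula. It assumes solid annealed copper at 20 °C, so the results are ballpark values and not LAPP datasheet figures; stranded, flexible conductors will differ.

```python
import math

COPPER_RESISTIVITY_OHM_M = 1.724e-8  # assumption: annealed copper at 20 °C

def awg_diameter_mm(awg: int) -> float:
    """Nominal diameter of a solid AWG conductor in millimetres."""
    return 0.127 * 92 ** ((36 - awg) / 39)

def loop_resistance_ohms(awg: int, length_m: float) -> float:
    """DC resistance out and back over one pair of the given length."""
    area_m2 = math.pi / 4 * (awg_diameter_mm(awg) / 1000) ** 2
    one_way_ohms = COPPER_RESISTIVITY_OHM_M * length_m / area_m2
    return 2 * one_way_ohms

# Gauges named in the text, compared over the 1,000 m maximum SPE length.
for awg in (26, 22, 18):
    print(f"{awg} AWG over 1,000 m: {loop_resistance_ohms(awg, 1000):.0f} ohm loop")
```

Lower loop resistance matters both for signal attenuation and for the voltage that remains at the far end when the same pair also carries power.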

Transportation

SPE shines in electric transportation (e-transportation) applications because of its small, lightweight form factor, reducing weight and bend radii by up to 55% and 30%, respectively. Compared to a cable with four wire pairs, which will weigh around 4.6 kilograms (kg) per 100 m, an SPE cable weighs only 3.0 kg. Specific use cases in the transportation sector include passenger information systems, seat reservation systems, passenger counting visual (PCV) systems, and closed-circuit television (CCTV).

SPE can be used to network sensors in fire alarm systems, light or temperature sensors, access control systems, information boards and much more.

SPE is covered by several international standards, including those of the Institute of Electrical and Electronics Engineers (IEEE). In addition to specifying the primary transmission method, IEEE 802.3 stipulates the transmission channel, transmission length, and number of connectors. Other relevant standards organizations include the International Organization for Standardization (ISO), the International Electrotechnical Commission (IEC) and the Telecommunications Industry Association (TIA), all of which cooperate with IEEE 802.3.

When it comes to the cabling, several IEC 61156 standards are in progress, including IEC 61156-11 and IEC 61156-12 for 600-megahertz (MHz) data cables in permanent and flexible installations, respectively. Others include IEC 61156-13 and IEC 61156-14 for 20-MHz data cables in permanent and flexible installations, respectively.

The SPE Industrial Partner Network, of which LAPP is a member, consists of many electrical connection companies, including cable and connector manufacturers, as well as companies that specialize in advanced Single Pair Ethernet technology. The group expects SPE to replace the current fieldbus systems at the sensor and actuator level, providing the core infrastructure for intelligent sensors and actuators in modern smart factories. The goal of this partnership is to continue to create standardized interfaces and system components, all while working closely with various international committees.

SPE benefits at a glance

• enables networking with Transmission Control Protocol/Internet Protocol (TCP/IP) without system disruptions

• IP can address all field participants

• suitable for real-time critical applications thanks to time-sensitive networking (TSN)

• accommodates distances up to 1,000 m; more flexibility in cabling, and fewer switches

• can supply power to terminal devices via the same cable using PoDL

• sustainable, thanks to the elimination of batteries compared to wireless systems

• uses less material and has lower weight than traditional 2- and 4-pair Ethernet

• offers flexibility and space savings in drag chain applications, conduits, etc.

• reduces installation errors and saves assembly time

• improves operational reliability
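The weight advantage cited for SPE can be checked against the per-100 m figures given in the transportation discussion (4.6 kg for a four-pair cable, 3.0 kg for SPE). The sketch below does that arithmetic. The 2,500 m total cable length is a hypothetical value chosen only to show how the saving scales.

```python
FOUR_PAIR_KG_PER_100M = 4.6   # four-pair cable weight quoted in the text
SPE_KG_PER_100M = 3.0         # SPE cable weight quoted in the text
RUN_LENGTH_M = 2500           # assumption: hypothetical total cable length

def weight_saving_percent(reference_kg: float, lighter_kg: float) -> float:
    """Relative weight saving of the lighter cable, in percent."""
    return (reference_kg - lighter_kg) / reference_kg * 100

saving_percent = weight_saving_percent(FOUR_PAIR_KG_PER_100M, SPE_KG_PER_100M)
saved_kg = (FOUR_PAIR_KG_PER_100M - SPE_KG_PER_100M) * RUN_LENGTH_M / 100

print(f"Saving per 100 m: {saving_percent:.1f}%")
print(f"Saved over {RUN_LENGTH_M} m: {saved_kg:.0f} kg")
```

For this particular pair of cables the saving works out to roughly 35%, which sits inside the "up to 55%" ceiling quoted for SPE in general.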

Regarding the SPE connectors, several IEC 63171 standards are under development. The first and only standard for connections in industrial applications that has been adopted by all major standards bodies is IEC 63171-6, which covers all versions from IP20 to IP65/67. Published in 2020, IEC 63171-6 describes the T1 Industrial Style SPE plug-in face.

This T1 industrial connector has a very robust design, including stainless-steel shield plates, and a rugged core and cable gland. The interface also features a strong, stainless-steel lock to maintain a secure connection, even in harsh production environments, and a stainless-steel jacket that encloses the entire connector, minimizing electromagnetic interference (EMI).

LAPP USA | lappusa.com

The demand for high data throughput, low power, and longer battery life is driving much of the breakthroughs and evolutions in connectivity technology.

In this Design Guide, we present the need-to-know basics, as well as the technology fine points aimed at helping you and your designs keep pace and stay competitive in the fast-changing world of connectivity.
