
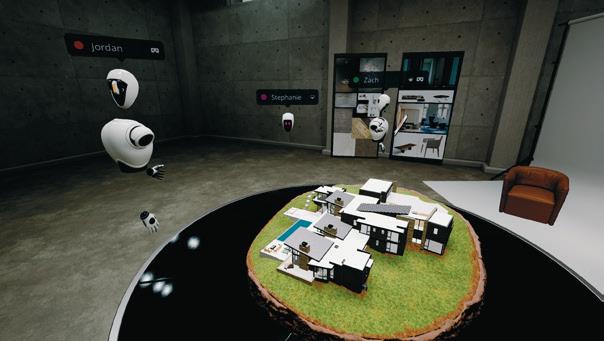

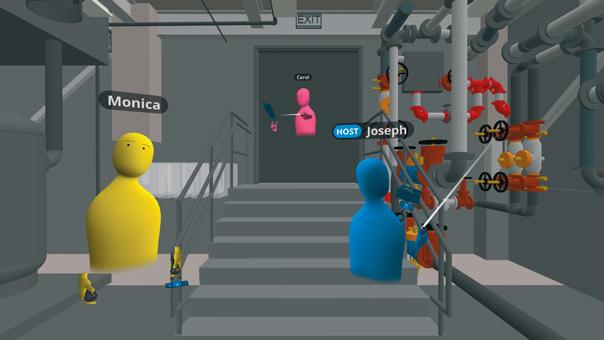

Concept collaboration with Arkio

WS-1640A-PRO-G4 [Threadripper Pro]

This Threadripper Pro workstation is not for everyone, but looks ideal for applications where memory bandwidth is critical, writes Greg Corke

In just a few years AMD’s Threadripper CPU has become synonymous with high-performance workstations. This is especially true in design viz, where rendering tools like V-Ray, KeyShot and Unreal Engine thrive on the plentiful CPU cores.

But Threadripper is not actually a workstation processor. It’s a ‘consumer’ CPU with buckets of multithreaded performance — far more than your average YouTuber or gamer would ever need.

Even though Threadripper has sold well, AMD knew it needed a dedicated workstation CPU in order to properly address the workstation market (just like Intel has done with Xeon), so in summer 2020 it launched Ryzen Threadripper Pro.

Threadripper Pro shares the same core silicon as Threadripper, but has several features that set it apart from its ‘consumer’ sibling. These include more memory channels (8 vs 4), so it has more memory bandwidth; higher memory capacity (2 TB ECC memory vs 256 GB) so it can support larger datasets; and additional PCIe Gen4 lanes (128 vs 64), so it can support more GPUs and SSDs.

While these features can give Threadripper Pro an advantage in some workflows, the downside is that the CPU runs at slightly slower clock speeds than consumer Threadripper with equivalent core counts, in terms of both base and boost frequency. How this translates into real-world performance will depend on the application, and on whether it is bottlenecked by memory bandwidth or by CPU frequency.

Open competition

Threadripper Pro was originally exclusive to Lenovo in the Lenovo ThinkStation P620 workstation. However, in March 2021 AMD opened up the CPU to everyone, resulting in a plethora of new workstations. One of those machines is the WS-1640A-PRO-G4 from Derby-based Workstation Specialists, which can be configured with a choice of three AMD Ryzen Threadripper Pro CPUs — the 16-core 3955WX, 32-core 3975WX or 64-core 3995WX.

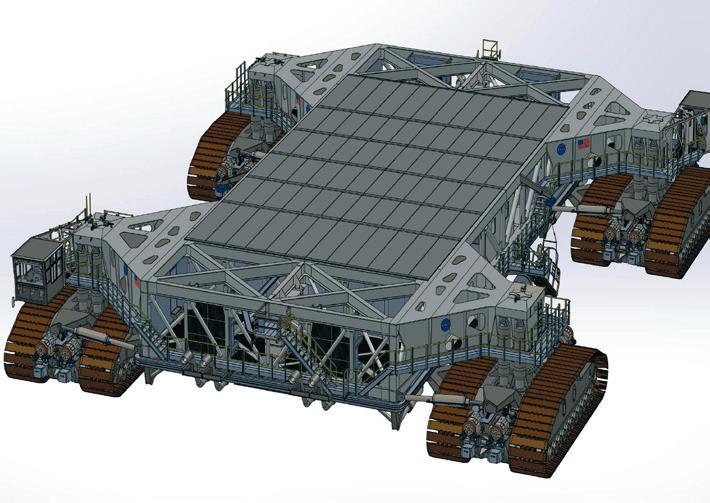

The first thing you notice about the machine is its size. At 240 x 547 x 475 mm, the Fractal Design 7 XL chassis is significantly larger than the ThinkStation P620 (165 x 460 x 440mm).

But there’s a reason for this. Built around the Extended ATX ASUS Pro WS WRX80E-SAGE SE WiFi motherboard, the WS-1640A-PRO-G4 can support up to four double-width GPUs, twice as many as the ThinkStation P620. If you’re into GPU rendering, this is an important consideration.

It also means there’s plenty of room for storage expansion. With three on-board PCIe 4.0 M.2 slots and eight SATA ports, you can easily add to our review machine’s storage: a 1 TB Samsung 980 PRO PCIe 4.0 M.2 NVMe SSD and a 2 TB Seagate Barracuda 3.5-inch HDD. There’s a total of eight memory slots, all of which need to be populated in order to make the most of the 8-channel memory architecture. Our review machine was fitted with 128 GB (8 x 16 GB) of DDR4 3,200 MHz memory, but those who work with huge datasets can go all the way up to 2 TB with 256 GB 2,933 MHz ECC registered modules.
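To put the 8-channel versus 4-channel difference into numbers, the sketch below computes theoretical peak DRAM bandwidth as channels x transfer rate x 8 bytes per transfer. It assumes standard 64-bit DDR4 channels, treats the quoted "MHz" memory speeds as transfer rates in MT/s (the usual convention), and assumes DDR4-3200 on consumer Threadripper as well. These are theoretical ceilings rather than measured results; sustained bandwidth in real applications will be lower.

```python
def peak_ddr4_bandwidth_gbs(channels: int, mt_per_s: int, bus_bits: int = 64) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal gigabytes).

    Assumes one populated DIMM per channel and standard 64-bit DDR4
    channels, each moving bus_bits / 8 bytes per transfer.
    """
    bytes_per_transfer = bus_bits // 8
    return channels * mt_per_s * 1_000_000 * bytes_per_transfer / 1e9


configs = {
    "Threadripper Pro, 8 x DDR4-3200": (8, 3200),
    "Threadripper Pro, 8 x DDR4-2933 RDIMM": (8, 2933),
    "Threadripper (assumed DDR4-3200), 4 channels": (4, 3200),
    "Threadripper Pro with only 4 of 8 slots filled": (4, 3200),
}

for name, (channels, speed) in configs.items():
    print(f"{name}: {peak_ddr4_bandwidth_gbs(channels, speed):.1f} GB/s")
```

Leaving half the slots empty on Threadripper Pro drops it to the same theoretical ceiling as a 4-channel consumer Threadripper, which is why all eight slots should be populated.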

Our review machine came with the 32-core Threadripper Pro 3975WX, which has a 3.5 GHz base frequency and a 4.2 GHz boost. It’s well suited to a range of multithreaded workflows, from rendering, photogrammetry and CFD, which typically max out all available cores, to FEA and point cloud processing, which, while multithreaded, might use fewer cores.

The machine performed well in our rendering tests but was outshone by the 32-core consumer Threadripper Scan workstation we reviewed earlier this year. It was around 7% slower in KeyShot and 12% slower in V-Ray. In applications like these, memory bandwidth is not as important as frequency. In KeyShot, for example, the Threadripper Pro maintained 3.8 GHz on all cores, but Threadripper hit 4.0 GHz.
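A quick back-of-envelope check shows how much of the KeyShot gap the clock difference alone could explain. It rests on a deliberately naive assumption, that rendering throughput scales linearly with all-core frequency and that nothing else differs between the two systems, which real renderers only approximate.

```python
def percent_shortfall(value: float, reference: float) -> float:
    """How far `value` falls below `reference`, as a percentage of `reference`."""
    return (reference - value) / reference * 100


# All-core clocks observed in KeyShot, as reported in the review (GHz).
tr_pro_clock = 3.8
tr_clock = 4.0

clock_gap = percent_shortfall(tr_pro_clock, tr_clock)
keyshot_gap = 7.0  # measured KeyShot deficit from the review, in percent

# Naive model: throughput proportional to all-core clock, everything else equal.
print(f"All-core clock deficit: {clock_gap:.1f}%")
print(f"Share of KeyShot gap explained by clock alone: {clock_gap / keyshot_gap:.0%}")
```

Under that assumption the 5% clock deficit accounts for most, but not all, of the 7% difference.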

In the point cloud processing software Cyclone Register 360, the gap was much smaller, but Threadripper still had a 1% lead. This could be a case of memory bandwidth and CPU frequency cancelling each other out.

For Threadripper Pro to shine against its consumer counterpart it needs to be used in applications where memory bandwidth is critical, such as CFD or FEA, as it means data can be fed into the CPU much quicker.

Unfortunately, we don’t currently have any engineering simulation software in our testing suite, but we have heard anecdotally that in applications like Ansys Mechanical users might see a significant performance benefit. AMD also told us that when compiling shaders in Unreal Engine, Threadripper Pro has been seen to deliver a 30-40% jump in performance over an overclocked Threadripper CPU. Threadripper Pro’s 8-channel memory should also benefit workflows like video editing and post-production and when running multiple tasks in parallel.

Product spec
■ AMD Ryzen Threadripper PRO 3975WX CPU (32 cores) (3.5 GHz, 4.2 GHz boost)
■ 128 GB (8 x 16 GB) 3,200 MHz 8-channel DDR4 memory
■ 1 TB Samsung 980 PRO M.2 PCIe 4.0 NVMe SSD + 2 TB Seagate Barracuda 3.5-inch HDD
■ Fractal Design 7 XL chassis (240 x 547 x 475 mm, WxDxH)
■ Microsoft Windows 10 Pro 64-bit
■ 36 Months Premium RTB hardware warranty
■ £4,899 (Ex VAT) with AMD Radeon Pro W5500 (8 GB) GPU
■ £8,199 (Ex VAT) with Nvidia RTX A6000 (48 GB) GPU
■ specialists.com

Graphics

Our review machine came with two GPUs to test: the AMD Radeon Pro W5500 (8 GB) and the new Nvidia RTX A6000 (48 GB). The difference in performance is huge, but so is the price, and not all workflows need such high levels of graphics processing. We’d recommend the AMD Radeon Pro W5500 for workstation workflows like simulation and point cloud processing, where graphics requirements are quite low; the GPU still delivers good all-round performance and is fully certified for a range of 3D applications. If you’re into real-time visualisation, VR or GPU rendering, the Nvidia RTX A6000 is a phenomenal GPU. And if you really want to beef up GPU rendering capabilities, you can pack four of these double-width cards inside, something very few other workstations can do.

The verdict

The WS-1640A-PRO-G4 is an excellent, well-built workstation, ideal for the most demanding of users. But it’s not for everyone. While the superior memory bandwidth of Threadripper Pro should benefit certain workflows like engineering simulation and some aspects of design viz, those who simply do ray trace rendering may still be better off with consumer Threadripper, especially as it’s cheaper. The caveat is if you work with huge datasets: Threadripper Pro supports up to 2 TB of memory, while Threadripper peaks at 256 GB. In summary, get to know your applications, workflows and the size of your datasets before you invest.

CAD workstation round up

The latest workstations for CAD and BIM-centric workflows: 11th Gen Intel Core (up to 8 cores) and AMD Ryzen 5000 (up to 16 cores) to go beyond 3D design and into the realms of rendering, reality modelling and simulation

1

BOXX Apexx S3

BOXX has built a major part of its business around overclocking, and with the BOXX Apexx S3 it permanently boosts 11th Gen Intel Core clock speeds across all eight cores. The Intel Core i7-11700K runs at 5.0 GHz, while the Intel Core i9-11900K runs at 5.3 GHz. As with all BOXX workstations, there’s also a huge focus on build quality, with the custom chassis made from ‘aircraft-grade’ aluminium, offering strength and rigidity way beyond that of most off-the-shelf cases.

■ boxx.com ■ boxx-tech.co.uk

2

Dell Precision 3450 SFF

The Dell Precision 3450 SFF is a very compact desktop workstation. Measuring a mere 290 x 93 x 293mm it can even be mounted behind a display (pictured left). The ‘small form factor’ chassis does mean a more limited set of processor options, maxing out at the 65W Intel Core i9-11900 or 80W Intel Xeon W-1390. It’s also restricted to entry-level pro GPUs, but the AMD Radeon Pro WX 3200 and Nvidia Quadro P1000 are perfectly suited to 3D CAD and BIM workflows.

■ dell.com/precision

3

Fujitsu Celsius W5011

This 21 litre ‘micro tower’ is not as deep as comparable workstations, as the motherboard and GPU span its entire depth. It offers a choice of 11th Gen Intel Core or Intel Xeon W-1300 processors and can be configured with a massive range of GPUs, from the entry-level CAD-centric Nvidia T400 up to the Nvidia Quadro RTX 5000 (it doesn’t yet offer the new Nvidia RTX A4000 / A5000). Other features include up to 128 GB of memory, multiple drives and tool-less access.

■ fujitsu.com

4

Lenovo ThinkStation P350 Tiny

Tiny by name, tiny by nature: this is the smallest workstation on the planet, measuring a mere 37 x 183 x 179mm. However, it still has everything you need for mainstream CAD and BIM workflows, including an 11th Gen Intel Core i9 CPU (8 cores, 5.2 GHz), up to 64 GB of DDR4 3,200 MHz memory and a choice of Nvidia P1000 or T600 GPUs. There’s no room for an HDD, but with an M.2 PCIe Gen4 NVMe SSD of up to 2 TB there’s still plenty of storage. Plus, there’s built-in WiFi.

■ lenovo.com/workstations

5

Boston VENOM R41-10NP

Boston offers a huge variety of desktop workstations in its Venom range, from Intel Core and Intel Xeon to AMD Threadripper, Threadripper Pro and AMD Epyc. This AMD Ryzen 5000 machine can be fitted with optional liquid cooling for ‘maximum performance and whisper quiet operation’ and matched with up to 128 GB of DDR4 memory, at 3,200 MHz stock or 4,733 MHz overclocked. All of Boston’s workstations are available to lease.

■ boston.co.uk

6

Broadberry CyberStation SFF

With its 250 x 203 x 367 mm Fractal Design Core 500 chassis, the Broadberry CyberStation SFF is one of the smallest AMD Ryzen 5000 workstations. This, together with the built-in WiFi, makes it well suited to home workers. Despite its size it can still take a whole host of pro GPUs, up to the Nvidia RTX A6000. Two RAM slots on the Gigabyte AMD Ryzen X570 I AORUS PRO motherboard mean it’s limited to 64 GB, but that’s still plenty for most CAD-centric workflows.

■ broadberry.co.uk/amd-ryzen-workstations

7

InterPro IPW-R9

As the name suggests, the InterPro IPW-R9 features a choice of 3rd Gen AMD Ryzen 9 CPUs, including the 12-core Ryzen 9 5900X and 16-core Ryzen 9 5950X. To keep clock speeds running as high as possible for longer periods, the UK firm uses a range of Corsair all-in-one liquid CPU coolers. Different BIOS profiles can be created for customers and matched to their workflows, for example to allow higher clock speeds by temporarily sacrificing cores.

8

Novatech ProStation WR7-WX41

The Novatech ProStation WR7-WX41 is built around the AMD Ryzen 5000 Series with a choice of three CPUs: the 8-core 5800X, 12-core 5900X and 16-core 5950X. It’s fully customisable, and not just in terms of core components like memory, graphics and storage. Customers can also choose from 15 different CPU coolers (air or liquid) and nine different chassis, from full towers like the Phanteks Enthoo Pro (pictured) to 4U rack mounts like the Chenbro RM41300G.

9

Overclockers RENDA

It’s not hard to guess what this UK firm specialises in. The Overclockers RENDA workstation is all about pushing the limits of performance while maintaining stability. Professional overclocker Ian Parry (aka 8Pack) heads up the R&D, delivering hand-built machines based on each customer’s workflow requirements. With a custom water cooling solution, he says he can push the AMD Ryzen 9 5950X to 5.1 GHz on one core and 4.6 GHz on all cores.


10

BIMBOX Stryker III

BIMBOX is laser focused on the AEC sector and has extensive experience of Revit, Enscape, Leica Cyclone, V-Ray, Unreal Engine and many others — and, importantly, what makes them tick.

The firm takes overclocking extremely seriously. For its Stryker III workstation it ‘delids’ the Intel Core i9-11900K CPU, taking off the standard heat spreader and mounting its own liquid cooler directly onto the silicon. This brings down the CPU temperature considerably so it can safely run at 5.3 GHz on all cores.

BIMBOX is based in the US, but with the help of Ingram Micro its machines will soon be built, sold and supported in the UK and other countries.

11

HP Z2 G8 SFF

With its 338 x 308 x 100mm chassis, the HP Z2 G8 SFF is slightly bigger than the Dell Precision 3450 SFF but has the option of more powerful components. These include the 125W Intel Core i9-11900K CPU and the Nvidia Quadro RTX 3000 GPU, which extends the reach of the workstation beyond 3D CAD and BIM and into the realms of entry-level viz.

According to HP, the Z2 G8 SFF offers ‘Unthrottled performance’ thanks to Z’s ‘industry-leading’ thermals that keep the processor and graphics card cool, so they can run at max performance for extended periods of time. ‘Unthrottled performance’ also extends to the new PCIe Gen 4 Samsung PM9A1 SSD.

12

BOXX Apexx Denali

With its Apexx Denali, BOXX was one of the first workstation manufacturers to offer a Ryzen 5000 Series workstation.

It uses the same compact custom ‘aircraft-grade’ aluminium chassis as the Intel-based Apexx S3 and at 174 x 388 x 452mm it’s smaller than most AMD Ryzen 5000 tower workstations. But that doesn’t come at the expense of expandability. The Apexx Denali can house up to two high-end Nvidia RTX, Nvidia GeForce or AMD Radeon Pro GPUs and two 3.5-inch Hard Disk Drives (HDDs).

To keep the processor running at peak frequencies it uses a liquid-cooled closed loop system with a sizeable radiator.

13

Armari Magnetar

The Armari Magnetar V16R-RA850G2-2S is one of the smallest AMD Ryzen 5000 Series workstations out there, measuring a mere 360 x 87 x 400mm. The custom chassis features a high-quality Japanese steel frame, which was designed in-house by the specialist UK manufacturer.

Unlike many other small form factor workstations, there is no compromise on graphics and the workstation can support one dual slot GPU like the AMD Radeon Pro W6800 or two single slot GPUs like the Nvidia RTX A4000.

Custom fans and a 14cm all-in-one liquid CPU cooler help maintain peak performance, while still preserving quiet operation.

Best lightweight workstation laptops 2021

Our top picks for ultra-portable mobile workstations to take CAD and design visualisation on the road — all under 20mm and most below 2kg

Dell Precision 5760

The Dell Precision 5760 is somewhat unique as it remains the only thin and light 17-inch mobile workstation from a major vendor. It is a replacement for the Dell Precision 5750 but features an enhanced thermal design including dual output fans, vapour chamber and a hidden exhaust venting through the hinge.

Like the 15-inch Dell Precision 5560, it features a combination of aluminium and carbon fibre for the chassis and a 94% screen-to-body ratio thanks to the 4-sided InfinityEdge, 16:10 aspect ratio display.

The thin (8.67 mm - 13.15 mm) and light (2.15kg) design means some compromise on graphics, with the Nvidia RTX A2000 (4 GB) and Nvidia RTX A3000 (6 GB) being the only options, although the latter is ‘VR Ready’. However, it offers the same broad choice of 45W 11th Gen Intel Core and Xeon CPUs and supports up to 4 TB of PCIe Gen4 SSDs and 64 GB of DDR4 3,200 MHz memory.

HP ZBook Studio G8

The 15.6-inch HP ZBook Studio G8 is HP’s first mobile workstation to offer both pro and consumer graphics options in the same machine, up to the Nvidia RTX A5000 (16 GB) or GeForce RTX 3080 (16 GB). Both GPUs are ideal for design viz, VR and GPU rendering, but as the laptop is very slim (17.5mm) we expect the same GPU might run faster in the thicker (22.8mm) HP ZBook Fury G8 15, which should offer better cooling and support higher power draw. The HP ZBook Studio G8 offers a choice of 11th Generation Intel Core H-Series processors up to the Intel Core i9-11950H but only up to 32 GB RAM, which might be a little light for some workflows. It also features an optional HP DreamColor display with a 120Hz refresh rate, a billion on-screen colours, 100% DCI-P3, and ‘end-to-end’ colour accuracy with Pantone validation. It starts at 1.79kg.

■ hp.com/z

MSI Creator Z16

MSI’s new pro-focused laptop marks a change in aesthetics for the Taiwanese company. The slimline 16mm chassis is made from CNC-milled aluminium with a ‘Lunar Gray’ finish. It starts at 2.2kg.

With a 16-inch 16:10 aspect ratio display you get a bit more viewing space than the traditional 16:9. ‘True Pixel technology’ means extremely accurate colours and the display is hardware calibrated in the factory to give 100% coverage of the DCI-P3 colour gamut. QHD+ (2,560 x 1,600) resolution means pixel density is lower than a typical 4K (3,840 x 2,160) laptop display.
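The pixel density comparison can be made concrete by dividing the diagonal pixel count by the diagonal screen size. The sketch assumes that a "typical 4K laptop display" means a 15.6-inch 16:9 panel; that reference size is an assumption, not a figure from the review.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density along the diagonal: diagonal pixel count / diagonal inches."""
    return math.hypot(width_px, height_px) / diagonal_in


qhd_plus_16 = pixels_per_inch(2560, 1600, 16.0)  # MSI Creator Z16 panel
uhd_15_6 = pixels_per_inch(3840, 2160, 15.6)     # assumed typical 15.6-inch 4K panel

print(f"16-inch QHD+: {qhd_plus_16:.0f} PPI")
print(f"15.6-inch 4K: {uhd_15_6:.0f} PPI")
```

On those assumptions the Z16 panel works out at roughly 189 PPI against about 282 PPI for the 4K panel.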

The Z16 features a choice of 11th Gen Intel Core H series processors, an Nvidia GeForce RTX 3060 laptop GPU with Nvidia Studio drivers, up to 64 GB memory and up to 4 TB of storage spread across two M.2 NVMe PCIe Gen4 SSDs.

■ msi.com

Dell Precision 5560

The Dell Precision 5560 wins hands down when it comes to portability. It’s the thinnest and lightest out of all the 15.6-inch mobile workstations — a mere 7.7mm at the front, 11.64mm at the rear and starting at 1.84kg. And with ultra thin bezels, it’s also notably smaller than comparable machines.

In order to achieve this sleek aesthetic, it only includes entry-level graphics options, including the Nvidia T1200 (4 GB) and Nvidia RTX A2000 (4 GB), which are best suited to 3D CAD / BIM and entry-level viz workflows. However, there’s no compromise on the CPU, with options going up to the Intel Xeon W-11955M (8 cores, 2.60 GHz up to 5.00 GHz). The laptop supports up to 64 GB of DDR4 3,200 MHz memory and 4 TB of NVMe PCIe 4.0 storage. The IPS 4K ‘Gorilla Glass’ display is also top notch: 500 nits, 100% AdobeRGB and 99% DCI-P3.

■ dell.com/precision

Lenovo ThinkPad P1 Gen 4

The first three generations of this thin and light mobile workstation featured a 15.6-inch display and CAD-focused pro graphics. The Gen 4 edition is a ‘clean sheet’ design with a 16-inch display and higher-powered GPUs, including the ‘professional’ Nvidia RTX A5000 (16 GB) and ‘consumer’ Nvidia GeForce RTX 3080 (16 GB). Memory and storage capacity remain the same, with up to 64 GB DDR4 3,200 MHz and up to two 2 TB M.2 NVMe PCIe Gen4 SSDs. To accommodate the higher-end GPUs, which draw significantly more power than those in previous generation ThinkPad P1s, Lenovo has developed a new thermal design. It is also using AI to dynamically manage the ‘cooling budget’. For example, if a workflow is dependent on both the CPU and the GPU, it might set the Total Graphics Power (TGP) to 80W, whereas if a workflow is totally reliant on the GPU, such as GPU rendering, it could go as high as 90W or 100W. Despite more powerful GPUs and a larger display, the ThinkPad P1 Gen 4’s carbon fibre and magnesium alloy chassis has only increased slightly in size and weight: 361.8 (w) x 245.7 (d) x 18.4mm (h), starting at 1.81kg.

■ lenovo.com/workstations

Microsoft Surface Book 3 (15-inch)

The Microsoft Surface Book 3 launched in 2020 so is the oldest machine in this round up, but it warrants inclusion because it offers something different. At the push of a button, you can remove the touchscreen display and turn it into a tablet. And with the optional pressure sensitive Surface Pen, use it for precision sketching.

The 15-inch display has a resolution of 3,240 x 2,160 and a 3:2 aspect ratio, which makes it taller relative to its width than the displays on all the other machines.

As it’s last year’s model, the Surface Book 3 features a 10th Gen Intel Core processor - the Core i7-1065G7. With a boost of 3.9 GHz, performance in CAD will be OK, but with four cores and a base clock of 1.5 GHz it will be significantly slower than others in multi-threaded workflows like rendering.

For graphics, you have the option of the Nvidia Quadro RTX 3000 (6 GB), which is designed for entry-level viz, but its ‘Max-Q Design’ means it will run slower than other machines with the same GPU.

The Microsoft Surface Book 3 has a thickness of between 15mm and 23mm and weighs 1.9kg with the keyboard and 0.81kg without.

■ surface.com

AMD Radeon Pro W6800

This beast of a card is the first pro GPU from AMD with hardware-based ray tracing built in. With a whopping 32 GB of on board memory it’s designed for the most demanding arch viz workflows, writes Greg Corke

Price $2,250

amd.com/radeonpro

It’s been a long time coming but AMD has finally delivered its first professional GPU with hardware ray tracing built in. And with 32 GB of VRAM, the AMD Radeon Pro W6800 is a beast of a graphics card.

Priced at $2,249, the Radeon Pro W6800 goes head-to-head with the 16 GB Nvidia RTX A4000 ($1,000) and 24 GB Nvidia RTX A5000 ($2,250), both of which we review on page WS28.

In terms of raw performance, the Radeon Pro W6800 sits somewhere between AMD’s ‘consumer’ Radeon RX 6800 and Radeon RX 6800 XT. But as a workstation-class card there are several key differences.

First, it will be certified for a wide range of professional applications, including all the major CAD and BIM tools. This can be especially significant for enterprise customers.

It can also support up to six displays, which can be important for powerwalls, and features Error Correcting Code (ECC) memory to protect against crashes. And instead of three axial fans that recirculate air inside the machine, it has a single ‘blower’, which draws in cool air from the top of the card and pushes it out the rear of the machine. This design can be particularly beneficial in multi-GPU workstations.

Perhaps most important, however, is that the Radeon Pro W6800 has a colossal 32 GB of on-board GDDR6 memory, double that of its consumer counterparts and more than Nvidia’s pro GPUs at the same price point.

Monster memory

32 GB is a huge amount of memory for a GPU, surpassed only by the 48 GB Nvidia RTX A6000 which costs twice as much. It means the Radeon Pro W6800 can handle some seriously demanding visualisation datasets. This could be a huge multi-disciplinary city-scale model with immense detail or one with less geometry but hyper realistic assets such as 8K textures or detailed vegetation.

It’s a huge step up from its predecessor, the AMD Radeon Pro W5700, which had only 8 GB, and it highlights AMD’s ambitions for high-end design viz and real-time ray tracing.

The new GPU features enhanced Compute Units (CU) with dedicated ‘Ray Accelerators’. As this is AMD’s first pro GPU with hardware ray tracing, there aren’t currently a huge number of applications that can take advantage of its ‘Ray Accelerators’, but this is changing.

The list currently includes applications that support DirectX Raytracing (DXR), such as Unreal Engine, as well as any that feature Radeon ProRender 2.0, the latest version of AMD’s physically based rendering engine. This includes Solidworks Visualize, Acca Software, Autodesk Inventor, Rhino, Autodesk Maya and Blender.

Looking to the future, it will also extend to any application that supports Vulkan Ray Tracing, including those in development at Solidworks (Project Romulan - tinyurl.com/SW-graphics), Autodesk (One Graphics System - tinyurl.com/Revit-GPU) and Enscape.

The Radeon Pro W6800 will not accelerate ray tracing in Nvidia RTX-enabled applications such as Luxion KeyShot, Chaos V-Ray, Chaos Vantage, Enscape 3.0 and others.

Of course, the Radeon Pro W6800 can also be used for many other applications that don’t rely on hardware ray tracing. This includes those that use the OpenGL or DirectX graphics APIs, including real-time design viz tools like Lumion or Twinmotion, Virtual Reality (VR) or photogrammetry software.

The GPU is very much focused on viz and is not optimised for FP64 (double precision) code, so applications like engineering simulation will likely continue to be best served by the AMD Radeon Pro VII ($1,899).

Viewport boost

The Radeon Pro W6800 supports a new pro driver feature called Radeon Pro Viewport Boost, which is designed to reduce latency and boost viewport navigation performance.

It detects when a 3D model is moving quickly in the viewport, then automatically drops the resolution in specific areas to reduce the number of pixels the GPU needs to process. As soon as that movement stops, it restores the full pixel count. According to AMD, this can increase Frames Per Second (FPS) dramatically without impacting the visual experience.

[Chart] Solidworks 2021 SP3 (OpenGL), SPECapc benchmark (FSAA), shaded with edges: benchmark score (bigger is better)

[Chart] Solidworks 2021 SP3 (OpenGL), SPECapc benchmark (FSAA), RealView, shadows & AO: benchmark score (bigger is better)

[Chart] Solidworks Visualize 2021 SP3 (ProRender), computer model, 1,000 passes, accurate quality, denoising disabled (1,500 x 1,500 resolution): render time in secs (smaller is better)

[Chart] Solidworks Visualize 2021 SP3 (ProRender), computer model, 100 passes, accurate quality, denoising enabled (1,500 x 1,500 resolution): render time in secs (smaller is better)

AMD Radeon Pro Viewport Boost currently works with Revit, 3ds Max, Twinmotion and Unreal Engine (for packaged projects only, not currently Unreal Engine Editor). Support for other applications is coming soon.
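AMD has not published how Viewport Boost decides when and where to drop resolution, so the following is only a hypothetical sketch of the general idea behind dynamic resolution scaling. Unlike Viewport Boost, which AMD says lowers resolution in specific areas of the frame, this simplified version scales the whole frame, and the class name, motion threshold, minimum scale and camera-speed units are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class DynamicResolution:
    """Hypothetical dynamic-resolution controller (not AMD's algorithm).

    camera_speed is in arbitrary, made-up units per frame; the threshold
    and floor below are illustrative values, not taken from any driver.
    """
    full_width: int = 3840
    full_height: int = 2160
    motion_threshold: float = 0.5  # speeds at or below this count as "still"
    min_scale: float = 0.5         # never drop below half resolution per axis

    def scale_for(self, camera_speed: float) -> float:
        if camera_speed <= self.motion_threshold:
            return 1.0  # movement has stopped: restore the full pixel count
        # Faster motion -> lower per-axis scale, clamped to the floor.
        return max(self.min_scale, self.motion_threshold / camera_speed)

    def render_size(self, camera_speed: float) -> tuple[int, int]:
        s = self.scale_for(camera_speed)
        return round(self.full_width * s), round(self.full_height * s)

    def pixel_fraction(self, camera_speed: float) -> float:
        # Pixel count falls with the square of the per-axis scale.
        return self.scale_for(camera_speed) ** 2


dr = DynamicResolution()
for speed in (0.0, 0.75, 2.0):
    print(speed, dr.render_size(speed), f"{dr.pixel_fraction(speed):.0%}")
```

Halving the resolution on each axis during fast navigation leaves the GPU shading a quarter of the pixels, which is where the potential FPS gain comes from.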

We explore this in more detail on page WS26.

Specifications

The AMD Radeon Pro W6800 is the first workstation GPU to be based on AMD’s 7nm RDNA 2 architecture.

AMD quotes peak FP32 (single precision) throughput of 17.83 teraflops. The GPU is not optimised for FP64 (double precision).
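Peak FP32 figures like this conventionally come from stream processors x 2 FLOPs (one fused multiply-add) x clock. The sketch inverts that formula to show the clock the quoted 17.83 teraflops implies. It assumes 3,840 stream processors (60 CUs x 64), a figure taken from AMD's spec sheet rather than this review, so the resulting clock is derived, not measured.

```python
def fp32_tflops(stream_processors: int, clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Peak FP32 throughput: each stream processor retires one fused
    multiply-add (counted as 2 FLOPs) per clock."""
    return stream_processors * flops_per_clock * clock_ghz / 1000


def implied_clock_ghz(tflops: float, stream_processors: int, flops_per_clock: int = 2) -> float:
    """Invert the formula to find the clock a quoted TFLOPS figure implies."""
    return tflops * 1000 / (stream_processors * flops_per_clock)


# Assumption: 3,840 stream processors, from AMD's published W6800 spec.
SP_W6800 = 3840
print(f"Implied peak clock: {implied_clock_ghz(17.83, SP_W6800):.2f} GHz")
```

This is a theoretical ceiling; real workloads rarely issue a fused multiply-add on every stream processor every cycle.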

With six Mini DisplayPort outputs it can drive up to six displays at 5K resolution or up to two displays at 8K resolution.

The board itself is full height, double slot, with a peak power of 250W. It requires a 6-pin and an 8-pin power connector and should fit most mid-sized tower chassis.

The AMD Radeon Pro W6800 is a PCIe 4.0 graphics card. While it is fully compatible with older PCIe 3.0 workstations, it’s designed to work best with PCIe 4.0 workstations. With double the bandwidth of PCIe 3.0, data can theoretically be fed into the GPU much quicker, although it won’t make a difference in all workflows.
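The doubling follows from the per-lane signalling rate: PCIe 3.0 runs at 8 GT/s and PCIe 4.0 at 16 GT/s, both with 128b/130b encoding. The sketch computes theoretical one-direction bandwidth for an x16 slot; it ignores packet and protocol overhead, so real transfer rates will be somewhat lower.

```python
def pcie_bandwidth_gbs(gt_per_s: float, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s for PCIe 3.0/4.0 links.

    Both generations use 128b/130b encoding, so 128 of every 130 bits
    on the wire carry data. Packet and protocol overhead are ignored.
    """
    encoding_efficiency = 128 / 130
    return gt_per_s * encoding_efficiency * lanes / 8  # 8 bits per byte


gen3 = pcie_bandwidth_gbs(8.0)   # PCIe 3.0: 8 GT/s per lane
gen4 = pcie_bandwidth_gbs(16.0)  # PCIe 4.0: 16 GT/s per lane
print(f"PCIe 3.0 x16: {gen3:.2f} GB/s, PCIe 4.0 x16: {gen4:.2f} GB/s ({gen4 / gen3:.0f}x)")
```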

PCIe 4.0 compatible CPUs include 11th Generation Intel Core, Intel Xeon W-1300, AMD Ryzen 5000, AMD Ryzen Threadripper 3900X and Threadripper Pro 3900WX series.

The AMD Radeon Pro W6800 is also designed to work better with AMD CPUs through AMD Smart Access Memory, which essentially gives the CPU better access to the GPU’s onboard memory. AMD says it unlocks higher performance for ‘key professional workloads’ but did not elaborate further.

The Radeon Pro W6800 also includes 128 MB of AMD Infinity Cache, a ‘last-level’ data cache integrated on the GPU die and designed to reduce latency and power consumption.

The Radeon Pro W6800 on test

We put the AMD Radeon Pro W6800 through a series of real-world application benchmarks, for GPU rendering, real-time visualisation and 3D CAD.

All tests were carried out using the AMD Ryzen 5000-based Scan 3XS GWPME A132R workstation (see page WS12 for a full review). Resolution was set to 4K (3,840 x 2,160) and we used AMD’s enterprise 21.Q1 graphics driver.

For comparison, we used AMD’s previous generation ‘RDNA’ workstation GPU, the AMD Radeon Pro W5700 (8 GB), plus Nvidia’s brand new ‘Ampere’ workstation GPUs, the Nvidia RTX A4000 (16 GB) and Nvidia RTX A5000 (24 GB), which we review on page WS28.

Unreal Engine 4.26

Over the past few years Unreal Engine has established itself as a very prominent tool for design viz, especially in architecture and automotive. It was one of the first applications to use GPU-accelerated real-time ray tracing, which it does through Microsoft DirectX Raytracing (DXR). This means the AMD Radeon Pro W6800 is fully compatible.

For testing, we used two datasets, both freely available from Epic Games: an arch viz interior of a small apartment and the Automotive Configurator, which features an Audi A5 convertible. Both scenes were tested with ray tracing enabled (DirectX Raytracing (DXR)) and without (DirectX 12 rasterisation).

The Radeon Pro W6800 did well with DirectX 12 rasterisation, showing a vast improvement over the Radeon Pro

Lumion 11.5 (DirectX 12 - real time)

Architectural house

4K (3,840 x 2,160 resolution)

1.23 Frames Per Second (FPS) (bigger is better)

AMD Radeon Pro W5700

AMD Radeon Pro W6800

Nvidia Quadro RTX 4000

Nvidia RTX A4000

Nvidia RTX A5000 9.90

11.60 18.40

16.53

22.30

0 5 10 15 20

Lumion 11.5 (DirectX 12 - real time)

Colossal building (28 GB)

4K (3,840 x 2,160 resolution)

1.23 Frames Per Second (FPS) (bigger is better)

AMD Radeon Pro W5700 2.30

AMD Radeon Pro W6800

Nvidia Quadro RTX 4000 2.20 6.80 Lumion 11.5 (DirectX 12 - rendering)

Architectural house

8K (7,680 x 3,840 resolution)

1.23 Render time (secs) (smaller is better)

AMD Radeon Pro W5700 510

AMD Radeon Pro W6800

Nvidia Quadro RTX 4000

Nvidia RTX A4000 294

390

280

Nvidia RTX A5000 212

0 100 200 300 400 500 600

Lumion 11.5 (DirectX 12 - rendering)

Colossal building (28 GB)

8K (7,680 x 3,840 resolution)

1.23 Render time (secs) (smaller is better)

AMD Radeon Pro W5700

AMD Radeon Pro W6800 218 1,283

Nvidia Quadro RTX 4000 1,243

W5700, and sitting somewhere between the RTX A4000 and RTX A5000. With real-time ray tracing enabled, however, it fell notably behind both Nvidia GPUs. Without hardware ray tracing built-in, the Radeon Pro W5700 pretty much ground to a halt. Autodesk VRED Professional 2022

Lumion 11.5 Enscape 3.0

Lumion is a real-time rendering tool popular with architects. The 11.5 release uses DirectX 12 rasterisation. It does not currently support hardware-based ray tracing.

The software can work with 8K textures and has a vast object library including trees with leaves that move in the wind, all of which can place huge demands on GPU processing and memory.

We tested the GPUs in two ways: one measuring real-time 3D performance in terms of Frame Per Second (FPS) and two, recording the time it takes to render an 8K scene.

Lumion supplied us with two datasets: a standard architectural house with surrounding vegetation, which will fit into 8 GB of GPU memory; and a colossal building model which needs 28 GB, more than the capacity of the Nvidia RTX A4000 (16 GB) and RTX A5000 (24 GB).

It came as no surprise that the AMD Radeon Pro W6800 came out top when testing the 28 GB model as it was the only GPU able to load the entire dataset into memory. The Nvidia RTX A4000 (16 GB) and RTX A5000 (24 GB) really struggled, especially in real-time 3D where it was very hard to navigate the scene.

With the smaller scene, however, the Nvidia RTX A5000 demonstrated a clear lead over the Radeon Pro W6800 and the RTX A4000 also stood up well, edging out the Radeon Pro W6800 when rendering. Enscape is a real-time viz and VR tool for architects that delivers very high-quality graphics in the viewport. The software has used elements of ray tracing for some time and version 3.0 is RTX-enabled, so hardware ray tracing is supported on Nvidia RTX GPUs. Later versions will use the more modern Vulkan API and support ray tracing on both Nvidia and AMD GPUs.

For our tests, we used a large scene of a building complex and its surrounding area in Enscape 3.0 (non RTX). At 9.5 GB, the GPU memory requirements of this model are relatively high, but Enscape models can be much larger.

In terms of performance, the Radeon Pro W6800 delivered a very smooth experience at 29 FPS, more than double that of the Radeon Pro W5700. It edged out the Nvidia RTX A4000 but was a bit behind the Nvidia RTX A5000.

Autodesk VRED Professional is an automotive-focused 3D visualisation, virtual prototyping and VR tool. It uses OpenGL and delivers very high-quality visuals in the viewport. It offers several levels of real-time anti-aliasing (AA), which is important for automotive styling, as it smooths the edges of body panels. However, AA calculations use a lot of GPU resources, both in terms of processing and memory.

We tested our automotive model with AA set to ‘off’ and ‘ultra-high’. As we have seen previously with AMD GPUs, the AMD Radeon Pro W6800 did OK with anti-aliasing set to off, but was still significantly behind the RTX A5000. With anti-aliasing enabled, however, performance dropped considerably, with even the RTX A4000 taking a substantial lead.

Solidworks Visualize 2021

The name of this GPU-accelerated physically-based renderer is a bit misleading as it works with many more applications than the CAD application of the same name. It can import models from PTC Creo, Solid Edge, Catia and Inventor, as well as several neutral formats.

The software was initially programmed to work with Nvidia Iray and, more recently, Nvidia RTX. However, in the 2020 release, AMD Radeon ProRender was added, so users now have a choice of two rendering engines. Both support denoising, a post-processing technique that filters out noise from unfinished / noisy images and means you can get better-looking renders with significantly fewer rendering passes.

Chart: Unreal Engine 4.26 (DirectX 12 rasterisation), Audi car configurator model (ray tracing disabled), 4K (3,840 x 2,160 resolution), Frames Per Second (bigger is better)

Chart: Unreal Engine 4.26 (DirectX 12 rasterisation), Arch Viz interior model (ray tracing disabled), 4K (3,840 x 2,160 resolution), Frames Per Second (bigger is better)

Chart: Unreal Engine 4.26 (DirectX 12 DXR), Audi car configurator model (ray tracing enabled), 4K (3,840 x 2,160 resolution), Frames Per Second (bigger is better)

Chart: Unreal Engine 4.26 (DirectX 12 DXR), Arch Viz interior model (ray tracing enabled), 4K (3,840 x 2,160 resolution), Frames Per Second (bigger is better)

We tested both AMD and Nvidia GPUs with Radeon ProRender using the PC model from the SPECapc for Solidworks 2021 benchmark. We rendered at 1,500 x 1,500 resolution with 1,000 passes (denoising disabled) and 100 passes (denoising enabled) with accurate quality. Both settings produced excellent visual results.

With denoising enabled, there was little between the Radeon Pro W6800 and Nvidia’s Ampere GPUs, but the RTX A5000 had a bigger lead with denoising disabled.

Solidworks 2021

While most CAD applications won’t benefit from any GPU more powerful than the Nvidia Quadro P2200 or AMD Radeon Pro W5500, Solidworks 2021 is an exception. By using OpenGL 4.5, a more modern version of the popular graphics API, more algorithms can be pushed onto the GPU, so there is a benefit to higher performance cards.

Even so, the application is still CPU limited to some extent, so the performance benefit of more powerful GPUs isn’t as big as you’d expect from a dedicated real-time viz tool.

As with most CAD tools, the most popular way to view models in Solidworks is in ‘shaded with edges’ mode. In this mode, using the SPECapc for SolidWorks 2021 benchmark, the Radeon Pro W6800 showed a small improvement over the Radeon Pro W5700, but was behind both Nvidia GPUs.

Solidworks also features more realistic display styles for viewing models in real time. Solidworks RealView, which is only supported by pro GPUs, adds realistic materials and supports environment reflections and floor shadows. Meanwhile, ambient occlusion adds more realistic shadows and helps bring out details.

With six Mini DisplayPort outputs the W6800 can drive up to six displays at 5K resolution or up to two displays at 8K

With a peak power of 250W, the W6800 requires a 6-pin and an 8-pin power connector

Chart: Enscape 3.0 (OpenGL), large building complex, 4K (3,840 x 2,160 resolution)

| GPU | Frames Per Second (bigger is better) |
|---|---|
| AMD Radeon Pro W5700 | 13 |
| AMD Radeon Pro W6800 | 30 |
| Nvidia Quadro RTX 4000 | Inconsistent results |
| Nvidia RTX A4000 | 27 |
| Nvidia RTX A5000 | 36 |

Chart: Autodesk VRED Professional 2022 (OpenGL), automotive model (no anti-aliasing), 4K (3,840 x 2,160 resolution)

| GPU | Frames Per Second (bigger is better) |
|---|---|
| AMD Radeon Pro W5700 | 30.65 |
| AMD Radeon Pro W6800 | 51.85 |
| Nvidia Quadro RTX 4000 | 30.88 |
| Nvidia RTX A4000 | 48.80 |
| Nvidia RTX A5000 | 63.30 |

Chart: VRMark Blue Room (DirectX 11), Frames Per Second (bigger is better)

Chart: Autodesk VRED Professional 2022 (OpenGL), automotive model (anti-aliasing ultra-high), 4K (3,840 x 2,160 resolution)

| GPU | Frames Per Second (bigger is better) |
|---|---|
| AMD Radeon Pro W5700 | 3.30 |
| AMD Radeon Pro W6800 | 5.45 |
| Nvidia Quadro RTX 4000 | 7.30 |
| Nvidia RTX A4000 | 12.25 |
| Nvidia RTX A5000 | 15.98 |

Chart: VRMark Cyan Room (DirectX 12), Frames Per Second (bigger is better)

Arch viz studio Beehive pushed the W6800 to its limits in Lumion on ‘Aedas City’, a visualisation project that features six of the international architecture firm’s building designs. It uses 28 GB (yes, 28 GB) of GPU memory

Both viewing styles are more GPU-intensive, so performance is less limited by the frequency of the CPU. In our tests, we saw a bigger benefit to the more powerful GPUs when RealView, shadows and ambient occlusion were enabled.

We were unable to test the Nvidia RTX A5000 as Solidworks 2021 Service Pack 3 did not recognise the card. We expect this to be fixed in SP4, out soon.

VRMark

We also tested with VRMark, a dedicated Virtual Reality benchmark that uses both DirectX 11 and DirectX 12. It’s biased towards 3D games, so it’s not perfect for our needs, but it should give a good indication of the performance one might expect in ‘game engine’ viz tools, although all datasets are different.

The Radeon Pro W6800 came out top in the ‘Cyan room’ test, which measures DirectX 12 performance. AMD itself has highlighted how its ‘RDNA 2’ architecture performs well in DirectX 12 applications.

The verdict

The Radeon Pro W6800 stands out from the competition due to its substantial 32 GB of memory, surpassed only by the Nvidia RTX A6000 which costs twice as much. But you have to take design viz very seriously to need such a huge amount.

Architectural visualisation studio Beehive certainly does. It pushed the W6800 to its limits in Lumion, while working on ‘Aedas City’, a visualisation project that features six of the international architecture firm’s building designs.

And because the project could be held entirely within GPU memory, it managed to massively reduce render times for an 891-frame video, from 36 hours and 11 mins (with the 24 GB Nvidia Quadro RTX 6000 GPU) to 9 hours and 27 mins (with the Radeon Pro W6800).
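To put that saving in numbers, here is a minimal Python sketch that converts the two reported render times into minutes, then works out the overall speedup and the average time per frame. The helper names are ours, and the per-frame figures assume every one of the 891 frames took roughly the same time, which real animation frames rarely do. Note too that the two times come from different GPUs (the Quadro RTX 6000 and the Radeon Pro W6800).

```python
# Illustrative arithmetic on the render times quoted for Beehive's 891-frame video.
# Assumption: frames take roughly equal time, so per-frame values are simple averages.

FRAMES = 891


def to_minutes(hours: int, minutes: int) -> int:
    """Convert an 'X hours Y mins' duration into total minutes."""
    return hours * 60 + minutes


def summarise(before_min: int, after_min: int, frames: int = FRAMES) -> dict:
    """Overall speedup plus average seconds per frame before and after."""
    return {
        "speedup": before_min / after_min,
        "sec_per_frame_before": before_min * 60 / frames,
        "sec_per_frame_after": after_min * 60 / frames,
    }


quadro_rtx_6000 = to_minutes(36, 11)   # 24 GB card
radeon_pro_w6800 = to_minutes(9, 27)   # 32 GB card

stats = summarise(quadro_rtx_6000, radeon_pro_w6800)
for name, value in stats.items():
    print(f"{name}: {value:.2f}")
```

On these figures the W6800 finished roughly 3.8 times faster, which works out at an average of about 146 seconds per frame falling to about 38 seconds.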

There are big benefits for projects like this, but this is an extreme example. Most design-centric visualisation workflows require significantly less GPU memory, although with ever-increasing demands for realism and resolution, this will likely change in the future. There is also a strong workflow argument for more GPU memory: artists don’t have to worry so much about optimising geometry or textures.

For now, in more mainstream viz workflows, AMD faces very stiff competition from Nvidia. The 16 GB Nvidia RTX A4000, for example, generally offers a little less performance than the Radeon Pro W6800 but costs half as much. Meanwhile, the 24 GB Nvidia RTX A5000 offers parity on price, but has a clear performance lead in some workflows and better software compatibility.

One can’t help but wonder if AMD has missed a trick by not pricing the Radeon Pro W6800 more aggressively to make it more competitive in workflows where large memory capacity is less important. Or perhaps there’s room for a Radeon Pro W6700?

Nvidia also appears to have a clear lead in DXR hardware ray tracing, although this is perhaps to be expected. AMD’s ray accelerators are ‘first generation’ and there is also scope for driver improvements.

AMD is innovating in other areas, however. The Radeon Pro Viewport Boost, for example, is an exciting feature that takes a smarter approach to how precious GPU resources are allocated, and this is certainly one to watch for the future.

We also wait with interest to see how the forthcoming 8 GB AMD Radeon Pro W6600 shapes up. At $649 it should hit the sweet spot for CAD users who also want a real-time 3D, ray tracing or VR capability. Nvidia doesn’t yet have a pro GPU with hardware ray tracing in this entry-level market segment.

AMD Radeon Pro Viewport Boost

In recent years AMD has allocated significant resources to the development of its Radeon Pro graphics drivers. The new 21.Q2 release promises to increase 3D performance by dynamically reducing viewport resolution, without impacting the visual experience. Radeon Pro Viewport Boost works with any AMD Radeon Pro GPU, but Greg Corke tests it out with the new Radeon Pro W6800

tinyurl.com/viewport-boost

At the beginning of June AMD launched the AMD Radeon Pro W6800, a monster 32 GB professional GPU, which we review in-depth on page 20.

Such a huge amount of on-board memory certainly makes the W6800 stand out from other GPUs in its class. However, the ‘RDNA 2’ workstation card also features a new pro graphics driver feature called Radeon Pro Viewport Boost, which is designed to reduce latency and boost viewport navigation performance.

The idea behind the technology is simple but smart. It detects when a 3D model is moving quickly in the viewport, then dynamically drops the resolution to reduce the number of pixels the GPU needs to process. Then, as soon as that movement stops, it restores the full pixel count. According to AMD, this can increase Frames Per Second (FPS) dramatically without impacting the visual experience.
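AMD has not published how Viewport Boost decides when, and by how much, to drop resolution, so the following is only a hypothetical Python sketch of the general idea of motion-triggered dynamic resolution described above. The function names, the camera-speed thresholds (`start_speed`, `full_speed`) and the linear ramp between them are all our own assumptions. Only the user-set minimum and the restore to full resolution when motion stops come from the text.

```python
# Hypothetical sketch of motion-triggered dynamic resolution scaling.
# NOT AMD's implementation: thresholds and the linear ramp are assumptions.


def render_scale(camera_speed: float, min_scale: float = 0.5,
                 start_speed: float = 1.0, full_speed: float = 4.0) -> float:
    """Per-axis render scale (1.0 = native) for a given camera speed."""
    if camera_speed <= start_speed:
        return 1.0  # slow or stationary view: render at full resolution
    # Ramp linearly from native resolution down to min_scale as speed rises.
    t = min((camera_speed - start_speed) / (full_speed - start_speed), 1.0)
    return 1.0 - t * (1.0 - min_scale)


def render_size(native_w: int, native_h: int, camera_speed: float,
                min_scale: float = 0.5) -> tuple[int, int]:
    """Resolution rendered at this camera speed in this sketch."""
    s = render_scale(camera_speed, min_scale)
    return round(native_w * s), round(native_h * s)


if __name__ == "__main__":
    for speed in (0.0, 2.5, 10.0):  # stationary, moderate pan, fast 'running'
        print(speed, render_size(3840, 2160, speed))
```

A graduated ramp rather than a single on/off switch is just one plausible design; it avoids a visible jump in sharpness the moment the camera starts moving.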

AMD Radeon Pro Viewport Boost currently works with Autodesk Revit 2021, Autodesk 3ds Max 2021, Twinmotion and Unreal Engine 4 (for packaged DirectX 11 projects only – not currently DirectX 12 or Unreal Engine Editor). Support for other applications is coming soon.

It works best in GPU-limited workflows, i.e. those where the GPU is being pushed to its limits and is the bottleneck in the workstation. With this in mind, it should deliver the biggest benefits at higher resolutions (4K and above), with larger models and when visual quality settings are maxed out.

Testing Radeon Pro Viewport Boost

Radeon Pro Viewport Boost is enabled in the AMD Radeon Pro 21.Q2 driver under graphics settings. Users have control over the minimum dynamic resolution that the application viewport will drop down to, expressed as a percentage of its native resolution. It can be set between 50% and 83.3%. The lower the value, the bigger the potential performance boost.
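To put the 50% to 83.3% range in perspective, this minimal Python sketch works out the reduced render resolution and the share of native pixels for a 4K viewport. It assumes the percentage applies to each axis (width and height), which is common for dynamic resolution schemes but is not confirmed by AMD’s description. If it applied to total pixel count instead, the savings would be smaller. The function name is hypothetical.

```python
# Assumption: the Viewport Boost minimum is applied per axis, not to total pixel count.
MIN_PCT, MAX_PCT = 50.0, 83.3  # range exposed in the Radeon Pro 21.Q2 driver


def boosted_viewport(native_w: int, native_h: int, pct: float):
    """Return (width, height, fraction of native pixels) at a given minimum %."""
    if not MIN_PCT <= pct <= MAX_PCT:
        raise ValueError(f"minimum resolution must be {MIN_PCT}-{MAX_PCT}%")
    s = pct / 100.0
    w, h = round(native_w * s), round(native_h * s)
    return w, h, (w * h) / (native_w * native_h)


for pct in (MIN_PCT, MAX_PCT):
    w, h, frac = boosted_viewport(3840, 2160, pct)
    print(f"{pct}%: {w} x {h} ({frac:.0%} of native pixels)")
```

Under this assumption the 50% setting leaves the GPU with a quarter of the native pixels to process while the model is moving, against roughly 69% at 83.3%.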

To show the extent to which it is working at any given moment, one to four small green dots appear in the top left corner of the viewport – one being the least, four being the most.

The smart thing about Radeon Pro Viewport Boost is that it only works when the model is in fast motion, when the eye is less sensitive to a loss of visual detail.

In both of our Unreal Engine arch viz interior scenes, for example, it only kicked in when ‘running’ (shift, up arrow) and not when ‘walking’ (up arrow only). At 50%, the drop in resolution is clearly visible but only really when you actively look out for it. At 83.3% it was very hard to see any difference.

When modelling in Revit or 3ds Max, lines become more pixelated. But given the speed at which one tends to pan, rotate or zoom in to quickly shift focus to a different part of the model, it’s really not detrimental to the overall experience.

We tested on a fairly standard 4K (3,840 x 2,160 resolution) 60Hz IPS panel. There may be a bigger discernible difference on higher spec displays.

The performance benefits can be huge. In Unreal Engine 4.26, testing with a Paris interior scene from arch viz artist Benoit Dereau (benoitdereau.com), we saw frame rates more than double (a 116% increase) when minimum resolution was set to 50%. In Unreal Engine’s freely available Arch Viz interior scene, packaged as a DirectX 11 project, frame rates increased by 59%.

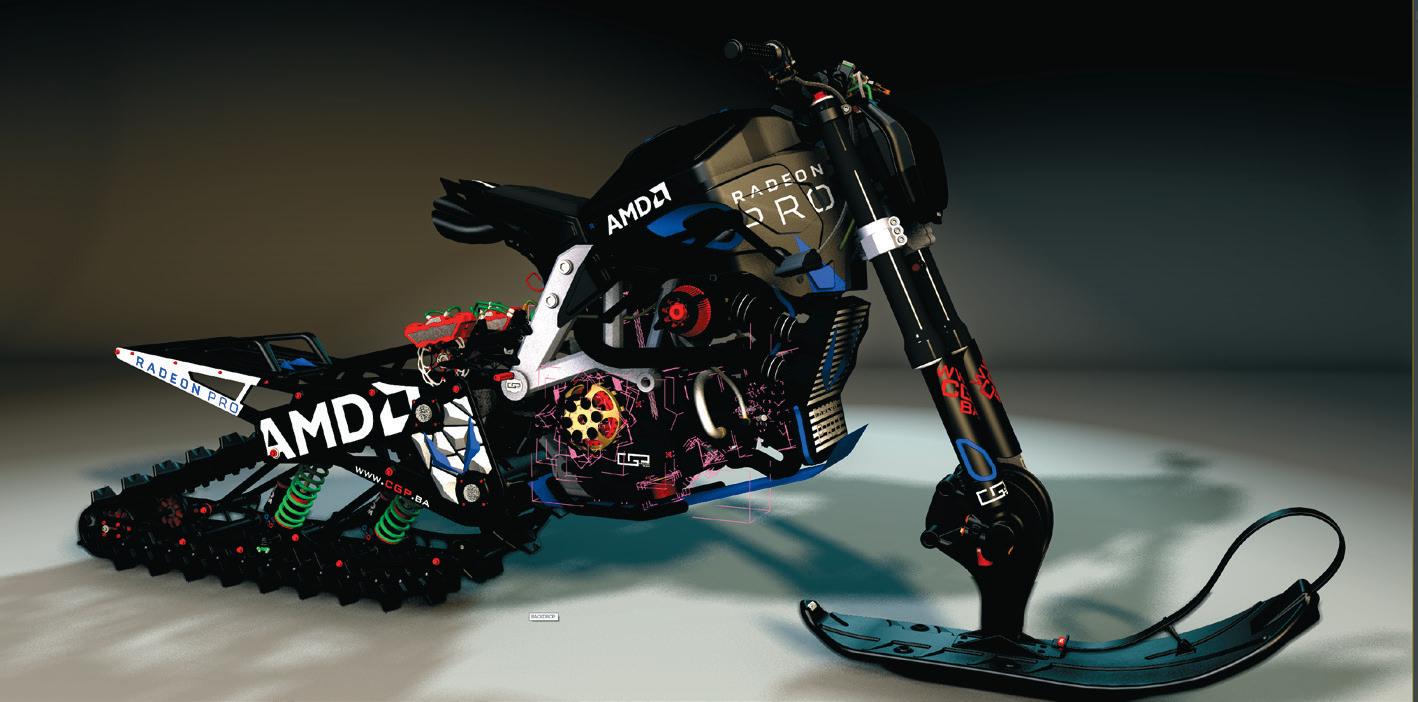

In Twinmotion the boost was around 30% with the ‘materials room’ demo scene when visual settings were set to ‘ultra’. In 3ds Max we saw around a 20% improvement with AMD’s ‘snow bike’ model with high anti-aliasing and ‘High Quality’ shading.

What about CAD/BIM?

For design viz applications like Unreal Engine and Twinmotion, having the highest quality graphics is always the ultimate goal. However, in 3D CAD and BIM modelling workflows it’s usually less important, with the focus instead on the clear representation of geometry.

With this in mind, the benefits of Radeon Pro Viewport Boost in CAD or BIM applications like Autodesk Revit are less clear. In Revit, the most popular way to view BIM models is in ‘shaded’ mode. However, with this display style enabled we saw no benefit from Radeon Pro Viewport Boost. As with many CAD and BIM tools, the GPU simply isn’t stressed enough, so the CPU becomes the bottleneck instead. It’s only when you start ramping up the quality settings that more demands are placed on the GPU and Radeon Pro Viewport Boost can come into effect.

And while the performance increases can be large, we only found a few select scenarios where a substantial benefit could be seen. We tested with four relatively small Revit models and found that if the following criteria were met (realistic display style, smooth lines with anti-aliasing, transparency enabled, and viewport set to perspective mode) then there was a huge performance gain: almost double the Frames Per Second. But without all of those enabled, especially with the viewport set to orthographic, the performance gains were minimal, or there were none at all. We would be interested to learn how the system works with significantly larger Revit models.

It’s also worth pointing out here that most CAD and BIM applications already have a built-in feature to help improve viewport performance when working with large models. In Revit, for example, the ‘simplify display during view navigation’ feature (which is switched on by default) suspends certain graphics effects and temporarily removes some objects when the model is in motion. Radeon Pro Viewport Boost gives the best performance boost when this feature is enabled, so you are getting a lower-res representation of a model that has already been simplified.
Of course, Revit, like many CAD and BIM applications, is renowned for being CPU limited, so it’s hardly surprising we found reduced benefits for Radeon Pro Viewport Boost. This is especially true for a high-end graphics card like the Radeon Pro W6800, which is complete overkill for Revit. In CAD applications that make better use of the GPU, such as Solidworks 2021, AMD Radeon Pro Viewport Boost could have a bigger impact in a broader set of viewing styles.

It’s also important to note that CAD applications are changing, with new graphics engines that use modern APIs like Vulkan to push more processing onto the GPU and reduce the CPU bottleneck. This includes future versions of Solidworks (Project Romulan - tinyurl.com/SW-graphics) and Autodesk Revit (and other Autodesk applications) which will use the new One Graphics System (tinyurl.com/Revit-GPU).

What we think

It’s good to see AMD innovating by taking a smarter approach to how GPU resources are allocated. Why bother rendering pixels that most people won’t even notice when models are moving at speed?

From our tests we see a clear benefit for design visualisation, where applications almost always push the GPU to its limits and visual quality is of paramount importance. We’re less convinced with the broader advantages for CAD and BIM software. In Revit, for example, it appears you have to use a fairly specific combination of visual settings in order to benefit. And, in a workflow where the clear representation of geometry is usually the priority, one would also question how many people actually view models that way.

At the moment, application support is quite limited, but this will grow. We imagine AMD is working on support for Unreal Engine Editor as well as DirectX 12, which should be a big attraction for viz artists, especially those working with huge datasets that approach the substantial 32 GB memory limit of the Radeon Pro W6800.

When AMD first announced Viewport Boost it was exclusive to the Radeon Pro W6800 and W6600 GPUs. AMD has now confirmed that it will be expanding support to prior generation Radeon Pro GPUs as well. So, for those that already own an AMD Radeon Pro GPU, this could be a great way to get more out of your investment.

And it’s perhaps with less powerful GPUs like these that users will get the biggest benefits. In all of our tests we experienced pretty good viewport performance (most well above 20 FPS) even with Viewport Boost disabled. But it’s when frame rates drop lower, and viewports become choppy, that any performance increase can make a huge difference to practical workflows and become far more important than numbers on charts.

With Radeon Pro Viewport Boost enabled and min resolution set to 50% some pixelation is noticeable on this wall painting when ‘running’ in this Unreal Engine scene. The four green dots (top left) show that Viewport Boost is in full effect

As soon as you stop ‘running’ the four green dots disappear and the full resolution image is instantly restored

In 3ds Max, with Radeon Pro Viewport Boost enabled and min resolution set to 50%, there is little difference between the moving image (left) and the static image (below) although the lettering on the bike is not as sharp

Nvidia RTX A4000 / A5000

Nvidia’s new Ampere-based pro GPUs, the Nvidia RTX A4000 and RTX A5000, offer a big step up from the Turing-based Quadro RTX family. With more memory and significantly enhanced processing, they promise to make light work of demanding real-time ray tracing, GPU rendering and VR workflows, writes Greg Corke

Price $1,000 (A4000) / $2,250 (A5000)

nvidia.com

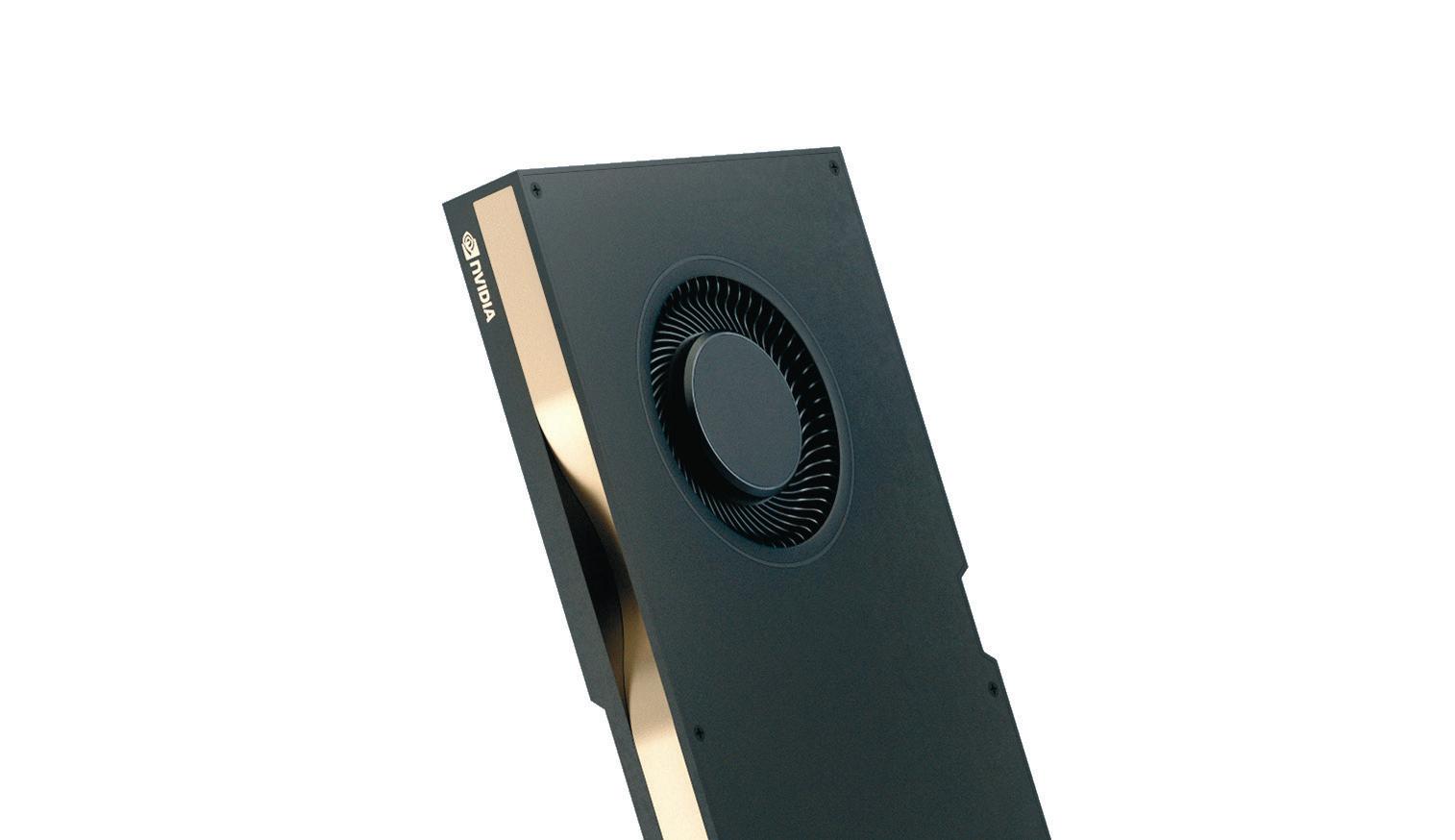

In February 2021 we reviewed the Nvidia RTX A6000, the first pro desktop GPU to be based on Nvidia’s ‘Ampere’ architecture. With 48 GB of memory and buckets of processing power, the dual slot 300W graphics card is designed for the most demanding visualisation workflows: think city-scale digital twins or complex product visualisations using very high-fidelity textures, such as those captured from real-life scans.

Of course, the Nvidia RTX A6000 is complete overkill for most architects or product designers who simply want a capable GPU for real-time visualisation, GPU rendering or VR. And it’s here that the new Nvidia RTX A4000 and Nvidia RTX A5000 come into play.

Announced at Nvidia’s GTC event this year, the PCIe Gen 4 ‘Ampere’ Nvidia RTX A4000 and Nvidia RTX A5000 are the replacements for the PCIe Gen 3 ‘Turing’ Nvidia Quadro RTX 4000 and Quadro RTX 5000, which launched in 2019.

The RTX A4000 and A5000 are midrange ‘Quadro’ GPUs in everything but name. Nvidia might be retiring its long-serving Quadro workstation brand, but the features remain the same.

Both GPUs offer more memory than their consumer GeForce counterparts, are standard issue in workstations from Dell, HP and Lenovo, and come with pro drivers with ISV certification for a wide range of CAD/BIM applications.

And with an estimated street price of $1,000 for the Nvidia RTX A4000 and $2,250 for the Nvidia RTX A5000, they have much more palatable price tags than the Nvidia RTX A6000, which costs $4,650.

Nvidia RTX A4000 (16 GB)

With 16 GB of GDDR6 ECC memory, the Nvidia RTX A4000 offers a big step up from the 8 GB Quadro RTX 4000. 8 GB is fine for mainstream viz workflows but for more complex projects it can be limiting, so delivering 16 GB in a sub $1,000 pro GPU is a big step forward. Previously, 16 GB was only available on the ‘Turing’-based Quadro RTX 5000.

As you’d expect from Nvidia’s new ‘Ampere’ architecture, the Nvidia RTX A4000 also offers a significant improvement in processing. This can be seen in all areas of the GPU, with more CUDA cores for general processing, third-generation Tensor Cores for AI operations and second-generation RT Cores for hardware-based ray tracing. It leads to a substantial performance increase in many different applications (see later on).

Furthermore, as the Nvidia RTX A4000 is a single slot GPU with a max power consumption of 140W delivered through a single 6-pin PCIe connector, it’s available in a wide range of desktop workstation form factors. This includes compact towers like the HP Z2 Tower G8 and Dell Precision 3650.

The board features four DisplayPort 1.4a ports and can drive up to four displays at 5K resolution. It is cooled by a single ‘blower’ type fan, which draws in cool air from the top and bottom of the card, pushes it through a radiator and then directly out of the rear of the workstation chassis. This is in contrast to most consumer GeForce GPUs which use axial fans that recirculate air inside the machine.

There are pros and cons to each design, but with a blower fan you can stack cards within the chassis without having to leave space between them. This means you can get a very good density of GPUs inside a mid-sized chassis.

With the AMD Threadripper Pro-based Lenovo ThinkStation P620, for example, you could get four Nvidia RTX A4000s back-to-back, which could be a very interesting proposition for GPU rendering. Even though the RTX A4000 doesn’t support NVLink (so there’s no pooling of GPU memory), 16 GB is still a good amount, and two, three or four RTX A4000s could work out well in terms of price/performance compared to the more powerful RTX A5000 or A6000.

Another potential use case for high-density multi-GPU is workstation virtualisation using GPU passthrough, where each user gets a dedicated GPU. Again, this workflow looks well suited to the Lenovo ThinkStation P620, which can be configured with up to 64 CPU cores and 2 TB of memory.

Other more niche pro viz features include support for 3D Stereo, Nvidia Mosaic for professional multi-display solutions, and Quadro Sync II, an add-in card that can synchronise the display and image output from multiple GPUs within a single system, or across a cluster of systems.

Nvidia RTX A5000 (24 GB)

With 24 GB of GDDR6 ECC memory, the Nvidia RTX A5000 offers only a 50% memory uplift compared to the Quadro RTX 5000 it replaces.

Like the Nvidia RTX A4000 it offers a significant upgrade in all areas of processing — CUDA, Tensor and RT cores.

It’s a double-height board with a max power consumption of 230W, which it draws from the PSU via an 8-pin PCIe connector, but it’s still available in compact towers.

The board also features four DisplayPort 1.4a ports and is cooled by a single ‘blower’ type fan, but only draws in cool air from one side of the card.

The Nvidia RTX A5000 supports all the same features as the Nvidia RTX A4000 but differs in two main areas.

One, it supports Nvidia NVLink, so GPU memory can be expanded to 48 GB by connecting two 24 GB GPUs together. Two, it supports Nvidia RTX vWS (virtual workstation software), so it can deliver multiple high-performance virtual workstation instances that enable remote users to share resources. In the Lenovo ThinkStation P620, for example, which we reviewed earlier this year, you could get a very high density of CAD/BIM users who only need high-end RTX performance from time to time.

Nvidia RTX A5000 inside the AMD Ryzen 5000-based Scan 3XS GWP-ME A132R workstation

Testing the new cards

We put the Nvidia RTX A4000 and Nvidia RTX A5000 through a series of real-world application benchmarks, for GPU rendering, real-time visualisation and 3D CAD.

All tests were carried out using the AMD Ryzen-based Scan 3XS GWP-ME A132R workstation at 4K (3,840 x 2,160) resolution using the latest 462.59 Nvidia driver (see page WS12 for a full review).

For comparison, we used the last two generations of ‘4000’ class Nvidia pro GPUs: the 8 GB ‘Turing’ Nvidia Quadro RTX 4000 (from 2019) and the 8 GB ‘Pascal’ Nvidia Quadro P4000 (from 2017). Three to four years is quite a typical upgrade cycle in workstations, so the intention here is to give a good idea of the performance increase one might expect from an older machine (N.B. to see all of the benchmark scores for the Nvidia Quadro P4000 visit tinyurl.com/RTX4000).

We also threw some Nvidia RTX A6000 scores in there. These were done on two different workstations, one with a 32-core Threadripper Pro 3970X and one with a quad-core Intel Xeon W-2125 CPU. While both CPUs have lower frequencies and instructions per clock (IPC) than the Ryzen 5000-series CPU in the Scan workstation, the results should still give a pretty good idea of comparative performance, especially in GPU rendering software.

The results of these tests can be seen in the charts on page WS20 in our review of the AMD Radeon Pro W6800.

Hardware-based ray tracing

It’s been just over two years since Nvidia introduced ‘Turing’ Nvidia Quadro RTX, its first pro GPUs with RTX hardware ray tracing.

In a classic chicken and egg launch, there were very few RTX-enabled applications back then, but this has now changed. For design viz, there’s Chaos V-Ray, Chaos Vantage, Enscape, Unreal Engine, Unity, D5 render, Nvidia Omniverse, Autodesk VRED, KeyShot, Siemens NX Ray Traced Studio, Solidworks Visualize, Catia Live rendering and others.

Nvidia RTX gave GPU rendering a massive kick start and while there is increased competition from hugely powerful CPUs like the 64-core AMD Threadripper [Pro], we are seeing deeper penetration of GPU rendering tools, especially in architect / engineer / product designer friendly workflows.

Nvidia RTX is being used to massively accelerate classic viz focused ray trace renderers like V-Ray, KeyShot and Solidworks Visualize, which we test later on in this article. However, some of the more exciting developments are coming from the AEC sector in tools like Enscape, Chaos Vantage and Unreal Engine, which really make ray tracing ‘real-time’. Vantage, for example, is built from the ground up for real-time ray tracing so can maximise the usage of RT cores within the new GPUs.

Chaos Group V-Ray

V-Ray is one of the most popular physically based rendering tools, especially in architectural visualisation. We put the new cards through their paces using the freely downloadable V-Ray 5 benchmark, which has dedicated tests for Nvidia CUDA GPUs, Nvidia RTX GPUs, as well as CPUs.

The results were impressive. In the CUDA test, the Nvidia RTX A4000 was 1.62 times faster than the previous generation Nvidia Quadro RTX 4000 and in the RTX test 1.70 times faster. The lead over the Pascal-based Quadro P4000 was nothing short of colossal – 3.53 times faster in the CUDA test. As the P4000 does not have dedicated RT cores, it could not run the RTX test.

Stepping up to the Nvidia RTX A5000 will give you an additional boost. Compared to the Nvidia RTX A4000 it was between 1.27 and 1.37 times faster.

Interestingly, the RTX A5000 was not that far behind the RTX A6000, which costs more than twice as much.

rendering. But it’s one of the slickest implementations we’ve seen, allowing users to switch between CPU and GPU rendering at the click of a button.

In the KeyShot 10 benchmark, part of the free KeyShot Viewer, the performance leap was even more substantial than in V-Ray. The Nvidia RTX A4000 and Nvidia RTX A5000 outperformed the Quadro RTX 4000 by a factor of 1.89 and 2.51 respectively. And the RTX A5000 was only 20% slower than the RTX A6000.
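The ‘factor of’ figures are simply ratios of the KeyShot 10 GPU benchmark scores, which KeyShot reports relative to a reference system: 32.94 for the Quadro RTX 4000, 62.34 for the RTX A4000 and 82.60 for the RTX A5000 in our tests. A minimal Python sketch of that arithmetic follows; the function name is ours.

```python
# KeyShot 10 GPU benchmark scores from our tests (relative to KeyShot's reference system).
scores = {
    "Nvidia Quadro P4000": 5.60,
    "Nvidia Quadro RTX 4000": 32.94,
    "Nvidia RTX A4000": 62.34,
    "Nvidia RTX A5000": 82.60,
}


def speedup_over(baseline: str, scores: dict[str, float]) -> dict[str, float]:
    """Express every score as a multiple of the chosen baseline GPU's score."""
    base = scores[baseline]
    return {gpu: score / base for gpu, score in scores.items()}


for gpu, factor in speedup_over("Nvidia Quadro RTX 4000", scores).items():
    print(f"{gpu}: {factor:.2f}x")
```

Passing ‘Nvidia Quadro P4000’ as the baseline instead shows the same scores relative to the four-year-old ‘Pascal’ card.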

Solidworks Visualize

The name of this GPU-accelerated physically based renderer is a bit misleading as it works with many more applications than the CAD application of the same name. It can import models from Creo, Solid Edge, Catia and Inventor, as well as several neutral formats.

Since the 2020 release the software has supported Nvidia RT cores and Tensor cores to improve rendering performance with Nvidia RTX GPUs. Users can choose to render scenes with or without denoising enabled.

Denoising is a post-processing technique based on machine learning that filters out noise from unfinished / noisy images and is the foundation for many RTX-accelerated applications. It means you can get better-looking renders with significantly fewer rendering passes.

DS Solidworks reckons that if a scene routinely needs 500 passes without the denoiser, then you may be able to achieve the same rendering quality with 50 passes with the denoiser enabled.

We tested the stock 1969 Camaro car model at 4K resolution with 1,000 passes (denoising disabled) and 100 passes (denoising enabled) set to accurate quality. Both settings produced excellent visual results.

The RTX A4000 and RTX A5000 delivered the 100-pass render in 22 seconds and 14 seconds respectively. This isn’t the most complex scene, but being able to render at such speeds is quite incredible and can have a profound impact on workflows. In comparison, it took the Quadro P4000 105 seconds, so you can see just how far things have progressed in four years.

Real-time 3D

While GPU rendering is a major play for the Nvidia RTX A4000 and Nvidia RTX A5000, real-time 3D using OpenGL, DirectX and (in the future) Vulkan continues to be a very important part of architectural visualisation, with applications including Twinmotion, Lumion, Enscape, Unreal Engine, LumenRT and others.

Of course, the boundaries between real-time 3D and ray tracing continue to blur. In fact, out of the list above only Lumion and Twinmotion are yet to support RTX, although it should be coming to Twinmotion soon.

To test frame rates, we used a combination of monitoring software including FRAPS, Xbox Game Bar and MSI Afterburner. We only tested at 4K (3,840 x 2,160) resolution. At FHD (1,920 x 1,080) resolution this class of GPU simply isn’t stressed enough. In Autodesk VRED Professional we tested our automotive model with AA set to ‘off’ and ‘ultra-high’.

Considering that this pro viz application used to only really run effectively on Nvidia’s ultra-high-end professional GPUs, it’s quite astounding that the Nvidia RTX A4000 – a sub $1,000 card – delivered over 30 FPS at 4K resolution with medium anti-aliasing. That said, those really pushing the boundaries of automotive visualisation will still likely need the top-end Nvidia RTX A6000, especially for high-res VR workflows.

In Unreal Engine we used two datasets: an arch viz interior of a small apartment and the Automotive Configurator, which features an Audi A5 convertible. Both scenes were tested with ray tracing enabled (DirectX Raytracing, DXR) and without (DirectX 12 rasterisation). The results were pretty much as expected, with good scaling between all the GPUs with DirectX 12 rasterisation. With real-time ray tracing enabled, performance naturally takes a hit across the board, but the Quadro P4000, which has no RT cores, really suffers.

We also tested with VRMark, a dedicated virtual reality benchmark that uses DirectX 11 and DirectX 12. In the DX12 test both GPUs came in second to AMD’s Radeon Pro W6800 (see page WS20).

CAD and BIM

The Nvidia RTX A4000 and RTX A5000 are overkill for most CAD and BIM applications and are unlikely to give you significantly better 3D performance than more mainstream GPUs like the Nvidia Quadro P1000 or P2200. However, CAD applications are changing and, in the future, should be able to make much better use of the plentiful power of higher-end GPUs like the RTX A4000 and A5000.

In addition, both GPUs will be certified for a wide range of pro CAD / BIM applications, which matters to some firms. This is especially true for enterprises that buy 100s or 1,000s of workstations from large OEMs like HP, Dell and Lenovo and want assurance that the GPUs will be stable and properly supported by the software developer. Certification is a major reason why some firms choose Nvidia’s pro-focused RTX GPUs over their ‘consumer’ GeForce counterparts, so they can confidently use applications like Revit, Solidworks, PTC Creo and Siemens NX alongside more viz-focused tools like Chaos V-Ray, Enscape, Luxion KeyShot and Solidworks Visualize.

Solidworks Visualize 2021 SP3 (Iray): 1969 Camaro car model (denoising disabled), 1,000 passes, accurate quality, 4K (3,840 x 2,160 resolution). Render time in seconds (smaller is better):
Nvidia Quadro P4000: 1,029
Nvidia Quadro RTX 4000: 349
Nvidia RTX A4000: 211

Solidworks Visualize 2021 SP3 (Iray): 1969 Camaro car model (denoising enabled), 100 passes, accurate quality, 4K (3,840 x 2,160 resolution). Render time in seconds (smaller is better):
Nvidia Quadro P4000: 105
Nvidia Quadro RTX 4000: 35
Nvidia RTX A4000: 22

Luxion KeyShot 10 benchmark (GPU). Relative performance to reference system (bigger is better):
Nvidia Quadro P4000: 5.60
Nvidia Quadro RTX 4000: 32.94
Nvidia RTX A4000: 62.34
Nvidia RTX A5000: 82.60

V-Ray 5 image courtesy of Toni Bratincevic
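The render-time charts and the SPECapc results use different measures. A small Python sketch puts them on the same footing: for a fixed workload, a newer card's speed-up is the older card's render time divided by the newer card's. The times are the denoising-disabled Solidworks Visualize figures reported above. The dictionary and function names are illustrative only and are not part of any benchmark tool.

```python
# Solidworks Visualize 2021 SP3 (Iray) render times in seconds, taken from the
# chart for the 1969 Camaro model (denoising disabled, 1,000 passes, 4K).
RENDER_TIMES_S = {
    "Nvidia Quadro P4000": 1029,
    "Nvidia Quadro RTX 4000": 349,
    "Nvidia RTX A4000": 211,
}


def speedup(baseline_s: float, candidate_s: float) -> float:
    """For the same job, how many times faster the candidate is than the baseline."""
    return baseline_s / candidate_s


new_card = RENDER_TIMES_S["Nvidia RTX A4000"]
for name, seconds in RENDER_TIMES_S.items():
    if name != "Nvidia RTX A4000":
        print(f"RTX A4000 vs {name}: {speedup(seconds, new_card):.2f}x faster")
```

On these figures the RTX A4000 renders roughly 4.9 times faster than the Quadro P4000 and about 1.65 times faster than the Quadro RTX 4000.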

Solidworks 2021 can make better use of powerful GPUs than most CAD applications but it is still CPU limited to some extent, so the performance benefit the new cards give you isn’t as big as you’d get from a dedicated real-time viz tool.

In the SPECapc for SolidWorks 2021 benchmark we saw a small generation-on-generation improvement in the ‘shaded with edges’ test. The Nvidia RTX A4000 was 1.10 times faster than the Quadro RTX 4000 and 1.44 times faster than the ‘Pascal’ Quadro P4000.

With RealView, Shadows and Ambient Occlusion enabled we saw a bigger benefit over older GPUs. The Nvidia RTX A4000 was 1.16 times faster than the Quadro RTX 4000 and 1.57 times faster than the Quadro P4000.
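The two ratios quoted for each SPECapc test also imply how the older cards compare with each other. If the A4000 is a times the P4000 and b times the RTX 4000, then the RTX 4000 is a / b times the P4000. This Python sketch makes that step explicit. The results are derived, not measured, and because the inputs are rounded to two decimals they are only approximate. The names used are illustrative.

```python
# RTX A4000 speed-ups quoted in the SPECapc for SolidWorks 2021 results.
SPECAPC_A4000 = {
    "Shaded with edges": {"Quadro RTX 4000": 1.10, "Quadro P4000": 1.44},
    "RealView, Shadows and AO": {"Quadro RTX 4000": 1.16, "Quadro P4000": 1.57},
}


def implied_ratio(new_vs_oldest: float, new_vs_middle: float) -> float:
    """If new = a * oldest and new = b * middle, then middle / oldest = a / b."""
    return new_vs_oldest / new_vs_middle


for test, ratios in SPECAPC_A4000.items():
    ratio = implied_ratio(ratios["Quadro P4000"], ratios["Quadro RTX 4000"])
    print(f"{test}: Quadro RTX 4000 is roughly {ratio:.2f}x the Quadro P4000")
```

This gives about 1.31x for shaded with edges and about 1.35x with RealView, Shadows and Ambient Occlusion. Each GPU generation therefore contributes a modest step in this CPU-limited application.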

We were unable to test the Nvidia RTX A5000 as Solidworks 2021 SP3 did not recognise the card. We expect this to be fixed in SP4, out soon.

Conclusion

With the new Nvidia RTX A4000 and A5000, Nvidia has made its ‘Ampere’ GPU architecture much more accessible to a wider audience. In particular, we see the sub $1,000 Nvidia RTX A4000 hitting the sweet spot for designers, engineers or architects that want a pro viz capability in their workflow.

The performance leap from ‘Turing’ to ‘Ampere’ (Quadro RTX 4000 to RTX A4000) is hugely impressive. In real-time 3D, a 45% to 60% boost, generation on generation, seems typical, with even bigger gains from real-time ray tracing when the enhanced RT and Tensor cores come into play. The step up from the four-year-old ‘Pascal’ Quadro P4000 is phenomenal, especially for rendering.

Equipping the RTX A4000 with 16 GB of memory is very significant. While we often see models/scenes that surpass 8 GB (the capacity of the previous generation Quadro RTX 4000), scenes that are 16 GB and above are certainly less common, and are more the preserve of viz specialists than of most architects or product designers who use standard materials and assets.

For viz workflows that need lots of memory, Nvidia has strong competition from the 32 GB AMD Radeon Pro W6800, but in less demanding workflows Nvidia’s biggest competitor in pro graphics is currently itself.

The new 12 GB ‘consumer’ GeForce RTX 3080 Ti, for example, might have half the memory of the Nvidia RTX A5000, but offers more performance on paper for half the price. Nvidia even has a GeForce Studio driver for applications including Enscape, Unreal Engine and V-Ray.

Despite the obvious attraction of Nvidia’s consumer GPUs, Nvidia’s ‘A’ class models should continue to find favour in large firms and enterprises that buy in volume, want more memory, consistent supply, pro viz features or the assurance of certification.

Nvidia still has some work to do to flesh out its Ampere family. While mobile workstations already have entry-level RTX A2000 and A3000 GPUs, there’s no equivalent for desktops.

The AEC industry would certainly welcome a sub $500 pro RTX GPU to replace the Pascal-based Quadro P2200, which is now long in the tooth. In years gone by, we would have expected to see a desktop RTX A2000 before the end of 2021, but with ongoing supply challenges and high demand, things are very hard to predict right now.

Chaos Group V-Ray 5.0 benchmark: V-Ray GPU CUDA. vpaths (calculations per minute) (bigger is better):
Nvidia Quadro P4000: 290
Nvidia Quadro RTX 4000: 634
Nvidia RTX A4000: 1,025
Nvidia RTX A5000: 1,299

Chaos Group V-Ray 5.0 benchmark: V-Ray GPU RTX. vrays (calculations per minute) (bigger is better):
Nvidia Quadro P4000: N/A
Nvidia Quadro RTX 4000: 919
Nvidia RTX A4000: 1,559
Nvidia RTX A5000: 2,128

For more performance data see charts on page 20 as part of the in-depth review of AMD Radeon Pro W6800 with 32 GB of on-board memory
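The conclusion puts the typical Turing-to-Ampere boost in real-time 3D at 45% to 60%. As a rough cross-check for GPU rendering, this Python sketch computes the same generation-on-generation ratios from the V-Ray 5.0 scores. Because these scores are "bigger is better", each ratio is simply the new score divided by the old one. The Quadro P4000 has no RTX result, so that comparison is reported as unavailable. The names are illustrative.

```python
from typing import Dict, Optional

# Chaos Group V-Ray 5.0 benchmark scores from the charts (bigger is better).
# None marks a result that is not available (the Quadro P4000 has no RT cores).
VRAY_CUDA_VPATHS: Dict[str, Optional[int]] = {
    "Quadro P4000": 290, "Quadro RTX 4000": 634, "RTX A4000": 1025, "RTX A5000": 1299,
}
VRAY_RTX_VRAYS: Dict[str, Optional[int]] = {
    "Quadro P4000": None, "Quadro RTX 4000": 919, "RTX A4000": 1559, "RTX A5000": 2128,
}


def gain(scores: Dict[str, Optional[int]], new: str, old: str) -> Optional[float]:
    """Ratio of two 'bigger is better' scores, or None if either is missing."""
    if scores[new] is None or scores[old] is None:
        return None
    return scores[new] / scores[old]


for label, scores in (("CUDA", VRAY_CUDA_VPATHS), ("RTX", VRAY_RTX_VRAYS)):
    for new, old in (("RTX A4000", "Quadro RTX 4000"), ("RTX A4000", "Quadro P4000")):
        g = gain(scores, new, old)
        print(f"V-Ray GPU {label}, {new} vs {old}:", "n/a" if g is None else f"{g:.2f}x")
```

On these scores the RTX A4000 is about 1.62 times the Quadro RTX 4000 in CUDA mode and about 1.70 times in RTX mode. That is a somewhat larger step than the real-time 3D range quoted in the conclusion.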

Dell U4021QW UltraWide

Dell’s UltraWide monitors are well-regarded in the design and engineering industry. Al Dean takes a look at the latest product, which targets the home worker who needs that little bit extra when it comes to screen real estate

The way we look at our computing hardware has changed over the last 15 months. After all, many of us now find ourselves more regularly interacting with others through a screen, rather than face to face – plus we’re stuck working in our own homes.

With these limitations in mind, the idea of being hunched over a laptop screen every day isn’t appealing, even one with a 17-inch display. It’s just not good for our working practices, or our spines.

As a result, the idea of a display device that supports better posture, gives you a lot more pixels to play with and which doesn’t look like it’s been mandated by a corporate IT department, is appealing.

This is where the new Dell UltraWide U4021QW display has some real strengths. Having spent the last two months in close contact with the 40-inch 5,120 x 2,160 (5K2K) resolution display, I can share what day-to-day life is now like.

Set up

The first steps are to attach the stand, adjust it for height and tilt, then figure out connectivity. Here, you have a number of options: USB-C (or Thunderbolt), DisplayPort or two HDMI ports. While the Thunderbolt and DisplayPort options give you the full 60Hz refresh rate, it’s worth noting that this steps down to 30Hz over HDMI.
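The review does not say why HDMI tops out at 30Hz. Link bandwidth is a plausible reason, and a back-of-envelope Python sketch shows the scale involved. It assumes the monitor's HDMI inputs are HDMI 2.0 (an 18 Gbit/s link with 8b/10b encoding, leaving 14.4 Gbit/s for data) and 24-bit colour. Blanking intervals are ignored, so the real requirement is somewhat higher, which only strengthens the result.

```python
def raw_pixel_rate_gbps(width_px: int, height_px: int, refresh_hz: int,
                        bits_per_pixel: int = 24) -> float:
    """Uncompressed active-pixel data rate in Gbit/s (blanking ignored)."""
    return width_px * height_px * refresh_hz * bits_per_pixel / 1e9


# Assumption: HDMI 2.0 inputs, i.e. an 18 Gbit/s link using 8b/10b encoding,
# which leaves 18 * 8 / 10 = 14.4 Gbit/s for pixel data.
HDMI_2_0_DATA_GBPS = 18.0 * 8 / 10

for hz in (60, 30):
    rate = raw_pixel_rate_gbps(5120, 2160, hz)
    verdict = "fits within" if rate <= HDMI_2_0_DATA_GBPS else "exceeds"
    print(f"5,120 x 2,160 at {hz}Hz needs ~{rate:.1f} Gbit/s, which {verdict} HDMI 2.0")
```

At 60Hz the raw pixel stream (about 15.9 Gbit/s) is already above what the assumed HDMI 2.0 link can carry. At 30Hz (about 8 Gbit/s) it fits comfortably.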

My personal choice was to use DisplayPort to connect to my trusty desktop workstation, and the powered USB-C port to connect to my MacBook Pro – the benefit of the latter being that I don’t need a separate power cable, as it draws under 90W. However, most workstation-class laptops will be way over this.

The set-up process is pretty slick – with one caveat (one that says more about the author of this review than it does about Dell’s user experience team).

You scroll through the options on a small menu to the lower right of the screen using a joystick. I completely missed this first time round, so spent a good 15 minutes switching the monitor off/on and jabbing what I already knew was not a touchscreen device. You have been warned!

Once you’ve updated your resolution settings and installed the Dell Display Manager application, you can start to explore what the 40-inch 5,120 x 2,160 display feels like to use. Personally, I also like to calibrate a new display every week or so for the first month or two, just to ensure that the panel is as close to accurate as possible. I use Datacolor’s Spyder device for this.

In use

My usual set-up is two 27-inch monitors, both running at HD, rather than 4K. By comparison, and in terms of screen real estate, the U4021QW is the equivalent of one and a half, but it’s the 5K2K resolution that really makes it sing. It’s clear, crisp and represents colour pretty accurately. The calibration I ran only had to tweak the settings a little.

Compared to running two monitors, I don’t miss the separation of the two displays and found that window management wasn’t a concern, even if you’re essentially losing half a monitor. If you’re accustomed to using a single display, then this is going to feel incredibly expansive.

Product spec
■ 40” LED-backlit LCD curved monitor
■ 5,120 x 2,160 (WUHD) resolution, 21:9 aspect ratio
■ 946.6 mm x 248 mm x 457.8 mm (including stand)
■ Pixel pitch 0.1815 mm
■ Frequency 60Hz (via DisplayPort) / 30Hz (via HDMI)
■ Brightness 300 cd/m²
■ 2 x HDMI, DisplayPort and Thunderbolt 3
■ 100mm x 100mm VESA mount
■ Height adjustment 120mm, Swivel 60, Tilt -5/+21
■ 13.8 kg
■ 3-year Advanced Exchange Service and Premium Panel Exchange to on-site
■ £1,610 (Ex. VAT)
■ dell.com
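The "one and a half" comparison refers to physical size rather than pixels. As a rough check, this Python sketch derives the Dell's active area from its spec (5,120 x 2,160 pixels at a 0.1815 mm pitch) and compares its width with a 27-inch monitor. It assumes the reviewer's 27-inch monitors are standard 16:9 panels and that "HD" means 1,920 x 1,080. Because the pixel pitch is measured along the panel surface, the curve is ignored.

```python
import math

MM_PER_INCH = 25.4

# Dell U4021QW figures from the spec list: 5,120 x 2,160 pixels, 0.1815 mm pitch.
dell_w_in = 5120 * 0.1815 / MM_PER_INCH
dell_h_in = 2160 * 0.1815 / MM_PER_INCH
dell_diag_in = math.hypot(dell_w_in, dell_h_in)


def flat_panel_width_in(diagonal_in: float, aspect_w: float, aspect_h: float) -> float:
    """Width of a flat panel from its diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)


# Assumption: the 27-inch monitors are standard 16:9 panels.
w27_in = flat_panel_width_in(27, 16, 9)

print(f"Dell active area: {dell_w_in:.1f} x {dell_h_in:.1f} in, diagonal {dell_diag_in:.1f} in")
print(f"Width vs one 27-inch 16:9 monitor: {dell_w_in / w27_in:.2f}x")

# Assumption: 'HD' means 1,920 x 1,080 on each of the two 27-inch monitors.
print(f"Pixels vs two HD monitors: {5120 * 2160 / (2 * 1920 * 1080):.2f}x")
```

On these assumptions the panel is roughly 1.55 times as wide as a 27-inch 16:9 monitor, which matches the "one and a half" description. It also carries about 2.7 times the pixels of two HD screens. The derived diagonal of about 39.7 inches is consistent with the 40-inch class.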

If you’re a Windows user, then the Dell Display Manager application is useful for zoning up your display and having application windows snap to known positions. You can set this up, then save it as a preset so that your work environment is always the same. I only wish there was a similar tool for macOS.

Managing two machines

Modern, well-designed monitors (rather than consumer-grade displays) come into their own in the way they tackle the needs of professional users.

An excellent example is how this system manages inputs. I often run both the Mac and the Windows workstation at the same time, so being able to switch inputs quickly is very useful. Rather than pressing cryptic buttons, you use the on-screen menu and the rear-mounted joystick/button combination.

There are also some nice options to explore: split-screen and picture-in-picture (PiP). Split-screen means you can divide the screen into two halves, with each machine shown in its own half.

The PiP option means you can have the bulk of your screen showing one machine, and a smaller quarter or eighth of the screen showing what’s going on in the other.

In conclusion

The Dell U4021QW is a great display. Whether you’re looking to replace a single or dual-monitor set-up, or perhaps to replicate your office set-up at home, it’s got pretty much everything you need. It looks pretty slick compared to many rivals, but also offers flexibility of inputs, window control and more.

Ultimately, the quality of the display is what makes or breaks this hardware, and on this count, it’s a winner. Clear and crisp, it also represents the full colour range accurately.

To be honest, Dell might need to send around the heavies if it wants it back.


5K2K monitors: what other options are there?

LG 34WK95U-W

Screen size: 34-inch Price: $1,499 Web: lg.com

Notes: LG has been a favourite of ours for a while, having spent years with a trusty 28-inch LG CRT back in the day. The LG 34WK95U-W is a 34-inch ultrawide, but still manages to pack in 5,120 x 2,160 pixels. It strangely comes in 7kg heavier than the Dell.

Lenovo ThinkVision P40w-20

Screen size: 40-inch Price: $1,699 Web: lenovo.com

Notes: When we tested an early variant of this (the ThinkVision P44w), it really impressed, so we have no reason to doubt that fitting this form factor with a 5K2K display will rock. A few tweaks (such as the phone stand in the base) are pretty nice too.

MSI Prestige PS341WU

Screen size: 34-inch Price: $999 Web: msi.com

Notes: MSI describes this as a display for creators (read: We made it in white). It looks stunning and a decent colour gamut (98% of DCI-P3) means it should display colours accurately. Whether it’ll be those colours in two years’ time remains to be seen.
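All four monitors share the same 5,120 x 2,160 resolution, so the practical difference between the 34-inch and 40-inch models is pixel density. This Python sketch computes pixels per inch from the nominal diagonals in the listings above. Actual viewable diagonals may be slightly smaller, so treat the figures as approximate.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# All four monitors are 5,120 x 2,160; only the nominal diagonal differs.
MONITORS = {
    "Dell U4021QW": 40,
    "Lenovo ThinkVision P40w-20": 40,
    "LG 34WK95U-W": 34,
    "MSI Prestige PS341WU": 34,
}

for name, diagonal in MONITORS.items():
    print(f"{name}: {ppi(5120, 2160, diagonal):.0f} ppi at {diagonal} inches")
```

The 40-inch models come out at around 139 ppi and the 34-inch ones at about 163 ppi. The smaller panels therefore show finer detail, but text and interface elements appear smaller at 100% scaling. As a cross-check, the Dell's 0.1815 mm pixel pitch works out to about 140 ppi (25.4 / 0.1815).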

Hybrid working: what does it mean for AEC firms?

With many firms re-evaluating office space and working from home policies, we asked Adam Jull of IMSCAD about the role that virtual workstations can play – and the differences between VDI, public and private cloud

The pandemic has shifted the way we all work. As the dust settles and we try to get back to some sort of normality, a hybrid work model seems to be the favoured approach of employers and employees alike. But what does this really mean for AEC firms who have historically invested a lot in their offices / studios to provide great spaces for client-facing work and for collaboration amongst staff?