UNIVERSITÀ DEGLI STUDI DI VERONA

Dipartimento di Informatica

Master’s Degree in

Computer Engineering for Robotics and Smart Industry

Master’s Thesis

DEVELOPMENT AND CHARACTERIZATION OF A 3D PRINTED HEMISPHERICAL SOFT TACTILE SENSOR FOR AGRICULTURAL APPLICATIONS

Supervisor: Prof. RICCARDO MURADORE
Co-supervisor: FRANCESCO VISENTIN, PhD

Candidate: FABIO CASTELLINI
Student ID: VR464639

ACADEMIC YEAR 2021-2022

List of Figures

1.1 On the left a sculpture depicting an ancient Roman harvester called Gallic Vallus; on the right a modern combine harvester designed for grain, potatoes, carrots, beets
1.2 Soft Robotics’ mGrip: a modular gripping system that enables reliable, high-speed picking of traditionally hard-to-grasp single items
2.1 On the left an “integrated linkage-driven dexterous anthropomorphic robotic hand” [38] while on the right a soft anthropomorphic hand [7]
2.2 From left to right: ROBOTIQ Hand-E Adaptive Gripper, 2F-85 Gripper and 3-Finger Adaptive Robot Gripper
2.3 From left to right: Universal Robots ZXP7*01 Vacuum Unit, OnRobot VG10 Vacuum gripper and Joulin Foam Gripper
2.4 A brief timeline of milestones in the development of soft gripper technologies as presented in [63]
2.5 Soft Robotics’ mGrip modular soft gripper
2.6 On the left Festo FlexShape Gripper and on the right Festo Tentacle Gripper
2.7 Festo BionicSoftArm equipped with a flexible MultiChoiceGripper
2.8 Cambridge Consultants’ Hank soft gripper
2.9 From left to right: a soft fabric gripper with gecko adhesion; a bioinspired soft gripper made of Dragon Skin 30 silicone; a hybrid/soft robotic gripper made of urethane rubber
2.10 Classification of automatic harvesting methods, according to [47]
2.11 A simplified scheme of basic picking techniques, according to [47]
2.12 On the left Raussendorf autonomous system for agricultural purposes Cäsar; on the right DJI AGRAS MG-1P Series agriculture drone
2.13 On the left AscTec Falcon 8 flying drone; on the right Tevel Aerobotics automated fruit picker
2.14 Autonomous robots proposed by Naïo Technologies, from left to right: Oz, Dino and Ted
2.15 On the left the completely autonomous mobile robot for precisely controlling soil grassing proposed by VITIROVER Solutions; on the right Tertill Weeding Robot
2.16 Agrobot E-Series stainless steel and military-grade aluminum robot
2.17 Harvest CROO Robotics Berry 5 fruit picking robot
2.18 On the left Augean Robotics’ Burro self-driving robot; on the right Harvest Automation’s HV-100 robot
2.19 On the left ANYmal proposed by ANYbotics; on the right Unitree Go1 proposed by Unitree Robotics

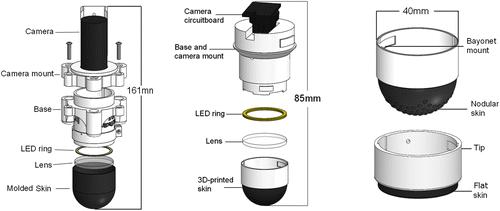

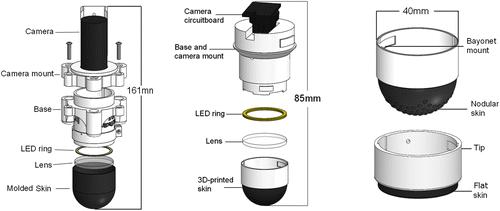

2.29Asreportedin[75]:Open-TacTip(left):theoriginalversionof thesensorcomprisesa3D-printedcameramountandbaseand acastsiliconeskin.ImprovedTacTip(center):theredesigned basehousesawebcam,andmodulartipswith3D-printedrubber skin.Modulartips(right):separatemodulartipswithanodular fingerprint(above)andflattip(below)............... 40

2.20Ontheleftthe3DprintedChromaTouchtactilesensorandthe transducedforcesintomarkerappearancechanges;ontheright arenderofthefingertipassembly.................. 33 2.21OnthelefttherenderedstructureoftheUniversalGripper;on therightthemanufacturedprototype............... 34 2.22Ontheleftthedimensioned(mmunits)sensorassembly;onthe rightthesoft-bubblemountedonaKUKAiiwarobot...... 35 2.23Ontheleftthesoft-bubbleparallelgripperthatestimatesinhandposeandtracksshear-induceddisplacements;ontheright thedimensionedschemewheretheToFdepthsensorisdepicted inblue................................. 36 2.24TheGelSightFinRaygripper.................... 37 2.25TheHiVTactactilesensorprototype................ 38 2.26DesignoftheFingerVisionanditsprototypeinstalledonthe Baxtergripper............................ 38 2.27ArenderingoftheVisiflex[18]tactilesensoranditsexploded view.................................. 39 2.28TheVisiflexsensorcontactedinmultiplepoints;redLEDsrepresentarethefiducialmarkers,whilegreenLEDsarethecontact pointsthatcanbeseenbythecamera,becauseofthewaveguide. 40

iii

2.30OnthelefttheDenseTactsensormountedontheAllegrohand andits3Dreconstructionresults;ontherightavisualizationof theraycastingalgorithm,usedtodeterminetheradialdepth fromthe3Dcalibrationsurfacewhichisthenprojectedintothe imageplane..............................

2.31Asreportedin[79]:(a)basicprincipleoftheGelsightdesign thatconsistsofasensingelastomerpiecewiththeopaquereflectivemembraneontop,supportingplate,LEDsandcamerato capturetheshadedimageswithdifferentlightings;(b)picture ofthesensor;(c)arrangementoftheLEDsandcamerawhen

3.2Fromlefttoright:theFormlabsForm2stereolithography3D

41

viewingfromthetop......................... 42

Hand2-fingersgripper........................ 45

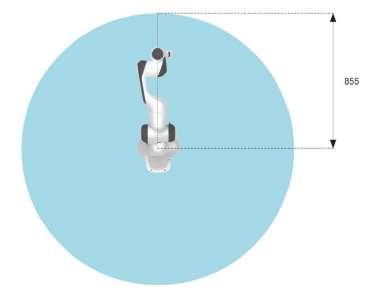

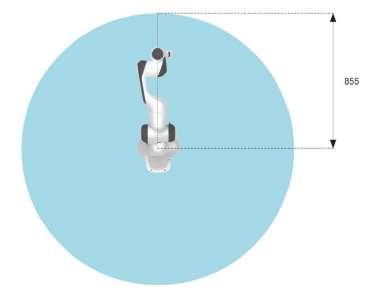

3.1TheFrankaEmikaPandaroboticarmequippedwiththeFranka

printer;theElastic50AResin;a3Dprintedsampleasshown onthewebsite[22].......................... 46 3.3Fromlefttoright:thejustprinteddome;thewasheddome; howthewasheddomepresentsontheinside............ 47 3.4ShoreHardnessscaleofgeneralpurposeitems........... 47 3.5Chartofthemostcommonsoftmaterials’propertiesandcuring times,accordingto[33]....................... 48

rightthehemisphericaldomeafterbeingcured.......... 48

superimposeddetectedblobswhichareclearlynoisy....... 50 iv

3.6Ontheleftthehemisphericaldomebeforebeingcured;onthe

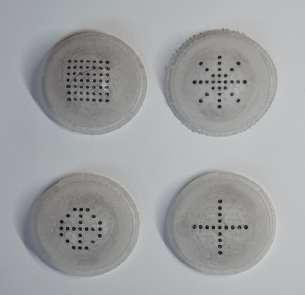

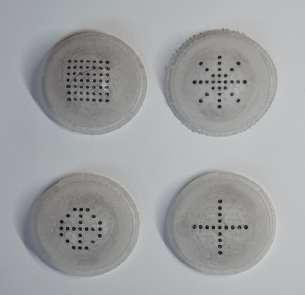

3.7Onthelefttheinitial“doublecross”designconsistingof0.9mm diametermarkerswithaspacingof1.5mm;ontherightthe

3.8Ontheleftthefourdesignedpatterns;ontherightthechosen “doublecross”pattern........................ 50 3.9Ontheleftaphotographofanexplodedviewoftheinitial prototype;ontherightarenderoftheprototype......... 51 3.10Multiplerenderedviewsofproposed3Dprintedmodulardesign. 52 3.11Overallviewofthetestingexperimentalsetup........... 53 3.12Closerviewsoftheexperimentalsetup............... 54 3.13OntheleftanexampleofCartesianrobot;ontherighttheATI NanoSeries6-axisforce/torquesensor............... 55 3.14Ontheleftasideviewofthearm’sworkspace,ontherighta topviewofthearm’sworkspace.................. 55 3.15OriginalFrankaEmikaHandgripper................ 56 3.16RendersofthedefinitivesetupmountedontheFrankaEmika Pandarobot............................. 57 3.17PhotographsshowingtheFrankaEmikaPandarobotwiththe customgripperinstalledontheFrankaHand........... 57 3.18TheIntelRealSenseD435iRGBDcamera............. 58 3.19SimpleBlobDetector’sthresholdingoptions............. 59 3.20Fromlefttoright:therawframewhennoforcesareapplied; therawframeafterapplyingaforce;theframeaftermarker detection............................... 61 3.21Left:therawframewhenanormaldisplacementof10mmis causedbytheexternalforce.Center:theframeaftermarker detection,fittingellipses.Right:theframeaftermarkerdetectionfittingcircles........................... 62 v

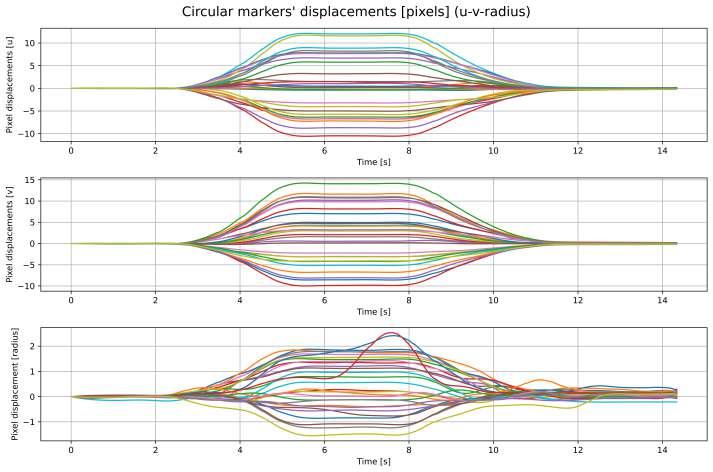

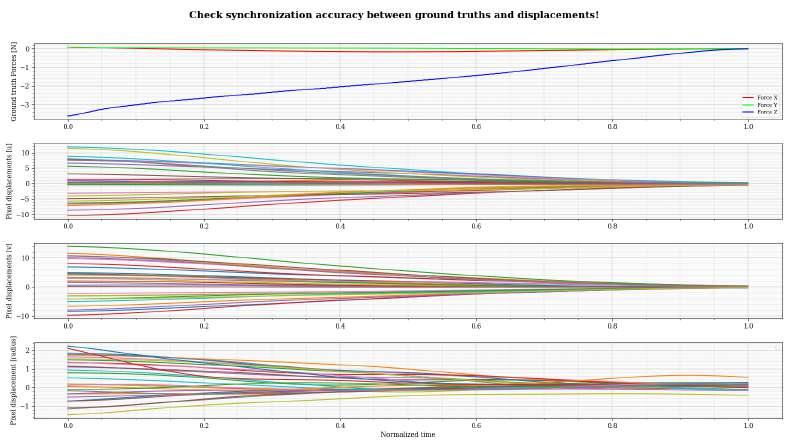

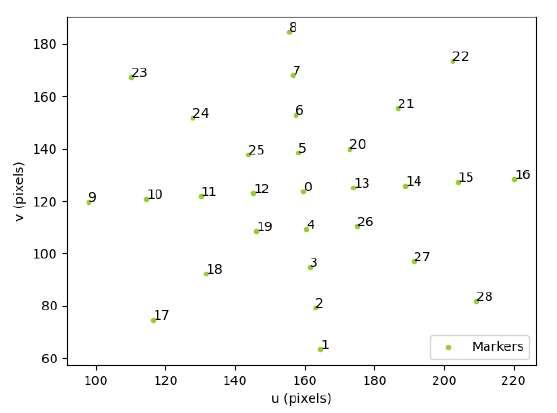

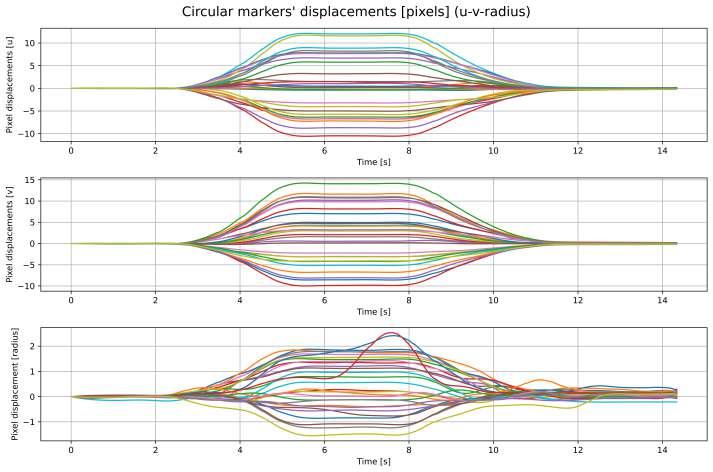

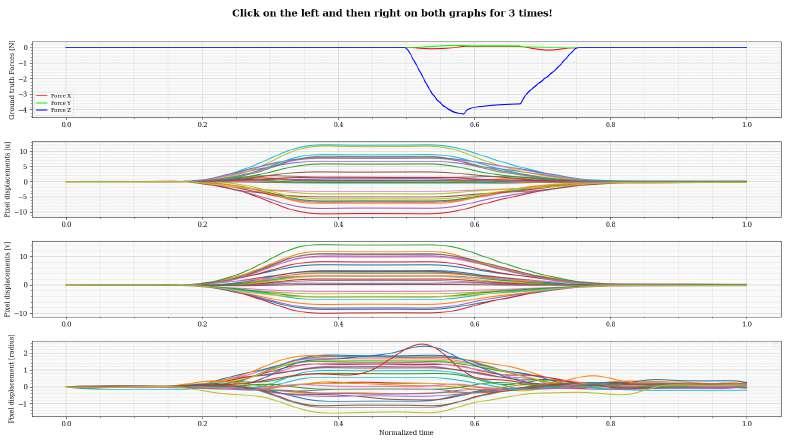

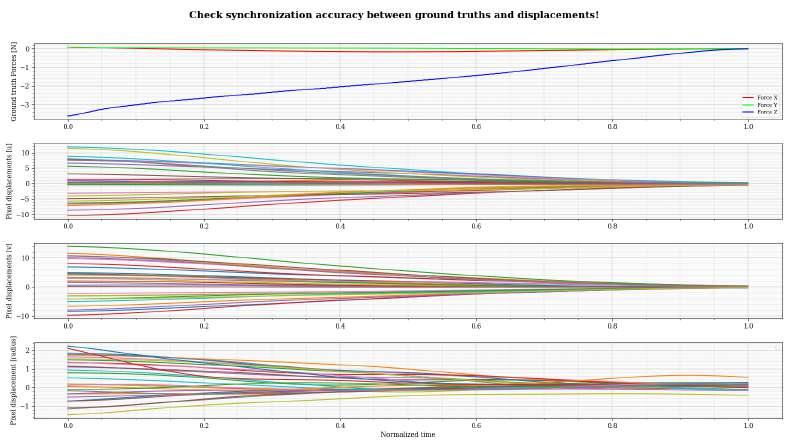

3.22 Circular markers’ displacements (horizontal, vertical and radius) in pixel units. Each of the 29 markers is represented by a different hue

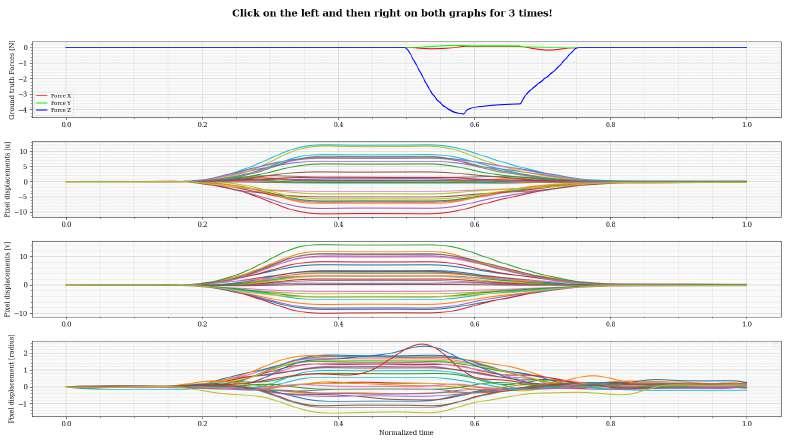

3.23 Elliptic markers’ displacements (horizontal, vertical and radius) in pixel units

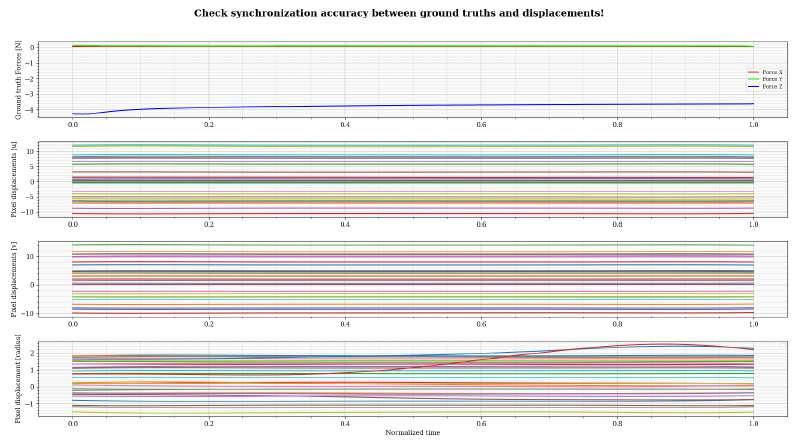

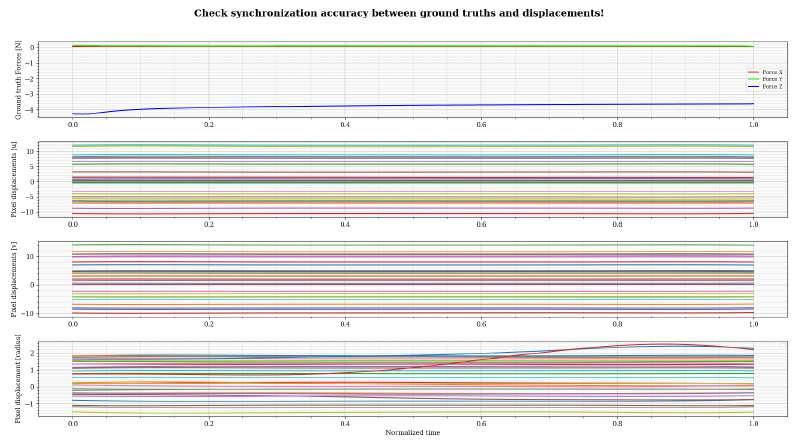

3.24 Plot of the subsequent markers’ coordinates in pixel units during deformation
3.25 From left to right: the raw frame in rest position; the superimposed arrows that show the displacement’s direction of each marker (the arrows were lengthened by a factor of 8 to make them more visible); the raw frame under a force of around 4 N
3.26 On the left a representation of how circular markers embedded in the membrane are searched as ellipses; on the right the developed viewer tool illustrating the gripper’s deformation [57]
3.27 From left to right: top and front views of the obtained 3D coordinates of the markers detected as circles, using the formulas described in [57]
3.28 Left: side view of the obtained 3D coordinates of the markers detected as circles, using the formulas described in [57]. Right: 3D mesh obtained using the “pyvista” library
3.29 Top and front views of the obtained 3D coordinates of the markers detected as ellipses, using the formulas described in [57]
3.30 On the left a front view of the obtained 3D coordinates of the markers detected as circles, on the right a front view of the obtained 3D coordinates of the markers detected as ellipses

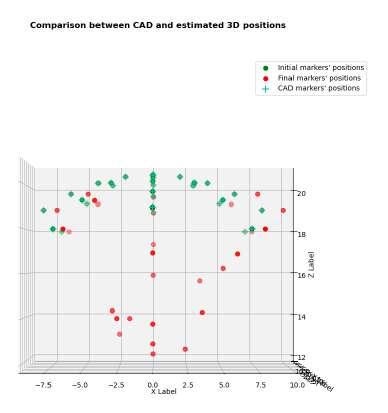

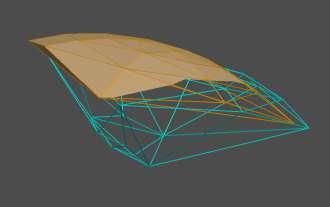

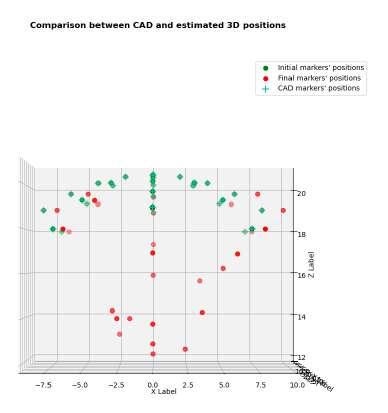

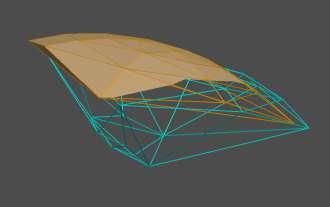

3.31 On the left a radius displacement heatmap superimposed on the deformed frame; on the right the before (coinciding with the CAD ground truths) and after deformation meshes obtained triangulating the 3D markers’ coordinates
3.32 Top and front views of the 3D markers’ coordinates obtained with the proposed method
3.33 From left to right: a top and a bottom view of the 3D mesh obtained through boolean difference between dome and triangulated markers’ coordinates; a side view of the 3D mesh obtained through boolean difference between rest position and deformed position triangulated markers’ coordinates
3.34 Force and torque components measured by the ATI Nano sensor
3.35 Figure showing from top to bottom: the 3 force components’ ground truths, the u, v and radius displacements of each marker
3.36 Plot showing the first segment of the synchronized ground truths and markers’ displacements
3.37 Plot showing the second segment of the synchronized ground truths and markers’ displacements
3.38 Plot showing the third segment of the synchronized ground truths and markers’ displacements
4.1 The main differences between Machine Learning and Deep Learning and an example of Deep-CNN [42]
4.2 The typical architecture of Deep-CNN [71]
4.3 On the left the detected markers’ movement using SimpleBlobDetector; on the right an example of marker movements when a normal force is applied [78]

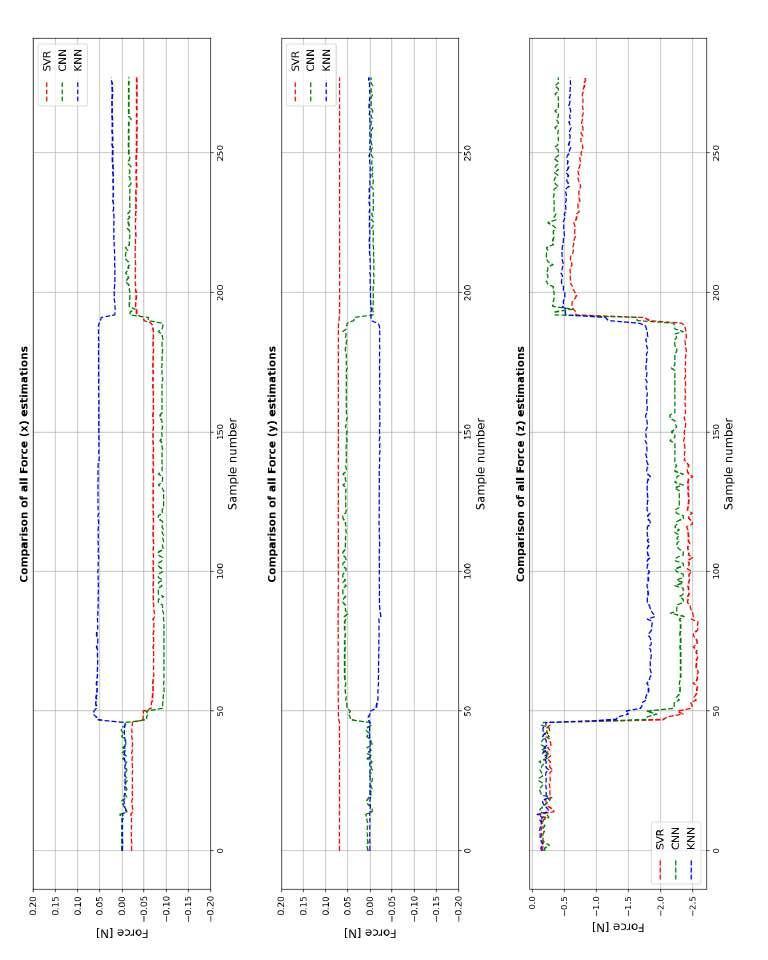

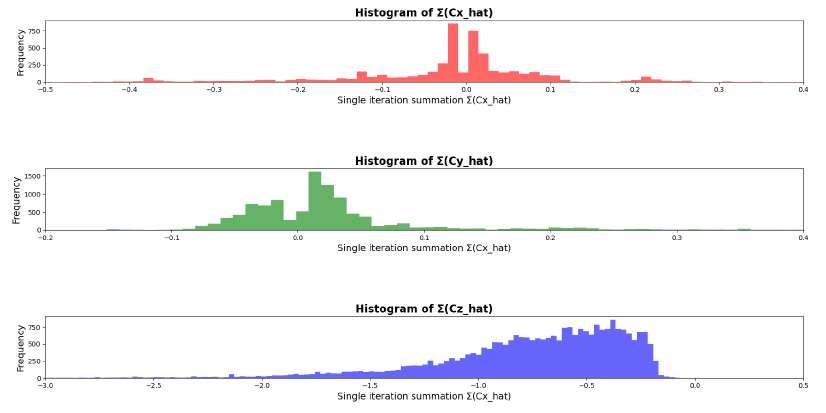

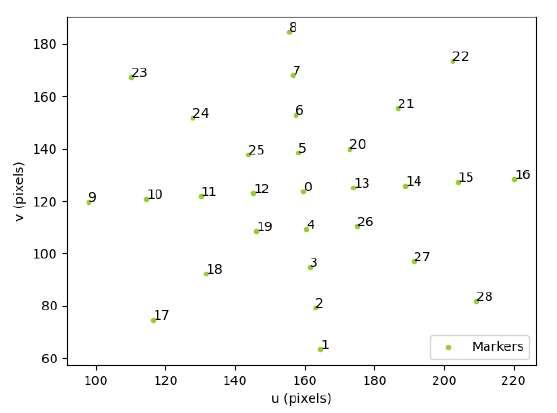

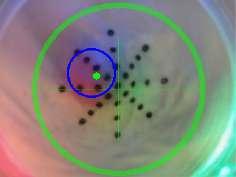

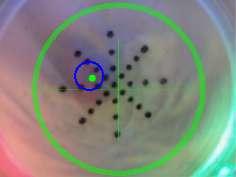

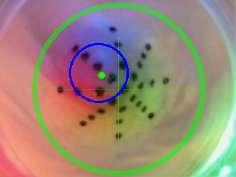

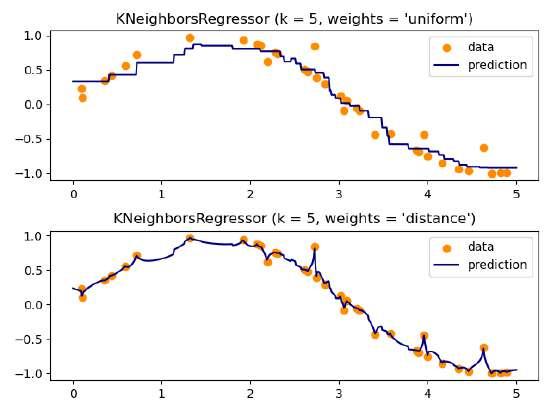

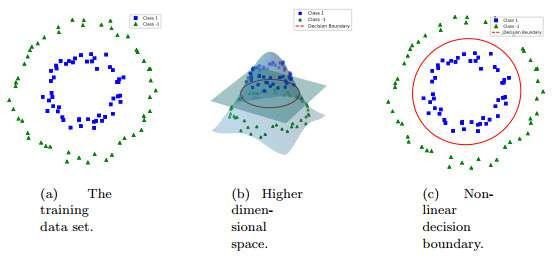

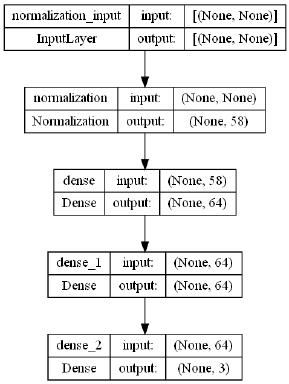

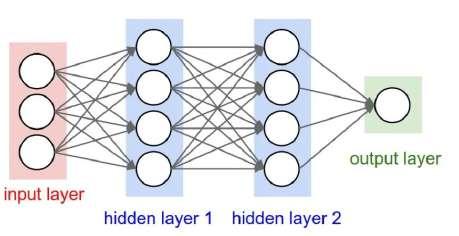

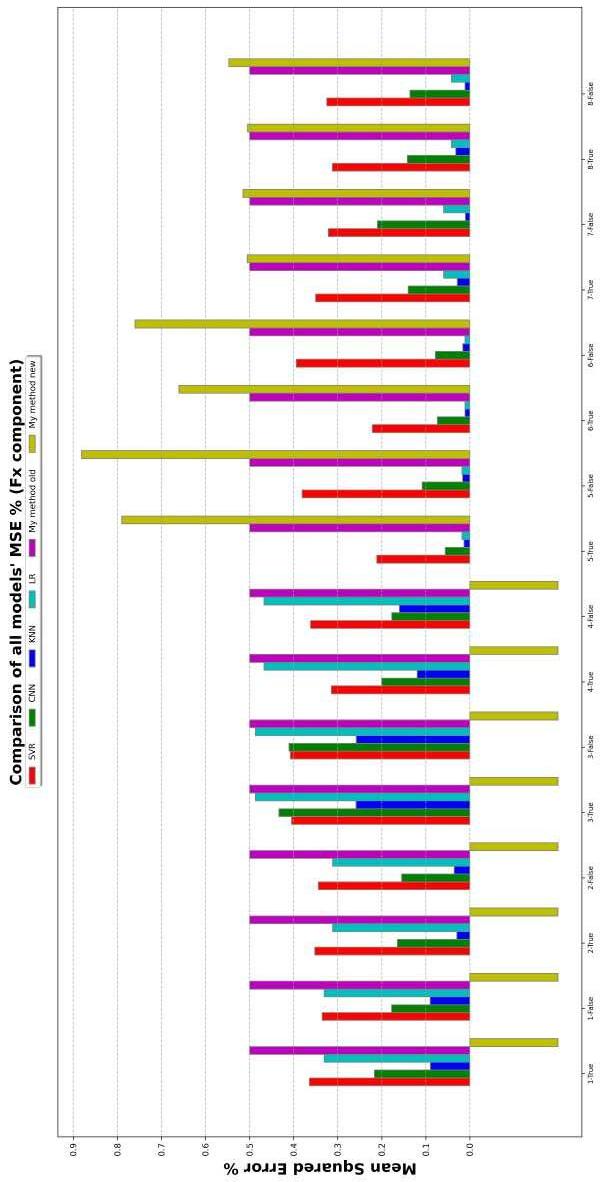

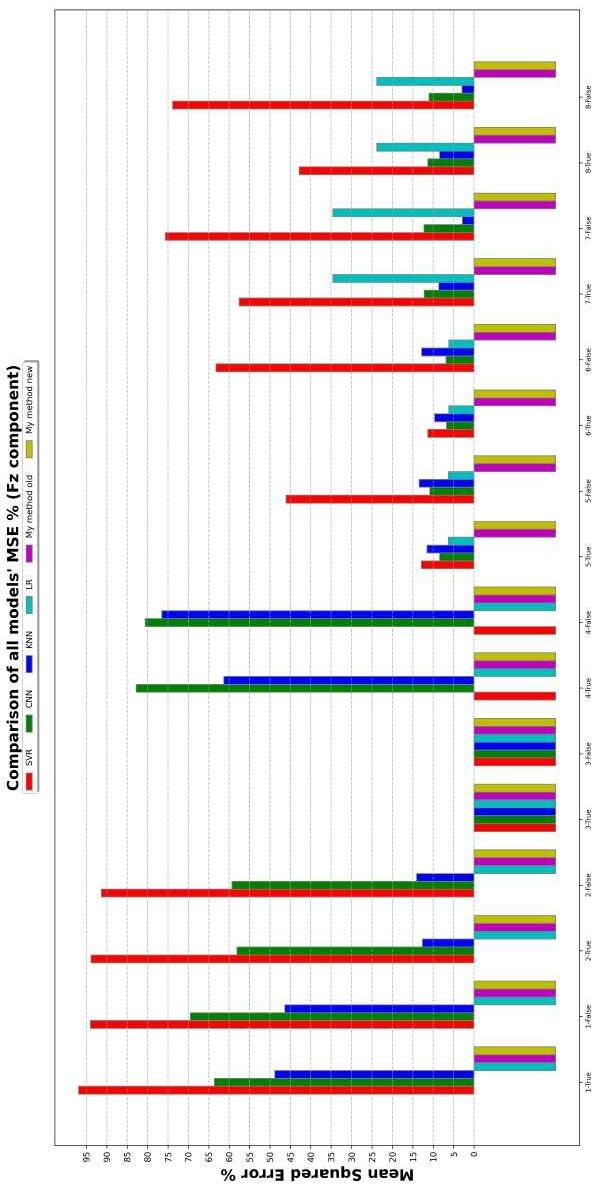

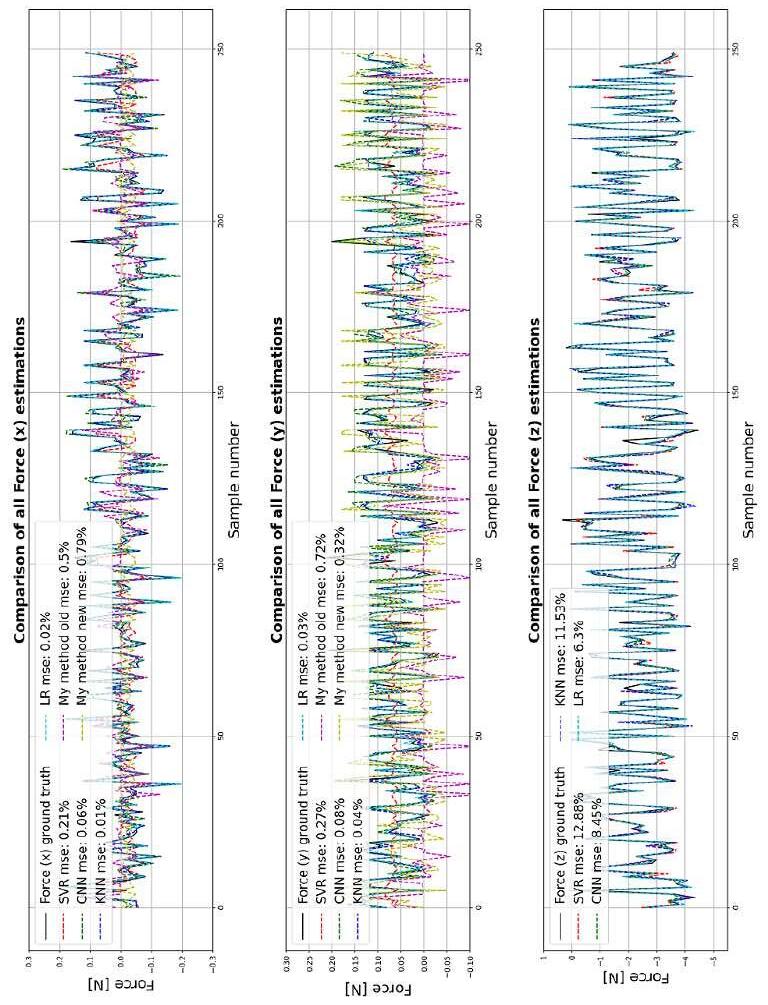

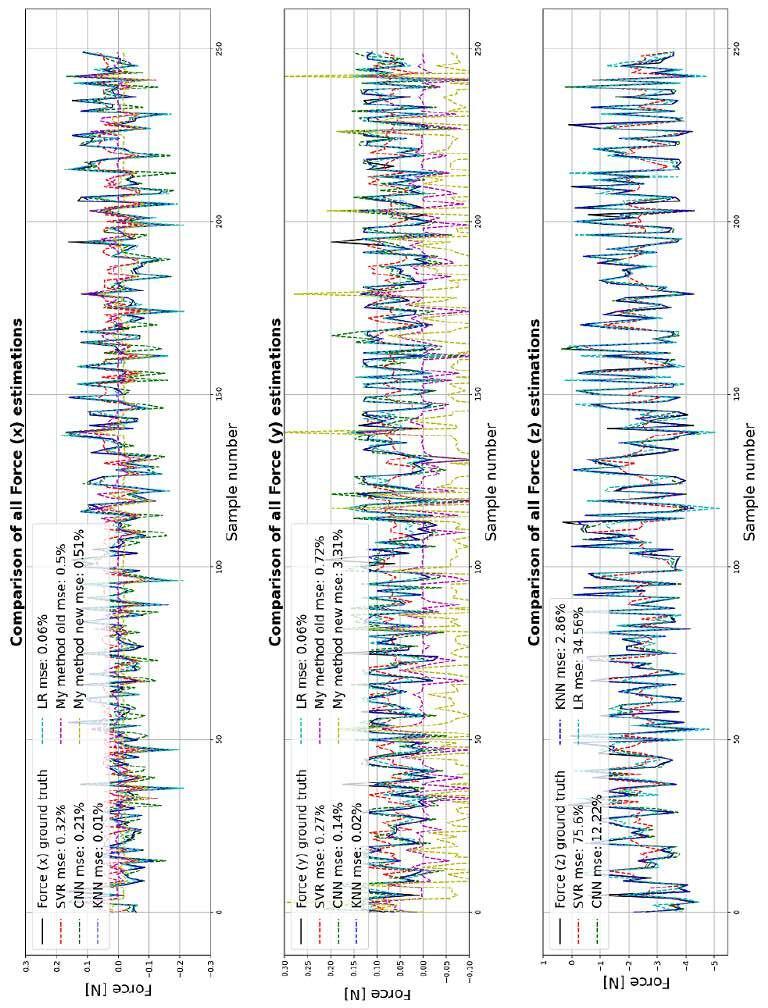

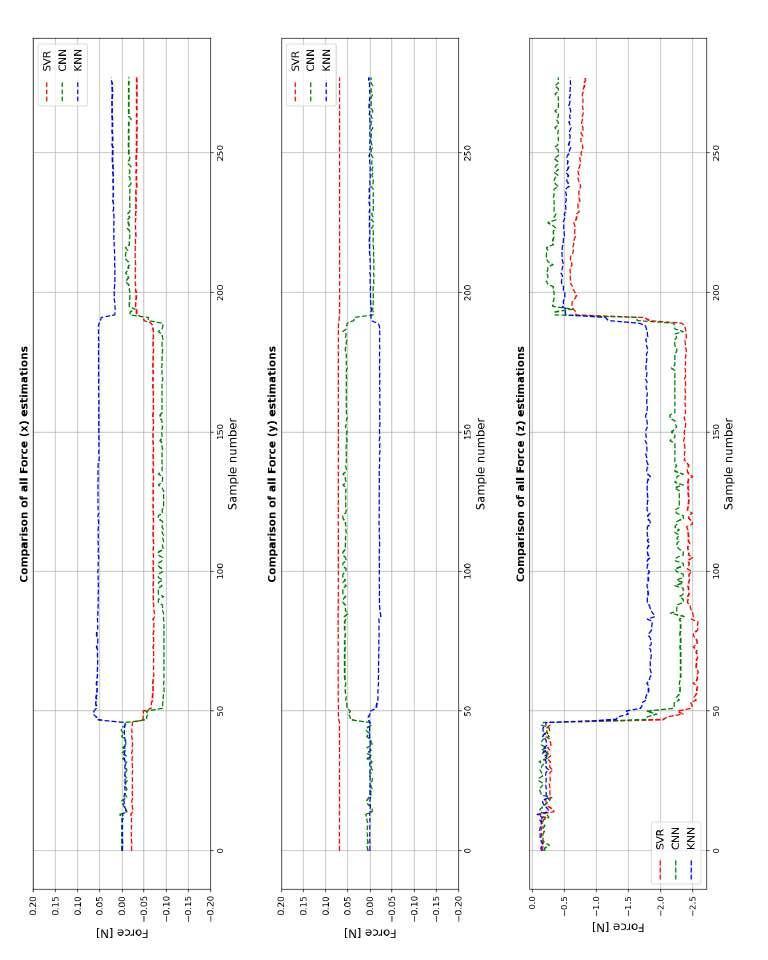

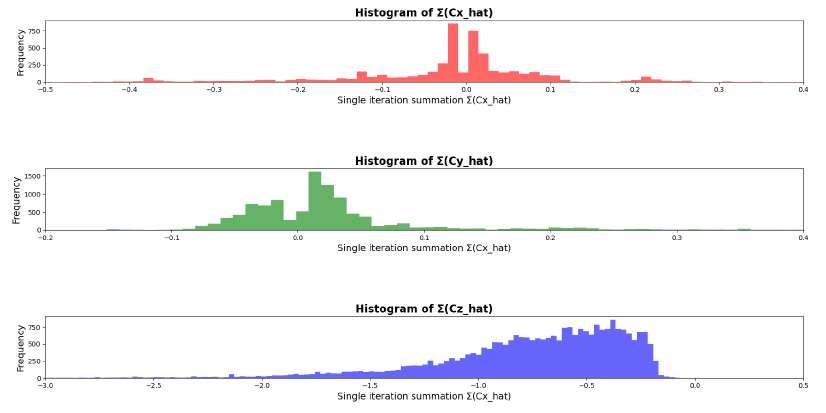

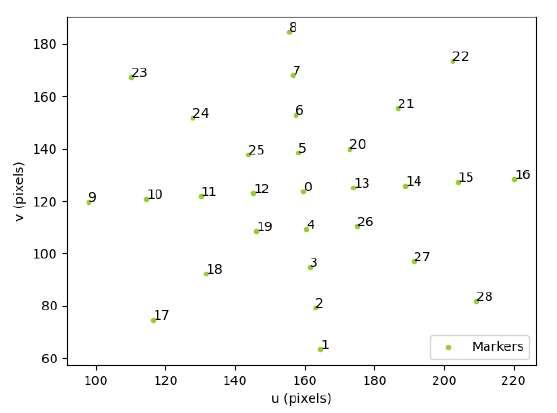

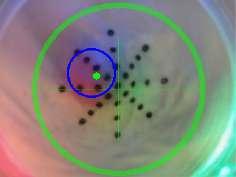

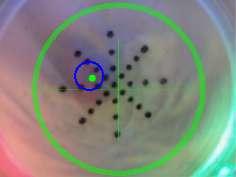

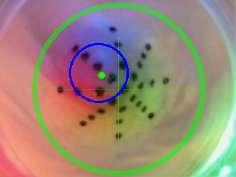

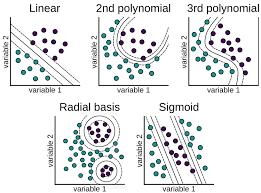

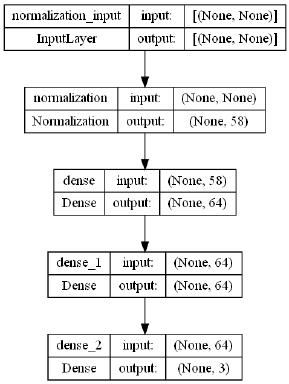

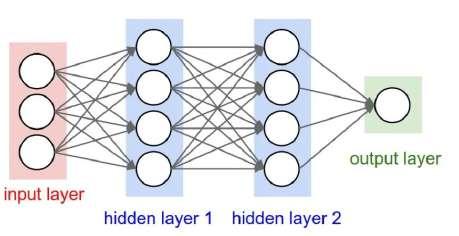

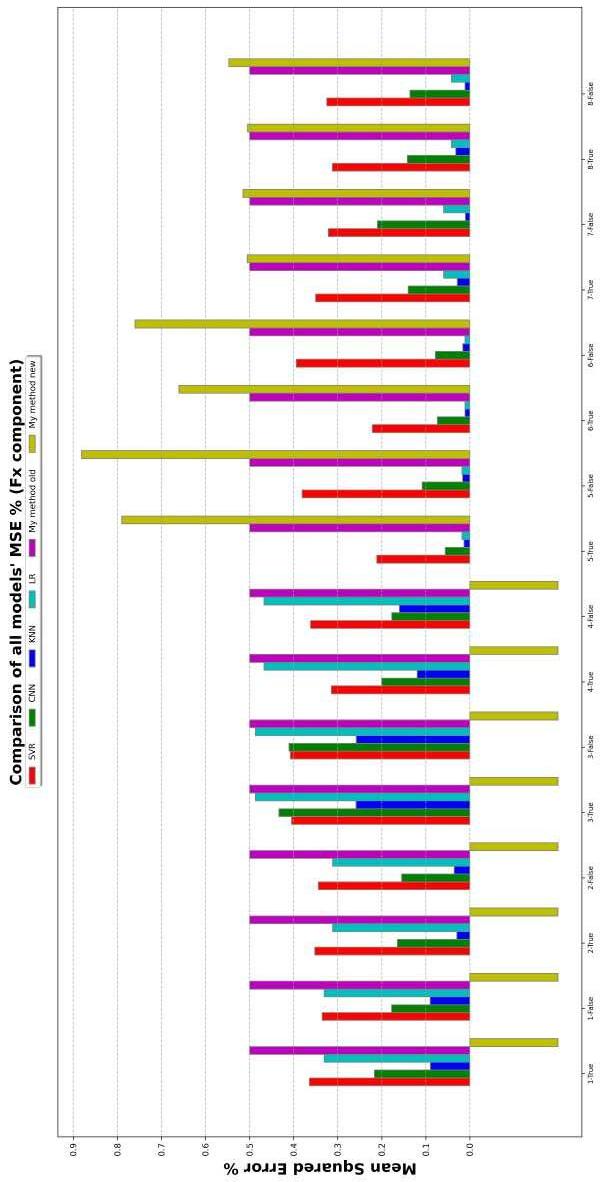

4.4 Mode, mean and median in 3 different data distribution scenarios [45]
4.5 Histograms of the computed Cx, Cy, Cz coefficients during each iteration
4.6 Sorting order of the 29 fiducial markers
4.7 A sequence of images showing the estimated application point (green) and area (blue), depending on the applied force’s magnitude
4.8 Graphical representation of the KNN algorithm [13]
4.9 The effect of the “weights” parameter on the estimates. The default value is “uniform” and assigns equal weights to all points; “distance” assigns weights proportional to the inverse of the distance from the query point [59]
4.10 Application of SVM in case of linearly distributed data [50]
4.11 Application of SVM in case of non-linearly distributed data [50]
4.12 Left: a graphical representation of the chosen Sequential model using the plot_model() function; Right: a generic example of 2-hidden layers Neural Network [12]
4.13 On the left the raw image sensed by the fish-eye camera; on the right the binarized image
4.14 Sorting order of the 29 fiducial markers
4.15 Histogram plot showing the Fx estimation Mean Squared Error depending on the feature type/scaling combination (negative bars correspond to errors above 100%)
4.16 Histogram plot showing the Fy estimation Mean Squared Error depending on the feature type/scaling combination (negative bars correspond to errors above 100%)

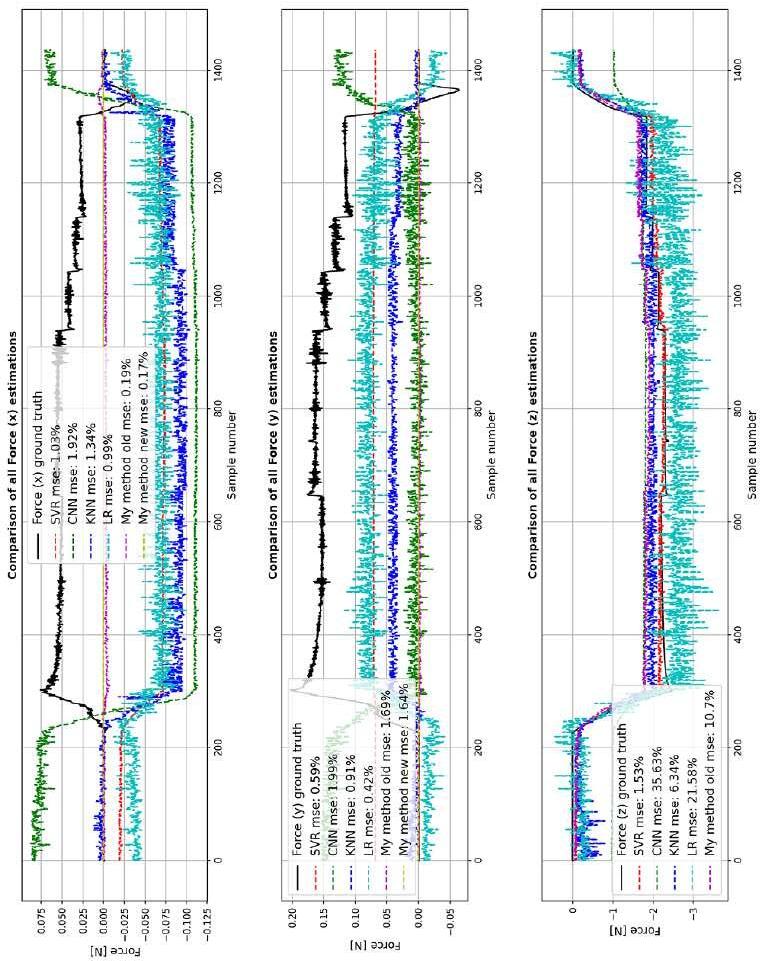

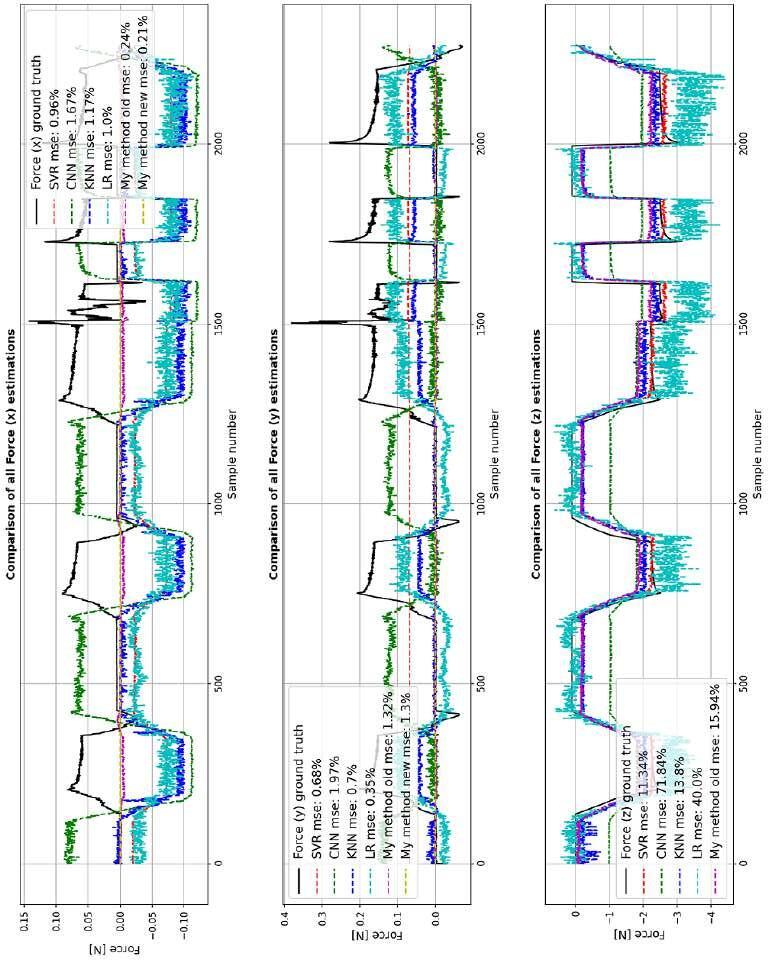

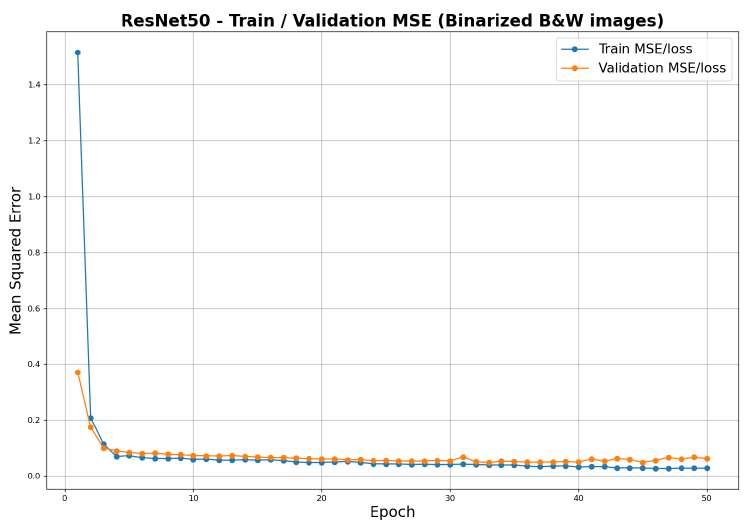

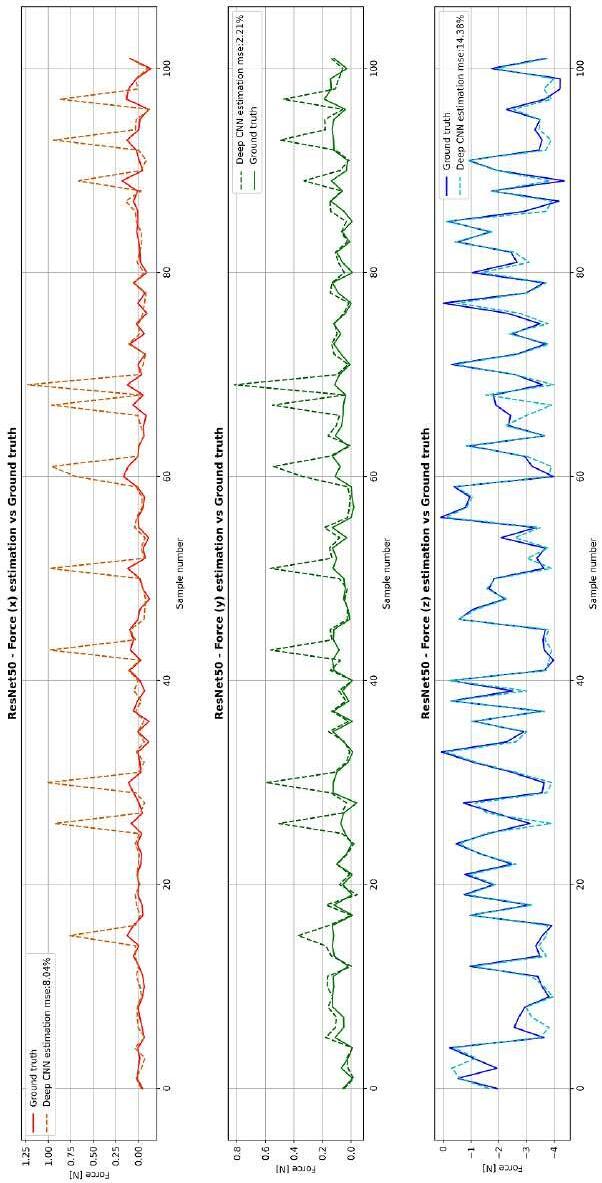

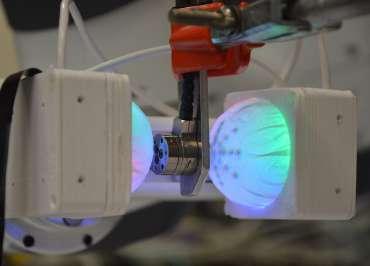

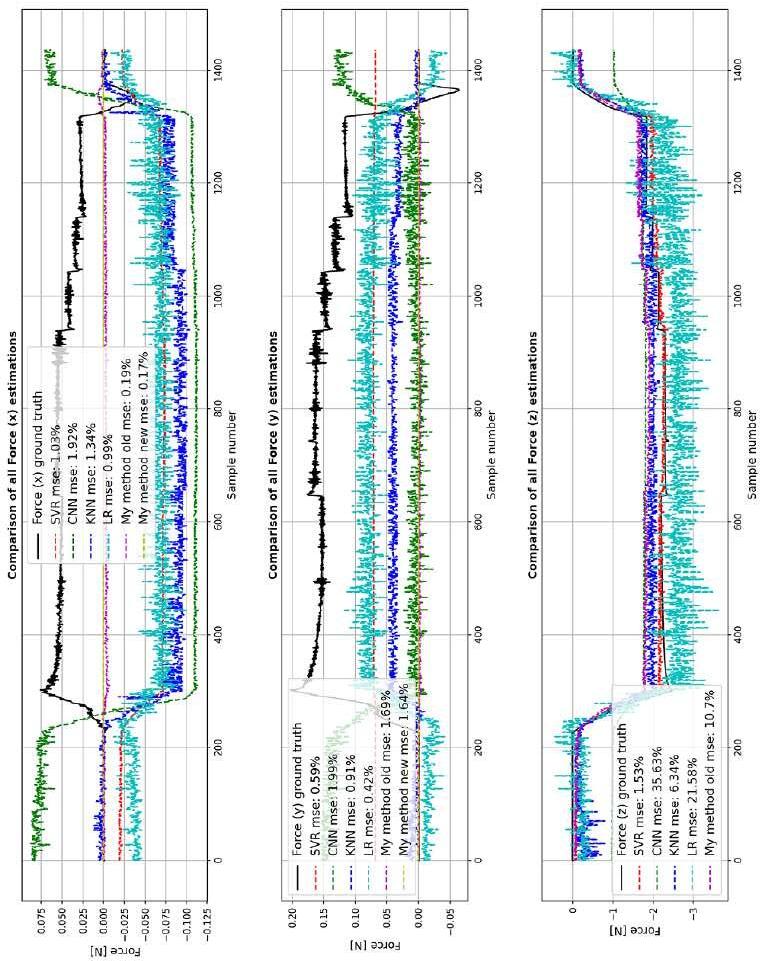

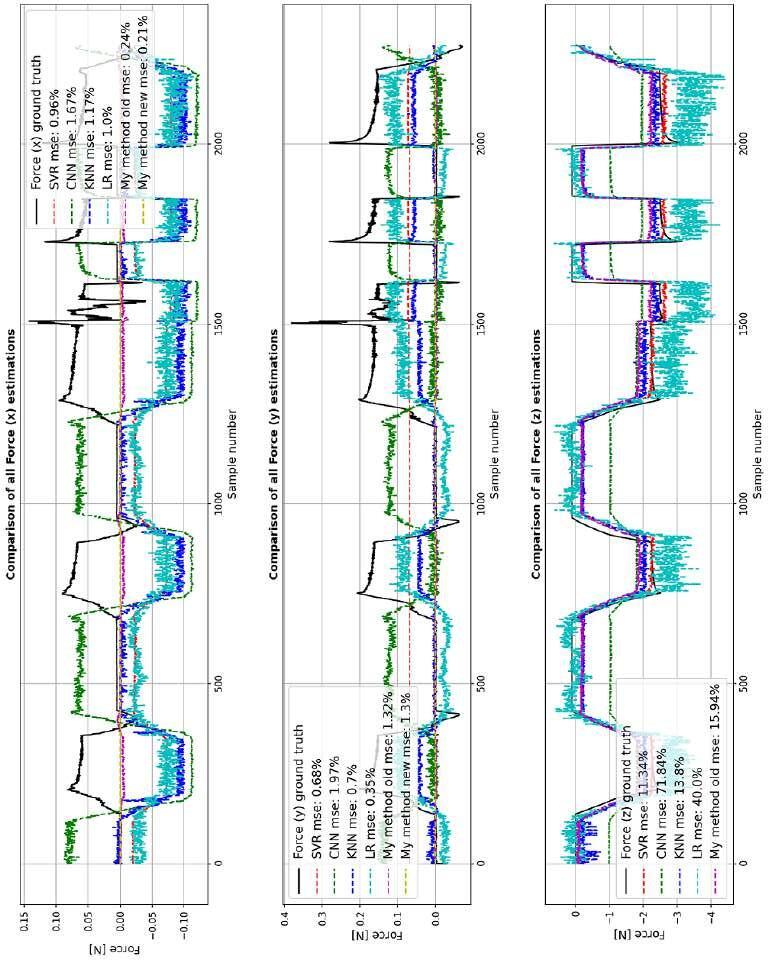

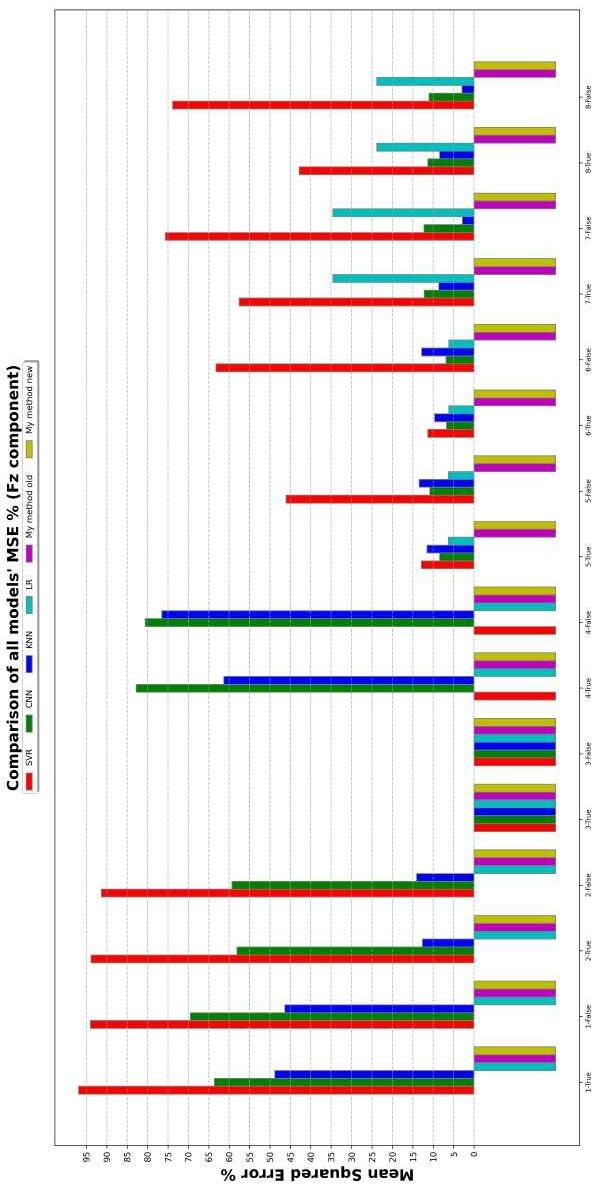

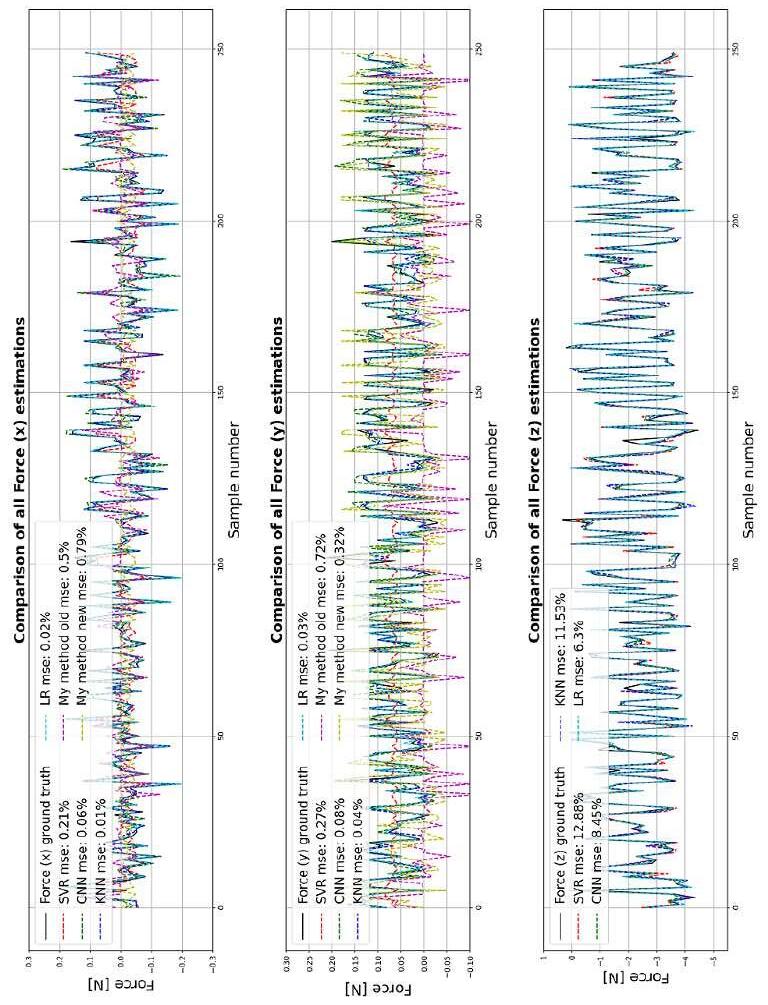

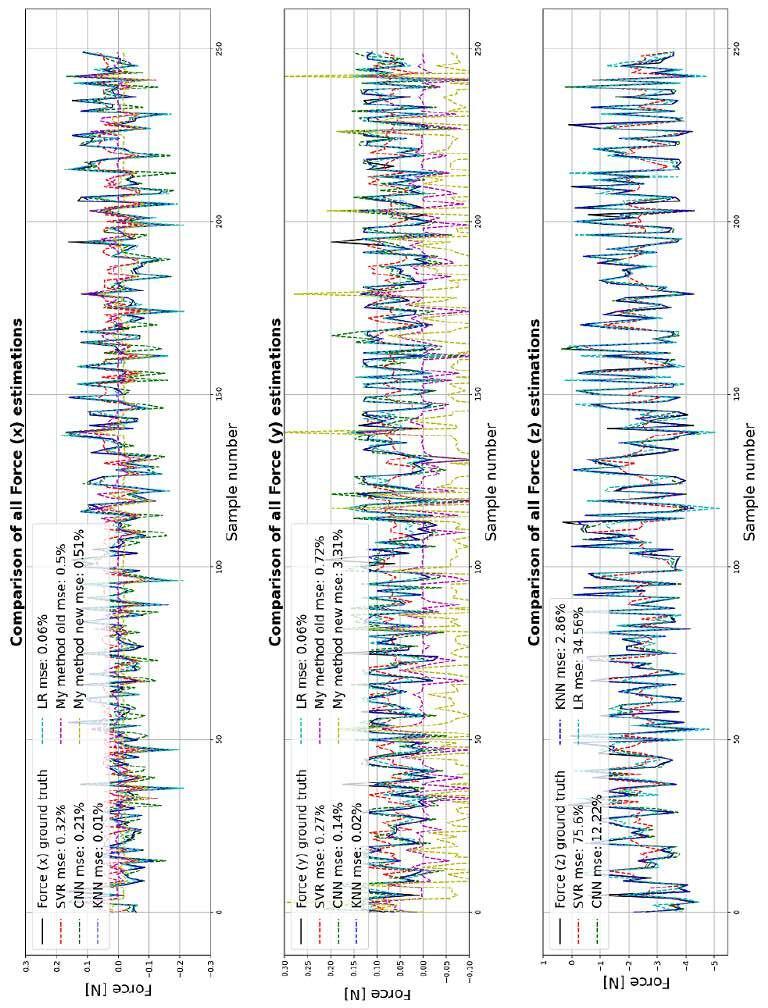

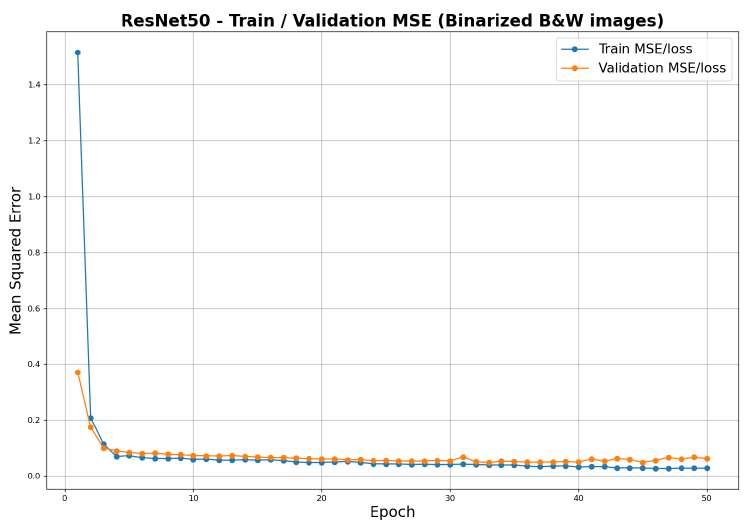

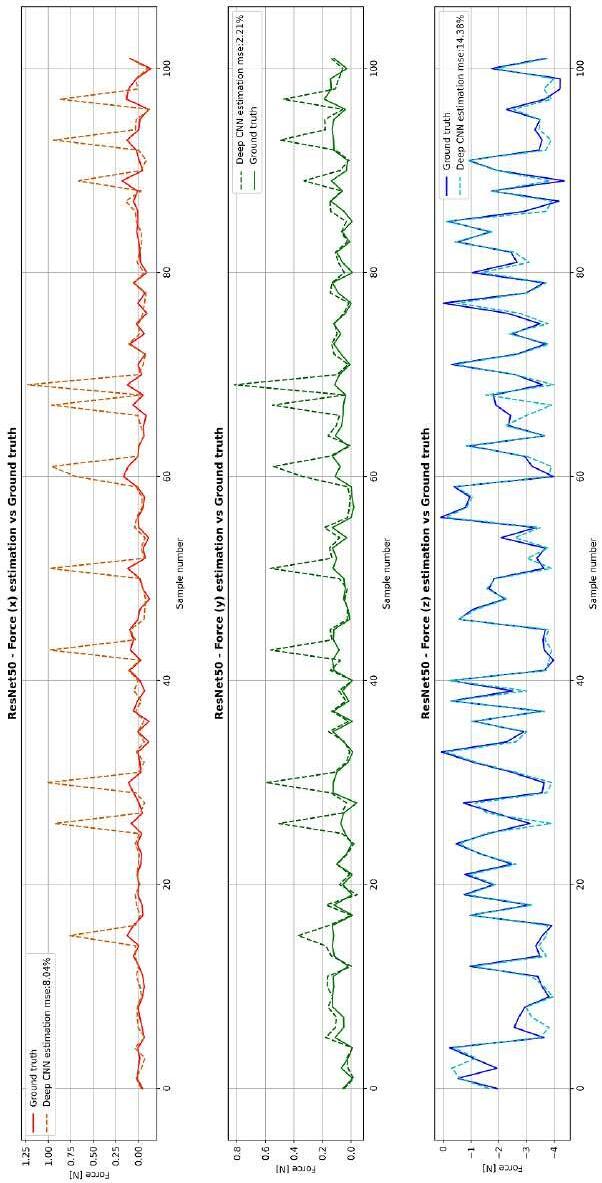

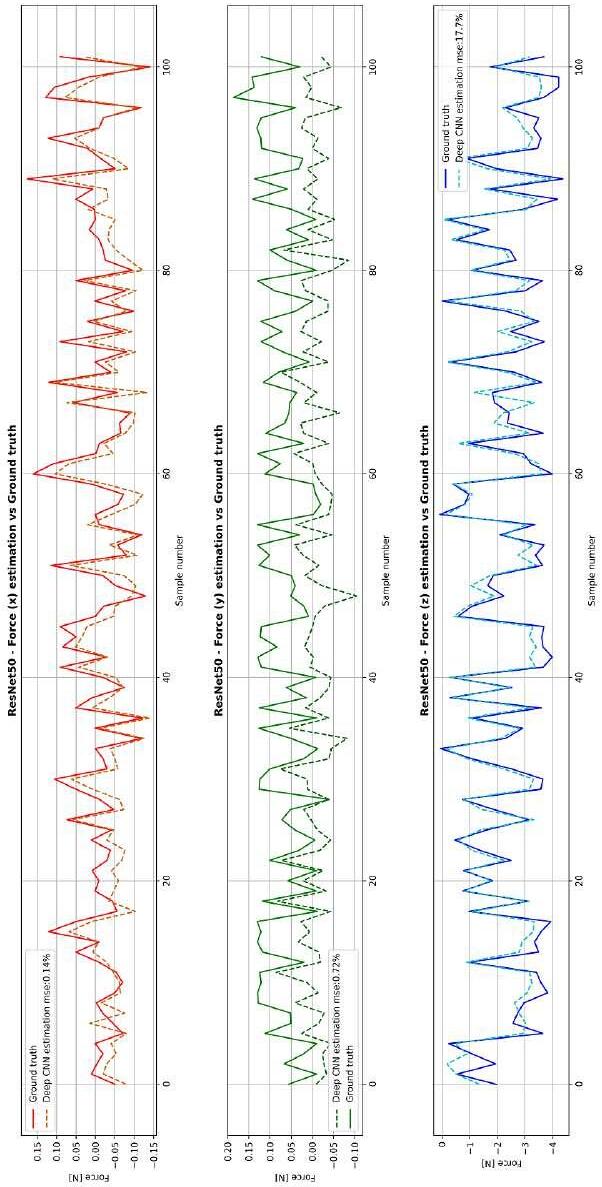

4.17 Histogram plot showing the Fz estimation Mean Squared Error depending on the feature type/scaling combination (negative bars correspond to errors above 100%)
4.18 Comparison of the estimated force components, considering feature option number 2 (Option 2. in the list) with feature scaling
4.19 Comparison of the estimated force components, considering feature option number 5 (Option 5. in the list) with feature scaling
4.20 Comparison of the estimated force components, considering feature option number 6 (Option 6. in the list) with feature scaling
4.21 Comparison of the estimated force components, considering feature option number 7 (Option 7. in the list) without feature scaling
4.22 Comparison of the estimated force components, considering feature option number 5 (Option 5. in the list) with feature scaling and without the shuffle option (to see the actual forces’ trends)
4.23 ResNet50 train and validation loss considering raw RGB images as input
4.24 ResNet50 train and validation loss considering Binarized images as input
4.25 ResNet50 predictions on the test set considering raw RGB images as input
4.26 ResNet50 predictions on the test set considering Binarized images as input
4.27 Photographs of the force estimation validation setup
4.28 Estimated forces against ground truths during the validation phase using the ATI Nano sensor and pressing with the developed gripper (1)

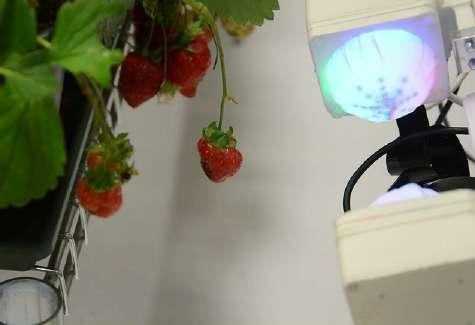

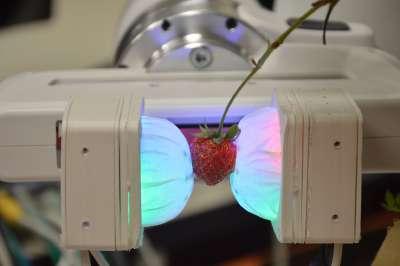

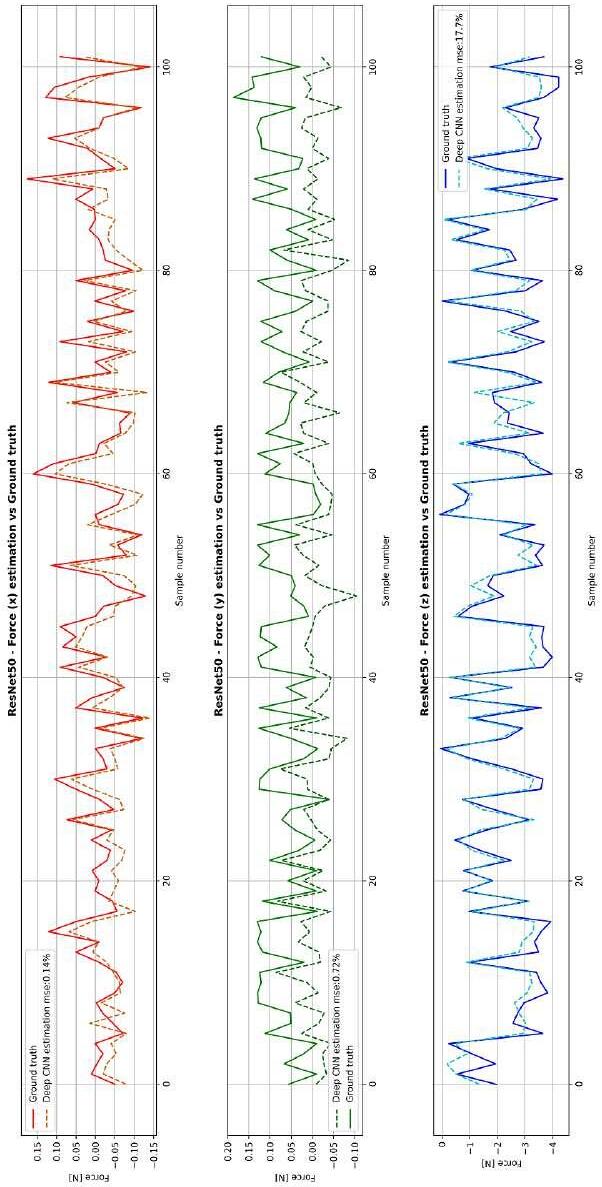

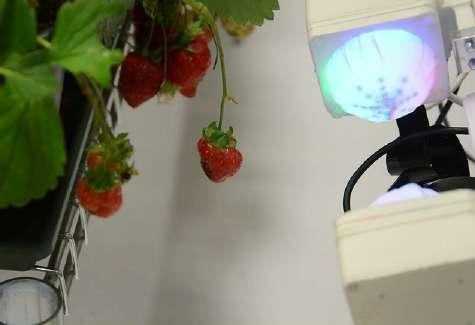

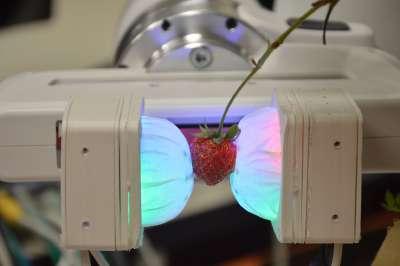

4.29 Estimated forces against ground truths during the validation phase using the ATI Nano sensor and pressing with the developed gripper (2)
4.30 Estimated forces against ground truths during the validation phase using the ATI Nano sensor and pressing with the developed gripper (3)
4.31 Photograph showing how strawberry plants were set up (emulating a hydroponic culture) to perform the picking task
4.32 Qualitative result of the fine-tuned CNN tested on one of the plants used for the setup shown in Figure 4.31
4.33 Phase 1: detection and localization of the ripe fruit
4.34 Phase 2: approach of the ripe strawberry given the 3D target point
4.35 Phase 3: application of the picking pattern to harvest the strawberry
4.36 Image sensed by the fish-eye camera when the dome is deformed, with the superimposed detected markers
4.37 Online force feedback while grasping the strawberry (we used the KNN model to estimate the normal force and determine if the 1.75 force threshold has been reached)
5.1 Photographs of the developed sensing device holding a strawberry without squeezing it

List of Tables

2.1 A brief summary of Table 4 reported in “Soft Grippers for Automatic Crop Harvesting: A Review” [47] as a literature review of food soft grippers
2.2 A summary of the main materials’ characteristics used in soft grippers mentioned in [47]
4.1 Summary of the best performance achieved by every model evaluated on the test set (MSE values refer to the Fz component)

Preface

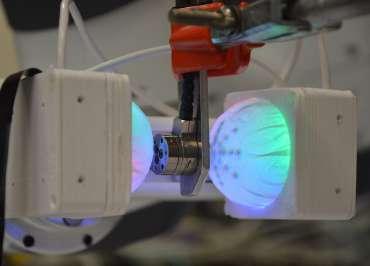

In this space, I’d like to thank all the people who supported my university studies. First, Professors Riccardo Muradore and Francesco Visentin both provided valuable feedback as supervisors of this Master’s Thesis. They kindly shared their knowledge and expertise, contributing significantly to this work. In particular, Francesco dealt with the design and 3D printing of the characterized sensor and of the cases to hold it in place. I’d also like to thank Post-Doc Researchers Giacomo De Rossi and Nicola Piccinelli, who helped us with the robot’s motion planning during the final evaluation phase.

Last but not least, thanks go to all my friends and family who supported my journey from all perspectives. In particular, I’d like to show gratitude to my parents Mariangela and Livio, my girlfriend Lucia, my brother Matteo and my closest friends.

Abstract

Soft robotics, and particularly soft gripping technology, faces many challenges. Due to several strict requirements, such as small dimensions, low cost, an efficient manufacturing process and accurate sensing capabilities, the development of soft grippers is still an open research problem. In this work a hemispherical, deformable and cheap-to-manufacture tactile sensor is proposed and characterized. The device is 3D printed using a stereolithography (SLA) 3D printer and is made of a semi-transparent elastic polymer resin that is properly cured afterwards. The overall aim is to sense normal and tangential forces applied to the gripper. The gripper is designed for agricultural applications, such as grasping delicate fruits and vegetables.

Contents

List of Figures
List of Tables
Preface
Abstract
1 Introduction
1.1 Problem statement
1.1.1 How to cope with the increasing food demand
1.1.2 Agriculture 4.0 and the role of soft robotics
1.1.3 Do all have the means to face a transition?
1.2 Thesis structure
2 Overview of soft robotics in agriculture
2.1 Soft grippers
2.1.1 A brief introduction to robotic grippers
2.1.2 Soft gripping technologies for agriculture
2.1.3 State-of-the-art of soft grippers
2.1.4 Soft grippers’ materials and manufacturing process
2.1.5 Controlling a soft gripper
2.2 Agricultural practices’ automation
2.2.1 Harvesting process classification
2.2.2 Harvesting picking patterns
2.2.3 Automation level of agricultural processes
2.2.4 Open issues in agricultural automation and proposed solutions
2.3 Open issues in soft robotics
2.3.1 Soft grippers’ limitations and required improvements
2.3.2 Case study-related state-of-the-art
3 The design of the prototype
3.1 Specifications and goals
3.2 The manufacturing process
3.2.1 Prototyping and manufacturing the hemispherical dome
3.2.2 Design and manufacturing of the case
3.3 Experimental setups
3.3.1 Testing setup used during software implementation
3.3.2 Temporary setup for data acquisition during sensor’s calibration
3.3.3 Definitive setup mounted on the robot
3.4 Implemented software algorithms and approaches
3.4.1 Marker detection and tracking
3.4.2 From pixel to metric units with a monocular setup
3.4.3 Raw data acquisition for sensor calibration
3.4.4 Offline semi-automated dataset creation pipeline
4 Experimental results
4.1 Force estimation approaches

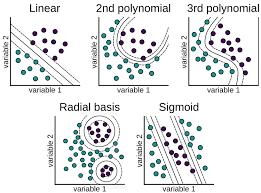

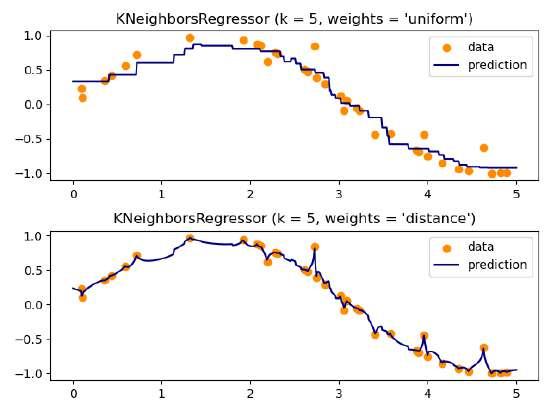

4.1.1 Machine Learning vs Deep Learning
4.1.2 Linear estimation
4.1.3 Non-linearly compensated and marker-location based estimation
4.1.4 Linear Regression model
4.1.5 K-Neighbors Regressor model
4.1.6 Support Vector Regression model
4.1.7 Neural Network Sequential model
4.1.8 Deep Convolutional Neural Network model
4.1.9 Feature extraction
4.2 Comparison of the results
4.2.1 Evaluation on the test set
4.2.2 Evaluation with the robotic gripper
4.2.3 Real-time force feedback and strawberry detection
5 Conclusions
Bibliography

Chapter 1 Introduction

This chapter motivates the work of this Master’s Thesis. Agriculture 4.0, industrial automation and soft robotics can help the transition towards a more sustainable and efficient harvesting process. The end of Chapter 1 briefly explains how the rest of the work is structured.

1.1 Problem statement

1.1.1 How to cope with the increasing food demand

As stated by several papers [7, 47, 65, 30] and well-respected institutions, agriculture is required to significantly grow its productivity to keep up with the rising global food demand. The United Nations Food and Agriculture Organization (FAO) foresees that “food and feed production will need to increase by 70% by 2050 in order to meet the world’s food needs” [65]. What makes such a result harder to accomplish is the shortage of workers in this field, due to the time-consuming and labour-intensive activities they are asked to endure, while often being exploited and underpaid [11, 8]. The main reasons for the declining interest in agriculture are: high land, real estate, machinery and agrotechnology prices; an unequal work-life balance; a lack of government incentives and, in most cases, poor working conditions. Also, the agricultural industry is having trouble competing with corporate jobs that offer higher pay and smart-working options. All of this translates into fewer young farmers coming in to fill the shoes of retired ones. In addition, the global COVID-19 pandemic has “increased the need of industrial automation for relieving workforce challenges and increasing operational and food safety in factory environments” [30].

1.1.2 Agriculture 4.0 and the role of soft robotics

Since Roman and Greek times, if not even before, humans have tried to automate laborious and repetitive tasks as much as possible, making use of the available technology. As an example, the Romans used a so-called “Gallo-Roman harvester”, shown in Figure 1.1, to speed up the grain harvesting process.

Nowadays automation plays a big role in the farming industry, minimizing the waste of products and time and optimizing the crop production cycle. An increasing number of companies are working on robotics innovation to develop drones, autonomous tractors, robotic harvesters, and automatic watering and seeding robots [30]. The main goal is to address, or at least mitigate, the previously mentioned issues that affect the agriculture sector.

Figure 1.1: On the left a sculpture depicting an ancient Roman harvester called Gallic Vallus; on the right a modern combine harvester designed for grain, potatoes, carrots, beets.

Agriculture 4.0, also called precision agriculture, refers to the use of the Internet of Things (IoT), big data, Artificial Intelligence (AI) and robotics to make the entire production chain more efficient. Technological innovation is exploited to collect, transmit and precisely analyze data from the field. Data gathered from sensors is then processed with the aim of supporting farmers in the decision-making process related to their activities. The ultimate goals are “increasing economic, environmental, and social sustainability - as well as profitability of agricultural processes” [2].

The main benefits of Industry 4.0 in the context of agriculture are:

• avoiding unnecessary waste (e.g. computing the exact water requirements of the crop);

• minimizing costs by planning and predicting all stages of cultivation, from land preparation and sowing to harvesting;

• improving the traceability of the supply chain and thus the food quality in a sustainable manner.

Although more and more companies are now using high-tech devices and sensors to ease the farmers’ work, allowing them to concentrate on higher-level tasks, there’s still plenty to be discovered in this field. In this regard, a relatively new research branch is focusing on soft robotics and in particular soft grippers. Soft robotics is a subfield of robotics concerning the design, control, and fabrication of robots composed of compliant materials, instead of rigid links. Figure 1.2 shows two examples of soft grippers designed by the U.S. company Soft Robotics [66]. The depicted mGrip modular gripping system is fully configurable and is designed for safely and efficiently picking and packing delicate products such as food.


In the last decades the ongoing trend in robotics has pointed towards collaborative robots that can operate outside of a cage, interacting with humans and the surrounding environment. Soft robots not only aim at being safer for humans but may also solve many open issues in robotics. In fact, being compliant, they’re flexible, harder to break or damage, and adaptable to unstructured and dynamic environments. They can meet hygiene and strict manipulation requirements while operating with delicate or fragile products, making them desirable in the agriculture and food industries. Also, in a pick-and-place task they don’t require an in-depth characterization of the object to handle, ensuring good performance even without any force feedback. In general, soft robotic grippers allow a simpler control architecture than traditional rigid robots. In fact, most soft grippers are underactuated, meaning that there are fewer control inputs than achievable degrees of freedom. By replacing the intricate rigid-body joint mechanics with simple compliant mechanisms, the number of required parts is significantly reduced, leading to lower maintenance and assembly costs.


Figure 1.2: Soft Robotics’ mGrip: a modular gripping system that enables reliable, high-speed picking of traditionally hard-to-grasp single items.

1.1.3 Do all have the means to face a transition?

Global agriculture has been constrained by many factors, such as socioeconomic issues, climate change, desertification and diminished crop yields, attributed to the decrease of vital nutrients in agricultural lands [39]. Despite the compelling evidence supporting agricultural automation, critics have argued that developing countries, including those in Sub-Saharan Africa, are less equipped for the transition to Agriculture 4.0 [39]. In fact, it’s not clear how those countries would be supplied with new technology, especially soft robots. As mentioned, Europe is experiencing an intense labour shortage in this field, so soft robotics and seeding/planting equipment would be a valid solution there. On the other hand, developing nations in Africa have a critical mass of unemployed youth [39], but this alone is not enough to transition to a more automated harvesting process. According to the Food and Agriculture Organization of the United Nations (FAO) [73], “digital innovations in mechanization technologies can make agriculture more attractive to rural youth, especially in developing countries”. Such a large unemployed young population offers the opportunity to create new and more attractive jobs and to leave rudimentary hand tools behind. According to FAO, governments should be provided with the necessary technical support to transform agriculture in a sustainable way; the initiative is aligned with the Framework for Sustainable Agricultural Mechanization in Africa (SAMA) and Asia (SAM).

Over the years, agricultural mechanization has evolved from basic hand tools and animal-driven implements to engine-powered equipment, but not uniformly all over the world. In fact, manual tools and animal power are still commonly used in developing countries, negatively affecting the livelihoods and productivity of small-scale farmers [73].

Economic incentives to help with the transition to Agriculture 4.0 have also been in place in Italy for several years. As reported by a recent article on this topic [51], it’s important not only to offer incentives but also to communicate them effectively in order to reach most farmers and small-medium enterprises. Supporting this statement, 87% of big companies know and use some of the current concessions, but only 59% of the smaller companies know about them. To be able to adopt new technology such as agrobots (robots for agriculture), a certain level of understanding of the robotic device is required: a good farmer is not necessarily an expert in digital technologies and automation. Some of the farmers’ reluctance is due to a not-so-straightforward process, a lack of continuous support and training, and the absence of external incentives (e.g. policies or market prices) [39]. Also, to achieve the best results, the farm system and the farmers’ workflow itself must adapt to the robots. For instance, the spacing between crops and the crop structures need to match the operational parameters of the agrobot as it moves among the cultivated crops. Of course, the purchase price of the device has to be taken into account, as it could be unsustainable for medium-small farms. This becomes less of a problem for large commercially oriented farms, where high labour costs during the harvest season can be attenuated through automation. FAO presents the need to find profitable business models where the farmer does not necessarily own the robot but can still benefit from the technology. Two possible solutions, already in place in many farming systems, are service provision and cooperative ownership. To conclude, a recent study [73] summarizes the main benefits of Agriculture 4.0 in developing countries and particularly in Vietnam. It reports that at this stage “the agriculture of Vietnam is still dominated by individual households with small scale production and low skill techniques. However, there is a growing trend of private investment in agriculture, which apply modern techniques, from both foreign and domestic investors. More interestingly, there are companies now specializing in technical solutions for agriculture”.

1.2 Thesis structure

This Master’s Thesis focuses on the research, development and characterization of a marker-based hemispherical soft gripper capable of sensing forces when in contact with the external environment.

The work is structured as follows:

• Chapter 2 presents an overview of soft robotics and soft grippers as a possible solution to the problems the agricultural industry faces nowadays. The main state-of-the-art soft gripping approaches are discussed and an overview of the open issues in this field is reported;

• Chapter 3 addresses the prototyping and manufacturing phase, explaining the main steps to develop the sensing device. The experimental setups used are briefly presented, while the final part of the Chapter explains the offline pipeline implemented to create the dataset. Some approaches for 3D shape reconstruction, inspired by similar or related state-of-the-art work, are also shown, although they turned out not to be very accurate;

• Chapter 4 is dedicated to the experimental results. The outputs of the developed offline and online pipelines are shown and commented on, and the main problems faced, together with their solutions, are discussed. Moreover, all the exploited algorithms and the Machine Learning and Deep Learning estimation approaches are cited and briefly explained. Finally, using the developed setup mounted on the Franka Emika Panda robot, a simple picking task is attempted and the qualitative results are reported.

Chapter 2 Overview of soft robotics in agriculture

In this Chapter the soft-gripping technology is introduced and presented as a possible solution to many problems the agricultural industry is currently facing. An overview of the technologies available on the market is presented and the publications related to the case study are briefly summarized.

2.1 Soft grippers

2.1.1 A brief introduction to robotic grippers

Graspingandmanipulationarefundamentalfunctionsthatrequireinteraction withthesurroundingenvironment. Grasping canbedescribedasthe“abilitytopickupandholdanobjectagainstexternaldisturbances”[63],while manipulation istheabilitytoexertforcesonanobject,causingitsrotationanddisplacementwithrespecttothemanipulator’sreferenceframe.A roboticgripper isaroboticend-effectorthatcanbemountedonarobotic

8

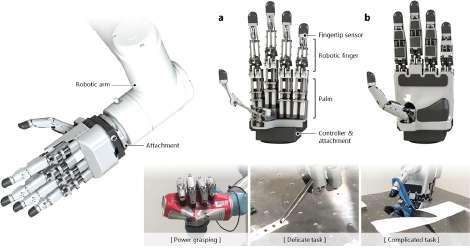

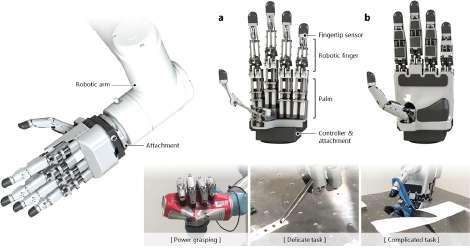

arm,actinglikeatoolor,specifically,ahandforgrasping,pickingandplacing objects.Traditionally,roboticgrippersaremadeofrigidjointsandlinksand they’reactuatedthroughelectricmotorsinsidethestructure.Inalternative theycanbeactuatedthroughcablesortendons,asshowninFigure2.1.Gripperdesignsrangefromtwo-fingeredgripperstoanthropomorphichandswith articulatedfingersandpalm(Figure2.1)[63].Infact,theirdesignisoften inspiredtohumanoranimalfeaturestoachieve dexterity (theabilitytoperformnon-trivialactionquicklyandskilfullywiththehands)and compliance (flexibilityandelasticdeformability).InFigure2.2threegrippersfromthe ROBOTIQcompany[56]areshown.They’realldesignedtobemountedon collaborativerobotsforprecisionassemblytasks.Moreover,inFigure2.3there arevacuumgrippersproducedbydifferentmanufacturers,suchasUniversal Robots[72],OnRobot[49]andJoulin[35].Thelatterproposes“TheFoam Gripper”whichischaracterizedbyafoamsuctioncupthatisinsensitiveto porosities.
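The definition of grasping as holding an object “against external disturbances” can be made concrete with the textbook two-contact friction grasp. The following is a minimal sketch under stated assumptions, not a model used in this work: two opposing fingers apply the same normal force, Coulomb friction with coefficient mu acts at both contacts, and gravity is the only disturbance. The function name, the safety factor and the example numbers are hypothetical.

```python
def min_grip_force(mass_kg: float, mu: float, safety: float = 1.0,
                   g: float = 9.81) -> float:
    """Minimum normal force per finger (N) for a two-contact friction grasp.

    Illustrative model (an assumption, not the method of this thesis):
    two opposing fingers squeeze the object with the same normal force N,
    Coulomb friction with coefficient mu acts at both contacts and gravity
    is the only disturbance. Holding requires 2 * mu * N >= m * g, hence
    N >= m * g / (2 * mu). A safety factor >= 1 adds margin for
    accelerations and model errors.
    """
    if mass_kg < 0 or mu <= 0 or safety < 1.0:
        raise ValueError("need mass_kg >= 0, mu > 0 and safety >= 1")
    return safety * mass_kg * g / (2.0 * mu)


# Hypothetical example: a 20 g fruit held with mu = 0.5 and no safety margin.
print(round(min_grip_force(0.020, 0.5), 4))  # 0.1962
```

The required squeezing force scales with the object’s weight and inversely with friction, which is one reason why compliant, high-friction contacts are attractive for delicate produce.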

One of the main challenges of rigid anthropomorphic grippers is handling soft and deformable objects, like fruits or vegetables, that require additional care during manipulation. The so-called soft grippers try to address some of those problems, avoiding the high mechanical and control complexity that classical grippers require to achieve “software compliance”. In fact, they allow simpler controllability and adaptability to dynamic environments, while being robust, durable, versatile and inherently compliant thanks to the soft materials. Underactuation, which denotes a lower number of actuators than degrees of freedom, is fundamental to achieving simpler controllability: as an example, human fingers can be seen as the composition of one tendon and three links, meaning two degrees of freedom given a single control input.

Figure 2.1: On the left an “integrated linkage-driven dexterous anthropomorphic robotic hand” [38] while on the right a soft anthropomorphic hand [7].
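To illustrate how a single control input can drive several degrees of freedom, here is a minimal quasi-static sketch of a two-joint, tendon-driven underactuated finger. It is not the model used in this work and rests on simplifying assumptions: one frictionless tendon applies a torque r_i * T at joint i, each joint has a linear return spring of stiffness k_i, and contact with an object is modelled as an upper bound on the joint angle. All names and numbers are hypothetical.

```python
import numpy as np


def finger_joint_angles(tension, moment_arms, stiffness, joint_limits=None):
    """Quasi-static joint angles (rad) of an underactuated tendon-driven finger.

    Simplified model (an assumption for illustration): one input, the tendon
    tension T in N, drives every joint. At joint i the tendon torque r_i * T
    is balanced by a return spring k_i * q_i, so in free motion
    q_i = r_i * T / k_i. A joint whose link touches an object is blocked at
    joint_limits[i] (np.inf means no contact); in this model the other
    joints are unaffected and keep closing as T grows.
    """
    r = np.asarray(moment_arms, dtype=float)  # tendon moment arms (m)
    k = np.asarray(stiffness, dtype=float)    # spring stiffness (N*m/rad)
    q = r * tension / k                       # free-motion equilibrium
    if joint_limits is not None:
        q = np.minimum(q, np.asarray(joint_limits, dtype=float))
    return q


# Two joints (2 DOF) driven by a single tendon (1 input).
free = finger_joint_angles(2.0, [0.010, 0.008], [0.02, 0.02])
blocked = finger_joint_angles(2.0, [0.010, 0.008], [0.02, 0.02], [0.5, np.inf])
print(free, blocked)
```

In free motion the ratio between joint angles is fixed by r_i / k_i, which is the price of having fewer actuators than degrees of freedom; blocking by contact is what lets the finger adapt its shape to the object without extra control inputs.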

Also, robotic grippers can be equipped with sensors to estimate the position and velocity of the gripper elements (e.g. Hall-effect sensors, encoders, torque sensors) and with sensors to retrieve information about in-contact objects or applied external forces (e.g. pressure, force and torque sensors, optical sensors, resistive, conductive and electromagnetic sensors).

Figure 2.2: From left to right: ROBOTIQ Hand-E Adaptive Gripper, 2F-85 Gripper and 3-Finger Adaptive Robot Gripper.

Figure 2.3: From left to right: Universal Robots ZXP7*01 Vacuum Unit, OnRobot VG10 Vacuum gripper and Joulin Foam Gripper.

2.1.2 Soft gripping technologies for agriculture

According to [63], soft gripping technologies can be classified into three macro categories, even though these are not mutually exclusive and many devices combine two technology classes to reach higher performance:

• Actuation: passive structures with external motors, fluidic elastomer actuators (FEAs), electroactive polymers, shape memory alloys (SMAs);

• Controlled stiffness: granular jamming, low melting point alloys (LMPAs), electro-rheological (ER) and magneto-rheological (MR) fluids, shape memory polymers (SMPs);

• Controlled adhesion: electro-adhesion, gecko adhesion (dry adhesion).

Gripping by actuation consists of bending gripper fingers or elements around the object, as we do with our fingers when picking up an egg or a glass of water. The bending shape can be actively controlled; otherwise, contact with the object can be exploited to induce deformation [63].

Gripping using controlled stiffness exploits the large change in rigidity of some materials to hold the target object. An actuator is needed to envelop the object with part of the gripper, and while the material is soft the applied force can be very low, allowing the manipulation of delicate objects. Such grippers are fast and allow the stiffness to be tuned to a desired level, but the achievable stiffness range can be limiting.

Gripping using controlled adhesion, similarly to variable stiffness, requires an actuation method to partially envelop the object. Controlled adhesion relies on surface forces at the interface between gripper and object.


This operating principle is a major advantage when manipulating very delicate objects, as it avoids the high compression forces required when gripping by actuation. It is also an ideal method for flat objects or objects that cannot be enveloped, but it requires clean, relatively smooth and dry surfaces.

As described in [47], additional criteria for choosing the gripper’s technology could be: target object size, gripper size, lifting capabilities and the ratio between the gripper’s and the object’s masses. Power consumption, ease of control (open loop), scalability, modularity and adaptability to various target objects should also be considered.

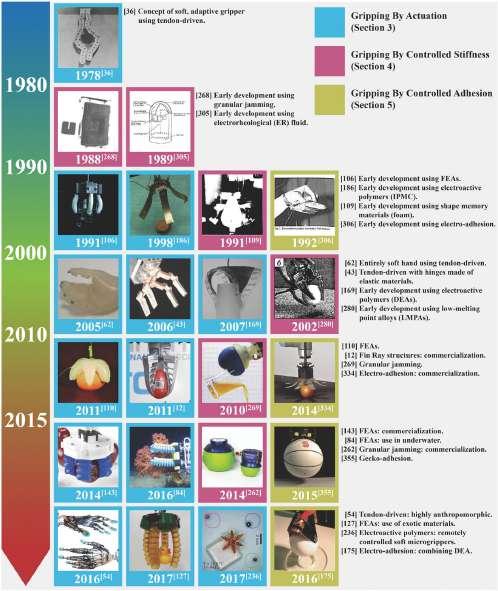

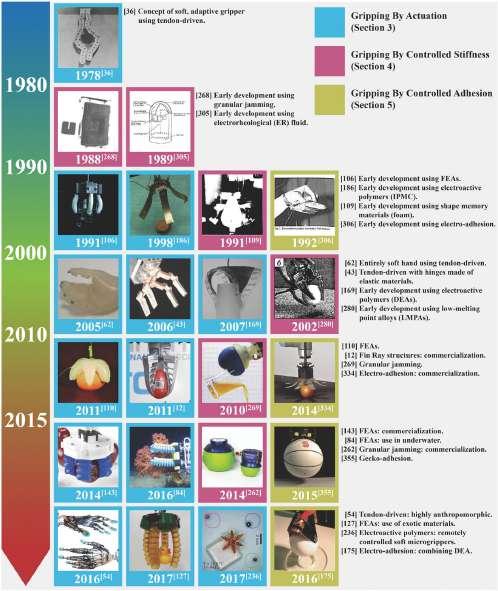

Response time, surface-related requirements, biocompatibility, robustness in unstructured environments, compliance and lifetime can all affect the efficiency of the agricultural task. Figure 2.4 shows a brief timeline of milestones in the development of soft gripper technologies according to [63], from the tendon-driven grippers of the late 1970s to the 2017 FEAs using thermo-reversible Diels-Alder polymers.

According to the mentioned requirements, the most suitable and commonly used technologies are:

• Granular jamming: reacting to external variables such as chemical concentration, humidity or light, these grippers achieve a good lifting ratio and response time and are able to lift medium-size fruits;

• Passive structures with external motors and FEA actuators: ideal for fruit harvesting grippers, with a high lifting ratio, a wide object size range, good response time and the ability to grasp any object.

Soft components typically used in the grippers’ actuators include urethanes, hydrogels (invisible in aqueous environments), hydraulic fluids and polymers, such as silicone elastomers [47, 63]. Actuators based on silicone elastomers have attracted strong interest due to their low cost and ease of manufacture: they do not require complex machinery or skilled labour. In addition, these compliant materials are advantageous for the safety of interaction with biological products, making them appropriate candidates for agricultural applications.

Figure 2.4: A brief timeline of milestones in the development of soft gripper technologies as presented in [63].

2.1.3 State-of-the-art of soft grippers

In the field of soft robotics, there is still plenty of room for improving soft actuators designed for picking, placing and harvesting fruits and vegetables. Handling this type of product requires precise control of the gripper to successfully follow the picking pattern’s movements without causing any damage to the fruit. According to the literature [47], the main capabilities of an ideal picking robot would be:

• 3D localization of fruits inside the plant;

• path and trajectory planning;

• application of the suitable fruit detachment method;

• adequate storage of the fruit.

All of this should be carried out with the aim of increasing the harvest ratio between robotic and manual picking and the quality of the harvested fruit, while being economically justified. End-effectors are required to handle fruits appropriately to preserve their quality, and therefore their market value.

Soft grippers are considered one of the best solutions for harvesting crops, thanks to their adaptability and delicacy when grasping and manipulating the target products. By using materials with a modulus of elasticity similar to that of biological materials, soft grippers ensure safe interaction with humans and the working environment. Table 2.1 summarizes the most recent proposals for food soft grippers [47].

Regarding commercially available soft grippers, in 2015 the company Soft Robotics [66] introduced mGrip: a pneumatically powered gripper made of soft elastomers. As shown in Figure 2.5, it consists of a network of parallel air chambers embedded in the elastomer, thanks to which a single pneumatic source can control the device. Thus, compliance is achieved without hard linkages, additional sensors or a vision system. This modular gripper can be set up as two opposing fingers or as multiple fingers placed in a circular pattern.

Festo [19] is an industrial automation company that produces collaborative robots with soft grippers attached (examples in Figures 2.6 and 2.7). BionicSoftArm is a robotic arm that, in its largest version, has seven pneumatic actuators and as many degrees of freedom. It can be equipped with various adaptive grippers for pick-and-place tasks, such as the FlexShapeGripper, inspired by the behaviour of a chameleon; the MultiChoiceGripper, an adaptive, flexible handling system inspired by the opposable thumb; and the TentacleGripper, an octopus-inspired gripper which wraps around objects like an octopus’s arm and then uses vacuum suction cups to hold them firmly in place.

| Soft technology and year | Grasped object | Object size or weight | Gripper type | Controllability |
|---|---|---|---|---|
| FEAs (2020) | Lettuce | 50 x 250 mm | 2 pneumatic actuators and a blade (8 kg, 450 x 450 x 300 mm) | Closed-loop with force feedback |
| FEAs (2010) | Apple, tomato, strawberry, carrot | 69 mm; 5-150 g | Magnetorheological gripper (finger size: 82 x 16 x 15 mm) | PID |
| FEAs (2017) | Cupcake | 75.2 g | Soft fingers (finger length: 97 mm) | Open-loop |
| FEAs (2020) | Orange | 1 kg | Soft fingers (finger size: 95 x 20 x 18 mm) | Open-loop |
| FEAs (2020) | Tomato, kiwifruit | 46-76 mm | 4 soft chambers in a circular shell (diameter: 46 mm; height: 30 mm) | Open-loop |
| Tendon-driven (2020) | Tomato | 500 g | 3 soft finger design | Pre-programmed motors’ rotation |
| FEA-tendon driven (2019) | Banana, apple, grapes | 2.7 kg | 3 soft finger design with a suction cup (390 g) | Teleoperation |
| Topology optimized soft actuators | Apple, grapefruit, guava, orange, kiwi | 1.4 kg | 2 compliant fingers | Open-loop |

Table 2.1: A brief summary of Table 4 reported in “Soft Grippers for Automatic Crop Harvesting: A Review” [47], a literature review of food soft grippers.

The Hank soft gripper from Cambridge Consultants [10] attempts to emulate the four fingers and opposable thumb of the human hand, providing a sophisticated sense of touch and slip through sensors embedded in its individual pneumatic fingers (Figure 2.8 shows the Hank soft gripper). These sensors are embedded during the moulding process inside its hollow silicone fingers, which are actuated pneumatically. The applied force is measured from the deformation of the fingers, and this measurement is used to close the force feedback loop.

Finally, the increasing pressure for environmentally friendly technologies has induced researchers to explore soft grippers made of biodegradable, and even edible, materials [63].

Figure 2.5: Soft Robotics’ mGrip modular soft gripper.

Figure 2.6: On the left Festo FlexShapeGripper and on the right Festo TentacleGripper.

Figure 2.7: Festo BionicSoftArm equipped with a flexible MultiChoiceGripper.

2.1.4 Soft grippers’ materials and manufacturing process

According to [63], soft grippers are made of urethanes, hydrogels, braided fabrics, hydraulic fluids and polymers, such as silicone elastomers, which have become very desirable thanks to their low cost and ease of manufacture.

The most commonly used soft materials in the silicone elastomer category are: Dragon Skin, Ecoflex, polydimethylsiloxane (PDMS), Elastosil M4601 and Smooth-Sil. Other polymers are Agilus30/VeroClear, ultra-high molecular weight polyethylene, electrostatic discharge (ESD) plastic sheet, thermoplastic elastomers (TPEs) and thermoplastic polyurethane (TPU). An important aspect for the suitability of soft grippers in the agricultural sector, as suggested by [63], is that the materials they are made of must not contaminate the food. This topic should be investigated further, to understand whether the degradation of soft grippers may leave particles on the manipulated crops. Table 2.2 summarizes the main advantages of the mentioned materials.

Regarding the manufacturing process, several approaches can be mentioned:

• Moulding: molten material is placed inside a (typically 3D printed) mold and removed after hardening. It can be done manually or through Fused Deposition Modelling (FDM) printers;

• Shape Deposition Manufacturing (SDM): suitable for 3D soft actuators made of multiple materials with different properties;

• Soft lithography: suited for developing multichannel soft actuators;

• Virtual lost-wax casting: a variant of a technique normally applied to cast metal. In this case, the final part is virtually designed (CAD) and a virtual mold is created by inverting the part design. This mold is then 3D printed and filled with uncured silicone. After curing, the mold is destroyed using a solvent;

• Soft 3D printing: the most promising technology, because it eliminates several moulding stages, which makes the manufacturing process easier and allows the design of more complex inner chambers or pneumatic networks.

Figure 2.8: Cambridge Consultants’ Hank soft gripper.

| Soft material | Main specifications | Shore hardness |
|---|---|---|
| Dragon Skin, Ecoflex, Smooth-Sil | Versatile, easy to use and handle, low cost | 10 to 50 Shore A |
| Elastosil M4601 | Highly resistant to bending and elongation; low viscosity in its uncured form; easy to mold | Approximately 28 Shore A |
| PDMS | High elasticity; it is a thermosetting polymer, obtained by irreversibly hardening (curing) a soft solid or viscous liquid prepolymer (resin). Precisely mathematically modellable through Finite Element Method (FEM) analysis. The variation of its hardness with different mixing ratios has been extensively studied in the literature. | Approximately 50 Shore A |
| TPU and TPE | Can be 3D printed. Also, TPU-95 is very durable and suitable for agricultural environments, where harmful collisions with objects are frequent. | 85 Shore A |

Table 2.2: A summary of the characteristics of the main materials used in soft grippers, mentioned in [47].


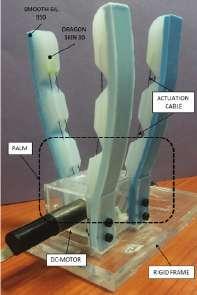

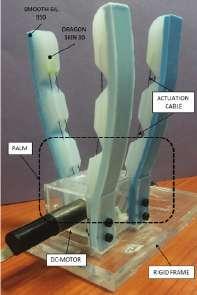

In [29], a multi-fingered soft gripper design comprising hydraulic-driven and sheet-shaped fabric bending actuators is proposed. In [44], a bioinspired soft robotic gripper for adaptable grasping is proposed; its manufacturing process involves molding and casting Dragon Skin 30 silicone inside the molds. In [25], both a hybrid robotic gripper and a completely soft robotic gripper are proposed. They are characterized by retractable telescopic inflatable fingers, and the design is intended for unknown environments thanks to its high conformability and compactness. As shown in Figure 2.9, the telescopic mechanisms are made of urethane rubber (Smooth-On Vytaflex 40), while the claws are 3D printed in PLA (PolyLactic Acid) and driven via rack-and-gear couplings connected to three ROBOTIS Dynamixel XM430-W350 smart actuators.

Figure 2.9: From left to right: a soft fabric gripper with gecko adhesion; a bioinspired soft gripper made of Dragon Skin 30 silicone; a hybrid/soft robotic gripper made of urethane rubber.

2.1.5 Controlling a soft gripper

As previously mentioned, soft actuators are deformable and compliant, which translates into a large intrinsic number of degrees of freedom. How a soft actuator is controlled depends strongly on the chosen materials, and control complexity can be reduced through design. Soft grippers are often cited as an example of morphological computation, meaning that control complexity is reduced by material softness and mechanical compliance [63]. Several control strategies have been proposed for FEA-type actuator technology, such as Proportional-Integral-Derivative (PID) control, closed-loop curvature control and real-time Artificial Neural Network (ANN) control. However, open-loop control is one of the most frequently used. According to a recent review [47], difficulties can be encountered when controlling certain types of FEA soft actuators and passive structures actuated by external or tendon motors, due to their deflection around the object.
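As an illustration of the PID strategy mentioned above, the following Python sketch regulates the internal pressure of a single FEA chamber. The chamber is modelled, purely as an assumption, as a first-order system dp/dt = (K u - p)/τ driven by a valve command u in [0, 1]; the function name, gains, time constant and setpoint are hypothetical values chosen for illustration, not parameters of any cited gripper.

```python
def simulate_pid(setpoint_kpa: float, kp: float, ki: float, kd: float,
                 tau_s: float = 0.5, gain_kpa: float = 100.0,
                 dt: float = 0.01, steps: int = 1000) -> list[float]:
    """Discrete PID loop on a hypothetical first-order FEA pressure model.

    Plant (assumed): dp/dt = (gain_kpa * u - p) / tau_s, valve command u in [0, 1].
    Returns the chamber pressure [kPa] at every control step.
    """
    pressure = 0.0
    integral = 0.0
    prev_error = setpoint_kpa - pressure
    trace = []
    for _ in range(steps):
        error = setpoint_kpa - pressure
        integral += error * dt
        derivative = (error - prev_error) / dt
        prev_error = error
        u = kp * error + ki * integral + kd * derivative
        u = min(max(u, 0.0), 1.0)  # saturate the valve command
        pressure += dt * (gain_kpa * u - pressure) / tau_s  # explicit Euler step
        trace.append(pressure)
    return trace


trace = simulate_pid(setpoint_kpa=40.0, kp=0.01, ki=0.02, kd=0.0)
print(f"pressure after 1 s: {trace[99]:.1f} kPa, after 10 s: {trace[-1]:.2f} kPa")
```

With kd = 0 the controller reduces to PI control; for these particular values the PI zero cancels the assumed plant pole, so in the continuous-time limit the pressure approaches the setpoint like a first-order system with a 0.5 s time constant and no overshoot. A real FEA is nonlinear and would need identified parameters and anti-windup handling.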

2.2 Agricultural practices’ automation

2.2.1 Harvesting process classification

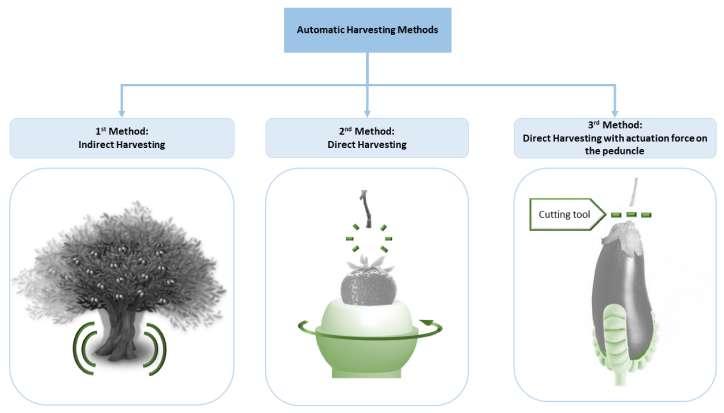

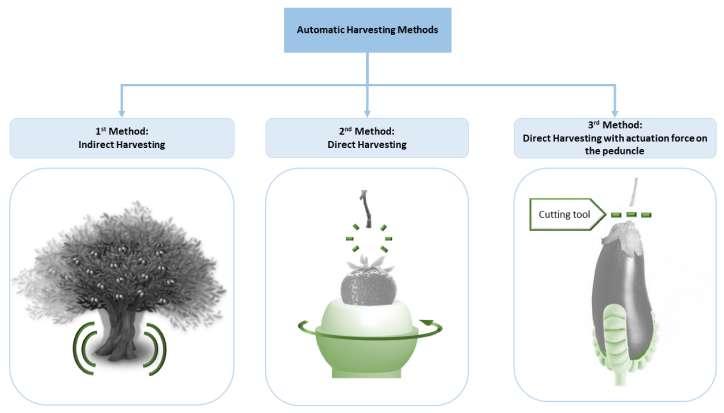

Harvesting is a process that comes into play at the final stage of fruit development and determines fruit quality. It is important to harvest fruits and vegetables at the proper stage of maturity in order to maintain their nutrient quality and freshness for a prolonged period of time [30]. Nowadays, the majority of fruits for fresh consumption are harvested by hand, while those used for processing may be harvested mechanically. Hand harvesting is time-consuming and labour-intensive, whereas mechanical harvesting is more efficient. According to a recently published (2021) review paper [47], mechanical harvesting methods can be divided into:

• Indirect harvesting: a force is applied to the plant without making direct contact with the fruits; it involves methods such as air blasting, limb shaking, trunk shaking and canopy shaking (typically used for olives, almonds and pistachio nuts).

• Direct harvesting: used whenever a plant, due to its structure, cannot be shaken, requiring the direct application of a mechanical force on the fruit or its peduncle. In this case, picking techniques (or patterns) such as twisting, pulling, bending, lifting or a combination of them are chosen to effectively detach fruits from the stem (e.g. strawberries, apples, tomatoes).

• Direct harvesting with an actuation force on the peduncle: applied when a cutting tool is required to properly detach the fruit because of its hard peduncle connection to the plant (e.g. oranges, cucumbers, peppers).
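The three categories above can be read as a simple decision rule. The Python sketch below encodes it; the `Crop` record and its two boolean attributes are hypothetical simplifications introduced here, and, as discussed in the following paragraphs, the real choice also depends on fruit size, maturity stage, acceptable damage risk and profitability.

```python
from dataclasses import dataclass
from enum import Enum


class HarvestMethod(Enum):
    INDIRECT = "indirect harvesting (shaking, air blasting)"
    DIRECT = "direct harvesting (picking pattern applied to the fruit)"
    DIRECT_WITH_CUT = "direct harvesting with actuation force on the peduncle"


@dataclass
class Crop:
    name: str
    plant_can_be_shaken: bool  # hypothetical attribute
    hard_peduncle: bool        # hypothetical attribute: detachment needs a cutting tool


def choose_method(crop: Crop) -> HarvestMethod:
    """Hypothetical simplification of the harvesting classification described in the text."""
    if crop.plant_can_be_shaken:
        return HarvestMethod.INDIRECT
    if crop.hard_peduncle:
        return HarvestMethod.DIRECT_WITH_CUT
    return HarvestMethod.DIRECT


for crop in (Crop("olive", True, False), Crop("strawberry", False, False), Crop("pepper", False, True)):
    print(f"{crop.name}: {choose_method(crop).value}")
```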

Figure 2.10 shows a classification of the most commonly used harvesting methods, as presented in [47].


Figure 2.10: Classification of automatic harvesting methods, according to [47].

Depending on the crop, more than one harvesting technique could be used, and several factors, such as the size, shape and fragility of the tree, the maturity stage of the fruits, the acceptable risk of damaging fruit or plant and financial profitability, determine the choice of the most suitable one.

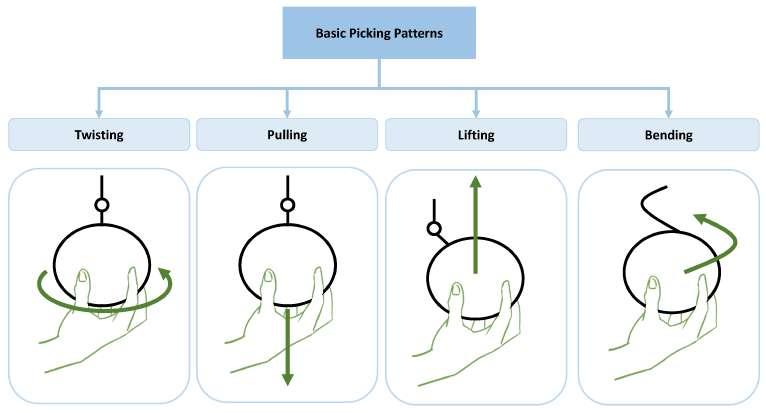

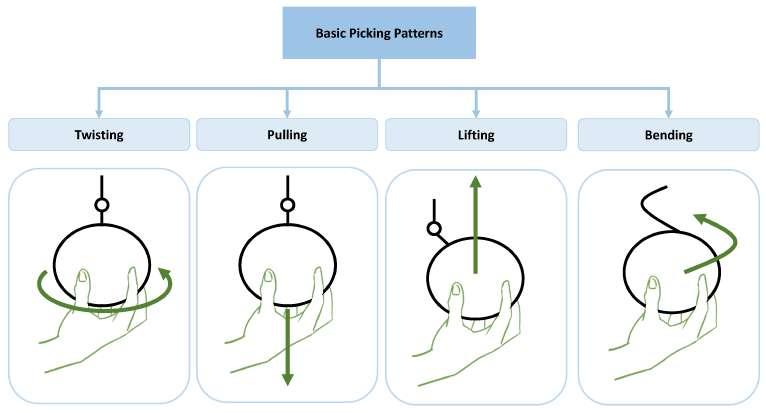

2.2.2 Harvesting picking patterns

Regarding the second harvesting method mentioned (direct harvesting), further considerations can be made. A research branch in robotics focuses on studying the human movements performed during crop harvesting, with the objective of replicating them using robotic grippers. These movements are the so-called picking patterns, which include bending, lifting, twisting and pulling, or a combination of them (shown in Figure 2.11).

In the literature, several studies have been conducted to determine the most suitable picking pattern, and therefore gripper design, for each fruit, such as tomatoes, apples, kiwis and strawberries. In particular, soft grippers are being developed because their compliance allows delicate manipulation of the fruit, since direct contact is required while harvesting. Also, direct harvesting with an additional actuation force can be addressed using soft gripper technology and a suitable cutting tool such as a saw, hot wire, scissors or a knife [63].

Figure 2.11: A simplified scheme of basic picking techniques, according to [47].

This Master’s Thesis work, mainly due to the dimensions of the manufactured sensing device, focuses on the harvesting of small-size fruits such as strawberries and tomatoes, which can be harvested following the second method. The picking pattern usually includes twisting and pulling once the fruit is grasped. Conversely, many other fruits such as olives, raspberries and blueberries, which would be picked directly by hand, are far easier to harvest with the first method (e.g. shaking the plant) if automation is involved.

2.2.3 Automation level of agricultural processes

As often happens, tasks that can be easily carried out by humans become very challenging for robots. In crop harvesting, an experienced farmer can reliably and rapidly distinguish the maturity stage of a crop not only by its color but also by its size, shape, surface texture, softness and resonance (the sound it makes when tapped). If tasted, the fruit or vegetable can also be judged by its aroma, sweetness, sourness and bitterness. Most of these properties are difficult for a robot to sense, which is why most agrobots are only suited to harvesting crops that will be processed before sale. Also, the farmland environment is typically unstructured, highly dynamic and full of obstacles. It is characterized by bumpy and uneven ground, which can only get worse in bad weather. A brief review of the solutions currently available on the market is presented below.

According to a recently published (2021) review paper, “Advances in Agriculture Robotics: A State-of-the-Art Review and Challenges Ahead” [48], AI, IoT technologies and computer vision algorithms can be successfully used for soil and weed management, fruit classification and weed detection in complex environments. Regarding the land preparation task, the German company Raussendorf [55] developed in 2014 “Cäsar” (shown in Figure 2.12), a mobile four-wheel-drive, remote-controlled/temporarily autonomous robot for soil fertilization. To perform this task, Real-Time Kinematic (RTK) technology for the Global Navigation Satellite System (GNSS) is used, improving the robot’s localization accuracy up to 3 cm. It is designed to work alongside farmers and features a collision detection system with a maximum detection distance of 5 m. On the other hand, the Chinese company DJI [15] developed a flying drone equipped with 8 rotors (AGRAS MG-1P) to perform agricultural activities such as applying liquid fertilizers, pesticides and herbicides (shown in Figure 2.12). It can transport up to 10 liters over a maximum distance of up to 3 km, ensuring a spraying capacity of 6 ha/h. To avoid collisions with high-voltage wires or tall vegetation, an omnidirectional radar anti-collision system is embedded, which detects obstacles up to 15 meters away. This approach can be useful whenever direct contact with the soil is not required, as it accelerates the process and avoids terrestrial obstacles and navigation in a rough environment.

Ascending Technologies [5] proposed AscTec Falcon 8: a remotely controlled multicopter designed for inspection and monitoring, survey and mapping applications. In agriculture, it can be used to monitor the amount of chlorophyll present in the vines, helping to prevent or highlight possible diseases. Tevel Aerobotics Technologies [70] proposed a flying autonomous robot aimed at automating the fruit picking task, selecting the ripe crops and gently grasping them. Figure 2.13 includes the mentioned flying drones.


Another key task in agriculture is weed control: a type of pest control that aims at reducing the growth of noxious weeds that compete with crops for space, nutrients, water and light. Several companies have developed autonomous robots to remove these undesirable weeds: the French company Naïo Technologies [46] proposed “Oz”, “Dino” and “Ted” for large-scale vegetable farms and wine growers. According to Naïo Technologies, 70 Oz robots were sold in 2018 alone, with 80% of sales going to the French domestic market, 15% to other European countries and 5% to the rest of the world. The three Naïo Technologies robots are shown in Figure 2.14.

The French company VITIROVER Solutions [74] developed a compact, lightweight mobile robot for weed removal (shown in Figure 2.15). It is able to operate under various weather conditions, is equipped with photovoltaic panels and allows control and monitoring through a mobile application, putting the IoT concept into practice. Also, Tertill Corporation [69], based in Massachusetts, proposed Tertill: a cheap (349.00 USD), light and small wheeled autonomous robot designed to remove weeds from residential gardens (shown in Figure 2.15).

Figure 2.12: On the left Raussendorf’s autonomous system for agricultural purposes, Cäsar; on the right the DJI AGRAS MG-1P Series agriculture drone.

Figure 2.13: On the left the AscTec Falcon 8 flying drone; on the right the Tevel Aerobotics automated fruit picker.

Once crops are mature, the harvesting process takes place. The Spanish company Agrobot [3] proposes the Agrobot E-Series: a robot that consists of up to 24 independent Cartesian robotic arms able to work together to gently harvest delicate fruits such as strawberries. As can be seen in Figure 2.16, it has three wheels and its mechanical structure can be adjusted to suit the crop dimensions.

Figure 2.14: Autonomous robots proposed by Naïo Technologies, from left to right: Oz, Dino and Ted.

Figure 2.15: On the left the completely autonomous mobile robot for precisely controlling soil grassing proposed by VITIROVER Solutions; on the right the Tertill Weeding Robot.

Moreover, the American company Harvest CROO Robotics [28] created Berry 5: a robotic picker that exploits AI to determine whether a strawberry is ripe before harvesting it (shown in Figure 2.17). It has a picking speed of 8 seconds per fruit and moves through strawberry beds at 1.6 km/h, resulting in a yield equivalent to that of 25 to 30 human harvesters [48]. Like the Agrobot E-Series, the various mechanisms of the Berry 5 robot are protected by patents, making its scientific analysis difficult.

Other companies, such as Augean Robotics [9] and Harvest Automation [52], are focusing on robots that can cooperate with humans for carrying and organizing products, with the objective of increasing industrial productivity.

Augean Robotics developed Burro (shown in Figure 2.18): a mobile collaborative robot that exploits computer vision, high-precision GPS and AI to follow people and navigate autonomously while carrying or towing objects. It has a maximum carrying payload of 226 kg, depending on the terrain, and a maximum towing capacity of 907 kg. Harvest Automation proposed HV-100 (shown in Figure 2.18): a mobile autonomous robot designed to perform material handling tasks in unstructured, outdoor environments such as those typically found in commercial growing operations. The robot can safely collaborate with workers and requires minimal training to operate, with a maximum payload of 10 kg.

Figure 2.16: Agrobot E-Series stainless steel and military-grade aluminum robot.

Interestingly, the review paper [48] shows that, among the 62 projects/available products considered, 80% are in the research stage. Also, most of them are four-wheel-drive (4WD) mobile robots, and almost 70% of them do not include computer vision algorithms.

Figure 2.17: Harvest CROO Robotics Berry 5 fruit picking robot.

Figure 2.18: On the left Augean Robotics’ Burro self-driving robot; on the right Harvest Automation’s HV-100 robot.

Despite constant technological advances, many challenges are still to be overcome, such as fruit occlusions, changes in ambient lighting, simplicity of construction and efficiency.

2.2.4 Open issues in agricultural automation and proposed solutions

According to [48], most agricultural robots are 4WD, but the agricultural environment is classified as semi-structured and this kind of locomotion is strongly affected by soil characteristics. Also, a trade-off between the quality and cost of embedded electronic devices (sensors, cameras, IoT components) must be taken into account.

Wheeled robots not only struggle to move in an agricultural environment but can also cause undesired soil compaction. As mentioned, UAV devices can be a valid alternative if the task allows it. Legged robots are also proposed by [48], since they require less contact with the ground while moving and are able to adjust their posture depending on the terrain’s slope. Legged robots such as the ones shown in Figure 2.19 are relatively light, small and autonomous, with locomotion patterns that adapt to the environment. One drawback is that their small feet imply a small contact area, which creates a considerable amount of pressure on the foot placement region. So, to prevent the robots’ feet from penetrating soft soils and getting trapped, a customized foot design is required.
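The pressure argument can be quantified with p = F/A. The Python sketch below compares the mean ground pressure of a hypothetical 50 kg quadruped (two feet in stance during a trot, circular feet of 2 cm radius) with that of a wheeled platform of equal mass (four 5 cm x 10 cm contact patches); all masses and contact areas are illustrative assumptions, not specifications of the robots in Figure 2.19.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def ground_pressure(mass_kg: float, contact_area_m2: float) -> float:
    """Mean contact pressure p = m * g / A, in pascals."""
    return mass_kg * G / contact_area_m2


mass = 50.0                                    # hypothetical robot mass [kg]
foot_area = math.pi * 0.02 ** 2                # circular foot, 2 cm radius (assumed)
legged = ground_pressure(mass, 2 * foot_area)  # two feet in stance (trot gait, assumed)
wheel_patch = 0.05 * 0.10                      # 5 cm x 10 cm patch per wheel (assumed)
wheeled = ground_pressure(mass, 4 * wheel_patch)

print(f"legged:  {legged / 1e3:.1f} kPa")
print(f"wheeled: {wheeled / 1e3:.1f} kPa")
print(f"ratio:   {legged / wheeled:.1f}x")
```

Because pressure scales with the inverse of the contact area, doubling the foot radius from 2 cm to 4 cm would reduce the legged pressure by a factor of four, which is the rationale behind customized foot designs.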

Also, embedded sensors can have a significant impact on the final product’s cost. One idea is to estimate variables through smart design instead of measuring them, as suggested by recent soft gripping and tactile sensing publications (discussed in Subsection 2.3.2). If the budget allows it, investing in sensors with a high Ingress Protection (IP) rating, meaning they are better sealed against dust and water and can therefore withstand harsh and humid outdoor conditions, benefits the lifetime of the robotic system at a small price increment. Also, computer vision and machine learning algorithms can further improve the efficiency of automated tasks such as disease identification, weed detection, selective application of pesticides, crop localization, ripeness classification and yield estimation. However, these algorithms need improvements in terms of robustness, to make them independent of weather, temperature, humidity and lighting changes. AI algorithms such as MLP (Multi-Layer Perceptron), CNN (Convolutional Neural Network), R-CNN (Region-based CNN) and SVM (Support Vector Machines) proved to be adaptable to rapid variations in natural lighting, changing seasons and crop growth [48].


Figure 2.19: On the left ANYmal proposed by ANYbotics; on the right Unitree Go1 proposed by Unitree Robotics.

2.3 Open issues in soft robotics

2.3.1 Soft grippers’ limitations and required improvements

As seen, soft grippers have many advantages but still need some improvements. A common complaint is that soft robots and grippers require a time-consuming multi-step fabrication process that involves mold making, casting, curing and support removal [7]. As can be noticed from the previously mentioned state-of-the-art solutions, the manufacturing of most soft grippers is very “handmade” and still far from being optimized for production. Therefore, repeatability can be hard to achieve, even though processes based on 3D printing and lost-wax manufacturing can be valid options for standardizing production.

Also, soft end-effectors are not easy to model, often requiring technical expertise to account for the continuous deformations of soft materials. Although their design, placement and testing are not trivial, many research works propose easier-to-develop end-effectors exploiting 3D printing technology [7, 47, 63]. As an example, at Carnegie Mellon University, Pennsylvania, researchers proposed a fully printable, low-cost dexterous soft manipulator designed through a framework they developed [7]. Exploiting quasi-rigid approximations, they were able to use the classical rigid-link Unified Robot Description Format (URDF), which is generally not capable of describing the continuous deformations of soft materials. This way, the end-effector’s behaviour can be quickly evaluated in simulation. Each gripping method has advantages but also limitations: an apple can be firmly grasped, while a strawberry needs a gentler approach. Some grippers are easier to maintain and clean, others can only grip smooth and dry surfaces, while others have limited adaptive grasping. Features like modularity, ease of repair and the ability to handle food and multiple crops are desired for agricultural applications.
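The quasi-rigid idea can be illustrated with a short Python sketch: a soft finger bent with constant curvature κ is approximated by a chain of N rigid links, which is the kind of rigid-link chain a URDF can describe. The constant-curvature assumption, the half-angle discretization scheme and the finger dimensions below are illustrative choices, not necessarily the framework used in [7]. Comparing the chain's tip with the exact arc shows how the approximation error shrinks as N grows.

```python
import math


def exact_tip(length: float, curvature: float) -> tuple[float, float]:
    """Tip of a planar constant-curvature arc that starts at the origin along +x."""
    if abs(curvature) < 1e-12:
        return length, 0.0
    theta = curvature * length
    return math.sin(theta) / curvature, (1.0 - math.cos(theta)) / curvature


def quasi_rigid_tip(length: float, curvature: float, n_links: int) -> tuple[float, float]:
    """Tip of a chain of n_links rigid links (length L/N) approximating the arc.

    The first joint rotates by half the per-link bending angle and every following
    joint by the full angle, so each link follows the arc tangent at its midpoint.
    """
    seg = length / n_links
    dtheta = curvature * seg
    x = y = 0.0
    heading = dtheta / 2.0
    for _ in range(n_links):
        x += seg * math.cos(heading)
        y += seg * math.sin(heading)
        heading += dtheta
    return x, y


# Hypothetical 8 cm soft finger bent by 90 degrees (kappa * L = pi / 2).
L = 0.08
kappa = math.pi / (2 * L)
ex, ey = exact_tip(L, kappa)
for n in (2, 4, 8, 16):
    qx, qy = quasi_rigid_tip(L, kappa, n)
    print(f"N = {n:2d} links: tip error = {1e3 * math.hypot(qx - ex, qy - ey):.3f} mm")
```

A design choice worth noting: replacing the link length L/N with the chord length 2 sin(κL/2N)/κ would place the chain's tip exactly on the arc, at the cost of a slightly shorter total link length.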

Another problem that is typically not addressed by research is the energy source system of soft grippers, which should be tailored to the unstructured agricultural environment. In fact, the proposed electrical, pneumatic or chemical energy sources are typically only suitable for a laboratory or a very structured industrial context.

In general, challenges for soft grippers include miniaturization, robustness, speed, integration of sensing, and control. Improved materials (elastomers, phase change materials) and processing methods will play a large role in future improvements [63]. Finally, no matter how advanced and reliable the technology becomes, the transition of soft grippers from the research stage to the industrial context requires them to be economically competitive with older methodologies and with semi-automatic or manual approaches. This is not a trivial point to address, because without political measures to incentivize technological innovation, it is hard to justify large investments for most small and medium-sized enterprises.

2.3.2 Case study-related state-of-the-art

This Subsection is dedicated to a roundup of the main papers that were taken as inspiration for this Master’s Thesis. In the current state-of-the-art there is a lack of information about spherical and hemispherical soft tactile sensors and grippers, so the few available research works on this topic are considered worth mentioning. The following case study-related works will be useful to better understand the next chapter.

One of the papers that inspired this work is “Rapid manufacturing of color-based hemispherical soft tactile fingertips”, published in 2022 [58]. In this paper the authors present a 3D printed tactile sensor called ChromaTouch that exploits the hue, centroid and apparent size of the markers to estimate normal and lateral forces. The device, shown in Figure 2.20, is made with a Stratasys J735 multi-material additive manufacturing system that allows the precise alignment of up to 400 markers on a 21 mm radius hemisphere. The sensing principle is based on the relative displacement between differently colored markers that lie on two separate layers. In particular, subtractive color mixing encodes the normal deformation of the membrane, and the lateral deformation is found by centroid detection. This approach stands out because most existing marker-based solutions fail to directly encode the distance between the markers and the monocular camera, forcing the normal deformation to be estimated from the lateral displacement of the markers. In this case, the ChromaTouch sensor encodes normal deformation in the hue value of the markers. Also, using subtractive color mixing (the color of the translucent markers on the inner layer is mixed with the color of the opaque markers behind them) allows a higher sensing resolution than older proposals such as the GelForce sensor. However, the main objective of this work is to perform accurate curvature estimation when the sensor is pressing against a positively or negatively curved object, while contact forces and torques are not estimated.

Figure 2.20: On the left the 3D printed ChromaTouch tactile sensor and the transduction of forces into marker appearance changes; on the right a render of the fingertip assembly.

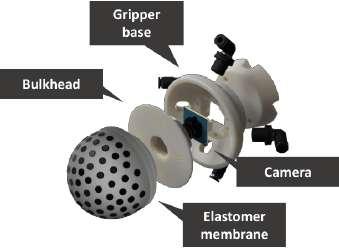

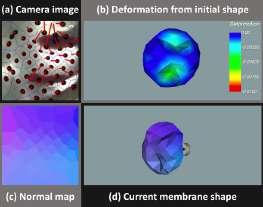

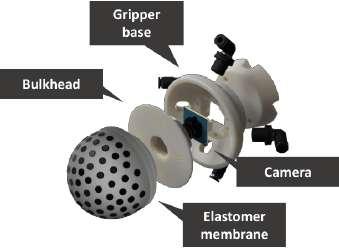

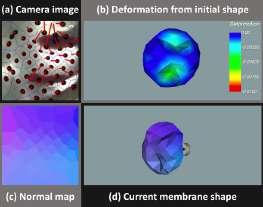
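The two cues used by ChromaTouch (hue for normal deformation, centroid for lateral deformation) can be sketched in a few lines of Python. The example below assumes a marker that has already been segmented into a list of (x, y, (r, g, b)) pixels; segmentation and any hue-to-depth calibration are left out, the helper names are hypothetical, and this is not the authors’ implementation.

```python
import colorsys

Pixel = tuple[int, int, tuple[int, int, int]]  # (x, y, (r, g, b)) with 8-bit channels


def marker_centroid(pixels: list[Pixel]) -> tuple[float, float]:
    """Mean image position of the marker pixels (cue for lateral displacement)."""
    n = len(pixels)
    return sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n


def marker_hue(pixels: list[Pixel]) -> float:
    """Hue in degrees of the average marker color (cue for normal deformation)."""
    n = len(pixels)
    r = sum(p[2][0] for p in pixels) / (255.0 * n)
    g = sum(p[2][1] for p in pixels) / (255.0 * n)
    b = sum(p[2][2] for p in pixels) / (255.0 * n)
    h, _, _ = colorsys.rgb_to_hsv(r, g, b)
    return 360.0 * h


# Hypothetical 2x2-pixel marker at rest, then shifted 2 px and color-mixed by pressure.
rest = [(10, 10, (200, 40, 40)), (11, 10, (200, 40, 40)),
        (10, 11, (200, 40, 40)), (11, 11, (200, 40, 40))]
pressed = [(x + 2, y, (180, 90, 40)) for x, y, _ in rest]
(cx0, cy0), (cx1, cy1) = marker_centroid(rest), marker_centroid(pressed)
print("lateral shift [px]:", cx1 - cx0, cy1 - cy0)
print("hue shift [deg]:", round(marker_hue(pressed) - marker_hue(rest), 1))
```

Hue is a circular quantity, so a real pipeline would also need to handle the wrap-around at 0/360 degrees, which matters for reddish markers such as the one in this example.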

Another research work, developed at the University of Madrid and entitled “A universal gripper using optical sensing to acquire tactile information and membrane deformation” [57], proposed a granular-jamming-based gripper with a semi-transparent filling that allows detection of the membrane’s deformation and of the object being grasped. The prototype, shown in Figure 2.21, is able to grasp cylindrical and rectangular objects (10 to 70 mm in length) while tracking the gripper’s deformations, so that object classification can be performed on the reconstructed point cloud. Also, grasping success is detected by estimating shear forces. The fabrication process consists of 3D printing the molds, which are later filled with silicone or epoxy resin to create the soft gripper’s membrane and bulkhead, respectively. A thickness of 1 mm and a Shore hardness of A-20 were chosen, while the embedded circular markers have a diameter of 6 mm and a thickness of 1.5 mm. Even though the sizing and manufacturing of this prototype differ from ours, a very similar marker tracking approach was used, and the suggested 3D position estimation algorithm was implemented but did not achieve acceptable results.

Figure 2.21: On the left the rendered structure of the Universal Gripper; on the right the manufactured prototype.
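A common building block of marker-tracking approaches like the one described above is the frame-to-frame association of marker centroids. The Python sketch below uses a greedy nearest-neighbour match with a maximum-distance gate; the function name, the gate value and the greedy strategy are assumptions for illustration, not the method of [57].

```python
import math

Point = tuple[float, float]


def match_markers(reference: list[Point], current: list[Point],
                  max_dist: float = 5.0) -> dict[int, tuple[int, Point]]:
    """Greedily match reference markers to current detections by increasing distance.

    Returns {reference_index: (current_index, displacement_vector)} for pairs
    closer than max_dist (pixels); unmatched markers are omitted.
    """
    candidates = sorted(
        (math.dist(r, c), i, j)
        for i, r in enumerate(reference)
        for j, c in enumerate(current)
    )
    matches: dict[int, tuple[int, Point]] = {}
    used: set[int] = set()
    for d, i, j in candidates:
        if d > max_dist:
            break  # candidates are sorted, so all remaining pairs are farther
        if i in matches or j in used:
            continue
        rx, ry = reference[i]
        cx, cy = current[j]
        matches[i] = (j, (cx - rx, cy - ry))
        used.add(j)
    return matches


# Hypothetical marker centroids at rest and in a deformed frame (detection order shuffled).
ref = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
cur = [(21.0, 1.0), (0.5, 0.0), (10.0, 2.0)]
for i, (j, (dx, dy)) in sorted(match_markers(ref, cur).items()):
    print(f"marker {i} -> detection {j}: displacement ({dx:+.1f}, {dy:+.1f}) px")
```

The resulting displacement vectors are the raw input from which deformation, shear or slip estimates can then be derived.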



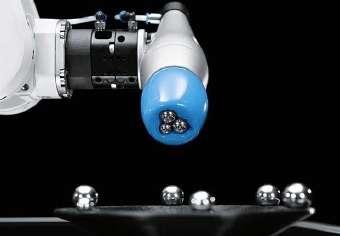

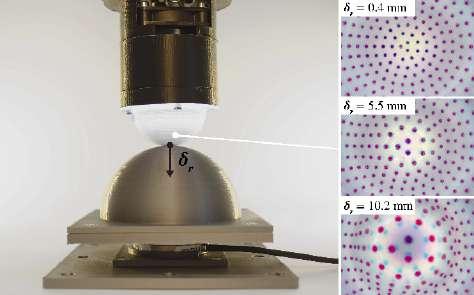

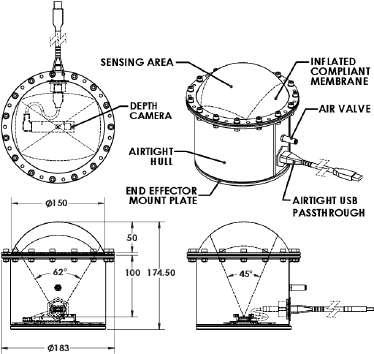

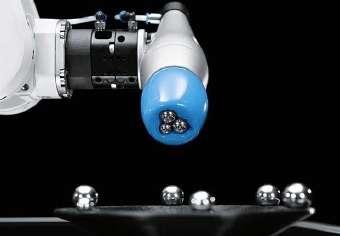

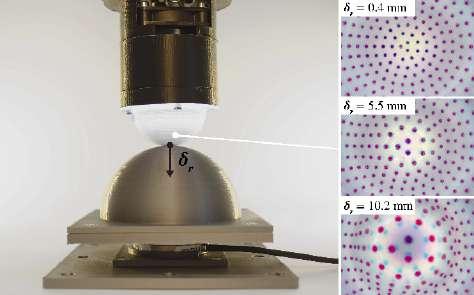

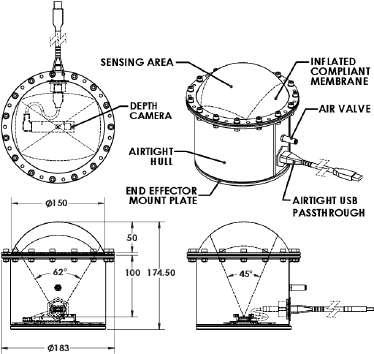

The paper “Soft-bubble: A highly compliant dense geometry tactile sensor for robot manipulation” [4] proposes a dense geometry sensor and end-effector for tactile object classification, pose estimation and tracking (shown in Figure 2.22). It measures the deformation of a thin, flexible, air-filled membrane using a depth camera. The sensed features are then exploited to perform object shape and texture classification (using a deep neural network), object sorting, object pose estimation and tracking. As shown in Figure 2.22, the dimensions of the proposed device allow the use of a Time-of-Flight depth camera (PMD pico flexx), and the design follows its minimum sensing distance of 100 mm. As mentioned by the authors, this choice avoids 3D shape reconstruction algorithms that are non-trivial to make robust (e.g. structured lighting, photometric stereo algorithms). To our knowledge, a similar approach could not be adopted in this work while keeping a compact design, due to the limitations (size and sensing range) of the depth sensors currently available on the market.
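In its simplest form, measuring membrane deformation with a depth camera amounts to subtracting the current depth map from a reference map of the undeformed membrane and back-projecting the affected pixels with the pinhole model. The Python sketch below illustrates this; the intrinsics (fx, fy, cx, cy), the contact threshold, the helper names and the tiny depth maps are hypothetical values, and a real sensor would require proper intrinsic calibration and noise filtering.

```python
Intrinsics = tuple[float, float, float, float]  # (fx, fy, cx, cy) in pixels


def back_project(u: int, v: int, depth_m: float, k: Intrinsics) -> tuple[float, float, float]:
    """Pinhole back-projection of pixel (u, v) with depth Z to a 3D point in the camera frame."""
    fx, fy, cx, cy = k
    return (u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m


def deformation_map(reference: list[list[float]], current: list[list[float]]) -> list[list[float]]:
    """Per-pixel membrane displacement toward the camera (positive = pushed inward)."""
    return [[r - c for r, c in zip(ref_row, cur_row)]
            for ref_row, cur_row in zip(reference, current)]


def contact_pixels(deformation: list[list[float]], threshold_m: float = 0.002) -> list[tuple[int, int]]:
    """Pixels (u, v) whose displacement exceeds the (assumed) contact threshold."""
    return [(u, v) for v, row in enumerate(deformation)
            for u, d in enumerate(row) if d > threshold_m]


# Hypothetical 4x4 depth maps of a membrane about 11 cm from the camera.
K = (200.0, 200.0, 1.5, 1.5)
ref = [[0.110] * 4 for _ in range(4)]
cur = [row[:] for row in ref]
cur[1][2] = 0.104  # an object pushes one pixel 6 mm toward the camera
defo = deformation_map(ref, cur)
for u, v in contact_pixels(defo):
    print((u, v), back_project(u, v, cur[v][u], K))
```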

Figure 2.22: On the left, the dimensioned (mm units) sensor assembly; on the right, the soft-bubble mounted on a KUKA iiwa robot.

Similarly to the paper just discussed, in “Soft-bubble grippers for robust and perceptive manipulation” [40] a soft-bubble gripper system is presented (shown in Figure 2.23). The main contributions of this work are design improvements leading to a smaller parallel-gripper form factor, the introduction of high-density markers on the internal bubble surface to estimate shear forces, a proximity pose estimation framework, and integrated tactile classification. As in the previous work, a ToF camera is used to sense depth, but in this case a prototype camera from PMD Technologies with a working range of 4–11 cm was employed (and placed at an angle to reduce the overall gripper width). Moreover, the markers were added not to infer depth but to estimate slippage and grasp quality from shear-induced displacements.
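As a rough illustration of how shear-induced marker displacements can be turned into a slip cue, the sketch below flags slip when the mean displacement of matched markers between two consecutive frames exceeds a threshold. This is a simple heuristic written for this discussion, not the method of [40]; the function names and the 1-pixel threshold are hypothetical, and marker correspondences are assumed to be already known.

```python
import math

Point = tuple[float, float]


def mean_displacement(ref: list[Point], cur: list[Point]) -> Point:
    """Mean 2D displacement (pixels) of matched markers from ref to cur."""
    if not ref or len(ref) != len(cur):
        raise ValueError("need two non-empty, equally long marker lists")
    n = len(ref)
    dx = sum(c[0] - r[0] for r, c in zip(ref, cur)) / n
    dy = sum(c[1] - r[1] for r, c in zip(ref, cur)) / n
    return dx, dy


def slip_detected(prev: list[Point], cur: list[Point], threshold_px: float = 1.0) -> bool:
    """Flag slip when the markers keep moving between consecutive frames."""
    dx, dy = mean_displacement(prev, cur)
    return math.hypot(dx, dy) > threshold_px
```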

Figure 2.23: On the left, the soft-bubble parallel gripper that estimates in-hand pose and tracks shear-induced displacements; on the right, the dimensioned scheme where the ToF depth sensor is depicted in blue.

In “GelSight Fin Ray: Incorporating Tactile Sensing into a Soft Compliant Robotic Gripper” [43], a soft gripper with two sensorized fingers for retrieving tactile information is proposed. Unlike many soft and rigid grippers, the Fin Ray design has the advantage of not requiring actuation to securely grasp objects. As mentioned by other studies, external ambient lighting can interfere with a vision-based system: in this case, a dark cloth was applied on the sensing device to block outside lighting. As shown in Figure 2.24, the proposed Fin Ray finger is equipped with markers for slip and twist detection; it can measure the orientation of the in-contact object and, through RGB illumination and pre-collected reference images, it can perform 3D reconstruction.
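Twist detection from markers amounts to estimating the in-plane rotation between a reference and the current marker layout. One standard way, shown here as an assumption rather than the procedure of [43], is the closed-form 2D least-squares (Kabsch/Procrustes) rotation; `estimate_twist` is a hypothetical name and matched marker pairs are assumed.

```python
import math

Point = tuple[float, float]


def estimate_twist(ref: list[Point], cur: list[Point]) -> float:
    """Least-squares in-plane rotation (radians) that maps ref onto cur.

    Both point sets are centred on their centroids; the optimal 2D rotation
    angle is atan2(sum of cross products, sum of dot products).
    """
    if len(ref) != len(cur) or len(ref) < 2:
        raise ValueError("need at least two matched marker pairs")
    n = len(ref)
    rcx = sum(p[0] for p in ref) / n
    rcy = sum(p[1] for p in ref) / n
    ccx = sum(p[0] for p in cur) / n
    ccy = sum(p[1] for p in cur) / n
    cross = dot = 0.0
    for (ax, ay), (bx, by) in zip(ref, cur):
        ax, ay = ax - rcx, ay - rcy
        bx, by = bx - ccx, by - ccy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    return math.atan2(cross, dot)
```

Centring both sets first makes the estimate insensitive to a pure translation (shear), so twist and slip can be monitored separately.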

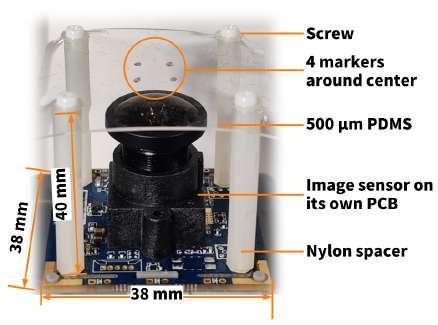

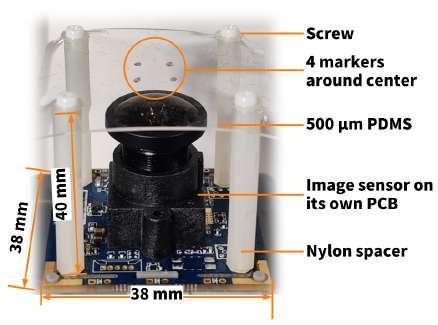

Regarding the force estimation problem, in “HiVTac: A High-Speed Vision-Based Tactile Sensor for Precise and Real-Time Force Reconstruction with Fewer Markers” [53] a prototype for force reconstruction is proposed. The developed algorithm allows real-time estimation of the direction and intensity of the external force. The HiVTac tactile sensor, shown in Figure 2.25, is made of a square sheet of PDMS (polydimethylsiloxane) measuring 40 mm × 40 mm. It has 4 markers that are tracked through a wide-angle camera. The main problem when re-adapting these results to our case study is the strong geometric assumptions enabled by its planar design, which do not hold for a hemispherical dome.
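A common baseline for mapping a handful of tracked markers to a force vector is a linear model calibrated against a reference force sensor. The sketch below fits such a model by least squares; it is an assumption for illustration, not the HiVTac reconstruction algorithm of [53]. With 4 markers, each sample is the 8-dimensional vector of their x/y displacements; the reference forces are assumed to come from an external load cell, and the function names are hypothetical.

```python
import numpy as np


def fit_linear_force_model(displacements, forces):
    """Fit forces ≈ displacements @ W + b by ordinary least squares.

    displacements: (N, 2M) array, x/y displacements of M markers per sample.
    forces: (N, 3) reference forces measured by an external sensor.
    Returns W with shape (2M, 3) and bias b with shape (3,).
    """
    D = np.asarray(displacements, dtype=float)
    F = np.asarray(forces, dtype=float)
    A = np.hstack([D, np.ones((D.shape[0], 1))])  # extra column for the bias
    coeffs, *_ = np.linalg.lstsq(A, F, rcond=None)
    return coeffs[:-1], coeffs[-1]


def predict_force(W, b, displacement):
    """Force estimate for one flattened displacement vector."""
    return np.asarray(displacement, dtype=float) @ W + b
```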

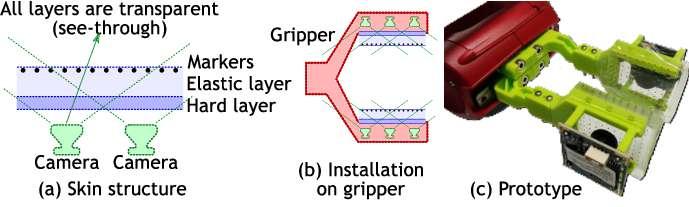

Figure 2.24: The GelSight Fin Ray gripper.

In “FingerVision for Tactile Behaviors, Manipulation, and Haptic Feedback Teleoperation” [78] and “Implementing Tactile Behaviors Using FingerVision” [77] by Yamaguchi, the same force estimation approach is used. In these papers too, the vision-based tactile sensor (shown in Figure 2.26) is almost planar, making it different from our design. Nonetheless, the same marker tracking and force estimation approach has been implemented and tested to see whether a simple linearization would be suitable, at least for small deformations. In particular, tangential forces are estimated from the horizontal displacements of the markers, while normal forces are estimated through the norm of the markers' position change, because the marker width reported by the detection algorithm is unstable. The noisy radius reading given by the blob detection algorithm is also confirmed by our work; the accuracy of this method when applied to a hemispherical surface will be discussed later.
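The linearized scheme described above can be sketched as follows. The gains `k_t` and `k_n` are hypothetical calibration constants, correspondences between reference and current markers are assumed, and the code follows the description given in this section rather than Yamaguchi's implementation.

```python
import math

Point = tuple[float, float]


def linearized_force(ref: list[Point], cur: list[Point],
                     k_t: float = 1.0, k_n: float = 1.0) -> tuple[float, float, float]:
    """Return (Fx, Fy, Fn) from marker displacements.

    Fx, Fy: mean horizontal displacement scaled by k_t (tangential force).
    Fn: mean displacement magnitude scaled by k_n (normal force proxy),
    used instead of the unstable blob radius.
    """
    if not ref or len(ref) != len(cur):
        raise ValueError("need two non-empty, equally long marker lists")
    n = len(ref)
    dxs = [c[0] - r[0] for r, c in zip(ref, cur)]
    dys = [c[1] - r[1] for r, c in zip(ref, cur)]
    fx = k_t * sum(dxs) / n
    fy = k_t * sum(dys) / n
    fn = k_n * sum(math.hypot(dx, dy) for dx, dy in zip(dxs, dys)) / n
    return fx, fy, fn
```

A symmetric radial spread of the markers, typical of a normal press on a planar skin, gives zero tangential force but a positive normal estimate. Conversely, a pure shear also increases the normal estimate, since every displacement contributes to the norm.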


Figure 2.25: The HiVTac tactile sensor prototype.

Figure 2.26: Design of the FingerVision and its prototype installed on the Baxter gripper.

In “Visiflex: A Low-Cost Compliant Tactile Fingertip for Force, Torque, and Contact Sensing” [18], a cheap compliant tactile fingertip is proposed. The sensor, shown in Figure 2.27, is capable of contact localization and force/torque estimation. According to the paper, tests indicate that typical errors in contact location detection are less than 1 mm and typical errors in force sensing are less than 0.3 N. At first glance, the design of the Visiflex is very similar to ours, even though different approaches for force and application point estimation are used. In this work, the American researchers used a dome-shaped acrylic waveguide covered by a silicone cap. Eight LEDs act as fiducial markers, and the light injected into the waveguide is totally internally reflected except where the cap contacts the waveguide. This behaviour is exploited to easily sense either single or multiple contacts with the external environment, as shown in Figure 2.28. Another difference with respect to our design is the 6-degree-of-freedom (DoF) fingertip, which is accomplished using a 6-DoF flexure system. Wrench sensing is performed starting from the experimental data with several techniques, such as stiffness matrix estimation, linear approximation and nonlinear approximation (based on neural networks).
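Because light escapes the waveguide only where the cap touches it, single or multiple contacts appear as separate bright regions in the image. A minimal way to localize them, assumed here for illustration rather than taken from [18], is to threshold the image and compute the centroid of each 4-connected bright region; the function name and threshold are hypothetical, and the fiducial LEDs are assumed to have been masked out beforehand (e.g. by color).

```python
Image = list[list[float]]


def contact_centroids(image: Image, threshold: float) -> list[tuple[float, float]]:
    """Centroids (row, col) of 4-connected regions with intensity above threshold."""
    h = len(image)
    w = len(image[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for r in range(h):
        for c in range(w):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Flood-fill one bright region with an explicit stack.
            stack = [(r, c)]
            seen[r][c] = True
            pixels = []
            while stack:
                y, x = stack.pop()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            n = len(pixels)
            centroids.append((sum(p[0] for p in pixels) / n,
                              sum(p[1] for p in pixels) / n))
    return centroids
```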


Figure 2.27: A rendering of the Visiflex [18] tactile sensor and its exploded view.

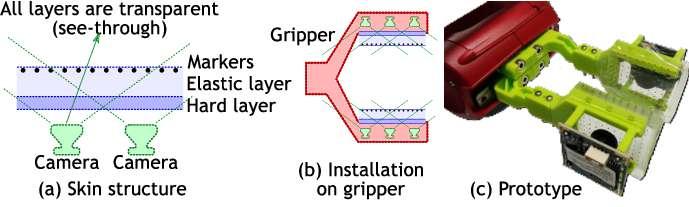

“The TacTip Family: Soft Optical Tactile Sensors with 3D-Printed Biomimetic Morphologies” [75] is a slightly older paper (published in 2018) that compares several proposed devices sharing the same biomimetic design principle. In particular, they all exploit the deformation of the fingertip, sensing the displacement of pins or markers with a camera. Several patterns are considered and compared, based on their in-hand manipulation and object exploration capabilities. Figure 2.29 shows three iterations of the design.

Figure 2.28: The Visiflex sensor contacted at multiple points; red LEDs are the fiducial markers, while green LEDs are the contact points, which can be seen by the camera thanks to the waveguide.

Figure 2.29: As reported in [75]: Open-TacTip (left): the original version of the sensor comprises a 3D-printed camera mount and base and a cast silicone skin. Improved TacTip (center): the redesigned base houses a webcam, and modular tips with 3D-printed rubber skin. Modular tips (right): separate modular tips with a nodular fingerprint (above) and flat tip (below).

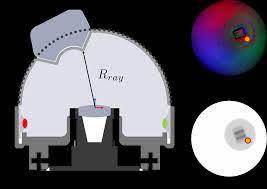

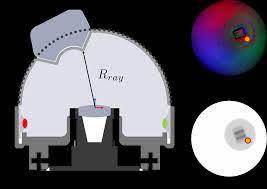

In “DenseTact: Optical Tactile Sensor for Dense Shape Reconstruction” [16], a compact tactile sensor with high-resolution surface deformation modeling for 3D reconstruction of the sensor surface is presented. This work does not address force estimation, and the design does not include fiducial markers. As shown in Figure 2.30, using three-colored lighting inside the dome and an RGB camera, the surface deformation is estimated and the contacting object's shape is reconstructed. However, these estimates are highly dependent on the 3D shape calibration process (using 3D-printed objects and inferring CAD models) and on the deep neural network's accuracy.
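The ray casting step used for DenseTact (Figure 2.30) relies on intersecting each camera ray with a known calibration surface. As a simplified sketch, assuming an undeformed spherical reference surface of known centre and radius in camera coordinates (not the actual DenseTact calibration surface), the distance along the ray through pixel (u, v) can be computed in closed form; `pixel_ray` and `ray_sphere_distance` are hypothetical helpers.

```python
import math
from typing import Optional

Vec = tuple[float, float, float]


def pixel_ray(u: float, v: float, fx: float, fy: float, cx: float, cy: float) -> Vec:
    """Unit direction of the pinhole ray through pixel (u, v)."""
    x, y = (u - cx) / fx, (v - cy) / fy
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


def ray_sphere_distance(d: Vec, center: Vec, radius: float) -> Optional[float]:
    """Smallest positive t with |t*d - center| = radius (d must be unit length).

    Solves t^2 - 2 t (d . c) + |c|^2 - r^2 = 0; returns None if the ray misses.
    """
    b = sum(di * ci for di, ci in zip(d, center))
    c = sum(ci * ci for ci in center) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (b - root, b + root):
        if t > 0:
            return t
    return None
```

When the camera lies inside the sphere only the larger root is positive, which corresponds to the inner surface of the dome seen by the camera.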

Figure 2.30: On the left, the DenseTact sensor mounted on the Allegro hand and its 3D reconstruction results; on the right, a visualization of the ray casting algorithm used to determine the radial depth from the 3D calibration surface, which is then projected into the image plane.

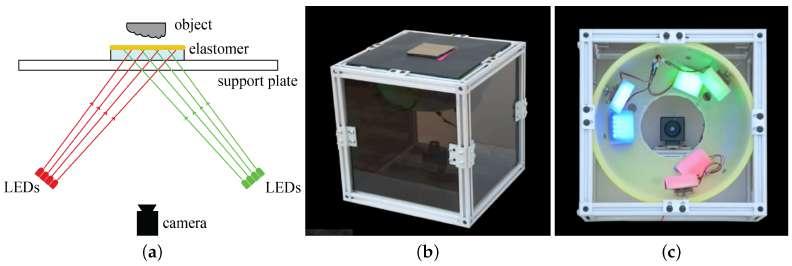

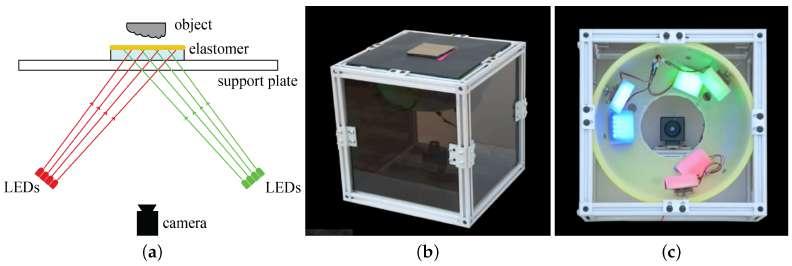

Similarly to the previously cited paper, in “GelSight: High-Resolution Robot Tactile Sensors for Estimating Geometry and Force” [79] 3D shape reconstruction is performed using a structured light setup and a photometric stereo algorithm. In particular, three differently colored LEDs are arranged along different directions; by combining the shading from three or more directions, the surface normal at each pixel of the shaded image is estimated (as shown in Figure 2.31). Afterwards, the surface normals are integrated to obtain the 3D shape of the surface. Force estimation is also carried out through marker tracking. The proposed sensor has a planar geometry, so the mentioned approaches were not easily applicable to our case study.
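The per-pixel normal estimation step can be illustrated with classic Lambertian photometric stereo: with k ≥ 3 known light directions stacked in L, the intensities at a pixel satisfy I = L (ρ n), so ρ n is recovered by least squares and then normalized. This is a textbook sketch under a Lambertian, shadow-free assumption; GelSight-type sensors often rely on an empirically calibrated intensity-to-gradient mapping instead, and the function name is hypothetical.

```python
import numpy as np


def photometric_stereo(images: np.ndarray, light_dirs: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Estimate unit surface normals and albedo per pixel.

    images: (k, H, W) intensities, one image per light (k >= 3).
    light_dirs: (k, 3) unit light directions.
    Returns normals (H, W, 3) and albedo (H, W).
    """
    I = np.asarray(images, dtype=float)
    L = np.asarray(light_dirs, dtype=float)
    k, h, w = I.shape
    # Solve L @ g = I for every pixel at once; g = albedo * normal, shape (3, H*W).
    g, *_ = np.linalg.lstsq(L, I.reshape(k, -1), rcond=None)
    albedo = np.linalg.norm(g, axis=0)
    normals = g / np.maximum(albedo, 1e-12)
    return normals.T.reshape(h, w, 3), albedo.reshape(h, w)
```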

Figure 2.31: As reported in [79]: (a) basic principle of the GelSight design, which consists of a sensing elastomer piece with an opaque reflective membrane on top, a supporting plate, LEDs and a camera to capture the shaded images under different lightings; (b) picture of the sensor; (c) arrangement of the LEDs and camera when viewed from the top.

Chapter 3 The design of the prototype

In this chapter, the specific case study is presented, analyzing step by step the methodologies used to manufacture the prototype. The experimental setups and the main Computer Vision algorithms are also described in detail.

3.1 Specifications and goals

As discussed in Chapter 2, there are several reasons why soft robotics is drawing the attention of companies and researchers. Several recently published papers focus on tactile sensing, which can provide information about the contact, such as friction, slippage, surface features (curvature, texture), and applied reaction forces and torques. Moreover, it can be a valid option for describing an object's shape, orientation and rigidity in situations where vision is out of reach. As mentioned in [58], most of the research on this topic has focused on soft but flat tactile sensors. On the other hand, extending these technologies to freeform or hemispherical surfaces is not trivial. To sense the mechanical deformation, the common strategy is to apply a pattern of markers inside the fingertip and track its motion using an RGB or RGB-D camera. This way, there is no need for direct wiring to the soft material, guaranteeing higher compliance than embedding capacitive or resistive materials.

Depending on the chosen pattern and marker density, the sensing resolution can be fairly high. As discussed in Chapter 2, since the fingertip design has to be compact, there are no depth cameras (RGB-D or ToF) on the market that can precisely sense depth at such a short range, as they require a minimum distance of around 10 cm. So, if a monocular camera is used, other techniques have to be exploited to perform depth estimation. In [58], two layers of differently colored markers are exploited; in [57], the authors rely on camera calibration and the sensed markers' dimensions; in [43], [16] and [79], deep neural network approaches are used to calibrate the sensor and perform 3D reconstruction.

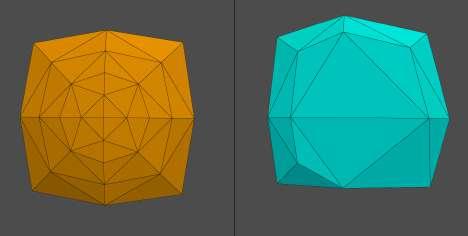

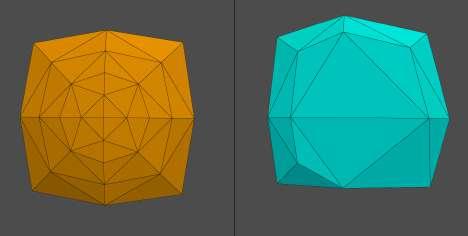

In our work, we focused on the development of a cheap, 3D printed, marker-based hemispherical soft gripper capable of sensing forces when in contact with the external environment. Moreover, the 3D shape estimation approach proposed in [57] has been explored.

The final goals of our work are to:

• design and manufacture a soft tactile sensor with a suitable pattern of fiducial markers to be tracked;

• calibrate the sensing device in order to perform online estimation of both shear and normal forces;

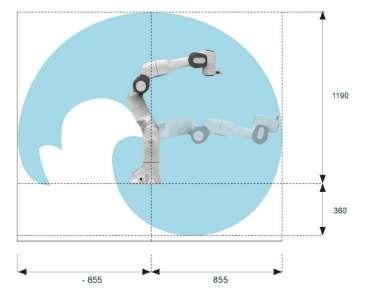

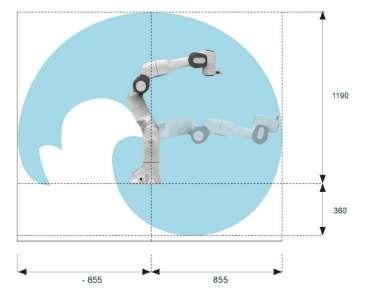

• design a structure that can hold the sensing device in place and mount it on the end-effector of the “Franka Emika Panda” [23] robot (shown in Figure 3.1);

• attempt a simple harvesting task exploiting the developed device within a force control loop on the gripper, together with an external depth camera mounted on the robotic arm.

3.2 The manufacturing process

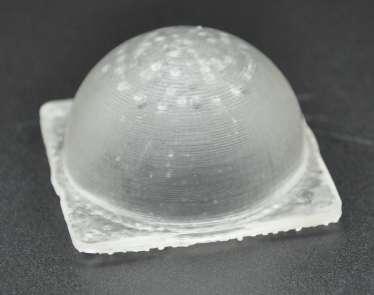

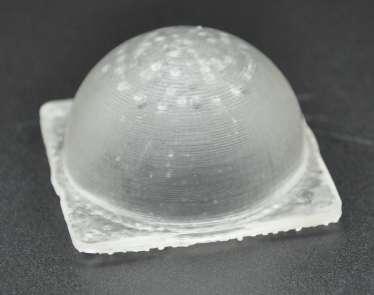

3.2.1 Prototyping and manufacturing the hemispherical dome

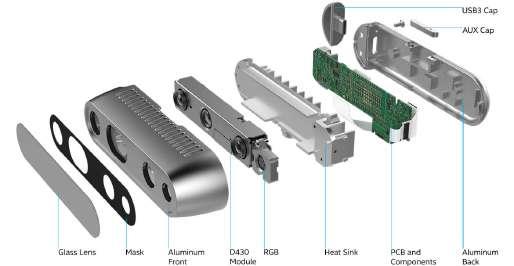

First of all, we focused on manufacturing the tactile sensor and selecting the pattern. Prioritizing ease of manufacturing and reproducibility, we decided to avoid multi-material additive manufacturing and molding as much as possible, focusing on 3D printing. Our hemispherical sensing device was 3D printed using the Formlabs Form 2 stereolithography (SLA) 3D printer [21], shown in Figure 3.2. It costs around $3000 and offers a selectable layer thickness (axis resolution) of 25, 50 or 100 microns. The compatible materials are also provided by Formlabs [22] and range from $150/l to $400/l, depending on mechanical properties such as stiffness, elasticity, thermal resistance and achievable resolution (up to 0.005 mm). To 3D print the hemispherical dome we used the Elastic 50A Resin, a polymer resin that supports a maximum resolution of 100 microns and has a Shore hardness of 50A. The required printing time depends on the geometry and height of the model; in our case, it was around 4 h at a 0.10 mm resolution for a single dome and 5 h at the same resolution for two domes. SLA resins are photocurable: they polymerize when exposed to light in the ultraviolet spectrum.

Figure 3.1: The Franka Emika Panda robotic arm equipped with the Franka Hand 2-finger gripper.

After the 3D printing process, the elastomer looks as in Figure 3.3, so all the unnecessary material has to be properly removed to make the surface smooth. After 3D printing, the models have to be cleaned with IPA (isopropyl alcohol), either in a dedicated washing machine or by hand, as in our case, due to the fragility of the material. In particular, the 3D printed domes need to be dipped in IPA for about 10 minutes; then they have to be gently shaken inside the washing tub and left to soak for another 10 minutes. This process removes all the resin residue from the polymerized structure. Such residue is present because the model is continuously immersed in liquid resin during printing, while the laser beam polymerizes only the layer just above the printing plane (the laser loses power beyond a 0.10 mm depth). The cleaning process is very important to prevent any residue on the surface from polymerizing during the next step.

Figure 3.2: From left to right: the Formlabs Form 2 stereolithography 3D printer; the Elastic 50A Resin; a 3D printed sample, as shown on the website [22].

Afterwards, the UV curing process takes place inside a special ultraviolet oven for about 20 minutes at 60 °C. Without going into too much detail, curing is a chemical process that toughens or hardens a polymer material through cross-linking of its polymer chains; in our case, it was induced by ultraviolet light combined with heat. The required curing time is chosen according to the target Shore hardness of the final material, which is about 50A. To better understand the meaning of this value, Figure 3.4 shows a Shore hardness scale of general-purpose items. Shore hardness is in fact a measure of a material's resistance to indentation. As shown in Figure 3.4, there are different scales, depending on the application field and material type, which can overlap each other.
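Since Shore A is an indentation scale rather than an elastic constant, soft-robotics models often need an approximate Young's modulus instead. One widely quoted empirical conversion is Gent's relation, sketched below; it is only an approximation for rubber-like materials, and the value it yields for the Elastic 50A resin should be taken as an order-of-magnitude estimate, not as a measured property. The function name is hypothetical.

```python
def shore_a_to_youngs_modulus_mpa(shore_a: float) -> float:
    """Approximate Young's modulus (MPa) from Shore A hardness via Gent's relation:

    E = 0.0981 (56 + 7.62336 S) / (0.137505 (254 - 2.54 S))
    """
    if not 0.0 < shore_a < 100.0:
        raise ValueError("Shore A hardness must be in (0, 100)")
    return 0.0981 * (56.0 + 7.62336 * shore_a) / (0.137505 * (254.0 - 2.54 * shore_a))
```

For the nominal 50A hardness this gives roughly 2.5 MPa; the relation diverges as S approaches 100, which is why the input range is restricted.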

Figure 3.4: Shore hardness scale of general-purpose items.

Figure 3.5 shows a summary of the properties and curing times of the most common materials used in soft robotics, according to [33].

Figure 3.6 shows how the polymer dome looks before and after the curing process.


Figure 3.3: From left to right: the dome just after printing; the washed dome; the inside of the washed dome.


Figure 3.5: Chart of the most common soft materials' properties and curing times, according to [33].

Figure 3.6: On the left, the hemispherical dome before curing; on the right, the hemispherical dome after curing.

Once the material is cured, it becomes semi-transparent, allowing external light to partially pass through. This behaviour, initially thought to be an added value, actually has to be dealt with in order to achieve accurate tracking. In fact, the shadows cast by the in-contact object tend to interfere with the marker detection and tracking algorithms.

To avoid this problem, a temporary light-colored cloth was placed over the dome during testing and calibration, while a thin layer of latex was used as a cover on the final sensing device. It is also worth mentioning that the longer the curing time, the greater the opacity, but also the stiffness of the material.

The prototyping process included multiple iterations of the dome design, in particular:

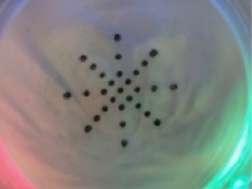

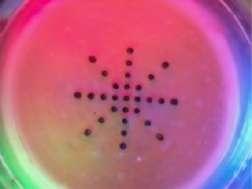

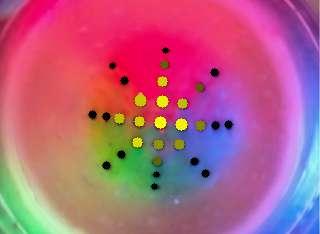

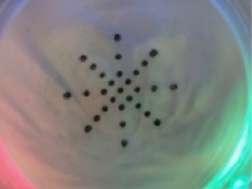

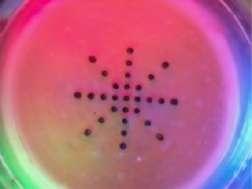

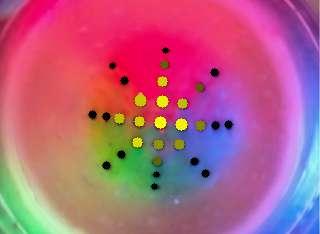

• initially, the markers' diameter was 0.9 mm (inside view of the dome shown in Figure 3.7) and they were spaced at 1.5 mm intervals. To improve marker detection and tracking, the diameter was increased to 2 mm and the spacing to 2 mm;

• four different patterns were printed and evaluated based on their sensitivity to applied forces and their robustness during marker tracking. As mentioned in related state-of-the-art papers, a trade-off between sensing resolution and signal-to-noise ratio must be found. In our case, we decided to adopt the “double cross” design shown in Figure 3.8 because of the reliability of its marker detection and tracking; moreover, the pattern was designed so that diagonal crosses can be distinguished from orthogonal crosses by their number of markers and their slightly different spacing. The chosen design has 29 markers in total, filled with black resin to maximize contrast (this operation could easily be integrated into 3D printing, if the printer allows it).
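Frame-to-frame tracking of the 29 markers can be sketched as a greedy nearest-neighbour association between the previously tracked positions and the blobs detected in the new frame, with a gating distance to reject spurious detections. This is an illustrative assumption, not necessarily the tracker used in this work; `track_markers` and the 5-pixel gate are hypothetical, and a marker with no detection inside the gate simply keeps its last position.

```python
import math

Point = tuple[float, float]


def track_markers(prev: list[Point], detections: list[Point],
                  max_jump: float = 5.0) -> list[Point]:
    """Greedy nearest-neighbour association of tracked markers to new blobs.

    Candidate pairs are processed from the closest to the farthest; each marker
    and each detection is used at most once, and pairs farther apart than
    max_jump (pixels) are rejected. Unmatched markers keep their last position.
    """
    pairs = sorted(
        (math.dist(p, d), i, j)
        for i, p in enumerate(prev)
        for j, d in enumerate(detections)
    )
    matched: dict[int, Point] = {}
    used: set[int] = set()
    for dist, i, j in pairs:
        if dist > max_jump:
            break  # pairs are sorted, so all remaining ones are too far
        if i in matched or j in used:
            continue
        matched[i] = detections[j]
        used.add(j)
    return [matched.get(i, p) for i, p in enumerate(prev)]
```

With only 29 markers, the quadratic number of candidate pairs is negligible, and the fixed marker order makes it straightforward to compute per-marker displacements against the reference frame.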


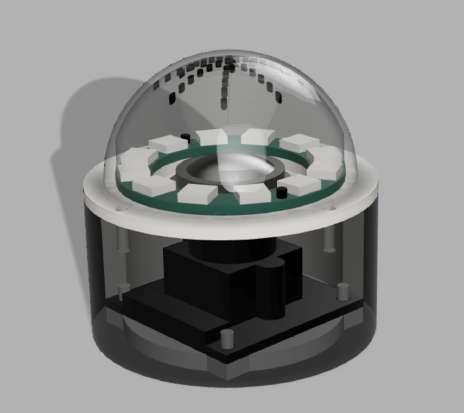

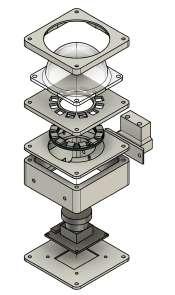

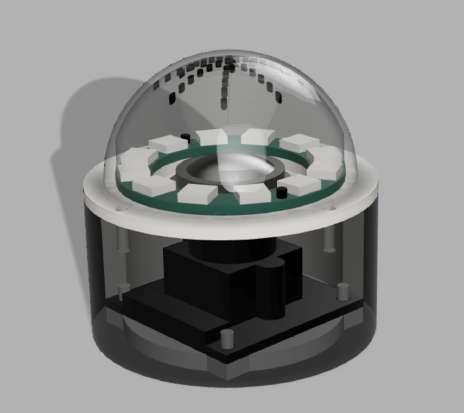

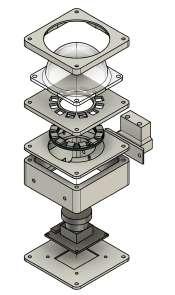

Figure 3.7: On the left, the initial “double cross” design consisting of 0.9 mm diameter markers with a spacing of 1.5 mm; on the right, the superimposed detected blobs, which are clearly noisy.

Figure 3.8: On the left, the four designed patterns; on the right, the chosen “double cross” pattern.

3.2.2 Design and manufacturing of the case

The case was 3D printed using a cheap FDM (Fused Deposition Modelling) printer and is made of PLA (polylactic acid). Initially, it was conceived as a four-part design to decouple the different layers as much as possible, as shown in Figure 3.9. From top to bottom:

• a square piece constrains the hemispherical dome at its base;

• a thinner plate with a circular indentation holds the Adafruit NeoPixel Ring (12 LEDs) [1];

• a “box-like” piece holds the 5 MP fish-eye RGB camera for Raspberry Pi [20];

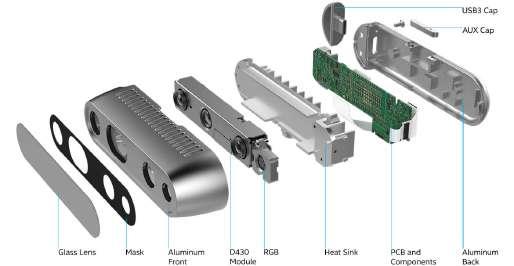

• the base completes the design and includes several holes for positioning screws and cable management.