The Last Data Center

Cable ships head for rough seas Boat shortages

DE-CIX’s CEO Ivo Ivanov peers into the future

A second look Why big tech is taking on time

Targeting cybercrime Raiding data centers

Long-term data storage enters a new epoch

Issue 46 • November 2022 • datacenterdynamics.com


Contents November 2022

6 News
Loudoun’s limits, bankruptcies, fires, saboteurs, and layoffs. Plus the climate crisis claims a data center

14 When everything else is gone
Exploring the frontiers of long-term data storage to find out what remains of the human race

24 The CEO interview
“We see a huge wave of new market participants on platforms like DE-CIX, who are not interested in peering directly,” DE-CIX’s CEO says.

28 Light matters
How photonic computing could revolutionize AI and interconnect technology

31 A second look
The dangers of a negative leap second mean that it’s time to rethink time

35 Cable ship crisis
Everyone wants submarine cables. Nobody wants to buy the ships that lay them

42 The flamingo universe
Simulating the cosmos requires a lot of RAM.

46 A necessary shutdown
Why we’re killing off 2G and 3G, and what it means for 5G

48 Raiding data centers
When law enforcement comes for servers, what happens?

51 Amped up
Ampere talks Arm data centers, and why it’s building its own cores

54 Telco consolidation
Behind Vodafone’s merger with Three UK

58 Reusing waste compute
How digital boilers could heat homes, and do compute on the side

62 Op-ed: Brace for impact
The economy is turning, and that means tough times ahead

ISSN 2058-4946


From the Editor

When everything else is gone

What will remain of the human race?

We don't know how long our species will survive, and even if we can hold on for thousands more years it is not clear that the knowledge of today will carry on with us.

On the cover, we talk to those building records of our present to bring hope to our future. From a mine at the end of the Earth, to Microsoft's cutting-edge research labs, to the edge of space, we travel in search of the next stage of data storage.


Over in a second

While that feature focuses on immense timescales, elsewhere we delve into the impact of a single second. A fight over time itself risks causing serious outages, as we face the first ever negative leap second (p31).

Connection issues

Our vulnerability to perturbations in collective time is the result of our interconnected digital world.

How that came to be, and why it's important to build an open network, are the focus of our interview with the CEO of DE-CIX (p24).

But we may not be able to keep building out as easily as we have in the past, as the economy starts to turn and money becomes less attainable (p62).

Further down the line, we will start to suffer from years of underinvestment in the cable ship industry, just as submarine cables start to boom.

There's no incentive for operators to build more, and that could mean a crisis for those that depend on them (p35).

Darkness

One sector that relies on the growth of the Internet is that of cybercrime.

But nefarious platforms, spammers, and criminal enterprises still need somewhere to call a home.

The world of cybercrime enforcement is not as glamorous as Hollywood might pretend, but it still means the occasional data center raid.

Now, however, the denizens of the dark web are embracing the cloud, causing new challenges for law enforcement (p48).

A different form of darkness can be found in Durham. We travel to the northern English city to see a supercomputer built to study dark matter at immense scales.

You can find the answer to life, the universe, and everything on page 42.

The telco challenge

Like supermassive black holes, telecoms companies must consume all around them.

Our new telco editor, Paul Lipscombe, analyzes the next big merger in the telco sector - Vodafone and Three UK - and looks at what it means for their competitors (p54).

Elsewhere, he charts the end of 2G and 3G services (p46).


Meet the team

Editor-in-Chief: Sebastian Moss @SebMoss

Executive Editor: Peter Judge @Judgecorp

News Editor: Dan Swinhoe @DanSwinhoe

Telecoms Editor: Paul Lipscombe

Reporter: Georgia Butler

Partner Content Editor: Claire Fletcher

Head of Partner Content: Graeme Burton @graemeburton

SEA Correspondent: Paul Mah @PaulMah

Brazil Correspondent: Tatiane Aquim @DCDFocuspt

Designer: Eleni Zevgaridou

Head of Sales: Erica Baeta

Conference Director, Global: Rebecca Davison

Conference Director, NAM: Kisandka Moses

Channel Manager: Alex Dickins

Channel Manager: Emma Brooks

Channel Manager: Gabriella Gillett-Perez

Chief Marketing Officer: Dan Loosemore

Head Office

DatacenterDynamics 22 York Buildings, John Adam Street, London, WC2N 6JU

© 2022 Data Centre Dynamics Limited All rights reserved. No part of this publication may be reproduced or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, or be stored in any retrieval system of any nature, without prior written permission of Data Centre Dynamics Limited. Applications for written permission should be directed to the editorial team at editorial@datacenterdynamics.com. Any views or opinions expressed do not necessarily represent the views or opinions of Data Centre Dynamics Limited or its affiliates. Disclaimer of liability: Whilst every effort has been made to ensure the quality and accuracy of the information contained in this publication at the time of going to press, Data Centre Dynamics Limited and its affiliates assume no responsibility as to the accuracy or completeness of and, to the extent permitted by law, shall not be liable for any errors or omissions or any loss, damage or expense incurred by reliance on information or any statement contained in this publication. Advertisers are solely responsible for the content of the advertising material which they submit to us and for ensuring that the material complies with applicable laws. Data Centre Dynamics Limited and its affiliates are not responsible for any error, omission or material. Inclusion of any advertisement is not intended to endorse any views expressed, nor products or services offered, nor the organisations sponsoring the advertisement.

10,000: The number of years Microsoft believes that Project Silica will be able to hold data for

Data storage is nearing its limits, unless we embrace new technologies


Sebastian Moss Editor-in-Chief


News

The biggest data center news stories of the last three months

NEWS IN BRIEF

Meta ups server room temperatures to 90 degrees F

The company also reduced humidity to 13 percent. But as many of its large facilities are in drought-stricken areas, some are concerned about local impacts even with wider restoration efforts.

Vertiv CEO Rob Johnson to retire on health grounds

Giordano Albertazzi, currently President, Americas, has been appointed immediately as chief operating officer. On January 1, 2023, Albertazzi will succeed Johnson as CEO.

Microsoft installs Ambri high-temperature ‘liquid metal’ batteries as backup

The Ambri batteries use calcium alloy and antimony electrodes, with a molten salt electrolyte. They are also used by TerraScale data centers.

Loudoun County puts limits on data center growth

Loudoun County, Northern Virginia, home of the world’s largest concentration of data centers, has adopted new rules which could limit future development there.

In late September, the Board of Supervisors of the County approved proposals from the county planners, which will limit data center projects in some neighborhoods, particularly along Route 7. The new rules will also require data centers to adopt higher-quality building designs and tougher environmental rules depending on their proximity to housing.

The new zoning rules, based on proposals set out by the planners in July, were adopted by the Board of Supervisors on September 20.

The adopted rules will exclude data centers from certain areas, in particular keeping them off Route 7, where there is no more power infrastructure to support them. Route 28 will remain open to new facilities.

The problem on Route 7 arose because data centers were approved automatically in many locations along the highway. So many were approved that the number exceeded the capacity of Loudoun’s power distribution networks. This happened because data centers were approved “by right” in certain types of neighborhood. They were fast-tracked without any hearings with local boards, without the backing of residents - or even, apparently, without reference to whether the utility, Dominion Power, could even supply them with electricity.

To fix this problem, Loudoun is reclassifying neighborhoods, according to the “place types” used by zoning rules.

Data centers will no longer be approved by right in “suburban mixed-use” locations, which make up 887 acres of vacant land in the County. They will also lose automatic approval in areas including “urban transit center,” “urban mixed-use,” “urban employment,” and “suburban neighborhood.”

They will also be barred in “urban transit center” areas, where tall buildings cluster around Metrorail stations - and that place type has been enlarged by also including “urban employment” areas.

Data centers continue to be allowed by right in areas designated “suburban industrial/mineral extraction,” “transition light industrial,” and “transition industrial/mineral extraction.”

Data centers that are permitted will face stricter environmental controls, using “high-quality building design” to make their exteriors less ugly, and limiting the noise made by air-conditioning systems used to cool the servers.

bit.ly/LoudounBreakingPoint

Stack deploys beehives at data center campus in Milan

“Honeybees are responsible for 80 percent of the world’s pollination, but their population is threatened and drastically declining,” Stack said. “To support their preservation, we’ve adopted three hives that will host nearly 200,000 bees.”

EcoDataCenter to reuse heat in fish farms and greenhouses

EcoDataCenter and Wa3rm will focus on large-scale cultivation in fish farms and greenhouses in the projects, though further details haven’t been shared. There are several data centers warming fish farms, including Green Mountain and the White Data Center.

Switch begins work on expanding pyramid campus in Grand Rapids, Michigan

Gaines Township officials approved a site plan amendment in July 2021 for a 312,000-square-foot (29,000 sqm) expansion at Switch’s existing data center, located at the former Steelcase Inc. “pyramid” building in Gaines Township. Construction appears to have begun in October this year.


Compute North files for Chapter 11 bankruptcy, with $500m owed

Cryptomining data center firm Compute North has filed for bankruptcy.

Minnesota-based Compute North Holdings Inc., which provides data center hosting services for cryptocurrency miners and blockchain companies, filed for Chapter 11 bankruptcy in Texas this September.

The company said it owed as much as $500 million to at least 200 creditors. The firm’s CEO Dave Perrill has also stepped down but will remain on the board.

Data center operators worldwide are seeing energy prices rise, while cryptomining firms are facing a double-whammy of low Bitcoin prices and Ether’s recent move away from ‘proof of work’ mining, reducing the need for mining hardware.

Bloomberg reports Compute North faced delays in energizing mining machines for its client Marathon Digital Holdings Inc. in Texas due to local regulations in the state.

Compute North’s 280MW mining facility in Texas was planned to be operational in April, but was held up by the approvals process. By the time it could launch, Bitcoin prices had plummeted, and funding opportunities had dried up.

In February, Compute North raised $385 million, consisting of an $85 million Series C equity round and $300 million in debt financing. However, one of its main backers, Generate Capital, withdrew funding at the end of the year, sparking the bankruptcy process.

Founded in 2017, Compute North operates four US data centers; two in Texas in McCamey (280MW) and Big Spring, and one each in Kearney, Nebraska (100MW), and North Sioux City, South Dakota (&MW). A third 300MW facility was in development in Granbury, Texas. The company’s assets are worth between $100 million and $500 million, according to its Chapter 11 petition.

“The Company has initiated voluntary Chapter 11 proceedings to provide the company with the opportunity to stabilize its business and implement a comprehensive restructuring process that will enable us to continue servicing our customers and partners and make the necessary investments to achieve our strategic objectives,” a spokesperson said.

Both Compass Mining and Marathon Digital are customers of Compute North, and released statements saying the filing shouldn’t affect operations.

However, Marathon has $31.3 million invested in Compute North, and has paid the company about $50 million in security deposits and prepayment.

Separately, rival cryptomining firm Core Scientific said that it was facing bankruptcy this October, warning that it would not pay its debts for the month, or in November. By the end of the year, it could run out of cash.

“In the event of a bankruptcy proceeding or insolvency, or restructuring, holders of the company’s common stock could suffer a total loss of their investment,” the company said. “Substantial doubt exists about the company’s ability to continue as a going concern.”

bit.ly/CryptosGambleFails

Dutch colo firm Datacenter Almere files for bankruptcy

Dutch data center firm Datacenter Almere has filed for bankruptcy.

The Flevoland company this month was declared bankrupt by a court in Midden-Nederland.

Led by Andrew van der Haar, the company offered colocation services and operated a single data center on 153 Randstad 22 in Almere, outside Amsterdam. Subsidiaries ICT Campus Almere and IaaS provider NL Datastore are also subject to the bankruptcy decision.

The court has appointed a trustee, Mr. KCS Meekes, associated with De Advocaten van Van Riet BV, who will take care of the settlement of the bankruptcy. DCD has reached out to van der Haar for comment.

The facility was originally built in 2001 for Sara (now teaching and research cooperative Surf.nl) before being taken over by Vancis in 2008. Interxion/Digital Realty acquired the facility in 2018 and operated the site as Almere ALM1. Datacenter Almere relaunched the facility in 2019.

The company reportedly suffered a major outage in September after a break in a high-voltage cable. A number of companies, including NorthC and Keppel, own and operate data centers in Almere.

bit.ly/DataCenterBankruptcy


Europe could face a winter of mobile network blackouts

Mobile networks across Europe could start going down this winter, as operators warn that the energy crisis may lead to regular power cuts and energy rationing.

This has led to fear within the telecoms industry, notes Reuters, which reports that industry officials are concerned that a challenging winter could put telecoms infrastructure to the test.

The potential power issues have been fueled by Russia’s invasion of Ukraine, with Russia deciding to halt gas supplies via Europe’s key supply route in the wake of this conflict.

Four telecoms executives say that there are currently not enough backup systems in many European countries to handle widespread power cuts. This potentially increases the prospect of mobile phone outages.

This has led some European countries to try and ensure communications can resume as normal even if power cuts end up exhausting backup batteries.

Europe has nearly half a million telecoms towers, with most providing battery backups that last around half an hour to run the mobile antennas.

French electricity distributor Enedis has put forward plans for a ‘worst-case scenario’ that will see power cuts lasting up to two hours, affecting different parts of the country on a rotational basis, notes Reuters. Any blackouts would exclude hospitals, police, and government facilities.

Sources claim that the French government, telecom operators, and Enedis have discussed the issue over the summer.

“Maybe we’ll improve our knowledge on the matter by this winter, but it’s not easy to isolate a mobile antenna (from the rest of the network),” said a French finance ministry official with knowledge of the discussions.

Swedish, German, and Italian telcos have also raised concerns, while Nokia and Ericsson are working with mobile network operators to mitigate the impact of potential power shortages.

UK telecoms company BT recently told the FT that it was not currently seeking more backup power for the winter, but was assessing which of its non-critical hardware could be switched off.

In the data center industry, the likes of Equinix and Digital Realty have increased their diesel reserves in preparation for potential grid and fuel supply issues.

Equinix usually fills its tanks to 60 percent capacity, but is now raising that to 90 percent across many of its sites. Digital Realty said that it had established more priority delivery agreements with its diesel suppliers. One of the UK’s largest equipment rental groups is also stockpiling extra diesel generators in anticipation of high demand going into winter.

“We’ve been doing contingency planning since the war in Ukraine broke out,” Gary Aitkenhead, Equinix’s SVP of Europe, Middle East and Africa operations, said.

“We don’t ever expect to have to run for more than a few hours, or at worst case a day, on diesel but we’re prepared to run for up to a week.”

bit.ly/EuropeanEnergyCrisis

UK government could ration diesel supplies to keep data centers up

The UK government is considering rationing data centers’ access to diesel fuel for backup if the power crisis accelerates in coming months.

Government officials have discussed allocating diesel for data center backup generators if the continuing energy crisis leads to power cuts from the National Grid, according to Bloomberg.

Data centers make up 2.5 percent of the UK’s electricity demand, and in recent years have faced increasing opposition from local residents. In West London it was reported that housing building projects were not being allocated power, because data centers had already taken the available capacity.

As well as grid power, data centers need backup, currently nearly always provided by diesel generators. There have been reports of data centers stockpiling diesel for what is expected to be a difficult winter.

“Our members have taken all necessary precautions by filling up their reserves, but we need to see government take necessary measures to ensure a continuous supply in the unlikely event of prolonged blackouts,” Matthew Evans, markets director at technology industry group techUK, told Bloomberg.

Data center operators in turn are coming forward with suggestions to progress the idea of using their backup systems to shift demand off the grid at key times, to avoid the need for blackouts.

bit.ly/DieselSupplyCrunch


Dominion to resume connecting new Loudoun data centers, but capacity still limited

Dominion Energy has found a way to connect some new data centers in Loudoun County, Northern Virginia - but there will still be some that have to face delays till 2026.

In July, the energy utility shocked data center operators in the world’s most concentrated data center hub by announcing that it could not guarantee new facilities would get power through its network of overhead lines, meaning planned connections for some facilities in the East of the County would be delayed for years.

But in late September, Dominion said that it is once again connecting new data centers. Some planned projects will be held up for as much as four years, because of constraints in its transmission infrastructure.

Dominion Energy now says it has been working with customers to spot strategies to squeeze some incremental capacity out for new projects, according to Data Center Frontier.

“After completing a comprehensive analysis of our system and accelerating several near-term projects, we’ve been able to lift the temporary pause and resume new data center service connections on an incremental basis,” Dominion’s Aaron Ruby said.

bit.ly/AtLeastThatsSomething

AWS buys 105 back-up diesel generators for new data center in Dublin

In response to concerns over impact on electricity networks in the region

Amazon has applied for an emission license to Ireland’s Environmental Protection Agency (EPA) to install 105 diesel generators at its new Dublin data center site.

First reported by The Times, the application from Amazon requests 105 backup diesel generators and four diesel-powered fire pumps to be located at the data center site in Clonshaugh Business and Technology Park. The units will have the ability to generate a total of 674MW of power. Industrial emissions licenses are required when units are expected to generate more than 50MW of power.

Over the summer Amazon was granted planning permission for two new data centers at the Clonshaugh Business and Technology Park. Located on the site of a former Ricoh building once earmarked for T5’s first European facility, it’s unclear if Amazon had been previously granted a connection by EirGrid before the current moratorium was brought in.

According to The Times, applications for licenses have ‘flooded into the agency,’ and nine of the ten applications received this year are for Amazon’s data centers.

The application comes as a response to concerns over the impact data centers are having on electricity networks in the country. It was reported earlier this year that the amount of metered electricity consumed by data centers in Ireland reached 14 percent in 2021.

It was this report that led to the consideration of a moratorium on data centers country-wide. Instead, the government published a revised statement in which it said that data centers should make efficient use of the country’s electricity grid by using available capacity and alleviating constraints, increase renewable energy use, be colocated with renewable generation or energy capability, be decarbonized by design, and provide opportunities for community engagement.

There remains a de facto moratorium enforced by the grid in the Dublin area, with EirGrid stating no new grid connection applications for data centers will be accepted in Dublin until 2028.

Interxion (Digital Realty) paused plans for expansion in the area as a result, while Dataplex recently entered voluntary liquidation after EirGrid denied power contracts at two data center sites. Microsoft, AWS, and Equinix have also reportedly paused projects in the area.

Ireland’s Commission for Regulation of Utilities has reportedly encouraged data centers and other large consumers of energy to turn to emergency power generators. Since January of last year, a total of ten facilities — nine operated by Amazon and one by Microsoft — have applied for industrial emissions licenses. K2, Equinix, and Echelon applied for permits in 2020 and 2021, ahead of the CRU decision.

bit.ly/BackUpALotOfSecs

“No credible pathway to 1.5°C in place,” UN warns

The world is heading for a catastrophe as nations fail to reduce carbon emissions, the UN’s environment agency has warned.

The agency said that there is “no credible pathway to 1.5°C in place” for 2030, and even reducing emissions to hit 1.8°C by 2050 seems unlikely.

“This report tells us in cold scientific terms what nature has been telling us all year through deadly floods, storms, and raging fires: we have to stop filling our atmosphere with greenhouse gases, and stop doing it fast,” Inger Andersen, the executive director of the UN Environment Programme (UNEP), said.

“We had our chance to make incremental changes, but that time is over. Only a root-and-branch transformation of our economies and societies can save us from accelerating climate disaster.”

She added “every fraction of a degree matters: to vulnerable communities, to ecosystems, and to every one of us.”

bit.ly/ItWasGoodWhileItLasted

Intel to lay off a “meaningful number” of employees in $10bn spending cut, as chip sales crater

Semiconductor designer and manufacturer Intel plans major layoffs and spending cuts as its internal issues are compounded by a slowing global economy.

Revenues fell 20 percent year-over-year in the last quarter, while net profit fell by 85 percent.

Other than its networks and Edge division, which grew in revenue by 14 percent to $2.3 billion, revenue fell across the board.

PC processors dropped by 17 percent to $8.1bn, its relatively new chip foundry business dropped two percent to $171 million, and its servers and AI chips fell 27 percent to $4.2bn.

But CEO Pat Gelsinger tried to pitch its server chips as a positive sector, saying that its upcoming 4th Generation Xeon Scalable chips “will be our fastest ever Xeon to a million units.”

The next three generations of Xeon server products are all on target, he claimed, and are “making very good milestones.” The 4th Gen ‘Sapphire Rapids’ had been repeatedly delayed due to technical issues.

Irrespective of the economic slowdown, Intel’s server business has faced pressure from a resurgent AMD, which has eaten at its once-near-monopoly market share. AMD’s Epyc processors have scored an increasing number of wins in the data center and supercomputer space, while issues with Intel’s manufacturing process meant that its products fell behind. At the same time, x86 as a whole is under attack from Arm processors, as well as RISC-V and novel architectures.

Intel commanded an 86.1 percent presence in the x86 server chip market in the second quarter, down from 90.5 percent in the year before - and well down from highs of 98 percent.

With both internal and external pressures cutting into sales and profit, Intel said that it would cut costs by $3 billion in fiscal 2023, and up to $10bn by 2025.

“These savings will be realized through multiple initiatives to optimize the business, including portfolio cuts, right-sizing of our support organizations, more stringent cost controls in all aspects of our spending and improved sales and marketing efficiency,” David Zinsner, chief financial officer at Intel, said during an earnings call.

Speaking to Barron’s, Zinsner said there would be a “meaningful number” of job losses, echoing earlier reports that the company could be set to fire thousands of employees.

bit.ly/AllLayoffsAreMeaningful

Oracle lays off more than 200 California-based workers

Oracle has laid off more than 200 workers at its Redwood City location.

The job cuts happened last week as the tech giant filed a Worker Adjustment and Retraining Notification in California.

In total 201 jobs have been cut, said to include data scientists, application developers, marketing specialists, and software developers.

Oracle previously announced cost-cutting plans to save $1 billion, noting that job cuts would be likely. Earlier this year DCD reported that further job cuts are likely to happen beyond the US, in Europe, India, and Canada.

The layoffs follow Oracle’s $28.3bn acquisition of healthcare IT company Cerner in June.

Oracle still plans to invest in its cloud service, as it ramps up to serve TikTok, which it gained as a customer after then-President Trump tried to ban the Chinese social media platform, causing it to shift to the cloud provider founded by a Trump donor.

bit.ly/CoveringCernerCosts

Microsoft lays off 1,000 employees across company

Microsoft has quietly laid off around 1,000 employees across multiple divisions of the company.

The true scale of the cuts, first reported by Insider, is not known.

Among the divisions impacted by the layoffs are Xbox, Xbox Cloud, Microsoft Strategic Missions and Technology organization, Azure, and Microsoft government. The Mission Expansion cloud government team is among those potentially on the chopping block.

“Like all companies, we evaluate our business priorities on a regular basis, and make structural adjustments accordingly,” Microsoft said in a statement. “We will continue to invest in our business and hire in key growth areas in the year ahead.”

The company announced it would lay off less than one percent of its 180,000-person workforce in July, and has slowed hiring since May.

A number of high-profile executives have also left Microsoft Azure over the past year, including its VP of global infrastructure, VP of cloud infrastructure M&A, CVP of Azure IoT, and VP of global data center construction.

bit.ly/AQuietFiring

Rogers and Shaw merger deal hits a

snag

The proposed $20 billion merger of Rogers Communications and Shaw Communications faces uncertainty after mediation with Canada’s competition bureau failed.

Rogers, Shaw, and Quebecor failed to mediate their differences with Canada’s competition bureau, according to Reuters

Rogers first announced its intentions to buy Shaw in March 2021, in a move that would see four Canadian operators consolidate down to three, but the move was met with fierce opposition by Canada’s Competition Bureau over fears it would hurt competition.

In a bid to mitigate competition concerns, Rogers outlined its plans to sell Shaw-owned Freedom Mobile to telecoms and media firm Quebecor, through its subsidiary Videotron for CAD$2.9bn (US$2.3bn), which would allay any fears over the competition in Canada.

However such proposals have not been enough, with Rogers, Shaw, and Quebecor detailing in a joint statement that “the mitigation did not yield a negotiated settlement.”

The companies plan to try to come to a resolution with a tribunal this November.

Police raid SK Group over Kakao fire outage, as Korea’s government impose safety drills on data centers

Korean police have raided the offices of SK Group, and the SK data center which caught fire in October.

Local police confiscated documents relating to the fire, which brought down the KakaoTalk messaging service on Saturday.

KakaoTalk is used by 90 percent of South Koreans, and the outage brought down many finance and travel applications that rely on KakaoTalk IDs. The disruption continued for much of a week, and the data center is still operating without backup power, so further disruption is possible at the time of publication.

Kakao, which has seen one of its CEOs step down in the wake of the incident, has blamed SK’s Li-ion batteries for the fire.

The police will also be interrogating SK Group officials, according to Yonhap News Agency

Meanwhile, the country’s Ministry for Science and ICT (MSIT) is stepping in to impose disaster management procedures on private companies in Korea. All the country’s large data centers will be subject to a government disaster management system, and subject to regular inspections and safety drills, according to Korea JoongAng Daily The measures promised to establish a center for disaster prevention for digital infrastructure, which will address private data centers as well as government facilities.

Nationwide inspections of data centers and network infrastructure will be carried out by MSIT with the National Fire Agency, and an expert group will create a list of measures to improve data center safety.

bit.ly/KakaoGoesKaka

Musk says SpaceX will pay for Ukraine’s Starlink service after pushback

SpaceX CEO Elon Musk has reversed calls for the US government to continue to foot the bill for Starlink Internet services in Ukraine.

The world’s richest man tweeted: “The hell with it … even though Starlink is still losing money & other companies are getting billions of taxpayer $, we’ll just keep funding Ukraine govt for free.”

The company had long claimed credit for the tens of thousands of Starlink dishes sent to Ukraine since the outset of Russia’s invasion, and said that it had not received money for the deliveries.

But in April it was revealed that USAID spent millions on buying thousands of terminals, while the governments of France and Poland also acquired thousands of the dishes. Some Ukrainian citizens have also tweeted that they’ve been paying for Starlink out of their own pocket.

But SpaceX was covering some costs, and sent a letter to the Pentagon asking for money, which leaked. This was met by a public outcry, exacerbated by recent Musk comments that echoed the demands of Russia’s government.

bit.ly/AStrangeMan

Peter’s Starlink factoid

Researchers have reverse-engineered Starlink’s communication network to use it as a global positioning system. But to be as accurate as GPS, SpaceX would have to provide more data.

bit.ly/DealGetsRogered

Saboteurs cut fiber cables in France, in second incident this year

Multiple fiber cables were cut in Southern France, in what appears to be a targeted attack.

The incident is the second in the country this year. Back in April, multiple cables were cut in the country overnight in a coordinated incident.

“We are aware of a major cable cut in the South of France that has impacted major cables with connectivity to Asia, Europe, US, and potentially other parts of the world,” cloud security company Zscaler said in a blog post.

“As a result of the cable cut, customers may see packet loss and or latency for websites and applications which traverse these impacted paths.”

The company said that there were at least three cable cuts - Marseille-Lyon, Marseille-Milano, and Marseille-Barcelona. Police were on-site at the first cut to investigate, which delayed the repairs.

The cuts echo those in April, although it is not known for certain that they are connected. The Spring cuts caused outages across France, impacting 10 Internet and infrastructure companies and several cities.

“The people knew what they were doing,” Michel Combot, the managing director of the French Telecoms Federation, told Wired. “Those were what we call backbone cables that were mostly connecting network service from Paris to other locations in France, in three directions.”

Arthur PB Laudrain, a researcher at the University of Oxford’s department of politics and international relations who has been studying the attacks, added: “It implies a lot of coordination and a few teams.”

No groups or individuals claimed responsibility for the damage, and French police have not announced any arrests related to the damage. It is not known if the cuts are related to Covid conspiracy theories, anti-tech activity, or for another reason.

Fiber optic cables used for Orange’s network were intentionally cut in the Paris region back in 2020.

The week before the cut, submarine cables to the Shetland Islands were severed, causing Internet outages in the remote Scottish archipelago (see right).

While such outages are usually accidental, British tabloids have focused on the presence of a Russian “research ship” near the cut, suggesting that it could have intentionally damaged the cable.

Russia is also accused of sabotaging the Nord Stream gas pipeline in the Baltic Sea, but there is no proof it was involved in the Shetland cut.

bit.ly/WillThereBeAThird

Shetland outages caused by submarine cable breakages

The Shetland Islands experienced a major outage that affected landline, Internet, and mobile services.

The outage at the archipelago in the Northern Isles of Scotland was caused by a breakage in the SHEFA-2 submarine cable. The cause of the break hasn’t been disclosed.

BT blamed the outages on breakages to a ‘third-party cable that connects the Shetland’, which is 100 miles off the North coast of Scotland.

The police declared the outages a major incident, while the MP for Orkney and Shetland Alistair Carmichael said that the damage had caused a “catastrophic impact.”

BT Group said in a statement: “Engineers are working to divert services via other routes as soon as possible and we’ll provide further updates. Our external subsea provider is also looking to restore their link quickly.”

SHEFA-2 was initially deployed in 2007.

bit.ly/OhShetNo

Twitter data center brought down by California’s extreme heat wave

A Twitter data center was brought offline by California’s extreme heat wave, as equipment shut down during record temperatures.

The company avoided an outage by transferring its workloads to its data centers in Atlanta and Portland, but said that if either of them had failed the social media website would have gone too.

“On September 5th, Twitter experienced the loss of its Sacramento (SMF) data center region due to extreme weather. The unprecedented event resulted in the total shutdown of physical equipment in SMF,” Carrie Fernandez, Twitter’s VP of engineering, said in an internal message seen by CNN.

“All production changes, including deployments and releases to mobile platforms, are blocked with the exception of those changes required to address service continuity or other urgent operational needs.”

The outage occurred before Elon Musk acquired the site.

bit.ly/WelcomeToOurFuture


The last data center

Long-term data storage enters a new epoch

Sebastian Moss Editor-in-Chief


Images by Sebastian Moss

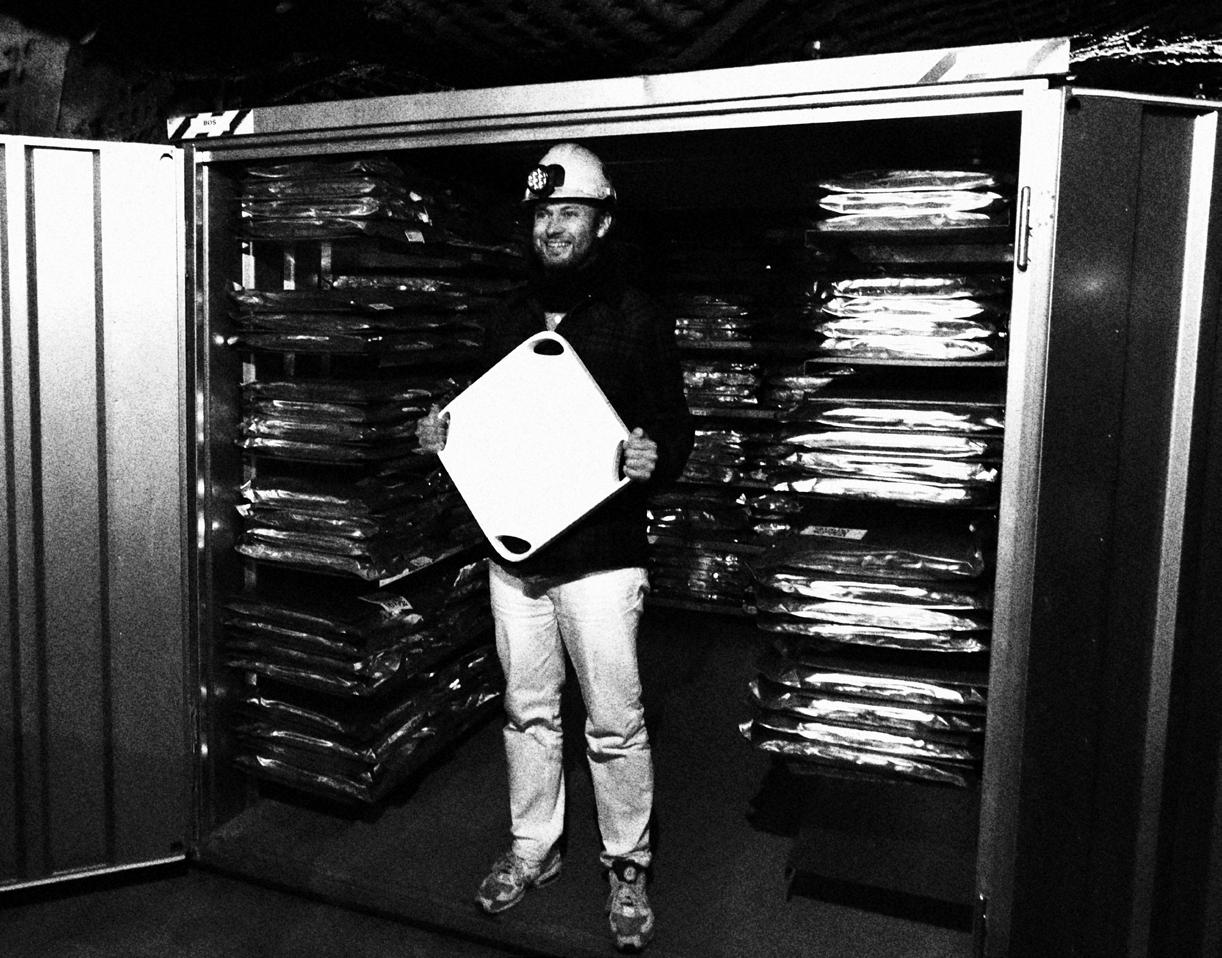

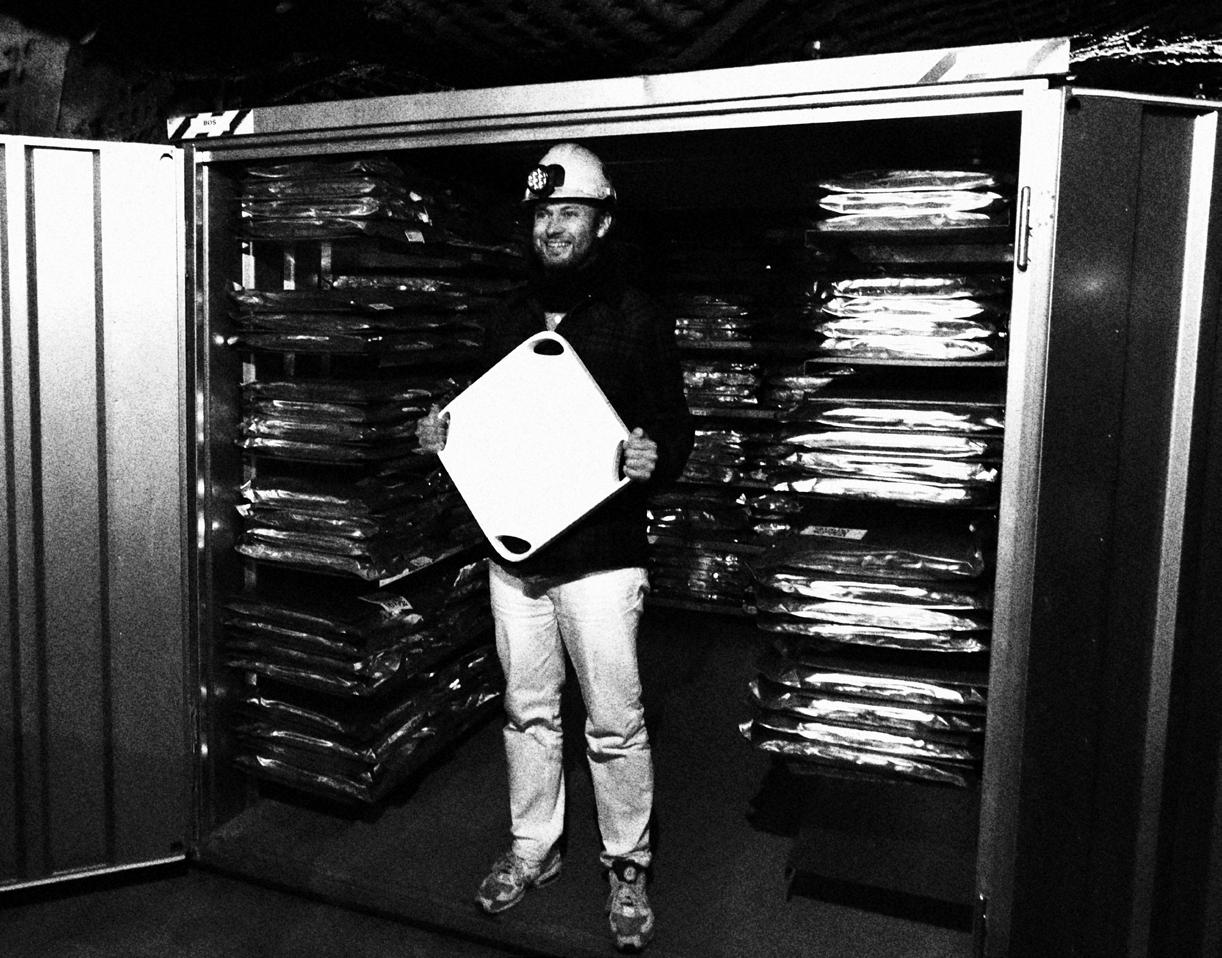

Buried deep in a mountain at the edge of civilization there lies what may end up being humanity's last message.

To get there we traveled over permafrost and up a steep passage, past signs warning of polar bears ahead. Then we descended into the dark mines, our headlamps illuminating falling ice crystals disturbed by our presence, bathing us in glittering and ephemeral showers.

It's not clear how long we traveled down the shaft; time travels differently underground. Here in this Svalbard mountain, it is measured in eons, not hours.

We go past the Global Seed Vault, a backup facility of the world's seeds in case of disaster, and journey further downwards. At last, we come to a door, glowing in the pitch-black. Emblazoned on it are the words "Arctic World Archive: Protecting World Memory."

Before we talk about what's behind that door, we should understand the two great data challenges it hopes to solve. It is joined by dozens of startups, researchers, and even a trillion-dollar corporation in competing to figure out the future of data.

One challenge is philosophical, that of our death and destruction, and what we leave behind. The other is more immediate, that of growing hordes of data, which threaten to overflow our current systems and leave us unable to keep critical data in an economic way.

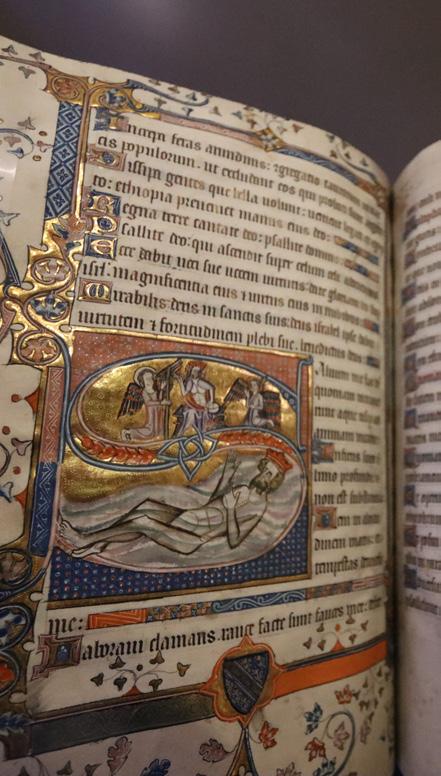

The beginning of recorded knowledge

The story of data is an ancient one. Some 73,000 years ago in what is now South Africa, an early human picked up a piece of ocher and scratched a symbol into a shard of stone, in what is our earliest recorded piece of human artwork.

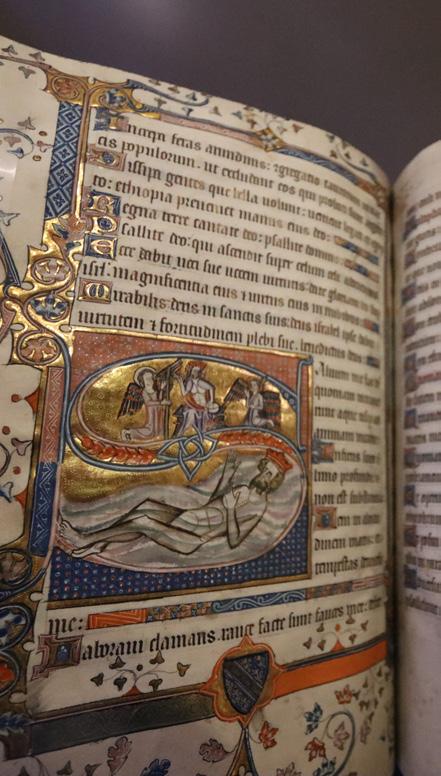

It took the majority of our species' history to get to written recordings, with the Kish tablet in 3500–3200 BC, where humans etched pictographic inscriptions into limestone. Even then, it took thousands of years to advance to clay tablets, and still further to get to papyrus, parchment, and finally paper.

Most of what happened in the world was not recorded. Of what was, the majority has been lost in wars, fires, and through institutional decay, never to be recovered. Our understanding of ourselves and our past is told through what little survived, providing a murky glimpse that is deeply flawed and relies on the skewed records of kings and emperors.

Now, things are different. We are flooded with data, from individuals, corporations, and machines themselves. But we keep that data primarily on hard drives and solid state drives, which last mere decades if kept unused in ideal conditions, and just a handful of years if actively run.

Other common storage platforms are magnetic tape and optical discs, which themselves come in multiple formats of varying density and lifespan, but are often used for ‘cold’ longer-term storage.

All have their uses and individual benefits and drawbacks, but the simple fact is that if we stopped transferring data to new equipment, nearly all of it would be gone before the century is out.

"The only written records of our time would be the embossing on stainless steel cooking pots saying 'made in China' and probably the company logos on ceramics," Memory of Mankind (MoM) founder Martin Kunze explained.

Kunze is one of a select few hoping to prevent such a tragic loss for our future. To do so, he is looking to our past.

A collection of memories

"I studied art with a focus on silicate technologies and ceramics," he said. "The idea of using ceramics as a data carrier is not new, it's 5,000 years old."

Like the archive in Svalbard, and the Dead Sea Scrolls of the past, he has also turned to depositing data underground as a method of long-term storage.

2km deep, inside the world's oldest salt mine beneath the Plassen mountain in Hallstatt, Austria, rows of neatly organized ceramic tiles attempt to provide a snapshot of our world.

The most immediately discernable tiles are readable to the human eye - with words and images printed onto them at 300 dpi resolution, similar to a normal color printer.

Less visually exciting at a distance, but perhaps far more important, are ceramic microfilm plates. Kunze turned to physical vapor deposition, a method of vacuum deposition that produces thin films and coatings on substrates such as metals, and then laser etches data at five lines per millimeter. This gives around 500 times the density of the original plates - which will be used to store 1,000 of the world’s most important books.

This, in turn, sparked a government-funded project to build a femtosecond laser, which could write onto even thinner materials such as glass-ceramic. "It's very early days, but we have proved that it's possible to write and read 10 gigabytes per second" at much higher density, he said.

As for its lifetime, it should "far exceed the existence of our Solar System, so you could say it's eternal," he said. The technology is being developed by a company Kunze cofounded, Cerabyte, currently still in stealth mode.

Cerabyte does not expect to produce the tech on its own, and has turned to Sony - which has an optical disc factory in Austria that is slowly declining with the death of the format - to potentially develop "a minimum viable product that we aim to have in one and a half years," he said.

He's far from alone in trying to reinvent the data storage landscape. While Kunze turned to the past for inspiration, others have gone for an approach that appears ripped from science fiction.

The data soup

"I guess you could say we're in the business

Issue 46 • November 2022 | 15 Escape Ukraine

The last data center >>CONTENTS

"We're manipulating DNA for the purpose of both storage and compute and making some real progress here"

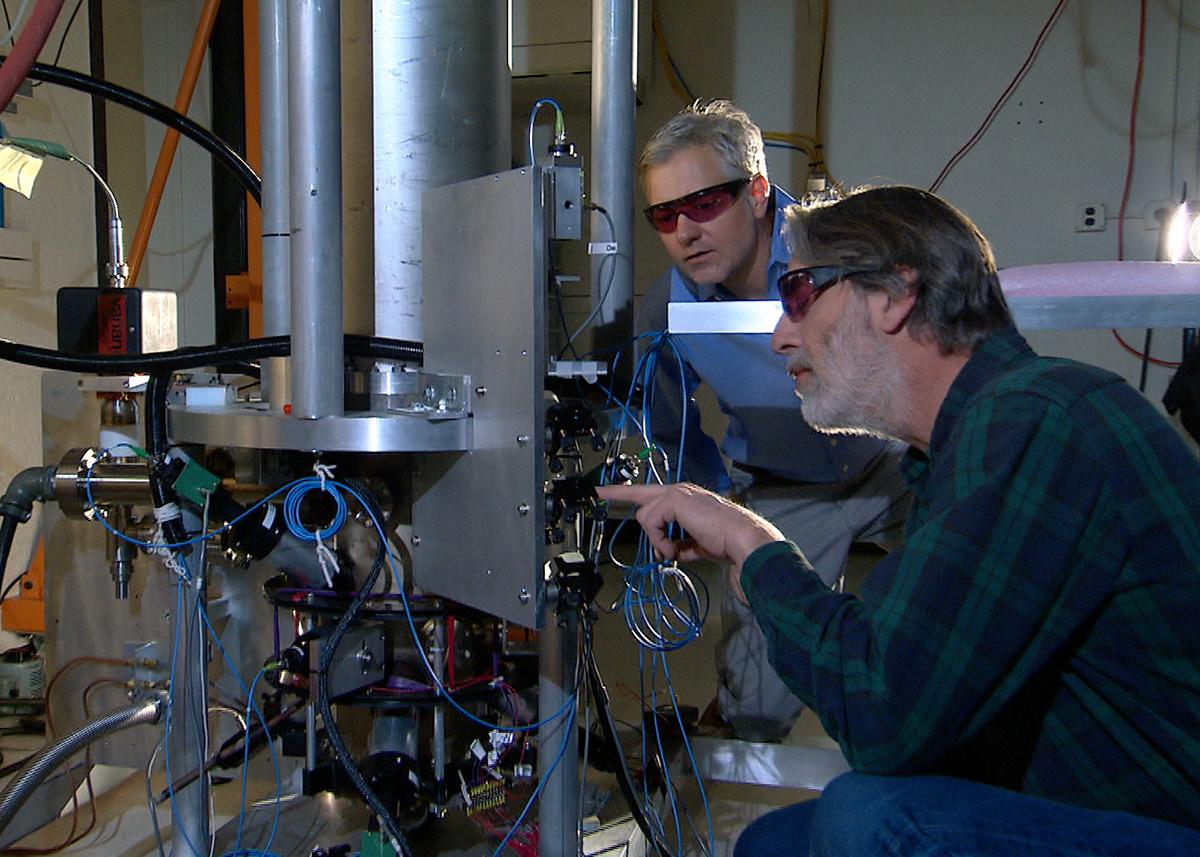

of trying to build a bio-computer," Dave Turek, chief technology officer at Catalog, said. "We're manipulating DNA for the purpose of both storage and compute and making some real progress here."

Turek knows all about the intricacies of traditional computing - the last time we supercomputer, then the world's most powerful, a project he spearheaded during his nearly 23 years at IBM.

Wetware computing is a new avenue. "I'm not a molecular biologist, so we're on even ground here," he joked before launching into a dense explanation of DNA.

The classic double helix construct of DNA is made of ladders that are formed of just four bases: Adenine, Guanine, Cytosine, and Thymine. From that simple starting point come all of the sequences of bases that dictate what makes every living thing unique. "It's biology's way of encoding information," Turek said.

Our DNA is about 3.5 billion bases long and contains all that we are, hinting at the storage potential that could be harnessed. It's not a new idea: Early attempts date back to 1988, while American geneticist George Church encoded a book into DNA in 2012.

Those approaches took each of the letters A, G, C, and T, and assigned bit values to them, potentially allowing incredible data storage density. "Immediately, people started seeing DNA as the remedy to the overflow of information created in modern society," Turek said. "And they're completely wrong."
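The base-level approach described above can be illustrated with a minimal sketch. The specific assignment of bit pairs to bases below is an assumption made for illustration only; the article does not say which values any particular research group used, and real schemes add error correction and avoid troublesome sequences.

```python
# Hypothetical mapping of two bits to one base (an assumption, not a published scheme).
BASE_FOR_BITS = {"00": "A", "01": "C", "10": "G", "11": "T"}
BITS_FOR_BASE = {base: bits for bits, base in BASE_FOR_BITS.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a string of bases, two bits per base."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BASE_FOR_BITS[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Turn a string of bases back into the original bytes."""
    bits = "".join(BITS_FOR_BASE[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"DCD")
print(strand)  # CACACAATCACA
assert decode(strand) == b"DCD"
```

Under this mapping every byte becomes four bases, so the length of the strand, and the number of synthesis steps needed to build it, grows in direct proportion to the data.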

Catalog believes that those researchers, as well as some rival DNA storage companies, have made a crucial error. "You then have to solve a fundamental problem, which is that every time you add another base to your synthetic piece of DNA, it takes 30 seconds. So if the cost is 30 seconds for every two bits of information, that's not going to work very well."

The company has decided to scale back and not work at the base pair level, instead constructing an alphabet composed of small snippets of DNA. Catalog now has 100 of these oligonucleotides, as they are known, with which it can create data by connecting them together in what is called ligation.

"And the machine that we invented and developed automates that process, which is a big deal,” Turek said. “It uses inkjet printheads that contain these oligonucleotides. And each reservoir is unique from every other one. So that each of the nozzles can be instructed to fire a particular snippet of DNA out of my alphabet."

The ‘Shannon’ machine has three thousand nozzles that deposit DNA ink drops at the picoliter level, 500,000 times a second. These mix together to create a long string of data-holding DNA. “The way it's currently configured, I can create a trillion unique molecules in a day,” he said.

This can then be read with a DNA sequencer, with the company currently using Oxford Nanopore machines. These are much slower than the writing machines - "if I take 10 minutes to write a whole bunch of data, it might take me a week to do all the decoding."

This is because the genome industry has prioritized accuracy at the base level over speed, at a fidelity that Catalog doesn't actually need. "We've got to resolve the issue of rapid decoding in parallel to the velocity with which we can write,” he said.

"We have some partnerships established to begin to try to do some real innovation on the read side as well. We have ideas today that we think could easily generate two orders of magnitude improvement in speed."

Even at the slightly lower storage ability of oligonucleotides, the amount that DNA can theoretically record is mind-blowing. "You could put all the information in the world in this," Turek said, holding up a Pepsi can. Such a feat is still a way off, he admitted, but the company was able to store all of Wikipedia in a few droplets.

As for the long-term storage abilities, the death of a mammoth some 1.2 million years ago gives an insight into its longevity. Last year, researchers successfully sequenced the previously unknown Krestovka mammoth, and its body was not exactly kept in ideal conditions.

Perhaps more enticing still is another idea being cooked up at Catalog - using DNA for compute. The concept is in its early stages, and only works for very specific types of compute, but would still be a profound advancement in computing.

“We're doing one case that is inspired by a real potential user,” Turek said. “This is not a theoretical abstract academic exercise that originated in a textbook. And we're using that customer to guide us in terms of the nature of the algorithms, and the other kinds of things that need to manifest themselves in DNA.”

It’ll be a long time before such efforts will bear fruit, but Catalog is hopeful that the opportunity is vast. “If you want to build a parallel computer, which I did for 25 years, and you want to add an incremental unit of computing, it typically has a pretty hefty cost to it, and consumes a lot of energy and space,” Turek said.

In DNA, he argues, “I can make it parallel cheaply to an extraordinarily large degree. If you say ‘I want to run this instruction 100,000 times in parallel,’ I would come back to you and say, ‘100,000? Why not 10 million? Why not 10 trillion?’”

The idea is different, to say the least. We’re used to thinking about the computing world in terms of electricity and physics, in bits and bytes. “But look at how people are moving on from von Neumann architectures, and beginning to create quantum computers,” Turek countered.

“We think that we're in the right place at the right time, because there is a de facto acceptance of alternative architectures in the computing world. However strange you think this might be, the guys in the quantum world are stranger - and they can still sell their computers.”

Nonetheless, Catalog still has a long way to go before it can convince companies to put their data lakes into data soups, and embrace unconventional storage solutions. Here, rival Microsoft has an advantage - it can deploy its long-term storage concept in its data centers, and rely on a robust sales network to convince users that its approach is the way to go.

Halls of glass

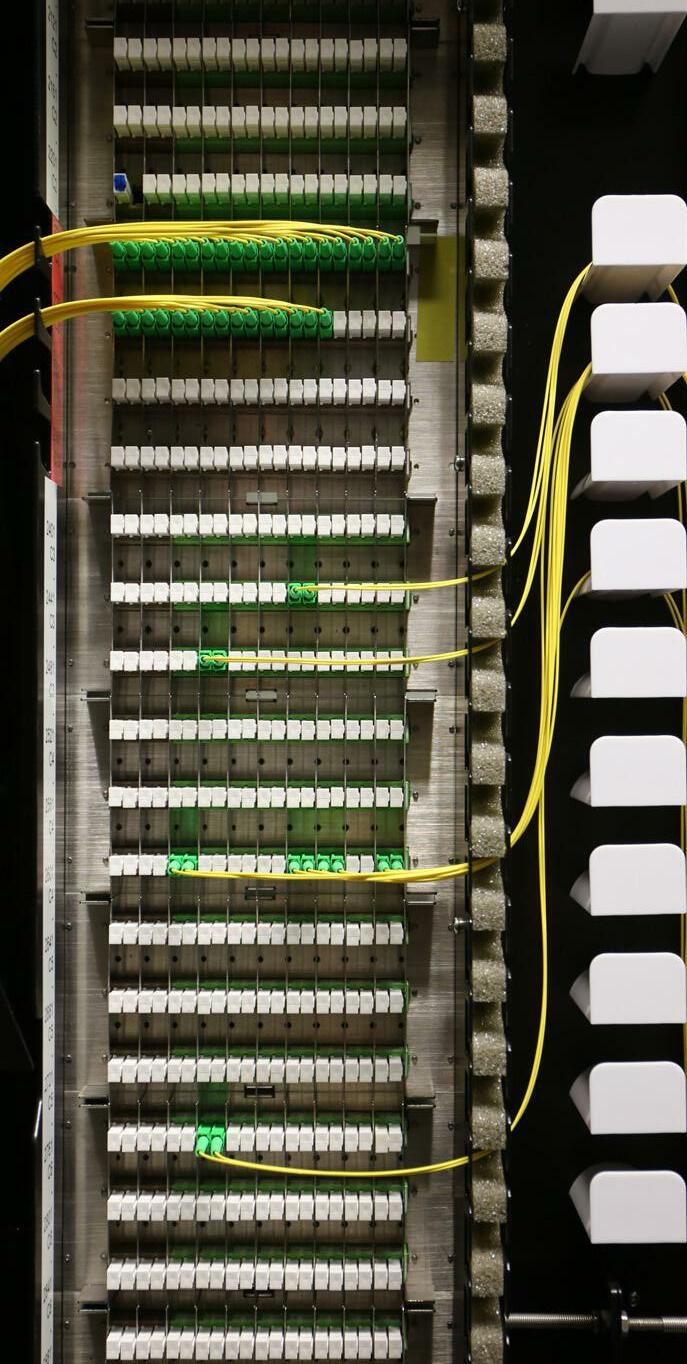

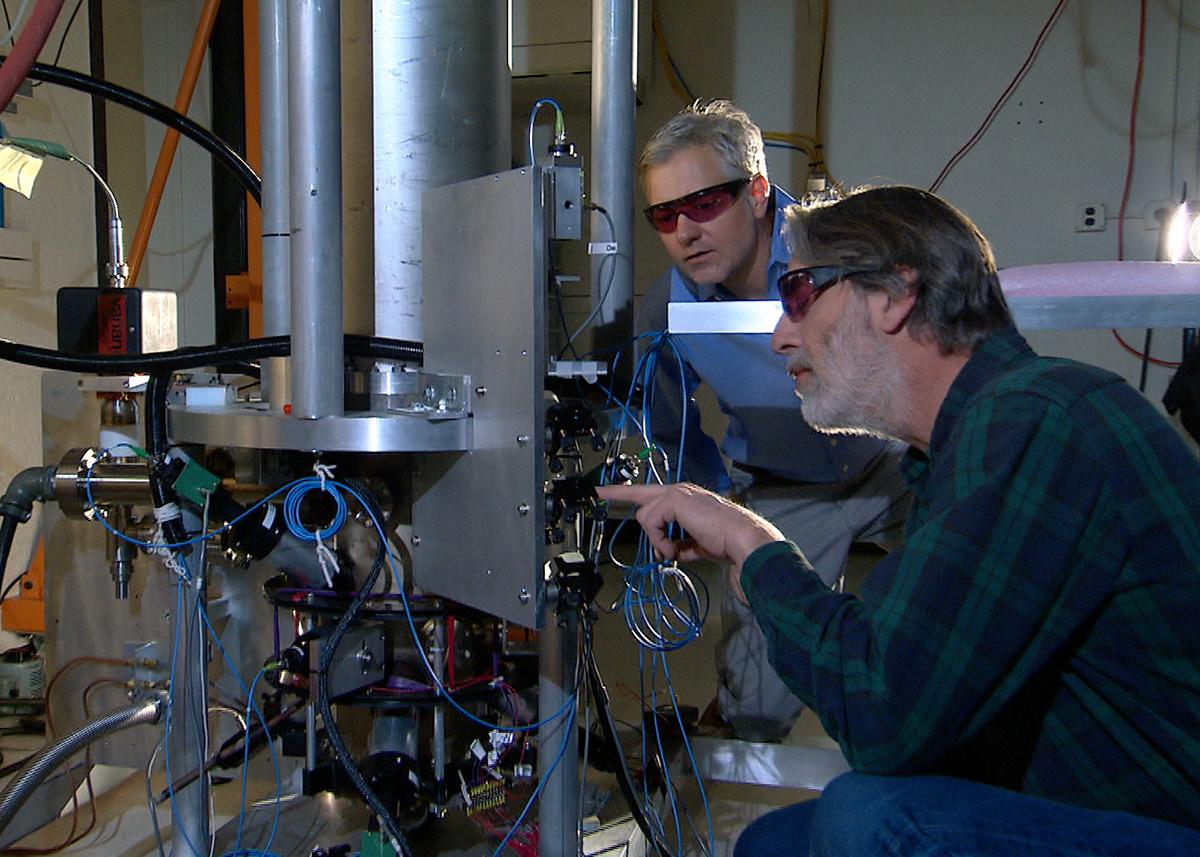

In Cambridge, the hyperscaler’s researchers have been experimenting with fused silica, where a femtosecond laser encodes data in glass by creating layers of three-dimensional nanoscale gratings and deformations at various depths and angles.

“To read it, we image it with a microscope,” Microsoft Research’s distinguished engineer and deputy lab director Dr. Ant Rowstron explained. “It's got these layers in it, and you focus on a layer. And then we take several images concurrently. We don’t spin it, we read an entire sector at a time.”

Project Silica was set up by Rowstron and Microsoft after realizing that conventional storage was set to hit a bottleneck. “We began from the ground up, asking what storage should look like,” he recalled, with Microsoft deciding to build upon silica data storage research from the University of Southampton.

It has gone through multiple iterations over the past few years, slowly getting denser as well as easier to read and write. “If we were to fill our 12-centimeter by 12-centimeter reference platter entirely with data, we’d be at around five terabytes,” Rowstron said.


Writing is still slow and expensive, requiring high-powered lasers to accurately etch the glass in just the right part. Critics, including some working on the other projects in this piece, worry that there are fundamental limits to how fast you can pump energy into the glass without causing issues.

“It's been a lot of work,” Rowstron admitted. “When we first saw the technology at Southampton, it was taking hundreds of pulses to write data into glass. But we’ve been working on how to form these structures with a very, very small number of pulses.”

He declined to disclose exactly how far the project had come, but added “if you compare it to the state of the art, we were significantly below [that number of pulses]. You can think of it as dollars per megabyte writing, and I would say the technology is now in a good spot.”

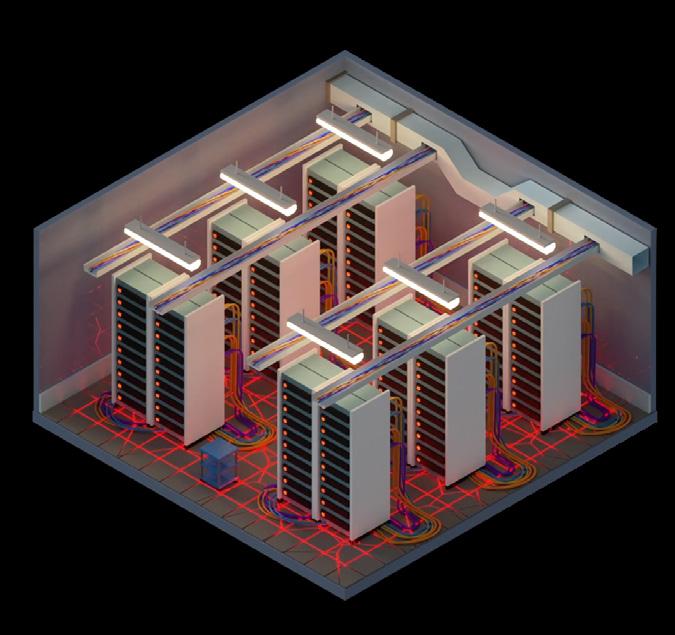

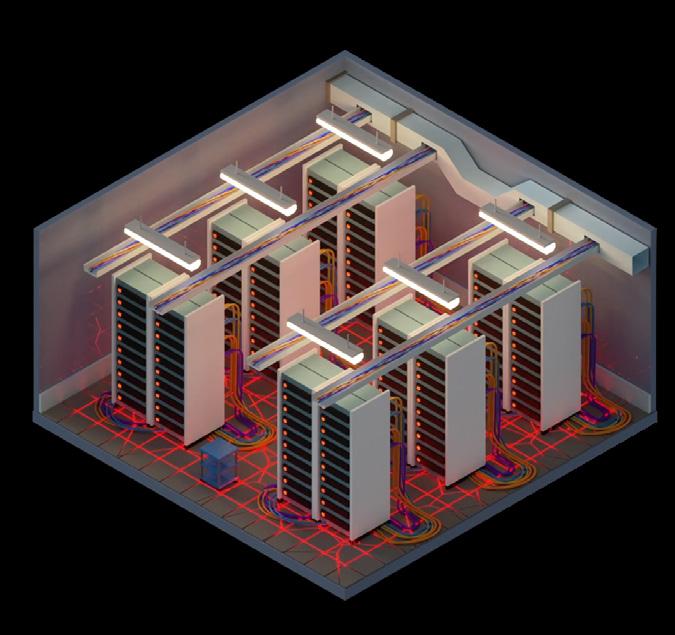

Microsoft is also thinking about how it would be read in a data center. “We have turned to warehouse-style robotics,” Rowstron said. “And we have these little robots that operate independently, they can move up and down and along the structure in this crabbing motion, which is pretty cool.”

On one end there would be a machine writing the data onto the blocks of glass, on the other a reader ready to uncover what is within each block. Other than the robots and the writer, it wouldn’t need any power, and it wouldn’t need any cooling.

That means that the first data centers Silica will inhabit will be massively overengineered. “I guess one day we'll end up with buildings or data centers dedicated to just storing that preserved glass,” Rowstron said.

That glass can last around 10,000 years. “No one's asked us to go further,” Rowstron said. “There are things you could trade-off - you could trade density for lifetime and things like that. But you've got to remember our goal is to get a technology that will allow us to use this in our data centers. No data center is going to exist for 10,000 years.”

Others could go further, should there be demand. The original Southampton work found that its much-slower-to-write silica could last 13.8 billion years at temperatures of up to 190°C (375°F). The researchers there stored the Universal Declaration of Human Rights, Newton’s Opticks, the Magna Carta, and the King James Bible on small discs.

“My hope is that in 200 years’ time there will be a new storage technology that is even more efficient, even denser, even longer life, and people are going to say ‘we don't need to use glass anymore,’” Rowstron said. “But they’ll move formats because they want to, not because they have to.”

Rowstron believes Silica will prove useful for both major challenges of data storage. "You want to make sure that whatever else happens, data from the world is not lost," he said. But the tech is naturally focused on the more immediately pressing concerns faced by businesses.

"Hard drives are languishing, we've had so little capacity increases in the last five years; tape is suffering," he said. "There is more and more data being produced, and trying to store that sustainably is a challenge for humanity."

Cold storage that doesn't require constant power would be a huge boost for the environment, as would moving away from rare earth metals such as those found in HDDs (of which there may not be enough to meet future demand). It is also much cheaper over the longer term, when all you have to worry about is where to put the glass.

The guardians of knowledge

In popular culture, the Library of Alexandria - one of the greatest repositories of knowledge in antiquity - was lost to the flames, its endless rows of papyrus consumed by fire. Scientific theories, fantastical tales, and bureaucratic minutiae became embers in a matter of hours.

But this is likely not true, Richard Ovenden, the head of the Bodleian Library, said. Its death was slow, as papyrus became less relevant with new technology - parchment - and no transition was made, like with the Library of Pergamum.

More profound was an institutional decay, as a lack of management, investment, and care led to its tragic demise.

"I think we are in a moment in time where that risk is high again," Ovenden, author of Burning the Books: A History of Knowledge Under Attack, said. "You certainly see that with the failure of public libraries in many Western countries."

Our story on how we store data for years to come has focused on the technology, but it's important not to forget the humans working to protect and share knowledge.

Librarians and archivists have passed the torch of knowledge from one generation to the next, ensuring the light of humanity does not go out.

"Throughout history, there have been moments of destruction of knowledge, but they've often been matched with moments of renewal or of rescue," Ovenden said.

"That certainly is the case today, both in terms of communities rallying around their libraries to protect them, and in libraries' ability to adapt to the various challenges posed by the big tech companies today."

We live in a strange time where we have more access to information than ever, but it is through the lens of corporations who put profit over fighting misinformation or loss. "Preservation is not in Google's mission," Ovenden said.

"We need places on the Internet where information can be relied on, and I think many of those are libraries and archives that are not profit-making companies."

Preserving the creations of corporations, with their platforms and proprietary information, is also a difficulty.

"As those preserved worlds become much more complex and exist much more in real-time, that task of preservation becomes much more complex and philosophically challenging, as well as financially challenging for organizations," he said.

"There should be a link with regulation and taxation on the profits of the major technology companies in order to allow third-party preservation to take place by not-for-profit preservation organizations."

Credit: Microsoft

The technology caught the eye of Guy Holmes, CEO of Tape Ark, an Australian company focused on moving aging tape libraries to the cloud. "I've actually got it just sitting here in my office," he said, grabbing what at first looked like a clear square of glass, but revealed rows of microscopic etchings when a light was shined into it.

"It's very early days, but it seems to have legs internally there, and it appears to be picking up its density," he said. "I spent a lot of time with the guys at the program just talking through density, access times, access frequency, etc. Customers tend to do one restore per week at the moment based on backups. As the tapes age, the number of access requests reduce significantly.

“We're finding there are these archives of infinite retention or tapes that have never been accessed since they were created.”

His company remains excited about the technology, but is currently focused on the more immediate challenge of simply getting data off of antiquated tape systems and onto the cloud, including Microsoft Azure. There, it might even go back on tape, but first Holmes recommends trying to see if there is hidden value in the data.

"The number of people that don't even know what's on their tapes is pretty startling," he said. "On there could be a cure for a disease, but nobody knows. So we get pretty excited by some of the projects we do."

Other efforts have immediate clear value. "We got to work with Steven Spielberg on six petabytes of Holocaust survivor videos, which needed long-term preservation, it needed immutability so that nobody could go and do deep fakes on a holocaust video and say that it didn't occur," he said. The work required moving critical data off of both tape and film, in an effort to give it a longer life.

With film, we come back to the door in Svalbard. The company behind the project has its roots in the cinema industry, selling film projectors and digital light modulation technologies to Hollywood and Bollywood.

“And then that movie Avatar came out, and there was a super fast transition from a film-based world to digital projector-based world,” Piql CEO Rune Bjerkestrand said.

The return of film

While film appeared to be on its way out, the company was convinced that there was still something to the medium. Piql studied different types of film from around the world, hoping to find one that would last a long time, and store a lot of data. Some last up to 500 years, but don’t have much density, and are dangerously flammable.

“So we embarked on developing our own film, Piql Film, which is a nano silver halide film on a polyester base,” Bjerkestrand said.

The company takes binary code and converts it into grey pixels, for a total capacity of 120GB per roll of film. Under good conditions, that film can last around 1,000 years, Piql claims.
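As a rough illustration of turning binary code into grey pixels, the sketch below maps each bit to one of two grey levels and lays the pixels out in rows. The one-bit-per-pixel layout, the grey values, and the frame width are all assumptions made for this example; the article does not describe Piql's actual frame format.

```python
# Hypothetical grey levels for a 0 bit and a 1 bit (assumed, not Piql's values).
DARK, LIGHT = 64, 192


def bytes_to_frame(data: bytes, width: int) -> list[list[int]]:
    """Lay the bits of `data` out as rows of grey pixels, one bit per pixel."""
    bits = [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]
    bits += [0] * (-len(bits) % width)  # pad the final row with zero bits
    pixels = [LIGHT if bit else DARK for bit in bits]
    return [pixels[i:i + width] for i in range(0, len(pixels), width)]


def frame_to_bytes(frame: list[list[int]], length: int) -> bytes:
    """Read `length` bytes back by thresholding each pixel at mid-grey."""
    bits = [1 if pixel >= 128 else 0 for row in frame for pixel in row]
    out = bytearray()
    for i in range(0, length * 8, 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | bit
        out.append(byte)
    return bytes(out)


frame = bytes_to_frame(b"AWA", width=8)
assert frame_to_bytes(frame, 3) == b"AWA"
```

Reading by threshold rather than by exact value means a pixel that has faded or darkened somewhat still decodes to the same bit, which is the kind of tolerance a long-lived visual medium would need.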

The company has split its data archiving in two. One is more traditional, where it works with companies like Yotta in India to convert data to film and store it in commercial data centers around the world - with Piql highlighting its use against ransomware or data center disasters.

Then there’s the Arctic World Archive, pitched as a more humanitarian mission to preserve crucial data for future generations.

Due to its distance from other land masses, the fact that it has been declared demilitarized by 42 nations, and its lack of valuable resources, the hope is that Svalbard is unlikely to be nuked in any future conflicts. However, just like everywhere else on the planet, the Norwegian archipelago cannot claim total safety - it is one of the fastest-warming places in the world due to climate change, and the territory has faced increased aggression from Russia.

Still, the land is remote and its empty mines are of little use to would-be invaders. At the depositing ceremony we attended, works of art from national archives around the world were placed in storage, along with images from the wedding of an Indian couple who paid to have “their love recorded for eternity.” Large reels of film are carefully vacuum sealed and then placed in the shipping container 300m underground, nestled deep in the permafrost.

Anyone can pay to store their data at the archive, but it is primarily finding business with governments and public bodies. Microsoft’s software collaboration platform GitHub also stored 6,000 software repositories on the site (a backup is also stored at the Bodleian Library, see box out).

“It's for people that want to bring their valuable, irreplaceable information into the future to the next generation,” Bjerkestrand said, but added that the company was not planning to be a curator in and of itself. “That has been a serious discussion, and we came to the conclusion, that no, why should we have an opinion on what's worth bringing into the future?”

When pressed on how the AWA will last 1,000 years or more when tied to a corporation, Bjerkestrand said that the company planned to spin it off as a nonprofit foundation.

Currently, however, people pay for storage for up to 100 years, after which the data is sent back to them if they don’t keep paying. In an eventuality where the institution that first contracted Piql collapsed, that could cause problems: "They need to make sure that somebody gets the right to the reel,” he said.

A foundation, on the other hand, would have a longer-term focus. “Such a foundation would have interest across the world for organizations to support, it's basically supporting world memory to survive into the future,” Bjerkestrand said. “So I think it's a good cause that you could get sponsors, donors, and supporters for.”

When thinking about scale in that way, you cannot assume that future generations will be able to understand how data storage formats work. Here, Piql has an advantage: As it’s film, you can simply hold it up to the light, and the first few frames are pictures of how to access the information.

That data then needs to be readable with what is available on the roll. “We have a fundamental principle that it should be self-contained,” Bjerkestrand said. “We don't compromise that it should be open source license and free to retrieve the data with the tools that are on the data. We had scenarios where we could do more data on the film, but then it would be too complex to read.”

Simply recording reality and putting it on one of the above media doesn’t work if future civilizations can’t understand it, posing a critical challenge for much of the data of our day.

The death of tools

“My biggest concern is the loss of knowledge of the software that's needed to quickly interpret digitized content,” Vint Cerf, TCP/IP co-creator, said.

“An increasing amount of digital content that we create was made by software, which is needed to correctly understand, render, and interact with data, like with spreadsheets and video games where you actually need a piece of software, plus a bunch of data in order to exercise it. If you don't have that software running anymore on the platforms that are available 100 years from now, then you won't be able to do that.”

Cerf, known as one of the fathers of the Internet, told DCD that he was “worried about the kinds of software that's needed to interact with databases, for instance, timesheets and other kinds of complex objects, where we may not have as widespread implementations available, some of them may even be proprietary.”

Fellow Internet Hall of Fame member Brewster Kahle shares the concern about specific pieces of software and data being proprietary or in the hands of corporations.

"If you look at the history of libraries, they are destroyed or they're strangled such that they're left irrelevant,” he said. “And that used to be by king and churches, but these days it is governments and corporations.”

As one of the developers of the World Wide Web precursor the WAIS system, Kahle found himself at an inflection point of humanity - where data was set to be shared and accessible by the world, but was transient and easily lost. This, he hoped, gave an opportunity for a new kind of library.

"As we're coming to a change in media type, can we go and start a library, right away?" he wondered, launching the nonprofit Internet Archive, which tries to create a long-term copy of websites, music, movies, books, and more.

"The goal of the Internet Archive is to try to build the Library of Alexandria for the digital age," he said. And, so far, the effort has been wildly successful: "We're a small organization, we're $20-25 million a year in operational costs, and yet we're the 300th most popular website in the world."

But its continued success is threatened by a changing world.

“I could only start the Internet Archive after we had gotten the Internet and the World Wide Web to really work, both are open systems. So if we go into a period where the idea of public education or universal access to all knowledge starts to eclipse, we're in trouble,” Kahle said.

“We see that now within corporate environments, and we're starting to see it in government environments, whether it's banning books or where you can pressure organizations to do things without going through the rule of law, but you go through the rule of contract.”

Layers of control

As the web becomes more centralized and in the hands of a few cloud providers, their terms of service risk controlling which information lasts long enough to be stored for greater timescales. At the same time, as systems get built on top of cloud providers, or platforms like Facebook, storing them would require also recording all that backend software that is not shared.

Kahle is less worried about the longevity of data storage devices, and more about ensuring immediate access in an open world.

“100 years ago, microfilm was a new technology, and it was greeted with this fanfare that we'd be able to make it available so that people in rural areas could have access to information just like the people in big universities,” he said. “Well, that didn't really come about, it ended up just being used to just reinforce the power structures and the publishers that existed at the time.”

The question of world memory is less about the medium it is stored on, and more about how it is used when it is on that medium, he argued. “Microfilm may last 500 years… if you don't throw it away. But it turns out, people will just throw it away even if it hasn't been copied forward.

“We need not just formats that will last a long time, we need to keep the material in conversation, in use so that people will continue to love it and keep it going.”

Our obituary

We don’t know when humanity will end. It could be soon, with climate change, nuclear war, or another pandemic. Perhaps we will survive, eventually spreading to distant stars.

When we do, we may come across a golden disc floating through space, carrying a message from our past. Or, possibly, someone else might come across it.

“There were two audiences we designed for - one was the extraterrestrial audience, and that was the one that I was most concerned with, and the other was the message to ourselves,” Jon Lomberg, NASA's design director for the Golden Record, recalled.

“In a sense, it was a message to ourselves, as well as a message to extraterrestrials saying this is a snapshot that one group of people thought would be a good capture of the Earth at this point in time.”

While destined for the stars aboard the Voyager spacecraft - which are the furthest man-made objects from our planet - the team had to contend with very terrestrial challenges.

First, there was copyright, with some songs not allowed on the golden vinyl.

Then there were issues with nudity. The earlier Pioneer plaque, which included a line drawing of a naked man and woman, drew criticism from the more prudish for showing a penis, and from those on the other end of the spectrum for the censorship of female genitalia.

Lomberg’s anatomically correct drawings of a man and woman were not included on the Golden Record.

Another image that was not included was that of an atomic bomb or mushroom cloud, despite the pervading threat of nuclear holocaust when the vessels launched in 1977. “Carl [Sagan] didn't want it to seem like a threat,” Lomberg said. “He didn't want anything on record that could seem like ‘if you mess with us, this is what we could do.’ He wanted to greet the cosmos with open arms.”

The discs contain music from around the world (which is available online for those curious), as well as different languages, all of which will last an incomprehensibly long time. The side facing outwards is covered by a box that “lasts about a billion years, and then it's about another billion years to erode the outward facing side,” Lomberg said. “The inward-facing side lasts a lot longer - they think up into the trillions of years."

Should it be found by another species billions upon billions of years from now, it will be our last message to the cosmos. And, by dint of data storage limitations and intentional curation, it will be an imperfect one.

But Lomberg is happy with that: “In a sense, this is our obituary; let's be remembered for Mozart, not Hitler. We'll likely be done in by our own flaws, our own shortcomings may well destroy us, and that's punishment enough. But that wasn't the whole story of us.”


Next-Gen Networks Supplement

Connecting the world

INSIDE

Building light into chips > Can we use photons for compute and networking?

How peering went global > DE-CIX’s new CEO reflects on the growth of interconnection

A second look > Ending the leap second and saving time from itself


The networking challenge

You know the story. The amount of data the world produces is growing exponentially. More importantly, the data is created from more sources than ever before, and then has to travel to yet more end points than ever.

Gotta connect them all

The story of how the data center sector linked up cannot be told without looking at DE-CIX (p24).

We talk to the CEO of the German Internet exchange company about how it became one of the key lynchpins of our modern world.

“When I’m asked 'what's the difference between DE-CIX and Equinix?' I say 'we have more data centers,'" CEO Ivo Ivanov told us.

It doesn't actually own any of those data centers, of course. "We are in more than 700 facilities around the globe," he said. "Including Equinix.”

Looking ahead, it represents an opportunity for an open peering network, where these networks are not controlled by a single company.

This could have a profound impact on the next iteration of the Internet, especially as Edge comes to the fore.

Let there be light

Over fiber, data travels at the speed of light. Then it reaches the data center.

We're used to compute being in the realm of electrons, but that means heat and latency.

Lightmatter hopes to use photons for computing and interconnection, bringing light further into the data center (p28).

That could mean processing at the speed of light, just in time for an explosion in artificial intelligence workloads.

“We have about a 42x improvement in latency, because the processing is happening at the speed of light," CEO Nick Harris said.

"You're doing multiplication and addition as light flies through the chip."

Ain't got time for that

There are 31,536,000 seconds in a year. It takes just one of them to break everything.

Since 1972, the world has added leap seconds to UTC every few years in an effort to bridge the gap between atomic clock-based time and the time derived from the Sun's position as our spin decelerates.

This may not sound like a big deal, but every time it happens, it is a calamity for networks. Computer systems that rely on a precise and identical time cannot handle the change, crashing spectacularly.

Companies like Google and Meta have come up with workarounds, smearing a second over hours. This is a step forward, but still carries a great risk.

That danger is only growing. The Earth's spin is no longer slowing; in fact, it is speeding up.

This change means that we will soon have to do a world first and roll out the negative leap second.

With systems crashing even with a time addition that we're used to, how will they react to such an event?

Companies don't want to find out, so they suggest killing off leap seconds entirely (p31).
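The smearing workaround described above can be sketched in a few lines. This is a minimal illustration, assuming a simple linear smear spread evenly across a 24-hour window. The function name, its parameters, and the window length are assumptions made for this example, not a description of how Google's or Meta's production systems work.

```python
def smear_offset(elapsed_s: float,
                 window_s: float = 24 * 3600,
                 leap_s: float = 1.0) -> float:
    """Return how much of the leap second has been absorbed so far.

    elapsed_s: seconds since the (assumed) smear window opened.
    window_s:  length of the smear window; 24 hours is an assumption.
    leap_s:    +1.0 for an added leap second, -1.0 for a negative one.
    """
    if elapsed_s <= 0:
        # The window has not opened yet: nothing absorbed.
        return 0.0
    if elapsed_s >= window_s:
        # The window has closed: the whole leap second is absorbed.
        return leap_s
    # Inside the window: absorb the leap second at a constant rate.
    return leap_s * elapsed_s / window_s
```

Under these assumptions, a clock halfway through the window has absorbed half a second, and each smeared second differs from a true one by roughly 11.6 parts per million. Passing `leap_s=-1.0` models a negative leap second in the same way, with the clock running slightly fast instead of slightly slow.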


Contents

24. How peering went global
Changes in the world’s networks turned Germany’s Internet peering platform into a global phenomenon

28. Building light into chips
The AI revolution needs so much processing power, could it be time to bring in a new generation of chips that combine photonics with conventional silicon?

31. A second look
Hyperscalers want to end the leap second, as things start to turn negative


How peering went global

Peter Judge, Executive Editor

If your business wants a connection in Africa, India, or the Far East, you might find yourself looking at a platform originally founded as a very local service handling Internet traffic in Germany.

The story of how DE-CIX changed from a single-country Internet exchange to a global interconnection player is the story of big changes in networking - and in the way society consumes those networks.

Starting in a post office

The Internet was created in the United States. By the early 1990s, it had grown internationally, linking networks round the world. But all peering still took place in the United States, so when two Internet users on different networks communicated, all their traffic still had to go through the US.

This applied in every country in the world, creating heavy delays, and massive network costs in days when international bandwidth was much more limited and expensive than it is today.

Two users connecting in London or Frankfurt had to effectively communicate over a two-way link to the only Internet exchange points, with all their traffic going to the US and back, adding massive delays and telecoms costs.

Local groups could see an answer. In late 1994, a group of five British Internet service providers (ISPs) set up the London Internet Exchange (LINX), using a Cisco Catalyst switch with eight 10Mbps ports in London’s Docklands.

In 1995, Germany followed suit swiftly, with its own Internet exchange point (IXP), the Deutsche Commercial Internet Exchange (DE-CIX).

Internet exchanges in all countries have grown massively: DE-CIX, for instance, now connects more than 3,000 networks.

“In 1995, DE-CIX was established as a pure peering point, a fabric operator,” says Ivo Ivanov, DE-CIX’s CEO.

Three ISPs launched the German IXP in the back room of a post office in Frankfurt, and expanded into an Interxion data center, becoming one of the largest Internet exchanges in Europe.

“Over time we grew in Frankfurt, and we added Hamburg and Munich,” he says. “In 2007, Telegeography said we were a new telecommunications capital of Europe, because of the huge concentration of traffic between the Western and Eastern hemispheres.”