CUSTOMIZATION IS OUR STANDARD.

MILLIONS OF CONFIGURABLE RACKS AVAILABLE IN TWO WEEKS OR LESS

News

Meta madness, Power takes power, and Equinix heats up

Data centers’ Buddy

The future of Loudoun, with Buddy Rizer - the man behind the rise of Data Center Alley

Digital Realty’s next move

Building Digital Dulles, a gigawatt campus

The newcomers

PowerHouse and CorScale on developing for hyperscalers

NTT GDC’s moment

The Japanese giant is ready to take on the US. Can it compete in the big leagues?

The Queen of Prince William

How PW’s Digital Gateway came to be, from the lady behind it all

There’s something about Maryland

Touring Quantum Loophole’s giant data center campus with CEO Josh Snowhorn

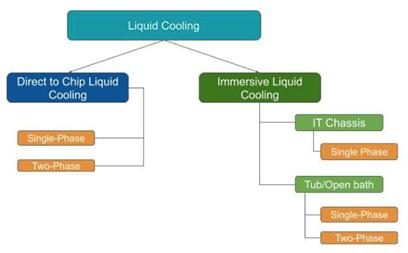

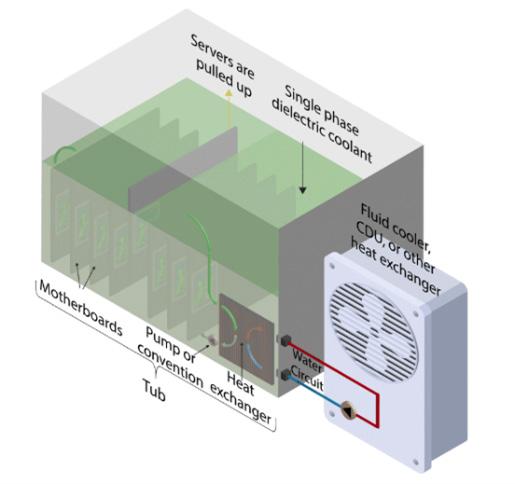

The cooling supplement

Over cooling, liquid breakdown, and plant-based oils

Australia’s great migration

Moving away from the East Coast, and building data centers out west

The Awards winners

We dive into the winners of the 2022 DCD Awards, highlighting who won the industry’s biggest competition

Exploring immersion cooling

What are Dug McCloud and Microsoft up to? We take a deep dive

Asia explores the multi-cloud

And an intro to Hashicorp

5G in Africa

Why the rollout is so slow, and what comes next

Op-ed: Social media drama

Meta and Twitter have ripped up their data center plans. What does that mean for the industry?

What happens when the world's data center hotspot runs out of power and land?

Last month, armed with a recorder, camera, and a drone, Dan Swinhoe and I toured Virginia and Maryland, in search of an answer to that simple question: what's next for Loudoun and Virginia?

Our Buddy

The success of Loudoun as the data center capital is thanks to the work of hundreds, as well as some fortunate events in history. But few can claim more credit in its rise than Buddy Rizer, the county's economic director.

We caught up with Rizer to understand that story, and find out how Loudoun is recovering from Dominion's surprise power crisis.

Start big

Our journey to understand the future of Virginia begins with Digital Realty.

We tour one of their massive million-square-foot data halls and then head to Digital Dulles, an ambitious plan to build a gigawatt campus next to an airport.

The company still has plenty of runway for growth, but warned that there is no more land left in Loudoun.

The newcomers

Next, we travel to the construction sites of PowerHouse and CorScale, the new kids on the block.

Backed by large funds, and with a history in real estate, the two companies hope to cash in on the data center goldrush by building powered shells for the hyperscalers.

The challenger

We then talk to NTT Global Data Centers, touring one of its many Loudoun sites.

After buying up a number of regional contenders like RagingWire and leaving them mostly independent, NTT has pieced them together as a global giant.

Now, the company is ready to spend billions to try and become one of the biggest data center companies in the world.

Its next move? Build out in Prince William County.

The dealmaker

Over in PWC, we talk to the lady who created the PW Digital Gateway, a massive land sale to data center operators that could reshape the Virginia data center landscape.

Mary Ann Ghadban never wanted to sell, and was settling into retired life - until a data center and high voltage power lines came along. So she took control, and decided to leave on her own terms.

The mega campus

Finally, we leave Virginia for nearby Maryland to understand Quantum Loophole's plans to build a giant campus for data centers to build on.

The amount of electricity Buddy Rizer expects Loudoun data centers to consume

Meet the team

Editor-in-Chief

Sebastian Moss @SebMoss

Executive Editor

Peter Judge @Judgecorp

News Editor

Dan Swinhoe @DanSwinhoe

Telecoms Editor

Paul Lipscombe

Reporter

Georgia Butler

Partner Content Editor

Claire Fletcher

Head of Partner Content

Graeme Burton @graemeburton

SEA Correspondent

Paul Mah @PaulMah

Brazil Correspondent

Tatiane Aquim @DCDFocuspt

Designer

Eleni Zevgaridou

Head of Sales

Erica Baeta

Conference Director, Global

Rebecca Davison

Conference Director, NAM

Kisandka Moses

Channel Manager

Alex Dickins

Channel Manager

Emma Brooks

Channel Manager

Gabriella Gillett-Perez

Chief Marketing Officer

Dan Loosemore

Head Office

DatacenterDynamics

22 York Buildings, John Adam Street, London, WC2N 6JU

permission should be directed to the editorial team at editorial@datacenterdynamics.com. Any views or opinions expressed do not necessarily represent the views or opinions of Data Centre Dynamics Limited or its affiliates. Disclaimer of liability: Whilst every effort has been made to ensure the quality and accuracy of the information contained in this publication at the time of going to press, Data Centre Dynamics Limited and its affiliates assume no responsibility as to the accuracy or completeness of and, to the extent permitted by law, shall not be liable for any errors or omissions or any loss, damage or expense incurred by reliance on information or any statement contained in this publication. Advertisers are solely responsible for the content of the advertising material which they submit to us and for ensuring that the material complies with applicable laws. Data Centre Dynamics Limited and its affiliates are not responsible for any error, omission or material. Inclusion of any advertisement is not intended to endorse any views expressed, nor products or services offered, nor the organisations sponsoring the advertisement.

The landscape of Ashburn is dotted with cranes; construction never stops in DC Alley

Meta is reviewing a number of under-construction data center projects around the world as part of a drastic design change for AI workloads.

The Facebook-owner in December laid off its primary contractor on two data center projects in Denmark, ending development on the Odense expansion.

Now, DCD understands, it is reworking some of its 11 under-development projects for new designs, which themselves are still under development - with the first such pause in Temple, Texas.

This may include the cancelation of contracts with contractors for existing designs, but our sources note that Odense is the only one where a new development is currently not planned. The others are being “rescoped,” which will likely impact their construction timelines, and require new contracts (and potentially approval by local authorities), but are still expected to ultimately end in new data centers.

“Supporting AI workloads at scale requires a different type of data center than those built to support our regular online services,” Meta’s Nordics comms manager Peter Münster told DCD when the Odense expansion was canceled.

“This is why we are focusing our efforts on building a new generation of data centers.”

When asked why the Odense site couldn’t be retooled for the new designs, Münster said: “We are focused on building AI capacity and as of now, this site does not fit our current needs.”

Those facilities will be liquid cooled, the company said at the Open Compute Summit in October.

At the time, it appeared that the shift would be gradual, but the company now seems to be making a drastic switch. The Denmark decision, at least, appears to have been made on quite short notice - for example, the contractor for the Odense data center was only brought in this August.

Odense will still see the completion of a smaller expansion that was well underway. It is believed that data centers that are near completion on the older design will still be finished as is.

Meta last month announced it would lay off 11,000 employees, some 13 percent of its entire workforce, amid worsening economic conditions, impacts to its business model from Apple, and an as-yet-unsuccessful pivot to the metaverse.

bit.ly/Metastasized

“These [bills] are corporate cronyism at its finest,” Michigan Democratic Representative Yousef Rabhi said of two proposed bills.

The company has received a $5.2 million tax bill from Wasco County for the facility in The Dalles which it built in 2006, which has now expired.

Datagrid bought a 43-hectare site in January 2022. The company aims to build up to ten 6,500 sq m (70,000 sq ft) 10MW modules totaling 65,000 sq m.

Governments around the world have agreed to put an end to leap seconds, which are added to coordinated universal time (UTC) to keep it aligned with astronomical time (UT1). 27 such leap seconds have been added since the practice began.

The companies said the acquisition will allow Power Control and Legrand to help customers ensure that they have the right UPS and other mission critical power solutions in place to meet potential supply volatility over the coming months. “We want to develop our presence in the UK,” Legrand’s CEO said.

Amazon Web Services (AWS) said that it plans to keep hiring next year, after the broader company laid off more than 10,000 people. AWS implemented an executive hiring freeze earlier this year, and laid off contractors, as well as workers at Luna cloud gaming.

Equinix has promised to “adjust the thermostat,” increasing the temperature in its data centers to reduce energy spent in cooling systems.

The giant colocation company has promised to shift temperatures closer to 27°C (80°F) across its global data center fleet, which will lead to less energy wasted in unnecessary data center cooling. This is an unusual move, as colocation providers often operate at lower temperatures than necessary to avoid upsetting the customers who own the equipment in their facilities, and fear that overheating may damage it.

Equinix plans to allay these fears by applying the increased temperatures gradually over several years, explaining that the announcement will have no immediate impact on existing customers. Customers will be notified when the thermostat is going up at the site where their equipment is hosted.

A temperature of 27°C has been recommended by industry bodies for many years and is approved by hardware manufacturers. It is also used by hyperscale cloud companies that successfully run their equipment in their own facilities at even higher temperatures. However, colocation customers and enterprise data centers have often continued to use temperatures that industry standards bodies regard as unnecessarily low.

Equinix explains that increased temperatures will result in less energy used in cooling, and will start the process to move gradually: “Starting immediately, Equinix will begin to define a multi-year global roadmap for thermal operations within its data centers aimed at achieving significantly more efficient cooling and decreased carbon impacts,” says the press release.

In its announcement, Equinix points out that increasing temperatures will allow its customers to reduce the Scope 3 carbon emissions associated with their data center operations. Scope 3 emissions are those from a company’s supply chain, and are important - but are proving difficult to reduce.

Equinix has lined up support from analysts and hardware vendors.

“Most data centers operate within the restrictive temperature and humidity bands, resulting in environments that are unnecessarily cooler than required,” says a press release quote from Rob Brothers, program vice president, data center services, and analyst at IDC.

He added that Equinix wants to “change the way we think about operating temperatures within data center environments”, saying: “With this initiative, Equinix will play a key role in driving change in the industry and help shape the overall sustainability story we all need to participate in.”

In response to a question from DCD, Equinix said that: “There is no immediate impact on our general client base, as we expect this change to take place over several years. Equinix will work to ensure all clients receive ample notification of the planned change to their specific deployment site.”

bit.ly/HotDataCenterNews

Digital Realty CEO Bill Stein has been terminated from his role as CEO, effective immediately.

The colo giant announced today that its board of directors has appointed current president and chief financial officer, Andrew P. Power, as its CEO and to the board of directors, effective immediately.

In an SEC filing on the change, Digital said the board “approved the termination of A. William Stein as Chief Executive Officer of the company without cause, effective immediately” on December 13. No reason for the change was given, but it noted Stein will receive around $15 million in cash and various other separation payments and benefits.

According to another SEC filing from August 2021, Stein’s employment with the company was changed to “automatically be extended each year for successive one-year periods until either the employer or Mr. Stein provides 60 days written notice of non-extension prior to the expiration of the then-current term.”

Digital said in a statement: “Bill was explicitly terminated ‘without cause’ pursuant to his employment contract. This is different from being terminated for cause.”

bit.ly/PowerTakesPower

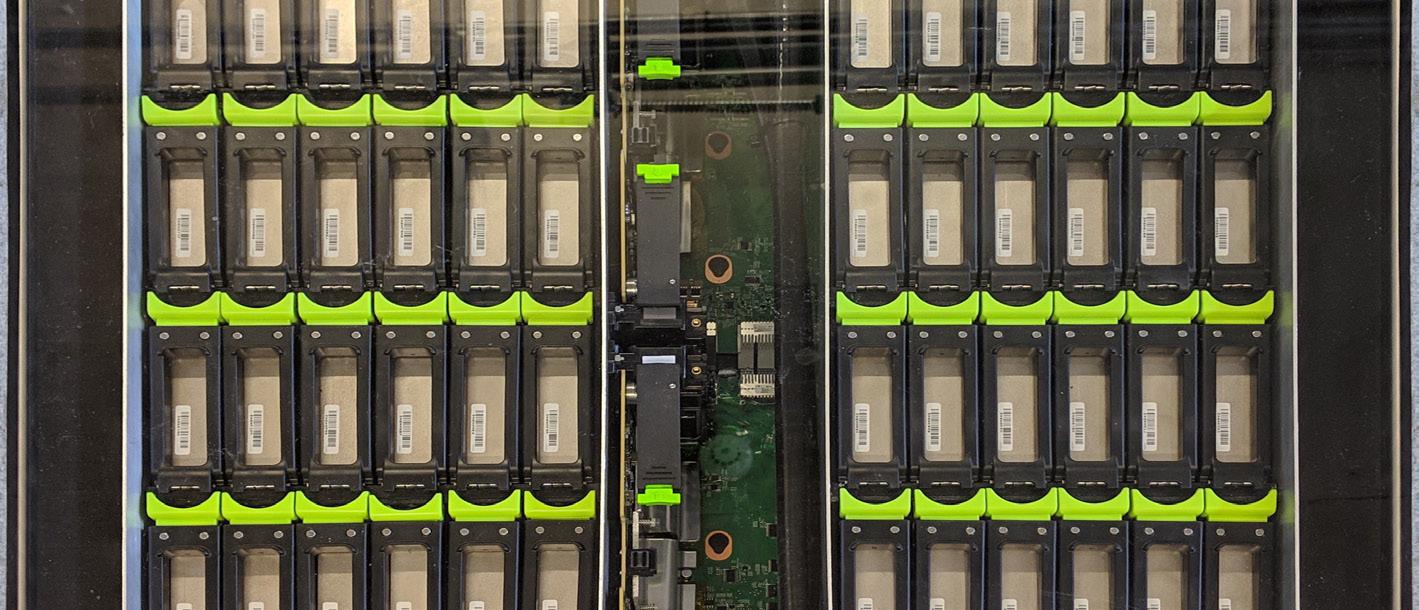

Immersion cooling specialist Iceotope and social media company Meta have demonstrated that immersion cooling can be safely used with hard drive storage - by re-engineering an air-cooled storage system to be cooled by liquid.

The study found the liquid-cooled version of the system had a more uniform temperature, and the power required to cool the system was reduced to less than five percent of the system’s total power consumption. The silent operation of the system also protected the hard drive from acoustic vibrations which can be an issue for air-cooled hard drives.

The test took a standard commercial air-cooled, high-density storage system that held seventy-two hard drives in a 40U rack, along with two single socket nodes, two SAS expander cards, a NIC, and a power distribution board, and re-engineered it for single-phase immersion cooling.

The test was important because hard drives, with capacities up to 20TB, currently provide 90 percent of the storage in data centers (according to research by Cybersecurity Ventures). While increasing power densities are driving data centers to consider immersion cooling, hard drives have normally been excluded for fear that they might be incompatible with the technique.

According to Iceotope, the test found that hard drive systems in a rack form factor “turned out to be an ideal fit for precision immersion cooling technology.” One reason for this seems to be a change in hard drive engineering. While hard drives have normally been sealed to prevent the ingress of dust, the arrival of helium-filled hard drives means that such drives are now hermetically sealed, making them compatible with immersion cooling.

To carry out the test, Iceotope and Meta added an Iceotope precision immersion liquid cooling system, immersing the drives in dielectric fluid and fitting a dedicated dielectric loop and a liquid-to-liquid heat exchanger and pump.

Facebook-owner Meta then measured temperature variation across the hard drives and cooling pump power in the air-cooled and liquid-cooled systems.

The results showed the variance in temperature between all 72 HDDs was just 3°C, regardless of their location inside the rack. The cooling system released its heat to a secondary water circuit, and the drives operated reliably with rack water inlet temperatures up to 40°C.

On top of that, the system was efficient, with cooling power at less than five percent of the total power consumption. And the companies assert that liquid cooling will mitigate vibrations that have been known to cause damage or failure of hard drives.

Chassis immersion might seem an extreme option, but Iceotope argues that it is less invasive than other forms of liquid cooling such as cold plates, tank immersion, or two-phase immersion, and allows user access for servicing and the ability to hot-swap drives.

bit.ly/ColdColdStorage

A fire broke out at a QTS data center in New Jersey in the early hours of Wednesday, November 23.

The fire was reported at 2:45 am and extinguished by 5:00 am, with no casualties. However, the local fire department reports the fire was extinguished with heavy flows of water, and extra care had to be taken because of flammable building materials stored on site for an extension to the facility.

“Early Wednesday morning, authorities responded to a fire on a concrete and steel structure under construction adjacent to QTS’ Piscataway data center,” QTS said. “The local fire department... fully extinguished the fire shortly after arrival.”

QTS has been building a two-story, 90,000 sq ft extension to a data center on the site. The fire broke out in this new construction, and did not spread to the adjacent operational data center, which was unscathed.

The spokesperson explained: “QTS determined that several pallets of roofing material stored on the roof for future installation caught fire. The cause has not been determined. No injuries or customer disruption was reported. The operational data center adjacent to the construction site was not impacted.”

QTS bought the data center site from DuPont Fabros in 2016. The 38-acre campus already holds two facilities, as well as the new building under construction.

bit.ly/RaiseTheQTSRoof

Being involved in a project as early as concept design stage allows us to manage and coordinate the utility interaction and significantly reduce the design and procurement timeframes.

Engineering & Design

Our Engineering & Design teams are engaged with Distribution Network Operators in the leading Data Centre Markets

High Voltage

We have established ourselves as a leader for Engineering, Procurement and Construction of energy solution projects.

Electrical & Mechanical

Our Electrical and Mechanical teams have extensive Project delivery experience having delivered significant projects globally.

hmvengineering.com

French data center engineering company APL is proposing a novel solution to data center location: lights-out data centers in newly-constructed underground caverns.

Eco-Caverne, designed by Swiss-based underground construction startup Eccus, is based on new underground space excavated 30m under existing terrain. APL says these underground facilities can be built up to 20 percent cheaper than building above-ground, and have benefits including providing waste heat to warm nearby buildings.

Numerous data centers have been built in underground caverns, claiming advantages in cooling and resilience.

Eccus intends to take these advantages to urban areas, adding further benefit: the Eco-Caverne will be easy to access, and create available space in completely built-up areas.

The Eco-Caverne is a waterproof cylinder 30m underground, up to 150m long. The chambers will be built in one of three standard diameters: “Vega” approximately 10m, “Rigel” approximately 13m, and “Hadar” around 15m. Each chamber will be provided with a 3m x 6.5m lift with a capacity of 12 tonnes at one end, and an emergency exit at the other.

The chambers will also have ventilation, security systems, and a fire detection and prevention system.

Eccus says it can build underground, using the same techniques as underground road and railway tunnels, and deliver secure underground space quickly compared with building above ground, and with other benefits. The Eco-Cavernes can be built under existing buildings, and provide space where land is otherwise unavailable.

APL is applying this idea to data centers, which it plans to offer in France and Switzerland: “This new solution makes it possible for a company to create or complete its computer hosting area on land that has already been built or in a saturated zone,” says APL’s announcement.

The data center builder is making much of the heat advantages, proposing the facilities’ waste heat can be used directly to warm buildings above. APL says a 2,000 sqm (21,500 sq ft) data center can supply 22GWh of heat energy per year, enough for 2,000 homes.

The facilities can also be run without day-to-day staff, allowing the operator to reduce oxygen levels to 13 percent, cutting fire risks.

bit.ly/DataDownUnder

A French startup is proposing to float data centers on urban rivers.

Denv-R plans to launch facilities cooled by river water, starting with a test facility in Nantes next year. The company’s two founders, based at the IMT Atlantique engineering school in Nantes, say this will reduce energy consumption and CO2 emissions.

The company is planning to float a demonstration system on the Loire river by the Quai Wilson island in Nantes, in June 2023.

There is only limited information on Denv-R’s website, but according to an article on 20 Minutes, the idea sounds similar to the barges pioneered by the US company Nautilus, which has a floating facility in California, alongside one in development in Ireland, and a land-based data center in Maine.

Denv-R says its system will be smaller than Nautilus', making it more suitable for deployment on urban rivers. It also circulates water passively, without the need for pumps.

Based on the render provided by IMT Atlantique, the facilities appear to follow a catamaran-like design, with two hulls next to each other.

bit.ly/DataDownUnder

Thales Alenia Space will lead the European Commission’s ASCEND feasibility study for data centers in orbit.

‘Advanced Space Cloud for European Net zero emission and Data sovereignty’ is part of the EU’s Horizon Europe research program, and aims to see if data centers in space would lead to fewer emissions than those on Earth.

The data centers would rely on solar power plants generating several hundred megawatts, which would also be in space.

They would then link to the Earth via high-throughput optical communications.

The first stage of the study sets out to answer a critical question - whether the emissions created by producing and launching the space data centers would be lower than those generated by ground-based ones.

Then it will study whether it is possible to develop the necessary launch solution and to ensure the deployment and operability of these spaceborne data centers using robotic assistance technologies.

bit.ly/SpaceToExpand

From designing your power solutions, through installation and commissioning, our rainmakers are on call 24/7. They’re authorized to contact any data center expert to help you with anything related to protecting your data, now and in the future. Our rainmakers are the supply chain who keep your confidence on.

GPU maker Nvidia and cloud giant Microsoft have entered into a multi-year collaboration to build “one of the most powerful AI supercomputers in the world.”

The cloud-based system will use Nvidia GPUs and networking gear, as well as Nvidia’s AI software stack.

Specifics were not disclosed, but Nvidia said that the deal will add tens of thousands of Nvidia A100 and H100 GPUs, as well as Quantum-2 400Gb/s InfiniBand networking gear.

“As part of the collaboration, Nvidia will utilize Azure’s scalable virtual machine instances to research and further accelerate advances in generative AI, a rapidly emerging area of AI in which foundational models like Megatron Turing NLG 530B are the basis for unsupervised, self-learning algorithms to create new text, code, digital images, video or audio,” Nvidia said.

When the system comes online, customers will be able to deploy thousands of GPUs in a single cluster to train large language models and complex recommender systems, run generative AI models, and more. A date was not disclosed for when the supercomputer is expected to launch, but it will likely be installed in phases.

bit.ly/BringBackSiliconGraphics

Microsoft has acquired UK-based Lumenisity Limited, a manufacturer of hollow core fiber (HCF) solutions.

A type of optical fiber technology, HCF features an air-filled center channel that is surrounded by a ring of glass tubes, akin to a honeycomb pattern. The design allows for higher capacity with minimized chromatic dispersion.

Though HCF is not a new technology, interest in it has been growing as its performance and reliability have improved.

Lumenisity was formed in 2017 as a spinoff from the Optoelectronics Research Centre (ORC) at the University of Southampton to commercialize its HCF technologies. The company had raised £12.5 million; euNetworks was a customer, while BT had conducted trials with the fiber firm. It recently opened a 40,000 sq ft HCF manufacturing facility in Romsey, UK.

Microsoft said the acquisition will expand its ability to ‘further optimize its global cloud infrastructure’ and serve Microsoft’s Cloud Platform and Services customers with strict latency and security requirements. Terms of the deal were not shared.

Lumenisity’s HCF solutions use a proprietary design where light propagates in an air core, which it claims has ‘significant advantages’ over traditional cable built with a solid core of glass.

“Organizations within the healthcare, financial services, manufacturing, retail, and government sectors could see significant benefit from HCF solutions as they rely on networks and data centers that require high-speed transactions, enhanced security, increased bandwidth, and high-capacity communications,” Microsoft said of the acquisition.

bit.ly/AHollowVictory

The London Stock Exchange announced that Azure will be its preferred cloud provider... after Microsoft invested in it. The company has won similar contracts by investing in Cruise, OpenAI, and others.

Microsoft is believed to have picked up DPU firm Fungible.

Data processing units (DPUs) are a relatively new class of programmable processor that manages how data moves through a data center, offloading networking tasks and helping optimize application performance.

Fungible was founded in 2015 as the first company to pitch such a product to the cloud, and managed to raise over $370 million.

But the company, co-founded by the founder of Juniper Networks, struggled as larger players entered the market, including Nvidia, Intel, and AMD. Lightbits, Liqid, and GigaIO also took market share.

This August, the company laid off staff as its sales slowed and its cash piles dwindled. SemiAnalysis reports that the company initially tried to sell itself to Meta, but failed. It was in talks with Microsoft for a custom silicon deal, but as its options narrowed, it sold to the company at a fire-sale price.

Microsoft is believed to have no interest in selling Fungible’s kit to external customers.

bit.ly/AFungibleToken

Pictured: Joanna Stiles, Business Manager for Data Centres at Meesons AI, preparing to present winners Vantage at the DCD awards

Proud sponsors of the Middle East and Africa Data Centre Development Award at the 2022 DCD Awards.

Keeping Data Centres safe and secure with layered physical security.

Our approach to entry security at Meesons focuses on better-rated, better-tested products. As an international physical security provider, we use our knowledge of standards, certifications, policies and 3rd party accreditations to support public safety demands worldwide. We firmly understand the implications of not protecting data suitably, which is why we are committed to securing the data and assets within your building with the following:

High-security portals

Speed gates

Full-height turnstiles

HVM

Find us at www.meesons.com

The making of Loudoun County as the heart of the data center industry wasn’t always a foregone conclusion.

It had to survive the dot-com bubble bursting, build its credibility in a cautious industry, and find ways to keep on growing, no matter what. Now, its position is secure, with the Virginia county cementing its place as the capital of a demanding industry.

But Loudoun, and Ashburn within it, face new headwinds as power and land demands outstrip supplies, and some locals push back against the preponderance of one industry.

To understand how Loudoun will adapt in the current climate, and what it means for the future of data centers in the country, we sat down with the man who made it possible - Buddy Rizer, Loudoun’s executive director for economic development.

“When it all started here, it came by accident,” he explained. “The Internet exchange was in Tyson's Corner, but the federal government realized that you could just drive a truck into that building, and moved it out here.”

That was followed by AOL and WorldCom, and some of the first dedicated data centers in the country.

It was the height of the dot-com bubble, and "they were all putting fiber in the ground. Companies like PSINet and UUNET were just throwing money around and valuations were just stupid."

Then came the crash. "That kind of blew up," Rizer said, with PSINet going bankrupt in 2001, and UUNET owner WorldCom filing for what was then the largest Chapter 11 bankruptcy protection in history a year later due to widespread fraud.

Rizer was brought on to the economic development team in 2007 with a simple aim: Increase county revenues. "81 percent of our tax revenue was coming from residences," he recalled. "And, as we saw during the housing bubble a year later, that was not good."

Looking out his window, Rizer pointed to three data centers built during the first boom. "They sat empty for years, they never got filled, until I was able to convince Digital Realty to move in."

The early companies may have left, but their infrastructure was all there - available for a new crop of corporations to buy up for cheap and build more sustainable businesses on top of.

"That was purposeful, that was something that we saw as an opportunity. When I hear people say, ‘Loudoun’s success was a lightning strike’… it was purposeful, we looked at that as an opportunity and I had to be proactive in building that," Rizer said.

“If we're at 28 million square feet in Loudoun today, we could definitely exceed 40 million at total build-out"

"I was going to every show, we were trying to get deals done," he said, sitting in front of a stuffed gorilla wearing hundreds of lanyards from various events he visited. "With CyrusOne, wherever [then-CEO] Kevin Timmons was speaking at a conference, I was going to that conference and sitting in the front row, just so he could see me, and so I could talk to him after. He always said, 'I think we're too late to get in,' and all these things, but then when they came in, they were incredibly successful.”

Proudly surveying the data center landscape from his window, he continued: "We worked very hard to build this. And it was not just us, it was landowners and Dominion," the power utility.

But after decades of building out the infrastructure that made the unprecedented data center expansion possible, Dominion this summer shocked the industry with the surprise announcement that it could no longer guarantee new power connections for four years.

"To wake up one day and to find out that there's now no new power till '26? I was flabbergasted. I really couldn't believe that we ended up there,” Rizer said, admitting that despite their close ties he found out at the same time as everybody else.

The challenge was understandable, he said - "there's no case study to point to given the power density we have here," he cautioned. "There were a lot of factors, there was the Covid growth, and the shift in 2017, when the hyperscalers came in, where their ramp up of power is much quicker. They bring it on like that,” he said, snapping his fingers. “That changed everything.”

Dominion’s announcement was a similar sudden snap. “No one's really been able to explain to me how we went from the idea of unlimited power that we always just assumed we had, to suddenly 'we don't know.'"

Beyond just the immediate impact on the data center sector, the sudden cessation of power and buildouts meant that the county’s tax revenue plans were - and still are - thrown into disarray.

"We had projected 20 percent year-over-year revenue growth because that's what we traditionally had," Rizer said. "Now we know that that's probably not going to be the case. So that does impact our county budget. When you're dealing with half a billion dollars or more, 20 percent of that is a big number.

“When we're trying to plan schools, roads, community services, parks, sheriffs, fire departments, and all of those things, having that unpredictability that's going to impact our budget process for the next three or four years is not ideal. And we were already into our budget planning when we found this out.”

But the Dominion delays, the scale of which we are still learning, represent just a temporary blip in the story of Loudoun, Rizer argues.

“It sucks for those companies that had already deployed investment into the county, and then now find out that they can't power the buildings that they built. But that hasn't paused any of the demand for the land. People are still trying to secure long runways so that when the power is here, they'll be able to really move pretty quickly. Nothing has slowed down the demand on our land.”

As it currently stands, the data center industry takes up around three percent of the land in Loudoun (with a higher concentration within Ashburn), and consumes around two gigawatts of power. At full-zoned buildout, “maybe it’s five percent of the landmass,” Rizer said, adding that older facilities will eventually be torn down and rebuilt taller and denser.

“If we're at 28 million square feet, we could definitely exceed 40 million at total build-out. That gets us to five gigawatts, and over a billion dollars of annual revenue.”

After years of building without limits, 2022 also saw the Board of Supervisors limit data center projects in some neighborhoods, particularly along Route 7. The new rules will also require data centers to adopt higher-quality building designs and tougher environmental rules depending on their proximity to housing.

“There's always going to be pushback when things start to get big,” Rizer said. “That's a natural part of the conversation. But there is a difference between process and policy - the process has been tough, I’m not gonna hide from that. But when you look at it from a policy standpoint, honestly, I don't find it overly restrictive.”

He believes the certainty of the new policy will be welcomed by the industry, while design standards are only for the worst offenders - most new builds already meet the requirements. Rizer said that while he welcomes more data centers, it’s important to set restrictions in some areas to build “unique communities where people want to be and also protect Western Loudoun County and protecting the farmland there. I don't see a scenario where we're going to go west of Route 15. I just don't know that that's what we need to do.”

He added: “There are places where we don't think there should be data centers. And I think that's okay. There's been a decade or more of unrestricted growth in the last year, and do you know how many data centers we've turned down? One. It's not like we've turned off the spigot.”

While they haven’t turned down that many data centers, the dwindling number of large tracts of land and skyrocketing land prices have driven companies to neighboring counties.

“It's not like if Prince William wins or Frederick wins a deal that we lose,” Rizer said. “We have limited resources and limited land, and now limited power. I would rather it come to the region than not at all. I'm competitive and I don't like losing, but if there’s a deal that they can't put here, I'd rather it go to Prince William."

The county receives around 31 percent of its tax revenue from data centers but, at some point, data centers will max out all the space made available to them, even if the industry stays strong for the foreseeable future.

“I think that the idea that data centers are going to go away anytime soon is probably science fiction,” he said. “Our internal research tells us that the demand for data centers outstrips supply through the next 20-30 years. And if it does start to deteriorate, I don’t think that it starts here.”

But with a limit to growth on the horizon, he hopes to use the money raised by data centers to help promote a more diverse economy. “Even the data center sector wouldn't believe that it makes sense to have all of your eggs in one basket,” he said.

“We're trying to build Life Science cluster, we're trying to build a cybersecurity industry, and take advantage of the Dulles Airport, and take advantage of the fact that we now have Metro,” he said, referencing the Silver Line rail extension connecting Loudoun to DC that took nearly two decades, but opened in the week of our conversation.

“There's really three things that have been total game changers for us - one was 60 years ago, when the airport came, the second was the tech infrastructure that was built here pre-bubble burst, and then I think Metro is going to be the third.”

The future of Loudoun, he hopes, will involve more data centers than ever before - but will rely on them less as new sectors sprout alongside it. “I don't feel like what we've done here in Loudoun County is the end of anything.”

Still, he is happy to reflect on how far the county has come from its dot-com bubble days. “It's so cool to be the biggest in the world at something, to be able to have this industry that has built what it has. There's something to be said for that.”

Sebastian Moss Editor-in-Chief


As one of the world’s largest data center companies, Digital Realty has an outsized presence in the data center hotspot of Virginia.

The company has some of the earliest buildings on data center alley, dating back nearly two decades, along with some of the largest, and is building a massive new campus right near Dulles Airport.

We visited a million-square-foot facility and toured its new construction site to understand Digital’s future in Virginia. “It's such a critical market, not only for Digital, but for the industry overall. It is the gold standard of availability zones on Earth,” company CTO Chris Sharp said.

The company operates around 600MW of IT load in Loudoun today, and is building a new ‘Digital Dulles’ campus that is planned to add another gigawatt on top. “What I think is interesting is we have almost 1,000 acres inside of the Loudoun County area, and 575 acres of that is left to develop,” Sharp said. “There's a lot of runway.”

Its involvement in the county dates back to Digital’s earliest days, when it acquired three data centers starting in 2005. It also built its own, and gained more facilities with the acquisition of DuPont Fabros.

“Once we started development of our campus, we just never stopped,” Rich Becher, design manager at Digital Realty, said. “When I was preparing for this interview, I learned that the first customer we put into that campus is still with us,” he added. “That was a happy thing to learn.”

The company currently has no plans to tear down its older facilities and rebuild them larger and denser. "They still serve their needs," Becher said. "Our customers are happy with them, and are staying in them."

He noted that the components and features of the data centers that age faster are modular, allowing for them to be replaced more frequently than the building itself.

"That modularity is what really allows those buildings to keep up with customers' evolution within them," Sharp added. "It is extremely tedious for these larger deployments to ever lift and shift."

Beyond the size and the number of stories, telling apart the older facilities from the new ones is easy: Just look for windows. “It's funny how that topic has evolved,” Becher said. “In the beginning, we wanted to make the building’s appearance comfortable for our customers - concrete so cars don't get in, and no windows so people don't get in.

“What we're seeing now, and not just in Virginia, is that [local governments] are pushing back on the appearance of the buildings, they don't want these blank wall buildings. So now they have glass on the outside, but you still can’t get in.”

The facility DCD toured, 'Building L' at Round Table Plaza, is built to the company's newest designs, several generations on from the older concrete Virginia sites.

At over one million square feet, it is a huge data center in its own right. "When we were building 'Building L,' one of the crazy things we considered was a moving walkway," Becher admitted. "It's so long that we went to a manufacturer to understand how they work, but ultimately never put it in."

We had to rely on our legs to tour the site, which has a utility power capacity of 120MW. It is the company's largest in the state, and its first customer moved in during October 2017.

"We put our last customer in that building in December of 2020," Becher said proudly. "I think we sold the building faster than we built it."

Developing a single building of that size was a learning moment for the company. “When it launched, we only had one freight elevator, and then we added another one because it was so busy with everybody moving in,” Becher said. “Now any building that gets close to that size will get two freight elevators.”

With Digital Dulles, its huge planned campus adjacent to the airport, Digital Realty is planning smaller individual buildings, but a larger overall footprint. “We have 14 buildings planned on Digital Dulles,” Becher said. “The biggest buildings are larger than half the size of Building L.”

The company hopes that the facilities will represent the next stage of Digital’s life in the data center capital. It also may be the company’s last major land deal in the vicinity. “Believe me, these large blocks of land - there are no more left,” Sharp said. “And, at the size we operate, it was getting upwards of a million to a million-and-a-half per acre inside of this area,” he added, noting that with Digital Dulles they were able to get the land for “around half of that.”

That fundamental land limit will eventually mean the end of the unperturbed growth in Loudoun (delayed somewhat by Dominion’s power issues, which will impact Digital Dulles to an unknown extent). But it doesn’t mean the end of Loudoun as a data center hub - far from it, Sharp argued.

“The largest cloud availability zones on Earth are in this market,” he said. “And what we're seeing now is the adjunct workload that is going to drive more demand over some period of time is artificial intelligence. We see this as being the next epicenter of artificial intelligence, because of the fact that some of the largest data oceans on Earth exist in this market. And you want to do analytics against that.”

Cloud providers don’t want to have fiber repeaters within the same availability zone, so that means operating within 2-12km of another data center to have a “contiguous” or parent/child setup. Sharp envisions high-density data centers for AI workloads close to facilities working on analytics, storage, and other tasks, all operating under a single availability zone.

That requires a lot of interconnection. “Once you build an epicenter, there's a lot of value both in how it's interconnected, and just the efficiencies from the amalgamation of infrastructure and the matchup of customers,” Sharp said.

At Digital Dulles, the meet-me-rooms are much larger than those at its existing sites, on a percentage basis, “because of the amount of physical fibers and conduits required to run it,” Sharp said, with those fibers connecting to its data centers as well as those of its rivals.

“ServiceFabric is absolutely everything,” he said, referencing the company’s global service orchestration platform. “Because we are open, and so we don't care if your workloads are in another competitor's data center or ours.”

Given the desire for every major company to have an IT presence in Virginia, and the preponderance of potential customers, it is unlike any other market. “There's just some uniqueness with other competitors, it’s more like coopetition,” Sharp said. “Quite frankly, there's more demand than we could ever meet.

“And we don't see that slowing down anytime soon.”


Virginia remains the main hub of data centers worldwide. But while the area looks increasingly full, new companies are still popping up looking to serve the hyperscalers’ insatiable demand for new capacity.

Two new debutantes, PowerHouse and CorScale, are set to launch new hyperscale facilities in Virginia in the near future.

Both are newly-founded by large, well-established real estate firms. Both are backed by large investment firms. And both are set to develop large amounts of new capacity dedicated to serving the big cloud providers.

DCD visited both companies’ maiden data center development sites – PowerHouse in Loudoun County’s Ashburn and CorScale’s outside Gainesville in Prince William County – and spoke to company executives about being the new kids on the block.

In the heart of Ashburn, PowerHouse is opening its account with a sizeable data center project. The company, founded by American Real Estate Partners (AREP) and backed by investment firm Harrison Street, is seeking to start with an 80MW facility.

“Like a lot of other companies, it was Covid that really pushed it,” says Luke Kipfer, vice president of data center development and construction at AREP/PowerHouse Data Centers. “AREP's portfolio was heavily in class A office space, so we've been actively diversifying. And our partnership with Harrison Street lets us go all in on the data center model.”

The company is planning developments on three sites across Northern Virginia. Six buildings are currently in planning or underway, totaling 2.1 million sq ft (195,100 sqm) and up to 338MW of critical power.

Sat on 10 acres and currently under construction, ABX-1 is set to be the first building completed. It will comprise one two-story 265,000 sq ft (25,000 sqm) building. Located at 21529 Beaumeade Circle in Ashburn, the first 15MW will launch in 2023; at full build-out at the end of the year, the facility will offer 45MW across six data halls. The site, which DCD visited in November 2022, is reportedly expandable up to 80MW.

Dan Swinhoe News Editor

Harrison Street and AREP acquired the site for $21.5 million in January 2021. The land, formerly home to a retail strip mall-type facility, was previously owned by Chirisa Investments, which bought the site in 2018 and had planned to build a 280,000 sq ft (26,000 sqm), 30MW data center.

DPR is the construction partner with PowerHouse on the facility. And despite being in one of the areas most affected by Dominion’s surprise capacity issues in Ashburn, Kipfer suggests the site will have enough power for the launch of the first phase and Dominion should hopefully have the issues sorted by the time the facility is reaching full capacity around 2026. An onsite substation is being developed on part of the project which will serve both ABX-1 and surrounding facilities.

On Sterling’s Pacific Boulevard, PowerHouse Pacific will comprise three three-story buildings totaling 24 data halls across 1.1 million sq ft (102,200 sqm). Construction is due to start in 2024 and complete in 2024/2025; the site will offer 265MW.

The Pacific campus was previously part of the former AOL headquarters and most recently used by Yahoo!. New parent Oath sold the 43.3-acre property in December for $136 million.

And, finally, the company is developing a 23-acre site on Arcola Boulevard in Arcola. PowerHouse Arcola will comprise two two-story buildings and 12 data halls across 614,300 sq ft (57,070 sqm). The buildings will offer 54MW and 66MW. Construction is due to begin in April 2024 and end in 2025, with the second building finished in 2026.

Kipfer said the sites aren’t yet pre-leased, but there is “strong interest” from clients and the company expects them to be leased soon.

Before joining AREP, Kipfer was regional director at Direct Line Global and director of construction at Markley Group in the Boston area.

“I came out here just to be more involved in some of these larger-scale projects,” he says.

“In Boston, it was more Edge deployments, smaller enterprise, higher ed, pharmaceutical. So they had some real requirements, but they were all a one-off build. Even though the numbers and scale is different, the projects here are almost less complex. With a lot of hyperscale builds, it's the same widget 100 times over.”

Kipfer said PowerHouse is an AREP-owned entity, with the projects operated in a joint-venture model with Harrison Street.

While Harrison Street is well-versed in data center investments, AREP doesn’t have a long tradition of developing data centers, and it’s flexible in what it will deliver.

“While AREP's new to data centers, they've been a developer in this area for 20 years,” says Kipfer. “We've got very deep contacts with brokers, with local utilities, all the players here that you have to know to make things happen. We're able to get a lot of sites before they come publicly to market, so we're able to get good sites and identify power.”

“We're not limited to one development model. We’re open to just about everything right now,” he adds.

“We’re developing relationships with hyperscalers and understanding their needs; as long as it's something that has market viability, it's something that we're interested in. These guys are just building so quickly, we're basically giving them a head start in their deployments.”

Prior to setting up PowerHouse, AREP acquired what is now known as Quantum Park in 2016 for a reported $212 million alongside hedge fund Davidson Kempner Capital Management from Verizon.

The park is the former UUNet/MCI Worldcom site that was a key connectivity hub dating to the early days of the Internet; Verizon had an existing data center there and Aligned has since developed on the site. Last year, DigitalBridge-backed Landmark Dividend acquired a portfolio of assets in the Quantum Park campus.

Harrison Street has a number of data centers under its ownership. January 2021 saw the company acquire the Pittock Block carrier hotel in Portland, Oregon, for $326 million alongside 1547 CSR, and in November 2021 it acquired CIM Group’s stake in four US data centers co-owned with 1547.

Harrison and 1547 have completed a number of data center deals together; the two companies previously acquired the Wells Building carrier hotel in Milwaukee, Wisconsin, for $7.25 million in 2020.

“Harrison are a knowledgeable data center firm, they've got a lot of experience that the team that it's great to work with, they know the market,” says Kipfer, who adds the company is looking to expand out of Northern Virginia with Harrison’s help.

On whether hyperscalers will continue to need outside developers, he says the combination of having the right sites and development teams, alongside the need for flexible capital deployment will mean there is always a need for outside developers.

“There's always going to be a certain need there. There seem to be a lot fewer individuals in the hyperscale enterprise teams that are really good at site development; a lot of them are focused on the nuts and bolts of a data center; the UPS, network methodology, things like that,” he says.

“Certain users have different priorities in terms of deployment of capital; some would rather lease it out and spread it out, and some want to own.

“And that's where we've been flexible; we'll do long-term leases, we'll do leases with option to buy, we're able to work with a lot of different users’ needs for how they are deploying their infrastructure.”

Like PowerHouse, CorScale is backed by a large and established real estate firm, this time in the shape of Patrinely Group. And it has the financial backing of real estate investment firm USAA Real Estate.

While not big names in the data center space, the two companies have the portfolio and capital to operate at scale, and have experience in the industry. Nic Bustamante, senior vice president at CorScale/Patrinely Group, tells DCD that the two companies have a history of working together and developing enterprise data centers – usually in the 1-5MW range, but in some cases up to 20MW – as part of office developments. One of the most recent examples is HPE’s new HQ in Houston, Texas.

“The company [Patrinely] had this experience building these smaller data centers, and for around five years considered getting into the hyperscale space. It is heavily diversified in real estate assets, and data centers are one of those real estate and asset classes that was interesting to them.”

Like PowerHouse, CorScale isn’t starting small. The company’s debut project is a 300MW campus in Gainesville, Prince William County. The campus, known as Gainesville Crossing, is located close to Manassas Battlefield and looks set to be joined by QTS and Compass once the two companies start development on the PW Gateway project.

After breaking ground in early 2022, the first 72MW phase is expected to come online around Q4 2022 and will consist of a single two-story building with eight data halls and office space totaling approximately 483,000 square feet (44,900 sqm). At full build-out, the 130-acre campus will comprise 2.3 million sq ft (213,700 sqm) over five two-story buildings and 306MW of utility power. A Dominion Energy on-site substation will deliver power.

The site was bought for $74.5 million from Buchanan Partners in August 2020, by real estate firm Wolff Co, on behalf of Patrinely.

“We didn't have to spend a ton of time and resources putting together the utility plan for that project, it was already relatively de-risked,” says Bustamante. “We have direct access to connectivity adjacent to the site, and the power plan for that area with Dominion is already really well vetted, so line of sight to power was really great at that site. It is closer to Loudoun and the core network market in Ashburn than something further south, and we thought it was kind of a no-brainer.”

Discussions with an end-user are ongoing and close to an agreement on a pre-lease for the first building. While CorScale and Bustamante declined to name a customer, DCD understands AWS is in talks to be their first customer.

Bustamante said the closing of the site acquisition occurred around a month before he joined the company. He previously worked on data centers at Apple, Google, Microsoft, and Rackspace; and has been joined at Patrinely by Stuart Levinsky, formerly of Iron Mountain Data Centers, Switch, CyrusOne, and Cincinnati Bell.

“I got to be CorScale employee number one, so I could handpick our team,” he says on what attracted him to the company. “It requires very little effort to get capital and get committee approval to deploy that capital, and I think that's a significant part of the execution; the visibility to property on a global basis, through our partner at USAA.”

Prior to its official launch, CorScale’s existence was revealed in a press release that said the nascent data center firm was signed up to use Ledger7860's carbon accounting package. Bustamante said the focus on green credentials is one of the ways the company is hoping to differentiate itself from other hyperscale-focused developers in the space and area.

“These guys are also very comfortable with and prefer Green development. So they've already had a number of LEED Platinum projects. I see a lot of hyperscale developers who prefer not to do that; the traditional hyperscale data centers don't seek LEED Platinum, Net Zero type of approach.”

Another differentiator, according to Bustamante, is the company’s ability to deliver complex projects quickly, which makes it a desirable partner.

“A lot of developers will say ‘I need perfectly level, square, rectangular properties.’ Our ability to execute on complexity was already pretty evident with the prior relationships.”

As well as the Gainesville Crossing site, the company is planning a second Virginia development in the Kincora area of Sterling in Loudoun County. On a 22-acre site, it plans a single 500,000 sq ft (46,450 sqm) building, consisting of eight 9MW data halls across three stories. Other projects are planned in California and outside London in the UK.

On the potential capacity issues Dominion has in Loudoun’s Ashburn, CorScale is confident it won’t be affected thanks to its building timeline.

“We weren't going to start construction until 2024 anyway, so we think that our initial connection date and service date may not end up being impacted depending on how they perform fixing things in Loudoun,” explains Bustamante. “You can expect us to do more in Virginia. I think we're pretty well positioned in that market to continue development there.”

Going forward, CorScale aims to stay focused on greenfield development with some brownfield sites that make sense. On acquiring existing facilities, the company is less keen.

“We've looked at a few acquisitions and generally said no because they look more like repositions or they're relatively distressed and not of the right scale,” says Bustamante. “We see a lot of capacity come on the market that isn’t bad, but it is not at the magnitude that we like. We can't take a 20MW data center and turn it into a 50MW data center too easily.”

While Loudoun is and will remain the epicenter of the world’s data center industry, things are changing. Quantum Loophole is looking to bring millions of square feet of new data center space to Maryland, while Prince William County is seeing huge amounts of development in a short space of time. But as the geography of the industry changes, the way facilities look and operate will likely have to change too.

CorScale’s Gainesville site gaining planning permission, combined with the roll-out of new transmission lines, were the driving reasons behind local landowner Mary Ann Ghadban gathering other locals together to sell the initial 800 acres that make up part of the new PW Digital Gateway project.

Bustamante tells DCD the fact QTS and Compass are investing so heavily in land adjacent to CorScale is ‘validation’ of their idea of building outside of the area’s traditional data center hubs. However, he says the sheer magnitude of those projects means development needs to be very carefully considered to ensure it meets energy and sustainability requirements that locals are happy with.

“I fear data center development that is unchecked. Data centers need to be comfortable coexisting wherever they go, in any market. We're successful because we work hand in hand with the municipality and user groups; and we shouldn't find ourselves at odds with the local community.

“What I see in the Gateway Project is a whole lot of people concerned about those things and more, but just the sheer magnitude and scale of it. Hopefully, the green spaces that they put in will separate them from those things, but development needs to be very considerate not just to the battlefield, but to the locals who are very concerned about it.”

In Loudoun and Ashburn, both Bustamante and Kipfer can foresee data centers getting taller, but also more aesthetic. Two and three stories are becoming more common, and DCD has heard rumors of an application for a five-story facility being submitted.

Over the summer Loudoun officials proposed new zoning rules for data centers that would also set out new environmental standards for building design and noise.

“A typical data center that is a big grey wall-to-wall box? I think that's pretty passé. And those days are probably behind us, particularly in Loudoun, and I see that becoming table stakes in PWC and other markets,” says Bustamante. “Those operators that design data centers that have long mechanical gantries and generators spread out across hundreds of acres, I think those users are going to have more problems obtaining consent to develop, and they're also going to continue to be viewed negatively.

“I think you've got to build a product that is Class A. People are going to be held to a higher standard [going forward], and I think that's only fair given the capital that's coming in.”

Kipfer agrees. “Ashburn is never going to go away,” he adds. “This is a desirable place to be in terms of connectivity and power. But we want to keep building here, and if we don't keep a good relationship with the county and the neighbors, we know it's going to get turned off.”

Photography by Sebastian Moss


There’s a throughline to my conversation with Don Schopp, as we toured NTT Global Data Centers' VA3 facility in Ashburn, Virginia: NTT GDC is ready to play in the big leagues.

Schopp joined RagingWire as a senior national account manager way back in 2012. “We were a family-owned privately held company, in just one market with two buildings,” he recalled. A year later, the Japanese telecoms giant NTT took a large stake in the company, but remained mostly hands off.

“We were only so relevant,” Schopp said. By 2017, NTT said it would buy the whole business, but its involvement was still limited. At the same time, it acquired India-based NetMagic, UK-based Gyron, Europe's e-shelter, and South Africa-based Dimension Data, while NTT built out its own data centers in Malaysia, Japan, and elsewhere.


Move

NTT is here

Sebastian Moss Editor-in-Chief

It took until 2019 for NTT to mix the different companies together, under the brand name of NTT GDC, and still a few more years for the disparate businesses to work together under one corporate culture and a primary design template.

“We've arrived,” Schopp, now VP of strategic growth & channel sales, said several times as we walked through the facility. “We're at over a gigawatt across our global portfolio. I don't know how many people are really today running a data center business at that scale.”

There are obviously two competitors that blow that number out of the water - Equinix and Digital Realty.

"When you look at NTT globally, we're getting to that level," Schopp argued. "We're in 30 different countries, and we're growing... we're looking at Nairobi, Cape Town, Warsaw, Milan, Ho Chi Minh, Singapore, and more."

The company has its roots in Asia, where it has a huge presence, but "we're now entering the main stage in the Americas," he said.

"What our construction plans in the United States are right now over the next two years will double what we currently have under operations today," he said. "It took all of that time to get to this point. And the next two years will beat that."

Currently, its 'Americas' business just means the United States, "but expect the Americas to include Canada and South America," Schopp said. “And then there are second-tier markets like Nashville, Austin, Charlotte, Miami, Montreal, Toronto, Salt Lake, and Denver that we are looking at.”

Crucially, while its ambitions are still dwarfed by the industry's two giants, Schopp noted that "NTT is way bigger than them, bigger than IBM, bigger than Bank of America, bigger than Cisco. It's a huge company, but 80 percent of its business is in Japan, and we're part of the global business."

Being tied to a wider business can have its positives and negatives. It could mean being tied to a slower business, and lost in the shuffle of more profitable ventures, but Schopp argues that it means access to a global customer base and lots of money.

One of the markets NTT GDC is looking to expand its presence in is Africa, first with a presence in South Africa, before expanding north.

We caught up with Michael Abendanon, head of NTT GDC MEA, soon after the launch of its Johannesburg data center.

Like Schopp, Abendanon is a transfer from an NTT acquisition - Dimension Data, which operates nearly a dozen data centers on the continent.

But NTT has kept that subsidiary somewhat separate, deciding not to merge the older and smaller data centers into the GDC brand. "Those data centers have been around for a long time," Abendanon said.

"Things have moved on. Ultimately, Dimension Data won't be building any more data centers going forward, they will be focusing on taking up space in GDC facilities," he said, with the company bringing over customers to the new facilities.

That first new data center opened this October, with Johannesburg 1 Data Center providing 12MW of capacity across 6,000 sqm (64,600 sq ft) once fully built out (it is currently half that).

"We're looking to expand in Johannesburg beyond that facility," Abendanon said. "We are feverishly putting plans together as to the expansion journey there and in Cape Town."

Beyond South Africa, the company is looking to East Africa in the short term. "And the natural location for East Africa would be Kenya," he said.

The opportunity is vast, he argues: "Africa typically lags first world economies by four to seven years, and if you have a look at the data center penetration in Africa, comparative to the rest of the world, it's got less than one percent of the data center space versus the rest of the world.

“There is definitely lots of room for growth in Africa, considering the population, considering what's happening with Internet penetration. One might say ‘guys are you not over-investing in Africa?’ Our view is that it is only the tip of the iceberg in terms of demand.”

“What you'll see out of us over the next few years is billions and billions poured into the US and globally to construct data centers,” Schopp said.

That has required a maturation of its business proposition, as it pursues larger customers like the hyperscalers.

“We're building and attracting more clients who want powered shell or want to take down an entire server room or an entire building,” he said. “We even build to suit for them, and that’s not necessarily what we were known for, but we're now competing against the heavyweights.”

The shift is part of the company’s evolution, Schopp said. “We came into this market as a colo company, and started moving upstream to bigger single tenants, so building design data centers that are fit for those clients.”

Currently, hyperscalers are “small potatoes” as a percentage of NTT GDC’s customer base, but it hopes to increase their presence in the years to come. “But we'll never get away from our heritage, because we'll offer colocation down to the single cabinet,” Schopp said. “Not in every building and every market around the world, but where it makes sense.”

It’s also had to improve on how it builds data centers with a standardized design, and how it handles its supply chain. “I would say that we've gotten a lot better than what we were as RagingWire,” Schopp said.

“Part of that is to admit that we had to improve, and then the other part was bringing people in to do something about it. Brittany Miller [previously of Microsoft] leads our construction, and a lot of people from Facebook and Google are now part of the NTT family.”

The other part is its vendor-managed inventory. “On our newer sites we have a standard build, and we can ship the same products to different data centers,” Schopp said. “Before, we were kind of a wannabe on pre-builds and supply chains,” he admitted.

“Companies like Compass were out in front of us, and they paved the way that we follow. Now we’re doing that too, and I think that's also helped us secure more of those large-scale deals.

"The people on the other end of the table would ask the same question that you were - 'how do I know you're predictable?' Well, we have the capital source, the design, and we have this vendor managed just-in-time inventory.”

The facility we toured was mid-way through NTT GDC’s transformation, representing 16MW of critical IT load out of its 224MW Ashburn data center campus. With 112,000 square feet (10,400 sq m) of data floor space, it is larger than the company's new standard design, which spans 21,000 square feet and 6MW.

Uniquely, this site also has a huge amount of space given over to a large open staircase, conference-like center, and offices.

"We thought it would be nice to have people in here, a conference room, a NOC, and all that," Schopp said. "That's really changed in this era."

That space isn't used as much as it was pre-pandemic, but it still isn't worth knocking it down for more data hall space, Schopp said.

With Loudoun's space at a premium and hard to find, NTT GDC is looking to Prince William County as its next Virginia buildout.

This June, the company said that it had purchased nearly 104 acres in the county to develop a 336MW data center campus. A month later, utility Dominion said that it wouldn't be able to provide power to new builds in Loudoun.

"People were like 'what did you know?' but we were just a little bit fortunate in that," Schopp said. "We tried many times to get land and were unsuccessful and then we finally got land."


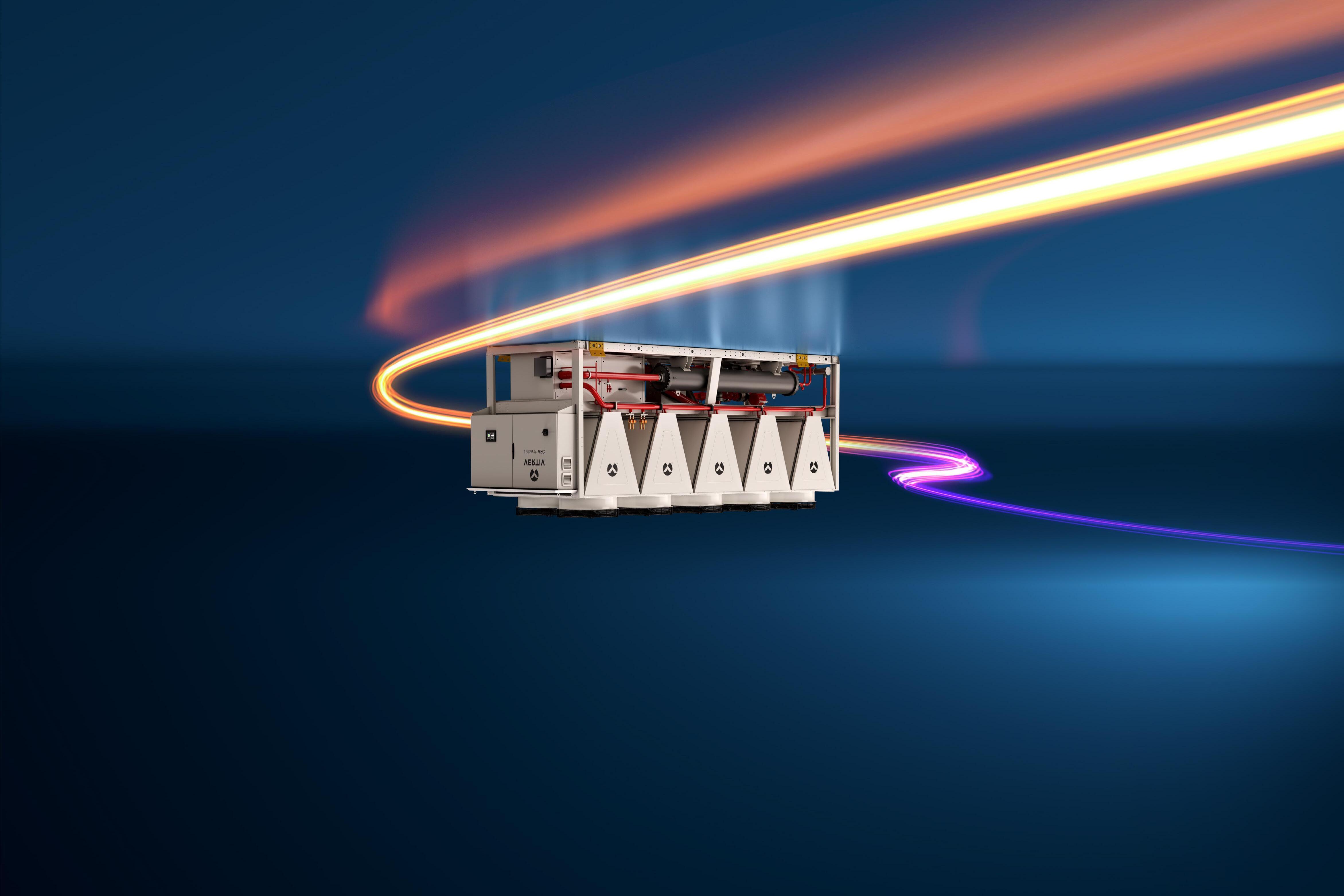

While the south side of the Potomac river is home to the world’s highest concentration of data centers, Maryland and Frederick County to the north are largely virgin space.

But as land becomes increasingly scarce and expensive, a new company is looking to turn Maryland into the next great data center market outside Washington DC with a new data center park offering to hyperscalers and wholesalers.

Led by former Terremark and CyrusOne executive Josh Snowhorn, Quantum Loophole has partnered with TPG Real Estate Partners (TREP) and is developing a 2,100-acre, gigawatt-scale data center park in Maryland’s Frederick County.

“The growth areas that we're focused on going outside of the Virginia area; and on Chicago, California, and potentially Dallas”

The world’s first multi-tenant gigawatt data center campus looks to change the local geography of the data center world

Located some 25 miles north of Ashburn in Adamstown, the campus is centered around the former Alcoa Eastalco Works aluminum smelting plant. The land includes the plot on which the now-demolished metals plant stood, a number of surrounding greenfield plots currently used to grow animal feed, and a manor house from the 1800s.

“We provide land, energy, water, and fiber services at an unprecedented scale,” says Quantum Loophole CEO Josh Snowhorn, who has pitched the project as “the wholesaler to wholesalers.”

Work has begun on the site, with groundworks on supporting infrastructure such as power distribution and underground power ducts, water and sewer pump stations and piping, and underground fiber distribution ducts beginning in July 2022. Given its previous use, most of the land is already zoned for industrial uses.

Under general contractor STO Mission Critical, the site is due to go live with power, fiber, and water infrastructure in late 2023 or early 2024. Groundworks for the first data center have already begun, so the first facilities will likely be ready around the same time.

According to Quantum Loophole director of operations Chris Quesada, the company will be offering different ownership models depending on customer needs: the land will be available to buy, with Quantum owning the supporting fiber, water, and power infrastructure; it will be available on a long-term lease on which customers can build their own facility; or customers can ask Quantum to build the facility in more of a powered shell-type arrangement. The last of these is reportedly the company’s least favored option due to the upfront capital requirements, but one it will happily do if required. Quantum has previously said it expects to deploy individual data center modules of 30-120MW capacity in less than nine months.

A key part of the project is the company’s QLoop network: a 43-mile fiber conduit system able to hold more than 200,000 strands of fiber running from the campus to Leesburg in Virginia’s Loudoun County and back, running under the Potomac in two places as well as the Monocacy river on the journey. The infrastructure build out will include two on-site network centers.

Quantum raised $13 million in seed funding in 2021 before TREP, the real estate equity investment platform of asset firm TPG, invested. The size of TREP’s investment in Quantum hasn’t been disclosed, but it has around a 20 percent stake in the company.

While its investment portfolio includes stakes in the likes of Airbnb and Dropbox, TPG isn’t known for its dedicated data center investments; its previous telco/communications investments include Alltel, later acquired by Verizon, and Astound Broadband. However, TPG previously invested in the department store chain Neiman Marcus, which was founded by members of Snowhorn’s family.

“We had bidders hunting us down and competing to invest in us,” says Snowhorn. “TREP are wonderful partners with a deep understanding of the data center sector.

“They looked at the assets that were for sale; Switch and CyrusOne and lots of other folks out there, but I think that they looked at it as a world that was starting to commoditize itself a little bit. But they looked at us as a business, as a wholesaler to the wholesalers, that is unique and less at risk of being commoditized. We're going to be the single greatest return they've ever seen.”

When asked which came first, the site as an opportunity or the concept of a gigawatt wholesaler, Quantum Loophole tells DCD the company and concept predated the interest in the property.

“I always felt that there was something missing that hadn't really pushed the limits of scale and building an Internet city-scale ecosystem that would support mass-scale interconnection and commonality, something like a master-planned community,” says Snowhorn.

The site was acquired for around $100 million; at around $48,000 an acre, that’s significantly less than the $3 million per acre paid in parts of Virginia for prime data center land.

In May 2022, Aligned Data Centers became the first company to publicly announce plans to develop a data center at the campus. Andrew Schaap, CEO of Aligned Data Centers, said at the time that the “attractive tax exemptions, power availability, and proximity to Northern Virginia” were key drivers in its decision to choose Frederick County.

On its website, Aligned says its Maryland plot spans 75 acres. There, it says the company is planning a total of 1.3 million sq ft (120,800 sqm) and 192MW of capacity across four multi-story buildings, each spanning 325,000 sq ft (30,200 sqm) and 48MW. However, Aligned reached out to us to say it's planning a total of 3.3 million sq ft (306,600 sqm) and 264MW.

Aligned has already begun work on the site. The company’s plot is located in the center of the Quantum Loophole campus, with a small number of Aligned-affiliated staff on-site as DCD was given the tour.

Snowhorn previously said the company has signed contracts with four different entities totaling more than 240MW for the Frederick site, representing the first phase of power available to the site. It is unclear which other companies have leased space at the site, or the scale of each project, but Snowhorn makes reference to ‘multiple hyperscalers’ and government customers.

While some of the ‘smaller’ plots measure more than 70 acres, operations director Quesada told us that most interested parties are looking at developing multi-building mini-campuses within the wider Quantum Loophole park averaging around 200 acres or more.

“One of the hyperscalers is engaged with us for 300 acres and around 800MW of power across eight buildings; that will be two parcels of four buildings at two stories tall and 100MW each, and they'll interconnect those and use those as two distinct availability zones,” explains Snowhorn. “Another client is taking 50 to 65 acres and they're going to put up anywhere from three to four two-story buildings in a denser environment.”