Ursula von der Leyen vows to increase EU research spending

Over €380 million new funding for LIFE programme

Microplastics merge with ‘Forever Chemicals’

The history and heritage of the written word

As a seasoned editor and journalist, Richard Forsyth has been reporting on numerous aspects of European scientific research for over 10 years. He has written for many titles including ERCIM’s publication, CSP Today, Sustainable Development magazine and eStrategies magazine, and remains a prolific contributor to the UK business press. He also works in Public Relations for businesses to help them communicate their services effectively to industry and consumers.

At a summit of leaders in 2021, the then newly installed US President, Joe Biden, said we must “overcome the existential crisis of our time. We know just how critically important that is because scientists tell us that this is the decisive decade. This is the decade where we will make decisions to avoid the worst consequences of the climate crisis”.

As Donald Trump reclaims power in the US, the scientists Biden alluded to will be watching with a returning sense of gloom, as policy on sustainable development looks certain to take a nosedive beyond 2025.

Where Biden pledged to let science lead energy and climate mitigation policy, Trump has other ideas. Trump’s expected choice of Energy Secretary is Chris Wright, the CEO of Liberty Energy, a giant North American hydraulic fracturing company. He is a pro-oil climate sceptic who was flagged by LinkedIn for a statement declaring: “There is no climate crisis…”

There will also likely be a systematic dismantling of sustainability-related regulations, a familiar play from the former President’s playbook, which will leave standards and scrutiny taking a back seat to a more ‘open’ market, made worse by the championing of fossil fuels.

In the US and many other places, it is apparent that a lot of people are putting short-term needs ahead of long-term survival, and that’s understandable when you struggle to drive to work because it costs more at the pump, or you can’t afford food or the escalating bills to heat your home. Climate impact can be perceived as something that will only happen to others, so it doesn’t seem as bad as being short of money. It may only be when climate disaster arrives on everyone’s literal doorstep that it begins to matter, but by then it may be too late to act.

With this in mind, it’s interesting to glance back at the World Economic Forum’s Global Risks Report 2024, published last January. The report was compiled from the findings of the Forum’s Global Risks Perception Survey, which takes answers from around 1,500 experts from academia, business, government and key institutions. The report ranked the biggest global risks beyond 2024 over two horizons: the next two years, and the next ten.

It stated that ‘misinformation and disinformation’ would be the biggest immediate risk until 2026, with extreme weather events in second place, ‘societal polarisation’ in third and ‘cyber insecurity’ in fourth. Over the 10 years from publication, however, the top of the ranking belongs firmly to the natural world: extreme weather events come first, followed in descending order by critical changes to Earth systems, biodiversity loss, ecosystem collapse and natural resource shortages. Nature’s demise therefore fills the top spots among threats to our way of life, played out over the coming nine years.

While the US commands a dominant role on the global and Western stage, Europe has to remain resolute and committed to science-led policy for the safety and security of its people and the wider world. We have to have the imagination to understand that climate change, biodiversity loss and resource degradation will impact us sooner rather than later, so every single decision we make about our future today counts like never before.

The latest US election tells us that a large proportion of people still don’t get it, or don’t care enough. Any diminishing or degrading of sustainability and climate mitigation efforts now will have great impacts in just a handful of years, impacts that go beyond the prices of goods, energy security and our human sphere. An impending collapse of our natural world’s stability will make today’s decisions, pitched against scientific guidance, nothing short of historical markers of our ignorance in the face of reality.

Richard Forsyth Editor

EU Research takes a closer look at the latest news and technical breakthroughs from across the European research landscape.

We spoke to Professor Martin Røssel Larsen about how he is using organoids to investigate the role of sialic acids in early brain development, and the long-term consequences of any malformations.

The SciFiMed project team are developing new tools to analyse the FH family of proteins and investigate diseases associated with dysregulation of the complement system, as Professor Diana Pauly explains.

We spoke to Prof. Marianna Kruithof-de Julio and her team at the University of Bern about their research which involves using patient-derived organoids to improve bladder cancer treatment. Their work aims to tailor therapies based on individual tumor profiles, addressing the disease’s high heterogeneity and recurrence rates.

Enzymes transfer oxygen or electrons and speed up oxidation reactions so that a wide variety of products can be made without using high temperatures or harsh chemicals, as Professor John Woodley explains.

We spoke to Professor Johannes Lengler about his work in refining geometric inhomogeneous random graphs (GIRGs), and how they could improve epidemic modelling and inform more effective quarantine strategies.

It’s not clear whether left atrial appendage closure prevents strokes in patients who have not been diagnosed with the condition, a question Dr Helena Dominguez and her colleagues in the LAACS-2 study are addressing.

Mount Etna is one of the most active volcanoes in the world. The Improve project team are investigating signals from the volcano and how they relate to magma dynamics, as Dr Paolo Papale explains.

The Re:FAB project aims to address sustainability concerns and encourage the transition towards a circular economy, as Helena Tuvendal, Ola Karlsson, Johan Borgström and Fabian Sellberg explain.

We spoke to Professor Torsten Nygaard Kristensen from Aalborg University about his work in optimizing the farming of efficient insect populations that can convert organic waste into high-value products.

We spoke with Professor Charlotte Jacobsen, Professor Poul Erik Jensen, Professor Marianne Thomsen, Emil Gundersen and Malene Fog Lihme Olsen to learn more about their research into microalgae cultivation.

Researchers in the Co-Green project are looking at the issues around wind farm noise and how local communities can be better included in project planning, as Dr Julia Kirch Kirkegaard and Daniel Frantzen explain.

TRINC

Dr Astrid Nonbo Andersen and the TRiNC project team are exploring how truth and reconciliation commissions function in different Scandinavian countries and their role in shedding new light on the past.

UNDERSTANDING WRITTEN ARTEFACTS

The Understanding Written Artefacts (UWA) cluster brings together a broad community of researchers to analyse a variety of written artefacts, from 5,000 years ago right up to the present day.

FAMILY BACKGROUND, EARLY INVESTMENT POLICIES, AND PARENTAL INVESTMENTS

How do young families respond to public policies such as subsidised childcare? Does their response depend on their background? These questions lie at the core of Dr Hans Sievertsen’s research.

CONTESTABLE (AI)

Individuals affected by automated decisions have the right to meaningful information about the basis on which they were reached, as well as the right to contest the decision, issues Professor Thomas Ploug is addressing.

The team behind the Ultra-Lux project are developing a new type of thin-film light-emitting diode, using a class of materials called perovskites, as Professor Paul Heremans and Karim Elkhouly explain.

METEOR

Rare earth elements have been used to produce magnets since the ‘60s. We spoke to Professor Mogens Christensen about his work in developing a more environmentally friendly method of producing ceramic magnets.

The QFaST project team are using sophisticated sensors to measure the properties of spin excitations in magnets, and investigating whether they could be used to perform certain tasks, as Dr Pepa Martínez-Pérez explains.

The production of terrestrial gamma-ray flashes (TGFs) within thunderclouds is the most energetic natural process on Earth. We spoke to Dr Christoph Köhn about his research into the signatures of TGFs.

EDITORIAL

Managing Editor Richard Forsyth info@euresearcher.com

Deputy Editor Patrick Truss patrick@euresearcher.com

Science Writer Nevena Nikolova nikolovan31@gmail.com

Science Writer Ruth Sullivan editor@euresearcher.com

Production Manager Jenny O’Neill jenny@euresearcher.com

Production Assistant Tim Smith info@euresearcher.com

Art Director Daniel Hall design@euresearcher.com

Design Manager David Patten design@euresearcher.com

Illustrator Martin Carr mary@twocatsintheyard.co.uk

PUBLISHING

Managing Director Edward Taberner ed@euresearcher.com

Scientific Director Dr Peter Taberner info@euresearcher.com

Office Manager Janis Beazley info@euresearcher.com

Finance Manager Adrian Hawthorne finance@euresearcher.com

Senior Account Manager Louise King louise@euresearcher.com

EU Research

Blazon Publishing and Media Ltd 131 Lydney Road, Bristol, BS10 5JR, United Kingdom

T: +44 (0)207 193 9820

F: +44 (0)117 9244 022

E: info@euresearcher.com www.euresearcher.com

© Blazon Publishing June 2010 ISSN 2752-4736

The EU Research team take a look at current events in the scientific news

These projects aim to address environmental challenges and advance climate action across the continent.

The Commission has today granted more than €380 million to 133 new projects across Europe under the LIFE Programme for environment and climate action. The allocated amount represents more than half of the €574 million total investment needs for these projects, with the remainder coming from national, regional and local governments, public-private partnerships, businesses, and civil society organisations.

LIFE projects contribute to reaching the European Green Deal’s broad range of climate, energy and environmental goals, including the EU’s aim to become climate-neutral by 2050 and to halt and reverse biodiversity loss by 2030, while ensuring Europe’s long-term prosperity. This investment will have a lasting impact on our environment, the economy and the well-being of all Europeans.

The projects cover a range of environmental areas, including the circular economy, nature and biodiversity restoration, climate resilience, and clean energy transitions. These investments will have a profound impact on Europe’s environment, economy, and citizens’ quality of life, propelling the EU’s green transition.

One of the primary focuses of the LIFE Programme is promoting a circular economy. Nearly €143m has been dedicated to 26 projects that aim to reduce waste, improve water use, and tackle air and noise pollution. These initiatives also emphasise the importance of recycling and reusing materials to minimise environmental impact. Notably, Italy’s LIFE GRAPhiREC project is set to recycle graphite from battery waste, potentially generating €23.4m in revenue while cutting production costs by €25m. Spain’s LIFE POLITEX project, with a €5m investment, aims to reduce the fashion industry’s environmental footprint by converting textile waste into new fabrics. Additionally, the Canary Islands’ €9.8m DESALIFE project seeks to improve water resilience by desalinating 1.7 billion litres of ocean water using offshore wave-powered buoys.

Close to €216m has been allocated for projects that restore ecosystems and protect biodiversity. Twenty-five projects will address habitat restoration, species conservation, and improved management of freshwater, marine, and coastal environments.

LIFE4AquaticWarbler and LIFE AWOM, two biodiversity projects, aim to save the rare aquatic warbler bird, mobilising €24m across multiple countries, including Belgium, Germany, and Spain.

Budapest’s €3.6m Biodiverse City LIFE project promotes the peaceful coexistence of nature and urban life, showcasing how cities can integrate biodiversity into their planning.

With €110m earmarked for climate resilience and mitigation efforts, Europe is making strides in adapting to the impacts of climate change. IMAGE LIFE and LIFE VINOSHIELD, two notable projects, will help European vineyards and cheese producers (e.g., Parmigiano Reggiano and Camembert) adapt to extreme weather conditions and water scarcity. To further accelerate the green transition, €105m is being invested in projects that promote clean energy solutions. One example is LIFE DiVirtue, a €1.25m project using virtual and augmented reality to train construction professionals in building zero-emission structures.

Meanwhile, the €10m ENERCOM FACILITY project will empower 140 emerging energy communities across Europe to develop sustainable energy initiatives and promote long-term renewable energy solutions. The LIFE Programme’s investment in these 133 projects highlights the EU’s commitment to tackling environmental challenges and achieving a sustainable future. Through innovations in the circular economy, biodiversity restoration, and climate resilience, Europe is moving closer to its goal of becoming climate-neutral by 2050 and ensuring the success of its green transition.
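As a quick check on the figures quoted above, the four thematic amounts can be added up and set against the headline totals. The short Python sketch below does only that arithmetic. The variable names and labels are illustrative, the amounts are the rounded values given in the text, and reading the thematic amounts as total investment rather than the EU grant alone is an assumption, based on the fact that they add up to the €574m total.

```python
# Figures as quoted in the article, in millions of euros.
# "Nearly" and "close to" in the text mean these are rounded values.
EU_GRANT_M = 380          # "more than €380 million" granted by the Commission
TOTAL_INVESTMENT_M = 574  # total investment needs of the 133 projects

# Thematic amounts quoted in the article (labels are illustrative).
thematic_m = {
    "circular economy": 143,
    "nature and biodiversity": 216,
    "climate resilience and mitigation": 110,
    "clean energy": 105,
}


def eu_share(grant_m: float, total_m: float) -> float:
    """Fraction of the total investment covered by the EU grant."""
    return grant_m / total_m


# Sum of the four thematic amounts, and the part not covered by the EU grant.
thematic_total_m = sum(thematic_m.values())
co_financing_m = TOTAL_INVESTMENT_M - EU_GRANT_M

if __name__ == "__main__":
    print(f"Thematic amounts add up to: {thematic_total_m}m")
    print(f"EU share of total investment: {eu_share(EU_GRANT_M, TOTAL_INVESTMENT_M):.1%}")
    print(f"Left to other funders: about {co_financing_m}m")
```

On these rounded figures the EU grant covers roughly two thirds of the total, which is consistent with the article’s “more than half”.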

https://cinea.ec.europa.eu/programmes/life_en

Closing the innovation gap with the US and China is her first ambition, but the speech is light on new details.

Ursula von der Leyen promised to put research and innovation “at the centre of our economy” as she laid out her plans for the next five years, before the European Parliament voted to confirm her second term as president of the European Commission.

A total of 401 MEPs voted in favour of von der Leyen’s re-election this afternoon, and 284 voted against, making for a more comfortable majority than her first vote in 2019. There was no mention of research in her speech to MEPs before the vote, but in her political guidelines published this morning, von der Leyen pledges to increase the EU’s research spending, and “expand” the European Research Council (ERC) and the European Innovation Council (EIC).

The ERC delivers grants to support primarily early-stage research, with a budget of more than €16 billion under Horizon Europe. French president Emmanuel Macron has called for the ERC to be reinforced, and ERC president Maria Leptin recently urged grant holders to lobby politicians for more funding. The EIC supports researchers and innovators with grants and equity investments. Its €10.1 billion budget until 2027 makes it one of the biggest deep tech investors in the world, but its president has stressed the need for additional funding. Meanwhile MEPs and the research community have been calling for the Horizon Europe budget to be doubled to €200 billion in the next framework programme, as member states continue to show no signs of spending 3% of GDP on R&D, a target proposed back in 2003.

Von der Leyen also wants to launch new public-private partnerships, propose a European biotech act next year as part of a wider life sciences strategy, and strengthen the university alliances designed to deepen cross-border links between institutions. Leptin said von der Leyen’s appointment would provide much-needed continuity, and she is “very pleased” with the commitment to increase research spending with a focus on fundamental research, scientific excellence, and disruptive innovation, and to expand the ERC. “In the ERC competitions, we can now only fund 60% of excellent proposals; increased funds would certainly help to close this gap and further curb the brain drain from Europe,” Leptin said. “What’s more, there is a need to increase the funding given to ERC grantees as the grant sizes have not changed since the creation of the ERC in 2007, despite inflation.”

Her focus on research and innovation won applause from MEPs. “I very much welcome that we finally have a Commission President again that understand the value of science, research and innovation for the European project,” said German MEP Christian Ehler in a statement posted to X. But despite research and innovation winning pride of place in von der Leyen’s speech, she has yet to flesh out any policy details.

In her political guidelines for this term, von der Leyen has promised to “increase” research spending and expand the European Research Council (ERC) and European Innovation Council (EIC). But it’s unclear what budget the Commission will shoot for when haggling with member states begins in earnest next year.

In a report published this week, MEPs in the Parliament’s Committee on Industry, Research, and Energy (ITRE) say half of the FP10 budget should go to the ERC and EIC. MEPs are also backing the creation of a European Technology and Industrial Competitiveness Council to enhance private sector participation, and a European Societal Challenges Council to manage research and innovation activities addressing societal challenges.

In her speech on Wednesday, von der Leyen did not mention several policy ideas in the recent report from former Italian prime minister Mario Draghi, even though the Competitiveness Compass is set to be based on his report. The report, for example, wants the EU to set up its own breakthrough innovation agency, modelled on the US’s Defense Advanced Research Projects Agency. It also wants the ERC to fund EU chairs for “top researchers”. Following her speech, MEPs approved von der Leyen’s picks for commissioner, as expected following weeks of haggling among the parliament’s political groups. A total of 370 voted for, 282 against, and 36 abstained. The commissioners will take office on 1 December.

Study detects a synergistic effect making substances more dangerous, raising a significant alarm considering the fact that humans are exposed to both.

Microplastics and persistent materials known as ‘forever chemicals’ are two of our most concerning modern pollution problems. Now new research has shown how their impact on the environment drastically increases when combined. A team from the University of Birmingham in the UK looked at the effects of microplastics and PFAS (per- and polyfluoroalkyl substances) on Daphnia magna water fleas, both separately and mixed together.

Exposing Daphnia to both pollutants together under laboratory conditions caused up to 41 percent more damage to the water fleas than the plastics and forever chemicals did separately. The effects included stunted growth, delayed sexual maturity, and fewer offspring, with the severity of harm greater in tests on water fleas that had previously been exposed to other chemical pollution, suggesting a cumulative effect. “It is imperative that we investigate the combined impacts of pollutants on wildlife throughout their lifecycle to get a better understanding of the risk posed by these pollutants under real-life conditions,” says environmental scientist Mohamed Abdallah. “This is crucial to drive conservation efforts and inform policy on facing the growing threat of emerging contaminants such as forever chemicals.”

Microplastics are fragments of plastic less than 5 millimeters (0.2 inches) across, which accumulate in the environment as a result of the break-up of larger materials or the shedding of synthetic fibres.

Though the extent of their effects on ecosystems and human health isn’t fully known, research suggests there is good reason to be concerned as they increasingly spread, both into the most remote spots on Earth and deep inside our own bodies. PFAS, meanwhile, are used in a multitude of manufacturing processes for their fire-suppressing qualities, and have been linked to kidney damage and cancer growth. Slow to break down, these pollutants have been found in wildlife and falling rain – as ubiquitous in our environment as microplastics.

The study was designed to simulate D. magna’s potential exposure to both of these toxins in the natural world. These water fleas are a key part of the aquatic food chain, as well as a useful indicator of environmental pollution. “Our research paves the way for future studies on how PFAS chemicals affect gene function, providing crucial insights into their long-term biological impacts,” says evolutionary systems biologist Luisa Orsini.

Identifying the impact of individual pollutants is challenging, let alone deciphering their impact on the environment when combined. With improvements in analytical methods and technology, the researchers hope we can better quantify the harm caused by numerous pollutants under more complex circumstances. “These findings will be relevant not only to aquatic species but also to humans, highlighting the urgent need for regulatory frameworks that address the unintended combinations of pollutants in the environment,” says Orsini. “Understanding the chronic, long-term effects of chemical mixtures is crucial, especially when considering that previous exposures to other chemicals and environmental threats may weaken organisms’ ability to tolerate novel chemical pollution.”

Air pollution in Europe has declined over the past two decades but remains one of the greatest environmental health threats. This is the message from the United Nations Environment Programme (UNEP) on the International Day of Clean Air for Blue Skies, celebrated on 7 September. Almost all European city-dwellers (96%) are exposed to concentrations of fine particles well above the World Health Organization (WHO) guidelines. In 2021, this reference threshold was set at 5 µg/m³, a level deemed to pose no health risk.

Fine particles come from solid fuels used for domestic heating, industrial activities, and road transport. In 2022, only Iceland recorded a national average fine particle concentration below the WHO guideline value, according to the European Environment Agency (EEA). On the other hand, Croatia, Italy and Poland recorded concentrations above the current European Union (EU) limit of 25 µg/m³ in 2022, a standard five times higher than the WHO threshold. Fine particles, one of the worst contributors to air pollution, caused the premature death of almost 4 million people in 2019, according to UNEP. East Asia and Central Europe are the hardest hit. The European Commission reports that this type of pollution causes 300,000 premature deaths annually in the EU.

UNEP’s “Air Pollution Note”, based on 2019 data, paints a mixed picture for the EU. In France and Belgium, the population is exposed to levels of fine particles 2.2 and 2.6 times higher than the WHO threshold (5 µg/m³). In Italy, Hungary and Romania, the average level (16 µg/m³) is 3.2 times higher than the WHO guideline. In Poland, fine particle pollution is around twice as high as in Belgium (23 µg/m³, 4.6 times the WHO threshold). Peaks in the concentration of fine suspended particles in Serbia, Bosnia-Herzegovina and North Macedonia reached levels 5 to 6 times higher than the WHO threshold.
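The multiples quoted above all follow from dividing a concentration by the WHO guideline of 5 µg/m³. The Python sketch below reproduces that arithmetic using only the figures given in the text. The function and variable names are illustrative, and the concentrations for France and Belgium are inferred from the quoted multiples rather than reported directly.

```python
WHO_GUIDELINE_UG_M3 = 5.0  # WHO guideline for fine particles, as quoted in the article


def multiple_of_who(concentration_ug_m3: float) -> float:
    """How many times the WHO guideline a given concentration represents."""
    return concentration_ug_m3 / WHO_GUIDELINE_UG_M3


def implied_concentration(multiple: float) -> float:
    """Concentration implied by a quoted multiple of the WHO guideline."""
    return multiple * WHO_GUIDELINE_UG_M3


# Concentrations quoted directly in the article (µg/m³).
quoted_concentrations = {"Italy, Hungary and Romania": 16.0, "Poland": 23.0}
# Multiples quoted without a concentration; the levels are inferred here.
quoted_multiples = {"France": 2.2, "Belgium": 2.6}

if __name__ == "__main__":
    for place, level in quoted_concentrations.items():
        print(f"{place}: {level:g} µg/m³ is {multiple_of_who(level):.1f} times the guideline")
    for place, multiple in quoted_multiples.items():
        print(f"{place}: {multiple} times the guideline is about "
              f"{implied_concentration(multiple):.0f} µg/m³")
    belgium = implied_concentration(quoted_multiples["Belgium"])
    ratio = quoted_concentrations["Poland"] / belgium
    print(f"Poland relative to Belgium: about {ratio:.2f} times")
```

On these figures Poland’s level works out at roughly 1.8 times Belgium’s, so the comparison in the text is a rounded one.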

Legislation was adopted by the EU in 2016, via the new National Emission Ceilings Directive (“NEC” Directive). This legislation is in force in each of the 27 member states for five primary air pollutants: sulfur dioxide (SO2), nitrogen oxides (NOx), non-methane volatile organic compounds (NMVOCs), ammonia (NH3) and fine particulate matter (PM2.5). However, by 2022, only 16 member states had met their national commitments for the period 2020-29, according to the EEA. For 11 other countries, the targets for at least one of the five primary pollutants have not been met.

The challenge remains for all EU countries, particularly in the case of ammonia. The agricultural sector is the main source of this air pollutant, and these emissions have fallen only slightly since 2005. The Green Deal’s “zero pollution” action plan targets reducing premature deaths caused by fine particles by at least 55% by 2030, compared with 2005 levels. It also includes a long-term objective of no significant impact on health by 2050. In March 2024, the European institutions reached a political agreement on ambient air quality to bring EU standards closer to the WHO reference thresholds. Thus, from 1 January 2030, the new European standard will be 10 µg/m³ for fine particles.

Dengue, the most common mosquito-borne disease in the world, is having a record-breaking year.

Climate change is placing more people at risk of mosquito-borne diseases such as dengue fever, extending the seasonal window and creating frequent outbreaks that will become increasingly difficult to deal with, experts have said. The geographic range of vector-borne diseases has expanded rapidly in the past 80 years, placing more than half the world’s population at risk. Experts warn that due to accelerating global warming and urbanisation, cases are set to spread across currently unaffected parts of northern Europe, Asia, North America and Australia over the next few decades.

As a result, an additional 4.7 billion people will be placed in danger if emissions and population growth continue to rise at their current rate. The warning – which will be shared in a presentation at this year’s Global Congress held by the European Society of Clinical Microbiology and Infectious Diseases – comes after a report by The National last month revealed global cases of dengue are rising sharply, with a number of Arab nations in particular experiencing an increase in cases in recent months.

Prof Rachel Lowe of the Catalan Institution for Research and Advanced Studies will tell the forum substantially more people will be placed at risk of mosquito-borne diseases due to warming temperatures. She said: “Global warming due to climate change means that the disease vectors that carry and spread malaria and dengue can find a home in more regions, with outbreaks occurring in areas where people are likely to be immunologically naive and public health systems unprepared.

“The stark reality is that longer hot seasons will enlarge the seasonal window for the spread of mosquito-borne diseases and favour increasingly frequent outbreaks that are increasingly complex to deal with.”

Dengue cases have been largely confined to tropical and subtropical regions because colder temperatures kill the mosquito’s larvae and eggs. But longer, hotter seasons have resulted in dengue becoming the most rapidly spreading mosquito-borne viral disease in the world. Nine of the 10 most hospitable years for dengue transmission have occurred since 2000, resulting in the disease spreading in 13 European countries, with localised transmission in France, Italy and Spain last year. According to the World Health Organisation, the number of dengue cases reported has increased in the past two decades from 500,000 in 2000 to more than 5 million in 2019.

By combining disease-carrying insect surveillance with climate forecasts, researchers are developing ways to predict when and where epidemics might occur and direct interventions to the most at-risk areas in advance. One such project, which is being led by Prof Lowe, is using a powerful supercomputer to understand how climate and disease transmission are linked, in order to predict mosquito-borne disease outbreaks in 12 countries. “By analysing weather patterns, finding mosquito breeding sites with drones and gathering information from local communities and health officials, we are hoping to give communities time to prepare and protect themselves,” she said. “But ultimately, the most effective way to reduce the risk of these diseases spreading to new areas will be to dramatically curb emissions.”

Scientists and whale watchers have recently spotted killer whales off the coast of Washington state in the US swimming with dead fish on their heads.

In September 2024, scientists and whale watchers spotted orcas (Orcinus orca) in South Puget Sound and off Point No Point in Washington State swimming with dead fish on their heads. This is the first time they’ve donned the bizarre headgear since the summer of 1987, when a trendsetting female West Coast orca kickstarted the behavior for no apparent reason. Within a couple of weeks, the rest of the pod had jumped on the bandwagon and turned salmon corpses into must-have fashion accessories, according to the marine conservation charity ORCA — but it’s unclear whether the same will happen this time around.

The motivation for the salmon hat trend remains a mystery. “Honestly, your guess is as good as mine,” said Deborah Giles, an orca researcher at the University of Washington who also heads the science and research teams at the non-profit Wild Orca. Salmon hats are a perfect example of what researchers call a “fad”: a behavior initiated by one or two individuals and temporarily picked up by others before it’s abandoned. Back in the 1980s, the trend only lasted a year; by the summer of 1988, dead fish were totally passé and salmon hats disappeared from the West Coast orca population.

Orca researchers’ best guess is that salmon hat fads are linked to high food availability. South Puget Sound is currently teeming with chum salmon (Oncorhynchus keta), and with too much food to eat on the spot, orcas may be saving fish for later by balancing them on their heads. Orcas have been spotted stashing food away in other places, too. “We’ve seen mammal-eating killer whales carry large chunks of food under their pectoral fin, kind of tucked in next to their body,” Giles said. Salmon is probably too small to fit securely under orcas’ pectoral fins, so the marine mammals may have opted for the top of their heads instead.

Camera-equipped drones could help researchers monitor salmon hat-wearing orcas in a way that was not possible 37 years ago. “Over time, we may be able to gather enough information to show that, for instance, one carried a fish for 30 minutes or so, and then he ate it,” Giles said. But the food availability theory could be wrong — if the footage reveals that orcas abandon the salmon without eating them, researchers will be sent back to the drawing board. Whatever the reason for the behavior, Giles said it’s been fun to watch it come back in style. “It’s been a while since I’ve personally seen it,” she said.

Brain organoids provide a means for researchers to study different mechanisms in the developing brain, and to investigate how they function at the molecular level. We spoke to Professor Martin Røssel Larsen about how he is using organoids to investigate the role of sialic acids in early brain development, and the long-term consequences of any malformations.

A type of negatively charged monosaccharide, sialic acids play an important role in intermolecular and intercellular interactions, and in the development of neurons. For example, the presence of a post-translational modification (PTM) called polysialic acid (PSA) on neural cell adhesion molecules (NCAM) prevents neurons from interacting with each other. “These cells are therefore effectively pushed away from each other by the negative charge of PSA, and then free to migrate. This is very important in early brain development, where cells migrate to organise and generate axons, dendrites, and other cell types,” explains Martin Røssel Larsen, Professor of Molecular Biology at the University of Southern Denmark (SDU), where he leads a research group. As Principal Investigator of several research projects based at SDU, Professor Røssel Larsen aims to build a deeper picture of the role of sialic acids in early brain development, work in which he is also considering the brains of other species.

“The biggest difference between humans and chimpanzees is sialic acid,” he continues.

“There is an enzyme that takes human sialic acid and puts on an OH group, then you get the sialic acid we find in monkeys.”

As humans we all have the gene that leads to the addition of an OH group, although it doesn’t function due to a mutation. The project team is working to correct that specific gene in stem cells, then the plan is to investigate whether the introduction of monkey-type sialic acid leads to changes in the human brain, using brain organoids grown from induced pluripotent stem cells. “These organoids can be thought of as mini-brains reflecting early brain development. They represent just one part of the brain, the cortical layer,” outlines Professor Røssel Larsen. Researchers are using sophisticated imaging techniques in this work. “If we change the sialic acid, do we get a different morphology of our mini-brains? Can we correlate that to the size of the cortical layers? It is believed that the size of the cortex is related to the learning and memory differences that we see between monkeys and humans,” continues Professor Røssel Larsen. “To study this specific molecular change between humans and chimpanzees we aim to introduce the mutation of this gene in chimpanzee stem cells, so it doesn’t function. Then we make organoids and look at whether that mutation leads to changes in the size of the cortex and further changes in proteins and their PTMs, especially sialylation.”

“If we change the sialic acid, do we get a different morphology of our mini-brains? Can we correlate that to the size of cortex? Many people believe that the size of cortex is related to the learning and memory differences that we see between monkeys and humans.”

Researchers in the project are using imaging techniques as well as mass spectrometry to look at changes in sialic acid in the cortical layers, then relate it back to the function of specific proteins. A further strand of research involves working with nerve terminals – these are small, active compartments which are sometimes called synaptosomes. “We can stimulate these nerve terminals, and they will release neurotransmitter, then they will take up a vesicle again and perform synaptic transmission repeatedly,” explains Professor Røssel Larsen. A method has now been developed to isolate nerve terminals from brain organoids, meaning they can now be taken into a human system, which opens up new avenues of investigation, says Professor Røssel Larsen. “We can manipulate stem cells, make brain organoids from these, and then see if there is a change in the nerve terminals,” he outlines. “We’re looking at whether we can see changes if we manipulate enzymes that add sialic acids - sialyltransferases. How is sialic acid used in this very sophisticated brain system? Is it a way of fine-tuning interactions? It is likely that protein-protein interaction is heavily regulated by sialic acid on cell surfaces.”

The hope is that this research will uncover morphological changes in the brain that can be related to changes in sialic acid, while Professor Røssel Larsen also hopes to identify the targets of sialyltransferases in the brain. By knocking down, for example, the 2,6 sialyltransferases - another distinct difference in the sialic acid biology between humans and chimpanzees - Professor Røssel Larsen and his team hope to see exactly what sites and what proteins it changes. “We can then find substrates for these sialyltransferases, and correlate this with any morphological changes in the brain,” he explains. Sialic acids are also thought to play a major role in immunity and in the progression of certain conditions, including certain forms of cancer and schizophrenia, another topic that Professor Røssel Larsen is addressing in his research. “We think that if we want to look at diseases like schizophrenia, we need to move away from only focusing on the genes to look at what is present in the brain at the time with respect to the building blocks that do the work in the cell, the proteins,” he outlines. “In our interdisciplinary DEVELOPNOID project we have taken plasma from patients with schizophrenia and controls. We then reprogrammed the blood cells from patients to stem cells, and we are now growing brain organoids to investigate underlying molecular mechanisms leading to schizophrenia using ‘Omics technologies and imaging.”

This research could lead to new insights into the underlying mechanisms behind schizophrenia and other diseases, and ultimately the development of new, more effective treatments. The project’s research at this stage is largely fundamental in nature however, with the team focused primarily on uncovering the role of sialic acids in the brain. “If we can identify the role of sialic acids in the brain it would be a very big achievement, it would be really exciting,” says Professor Røssel Larsen. This is still a relatively neglected area of research, and Professor Røssel Larsen hopes that the project’s work will stimulate further interest and development. “We hope there will be a renewed focus on PTMs like glycosylation, which have been overlooked for a long time. Not many people work on glycosylation in Denmark, and not a lot is known about its true function, partly because the technology is not there yet,” he explains. “We want to contribute to continued development, and to use brain organoids to investigate other diseases beyond schizophrenia. Brain organoids are a very effective model system, they cover more ground than 2D systems.”

The brain is comprised of a lot of different paths that communicate with each other, yet how this communication occurs is not well understood. More sophisticated brain organoid models could help researchers gain a fuller picture. “It is possible now to make different brain organoids from several parts of the brain, then put them together and let them grow together. Then you will see that the neurons start migrating in and out of the different organoids,” says Professor Røssel Larsen. A lot of attention in the research group will be focused in future on developing methods to characterise PTMs, in particular glycosylation, which is a topic of deep interest to Professor Røssel Larsen. “It’s very difficult to measure and analyse changes in the glycostructure, but it’s also very fascinating,” he stresses.

Interdisciplinary project DEVELOPNOID

Project Objectives

Protein glycosylation is important for communication between cells and for cell migration, mechanisms essential for development of the brain. A big difference between us and chimpanzees is the sugar molecule sialic acid (SA) on cell surface proteins. SA is involved in neural development, and in the present project we will investigate the role of SA in early brain development using brain organoids, multi-omics and imaging.

Project Funding

The “Role of sialic acids in early brain development” project is funded by The Independent Research Fund Denmark.

Project Partners

• Prof. Kristine Freude, Department for Veterinary and Animal Science, University of Copenhagen, DK.

• Dr. Madeline A. Lancaster, MRC Laboratory of Molecular Biology, Cambridge Biomedical Campus, Cambridge, UK.

• Prof. Jonathan Brewer, Department of Biochemistry and Molecular Biology, University of Southern Denmark, DK.

Contact Details

Project Coordinator,

Professor Martin Røssel Larsen

Department of Biochemistry and Molecular Biology

University of Southern Denmark (SDU) Campusvej 55; Odense M - DK-5230

T: +45 6550 1872

E: mrl@bmb.sdu.dk

W: https://dff.dk/ansog/stottet-forskning/podcasts/celler-med-sukker-pa

Martin Røssel Larsen

Martin Røssel Larsen is Professor of Molecular Biology at the University of Southern Denmark, where he leads a research group. He is internationally recognized for his work in developing methods for characterising post-translational modifications (PTMs) of proteins and in bridging biological mass spectrometry and biomedical research.

The complement system is an important part of the body’s defence against pathogens, and malfunctions can leave people more susceptible to disease. The SciFiMed project team are developing new tools to analyse the FH family of proteins and build a deeper picture of diseases associated with dysregulation of the complement system, as Professor Diana Pauly explains.

The complement system forms an important part of the body’s defence against pathogens, reacting rapidly to the presence of foreign material, while also identifying and tagging cellular debris for removal by phagocytes. When this system is dysregulated or out of balance, it can leave individuals more susceptible to diseases. “A malfunctioning complement system can heighten susceptibility to infections, such as meningococcal infections. Conversely, an overactive complement system can lead to autoimmune diseases, where the body attacks itself,” explains Professor Diana Pauly, group leader of the experimental ophthalmology lab at Philipps University Marburg in Germany. While the complement system has been intensively studied over the years, some of the regulatory mechanisms are still not fully understood, a topic at the heart of the EU-backed SciFiMed project. “We are investigating the role of complement factor H (FH) and FH-related proteins (FHRs), which are key regulators of the complement system. FH is one of the most important inhibitors, while FHR proteins share structural domains with FH,” says Professor Pauly, the project’s Principal Investigator.

There are generally considered to be five main FHR proteins. Researchers in the project aim to investigate their functions and gain a deeper understanding of their roles in disease development and progression. The FHRs share some structural similarities with FH, but Professor Pauly says there are also some clear differences. “The FH protein has some regulatory domains which are not present in the FHRs, while some of the FHRs have dimerization domains which are not present in the others,” she outlines. The project team is working to build a fuller picture of the impact of FHR proteins on disease, looking beyond genetic associations. “We know that changes in FHRs are associated with the development of specific diseases – or protection against others – especially in the eye and the kidney. But we only know that on a DNA basis,” explains Professor Pauly. “We have developed a variety of new tools to analyse each of the individual proteins in this FH family and get an idea of their function. We have commercialised new ELISAs (Enzyme-Linked Immunosorbent Assays) to detect FHR proteins.”

This allows researchers to identify cases where the concentration of these proteins is particularly high or low, and then investigate the impact on disease. Specific antibodies have also been generated through project partner, Sanquin, allowing researchers to distinguish between each of the proteins, which Professor Pauly says is an important development. “These tools, which are now available commercially through our partner Hycult, will be instrumental in advancing research,” she says. These antibodies are already proving invaluable for investigating the function of FHR proteins, while other tools are also under development in the project. “We have used these antibodies to stain FHR proteins in specific tissues for example, and to isolate them from tissue extracts for interaction studies. This helps us to identify the regulatory processes in which they are involved,” explains Professor Pauly. “We believe that this research will extend beyond SciFiMed, paving the way for many new discoveries.”
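As a rough illustration of how a measured concentration might be flagged as particularly high or low, the sketch below derives a reference interval from healthy baseline samples and classifies a new value against it. The mean ± 2 standard deviations rule, the function names and the numbers are all assumptions made for illustration; they are not the project's actual analysis method or data.

```python
from statistics import mean, stdev


def reference_interval(baseline, k=2.0):
    """Return (low, high) as mean +/- k sample standard deviations.

    `baseline` holds concentrations from healthy individuals. The choice of
    k = 2 is an illustrative assumption, not a project-defined cut-off.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline values")
    m = mean(baseline)
    s = stdev(baseline)
    return m - k * s, m + k * s


def classify(value, interval):
    """Label a concentration relative to the reference interval."""
    low, high = interval
    if value < low:
        return "low"
    if value > high:
        return "high"
    return "within range"


# Hypothetical concentrations in arbitrary units (not real measurements).
healthy = [10.0, 12.0, 11.0, 9.0, 13.0, 11.0, 10.0, 12.0]
interval = reference_interval(healthy)

for sample in (7.0, 11.0, 15.0):
    print(sample, classify(sample, interval))
```

In practice a reference range would be established on a much larger cohort and might use percentiles rather than standard deviations, particularly if the concentrations are not normally distributed.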

The development of ELISA, as well as lateral flow assays using these new antibodies, opens up wider possibilities. Researchers can now conduct clinical studies across a variety of biomaterials for different diseases, where previously only genetic associations with these proteins had been established. “This allows us and other researchers to explore a wide range of diseases. Initially, our partners tested healthy individuals to establish baseline values, and we have conducted preliminary studies in patients with age-related macular degeneration, delirium, and stroke, showing promising trends that merit further exploration,” says Professor Pauly. The complement system is known to be involved in a broad spectrum of autoimmune diseases, and Professor Pauly says functional assays can help researchers build a fuller picture. “We know a fair amount about the complement system’s role in infections, but less about autoimmune diseases. These assays could help researchers find out how the complement system is involved in autoimmune diseases,” she outlines.

This research represents a step towards the goal of providing personalised care that more closely reflects the specific circumstances of individual patients. Clinical studies (although not on a personalised basis) are planned, which it is hoped will lead to the identification of biomarkers for disease development and treatment effectiveness. “This will help doctors provide more personalised treatment,” continues Professor Pauly. Researchers are also working to develop lateral flow assays capable of rapidly providing information to clinicians on the levels of FH and FHR proteins, which can then guide treatment. “We can imagine a scenario where blood samples, urine, or other samples that need FHR concentration measurements no longer have to be sent to central laboratories, with a waiting time of a week or more for the results,” says Professor Pauly. “With lateral flow assays, doctors or medical technicians can check the FHR status directly on-site, allowing for immediate adjustments to potential therapies.”

A significant degree of progress has been made in this respect, and work is ongoing to improve the lateral flow assays, with the aim of moving towards commercialisation in the future. Currently the project’s lateral flow assay is capable of detecting three proteins - FHR-2, FHR-3, and FHR-4 - within the FH family in 15 minutes, while other assays are at different stages of development. “The quantitative assays and the antibodies have been commercialised and are available for researchers, while we are also developing at least two functional assays for the whole protein family,” outlines Professor Pauly.

The overarching goal in this research is to help improve the treatment of diseases associated with dysregulation of the complement system. The tools developed in the project will help researchers build a deeper understanding of these diseases, believes Professor Pauly. “With the ELISA and the antibodies, we hope that researchers will push the science forward, conducting studies and uncovering the role of these proteins in infections and autoimmune diseases,” she says. This will ultimately contribute to the development of new, more effective therapies against a variety of different diseases. “The importance of the complement system is increasingly recognised, especially with the growing number of complement-targeting drugs that have been approved. This represents a significant opportunity for combating various diseases,” says Professor Pauly.

Screening of inFlammation to enable personalized Medicine

Project Objectives

The function of the FH-related (FHR) proteins, important components of the complement system, is unclear, a topic at the heart of the SciFiMed project. The project consortium, bringing together eight partners from across Europe, are working together to develop tools to analyse these proteins, which will ultimately help researchers develop more effective therapies against a wide variety of diseases.

Project Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 899163.

Project Partners

SciFiMed brings together an excellent multidisciplinary team of Engineers, Chemists, Geneticists, Immunologists, and Physicians from both academia and industry from four different countries. https://www.scifimed.eu/our-team

Contact Details

Project Coordinator,

Professor Diana Pauly, Ph.D

Exp. Ophthalmologie, Augenheilkunde AG Pauly

AWT-Nummer: 3911

Baldingerstraße 35043 Marburg

Germany

T: +49 170 2738864

E: diana.pauly@uni-marburg.de

W: https://www.scifimed.eu/

W: www.paulylab.de

Professor Diana Pauly is a research scientist and group leader at the Philipps University Marburg in Germany. She holds a Ph.D in Biotechnology from the University of Potsdam, and a degree in Human Biology, specialising in immunology, from the University of Greifswald.

We spoke to Prof. Marianna Kruithof-de Julio and her team at the University of Bern about their research, which involves using patient-derived organoids to improve bladder cancer treatment. Their work aims to tailor therapies based on individual tumor profiles, addressing the disease’s high heterogeneity and recurrence rates.

Bladder cancer ranks as the tenth most prevalent cancer worldwide, with approximately 550,000 new cases and 200,000 deaths each year, making it a substantial public health challenge. Despite advancements in treatment, its heterogeneity and high recurrence rate pose ongoing difficulties, highlighting the demand for more effective and personalised treatments. Dr. Marianna Kruithof-de Julio and her team at the University of Bern are developing patient-derived organoids from bladder cancer tumors to tackle these issues. These small clusters of cells mimic the genetic and molecular traits of the original tumors, providing a promising path for personalised cancer treatment.

Bladder cancer is characterised by a high heterogeneity between patients which often contributes to treatment failures. Molecular and histological differences highlight the need for personalised treatment approaches. Pathologically, the disease is classified into two main types: non-muscle invasive bladder cancer (NMIBC) and muscle-invasive bladder cancer (MIBC). NMIBC accounts for around 70% of cases and is typically treated with transurethral resection of the bladder (TUR-B) and intravesical instillations of chemotherapy, often in combination with the Bacillus Calmette–Guérin (BCG) vaccine. However, many of these tumors will recur, and about 10% will progress to MIBC, a highly aggressive tumor with a high mortality rate. MIBC is usually treated with systemic cisplatin-based chemotherapy followed by radical cystectomy, a highly invasive procedure that is only beneficial for 30-40% of patients.

Patient-derived organoids have been successfully used to predict drug response in vitro for various cancers such as ovarian, pancreatic, and gastrointestinal cancers. Prof. Kruithof-de Julio and her research team have successfully grown organoids from various bladder cancer stages and grades that preserve the histological and molecular heterogeneity of the original tumors. In their study, the team created the organoids from specimens obtained through transurethral resection or cystectomy. The organoids were successfully cultured regardless of the tumor’s stage, grade, or histological pattern. The organoids maintained a similar level of cellular proliferation compared to the original tumors and mirrored the tumor’s evolution over time, allowing researchers to observe how the cancer changes and adapts.

The researchers have created a drug screening method to evaluate both standard-care drugs and FDA-approved cancer drugs on patient-derived organoids. By examining how these organoids react to the drugs and comparing their drug response profiles with their genetic information, the researchers have identified biomarkers that could forecast therapy response and resistance. This approach aids in understanding how mutations render therapies ineffective and how tumors develop resistance to treatment over time. It can also determine which treatments might be effective based on the genetic composition of a patient’s tumor. However, before organoids can be broadly implemented in clinical settings, they must undergo further validation through clinical trials. Dr. Kruithof-de Julio highlights that the success achieved with organoids in cancer treatment can potentially be applied to human patients.

“With the use of this technology, we can evaluate a broad spectrum of drugs to identify the most promising options for each individual patient.”

One of the most significant findings is the heterogeneity in organoid drug responses. For example, in the few NMIBC organoids tested, the standard chemotherapy drug mitomycin C showed little significant effect, whereas epirubicin was effective in 60% of the samples tested. In contrast, MIBC organoids displayed a higher sensitivity to the cisplatin/gemcitabine combination, which is a common treatment regimen for this aggressive form of cancer. “These findings underscore the significance of personalised treatment plans. Identifying which drugs are most effective for particular genetic profiles can enhance patient outcomes and minimise the trial-and-error method that currently dominates cancer treatment,” explains Prof. Kruithof-de Julio.
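A figure such as "effective in 60% of the samples tested" can be read as a per-drug response rate across organoid lines. The sketch below shows one simple way such a rate could be computed. The viability threshold, the drug labels and the values are hypothetical assumptions for illustration only, and do not reproduce the team's screening protocol or results.

```python
def response_rate(viabilities, threshold=0.5):
    """Fraction of organoid lines counted as responders to one drug.

    `viabilities` are relative viabilities after treatment (1.0 = untreated
    control). A line counts as a responder when its viability falls below
    `threshold`; the 0.5 cut-off is an illustrative assumption.
    """
    if not viabilities:
        raise ValueError("no organoid lines tested")
    responders = sum(1 for v in viabilities if v < threshold)
    return responders / len(viabilities)


# Hypothetical screen: five organoid lines per drug (not real data).
screen = {
    "drug_A": [0.90, 0.80, 0.95, 0.70, 0.85],
    "drug_B": [0.30, 0.40, 0.80, 0.20, 0.90],
}

rates = {drug: response_rate(values) for drug, values in screen.items()}

for drug, rate in rates.items():
    print(f"{drug}: {rate:.0%} of lines responded")
```

A single threshold is a simplification; dose-response curves fitted per organoid line would normally carry more information than one viability reading.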

The researchers analysed the genetic mechanisms behind drug resistance. They found that organoids derived from tumors with high genomic burdens, such as those from high-grade invasive cancers, showed different responses than those from low-grade tumors. In one case, the team analysed organoids from a patient who experienced multiple recurrences of NMIBC. By comparing organoids from different stages of the disease, they could track how the tumor evolved and became resistant to treatment. This longitudinal analysis provides a deeper understanding of the disease and its evolution. In another case, organoids from a patient diagnosed with pan-urothelial disease were tested with various drugs. The organoids showed a high sensitivity to lapatinib, a drug targeting the EGFR/ERBB2 pathway, which is frequently altered in bladder cancer. This finding suggests that lapatinib could be a viable option for patients with similar genetic profiles, which highlights the potential for repurposing existing drugs for more personalised treatments.

“Organoids allow us to test a wide array of drugs and pinpoint the most promising options for each patient,” says Prof. Kruithof-de Julio. “This approach not only saves time and resources but also reduces the burden on patients who would otherwise endure multiple treatment trials.” The research team is now intent on further refining their organoid models and increasing their drug screening capabilities. They aim to include a wider range of drugs and investigate new therapeutic targets. Additionally, they are working on enhancing the scalability of organoid cultures to make this technology more accessible for clinical use.

Project Objectives

The research project focuses on cultivating patient-derived organoids from bladder cancer tumors to develop personalised treatment plans. By growing organoids replicating the original tumors’ genetic and molecular characteristics, the team aims to identify effective drug therapies for individual patients. The study also seeks to understand the genetic mechanisms behind drug resistance and the evolution of bladder cancer, ultimately aiming to translate these findings into clinical practice for improved patient outcomes.

Project Funding

Development of a platform for GU cancer patient-derived organoids OC-2019003 3RCC / InoSwiss 101.951 IP-LS awarded to Marianna Kruithof-de Julio.

Project Partners

The team includes Prof. Marianna Kruithof-de Julio, Prof. Bernhard Kiss, Prof. Beat Roth, and Prof. George Thalmann, from the Department of Urology of the University Hospital Bern, Prof. Roland Seiler-Blarer from the Department of Urology of the Spitalzentrum Biel-Bienne and Dr. Marta De Menna, Deputy Director of the Translational Organoid Resource Core at the Department for BioMedical Research of the University of Bern. Their combined expertise drives this innovative research in personalised cancer treatment and precision oncology.

Contact Details

Professor Marianna Kruithof-de Julio, PhD Head of the Urology Research Laboratory Department of Urology & Department for BioMedical Research

Director

Organoid CORE facility

University of Bern Murtenstrasse 24, 3008 Bern, Switzerland E: marianna.kruithofdejulio@unibe.ch

Professor Kruithof-de Julio is a researcher at the University of Bern, specializing in precision medicine within urology. She leads the Urology Research Laboratory and the Organoid CORE facility, focusing on advanced tools for precision medicine. Her team has successfully developed patient-derived organoids for bladder, prostate, and pancreatic cancers, providing critical models for better understanding these diseases. They also utilize microvascular on-chip chambers to study the functional potential of single cells. What distinguishes her research group is their holistic approach, examining tumor cells, stroma, immune cells, and vasculature together to gain a comprehensive view of cancer and its progression.

As natural and biodegradable agents, enzymes are key to advancing sustainable, green manufacturing practices across diverse sectors. These natural protein catalysts facilitate oxidation reactions by transferring oxygen or electrons, speeding the reactions up so that a wide variety of products can be made without using high temperatures or harsh chemicals, as Professor John Woodley explains.

The majority of oxidation reactions carried out in industrial chemistry involve the use of environmentally harmful oxidants, while the reactions themselves are not particularly selective. This means many of these reactions are not sustainable. Nevertheless, oxidations are amongst the most important chemical reactions in industry. Fortunately, enzymes found in nature could provide a more sustainable way of catalysing some of these reactions (for example in producing pharmaceuticals), and researchers are exploring their wider potential. “There is a lot of interest in using enzymes to carry out and catalyse reactions in industrial reactors and process plants,” explains John Woodley, Professor of Chemical and Biochemical Engineering at the Technical University of Denmark (DTU), and Principal Investigator of the FLUIZYME project. Enzymes catalyse a wide variety of reactions in the natural world, but they may lose their structure and stability in an industrial reactor, which will then affect how they function. “Enzymes are large molecules, made up of lots of amino acids, which form into a particular structure (or shape). Enzymes need to maintain their structure in order to maintain stability,” says Professor Woodley. “And when an enzyme loses stability, it can no longer perform at the same level as it did initially.”

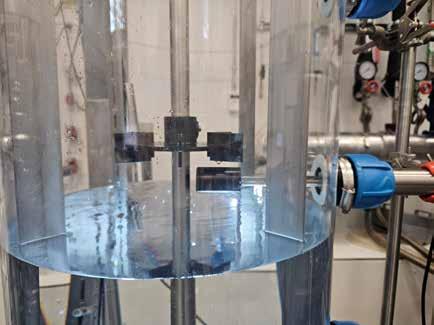

This may occur naturally, as a result of higher temperatures or changes in the pH of the solution for example, while other factors may also be involved. The FLUIZYME project team is investigating whether the rate at which this transition takes place is accelerated in industrial environments, using scale-down apparatus designed to mimic the conditions in the larger reactors. “For example, we put bubbles containing oxygen in at the bottom of a vertical tube, which then rise to the top. We do this in a very defined way, so we know there’s a bubble coming at a specific point, and then a second will come later on,” outlines Professor Woodley. The bubbles rise to the top and the enzyme inside the tube is then exposed to the bubble, with Professor Woodley and his colleagues investigating changes in enzyme stability in this environment. “We know the size of the bubbles and how much protein is in there as well. We can measure the activity of the enzyme before and afterwards, and we can also look at the structure of the enzyme before and afterwards, and so on,” he says. “We expose enzymes to these different conditions for periods of around 70 hours, and over that time we see that they lose structure and therefore stability.”
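Measuring activity before and after a fixed exposure time lends itself to a simple stability estimate. The sketch below assumes first-order deactivation, A(t) = A0 · exp(−k_d · t), which is a common textbook simplification and not necessarily the model used in the FLUIZYME project. The function names and the activity values are hypothetical.

```python
import math


def deactivation_constant(a0, a_t, hours):
    """Estimate k_d (per hour) from activity before (a0) and after (a_t).

    Assumes first-order decay, A(t) = A0 * exp(-k_d * t). This is an
    illustrative simplification, not the project's own model.
    """
    if a0 <= 0 or a_t <= 0 or hours <= 0:
        raise ValueError("activities and exposure time must be positive")
    return math.log(a0 / a_t) / hours


def half_life(k_d):
    """Time (hours) for activity to halve under first-order decay."""
    if k_d == 0:
        return math.inf  # no measurable loss of activity
    return math.log(2) / k_d


# Hypothetical example: activity drops from 100 to 50 units over 70 hours.
k_d = deactivation_constant(100.0, 50.0, 70.0)
print(f"k_d = {k_d:.4f} per hour, half-life = {half_life(k_d):.1f} hours")
```

Comparing half-lives with and without bubbling, under otherwise identical conditions, would be one way to express how much the gas-liquid interface accelerates the loss of activity.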

The project team works on many enzymes, but mostly oxidases such as NADPH oxidase and HMFO oxidase, alongside a number of variants. One of the standard ways of measuring the stability of these enzymes has been to heat them up and observe what happens to the structure until it reaches its melting temperature, at which it essentially falls to pieces. “To date, this so-called melting temperature has been used as an indicator of the stability of an enzyme. Now we are trying with these variants to measure their melting temperatures, and to correlate that with their stability in this bubble apparatus,” explains Professor Woodley. This approach has already yielded some interesting insights. “We’ve seen that an enzyme in solution gets pulled towards the bubble, and likes to stick on the bubble. This may not seem surprising in itself, but this happens to quite a marked degree,” continues Professor Woodley. “We think that hydrophobic amino acids buried inside the enzyme are dragged towards the gas-liquid interface (bubble surface). This then has the effect of pulling the enzyme out of solution, so that it is no longer available for reactions to take place.”

“Enzymes are large molecules, made up of lots of amino acids, which form into a particular structure or shape. Enzymes need to maintain their shape in order to catalyse reactions.”

This then raises the question of whether introducing a substance like a surfactant to the solution would have a positive effect, so that an enzyme would no longer stick to the bubble, which could be an interesting avenue of investigation. In future, Professor Woodley and his colleagues plan to test many more enzymes, and build a mathematical model of their behaviour. “We are in the process of building a model which can be used to make predictions. We hope to be able to predict whether a particular enzyme with certain properties will have problems with the bubbling, or if we need to control the bubbling in some way,” he says. This is with a view to maintaining the stability of an enzyme and enabling more efficient industrial biocatalysis. “A big part of the cost of implementing industrial biocatalysis processes relates to enzyme stability. In many cases, the more stable an enzyme is, the lower the process cost,” continues Professor Woodley. “This research is very relevant to the industrial application of enzymes.”

The wider backdrop to this research is the challenge of developing more environmentally friendly and sustainable methods of producing valuable chemicals (including pharmaceuticals). Biocatalysis represents a more sustainable approach than existing methods, yet Professor Woodley says it remains difficult to run a large-scale process. “Biocatalysis takes place in water, and the solubility of oxygen in water is

for enzymes,” he explains. The project’s work holds wider importance in terms of running any process with proteins on larger scales, and Professor Woodley is looking to share their findings more widely. “A lot of the processes use proteins as catalysts (enzymes) or produce proteins as products. Potential exposure to air is always a risk in stirred tanks, so it’s a very big issue,” he says. “We need to understand how to avoid the unnecessary effects of air drawn from the surface and also how to supply oxygen to oxidation reactions. This is a very different kind of environment to those typically considered in enzymology.”

This research could ultimately help improve the design of bioprocess plants, reduce the cost of implementing industrial processes, and address concerns around the sustainability of the chemical and biotechnology industries. Alongside these more long-term considerations, Professor Woodley is also keen to utilise the apparatus developed in the project, and contribute to the goal of building a deeper picture of the factors that affect the stability of different

Understanding the Effect of Non-natural Fluid Environments on Enzyme Stability

Project Objectives

Fluizyme is an Advanced ERC project with the overall objective of understanding the effect of new-to-nature conditions on enzyme stability. Such conditions occur when biocatalytic reactions are scaled up in industry, in large tanks with fluctuating concentrations and often with interfaces (gas-liquid and liquid-liquid). In particular, the focus in this project is on understanding the effect of the gas-liquid interface on the stability of oxygen-dependent enzymes, such as oxidases.

Project Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under Grant agreement No 101021024.

Project Participants

DTU Chemical Engineering

Contact Details

Project Coordinator, Professor John Woodley

DTU Chemical Engineering

Department of Chemical and Biochemical Engineering

Søltofts Plads 228A

2800 Kgs. Lyngby

Denmark

T: +45 5350 3747

E: jw@kt.dtu.dk

W: https://www.kt.dtu.dk/research/prosys/projects#fluizyme

John Woodley is Professor of Chemical Engineering at the Technical University of Denmark. He has a PhD from University College London (UK) and, after gaining industrial and academic experience, moved to Denmark in 2007. He leads research at the interface of process and protein engineering, and has a long-standing interest in biocatalysis, where enzymes are used for the synthesis of valuable chemicals. A dedicated teacher, he has educated more than 60 PhD students. He was co-chair of the GRC Biocatalysis conference in 2016 (USA) and will be co-chair of the EIC Enzyme Engineering conference in 2025 (Denmark). John is a Fellow of the Royal Academy of Engineering (UK).

New network models have been developed over recent years that could help researchers gain deeper insights into the spread of disease. We spoke to Professor Johannes Lengler about his work in refining geometric inhomogeneous random graphs (GIRGs), and how they could improve epidemic modelling and inform more effective quarantine strategies.

A variety of highly sophisticated network models have been developed over the years to look at the dynamics of social interaction, for example the way that rumours filter through a social network and how diseases spread across populations. One prominent type is inhomogeneous network models, which can generate clear results. “For example, we see with inhomogeneous network models that information can spread very fast,” outlines Johannes Lengler, Professor of Computer Science at ETH Zurich. Another type is geometric models, which, while capturing certain aspects of social networks in the real world, may generate results that contradict those from inhomogeneous network models. “For example, if you look at the spread of epidemics, then it’s extremely fast if you look at the inhomogeneous models, then it’s very slow if you look at the other type, the geometric models. So this raises the question, which is the right one?” asks Professor Lengler.

This is a topic Professor Lengler is exploring further in the DynaGIRG project, an initiative backed by the Swiss National Science Foundation (SNSF), in which he and his colleagues are looking at the dynamics of social interaction in network models that combine these characteristics. This research centres around geometric inhomogeneous random graphs (GIRGs), a new type of network model which Professor Lengler says is highly flexible. “There is a lot of freedom in the model definition, and it’s quite a robust model,” he explains. In the project Professor Lengler is working to refine these GIRGs and build a deeper understanding of their component structure. “I am taking the GIRG model, trying to improve it, and to make it even more flexible,” he continues. “Another part involves looking at certain processes on the model, including bootstrap percolation, routing and the progression of epidemics.”
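To make the idea of combining inhomogeneity with geometry concrete, here is a minimal sketch of how a GIRG-like network could be sampled. It is an illustration under stated assumptions, not the project's own code: the function names `sample_girg` and `torus_distance`, the parameter names `tau` (power-law exponent of the weights) and `alpha` (how sharply distance suppresses edges), and the choice of a unit torus with the maximum norm are all assumptions made here. The connection rule follows a commonly used form in which the probability of an edge grows with the product of the two vertex weights and shrinks with their distance.

```python
import random


def torus_distance(x, y):
    """Maximum-norm distance between two points on the unit torus."""
    return max(min(abs(a - b), 1.0 - abs(a - b)) for a, b in zip(x, y))


def sample_girg(n, tau=2.5, alpha=2.0, dim=2, seed=0):
    """Sample a toy GIRG-like graph; returns (weights, positions, adjacency)."""
    rng = random.Random(seed)
    # Inhomogeneity: power-law weights (minimum 1) via inverse-transform sampling.
    weights = [(1.0 - rng.random()) ** (-1.0 / (tau - 1.0)) for _ in range(n)]
    # Geometry: positions drawn uniformly from the unit torus [0, 1)^dim.
    positions = [tuple(rng.random() for _ in range(dim)) for _ in range(n)]
    total_weight = sum(weights)
    adjacency = [[] for _ in range(n)]
    for u in range(n):
        for v in range(u + 1, n):
            dist = max(torus_distance(positions[u], positions[v]), 1e-12)
            # Heavy vertices connect far away; light vertices only connect nearby.
            ratio = weights[u] * weights[v] / (total_weight * dist ** dim)
            p = 1.0 if ratio >= 1.0 else ratio ** alpha
            if rng.random() < p:
                adjacency[u].append(v)
                adjacency[v].append(u)
    return weights, positions, adjacency


if __name__ == "__main__":
    weights, positions, adjacency = sample_girg(500)
    degrees = sorted(len(neighbours) for neighbours in adjacency)
    print("median degree:", degrees[len(degrees) // 2], "max degree:", degrees[-1])
```

In this sketch, smaller values of `tau` give heavier-tailed weights and therefore more pronounced hubs, while larger values of `alpha` make long-distance edges between light vertices rarer. The quadratic loop over all pairs is only suitable for small experiments.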

The project’s agenda also includes research into how information is transmitted through social networks, a topic addressed in Stanley Milgram’s famous ‘small-world’ experiment in 1967. In this experiment two people were randomly selected in two entirely different locations, and one was asked to send a letter to the other, with only limited information. “They knew their address and a little personal information, but person A was not allowed to send the letter to person B directly. They were only allowed to send the letter to one of their friends, who was then asked to send a letter on to one of their friends, and so on. Was it possible with such a chain to reach B?” says Professor Lengler. This was indeed found to be possible, and letters needed just six hops on average before they reached their intended recipient. “Even if you have only a little information, you will be able to find a friend who is better at finding the intended recipient,” continues Professor Lengler.

This routing process has been modelled on GIRGs, and researchers have been able to essentially reconstruct the chain of intermediaries which passed on the letters, as well as to analyse what happens in each of the different phases. As part of his work in the project, Professor Lengler is now looking to check whether these insights also hold in terms of how information filters through social networks. “The most obvious question is, how many steps does it take to get to the intended recipient? We can also look at the route of messages and what happens when a lot of messages are sent. Do all these paths go through the same few vertices in the model, or are all these paths very different from each other?” says Professor Lengler. “We have found that these messages do indeed all go through a very small set of well-connected vertices, which is what we would expect to see in social networks.”
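The letter-forwarding process described above can be sketched as a greedy routing rule: each holder passes the message to whichever friend looks best placed to reach the recipient. The sketch below is a simplified illustration, not the protocol analysed in the project. The function name `greedy_route`, the scoring rule (vertex weight divided by a power of the distance to the target) and the dead-end behaviour are assumptions made for this example; it expects a graph given as adjacency lists together with a position and a weight for every vertex.

```python
def torus_distance(x, y):
    """Maximum-norm distance between two points on the unit torus."""
    return max(min(abs(a - b), 1.0 - abs(a - b)) for a, b in zip(x, y))


def greedy_route(adjacency, positions, weights, source, target):
    """Forward a message greedily; return the path, or None at a dead end."""
    dim = len(positions[target])

    def score(v):
        # Assumed objective: prefer well-connected vertices close to the target.
        if v == target:
            return float("inf")
        dist = max(torus_distance(positions[v], positions[target]), 1e-12)
        return weights[v] / dist ** dim

    path = [source]
    current = source
    while current != target:
        if not adjacency[current]:
            return None  # nobody to pass the message to
        best = max(adjacency[current], key=score)
        if score(best) <= score(current):
            return None  # no friend is better placed than the current holder
        path.append(best)
        current = best
    return path


if __name__ == "__main__":
    # Five people on a one-dimensional torus; vertex 2 is a well-connected hub.
    positions = [(0.0,), (0.1,), (0.3,), (0.45,), (0.5,)]
    weights = [1.0, 1.0, 5.0, 1.0, 1.0]
    adjacency = [[1], [0, 2], [1, 4, 3], [2, 4], [2, 3]]
    print(greedy_route(adjacency, positions, weights, source=0, target=4))
```

Because the score must strictly increase at every hop, the message can never revisit a vertex, so the loop always terminates. Counting how often each vertex appears across many such paths is one simple way to check whether routes concentrate on a small set of well-connected vertices.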

A parallel can be drawn here with the epidemiology case, where a single individual may play a particularly significant role in the spread of a disease, a so-called super-spreader. The first step towards becoming a super-spreader is to meet a lot of people, yet Professor Lengler says other factors are also involved. “A person can be a super-spreader if they have a high viral load. However, it’s not the case that if you have 100 times more contacts then you will also spread a disease to 100 times more people, and we study the effects of this,” he says. The project has largely focused on the role of people in spreading disease, but Professor Lengler also intends to look at the importance of events and meetings in future. “One type of meeting would be between close-by vertices, where one vertex invites others, and usually they know each other,” he continues. “Another type of event is where vertices which are very different from each other come together.”

The project’s research holds wider relevance in terms of understanding how diseases spread and in developing quarantine strategies to control or limit their transmission. Epidemiological models were used extensively during the Covid-19 pandemic for example to assess the likely impact of restrictions on the spread of the disease. “If you impose restrictions on flights, if you try to force vertices not to move very far but otherwise don’t restrict them very much, does this change the dynamics of disease spread?” outlines Professor Lengler. The GIRG models can provide a more detailed picture than standard epidemic models in this respect. “Standard epidemic models

Dynamic Processes on Geometric Inhomogeneous Random Graphs

Project Objectives

The project aims to understand how epidemics and other dynamic processes spread in networks that combine inhomogeneity with clusterlike characteristics. In particular, researchers aim to understand under which circumstances processes spread exponentially, and in which circumstances they spread even faster or slower.

Project Funding

This project is funded by the SNSF.

Contact Details

Project Coordinator,

Prof. Dr. Johannes Lengler

ETH Zürich

Department of Computer Science

OAT Z 14.1

Andreasstrasse 5 8050 Zürich

E: johannes.lengler@inf.ethz.ch

W: https://as.inf.ethz.ch/people/members/ lenglerj/index.html

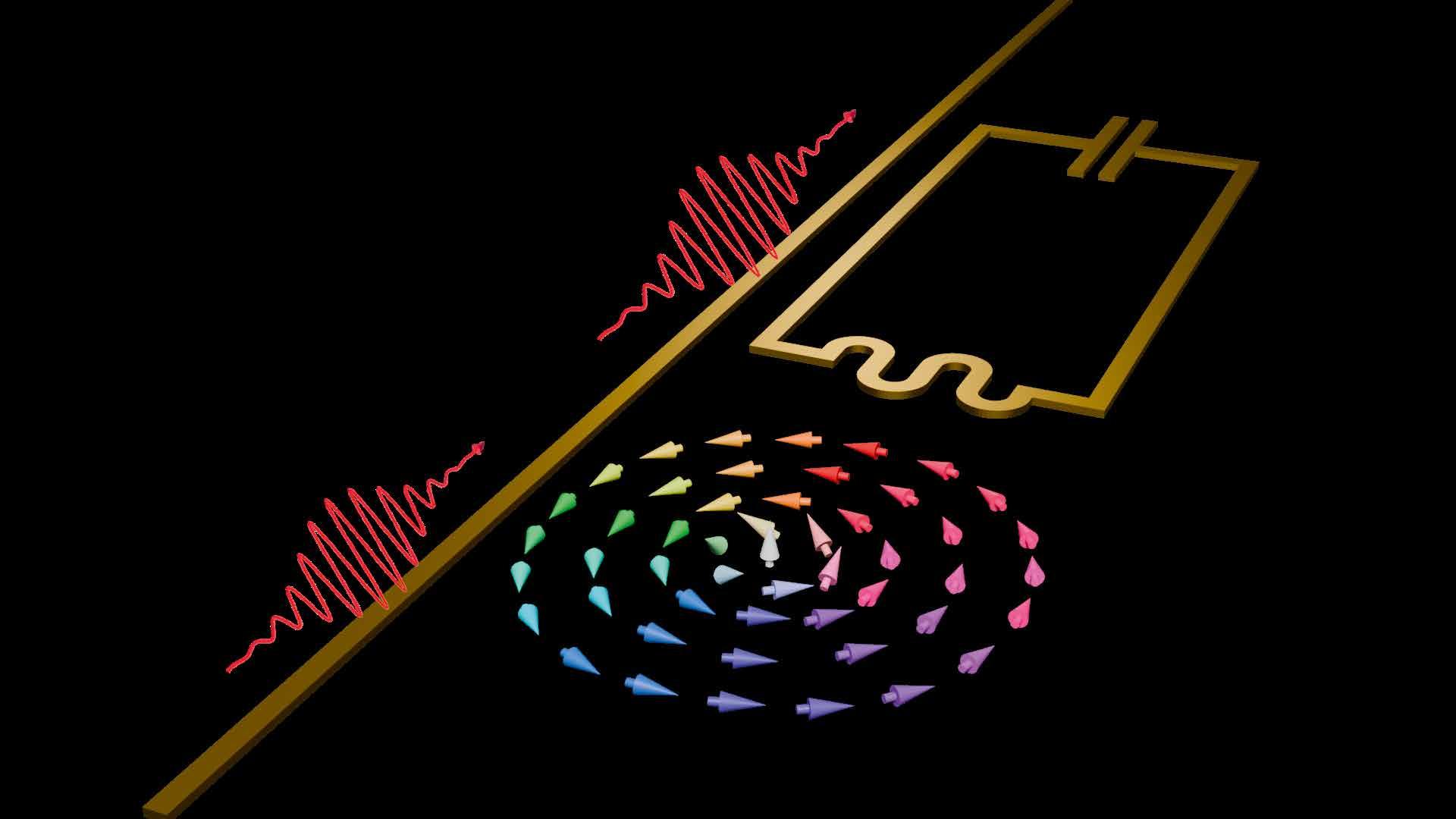

Depending on the parameters, the macroscopic structure of the networks differs. Left: strong heterogeneity. Shortest paths mostly use nodes with many neighbors. Right: weak heterogenity. Shortest paths mostly use nodes with few neighbors. Image created by Joost Jorritsma.

build on the hidden assumption that when one vertex infects another, then this new vertex is found at random from the whole population. So if someone in the UK infects someone else there, then this second person is just uniformly at random, you will not see geometric information,”
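The effect of the uniform-mixing assumption can be seen in a toy simulation. The sketch below is a deliberate caricature rather than any model used in the project: the function name `simulate_spread`, the ring layout and the rule that every infected vertex makes exactly one contact per round are assumptions chosen for brevity. With contacts drawn uniformly from the whole population, the number of infected vertices roughly doubles each round at first; with contacts restricted to direct neighbours on a ring, it can grow by at most two per round.

```python
import random


def simulate_spread(n, rounds, geometric, seed=0):
    """Toy infection process: each infected vertex makes one contact per round.

    Returns the number of infected vertices after each round (index 0 = start).
    """
    rng = random.Random(seed)
    infected = {0}
    counts = [1]
    for _ in range(rounds):
        newly_infected = set()
        for v in infected:
            if geometric:
                # Geometric contact: a direct neighbour on a ring of n vertices.
                contact = (v + rng.choice((-1, 1))) % n
            else:
                # Uniform mixing: anyone in the population, chosen at random.
                contact = rng.randrange(n)
            newly_infected.add(contact)
        infected |= newly_infected
        counts.append(len(infected))
    return counts


if __name__ == "__main__":
    print("uniform mixing:", simulate_spread(10_000, 10, geometric=False))
    print("ring contacts: ", simulate_spread(10_000, 10, geometric=True))
```

Models with both geometry and inhomogeneity sit between these two extremes, which is why they can reproduce either kind of growth depending on their parameters.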

“The spread of epidemics is extremely fast if you look at the inhomogeneous models, then it’s very slow if you look at the geometric models. So this raises the question, which is right?”

Johannes Lengler is a Professor in the Department of Computer Science at ETH Zurich. He gained his PhD at the University of Saarland before moving to Zurich, and has conducted research in both pure and applied mathematics.

This geometric information was not typically available in the models used during the Covid-19 pandemic, a major motivation behind Professor Lengler’s work. The hope is that these new models will add a further dimension in epidemiology and allow researchers to gain a fuller picture of how diseases spread. “We have looked at how geometry affects the spread of a disease. It may or may not have a very strong impact, and this depends on the characteristics of the disease and the contact network,” says Professor Lengler. Some infections are known to spread exponentially, but there are also others which grow polynomially, and Professor Lengler says GIRGs can be used in both of these different scenarios. “These models can show both types of behaviours. Earlier models had a lot of trouble modelling infections like Ebola or AIDS, because they did not have the geometry in them, while the GIRG model takes this into account,” he explains.

The evidence suggests that GIRGs are highly effective in terms of replicating the features of real-world social networks, and Professor Lengler is now actively working to improve them further. One major topic of interest is assortativity, the tendency for vertices to associate with others with similar properties. “This is about the extent to which vertices are sorted by similarities of degrees. How does this distribution compare to a baseline? For a popular vertex, do we see a lot more vertices in a neighbourhood which are also popular?” outlines Professor Lengler. The overall picture seems to be that there is a high degree of positive assortativity in social networks, where for example stars associate with other stars, while negative assortativity has been observed in certain technological networks. “The central nodes and servers here tend not to connect so much to other central nodes and servers, but rather to low degree and low weight nodes,” continues Professor Lengler.

The GIRG model as currently defined is entirely neutral with respect to assortativity, a topic that Professor Lengler and his colleagues hope to address in future. The idea will be to develop a method to control assortativity. “We can then have strong positive assortativity, or strong negative assortativity in a model,” says Professor Lengler. This would add a further dimension to GIRGs, and open up deeper insights into not just how diseases spread, but also other scenarios. “We can look at how two competing opinions spread in the network for example, opinions A and B. Let’s say that a vertex changes, perhaps with a bit of randomness, to the majority opinion in a neighbourhood. Can there be bubbles where both of these opinions are stable?” continues Professor Lengler. “This can happen in some types of network, while in others this would be unstable, and one opinion would take over in the end.”
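The competing-opinions scenario Professor Lengler describes, in which a vertex switches to the majority opinion in its neighbourhood with a bit of randomness, can be sketched in a few lines. This is an illustrative toy under stated assumptions, not the project's model: the function name `majority_step`, the synchronous update, the `noise` parameter and the tie-breaking rule (keep the current opinion) are all choices made for this example.

```python
import random


def majority_step(adjacency, opinions, noise, rng):
    """One synchronous round of noisy majority dynamics with opinions 'A' and 'B'."""
    updated = []
    for v, neighbours in enumerate(adjacency):
        if rng.random() < noise:
            # A bit of randomness: occasionally pick an opinion at random.
            updated.append(rng.choice("AB"))
            continue
        votes_a = sum(1 for u in neighbours if opinions[u] == "A")
        votes_b = len(neighbours) - votes_a
        if votes_a > votes_b:
            updated.append("A")
        elif votes_b > votes_a:
            updated.append("B")
        else:
            updated.append(opinions[v])  # tie: keep the current opinion
    return updated


if __name__ == "__main__":
    rng = random.Random(0)
    # Two tightly knit groups of four, joined by a single edge between 3 and 4.
    adjacency = [
        [1, 2, 3], [0, 2, 3], [0, 1, 3], [0, 1, 2, 4],
        [3, 5, 6, 7], [4, 6, 7], [4, 5, 7], [4, 5, 6],
    ]
    opinions = list("AAAABBBB")
    for _ in range(5):
        opinions = majority_step(adjacency, opinions, noise=0.0, rng=rng)
    print("".join(opinions))
```

In the two-group example each group reinforces its own opinion, so both opinions persist as stable bubbles when there is no noise. On a densely connected network with a clear initial majority, the same rule lets one opinion take over.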

The left atrial appendage is often closed by doctors performing open-heart surgery to reduce the risk of stroke for patients with atrial fibrillation, yet it’s not clear whether this can prevent strokes in patients who have not been diagnosed with the condition. The LAACS-2 team aim to gain a fuller picture of whether this operation protects against stroke, as Dr Helena Dominguez explains.

A condition characterised by an irregular cardiac rhythm, atrial fibrillation can lead to the development of blood clots in the upper chamber of the heart, which may provoke a stroke. It has long been common practice for surgeons to close the left atrial appendage, a kind of cul-de-sac in the heart that doesn’t seem to be necessary for cardiac function, as a preventative measure. “It’s known that clots can build up in the left atrial appendage, and surgeons have been cutting it away for many years,” says Dr Helena Dominguez. The LAAOS-3 study showed that this procedure is an effective preventive measure in patients with atrial fibrillation. In an earlier study, Dr Dominguez and her colleagues investigated whether this procedure might also protect patients without atrial fibrillation from stroke. “In our study we investigated whether left atrial appendage closure by surgery (LAACS) prevented damage to the brain regardless of whether atrial fibrillation had been diagnosed before surgery, looking at the endpoints of stroke or new infarctions in CT and MRI scans,” she outlines. “We found a difference between the group who had undergone the LAACS procedure, and those who hadn’t.”

“It’s known that clots can build up in the left atrial appendage, and surgeons have been cutting it away for many years.”

looking at the incidence of strokes. Biology samples have been taken from the left and the right heart appendages in a subgroup of patients, to investigate whether there are structural changes that render the heart prone to building up clots, even in the absence of atrial fibrillation.