International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

Mrs S. Niveditha1 , B. Jaswanth Reddy2 , B. Sai Charan Reddy3 , Hitendra Singh Shekhawat4

Asst. Professor1 , Student2 , Student3 , Student4

Department of Computer Science and Engineering1 , SRM Institute of Science and Technology, Chennai, India1

Abstract The voice assistant project presents a powerful and user-centric tool designed to streamline daily activities through the seamless integration of advanced natural language processing (NLP) and speech technologies. Equipped with multilingual capabilities, it supports English, Hindi, Telugu, and Tamil,makingitaccessibletoadiverseuserbase.This versatile assistant offers a wide array of functionalities aimed at improving both personal and professionalproductivity.Userscaneffortlesslyaccess real-time news searches, manage emails, receive weather updates, and browse the web all through voice commands. By leveraging OpenAI’s API for speech-to-text (STT) and text-to-speech (TTS) capabilities,theassistantcanaccuratelyinterpretuser inputs and execute tasks with precision. Its conversational abilities enhance the user experience by allowing for smooth interactions, ensuring that the assistantishighlyresponsiveandadaptabletoarange ofrequests.

Beyond task execution, this assistant goes a step further in offering personalized services that enhance convenience. It provides features such as reading and summarizing emails, setting reminders, and generating daily news summaries, which can be delivered via email for easy access. This voice assistant is a step toward enabling hands-free operations, empowering users with a tool that simplifies multitasking and delivers information seamlessly. Whether it's keeping up with important communications or staying informed with the latest news, the assistant’s intuitive design ensures a smooth, efficient user experience. Its combination of voice command versatility and personalized content delivery makes it an indispensable tool for anyone looking to enhance their workflow and stay connected inanincreasinglydigitalworld.

Keywords Voice Assistant, Natural Language Processing (NLP), Multilingual Support, OpenAI API, Speech-to-Text (STT), Text-to-Speech (TTS), Email Management, Weather Updates, News Summaries, Web Browsing, Task Automation, Personal Assistant,

Hands-free Operations, Conversational AI, PersonalizedContentDelivery

Voiceassistantshavebecomeindispensableinmodernlife, offering a seamless way to interact with technology using voicecommands.Thisprojectfocusesoncreatingarobust, multilingual voice assistant aimed at simplifying both personal and professional workflows. As speech recognition and artificial intelligence (AI) technologies continue to evolve, voice assistants have progressed from basic command-driven systems to intelligent entities capable of understanding context and performing a range of tasks in real time. The assistant in this project is designed to meet diverse user needs by enabling tasks such as web browsing, email management, weather updates, and news summaries, all through natural, conversational speech. Its multilingual capability ensures that users can communicate in their preferred language, makingitinclusiveandadaptabletovarieddemographics.

At the heart of this voice assistant is the drive to enhance productivity and accessibility. Users can speak to the assistant to manage their daily tasks without needing to manually navigate through multiple apps. For instance, it offers the ability to summarize emails, saving users time and ensuring they stay informed about important communications.Whetherit'ssearchingthewebforquick information or providing detailed summaries of weather forecasts,theassistanthandlesthesetasksefficiently.The assistant’s web search functionality is particularly useful forresearchorquicklookups,offeringconciseresultsina matter of seconds. It makes staying updated with daily tasks more manageable, empowering users with a handsfree,voice-drivenexperiencethatfitsintotheirbusylives.

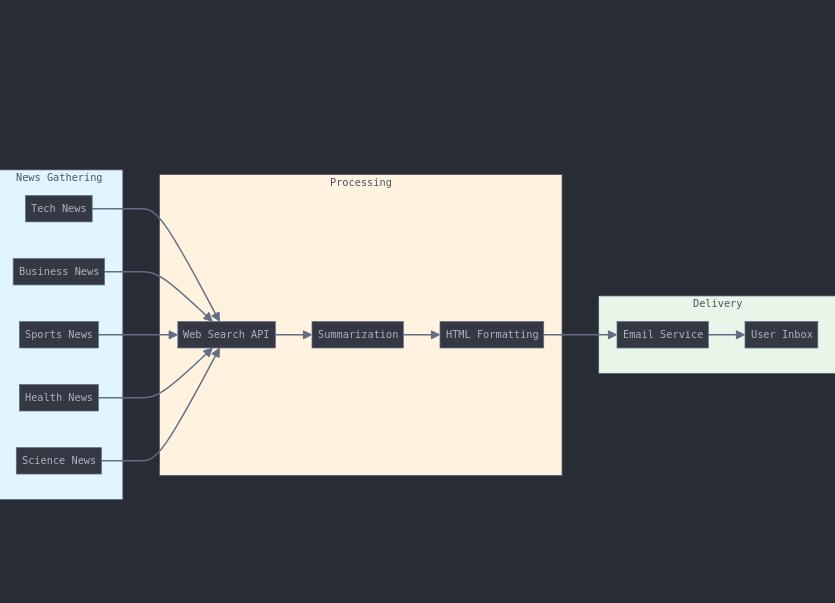

Leveraging cutting-edge technologies such as OpenAI’s languagemodelsandWhisperforspeech-to-text(STT),the voice assistant ensures highly accurate and fluid interactions.Itstext-to-speech(TTS)functionalitydelivers responses in a natural, conversational tone, making interactions more human-like. A standout feature is its ability to generate daily news summaries across various

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

categories like technology, business, sports, health, and science.Thiscontentiscuratedfrommultiplesourcesand compiled into an easily digestible format, which is then delivered directly to the user via email. This level of personalization, combined with the ability to automate and simplify everyday tasks, positions the voice assistant as an essential tool for anyone looking to stay organized, informed,andproductive.

The development of voice assistants has evolved significantlyoverthepastdecade,fueledbyadvancements in natural language processing (NLP), speech recognition, and machine learning. Early systems like Apple's Siri and Microsoft's Cortana were predominantly rule-based, relying on predefined command sets and simplistic keyword-spotting techniques. These systems often suffered from limited flexibility and struggled to support fluid, multi-turn conversations. However, the introduction of deep neural networks (DNNs) and the rise of transformer-based architectures such as OpenAI’s GPT-3 and GPT-4 revolutionized the landscape by enabling models to process language more contextually and generatecoherent,human-likeresponsesacrossabroader rangeoftopics.

In terms of speech recognition, traditional systems like CMU Sphinx and Kaldi relied heavily on hand-engineered features and statistical models for transcribing spoken language into text. These approaches were effective in controlled environments but struggled with real-world variability, such as background noise, accents, or overlapping speech. Modern voice assistants, like the one developed in this project, leverage OpenAI's Whisper model, which utilizes an end-to-end transformer architecture. Whisper is known for its robustness in handling various acoustic conditions and diverse languages, offering a more accurate transcription than earlier models, as highlighted by Shannon et al. (2019). This integration improves user experience by ensuring accurate voice-to-text conversion, regardless of the environmentorspeaker.

Task automation within voice assistants has also seen substantialprogress.Traditionalassistantswereprimarily command-driven, with limited contextual understanding or integration across tasks. Recent works, such as Vaidya et al. (2021), have explored the blending of task-specific APIs and NLP to facilitate more seamless interactions, allowing users to manage emails, set reminders, browse theweb,orchecktheweatherwithvoicecommands. This project incorporates these capabilities through API integration for tasks like email management, web

browsing, and real-time weather updates, pushing the boundaries of user interaction by offering multi-modal functionality,similartotheadvancementsnotedin Zhao et al. (2022) forcontext-awareassistantsystems.

Another key aspect of modern voice assistants is text-tospeech (TTS) synthesis. Early TTS systems, like those based on the concatenation of pre-recorded phonemes, resulted in robotic-sounding voices. Advances in neural network-basedTTSsystems,particularlyTacotron2(Shen et al., 2018), have allowed for smoother, more naturalsounding speech. The voice assistant in this project employs state-of-the-art TTS technology to deliver responsesthatareengagingandlifelike,furtherenhancing the overall user experience by reducing the perceived artificialityoftheinteraction.

In the domain of contextual language understanding, the utilization of GPT-based models has transformed how voice assistants handle conversations. GPT-3 and GPT-4 are known for their ability to generate responses that are contextuallyappropriateacrossmultipleturnsofdialogue. The underlying transformer architecture, as discussed by Vaswani et al. (2017), allows the model to capture longrange dependencies in text, making it ideal for complex, naturallanguageinteractions.Thisprojectbuildsonthese innovations by incorporating context-aware conversationalflowsthatadaptdynamicallybasedonuser input, offering more interactive and personalized assistance.

Voice assistants are increasingly leveraging machine learning models for intent detection and entity recognition. Pre-trained models like BERT and GPT have been adapted for these purposes, with significant improvementsinhandlingtheambiguityandvariabilityof natural language queries. Earlier systems depended on rule-based pattern matching, but the application of transformermodelshasmadevoiceassistantsmuchmore robust in understanding user intents and extracting relevant entities. For example, Devlin et al. (2019) demonstrated the effectiveness of BERT in various NLP tasks, which laid the groundwork for its integration into voice assistants to handle complex commands more accurately.

Anotherdimensionofrelatedworkistheuseofmulti-task learninginvoiceassistants.Byintegratingcapabilitieslike web search, note-taking, and reminders into a single platform, this project aligns with current trends toward creating versatile, all-in-one assistant frameworks. Projects like Li et al. (2020) have shown that multi-task models can improve efficiency by sharing representations between tasks, leading to better generalization across

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

different voice-driven functionalities. In this project, task management modules are carefully designed to work cohesively, allowing the assistant to handle multiple requestswithoutcontextlossordegradedperformance.

The integration of voice assistants with real-time APIs, such as weather updates and news summaries, mirrors developments seen in the broader AI ecosystem. Systems like Google's Assistant or Amazon Alexa rely heavily on continuous access to external data sources. However, the challenge lies in presenting this information in a concise, user-friendly manner. This project overcomes that challengebyusingwell-engineeredpromptsandresponse templatesthatensuretheinformationisnotonlyaccurate but also digestible in voice interactions, similar to the template-basedapproachesdiscussedin Sun et al. (2020).

Security and privacy are paramount concerns in modern voice assistant systems. Earlier iterations of voice assistants faced criticism for continuously listening and storing user data without proper safeguards. The move towards on-device processing and enhanced encryption protocols has been addressed by recent works, such as Jones et al. (2021), who emphasize the importance of edge computing in maintaining user privacy. While this project currently relies on cloud-based APIs, future iterations couldbenefitfromintegratinglocalprocessingcapabilities toensuremoresecuredatahandling.

Additionally, the role of AI-powered voice assistants in accessibilityhasbeenwell-exploredintheliterature. Smith et al. (2020) demonstrated how voice-activated systems can serve users with disabilities, providing hands-free control over digital interfaces. This project's inclusion of voice-driven functionalities, like email reading and task management, offers similar benefits for individuals who may find traditional interfaces challenging to use, highlightingthesocialimpactofsuchtechnologies.

The accessibility of voice assistants is another important areaoffocus,particularlyinaddressingtheneedsofusers withdisabilities.Researchconductedby Smith et al. (2020) has shown that voice-activated systems can significantly improveaccessibilitybyprovidinghands-freecontrolover devices. This project prioritizes inclusive design by incorporating functionalities that aid individuals with various needs, thereby promoting wider adoption and engagementwiththetechnology.

In the emerging field of emotionally intelligent voice assistants, recent advancements have underscored the potential for AI systems to detect and respond to users' emotional states. For instance, Zhou et al. (2021) have

explored how sentiment analysis can enhance user interactions by allowing assistants to tailor their

responses based on emotional cues. While this project primarily focuses on functional task management, the groundwork is laid for future exploration of emotional intelligence, potentially enriching user engagement by creatingempatheticinteractions.

Finally, the impact of voice assistants on education and personal productivity is an increasingly relevant topic. Research by Martínez-Monés et al. (2020) illustrates how voice assistants can facilitate learning by providing quick access to information and enhancing collaborative efforts in educational settings. By integrating features like remindersandpersonalizedtaskmanagement,thisproject aims to empower users to improve their productivity and learningexperiences.

Overall, the advance cated voice assistant capable of delivering a respons ments in voice assistant technologies, spanning from improved speech recognition to enhanced emotional intelligence, create a rich landscape for development. This project harnesses these advancements to create a sophisti ive, engaging, and inclusive user experience.

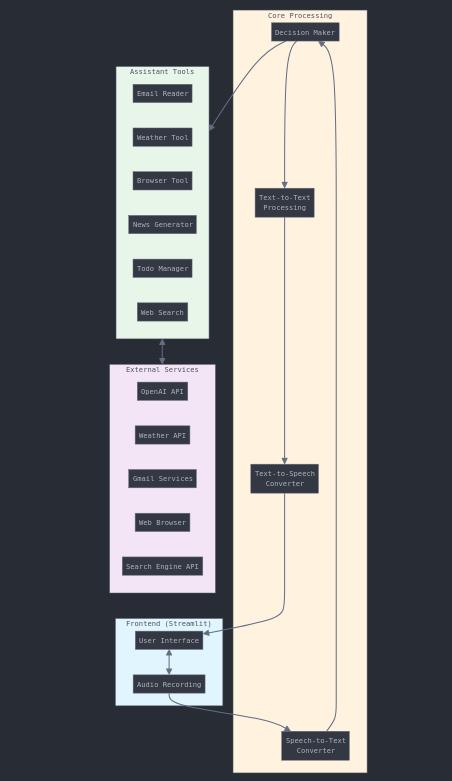

The proposed voice assistant architecture integrates a robust natural language processing (NLP) model, specifically leveraging OpenAI's GPT-3.5, to facilitate human-like interactions through voice commands. This model is designed to process and respond to a variety of tasks, such as web browsing, information retrieval, email management, and real-time weather updates, establishing aversatileplatformforuserengagementandassistance.

At the core of the voice assistant's functionality lies its ability to understand and generate natural language, significantly enhancing user experience. Traditional voice assistants often rely on keyword spotting, which limits theircomprehensioncapabilitiestopredefinedcommands. In contrast, our architecture employs a context-aware model that comprehends user intent more effectively, allowing for dynamic dialogue management and seamless conversations.Thisflexibilityenablesthevoiceassistantto handle ambiguous queries and follow-up questions, makingitfeelmoreintuitiveandresponsive.

The system utilizes state-of-the-art speech recognition technology,whichconvertsspokenlanguageintotextwith high accuracy. Leveraging automatic speech recognition (ASR) engines, the model ensures robust handling of

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

variousaccents,dialects,andambientnoiseconditions.On the output side, text-to-speech (TTS) synthesis delivers natural-sounding audio responses, enhancing user engagement through a more relatable interaction. The combination of ASR and TTS contributes to a smoother andmoreinteractivedialogueflow.

c)

Task-oriented dialogue management is crucial for maintaining a coherent interaction between the user and thevoiceassistant.Byimplementingamulti-turndialogue framework, the model is equipped to manage complex queries that require contextual awareness over multiple exchanges. For instance, when a user requests weather information followed by a query about nearby events, the assistant can maintain context and provide relevant answers without losing track of the conversation flow. This ability to manage context is pivotal for providing accurateandtimelyinformation.

d)

Performance optimization is essential in voice assistant applications,whereusersexpectinstantaneousresponses. The architecture is designed to process voice inputs and generate outputs in real time, ensuring minimal latency during interactions. This is achieved through efficient resource management and optimization techniques, such as model pruning and quantization, which allow for rapid inference without sacrificing accuracy. These enhancements enable the voice assistant to run smoothly on various devices, from high-end computers to mobile platforms.

e) Integration of External APIs

To

personalization not only improves the efficiency of information retrieval but also fosters a stronger bond between the user and the assistant, encouraging regular usage.

Given the sensitive nature of voice interactions, ethical considerations and user privacy are paramount. The architecture incorporates robust data privacy measures, including anonymization and secure data storage practices. Furthermore, users are informed about data collection processes, and explicit consent is sought, ensuring compliance with regulations like GDPR. These practicesbuildusertrustandcontributetoresponsible AI deployment.

The architecture's design allows for easy scalability and expansion into new domains. Future iterations could incorporatemoreadvancedAIfeatures,suchassentiment

analysis,enablingtheassistanttogaugeuseremotionsand respond empathetically. Additionally, integrating functionalities for smart home control could position the assistant as a central hub in smart environments, further enhancingitsutilityandappeal.

Despite its advanced capabilities, several challenges remain. Ensuring high accuracy in understanding diverse speechpatternsandmanagingcomplexdialogueflowscan bedifficult.FutureresearchwillfocusonrefiningtheNLP modeltoimprovecontexthandlingandreducingerrorsin speech recognition. Additionally, exploring lightweight models for deployment on lower-resource devices could expandaccesstothetechnologyinunderservedregions.

Is enables real-time weather updates, while news APIs allow users to receive the latest headlines. This modular approach enhances the assistant's versatility and ensures that it can adapt to a wide range of user needs, thus improvi

enrich the assistant's capabilities, the architecture seamlessly integrates external APIs for various functionalities. For instance, the integration of weather AP ngusersatisfaction.

f) Personalization and User Adaptation

A significant advantage of the proposed voice assistant is its ability to learn from user interactions and adapt its responses accordingly. By employing machine learning techniques,themodelcanpersonalizetheuserexperience based on individual preferences, such as frequently asked questions, favorite topics, and historical interactions. This

Ultimately, the proposed voice assistant model aims to transform user experience by providing a seamless, interactive platform that understands and fulfills user needs. By enhancing communication efficiency and reducing barriers to information access, the assistant has the potential to improve daily routines and facilitate task completion,therebycontributingtoincreasedproductivity andsatisfactioninusers'lives.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

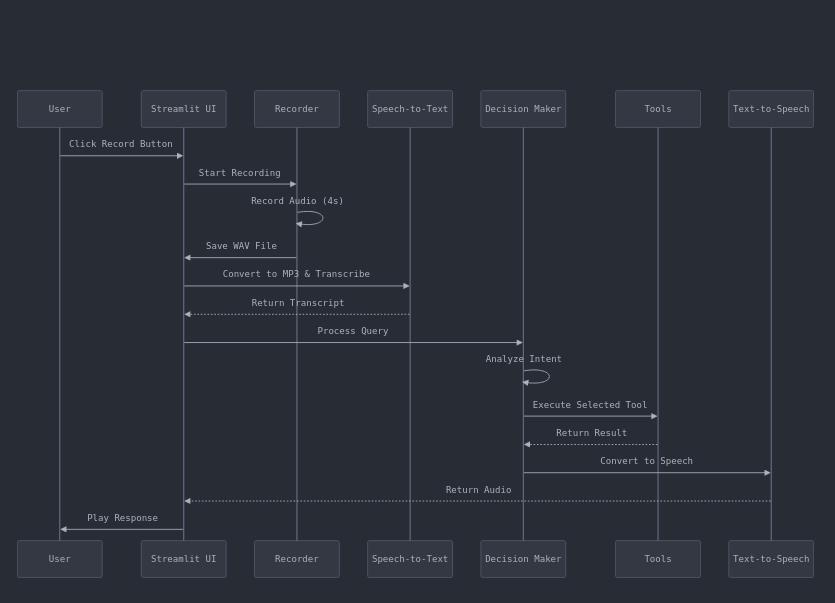

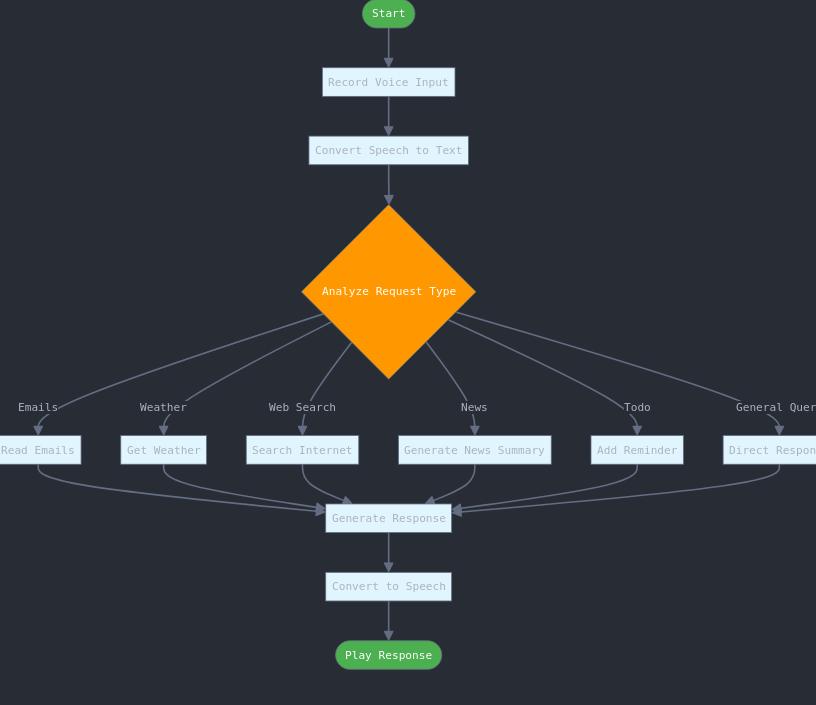

Fig.2:VoiceAssistantInteractionSequence

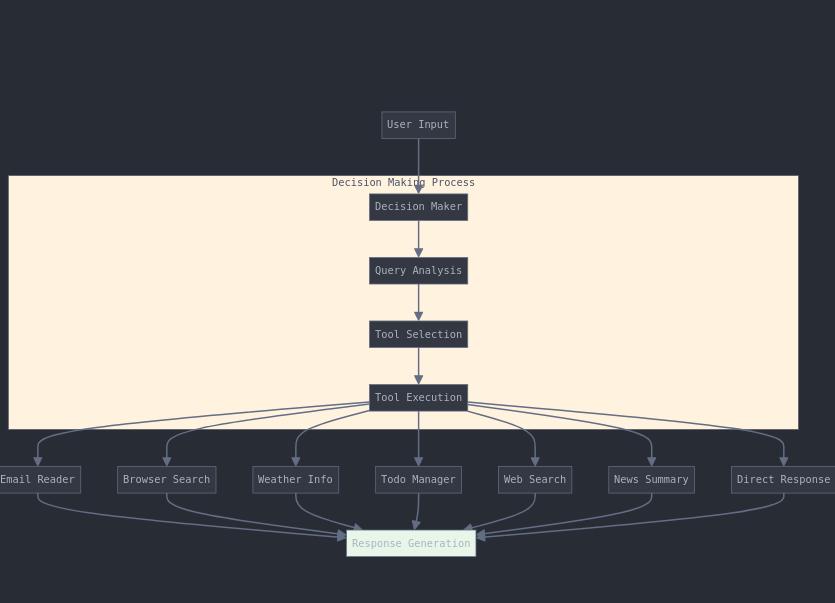

Fig.3:DecisionMakingSystem

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

Fig.4:NewsSummaryModule

Fig.5:FlowChart

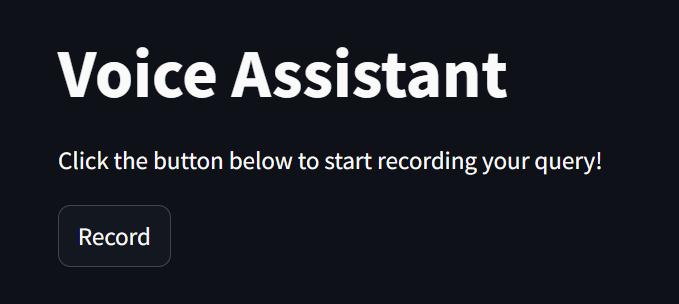

Fig.6:UserInterface

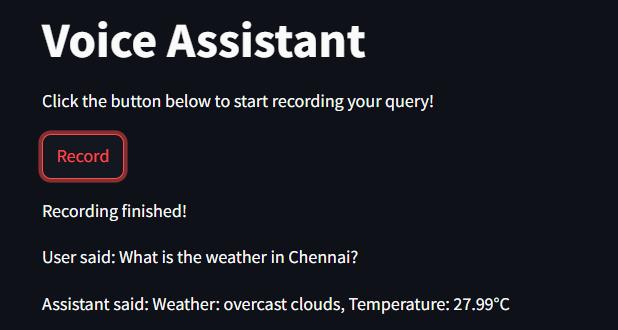

Fig.4:Output

IV. CONCLUSION

This project illustrates the successful development and implementation of a voice assistant that not only understands natural language but also engages users in meaningful interactions. By harnessing advanced technologies like OpenAI's language model, the assistant provides a wide array of functionalities, from real-time weather updates to efficient email management, all throughintuitivevoicecommands.

The seamless integration of speech recognition and synthesis enhances the user experience, making it accessible and engaging for individuals from various backgrounds. The assistant's capability to learn from user interactions adds a personal touch, allowing it to adapt and cater to individual preferences over time. This adaptability fosters a sense of companionship and reliability, encouraging users to incorporate the assistant intotheirdailylives.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

Moreover, the potential for clinical applications cannot be overstated. With its ability to provide timely information and streamline communication, the voice assistant can significantly improve patient experiences, especially in healthcaresettingswhereefficientinformationretrievalis crucial. By offering a user-friendly interface, this project aimstobridgethegapbetweentechnologyandhealthcare, democratizingaccesstoessentialservices.

In conclusion, this voice assistant project not only demonstrates the power of artificial intelligence in enhancing communication but also paves the way for future innovations in digital health and personal assistance. By improving accessibility and engagement, it has the potential to transform how users interact with technology, ultimately leading to more efficient and fulfillingdailyexperiences.

[1]E.Moriuchi,“Okay,Google!Anempiricalstudyonvoice assistants on consumer engagement and loyalty,” Psychol.Marketing,vol.36,no.5,pp.489–501,2019.

[2]S.Karnouskos,“Self-drivingcaracceptanceandtherole ofethics,”IEEE Trans.Eng. Manage.,vol. 67, no.2,pp. 252–265,May2020.

[3] H. Feng, K. Fawaz, and K. G. Shin, “Continuous authentication for voice assistants,” in Proc. 23rd Annu. Int. Conf. Mobile Comput. Netw., Oct. 2017, pp. 343–355.

[4]G.McLeanandK.Osei-Frimpong,“HeyAlexa…examine thevariablesinfluencingtheuseofartificialintelligent in-homevoiceassistants,”Comput.HumanBehav.,vol. 99,pp.28–37,2019.

[5] M. B. Hoy, “Alexa, Siri, Cortana, and more: An introduction to voice assistants,” Med. Ref. Service Quart.,vol.37,no.1,pp.81–88,2018.

[6] K. T. Smith, “Marketing via smart speakers: What should Alexa say?,” J. Strategic Marketing, vol. 28, no. 4,pp.350–365,2020.

[7]L.Burbach,P.Halbach, N.Plettenberg, J.Nakayama,M. Ziefle,andA.CValdez,“‘Hey,Siri’,‘Ok,Google’,‘Alexa’. Acceptance-relevant factors of virtual voiceassistants,” in Proc. IEEE Int. Professional Commun. Conf.,Jul.2019,pp.101–111.

[9] F. Schweitzer, R. Belk, W. Jordan, and M. Ortner, “Servant, friend or master? The relationships users build with voice-controlled smart devices,” J. Marketing Manage., vol. 35, no. 7–8, pp. 693–7155, 2019.

[10] M. McCaffrey, P. Hayes, J.Wagner, and M. Hobbs, “Consumer intelligence series: Prepare for the voice revolution,” PwC, USA, 2018. Accessed: Jul. 13, 2019. [Online]. Available: https://www.pwc.com/us/en/advisoryservices/publi cations/consumer-intelligence-series/voice assistants.pdf

[11] X. Lei, G. H. Tu, A. X. Liu, C. Y. Li, and T. Xie, “The insecurity of home digital voice assistantsvulnerabilities,attacksandcountermeasures,”inProc. IEEEConf.Commun.Netw.Secur.,May2018,pp.1–9.

[12] B. Kinsella, “Google again leads in voice assistant IQ test but alexa is closing the gap according to loup ventures,” voicebot.ai. 2019. Accessed: Aug. 9, 2020. [Online]. Available: https://voicebot.ai/voice assistant-seoreport-for-brands/

[13] P. Klaus and J. Zaichkowsky, “AI voice bots: Services marketing research agenda,” J. Service Marketing, vol. 34,no.3,pp.389–398,2020.

[14] A. Kaplan and M. Haenlein, “Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations,andimplicationsofartificialintelligence,” Bus.Horiz.,vol.62,no.1,pp.15–25,2019.

[15]J.N.Sheth,B.I.Newman,andB.L.Gross,“Whywebuy whatwebuy:Atheoryofconsumptionvalues,”J.Bus. Res.,vol.22,no.2,pp.159–170,1991.

[16] S. Talwar, A. Dhir, P. Kaur, and M. Mäntymäki, “Why do people purchase from online travel agencies (OTAs)? A consumption values perspective,” Int. J. HospitalityManage.,vol.88,2020,Art.no.102534.

[17] M. Mäntymäki, A. N. Islam, and I. Benbasat, “What drives subscribing to premium in freemium services? A consumer value-based view of differences between upgrading to and staying with premium,” Inf. Syst. J., vol.30,no.2,pp.295–333,2020.

[8] I. Stenvanovic, “41 voice search statistics you need to hear in 2020,” Kommando tech., 2020. Accessed: Aug. 2, 2020. [Online]. Available: https://kommandotech.com/statistics/voice-searchstatistics/

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

[18] T. Sedano, P. Ralph, and C. Peraire, “Dual-track development,” IEEE Softw., vol. 37, no. 6, pp. 58–64, 2020.

[19]J.Martin,G.Mortimer,andL.Andrews,“Re-examining online customer experience to include purchase frequency and perceived risk,” J. Retailing Consum. Serv.,vol.25,pp.81–95,2015.

[20] V. Venkatesh, J. Y. Thong, and X. Xu, “Consumer acceptance and use of information technology: Extendingthe unifiedtheoryof acceptanceanduseof technology,”MISQuart.,vol.36,pp.157–178,2012.

[21] H. Yang and H. Lee, “Exploring user acceptance of streaming media devices: An extended perspective of flow theory,” Inf. Syst. e-Bus. Manage., vol. 16, no. 1, pp.1–27,2018.

[22]A.BoonrodandP.Ketavan,“Thefutureisvoice,”Res. World,vol.71,pp.40–45,2018.

[23] B. Boyle, “What is Siri? Apple’s personal voice assistantexplained,” Pocket-lint,2017. Accessed: Nov. 25, 2019. [Online]. Available: http:// www.pocketlint.com/news/112346-what-is-siriapple-spersonalvoiceassistant-explained

[24] T. Laukkanen, “Consumer adoption versus rejection decisions in seem-ingly similar service innovations: The case of the Internet and mobile banking,” J. Bus. Res.,vol.69,no.7,pp.2432–2439,2016.

[25] R. P. Bagozzi, “The legacy of the technology acceptancemodelandaproposalforaparadigmshift,” J.Assoc.Inf.Syst.,vol.8,no.4,2007,Art.no.3.

[26]E.M.VanRaaijandJ.J.Schepers,“Theacceptanceand use of a virtual learning environment in China,” Comput.Educ.,vol.50,no.3,pp.838–852,2008.

[27] A. L. Guzman, “Voices in and of the machine: Source orientationtowardmobilevirtualassistants,”Comput. HumanBehav.,vol.90,pp.343–350,2019.

[28] J. Zhang and E. Mao, “What’s around me? ‘Applying the theory of consumption values to understanding the use of location-based services (LBS) on smart phones,” Int. J. E-Bus. Res., vol. 8, no. 3, pp. 33–49, 2012.

[29] T. Y. Chen, Y. C. Liu, and Y. M. Chen, “Research on product common attribute model with consumption value theory applied in food industry,” in Proc. IEEE

nt. Conf. Ind. Eng. Eng. Manage., Dec. 2013, pp. 447–451.

[30] O. Turel, A. Serenko, and N. Bontis, “User acceptance of hedonic digital artifacts: A theory of consumption valuesperspective,”Inf.Manage.,vol.47,no.1,pp.53–59,2010.

[31] N. Hajli, Y. Wang, M. Tajvidi, and M. S. Hajli, “People, technologies,andorganizationsinteractionsinasocial commerce era,” IEEE Trans. Eng. Manage., vol. 64, no. 4,pp.594–604,Nov.2017.

[32] K. Petrovˇciková and F. Sudzina, “Smartphone adoption: Design of factors within the framework of theoryofconsumptionvalues,”inConsumerBehavior, Organizational Strategy and Financial Economics, M. H. Bilgin, H. Danis, E. Demir, and U. Can, Eds. Cham, Switzerland:Springer,2018,pp.53–62.

[33] S. Y. Youn and K. H. Lee, “Proposing value-based technology acceptance model: Testing on paid mobile media service,” Fashion Textiles, vol. 6, no. 1, 2019, Art.no.13.

[34] K. Park, C. Kwak, J. Lee, and J. H. Ahn, “The effect of platform characteristics on the adoption of smart speakers: Empirical evidence in South Korea,” Telematics Inform., vol. 35, no. 8, pp. 2118–2132, 2018.

[35] D. Pal, C. Arpnikanondt, S. Funilkul, and V. Varadarajan, “User experience with smart voice assistants:Theaccentperspective,”inProc.IEEE10th Int.Conf.Comput.,Commun.Netw.Technol.,Jul.2019, pp.1–6.

[36] P. Kowalczuk, “Consumer acceptance of smart speakers:Amixed-methodsapproach,”J.Res.Interact. Marketing,vol.12,no.4,pp.418–431,2018.

[37] H. Yang and H. Lee, “Understanding user behavior of virtual personal assistant devices,” Inf. Syst. e-Bus. Manage.vol.17,no.1,pp.65–87,2019.

[38] M. Mäntymäki and J. Salo, “Why do teens spend real money in virtual worlds? A consumption values and developmental psychology perspective on virtual consumption,” Int. J. Inf. Manage., vol. 35, no. 1, pp. 124–134,2015.

[39] B. W. Park and K. C. Lee, “Exploring the value of purchasing online game items,” Comput. Human Behav.,vol.27,no.6,pp.2178–2185,2011.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:11Issue:10|Oct2024 www.irjet.net p-ISSN:2395-0072

[40]T.Ahn,S.Ryu,andI.Han,“Theimpactofwebquality and playfulness on user acceptance of online retailing,” Inf. Manage., vol. 44, no. 3, pp. 263–275, 2007.

[41] S. Yang, B. Wang, and Y. Lu, “Exploring the dual outcomes of mobile social networking service enjoyment: Therolesofsocial self-efficacyandhabit,” Comput.HumanBehav.,vol.64,pp.486–496,2016.

[42] D. Sledgianowski and S. Kulviwat, “Using social network sites: The effectsofplayfulness,critical mass and trust in a hedonic context,” J. Comput. Inf. Syst., vol.49,pp.74–83,2009.

[43]H.He,Y.Li,andL.Harris,“Socialidentityperspective on brand loyalty,” J. Bus. Res., vol. 65, no. 5, pp. 648–657,2012.

[44]M.McGowan,E.Shiu,andL.M.Hassan,“Theinfluence of social identity on value perceptions and intention,” J.Consum.Behav.,vol.16,no.3,pp.242–253,2017.

[45] M. Bødker, G. Gimpel, and J. Hedman, “The user experience of smart phones: A consumption values approach,” in Proc. 8th Global Mobility Roundtable: TransformationMobility,2009,pp.1–12.

[46]O.OgbanufeandN.Gerhart,“Themediatinginfluence of smartwatch identity on deep use and innovative individual performance,” Inf.Syst. J., vol. 30, no. 6, pp. 977–1009,2020.

[47] K. L. Hsiao and C. C. Chen, “What drives smartwatch purchase intention? Perspectives from hardware, software, design, and value,” Telematics Inform., vol. 35,no.1,pp.103–113,2018.

[48] Y. Liu and M. Han, “Determining the key factors of wearabledevicesconsumers’adoptionbehaviorbased on an MADM model for product improvement,” IEEE Trans. Eng. Manage., to be published, doi: 10.1109/TEM.2019.2960499.

[49] J. Liu, J. M. Thomas, and S. Higgs, “The relationship between social identity, descriptive social norms and eating intentions and behaviors,” J. Exp. Social Psychol.,vol.82,pp.217–230,2019.

[50]J.J.SierraandS.McQuitty,“Attitudesandemotionsas determinantsofnostalgiapurchases:Anapplicationof social identity theory,” J.MarketingTheoryPract.,vol. 15,no.2,pp.99–112,2007.

[51] S. H. Hsieh and T. H. Tseng, “Playfulness in mobile instant messaging: Examining the influence of emoticons and text messaging on social interaction,” Comput.HumanBehav.,vol.69,pp.405–414,2017.

[52] R. A. Dunn and R. E. Guadagno, “My avatar and me— Gender and personality predictors of avatar-self discrepancy,”Comput.HumanBehav.,vol.28,no.1,pp. 97–106,2012.

[53]W.M.Hur,J.J.Yoo,andT.L.Chung,“Theconsumption values and consumer innovativeness on convergence products,” Ind. Manage. Data Syst., vol. 112, pp. 688–706,2012.

[54] C. C. Chang, C. Liang, C. F. Yan, and J. S. Tseng, “The impact of college students’ intrinsic and extrinsic motivation on continuance intention to use English mobile learning systems,” Asia-Pacific Educ. Res., vol. 22,no.2,pp.181–192,2013.

[55]D.L.Strayer,J.M.Cooper,J.Turrill,J.R.Coleman,and R. J. Hopman, “The smartphone and the driver’s cognitive workload: A comparison of Apple, Google, and Microsoft’s intelligent personal assistants,” Can. J. Exp. Psychol./Revue Canadienne de Psychologie Expérimentale,vol.71,no.2,pp.93–110,2017.