International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

J. P. Pramod

, Sumaiyya Fatima2 & Baddula Gayathri Yadav3

1Asst Professor, Dept of Physics

Stanley College of Engineering and Technology for Women

2&3B.Tech Student, Dept of Computer Science and Engineering

Stanley College of Engineering and Technology for Women

Abstract:

Artificial Intelligence (AI) is advancing the field of medical physics by delivering solutions that can be employed to maximizethequalityofimagingindiagnosticimaginglikeMagneticResonanceImaging(MRI)andComputedTomography (CT)withtheweightonservingtodoctorsenhancediagnosticcapabilities.Historically,medicalimagingtechnologieshave transformedandshapedhowhealthcareprofessionalsdodiagnosticsandtreatpatients.FromX-raytechnologyinthepast timestocutting-edgeimagingtechnologieslikeMRIandCT,thereremaininnovationsgivingbirthtonewpossibilitiesand challenges.Presently,artificial intelligenceisusheringthenextwavewithsophisticatedsolutionsto enhanceimagesand diagnostic tools, redefining the boundaries of medical physics. This demonstrates how compared to conventional downstreammethodsinMRIandCTimaging,AI-basedimageprocessingtechniqueshavechangedthegamebyfixinglow resolution, noise, and artifacts. Through a deeper analysis of specific AI characterizations such as Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs), and a discussion of clinical applications, this research highlightsthesetechnologiesaligningtomeetanincreasingdemandforprecisionandaccuracyinmedicaldiagnostics.

Keywords:

ArtificialIntelligence(AI),MedicalPhysics,DiagnosticImaging,MagneticResonanceImaging(MRI),ConvolutionalNeural Networks(CNNs)

Introduction:

Medicalimaginghasrevolutionizedhealthcare,providingmedicalprofessionalswithincredibleinsightsintotheworkings of the human body. These technologies were not available a few decades ago. Some of the major medical physics tools include Magnetic Resonance Imaging (MRI) and Computed Tomography (CT), and they each have their benefits. For example,inthecaseofMRI,usesverystrongmagneticfieldsandradio wavestoimagesofttissuesofthehumanbodyat incredibly high spatial resolutions. As a result, MRI has been successfully applied to neurological, musculoskeletal, and cardiovascularimaging.MRIdoesthisbecauseofthealignmentofhydrogenprotonsthroughstrongmagneticfields.These protons get knocked out of this temporary comfortable state, and as they go back to their happy state they emit radio signals, which are collected and reversed into the image. The main obstacle for MRI is sensitivity to patient motion. This problem is due to the long scan time. Patients have to be completely motionless for 30-60 minutes. If the patient moves even 1mm during the scan, the scan will be blurry and the image will not be diagnostic. CT imaging provides crosssectionalimagesofthebodythroughtheuseofX-rays.Ithasanincrediblebonecontrast,sowithCTscanningtumorscan be detected inside liver tissue. CT is also used in neurological imaging in the context of stroke. However, a CT scan is limited by its use of ionizing radiation. Any amount of ionizing radiation comes with a cancer risk if you need to be repeated. Low-dose CT imaging has attempted to alleviate some of these issues by decreasing radiation exposure; however, this energy decrease increases noise and lowers image resolution, which makes it more difficult for small abnormalities to be detected. Moreover, both modalities have noise and low resolution, which results in the loss of key information.

Artificial intelligence, with all its potential, might become a solution to some of these problems, especially in image enhancement and noise reduction. In the past decade, AI has become an intermingling force transformational in medical physics, totally changing the way imaging data is processed and analyzed. Highly constructed models like convolutional neural networks (CNNs) and generative adversarial networks (GANs) have long been invented with the aim of quality enhancement, motion artifact correction, and noise reduction. For example, CNNs can be designed to identify and delete noisefromMRIscans,producingclearer,moreaccurateimaging.ContrastandartifactresolutionwereoptimizedusingAI techniquesinCTimaging,whichenabledimproveddetectionofpathologiesandimproveddiagnosticoutcomes.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

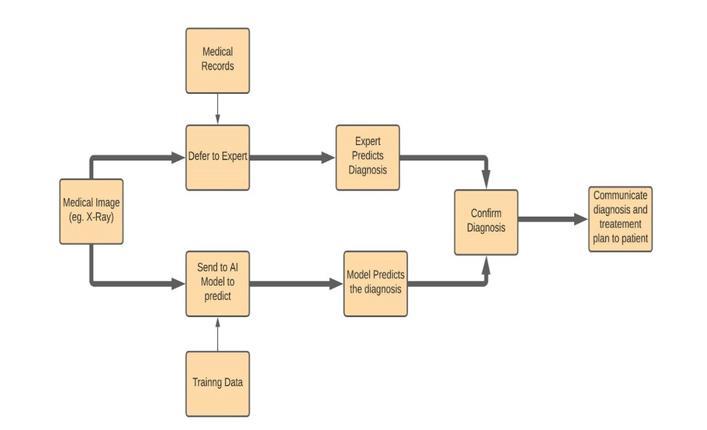

1: The Process Flow Representation of Medical Image Diagnosis using AI.

Specialized AI Models for Enhanced MRI and CT scans:

In imaging and diagnostic medicine, new AI technologies are being developed that allow traditional scans to be transformed into visual maps that contribute to deep clinical insights. Therefore, subtle elements appearing in the most obnoxious images can now be clearer or more enhanced and hence easier to interpret with AI. The whole scope of improvementsdoesnotmerelyaddressimageenhancementbutalsotheuseofdifferentAImodelseachwithasetofskills drivingthischange,extendingthechancetoMRIandCTimaging.

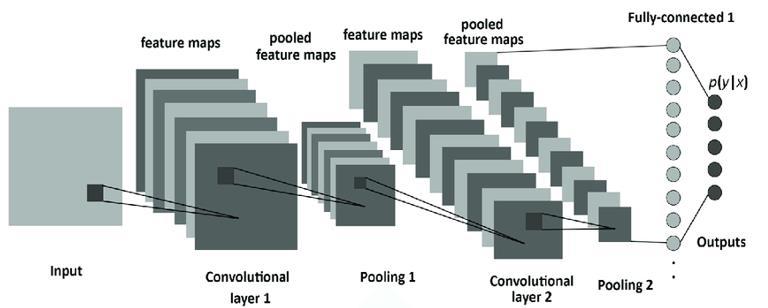

Convolutional Neural Networks (CNNs) have a prominent and pioneering role in understanding, refining, and visualizingcomplexvisualdata.Thesenetworksexcelindetectingandamplifyingminutefeaturessuchasedges,textures, andcontours.Inapplication,aCNNcanbeusedtooutlinewithgreatprecisiontheboundaryofatumorfromanMRIscan or to change a low-resolution CT image into a higher-resolution one. Their ability to recognize complex patterns and structures gives them the advantage when creating finer details in medical images where clarity is crucial, like in cases where early tumor detection or abnormalities in soft tissues are involved. Here, the structure of CNN consists of several layers,whichallowsthemtoautomaticallyextractfeaturesfromimages.ThefirstlayersofaCNNareasfollows:

Convolutional Layers:Theselayersapplyconvolution operations ontheinputimageusingvariousfilters(kernels)that slideoverit,detectingsimplefeaturessuchasedgesandtextures.Eachfilteractivatesifcertainfeaturesexistintheimage, thusenablingthenetworktolearnfrompatterns.

Activation Layer:Theselayersintroducenon-linearityinthemodel,usuallybytheapplicationoftheRectifiedLinearUnit (ReLU)activationfunction,enablingthelearningofcomplexrelationshipswiththeinputs.

Pooling Layer: These layers follow the convolution layers, which assist in downsampling the feature maps while preservingandprobablyreducingdimensionsizeforthecomputationthatissignificantenough.Maxpoolingandaverage pooling are some techniques to downsample the feature maps while reducing the computational load and avoiding overfitting.

Fully Connected Layers:Theselayersariseattheveryendofthenetwork,wherethefeatureslearnedfromtheprevious layer are fully connected and dispersed to produce the final output, which may be class labels or image reconstructions. Thelayersconnectalltheneurons,allowingforaverythoroughdecisionbasedontheextractedfeatures.

Ultimately,asvariousconvolutionstakeplaceacrossdifferentlevels,itshierarchicalstructureenablestheirproficiency in tasksthatdemandintricateimageanalysisitisamust-useinmedicalimagingtoolslikeMRIandCTscans.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

Figure 2: CNN layers, including convolutional layers, pooling layers, and fully connected layers.

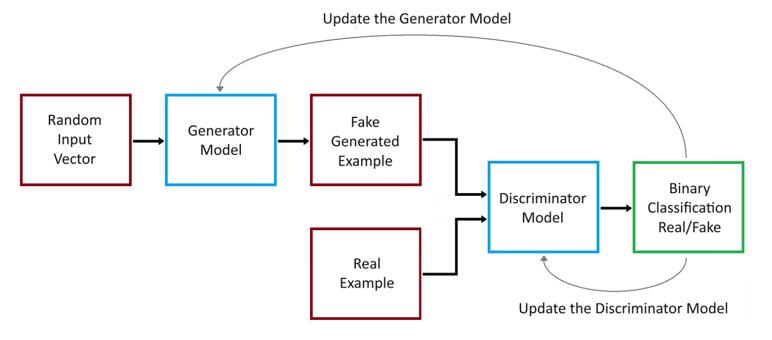

Generative Adversarial Networks (GANs) might, however, take a more innovative approach to picture enhancement. GANshavetwomethodsworkinginoppositiontooneanothertoenablethegenerationofhigh-qualityimages.:

Generator: The generator takes random noise as input and converts it to a synthetic image. Its prime aim is to produce imagesthatwillbepassedoffasrealones.Thegeneratorprogressivelybecomesadeptatproducingincreasinglyrealistic outputsbytrainingtominimizethedifferencesbetweengeneratedimagesandrealdata.

Discriminator:Thediscriminatoractslikethatmajoredincritiquingtheauthenticityofageneratedimagebyessentially giving it input images which include real and non-real ones and giving output the probability of that input image being real. The ultimate and only objective of the discriminator is to classify the image correctly as real or fake so that the generatorisalwaysencouragedtoimproveitsoutput.

ThisadversarialgameallowsGANstoproduceextremelycleanimagesfromnoisyorincompletedata.CTimagingbenefits greatly from GANs because they step in when low-dose scans are taken, essentially pictures taken at reduced radiation levelstominimizepatientexposurebutalsoexposegrainyimages.Increatingtheseimages,GANsaffordphysiciansevery bitofinformationwithoutriskingpatientexposuretounnecessaryradiation.

Figure 3: GANs, featuring a visual representation of the generator-discriminator loop.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

Tothismix, Recurrent Neural Networks (RNNs) addyetanothercapabilityparticularlywhenmotionovertimeistobe captured.Whileinmostmodels,apictureistreatedasanisolatedentity,RNNssequentializethoseimagesandcanthusfit well into the dynamic applications of MRI. Accordingly, in cases like blood flow monitoring or muscle activity tracking, RNNs aim to provide a smooth, coherent vision of how these alterations evolve, thus rendering a much deeper comprehensionofphysiologicalprocesses.

Autoencoders arethedetail-orientededitorsinAIland.Inshort,theyparsecomplexdataandreconstructittohighlight the most critical features; removing any irrelevant noise. Autoencoders, on the other hand, are good at capturing latent patternsandthatiswherethepowerofautoencoderscomes inoptimizationwithMRIorCTscans.Bycleaningthedata, theycanfindsmallvariationsthatdisappearinbusyandnoisyimages,whichhelpdoctorsdetectearlypotentialdiseases wemightmiss.

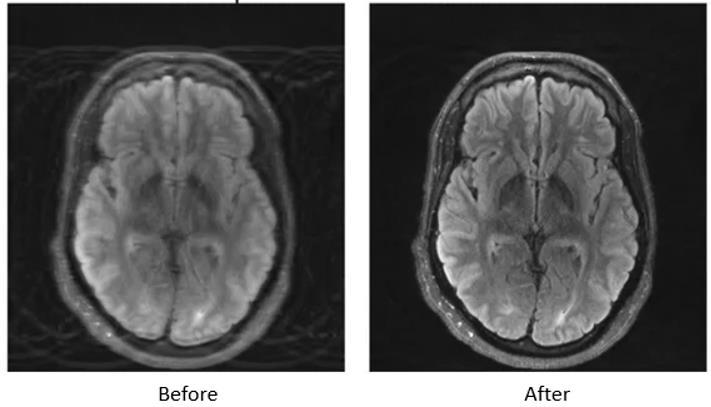

ConvolutionalNeuralNetworksarecentralinMRIimageenhancement,especiallyregardingimageresolutionandquality. In MRI scanning, resolution is often hampered because of time constraints and discomfort to the subject, causing poor image quality. CNNs solve the problem of image resolution through super-resolution techniques that upscale lowresolution images to high-resolution ones. The models learn, using multiple layers of convolutional filters, to extract minuteanatomical detail cortical foldsandsubtletissue boundaries thatmightnot be readilyapparentinlow-resolution scans. This ability is particularly important in neuroimaging, where accuracy regarding structural details is crucial in mappingbrainregionsorin detectingmicrostructural abnormalities.The bestpossibleresolutionupscaleofMRIimages byCNNs-afeaturethatprovidesradiologistswithimprovedimageswillbeanadditiontothefightagainstapatientlosing anopportunityforearlydetectionofhis/hermedicalcondition.

Another critical challenge that faces MRI imaging is noise, which can obscure some details and complicate the whole thing'sinterpretation.However,CNNsexcelindenoisingthoseMRIscansbyfirstidentifyingadifferencebetweenrandom noise and actual tissue characteristics. They are trained on datasets with paired images: one clean image, andone noisy image-pair for this technique to work well by successfully removing unwanted noise while keeping the relevant anatomical features. For example, with low-field MRIs, wherein signals are weak, CNNs can increase clarity significantly and enable a better analysis of structures such as white matter in the brain.The denoising process is needed in treating processes like multiple sclerosis and stroke because clear visualization of lesions is not only advantageous but also necessaryfortreatment.

In addition, CNNs are used for motion artifact correction, which is a common issue with MRI scans caused by the movement of a patient. While undergoing an MRI scan, any movement of the patient can result in blurring or ghosting artifacts that compromise image quality. With this, CNNs try to learn a set of data that includes artifacts-affected images withcorrectedversionsfromwhichtheycanconstructacleaneroutput.Thisapplicationisparticularlyadvantageouswith pediatric imaging because cooperation from the child during the imaging process can be limited and reducing repeated scanswouldbehighonthelistofpriorities.

Generative Adversarial Networks (GAN) come in next to provide another wave of sophistication to MRI imaging due to theirpeculiarroleinimagesynthesisandcontrastenhancement.Contrastisoftenkeyindistinguishingvarioussofttissues in MRI imaging. Unlike CNNs, which mostly boost visibility in already existing images, GANs take things even one step further, with the potential to generate entirely new high-quality images out of noisy or incomplete data. The generator networkgeneratessyntheticimages,whilethediscriminatornetworkprovidesjudgmentsonthoseimagesandscoldsthe generator to come up with amore realistic output. This adversarial arrangement works particularly well in completing missingdetailsonMRIscansthatsufferfromincompletedatabecauseofrapidscanningprotocolsorlimitedfieldofview. Thesyntheticdata generatedby a GAN alsoservesto enhance the variability inAI model training when confronted with varyingimageconditions.

Also, GANs come out as extremely powerful contrast enhancers. In MRI imaging, contrast is of the essence for differentiatingamongdifferentkindsofsofttissues.GANscanbetrainedtomaximizecontrastenhancementoutofimages with different qualities of contrast, thus counteracting it: structures of critical importance are therefore brought into sharper view. This can help detect soft tissue structures in the brain or the liver, for instance, where one should differentiatebetweennormalandpathologicalareas.Foroneexample,tinylesionsorsubliminalvascularstructuresmay beviewedmorebrightlyandeasily,thuspermittinggreaterdiagnosticconfidenceandchanceforearlydiseasedetection.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

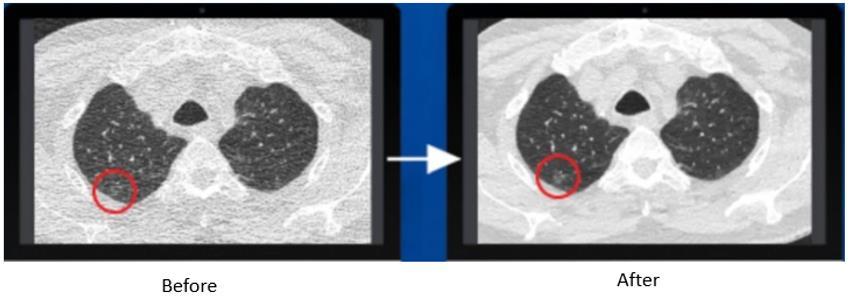

Figure 4: MRI scan comparison showing before and after AI enhancement, highlighting noise reduction, motion artifact correction, and improved resolution.

Advancements in AI-based CT Image Processing:

Convolutional Neural Networks (CNNs) play a significant role in CT imaging for overcoming the issue of low-dose CT optimization. Since radiation damage needs to be kept to a minimum, especially in children and patients requiring more thanoneortwoscans,low-doseCTscansare,however,invariablynoisyandlow-qualityimages-infact,suchpoor-quality images are unlikely to provide any meaningful diagnosis. In direct contrast, CNNs have tackled this problem with great success by learning mappings from low to high doses, or effectively reconstructing good-quality high-dose scans even while exposing patients to minimal radiation. The mapping was performed through successive convolutional layers that instinctively learn to detect and accentuate relevant structures such as bones, blood vessels, and tumors. Because prominent features thus remain salient in imaging for which reconnaissance is possible, CNNs enable a high-quality diagnosiswithoutcompromisingpatientsafety.

MetalartifactreductionisanotherimportantapplicationofCNNinCTimaging.Metalimplantssuchasdentalfillingsand hipreplacementsoftencauseveryseverestreakartifactsintheCTimages,preventing propervisualizationofthetissues surroundingtheseartifacts.CNNsaretrainedtorecognizeandreducethesedistortions,resultinginimprovedqualityfor these images to allow for better interpretation. The networks are trained using pairs of images, low-quality images that are affected by metal artifacts, and high-quality images without these effects, to differentiate genuine anatomical structures from artifacts. Thus, radiologists are expected to enjoy clearer visuals with their decisions in even more challengingsituationsinvolvingmetal-induceddistortions.

Generative Adversarial Networks (GANs) signify a massive change in CT imaging, especially by denoising low-dose CT scans and enhancing image quality. As CT imaging relies on X-rays, in an attempt to lower the dose, one increases noise that outweighs fine details and limits diagnosis. GANs will generate high-quality images from noisy low-dose inputs. A generator produces synthetic images resembling high-dose scans while the discriminator studies these outputs by enforcing the trained discriminator to consider these from a high-quality reference. The iterative process of eradicating noise from standard-dose CT scans upholds diagnostic integrity. This is especially important in oncology because a clear visualizationoftumoredgeswillleadtotherighttreatmentdecisions.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

Another area of great merit of GANs lies in image fusion and the reconstruction thereof. In situations with multimodal imaging, as would be PET-CT, which requires both anatomical and functional information to be visible, here GANs allow data from different modalities to be fused into thecreation of a unified high-quality image. This fusion enhances their visibilityofcomplexconditions;thus,radiologistscanseeboththestructuralandmetabolicaspectsofaparticulardisease froma singleimage. ThegistisgivenbyGANssincetheycanrealignandcombinedetailsfrom PETandCTscanstogive informationcrucialforeachdiagnosisandpersonalizedtreatmentplanning.

Figure 5: CT scans before and after AI enhancement, illustrating noise reduction, motion artifact correction, and improved resolution.

In the field of therapid evolution of medical imaging and die-valuation of performance in diagnosis; spotting such differences becomes necessary. Such comparative analysis brings out key features within which these two models perform;theseincludealookintodifferentperformancemetrics,efficiencyagainstaccuracy,andclinicalvalue.

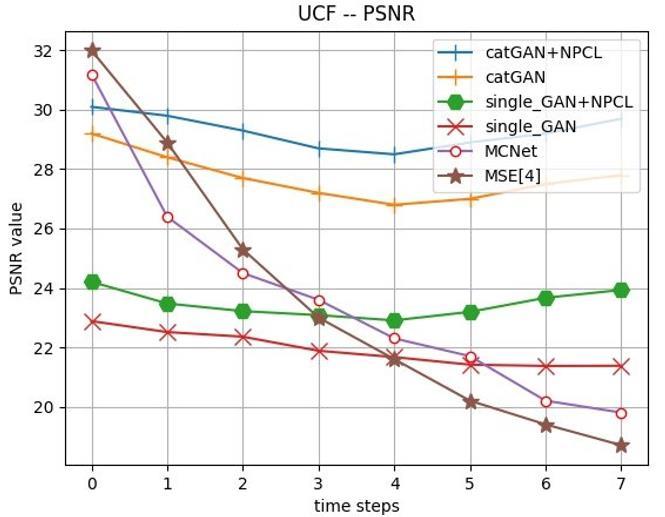

Performance metrics:

It is of paramount importance a determine of quality of medical images. Two computed measures at numerous stages of applicationincludetheStructuralSimilarityIndex(SSIM)andthePeakSignal-to-NoiseRatio(PSNR).

The comparison of local pixel patterns to the luminance, contrast, and structure provides the SSIM. This is especially relevantinmedicalimagingwherethesmallestdifferenceinthequalityofanimagingtechniquecouldhavesomeclinical effect.ThePSNRprovidesameasureoftheratioofthemaximumsignalpowertothatofthepowerofthecorruptingnoise. PSNR is still among the most widespread measures, but, when it comes to complex medical images, it does not always correlate with the visual quality as perceived. Combined, the two metrics offer an image quality estimation, which is importantfromtheclinician'spointofviewforassessingtherelativeeffectivenessofAIandtraditionaltechniques.

IncomparisonbetweenAIandtraditionalimageprocessingtechniques,oneoftheadvantagesofAIisefficiencyandspeed in the performance of large data sets. Deep learning models recognize the patterns and features that may have gone undetected under traditional algorithm attempts. For instance, noise reduction and artifact removal take less time when performedbyAIinsuchawayastoallowclinicalworkflowtorunsmoothly.

Ontheotherhand,conventionaltechniqueshavetheirworthbecauseoftheinterpretabilitynatureandsimplicityinvolved in them. Filter-based noise removal or histogram equalization is less intensive to realize computationally, easier to use, andquite predictableinoutcomes;henceformingan essence withinsome specificclinical contexts. Itisthe waytogeta correctbalancebetweenthesnobberyoftheAImodelsandthereliabilityoftraditionalmethods.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

Thereal-worlduseofAIinclinicalMRIandCTworkflowsisthusessentialinassessingtheinformation.Manystudieshave shown the positive effects of AI on clinical grounds with improved diagnostic capacity and workflow efficiency. An example is AI-assisted reconstruction work in low-dose CT images that showed improvement in image quality, with diagnostic capabilities nearly equivalent to that of conventionally acquired standard-dose images, thus ensuring thecomparativesafetyofthepatientwithreducedradiationexposure.

However, data privacy issues, the necessity of vast volumes of training data, and the issues of integration with existing systems act as a stumbling block to clinical proliferation. Collaborative research between research technologists and academicshasremainedtheonlysolutiontoovercometheseproblems.

By evaluation of performance metrics, efficiency and accuracy, and clinical validity, we find great disparities between AI models and conventional techniques. We believe in a collaborative approach in which both sets of methodologies take advantageofinherentstrengthstooptimizepatientcaredeliveryanddiagnosticaccuracy.

Figure 6: Quantitative evaluation results. The models are assessed using PSNR and SSIM as performance metrics, with higher values of PSNR and SSIM indicating better model performance.

TheinfluenceofAItechnologiescontinuestochangetheambiancefortechnologicalmedicalimagesciences;however,itis paramount that the pressures behind their design and integration should be well understood and addressed. To achieve such victory, these challenges now become major aspirational targets, if AI could be effective in its calling of increasing accuracy,diagnosis,andpatientcareoutcomes.Theserangefromissuessuchasdatalimitations,andgeneralization/bias considerations,tooperationalpracticabilityinimplementingAIinclinicalpractice.

Data Limitations:

One of the greater challenges in developing robust AI models for medical imaging lies in the unavailability of large and quality datasets for training purposes. Despite the above, now the condition is that there is a deluge of data from AI;

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

however, gathering a broad spectrum of clinically relevant imaging datasets from a single or more institutions remains cumbersome.AsgreatasregulationssuchasHIPAA orpatientconfidentialitylawsmaybe,interoperabilitymustcomply with strict regulations on data sharing among institutions. The influences of differing imaging protocols in their scope acrosshospitalsandclinicsstandtoupseteffortsofdatastandardization.

Additionally, emerging methodologies one could look out for include federated learning, and this provides a necessary oasisinthiskindofproblem.FederatedlearningallowsthetrainingofanAImodelacrossseveralinstitutionswithoutany needforexchangingrawpatients'dataataninstitution.Instead,localmodelsaretrainedineachinstitution,learningtheir parameters and aggregated into a model, unlike sensitive information from a patient aggregate going into it. The advantageofitistwofold-itnotonlyassuresthesafetyofitssubjects,butitallowsusingvariousdatasets,which isforthe ideal formation of AI models in such a manner that they generalize across diverse populations with differing imaging settings. This facilitates the collaboration of numerous health organizations in creating AI solutions while still enabling themtomaintaindataprotectionandrolloutmandatesfordiscretion.

Generalization and Bias:

ThemajorityofAImodelstrainedonnarrowandhomogenoussetsofdatafindithardtoadaptfurthertodifferentclinical settings.AnillustrativecaseinpointisanAImodeldevelopedondatafromaspecifichospital,whichprovedtobeuseful but was unable to translate those results toanyone assessed in carriers who had varied demographics or imaging apparatus.Thissmallextentofgeneralizabilitycausesapprehensionabouttheextenttowhichitispossibletoensurethat AI systems can safely and reliably support thedelivery of clinical care issues This is particularly important considering howquicklypatientdemographics,imagingtechniques,andtypesofprocedureswillchangeinclinicalpractice.

An extended example comes from designing an AI to detect pneumonia on chest X-ray; computer vision performed astonishingly on the training cutoff, however for some reason even self-supervised prevailed did not in patients longitudinally followed and images hoarded between the two clinical centers. Eventually, the idiosyncratic way some hospitals augmented ‘pneumonia’ screening routine processes got enshrined in the visual appearance of often varying degreesofpneumoniaandcombineditlogicallywiththehealthimages;onesyllabletendedtopositiveoutcomesforone institution less than another. This drives home the point that different data sets need to be used for training with scrupulous considerations on the need for validation to prove that AI models can be grafted into the existing diverse settings.

ThereisongoingresearchintobiasinAImodelswhereapotentialcureisbeingsought.Thereisalsoincreasingemphasis onbuildingfairerandbroaderdatasetsanddesigningfairalgorithms,whichhavethepotentialofoutperformingeventhe best models, while still addressing the issues concerning the bias in the data and its processing. In addition, external validationofAIonawidercross-institutional,andpatientpopulationbasis,willensurethattheperformancewillbejustas great,evenwhenreferredtoas100%accurate,irrespectiveofthepopulation.

Bringing AI into everyday imaging workflows comes with a bunch of real-world problems. Rules and regulations, like needinglotsoftestingandgettingthegreenlightfromgroupssuchastheFDA,canmakeittoughtogetAItechintoclinics. Plus many hospitals and medical centers don't have the right setup to add AI tools to their current imaging systems. GettingAIplatformstoworkwellwithold-schoolmedicalgearisabigtechnicalchallenge.

Besidesthetechnical andlegal stuff,therearealsomindsetissueswhenitcomestousingAI.Manydoctorsmightnot be sureabouttrustingAIforimportantdiagnosisdecisionsiftheycan'tseehowthealgorithmswork.Togetpeopletotrust AI tech, we need to explain how these systems work, and what they can and can't do in real-life medical situations CreatingAImodelsthatcanshowdoctorswhytheymakecertainpredictionscanhelpbuildfaithinthesetools.

Also,weneedsolidtrainingprogramsforradiologistsandotherhealthcareworkerssotheyknowhowtouseAItoolswell. InsteadofthinkingAIwilltakeoverhumanknow-how,weshouldseeitasawaytoboostclinicaldecision-makingleading tomoreaccurateandquickerdiagnoses.

Conclusion:

Artificial intelligence is already showing great promise in medical imaging to give doctors better insight into the human body,thusincreasingdiagnosticaccuracythroughinnovationssuchasMRIandCTscans.State-of-the-artmodels,suchas convolutionalneuralnetworks(CNNs)andgenerativeadversarialnetworks(GANs),havealreadybeenusedtoshow how © 2024, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page16

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 11 | Nov 2024 www.irjet.net p-ISSN:2395-0072

AI can reduce image noise, improve clarity, and validate low-dose scans with similar reliability to traditional methods. However,despitetheseextraordinaryadvancements,anarduoustaskremainsinadoptingAIasanordinaryconstituentof everydayclinicalpractice.

Transformational systems will require further research into even more intelligent, adaptive algorithms that can cleanly addressreal-worldproblemsinmedicalimaging.ManycurrentAImodelsrequirehugestoresoflabeled(annotated)data which can be a lengthy and expensive endeavor to systematically procure. Investigating alternative means such as unsupervisedlearningmightopenapathwaytobuildingrobustAIsystemsthatarenotsoheavilyreliantonlabeleddata.

Inaddition to thisisincreasingtransparencyin AImodels.Suchis requirednotonlyfor the clinician tounderstandhow decisions are reached, but for the nurturing of trust in these systems. Looking ahead, the integration of AI into regular clinicalworkflowswillmeanmorethantechnicalinnovation.AcollaborativeeffortamongAIexperts,healthprofessionals, and regulatory authorities will be critical, to produce safe, reliable, and user-friendly solutions. Overcoming these challenges would potentially enable AI to bring about greater changes in medical imaging and move patient care from beingaboutspeed,safety,andpersonalization.

Ultimately, as AI continues to evolve, it is set to rewrite the script of medical imaging and, subsequently, healthcare, leadingtogreaterpatientsafetyglobally.

References:

1. Bansal, S., Sharma, A., & Gupta, R. (2023). "ArtificialIntelligenceinMedicalImaging:AReview." Journal of Medical Imaging and Radiation Sciences,54(2),91-102.https://doi.org/10.1016/j.jmir.2023.01.004

2. Chung, A. H., et al. (2020). "Deep Learning for Medical Image Analysis: Overview and Future Directions." Healthcare,8(4),410.https://doi.org/10.3390/healthcare8040410

3. Lakhani, P., & Sundaram, B. (2020). "DeepLearninginMedical Imaging:OverviewandFutureDirections." Journal of Medical Imaging,7(4),041002.https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7594889/

4. Litjens, G., et al. (2017). "ASurveyofDeepLearninginMedicalImageAnalysis." Medical Image Analysis,42, 60-88.https://doi.org/10.1016/j.media.2017.07.005

5. Nguyen, T. T., & Lee, S. W. (2023). "AI-Based Techniques for Improving MRI and CT Image Quality: A Review." Progress in Medical Physics,30(2),49-60.https://doi.org/10.14316/pmp.2023.30.2.49

6. Wang, Z., et al. (2023). "RecentAdvancesinArtificialIntelligenceforMedicalImageProcessing:AReview." Journal of Medical Systems,47(3),12.https://www.ncbi.nlm.nih.gov/pmc/articles/PMC11156779/ 7.

8. Zhang, Y., Chen, Y., & Zhang, L. (2023). "Artificial Intelligence in Medical Imaging: A Review of Current Applications and Future Directions." Bioengineering, 10(12), 1435. https://doi.org/10.3390/bioengineering10121435