International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Sai Ujwal V1

1Student, Computer science and engineering, Jain University, Bangalore

Abstract - Advanced Machine Learning (AML) technologies have transformed predictive modeling, introducing sophisticated algorithms and methods to address complex regression challenges. Within AML, evaluating regression models is crucial for guiding model selection, optimization, and interpretation. The theory behind regression metrics in advanced machine learning is explored in this paper, along with the statistical characteristics, mathematical foundations, and real-world applications of important metrics like AME,SRME,SME, and squared-R (R²S). The goal of the review is to give practitioners a comprehensive understanding of these core ideas by helping to clarify them. Additionally, advanced topics such as robust regression metrics, ensemble-based evaluation, and the relationship between regression metrics and model interpretability are explored, offering insights into the latest developments in the field. Through this comprehensive analysis, the paper aims to equip researchers, practitioners, and enthusiasts in the AML community with the knowledge to effectively use regression metrics in their work.

Key Words: Machine Learning, Statistics, Squared Mean Error, Absolute Mean Error, Standardized Root Mean Error,AMLCommunity,PredictiveModeling.

1.INTRODUCTION

This Advanced Machine Learning (AML) Technologies: PredictiveModellingEntersaNewEra.AdvancedMachine Learning (AML) A new era of predictive modelling has been made possible by technological advancements. The creation of highly accurate and scalable solutions across various applications. In the domain of AML, regression tasks, which involve predicting continuous numerical values, are ubiquitous. Whether forecasting stock prices, estimatinghousingvalues,orpredictingcustomerlifetime value, regression models play a central role in numerous fields.

Despite advancements in algorithms and methodologies, evaluatingregressionmodelsremainsacornerstoneofthe model development process. Through the use of regression metrics, practitioners can evaluate the precision, resilience, and generalizability of their models. Regression metrics quantify model performance. In-depth information about the mathematical concepts, statistical characteristics, and real-world applications of important metrics are provided in this paper's exploration of the

theoretical underpinnings of regression metrics within advancedmachinelearning.

Regression metrics quantify the discrepancy between the predictedvaluesgenerated byaregressionmodelandthe actual values observed in the dataset. Understanding the mathematicalunderpinningsofthesemetricsiscrucialfor interpreting their significance and utility in model evaluation.

SquaredmeanError(SME)isacommonlyusedregression statistic that calculates the average squared difference between values that were predicted and those that were observed.SMEhasthefollowingmathematicaldefinition: [ text{sme} = frac{1}{n} sum_{i=1}^{n} (y_ihat{y}_i)^2]

where(n)isthenumberofobservations,(y_i)isthetarget variable'sactualvalueforobservation(i),and(hat{y}_i)is the corresponding forecast value. SME is sensitive to outliersandbigerrorssinceitpenalizesgreaterdeviations more severely by squaring the errors. Finding the regressionmodel'sparameters thatmaximizethemodel's fit to the training set of data is equivalent to minimizing the mean square error. But it's important to understand that SME might not always match the goals of the modelling work, particularly in situations when the data distributionisskewedortherearealotofoutliers.

Error Squared Root Mean (ESRM)is a variant of SME that provides a measure of prediction error in the same units asthetargetvariable.

By taking the square root of SME, SRMEoffers a more interpretable metric that facilitates comparisons across different datasets and domains. Mathematically, SRMW is expressedas:

[ text{SRME} = sqrt{text{SME}} = sqrt{frac{1}{n} sum_{i=1}^{n}(y_i-hat{y}_i)^2}]

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Although it offers a more natural comprehension of predictionaccuracy,SRMEcarriesoverthecharacteristics of SME. Nevertheless, SRME must be interpreted and applied with caution since, like SME, it is susceptible to outliersandchangesinthedatadistribution.

An additional regression statistic that calculates the average absolute difference between expected and actual dataiscalledMeanAbsoluteError(AME).SinceMAEdoes notsquare the errors, itis less susceptible to outliers and big errors than SME and SRME. In terms of mathematics, MAEisdefinedas

[text{AME}=frac{1}{n}sum_{i=1}^{n}|y_i-hat{y}_i|]

AME offers a reliable measure of central tendency by concentrating ontheabsolutesizeof errors; this makes it especially helpful in situations when there are a lot of outliersoranasymmetricdistributionofthedata.

squared-R (R²S),The percentage of the target variable's variance that the regression model explains is mea-sured and is sometimes referred to as the coefficient of determination.Inmathematics,R²canbewrittenas:

[R2s=1-frac{SS_{text{res}}}{SS_{text{tot}}}]

where ( SS_{text{tot}} ) signifies the total sum of squares and ( SS_{text{res}} ) the sum of squared residuals (errors).HigherR²valuesindicateabettermatchbetween the model and the data. R² runs from 0 to 1. R2should be read in conjunction with other metrics because, although it offers information about the regression model's goodness of fit in comparison to a basic baseline model (often the target variable's mean), it does not take overfittingormodelcomplexityintoconsideration.

Regression metrics exhibit distinct statistical properties that influence their behavior in different modeling scenarios. Understanding these properties is essential for selectingthemostappropriatemetricfor agiventaskand interpretingtheresultseffectively.

Bias and variance are fundamental concepts in statisticall earning theory, representing different sources of error in model estimations. Bias refers to the systematic error introduced bythemodel's assumptions orsimplifications, leading to underfitting and poor predictive performance. Variance captures the sensitivity of the model to variations in the training set, which leads to overfitting andadecreaseingeneralisationcapacity.

The trade-off between the model complexity and of the generalisation performance is reflected in the sensitivity of

SME and SRME to both bias and variance. High bias models often underfit the data, resulting in significant errorsandlowR2values.Ontheotherhand,highvariance models might overfit the data, identifying noise rather thanunderlying patterns. This wouldlead to low bias but highSRMEandpoorgeneralisationperformance.

AME, being less sensitive to variance, offers a robust measure of central tendency that is less affected by fluctuations in the training data.In situations where the data distribution is very skewed or asymmetric, it might, nevertheless, underestimate prediction errors. Although R²isfrequentlyusedtoevaluatemodelperformance, bias and variation are not directly captured by it. Rather, it offers a gauge of the percentage of variance explained by the model in comparison to a basic baseline model. Consequently, in order to properly evaluate model complexity and generalisation ability, R2 should be assessedinconjunctionwithothermetrics.

Efficiency and consistency are desirable properties in statisticalestimation,ensuringthattheregressionmetrics provide reliable and stable measures of model performance across different datasets and modeling scenarios

Abstract:

The analysis of regression techniques forms the foundation of predictive modeling in advanced machine learning (AML), where models are tasked with predicting continuous outcomes based on input features. Arange of criteria are used to evaluate regression models, and each one offers a different perspective on themodel's effectiveness.

Inthis thorough examination, we examinethe widerange of regression measures available in AML, including basic metrics like Mean Squared Error SME and Root Mean (Squared Error (SRME) as well as more advanced measures such as Huber loss and Quantile loss. By examining the mathematical principles, statistical properties, and practical implications of each metric,With regard to advanced machine learning, this study seeks to provideacomprehensivegraspofthedifferentregression measuresthatareavailable.

We emphasise each metric's significance and provide practitioners the tools they need to make well informed decisions about model selection, optimisation, and

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

deployment through thorough examples, comparisons, andreal-worldapplications.

Regression metrics are essential for evaluating The effectiveness of regression models in sophisticated machinelearning. Theyprovidenumerical assessments of a model's precision, accuracy, and capacity for generalisation, which serve as a basis for choices made during the model-development process.. This paper explores the diverse landscape of regression metrics, including both traditional and advanced measures. By examining the mathematical foundations, statistical properties, and practicalapplicationsofeachmetric,Ourgoalistoprovide practitioners with the skills and resources they need to successfully negotiate the challenges associated with evaluating regression models in advanced machine learning.

2.1Squared mean error (SME)

Squaremeanerrorisafundamentalregressionmetricthat calculates the mean squared discrepancies between expected and actual values. This section delves into the mathematicalformulationofMSEitssensitivitytooutliers, and its interpretation in model evaluations. Through examples and case studies, we illustrate the applications ofMSEinassessingpredictionaccuracyandguidingmodel selectiondecisions.

One variation of SME that uses the square root of the average squared discrepancies between the actual and anticipated values is called square root mean error. This section looks at how SRME should be interpreted intuitively, how it relates to SME, and if it works well in situationswherethetargetvariables'unitsmakesense.9

( AME ) represents the average absolute differences between the values that were predicted and those that were observed, making it another crucial regression metric.Thissectionlooksatthepracticalconsequences of MAE for advanced machine learning decision-making, its interpretation inmodel assessments, andhow robust it is tooutliers.

Squared-R (R²S)

squared-R(R²S)isacommonlyusedregressionmetricthat measures the percentageof thetargetvariable's variation thatthe regression model accounts for. The mathematical

definition of R2; it sinterpretation in model assessments; and its limits in evaluating overfitting and model complexityareallcoveredinthissection.

TherobustHuberLossregressionmeasureoffersatradeoff between computing efficiency and sensitivity to outliers by combining the best features of the MAE and MSE. This section explores the mathematical formulation ofHuberLoss,itsadvantagesovertraditionalmetrics,and itspracticalapplicationsinrobustregressionmodeling.

Withtheuseofaflexibleregressionmetriccalledquantile degradation, conditional quantiles of the target variable may be estimated, offering insights into the full distribution of predictions. The mathematical formulation of Quantile Loss, its interpretation in model evaluations, andits useinadvanced machinelearning risk assessment anddecision-makingarecoveredinthispart.

Weighted Loss Functions allow for the customization of loss functions based on the specific requirements of the modeling task. This section explores the concepts of weightedlossfunctionstheirimplementationinadvanced machine learning frameworks, and their practical implications for model optimization and performance tuning.10

This section conducts a comparative analysis of various regression metrics using real-world datasets and case studies.Throughside-by-sidecomparisonsandsensitivity analyses, For every measure, we point out its advantages and disadvantages and offer suggestions on which one to useforaparticularmodellingtask.

We conclude by discussing practical considerations and recommendations for using regression metrics effectively in advanced machine learning. The guidelines in this part will help you choose the best metric for your modelling work based on its unique goals, limitations, and features. We also provide insights into advanced machine learning best practices for hyper parameter tuning, model evaluation,andperformanceoptimisation.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Conclusion

With their ability to provide objective measures of model performanceandserveasaguidefordecisionsDuringthe model construction process, regression metrics are essential tools in advanced machine learning.-By understanding the diverse landscape of regression metrics, practitioners can make informed decisions in model selection, optimization,anddeployment,ultimately leadingtomoreaccurateandreliablepredictivemodelsin advanced machine learning. By providing practitioners with the information and resources they need to successfully negotiate the challenges of regression model evaluationandmakewisechoicesincomplicatedmachine learning projects, this thorough analysis hopes to empower practitioners. Through detailed explorations, comparisons, and practical recommendations, we illuminate the significance ofregressionmetrics and their role in driving success in advanced machine learning applications.

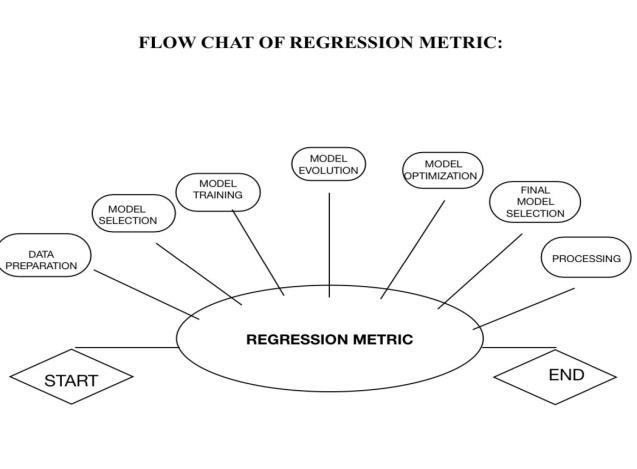

Begin

Data Preparation: Gatherandpreprocessthedataset.

Managemissingvalues,anomalies,andelementscaling.

Model Selection: Pick appropriate regression algorithms (e.g.,rectilinear regression, verdict trees, neurological networks).Divide the information into teaching and testingbatchesorusecross-verification.

Model Training: Educate the regression models on the teaching data. Refine hyperparameters utilizing methods likegridinvestigationorcasualexploration.

Model Evaluation: Make estimations on the testing data usingtaughtmodels.

Compute regression metrics:

Mean Quadrilateral Mistake (MQE)

Root Mean Quadrilateral Mistake (RMQE)

Mean Definite Mistake (MDA)

R-cubed (R²)

Adjusted R-cubed

Mean Definite Proportion Mistake (MDPM)

Mean Quadrilateral Logarithmic Mistake (MQLM)

Explained Degree Importance:Contrast model concert utilizingthesemetrics.

Model Optimization : Go around on model selection, teaching, and evaluation steps to refine concert. Lead aspect engineering and selection. Use regularization techniquestoforestallovermatching.

Final Model Selection:Verify the verified models concert on data never seen (e.g., holdout dataset, practical-world data).Appraise model hardiness and generalization potential.

Stop:This flowchart portrays the successive steps implicated in evaluating regression metric reckoning data preparation, model selection, teaching, evaluation, optimization, and final model selection. The regression metricsplayacrucialpartinassessing modelconcertand guidingthemodelselectionandoptimizationprocess.

CaseStudy:PredictingHomePrices

Problem Description

Your organisation, a real estate company, is looking to develop a machine learning model that can forecast property values based on a variety of characteristics, like location,numberofbedrooms,andsquarefootage.

Data processing

Gather data on previous house sales, such as square footage, number of bedrooms, location, and so on. Scale numerical features, encode categorical variables, and handlemissingvaluestopreprocessthedata

Model Selection

Select regression algorithms such as gradient boosting, decision trees, random forests, or linear regression that areappropriateforthetask.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Model Instruction

Utilising the training data, create several regression models. Use methods like random or grid search to optimisethehyperparameters.

Model Assessment

Usingthelearnedmodels,makepredictionsaboutthetest data. To evaluate model performance, compute different regressionmetrics.

Square root mean (SME)

Evaluates the mean squared variation between expected andactualprices.

Square root mean error (SRME)

Yields,inthesameunitasthetargetvariable, theaverage errorsize.

Absolute mean Error (AME)

Computes the mean absolute difference between the pricesthatwereanticipatedandthosethathappened.

Squared-R(R²S)

Shows the percentage of the target variable's volatility that the model can account for. MAPE stands for Mean Absolute Percentage Error. calculates the typical percentage difference between the prices that were expectedandthosethatwereactuallypaid.

Squared mean Logarithmic Error (SMLE)

Calculates the mean squared difference between the logarithmoftheactualandforecastedprices.Themodel's capacity to explain the variability in the target variable is gauged by the explained variance score. To determine which model performs the best, compare models using thesemetrics.

Model Optimization

Adjust hyper parameters or use feature engineering to fine-tune the chosen model. To avoid overfitting, use regularisationprocedures.

Final Model Selection:Check the finished model's performance with hypothetical data. Based on regression metrics,choosethemodelthatperformsthebest.

Decision Making: Regression metrics help stakeholders make informed decisions about the effectiveness of predictive models.A home price prediction model's mean absolute error (AME) of $10,000, for instance, indicates that the model's predictions typically differ by $10,000

from the actual values. Model Comparison: Regression metrics make it possible to compare various models and determine which is the most accurate for a certain situation. Managers have the option to select the model with the highest R-squared value or the lowest SRME, for example.

Performance Monitoring: Regression metrics facilitate ongoing monitoring of model performance in production environments. If the SRME of a home price prediction model suddenly increases, it might indicate a decline in prediction accuracy, prompting further investigation. Business Impact Assessment: Understanding regression metrics helps assess the business impact of machine learningmodels.

Forexample,iftheMAPEofarevenueforecastingmodelis consistently high, it might lead to inaccurate financial planning and budgeting decisions. Feedback Loop: Regression metrics provide feedback for model improvement. By analyzing which features contribute most to the errors (e.g., through feature importance analysis),datascientistscanrefinethemodelandenhance itspredictiveaccuracy.

Regression metrics are vital tools in advanced machine learning workflows, from model development and evaluationtodeployment andoptimizationTheyfacilitate well-informed decision-making in practical applications such as property price prediction and offer insightful informationabouttheperformanceofthemodels.

METHODOLOGY OF REGRESSION METRICS:

UtilizingRegressionMetricsinReinforcementLearning

In reinforcement learning (RL), regression metrics are sometimes used to evaluate the performance of learned policies or value functions. These measurements aid in determining how well the learnt model resembles actual values or environmental behaviours. Regression metrics areusedinRLusingthefollowing

1. Define the Problem: Clearly define theRL problem you are addressing, including the environment, the agent'sactions,andtherewards.

2. Choose a Regression Model: Select a regression model to approximate the value function or policy. Regular options consist of decision trees, neural networks, and linearregression.Basedontheavailableevidenceandthe problem'scomplexity,adecisionmustbemade.

3. Data Collection: Collect data by interacting with the environment using an exploration strategy, such as ε greedy,softmax,orUpperConfidenceBound(UCB).

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

4. Data Preprocessing: Preprocess the collected data to prepareit for regression. This may involve normalization, feature scaling, or feature engineering to extract relevant featuresfromtherawstateobservations.

5. Split the Data:Into training and validation sets, divide the gathered data. Regression model training takes place on the training set, while model performance assessment occursonthevalidationset.

6. Put the Regression Model to Work:Make use of the training data to create a regression model. Reduce as much as possible the discrepancy between the actual values that come from the environment and the values thatareexpected.

7. Choose Evaluation Metrics: Select appropriate regression metrics to evaluate the performance of the trained model. Common regression metrics in RL include Squared Mean Error (SME), Absolute Mean Error (AME), andSquaredRootMeanError(SRME).

8. Evaluate the Model:Together with the chosen regression metrics, assess the trained regression model using the validation. This stage facilitates the assessment of how well the model generalises to new data and how well it represents the underlying dynamics of the surroundings.

9. Iterate:Asyouloopthroughthemodeltrainingprocess, make adjustments to the hyperparameters, the architecture of the regression model, or the data pretreatmentprotocolsbasedontheevaluationresults.

10. Final Model Selection:OncesatisfiedTodeterminethe model's ultimate degree of generalizability, compare its resultsonthevalidationsettoanothertestingset.

11. Deployment: Deploy the trained regression model in the RL system to make decisions or control actions in the environment based on the learned policy or value function.

By following this methodology, you can effectively use regression metrics in reinforcement learning to evaluate andimprovetheperformanceoflearnedmodels.

Advantages and Limitations of Regression Metrics in AdvancedMachineLearningRegressionmetricsarecrucial tools in advanced machine learning (ML) for evaluating the performance of regression models. Let's explore their advantagesandlimitations:

Advantages:

1. Quantitative: Assessment: Regression metrics provide objective measures of model performance, enabling

clear comparisons between different models or parametersettings.

2. Interpretability: The intuitive interpretations of many regression indicators facilitate comprehension of the model's prediction quality. As an illustration, Mean Squared Error (SME) directly measures the average squareddifferencebetweenpredictedandactualvalues.

3. Guidance for Model Selection: By comparing different models using regression metrics, practitioners can make informeddecisionsaboutwhichmodelarchitecture,hyper parameters, or feature engineering techniques are most effectiveforagiventask.

4. Optimization: Regression metrics can be used as optimization objectives during model training. For example, minimizing MSE during training directly improves themodel'sperformanceaccordingtothatmetric.

5. Diagnostic Tools: Regression metrics can highlight specific areas where the model performs well or poorly. Examining residuals or error distributions can reveal patternsoroutliersthatmayrequirefurtherinvestigation.

1. Sensitivity to Outliers: SME is one of the regression metricsthatissusceptibletooutliers.Ameasurecanbe significantly affected by a small number of extreme outliers., potentially leading to misleading conclusions aboutthemodel'soverallperformance.

2. Bias-Variance Tradeoff: Different regression metrics may emphasize different aspects of model performance, suchasbiasorvariance.Forexample,whileMSEpenalizes large errors heavily, it may result in overly complex models that perform poorly on unseen data (high variance). Choosing an appropriate metric requires balancingthetradeoffsbetweenbiasandvariance.

3. Assumption Dependence: Certain regression metrics assumespecificpropertiesofthedatadistribution,suchas normality or homo scedasticity. Deviations from The realityofthecanbeimpactedbytheassumptions.

4. Limited Scope: Regression metrics focus primarily on theaccuracy ofpoint predictions,potentially overlooking other important aspects of model quality, such as uncertainty estimation,robustnesstodistributionalshifts, orfairnessconsiderations.

5. Domain Specificity: The effectiveness of regression metrics based on thegoals ofthe modelling work andthe problem domain. A metric that works well in one domain may not generalize effectively to others, requiring careful considerationofcontextwheninterpretingresults.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Overall,Regression metrics provide insightful information about how well regression models perform in advanced machine learning; however, in order to guarantee a thorough assessment of model quality, they should be utilised carefully and in concert with other assessment methods.

Summary of Regression Metrics in Advanced Machine Learning

In advanced machine learning, regression metrics are essential for evaluating the performance of regression models.Herearethekeypoints:

1. Squared mean error(SME)

• determines the average squared difference between the values that were anticipated and those that were observed.

• Better model performance is indicated by lower MSE, withvaluesaround0beingpreferred.

• sensitivetoanomalies.

2. Squared Root Mean Error (SRME):

•Ameasureoftheerrorspreadisgivenbythesquareroot ofSME.

• Additionally sensitive to outliers, lower values are preferable.

3. Absolute Mean Error (AME):

•findstheaverageabsolutedifferencebetweenthevalues thatwereanticipatedandthosethatwereobserved.

• When outliers are present, it's a solid option because it's less susceptible to them than SME and SRME.

4. squared-R (R²S) Score

• calculates the percentage of the dependent variable's variation that can be predicted from the independent variable.

•hasarangeof0to1,where1representsanidealmatch.

• Does not say whether the bias in the model's predictionsexists.

5. R-Adjusted-squared:

• A modified form of squared-R that penalises adding pointlesspredictors.

• beneficialwhenworkingwithseveralpredictors.

6. Squared Mean Logarithmic Error (MSLE):

• calculates the mean logarithmic difference between the expectedandactualvalues.

• helpful when the variable being targeted spans multipleordersofmagnitude.

7. Percentage Mean Error (MPE):

• determines the average percentage change between the values that were anticipated and those that were observed.

• utilised frequently in forecasting to evaluate the precisionofpredictions.

8. Determination Coefficient(COD):

•AnothertermforR-squared,representingtheproportion ofvarianceinthedependentvariablepredictablefromthe independentvariable.

1. Draxler, R. R., and T. Chai (2014). Which is greater: mean absolute error (MAE) or root mean square error (RMSE)? The literature presents arguments against avoiding root mean square error (RMSE). Geoscientific Model Development, 7(3), 1247-1250. The contrasts between regression analysis's use of MAE and RMSE is examinedinthiswork.

2. Matsuura, K. & Willmott, C. J. (2005). The mean absolute error (MAE) is a more useful metric for evaluatingaveragemodelperformancethantherootmean square error (RMSE) in ClimateResearch, 30(1), 79-82.It explores the ramifications of using MAE instead of RMSE inmodelperformanceassessmentandmakesanargument foritsadoption.

3. Friedman, J., Tibshirani, R., and Hastie, T. (2009). * Springer, The Elements of Statistical Learning: Data Mining, Inference, and Prediction. a thorough guide on several machine learning methods that includes in-depth explanationsofregressionmetrics.

4. Warrens, M. J., Chicco, D., & Jurman, G. (2021). When evaluating regression analyses, the coefficient of determination (R-squared) provides more information thanSMAPE,MAE,MAPE,MSE,andRMSE.PeerJComputer Science,7(7):e623.

• In this essay, the coefficient of determination (Rsquared) is examined and contrasted with alternative regressionmetrics.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

5. Frank, E., Witten, I. H., Hall, M. A., & Pal, C. J. (2016). PracticalMachineLearningToolsandTechniquesforData Mining.KaufmannMorgan.

• A useful manual that goes over a number of machine learning tools and methods, including a thorough explanationofregressionmetrics.

6. G.AthanasopoulosandR.J.Hyndman(2018).Concepts andapplicationsofforecasting.Otexts.

• A chapter on regression model evaluation metrics may be found in this open-source book that offers insights intoforecastingtechniques.

7. In 2016, Bengio, Y., Goodfellow, I., and Courville, A. VibrantLearning.MITPress.

• A basic text on deep learning that covers regression measurespertinenttomodelsusingdeeplearning.

8. In2014,GneitingandKatzfusspublishedapaper.using probabilistic forecasting. 125–151 in Annual Review of StatisticsandItsApplication,1.

• offers assessment measures for probabilistic regression modelsandfocusesonprobabilisticforecasting.

9. Hastie, T., Witten, D., James, G., and Tibshirani, R. (2013). Springer, An Introduction to Statistical Learning: withApplicationsinR.

• An introduction to statistical learning methods and measurements in a more user-friendly version of The ElementsofStatisticalLearning.

10. Zhang Z. (2017). Variable selection using best subset and stepwise methods. 4(7) Annals of Translational Medicine,136.

• examines techniques for choosing variables in regression analysis, many of which entail comparing models'regressionmetrics.