International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net

YASH GOYAL

p-ISSN:2395-0072

Electronics and Communication Engineering, Netaji Subash University of Technology, Delhi

Abstract - Graphics Processing Units (GPUs) have undergone a transformative evolution from fixed-function devices designed solely for graphics rendering to highly programmable and parallel processors capable of handling a diverse array of computationally intensive tasks. This review paper provides a comprehensive examination of the architectural developments and applications of GPUs. Key milestones in GPU evolution, such as the introduction of programmable shaders and unified shader architectures, are discussed alongside modern advancements in GPU technology. The paper explores various applications of GPUs, including high-performance computing, artificial intelligence, machine learning, and cryptocurrency mining. Additionally, the review delves into the development environments and support systems that facilitate GPU programming, highlighting essential tools, libraries, and integrated development environments. Finally, the paper addresses the challenges faced by current GPU architectures, such as power consumption and the future of heterogeneous computing, projecting the continued integration of AIspecific hardware in GPUs. Through this detailed exploration, the paper underscores the pivotal role GPUs play in advancing computational capabilities across multiple domains

Key Words: GPU architecture, programmable shaders, unified shader architecture, high-performance computing (HPC), artificial intelligence (AI), machine learning, cryptocurrency mining, CUDA, OpenCL, tensor cores, development environments, GPU-accelerated computing, power efficiency, heterogeneous computing, NVIDIA Tesla, parallel processing, deep learning

1.Introduction

Graphics Processing Units (GPUs) have revolutionized the field of computing, providing unprecedented parallel processing power that has transformed graphics rendering and general-purpose computing alike. Initially designed as dedicated hardware for accelerating the rendering of images and videos, GPUs have evolved into versatile processors capable of executing a wide array of computational tasks with high efficiency and speed. This evolution has been driven by significant advancements in GPU architecture, including the transition from fixed-function pipelines to programmable shaders, the developmentofunifiedshaderarchitectures,andtheintegrationofspecializedcoresforartificialintelligence(AI)andmachine learning(ML)applications.

The demand for high-performance computing (HPC) has surged across various industries, from scientific research and data analysis to entertainment and healthcare. GPUs have become a cornerstone of HPC due to their ability to handle large-scale parallel computations,significantlyoutperforming traditional Central Processing Units(CPUs)in tasksthat require extensive data processing and complex calculations. This capability has made GPUs indispensable in fields such as climate modeling, molecular dynamics, and astrophysics, where simulations and data processing need to be executed with high precision and speed.

In the realm of artificial intelligence and machine learning, GPUs have played a pivotal role in accelerating the training and inference of deep neural networks. The introduction of tensor cores in modern GPUs has specifically enhanced their performance in AI tasks, enabling faster and more efficient processing of large datasets. This has led to significant advancements in AI research and applications, including natural language processing, image recognition, and autonomous systems.

Moreover, the versatility of GPUs extends to emerging applications such as cryptocurrency mining and real-time data processing in autonomous vehicles. The parallel processing capabilities of GPUs make them ideal for the repetitive and computationally intensive tasks involved in mining cryptocurrencies. In autonomous vehicles, GPUs process sensor data and runcomplexalgorithmsforperception,planning,andcontrol,ensuringsafeandefficientoperation.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN:2395-0072

As the scope of GPU applications continues to expand, so does the need for robust development environments and support systems.DevelopersleverageframeworkslikeCUDAandOpenCLtoharnessthefullpotentialofGPUs,utilizingawiderangeof tools,libraries,andintegrateddevelopment environments(IDEs)to optimize and debugtheir applications. Thedevelopment of user-friendly software ecosystems and high-level programming interfaces has further democratized access to GPU programming,enablingresearchersandengineersacrossdisciplinestoutilizeGPUpowerintheirwork.

ThisreviewpaperaimstoprovideacomprehensiveexaminationofGPUarchitecture,tracingitsevolutionfromearlydesigns to modern advancements. It explores the various applications of GPUs, highlighting their impact on high-performance computing, artificial intelligence, scientific research, and more. Additionally, the paper discusses the development environments and support systems that facilitate GPU programming, addressing the challenges and future directions in the field.ByofferinginsightsintothecurrentstateandfutureprospectsofGPUtechnology,thispaperunderscoresthepivotal role GPUsplayinadvancingcomputationalcapabilitiesacrossmultipledomains.

2.1Evolution of GPU Architecture

TheevolutionofGPUarchitecturehasbeenmarkedbyseveralkeymilestones.ErikLindholmetal.[1]discusstheintroduction of NVIDIA's Tesla architecture, which marked a significant shift toward unified graphics and computing architectures. This architecturelaid the foundation for the modern programmable GPUs, which have become central to both graphics rendering andgeneral-purposecomputing.

Shilan Ahmed Mohammed et al. [2] provide a comprehensive overview of GPU concepts and the challenges associated with graphapplications.Theirreviewhighlightsthetransitionfromfixed-functionpipelinestoprogrammableshaders,emphasizing howthisshifthasenabledGPUstohandlemorecomplexcomputationsbeyondtraditionalgraphicstasks.

In a detailed exploration of GPU architectures and programming, Stephen W. Keckler et al. [3] discuss the future of parallel computing with GPUs. They highlight the role of GPUs in accelerating various computational workloads and predict the increasingimportanceofGPUsinhigh-performancecomputing(HPC)andartificialintelligence(AI).

MarkoJ.Misicetal.[4]tracetheevolutionandtrendsinGPUcomputing,focusingontheadvancementsinGPUhardwareand software that have driven performance improvements. They examine the implications of these advancements for different applicationdomains,includingscientificcomputingandmachinelearning.

FumihikoIno[5]exploresthehistoryandfutureprospectsofGPU-acceleratedgridcomputing.Thisworkunderscorestherole of GPUs in enhancing the computational capabilities of grid systems and discusses potential future developments that could furtherintegrateGPUsintodistributedcomputingenvironments.

GPUshavebecomeindispensableinawiderangeofapplications,fromgraphicsrenderingtoscientificcomputingandAI.The NPTEL online course by Soumyajit Dey [3] provides a detailed examination of GPU architectures and their applications in various computational tasks. The course emphasizes the versatility of GPUs in handling parallel workloads, making them suitablefordiverseapplications.

In the realm of HPC, GPUs have proven to be highly effective in accelerating simulations and data processing tasks. Marko J. Misic et al. [4] discuss the impact of GPUs on HPC, highlighting case studies where GPUs have significantly reduced computationtimesandenabledmorecomplexsimulations.

Artificial intelligence and machine learning are other areas where GPUs have made a substantial impact. The integration of tensor cores in modern GPUs has enhanced their ability to perform deep learning tasks, as discussed by Fumihiko Ino [5]. TheseadvancementshavemadeGPUsacriticalcomponentintraininganddeployingAImodels.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN:2395-0072

GPUs have alsofound applicationsinemerging fieldssuchascryptocurrencymining.According toan article on Investopedia [7], the parallel processing capabilities of GPUs make them ideal for the repetitive hashing operations required in mining cryptocurrencies. This application has driven significant demand for GPUs, influencing both their market and technological development.

3.Evolution of GPU Architecture

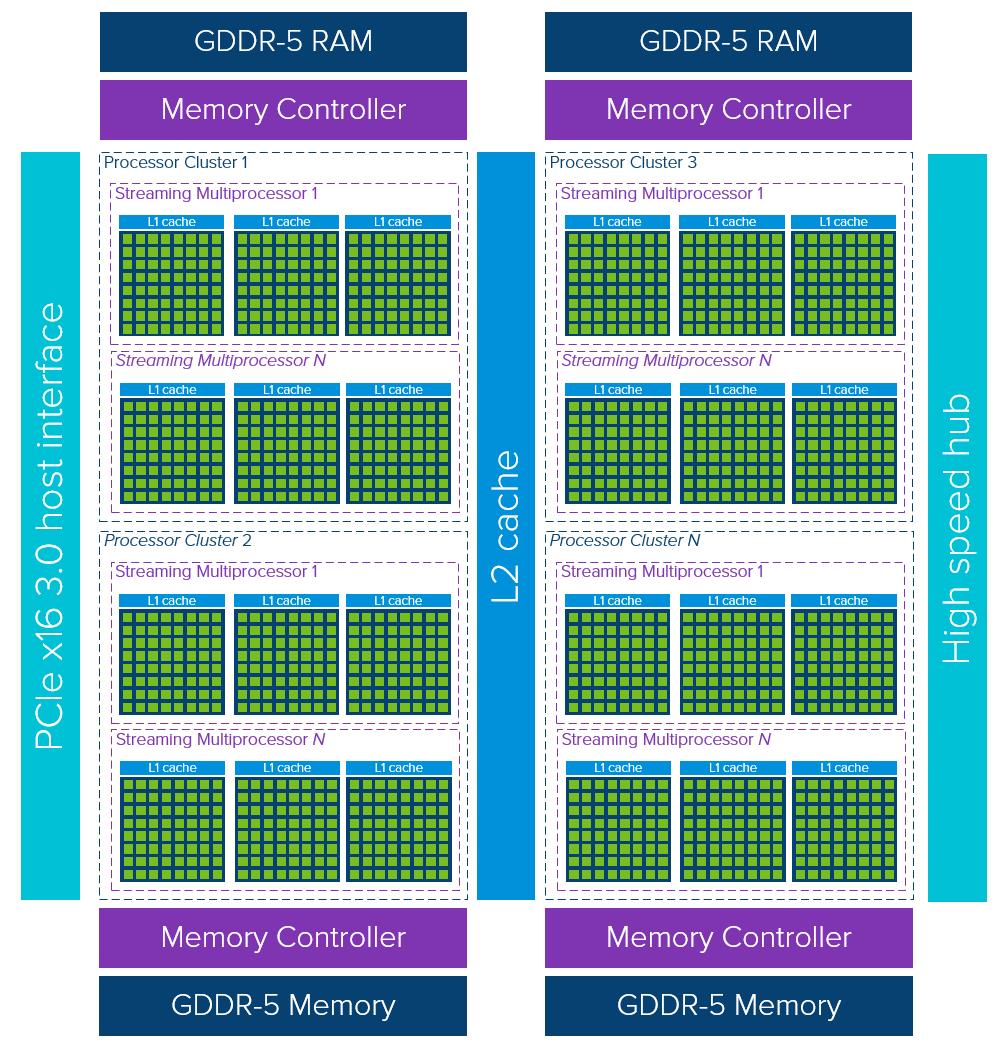

Figure 1: Modern GPU Architecture

This diagram illustrates the architecture of a modern GPU, highlighting key components such as the GDDR-5 RAM, memory controllers,processorclusters,streamingmultiprocessors,L1andL2caches,PCIex163.0hostinterface,andhigh-speedhub. The architecture is designed to optimize parallel processing and memory bandwidth, essential for high-performance computingtasks.

3.1 Early GPU Designs

Early GPUs were specialized for graphics rendering, handling tasks like rasterization, shading, and texture mapping. They operatedasfixed-functionpipelines,meaningeachstageoftherenderingprocesswashardcodedandnotprogrammable.This designwasefficientforgraphicstasksbutlimitedinflexibility[1].

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net

p-ISSN:2395-0072

TheintroductionofprogrammableshadersmarkedasignificantshiftinGPUarchitecture.Shadersalloweddeveloperstowrite customprogramstocontrolvertexandpixelprocessing,enhancingflexibilityandenablingmorecomplexgraphicseffects.This programmabilitylaidthegroundworkforGPUstobeusedingeneral-purposecomputing(GPGPU)[1].

NVIDIA's Tesla architecture introduced the concept of a unified shader architecture, where a single type of processor could execute vertex, pixel, and geometry shaders. This unification increased efficiency and resource utilization, allowing GPUs to achievehigherperformanceinbothgraphicsandcomputetasks[1].

ModernGPUs,suchasNVIDIA'sVoltaandAmperearchitectures,featurethousandsofcorescapableofexecutingmanythreads simultaneously. They incorporate advanced features like tensor cores for AI processing, enhanced memory hierarchies, and high-speed interconnects, making them suitable for diverse applications beyond graphics, including scientific computing, machinelearning,anddataanalytics[2].

3.5

ModernGPUsintegrateaplethoraofadvancedfeaturesandtechnologiestopushtheboundariesofperformanceandefficiency. Somenotableadvancementsinclude:

Tensor Cores:Designedspecificallyfordeeplearningoperations,tensorcoresperformmixed-precisionmatrixmultiplications andaccumulateresultsinhigherprecision.Thissignificantlyacceleratesthetrainingandinferenceofneuralnetworks[5].

Ray Tracing Cores: Dedicated hardware for ray tracing allows GPUs to simulate the physical behavior of light, producing highlyrealisticimagesinreal-timeapplicationslikegamingandsimulations[3].

High-Bandwidth Memory (HBM): HBM is a type of memory used in GPUs that offers higher bandwidth and lower power consumption compared to traditional GDDR memory. It enhances the overall performance and efficiency of GPUs in dataintensivetasks[2].

Multi-GPU Technology: Technologies like NVIDIA NVLink enable multiple GPUs to communicate at high speeds, allowing themtoworktogetheroncomplextasks.ThisisparticularlybeneficialinHPCandlarge-scaleAIapplications[1].

Table1: Evolution of GPU Architectures

OverviewoftheevolutionofGPUarchitectures,highlightingkeyfeaturesandexamplearchitecturesfromeachera

Era

EarlyGPUs

Programmable Shaders

UnifiedShader

Key Features

Fixed-functionpipelines

Vertexandpixelshaders

Unifiedshaderarchitecture

Example Architectures

References

NVIDIAGeForce256 [1]

NVIDIAGeForce3 [2]

NVIDIATesla [1]

ModernGPUs Tensor cores, HBM, ray tracing cores NVIDIA Volta, NVIDIA Ampere [5]

Table1: Evolution of GPU Architectures

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN:2395-0072

4.Applications of GPUs

Figure 2: Common GPU Computational Tasks

This diagram illustrates common computational tasks performed by GPUs, such as matrix multiplication (GEMM), deep learning(CONV2D,GEMM),graphprocessing(GRAPH),signalprocessing(FFT),andothermathematicaloperations.GPUsare designed to efficiently handle these parallelizable tasks, leveraging their architecture to accelerate computations in various applications.

4.1 Graphics and Visualization

GPUs remain essential for rendering high-quality graphics in video games, simulations, and virtual reality. They provide the necessaryperformancetohandlecomplexscenes,highresolutions,andreal-timerenderingrequirements[3].

4.2High-Performance Computing (HPC)

GPUs have become indispensable in HPC due to their ability to perform massive parallel computations. They accelerate simulations, molecular dynamics, climate modeling, and other computationally intensive tasks, significantly reducing processingtimescomparedtotraditionalCPUs[4].

4.3Artificial Intelligence and Machine Learning

GPUsareparticularlywell-suitedforAIandmachinelearningworkloads,wherelarge-scalematrixoperationsandparallelism arecritical.TensorcoresinmodernGPUsenhancedeeplearning performance,makingGPUsakeycomponentintrainingand deployingneuralnetworks[5].

4.4 Cryptocurrency Mining

The parallel processing capabilitiesofGPUs makethem ideal forcryptocurrencymining, wheretheyareusedtoperform the repetitivehashingoperationsrequiredtovalidatetransactionsandsecureblockchainnetworks[7].

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN:2395-0072

4.5Scientific Research

In scientific research, GPUs are used to accelerate a variety of computations, including bioinformatics, computational chemistry, and physics simulations. Their ability to process large datasets in parallel makes them valuable tools in research thatrequireshighcomputationalpower[4].

4.6Healthcare and Genomics

GPUs have been instrumental in advancing healthcare and genomics research. They enable faster analysis of genomic data, helpingresearchersunderstandgeneticvariationsandtheirimplicationsfordiseasesandtreatments.Inparticular,GPUshave beenusedtospeedupDNAsequencingandmoleculardynamicssimulations[6].

4.7 Autonomous Vehicles

In the field of autonomous vehicles, GPUs play a crucial role in processing sensor data and running complex algorithms for perception, planning, and control. The real-time processing capabilities of GPUs are essential for the safe and efficient operationofself-drivingcars[3].

Table2: Applications of GPUs

SummaryofvariousapplicationsofGPUs,includingtheirdescriptionsandexamples.

Application

Description

Examples

References

Graphics and Visualization Rendering high-quality graphics for games, simulations Videogames,VRapplications [3]

High-Performance Computing Accelerating simulations, molecular dynamics, climatemodeling Scientific research, data analysis [4]

ArtificialIntelligence Trainingandinferenceofdeepneuralnetworks TensorFlow, PyTorch applications [5]

CryptocurrencyMining Hashingoperationsforvalidatingtransactions Bitcoin,Ethereummining [7] ScientificResearch Accelerating computations in bioinformatics, chemistry DNA sequencing, molecular dynamics [4]

AutonomousVehicles Processing sensor data, running algorithms for perception Self-drivingcars,drones [3]

Applications of GPUs

5.Development Environment and Support

5.1Programming Models and Languages

CUDA, developed by NVIDIA, is a parallel computing platform and programming model that allows developers to use C++ syntaxtowriteprogramsforGPUs.ItprovidesextensivelibrariesandtoolstooptimizeanddebugGPUapplications.CUDAis widelyusedinvariousfields,includingscientificcomputing,machinelearning,andimageprocessing[1].

OpenCL (Open ComputingLanguage) isanother popularframework for writing programsthat execute across heterogeneous platforms,includingCPUs,GPUs,andotherprocessors.ItissupportedbymajorGPU vendorslikeAMDandIntel,makingita versatilechoiceforcross-platformdevelopment[2].

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net

p-ISSN:2395-0072

Numerous tools and libraries support GPU development. NVIDIA's cuDNN is a GPU-accelerated library for deep neural networks that provides highly optimized implementations for standard routines such as forward and backward convolution, pooling, normalization, and activation layers. This library significantly speeds up the development and deployment of deep learningmodelsonNVIDIAGPUs[5].

TensorFlow and PyTorch are two widely-used deep learning frameworks that offer comprehensive support for GPU acceleration. They abstract the complexities of GPU programming and provide high-level APIs to leverage GPU power for trainingandinferenceofneuralnetworks[5].

NVIDIA Nsight is an integrated development environment for debugging, profiling, and optimizing CUDA applications. It integrates with popular IDEs like Visual Studio and Eclipse, providing developers with a familiar environment to develop, analyze,andoptimizetheirGPU-acceleratedapplications[1].

VulkanisanotherAPIdesigned forhigh-performancegraphicsandcomputeapplications.Itprovideslow-level accesstoGPU hardware, enabling developers to achieve fine-grained control over GPU resources and optimize performance-critical applications[2].

6.1Power Consumption and Efficiency

OneoftheprimarychallengesforGPUarchitectureismanagingpowerconsumptionandimprovingenergyefficiency.AsGPUs become more powerful, they also consume more power, which can limit their scalability and applicability in powerconstrained environments. Various approaches are being explored to address this issue, including the development of more efficientcoolingsystems,dynamicpowermanagementtechniques,andadvancementsinsemiconductortechnologytoreduce energyconsumptionwhilemaintaininghighperformance[4].

6.2 Heterogeneous Computing

The future of GPU architecture lies in heterogeneous computing, where CPUs and GPUs work together seamlessly to handle differentpartsofa workload.Advancesininterconnect technologiesandsoftwareframeworkswill becrucial inrealizingthe full potential of this approach. For instance, NVIDIA's NVLink and AMD's Infinity Fabric are designed to facilitate high-speed datatransferbetweenCPUsandGPUs,enhancingtheefficiencyofheterogeneoussystems[2].

AsAIapplicationscontinuetogrow,GPUswillincreasinglyincorporatespecializedhardwareforAIprocessing,suchastensor cores and dedicated AI accelerators. This integration will drive further performance improvements and expand the range of applicationsthatcanbenefitfromGPUacceleration.Additionally,thedevelopmentofnewsoftwaretoolsandlibrariestailored forAIworkloadswillfurtherenhancetheusabilityandperformanceofGPUsinAItasks[5].

The software ecosystem surrounding GPUs is continually evolving to provide better support for developers. This includes advancements in compilers, debuggers, and profiling tools that make it easier to write, optimize, and troubleshoot GPUacceleratedapplications.EnhancementsinprogramminglanguagesandAPIs,suchastheintroductionofmoreintuitivesyntax andhigher-levelabstractions,alsocontributetothegrowingaccessibilityofGPUprogramming[1].

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN:2395-0072

6.5Security

As GPUs are increasingly used in critical applications, security and reliability become paramount concerns. Researchers are exploring various methods to ensure the robustness of GPU hardware and software against potential vulnerabilities and failures.Thisincludesdevelopingtechniquesforerrordetectionandcorrection,implementingsecureprogrammingpractices, andenhancingtheresilienceofGPUsystemsinthefaceofhardwareandsoftwarefaults[4].

ThecontinuousadvancementofGPUtechnologyisopeningupnewavenuesforresearchandapplications.Forexample,GPUs are being explored for their potential in quantum computing, where they could be used to simulate quantum systems and accelerate quantum algorithms. Additionally, the integration of GPUs in edge computing devices promises to bring highperformance computing capabilities to the edge of the network, enabling real-time processing for IoT and other latencysensitiveapplications[3].

7.Conclusion

GPUs have significantly evolved from specialized graphics accelerators to versatile, high-performance computing engines. Their parallel processing capabilities have made them essential in a variety of fields, including graphics rendering, highperformance computing, artificial intelligence, scientific research, and more. This review has highlighted key architectural advancements,suchas programmable shaders and unifiedshader architectures,as well asmodern developmentsliketensor coresandhigh-bandwidthmemory.

AsGPUscontinuetointegratespecializedAIhardwareandsupportheterogeneouscomputing,theirimpactontechnologyand industrywillonlygrow.Challengessuchaspowerconsumptionandsecuritymustbeaddressed,buttheongoingdevelopment of supportive software ecosystems and advanced programming tools will enhance their usability and performance. GPUs are poisedtoremainattheforefrontoftechnologicalinnovation,drivingadvancementsincomputationandexpandingtheirrolein emergingapplications.

References

1. Erik Lindholm, John Nickolls, Stuart Oberman, John Montrym, and NVIDIA, "NVIDIA TESLA: A UNIFIED GRAPHICS ANDCOMPUTINGARCHITECTURES,"IEEEComputerSociety,0272-1732/08Mar-Apr2008.

2. Shilan Ahmed Mohammed, Rezgar Hasan Saeed, Jihan Abdulazeez Ahmed, Shilan Bashar Muhammad, Zainab Salih Ageed, Zryan Najat Rashid, "GPU Concepts and Graph Application Challenges: A Review," International Journal of MultidisciplinaryResearchandPublications,ISSN(Online):2581-6187.

3. SWAYAM, NPTEL Online course, "GPU Architectures and Programming," Prof. Soumyajit Dey.Stephen W. Keckler, William J. Dally, Brucek Khailany, Michael Garland, David Glasco, NVIDIA."GPUs AND THE FUTURE OF PARALLEL COMPUTING."ArticleinIEEEMicro.November2011DOI:10.1109/MM.2011.89.

4. Marko J. Misic, Dorde M. Durdevic, and Milo V. Tomasevic, "Evolution and Trends in GPU Computing." Conference Paper.January2012.

5. FumihikoINO,"ThePast,PresentandFutureofGPU-AcceleratedGridComputing,"ConferencePaper.December2013.

6. "What's in Your Genome? Startup Speeds DNA Analysis with GPUs," [Online]. Available: https://blogs.nvidia.com/blog/2018/09/05/parabricks-genomic-analysis/

7. "GPUUsageinCryptocurrencyMining,"[Online].Available:https