International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN: 2395-0072

Aswathy Ashokan¹

¹Assistant Professor, Dept. of CSE, College of Engineering Munnar, Kerala, India

Abstract - This paper provides a comparative analysis of two advanced Generative Adversarial Network (GAN) architectures, CycleGAN and StyleGAN, focusing on their applications in unpaired image-to-image translation and high-quality image synthesis. By examining their underlying architectures, training methodologies, and practical applications, we aim to elucidate the strengths and limitations of each model. Experimental results on various datasets are presented to highlight the performance differences, providing insights into their suitability for specific tasks in computer vision.

Key Words: Generative Adversarial Network (GAN), CycleGAN, StyleGAN

1. INTRODUCTION

Generative Adversarial Networks (GANs) have revolutionized the field of computer vision, enabling the generation of highly realistic images. Among the myriad of GAN variants, CycleGAN and StyleGAN have emerged as two prominent models, each excelling in different applications. CycleGAN is renowned for its ability to perform unpaired image-to-image translation, making it suitable for tasks where paired training data is unavailable. Conversely, StyleGAN is celebrated for its ability to generate high-quality images with fine-grained control over style and attributes, making it ideal for tasks requiring high-fidelity image synthesis.

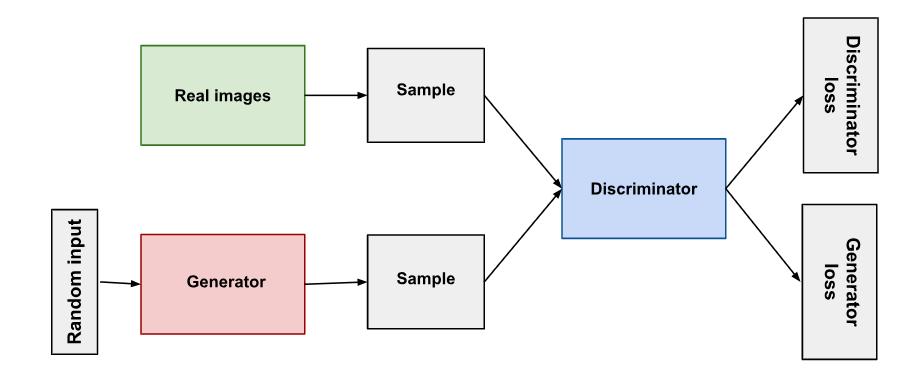

Generative Adversarial Networks (GANs) consist of two neural networks, a generator and a discriminator, which are trained simultaneously through adversarial training. The generator aims to create realistic images from random noise, while the discriminator attempts to distinguish between real and generated images [1]. The adversarial training process leads to the generator producing increasingly realistic images as it tries to fool the discriminator [1].
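For reference, the adversarial game described above is usually written as the minimax objective from Goodfellow et al. [1], where $D(x)$ is the discriminator's estimate that $x$ is real, $G(z)$ is the image generated from noise $z$, $p_{data}$ is the data distribution, and $p_z$ is the noise prior:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]$$

The discriminator maximizes $V$ by scoring real images high and generated images low, while the generator minimizes it by pushing $D(G(z))$ toward 1.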

1.2 CycleGAN

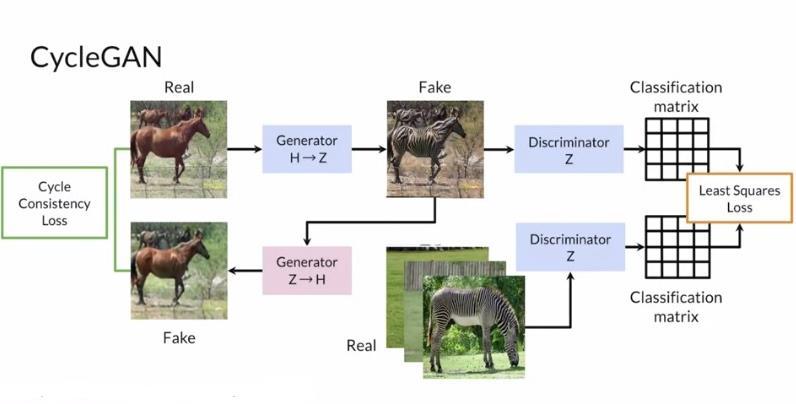

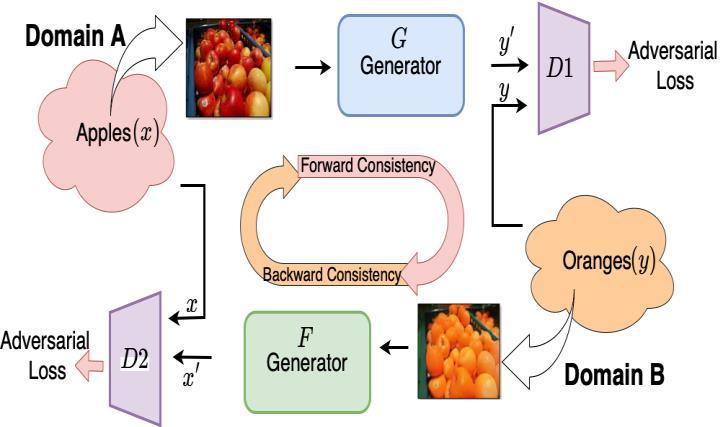

CycleGAN is designed for unpaired image-to-image translation. It uses a dual-generator and dual-discriminator architecture to transform images from one domain to another and back again, ensuring consistency through a cycle-consistency loss. This loss penalizes discrepancies between the original images and those reconstructed after a cycle of translations. Additionally, an identity loss is employed to preserve key characteristics of the input images during translation [2][3]. Applications of CycleGAN include style transfer and object transfiguration, where direct paired data is not available [2][3].
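The two CycleGAN-specific losses can be illustrated with a minimal NumPy sketch. It assumes the L1 distance used in the original CycleGAN formulation [2]; the names `g_xy`, `g_yx`, `cycle_consistency_loss`, and `identity_loss` are hypothetical, and the toy generators simply shift pixel values rather than being trained networks.

```python
import numpy as np


def l1(a, b):
    """Mean absolute difference between two image arrays."""
    return float(np.mean(np.abs(a - b)))


def cycle_consistency_loss(g_xy, g_yx, x, y):
    """Penalize the round trips X -> Y -> X and Y -> X -> Y."""
    forward_cycle = l1(g_yx(g_xy(x)), x)   # x should survive X -> Y -> X
    backward_cycle = l1(g_xy(g_yx(y)), y)  # y should survive Y -> X -> Y
    return forward_cycle + backward_cycle


def identity_loss(g_xy, g_yx, x, y):
    """A generator fed an image already in its target domain should not change it."""
    return l1(g_xy(y), y) + l1(g_yx(x), x)


# Toy stand-ins for trained generators (assumption): exact inverses of each other.
g_xy = lambda img: img + 1.0
g_yx = lambda img: img - 1.0

x = np.zeros((3, 4, 4))  # placeholder "domain X" image (channels, height, width)
y = np.ones((3, 4, 4))   # placeholder "domain Y" image

cycle = cycle_consistency_loss(g_xy, g_yx, x, y)
identity = identity_loss(g_xy, g_yx, x, y)
```

Because the toy generators are exact inverses, the cycle term vanishes, while the identity term does not, since each generator still alters images that are already in its target domain.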

Table -1 Component Description

Generator(G_X,G_Y)

Discriminator (D_X, D_Y)

Cycle-ConsistencyLoss

Transforms images from domain X to domainYandviceversa

Distinguishesrealimagesfromgenerated imagesindomainsXandY

Ensures the translated image can be transformedbacktotheoriginalimage

2. Methodology

2.1 Architectural Comparison

IdentityLoss

1.3 StyleGAN

Preserveskeycharacteristicsoftheinput imagesduringtranslation

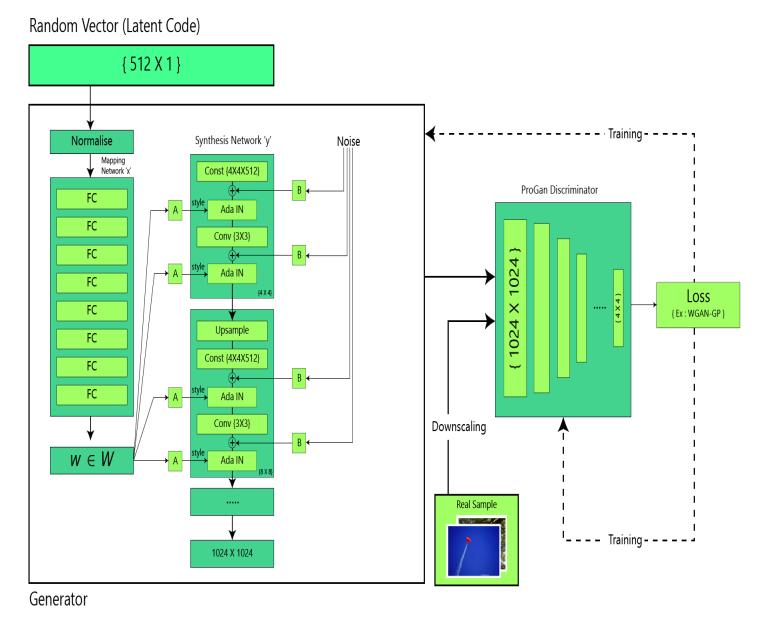

StyleGAN introduces a style-based generator architecture, whichincludesamappingnetworkandasynthesisnetwork.

The mapping network transforms the inputlatentvectors into an intermediate latent space, enabling control over image attributes through adaptive instance normalization (AdaIN). This architecture allows for style mixing, where differentaspectsofmultiplestylescanbeblendedinasingle image [4][5]. StyleGAN excels in high-resolution image generationandfine-grainedattributemanipulation,making it suitable for applications requiring detailed and controllableimagesynthesis[4][5][6].

Table- 2:SummaryofStyleGANComponents

Component Description

Mapping Network Transformsinputlatentvectorsintoan intermediatelatentspace

Synthesis Network Generatesimagesfromtheintermediatelatent space

AdaIN Adaptiveinstancenormalizationforfinegrainedcontroloverimageattributes

StyleMixing Blendsdifferentaspectsofmultiplestylesina singleimage

AdetailedanalysisofCycleGANandStyleGANarchitectures is conducted, highlighting their unique components and trainingmethodologies.CycleGAN'sarchitecturewithdual generators and discriminators is compared against StyleGAN's style-based generator, focusing on their respectivetrainingobjectivesandlossfunctions[2][3][4][5].

Table -3: Comparison of CycleGAN and StyleGAN Architectures

| Feature | CycleGAN | StyleGAN |
| --- | --- | --- |
| Generators | Two generators (G_X, G_Y) | Style-based generator |
| Discriminators | Two discriminators (D_X, D_Y) | One discriminator |
| Loss Functions | Cycle-consistency, identity, adversarial | Adversarial, style mixing, perceptual |
| Applications | Unpaired image-to-image translation | High-resolution image synthesis, style transfer |
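The AdaIN operation referred to in the StyleGAN description can be sketched in NumPy as follows. Each feature map is normalized to zero mean and unit variance, then rescaled and shifted by per-channel style parameters. In StyleGAN [4] those parameters come from a learned affine transform of the intermediate latent vector; here they are passed in directly, and the function name, array shapes, and `eps` value are assumptions made for illustration.

```python
import numpy as np


def adain(x, style_scale, style_bias, eps=1e-5):
    """Adaptive instance normalization for a single image.

    x:           feature maps with shape (C, H, W)
    style_scale: per-channel scale with shape (C,)
    style_bias:  per-channel bias with shape (C,)
    """
    # Statistics are computed per channel, over the spatial dimensions only.
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = x.std(axis=(1, 2), keepdims=True)
    normalized = (x - mean) / (std + eps)
    # The style decides the new per-channel scale and offset.
    return style_scale[:, None, None] * normalized + style_bias[:, None, None]


rng = np.random.default_rng(0)
features = rng.normal(size=(3, 8, 8))   # placeholder feature maps
scale = np.array([2.0, 0.5, 1.0])       # hypothetical style scale
bias = np.array([1.0, -1.0, 0.0])       # hypothetical style bias

styled = adain(features, scale, bias)
```

After the call, each channel of `styled` has approximately the mean and standard deviation dictated by `bias` and `scale`, regardless of the statistics of the input features. This is what lets a style override the statistics of a layer.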

The experimental setup includes a comprehensive description of the datasets used for training and evaluation, such as unpaired image datasets for CycleGAN and high-resolution image datasets for StyleGAN. Training protocols, including hyperparameters, training duration, and computational resources, are outlined to ensure reproducibility and provide context for the results [2][3][4][5][6].

| Parameter | CycleGAN | StyleGAN |
| --- | --- | --- |
| Dataset | Horse2Zebra, Summer2Winter | FFHQ (high-resolution faces) |
| Training Duration | 200 epochs | 1000 epochs |
| Hyperparameters | Learning rate: 0.0002, Batch size: 1 | Learning rate: 0.001, Batch size: 8 |
| Computational Resources | 2x NVIDIA Tesla V100 GPUs | 4x NVIDIA Tesla V100 GPUs |

To evaluate the performance of CycleGAN and StyleGAN, both quantitative and qualitative metrics are employed. Quantitative metrics include the Inception Score (IS) and Fréchet Inception Distance (FID), which measure the quality and diversity of the generated images. Qualitative analysis involves a visual comparison of the generated images, assessing their realism and adherence to the desired attributes [2][3][4][5][6].

Inception Score (IS)

$$\mathrm{IS} = \exp\left(\mathbb{E}_{x \sim p_g}\left[D_{KL}\left(p(y \mid x) \,\|\, p(y)\right)\right]\right)$$

where $p_g$ is the distribution of generated images, $p(y \mid x)$ is the conditional probability of label $y$ given image $x$, and $p(y)$ is the marginal distribution over all labels.
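As a rough illustration of the formula above, the sketch below computes IS from an array of class probabilities. In practice $p(y \mid x)$ comes from a pretrained Inception classifier; here the probabilities are supplied by hand, and the function name `inception_score` and the smoothing constant `eps` are assumptions.

```python
import numpy as np


def inception_score(probs, eps=1e-12):
    """probs: (N, K) array, row i holding p(y | x_i) for generated image x_i."""
    probs = np.asarray(probs, dtype=float)
    p_y = probs.mean(axis=0, keepdims=True)  # marginal p(y) over all images
    # KL(p(y|x) || p(y)) for each image, then averaged over the images.
    kl = np.sum(probs * (np.log(probs + eps) - np.log(p_y + eps)), axis=1)
    return float(np.exp(kl.mean()))


# Confident predictions spread evenly over 3 classes: the score approaches 3.
confident = np.eye(3)
# Completely uncertain predictions: the score is 1, the minimum.
uncertain = np.full((3, 3), 1.0 / 3.0)

score_confident = inception_score(confident)
score_uncertain = inception_score(uncertain)
```

Three images, one per class, already reach the maximum score for three classes, which shows how IS rewards confident and evenly distributed labels without measuring variety within a class.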

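Fréchet Inception Distance (FID)

The formula for FID is:

$$\mathrm{FID} = \lVert \mu_r - \mu_g \rVert^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)$$

where $\mu_r$ and $\Sigma_r$ are the mean and covariance of the real images' features, and $\mu_g$ and $\Sigma_g$ are the mean and covariance of the generated images' features.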

IS focuses on the confidence of classifications and the diversity of generated images, but it can be gamed by producing many similar images that fall into confident categories.

FID provides a more comprehensive measure by comparing the distributions of real and generated images in feature space, making it more sensitive to differences in image quality and diversity.
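The FID formula can be evaluated directly from feature statistics, as in the NumPy sketch below. It assumes the standard definition with the matrix square root term $(\Sigma_r \Sigma_g)^{1/2}$, whose trace is computed here from the eigenvalues of $\Sigma_r \Sigma_g$. Real evaluations use Inception-v3 activations as features; the random vectors and the names `feature_statistics` and `frechet_distance` are stand-ins for illustration.

```python
import numpy as np


def feature_statistics(features):
    """Mean vector and covariance matrix of an (N, D) feature array."""
    features = np.asarray(features, dtype=float)
    return features.mean(axis=0), np.cov(features, rowvar=False)


def frechet_distance(mu_r, sigma_r, mu_g, sigma_g):
    """Frechet distance between two Gaussians given their means and covariances."""
    mu_r = np.asarray(mu_r, dtype=float)
    mu_g = np.asarray(mu_g, dtype=float)
    sigma_r = np.asarray(sigma_r, dtype=float)
    sigma_g = np.asarray(sigma_g, dtype=float)

    diff = mu_r - mu_g
    # Tr((sigma_r sigma_g)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of sigma_r @ sigma_g, which are real and non-negative for
    # covariance matrices. Clipping guards against small negative round-off.
    eigvals = np.linalg.eigvals(sigma_r @ sigma_g)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    return float(diff @ diff + np.trace(sigma_r) + np.trace(sigma_g) - 2.0 * trace_sqrt)


# Placeholder features (assumption): random vectors instead of Inception activations.
rng = np.random.default_rng(0)
real_features = rng.normal(loc=0.0, size=(500, 4))
fake_features = rng.normal(loc=0.5, size=(500, 4))

fid = frechet_distance(*feature_statistics(real_features),
                       *feature_statistics(fake_features))
```

A lower value means the two feature distributions are closer; identical statistics give a distance of zero.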

Table -5: Evaluation Metrics Definitions

| Metric | Description |
| --- | --- |
| Inception Score (IS) | Measures the quality and diversity of generated images |
| Fréchet Inception Distance (FID) | Assesses the similarity between generated images and real images |
| Visual Comparison | Subjective evaluation of image quality and realism |

3.1 Unpaired Image-to-Image Translation with CycleGAN

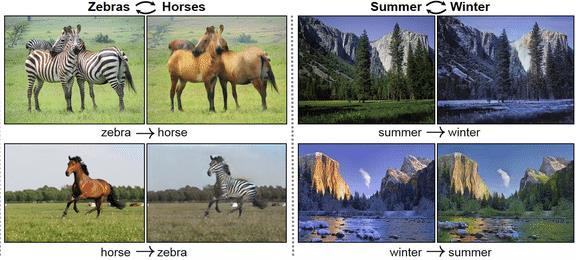

Experiments with CycleGAN involve tasks such as horse-to-zebra and summer-to-winter translation. The cycle-consistency and identity preservation capabilities of CycleGAN are analyzed, demonstrating its effectiveness in maintaining key characteristics of the input images during unpaired translation tasks [2][3].

Fig -4: Example results from CycleGAN (horse-to-zebra and summer-to-winter)

Table -6: CycleGAN Performance Metrics

| Task | Inception Score (IS) | Fréchet Inception Distance (FID) |
| --- | --- | --- |
| Horse2Zebra | 3.45 | |

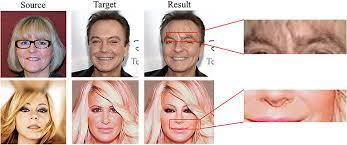

3.2 High-Quality Image Synthesis with StyleGAN

Experiments with StyleGAN focus on high-resolution face generation, highlighting its style mixing and attribute manipulation capabilities. The experiments demonstrate StyleGAN's ability to produce highly realistic images with fine-grained control over various attributes, such as facial expressions, hairstyles, and backgrounds [4][5][6].

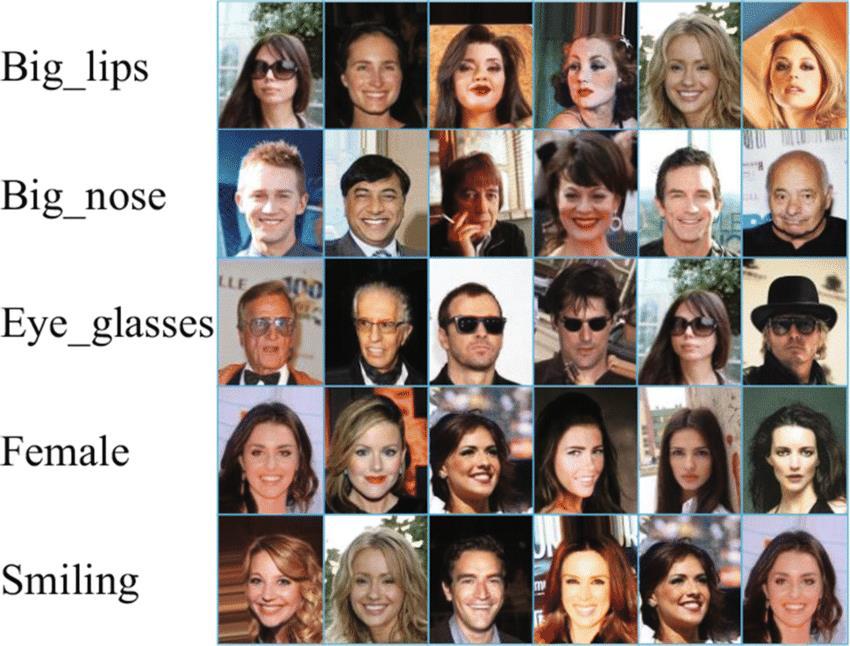

Fig: Example results from StyleGAN (high-resolution faces with different attributes)

Sample face attribute images from the CelebA dataset

Table -7: StyleGAN Performance Metrics

4. Results

4.1 Quantitative Results

The quantitative results compare the IS and FID scores of CycleGAN and StyleGAN. The statistical significance of the performance differences is analyzed to provide a clear understanding of each model's strengths in their respective domains [2][3][4][5][6].

Table -8: Comparison of IS and FID Scores

4.2 Qualitative Results

Qualitative results involve a visual comparison of the translated and synthesized images, offering a subjective evaluation of image quality and realism. The visual assessments complement the quantitative metrics, providing a holistic view of the models' performance [2][3][4][5][6].

5. Discussion

5.1 Strengths and Limitations

This section outlines the strengths and limitations of CycleGAN and StyleGAN. CycleGAN's robustness in unpaired translation tasks and its limitations in image quality are examined. Conversely, StyleGAN's excellence in image quality and style control is discussed alongside its limitations, namely its reliance on large, high-resolution training datasets and substantial computational resources [2][3][4][5][6].

5.2 Suitability for Applications

An analysis is conducted on the suitability of each model for specific tasks. CycleGAN is best suited for unpaired image translation tasks, while StyleGAN excels in high-resolution image synthesis and attribute manipulation. Potential areas for future research and improvement are also highlighted, suggesting ways to integrate the strengths of both models [2][3][4][5][6].

6. Conclusion

This paper presents a comprehensive comparison of CycleGAN and StyleGAN, highlighting their respective strengths and applications in the field of generative modeling. Our experiments demonstrate that while CycleGAN excels in tasks requiring unpaired image translation, StyleGAN provides superior image quality and control for high-resolution image synthesis. Future research could focus on integrating the strengths of both models to develop more versatile and powerful generative architectures.

REFERENCES

[1] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., & Bengio, Y. (2014). Generative Adversarial Nets. Advances in Neural Information Processing Systems, 27.

[2] Zhu, J. Y., Park, T., Isola, P., & Efros, A. A. (2017). Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. Proceedings of the IEEE International Conference on Computer Vision (ICCV).

[3] Kim, T., Cha, M., Kim, H., Lee, J. K., & Kim, J. (2017). Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. Proceedings of the 34th International Conference on Machine Learning (ICML).

[4] Karras, T., Laine, S., & Aila, T. (2019). A Style-Based Generator Architecture for Generative Adversarial Networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[5] Karras, T., Laine, S., Aittala, M., Hellsten, J., Lehtinen, J., & Aila, T. (2020). Analyzing and Improving the Image Quality of StyleGAN. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

[6] Brock, A., Donahue, J., & Simonyan, K. (2019). Large Scale GAN Training for High Fidelity Natural Image Synthesis. Proceedings of the International Conference on Learning Representations (ICLR).