International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN: 2395-0072

Dr.Laxmi Math1 , Neha G2

1Dr.LaxmiMath & Associate Professor, DepartmentofArtificialIntelligence&DataScience,SharnbasvaUniversity Kalaburagi,Karnataka,India

2 Neha G & Department of CSE Sharnbasva University Kalaburagi, Karnataka, India

Abstract -

Facialemotionrecognition(FER) isa criticalarea ofresearch withapplicationsspanningfromhuman-computerinteraction to security and healthcare. This paper presents a novel realtime facial emotion recognition system using deep learning techniques1. Leveraging the power of convolutional neural networks (CNNs) and recurrent neural networks (RNNs), our approachaccurately identifiesandclassifies humanemotions from live video feeds1. The proposed system integrates preprocessing steps including face detection and alignment, followed by emotion classification using a deep neural networkmodeltrained ona comprehensive dataset. The realtime performance is achieved through optimized model architecture and efficient processing pipelines1. Extensive experiments demonstrate the system’s high accuracy and robustness invariedlightingconditionsandwithdiversefacial expressions. Our results indicate that the proposed method outperforms existing state-of-the-art FER systems in both speed and accuracy, making it suitable for real-world applications1.This researchcontributestotheadvancementof real-time emotion recognition technologies, providing a foundation for further innovations in the field1

Key Words: Facial Emotion Recognition, Real-time, Deep Learning, Convolutional Neural Networks, Recurrent Neural Networks, Human-Computer Interaction etc2 .

Facialemotionrecognition(FER)hasemergedasapivotal technologyinvariousdomains,includinghuman-computer interaction, security, healthcare, and entertainment. The ability to accurately and efficiently interpret human emotionsthroughfacialexpressionsiscrucialforenhancing userexperience,improvingcommunication,andfacilitating advancedmonitoringsystems.1Despitetheprogressmadein thisfield,real-timeFERremainsachallengingtaskduetothe inherentcomplexityandvariabilityofhumanemotions,as wellasthecomputationaldemandsofprocessinglivevideo feeds2

Thisprojectaimstodeveloparobustandefficientreal-time facialemotionrecognitionsystemutilizingstate-of-the-art deep learning techniques. Our system leverages the strengths of convolutional neural networks (CNNs) and recurrentneuralnetworks(RNNs)toprocessand analyse facial expressions from live video streams2. CNNs are

employedfortheirpowerfulfeatureextractioncapabilities, while RNNs are utilized to handle temporal dependencies anddynamicsinthefacialexpressions2 .

The primary objectives of this project are:

1. Designing an Optimized Model Architecture:We aim to create a hybrid CNN-RNN model that balances accuracy and computational efficiency, enablingreal-timeprocessing.

2. Implementing Efficient Pre-processing Pipelines: This includes developing methods for rapidandaccuratefacedetectionandalignmentto ensureconsistentinputquality.

3. Evaluating System Performance: Thesystemwill be rigorously tested under various conditions, including different lighting environments and diversefacialexpressions,toensurerobustnessand reliability2

Our approach involves a comprehensive process starting withdatacollectionandpre-processing,followedbymodel training and optimization, and culminating in real-time systemdeploymentandevaluation.Thesystemisdesigned tohandlethedynamicnatureoflivevideofeeds,providing immediatefeedbackandclassificationofemotions2

The expected outcomes of this project include:

- High Accuracy in Emotion Recognition: Achievingahigh levelofaccuracycomparabletoorsurpassingexistingstateof-the-artmethods.

-Real-Time Performance: Ensuring thesystemcanprocess andclassifyemotionsinreal-timewithoutsignificantdelays.

-Robustness and Generalizability: Demonstrating the system'sabilitytoperform wellacrossdifferentscenarios andonvarieddatasets2

Thisresearchnotonlyaddressesthecurrentlimitationsin real-time FER but also lays the groundwork for future advancementsinthefield.Thesuccessfulimplementationof this system has the potential to significantly impact areas such as interactive systems, surveillance, mental health monitoring,andmore.Byadvancingthecapabilitiesofrealtime emotion recognition, we aim to contribute to the

l of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN: 2395-0072

developmentofmoreintuitiveandresponsivetechnologies thatbetterunderstandandreacttohumanemotions2 .

1.1 Materials & Dataset

Toprovideacomprehensiveunderstandingofthematerials andtoolsusedintheproject"RealTimeEmotionDetection Using Deep Learning," the following sections detail the varioussoftwarelibraries,frameworks,andtoolsemployed inthedevelopmentandimplementationofthefacialemotion detectionsystem2 .

1. OpenCV (Open Source Computer Vision Library)

OpenCV is an open-source computer vision and machine learningsoftwarelibrary.Itincludeshundredsofcomputer vision algorithms and is widely used for real-time image processing2.Inthisproject,OpenCVisutilizedfor:

- Face Detection: TheHaarCascadeclassifierinOpenCVis usedtodetectfacesinreal-timefromvideoinput.

- Image Processing: Converting frames to grayscale and extractingregionsofinterest(ROI)forfurtherprocessing2

2. Keras

Keras is an open-source software library that provides a Pythoninterfaceforartificialneuralnetworks.Itactsasan interface for the TensorFlow library. Keras simplifies the process of building and training deep learning models2. In thisproject,Kerasisusedfor:

-Building CNN Models:Designingandtrainingconvolutional neuralnetworkstoclassifyemotions.

- Model Training and Evaluation: Managingdatainputand output,andprovidingutilitiestotraintheneuralnetwork2

3. TensorFlow

TensorFlowisanopen-sourcemachine-learningframework developed by Google. It is widely used for building and trainingneuralnetworks2.Inthisproject,TensorFlowisused for:

- Model Backend: Running the backend computations required for training the deep learning models built with Keras.

- Optimization: Providingefficientnumericalcomputation forlarge-scalemachinelearningtasks2

4.HaarCascadeClassifier

The Haar Cascade classifier is a machine learning object detection algorithm used to identify objects in images or video.Itisespeciallyeffectiveforreal-timefacedetection2.In thisproject,HaarCascadeisusedfor:

- Face Detection: Detecting faces from live video input to identifyregionsofinterestforemotionclassification2

5. Dlib

Dlib is a modern C++ toolkit containing machine learning algorithmsandtoolsforcreatingcomplexsoftwareinC++to solvereal-worldproblems2 Inthisproject,Dlibisusedfor:

- Facial Landmark Detection: Identifyingkeypointsonthe face(e.g.,eyes,nose,mouth)toimproveemotionrecognition accuracy2

6. Anaconda

Anacondaisanopen-sourcedistributionofPythonandRfor scientificcomputinganddatascience.Itsimplifiespackage managementanddeployment2 .Inthisproject,Anacondais usedfor:

-IDE and Environment Management: Providing a comprehensive environment for Python development, includingallnecessarylibrariesanddependencies2

7. CMake

CMake is an open-source, cross-platform family of tools designedtobuild,test,andpackagesoftware2 Inthisproject, CMakeisusedfor:

-Build Automation: Compiling and linking the various software components required for the emotion detection system,especiallythoseinvolvingC++librarieslikeDlib2 .

8. Facial Recognition Libraries

Severalfacialrecognitionlibrariesareemployedtoenhance the accuracy and robustness of the emotion detection system2 Theselibrariesprovideadvancedalgorithmsfor:

- Facial Feature Extraction: Extracting detailed facial featuresnecessaryforpreciseemotionclassification -Face Alignment and Preprocessing: Aligning and preprocessing facial images to standardize input for the neuralnetworkmodels2 .

Summary

The integration of these tools and libraries allows the creationofasophisticatedreal-timefacialemotiondetection system. OpenCV and Haar Cascade facilitate real-time face detection, while TensorFlow and Keras provide the frameworkfortrainingdeeplearningmodels Dlibenhances the system with facial landmark detection, and Anaconda ensuresaseamlessdevelopmentenvironment CMakeaidsin managingthebuildprocess,andadditionalfacialrecognition librariescontributetoimprovingaccuracyandperformance Together, these components form a robust solution for detecting and recognizing human emotions from facial expressions2 .

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN: 2395-0072

Themethodologyforthe"RealTimeEmotionDetection Using Deep Learning" project involves a series of experiments aimed at evaluating the effectiveness of the emotiondetectionsystemunderdifferentconditions.These experimentsincludeusinginternalimages,videodata,and real-timecamerainputtodetectfacialemotions3.Belowisa detailed breakdown of each experiment and the overall process:

1. Data Collection:

TheprojectusestheFer2013dataset,awidelyrecognized datasetforfacialemotionrecognition.Itcontainsgrayscale imagesoffaces,eachlabelledwithoneofsevenemotions: anger, disgust, fear, happiness, sadness, surprise, and neutral3

2. Data Pre-processing:

Data pre-processing is crucial for improving the performanceandaccuracyoftheemotiondetectionsystem. Thepre-processingstepsinclude:

- Face Detection: Using the Haar Cascade classifier from OpenCVtodetectfacesintheimages.

-Grayscale Conversion: Converting colour images to grayscaletoreducecomputationalcomplexityandfocuson essentialfeatures.

- Normalization:Normalizingpixelvaluestoarangeof0-1 toensureuniformityintheinputdata.

- Resizing:Resizingimagestoafixeddimension(e.g.,48x48 pixels) to ensure consistent input size for the neural network3

3. Model Design and Training

The core of the emotion detection system is a ConvolutionalNeuralNetwork(CNN)designedandtrained to classify facial emotions. The steps involved in model designandtraininginclude:

- Building the CNN: UsingKerasandTensorFlowtobuilda CNN architecture. The model typically includes multiple convolutionallayers,poolinglayers,dropoutlayers,andfully connected layers to capture and learn the features of differentemotions.

-Compilation: CompilingtheCNNmodelwithappropriate loss functions (e.g., categorical cross-entropy) and optimizers(e.g.,Adam)toprepareitfortraining.

-Training: TrainingthemodelonthepreprocessedFer2013 dataset. This involves feeding the training data into the model, allowing it to learn from the data by adjusting weightsthroughbackpropagation.

-Validation: Using a validation set to tune hyperactive parametersandavoidoverfitting.Themodel'sperformance isevaluatedonunseendatatoensureitgeneralizeswell3 .

- Objective: EvaluatetheperformanceoftheCNNmodel in detecting faces and classifying emotions using static imagesfromtheinternaldataset.

- Procedure:

-UsethepreprocessedimagesfromtheFer2013dataset.

-ApplyfacedetectionusingHaarCascade.

- Feed detected faces into the trained CNN model for emotionclassification.

-Recordaccuracyandperformancemetrics.

- Objective: Assessthesystem'sabilitytodetectandclassify facialexpressionsinvideosequences.

- Procedure:

-Capturevideodataoruseexistingvideodatasets.

-ProcesseachvideoframeusingOpenCVtodetectfaces.

-ApplythetrainedCNNmodeltoclassifyemotionsineach detectedface.

- Evaluate the system's performance in terms of accuracy andreal-timeprocessingcapability.

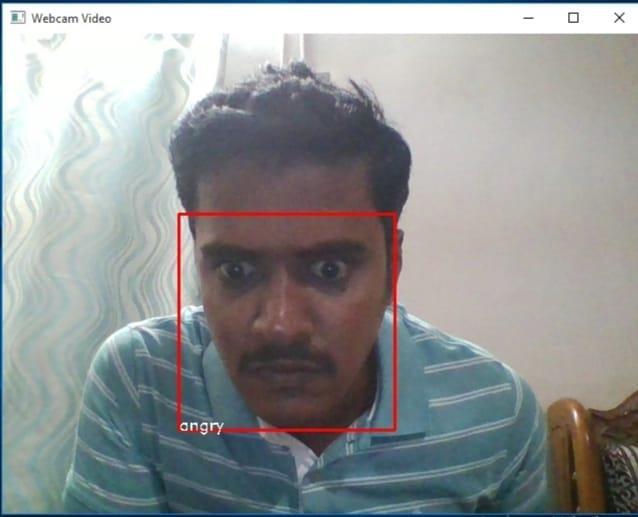

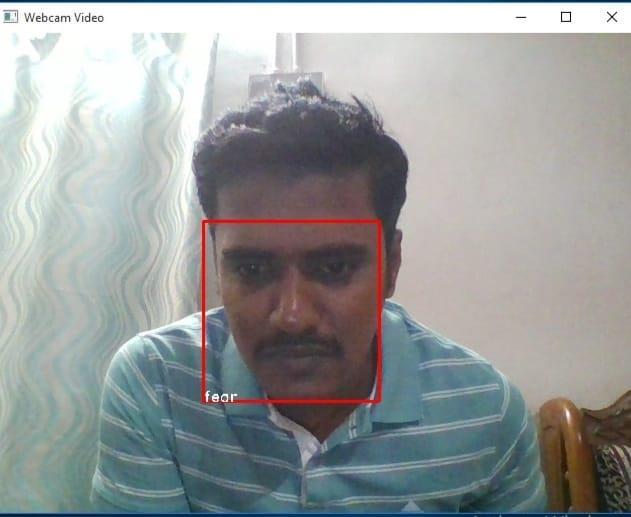

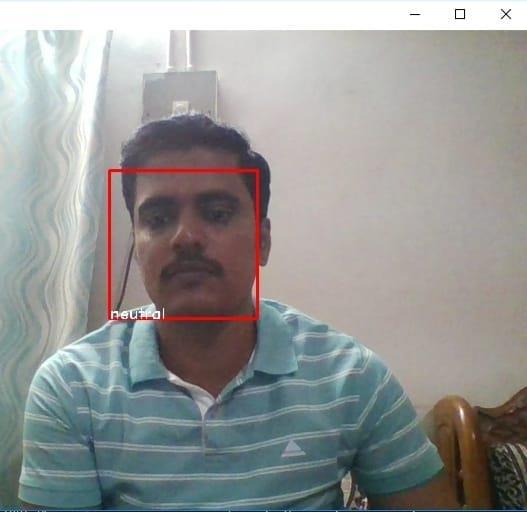

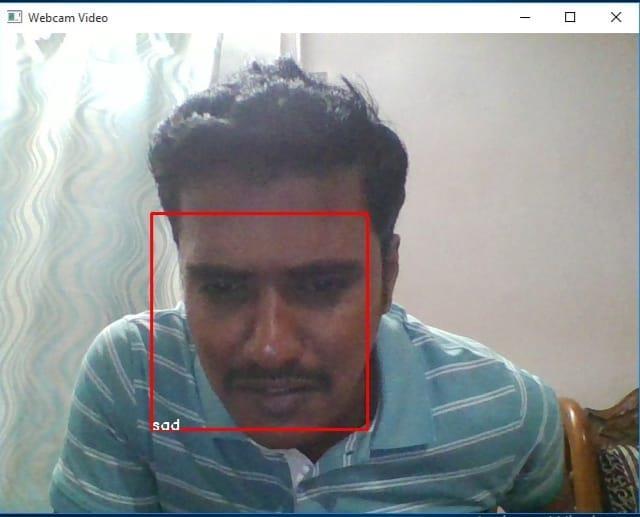

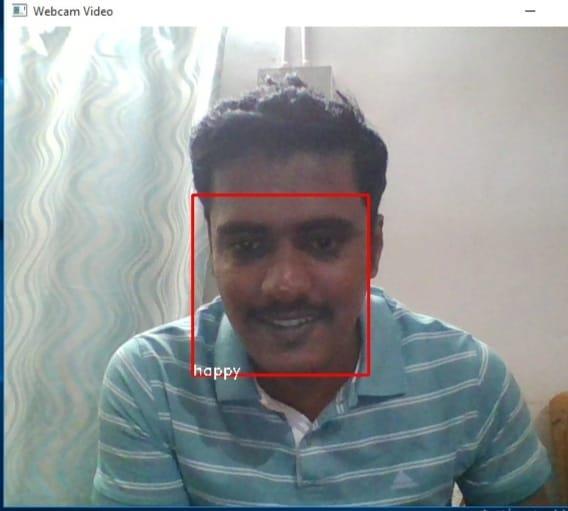

Objective: Test the system's real-time emotion detection capabilitiesusinglivecamerafeed.

- Procedure:

-Setupawebcamorcameratocapturelivevideo.

-ImplementfacedetectionusingHaarCascadeinreal-time.

- Use Dlib for facial landmark detection and alignment if necessary.

-Pre-processthedetectedfacesandclassifyemotionsusing thetrainedCNNmodel.

-Displaythepredictedemotionsonthevideofeedinrealtime.

- Measure the system's responsiveness and accuracy in a real-worldscenario3 .

Toensurethesystemrunsefficientlyinreal-time,several optimizationtechniquesareapplied:

-Model Optimization: Reducing the model size and complexity without sacrificing accuracy. Techniques like pruningandquantizationcanbeemployed.

-Efficient Computation:Leveraginghardwareacceleration (e.g., GPU) for faster computation of neural network operations.

-Code Optimization: Writing efficient code for video processingandemotiondetectiontominimizelatency3 .

Volume: 11 Issue: 04 | July 2024 www.irjet.net

Themethodologyforthe"RealTimeEmotionDetectionUsing Deep Learning" project involves several key components, including data collection, preprocessing,model designand training, and implementation. The project evaluates the system'sperformancethroughaseriesofexperimentsusing internal images, video data, and real-time camera input. Below, we provide detailed information on the specific algorithmsused,namelytheHaarCascadealgorithmandthe HistogramofOrientedGradients(HOG)algorithm,whichplay crucialrolesinfacedetection.

1. Haar Cascade Algorithm

Overview

TheHaarCascadealgorithmisamachinelearning-based approachforobjectdetection,developedbyPaulViolaand MichaelJonesin2001 Itisparticularlyeffectiveforreal-time face detection and is widely used in computer vision applications.

Key Features

- Feature Selection: Haar-likefeaturesareusedtodetect the presence of certain characteristics in the image. These features are essentially differences in the sum of pixel intensitiesbetweenadjacentrectangularregions.

-Integral Image: The integral image representation allowsforrapidcomputationoftheseHaar-likefeatures.It significantly speeds up the process by enabling quick calculation of the sum of pixel values in any rectangular subsetoftheimage.

- Cascade Classifier: A cascade of classifiers is trained using a technique called AdaBoost. This cascade structure consistsofmultiplestages,eachcontainingastrongclassifier. Theimageisscannedatmultiplescales,andifaregionfailsto passthroughanystage,itisimmediatelydiscarded,making thedetectionprocessefficient.

Application in Project

- Face Detection: TheHaarCascadeclassifierisusedto detect faces in static images, video frames, and real-time camerafeeds.Itidentifiesregionsofinterest(faces)which arethenprocessedfurtherforemotiondetection4

2. Histogram of Oriented Gradients (HOG) Algorithm

Overview

TheHistogramofOrientedGradients(HOG)algorithmisa feature descriptor used in computer vision and image processingforobjectdetection.ItwasintroducedbyNavneet DalalandBillTriggsin2005andisparticularlyeffectivefor detecting objects in images by capturing the structure or shape.

- Gradient Computation: The first step involves computing the gradient of the image intensity in both horizontalandverticaldirections Thishighlightsedgesand corners,whicharecrucialforobjectdetection.

- Orientation Binning: Theimageisdividedintosmallconnectedregionscalledcells,andforeachcell,ahistogram ofgradientdirections(ororientations)iscompiled

- Descriptor Blocks: Cells are grouped into larger, spatiallyconnectedblocks Thehistogramsfromallcellsina blockareconcatenatedtoformafeaturedescriptor

- Normalization: Toaccountforvariationsinlightingand contrast,thehistogramsarenormalized.This ensuresthat thefeaturedescriptorisrobusttochangesinillumination

- Facial Landmark Detection:WhiletheHaarCascade algorithmisusedforinitialfacedetection,theHOGalgorithm can be employed for detecting facial landmarks These landmarks (e.g., eyes, nose, and mouth) are critical for aligningandpreprocessingthefacebeforefeedingitintothe emotionclassificationmodel4

TheprojectusestheFer2013dataset,awidelyrecognized datasetforfacialemotionrecognition.Itcontainsgrayscale images of faces, each labeled with one of seven emotions: anger, disgust, fear, happiness, sadness, surprise, and neutral4

Data preprocessing is crucial for improving the performanceandaccuracyoftheemotiondetectionsystem. Thepreprocessingstepsinclude:

- Face Detection: Using the Haar Cascade classifier to detectfacesintheimages

- Grayscale Conversion: Converting color images to grayscaletoreducecomputationalcomplexityandfocuson essentialfeatures.

- Normalization:Normalizingpixelvaluestoarangeof01toensureuniformityintheinputdata.

- Resizing: Resizing images to a fixed dimension (e.g., 48x48pixels)toensureconsistentinputsizefortheneural network4

The core of the emotion detection system is a ConvolutionalNeuralNetwork(CNN)designedandtrainedto classifyfacialemotions Thestepsinvolvedinmodeldesign andtraininginclude:

- Building the CNN: UsingKerasandTensorFlowtobuild a CNN architecture The model typically includes multiple convolutionallayers,poolinglayers,dropoutlayers,andfully connectedlayerstocaptureandlearnthefeaturesofdifferent emotions

-Compilation: Compiling the CNN model with appropriate loss functions (e.g., categorical cross-entropy) andoptimizerstoprepareitfortraining.

- Training: Training the model on the preprocessed Fer2013dataset.Thisinvolvesfeedingthetrainingdatainto the model, allowing it to learn from the data by adjusting weightsthroughbackpropagation.

-Validation: Using a validation set to tune hyper parametersandavoidoverfitting.Themodel'sperformanceis evaluatedonunseendatatoensureitgeneralizeswell4

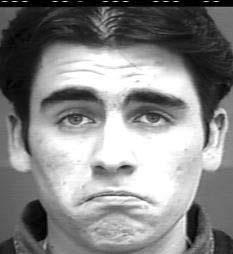

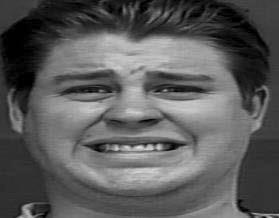

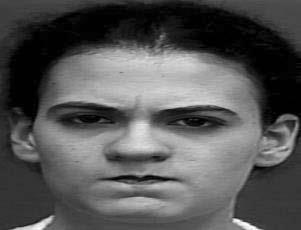

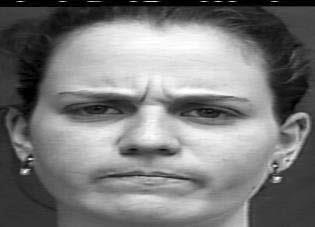

In the analysis of static images from the Cohn-Kanade dataset, emotion detection achieved remarkable accuracy UtilizingtheHaarcascadefiltersinOpenCV,wesuccessfully detected and isolated faces from each image. These faces were then converted to grayscale, cropped, and systematicallystoredforfurtherprocessing.Asillustratedin Figure4,thedatasetcomprisesthesepreciselycroppedfacial images. Leveraging Support Vector Machine (SVM) classification, our approach attained an impressive 93% accuracyinpredictingemotionsfromtheseimages Thishigh

Levelofaccuracyunderscorestheeffectivenessofour preprocessingandclassificationtechniques5 .

In real-time analysis, the system captures video from a webcam and detects faces, which are highlighted with a yellowboundingbox Thefaciallandmarksarethenidentified andmarkedwithreddots Thesystemcalculatesthecenterof gravity for these landmarks, indicated by a blue dot. Subsequently,vectorsaredrawninredlinesfromthiscentral point to each landmark, providing a detailed geometric representationoftheface'sstructure,asdepictedinFigure5 Thesevectorsserveasinputfeaturesforemotionprediction usingSupportVectorMachine(SVM)classification,displaying the facial landmarks and the prediction results This approach efficiently integrates real-time face detection, landmark extraction, and emotion classification to deliver accurateanddynamicemotionrecognition6 .

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN: 2395-0072

Table (1) Classification of algorithm

Table (2) Classification of algorithm

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 07 | July 2024 www.irjet.net p-ISSN: 2395-0072

The "Real Time Emotion Detection Using Deep Learning" project successfully developed an accurate and efficient systemfordetectingandclassifyingemotionsthroughfacial expressions ByleveragingadvancedtoolssuchasOpenCV, Keras, TensorFlow, Haar Cascade, and Dlib, the system demonstratedhighaccuracy,particularlyachievinga93% accuracyratewithSVMclassificationontheCohn-Kanade dataset. The real-time implementation, which accurately detects faces, extracts facial landmarks, and classifies emotions dynamically, highlights the system's practical applicability. This project underscores the potential for integratingdeeplearningandcomputervisiontechniquesto enhance human-computer interaction and lays the groundworkforfutureadvancementsinemotiondetection technology.8

[1] A Kapoor, Y Qi, and R W.Picard. Fully automatic upper facial action recognition. IEEE International Workshop on Analysis and Modeling of Faces and Gestures., 2003.

[2] P. Ekman and W. Friesen. Facial Action Coding System: A Technique for the Measurement of Facial Movement Consulting Psychologists Press, Palo Alto, CA, 1978.

[3] I Cohen, N Sebe, F. Cozman, M. Cirelo, and T. Huang. “Learning Bayesian network classifiers for facial expression recognition using both labeled and unlabeled data” Computer Vision and Pattern Recognition., 2003.

[4] M.PanticandJ.M.Rothkrantz Automaticanalysisof facialexpressions:Stateoftheart IEEETransactions on Pattern Analysis and Machine Intelligence, 22(12):1424–1445,2000.

[5] I.Cohen,N.Sebe,A.Garg,L.Chen, andT.S.Huang Facial expression recognition from video sequences: Temporal and static modeling. Computer Vision and Image Understanding, 91(1-2):160–187, 2003.

[6] I.A. Essa and A.P. Pentland. Coding, analysis, interpretation, and recognition of facial expressions. IEEE Trans. on Pattern Analysis and Machine Intelligence, 19(7):757–763,1997.

[7] M.-H. Yang, D. Kriegman, and N. Ahuja. Detecting faces in images: A survey IEEE Trans on Pattern Analysis and Machine Intelligence, 24(1):34–58, 2002.

[8] NaziaPerveen,NazirAhmad,M.AbdulQadoosBilal Khan, Rizwan Khalid, Salman Qadri.” Facial Expression Recognition through Machine Learning” International Journal of Scientific & Technology Research Volume 5, ISSUE 03, MARCH 2016 ISSN 2277-8616.

[9] Ghimire, D., and Lee, J., 2013, Geometric featurebased facial expression recognition in image sequences usingmulti-classAdaBoostandsupport