
NEWS

Safety Certification Issued for Industrial Collaborative Robot Technology

By David Greenfield

Editor-In-Chief/Director of Content, Automation World

With the rise of collaborative robots (cobots) over the past decade, it looked like the robotics industry would be segmented into two divisions—one for the high-speed industrial robots with which we’re all familiar and the other for cobots. Some robotic technology suppliers would, of course, offer both types of robots while others would specialize in one area or the other.

Then something interesting happened. Industrial robot suppliers began experimenting with the concept of bringing collaborative robot capabilities to industrial robots. I first saw this displayed as a concept project in a Mitsubishi Electric booth at a trade show five or six years ago. At the time, I thought this concept would proliferate quickly; however, I did not see it again until I attended the SPS (Smart Production Solutions) event in Nuremberg, Germany, in 2019. At that event, I again saw the technology on display at the Mitsubishi booth. This time, however, it was not a concept display but part of a product introduction by Mitsubishi and Realtime Robotics.

A few months later, on my last business trip before COVID-19 brought a temporary end to live industry events, I met with Veo Robotics and learned that they were devising a system that could be used to bring collaborative capabilities to any industrial robot. Veo does this with its FreeMove system, which uses multiple camera sensors and an algorithmic computing platform to transform industrial robots into cobots. Veo has relationships with ABB, Fanuc, KUKA, and Yaskawa, and its system is being applied and tested in the aerospace, automotive, household appliance, and construction equipment manufacturing industries.

A key component in the acceptance of technologies that promise to make industrial robots collaborative is overcoming the safety concerns of those who could be working in close proximity to the robots. After all, if you’ve ever seen an industrial robot going through its high-speed paces in a caged environment, you know you don’t want to be anywhere inside that cage when the robot is operating.

Such reservations about working so closely with industrial robots may now be on the verge of disappearing as Veo Robotics has just announced that its FreeMove system has been certified for compliance with ISO 13849 for PLd, Category 3 by TÜV Rheinland. According to TÜV, ISO 13849 addresses the safety of all system technologies including mechanical, hydraulic, and pneumatic products. Where safety functions are to be performed by safety related parts of the control system (SRP/CS), EN ISO 13849-1 can be used to show compliance with the essential health and safety regulations of the Machinery Directive 2006/42/EC.

“This is a momentous accomplishment for the team and the automation industry overall,” said Veo Robotics’ CEO and co-founder Patrick Sobalvarro. “After spending the last four years visiting factories, working with our manufacturing customers, and building FreeMove, we are thrilled to be able to offer the fully safety-certified system.” He added that FreeMove is “the only solution on the market today that implements dynamic, 3D speed and separation monitoring.”
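For readers unfamiliar with speed and separation monitoring, the idea is that the robot must slow or stop whenever a person comes closer than a computed protective distance. The sketch below uses a simplified, constant-speed form of the separation-distance calculation described in ISO/TS 15066. It is not Veo's implementation, and every parameter value shown is illustrative rather than taken from any product.

```python
def protective_separation_distance(v_human, v_robot, t_reaction, t_stop,
                                   stop_distance, intrusion=0.0,
                                   sensor_uncertainty=0.0, robot_uncertainty=0.0):
    """Simplified protective separation distance in meters.

    Speeds are in m/s and times in seconds. All inputs are assumptions
    supplied by the caller; none come from a real robot or sensor.
    """
    # Distance the person can cover while the system reacts and the robot stops.
    human_travel = v_human * (t_reaction + t_stop)
    # Distance the robot covers before it starts braking.
    robot_travel = v_robot * t_reaction
    return (human_travel + robot_travel + stop_distance
            + intrusion + sensor_uncertainty + robot_uncertainty)


def must_stop(current_separation, required_separation):
    """Return True when the person is closer than the required distance."""
    return current_separation < required_separation


required = protective_separation_distance(
    v_human=1.6, v_robot=1.0, t_reaction=0.1, t_stop=0.3, stop_distance=0.2
)
print(f"Required separation: {required:.2f} m")
print("Stop robot:", must_stop(0.8, required))
```

A dynamic system would recompute this distance continuously as the measured positions and speeds of the person and the robot change.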

In related news, Atlanta-based Factory Automation Systems (FAS) has joined Veo’s Certified Systems Integrator program. FAS will include FreeMove as part of its safety offering to customers.

Mark Ligler, vice president of FAS, said, “Veo Robotics’ FreeMove is a first-of-its-kind safeguarding system that fills a need in the industrial robotics industry.”

Palletizing application using FreeMove and an ABB robot. Operator load station application using the FreeMove system and a Fanuc robot.

Dell’s Edge Computing Strategy to Complement the Cloud

By David Miller

Contributing Writer, Automation World

The benefits of the industrial cloud, which grants users scalability, low-cost access to machine learning algorithms, and a single source of truth for their data, have become well known. However, edge computing—which is not opposed to, but works in tandem with the cloud—is also growing in importance. In fact, Gartner predicts that more than 50% of enterprise-generated data will be created and processed outside of data centers or the cloud by 2022.

Edge computing refers to systems that push intelligence, processing power, and communication capabilities as close to the source of data creation as possible. The benefits of this are myriad: data coming out of plants can be aggregated and filtered via edge modules prior to being sent to the cloud to conserve bandwidth; sophisticated analytics trained on cloud-based machine learning models can be delivered to the edge and applied in real-time; external data such as weather conditions, supply chain information, or real-time pricing can be used as a source of I/O for legacy controllers that lack cloud connectivity capabilities; and communication between multiple clouds can be facilitated.
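As a rough illustration of the first benefit listed above (aggregating and filtering plant data at the edge before it is sent to the cloud), the sketch below collapses a window of raw sensor readings into one summary record. The field names, sample values, and alert threshold are hypothetical and are not drawn from any Dell product.

```python
from statistics import mean


def summarize_window(readings, alert_threshold):
    """Collapse raw readings from one time window into a single summary record.

    `readings` is a list of floats from one sensor; `alert_threshold` is a
    hypothetical limit above which a reading is worth forwarding as-is.
    """
    if not readings:
        return None  # nothing to send for an empty window
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Keep only out-of-range values so the cloud still sees exceptions.
        "alerts": [r for r in readings if r > alert_threshold],
    }


window = [70.1, 70.4, 69.8, 85.2, 70.0]
summary = summarize_window(window, alert_threshold=80.0)
print(summary)
```

Sending one summary per window instead of every raw reading is what conserves bandwidth; the trade-off is that the cloud no longer sees the individual in-range values.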

Focusing on these edge computing advantages, Dell Technologies has released two new edge products:

• An updated version of its Dell EMC Streaming Data Platform, which can ingest real-time data from an array of industrial internet of things (IIoT) connected devices and use it to perform rapid analytics on-premises while also passing historical data onward to other higher-level systems such as the cloud for long-term storage; and

• The Dell Technologies Manufacturing Edge Reference Architecture, offered in partnership with PTC, and which enables application virtualization from numerous cloud systems. In other words, machine learning models trained on large, aggregated datasets from various cloud vendors can be delivered to the edge for low-latency application and updated in iterations.

Both technologies are integrated with Dell’s Apex Private Cloud, which offers an edge framework as-a-service, meaning companies can side-step upfront costs and only pay for what they use.

“The edge is quickly rivalling data centers and public clouds as the location where organizations are gaining valuable insights,” said Jeff Boudreau, president and general manager of Dell’s Infrastructure Solutions Group. “By putting compute, storage, and analytics where data is created, we can deliver those data insights in real time and create new opportunities for businesses.”

By Sean Riley, Senior Director, Media and Industry Communications, PMMI

Advancements in automation, both machine and software, are moving manufacturing toward a smarter factory. With this expansion comes such challenges as determining return on investment and finding skilled labor and the internal resources to assess automation needs, according to the “Automation Timeline: The Drive Toward 4.0 Connectivity in Packaging and Processing” infographic and white paper from PMMI, The Association for Packaging and Processing Technologies. See this infographic at awgo.to/1204.

Many consumer packaged goods (CPG) companies rely on OEM and technology provider partners for troubleshooting, maintenance training, design and modification, installation and start-up, staff training, and overall expertise. As this automation timeline advances, PACK EXPO Las Vegas and Healthcare Packaging EXPO 2021 (Sept. 27-29, Las Vegas Convention Center) arrives at a critical time when CPGs and OEMs need to foster strong partnerships to overcome automation challenges, implement low maintenance design, and improve the reliability of packaging and processing machines.

Current automation levels in the packaging and processing industries are at 64% for automated equipment and 21% for semi-automated. The white paper associated with the infographic shows that, although COVID-19 impacted automation plans, manufacturers recognize that they can improve operations and the broader enterprise by expanding automation strategies and components.

Some of the tools in place driving the future of automation include the Industrial Internet of Things (IIoT), device integration as more machines feature data acquisition capabilities, and the expansion of robotics for secondary packaging and palletizing. Additional drivers include incorporating artificial intelligence and predictive analysis to bolster maintenance.

As manufacturers continue to adopt greater levels of technology and connectivity, a robust cybersecurity strategy is essential. Trends such as remote access and IIoT connectivity make manufacturing more efficient, but they also create new points of vulnerability that bad actors can exploit, as highlighted in PMMI’s 2021 Cybersecurity: Assess Your Risk white paper (awgo.to/1205).

Solutions to improve cybersecurity, combat cyberattacks, navigate the automation timeline, and safely add connectivity to operations will be on display at PACK EXPO Las Vegas and Healthcare Packaging EXPO 2021. To register and learn more about the packaging solutions that will be on display, the educational and networking opportunities, and the virtual component of the show, visit packexpolasvegas.com.


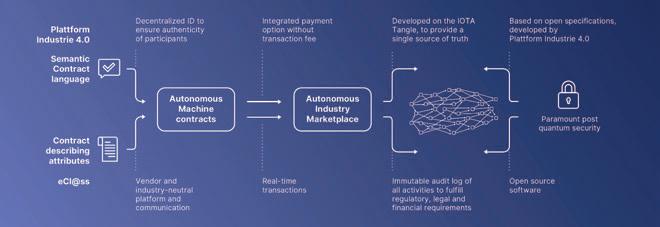

The convergence of technologies, especially Internet of Things, artificial intelligence, and blockchain, is setting the stage for a new “machine economy” where self-sufficient systems autonomously execute transactions.

By Stephanie Neil, Senior Editor, Automation World

Imagine the day when the packaging machine on the plant floor automatically detects that it needs more materials and it alerts the supplier—ordering and paying for product and scheduling delivery, all without relying upon any human intervention. Or a factory floor where machines self-organize and self-optimize themselves to heighten productivity, reduce waste, and increase product quality.

This is the day that the Industry 4.0 movement is working toward. In this future state of manufacturing, data and services are shared beyond the factory walls in a global inter-company communication infrastructure and payment network.

Some of it exists now. “Communication amongst machines to route around failures is already happening,” said Stephen Mellor, chief technology officer of the Industrial Internet Consortium (IIC) and executive vice president of the Object Management Group (OMG). “In fact, it’s what the internet was invented to do in respect to network nodes. In a factory, say, data would be gathered by nodes in the edge and they would make decisions on how to re-route around the failed machine.”

So while we’ve not completely entered the age of the machine economy, defined as a network of smart, connected, and self-sufficient machines that are economically independent and can autonomously execute transactions within a market with little to no human intervention, we are getting close. The building blocks to create the factory of the future are here, including the Internet of Things (IoT), artificial intelligence (AI), and blockchain. This trifecta of technology has the potential to disrupt the industrial space, but it needs to be connected with a few more things, such as digital twin technology, mobile robots, a standardized way for machines to communicate, and smart services, like sharing machine capacity in a distributed ecosystem.

More importantly, there needs to be a framework to enable this ubiquitous interconnectivity. While technology companies build the machine-to-machine applications, there are industry efforts underway focused on building the underlying architecture and ecosystem of partners.

A framework for machine autonomy

IIC (a program from the OMG) is a global not-for-profit partnership of industry, government, and academia that has been working on several architectures including the Industrial Internet of Things Connectivity Framework (IICF), the Industrial Internet Reference Architecture (IIRA), the Business Strategy and Innovation Framework (BSIF), and the Industrial Internet Security Framework (IISF).

The IICF defines a reference architecture for opening up data otherwise locked in a plethora of domain-specific connectivity technologies used in IIoT systems, by using gateways to one of a few core connectivity standards that can provide syntactic interoperability without compromising the fidelity of the functional and non-functional aspects of the domain-specific technology.

The IIRA, a standards-based architectural template and methodology, enables Industrial Internet of Things (IIoT) system architects to design their own systems based on a common framework and concept. It addresses the need for a common architecture framework to develop interoperable IIoT systems for diverse applications across a broad spectrum of industrial verticals in the public and private sectors.

The BSIF provides a high-level identification and analysis of issues that any enterprise will need to address to capitalize on the opportunities emerging from this current revolution that is the IIoT.

And, addressing the cybersecurity challenge is critical to the success of the IIoT, Industry 4.0, and the Industrial Internet revolution. To that end, IIC members have developed IISF, a common security framework and an approach to assess cybersecurity in IIoT systems. It is the most in-depth cross-industry-focused security framework comprising expert vision, experience, and security best practices, according to IIC.

These are just a few of the IIC research and development projects that will move the industry forward.

“At seven years old, we have published several seminal documents that establish the machine economy landscape,” said Mellor. “We began several years ago with testbeds. As the name suggests, these were testing technologies and business models. We are now focused on deployments in industry that allow the target company to digitally transform their business.”

For example, a testbed in Cork, Ireland, called the International Future Industrial Internet Testbed (INFINITE), is developing software-defined infrastructures using big data that makes it possible for multiple virtual domains to securely run via one physical network. In phase one of the testbed,

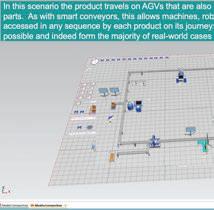

In this scenario the product travels on AGVs that are also used to replenish workcells with parts. As with smart conveyors, this allows machines, robots, and human operators to be accessed in any sequence by each product on its journey. Hybrid scenarios are also possible and indeed form the majority of real-world cases

Source: Siemens

three geographically dispersed data centers are interconnected into a reconfigured Dell network. In phase two, INFINITE is applied to a use case called “Bluelight,” which allows ambulances to securely connect to a hospital’s system and relay information while in route, so hospital staff are prepared to take over the care of the patient once the ambulance arrives.

The ultimate outcome is to use smart data to improve the emergency services. According to the INFINITE testbed notes: “Consider the scenario where an emergency service vehicle is dispatched to an incident. The response time is critical. What if the real-time GPS data generated by the emergency service vehicle can be combined with other real-time data from diverse sources such as: current traffic levels for all routes to the incident, location of roadwork, diversions, and road closures. By combining and analyzing these diverse raw datasets in real-time in order to provide valuable and intelligent route planning and insights for the emergency service vehicle, response times will improve, leading to better life enhancing outcomes.”

This same concept could be applied to the plant floor. “Fleets of machines would gather data that is sent to a data center that can be compared and contrasted and then change the operating parameters of non-optimal machines,” Mellor said.

Machines will need to have their “wallets,” which is something that the IOTA Foundation has been working on since 2017, when the nonprofit initiative was founded. This international consortium of European universities and technologists came together to create a protocol layer for IoT that defines how devices transact with each other using trusted data across the technology stack in a virtual industry marketplace.

Today, while the premise is the same, the execution is different. “The vision of IOTA is the same for the machine economy. To provide a public protocol or better public infrastructure of automation and machines is still important. But we’ve refocused IOTA more to infrastructure,” said Holger Köther, director of partner management at IOTA Foundation, noting the organization has spent the last year and a half rewriting the IOTA technology stack (node, libraries, wallet) from scratch while keeping the IOTA vision and current market requirements in mind.

In March, IOTA announced the beta launch of its Firefly wallet, giving cryptocurrency wallets a new benchmark in security and usability. At the core of Firefly are two new, open-source libraries, one for wallet application development and the other for security. It is also lightweight and uses the Edwards-curve Digital Signature Algorithm (EdDSA), which is natively supported by most IoT devices, Köther said.

In addition, a reengineered distributed ledger technology (DLT), called Tangle, enables secure micropayments between machines. According to IOTA, Tangle is open, feeless, and scalable; it is designed to support frictionless data transfer; and it is not based on blockchain, which has cost and scalability limitations. IOTA’s goal is to build an entire ecosystem for machine communication that can apply to different industries.

“The overarching story is that IOTA is developer-friendly ... and provides a DLT-based system that is resilient and scalable,” Köther said. In addition, IOTA is working with the Object Management Group to certify the IOTA protocol and standardize how it works.

As the architecture and standards get sorted out, others are developing areas that will be an important part of the equation, like the digital twin. “To have the ability to send requests which can be fulfilled basically means having a digital twin of that product that has been designed, validated, and the part program processed for a particular machine. That is an important part of the equation,” said Alastair Orchard, vice president of Siemens Digital Enterprise.

To achieve the Industry 4.0 dream where cyber-physical machines can understand their own capabilities, negotiate with other machines, and design any product, fully digitized manufacturing is required. And, of course, not every machine is intelligent.

In discrete manufacturing, the bill of process may be published to an execution engine that matches equipment capabilities to each operation and guides the product on its unique journey through the factory.

Source: Siemens

“We are working on and deploying a transitional technology where we take the manufacturing blueprint called the bill of process, which is like a recipe that contains all of the information needed to manufacture something, including the materials, the tolerances, the set points, and part programs,” Orchard said. “If [the machines] are not intelligent, we do have the possibility with our edge technology to create an intelligent wrapper around them, and then use the manufacturing blueprint to broker conversations between machine wrappers.”

A digital twin of the machine knows where all the product parts are. This makes it possible to deploy a system where the bill of process is executed and distributed into edge boxes, and products are driven through the factory, communicating with brownfield machines through intelligent wrappers. “Key to this is logistics, either a smart conveyor or AGV to move product between machines. In this way we actually are able to kickstart fully autonomous and flexible manufacturing even though the machines themselves aren’t this super cyber-physical intelligent entity that we’ve been dreaming of.”
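The matching step described here, in which an execution engine pairs each operation in the bill of process with equipment that can perform it, can be sketched in a few lines. This is a minimal illustration, not Siemens' software: the operation names, machine names, and data structures are all hypothetical.

```python
def match_operations(bill_of_process, machines):
    """Map each operation to the machines whose capabilities cover it.

    `bill_of_process` is an ordered list of operation names, and `machines`
    maps a machine name to the set of operations it can perform. Both are
    simplified, hypothetical stand-ins for a real manufacturing blueprint.
    """
    plan = {}
    for operation in bill_of_process:
        candidates = sorted(
            name for name, capabilities in machines.items()
            if operation in capabilities
        )
        if not candidates:
            raise ValueError(f"no machine can perform {operation!r}")
        plan[operation] = candidates
    return plan


bill_of_process = ["mill", "drill", "inspect"]
machines = {
    "cnc-1": {"mill", "drill"},
    "cnc-2": {"mill"},
    "cmm-1": {"inspect"},
}
plan = match_operations(bill_of_process, machines)
print(plan)
```

In a real plant, a scheduler would then choose among the candidates for each operation based on availability, cost, and the logistics needed to move the product between machines.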

The ability to flexibly move things and capture that data across the supply chain is another area to tackle, according to IBM. “In today’s supply chain everything is siloed,” said Vijay Pandierajan, director of operations at IBM Sterling, noting that there are so many steps in the process that it’s hard to reconcile everything—especially if a shipment didn’t arrive. “We have business transaction intelligence that maps out all of the documents you are supposed to get and sequence it.”

Business transaction intelligence, part of IBM’s Supply Chain Business Network, enables companies to garner deeper insights into supply chain data to help them better manage, for example, order-to-cash and purchase-to-pay interactions. The technology does this, in part, using machine learning to identify volume, velocity, and value-pattern anomalies in supply chain documents and transactions. “It’s a simple example of AI,” Pandierajan said. “AI won’t [solve] everything, but it will make you more capable as a human.”
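IBM does not describe its models in detail, so the following is only a generic sketch of one simple way to flag a volume anomaly: compare the latest document count with recent history using a z-score. The daily counts and the cutoff of 3 are invented for illustration.

```python
from statistics import mean, stdev


def is_volume_anomaly(history, today, z_threshold=3.0):
    """Return True when today's count deviates strongly from the history.

    Uses a plain z-score; `z_threshold` is an arbitrary illustrative cutoff.
    """
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least 2 points
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


daily_invoice_counts = [120, 118, 125, 122, 119, 121, 123]
print(is_volume_anomaly(daily_invoice_counts, 124))
print(is_volume_anomaly(daily_invoice_counts, 40))
```

A production system would likely account for seasonality and trends, and would look at velocity and value patterns as well as volume.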

Culture and capacity

Indeed, ultimately what the machine economy is trying to accomplish is to create a better human experience. Yet, people are the biggest bottleneck to the autonomous marketplace.

“The biggest obstacle is culture,” said IIC’s Mellor. “The average age of the industrial plant is 19 years. These are huge investments that last for decades. The organizations that run these facilities are very cautious. Even a 0.5% chance of failure can cost millions of dollars.”

But we do have the technology, and the critical steps to move toward the machine economy are happening now.

You can see it at Siemens, where a proof-of-concept M2M marketplace is underway with the goal of machines selling their own capacity. “There are so many areas of experimentation in our German machining factories, where each machine understands its own costs, its own schedule, its own maintenance, and communicates any windows of opportunity it has on a permission blockchain which others can subscribe to and request unused machine uptime capacity to machine their parts,” Orchard said.

It is an early example of how machines, using IoT, AI, and blockchain, may completely disrupt the manufacturing business model in the factory of the future.

SUPPLY CHAIN OPTIMIZATION AND THE FUTURE OF INDUSTRY

Beset by cost and availability pressures on all sides, more companies are turning to sophisticated supply chain technologies to navigate the ever-changing production landscape.

By David Miller, Senior Technical Writer, Automation World

After March’s Suez Canal blockage and last year’s bout of empty store shelves during the fallout from the COVID-19 pandemic, the integrity of supply chains is on everyone’s mind. Yet even before these recent debacles, similar disruptions had begun to creep into view. In many ways, current trends merely represent the result of long-gestating issues.

“I believe it’s nothing short of historic the amount of pressure that we’re dealing with. It’s everything from soaring freight rates, capacity shortages in containers, driver shortages, imbalanced carrier networks, warehouse labor and space being in short supply, and then natural disasters like COVID-19 or even environmental events,” said Jonathan Foster, principal consultant at Proxima, a procurement consultancy group. “2020 was the year of disruption. It’s coming from everywhere, and the amount of pressure we’re seeing is unprecedented.”

To get a better understanding of how these issues are impacting the Automation World audience, we conducted a survey to shed light on how industrial companies are using supply chain automation technologies to adapt to these constraints.

Overall adoption

End-to-end visibility aimed at giving companies more granular insight into warehouse inventories and plant material capacities is becoming increasingly common in supply chain management.

According to the survey, only 44% of respondents are currently using some form of supply chain software. When asked “If your facility does not use supply chain software, is your company planning to add it?” 15% responded “Yes, in the next year,” 46% responded “In the next few years,” and the remaining 39% indicated that it was not in their near-term plan at all.
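The follow-up percentages apply only to respondents who do not use supply chain software. Assuming that group is the 56% implied by the 44% adoption figure (an inference, since the survey results do not state the base explicitly), the answers can be restated as shares of all respondents:

```python
# Shares reported in the survey.
using_software = 0.44
# Assumed base for the follow-up question: everyone not using the software.
not_using = 1 - using_software

follow_up = {
    "Yes, in the next year": 0.15,
    "In the next few years": 0.46,
    "Not in near-term plan": 0.39,
}

# Restate each follow-up answer as a share of ALL respondents.
share_of_all = {answer: not_using * share for answer, share in follow_up.items()}

for answer, share in share_of_all.items():
    print(f"{answer}: {share:.1%} of all respondents")
```

Under that assumption, roughly 8% of all respondents plan to add supply chain software within a year, about 26% within a few years, and about 22% have no near-term plan to do so.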

While supply chain software suppliers expressed some surprise that, in such a globalized business landscape, more than half of respondents still have not adopted advanced supply chain software products, they ultimately conceded that it is in line with their experience working with clients.

To have 56% not using supply chain software “does seem like a big number,” says Shaun Phillips, director of product management at QAD DynaSys (QAD’s supply chain software). “But frankly, if I did a survey of our entire installed base and asked them which system our solution replaced, I would expect that close to 60% of them would say Microsoft Excel. Even among the customers we’ve sold to in the last month, a large portion of them are still at that stage.”

The reason for this is that supply chain automation largely subsists on the collection of data from Industrial Internet of Things (IIoT)-enabled sensors and devices. As such, companies that are still lagging in their overall digital transformation may not possess the infrastructure necessary to fully leverage a more sophisticated supply chain software product. Beyond that, while supply chain software that can automate data collection and communication or allow end-users to engage in more precise demand planning may allow them to optimize their production workflow, older software systems can still satisfy their fundamental needs when push comes to shove.

Still, some industry sectors have been faster to evolve than others. According to Phillips, manufacturers of consumer electronics have been strong adopters of supply chain software because their notoriously slim profit margins require them to cut costs any way they can. By better matching supply to demand, they can maximize the amount of product moved while limiting excess stock. As a result, they can improve their time-to-market for shorter, more varied product runs.

In addition, highly regulated fields, such as food and beverage and pharmaceutical production, have been among QAD’s most prominent clients, Phillips says. For these companies, while reducing costs and improving lead times remain important goals, their primary emphasis in revamping their supply chain systems is increasing quality and traceability. In these cases, better software can assist in automating the collection and communication of plant-floor data to the various participants in a given supply chain, allowing them to ensure that products which may have a finite shelf-life reach their intended destination on time while also meeting stringent quality requirements.

Integration trends

To better understand why some companies are shifting toward more sophisticated supply chain planning, it’s important to understand the two often-contradictory goals they are tasked with achieving. On the one hand, shrinking margins require them to run their operations more efficiently. This means that they must limit costs, which often entails adopting just-in-time models to minimize freight and inventory expenses. However, increasingly prevalent disruptions, whether they be natural disasters or geopolitical shake-ups that limit trade, render this model challenging, as they require companies to hold more safety stocks to increase their resiliency.
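To make this trade-off concrete, one textbook way to size safety stock is to multiply a service-level z-score by the standard deviation of daily demand and the square root of the lead time. This formula is a common approximation and is not taken from the survey or from any supplier quoted here; it assumes normally distributed demand and a fixed lead time, and the numbers below are purely illustrative.

```python
from math import sqrt
from statistics import NormalDist


def safety_stock(service_level, daily_demand_std, lead_time_days):
    """Textbook safety stock: z * sigma_d * sqrt(L).

    Assumes normally distributed daily demand and a fixed lead time.
    """
    z = NormalDist().inv_cdf(service_level)  # z-score for the target service level
    return z * daily_demand_std * sqrt(lead_time_days)


# Illustrative inputs: demand varies by 20 units/day with a 4-day lead time.
lean = safety_stock(0.90, daily_demand_std=20, lead_time_days=4)
resilient = safety_stock(0.99, daily_demand_std=20, lead_time_days=4)
print(f"90% service level: {lean:.0f} units")
print(f"99% service level: {resilient:.0f} units")
```

Raising the target service level or lengthening the lead time both increase the buffer, which is the cost that just-in-time models try to avoid and that disruption-prone supply chains may have to accept.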

As a result, software that grants users predictive capabilities or allows them to engage in complex, parallel planning for multiple

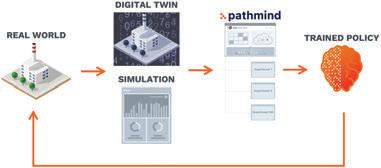

Pathmind allows end-users to leverage an additional layer of artifi cial intelligence that can be used in conjunction with other software products via a Rest application programming interface.

“what-if” scenarios is expected to continue gaining traction in the future, says Chris Nicholson, CEO at Pathmind, a supplier of artificial intelligence (AI) simulation models for supply chain data. These software products, driven by end-to-end data visibility and machine learning algorithms, allow end-users to ensure that they are prepared for a variety of potential scenarios while still limiting their freight and inventory costs as much as possible.

The growing need for end-to-end visibility is particularly relevant for plant-level operators and systems integrators because it often requires more integration between supply chain software and manufacturing execution systems (MES) to extract granular data such as equipment health and material availability that can help to more accurately gauge a facility’s true capacity. Collecting and communicating this data to supply chain partners in real time can assist them in more effectively coordinating their own procurement and inventory activities.

“A normal enterprise resource planning (ERP) solution that is integrated with supply chain capabilities might take in orders and then create requisitions or releases for customers. Then, maybe they could also schedule things on the shop floor taking into consideration things like material availability,” says Andrew Robling, senior product manager at Epicor, an ERP, MES, and supply chain software provider. “When it comes to getting data off the floor, as far as what was actually produced, that tends to be more of an MES task. The MES has integration to machines, so it can automate that collection of data.”

This uptick in MES integration was borne out in our survey, which found that 68% of respondents had connected their supply chain software to their MES in some way. Moreover, while 61% of respondents indicated that this integration was via ERP, the remainder specified that the integration was directly to their MES.

Evolving capabilities

Going forward, supply chain planners may not only be reaching for more data inside plants, but outside of them as well. According to Phillips, increasingly inventive uses of big data to engage in more precise demand planning will continue to become more commonplace. As an example, he notes the case of a company that manufactures a consumer good requiring the import of raw materials. This company found that by purchasing public domain customs data on the quantity of their primary material inputs being brought into the U.S., they were able to make deductions about the activity of competitors in their geographic region. Similar cases also abound in industries that produce building materials, where information from banks pertaining to the issuance of mortgage loans can be used to predict demand based on new construction starts.

Because this type of demand forecasting relies on large quantities of rapidly changing data from disparate sources, it’s likely that supply chain software products offering it will be cloud-based. Moreover, having more data in the cloud is likely to fuel synergistic growth with another trend sweeping over the supply chain software market: machine learning.

According to Nicholson, machine learning allows companies to make use of data in a way human analysts simply can’t. For instance, one company using Pathmind’s software was able to take order data for multiple products coming from its ERP and group them together based on material commonalities. After doing this, orders on similar batches of products could be grouped and routed through CNC machines together to minimize deliveries between them and reduce material wastage. The same sort of large-scale coordination could be applied to delivery trucks moving products across a distribution network, Nicholson notes.
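
The batching idea described above can be illustrated with a minimal sketch. This is a plain rule-based grouping, not machine learning and not Pathmind's method; the order records, field names, and material labels are hypothetical and stand in for whatever an ERP export would actually contain.

```python
from collections import defaultdict

# Hypothetical order records; field names are illustrative, not from any ERP.
orders = [
    {"order_id": "A1", "product": "bracket", "material": "aluminum-6061"},
    {"order_id": "A2", "product": "housing", "material": "steel-1018"},
    {"order_id": "A3", "product": "plate", "material": "aluminum-6061"},
    {"order_id": "A4", "product": "shaft", "material": "steel-1018"},
    {"order_id": "A5", "product": "cover", "material": "abs-plastic"},
]


def group_by_material(orders):
    """Return {material: [order_id, ...]}, keeping orders in input sequence."""
    batches = defaultdict(list)
    for order in orders:
        batches[order["material"]].append(order["order_id"])
    return dict(batches)


batches = group_by_material(orders)
for material, order_ids in batches.items():
    # Each batch could be routed through the same machine setup together.
    print(material, order_ids)
```

A learned approach would go further than this, for example by weighing due dates and machine availability when deciding which batches to run first, but the grouping step is the starting point.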

“AI offers expansive visibility. It means you can see more than any individual actor or even isolated system could see because it offers real-time visibility across so many different machines and systems,” he says. “It’s like a control tower. Imagine trying to run an airport without a control tower by telling all of the individual planes to land as safely as they can.”

Ultimately, even with trends such as reshoring on the rise, global supply chains will still play a major role in manufacturing. As such, the manufacturing industries will need to be prepared for a world in which complex supply chain planning plays a greater role in their overall operations. According to Phillips, many companies are having what he calls an “Amazon moment.”

“Amazon was the first to come up with the idea of using lead times to better serve market segments. If you order something, you can get it the next day or even later in the afternoon. They made supply chain capability into a market strategy,” he says. “What supply chain software can bring to a company is changing. There was a time when it was seen as a necessary evil that was just a cost center needed to synchronize industry flows, but now it’s becoming a competitive differentiator. Now, you can actually use your supply chain to reduce costs, improve service levels, reduce wastage, and guide where you grow and defend your market.”

Read more about AI for Supply Chain Optimization at awgo.to/1197.

A new SCADA system gives Pluspetrol greater insight into its remote operations.

Using SCADA to Monitor Jungle Field Sites

Pluspetrol replaced its aging SCADA systems with Inductive Automation’s Ignition platform to track multiple geographically dispersed sites in the Ecuadorian jungle.

By Jeanne Schweder, Contributing Writer, Automation World

A jungle isn’t a place you normally associate with modern automation technology, but for Pluspetrol it was the best way to solve a long-standing problem: How to easily access process information generated by five field sites in the Ecuadorian jungle from its headquarters in Quito.

Pluspetrol is a private, independent gas and oil supplier with a presence in Angola, Argentina, Bolivia, Colombia, Ecuador, the United States, the Netherlands, Peru, Suriname, and Uruguay. By replacing its aging SCADA workstations with Inductive Automation’s Ignition, a web-based control and monitoring platform featuring tools for building HMI, SCADA, and Industrial Internet of Things (IIoT) systems, the company was able to make a 20-year jump into the future in just days.

The five geographically separated sites in Ecuador have a combined total of 18 workstations, each with its own SCADA system, that have been running for 20 years in a stand-alone architecture. This system forced each control station to establish an individual connection to the controllers, causing traffic and data loss on the network, which would repeatedly saturate the controllers due to the number of responses they had to generate for each station.

Maintenance and updating of the SCADA applications in the stations was uncontrolled, leaving outdated, nonstandard applications and unreliable data that resulted in errors. Process information could only be accessed by personnel in the field, who had to generate reports in Excel, limiting its use for administrative and process purposes.

System migration requirements

est SCADA systems, the company required Ignition to work in parallel with the old SCADA applications for a month. It also required that there be no downtime during the move to the new platform. As part of this process, system integrator Automation Solutions Ecuador (ASE) had three days to bring Ignition to full operation while migrating 10 core PLCs with 2,000 I/O points in the main facilities and at the well sites.

During the three-day migration, controllers were upgraded without altering the commands and animations of the application. The project involved 4,000 tags, 300 screens and 10,000 alarms, as well as 25 clients, a number that continues to grow.

The tags created for the new controllers were saved in a database and their addresses were updated by means of scripting. Then, the old control stations were replaced by Ignition control stations and the scan classes were adjusted for each connection.
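
As a rough illustration of what updating tag addresses by script can look like, here is a minimal sketch. It is not ASE's actual script and does not use the Ignition scripting API; the tag names, the bracketed device-prefix address format, and the device mapping are all assumptions made for the example.

```python
# Hypothetical tag records, as they might be exported from a tag database.
tags = [
    {"name": "Well01/Pressure", "address": "[OldPLC_A]N7:10"},
    {"name": "Well01/Flow", "address": "[OldPLC_A]F8:2"},
    {"name": "Tank03/Level", "address": "[OldPLC_B]N7:44"},
]

# Assumed mapping from old controller connection names to the new ones.
device_map = {"OldPLC_A": "NewPLC_A", "OldPLC_B": "NewPLC_B"}


def remap_address(address, device_map):
    """Swap the bracketed device prefix and leave the rest of the address alone."""
    if not address.startswith("["):
        return address  # no device prefix to rewrite
    device, closing, rest = address[1:].partition("]")
    if not closing:
        return address  # malformed prefix; leave untouched
    return "[" + device_map.get(device, device) + "]" + rest


# Build new records instead of mutating the originals, so the old
# addresses stay available for comparison during a parallel run.
updated = [dict(tag, address=remap_address(tag["address"], device_map)) for tag in tags]

for tag in updated:
    print(tag["name"], tag["address"])
```

Keeping the original records untouched fits a migration in which the old and new systems run side by side for a period.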

The new control architecture consists of a client-server SCADA system, with two redundant Ignition gateways installed in each main location, and three stand-alone satellite gateways at each remote site. This gateway network configuration allows information to be transmitted from the different locations to the two main redundant servers where the application is running.

Data sharing and access control

To share process information with all areas of the company, the main servers were configured within a DMZ that links the control network with the administrative network, providing access to system information from any point of the company's network, both nationally and internationally.

Applications are accessible throughout the company while maintaining proper levels of control and access. The access levels are currently controlled through active directory integration. This also enabled ASE to provide performance indicators through levels of access and control to the managerial board.

If communication between locations is ever interrupted, no information will be lost thanks to the store-and-forward configuration in each server, which keeps the data reliable and accurate.
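
The general store-and-forward pattern can be sketched in a few lines. This is a simplified, in-memory illustration of the technique, not Ignition's implementation; the class name, the `send` callable, and the sample records are hypothetical, and a production system would persist the buffer to disk so that data survives a restart.

```python
from collections import deque


class StoreAndForward:
    """Buffer records locally and deliver them in order once the link is up."""

    def __init__(self, send):
        self.send = send       # callable that raises ConnectionError on failure
        self.buffer = deque()  # records awaiting delivery, oldest first

    def record(self, item):
        self.buffer.append(item)
        self.flush()

    def flush(self):
        while self.buffer:
            try:
                self.send(self.buffer[0])
            except ConnectionError:
                return False   # link is down; keep everything buffered
            self.buffer.popleft()  # discard only after a successful send
        return True


# Simulated link to the central server (an assumption for the demo).
link = {"up": False}
received = []


def send(item):
    if not link["up"]:
        raise ConnectionError("link down")
    received.append(item)


saf = StoreAndForward(send)
saf.record({"tag": "Well01/Pressure", "value": 101.3})  # buffered, link is down
saf.record({"tag": "Well01/Pressure", "value": 101.9})  # buffered, link is down
link["up"] = True
saf.flush()  # both records are delivered in their original order
print(received)
```

Removing a record from the buffer only after the send succeeds is what prevents data loss during an outage.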

Application development and maintenance are also more organized and efficient. Each business unit can request modification of their apps individually, and these changes are published immediately across the entire system.

This is the first time in Ecuador that an Ignition platform has been used to control and monitor an entire oil extraction process, its transport and delivery to the national pipeline, as well as generate reports to local and corporate levels. The system also reports data to Ecuador’s national hydrocarbon regulation and control agency, ARCH, to achieve transparent connectivity with different platforms from different vendors.

Pluspetrol’s primary goal for the control migration was to convert process data into useful information for decision-making in the areas of operation, development, and management, at national and international levels of both the company and the Ecuadorian regulatory agents. Consequently, historian displays were adapted to allow searching for detailed information in the database.

With the migration of the SCADA system, the old control stations’ operating systems and hardware were updated to help reduce time lost in the maintenance of obsolete devices. Now that Pluspetrol’s SCADA is no longer a black box, all of the workstation and server hardware and software are managed by the IT department—bringing IT and OT closer together than ever.

Additional business needs addressed by this update include the first real-time maintenance KPIs (key performance indicators) dashboard, as well as the first reservoir and power generation process monitoring apps for management.