
VFX TRENDS: WORKING REMOTELY

WORKING REMOTELY: HOME RULES FOR THE VFX INDUSTRY

By TREVOR HOGG

TOP: A weekly remote meeting being held by SideFX. (Image courtesy of SideFX)

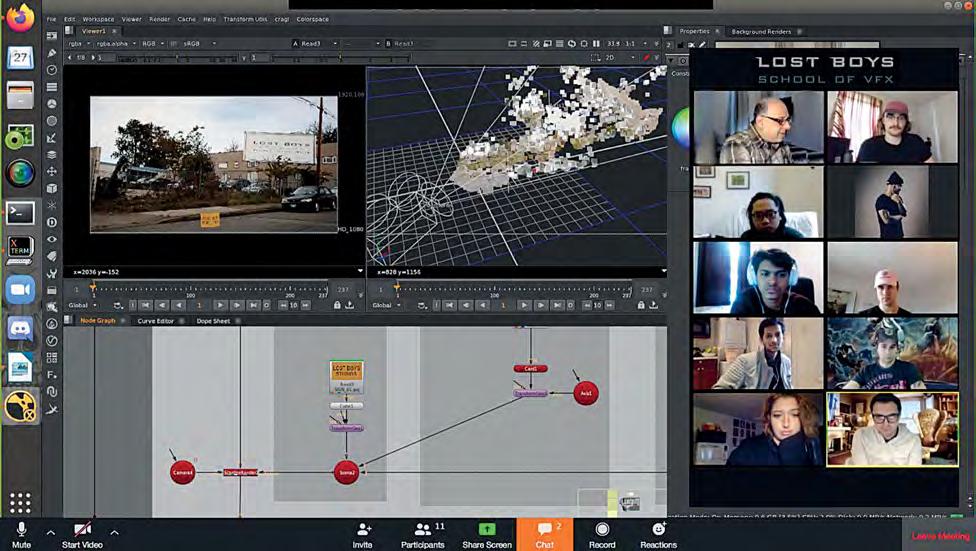

OPPOSITE TOP: Lost Boys Studios conducts a remote 3D tracking lecture for compositors in Montreal. (Image courtesy of Lost Boys Studios)

Working remotely is part of the regular routine for the visual effects industry; however, with the global lockdown caused by the coronavirus pandemic, the sheer number of individuals requiring offsite access has been unprecedented. Initially, studio concerns about security impeded the remote solution, prompting an online petition from 10,000 visual effects artists to the Motion Picture Association of America and a statement from the Visual Effects Society in support of allowing artists to work remotely. For expert insight into everything from the logistical challenges of relying on local Internet providers to balancing domestic and professional lives within the same space, VFX Voice “traveled” to New Zealand, the U.K., Canada and the U.S. via video conferencing, phone and email to learn about the short- and long-term impact of relying entirely on a remote workflow.

Decorating his basement office in Atlanta with self-made woodcarvings is Aldo Ruggiero, Visual Effects Supervisor at Crafty Apes. “It wouldn’t work so much if one person was remote and everyone else is in the office, but this strangely works well. We’re pushing 450 shots for a Netflix show. Nobody got furloughed in my office. Crafty Apes is a smart company. They do half movies and half television shows. The companies that are having the biggest trouble are the ones working in TV because it’s week by week. You shoot something, edit, and four weeks later you’re doing visual effects. We are using a system called Teradici and connecting through VPN. I do ask people to be available between 9 a.m. and 7 p.m., and to communicate. We video conference every day. My colleagues and I know more about each other’s lives than we ever did. It actually has become a more intimate type of work. It goes back to artists working by themselves. You miss not having the expertise of the people around you, and sharing ideas and opinions.”

His backyard in Los Angeles serves as the conference area for Cody Hernandez, Postvis Supervisor at Day for Nite. “I was on another show on set. I would get up in the morning, go into work, log into my computer, see what the notes are for the day from production, and go from there. It’s really the same thing here. I have an extra hour of sleep and then I start my same routine. At the end of work, instead of driving for an hour I can go for a walk or run with my family. The only trouble my wife and I have is keeping the dogs quiet when we’re in meetings. I give the dogs a bone and they’re good for the next couple of hours! If you have an artist in Spain who is an amazing animator, you can use him now as long as he has an Internet connection. It’s all going to work out in the artist’s favor.”

Chris Downs, Visual Effects Supervisor at DNEG, welcomed a new addition to his family in Vancouver. “Our second daughter was born in early March, and we’ve been home between maternity leave and lockdown since then. The biggest challenge is trying to entertain the four-year-old given that with the newborn we’re less mobile to begin with and the playgrounds are shut down. Our garage has been converted into an in-law suite, so I’m set up in there with my own workspace which is quite nice! Week one was an eye-opener with where things actually were with the Internet. We ended up upgrading our home Internet so I could review the final comps at an appropriate quality level and reasonable frame rate. We never had planned to be using the garage this frequently, so I had to add a Wi-Fi booster to make sure that we got it all the way out here.”

Residing in the English countryside and London are the Framestore trio of Jonathan Fawkner, Creative Director of Film; Fiona Walkinshaw, Global Managing Director of Film; and Alex Webster, Managing Director of Pre-Production. “From March 16, we had a third of our people already working from home for various reasons and everyone from March 23,” notes Walkinshaw. “It took about two weeks. For film, that was 1,600 people, but for the company as a whole it’s about 2,500. Our American offices in New York City and Los Angeles operated on Teradici anyway and are smaller, so it was easy for them to take their things and go home. For film, about 60% of people needed individual configurations or kits. Our systems teams were unbelievable and went into military mode. It was one of those instances in the horrible circumstances that we found ourselves in what was quite a positive thing. Everyone wanted to make it work.”

Webster was in the midst of establishing a new department when the lockdown occurred. “We had to push pause on the postvis projects that were shooting at that time. Simultaneously, we were delivering previs, character modeling and development, and lightweight virtual production in terms of virtual location scouting and camera sessions for other projects. We focused on getting those artists working remotely and provided them with Teradici in most instances. What complicated that is we had to get the vis and visual effects networks talking to each other for the first time. At the same time, we’re working in Unreal as well as Maya.”

Facility and remote workflows are quite similar. “I can drive the pictures and get full access to all of the dailies that we’re producing,” states Fawkner. “We use G Suite and have Google Meet. We have permanent meeting rooms which you can jump into. People are sticking much more to schedules because you’re not having to literally walk out of one meeting room and go around the building to another one. If I’m late to a meeting and we can’t start, then everyone else can carry on working. The rhythm of the day hasn’t changed at all. We are keeping to the same time slots that we had before going virtual. The interfacility communication has skyrocketed. I now talk to the other facilities around the world way more than I did before. It’s much more fluid and that has surprised me. It’s something we’ll want to keep going.”

Sequestered in Los Angeles with seven dogs is Sam Nicholson, CEO and Founder of Stargate Studios. “We’re seeing a huge upswing of interest in virtual production, which is predictable. It happened after 9/11. We built the whole virtual backlot and started to realize it was a lot cheaper to bring the location to the actors than the actors to the location. It could potentially replace 50% of the greenscreen work, if not more. But be careful if you can’t make up your mind in pre-production as to what you’re going to get on set. There is no alpha channel. We’re working on that. The Mandalorian took care of that by floating a greenscreen behind the people so it looks great, but you have to fix it in post.”

With support from the New Zealand government, Weta Digital was able to protect its employees and business. “We were able to shift 1,500 crew to ‘Work from Home’ status with minimal impact to our shot production capabilities,” remarks David Conley, Executive Visual Effects Producer. “We also benefited from some good fortune by having a strong slate of shows that were already in-house and from a bit of hustle on our side to secure additional work based on the strength of our response so far.” The next step is shifting the workforce back to the facility. “We’ve already moved our first group of about a dozen artists back in with proper social distancing and it has worked out well. We want to make sure artists feel comfortable returning and we are able to create a new environment that supports a mix of crew who are at home and others who are in the office.”

“We are reasonably fortunate to have a good internet infrastructure here in Wellington,” remarks Sean Walker, Sequence Visual Effects Supervisor at Weta Digital. “Fiber is available in most places, and the remote working software from Weta isn’t data-heavy. I’ve found that I can even conduct dailies with little preparation [not pre-downloading clips], and it does not negatively impact on our reviews. This is something I thought may affect our workflows, but hasn’t at all. Last-minute dailies additions are definitely still an occurrence. Having most of our communication through a single app [Microsoft Teams] has made keeping in touch very easy.” Shifting to a remote workflow occurred during the final weeks of delivery for Black Widow. “Amazingly, we only lost about half a day to a full day for the transition, and in a miraculous fashion we had already made up for the lag by the end of the week.”

OPPOSITE TOP: Seven weeks were left in the production of Soul when the entire workforce at Pixar Animation Studios had to switch to a remote workflow. (Image courtesy of Pixar Animation Studios)

OPPOSITE BOTTOM: Some shots for Wonder Woman 1984 had to be completed remotely. (Image copyright © 2020 Warner Bros. Entertainment Inc.)

TOP: Connected was completed remotely during the quarantine. The animated adventures of the Mitchell Family feature the voices of Maya Rudolph, Abbi Jacobson, Michael Rianda and Danny McBride. (Image courtesy of Columbia Pictures and Sony Pictures Animation)

BOTTOM: agora.studio recently completed work on The Witness. (Image courtesy of Netflix and agora.studio)

Straddling the worlds of visual effects and animation is Sony Pictures Imageworks, with the remote strategy coordinated by Michael Ford, Vice President, Head of Systems & Software Development in Los Angeles. “On the animated side it is very much business as usual because there isn’t a dependency on shooting. We’re taking it day by day on the visual effects side waiting for what the filmmakers and studios are able to do. You can replace things that were going to be photographed with CG and augment it or get it to a point where you’re ready for whatever the film elements are going to be. One of the unique things about us is that pre-COVID-19 we operated out of a single data center. We were using the same process already. All that we had to do was to get those users to take a ‘portal’ using Teradici to connect into our data center and put them in their houses. The configuration was the only difference, along with making sure that it was secure and matched the requirements of our client.”

Pixar Animation Studios does not have a history of working remotely. Entering into this unknown territory were Jim Morris, President; Pete Docter, Chief Creative Officer; Steve May, CTO; and Dana Murray, Producer. “We got things up and running to 75% efficiency fairly quickly,” remarks Morris. “We have five movies at some level of production at the moment plus different shows for Disney+, and we’re able to keep forward momentum on all of them. Those of us who do our work on computers have an advantage in this tough situation where we can keep working. Some of the challenges were not the ones I was thinking. People have families while others live by themselves. Just coming up with ways to keep connectivity and esprit de corps around the work we’re doing has taken some thought.”

“We’re our own client so that makes things simpler, because I could say we’re only going to worry about supporting artists, animators and production staff who are on our highest priority show, which was Soul,” notes May. “A few years ago, we moved the artists and animators to virtual workstations that consist of Teradici being connected to our onsite data center. Fortunately, we already had high-capacity incoming network bandwidth. With Soul, we had over 200 people on the crew at the time this happened, and to get them working remotely when they’re at the final crunch of production felt like a major accomplishment. It went so well that within two weeks we had all of the other productions up and running. We had to change the ways that some people were used to working. Editorial had used Avid on the Mac since the beginning of time, and in order to remote in we had to ask them to switch to Windows.”

OPPOSITE TOP: Post-production on Over the Moon, directed by Glen Keane, was impacted by the coronavirus. (Image courtesy of Columbia Pictures, Sony Pictures Animation and Sony Pictures Imageworks)

OPPOSITE BOTTOM: DNEG worked remotely on the highly anticipated adaptation of Dune, directed by Denis Villeneuve. (Image courtesy of DNEG and Warner Bros. Pictures)

TOP: The final two episodes of Space Force were worked on remotely by Crafty Apes. From left: Jimmy O. Yang (Dr. Chan Kaifang) and John Malkovich (Dr. Adrian Mallory). (Photo by Aaron Epstein courtesy of Netflix)

BOTTOM: The post-production process for Black Widow (Scarlett Johansson) was impacted by the lockdown. (Photo by Film Frame copyright © 2020 Marvel Studios)

TOP: Pixar Animation Studios Producer Dana Murray supervises the final sound mix for Soul while working remotely at Skywalker Ranch. (Image courtesy of Pixar Animation Studios and Dana Murray)

MIDDLE: With the help of Teradici, Mike Accettura, who is a lead compositor for Crafty Apes, was able to work remotely. (Image courtesy of Crafty Apes)

BOTTOM: Pixar Animation Studios Chief Creative Officer Pete Docter has been impressed by the resourcefulness of his colleagues. (Image courtesy of Pixar Animation Studios and Pete Docter)

Docter has been impressed by the resourcefulness of his colleagues. “People are finding ways to record actors in their closets and to do these big editorial sessions that we normally do in a room with everybody connected separately. Animation dailies are tough. Animators can work well from home, but when it comes time to show other people, we usually sit in a screening room and turn to each other and say, ‘What do you think of this?’ There are a lot of suggestions and solutions. That’s a lot harder and slower this way. If you’re just starting a project, working from home might actually be an advantage because you’re less distracted by people knocking on your door and talking to you. But if you’re in the middle, like we have with two or three films, that’s the most difficult because of lack of being able to work together.”

Seven weeks were left in the production of Soul. “Sound and post have been delayed, but since our release date has been shifted, it’s all fine,” remarks Murray. “What was cool is that Jon Batiste actually recorded our end credit song in his home.” Fortunately, Soul composers Trent Reznor and Atticus Ross, as well as sound designer Ren Klyce, have home studios as well. “The hardest part is some people have good Wi-Fi and others don’t. Also, people with kids have to figure out teaching and cooking while also still managing the work on their plate, so we’ve been flexible. For the most part, people appreciate having something to focus on.”

Classrooms became a virtual experience for visual effects schools and programs. Such is the case for Lost Boys Studios in Vancouver and Montreal, as well as Sheridan College in Oakville, Ontario. “Every student’s workstation was rebuilt to operate independently of our network,” explains Mark Bénard, Founder and VFX Director/Supervisor at Lost Boys Studios. “Software, licensing and data all had to be localized. Additional storage was installed. Wi-Fi adapters compatible with Linux were sourced and tested. In tandem with our workstation prep, our other instructors began researching the best tools for remote instruction and student support. We are creating more pre-recorded lessons which can be more efficient than large group lectures that are prone to tangential discussions. One-on-one support has become a larger priority with sessions taking more time than in person. Since many of our alumni are also working from home, this has provided an opportunity to involve them in guest artist discussions, allowing more interaction between students and professional artists.”

“This is an ongoing process. We turned around effective technical solutions for emergency remote teaching within a couple of weeks of being told we were not allowed on campus,” remarks Noel Hooper, Program Coordinator/Professor of Computer Animation, Visual Effects, Digital Creature Animation at Sheridan College. “We were in the later stages of production in our programs, so it was primarily the review/critique process that we had to move online, which has methodologies already established in the industry. As a contingency plan, we are preparing all the curriculum for online delivery in case we’re still remote this fall. The challenge is to now truly convert our programs into effective online learning. I anticipate that if we are back on campus there will be recommended social distancing in place. In that case, fewer students may be allowed in each space, so we’ll have to develop a hybrid system to keep class sizes the same.”

Software developers are implementing measures to ease the transition, whether it be Ziva Dynamics in Vancouver or SideFX in Toronto. “At Ziva, we have always had a significant contingent of remote workers, and we have prided ourselves on our ability to work effectively with a distributed team,” remarks James Jacobs, Co-Founder and CEO at Ziva Dynamics. “Amidst all of this, we are also trying to recognize the unique challenges our users may be facing as they transition out of the studio to home-based work environments. As such, we’ve made our academic licenses free, waived the wait time between free trials, and offered free remote licenses for all of our studio customers. Ziva is proud to say that many of our customer relationships have been strengthened as a result of these measures.”

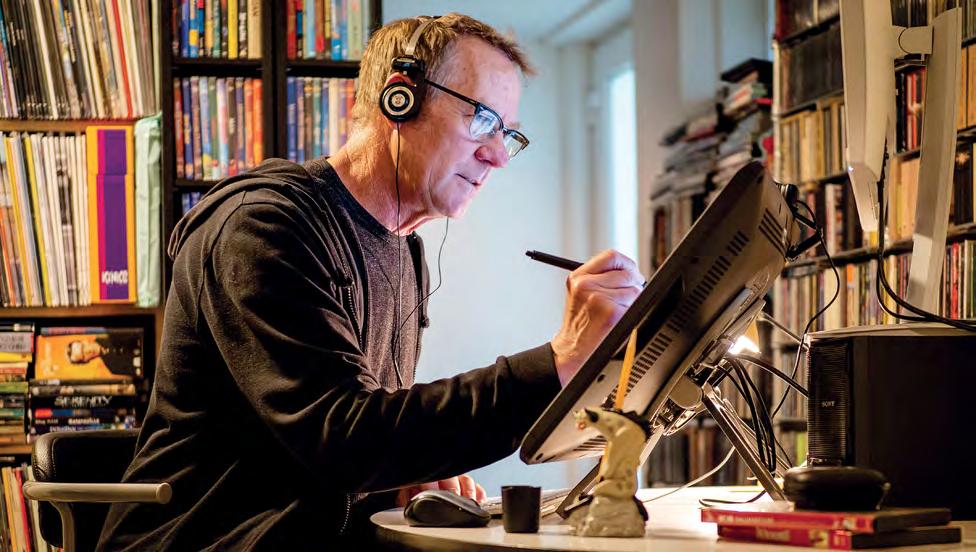

“As developers of software, we have been less impacted than our customers,” notes Kim Davidson, President and CEO at SideFX. “We’ve worked closely with our customers to help them with remote licensing. Additionally, we’ve focused on delivering more learning and training to all customers including studios, schools and self-learners. The biggest change has been the suspension of in-person industry and Houdini community events. Instead, we have reorganized to run online Houdini events, training and summits, such as the Gamedev Hive 2020 and the Houdini Hive Worldwide. These have been successful as they allow for a broader range of presenters and participants and still allow for extensive two-way interactions. We still like to meet in-person with our customers, but connecting more online may be the most significant adjustment that will continue for SideFX post-pandemic.”

An interesting postscript is whether virtual companies will become more common and more widely accepted. “We’re mostly doing character animation and rigging,” remarks David Hubert, Founder and Creative Director at agora.studio, from Montreal. “Data is transferred on Nextcloud servers. We have a programmer who builds custom tools for the pipeline such as in Maya. We’re also using a lot of software like Slack and Zoom that allow us to properly manage all of this. Everyone has their own computer.” Reviews and notes are conducted through SyncSketch. “We push an update to the cloud and it automatically goes to their machine,” states Jacob Gardner, Animation Director at agora.studio, who is based in Chicago. “When people are working locally, they’re not having any issues with fiber optics or Internet connections.” A financial hurdle to the virtual process is that cloud rendering remains expensive. “I don’t think that everyone is going to go back to the studio or everyone is going to work from home,” notes Hubert. “Having a computer at home that you can work on and one at the studio will become the norm.”

TOP: An example of a remote copy of Ziva VFX being utilized. (Image courtesy of Ziva Dynamics)

MIDDLE: One of the projects that Weta Digital Visual Effects Supervisor Sean Walker had to finish remotely was Black Widow. (Image courtesy of Weta Digital and Sean Walker)

BOTTOM LEFT: Sony Pictures Imageworks Animation Supervisor Alan Hawkins gets creative in finding an appropriate office space to finish his work on Connected. (Image courtesy of Sony Pictures Imageworks and Alan Hawkins)

BOTTOM RIGHT: Sheridan College student Xiao Yang balances home and academic life. (Image courtesy of Sheridan College and Xiao Yang)

WORKING FROM HOME: HOW THE COVID-19 PANDEMIC HAS IMPACTED THE VFX INDUSTRY AND WILL CHANGE IT FOREVER

By THE VES TECHNOLOGY COMMITTEE

OPPOSITE TOP: The VES Technology Committee in action.

WHAT HAPPENED

This year our industry faced an unprecedented health crisis that presented challenges to our safety, technology and security. Many of our members’ businesses and employers were forced to take radical action. The VES responded by encouraging a dialogue between members, studios, vendors and facilities to move us all forward. One of the groups leading the effort was the VES Technology Committee. The committee is composed of professionals from across the industry who represent a diverse set of technical backgrounds, and it works with other industry organizations like the Academy Software Foundation to develop and promote common standards and best practices across the industry. Recent projects led by the committee include the VFX Reference Platform, Cinematic Color Pipeline and Camera Report specification. Acting as a technical conduit to software vendors and helping push initiatives to benefit the VFX industry as a whole are also part of our core mission.

In response to the COVID-19 pandemic, the VES Technology Committee began meeting weekly to share ideas and report progress on solving the engineering problems presented by Working From Home (WFH). The lockdown of non-essential commercial activity came swiftly in the areas where VFX facilities are clustered. Most facilities only had a few days to set up initial WFH infrastructure before the lockdowns became effective. These weekly committee meetings created a platform to develop a best-practices document that was shared across the industry and is open to anyone who wishes to contribute additional ideas. The document (available at: https://tinyurl.com/VES-Covid19) continues to evolve and represents an excellent reference point to develop WFH procedures and strategy. Additional resources for working remotely can be accessed on the VES website at: https://www.visualeffectssociety.com/ves-covid-19-resources/.

WHAT DID WE LEARN?

The majority of VFX work requires on-premises infrastructure for some combination of security, technical and economic reasons. Few companies in our industry had plans in place to meet the challenge of a global pandemic under these constraints. The wholesale conversion of facilities to a WFH scenario was not something that was previously considered, given the physical security requirements necessary to ensure the safety of intellectual property. As an industry, we needed to think quickly, adapt our methods, and invent new procedures to continue our creative journey. The main solution to tackle the challenge involved extending the desktop workstation experience to the user’s home. This required remote display solutions, so that no actual production files needed to leave the facilities’ networks. This solution transfers the display of secure office workstations directly to home computer screens over an encrypted data stream.

Some facilities were already leveraging these remote access solutions internally in order to gain flexibility in electrical requirements, improve cooling, reduce the physical labor of moving heavy workstations around the facility, and manage the requirements for physically secure locations for end users. Others were using this technology to support a hybrid model where some users worked remotely. For these facilities already using remote display solutions, the most straightforward approach was to extend this infrastructure to allow everyone to work remotely. Thus infrastructure initially deployed to meet on-premises flexibility goals turned out to be a crucial part of a disaster mitigation plan.

Owners and managers of facilities without existing infrastructure had to quickly decide on a solution they could deploy in a very short time. This meant assessing technologies the in-house teams were already familiar with and exploring what expertise and services were offered by industry-specific resellers, consultants and system integrators. The animation and VFX technology community came together on various forums to share as much expertise as possible. This helped avoid duplication of effort at the start of the crisis and quickly spread information focused on remote access technologies. The industry also needed to seek software-based solutions since supply chains had already been disrupted. Licensing models and costs of commercial solutions needed to fit within the technical, operating, and business plan of each company. In addition, some facilities preferred to leverage capabilities already included in client operating systems or to use free or open-source technologies.

The workflows for VFX and animation also created unique challenges. Many users required higher resolution, frame rate, and/or more color accuracy than typical remote office work. Remote access protocols specifically targeting the media and entertainment industries typically have better support for these requirements. VFX facilities often use a mix of Windows, Linux and Mac systems. Users were often required to use their personal equipment at home to access their workstation back at the office (and in some cases, mobile devices running iOS/Android were part of the equation). At the time, few remote access solutions supported all of these platforms on both the server-side (the workstation at the office) and the client-side (the device used at home). So, in many cases, more than one remote access system had to be deployed to efficiently support different platforms required for different workflows.

Additional challenges were presented by latency and bandwidth of home internet connections. Any extra delay directly affects tasks that are dependent on getting immediate feedback from interacting with your mouse or tablet, such as storyboarding, production design, modeling and texture painting. This remains a challenge today, especially as not all combinations of operating systems and remote display technology are compatible with input accessories like tablets. The Tech Committee worked to voice these issues to software and hardware vendors and has seen responses in the form of fixes, new releases and planned improvements. More than anything, though, it has become clear there is not an industry-standard alternative to artists sitting together in a calibrated room, viewing and discussing high-resolution and high-dynamic-range uncompressed color-correct content in real-time. Most teams cobbled together workflows by combining commodity video-conferencing software solutions. Reviewers were forced to accept imperfectly synchronized playback or approaches that required pre-staging media on every user’s work-provided device, adding delay to the creative approval process. A few cloud and streaming solutions are beginning to emerge, but they are not yet fully vetted from a security point of view. This is an area that will likely see significant evolution over time.

Digital content security is incredibly important to everyone, from client studios to VFX and animation facilities, with strict and precise requirements that are often mandated in order to work on certain projects. Facilities doing this work were compelled to coordinate with the security teams of the content owners to ensure that the remote-access infrastructure met the necessary security requirements and best practices. Previously, this involved a strict separation between machines accessing pre-release content and the open internet. The necessity of remote work required a significant departure from these security postures that barred any access to content from employees’ homes. A common approach to address this was to leverage VPN technology, which encrypts all traffic between a user and their company’s datacenter. Since allowing production content to be broadly accessed by employees from home wasn’t a typically permissible working condition in most facilities, no standardization existed around these requirements. It was only after several iterations that facilities derived a practical checklist to which employees were asked to adhere. Helping users walk through the huge variety of home setups and troubleshooting the problems that users encountered was a challenge for already strained IT departments. Home users, in many cases, were required to upgrade the security of their Wi-Fi by reconfiguring their router. Additional security requirements included a minimum precaution that all monitors face away from doors and windows in order to minimize exposure to other members of the household. Those who share their home with people employed at other facilities faced a further potential challenge.

While pre-existing workflows have undoubtedly been impacted by the aforementioned challenges, new opportunities and workflows have evolved to allow productivity to continue at levels close to those from before the disruption. Reports started flowing in about the advantages of checking a render after dinner, managing time more flexibly, and not having to wait for a meeting room when a client review runs long. Collaboration through video conferencing was forced on the industry, but some of the resulting workflow changes are viewed as positive.

LOOKING FORWARD

Having made the leap to allow Working From Home, studios and VFX partners are recognizing this as a time to completely reimagine workflows. There is an expectation that WFH and distributed collaboration will be the new norm for at least a portion of the workforce. We are learning what safety looks like in a post-COVID-19 world. Potential benefits of artists working remotely include rapid scaling of resources based on current needs and easing the need for costly infrastructure build-outs. It’s a great time for experimentation and rethinking the way we work, including an evaluation of cloud solutions that might enable more elastic resources to leverage the global ecosystem.

While visual effects is a highly competitive sector of content creation, the community recognizes we must come together for the health and safety of our talent and clients. New protocols will lead to shorter shooting days and longer shooting schedules. There will be more extensive visual effects and a need for digi-doubles where extras once stood. Producers will need to carefully evaluate what can happen remotely versus what can be achieved in post-production. Previsualization will likely become an even more helpful tool to plan and execute shoots efficiently and safely. Methods of virtual production may offer the potential for a reduced footprint, but will not be able to replace top talent or fully address the needs of collaborative filmmaking. Remote-friendly collaborative tools that familiarize, simplify or abstract the more technical parts of the creative process and allow artists to focus on vision will be in high demand.

One positive side effect of the crisis for our industry is that content consumption grew. As everyone stayed safe at home, they increasingly wanted new things to watch and play. Streaming service adoption accelerated, which continues to help drive more content creation demand. New platforms including AR and VR are emerging that will require even more services from our industry. Many video games set new sales records as gamers used online services to stay connected with friends and fill more free time. With a very bright future ahead, how do we apply the learnings from this experience and evolve? How do we hold on to the benefits we gained in the COVID-19 response?

Across the industry, the WFH transformation has accelerated new approaches to secure remote workflows, but there is more work to be done and more opportunities to be discovered. It is the opinion of the VES Tech Committee that industry practices would benefit from a more formal exchange of ideas and a broader discussion of lessons learned. We would like to advocate that the industry choose a method to foster dialogue and create permanent change, perhaps an ongoing working group that builds upon the efforts that have taken place.

Many thanks are owed to the very small group of dedicated individuals who helped capture the information, prepare the document and coordinate the efforts of the VES Tech Committee. Their work greatly helped with the emergency transition, but going forward we see a place for a broader industry focus on best practices, with more voices participating.

OPPOSITE TOP: Dave Burgess, Animation Supervisor at Animal Logic, working from his home in Vancouver.

TOP: Max Sachar, producer of Pixar’s OUT, working from his home in San Francisco.

BOTTOM: VES Technology Committee member Darin Grant’s setup at home in Southern California.

NEW TECH IN VFX: FALL EDITION

By IAN FAILES

TOP: Epic Games’ Unreal Engine 5 real-time demo called ‘Lumen in the Land of Nanite,’ running live on PlayStation 5. (Image courtesy of Epic Games)

Keeping up with the latest in visual effects tools can be daunting. These past few years have seen major innovations in technology solutions for VFX artists and, as VFX Voice found out, there are many more to come. Here’s a roundup of new or recently launched products that you might already be using, or that may soon become part of your visual effects production workflow.

SOLUTIONS IN THE REAL-TIME SPACE

Game engine maker Epic Games made an early reveal this year when it demonstrated the photoreal capabilities of Unreal Engine 5 (releasing in late 2021), including features such as the Nanite virtualized micropolygon geometry and the Lumen global illumination tool.

“With these new features,” says Epic Games Business Development Manager Miles Perkins, “there will be less of a concern to design assets with a game engine GPU rendering budget in mind. You won’t have to cut corners on asset fidelity because you’ll be able to work with the same level of detail in-engine as those built for a traditional VFX feature film pipeline.”

Meanwhile, Unreal Engine has become one of the mainstays of virtual production workflows, and Epic has been pitching the game engine as more than just a rendering portal, with Perkins noting in particular that Unreal Engine’s physics abilities can make what happens in the virtual world match what happens in the real one.

Facial motion capture company Faceware Technologies released Faceware Studio this year as a real-time facial animation platform. A re-engineering of Faceware Live, Studio incorporates a real-time streaming workflow that allows users to track any face, and uses machine learning techniques to produce better facial tracks.

“We currently use neural networks on the ‘hard to track’ parts of the face, like determining jaw position,” says Faceware Vice President, Product and Development Jay Grenier. “We’ve had huge success with it so far, and we’re in the process of evaluating the benefits to the user of tracking the entire face with these new techniques.

TOP: A demonstration of the shading capabilities in Unreal Engine 4.25. The tool is now widely used in virtual production, visualization and, of course, game production. (Image courtesy of Epic Games)

BOTTOM: The Faceware Studio interface, with a link into Unreal Engine. (Image courtesy of Faceware Technologies)

TOP TO BOTTOM: Faceware Studio enables tracking of faces captured with facial capture cameras to produce facial animation on a corresponding CG model. (Image courtesy of Faceware Technologies)

Autodesk Maya’s USD Layer Editor. (Image courtesy of Autodesk)

Foundry’s HEIST app, which came out of the EIST project with BBC R&D. (Image courtesy of Foundry)

OPPOSITE TOP TO BOTTOM: A frame from NVIDIA’s Omniverse playable ‘Marbles’ game. (Image courtesy of NVIDIA)

Houdini’s new Topo Transfer tool. (Image courtesy of SideFX)

The final CG plant asset by SO REAL, intended for use in AR/VR, game or VFX projects. (Image courtesy of SO REAL)

“There is so much more to come that we’re excited about,” adds Grenier. “From here, we’ll move on to features for improving the quality of the animation so that users can not only create rapid content, but also refine the data and produce even higher quality results with the tool.”

USD TAKES THE STAGE

Pixar’s Universal Scene Description (USD) framework, which is being adopted in several different tools, is currently receiving significant attention in VFX. Epic Games’ Unreal Engine was one of the first applications to integrate USD, back in 2017 with UE 4.16, and that support has continued through the latest release.

“Scenes can be read and modified in Unreal Engine with changes reflected in the USD data immediately,” says Ryan Mayeda, Product Manager for Unreal Engine Virtual Production at Epic Games. “4.25 improves large scene performance and offers complete Python support for pipeline developers. Going forward, Epic is fully committed to USD, and each release in our roadmap will bring new features that focus on building connected asset workflows.”

Meanwhile, Autodesk has been developing a USD for Maya plug-in to provide translation and editing capabilities for USD. “Our goal is to teach Maya’s tools and UI how to talk to native USD data,” outlines Autodesk Senior Software Architect Gordon Bradley. “You can load USD data into Maya, edit it naturally using Maya’s standard tools, and save it out.”

The plug-in has been developed as a fully open source project, following on from early individual work done by Pixar, Animal Logic, Luma Pictures and Blue Sky Studios. Adds Bradley, “We’re excited to include this out of the box once it’s ready so artists can just install Maya and start working with USD.”
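Part of what lets artists edit USD data “naturally” is that USD keeps each department’s changes in separate layers. As a rough illustration only, here is a toy model of a layer stack, assuming the simplified rule that layers are ordered strongest first, the strongest layer holding an opinion supplies the value, and edits are authored to one chosen edit-target layer. This is plain Python, not the pxr API or the Maya plug-in’s code; the class, method and prim names are hypothetical, and real USD composition also involves references, variants, payloads and more.

```python
from typing import Any, Dict, List, Optional, Tuple

# (prim path, attribute name) -> authored value; a stand-in for one USD layer
Layer = Dict[Tuple[str, str], Any]


class ToyLayerStack:
    """Hypothetical, simplified model of USD layer-stack resolution."""

    def __init__(self, layers: List[Layer]) -> None:
        self.layers = layers  # index 0 is the strongest layer
        self.edit_target = 0  # layer that receives new edits

    def resolve(self, prim: str, attr: str) -> Optional[Any]:
        # The strongest layer that holds an opinion provides the value
        for layer in self.layers:
            if (prim, attr) in layer:
                return layer[(prim, attr)]
        return None

    def set(self, prim: str, attr: str, value: Any) -> None:
        # Author the edit on the edit target only; weaker layers keep their opinions
        self.layers[self.edit_target][(prim, attr)] = value


# An artist's override layer sits on top of a published asset layer
asset: Layer = {("/World/Chair", "color"): "red", ("/World/Chair", "height"): 1.0}
override: Layer = {}
stack = ToyLayerStack([override, asset])
stack.set("/World/Chair", "color", "blue")
print(stack.resolve("/World/Chair", "color"))   # blue
print(stack.resolve("/World/Chair", "height"))  # 1.0
print(asset[("/World/Chair", "color")])         # red (the asset layer is untouched)
```

The design point the sketch tries to capture is non-destructive editing: the artist’s change wins in the resolved scene, yet the published asset layer can still be shared unchanged with other departments.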

Another key USD adopter is NVIDIA. The company has been pushing ahead with Omniverse, which draws upon USD and NVIDIA’s RTX technology and allows teams to interactively work together in different pieces of creative software. NVIDIA’s Richard Kerris, Industry General Manager for Media and Entertainment, notes that NVIDIA has been collaborating with several major VFX studios on Omniverse and pursuing more virtual production uses, too.

“The feedback we’ve been getting is, how can we incorporate Omniverse into virtual sets, and how can we use that to interact with devices or objects from other studios we might be working with, rather than the antiquated import/export model.”

Omniverse was unveiled for the architecture, engineering and construction (AEC) industry earlier this year, and was also featured in a key ‘Marbles’ demo showcasing a playable game environment with real-time physics and dynamic lighting. Kerris says more will be happening soon with its media and entertainment customers, a group he describes as “in our DNA.”

Meanwhile, Foundry has utilized USD as part of research done during the EU-funded Enabling Interactive Story Telling (EIST) project, a collaboration with BBC R&D. Here, an interactive ‘branching’ AR/VR storytelling tool was developed that Foundry judged could be extended to VFX and post-production. The team built an application that allowed for sequencing of USD files on a timeline.
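Sequencing USD files on a timeline can be pictured as mapping frame ranges to files. The sketch below assumes a hypothetical, linear edit in which each clip is a (start frame, USD file) pair and runs until the next clip starts; the file names and the `active_clip` helper are made up for illustration and are not part of Foundry’s application, which also handled branching stories.

```python
import bisect
from typing import List, Tuple

# Hypothetical edit: (start frame, USD file) pairs, sorted by start frame
clips: List[Tuple[int, str]] = [
    (1001, "shot_010_layout.usda"),
    (1049, "shot_020_layout.usda"),
    (1120, "shot_030_layout.usda"),
]
starts = [start for start, _ in clips]


def active_clip(frame: int) -> str:
    """Return the USD file whose clip covers `frame` (the last clip is open-ended)."""
    # bisect_right finds the first clip starting after `frame`; step back one
    i = bisect.bisect_right(starts, frame) - 1
    if i < 0:
        raise ValueError(f"frame {frame} is before the first clip")
    return clips[i][1]


print(active_clip(1048))  # shot_010_layout.usda
print(active_clip(1049))  # shot_020_layout.usda
```

A binary search keeps the lookup cheap even for long edits, which matters if a review tool scrubs through the timeline interactively.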

“We realized in the project that USD could be used to solve other problems, too,” shares Foundry Head of Research Dan Ring, noting that the EIST project also led to the development of a real-time scene layout and playback review tool.

“The big thing we’re looking at with it all,” says Ring, “is how you might capture data during production, particularly virtual production or on set. We want to have a timeline of truth – a single source of truth for a production where you collect all of your data.”

TOP TO BOTTOM: A look at the latest Katana user interface from Foundry. (Image courtesy of Foundry)

Clarisse’s procedural scene layout. (Image courtesy of Isotropix)

User interface for Substance Alchemist, where users build material libraries. (Image courtesy of Adobe)

Users can mark up and scrub through media using SyncReview to review the content together. (Image courtesy of Foundry)

NEW TOOLS AND NEW FEATURES TO TRY OUT

The latest features in SideFX’s procedural software Houdini center around three areas and further its focus on USD. First, there are a number of developments in Solaris, Houdini’s USD-based context for procedural lookdev, layout and lighting, and in the Karma renderer that integrates into Solaris. The second area is interactive physics, where aspects such as pyro sims and Vellum brushes are being solved essentially in real time, providing direct interaction for the artist in their scenes. The third area involves character creation within Houdini. Here, motion retargeting, procedural rigging, a Topo Transfer tool and other developments are leading the charge into new character workflows.

Part of the push in each of these areas, says Cristin Barghiel, Vice President of Product Development at SideFX, is a response to customers asking the software maker to enable more and more development to occur inside Houdini. “This has been a running string through the history of Houdini – we strive to make it easier for artists to make beautiful work, and make sure our software works well with others to ensure a performant pipeline.”

If you’re looking for new ways to digitally scan assets both inside and out, SO REAL Digital Twins AG has launched a service aimed at doing exactly that, primarily for AR/VR but also for VFX and other areas of CG. The service is based on CT scanning.

“CT uses X-rays,” explains SO REAL’s Head of Film & AR Raffael Dickreuter. “The X-ray photons penetrate the entire object. We capture the inside and outside at the same time. We work with the raw data produced by the scanner and then can deliver many formats.

“We already knew how precise CT scans can be, down at the level of microns,” adds Dickreuter. “We knew that many physical parameters, CG for example, can be extracted from the volume data. The question was: could that be converted from the volume domain to the polygon domain? So we tried it and it worked.”
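SO REAL doesn’t detail its conversion here, but the basic problem of going from the volume domain to the polygon domain can be illustrated with the simplest possible approach: threshold the voxel grid, then emit a square face wherever a solid voxel borders an empty one. This blocky, “cuberille”-style extraction is an assumption chosen for brevity and is not SO REAL’s method; production pipelines more commonly use smoother algorithms such as marching cubes. The function name and threshold are hypothetical.

```python
import numpy as np


def extract_boundary_quads(volume, threshold):
    """Return unit squares (4 corner points each) separating solid from empty voxels.

    Voxel (i, j, k) occupies the unit cube [i, i+1] x [j, j+1] x [k, k+1].
    Face winding is not made consistent; this only recovers the surface geometry.
    """
    solid = np.asarray(volume) >= threshold
    # Pad with empty space so faces on the border of the scan are detected too
    padded = np.pad(solid, 1, mode="constant", constant_values=False)
    quads = []
    for axis in range(3):
        u, w = [a for a in range(3) if a != axis]  # the two in-plane axes
        for step in (-1, 1):
            # neighbour[i] == padded[i + step] along `axis`
            neighbour = np.roll(padded, -step, axis=axis)
            exposed = padded & ~neighbour
            for idx in np.argwhere(exposed):
                base = (idx - 1).astype(float)  # back to unpadded voxel coordinates
                if step == 1:
                    base[axis] += 1.0  # face lies on the far side of the voxel
                corners = []
                for du, dw in ((0, 0), (1, 0), (1, 1), (0, 1)):
                    corner = base.copy()
                    corner[u] += du
                    corner[w] += dw
                    corners.append(corner)
                quads.append(np.array(corners))
    return quads


# A 2 x 2 x 2 block of dense material inside an otherwise empty scan
density = np.zeros((4, 4, 4))
density[1:3, 1:3, 1:3] = 1.0
print(len(extract_boundary_quads(density, threshold=0.5)))  # 24
```

Because the scan captures the inside as well as the outside, the same thresholding would also surface internal cavities, which is one reason volume data can describe an object more completely than surface-only capture.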

For Katana users, the software’s latest incarnation offers up a number of enhancements, including streamlined workflows, shading network improvements, new snapping UX built on top of USD, dockable widgets and network material editing.

Jordan Thistlewood, Director of Product - Pre-production, Lookdev & Lighting at Foundry, observes that “the scale of productions are not getting smaller, and it’s been getting harder and harder to manage massive amounts of information. So with tools like Katana what we’re trying to do is look at what are the core performance aspects, the core workflow aspects, the core interoperability – what are the other programs being used and how is it part of the pipeline? Also, what does an artist do? How do they sit there and consume this mass amount of information – that’s led to the latest changes.”

SHOT PRODUCTION FOCUS

Visual effects artists already use Isotropix’s Clarisse iFX as a way of working with complex CG scenes, as well as the newer BUiLDER toolset with its nodal scene assembly and nodal compositing features. Isotropix CEO and Co-founder Sam Assadian has been watching artists take full advantage of the extra power in BUiLDER and says more features are coming soon.

“We are always continuing to simplify collaborative workflows, improving rendering performances and extending our support of industry standards such as USD, Autodesk Standard Material and MaterialX.

“The latter point is very important since collaboration and asset sharing are becoming very common in the industry,” continues Assadian. “We will also be publicly releasing Clarisse SDK to boost the third-party ecosystem tools already gravitating around Clarisse. We’ve also recently published a new API designed to simplify the integration of any third-party renderers to Clarisse.”

VFX artists have also been generating complex texturing detail with Adobe’s Substance products. One of the latest developments has been on the machine learning side with the material authoring tool Substance Alchemist via an algorithm called Materia.

“It takes any photograph of a surface and converts it into a full physically-based material,” details Jérémie Noguer, Adobe’s Principal Product Manager - Entertainment. “To get the output material right, we trained the algorithm on thousands of scanned materials for which we had the ground truth data – photogrammetry – and various renders of that data with different lighting conditions.”

Noguer says one application of Materia in VFX workflows would be to generate accurate materials from photos taken on a physical set. “Materia excels at generating architecture-type materials and organic grounds, stone walls and such, so it could be useful in many cases where photogrammetry would be overkill, too pricey, time-consuming or straight-up impossible.”

VFX studios themselves are often responsible for the creation of bespoke tools for use in production. One such tool is Framestore’s Fibre dynamics solver, used on projects like Lady and the Tramp to make hair and fur move realistically when interacting with water, wind, clothes and other characters.

“At the core of Fibre,” states Framestore Lead R&D Technical Director Alex Rothwell, “we needed a robust solver algorithm, something that could deal with the wide range of element interactions required. We opted for a Physically Based Dynamics approach, facilitated by the intra-hair constraints implemented using Cosserat-type rods.”

“Our previous workflows had required the creation of low-resolution proxies and other manual setup in order to guide collisions between hair and geometry. Fibre uses an optimized collision engine, effectively eliminating the need for any preprocessing.”
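Fibre itself is proprietary, but the general idea of a constraint-based hair solver can be sketched for a single strand. The example below is a minimal position-based dynamics (PBD) style sketch under stated assumptions: it uses only simple distance constraints between neighbouring points (Cosserat-type rods, as Rothwell describes, additionally handle bending and twist), pins the root, and uses a single sphere as hypothetical collision geometry. It is not Framestore’s implementation, and all names and parameters are illustrative.

```python
import numpy as np


def simulate_strand(points, rest_len, steps=96, dt=1.0 / 24.0, iterations=20,
                    gravity=(0.0, -9.8, 0.0), damping=0.02,
                    sphere_center=None, sphere_radius=0.0):
    """Position-based dynamics for one hair strand pinned at its root (points[0])."""
    x = np.array(points, dtype=float)
    v = np.zeros_like(x)
    inv_mass = np.ones(len(x))
    inv_mass[0] = 0.0  # zero inverse mass: the root never moves
    free = inv_mass > 0.0
    g = np.asarray(gravity, dtype=float)

    for _ in range(steps):
        # 1. Apply gravity to free points and predict new positions
        v[free] += dt * g
        v *= 1.0 - damping
        p = x + dt * v

        # 2. Iteratively project constraints onto the predicted positions
        for _ in range(iterations):
            for i in range(len(p) - 1):
                w_sum = inv_mass[i] + inv_mass[i + 1]
                d = p[i + 1] - p[i]
                length = np.linalg.norm(d)
                if w_sum == 0.0 or length == 0.0:
                    continue
                # Move both ends along the segment so it returns to rest_len
                corr = (length - rest_len) * d / length
                p[i] += inv_mass[i] / w_sum * corr
                p[i + 1] -= inv_mass[i + 1] / w_sum * corr
            if sphere_center is not None:
                c = np.asarray(sphere_center, dtype=float)
                for i in np.nonzero(free)[0]:
                    offset = p[i] - c
                    dist = np.linalg.norm(offset)
                    if 0.0 < dist < sphere_radius:
                        p[i] = c + offset * (sphere_radius / dist)  # push to surface

        # 3. Derive velocities from the corrected positions
        v = (p - x) / dt
        x = p
    return x


# A ten-point strand starting horizontally, hanging over a sphere
strand = [(0.1 * i, 0.0, 0.0) for i in range(10)]
result = simulate_strand(strand, rest_len=0.1,
                         sphere_center=(0.5, -0.6, 0.0), sphere_radius=0.3)
```

Because collisions are resolved as just another constraint inside the same loop, this style of solver needs no separate low-resolution collision proxies, which echoes the benefit Rothwell describes, although a production solver is far more sophisticated.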

TOOLS TO KEEP WORKING

With working remotely becoming the norm this year, a common solution among studios became Amazon Web Services’ (AWS) Studio in the Cloud, a mix of cloud-based virtual workstations, storage and rendering capabilities.

“Rather than purchase high-performance hardware, as well as house and maintain physical machines, users can spin up the resources they need, when they need them, and spin them down, paying only for the time used,” offers Will McDonald, Product and Technology Leader at AWS. “In addition to shifting capital expenditure to operational expenditure, creating digital content on the cloud also provides greater resource flexibility.”
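The shift from capital to operational expenditure comes down to simple arithmetic. As a sketch with entirely hypothetical figures (not AWS pricing), the break-even point is the number of cloud hours that costs as much as buying and maintaining one workstation over its service life.

```python
def owned_cost(purchase: float, upkeep_per_year: float, years: float) -> float:
    """Total cost of buying and maintaining one workstation (capital expenditure)."""
    return purchase + upkeep_per_year * years


def break_even_hours(purchase: float, upkeep_per_year: float, years: float,
                     hourly_rate: float) -> float:
    """Cloud hours at `hourly_rate` that cost the same as owning the machine."""
    return owned_cost(purchase, upkeep_per_year, years) / hourly_rate


# Hypothetical numbers for illustration only
hours = break_even_hours(purchase=6000.0, upkeep_per_year=1000.0, years=3.0,
                         hourly_rate=2.5)
print(f"Break-even after {hours:.0f} hours of use")  # Break-even after 3600 hours of use
```

Under these assumed numbers, 3,600 hours over three years works out to about 23 hours a week, so a machine used less than that would be cheaper to rent. The sketch ignores storage, data transfer and licensing, which would shift the result in practice.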

McDonald attests, too, that virtual workstations are a way of maintaining compute power and doing it securely, and have “enabled studios to continue to work effectively to the point where several are evaluating how to continue to work in this way to maximize their flexibility to hire the best talent regardless of where they reside.”

Remote collaboration is also a theme of another Foundry tool, SyncReview, for Nuke Studio, Hiero and HieroPlayer. The idea is to run Nuke Studio or Hiero sessions in different locations, for example when a VFX studio has sites in separate countries, and have the full media synchronized in high fidelity between them.

Juan Salazar, Senior Creative Product Manager, Timeline Products at Foundry, explains the idea and how it came about. “With SyncReview, you can run a session of Nuke Studio and have everything syncing in both places. It’s something that hasn’t been done before completely like this.

“SyncReview came from a bigger project we are doing to improve the review process for the VFX pipeline. One of the main issues in review sessions is the lack of visibility over the whole timeline and also seeing total image and color accuracy.”

TOP TO BOTTOM: Framestore’s Fibre tool was used for the hair/fur of the characters in Lady and the Tramp. This image shows the clumping stage. (Image copyright © 2019 Walt Disney Pictures)

Simulation QC render automatically generated by Framestore’s pipeline. (Image copyright © 2019 Walt Disney Pictures)

Final render. (Image copyright © 2019 Walt Disney Pictures)