Thank you for being a part of the global VFX Voice community!

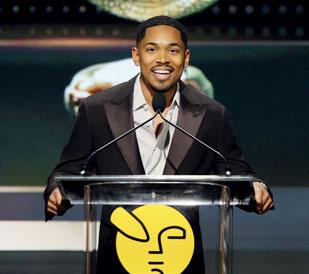

Coming out of a much-anticipated awards season, this issue of VFX Voice shines a light on the 23rd Annual VES Awards gala. Congratulations again to all of our outstanding nominees, winners and honorees!

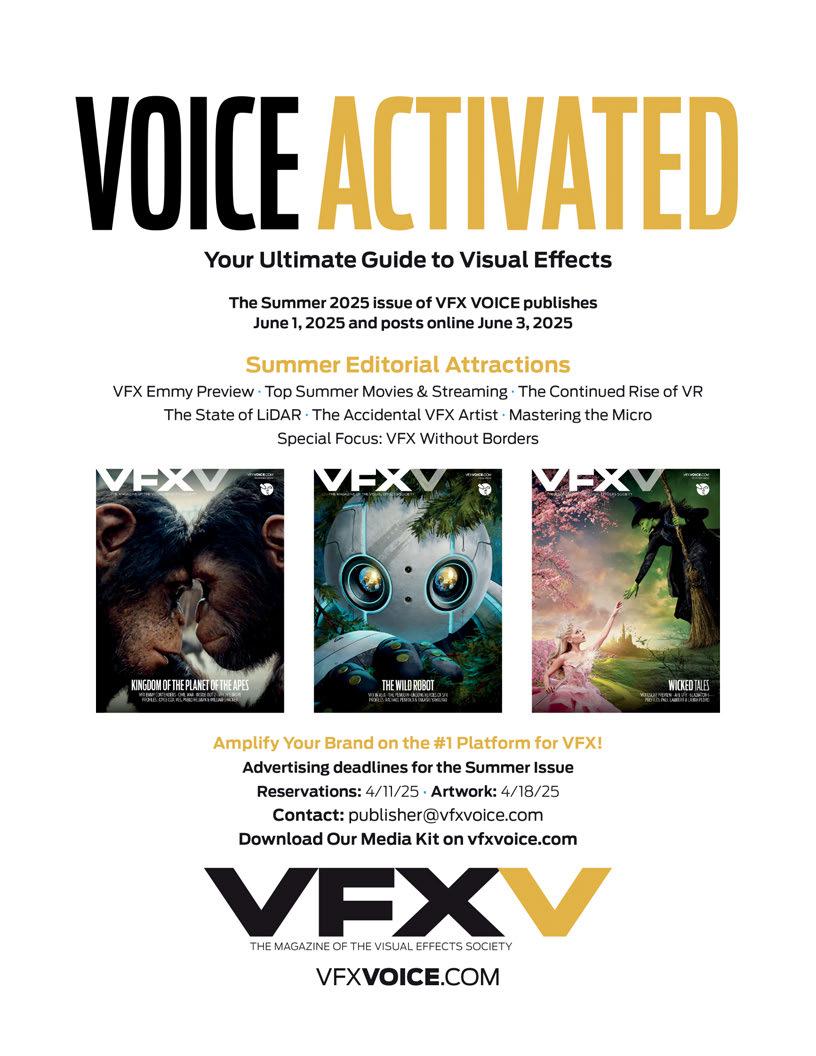

Our cover story takes us inside the stop-motion animated Memoir of a Snail. We share a behind-the-scenes look at The Electric State with the Russo brothers and delve into trends around retro effects, real-time VFX, the unsung technical heroes of the pipeline and the rise of high-quality indie film VFX. We highlight the latest in LED volumes, virtual production and Gen AI in schools. And circling the globe, we deliver a special focus on emerging VFX markets in Europe, South Africa and the Middle East… and we put our New Zealand Section in the spotlight.

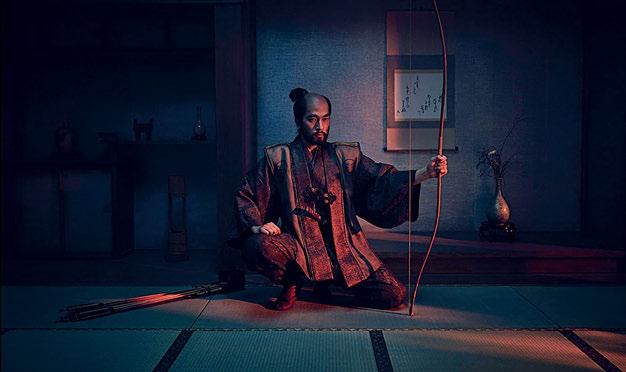

Share the joy with our VES Awards photo gallery capturing the festive celebration that awarded outstanding artistry and innovation in 25 categories and honored Virtual Reality/Immersive Technology Pioneer Dr. Jacquelyn Ford Morie with the Georges Méliès Award, Golden Globe-winning actor-producer Hiroyuki Sanada with the VES Award for Creative Excellence, and Academy Award-winning filmmaker and VFX Supervisor Takashi Yamazaki with the Visionary Award.

Dive in and meet the innovators and risk-takers who push the boundaries of what’s possible and advance the field of visual effects.

Cheers!

Kim Davidson, Chair, VES Board of Directors

Nancy Ward, VES Executive Director

P.S. You can continue to catch exclusive stories between issues only available at VFXVoice.com. You can also get VFX Voice and VES updates by following us on X at @VFXSociety.

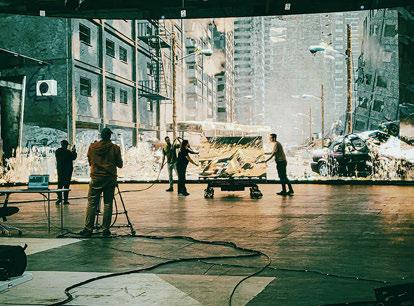

8 VIRTUAL PRODUCTION: REAL-TIME VFX

How real-time is impacting VFX workflows and pipelines.

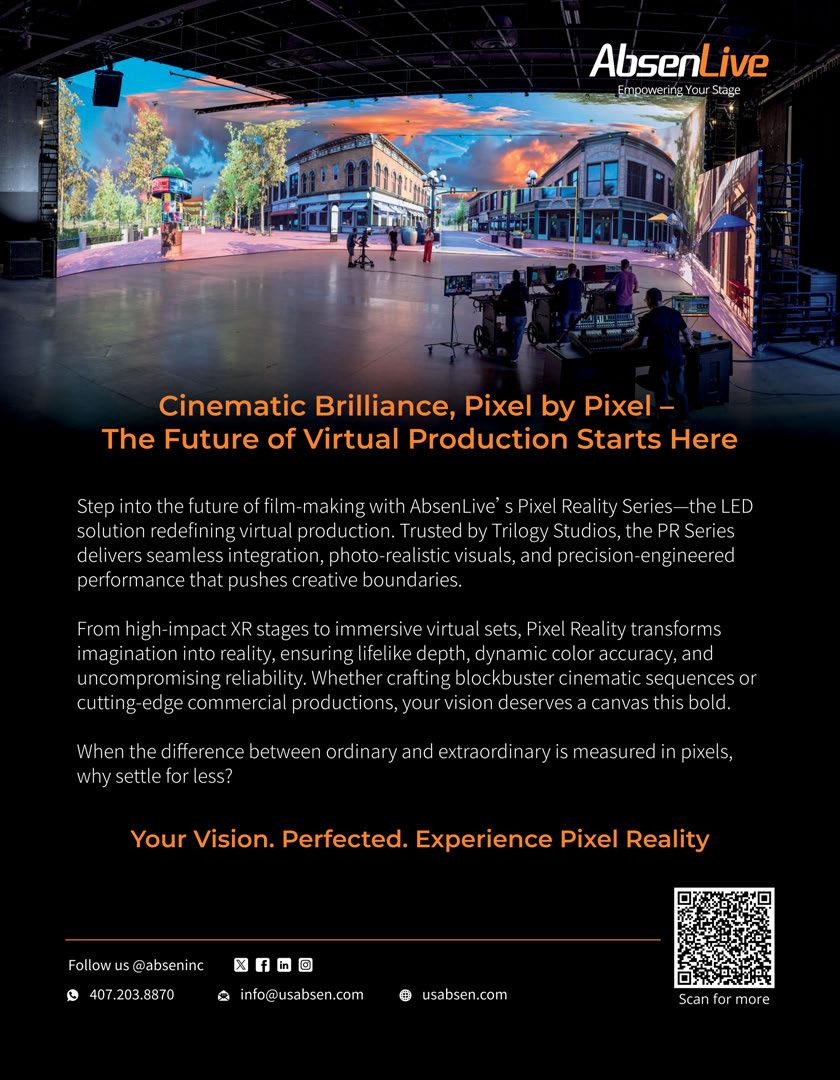

18 VIRTUAL PRODUCTION: LED VOLUMES

VFX supervisors help provide a bridge to LED volumes.

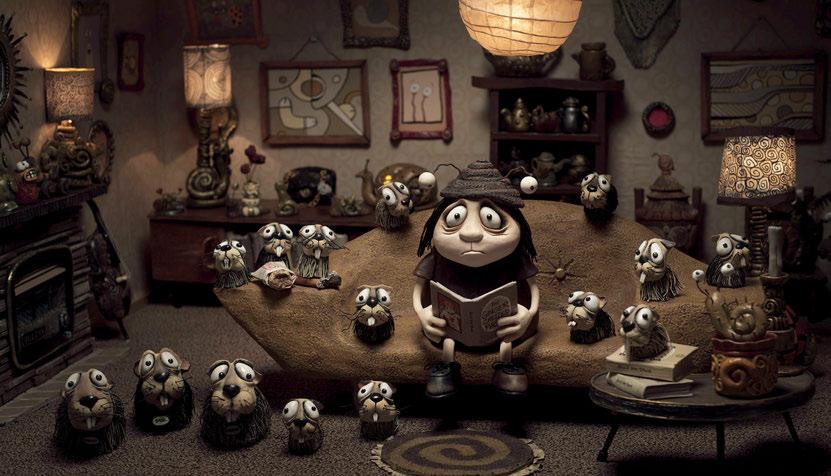

24 COVER: MEMOIR OF A SNAIL

Filmmaker Adam Elliot advances traditional stop-motion.

30 VFX TRENDS: RETRO EFFECTS

Striking a balance between in-camera and digital effects.

36 FILM: THE ELECTRIC STATE

The Russo brothers create a new interpretation of the 1990s.

42 EDUCATION: VFX & NEW TECH

VFX/animation schools sharpen their focus on VP and AI.

46 PROFILE: TAKASHI YAMAZAKI

Recipient of the VES Visionary Award.

47 PROFILE: DR. JACQUELYN FORD MORIE

Recipient of the VES Georges Méliès Award.

48 THE 23RD ANNUAL VES AWARDS

Celebrating excellence in visual effects.

56 VES AWARD WINNERS

Photo Gallery.

62 VFX TRENDS: UNSUNG HEROES

Technical directors reveal their complex role in the pipeline.

70 SPECIAL FOCUS: EMERGING MARKETS

VFX in Eastern Europe, South Africa and the Middle East.

78 VFX TRENDS: INDIE VFX

Indie films impress with remarkable effects on a limited budget.

84 TECH & TOOLS: VFX SOFTWARE

Catching up with some essential tools shaping the industry.

2 EXECUTIVE NOTE

90 THE VES HANDBOOK

92 VES SECTION SPOTLIGHT – NEW ZEALAND

94 VES NEWS

96 FINAL FRAME – STOP MOTION

ON THE COVER: Grace Pudel is a melancholy young woman who collects snails in Adam Elliot’s stop-motion Memoir of a Snail (Image courtesy of Arenamedia Pty Ltd. and IFC Films)

Visit us online at vfxvoice.com

PUBLISHER

Jim McCullaugh publisher@vfxvoice.com

EDITOR

Ed Ochs editor@vfxvoice.com

CREATIVE

Alpanian Design Group alan@alpanian.com

ADVERTISING

Arlene Hansen Arlene-VFX@outlook.com

SUPERVISOR

Ross Auerbach

CONTRIBUTING WRITERS

Naomi Goldman

Trevor Hogg

Chris McGowan

Barbara Robertson

Oliver Webb

ADVISORY COMMITTEE

David Bloom

Andrew Bly

Rob Bredow

Mike Chambers, VES

Lisa Cooke, VES

Neil Corbould, VES

Irena Cronin

Kim Davidson

Paul Debevec, VES

Debbie Denise

Karen Dufilho

Paul Franklin

Barbara Ford Grant

David Johnson, VES

Jim Morris, VES

Dennis Muren, ASC, VES

Sam Nicholson, ASC

Lori H. Schwartz

Eric Roth

Tom Atkin, Founder

Allen Battino, VES Logo Design

VISUAL EFFECTS SOCIETY

Nancy Ward, Executive Director

VES BOARD OF DIRECTORS

OFFICERS

Kim Davidson, Chair

Susan O’Neal, 1st Vice Chair

David Tanaka, VES, 2nd Vice Chair

Rita Cahill, Secretary

Jeffrey A. Okun, VES, Treasurer

DIRECTORS

Neishaw Ali, Fatima Anes, Laura Barbera

Alan Boucek, Kathryn Brillhart, Mike Chambers, VES

Emma Clifton Perry, Rose Duignan

Dave Gouge, Kay Hoddy, Thomas Knop, VES

Brooke Lyndon-Stanford, Quentin Martin

Julie McDonald, Karen Murphy

Janet Muswell Hamilton, VES, Maggie Oh

Robin Prybil, Lopsie Schwartz

David Valentin, Sean Varney, Bill Villarreal

Sam Winkler, Philipp Wolf, Susan Zwerman, VES

ALTERNATES

Fred Chapman, Dayne Cowan, Aladino Debert, John Decker, William Mesa, Ariele Podreider Lenzi

Visual Effects Society

5805 Sepulveda Blvd., Suite 620 Sherman Oaks, CA 91411 Phone: (818) 981-7861 vesglobal.org

VES STAFF

Elvia Gonzalez, Associate Director

Jim Sullivan, Director of Operations

Ben Schneider, Director of Membership Services

Charles Mesa, Media & Content Manager

Eric Bass, MarCom Manager

Ross Auerbach, Program Manager

Colleen Kelly, Office Manager

Mark Mulkerron, Administrative Assistant

Shannon Cassidy, Global Manager

P.J. Schumacher, Controller

Naomi Goldman, Public Relations

By TREVOR HOGG

TOP AND BOTTOM: Real-time software programs are being developed by Chaos, such as the ray tracing renderer Vantage and Arena, which does ray tracing for in-camera effects. (Images courtesy of Chaos)

OPPOSITE TOP TO BOTTOM: The introduction of full ray tracing to the virtual production process removes the need for rasterized rendering. Source: Ray Tracing FTW. (Image courtesy of Chaos)

An ambition for real-time visual effects is to have the ability to visualize, explore and iterate quickly without closing the door on the visual effects team finishing it off to get the final image. Previs from The Witcher Season 3. (Image courtesy of Cinesite and Netflix)

Real-time is most useful at the concepting stage. (Image courtesy of Vū Technologies)

Virtual production could not exist without real-time rendering, customarily associated with game engines such as Unreal Engine and Unity. Still, real-time technology is also impacting workflows and pipelines constructed to produce visual effects on a daily basis. As the tool is refined to become more cinematically proficient, new challenges and opportunities have emerged for visual effects artists and production teams. “My first job in the visual effects industry was working on Star Wars: Episode I – The Phantom Menace, the first movie to do previs,” recalls Kevin Baillie, Vice President and Head of Creative at Eyeline Studios. “Our real-time capabilities back then were quite limited, but now fast forward to where we have these images that can look near to final quality in real-time. Not just previs, but a virtual art department to build set designs whether we’re looking at them through a camera, VR goggles or any other means. These incredibly powerful tools allow a filmmaker to accelerate some of the physical process, start it digitally and iterate on it quickly before we get into the tedious, expensive physical phase. When I worked with Robert Zemeckis on Pinocchio, we previs’d the entire movie. As we were shooting it, we did real-time on-set composites of the scenes that involved live-action, relay down cameras for everything that was a fully virtual shot, then those cameras went into the visual effects post-production process. We made the movie three times using these real-time technologies, and that iteration helped Zemeckis narrow it down on what exactly he wanted.”

Unreal Engine became the answer when pandemic restrictions meant that not everyone could go into the same vehicle together to scout locations for The Handmaid’s Tale. “I would go out, scan the locations, rebuild them in Unreal Engine, and we would walk through in sessions,” recalls Brendan Taylor, President & VFX Supervisor at Mavericks VFX. “I like to say that we are making a game called, ‘Let’s make a movie.’ What’s awesome about that is you can create all the rules for this world. The thing about a game is you need to be able to see it from all angles and be able to change things on the fly. When we’re working in film, we’re dealing with what’s here and in the camera.” Virtual scouting led to some discoveries that Elisabeth Moss applied when directing her first episode of The Handmaid’s Tale. Taylor explains, “What we were able to do was build the set on the bluescreen stage from the plans, sit with a monitor on a little handheld rig [in our screening room] and explore the space with Elisabeth. She tried things out with just me, Stuart Biddlecombe [Cinematographer] and Paul Wierzbicki [Unreal Engine Specialist]. Elisabeth said, ‘There’s something missing. We’re so monochrome.’ Paul responded, ‘Sometimes these buildings have red lights on them.’ He quickly put a flashing red light in the corner, and it changed the tone of the scene to give it this devilish look. It made this guy pushing women off of the roof even more menacing. We would have never known until we lived within this game we had created. For me, that was a real a-ha moment where it became collaborative again.”

Simplification is taking place when it comes to game engines and real-time. “We don’t have enough people who know Unreal Engine to drive a virtual production because it’s such a beast of a software that has been in development forever,” observes Jason Starne, Owner, SHOTCALLER and Director of Virtual Production for AMS Pictures. “We need some simplified things, and that’s what we are starting to see with what companies like Chaos are doing. They’re building something that allows you to have a 3D world scene that is truly a real-time path tracer, and the path tracer gives the best quality you can out of a rendered image. Real-time is an aspect of the pipeline. It’s a tool just like virtual production is another toolset a studio would have.” Misconceptions are an issue. “The con is that the marketing has made even our clients believe this is easy to do and can be achieved without a whole lot of work going into it. In real life, we have to put work into it and make or build things in a way where we can get speed out of it. It’s not just going to be real-time because it’s coming out of Unreal Engine. It could be, but it will look like crap. How do we get the quality versus the speed that we need?”

TOP TO BOTTOM: The mantra for Vū Technologies is ‘content at the speed of thought,’ which they believe will be the next evolution of communication. (Image courtesy of Vū Technologies)

Real-time allows digital artists to iterate way faster, which means more options for clients. Scene from Sweet Tooth (Image courtesy of Zoic Studios and Netflix)

Real-time has shifted the involvement of Zoic Studios toward the front end of the production, resulting in far less in the back end. Scene from The Sympathizer. (Image courtesy of Zoic Studios and HBO)

The Chaos Group is developing real-time software programs, such as the ray tracing renderer Vantage and Arena, which does ray tracing for in-camera effects. “For us, Arena is an extension of the camera that the DP already has, and as long as the DP can talk to the people who are running the stage, like to a grip or camera operator, then we’re in good shape,” remarks Christopher Nichols, Director of Chaos Labs at the Chaos Group. “We looked at what they needed to do to get the correct video on the LED walls. Essentially, we needed a system that synchronizes renders across multiple nodes and can track a camera so you can get the correct parallax. That’s the fundamental thing we added to Vantage, enabling it to become an in-camera effect solution. By introducing full ray tracing to the process that removes the need for rasterized rendering, you can make a better duplicate of the camera and don’t need to optimize your data or geometry in the same way that you need for video games. Almost everything that is done in post-production uses full ray tracing, either V-Ray or Arnold. That massively cuts down on how much time and energy is used to put the CG elements behind people because it’s the same asset for everything. The virtual art department can focus on compositing the shot correctly or creating the right environment and not on, ‘How do I remake this to work for a game engine?’”
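As a rough illustration of why the tracked camera matters for parallax, the sketch below computes where a virtual point behind a flat LED wall would need to be drawn so that it lines up from the camera's position. It is a geometric toy, not a description of how Vantage or Arena works; the function name `wall_position_for_point`, the flat wall at z = 0 and the coordinates in meters are all assumptions made for the example.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def wall_position_for_point(camera: Vec3, point: Vec3) -> Tuple[float, float]:
    """Return the (x, y) spot on a flat wall at z = 0 where a virtual point
    must be drawn so that it appears in the right place for this camera.

    Hypothetical setup: the camera stands in front of the wall (z > 0) and
    the virtual point sits "behind" the wall (z < 0).
    """
    cx, cy, cz = camera
    px, py, pz = point
    if cz <= 0 or pz >= 0:
        raise ValueError("camera must have z > 0 and the virtual point z < 0")
    # Fraction of the way along the camera-to-point ray at which z reaches 0.
    t = cz / (cz - pz)
    return (cx + t * (px - cx), cy + t * (py - cy))


if __name__ == "__main__":
    near = (0.0, 0.0, -4.0)    # virtual point 4 m behind the wall
    far = (0.0, 0.0, -12.0)    # virtual point 12 m behind the wall
    for cam in [(0.0, 0.0, 4.0), (2.0, 0.0, 4.0)]:
        print(cam, wall_position_for_point(cam, near), wall_position_for_point(cam, far))
```

In this toy setup, moving the camera 2 m sideways shifts the drawn position of the nearer point by 1 m and of the farther point by 1.5 m. That depth-dependent shift is what the wall content has to reproduce every frame as the camera moves.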

More options have become available to be creative. “We’re seeing concepts emerge now that would have been nearly impossible without the use of real-time tools to plan and execute, like digital twins, which are changing the game for creators, especially when budget and ambition are both high and there’s no room for miscommunication,” states Brian Solomon, Creative Technology Director at Framestore. “Another area advancing rapidly revolves around how we utilize characters. Real-time allows us to previs and utilize dynamic 3D characters earlier in feature film production, especially with character-driven live-action pictures. Similarly, there are now advantages coming from production-grade real-time variants of characters. These are benefiting larger brands and animated IP owners, as a host of new formats are emerging that allow these characters to interact with the world in ways they couldn’t prior and at turnaround speeds not hitherto possible. Real-time overall is broadening the horizon for characters.”

Real-time technology is positively transforming production pipelines. “In the traditional visual effects world, it is allowing for faster iterations which enable additional exploration of creative options,” notes Paul Salvini, Global Chief Technology Officer at DNEG. “These advances are most critical in areas like animation and creature and character effects [such as the simulation of muscle, skin, hair, fur and cloth]. In cases where the final output from real-time solutions needs further processing, seamlessly connecting real-time and non-real-time tools becomes critical. The role of artists doesn’t fundamentally change, but the tools will allow a more interactive workflow with better feedback. Real-time visual effects are also transforming more areas of production than ever before from previs through final render.” Audience members are getting to enjoy even more immersive and interactive experiences. Salvini remarks, “Some recent live and virtual concert experiences have done a great job of bringing together the best of the real and computer-generated worlds to deliver experiences never before possible for audiences, such as allowing a current artist’s performance to be mapped visually onto their younger selves.”

Storytelling and being able to present clients with the best possible imagery are the main technological goals for Sony Pictures Imageworks, which meant figuring out how to get close to real-time with their GPU renderer Arnold. “The more the client is educated with real-time and sees what the studios are doing, the more they want you to push the envelope,” states Gregory Ducatel, Executive Director, Software Development at Sony Pictures Imageworks. “The magic you get when you work with good creatives, clients and technology is that the creativity of those people jumps. It’s crazy. Currently, if you go outside of Unreal Engine, the quality of the imagery drops, and then with lighting, it goes back up; that was not acceptable for us because artists lose the context of their work, and the creatives don’t like that. This is why Spear [Sony Pictures Imageworks’ version of the Arnold Renderer] was brought to the table. How can we always have the highest quality possible at each given step but never go back to the previous one?” The feature animation and visual effects applications are somewhat different; however, the principles remain the same. “We always want better quality, more iterations. We don’t want to wait for notes and for the artists to do something, then go back to notes. If you can do that in real-time, the artist can move forward, and it’s exactly what you want,” states Ducatel.

TOP TO BOTTOM: The visual effects pipeline at Zoic Studios has always been modular. Scene from The Boys. (Image

Technology is an ecosystem that is constantly evolving because of innovation. (Image courtesy of Vū Technologies)

Real-time visual effects are here to stay because it is the best way to get feedback from clients or collaborators. Composite from 9-1-1 (Image courtesy of Zoic Studios and ABC)

Real-time visual effects are here to stay. “People who don’t see that real-time is where we all should go are stuck in the past,” believes Julien Brami, VFX Supervisor & Creative Director at Zoic Studios. “There is time for finishing and concepting; all of these take time, but when we need the interactivity and get feedback, whether from clients or collaborators, real-time is the best tool. Real-time allows us to iterate way faster, and faster means more options. Then you can filter what is working. Instead of saying ‘no’ to a client, now you have an opportunity to work with them. There are more iterations, but it’s less painful to iterate.”

The pipeline is evolving. Brami says, “The visual effects pipeline at Zoic Studios has always been modular. We try to make the pipeline procedural so it can be crafted per show and be more efficient. Real-time has shifted our involvement toward the front end of the production, and we have way less in the back end. With a traditional pipeline we would have a bluescreen or greenscreen and have to key everything; all of that would have been at the tail end, which is usually more stressful.”

TOP TO BOTTOM: The more the client is educated with real-time and sees what the studios are doing, the more they want the envelope pushed. Scene from K-Pop: Demon Hunters. (Image courtesy of Sony Pictures Animation and Sony Pictures Imageworks)

Real-time is allowing the utilization of dynamic 3D characters earlier in the process of feature film production, especially with character-driven live-action pictures. Scene from Paddington in Peru. (Image courtesy of Framestore and Columbia Pictures)

Three years ago, it was all about using game engines for real-time, but with the advances in generative AI, people are doing things even more instantly. (Image courtesy of Vū Technologies)

Technology is constantly advancing along with the growth of expectations. “Virtual production, machine learning and real-time rendering engines; all of these have been around for decades,” observes Mariana Acuña Acosta, SVP Global Virtual Production and On-Set Services at Technicolor. “It’s not like it just happened overnight. What has continued to advance is our computing power. I can’t even comprehend how we’re going to be able to maintain all of the machine learning and AI with these new generational GPUs. What has pushed these advancements forward has been virtual production, cloud workflows, machine learning, AI and the game engines themselves.” To avoid obsolete technology, hardware has to be constantly updated. “It’s costly for a studio to be constantly updating hardware. Maybe at some point, you get a project or want to create your own project and realize you don’t have enough hardware to go and run with it. That’s when the cloud comes in, as you can scale and have the best spec machines. This is crucial because then the cloud service providers are the ones that have a lot of resources to go around when it comes to RAM and GPUs.”

Rendering improves with each new release of Unreal Engine and Unity. “Advances in real-time rendering, such as virtualized geometry with Unreal Engine’s Nanite, have significantly reduced the time required to optimize assets for real-time performance while enhancing their visual fidelity,” observes Dan Chapman, Senior Product Manager, Real-Time & Virtual Production at Framestore. “Looking ahead, Gaussian Splatting is setting a new standard for photorealism in real-time applications. By moving away from traditional polygon-based 3D models and building on Neural Radiance Fields [point clouds that encode light information], Gaussian Splatting offers a more efficient and accurate approach to rendering complex, photorealistic scenes in real-time.” Real-time visual effects have raised the expectations of audiences when it comes to immersive, interactive and personalized experiences.

Chapman remarks, “Technologies like augmented reality, virtual reality and projection mapping allow attractions to respond to guest movements and decisions in real-time, creating personalized storylines and environments that feel unique to each visitor. This shift is also taking place online, where audiences are actively participating in experiences in a way that they can shape and share with others. This is particularly evident in platforms like Fortnite and Roblox, where users engage in live events, socialize with friends and collaborate on creative projects.”

Sometimes, real-time solutions slow down to the pace of a traditional visual effects renderer. “It can go in the wrong direction if you’re pushing it too far,” notes Richard Clarke, Head of Visualization & VFX Supervisor at Cinesite. “I’m curious if we can evolve this two-stage process where you can visualize, explore, iterate quickly, and have a good idea of what your end product is going to be, but still not closing the door on allowing the visual effects team to finish it off or push it to the cloud for higher processing. What you get back is closer to a final version. One little wrinkle at the moment is the various render passes that the visual effects team will be utilizing can’t be replicated as easily. The more AOVs [Arbitrary Output Variables] you’re pushing out, the more you’re going to slow down the real-time. Postvis is a real melding of real-time technology and visual effects pipeline workflows. The nice thing about postvis is it’s not an end product. We’ve got a little trick where we make a beautiful scene in Arnold, bake all of the lights and textures, output shots in minutes direct from Maya and go straight into comp. They almost look final. That’s pre-packaging things. Game engines pre-capture a lot of their lighting to make real-time. That’s where you can save on a lot of processing. The more I use real-time technology, the more I think it’s going to be a cornerstone of everything. Autodesk showed us a beta version of Unreal Engine in Maya. I got excited about that because we’ve been doing it the other way around. Having Unreal Engine in your viewport was like a hallelujah moment for me because most visual effects artists are Maya-centric at the moment.”

As with nature, technology is an ecosystem. “What we’re seeing right now at the top level is the merging of many new innovative technologies,” states Tim Moore, CEO of Vū Technologies. “Three years ago, it was all about using game engines for real-time, and with the advances in generative AI, you now see people doing things even more instantly. The merging of those two is interesting; to be generative inside a 3D environment where you have all the perspectives and control.” Real-time is most useful at the concepting stage. “For people who have simple thoughts and want an extravagant output, AI is amazing because you can give it a little and the AI will fill in the rest. For people who have a specific vision and want it to come to life, AI becomes challenging because you have to figure out how to communicate to this thing in a way where it sees what you see in your head, and you have to use words to do that.” The future can be found in the mantra of Vū Technologies. Moore comments, “The vision for our company is ‘content at the speed of thought,’ and to me that is the next evolution of communication. Encoding and decoding language into sounds and words is an inefficient way to communicate, whereas the ability to use visuals as a communication layer is the most universal language in the world. Everyone perceives the world in a visual way. That ability to make visuals at the speed of thought is the big evolution of storytelling we will see in the next 10 years.”

By TREVOR HOGG

TOP: Preparing for a virtual production shoot of a Vertibird featured in Fallout. (Image courtesy of All of it Now)

OPPOSITE TOP TO BOTTOM: LED walls are beneficial for rendering content for backgrounds but often fall short as a lighting instrument. (Image courtesy of Disney+ and Lucasfilm Ltd.)

Limitations still exist regarding how much you can put on the LED wall in terms of computational power. (Image courtesy of Dimension Studio, DNEG and Apple TV+)

Westworld Season 4 made use of virtual production technology to expand the scope of the world-building. (Image courtesy of Technicolor and HBO)

Has virtual production revolutionized filmmaking, beginning with The Mandalorian in 2019, and accelerated by the COVID-19 pandemic a year later? The answer is ‘no,’ but the methodology has become an accepted alternative to bluescreen and greenscreen. Even though technology continues to advance rapidly, some things have remained the same. “It’s a mixed bag,” states Matt Jacobs, VFX Supervisor. “What’s on my mind now when talking to people is building brick-and-mortar facilities. There was a project constructing a backlot in France, and I asked, ‘Did you set up an LED volume because you’ve sunk a lot of money into this?’ And they’re like, ‘No, because every time we do an LED volume, it seems that the ask is different for what the volume needs to do.’ Everybody comes in and says, ‘I need it for process shots for cars.’ Or, ‘I’m doing playback, and I need the volume to be this size and configuration.’ The ability to pop up a volume, be flexible and build the volume out to case-specific specs seems to be the way to go these days.”

Companies like Magicbox offer a tractor-trailer studio setup. “The pop-up trailer is an interesting thing, but you also have to look at that as a set configuration,” Jacobs notes. “Yes, it’s mobile, but it’s what the tractor trailer looks like. Do you need a volume that is semicircle? Do you need the ceiling, or is that lighting? How are you going to work a volume with a known configuration of width and height? Is it squared-off walls or a circular volume? Does it have ceiling panels that you need for reflections in a car? How are those ceiling panels configured? I was on a Netflix shoot, and we had this great volume at Cinecittà Studios outside of Rome. It was a cool setup and a big stage. The floor was a Lazy Susan, so it actually spun around. The ceiling was great, but because the tiles didn’t line up perfectly, there were lines and seams across the car where there were no reflections. We had to bring in walls to do fill reflection on the front of the car. We had to do a lot of work to reconfigure that stage and bring in certain elements. Thankfully, they were nimble and had a lot of great pieces and solutions for us to work with. But it goes back to the point that the stage was probably too big for certain things, and maybe it wasn’t perfect for our car shoot.”

Generally, people think that virtual production is synonymous with the LED volume. “I think virtual production is anytime that you’re using real-time technologies in conjunction with normal production,” remarks Ben Lumsden, Executive Producer at Dimension Studio. “The biggest single change is you can push a lot more through Unreal Engine. You’ve got a whole suite of tools specifically addressing LED volume methodologies. There’s the switchboard app and level snapshots that allow you to go back to a period of time when there was that particular load on the volume and understand exactly where everything was, which animation was where and what the lighting setup was. On Avatar, James Cameron would get so frustrated because everything was done using MotionBuilder. Cameron would return to post-production after being on set, and all the creative changes he made on the day got lost in translation through the pipeline.” MegaLights from Unreal Engine 5.5 is a huge step forward. Lumsden says, “Beforehand, it was geometry, which was too expensive. But then Nanite came along with Unreal Engine 5, meaning geometry was no longer an issue. Our experiments with MegaLights so far suggest that lights will no longer be an issue.”

Limitations still exist regarding how much you can put on the LED wall in terms of computational power. “You don’t want to drive too many metahumans, for instance, but you can put loads of volumetrically-captured people and make sure that their card is pointed back to the camera or their rendered view is relative to the position of the camera,” Lumsden notes. “One thing that we did that was cool regarding R&D is marrying our performance-capture technology with the LED virtual production. We’ve been doing some tests where we can actually drive metahumans on the wall as digital extras being live-puppeteered on a mocap stage and interacting with the real talent; that’s a new technology or workflow that we may well bring into production going forward.” Sound remains problematic. “There is a real issue with capturing audio because you’ve got this big echo chamber. There are some fantastic new LED panels coming out all of the time. But the great new panels are always expensive. Over time, that will change, as with all of these things. There are also some new and interesting technologies of people doing projector-based methodologies, which are intriguing because the price point is more applicable to indie filmmakers.”

Interest rates have made productions more cost-conscious and less adventurous. “The early stories of the volume being a cost-saving mechanism put volume shoots at a disadvantage because producers came in expecting to see a 10x savings in cost or whatever number they had in mind, and it’s dramatic but not that dramatic,” observes Danny Firpo, CEO & Co-Founder of All of it Now. “Now, people are realizing what the volume does well, which are process shoots for vehicles or being able to create a lot of environments in a short amount of time or being able to move the environment around talent.” Hardware and software have greatly improved. “The expansive rate of cheap graphic cards is increasing in power and is helping to keep the dream of a real-time holodeck-style volume within arm’s reach. The quality of real-time graphics is increasing exponentially, and the time it takes to create those real-time environments is decreasing due to the impressive tools that have come out on the software side. Nanite and some of the impressive tools that have come out from Unreal Engine 5.3 and all of the way up to 5.5 are creating a much better environment for artists to create the best version of what they can possibly create now. In addition, we’re seeing a better understanding across the board of LED and camera providers and even lighting vendors of what types of equipment flourish in an LED volume environment as opposed to trying to take live show or film rental inventory and cramming it into the volume, which we saw in the volumes during the pandemic.”
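Lumsden's remark about keeping each volumetrically-captured person's card pointed back at the camera describes what is commonly called billboarding. A minimal sketch of the idea, assuming a Y-up world, a card whose unrotated normal faces +Z, and positions given as (x, z) pairs on the floor plane; the function name `billboard_yaw_degrees` is hypothetical and not taken from Unreal Engine or any other tool:

```python
import math
from typing import Tuple


def billboard_yaw_degrees(card_xz: Tuple[float, float],
                          camera_xz: Tuple[float, float]) -> float:
    """Yaw about the vertical axis that turns a card's +Z normal toward the camera.

    Assumed convention: a yaw of theta rotates the normal (0, 0, 1)
    to (sin(theta), 0, cos(theta)).
    """
    dx = camera_xz[0] - card_xz[0]
    dz = camera_xz[1] - card_xz[1]
    # atan2(dx, dz) gives the angle of the card-to-camera direction measured from +Z.
    return math.degrees(math.atan2(dx, dz))


if __name__ == "__main__":
    card = (0.0, 0.0)
    for camera in [(0.0, 5.0), (5.0, 0.0), (-5.0, 5.0)]:
        print(camera, round(billboard_yaw_degrees(card, camera), 1))
```

Re-evaluating the yaw for every card whenever the tracked camera moves keeps the flat captures facing the lens, which is one reason they can be cheaper to place in bulk than fully rigged digital humans.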

One particular department head remains central in being able to understand and communicate the capabilities of the LED volume to other members of the production team. “The visual effects supervisor is an ideal bridge because they already exist in this hybrid or mixed reality of 2D and 3D, real-time, physical and digital environments colliding to create the finished product,” Firpo states. “That type of thinking is more challenging for somebody from a different department like Art, Camera or Lighting and is only used to dealing with one physical reality in a real-world space. What we have discovered is specialists are emerging in those departments who have a real understanding of that and are willing to take an extra day and pre-light or go through a virtual scout and ultimately help explore those worlds more and use the same mentalities of what they would do in a physical scout.” An effort has been made to make the virtual production process more intuitive for the various departments. Firpo notes, “We’re moving all of the extraneous tools and features that we deal with and making a simplified UI. For example, giving a DP doing a virtual location scout using an iPad, which is ubiquitous on set, a sense of a rigged virtual camera, which feels like operating a physical one but is essentially a digital portal into that world. Getting that buy-off and sense of translation from the physical into the digital world and vice versa is where it’s helped bridge that communication and culture gap.”

LED walls are great for rendering content for backgrounds but often fall short as a lighting instrument. “LED volumes have a limited brightness, and the light spreads out, so you can’t create harsh shadows,” notes Lukas Lepicovsky, Director of Virtual Production at Eyeline Studios. “They’re also not full spectrum light. LED walls are only RGB instead of RGBW Amber like you would get from an on-set light. You can maybe use the LED wall as fill light, but then you definitely want to be working with on-set lighting for the actual key light.” Virtual production excels with short turnaround projects such as commercials because all the decisions are made upfront. “If you’re a massive visual effects project, then you’re probably going to want to lean on it more for lighting capabilities, like projecting an explosion that lights up the actor’s face in a nice way, but then leave yourself room in visual effects to augment the background with giant building destruction. This is what we ended up doing with Black Adam. We made the wall be near final, or in some cases just a previs in the background that had good lighting, which had explosions and lightning elements. We used it as a lighting instrument, knowing we would replace the background afterward. It depends on the production because, in those cases, you don’t always know what your final asset looks like while you’re shooting a large feature production. Because it’s a real-time process, you have constraints of polygon budget and render time, so you can’t just fill the world with all sorts of assets. You have to have strong planning when it comes to these things.”

Technicolor, in cooperation with the American Society of Cinematographers, conducts an in-camera visual effects demo. (Image courtesy of Technicolor)

Virtual production has not only revolutionized filmmaking, but the methodology has become an accepted alternative to bluescreen and greenscreen. (Image courtesy of Technicolor)

TOP TO BOTTOM: The quality of real-time graphics is increasing exponentially, and the time it takes to create those real-time environments is decreasing. (Image courtesy of All of it Now)

Those About to Die was shot on the LED volume stage at Cinecittà Studios in Rome. (Image courtesy of Dimension Studio, DNEG and Peacock)

Interest rates have made productions more cost-conscious and less adventurous. (Image courtesy of All of it Now)

Game engines have been a game-changer and are constantly improving. “Where it can stand to improve still is the integration of some visual effects technology like USD and the ability to quickly share assets between departments and make layered, modifiable changes in the pipeline,” Lepicovsky remarks. “Also, over time, we’ve seen this with visual effects; things started from a rastering approach, and eventually everything turned into ray tracing. So, I’m excited to see that there are also ray tracing possibilities in real-time that are coming forward both from Epic Games and Chaos Vantage, a new entrant in the virtual production market.” It is still too early to judge the impact of machine learning on virtual production. Lepicovsky adds, “There are machine learning tools that generate the backgrounds, but right now, they often want nice animation with all the leaves blowing and trees swaying; that is easier to do in actual game assets. Machine learning has been interesting for us in a new process called Gaussian Splatting, which is like a new version of photogrammetry based on a machine learning process. What is different from traditional photography is that you can have reflective and see-through surfaces and capture hair. Another interesting one involves a relighting process that allows you to capture actors in neutrally-lit lighting conditions, like volumetric capture, but then change the lighting afterwards using machine learning.”

“The LED panel is excellent because it’s an incredibly high output, so people like to use it for the lighting, and companies like ROE Visual are adding additional colors into the diode cluster to get better skin tones,” remarks Jay Spriggs, Managing Partner at Astra Production Group. “But that’s not going to replace a conventional lighting instrument. We know people who are researching projection in volumes because the cost to run that is much lower, and you also have additional benefits. For LEDs, the diodes light up and shoot light out, whereas, in a projection-oriented environment, they are reflective, so you have a different quality of light and mixing, which comes from that. The Light Field Lab stuff is fascinating. I don’t want to even think about what the volume would cost for that!” The central question is, how do you help with what is happening in the frame? “From there, you reverse engineer that into what products are not just the best for what’s going to happen but also the most money-efficient so that they have enough money to bring in their people.” The most cost-effective way is projecting plate photography, as there are so many more complications with real-time tracking, says Spriggs. However, Unreal Engine is making major strides with a new grading workflow. “That is going to be huge for making better pictures out of the game engine because one of the biggest things has always been: how do you do a final polish pass on what is already a good lighting engine but is not perfect?”

Not everything gets treated the same way. “If Greig Fraser [Cinematographer] wants to get the highest quality lighting effect for the best skin tone, but we’re only doing a couple of tight shots, and he has a generous post budget, then we look at the background of the LED,” Spriggs explains. “We build it with the highest quality LED with the smallest pitch we can find. Don’t worry about the final color that you see in the picture because the post budget will kick all of that stuff out so they can post-render and grade. All we focus on is the skin tone. If someone is trying to shoot a car commercial, they’re trying to get the closest to final pixel for the reflections. You build a volume around the car that they’re looking at with the smallest pitch so that you will not be able to see individual pixels on an LED wall with a ceiling. Shoot that and walk away. You wouldn’t use that same configuration for the other one because benefits wouldn’t be there.” Fundamentals should not be forgotten. Advises Spriggs, “If we focus too much on revolutionizing and democratizing or any such big-picture thoughts, we forget about what we have to do right in front of us, which is to make a damn pretty picture!”

BOTTOM: The visual effects supervisor remains the bridge in understanding and communicating the capabilities of the LED volume to the other heads of the departments. From Time Bandits (Image courtesy of Dimension Studio, DNEG and Apple TV+)

By TREVOR HOGG

TOP: Along with being surrounded by snail memorabilia, Grace finds herself responsible for an ever-growing population of frisky guinea pigs.

OPPOSITE TOP TO BOTTOM: Pinky helps Grace to break out of her shell and experience life.

Director Adam Elliot works on the adult Grace puppet surrounded by her character designs. The most dynamic character is Pinky, who required a selection of heads.

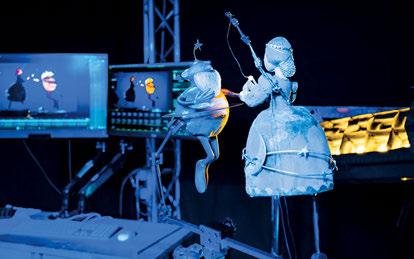

Making the most of the global shutdown caused by the COVID-19 pandemic, Australian filmmaker Adam Elliot mapped out what would become an Oscar-nominee favorite for Best Animated Feature and an Annecy winner. Memoir of a Snail tells the tale of Grace, a hoarder of snail memorabilia who longs to be reunited with her twin brother Gilbert while experiencing the trials and tribulations of becoming an adult. Previously, Elliot made his feature film directorial debut in 2009 with Mary and Max, but interestingly, the methodology and technology between the two productions have not altered much.

“The technology has changed,” states Adam Elliot, Producer, Director, Production Designer and Writer. “Dragonframe is a wonderful tool. The animators love it because they can do all sorts of tricks, and it’s got all sorts of bells and whistles. I apply many restrictions on my animators and try to get them not to rely on the software too much, animate from intuition and celebrate happy accidents. We have LED lights now, so the stages aren’t as hot. The globes don’t burn out as quickly. Sound editing and design are far more digitized. The sound libraries are bigger. Cameras have gotten higher megapixels, so the resolution is much higher. Having said all that, they’re just tools. We still try to animate in a traditional manner. Everything in front of the camera is done traditionally. We don’t do any CGI additions. However, we certainly do cleanup digitally, like removing rigs. All our special effects, like fire, rain and water, are all handmade. The fire is yellow cellophane. We celebrate the old, but we certainly embrace the new.”

Visual effects have come a long way, allowing for more creative freedom. “In the old days, we would use fishing line to have things airborne, and now we can have a big metal rod and the visual effects artists remove that digitally,” Elliot notes. “That’s about it. It’s just cleanup. There is a lot of compositing. For elements like fire, we do it on a piece of glass with the camera looking down, often with a greenscreen background, then we composite that in post. We had 600 visual effects shots in the film, and a lot of money spent on the visual effects, but it was mostly basic stuff.” One of the more complex effects was the burning church. “Those flames are recycled and layered,” Elliot explains. “We do one set of flames, then cut, paste and layer them. The claim is that we can say to the audience that you can hold in your hand every prop and character you have just seen. However, there was a lot of post-production to make it look that way!” Every shot was storyboarded. “There were roughly 1,500 storyboard panels. Then I drew and designed all of the characters [200], most of the props [5,000 to 7,000] and sets [200]. I drew by hand because I was in lockdown during COVID-19 and had a lot of time!”


Compositing was only used where necessary. “Most of the skies you see in the film were on set on giant canvases,” Elliot remarks. “There were only one or two skies or maybe more where we did greenscreen then composited in one of the canvas skies. It is a wonderful tool that now liberates us as stop-motion animators. When I left film school in 1996, I was told I was pursuing a dying art form and that stop motion would be obliterated by CGI. The complete opposite has happened. CGI and digital tools have liberated us. You have to be careful not to get carried away. There is a hybrid look that has gone a bit far, and now with 3D printers, too. Some of these stop-motion films almost look computer-animated because they’re so slick. We’re trying to celebrate the lumps and bumps, brushstrokes, and fingerprints on the clay.”

The design of the characters was based on what Elliot was able to accomplish in his one-room apartment. “Adam started making everything out of Apoxie Sculpt, which is this material that sculpts like clay, then goes rock-hard in about an hour,” explains Animation Supervisor John Lewis. “We had stylized the characters around the fact that they were solid and budgeted around it as well. Creating a character’s costume out of real fabric or silicone that can bend is time-consuming and expensive. Adam wanted us to have these rock-hard-solid puppets. They’re like statues; in some sense, that’s easy because it restricts what the puppet can do. Sometimes, when you don’t move the head, the ear clunks into the shoulder, and you can’t move it where you want it to move, or body movements are stiffer or different than how you might move it otherwise. Adam wrote ‘walking’ out of the film as a stylistic and budgetary choice. If we did have characters walking full frame where you can see their feet, we would have had to have legs that could bend and pants. Instead, we cropped it with the camera, removed the legs and put a little up-and-down rig underneath, which was a cheap microscope stand. It’s about the same size as the puppet’s legs. We put that on the table and slide the character along; that’s how we get dynamic movement.”

Voiceover narrative figures prominently in the storytelling. “Every shot is cut into the animatic so it has the piece of narration that goes with it as well as the music ideally. Some of that [narration] is scratch and some of it is filled with the real stuff as we go,” Lewis remarks. “You will listen to the narration for every shot that is only five to 10 seconds long. You will time your animation out and express your character differently, depending on what the narrator is saying. They play into each other.” A rhythm gets established with the animation. “Each character will start to lend itself to different expressions and movements. The actor’s voice is a huge guide for that. As an animator, you find that some things are working and keep doing them. Some things don’t work, so you might cut back. Between all the animators, we talk and look at each other’s work. Slowly, a language of that character will develop. Because Grace doesn’t have confidence, she’s often got her hands tucked up to her chest, has her arms in and holds herself tightly. I talked to the animators about how much tension was in the character. Gilbert is bolder, so he’s more likely to be striking big poses with his arms out. Adam has nuanced rules about how he likes each character to look and move when it comes to the blinks and shapes of their eyelids. Certain things make characters look like Adam Elliot characters.”

One of the nine stages had an under-camera rig where a camera could swing down onto a glass tabletop. “Every time an animator had some downtime, they would go onto that stage and do a bit of effects work,” explains Production Manager and VFX Supervisor Braiden Asciak. “The fire was orange and yellow cellophane, and the smoke was cottonwood. They would do these effects, sometimes to the shot. They’d load the shot we had completed into Dragonframe as a reference and animate those effects elements on top of the animation they had already animated prior. It meant that quite a few of the elements we shot were specific to the shot. However, we could reuse certain elements from those other shots.” The visual effects team consisted of Asciak, Visual Effects Editor Belinda Fithie, Gemila Iezzi [Post Producer at Soundfirm] and four to five digital artists at Soundfirm. “They were simple 2D visual effects, so we didn’t need Maya or Houdini. The visual effects artists mostly used Nuke or DaVinci Resolve – Fusion [a node-based workflow built into Resolve with 2D and 3D tools].”

A surreal moment is when Gilbert and Grace appear inside their mother’s womb, which serves to emphasize the strong bond that exists between the siblings.

Pinky also required a wide variety of mouths.

The church burning-down sequence was challenging but rewarding. “Once we shot all the plates we needed throughout that sequence, we put it into the timeline. Everything was worked out together,” Asciak states. “How was Gilbert going to move around that church? Then we slowly animated the effects elements. Once we finished production, John Lewis spent several weeks doing as many effects elements as possible. We built an extensive effects library of cellophane fire, and he did two big shots – one of Gilbert in the church – and did some compositing in Adobe After Effects himself. Then, John did an exterior shot of the church on fire with the huge fire bursting out of the roof. When John left, it was up to me to layer out the rest of that sequence and ensure continuity. I was doing everything in DaVinci Resolve. Some shots have 15 layers of fire, smoke and embers to try to get it right to a level that Adam was happy with, and it looked compositionally right.”

Creating a sense of peril was critical for the moment Gilbert attempted to rescue a snail in the middle of a busy road. “We had a number of cars animated on greenscreen so we could composite those in later,” Asciak reveals. “But the angle of those plates wasn’t matching the shots. Adam wanted the cars going in from the left and out from the right to speed by. You had to match that up with the performance to avoid covering the key moments of a laugh or yell. I spent a good two-to-three weeks trying to time all those cars and each of those shots. Also, we didn’t have any sound at that point, so the sound was done to the visual effects work. I felt like we needed to amp up the intensity of that sequence and bolster it with a lot of cars. One way of doing that was [to transition between shots] using side swipes with the light poles, or there was a van that drove by. That was a good way to improve the cutting in the sequence to make it flow and feel chaotic.”

Close attention had to be paid to ensure all the rigs were painted out. “If there was an element of the shot before the rig appeared, then we wouldn’t need a clean plate,” Asciak states. “But for something like Pinky tap-dancing on a table doing the burlesque, we had to go frame-by-frame and paint out that rig from the mirror. In some cases, it’s a little sliver of a rig. When she’s in the pineapple suit and holding out the plate of pineapple chunks, there is a rig that is like a straight line behind her. If you weren’t paying attention, you probably wouldn’t have noticed, but there was a sliver of a rig, and even the visual effects artists asked, ‘Where is the rig?’”

Grace’s bedroom had the most scenes, which were shot chronologically. “That set was there for 16 weeks, over half of the shoot period. It goes from being empty to being full of [snail memorabilia], then we return to it being empty. When Grace is sitting in her bed and says she is surrounded by her snail fortress and the camera pulls out, as soon as we finished that shot, we pulled everyone into the kitchen where we had the TV screening room. We watched that shot together for the first time, and it was magical. At that point, we knew we had something on our hands, as we could clearly see the work of the art, animation, lighting and camera departments.”


By TREVOR HOGG

OPPOSITE TOP TO BOTTOM: Having practical elements for actors to interact with is an essential part of the filmmaking process for

One of the hardest tasks for MPC was matching digital soldiers with the on-set extras in the battle sequences for Napoleon. (Image courtesy of Columbia Pictures)

Having real planes shot grounded the camerawork, which was reflected in visual effects for Top Gun: Maverick. (Image courtesy of Paramount Pictures)

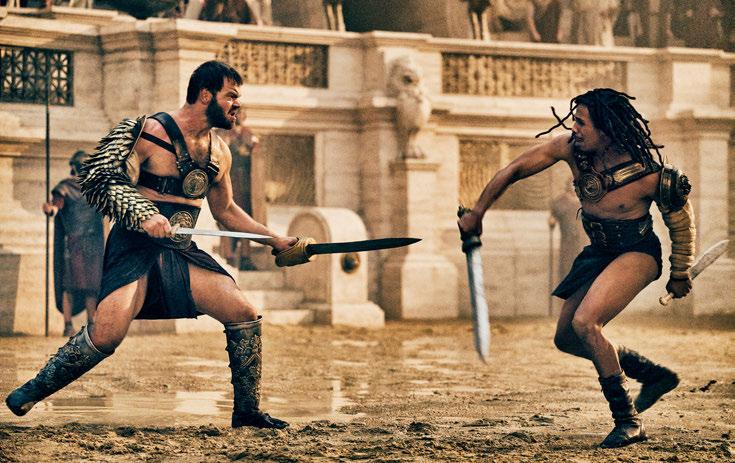

In the digital age, where photorealism is achievable virtually and is getting further refined with machine learning, the visual effects industry finds itself being viewed as a double-edged sword. When used properly, visual effects are interwoven into a cinematic vision that could not be achieved otherwise; when deployed badly, they become an instrument of laziness. This perception has been accentuated by the global depository of human knowledge and ignorance known as the Internet. In the middle of all this is the question of whether there is an actual trend of filmmakers favoring practical effects or whether it is simply a marketing ploy taking advantage of public opinion.

“Like with any new technology, people went a bit overboard with CGI. CGI is powerful, but it can have some limits,” states director Denis Villeneuve who has created everything from a talking fish in Maelström, a spider in a closet in Enemy, a sandworm ride in Dune: Part Two and a traffic-congested highway in Sicario. “It’s all about the talents of the artists you’re working with. I’m not the only one. Many filmmakers realize it’s a balance, and the more you can capture in-camera, the better. A great visual effects supervisor will tell you the same. The pendulum is going more towards the center, in the right way, between what you can capture in-camera and what you can improve with CGI. If it was up to me, there would be no behind-the-scenes. I feel like you spend years trying to create magic, specifically with CGI, which is so delicate and fragile that it can quickly look silly. So much work has been done to make it look real that I’m always sad when we show behind the curtain.”

Villeneuve is not entirely against the idea of unmasking the illusion as it helps to inform and inspire the legendary filmmakers of tomorrow. “When I was a kid, I read Cinefex and was excited to know how the movie had been made, but it was a specialized publication. It wasn’t wide-open clips that can be seen by millions. It was something that if you were a dedicated nerd who wanted to know about it, you had to dig for the information, but now it is spread all over the place.”

“There is this need for directors or studios to diminish the visual effects departments and put forth, ‘We did it all in camera,’” notes Bryan Grill, Production VFX Supervisor for Beverly Hills Cop: Axel F, “when we all know that’s not the case. You put your best foot forward to do stunts and practical effects, but there’s always something in there that needs some clean-up or enhancement. It has been this juxtaposition. You’ve had superhero movies, which are nothing but visual effects, environments and multi-dimensions. It’s overbearing. Then you have the other side, which is traditional filmmaking.” Along with Eddie Murphy reprising his role of the quick-witted rogue detective named Axel Foley for the fourth time, an effort was made to recapture the 1980s roots of the franchise. “What always stuck with me about the original Beverly Hills Cop was the opening scene where the truck is barreling down, hitting car after car. One of my other favorite movies from that era was The Blues Brothers, with all of the police cars hitting each other and falling off the bridges. It was a carnage of special effects. That’s what they wanted to bring into this version as well, and they damaged a lot of cars! There were at least 20 more cars that didn’t make the edit that got destroyed. The filmmakers went all out to relive and show the next generation of that type of movie.”

“There is a trend in the marketing of these films where audiences seem to want to crave an authentic experience, so they’re emphasizing the practical aspects even if most of what you’re watching has been digitally replaced in post,” remarks Paul Franklin, Senior VFX Supervisor at DNEG. “If you think back 30 years when Jurassic Park came out, that film was marketed on the fact that they had computer-generated dinosaurs in it for the first time. Those of us in the visual effects world who are familiar with that film know that the majority of the dinosaurs that we saw on the screen were Stan Winston’s animatronics that were created practically. If that film was being released today, it would be all of this stuff about Stan Winston building these dinosaurs as animatronics.” That being said, practical effects aspirations do exist. “There are a lot of filmmakers who have seen the success of Interstellar, The Dark Knight movies and recently Oppenheimer, and the way that Christopher Nolan leans into the practical aspect of what he does. They’re going, ‘That’s an effective way to tell your story.’ A lot of filmmakers aspire to that. Whether there are so many of them who can pull it off is a different thing because it turns out to be quite difficult to do that balancing act. I got lucky and worked with Chris for 10 years, and he is a genius filmmaker. I don’t know if there is anybody else quite like him. Steven Spielberg in the days when he was making films such as Saving Private Ryan and Jurassic Park; he’s a filmmaker who knew the value of doing things practically, which is why he would always want to work with Stan Winston. You look back at E.T. the Extra-Terrestrial, and it still holds up because they used state-of-the-art animatronics and practical effects at the time.”

Allowing enough time for the various crafts, including visual effects, leads to successfully encapsulating the vision of the director, which was the case with Barbie. (Image courtesy of Warner Bros.)

Even when dealing with the artificial-looking environments found in Barbie, practical sets provide a solid foundation for the seamless integration of visual effects. (Image courtesy of Warner Bros.)

“For a good decade or more, there was this ability for visual effects teams to provide directors and producers with shots that were extraordinary in their ability to break some of the traditional filmmaking rules and to move away from some of the things that made cinema look the way it had for the decades before that,” notes Ryan Tudhope, Production VFX Supervisor on Top Gun: Maverick. “That created a bit of a look and, just like anything, looks come and go. One thing, if you think about it, over the course of all of filmmaking, is that every single shot of every single movie has one thing in common, which is that it was shot through a camera. Then came along the digital ability to create digital cameras and shots. That freed us up for a long time to be able to do things with those cameras that had never been done before. When I think about it in terms of what I’m trying to do, it is to recognize what that camera means to the artform and to honor that by trying to design a shot that appreciates what the camera can do and should do, how it visualizes the world, and how the audience sees the film or shot or whatever the action might be through that limitation. When you don’t respect that, it can be visually stunning and impressive shots, but the audience immediately knows that it’s not real.”

“We’re trying to have our cake and eat it too,” believes Aaron Weintraub, VFX Supervisor at MPC. “The highest compliment we can ever be paid is if people have no idea that we did anything – that’s what we strive for. What we do is stagecraft. We’re trying to fool the audience into thinking that something is completely real and was there in front of the camera; they recorded it, and that’s what you get to see on the screen. If we have done it correctly, nobody knows.” Real-life examples are the starting point. Weintraub explains, “Everything that we do is looking at photographs and film footage and trying to replicate how the light and surfaces react. We’re nothing without reference of the real world, if the real world and photorealism is our goal.” Technology is the means to the end. “Every iteration and every step that we take with the technology is something new that the audience may not ever have seen before. Reacting to the newness is part of saying that the technology is driving it, but it’s a story that we’re trying to tell that we couldn’t do in the past.” Reality can provide a sense of spontaneity to animation. Weintraub notes, “There are mistakes and happy accidents that can happen when you shoot real stuff that you might not get otherwise. When we did Guillermo del Toro’s Pinocchio, which was stop-motion animation, one of the guiding principles of the animation was to try to anticipate all of those weird little accidents that if you were shooting this live-action and put those into the animation. In advance of shooting the stop-motion, the animators would shoot these little videos of their clips to see what would happen.”

“It’s a stylistic thing. When we’re talking about the marriage of practical, what’s shot on set and where we come into play, either augmenting or completely replacing it in some cases, there is always this desire to maintain this visual characteristic that is inherent in practical shooting,” observes Robin Hackl, Co-Founder and Visual Effects Supervisor at Image Engine. “It’s being art-directed and driven by the DP, lighting director and the director himself, and they have hands on the physicality of that being on set and getting that look. It’s always that apprehension almost of committing fully to CG and leaving it to the hands of the visual effects vendors and artists, even things that they are implementing in practical set photography, like virtual sets that give a higher degree of reality to the lighting of the characters. From the feedback that I’ve gotten from the people on set, the actors in particular react well to virtual production in the sense that they have something tangible to react to and see, to be part of that little world, which is sometimes hard for them to wrap their heads around. What is that emotion tied around that environment they’re in? It heightens that. Of course, it’s not all done that way. The Mandalorian is all over the place, from on-set photography to giant bluescreens and virtual production. The Mandalorian is a good example of aesthetic. The creators wanted to retain as much as possible the flavor and vibe of the original Star Wars films. There were a lot of optical effects done back in the early days and stop-motion that was live in-camera, for the most part they tried to shoot everything in-camera the best they could then augment it. It’s a real harkening back to that era.”

Actual cars were flipped and digitally augmented for Beverly Hills Cop: Axel F. (Image courtesy of Netflix)

Joseph Gordon-Levitt and Eddie Murphy prepare for a scene that takes place inside of a helicopter cockpit for Beverly Hills Cop: Axel F. (Image courtesy of Netflix)

“It’s more about having a good dialogue with the people you are working with and making sure that they understand how to get the best out of the tools they’re using,” states Glen Pratt, Production VFX Supervisor on Barbie. “If I’m blunt, it’s often because bad choices are made. If you allow all the crafts that are involved in filmmaking the time that they say [they need], you get a good result, whether it be building a set, creating pyrotechnic explosions, then equally whatever aspects of visual effects you’re adding into that.”

Open communication is important. “Greta Gerwig hadn’t done visual effects before, so I sat down early with her and talked through various sets of tools that we have at our disposal. I could tell she was overwhelmed by some of those things. But it’s honing it down to that is just a step in the process of how we will eventually get to the end result. That comes with experience. The more that you work with bringing visual effects into it, the more well-versed the director becomes with the language. A lot of the time, they don’t want to know that level of detail. They only want to know that you have their back and you can do this for them.”

Barbie Land was an artificial environment, but the same photorealistic principles applied. “We captured everything so we could recreate whether it be the actual stages themselves or miniature models, and often we embellished them further on what was there to ground it, make it feel like it belongs in that world and had a cohesive, insistent aesthetic running through it.”

“Personally, when the conditions and the type of effect to be achieved allow me to use the practical, I jump at it,” remarks Mathieu Dupuis, VFX Supervisor at Rodeo FX. “We’re fortunate to have a studio at our disposal here at Rodeo FX, and I can’t imagine executing some of the large-scale effects on our recent projects without the support of practical effects. I’m not just talking about blood splattering on a greenscreen or crowd duplication, which, by the way, is always highly effective. Being able to rebuild scaled-down set pieces [painted green] to capture the precise interaction of, say, glass breaking on a table or to recreate an organic dream effect by filming floating debris in macro within an aquarium allows us to achieve quick, cost-effective results that are both efficient and innovative. There’s also the advantage of avoiding endless discussions with clients by capturing how a flag moves in the wind or how a plate shatters. There’s no need to imagine or convince anyone how these elements would behave because we’ve captured them in real life. There’s nothing more authentic than reality, right!?”

“It’s a stylistic thing. When we’re talking about the marriage of practical, what’s shot on set and where we come into play, either augmenting or completely replacing it in some cases, there is always this desire to maintain this visual characteristic that is inherent in practical shooting.”

—Robin Hackl, Co-Founder and Visual Effects Supervisor, Image Engine

By TREVOR HOGG

Even though the term ‘retro-future’ is nothing new, Swedish artist and musician Simon Stålenhag has been able to create a unique vision where discarded technology is scattered across vast landscapes situated in an alternative universe. His illustrations and accompanying narratives have captured the attention of filmmakers such as Nathaniel Halpern with Tales from the Loop for Prime Video and siblings Anthony and Joe Russo with The Electric State for Netflix, which they spent seven years developing. The latter revolves around the aftermath of a robot uprising where an orphaned teenager goes on a cross-country journey to find her lost brother. The human cast consists of Millie Bobby Brown, Chris Pratt, Stanley Tucci, Giancarlo Esposito, Ke Huy Quan and Jason Alexander. At the same time, Woody Harrelson, Anthony Mackie, Brian Cox, Alan Tudyk, Hank Azaria and Colman Domingo voiced the many mechanical co-stars.

OPPOSITE TOP TO BOTTOM: A complex sequence to execute was 20-foot Herman carrying a Volkswagen campervan containing Michelle,

The drones went through a major design change where the heads resemble neurocasters and had a screen that projected the face of the pilot.

Inspiring the imagery was Swedish artist-musician Simon Stålenhag, who has developed a retro-tech and alternative-world visual aesthetic.

“Simon Stålenhag’s original artwork electrified us,” producer/director Anthony Russo remarks. “It’s this strange feeling of familiarity in what he’s drawn and also strangeness. It’s a historical period that you can recognize whether or not you lived through it, but it’s not exactly what that period was.” There are elements from the 1990s. “The story is a parable,” producer/director Joe Russo notes. “It’s less about nostalgia than it is about the idea that technology could have developed faster and maybe deviated humanity from its main path. That was the fun part, thinking through those little elements that allowed us to create a new interpretation of the 1990s.” The story taps into the present-day fears of AI usurping its human creators. “Part of what we want to do is explore the fact that you can find humanity in technology and inhumanity in humans,” states Anthony Russo. “We have both of those experiences in our lives and world. Joe and I are technologists. We use technology throughout our lives to tell stories, but at the same time, we all know that technology is powerful and can cause problems, whether the nuclear bomb or social media. It’s us recognizing the complex relationship that we all have with technology as human beings.”

Determining how the robots would be created and executed was a major topic of discussion as they are 80% of the cast. “This is true for all of our projects when you’re dealing with a fantasy world that needs to be created from whole cloth that doesn’t exist,” states Anthony Russo. “The methodology used to create that is always a question. It is driven by how Joe and I see the movie. What are we trying to achieve? What do we want to do with the scenes? How do we want to stage things? How do we want the actors to interact with other characters in the movie who may not be played on the screen by physical actors? These all become questions in terms of what is the right methodology to use to create the film. We were involved with our Visual Effects Supervisor, Matthew Butler, to determine the proper methodologies. Because there are so many characters in it that don’t exist in reality, we had to rely upon visual effects to create a huge portion of the film.”

Dennis Gassner and Richard Johnson, who consulted robotic companies, shared production designer duties. “I was in charge of making a real walking and moving Cosmo,” states Production Designer Richard Johnson. “I had to go to every robotics company in the world that would listen to me. The immediate refrain was, ‘The head is a deal-breaker. It throws him immediately out of balance.’ If you look at all of the real robots that are popping up on the Internet today, they all have tiny heads. The other limiting factor was height. They were all in the zone of 5’ 7” or less. I now know more about real robots than I ever expected to know my entire life!” The robots had to be distinct in their own right. “We felt the robots with more screen time needed to be more iconic-looking, so we looked at iconic products or services or things from the last two, three or four decades. Mr. Peanut is a very well-known brand name. We thought, ‘He could be a robot. Baseball player. Very iconic. Be a robot.’”

Approximately 2,000 visual effects shots are in the final film, with Digital Domain and ILM being the main vendors, followed by Storm Studios, One of Us, Lola VFX and an in-house team.

“We’re not in a world of magic,” observes Visual Effects Supervisor Matthew Butler. “The idea is that these robots were often designed to make us feel comfortable about them serving us. I fought tooth and nail to put in little piston rod push-pulls and things that could justify that Cosmo could actually move. If we designed a particular ball joint or cylindrical actuator or pitch actuator, we made sure that the motion of these robots was restricted to what that could do.” Artistic license was taken with the original design by Simon Stålenhag. “I wanted Cosmo’s eyes to have some emotion. Rather than be just a painted pupil as in the book, we made a smoked glass lens for the pupil that you can see behind it that there is a camera. Artistically, we let those eyes have a gratuitous green light to them. Now, you have a twinkle of an eye and can get emotion into that eye. That was another tricky thing. It was about getting enough emotion into them without breaking the silhouette of the design of the robots that we needed to adhere to – that was hard,” Butler says. Keats’s (Chris Pratt) robot sidekick, Herman, comes in different sizes. “It was always the Russian doll thing where the one size smaller fits into the one size bigger,” Butler remarks. “We did honor the style and personality but not at the expense of physics. Most of the movie is four-foot Herman with Anthony Mackie’s [vocal] and Martin Klebba’s [mocap] performances. It’s also coming out of the script. He’s this sarcastic character. I love his personality, and it came through extremely well. Herman borrowed the power extension cable for his devices and forgot to return it. Meanwhile, all of Keats’ food in the fridge has gone bad. Herman has messed up, and he’s like a guilty teenager shuffling around on the couch, deliberately avoiding eye contact with Keats because he’s this busted little kid. That character is amazing, and it carries through the movie well. Chris Pratt was perfect for this, and it works so well for the two of them. It’s most people’s favorite relationship in the movie.”

Following the example of animated features, Lead Storyboard Artist Darrin Denlinger storyboarded and assembled the entire film into an animatic. “I had a version of the movie before we started shooting, or close to when we started shooting, that was a layout,” states Jeffrey Ford, Executive Producer and Editor. “It had all the storyboards cut together in sequence with sound, music and subtitles instead of dialogue. I used that as a layout to guide us throughout production so we knew roughly how scenes would play out. Of course, things change on the day; actors re-block the scenes.” The amount of footage captured surpassed Avengers: Infinity War and Avengers: Endgame. Ford explains, “This film had to be made multiple times because when you deal with animated characters carrying this much weight dramatically, those performances are created in passes. You may shoot a proxy pass where Millie interacts with Cosmo, and it’s a motion capture actor. Then you may shoot a pass where she is interacting with nothing. We might go back and shoot that same performance on the mocap stage multiple times. We may end up with various iterations of those visual effects as they come in over the months. An enormous number of iterations go on, and when you do that, you generate enormous amounts of footage.”

Atlanta doubled as the American Southwest. “Tumbleweeds and sagebrush, the basic things a person sees in the Southwest, do not exist in Atlanta or anywhere near there,” Johnson notes. “We had to fill several trucks with all that stuff and bring it into Atlanta.”

Parts of Atlanta have not changed for years. “Through the camera’s eye, if you wanted to say that it was the 1970s or 1980s, it was easy because nobody had built modern or contemporary homes in those neighborhoods for whatever reason. The same thing happened when selecting the locations for the battles in the city. If you put in the right period of car, voilà! You’re in that era.” A question that had to be answered was where exiled robots responsible for the uprising would live. “The X was in the script and is a large area in the Southwest. Where would these guys go? A country club? A department store? Football stadium? We landed on a shopping mall. It dawned on me one day that the only reason they would go to a place like this is to recharge themselves or maybe for repairs. That’s why in the shopping mall, you see a lot of wires, batteries and charging stations.”

Along with the final battle, which is almost entirely synthetic apart from Keats’s interaction with the grass, a virtual meeting occurs between antagonists Ethan Skate (Stanley Tucci) and Colonel Bradbury (Giancarlo Esposito). “It’s where Ethan pitches the Colonel to go into the X to get Cosmo back,” Butler recalls. “Bradbury puts on the neurocaster and teleports into this virtual environment that takes place in this beautiful mountain lake scene. Skate is standing in the middle of the lake while Bradbury is on terra firma and gently steps out to have a conversation with him. Production didn’t go to Norway. It’s just a couple of guys and girls from Storm Studios [located in Oslo] with some backpacks hiking out into some beautiful locations in the summer for reference. We had a shallow pool with plexiglass an inch under the surface so we could have Stanley and Giancarlo stand in the water. The only thing that we kept was the little bit of interaction of water locally right at their feet while the rest was digital water. The ripples needed to come out and propagate out into the lake as it would for real. It’s absolutely stunning work.”

BOTTOM TWO: It was imperative for believability that Herman’s movements be grounded in real physics.

The hardest shot to execute was when Cosmo, Herman, Keats and Michelle (Millie Bobby Brown) have been captured and are escorted into the mall. “They walk into this forecourt and finally see that the whole mall is filled with robots,” Ford recalls. “That shot was incredibly hard and took us months to do. The only things in that shot are Chris Pratt, Millie Bobby Brown and an empty mall. I drew maps of each frame, and we did a whole progression. We talked about where the different robots were, what they were doing, what their day was like, where they were going next and why they were moving in a certain way. We wanted it to feel like a real city. If you were to block out extras, those people would all come up with all of their mini-stories, and they would work it out. But we didn’t have that. We had to figure it all out as animators. It was fun but brutal. Digital Domain did an incredible job on it. I hope people will stop and rewind the shot because it’s beautifully detailed and feels completely real.”

WINNER OF THE 2024 FOLIO: OZZIE AWARD

Best Cover Design VFXV Winter 2024 Issue (Association/Nonprofit/Professional/Membership)

HONORABLE MENTIONS FOR THE 2024 FOLIO: EDDIE & OZZIE AWARD

Best Cover Design VFXV Fall 2023 Issue and Best Full Issue for Fall 2023 Issue

The Folio: Awards are one of the most prestigious national awards programs in the publishing industry. Congratulations to the VFXV creative, editorial and publishing team!

Thank you, Folio: judges, for making VFXV a multiple Folio: Award winner.

By CHRIS McGOWAN

“As far as VFX education, we are constantly seeing new pieces of software and technology being implemented into the pipeline. That is something we are always grappling with when it comes to learning,” says Professor Flip Phillips of The School of Film and Animation at Rochester Institute of Technology (RIT). Each VFX and animation school takes a different approach to implementing new tech such as virtual production and AI.

OPPOSITE