HOOKE

Welcome to the 52nd edition of Hooke magazine, where you will be able to explore an array of scientific research, ranging from reasons pugs should be banned to an in-depth guide into making your own lightsaber! We hope that this edition is an exciting read for all of you, as it was for us while editing.

The joy of Hooke is that it is a magazine for scientists and non-scientists alike. We have preserved this admirable quality by ensuring every article is accessible and interesting to even the non-scientific mind. And, reading about scientific application in the real world, as many of our articles recount, would do nothing but enhance work in the science curriculum.

As the more observant among you may have noticed, this is a slightly slimmer version of Hooke than previous editions. This is because we are aiming to release another edition in Lent term with some more exciting articles, so make sure to keep your eyes peeled!

We would like to thank the authors of these remarkable articles and our loyal readers. We hope you all enjoy this edition! We extend our greatest thanks to Mr Coward, who has made this publication possible through all his encouragement and advice, ultimately allowing us to bring Hooke to life.

With great thanks to the Hooke editorial team:

Yuhong Choi

Aryan Daga

Clare Jin

Lauren Lendrevie

Sandro Levi

Mark Morris

Heather Morrison

Esha Patel

Anisha Sawhney

Caitlyn Tan

Esha Patel


When deciding on the breed of their lifelong companion, owners don’t often consider the health of the breed. It is so common to walk down the street and see dogs such as Pugs, Boxers, Frenchies or King Charles Spaniels without even pausing to notice the shape of their heads. These brachycephalic dogs, with their anatomically challenged airways, often experience breathing difficulties. One common respiratory problem associated with these breeds is Brachycephalic Obstructive Airway Syndrome (BOAS). It is easy to disregard these health concerns when we see celebrities such as David Beckham and Lady Gaga with their short-muzzled, cross-bred brachycephalic dogs. However, when animal welfare and wellbeing are being compromised by in-breeding, whether for aesthetic reasons, personal desires or ignorance, where do we draw the line?

The popularity of brachycephalic dogs reached an all-time high following the lockdown in 2020. Owners often underestimate the severity of the health problems their dogs face, prioritising aesthetics over the welfare of their pet. However, whilst dogs with wide eyes and rounded faces may appear more physically attractive to the public, their narrowed nostrils and elongated soft palate obstruct the passage of air through the throat to the lungs, making it difficult to breathe. During sleep, these dogs can experience apnoea (brief periods of halted breathing), reducing the oxygen content of the blood and thus having a serious impact on their overall health. Likewise, in warmer seasons, owners are often amused at the sounds their pets make, failing to realise these are the sounds of a compromised respiratory system.

To contextualise this risk, a recent study on the average lifespan of dogs has indicated that brachycephalic breeds have a shorter lifespan than the average dog, with French bulldogs having the shortest life expectancy of 4.5 years. Given that the average life expectancy for dogs is 11.2 years, the impacts of brachycephaly are shocking. Likewise, English bulldogs, with a 7.4 year life expectancy, and American bulldogs, with an average lifespan of 7.8 years, face similar issues with regard to breathing, exercising and risk of infections. The significantly shorter lifespan indicates the rapid onset of health problems, preventing dogs from living up to their full, long-lasting potential. Despite this, a study conducted by the American Kennel Club showed that breed popularity does not align with longevity and health, meaning that the population continues to ignore these risks and adore these flat-faced breeds.

Expanding the One Health scheme, which aims to achieve optimal health outcomes for humans and animals, vets could also work closely with other professions such as breeders and geneticists to help promote animal welfare. For instance, analysing the genome of each breed would help elucidate the risks of crossing two breeds that are closely related, and thus deter breeders from compromising the health of the breed. In Norway, the crossbreeding of English bulldogs and Cavalier King Charles Spaniels was banned after evaluating the neurological damage caused by the development of fluid-filled cysts in the spinal cord, known as syringomyelia. The collaboration between neurobiologists and vets helped to uncover this issue, demonstrating the power of different professions coming together.

However, whilst legislation focuses on preventing harmful breeding, it does not work with breeders but merely limits their capabilities, making it more likely that breeding will take place illegally or that already crossbred animals will be imported from other countries. In addition, it fails to educate the public about the health risks associated with other brachycephalic breeds, creating the misconception that only these two breeds suffer from adverse health risks and that all other breeds are perfectly healthy. Thus, instead of imposing bans, a more effective method would be to collaborate to relieve animals of their pain.


It is undeniable that brachycephalic dogs make wonderful and affectionate companions, and their existence should not be ruled out, but rather more carefully considered. Brachycephalic syndrome is a ‘man-made’ disorder that can be controlled, with the potential of advancing animal welfare and ensuring dogs can live their happiest and healthiest life to the fullest.

With increased understanding of the detrimental effects that BOAS can have, every party (owners, breeders, veterinarians, animal welfare organisations and individuals alike) should take responsibility and enact changes to promote animal health. When deciding on the breed of their lifelong companion, owners should prioritise the health of their pet over aesthetics.


Crystal Tian


Next-generation sequencing (NGS) is a technology used to determine the order of nucleotides in whole genomes or smaller, targeted regions of DNA or RNA. While older methods such as Sanger sequencing required over a decade to deliver a final draft of the human genome, NGS can sequence an entire human genome in only one day. NGS was first introduced for commercial use in 2005 and was initially called ‘massively parallel sequencing’, as it allowed many DNA strands to be sequenced at once, whereas Sanger sequencing could only sequence strands one at a time using capillary electrophoresis.

The sample must first be used to create a sequencing ‘library’, where the DNA is processed into short double-stranded fragments (DNA fragmentation). This can be done through physical shearing, enzyme digestion, or PCR-based amplification of certain genetic regions. These DNA fragments are ligated to technology-specific adaptor sequences, which also carry a unique ‘barcode’. This allows each sample to be identified by a unique DNA sequence, permitting multiple samples to be mixed and sequenced at the same time. Apart from fragment libraries, there are two other methods of library preparation: paired-end libraries and mate-pair libraries. Typical DNA sequencing only occurs in one direction, while paired-end libraries allow the DNA to be sequenced from both ends, as they have adaptor tags on both sides of the DNA insert. Mate-pair libraries involve larger DNA inserts, and the sequencing produces two reads that are distal to each other and in opposite orientations. This is better suited to detecting large structural variants and identifying complex genomic rearrangements.
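To make the barcode idea concrete, the following is a minimal sketch of how pooled reads could be sorted back into their original samples. The barcode sequences, sample names and reads are all hypothetical, and the sketch assumes the barcode sits at the very start of each read with no sequencing errors; real instruments and kits handle this in their own ways.

```python
from collections import defaultdict

# Hypothetical barcodes: real kits define their own adaptor and barcode sequences.
BARCODES = {"ACGT": "sample_A", "TGCA": "sample_B"}


def demultiplex(reads, barcodes=BARCODES):
    """Group pooled reads by the barcode found at the start of each read."""
    by_sample = defaultdict(list)
    for read in reads:
        for barcode, sample in barcodes.items():
            if read.startswith(barcode):
                # Strip the barcode so that only the DNA insert is kept.
                by_sample[sample].append(read[len(barcode):])
                break
        else:
            # No known barcode matched this read.
            by_sample["unassigned"].append(read)
    return dict(by_sample)


# Made-up reads from two samples that were mixed and sequenced together.
pooled = ["ACGTGGGTTT", "TGCAAAACCC", "ACGTCCCAAA", "NNNNGGGG"]
print(demultiplex(pooled))
```

Because every read carries its sample's barcode, the mixing step costs nothing in terms of traceability, which is what makes sequencing many samples in one run practical.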

Before sequencing, the library produced in the first step must be attached to a solid surface, and clonal amplification is applied to increase the signal that can be detected from each target during sequencing. During clonal amplification, each DNA molecule in the library is bound to a bead or flow cell and is then PCR amplified to create a set of identical clones of this unique DNA molecule.

Since each library fragment carries an adaptor that can be identified by its unique DNA sequence, all the fragments are sequenced at the same time using the ‘sequencing by synthesis’ method, where individual bases are read as they are added to a growing polymerised strand. Each NGS technology is unique to its producer; however, they all use the ‘sequencing by synthesis’ method.

Each experiment then generates huge quantities of complex data containing short DNA reads, which are analysed in three main steps. In primary analysis, the raw signals collected by the instrument detectors during each sequencing cycle are processed into digitised data, or base calls; the output is a set of files containing base calls assembled into sequencing reads, together with their associated quality scores. Secondary analysis then aligns the reads, and tertiary analysis mainly involves interpreting the results and extracting useful information from the data.
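As an illustration of what a quality score means, the sketch below assumes the widely used Phred scale, in which a score Q corresponds to an error probability of 10^(-Q/10), and the common FASTQ convention of storing each score as a single character with an ASCII offset of 33. Neither convention is stated in this article, so treat both as assumptions; the function names are purely illustrative.

```python
def decode_quality_string(quality, offset=33):
    """Convert a string of quality characters into integer Phred scores.

    Assumes the common FASTQ encoding, where score = ASCII code - 33.
    """
    return [ord(character) - offset for character in quality]


def phred_to_error_probability(score):
    """Probability that a base call with this Phred score is wrong."""
    return 10 ** (-score / 10)


# A made-up quality string for a three-base read.
scores = decode_quality_string("I5!")
for score in scores:
    print(score, phred_to_error_probability(score))
```

On this scale, each increase of 10 in the score makes a wrong base call ten times less likely, which is why secondary analysis can weigh high-quality bases more heavily when aligning reads.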

Cancer is caused by somatically acquired mutations and is hence a disease of the genome. Before NGS, capillary-based cancer sequencing had been in use for over a decade, but such investigations did not result in a huge amount of pivotal research because they had insufficient samples and a limited number of candidate genes. By combining NGS with cancer research, cancer genomes can be studied in their entirety with the aim of identifying mutations in tumours that can be treated using mutation-specific drugs. Currently, there are five key cancer sequencing methods: cancer whole-genome sequencing, cancer exome sequencing, cancer RNA sequencing, targeted sequencing, and circulating tumour DNA (ctDNA) sequencing. Since NGS can assess several genes in a single assay, multiple tests are not required to identify the causative mutation, making it a more efficient and advantageous method for cancer researchers.

Whole genome sequencing (WGS) provides a high-resolution, base-by-base view of entire genomes. It is mainly used for identifying causative variants and for novel genome assembly by detecting single nucleotide variants, insertions and deletions, copy number changes, and large structural variants. The advantages of WGS are that it can deliver large volumes of data in a small amount of time and that it captures both large and small variants, which can be missed by more focused approaches such as exome sequencing. In the field of cancer research, cancer WGS is widely used to compare tumours to matched normal samples and discover novel cancer driver mutations. Furthermore, cancer genomes usually contain unpredictable numbers of point mutations and fusions. Many of these alterations will be novel and may lie outside coding regions, so cancer WGS offers the most comprehensive method of identifying the variants. Targeted methods, like exome sequencing, would miss certain variants such as those outside the coding region.

Targeted sequencing involves sequencing only the areas of interest to the researcher, such as disease-associated genes or genomic hotspots. This method produces smaller volumes of data, which allows the researcher to focus on their specific regions of interest and is better for detecting rare alleles.

Targeted sequencing NGS panels are the most flexible option, as they can be designed to sequence any gene and can also perform RNA transcript analysis. By generating smaller data sets, the data becomes more manageable and comprehensible for researchers, decreasing the workload. Overall, this is the fastest and most cost-effective NGS method. It is most often used in clinical sequencing, disease-specific research projects, and oncology.

Cancer exome sequencing is especially useful in finding information about the coding mutations that promote tumour progression. Exome sequencing consists of two main parts: target enrichment and sequencing. Target enrichment is the process that selects and captures the exome from DNA samples, usually through array-based exome enrichment (separating the exome via microarrays) or in-solution capture (binding of the exome to magnetic beads). Exome sequencing is mainly used within disease-specific research, such as cancer, and clinical studies. Furthermore, NGS allows DNA strands to be combined, amplified, and detected at the same time, making it much more efficient than previous methods, such as Sanger sequencing, which did not contribute as much as NGS to biological and clinical research. An advantage of cancer exome sequencing is that it allows cancer researchers to inspect the coding regions specifically, which are the sections that often contain the mutations that affect tumour progression. By analysing these coding regions, researchers can identify and prevent the mutations that contribute to tumour progression.

Circulating tumour DNA (ctDNA) can act as a non-invasive biomarker, which makes it a potential alternative to invasive tissue biopsies. Liquid biopsies can be used to detect ctDNA from tumours and could be used in the future as a non-invasive approach to monitoring treatment response and identifying patients who qualify for therapy. ctDNA arises from the rapid turnover of cancer cells due to apoptosis and necrosis, which causes the constant release of tumour-derived nucleic acids into the circulation. The preferred source of ctDNA is the plasma; however, it can also be found in urine, saliva, pleural effusions, and cerebrospinal fluid.

NGS also has applications within microbiology research, complex disease research, and reproductive health, for the same reasons as within oncology. NGS’s speed, throughput, accuracy and ability to analyse thousands of genes at once in multiple samples have completely changed the field of genetic analysis and have opened up a multitude of new opportunities within cancer research. With technology such as NGS, the idea of curing cancer has become not only achievable, but likely to come in the near future.


Julie Jung

Lewis Carroll’s famous line from the opening scene of ‘Alice Through the Looking Glass’ explores the idea of chirality. Chirality is the property of an object that cannot be superimposed on its mirror image. It can be found everywhere in life, from our hands and biological molecules to galaxies in astrophysics.

As stereochemistry was first discovered by Louis Pasteur in 1848, shortly before Lewis Carroll published his book, chemists and linguists suspect this line was the author’s subtle reference to stereochemistry. The chiral phenomenon was unexpectedly discovered when Pasteur was examining the paratartrate salts of tartaric acid, formed during wine fermentation. He noticed that the salts were not identical but were stereoisomers, as they had the same molecular formula and functional groups but a different arrangement of groups in space. A molecule and its mirror image are referred to as enantiomers or optical isomers, which are separated into D for dextrorotatory and L for laevorotatory (right and left in Latin), due to their relative configuration.

If Lewis Carroll had intended to refer to stereochemistry, the molecules in Wonderland would have the opposite chirality to those in the natural world, as molecules labelled as D would become L and vice versa. If the lactose molecules switch from D to L handedness, they become indigestible to Alice’s dextrorotatory enzymes. This is a crucial issue in the pharmaceutical industry, as the two enantiomers of a chiral molecule contain the same atoms but may have very different pharmacological effects: one may be inactive or even toxic.

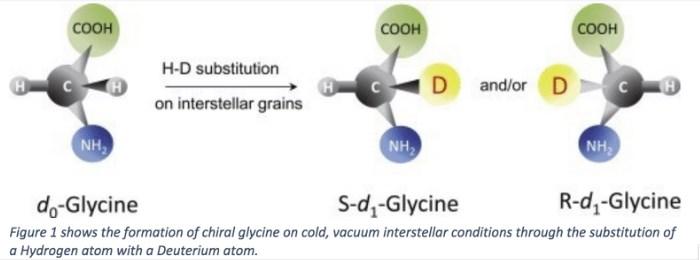

In 2004, NASA’s Stardust spacecraft retrieved 16 amino acids, such as glycine, alanine and valine, from samples taken from the interstellar clouds surrounding a comet. All 16 amino acids, except glycine, were chiral. To investigate this further, scientist Yasuhiro Oba recreated the specific conditions of interstellar clouds: a vacuum at a temperature of 12 kelvin.

The carbon atom is the chiral centre: a tetrahedral atom that has four different atoms or groups bonded to it, forming a chiral molecule. On the left, the carbon atom is not a chiral centre, as two identical hydrogen atoms are bonded to it, forming a symmetrical structure. However, on the right side of the figure, one hydrogen is substituted by the heavier deuterium atom, which forms stronger, shorter bonds, and the symmetry is broken.
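The rule described above (four different groups around a tetrahedral atom) can be written as a short check. This is a deliberately simplified sketch: the function name is illustrative, each substituent is represented only by a text label, and real stereochemical analysis compares the full structure of each group rather than its label.

```python
def is_chiral_centre(substituents):
    """Return True if a tetrahedral atom carries four mutually different groups."""
    return len(substituents) == 4 and len(set(substituents)) == 4


# Groups attached to the central carbon of glycine: two identical hydrogens.
glycine = ["H", "H", "NH2", "COOH"]

# The same carbon after one hydrogen (H) is replaced by deuterium (D).
deuterated_glycine = ["H", "D", "NH2", "COOH"]

print(is_chiral_centre(glycine))
print(is_chiral_centre(deuterated_glycine))
```

Swapping a single hydrogen for deuterium is enough to make all four groups distinct, which is why ordinary glycine is not chiral while its deuterated form is.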

This experiment provides evidence of how chirality came to be found on Earth and can help us explain the origins of chirality: given that chiral glycine can form in an interstellar, primordial environment, this may be the source of chirality as interstellar clouds evolve into a planetary system like Earth’s. Therefore, the arrival on early Earth of interstellar influences, such as radioactive decay and polarised light, via comets and meteors may have given rise to chirality.

Nevertheless, there is no direct evidence to verify that the chiral amino acids first arose in an interplanetary or interstellar environment. In addition, Dr Marcelo Gleiser, a theoretical physicist at Dartmouth College, suggests that ‘such a small asymmetry in the universe is not enough to create chirality on Earth’, meaning that these astrophysical interactions between biotic factors on Earth and abiotic factors in space are very weak and short-range. Hence, the origin of chirality remains a highly controversial topic amongst scientists.

Chirality is also relevant in the bio-evolutionary process of mutations, breaking racemic symmetry in proteins. The term racemic symmetry denotes the presence of equimolar amounts of the dextrorotatory and laevorotatory enantiomers of a compound. By contrast, proteins containing only laevorotatory enantiomers are described as homochiral, or as having a racemic asymmetry.

If all proteins found are left-handed, how, and why, did bio-evolution lead to nature selecting only one enantiomer to construct all living organisms? What is the significance of homochirality in larger biological molecules such as DNA and RNA? Lastly, how does homochirality affect the way our body metabolises racemic drugs? To help us answer these questions, we can compare the stability, structural and functional properties of homochiral and heterochiral proteins. Figure 2 shows the structure of artificial proteins composed of leucine side chains, forming polyleucine. Results from the National Academy of Sciences of the USA show that the average hydrogen bond energy in the artificial polyleucine L/D mixture is half of the bond energy in the structure containing purely L or D, decreasing from 60% to 30%.

Therefore, the heterochiral polyleucine structure is less stable than the homochiral ones. Homochirality is crucial in larger biological molecules like DNA, as it determines the strength of the hydrogen bonds and hence of the double helix and globule structures that stabilise the molecule. Amongst early proteins, a random mutation caused an imbalance between L and D enantiomers, breaking the symmetry. The structures with a more stable hydrophobic interior would have been more successful in producing copies of themselves: as the asymmetry in chiral structures increases, their stability also increases. Therefore, the chirality of biological molecules is crucial in defining their function.


The chiral environments in the body, such as enzymes, proteins, and receptors, will interact differently with each enantiomer in a chiral drug. Since enzymes and proteins naturally exist as homochiral structures, only one of the drug enantiomers may produce an active response. A serious problem arises when a drug enantiomer binds with a receptor elsewhere in the body where it is unwanted, leading to a toxic effect. This was the case in the 1950s with thalidomide. Thalidomide was a widely used drug for the treatment of nausea in pregnant women, developed in Germany by Chemie Grünenthal (Florence, 1960). It took until 1961 for doctors to identify the link between thalidomide and birth defects, such as the shortening and absence of limbs, malformation of hands, ears, and eyes, and neurological damage, after more than 10,000 babies were affected by the S enantiomer of the drug. The thalidomide tragedy marked an important turning point in toxicity monitoring in the drug development industry, and also led to the emergence of a new specialist field, ‘chiral pharmacology’, and the evolution of stereochemical nomenclature.

However, 50% of drugs nowadays are still used as racemates, with their side-effects, due to the difficulty and high cost of extraction: more research needs to be done on creating a cost-effective, precise technique that will produce a pure enantiomer drug. In addition, as some chiral molecules can switch from one enantiomer to another, manufacturers cannot be certain that the behaviour of a chiral drug in vitro is consistent across living patients. Nevertheless, chirality can be beneficial in the pharmaceutical industry. There are drugs such as propranolol (a beta-blocker) in which a racemate is more useful than a single isomer because of the complementary effects of the two enantiomers. Therefore, all drug dictionaries and pharmacopoeias must include information on the characteristics, properties, and effects of the chiral form of the drug, along with the toxicological effects. It is important to provide this information to health professionals in order to help them find the optimal treatment and the correct therapeutic control.

The battery has, for many years, played a key part in a society that relies heavily on portable digital technology and one that is moving towards a greener future. One of the biggest changes that the government and industries are working towards is in the transport sector – electric vehicles (EVs) powered by batteries are becoming more and more widespread, soon expected to replace traditional internal combustion engine vehicles.

The first battery, the voltaic pile, was introduced in 1800 by Alessandro Volta. This battery consisted of copper and zinc disks separated by cloth or cardboard soaked in brine. Since then, the battery has evolved into a lightweight and portable piece of technology with countless applications, including in the increasingly popular electric vehicles.

The function of a battery is to store and convert chemical energy into electrical energy to be used as a source of power in an electric circuit.

A simple cell is made up of a cathode, an anode and an electrolyte. Batteries discharge when redox reactions (reactions where both reduction and oxidation take place simultaneously) occur spontaneously and cause energy to be released. During discharge, the positive electrode is reduced (accepts electrons) while the negative electrode is oxidised (loses electrons). The electrolyte contains mobile ions, allowing charge to flow through it. Electrons flow through the external device from the negative electrode to the positive electrode, providing electrical energy to the device. Inside the cell, cations that were intercalated in the negative electrode move through the electrolyte to the positive electrode. This is reversed during charging.

Although the introduction of electric vehicles is, overall, a positive change for the environmental impact of the transport industry, the production of batteries does have some negative implications. One of the biggest impacts comes from the mining of cathode materials such as lithium, which either requires large quantities of water or produces high CO2 emissions.

Additionally, nickel and cobalt mining have negative impacts: nickel mining causes deforestation and endangers wildlife, while countries that mine cobalt often use child labour and have harmful working conditions.

In 2019, John B. Goodenough shared the Nobel Prize in Chemistry for the development of the lithium-ion battery, which is still the most commonly available type of battery on the market today. This is due to lithium’s high charge density, which makes the battery very energy dense, both in terms of volume and weight. Common materials for the electrodes include nickel and cobalt for the cathode and graphite for the anode.

Due to government funding and competition between manufacturers in the transport industry, the development of batteries for use in electric vehicles is being accelerated, and much research is being done in universities and industry. Batteries are being developed to be optimised in many ways, including:

Increasing the energy density of a battery means that the vehicle can travel further with the same volume or weight of battery, increasing the distance that cars can travel between charges, or travel the same distance with a smaller battery, making the vehicle lighter. This reduces cost (as less charging is required), weight and volume, and environmental impact (as less material is needed), and makes electric vehicles more competitive with existing fuel-powered vehicles.
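The trade-off between energy density, battery mass and range can be sketched with some simple arithmetic. All of the numbers below are hypothetical and chosen only to keep the sums easy, and the sketch assumes the vehicle uses the same energy per kilometre regardless of battery mass, which in reality it would not.

```python
def range_km(energy_density_wh_per_kg, battery_mass_kg, consumption_wh_per_km):
    """Distance the vehicle can travel on one full charge."""
    stored_energy_wh = energy_density_wh_per_kg * battery_mass_kg
    return stored_energy_wh / consumption_wh_per_km


def mass_for_range_kg(target_range_km, energy_density_wh_per_kg, consumption_wh_per_km):
    """Battery mass needed to reach a target range."""
    return target_range_km * consumption_wh_per_km / energy_density_wh_per_kg


# Hypothetical figures, not measurements of any real vehicle.
baseline_range = range_km(200, 400, 200)
improved_range = range_km(250, 400, 200)
lighter_battery = mass_for_range_kg(baseline_range, 250, 200)

print("Range with baseline battery (km):", baseline_range)
print("Range with denser battery of the same mass (km):", improved_range)
print("Mass of denser battery for the baseline range (kg):", lighter_battery)
```

Under these assumptions, a denser battery either extends the range at the same mass or delivers the same range with less material, which are the two options described above.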

As mentioned, the positive electrode is the most expensive and environmentally damaging part of the battery. To reduce this, the crystal structure of the electrode is being changed, allowing it to contain a higher proportion of manganese instead of cobalt or nickel. For example, disordered rock salts are being developed: lithium transition metal oxide cathodes with a crystalline rock salt structure but a disordered arrangement of lithium and transition metal on the cation lattice.

As the widespread use of electric vehicles is a new concept, the life cycle of the first generation of electric vehicles is only now beginning to come to an end, meaning there is a huge demand for battery disposal. Currently, China has the capability to recycle 120 thousand tons of batteries per year, but recycling is expensive, and manufacturers prefer to source virgin materials. Because of this, a lot of research is going into the development of recycling techniques, and different ways of separating the materials in the battery are being developed. Cheap battery recycling that can keep pace with the number of vehicles being disposed of would mean that less landfill space and less mining for raw materials would be required.

Increasing the longevity of the batteries would reduce cost and environmental impact. When a battery nears the end of its life, each cycle is shorter, so journeys between charging are shorter. This is caused by battery degradation. Three main factors contribute to this:

- SEI layer growth: this layer develops on Li metal electrodes (the negative electrode) and irreversibly breaks down the electrolyte, causing electrolyte loss. The SEI layer includes lithium fluoride, lithium carbonate, lithium methyl carbonate, lithium ethylene dicarbonate and lithium oxide. The SEI layer forms on the first cycle of the cell but then stops further reactions between the electrode and electrolyte. However, as the cell ages, the thickness of the SEI increases, resulting in further loss of electrolyte and a loss of capacity in the cell.

- Lithium plating: this is a side reaction where lithium metal forms on top of the surface of the negative electrode instead of intercalating into it. The plated lithium can form SEI (described above). Another consequence is the formation of dendrites, which can cause an internal short circuit if the separator (which separates the electrodes) is punctured.

- Structural change or decomposition of the positive electrode: in an NMC battery, each of the cations nickel, manganese and cobalt plays a role in the decomposition and structural change of the positive electrode. The consequence of this is capacity loss, leading to a shorter battery life.

The degradation of batteries can be decreased by using different compositions, structures and materials in the electrodes. However, since there is a huge range of possibilities, researchers model the degradation using differential equations and analyse different batteries using Python. This means that fewer electrodes have to be assembled and tested, helping save time and resources.
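To give a flavour of this kind of modelling, here is a minimal sketch in Python. It assumes, purely for illustration, that the SEI layer thickens at a rate inversely proportional to its current thickness (dL/dt = k / L), a simple diffusion-limited picture that is not taken from this article. The function names, the rate constant k, the starting thickness and the arbitrary units are all hypothetical.

```python
import math


def simulate_sei_thickness(initial_thickness, k, t_end, dt=0.01):
    """Integrate dL/dt = k / L step by step using the forward Euler method."""
    thickness = initial_thickness
    steps = int(round(t_end / dt))
    for _ in range(steps):
        # The thicker the layer already is, the more slowly it grows.
        thickness += dt * k / thickness
    return thickness


def analytic_sei_thickness(initial_thickness, k, t):
    """Exact solution of the same equation, used to check the simulation."""
    return math.sqrt(initial_thickness ** 2 + 2 * k * t)


# Hypothetical parameters in arbitrary units.
for t in (10, 100):
    simulated = simulate_sei_thickness(1.0, 0.5, t)
    exact = analytic_sei_thickness(1.0, 0.5, t)
    print(t, round(simulated, 3), round(exact, 3))
```

In this toy model the layer keeps growing but ever more slowly, which matches the qualitative description of a layer that forms quickly at first and then thickens gradually as the cell ages. Real degradation models couple several such equations and are fitted to experimental data.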

The reason for the introduction of electric vehicles, and the subsequent phasing out of fuel-powered vehicles, is the massive carbon footprint for which the transport industry is responsible. It makes up 27% of emissions in the UK, the largest footprint of any sector. Electric vehicles eliminate the need to burn fossil fuels inside the engine of a vehicle, which means that in cities and densely populated areas with large amounts of traffic, greenhouse gas emissions are hugely reduced and carcinogenic fumes are eliminated.

Instead, as the name suggests, electric vehicles rely on power from electricity, which comes from a mixture of fossil fuels and renewable sources.

Another part of the government’s plan to go greener is to increase the proportion of renewable sources making up the energy powering the National Grid, so eventually electric vehicles will be completely carbon-free while being used. While some remain sceptical about the success of electric vehicles – due to the fact that they are still more expensive than internal combustion engine vehicles and the charging infrastructure is yet to be implemented to a degree that will be required – it seems likely that in the near future, the collaboration between engineers, scientists and car manufacturers will lead to a greener future on the roads, driven by battery technology.

Raaghav Das


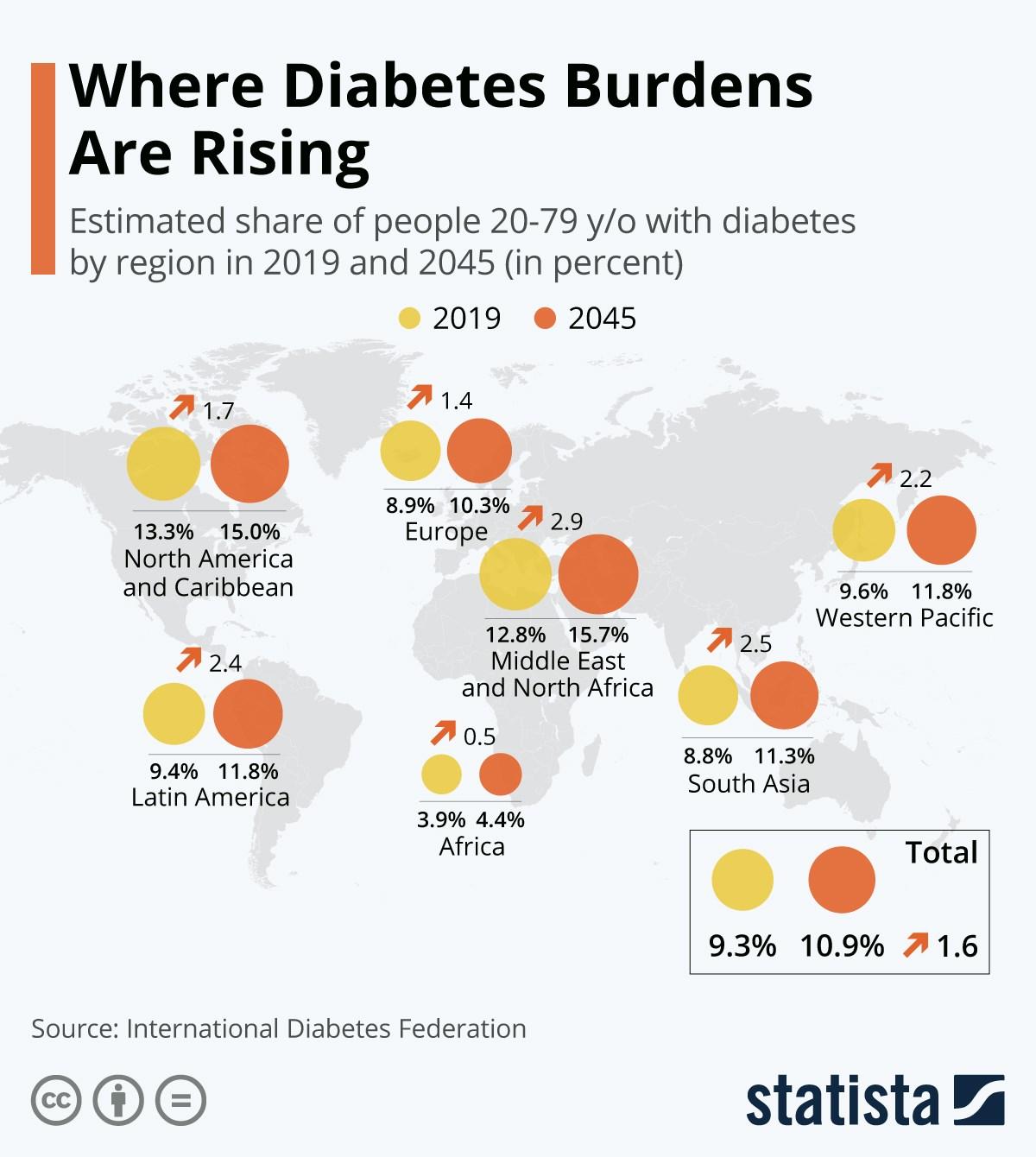

Glands in the pancreas secrete the hormone insulin, which enables glucose to move from the blood into cells, where it is broken down in the presence of oxygen. Diabetes occurs when the human body cannot secrete any insulin or has insufficient insulin, which causes the body to fail to regulate blood sugar levels. This increase in blood sugar levels is the hallmark of diabetes. Diabetes left untreated for a long period can damage nerves, eyes, kidneys and other vital organs. It is therefore a deadly disease and not merely a ‘lifestyle affliction’, as it was considered until a few decades ago. Diabetes can be classified into the following categories. Type 1 diabetes happens when insulin-secreting pancreatic beta cells are destroyed, leading to a complete deficiency in insulin. Type 2 diabetes is more common (making up 90% of cases) and occurs when the body becomes resistant to insulin, so sugar builds up in the blood. The exact aetiology of Type 2 diabetes is not yet fully understood. Gestational diabetes only occurs in pregnant women during the 2nd or 3rd trimester and disappears after the baby is born.

There has been a great push in the last ten years to find innovative ways to prevent, diagnose, treat and manage the disease with its associated complications. Some of the latest research and technologies offer significant promise and give cause for optimism in the fight against the disease.

commonly known as Diabetes, and its 463

For years, hyper/hypoglycaemia (high/low blood glucose levels) has been tested through finger pricks. However, continuous glucose monitoring (CGM) is replacing this traditional method of managing diabetes. One example of such a system uses microneedles under the skin to measure blood glucose, alcohol, and lactate levels every few minutes. Non-invasive glucose monitoring is also an option, whereby a sticky patch is placed onto the skin to monitor blood sugar levels.

Insulin pumps are programmable devices that give a specific dose of insulin at a particular time to the patient. These devices are advanced, storing data about usage patterns. However, they have to be attached to the body and, due to their size, are not very mobility-friendly. Insulin pens are much smaller, more lightweight and even more affordable than insulin pumps. They are designed to carry out the same task as the pump, only they are unable to collect data about usage. Smart pens, on the other hand, connect to a phone using Bluetooth to track insulin doses and timings. They combine the best technological features of an insulin pump while taking the form of a pen. These devices were approved for use in the US in 2017, and now they are widely available to a huge market of diabetics.

Researchers from Novo Nordisk, the University of Michigan Medical School, and Cornell University have created an implant which contains living pancreatic cells. This is similar to a device created by MIT researchers, which keeps the islets alive after a transplant, protecting them against the body’s immune response.

Figure 2: The different risk factors associated with type 2 diabetes. Modern technology and programs have now been coded to consider these in the prevention, management and treatment of type 2 diabetes.

Significant advancements in machine learning have made it possible to develop algorithms that come close to predicting type 2 diabetes, which is important in the development of an efficient and effective prevention plan. Machine learning is a subset of Artificial Intelligence (AI) whereby computer systems seek to discover patterns in existing data to perform classification and prediction tasks on new data. Most of the current machine learning algorithms use datasets and evaluation metrics based on the risk factors associated with type 2 diabetes (Figure 2). Diabetes technology is also continuing to leap forward thanks to smartphones and wearables. The trove of data from the ever-increasing numbers of people using connected devices and blood monitoring is proving useful far beyond just those customers: it is helping to build diabetes prediction and management health apps using AI for people who do not have or cannot afford such devices.
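To make the idea of learning from existing data and then classifying new data more concrete, here is a minimal sketch in Python. It is only an illustration: the eight records are invented (not clinical data), the choice of BMI and age as the two risk factors is an assumption, and logistic regression stands in for whichever algorithms the published studies actually use.

```python
import math

# Invented, illustrative records: (BMI, age in years) -> 1 if labelled
# "high risk", otherwise 0. These are NOT real patient data.
TRAINING_DATA = [
    ((22.0, 25.0), 0),
    ((24.0, 35.0), 0),
    ((23.0, 45.0), 0),
    ((26.0, 30.0), 0),
    ((31.0, 55.0), 1),
    ((34.0, 60.0), 1),
    ((29.0, 65.0), 1),
    ((33.0, 50.0), 1),
]


def scale(features):
    """Roughly centre and scale the two features so training is stable."""
    bmi, age = features
    return ((bmi - 28.0) / 5.0, (age - 45.0) / 15.0)


def sigmoid(z):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(data, learning_rate=0.5, epochs=2000):
    """Fit logistic-regression weights by plain gradient descent."""
    w0, w1, b = 0.0, 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        g0, g1, gb = 0.0, 0.0, 0.0
        for features, label in data:
            x0, x1 = scale(features)
            error = sigmoid(w0 * x0 + w1 * x1 + b) - label
            g0 += error * x0
            g1 += error * x1
            gb += error
        w0 -= learning_rate * g0 / n
        w1 -= learning_rate * g1 / n
        b -= learning_rate * gb / n
    return w0, w1, b


def predict_risk(model, features):
    """Return the model's estimated probability of the 'high risk' label."""
    w0, w1, b = model
    x0, x1 = scale(features)
    return sigmoid(w0 * x0 + w1 * x1 + b)


model = train(TRAINING_DATA)
print(predict_risk(model, (35.0, 62.0)))  # a new, unseen record
print(predict_risk(model, (21.0, 28.0)))  # another unseen record
```

The pattern is the same however sophisticated the algorithm: the model is fitted to records whose outcome is already known, then asked for a prediction on a record it has never seen.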

The most anticipated innovation in this area is the development of the artificial pancreas (i.e. a closed-loop insulin delivery system). It works by continuously monitoring blood glucose levels, calculating the amount of insulin needed, and delivering this dose through a pump. This requires a constant interaction between a continuous glucose monitor (CGM) and the insulin pump, mimicking the body’s natural response to blood glucose levels. The artificial pancreas is still being developed, and it seems to be one of the most promising technologies which could revolutionise the lives of those with type 1 diabetes.
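The monitor, calculate, deliver cycle of a closed-loop system can be sketched in a few lines of Python. This is a toy model only: the function names, the target level, the dose-per-unit-of-excess factor and the safety cap are all invented for illustration, and real devices use far more sophisticated algorithms.

```python
def insulin_dose(glucose_mmol_per_l, target=6.0, units_per_mmol=0.5, max_dose=5.0):
    """Return a hypothetical dose proportional to how far glucose exceeds the target.

    All default values are illustrative assumptions, not clinical figures.
    """
    excess = glucose_mmol_per_l - target
    if excess <= 0:
        return 0.0  # at or below target: deliver nothing
    return min(excess * units_per_mmol, max_dose)  # never exceed the cap


def run_closed_loop(readings):
    """Pair each monitor reading with the dose the controller would request."""
    return [(reading, insulin_dose(reading)) for reading in readings]


# A made-up series of CGM readings taken a few minutes apart.
for reading, dose in run_closed_loop([5.0, 8.0, 12.0, 30.0]):
    print(reading, dose)
```

The key design point is the loop itself: every new reading from the monitor immediately changes what the pump is asked to do, with no finger prick or manual calculation in between.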

In summary, despite the ever-increasing numbers of global cases, there is reason to be optimistic about controlling diabetes. There are many exciting advancements in diabetes technology that involve machine learning, artificial intelligence, the automation of insulin dispensing, and the prospect of viable transplants or even artificial pancreases.

The lightsaber is one of the most famous weapons of the science fiction world and, although seemingly fantastical, recent scientific developments suggest that the glowing ray might be more tangible than previously thought. This article will explore examples of technology that mimics the lightsaber, delving into the physics behind these inventions by breaking the weapon down into its two components: blade and power supply.

In order to make the lightsaber blade, 7 criteria must be met. First, it must be able to generate sufficient thermal energy to cut through a variety of materials. Second, it must function as a convenient light source, emitting photons in the visible light region of the electromagnetic spectrum. It must also deflect blades of the same material and should measure at a minimum of 3 feet in length. The handle should be approximately 11 inches long and this is where the battery ought to be stored. The battery must also support the blade system - consisting of either light or plasma (as later discussed) - and will need to produce energy in a way that allows the beam to fulfil all of the requirements listed above. This battery must be small and portable, weighing no more than 5 kg. Although the demand for lightsabers is far from high, the need for high power portable batteries is a topical issue and many research projects are currently making advancements in this field.

When faced with this design challenge, one might initially suggest using a high-powered laser. However, the functionality of the laser is debatable. It is a beam of coherent light, meaning that it is derived from a single original source and has photons of the same frequency with a constant phase difference. The beam is generated by providing energy (often in the form of electricity) to a gaseous substance. This excites the electrons in the atoms of the gas into an unstable state as some energy levels are more short-lived than others. If a light beam is then sent into the gas that is filled with unstable atoms, electrons are knocked down from their excited states, releasing photons in the process. This causes a domino effect to take place, allowing millions of photons to be released into the beam. Some elements are able to emit photons with a wavelength in the visible spectrum, allowing the laser to act as a convenient light source.

However, there are several problems with this proposition. The issue with photons is that they do not have mass, as they are discrete packets of energy that do not interact with one another. If you shine two torches at each other they will not interact at all and, similarly, the laser blade of a lightsaber will not deflect the blade of another lightsaber. There is also no means of making a laser exactly 3 feet long, as its length is determined by the point at which its photons are absorbed. This is a characteristic of light: photons do not decay and will continue in their direction of travel until they are absorbed. A possible solution to this problem would be to choose a wavelength of light that is absorbed by the atmosphere, as the beam stops once it is absorbed. Unfortunately, wavelengths that are absorbed by the atmosphere are found in regions of the electromagnetic spectrum where light is not visible to humans and therefore the blade would not act as a light source.

The diagram shows the atmospheric absorption spectra. There is a distinct decrease in absorption in the visible spectrum.

Plasma is often described as the 4th state of matter and is, overall, a much better alternative to a laser. Plasma is the state reached when a gas is heated until electrons have enough energy to strip themselves from their atoms, leaving behind a “soup” of positive ions and free electrons. Plasma meets all 7 requirements of the lightsaber blade and it is not massless, like a photon, meaning that two lightsabers made of plasma would interact in the desired way. A plasma cutter works by feeding a compressed gas into a chamber around a cathode where a potential difference is applied. The gas is ionised by the cathode as its temperature and pressure increase. Once passed through a narrow cutting tip, the plasma is focused and at high density and pressure.

The design of the plasma cutter can be refined to produce a much larger jet that more closely resembles the Star Wars lightsaber. To overcome the repulsive forces of two like charges, high temperatures must be reached to provide the nuclei with sufficient energy to collide with each other. However, at thousands of degrees Celsius, plasma will inevitably melt its container. This is why, when plasma is used in fusion reactors, magnetic fields are required to keep it off the walls. The charged ions and electrons in the plasma interact with external magnetic fields in a manner that follows Fleming’s Left Hand Rule, which is used to represent the directions involved when a current carrier interacts with a magnetic field. A left hand can be held so that the thumb represents the direction of the force exerted on the current carrier, the first finger denotes the direction of the magnetic field and the second finger points in the direction of the current. Charged particles in a magnetic field will rotate around the direction of the magnetic field.

If the carrier is negatively charged, the current will act in the opposite direction to its velocity vector (as conventional current flows positive to negative and electron flow should be negative to positive). Therefore, by applying Fleming’s Left Hand Rule to the situation seen in the diagram on the right, one can deduce that the electron will follow a helical pattern around the magnetic field. The centripetal force that causes the electron to rotate around the magnetic field is provided by the Lorentz force. If a particle moves perpendicular to the surrounding magnetic field (as in the example), the equation below can be used to calculate the force: F = qvB.

Here, q is the charge of the particle (C), v is the velocity of the particle (ms-1) and B is the strength of the magnetic field (T). Since the particle experiences a centripetal force, the following must be true: mv²/r = qvB, where m is the mass of the particle (kg) and r is the radius of its circular path (m).
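Rearranging mv²/r = qvB gives the radius of the circular path, r = mv/(qB). The short Python sketch below evaluates both equations for an electron; the speed and field strength are arbitrary example values chosen for illustration, not figures from any real device, and the constants are rounded.

```python
ELECTRON_MASS_KG = 9.109e-31     # rounded physical constant
ELEMENTARY_CHARGE_C = 1.602e-19  # rounded physical constant


def lorentz_force(charge_c, speed_m_per_s, field_t):
    """F = qvB for a particle moving perpendicular to the field."""
    return charge_c * speed_m_per_s * field_t


def orbit_radius(mass_kg, charge_c, speed_m_per_s, field_t):
    """r = mv / (qB), from setting mv^2 / r equal to qvB."""
    return mass_kg * speed_m_per_s / (charge_c * field_t)


# Example values (assumptions): an electron at 1,000,000 m/s in a 1 T field.
speed = 1.0e6
field = 1.0
force = lorentz_force(ELEMENTARY_CHARGE_C, speed, field)
radius = orbit_radius(ELECTRON_MASS_KG, ELEMENTARY_CHARGE_C, speed, field)
print(force, radius)
```

With these example values the radius comes out at a few micrometres, which shows why a strong magnetic field can hold fast-moving charged particles in a very tight spiral.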


Plasma can be contained by creating a magnetic field that “eats its own tail”. This idea will be incorporated into the development of ITER, a fusion reactor that is planned to house the largest superconducting magnet system ever constructed. Expected to reach a temperature of over 100 million degrees Celsius, the reactor uses hydrogen plasma to generate energy by nuclear fusion. The interaction of 3 magnetic fields from the central solenoid, toroidal field coil and the outer poloidal field coil confines the plasma, forcing it to circulate in a “doughnut” shape.

To replicate this in a handheld lightsaber, one could use electromagnets strong enough to produce a flow of plasma in a thin ellipse that would resemble a blade. The plasma would not glow enough at the high temperatures reached in the ITER reactor and, therefore, a lower temperature would be required for the blade to act as a convenient light source.

Ideally, lightsaber replicas would be composed of 6 kW power supplies; however, these have an average mass of 120 kg, rendering them unsuitable for use in handheld devices. Fortunately, current advancements in the field of nanotechnology include efforts to design a small and lightweight battery using graphene (a material which is strong, conductive and allows for repeated charge cycles). Graphene batteries would provide a much lighter alternative and would enable charging to occur at higher currents. Graphene can store up to 1,000 Wh per kilogram, meaning that a battery of 6 kg could power a portable lightsaber.
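The battery figures quoted in this article (a 6 kW power demand, up to 1,000 Wh per kilogram for graphene and a 6 kg battery) can be checked with a little arithmetic. The sketch below assumes an ideal battery with no losses, which is a simplification; the function names are made up for this example.

```python
def battery_energy_wh(mass_kg, specific_energy_wh_per_kg):
    """Total stored energy in watt-hours for a battery of the given mass."""
    return mass_kg * specific_energy_wh_per_kg


def runtime_hours(energy_wh, power_w):
    """How long the stored energy lasts at a constant power draw."""
    return energy_wh / power_w


# Figures taken from the article; losses are ignored (an assumption).
energy = battery_energy_wh(6.0, 1000.0)
hours = runtime_hours(energy, 6000.0)
print(energy, hours)
```

Under these idealised assumptions, a 6 kg graphene battery would hold 6,000 Wh, enough to supply 6 kW for about one hour.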

Although the endeavour to build a lightsaber might seem pointless to all except avid Star Wars fans, in actual fact, research in this area overlaps with scientific advancements of great significance. One of these is the development of fusion reactors as a potential new source of sustainable energy, with ITER using hydrogen as its fuel. The design of this fusion reactor is also groundbreaking in its aim to produce an output power which is 10 times that of the input. Through attempts to make a lightsaber, researchers at MIT and Harvard University have discovered the photonic molecule in which photons are able to interact with each other. Photons push and pull against each other but remain linked, acting as particles with mass. These molecules could lead to a revolution in quantum computing as they could be used to represent a 1, 0 or both at the same time. Overall, research into lightsaber technology has proved fruitful and it might not be long before the Star Wars weapon becomes a reality.


The investigation examines high-altitude balloons as a low-cost alternative for measurement of NO2 concentration versus altitude. First, we discuss the effects that extreme conditions at stratospheric altitudes may have on the data. We go on to consider corroboration between data from multiple flights, and how launching in London explains the trends, including the effects of traffic and the pollution dome. We then assess our data in relation to other measurements of NO2 versus altitude in similar environments. We find that they compare well and discuss possible explanations for discrepancies. Finally, we conclude that high-altitude ballooning has potential as a low-cost alternative for measurement of NO2 concentration versus altitude and should be examined further.

Techniques to measure NO2 concentrations at different altitudes have garnered growing interest for air-quality monitoring purposes. Current methods include spiralling downwards in an aeroplane, satellite measurement, and expensive ground-based technology such as chemiluminescent analysers. The use of high-altitude balloons could greatly reduce the cost of such studies. Comparable systems have been flown on high-altitude balloons before, such as the 45 kg acousto-optic tunable filter (AOTF) spectrometer, but this system is heavy. In many countries it therefore requires significant paperwork to obtain final permission from the government to launch, which is impractical, and permission would likely not be granted in built-up areas where NO2 monitoring is most needed, as demonstrated in Appendix 2 of ‘Easy Access Rules for Standardised European Rules of the Air’.

Low-cost high-altitude research balloons generally consist of a latex balloon filled with helium or hydrogen, with a maximum payload mass of up to 4 kg; however, ours was limited to 2 kg due to legal restrictions in the city centre. Nylon cord is used to attach a parachute 5 m below the balloon, and another 10 m below that is the payload, which contains sensors, radio transmission equipment, flight computers, and other electronics.

Alphasense Ltd’s amperometric air quality NO2-B43F Sensors were used, allowing detection of NO2 without the interference of O3 (Hossain et al., 2016). The NO2 data started as an analogue signal from the electrode and was passed through a 16-bit ADC. The resulting voltage was multiplied by the sensitivity of the sensor in ppb/mV, which is valid because the sensor responds linearly to gas concentration (Hossain et al., 2016), giving a reading in ppb that was then plotted against altitude. The whole system was tested with NO2 gas at Alphasense Ltd between the 2nd and 3rd flights. A 10 ppm certified NO2 bottle was used in a system of valves and DMFC to produce a 100 ppb NO2 mixing ratio. The measurement showed less than 6% error from the calculated data.
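The conversion chain described above (ADC counts to voltage, voltage to ppb, then a percentage-error check against a known gas mixture) might look like the following Python sketch. The full-scale reference voltage and the sensitivity value are placeholders invented for illustration; the real values depend on the electronics used and on the individual sensor's calibration.

```python
ADC_BITS = 16
REFERENCE_VOLTAGE_MV = 4096.0  # hypothetical full-scale voltage in mV
SENSITIVITY_PPB_PER_MV = 4.0   # hypothetical sensor sensitivity


def counts_to_millivolts(counts):
    """Convert a raw 16-bit ADC reading into millivolts."""
    return counts / (2 ** ADC_BITS - 1) * REFERENCE_VOLTAGE_MV


def millivolts_to_ppb(millivolts, zero_offset_mv=0.0):
    """Linear conversion: (voltage minus zero offset) times sensitivity."""
    return (millivolts - zero_offset_mv) * SENSITIVITY_PPB_PER_MV


def percent_error(measured, expected):
    """Percentage difference between a measurement and the expected value."""
    return abs(measured - expected) * 100.0 / expected


reading_ppb = millivolts_to_ppb(counts_to_millivolts(400))
print(reading_ppb)
print(percent_error(105.0, 100.0))  # e.g. a 105 ppb reading of a 100 ppb mix
```

Because the sensor response is linear, a single multiplication is enough; a non-linear sensor would need a calibration curve instead.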

Four flights took place from March to July 2021, each collecting data from two independent sensors in the same payload. The first flight was on Monday 29th March around 12:30, with a mean ascent speed of 4.21 ms-1. The NO2 sensors had no protection against external conditions. The second flight was on Friday 16th April around 13:15 with a mean ascent speed of 3.62 ms-1. Parts of the payload obstructed the sensor, which rendered the results unreliable. This flight has been excluded from data analysis. The third and fourth flights were on Thursday 1st July at 05:00 and Thursday 8th July at 13:00 respectively. The third had a mean ascent speed of 2.55 ms-1, and the fourth 1.64 ms-1. Both had raised hoods over the NO2 sensors, positioned about 5 mm from the edge of the box, to protect the sensors from wind whilst still allowing free flow of air. For the fourth flight, a rudimentary Faraday cage was fitted around the NO2 sensors, which reduced noise in the data. Our method is not reliably repeatable because the wind blows the balloon and payload on a unique path each time; however, multiple sensors can be placed on the same payload, as we did here.

In addition to NO2 concentration, temperature and pressure data has been presented in Figure 1. The exact effects of these variables on the NO2 data are not known, so we used the uncorrected raw data as recorded from the sensors. The data has, however, been normalised such that the moving average minimum concentration reached is set to 0 ppb. This is to account for variation in the sensor zero, or background current. The sensors are difficult to calibrate in varying conditions to give precise concentration readings, but still demonstrate accurate changes in concentration because the sensor responds linearly to gas concentration (Hossain et al., 2016). Techniques to combat changes in temperature and pressure are complex and none was found to be available for our conditions. Only data from the ascent is shown, because the speedy descent (after the balloon burst) affected the quality of the data on the way down. The NO2 data is taken from only one of the sensors because the other was affected by electrical interference.

All three flights share an initial sharp drop in NO2 concentration, followed by a steadier increase (Figure 1), suggesting the method is reliable. The first flight has the steepest decrease and the earliest sigma point, followed by the third, then the fourth. Whilst the temperature is affected by the date and time of launch, the pressure is not. The initial sharp decrease in NO2 concentration fits qualitatively with the idea of a pollution dome over London, with primary NO2 emissions coming mainly from traffic and a little from industrial processes, and secondary emissions from the reactions of other pollutants such as O3 and NO. These reactions take place quickly, so even at low altitudes NO2 concentration has contributions from both primary and secondary sources, as described in point 364 of ‘Nitrogen Dioxide in the United Kingdom’ (Air Quality Expert Group, 2004). The gradual increase could be due to the ozone layer, where the equilibrium between ozone and NO2 means NO2 is more prevalent there too, but one would expect to see the increase later, starting around 15 km (Wuebbles, 2020). Perhaps the increase observed in our data was due to the interference of temperature, pressure, and other variables. NO2 pollution in built-up areas is predicted to rise from 0 ppb at ground level before quickly reaching a maximum, then experiencing the fall that we observed (Wang et al., 2019; Liu, 2019).
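The normalisation step described in this section, in which the minimum of the moving average is set to 0 ppb, can be sketched as follows. This is one plausible reading of the procedure rather than our exact processing script: the window length of 3 samples and the example readings are assumptions made purely for illustration.

```python
def moving_average(values, window):
    """Simple moving average over every full window of consecutive samples."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and the number of samples")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


def normalise_to_zero(values, window=3):
    """Shift all readings so the lowest moving-average value becomes 0 ppb."""
    baseline = min(moving_average(values, window))
    return [value - baseline for value in values]


# Made-up readings in ppb: a sharp drop followed by a gentle rise.
raw = [9.0, 6.0, 3.0, 3.0, 3.0, 6.0]
print(normalise_to_zero(raw))
```

Using the moving average rather than the single lowest sample means that one noisy reading does not set the baseline, although individual samples can then fall slightly below zero.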

The initial decrease in our NO2 data compares well with data taken and averaged over four years in Nanjing (Chan et al., 2019). Their data shows an overall spring and summer decrease of around 10 ppb from ground level to the minimum, whereas ours was only around 6 ppb, as shown in Figure 1. However, our steeper initial decrease in the earlier spring flight versus the later summer flights matches their observations, along with the earlier minimum in spring versus summer. Data taken in the industrialised North China Plain (NCP) also suggests a maximum-minimum difference of around 10 ppb (Wang et al., 2019). This difference may also be due to differing levels of pollution in the varying environments.

Whilst the measurements taken in Nanjing (Chan et al., 2019) show an immediate decrease in concentration from ground level, both the data taken in the NCP (Wang et al., 2019) and a German model (Liu, 2019) show an initial peak not observed in our data. It should be noted that the latter is a model not based on observation, and the data from Nanjing (Chan et al., 2019), being averaged over four years, may give the more accurate result. The position of the troughs in our data also correlates with Figure 2(a) in Melo et al. (2004), although this study is far more rural than the others and so less comparable. This is also the only study based on observation that goes above 4000 m, and it does show a slight positive gradient after the trough, though not as steep as ours. The German model (Liu, 2019), whilst overpredicting the initial peak compared to the other studies, does show a subsequent positive gradient, albeit later than observed here. These studies suggest our positive gradient is a result of, or has been exacerbated by, extreme conditions that would need to be compensated for.

In comparison with other studies, our data seems to give a reliable indication of trends in NO2 concentration for the first 5000 to 10,000 m depending on conditions, judging by how long the data remains relatively flat after reaching its minimum. Until external factors can be accurately compensated for, data beyond this point cannot be trusted. In the right conditions, high-altitude ballooning could still reach higher altitudes than more common methods such as MAX-DOAS measurement (Wang et al., 2019; Chan et al., 2019).

Our series of launches cost £287.40 per launch for consumable materials and £1443.28 for setup (£1024.78 for flight equipment and £418.50 for ground equipment), which adds to £2592.87 overall. Whilst setup costs are a small majority, the low-cost repeatability of the experiment means it is worthwhile. It is worth noting that parachutes have been included as disposable because, even though they are reusable, they need to be specific to the mass of the payload and wind speeds on the day, so it is likely that multiple parachutes will need to be purchased. The cost will vary depending on the specifics of each experiment, but it should be possible for an experiment on a similar scale to ours to be run at under £3000, excluding labour costs.
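The cost breakdown can be reproduced with a short calculation. The sketch below works in whole pence to avoid rounding problems and assumes all four flights are counted as launches; it gives a total one penny higher than the quoted £2592.87, presumably because the per-launch figure is itself a rounded average.

```python
PER_LAUNCH_PENCE = 28740         # £287.40 of consumables per launch
FLIGHT_EQUIPMENT_PENCE = 102478  # £1024.78
GROUND_EQUIPMENT_PENCE = 41850   # £418.50


def setup_cost_pence():
    """One-off setup cost: flight equipment plus ground equipment."""
    return FLIGHT_EQUIPMENT_PENCE + GROUND_EQUIPMENT_PENCE


def campaign_cost_pence(launches):
    """Setup cost plus consumables for the given number of launches."""
    return setup_cost_pence() + launches * PER_LAUNCH_PENCE


total = campaign_cost_pence(4)  # four launches (an assumption)
setup_share = setup_cost_pence() / total
print(total / 100, round(setup_share * 100, 1))
```

Each additional launch adds only the consumables cost, so the setup share of the total shrinks as more flights are made.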

High-altitude balloons equipped with low-cost air quality amperometric sensors were used to collect NO2 concentration data versus altitude above London (UK). Environmental conditions can affect sensor data but, on this occasion, it is the uncompensated raw sensor output that is recorded and reported. The data obtained is comparable, at low altitudes, to data collected by much more advanced systems. If more research were to be done into this method, it could potentially become accurate at greater altitudes, and what is already an effective low-cost alternative for NO2 concentration measurements at low altitudes could also become a tool for tropospheric measurement.

We would like to thank ‘The Westminster School Society’ for funding our research, Richard Blandford for all his help, and Luke Maynard for his continued support.


4. A common respiratory problem among brachycephalic dogs

5. The condition of having low blood sugar levels

8. The largest superconducting magnet system in the world

9. The surname of the inventor of the voltaic battery

1. Next __________ Sequencing

2. The 4th state of matter

3. An alternative to tissue biopsies that is a non-invasive biomarker

6. High-altitude ballooning provides a ___ cost alternative for measurement of NO2 concentration

7. The property of a molecule that is non-superimposable on its mirror image

Drferox (2019). Facial Reconstruction. [online] Dr Ferox, veterinarian. Available at: https://drferox.tumblr.com/post/182302008890/facialreconstruction [Accessed 21 May 2022].

Hendricks, J.C., Kline, L.R., Kovalski, R.J., O’Brien, J.A., Morrison, A.R. and Pack, A.I. (1987). The English bulldog: a natural model of sleep-disordered breathing. Journal of Applied Physiology, 63(4), pp.1344–1350. doi:10.1152/jappl.1987.63.4.1344.

Javed, S. (2022). These are the longest and shortest-living dog breeds in the UK. [online] The Independent. Available at: https://www.independent.co.uk/life-style/dog-breeds-life-expectancy-jack-russell-terrier-french-bulldogb2068377.html [Accessed 21 May 2022].

Lorenz, K. (1971). Studies in animal and human behaviour. London: Methuen.

Lewis, T.W., Wiles, B.M., Llewellyn-Zaidi, A.M., Evans, K.M. and O’Neill, D.G. (2018). Longevity and mortality in Kennel Club registered dog breeds in the UK in 2014. Canine Genetics and Epidemiology, 5(1). doi:10.1186/s40575-018-0066-8.

Packer, R. and O’Neill, D. (2021). Health and welfare of brachycephalic (flat-faced) companion animals: a complete guide for veterinary and animal professionals. Boca Raton: CRC Press.

The Blue Cross (2022). Flat-faced (brachycephalic) dog breeds. [online] Blue Cross. Available at: https://www.bluecross.org.uk/advice/dog/things-tothink-about-before-buying-a-flat-faced-brachycephalic-dog [Accessed 21 May 2022].

I. https://www.cd-genomics.com/resourse-Principles-and-Workflow-of-WholeExome-Sequencing.html#:~:text=Exome%20sequencing%20contains%20two%20main,density%20microarrays%20to%20capture%20exome.

II. https://emea.illumina.com/areas-of-interest/cancer/research/sequencingmethods/cancer-exome-seq.html#:~:text=Sequencing%20the%20cancer%20exome%20provides,alternative%20to%20whole%2Dgenome%20sequencing.

III. https://www.nature.com/scitable/definition/transcriptome-296/#:~:text=A%20transcriptome%20is%20the%20full,particular%20cell%20or%20tissue%20type.

IV. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3841808/

V. https://www.thermofisher.com/uk/en/home/life-science/sequencing/sequencing-learning-center/next-generation-sequencing-information/ngsbasics/what-is-next-generation-sequencing.html

Florence,A. L., 1960. Is Thalidomide to Blame. British Medical Journal.

James H Kim, A. R. S., 2012. Thalidomide: the tragedy of birth defects and the effective treatment of disease. Toxicology Science.

Jeffrey Skolnick, H. Z. a. M. G., 2019. PNAS. [Online] Available at: https://www.pnas.org/content/116/52/26571

Lien Ai Nguyen, H. H. a. C. P.-H., 2006. Chiral Drugs: An Overview. International Journal of Biomedical Science.

Meierhenrich, U., 2008. Amino Acids and the Asymmetry of Life. s.l.: Springer.

Richard Cammack, T. A. P. C. H. P. A. S. F. V. a. J. S., 2006. Oxford Dictionary of Biochemistry and Molecular Biology (2 ed.). s.l.: Oxford University Press.

Sevin, A., 2015. OpenEdition Journals.

W.H. Brooks, W. G. K. D., 2018. The Significance of Chirality in Drug Design and Development. HHS Author Manuscript.

Whyte, L. L., 1975. Chirality. Leonardo Vol. 8, No. 3.

Yasuhiro Obaa, N. Y. A., 2015. Chiral glycine formation on cold interstellar grains by quantum tunneling hydrogen–deuterium substitution reactions. Chemical Physics Letters Volume 634.

Figure 1: Buchholz, Kat. “Infographic: Where Diabetes Burdens Are Rising.” Statista Infographics, 2021, www.statista.com/chart/23491/share-of-adults-with-diabetes-world-region/. Accessed 7 July 2022.

Figure 2: Ismail, L., Materwala, H., Tayefi, M. et al. Type 2 Diabetes with Artificial Intelligence Machine Learning: Methods and Evaluation (2021). https://doi.org/10.1007/s11831-021-09582-x

“Apps Interpreting Data from Wearable Devices Are Helping People to Live Better.” The Economist, 2022, www.economist.com/technology-quarterly/2022/05/02/appsinterpreting-data-from-wearable-devices-are-helping-people-to-live-better. Accessed 7 July 2022.

Broom, Douglas. “The Silent Epidemic That Is Three Times as Deadly as COVID.” World Economic Forum, 14 Dec. 2020, www.weforum.org/agenda/2020/12/diabetes-silentepidemic-world-health/. Accessed 7 July 2022.

Fregoso-Aparicio, L., Noguez, J., Montesinos, L. et al. Machine learning and deep learning predictive models for type 2 diabetes: a systematic review (2021). https://doi.org/10.1186/s13098-021-00767-9

Hoskins, Mike. “New Diabetes Technology Expected in 2022.” Healthline, 6 Jan. 2022, www.healthline.com/diabetesmine/new-diabetes-technology-in-2022#dexcom. Accessed 7 July 2022.

Network, Community Access. “10 Facts about Diabetes.” Community Access Network, 5 Nov. 2019, www.communityaccessnetwork.org/10-facts-about-diabetes/. Accessed 7 July 2022.

The British Diabetic Association. “Is There a Cure for Diabetes? - Research Spotlight.” Diabetes UK, 2017, www.diabetes.org.uk/research/research-round-up/researchspotlight/research-spotlight-is-there-a-cure-for-diabetes. Accessed 7 July 2022.

Diabetes UK. “Research Spotlight – the Artificial Pancreas.” Diabetes UK, www.diabetes.org.uk/research/research-round-up/research-spotlight/research-spotlight-the-artificial-pancreas. Accessed 7 July 2022.

WebMD. “Diabetes Basics.” WebMD, 25 Oct. 2001, www.webmd.com/diabetes/features/next-generation-diabetes-technology#:~:text=In%20January%202020%2C%20Tandem%20Diabetes. Accessed 7 July 2022.

[1] Wookieepedia. (n.d.). Lightsaber. [online] Available at: https://starwars.fandom.com/wiki/Lightsaber#Mechanics_and_specifications

[2] www.youtube.com. (n.d.). The Science of Lightsabers. [online] Available at: https://www.youtube.com/watch?v=DolQxe5TAXU

[3] www.surrey.ac.uk. (n.d.). The science of Star Wars | University of Surrey. [online] Available at: https://www.surrey.ac.uk/features/science-of-star-wars

[4] Princeton Plasma Physics Laboratory. (n.d.). About Plasmas and Fusion. [online] Available at: https://www.pppl.gov/about/about-plasmas-and-fusion

[5] ITER. (2019). Plasma Confinement. [online] Available at: https://www.iter.org/sci/PlasmaConfinement.

[6] Janzen, D. (2018). 8.3 Motion of a Charged Particle in a Magnetic Field. [online] openpress.usask.ca. Available at: https://openpress.usask.ca/physics155/chapter/8-3-motion-of-a-charged-particle-in-a-magnetic-field/.

[7] ITER. (n.d.). Power Supply. [online] Available at: https://www.iter.org/mach/PowerSupply.

[8] Softways (n.d.). MRI Database : power%20consumption%20in%20kW. [online] www.mr-tip.com. Available at: https://www.mr-tip.com/serv1.php?type=db1&dbs=power%20consumption%20in%20kW.

[9] MIT News | Massachusetts Institute of Technology. (n.d.). Physicists create new form of light. [online] Available at: https://news.mit.edu/2018/physicists-create-new-form-light-0215.

[10] Adams, S. and Allday, J. (2013). Advanced Physics. Oxford: Oxford University Press, pp.384–385.

Air Quality Expert Group (2004). In Nitrogen Dioxide in the United Kingdom pp107-109. Department for Environment, Food and Rural Affairs, London

European Union Aviation Safety Agency (2021). In Easy Access Rules for Standardised European Rules of the Air Appendix 2 pp188-193. Cologne, Germany

Hossain M., Saffell J., Baron R. (2016). Differentiating NO2 and O3 at Low Cost Air Quality Amperometric Gas Sensors. ACS Sens. 2016, 1, 11, 1291–1294, DOI: 10.1021/acssensors.6b00603

Wang Y., Dörner S., Donner S., Böhnke S., De Smedt I., Dickerson R., Dong Z., He H., Li Z., Li D., Liu D., Ren X., Theys N., Wang Y., Wang Z., Xu H., Xu J., Wagner T. (2019). Vertical profiles of NO2, SO2, HONO, HCHO, CHOCHO and aerosols derived from MAX-DOAS measurements at a rural site in the central western North China Plain and their relation to emission sources and effects of regional transport. Atmospheric Chemistry and Physics, 19(8), pp5417-5449, DOI: 10.5194/acp-19-5417-2019

Wuebbles D. (2020, May 26). ozone layer. Encyclopedia Britannica. https://www.britannica.com/science/ozone-layer (accessed 2022, January 7)

To submit an article for the next edition of Hooke, email:

dewi.eburne@westminster.org.uk

All submissions welcome!