7 minute read

DEEP THOUGHT

Lucy Wills

Globefox Health

Lessons from fiction for deep tech and automation

The renowned sci-fi writer Alastair Reynolds once told me that the true power of science fiction is that it allows us to experience "cognitive estrangement".

When the story and its setting take us far enough out, to another universe or culture, we can look back at aspects of ourselves and our culture, and see them clearly without our usual filters and blinkers.

There's also a huge value to near fiction, where we get to try out small changes from our lived reality and explore what the impacts could be.

Sci-fi is no longer just a source of inspiration and concepts; it’s now an established rehearsal space for the future. Our future.

‘Deep tech’ is a new term for innovations that leverage emerging technologies, science and insights at a truly systemic and transformative level. This means they have great potential to better lives and drive economies, yet carry great risks.

In biotech, working at a genetic and metabolic level may yet free us from the suffering of some heritable and acquired diseases, but the dangers of unforeseen consequences mean that new medicines and protocols are given a great deal of scrutiny.

In engineering, the use of drones, smart swarming devices and autonomous robots is transforming sectors such as farming and environmental monitoring but also brings concerns about their use in civic surveillance and warfare.

But in the case of artificial intelligence and machine learning, are we looking at the risks deeply enough?

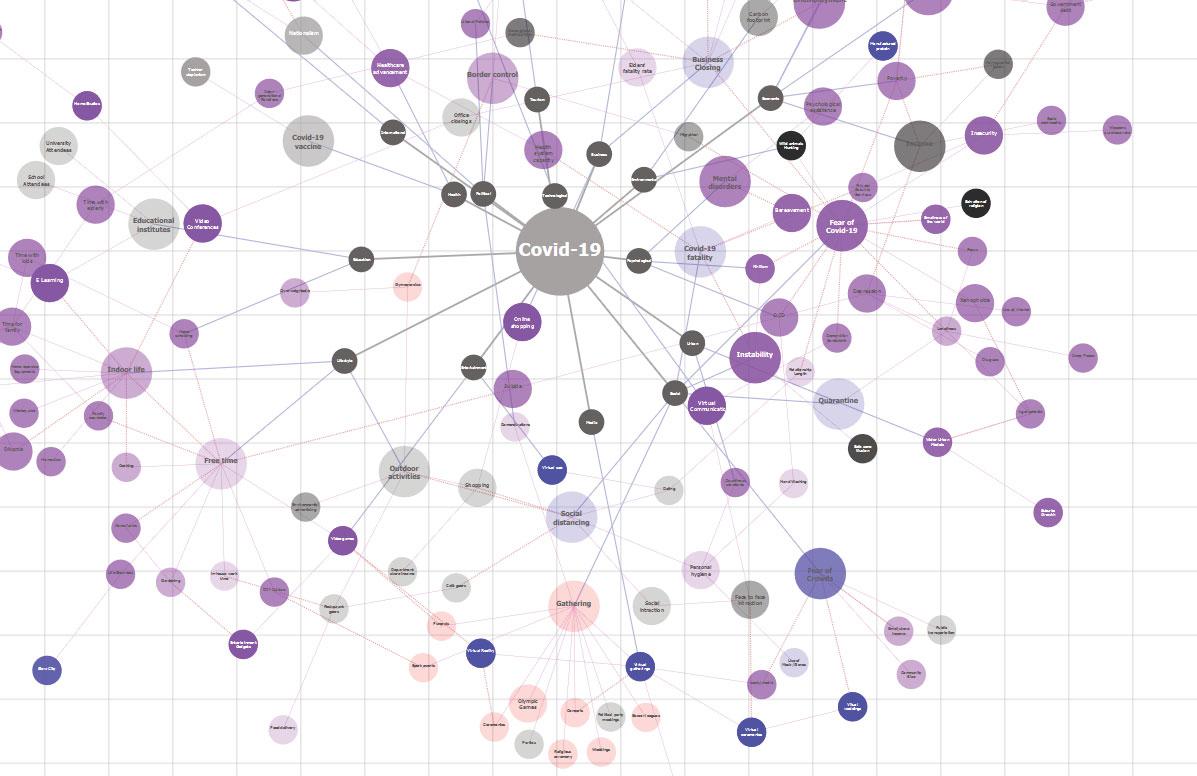

Many of us are aware of the privacy and security risks, or the risk of accident, but we are only just starting to have an open discussion about discrimination, fairness and accuracy: whether this be in the framing of the queries, the selection of the algorithm, the validation of the data, or even the way data is gathered in the first place.

In healthcare, while the accuracy of AI in processing clinical images has long surpassed that of human doctors, the ability of AI to diagnose or make decisions about care is much less proven.

Current medical models make huge generalisations, take a very simplistic view of health, and exclude way too many people and conditions which don’t fit in these ‘boxes’, leading to missed diagnoses, human suffering and greater costs further down the line. Yet these models are seen as the foundation of digital services, and are even used to validate them.

Too much of current AI reinforces existing structures and dynamics, and embeds the bias and assumptions that go with them. Now that we are training machines to do so much thinking for us, why can’t these systems be designed to help us uncover and dismantle that bias?

Can insights drawn from sci-fi narratives point us towards some measures that may help?

Looking back on the steps that I have taken towards designing healthcare systems that are inclusive, verifiable and accountable, I can see how I have been informed and inspired by narratives and concepts from some of my favourite stories.

Based on a short story by Philip K Dick, the 2002 film ‘Minority Report’ took the ‘product placement’ of emerging technology to new levels, with the production designers engaging with companies and futurists to plot out what new interfaces and products we might see.

Many have since come to pass, such as mass personalisation and gesture-based interfaces.

There is a deeper message within Minority Report that needs to be heard right now. In the film, the three psychic ‘Precogs’ predict future crimes by ‘recording’ similar versions of the same projected event, which are then averaged by computer modelling. Occasionally a ‘Minority Report’ appears, when one of the ‘Precogs’ records something quite different.

The current practice of training AI encourages us to ignore such an ‘anomaly’ as it might disturb a distribution curve or appear to cause inaccuracies, yet we do that at our peril.

How can we know what can be safely discarded and what data is significant until we have verified it back with the source or the raw data? It's not enough to say something looks broadly right; the detail in the original data provides a verification that protects us from oversimplification or mistaking the map for the territory.
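As a rough illustration of the alternative, here is a minimal Python sketch that sets unusual readings aside for checking against the source rather than silently dropping them. Everything in it is an assumption made for illustration: the function name `split_reports`, the sample readings, and the use of a modified z-score (based on the median and the median absolute deviation) with a cut-off of 3.5. It is one simple way to surface a ‘minority report’, not a recommended standard.

```python
from statistics import median


def split_reports(values, threshold=3.5):
    """Split readings into (majority, minority) lists.

    Hypothetical helper: a reading joins the minority list when its
    modified z-score exceeds `threshold`. Nothing is deleted; minority
    readings are returned so they can be checked against the raw data.
    """
    centre = median(values)
    # Median absolute deviation: a spread measure that one extreme
    # reading cannot easily distort.
    mad = median(abs(v - centre) for v in values)

    majority, minority = [], []
    for v in values:
        if mad == 0:
            # No spread at all: any reading off the median is unusual.
            is_unusual = v != centre
        else:
            is_unusual = 0.6745 * abs(v - centre) / mad > threshold
        (minority if is_unusual else majority).append(v)
    return majority, minority


# Illustrative, made-up readings: four broadly agree, one does not.
majority, minority = split_reports([10.1, 9.8, 10.3, 10.0, 25.0])
print("broadly agree:", majority)
print("verify against source:", minority)
```

The point of the design is that the dissenting reading is returned rather than removed, so a person can go back to the source before deciding whether it is noise or the most important signal in the set.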

Police forces worldwide use AI to attempt to predict where crimes may occur from past incidents. When this mapping is combined with facial recognition and movement analysis, the risks of bias and racism are compounded: techniques are improving, but much imaging software still misidentifies women and people of colour. But then what else should we be looking at to keep communities safe?

Some of you might recognise the title of this piece as the name of the computer from Douglas Adams’s ‘Hitchhiker's Guide to the Galaxy’ story, which when asked the meaning of “life, the universe and everything” gave the answer as 42, but then suggested that another much greater computer was needed to find the question.

Surely this should be an absolute goal of machine learning: to create tools that help us ask the questions that we don't yet know to ask.

Douglas also reminded us that we are often blinded to the most critical questions and concerns by our fear of looking at the existential or a desire to stay within comfortable bounds.

The ‘Joo Janta 200 Super-Chromatic Peril Sensitive Sunglasses’ turn black when they identify danger, giving the illusion of safety, but preventing the wearer from doing anything about it. We need to avoid projecting our blinkered views into the systems we create, and keep our framing, enquiries, models and assumptions open.

Terry Gilliam has explored our relationship to technology through many of his films. His 1985 film ‘Brazil’ has much to say to us now about politics, status and a need to escape the limitations that others would seek to place upon us.

Yet one seldom-remarked-upon aspect of this film is the number of huge ducts and pipes that invade almost every scene, carrying essential services, and most critically, data.

In the world of Brazil this transfer of data is made visible, the pipes serving as a constant reminder of the prevalence of surveillance and the need to fit in.

We may not necessarily have the film’s totalitarian society, but we do live with this mass gathering of data.

In order for everyone to trust the systems around us, it is critical that we are honest about why we are gathering data, what assumptions are being made and what purpose the gathering serves.

Previous step changes indicate that once novel forms of communication and data transfer become truly ubiquitous and normalised, they become much harder to see, and to evaluate.

So such flows should be made visible in some way now, perhaps with simple tools on our devices that make it easy and obvious to see where our data is going and why.

And where possible, we should be able to tinker with them, like the film’s renegade engineer Tuttle, yet with access to a manual, and just a little more finesse.

There is also a short piece of dialogue from ‘Brazil’ that explains beautifully how recursive and exponential arguments create compounded risk.

I hope that by taking this deep philosophical and systemic approach to novel systems we can all avoid ever having to say “My complication had a little complication”.

Lucy Wills is the founder of Globefox Health, developing accessible digital healthcare tools and services to help people with under-diagnosed conditions and disabilities to better identify, understand and communicate what they are experiencing. The data uncovered will be used to assess and address current bias and limitations and create next-generation tools that make the most of emerging systems, technologies and understandings.