

Real-time ray trace rendering

Nvidia launches Quadro RTX, a new GPU that promises to transform the role of photorealistic visualisation in architecture and engineering, as well as in Hollywood, writes Greg Corke

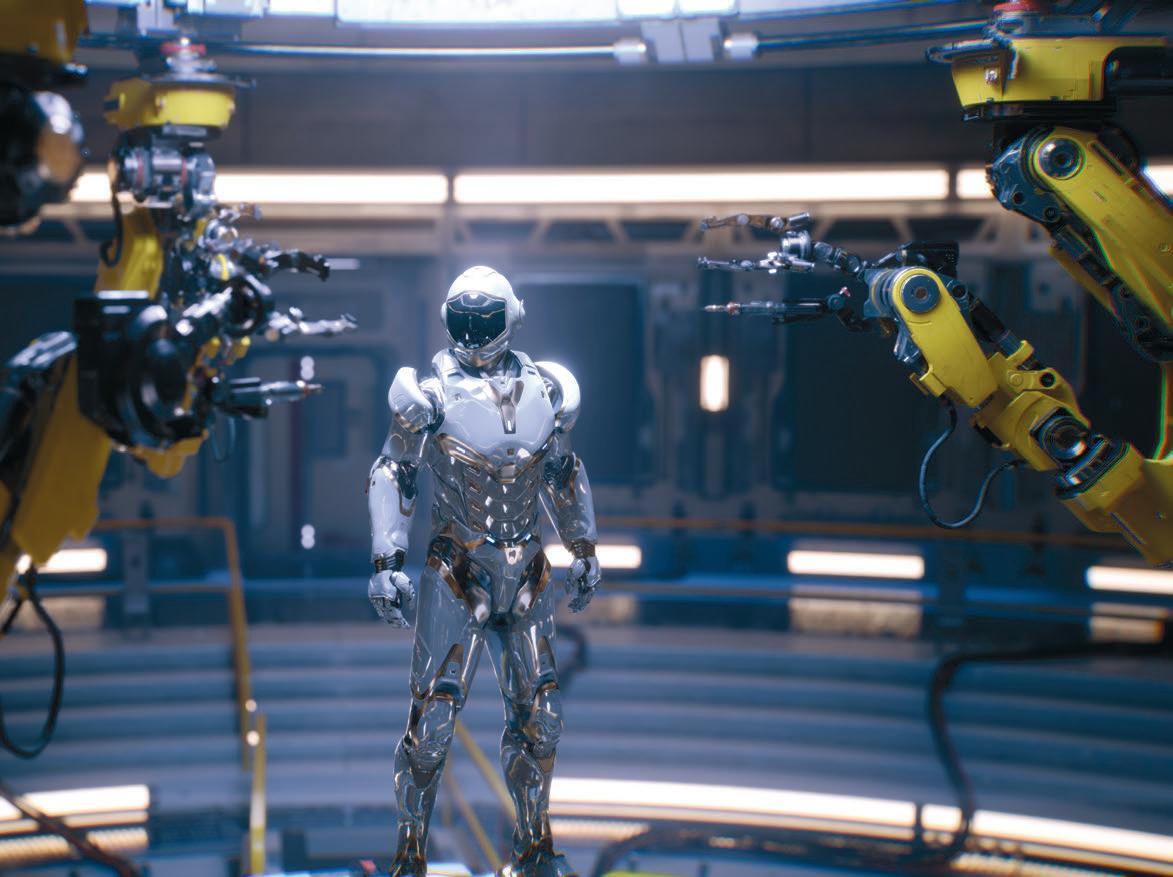

Jaws dropped earlier this year at the unveiling of the real-time ray tracing demo, Star Wars ‘Reflections’. It was the first time that Hollywood-quality visual effects, albeit with a few cheats, had been done in real time. Lighting moved around a scene interactively as ray-traced effects, including shadows and photorealistic reflections, were rendered at 24 frames per second.

The dampener was the hardware needed to achieve this level of realism – a personal AI supercomputer called the Nvidia DGX Station that comes with four Tesla V100 GPUs and a bill for £60k.

Most people expected it to take five to ten years for this technology to trickle down to a single GPU in a desktop workstation. But, amazingly, with Nvidia’s new ‘Turing’ Quadro RTX family of GPUs, announced last month at professional graphics conference Siggraph, it now looks set to become a reality by the end of the year, when the “world’s first ray-tracing GPUs” are set to ship.

Nvidia CEO Jensen Huang described Turing and RTX as the greatest leap since the introduction of the CUDA GPU in 2006. “This fundamentally changes how computer graphics will be done. It’s a step change in realism,” he said.

Not surprisingly, Nvidia has its sights set on the $250 billion visual effects industry, getting a slice of the colossal budgets currently spent on CPU render farms. But the new technology looks set to impact all areas of 3D graphics – including, of course, design visualisation. Previously, designers and architects would either have made compromises in quality, using rasterising techniques for real-time rendering, or waited minutes or hours to get photorealistic results back from a CPU render farm. With Quadro RTX, Nvidia says designers can now iterate their product model or building and see accurate lighting, shadows and reflections in real time.

On stage at Siggraph, Huang illustrated this with a couple of impressive real-time ray tracing demos focused on design visualisation – one from automotive manufacturer Porsche and another of an architectural interior.

For its 70th birthday, Porsche created a trailer for its new 911 Speedster Concept car. Rather than ray trace rendering it frame by frame on a CPU render farm, the ‘movie’ was generated in real time inside Unreal Engine using a pair of Quadro RTX GPUs. The virtual car is fully interactive: lighting can be adjusted in real time and the car viewed from any angle, with reflections on the physically-based materials updating instantly. Epic Games, developer of Unreal Engine, refers to this as “offline-quality ray-traced rendering in a game engine.”

“Porsche’s collaboration with Epic and Nvidia has exceeded all expectations from both a creative and technological perspective,” said Christian Braun, manager of virtual design at Porsche. “The achieved results are proof that real-time technology is revolutionising how we design and market our vehicles.”

Huang then turned to architecture, with a real-time, ‘photoreal’ ray-traced global illumination demo set in the lobby of the Rosewood Bangkok Hotel. The interesting thing about this demo was that Nvidia’s RTX ray-traced viewer had a live connection to both Autodesk Revit and SolidWorks. Any edits or material changes made in the popular BIM and CAD tools instantly showed up in the viewer. Different lighting options could be explored at different times of the day. The demo illustrated how photorealistic visualisation could be used to influence design in real time, not just to produce polished renders for client communication or marketing.

A new type of GPU

Nvidia achieves this huge leap in real-time ray trace rendering performance thanks to the new ‘Turing’ GPU architecture, which combines three different engines in a single GPU. Until recently, all Nvidia GPUs – including the current ‘Pascal’ Quadro P Series – only featured CUDA cores.

This changed with ‘Volta’, which marked the arrival of Tensor cores in the Tesla V100 and Quadro GV100, designed specifically for deep learning, a subset of artificial intelligence (AI).

‘Turing’ adds a third type of core, the RT core, which is designed specifically to accelerate ray tracing operations. And it is these three processors working together (ray tracing on RT cores, shading on CUDA cores and deep learning on Tensor cores) that allow Turing to deliver significant performance improvements for ray trace rendering – six times faster than Pascal, claims Nvidia.

Nvidia is using deep learning in different parts of the graphics pipeline to cut down on the level of work required to generate photorealistic output. Earlier this year the company introduced the use of Tensor cores for AI-based de-noising, which Huang describes as filling in all the spots that the rays haven’t reached yet. Now, Nvidia has introduced deep learning anti-aliasing (DLAA), a new technique which trains a neural network to take a lower resolution image and turn it into a higher quality image. There’s a lot going on here, some of which should become clearer in the coming months, but in short Nvidia is using a combination of AI techniques and different processing engines to accelerate ray trace rendering, rather than just relying on faster CUDA cores to do everything.

The cards

Nvidia has launched three Turing-based Quadro RTX GPUs. The top-end Quadro RTX 8000 ($10,000) and RTX 6000 ($6,300) are virtually identical, both boasting 4,608 CUDA cores, 576 Tensor cores and a ray tracing speed of 10 GigaRays/sec. The only difference is the memory, with the RTX 8000 featuring 48GB of GDDR6 compared to the RTX 6000’s 24GB.

The Quadro RTX 5000 costs significantly less ($2,300) but features fewer CUDA cores (3,072) and fewer Tensor cores (384), and its ray tracing speed drops to 6 GigaRays/sec. It also only has 16GB of GDDR6 memory.

The amount of memory available on these cards is significant, as this has been a barrier to adoption for GPU rendering, particularly in visual effects, where datasets and textures can be huge.

At 48GB, the RTX 8000 offers significantly more than its predecessors, the Quadro GV100 (32GB) and Quadro P6000 (24GB). All Quadro RTX cards support NVLink, a proprietary Nvidia technology that allows cards to be installed in a workstation in pairs and share their memory. It means those who have the budget for two Quadro RTX 8000s can effectively have a GPU with a colossal 96GB.

In addition to DisplayPort, all three GPUs will support USB Type-C and VirtualLink, a new open industry standard being developed to meet the power, display and bandwidth demands of next-generation VR headsets through a single USB-C connector.

This Porsche 911 Speedster Concept car model is ray traced, fully interactive and can be viewed from any angle at 24 FPS.

Applications, applications

At Siggraph, much of the focus was on Unreal Engine, which uses the Microsoft DXR API to access Nvidia’s RTX development platform, which helps ISVs (independent software vendors) develop RTX-enabled applications.

However, Nvidia also has buy-in from several design and engineering focused software developers and even more in visual effects.

For example, arch viz legend Chaos Group previewed Project Lavina, which uses Microsoft’s DXR to deliver 3–5 times the real-time ray tracing performance of the Volta generation for scenes exported from Autodesk 3ds Max and Autodesk Maya (see below).

Meanwhile, users of SketchUp, Cinema 4D and Rhino will get access to Nvidia’s OptiX denoiser technology through the Altair Thea Render plug-in.
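Both the Tensor-core de-noising Huang describes and the OptiX denoiser exist because each pixel in a ray-traced image is a Monte Carlo estimate: with few rays per pixel the estimate is noisy, and the noise only falls roughly with the square root of the sample count. The toy Python sketch below illustrates that on a single scanline. It is an assumption-laden illustration, not how OptiX works: each ‘ray’ is a coin flip that reaches the light with a probability equal to the true pixel value, and a simple box filter stands in for the AI denoiser (which aims to remove noise while preserving edges, something a box blur does not do). All function names are hypothetical.

```python
import math
import random
from typing import List


def ground_truth(width: int) -> List[float]:
    """Toy 'converged' scanline: a smooth ramp from black (0.0) to white (1.0)."""
    return [x / (width - 1) for x in range(width)]


def render_scanline(truth: List[float], spp: int, rng: random.Random) -> List[float]:
    """Monte Carlo estimate per pixel: the fraction of `spp` random rays that reach the light.

    Hypothetical model: each ray is a Bernoulli trial that succeeds with probability
    equal to the pixel's true value, so the estimate is unbiased but noisy.
    """
    return [sum(rng.random() < p for _ in range(spp)) / spp for p in truth]


def box_denoise(img: List[float], radius: int = 2) -> List[float]:
    """Crude stand-in for a denoiser: average each pixel with its neighbours."""
    out = []
    for i in range(len(img)):
        window = img[max(0, i - radius): i + radius + 1]  # window shrinks at the edges
        out.append(sum(window) / len(window))
    return out


def rmse(a: List[float], b: List[float]) -> float:
    """Root-mean-square error between two scanlines."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are repeatable
    truth = ground_truth(512)
    for spp in (1, 4, 16, 64):
        noisy = render_scanline(truth, spp, rng)
        print(f"{spp:>3} spp: noisy RMSE {rmse(noisy, truth):.3f}, "
              f"box-filtered RMSE {rmse(box_denoise(noisy), truth):.3f}")
```

Quadrupling the sample count only roughly halves the noise, which is why filling in the gaps with a denoiser is far cheaper than simply firing more rays.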

Summary

With the launch of Turing and its Quadro RTX ray-tracing GPUs, Nvidia has sent ripples through the computer graphics industry. Out of the blue, real-time ray tracing looks to have arrived, bringing with it the potential to transform the way architects and designers use photorealistic visualisation in their workflows. We look forward to test driving the new cards later this year when they launch in Q4.

■ nvidia.com

Chaos Group unveils Project Lavina

Chaos Group has previewed a new real-time ray tracing technology that renders up to 90 frames per second on the new Nvidia Quadro RTX GPUs.

Project Lavina, named after the Bulgarian word for avalanche, was unveiled at Siggraph with a demo depicting a massive 3D forest and several architectural visualisations running at 24-30 frames per second (FPS) in standard HD resolution.
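To put those frame rates in context, the rough budget below divides Nvidia’s quoted peak of 10 GigaRays/sec for the Quadro RTX 6000 and 8000 by the frame rate and pixel count. It is back-of-the-envelope arithmetic under stated assumptions: that the demo ran on a 10 GigaRays/sec card, that ‘standard HD’ means 1920 × 1080, and that the quoted peak is fully achievable, which real scenes will not manage. The function name is hypothetical.

```python
def rays_per_pixel(gigarays_per_sec: float, fps: float,
                   width: int = 1920, height: int = 1080) -> float:
    """Peak rays available per pixel per frame.

    Assumes the quoted GigaRays/sec figure is fully achievable and that
    'standard HD' means 1920 x 1080 unless told otherwise.
    """
    rays_per_frame = gigarays_per_sec * 1e9 / fps
    return rays_per_frame / (width * height)


if __name__ == "__main__":
    for fps in (24, 30, 90):
        print(f"{fps} FPS at 1080p: ~{rays_per_pixel(10, fps):.0f} rays per pixel per frame")
```

At 24 FPS that works out to about 200 rays per pixel per frame, and every primary, shadow, reflection and global illumination ray has to come out of that budget.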

Rather than using game engine shortcuts like rasterised graphics and reduced levels of detail, each scene features live ray tracing for interactive photorealism.

Unlike most game engines, which require assets to be rebuilt and optimised, Lavina simplifies this process with direct compatibility and translation of V-Ray assets.

Upon loading the scene, the user can explore the environment exactly as they would in a game engine, and experience physically accurate lighting, reflections and global illumination.

According to Chaos Group, one significant benefit of ray tracing is that its speed is minimally impacted as scenes get larger, unlike rasterised graphics.
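A common explanation for that claim is the acceleration structure a ray tracer builds over the scene, typically a bounding volume hierarchy (BVH), the kind of structure Nvidia’s RT cores are designed to traverse. A ray only descends into boxes it actually hits, so in a well-built hierarchy the work per ray grows roughly with the logarithm of the primitive count, whereas a rasteriser touches every triangle. The Python sketch below is a deliberately simplified 1D toy (primitives are intervals on a line and a ‘ray’ is a point query); it is not Lavina’s implementation, and all names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Interval = Tuple[float, float]  # a 1D stand-in for a triangle's bounding box


@dataclass
class Node:
    lo: float                      # bounds enclosing everything beneath this node
    hi: float
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prim: Optional[int] = None     # primitive index, set only on leaves


def build(prims: List[Interval], order: List[int]) -> Node:
    """Median-split BVH over primitives already sorted by centre."""
    if len(order) == 1:
        lo, hi = prims[order[0]]
        return Node(lo, hi, prim=order[0])
    mid = len(order) // 2
    left, right = build(prims, order[:mid]), build(prims, order[mid:])
    return Node(min(left.lo, right.lo), max(left.hi, right.hi), left, right)


def query(root: Node, x: float) -> Tuple[List[int], int]:
    """Return the primitives containing x and how many BVH nodes were visited."""
    hits, visited, stack = [], 0, [root]
    while stack:
        node = stack.pop()
        visited += 1
        if x < node.lo or x > node.hi:
            continue               # bounds missed: skip the whole subtree
        if node.prim is not None:
            hits.append(node.prim)
        else:
            stack.extend((node.left, node.right))
    return hits, visited


def make_scene(n: int) -> Tuple[List[Interval], Node]:
    """n disjoint, unit-spaced intervals plus a BVH built over them."""
    prims = [(float(i), i + 0.5) for i in range(n)]
    order = sorted(range(n), key=lambda i: prims[i][0] + prims[i][1])
    return prims, build(prims, order)


def brute_force(prims: List[Interval], x: float) -> List[int]:
    """What a linear scan with no hierarchy has to do: test every primitive."""
    return [i for i, (lo, hi) in enumerate(prims) if lo <= x <= hi]


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        prims, root = make_scene(n)
        hits, visited = query(root, n // 3 + 0.25)
        print(f"n={n:>7}: hit {hits}, visited {visited} nodes "
              f"(log2 n ~ {math.log2(n):.1f}); a linear scan tests {n}")
```

In this idealised case the number of visited nodes grows by two each time the scene doubles, while a linear scan grows in proportion to the scene. Real scenes with overlapping bounds do worse than this toy, so ‘minimally impacted’ is the right way to put it.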

Each tree in Project Lavina has 2 to 4 million triangles and there are over 20 different trees, making for about 100 million unique triangles along with the terrain. The forest then has over 80,000 instances, making the rendered scene over 300 billion triangles. All of this is without any culling, swapping or level of detail, meaning all that geometry is there, all the time.
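Those figures only add up because of instancing: each unique tree is stored once, and every placement in the forest is just a reference plus a transform. The rough Python calculation below compares storing the scene instanced versus flattened. The per-triangle and per-instance byte counts are assumptions for illustration (three float32 vertices per triangle with no vertex sharing, and one 3 × 4 float32 transform per instance), not Lavina’s actual data layout; it ignores acceleration-structure, texture and material memory, and takes 300 billion as the lower bound of ‘over 300 billion’.

```python
BYTES_PER_TRIANGLE = 3 * 3 * 4  # assumption: 3 vertices x (x, y, z) as float32, no index sharing
BYTES_PER_INSTANCE = 3 * 4 * 4  # assumption: one 3x4 float32 affine transform per placed tree

UNIQUE_TRIANGLES = 100_000_000        # 20+ trees at 2-4M triangles each, plus terrain
INSTANCES = 80_000                    # placed trees in the forest
RENDERED_TRIANGLES = 300_000_000_000  # "over 300 billion" once every instance is counted


def footprint(unique_tris: int, rendered_tris: int, instances: int) -> dict:
    """Bytes needed with instancing versus with every instance copied out ('flattened')."""
    instanced = unique_tris * BYTES_PER_TRIANGLE + instances * BYTES_PER_INSTANCE
    flattened = rendered_tris * BYTES_PER_TRIANGLE
    return {"instanced": instanced, "flattened": flattened, "ratio": flattened / instanced}


if __name__ == "__main__":
    # Sanity check: 300 billion over 80,000 instances is 3.75M triangles per tree,
    # inside the quoted 2-4 million range.
    print("avg triangles per instance:", RENDERED_TRIANGLES // INSTANCES)
    r = footprint(UNIQUE_TRIANGLES, RENDERED_TRIANGLES, INSTANCES)
    print(f"instanced: {r['instanced'] / 1e9:.2f} GB")
    print(f"flattened: {r['flattened'] / 1e12:.1f} TB (~{r['ratio']:.0f}x more)")
```

Under these assumptions the instanced scene needs roughly 3.6GB, comfortably inside a 48GB Quadro RTX 8000, while the flattened version would need around 10.8TB.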

Chaos Group says that Lavina will eventually be able to handle much more geometry, as it will also support scenes too large for GPU memory by running “out of core” and using system RAM while maintaining most of its speed.
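Chaos Group has not said how Lavina’s out-of-core mode works, so the following is only a generic illustration of the idea, not its implementation: treat GPU memory as a fixed-size cache of geometry chunks, upload chunks from system RAM on demand, and evict the least recently used chunk when the budget is full. If rays keep revisiting the same region, most requests are cache hits, which is how a scheme like this could keep most of its speed. The class and its parameters are hypothetical; Python’s `collections.OrderedDict` supplies the LRU ordering.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class OutOfCoreCache:
    """Hypothetical LRU cache standing in for GPU memory that holds geometry chunks."""

    def __init__(self, capacity_bytes: int, load_from_host: Callable[[Hashable], bytes]):
        self.capacity = capacity_bytes
        self.load_from_host = load_from_host  # slow path: fetch a chunk from system RAM
        self.resident: "OrderedDict[Hashable, bytes]" = OrderedDict()
        self.used = 0
        self.hits = 0
        self.misses = 0

    def get(self, chunk_id: Hashable) -> bytes:
        if chunk_id in self.resident:
            self.resident.move_to_end(chunk_id)  # mark as most recently used
            self.hits += 1
            return self.resident[chunk_id]
        self.misses += 1
        data = self.load_from_host(chunk_id)
        if len(data) > self.capacity:
            raise ValueError("chunk larger than the whole GPU budget")
        while self.used + len(data) > self.capacity:
            _, evicted = self.resident.popitem(last=False)  # drop least recently used
            self.used -= len(evicted)
        self.resident[chunk_id] = data
        self.used += len(data)
        return data


if __name__ == "__main__":
    host_ram = {i: bytes(100) for i in range(10)}  # ten 100-byte "geometry chunks"
    gpu = OutOfCoreCache(capacity_bytes=300, load_from_host=host_ram.__getitem__)
    for chunk in [0, 1, 2, 0, 1, 2, 3, 0]:         # rays revisiting nearby chunks
        gpu.get(chunk)
    print(f"hits={gpu.hits} misses={gpu.misses} resident={list(gpu.resident)}")
```

With room for three chunks, that access pattern produces three hits and five misses; the more coherent the rays, the higher the hit rate.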

Chaos Group’s findings from Lavina are already being applied to V-Ray GPU. V-Ray GPU will also be gaining the out-of-core work being pioneered on Lavina.

■ chaosgroup.com

Q&A with Glass Canvas

Technical Director Bill Nuttall discusses the London studio’s restless creative spirit, the kind of hardware required to render a 25,000-pixel trompe l’oeil hoarding – and the art of creating VR experiences so immersive they could kill

London’s Glass Canvas produces some of the world’s most beautiful architectural visualisations, from the interiors of Grade I-listed town houses to the exteriors of contemporary high-rises. As well as stills and animations, the studio has recently been expanding into VR work, on projects ranging from health and safety applications to presenting major urban developments.

Q: How did Glass Canvas get started?

A: The studio was founded in 2001 by Andrew Goodeve. Prior to setting up, Andrew was working as a visualiser at Foster + Partners, and after three years wanted to strike out on his own. At the time, there weren’t as many other architectural visualisation firms in London as there are today. I joined full-time in 2005. I was originally an architect, but preferred the more creative stages of the architectural process, in particular experimenting with the software and 3D modelling. I did a few freelance jobs for Glass Canvas, Andrew offered me a job, and I’ve been here ever since. Andrew and I are now co-owners.

Q: How would you summarise the kinds of jobs Glass Canvas does?

A: It really is pretty much everything across architectural visualisation. Last week, I was working on a masterplan in Casablanca, producing a two-and-a-half-minute animation, and this week, I’m working on some planning and marketing images of a mixed-use scheme in East London. Sometimes, it’s a loose creative brief where we are required to create the design almost in shorthand for a vision image or masterplan, sometimes it’s fully verified planning work, and at other times, it can be really conceptual animation. At any time, we typically have 30-40 projects going across the entire Glass Canvas Group.

Q: What software do you use in production?

A: We use Autodesk 3ds Max for modelling, along with plugins like Forest Pack and RailClone. Our renderer is Chaos Group’s V-Ray. Post-production is done in Adobe Photoshop. And we use the Adobe suite for animation (After Effects, Premiere Pro and Audition), plus SynthEyes for 3D camera tracking. For virtual reality work, we use Epic Games’ Unreal Engine, plus Autodesk ReCap for turning photographs into models for use in VR.

Q: What about hardware?

A: I assemble all our hardware; I have done for about ten years. Everything in our office is put together by me by hand, so it’s all hand-picked components, right up to our 30TB file server. The last four workstations I’ve put together have used AMD’s 16-core Ryzen Threadripper CPU – the 1950X – and 64GB of RAM. My graphics card of choice is currently AMD’s Radeon Pro WX 9100. It offers the highest possible viewport quality in 3ds Max and plenty of HBM2 framebuffer memory to boot.

Rendering for us is CPU-based, and the Ryzen Threadripper machines are just fantastic for that. The value for money is incomparable to anything that Intel makes. I wouldn’t want to make a render farm out of the Ryzen Threadripper chips, as I like to put in as many CPUs as possible, but they’re definitely comparable to the Intel Xeons that are going to farms when it comes to power.

Q: What is your internal render set-up?

A: We have a rack with around 400 cores in total. During the day, the artists can use them as an extension of their own workstations, then at night, they’re either rendering animation frames or large single-frame images: typically, 5,000 pixels by 3,750 pixels.
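For a sense of scale, a single 5,000 × 3,750 still is 18.75 megapixels. The sketch below works out the size of one full-float framebuffer and how many render buckets such a frame splits into when shared across roughly 400 cores. The four 32-bit channels per pixel and the 64-pixel bucket size are assumptions for illustration, not Glass Canvas’s or V-Ray’s actual settings, and the function name is hypothetical.

```python
import math


def frame_stats(width: int, height: int, cores: int,
                channels: int = 4, bytes_per_channel: int = 4, bucket: int = 64) -> dict:
    """Pixel count, one float RGBA framebuffer, and bucket count for a distributed still.

    channels, bytes_per_channel and bucket are illustrative assumptions.
    """
    pixels = width * height
    buckets = math.ceil(width / bucket) * math.ceil(height / bucket)  # edge buckets are partial
    return {
        "megapixels": pixels / 1e6,
        "framebuffer_mb": pixels * channels * bytes_per_channel / 1e6,
        "buckets": buckets,
        "buckets_per_core": buckets / cores,
    }


if __name__ == "__main__":
    print(frame_stats(5_000, 3_750, cores=400))
```

Under those assumptions each full-float render element is about 300MB and the frame divides into 4,661 buckets, just under 12 per core, so a still of this size can keep every core in the rack busy.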

I haven’t built any render nodes for several years, but the new ones would be made with AMD’s Epyc processors, given the opportunity. They’re cheaper than Intel’s offerings, and will offer the same or better levels of rendering power. Like Threadripper, they offer superior bang for your buck.

Q: What’s the most technically challenging job that Glass Canvas has worked on?

A: The City of Wolverhampton Commercial Gateway Master-plan was pretty intensive. It’s a large collection of buildings, then lots of Forest Pack objects. That really chews through memory, even though we try to make as much of the geometry as we can procedural. In the aerial view, the roofs are hand-modelled, but the facades are all RailClone objects. We had a team of four working on that one for over six weeks.

With every new project we like to deliver something more, be it detail, scope, quality or realism. Although typical job deadlines haven’t changed much in the last 15 years, client expectations have, which puts us in a continual cycle of upgrading our software, hardware and workflows.



1 For the redevelopment of Morley House, a Grade II-listed building on London’s Regent Street, Glass Canvas was commissioned to visualise the completed works to help attract and engage residential and retail occupiers.

2 To help promote the £110 million redevelopment of Luton Airport, Glass Canvas delivered CGIs, animation and 360 VR content.

3 The City of Wolverhampton Commercial Gateway Master-plan is one of the most technically challenging jobs that Glass Canvas has worked on, due to the complexity of the scene.

A: I don’t think it’s massively different to what you would see in a classical art gallery. One of the things you often see, especially with Renaissance art, is the use of two dominant colours: very often contrasting colours, like blues and oranges, or close harmonics, like purples and blues. And then a strong composition: the rule of thirds is still as relevant today as it’s always been.

It’s also important to study the works of great photographers, cinematographers and film-makers. Look for patterns and themes in their compositions, and make note of the best camera movements and edits. You know greatness when you see it: figuring out what creates it is the key.

Q: What was your most memorable Virtual Reality (VR) project?

A: … for a construction company, which involved taking photos of their equipment – really massive steamrollers where the front bit is as big as you – and turning them into animated models. It took one of our artists two days to turn around 100 photographs into a refined 3D model to use in Unreal Engine, via ReCap and with clean-up in 3ds Max. We were demoing the result to the client, and this poor person put the headset on, turned around, saw a steamroller coming in their direction, shouted out in panic, and ran across the room and into a wall.

Q: You’re not meant to kill the client during the demo, you know.

A: I think the client was actually quite impressed. That really is immersive.

A: VR is still very much trying to find its feet within architectural visualisation. Our last five VR projects have all been very different from each other. Our clients are going through the process of discovering what works best for them, so we’re trying a bit of everything, from room-scale setups to smartphones in cardboard goggles.

I don’t see much of an application for VR in 3D modelling for a while. Sitting there modelling in 3D space sounds amazing, but can you imagine having to wear a headset all day? It’s bad enough looking at a screen all day long without having it five centimetres away from your eyeballs.

But my predictions can be notoriously inaccurate. When I was at school, back in the 1980s, one of my predictions was that mountain bikes were just a fad. That tells you all you need to know.