M.Sc. Dissertation 2023-24


FOUNDING DIRECTOR:

Dr. Michael Weinstock

COURSE DIRECTORS:

Dr. Elif Erdine

Dr. Milad Showkatbakhsh

STUDIO TUTORS:

Paris Nikitidis

Felipe Oeyen

Dr. Alvaro Velasco Perez

Lorenzo Santelli

Fun Yuen

We extend our deepest gratitude to Course Directors Dr. Elif Erdine and Dr. Milad Showkatbakhsh, and to Founding Director Dr. Michael Weinstock, for their insightful and intellectually stimulating discussions. The tangential ideas arising from these conversations often led to equally, if not more, thought-provoking metaphorical reflections that encompassed a broader scope than the primary subject matter. We also wish to thank Alessio Erioli [Co-de-iT] for helping us envision alternative possibilities, and we express our sincere appreciation to our studio tutors and colleagues for their steadfast belief in the core principles of this dissertation.

GRADUATE SCHOOL PROGRAMMES

PROGRAMME:

EMERGENT TECHNOLOGIES AND DESIGN

YEAR:

2023-2024

COURSE TITLE:

M.Sc. Dissertation

DISSERTATION TITLE:

GAP IN THE CLOUD

STUDENT NAMES:

Burak Aydin [M.Arch.]

Mehmet Efe Meraki [M.Arch.]

Prakhar Patle [M.Sc.]

Rushil Patel [M.Arch.]

DECLARATION:

“I certify that this piece of work is entirely my/our own and that any quotation or paraphrase from the published or unpublished work of others is duly acknowledged.”

SIGNATURE OF STUDENT:

DATE:

20th September 2024

This thesis explores the interdependent relationship between data (its generation, storage, and consumption) and the use of space and energy within the urban fabric, focusing on London as one of the world's major data hubs. Past studies, current observations, and future projections point to a growing need to reconsider data centre typologies, which will eventually need to be re-integrated into the urban fabric where information is produced and processed. By challenging these traditionally isolated yet highly embedded typologies, the study develops a context-specific functional hybridization, cultivation for food production, to provoke mutual integration with the public through the developed material system combined with a space-making strategy.

Functional and spatial hybridization is enabled by reusing the excess heat generated by computational activity and retaining it using phase-change materials (PCM). The heat-retention performance of the system is further enhanced by developing a PCM-infilled Triply Periodic Minimal Surface (TPMS) panel system that passively regulates temperature, ensuring thermal comfort for the enveloped agricultural function. This creates a mutual energy loop within a closed system, reducing dependency on external resources.
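The heat-retention principle behind a PCM can be illustrated with the standard sensible-plus-latent heat balance for a material heated through its melting point: Q = m[c_s(T_m - T_1) + L + c_l(T_2 - T_m)]. The sketch below is a minimal illustration under stated assumptions: the mass, temperatures and material properties are hypothetical, roughly paraffin-like values, not the properties of the panel system developed in this study.

```python
def pcm_stored_heat_kj(mass_kg, t_start_c, t_end_c, t_melt_c,
                       cp_solid, cp_liquid, latent_heat):
    """Heat (kJ) absorbed by a PCM heated from t_start_c to t_end_c.

    cp_solid and cp_liquid are in kJ/(kg*K); latent_heat is in kJ/kg.
    Assumes t_start_c < t_melt_c < t_end_c, i.e. the PCM fully melts.
    """
    if not (t_start_c < t_melt_c < t_end_c):
        raise ValueError("expects the melting point inside the heating range")
    sensible_solid = cp_solid * (t_melt_c - t_start_c)   # warming the solid
    sensible_liquid = cp_liquid * (t_end_c - t_melt_c)   # warming the liquid
    return mass_kg * (sensible_solid + latent_heat + sensible_liquid)


# Hypothetical paraffin-like properties (assumed values, not measurements).
q = pcm_stored_heat_kj(mass_kg=10, t_start_c=18, t_end_c=30, t_melt_c=24,
                       cp_solid=2.0, cp_liquid=2.2, latent_heat=200.0)

# Same mass of water over the same range stores sensible heat only.
water = 10 * 4.18 * (30 - 18)

print(f"PCM: {q:.0f} kJ, water: {water:.1f} kJ")
```

With these assumed inputs the latent term (2,000 kJ) dominates the PCM total of about 2,252 kJ, roughly four times what the same mass of water stores over the same 12 K range, which is why a narrow comfort band suits latent storage.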

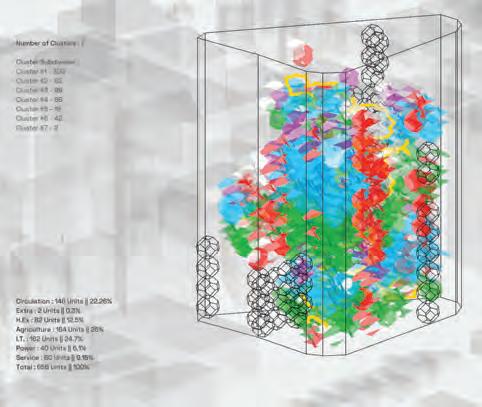

As mission-critical facilities, data centres require a highly modulated functional distribution, yet this is not reflected in their space-making practices, especially in urban fabrics where boundaries are pre-set. To address this challenge, the spatial experiments used a shape-grammar approach with an automated interpreter, developed to optimize the functional and spatial distribution of the designated space-making units according to site conditions.
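A shape grammar generates a layout by repeatedly applying labelled rewrite rules until none applies. The sketch below is a deliberately simplified, hypothetical interpreter: it works on symbolic labels in one dimension rather than on geometry, and the labels and rules (`SITE`, `CORE`, `BUFFER` and so on) are illustrative assumptions, not the grammar developed in this study.

```python
# Hypothetical rules: each label maps to the sequence of labels it rewrites into.
RULES = {
    "SITE": ["ACCESS", "CORE", "BUFFER"],
    "CORE": ["DATA_HALL", "MEP", "DATA_HALL"],
    "BUFFER": ["GREENHOUSE", "PUBLIC"],
}


def derive(axiom, rules, max_steps=10):
    """Rewrite the leftmost rewritable label until no rule applies."""
    state = [axiom]
    history = [list(state)]
    for _ in range(max_steps):
        for i, symbol in enumerate(state):
            if symbol in rules:
                state = state[:i] + rules[symbol] + state[i + 1:]
                history.append(list(state))
                break
        else:
            break  # no label matched any rule: the derivation is complete
    return state, history


final, history = derive("SITE", RULES)
for step in history:
    print(" ".join(step))
```

The derivation ends after three rewrites with the sequence ACCESS, DATA_HALL, MEP, DATA_HALL, GREENHOUSE, PUBLIC. A geometric interpreter would additionally check each rule application against site boundaries and adjacency constraints before accepting it.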

This parallel set of experiments produced a dynamic range of design spectra to enhance building performance and spatial qualities, supporting adaptability and responsiveness to the ever-changing demands of data, space and energy. By re-positioning data centres in the urban fabric through a re-imagined typology, the study aims to transform today’s unwieldy and isolated facilities into tomorrow’s integral components of urban ecosystems.

Keywords:

Data x Space x Energy, Phase-Change Material, Triply Periodic Minimal Surface, Shape-Grammar, Adaptability, Urban Ecosystem.

Since the earliest cave paintings, humankind has had an urge to reposit the information it receives and generates, and to transfer it tangibly to future receivers. This information has been embedded in various media that have continuously evolved throughout history.

Following the invention of writing, and through the transition from tablets to books, libraries served as common repository hubs for organizing information. For centuries, paper and the printing press remained the main means of storing information.

The inventions of the transistor and the integrated circuit (microchip) in the mid-twentieth century heralded the coming of the digital age. In the late 1990s, the transition occurred when digital storage surpassed paper in cost-effectiveness for storing information.1

This advancement enabled a global shift towards new ways to compute, store, and transfer information faster than ever. To manage the increasing resource and information traffic, new typologies such as data centres emerged in our built environments.

Additionally, the unprecedented power of information processing enabled us to decode humanity itself, revealing DNA as arguably the most efficient medium of information storage.2

2 “DNA: The Ultimate Data-Storage Solution,” Scientific American.

Looking at the evolution of information processing, storage, and transfer systems, it is apparent that humankind will continue its mission to process, store, and transfer information for future generations.

Tracing back to the fundamentals, the initial observation of this study is that, since antiquity, three fundamental concepts have been common to the evolution of information systems:

- Data,

- Space,

- Energy

All three are necessary to reposit a unit of information, whatever the medium.

The complex relationship between these factors raises several questions:

- Is there a hierarchy between them?

- How do they depend on one another?

The concepts of information and data are often used interchangeably in colloquial language, but it is critical to differentiate them to reveal their multi-layered structure.3

Although semantic debate continues over the finer distinctions, the general framework of how information is derived and expanded is captured by the “DIKW (data-information-knowledge-wisdom)” pyramid, whose precise origin, as Wallace notes, is uncertain.4

Through processes such as distilling, abstracting, processing, organizing, and interpreting, additional layers of organization and meaning are added at each step of the pyramid. Although variations have appeared over time, “data” always forms the base. It is recognized as the raw material derived by abstracting the surrounding environment into numbers, characters, bits, and symbols.

Data can be stored in multiple analogue forms or encoded digitally as binary digits. In this sense, data can be interpreted as a building block: like bricks, data are commodities that gain value when they are used and/or stored. By interrelating and structuring multiple data, we produce “information”. Patterns of information generate what is called knowledge, and with the ability to judge and act in context, wisdom is reached.

Data-brick analogy and its continuous development diagram (illustrated by the authors): data as abstracted element, information as linked data, knowledge as organized information, wisdom as applied knowledge.

[Fig. 03] Various classifications of data (illustrated by the authors).

Classifying data is complex in several respects. Data captured as they are, without having been processed in any way, are considered “raw data”. We are exposed to both raw and processed data through many channels.

“Metadata” is data about data, while the “private” and “open data” discourses address questions of accessibility for multiple stakeholders. “Structured data” resembles the way we store information in physical libraries with books. “Unstructured data”, by contrast, flows without a predefined model or context (text, audio-visual content, logs), and “semi-structured data” combines structured and unstructured elements. A lack of structure is not necessarily a negative feature, but, driven by the internet and expanding social media, around eighty percent (80%) of the data in circulation is labeled as semi-structured.5
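The difference between structured, semi-structured and unstructured data can be made concrete with toy examples using only the Python standard library. The records below are invented for illustration and do not come from any dataset used in this study.

```python
import csv
import io
import json

# Structured: a fixed schema; every record has the same fields, like a table.
structured = io.StringIO("sensor_id,temp_c\nA1,21.5\nA2,22.0\n")
rows = list(csv.DictReader(structured))

# Semi-structured: self-describing keys, but fields can vary between records.
semi_structured = json.loads(
    '[{"user": "u1", "post": "hello", "tags": ["london"]},'
    ' {"user": "u2", "post": "hi"}]'
)
keys = sorted({key for record in semi_structured for key in record})

# Unstructured: no predefined model; meaning has to be extracted.
unstructured = "Server room B felt warm this morning; fans were loud."

print(rows[0]["temp_c"])          # value read through a known column name
print(keys)                       # union of keys across uneven records
print(len(unstructured.split()))  # only crude measures without further parsing
```

The structured table can be queried by column name, the semi-structured records must be inspected for which keys they carry, and the free text yields little beyond a word count until it is processed further.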

Since all these labels are relative, and data can be both subjective and objective, Kitchin describes data as “socio-technical assemblages”.6

[Fig. 04] Various classifications of data (illustrated by the authors): socio-technical assemblage; metadata (collected and translated data about the data); private data; public data (available to access, use and share); structured data; semi-structured data; unstructured data (flowing without a context: text, AV, logs and more).

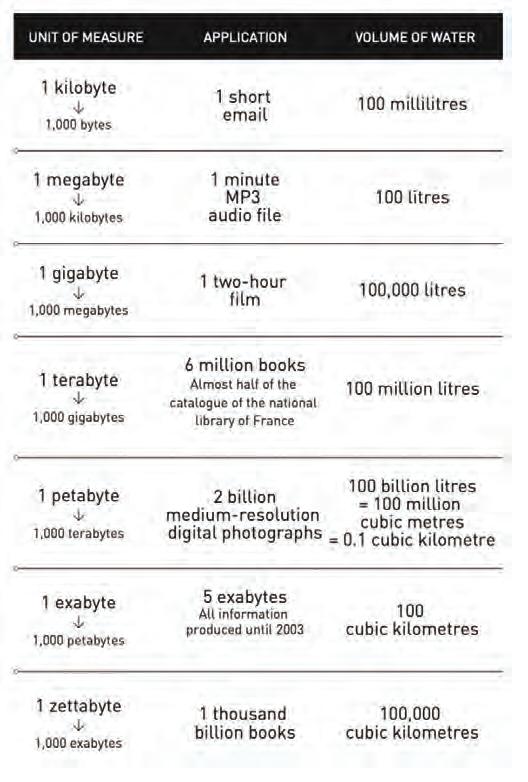

To convey the scale of the unfolding data landscape, let’s imagine a unit of data, a byte, as a water droplet. A gigabyte would then correspond to a rainwater tank.7

It is estimated that the data generated globally in 2025 will reach 175 zettabytes, equivalent in this analogy to the volume of 175 Gulfs of California, and the magnitude is projected to keep increasing sharply.
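The unit ladder behind these figures can be checked with a small conversion helper. A minimal sketch, assuming decimal SI prefixes (1 ZB = 10^21 bytes), which gives 1 ZB = 10^9 TB; binary prefixes (1 GiB = 2^30 bytes) would give slightly different numbers.

```python
# Decimal (SI) prefixes: exponent of 10 relative to one byte.
PREFIX_EXPONENT = {"B": 0, "KB": 3, "MB": 6, "GB": 9,
                   "TB": 12, "PB": 15, "EB": 18, "ZB": 21}


def convert(value, from_unit, to_unit):
    """Convert a data quantity between decimal byte units."""
    shift = PREFIX_EXPONENT[from_unit] - PREFIX_EXPONENT[to_unit]
    return value * 10 ** shift


print(convert(175, "ZB", "TB"))  # the projected 175 ZB expressed in terabytes
print(convert(1, "GB", "B"))     # bytes ("droplets") in one gigabyte
```

In the droplet analogy, the second value is the number of droplets in the gigabyte-sized rainwater tank, and the first shows that 175 ZB amounts to 175 billion terabytes.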

[Fig. 06] Yearly distribution of data generated and future projections, in zettabytes (1 ZB = 10^9 TB), and their analogous relationship (retrieved from the book: The

[Fig. 07] Data processing apparatuses comparison, the first and the most up-to-date computer (images retrieved from https://penntoday.upenn.edu/news/worlds-first-general-purpose-computer-turns-75/ (left) and https://japan-forward.com/a-look-at-the-magic-behind-fugaku-the-worlds-leading-supercomputer/ (right).

Just as “information” is embodied in objects, knowledge and know-how are embodied in persons and in networks of humans. Humankind’s capacity to acquire and reposit knowledge and expertise is limited, which raises the need to accumulate information in the form of data.8

The processes used to analyse, process, and store data rely heavily on apparatuses that are entwined with, and embedded within, ever-growing infrastructures.

“In a dark, tepid room lies an array of blinking cuboid machines speaking in code. They compute, store, and transmit immortalized memory bytes - information of today’s mortal lives. In this dark, tepid room lie physical matter supporting virtual terrains, whose boundaries unremittingly expand, transcending the perimeters of the space.” – Tang Jialei (Harvard GSD)

Digital information storage, like writing on paper, occupies physical space. It’s not the information itself that requires space, but the physical medium on which it’s stored. The more compact the writing, the more information can fit on the page, provided it remains legible. Similarly, on hard disks, information is stored magnetically, with tiny sections of the disk magnetized to represent binary data (1s and 0s).9
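The step from text to the 1s and 0s described above can be shown in a few lines. A minimal sketch, assuming UTF-8 text encoding; real drives add further layers (error-correcting codes, line coding) before anything is magnetized, so this shows only the logical bit pattern, not the physical one.

```python
def to_bits(text: str) -> str:
    """Encode text as UTF-8 bytes and show each byte as 8 binary digits."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))


print(to_bits("data"))  # four ASCII characters -> four bytes -> 32 bits
```

Each group of eight digits is one byte; on a hard disk, each digit corresponds to the magnetic state of a tiny region of the platter.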

The evolution of data processors from their inception to the present day is a remarkable journey of technological advancement and miniaturization. It begins in the 1940s with ENIAC (Electronic Numerical Integrator and Computer), the first electronic general-purpose computer. ENIAC, a machine weighing approximately 30 tons and occupying 1,800 square feet (about 167 m²), signalled the dawn of the computing era. Despite its enormous size, it could perform only 5,000 operations per second, a minuscule fraction of contemporary standards.

[Fig. 09] Hardware miniaturization.

[Fig. 10] Data production timeline.

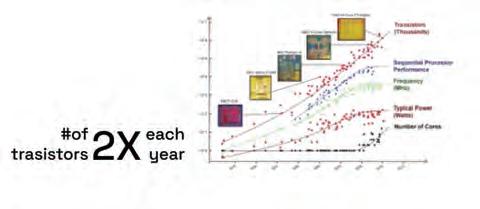

The past decades saw a relentless drive towards “miniaturization”, as described by Moore’s Law. In 1965, Gordon Moore observed that the number of transistors on a microchip was doubling at a regular rate, a forecast he revised in 1975 to roughly every two years, implying exponential increases in computing power.10 This prediction has largely held, propelling us into an era where billions of transistors can be integrated onto chips smaller than a fingernail. For example, the Intel 4004, introduced in 1971, was the world’s first microprocessor, containing 2,300 transistors and executing around 92,000 operations per second. In contrast, modern processors contain some 16 billion transistors and perform trillions of operations per second. This comparison illustrates the drastic advances in processing power and efficiency over the past few decades. Despite the tremendous progress achieved through miniaturization, the pursuit of more powerful and efficient processors persists.
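The transistor counts quoted above can be checked against the two-year doubling rule by computing the doubling period they imply. A minimal sketch; the 2020 end year for a 16-billion-transistor processor is an assumption, since the text does not date it.

```python
import math


def implied_doubling_period(count_start, count_end, year_start, year_end):
    """Years per doubling implied by exponential growth between two points."""
    doublings = math.log2(count_end / count_start)
    return (year_end - year_start) / doublings


# Intel 4004 (1971, 2,300 transistors) vs. a ~16-billion-transistor chip;
# the end year of 2020 is an illustrative assumption.
period = implied_doubling_period(2_300, 16e9, 1971, 2020)
print(f"{period:.2f} years per doubling")
```

With these inputs the count doubles about 22.7 times, giving a period of roughly 2.2 years, close to Moore's revised two-year rule.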

“Cartesian enclosure, a controlled space, shaped by technological rationales, coalescing in unassuming architectures organized according to borders that neglect the surrounding environment. Supporting Western man’s exceptionality, these architectures follow economic efficacy rationales and extractives logics, often at the expense of ethical and ecological awareness.” – Marina Otero Verzier.11

A data centre is a specialized facility designed to house an array of networked computer servers that store, process, and transmit data. These centres are equipped with redundant power supply systems, advanced cooling systems, and robust security measures to ensure continuous operation and data protection. At their core, data centres comprise servers, storage systems, networking infrastructure, and environmental controls, all functioning cohesively to support various applications and services. As we move deeper into the Information Age, data centres handle much more than communication alone.

Programmatically, a data centre is divided into four main sections: computing, power distribution and storage, climate control, and physical security.12 There may also be additional areas like small office spaces.

Data centres are vital components of the modern infrastructure supporting our interconnected physical and digital worlds. They vary widely in location and size, from urban to rural settings and from single servers to massive warehouse facilities. They require significant energy and utility support, such as electricity and water, to operate. A large-scale data centre can cover 1.3 million square feet and use as much power as a medium-sized town. Like earlier infrastructure, data centres are essential and resource-intensive. They are part of a global information and communication technology network that includes subsea internet cables, landing points, inland internet cables, and internet exchange points. Given the deep integration of internet technology in our economy, society, and culture, the construction of data centres is crucial.

[Fig. 11] Components of a data centre, from raw information (input) to processed information (output).

Supercomputer

E.N.I.A.C.

1946

Single Server

200-digits

167 m²

8 people

Mainframe

IBM 1401

1971

Single Server

18000 Character

30 m²

8 people

IBM SP1/SP2

Supercomputer

Client- Server

1970-1990s

Multiple Server

64MB to 2 GB

125 m²

4 people

Virtualized

Dell poweredge 800

1990-2010s

800 servers

2GB-1PB

450 m²

3 people

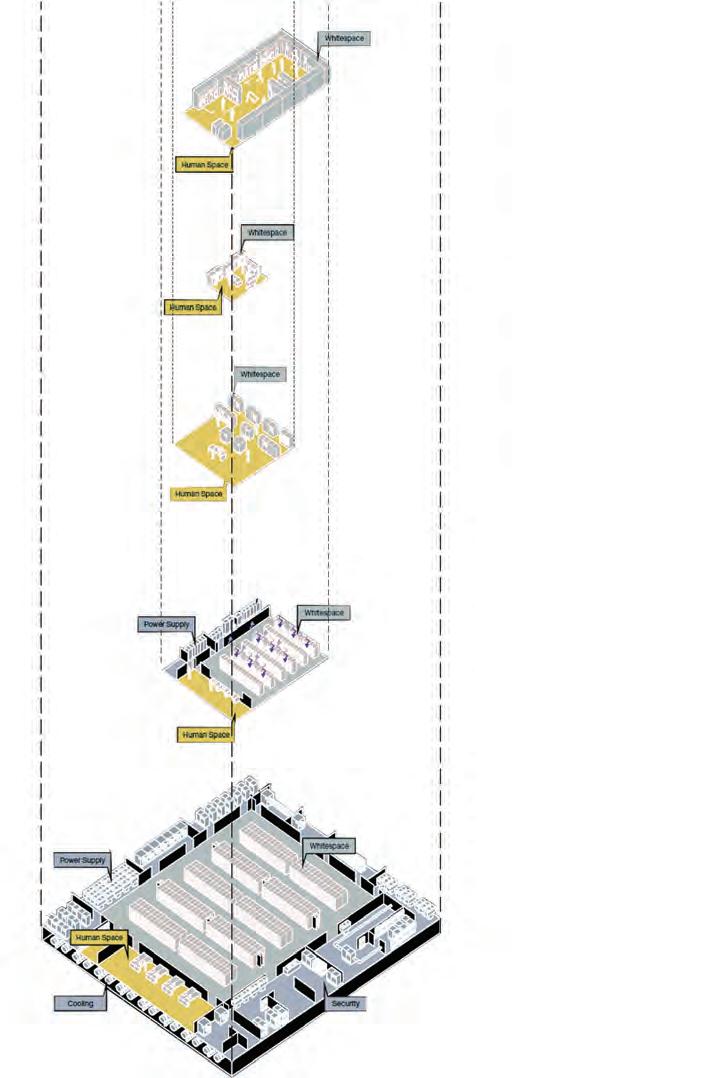

The evolution of data centres reflects a significant shift in the relationship between humans and computers, driven largely by advancements in information and communications technology (ICT). From the 1960s to the 1990s, early data centres were symbolized as relatively simple setups, featuring a limited number of servers and basic infrastructure. During this period, there was minimal whitespace (areas designated for equipment) and restricted space for human maintenance.

Cloud Switch SuperNAP

2010- Today

6000 Servers

1PB-15EB

325,000+ m²

1 person

In the 2000s and 2010s, data centres experienced substantial growth and increasing complexity. This era saw a rise in server capacity and the introduction of specialized cooling and power-supply systems. The need for expanded whitespace became evident, as did the need for dedicated areas for human operation and maintenance, reflecting the growing sophistication of these facilities.13 By the 2010s, data centres had evolved into large-scale operations with highly advanced infrastructure. Notably, over the course of this evolution, data centres have minimized the need for human input while prioritizing and expanding whitespace.

Given the interdependence of data and space, how does the equation adapt when energy is brought into context? A space that holds data must also be continuously fed with energy. This energy is converted to and from different states: how does this conversion relate to the distribution and consumption of that energy?

For a unit of data, the bit, energy requirements now outweigh space requirements, as digital means have become the consensus for reaching and sharing information across public and private domains. Although the means of storing data keep becoming more efficient, the demand for processing data is accelerating exponentially, so energy demand is continuously being questioned.

Data centres require well-defined metrics to accurately measure performance and address inefficiencies. Power Usage Effectiveness (PUE) is a key ratio comparing the total energy consumed by the data centre facility to the energy used by the IT equipment. PUE is crucial for evaluating and enhancing the energy efficiency of data centres. By comprehending and optimizing PUE, data centre operators can minimize environmental impact and improve overall performance.

PUE = Total Facility Energy / IT Equipment Energy, where:

- Total Facility Energy: all the energy used by the entire data centre, including:
  - IT Equipment: servers, storage, network switches, and other computing hardware.
  - Mechanical Systems: air conditioners, chillers, compressors, pumps, and other mechanical infrastructure.
  - Electrical Systems: UPS systems, power distribution units (PDUs), transformers, lighting, and other miscellaneous loads.
- IT Equipment Energy: the energy consumed directly by the IT equipment for data processing, storage, and networking.
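The PUE ratio can be computed directly from metered energy. A minimal sketch, assuming annual energy totals in kWh; the meter readings below are hypothetical, chosen only so that the result matches the 1.58 industry average cited in this section. The helper `overhead_share` (a name introduced here) converts PUE into the share of facility energy that never reaches the IT equipment, i.e. 1 - 1/PUE.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy includes IT energy, so it cannot be smaller")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy not consumed by IT equipment."""
    return 1 - 1 / pue_value


# Hypothetical annual readings (kWh), for illustration only.
it_kwh = 1_000_000          # servers, storage, network
mechanical_kwh = 450_000    # cooling, pumps, fans
electrical_kwh = 130_000    # UPS and transformer losses, lighting
value = pue(it_kwh + mechanical_kwh + electrical_kwh, it_kwh)
print(round(value, 2), f"{overhead_share(value):.1%}")
```

At a PUE of 1.58, about 36.7% of all energy entering the facility goes to cooling and power infrastructure; the same helper gives roughly 16.7% at the PUE of 1.2 reported by some hyperscale sites.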

[Fig. 13] PUE values and their corresponding efficiency values. (retrieved from https://submer.com/blog/howto-calculate-the-pue-of-a-datacenter/).

The major demand for data centres began in the early 2000s, driven by the dot-com bubble, internet expansion, e-commerce, and social media. The internet’s growth increased the need for data storage and processing, while e-commerce platforms like Amazon generated vast amounts of data requiring expansive infrastructure. Social media platforms such as Facebook contributed to a surge in data creation and sharing. By the mid-2000s, these factors had firmly established the need for more data centres. In the early 2010s, cloud computing services from AWS and Microsoft Azure and the rise of big data analytics further heightened the demand for advanced data centre infrastructure.

In the early 2000s, global data centre energy consumption more than doubled, driven primarily by the electricity demand of a rapidly growing number of installed servers.14 Because average PUE improved only marginally over the same period, electricity use by data centre infrastructure systems rose just as sharply.15 By 2010, however, the growth in server electricity consumption had slowed thanks to improved server power efficiency and higher levels of server virtualization, which also curbed the increase in the number of installed servers.16

[Fig. 14] Electricity use distribution of data centres throughout the years (retrieved from Geng, Hwaiyu. “Data Center Handbook: plan, design, build, and operations of a smart data center,” 2021).

[Fig. 15] Data centre energy efficiency throughout the years (retrieved from the report: Uptime Institute. Uptime Institute Global Data Centre Survey; 2018).

Energy Consumption and Efficiency

By 2018, IT devices, primarily servers and storage, dominated data centre energy consumption owing to rising demand for computational and storage services. However, energy consumption by data centre infrastructure systems decreased significantly from 2010 to 2018, owing to improvements in global average PUE values.17,18 Consequently, global data centre energy use increased by only 6% between 2010 and 2018, despite significant increases in data centre IP traffic, compute instances, and storage capacity.19 Uptime’s data shows that the industry average PUE has remained high, between 1.55 and 1.59, since around 2020. Despite ongoing industry modernization, this overall figure has remained almost static, in part because many older and less efficient legacy facilities hold the average up. In 2023, the industry average PUE stood at 1.58.20,21
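A 6% total rise over eight years corresponds to a very low annual growth rate, which sharpens the contrast with the growth in IP traffic and storage. A minimal sketch of the compound-annual-growth-rate arithmetic, assuming growth was spread evenly across the period:

```python
def cagr(growth_factor: float, years: float) -> float:
    """Compound annual growth rate implied by total growth over a period."""
    return growth_factor ** (1 / years) - 1


# +6% in total between 2010 and 2018 (8 years)
rate = cagr(1.06, 2018 - 2010)
print(f"{rate:.2%} per year")
```

The result is roughly 0.7% per year in global data centre energy use over that period.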

[Fig. 16] Global Electricity Demand from Data Centres, AI, and Cryptocurrencies, 2019-2026 (retrieved from International Energy Agency (IEA), “Electricity 2024: Analysis and Forecast to 2026,” 2024).

The International Energy Agency (IEA) estimates that data centres, cryptocurrencies, and artificial intelligence (AI) consumed approximately 460 TWh of electricity globally in 2022, representing nearly 2% of the world’s total electricity demand.

Looking ahead, the energy demand of data centres is expected to grow significantly due to rapid technological advancements and the evolution of digital services. The IEA projects that by 2026, global electricity consumption by data centres, cryptocurrencies, and AI could range between 620 and 1,050 TWh, with a baseline estimate of around 800 TWh. This increase, an additional 160 to 590 TWh compared to 2022 levels, is roughly equivalent to the electricity consumption of a country such as Sweden at the lower end or Germany at the upper end.22
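The IEA range can be unpacked into absolute increments and implied annual growth rates. A minimal sketch, assuming smooth compound growth over the four years from 2022 to 2026 (the actual path between the two endpoints is not given):

```python
BASE_2022_TWH = 460
SCENARIOS_2026_TWH = {"low": 620, "base": 800, "high": 1_050}
YEARS = 2026 - 2022

added = {}
rates = {}
for name, twh in SCENARIOS_2026_TWH.items():
    added[name] = twh - BASE_2022_TWH
    rates[name] = (twh / BASE_2022_TWH) ** (1 / YEARS) - 1
    print(f"{name}: +{added[name]} TWh, {rates[name]:.1%} per year")
```

This reproduces the 160 to 590 TWh increase and corresponds to roughly 7.7%, 14.8% and 22.9% annual growth for the low, baseline and high cases.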

Reducing Power Usage Effectiveness (PUE) and enhancing energy efficiency are increasingly significant priorities for data centres. Hyperscale colocation campuses and many large new colocation facilities are being designed with PUE values well below the industry average of 1.58. For instance, Scala Data Centres is constructing its Tamboré Campus in São Paulo, Brazil, targeting 450 MW with a PUE of 1.4. Cloud hyperscale data centres operated by companies such as Google, Amazon Web Services, and Microsoft already report PUE values of 1.2 or lower at some sites.23

Energy Resources

The electric power sector is the largest source of energy-related carbon dioxide (CO2) emissions globally and remains highly dependent on fossil fuels in many countries.24,25 As demand for data centre services rises, the climate impacts of data centres are likely to persist and grow. Some data centres are pursuing renewable electricity as part of climate commitments, alongside longstanding energy efficiency initiatives to manage their ongoing power requirements.

When considering renewable power sources, data centres generally face three key challenges:26

1.) Limited local access to a grid supplied by renewable energy.

2.) Insufficient land and rooftop area for on-site renewable generation.

3.) The intermittency of renewable supply, which threatens the uninterrupted power these facilities require.

Beyond energy, water is another essential resource under pressure. Data centres frequently struggle with water usage, especially in regions with limited water sources. Additionally, innovative data centre designs are exploring water-based cooling in place of air cooling, driven by the heat loads of technologies such as AI.

For example, training GPT-3 in Microsoft’s state-of-the-art U.S. data centres can directly evaporate 700,000 litres of clean freshwater. More critically, global AI demand may account for 4.2 to 6.6 billion cubic metres of water withdrawal in 2027, which is more than four to six times Denmark’s total annual water withdrawal, or about half of the United Kingdom’s. This is deeply concerning, as freshwater scarcity has become one of the most pressing challenges we all share, amid rapidly growing populations, depleting water resources, and ageing water infrastructure.

In summary, the data centre industry is at a crucial crossroads involving energy, environmental, and resource management. Historically, rising energy demands driven by technological advancements and growth of AI and supercomputing have led to increased consumption, despite efforts to improve PUE. Data centres also face significant challenges related to CO2 emissions and water usage. Addressing these environmental concerns through innovative cooling solutions, and achieving better PUE metrics, is essential for advancing sustainable data centre operations.

[Fig. 19] Intersections of data, space and energy.

The trilogy of these domains and their intersections hinted at the hidden potential of such a wide field. Through the literature and case studies, we questioned data centre building practices along with the potential value this typology can have in various contexts.

[Fig. 20] Inferences from the intersection of data, space and energy.

Apart from the primary domains of data, space and energy, new domains of contextual adaptation, waste energy utilization and spatial hybridization were three interrelated tangents that we extracted from the study of their intersections.

[Fig. 21] Global data centre distribution (retrieved from https://espace-mondialatlas.sciencespo.fr/en/topic-contrasts-and-inequalities/map-1C20-EN-locationof-data-centers-january-2018andnbsp.html)

Although data centres are distributed globally, they are predominantly clustered in North America, particularly the USA, and in Europe, where the highest concentration is found in the United Kingdom.

In Europe, most data centres are in northern countries such as the Netherlands, Germany, and France, but the UK stands out with the greatest density. It is also worth considering the volume of data exchange traffic, or other measures: the largest internet exchanges outside the United States include Frankfurt, Amsterdam, London, Moscow, and Tokyo.

Currently, the UK hosts more than 350 data centres, over 120 of which are situated in London. Demand for data processing and storage is surging due to the AI boom, driving significant growth and development of data centres.

[Fig. 22] Data centre hubs in northern Europe (retrieved and redrawn from https://www.datacentermap.com/united-kingdom/)

[Fig. 23] The continuous journey of Data (redrawn from thesis DataHub: Designing Data Centers for People and Cities, Harvard GSD)

The data journey begins with connected devices such as smartphones and smart cars. This data is initially sent to edge data centres, which are smaller and decentralized.27 Located close to end-users within the urban fabric, they minimize latency and improve data processing speed.

Because their location prioritises proximity to end-users, edge facilities introduce a new design factor beyond their miniaturisation. From there, the data progresses to large hyperscale data centres with vast data processing and storage capabilities.

The increasing use of “smart” devices, which demand rapid access to data and its processing, is driving research into new ways of managing latency. Edge computing has emerged as a promising approach to meet the latency demands of next-generation 5G networks by positioning storage and computing resources closer to end-users.
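Why proximity matters can be shown with a back-of-the-envelope propagation-delay estimate. A sketch under stated assumptions: signals in optical fibre travel at roughly 200,000 km/s (about two-thirds of the speed of light in a vacuum), the fibre path is taken as the stated distance, and routing, switching and processing delays are ignored, so the figures are lower bounds. The distances are hypothetical.

```python
# Assumption: ~200,000 km/s in fibre, i.e. 200 km per millisecond.
FIBRE_SPEED_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower-bound round-trip time from fibre propagation alone."""
    return 2 * distance_km / FIBRE_SPEED_KM_PER_MS


# Hypothetical distances between a user and the serving facility.
for label, km in [("edge, same city", 10),
                  ("regional", 300),
                  ("remote hyperscale", 1_500)]:
    print(f"{label}: >= {min_round_trip_ms(km):.2f} ms")
```

Propagation alone adds about 1 ms of round trip per 100 km, so when applications target latencies of only a few milliseconds, the computing has to sit within the urban fabric rather than in a distant campus.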

The location of data centres in urban areas like London is influenced by multiple factors, with facilities often being either retrofitted buildings or newly constructed, purpose-built structures depending on the availability of building stock. However, expansion and scaling requirements of AI and Supercomputing within London's urban context pose challenges due to limited plot sizes and existing building stocks.

Despite these challenges, London’s existing infrastructure and its central role in the global data network provide a strong foundation for expansion in an urban context and, therefore, for a re-imagined approach to data-processing typologies in our everyday surroundings.

Accordingly, questions about the current concerns related to existing typologies and examples were gathered to further define the “problem space” of the research:


[Fig. 26] Challenging the idea of absolute Modularity (retrieved from https://www.wired.com/2013/02/microsofts-data-center/)

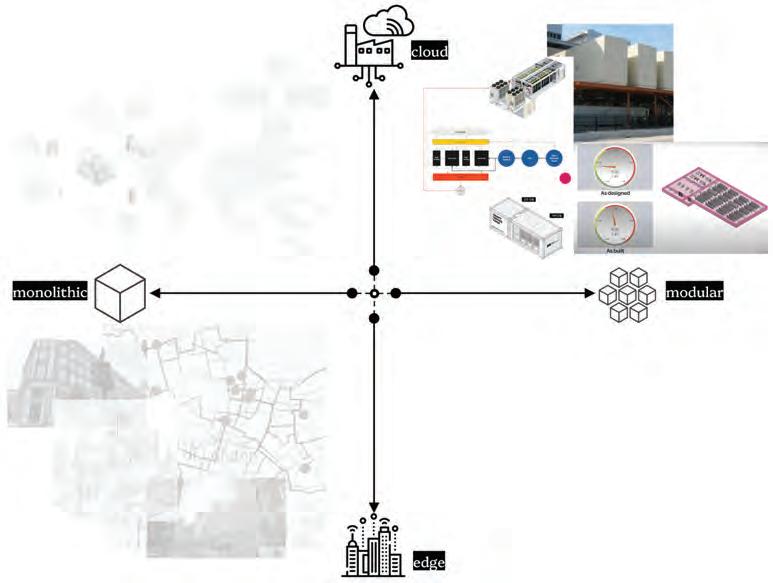

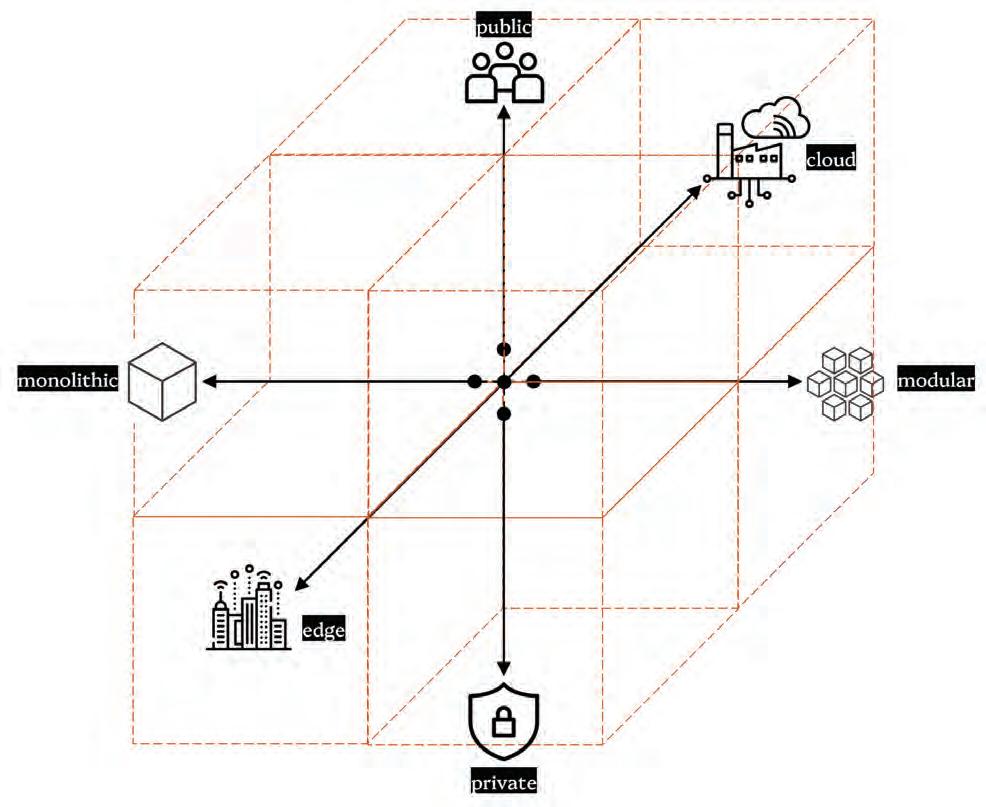

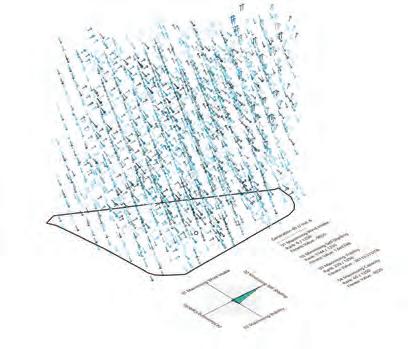

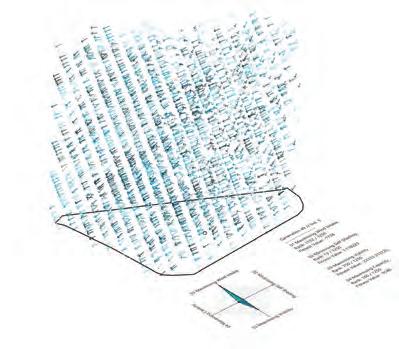

Juxtaposing the two fundamental spectra of features, a two-dimensional chart was proposed to plot and better dissect the related case studies.

Considering the insights retrieved from the “data-space-energy” intersection, the prominent qualities of data centre typologies can be better understood by mapping their features along these spectra into four quadrants:

1) Monolithic | Cloud

2) Monolithic | Edge

3) Modular | Cloud

4) Modular | Edge
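The two-spectra chart amounts to a simple two-axis classification. A hypothetical sketch: each case study receives a score between 0 and 1 on each spectrum and is assigned a quadrant by a threshold. The scores, the 0.5 threshold and the example names are illustrative assumptions, not the values used to plot the case studies.

```python
from typing import NamedTuple


class CaseStudy(NamedTuple):
    name: str
    modularity: float  # hypothetical score: 0 = monolithic, 1 = modular
    proximity: float   # hypothetical score: 0 = cloud/remote, 1 = edge


def quadrant(case: CaseStudy, threshold: float = 0.5) -> str:
    """Place a case study in one of the four quadrants of the chart."""
    form = "Modular" if case.modularity >= threshold else "Monolithic"
    location = "Edge" if case.proximity >= threshold else "Cloud"
    return f"{form} | {location}"


examples = [CaseStudy("Example A", 0.2, 0.1), CaseStudy("Example B", 0.8, 0.9)]
for case in examples:
    print(case.name, "->", quadrant(case))
```

Keeping the raw scores rather than only the quadrant label preserves where a case sits within its quadrant, which is what the two-dimensional chart shows visually.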

[Fig. 27] Juxtaposed spectra, the basis for plotting the case studies (generated by the authors)

[Fig. 28] Case studies extracted from around the world.

Although data centre plans and layouts are usually kept confidential due to their mission-critical nature and strategic importance, we have gathered multiple data centre (DC) examples from around the world.

[Fig. 29] LinkedIn Oregon DC (retrieved from https://www.linkedin.com/blog/engineering/developer-experience-productivity/lessons-learned-from-linkedins-data-center-journey)

[Fig. 30] Naver DC Project in South Korea (retrieved from https://www.datacenterdynamics.com/en/news/naver-plans-cloud-ring-second-korean-data-center/)

For this quadrant, following general desk research on traditional data centres, two examples were compared and contrasted:

1.) an existing 8 MW capacity facility in the United States;28

2.) a conceptual project for Naver DC in South Korea.29

Both facilities share common, fundamental function clusters and similar hierarchical structures, essential for their operation. These clusters include electrical and mechanical support areas, loading and shipping docks, conditioned storage, Points of Presence (POPs), and office spaces.

Although the design and construction methodologies are monolithic, the clustered units follow a repeating pattern so that they function as mini data centres within the governing monolithic envelope.

The architectonic elements serve only to shelter these mission-critical facilities. The main goal is easily maintainable, high-capacity computing in remote settings that ensures uninterrupted service.

[Fig. 31] Data Centre Project in Denver, color-coded plan, (retrieved from https://www.ckarchitect.com/denver-data-center-den01/o93ie2kwvzkqbcdnjrwfmzikepo55j)

[Fig. 32] Data Centre Project in Northern Virginia, color-coded plan, (retrieved from https://www.ckarchitect.com/northern-virginia-data-centernv01-1)

[Fig. 33] Data Centre project in Norway, color-coded plan, (retrieved from https://www.datacenterdynamics.com/en/news/keysourceand-namsos-datasenter-planning-norwegian-edgefacility/)

Initial layout surveys show that data centres, as mission-critical facilities, require a fundamental set of spatial and functional relationships. These functional distributions often repeat across different contexts, raising questions about the influence of contextual and environmental conditions on the design process.

[Fig. 34] Surveyed Plan of the case-study (retrieved from https://www.ckarchitect.com/8mw-datacenter-1)

[Fig. 35] The extracted access and functional distribution diagram (generated by the authors)

The survey of the 8 MW example reveals its mini-data-centre structure. This example consists of four similar cells and one smaller cell that provides the required redundant computing capacity. The 8 MW total is thus the combination of four equal cells of 2 MW each, with repeating IT, mechanical and electrical components. Internal and external access-control elements such as mantraps, as well as a linear layout, were also observed.
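The cell logic of the surveyed plan can be expressed as a simple capacity check. A minimal sketch, assuming four 2 MW cells as described above; the smaller fifth cell is omitted because its capacity is not stated, and the function name is hypothetical.

```python
def remaining_capacity_mw(cells_mw, failed_index=None):
    """Total capacity with one cell offline (None means all cells online)."""
    return sum(mw for i, mw in enumerate(cells_mw) if i != failed_index)


main_cells = [2.0, 2.0, 2.0, 2.0]  # four 2 MW cells, as in the surveyed plan
design = remaining_capacity_mw(main_cells)
degraded = remaining_capacity_mw(main_cells, failed_index=0)
print(f"design: {design} MW, one cell offline: {degraded} MW")
```

Taking one 2 MW cell offline leaves 6 MW of the 8 MW design capacity; this is the kind of shortfall that redundant capacity, such as the smaller fifth cell, is meant to mitigate.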

[Fig. 36] Naver DC project in South Korea, plan layout (retrieved from https://www.datacenterdynamics.com/en/news/naver-plans-cloud-ring-second-korean-data-center/)

The conceptual approach of a 'cloud-ring' primarily manifests in the facility’s plan organization. Circulation spaces are designed to enable maintenance robots to navigate through the data halls, with human involvement deliberately minimized to reduce unplanned interference and optimize facility management. However, the introduction of social functions within the ring seems contradictory to this intent. While the ring is highly isolated from human factors, the inclusion of such spaces raises questions about their practical role and necessity within a system engineered to prioritize automation over human interaction.

[Fig. 37] The extracted access and functional distribution diagram (generated by the authors)

Despite their advanced designs, both case studies reveal significant limitations in terms of flexibility and scalability. The US data centre's interconnected cells, each connected to 2 MW Power Distribution Units (PODs), restrict the upgradability of individual components because they are tightly integrated without a common spine. This rigid structure makes it difficult to adapt to new technologies or to scale operations efficiently, posing long-term operational challenges. Similarly, the Naver DC plan in South Korea, though innovative in its integration of smart farming systems and water clusters for heat utilization and cooling, fails to address scalability comprehensively.

We question the current approach to space-making in these monolithic data centres, highlighting missed opportunities for innovative design practices that could unlock hidden potentials. Both facilities prioritize functional clustering and architectural shelter, but they fall short in exploring flexible and scalable (both up and down) configurations.

To better understand how data centres are embedded in London, we examined a building in Angel that looks like many others in the area. Although its window articulation makes the façade resemble a housing block, it is in fact a camouflaged data centre. The contrast between the street-facing façade and the back door and roof portrays a "Frankenstein" condition.

It was observed that “monolithic-edge” data centres are embedded in urban fabrics. Deployed on the existing grid that we share, these typologies are hidden in our everyday surroundings. Despite being in cities, like their cloud data centre counterparts, they do not respond to any contextual input from their surroundings. Due to the lack of buildable area in dense urban fabrics, the construction method has shifted towards retrofitting existing buildings to accommodate the infrastructural necessities of these data centres. Many examples suffer from space constraints that are strictly pre-defined by the buildings they occupy. This not only results in expansion issues but also leads to “over-scaling.” In these typologies, the complex web of components and spatial relations makes scaling down as difficult as scaling up. Overall, as long-standing monolithic construction practices have moved into cities, retrofitting existing buildings in isolation from their surroundings has produced data centres that are neither flexible nor responsive.

[Fig. 39] Level3 DC in Angel, London (generated by the authors via Google Earth imagery)

The pre-set conditions of the existing envelope also make it harder to fit in the required infrastructure in the most cost- and energy-efficient way that best practice would demand.

(Figures retrieved from https://www.ckarchitect.com/digital-capital-partners-dcp03-2)

On the other hand, whether purpose-built or retrofitted, many examples suffer from spatial constraints that are strictly pre-defined by the buildings they are "fitted" into.


[Fig. 44] A Microsoft Cloud Computing Facility in Virginia (retrieved from https://baxtel.com/data-center/microsoft-azure/photos)

[Fig. 45] A Microsoft Cloud Computing Facility example (retrieved from https://www.cnet.com/culture/microsoft-boxing-up-its-azure-cloud/)

In modular-cloud data centres, studies highlight the use of prefabricated modular systems that can easily be plugged into or out of the existing structure to adjust capacity, thereby accommodating demand fluctuations.

A case study of Microsoft’s data centre illustrates this approach. The facility resembles a warehouse, with certain fixed functions and spaces that remain unaffected by changes in computational demand. Within this warehouse, various IT container boxes are arranged, with their spatial configurations constantly adjusted to accommodate more containers and provide the flexibility needed to scale up or down based on demand. Additionally, these warehouses are connected to external power supply modules with cooling functions, which help meet the increased power requirements of the IT units.

[Fig. 46] An additive-modular example project (retrieved from https://www.ckarchitect.com/containerized-data-center1)

This example highlights the importance of sustaining the critical functional relationships between the IT, Heat Exchanger, and Power Delivery units. Rather than zoning functions within a shelter, the approach avoids establishing rigid boundaries, which makes it comparatively more adaptable to evolving needs and technologies. Still, as in the previous cases, no contextual input informs the overall scheme, which limits its potential as an effective edge counterpart.

[Fig. 47] A sheltered modular example, surveyed and extracted (retrieved from https://www.se.com/uk/en/work/solutions/for-business/data-centers-and-networks/modular/)

[Fig. 48] Additional modular examples, surveyed and extracted (retrieved from https://koreajoongangdaily.joins.com/2020/07/23/business/tech/Naver-cloudIT/20200723183308292.html)

These highly engineered modules perform as expected, significantly enhancing data centre performance, and are therefore considered a promising solution. However, despite their ease of initial scaling, the system lacks a central framework that would allow its spatial organization to be re-purposed. This limitation restricts functional interplay and the integration of hybrid functions. The absence of a unifying structure presents challenges for future scaling, both mechanical and architectural, particularly in addressing unpredictable future demands or 'unknown projectiles'.

In the fourth quadrant of the chart, there are no “modular-edge” data centre examples that treat modularity as an opportunity to scale both up and down in the dense urban fabric of cities. With prefabrication as a core principle to ensure expected performance values, data centre modules (generally containers) are typically deployed temporarily for specific time frames. Stacking containers as they are, however, brings challenges during the construction process, since it is much harder to replace entire prefabricated volumes than their parts. Within the boundaries of this quadrant, pursuing adaptability for edge computing through a modular approach holds potential, though the core concept of the “module” must be revisited.

In addition, a third spectrum is proposed, based on initial insights regarding the lack of public interaction, to better define our domain of interest. To frame and juxtapose this third dimension, the degree of public interaction is introduced as an additional axis.

Combining this with the first two dimensions, the resulting three-dimensional chart helps to frame the research question and its area of focus.

[Fig. 51] DC distribution in Greater London, redrawn from "Using data centres for combined heating and cooling: an investigation for London"31

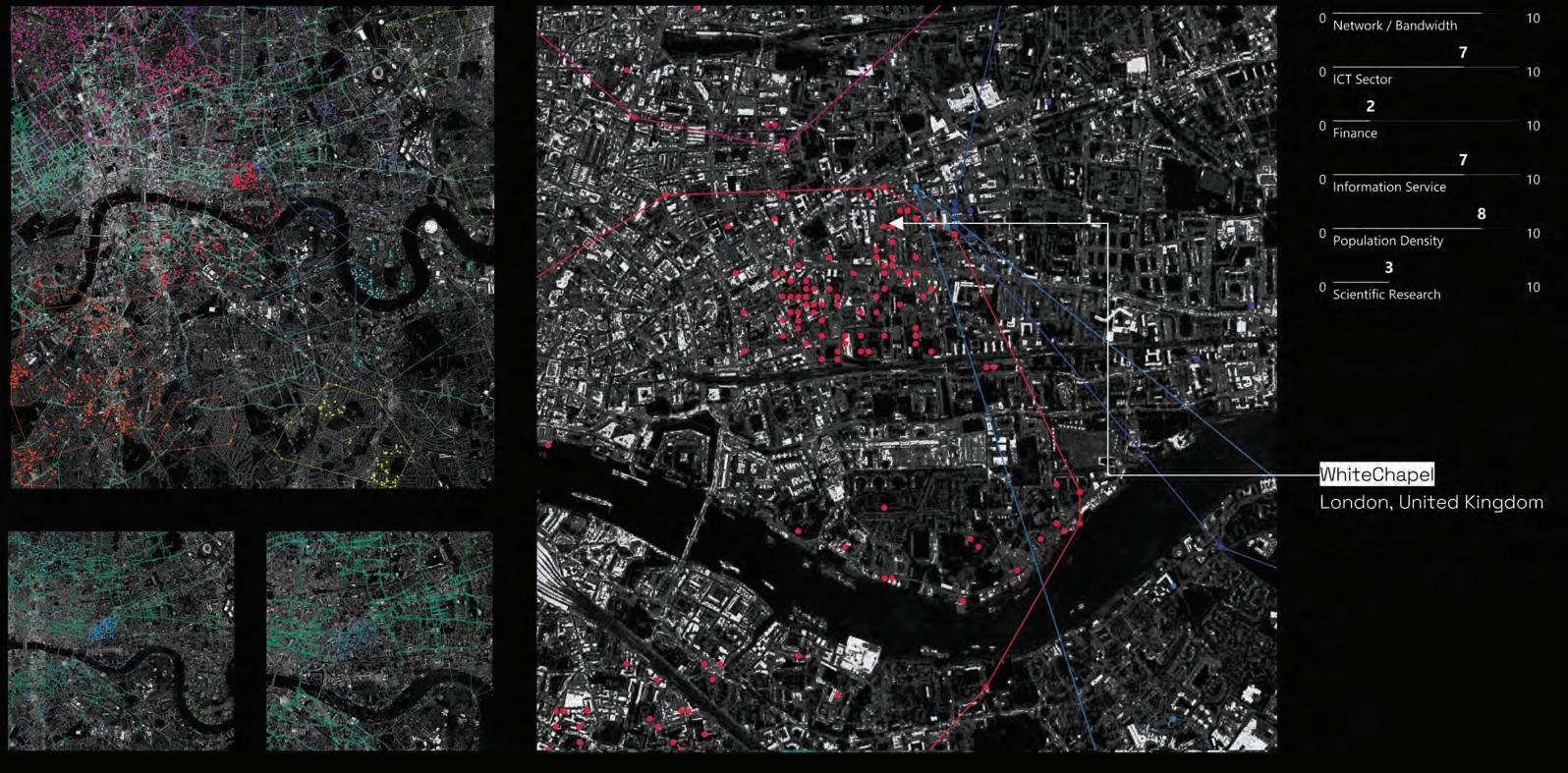

Plotting the data centres reveals that most data processing and edge computing take place in Central London, near the City of London.

In London, data centres account for a significant share of the city's electricity consumption. Most of the energy consumed by data centres is converted into heat; current cooling technologies, both air- and liquid-based, remove this heat, which is then often released into the atmosphere, representing a potential waste of energy. Effective heat recovery, combined with the hybridization of a program that citizens can engage with, can significantly reduce the carbon footprint of data centres while contributing to the public good.30

[Fig. 52] Area of allotments (m² per person) in Greater London (redrawn from the article: Urban agriculture: Declining opportunity and increasing demand 32)

In London, the per-person distribution of allotments for cultivation shows that areas with high population density and concentrations of office buildings cannot provide sufficient allotment area.

Although these zones have high data processing and significant food consumption, they have low food production capabilities.

This observation hinted at a potential hybrid function for edge computing typologies, tailored to public need.

[Fig. 53] Image courtesy of Solomon R. Guggenheim Museum (retrieved from https://metalocus.es/sites/default/files/metalocus_countryside_koolhaas_guggenheim_01.jpg)

In the past, agriculture was considered alien to the city, confined to fields at its boundaries. Today, however, the city and agriculture are increasingly conceived as a whole. City and farm are coming together to the point where one could argue for thinking of agricultural production within urban space itself.

[Fig. 54] Juxtaposed Maps (generated by the authors utilizing prior two figures)

Both data centres and farms require backup power systems to maintain operations during primary power failures. Data centres need effective airflow management and temperature control to ensure servers function efficiently, while farms need these systems to keep plants healthy. Security measures are crucial for both, with cannabis facilities potentially requiring security levels comparable to data centres.33

Historically, early indoor farms utilized data centre equipment due to the lack of specialized farming equipment. Net Zero Agriculture’s systems, inspired by IT rack designs, are used in shipping containers. Companies like ABB, Air2O, and Schneider, which specialize in UPS and HVAC systems, serve both sectors.

Integrating urban farms with data centres can transform these typically secluded, inaccessible spaces into visible and functional parts of the community, effectively breaking the notion of data centres as "hidden entities." By allocating space for community gardens, residents can engage directly with the facility, growing their own produce and fostering a connection to the site.34

These allocations serve as social hubs, promoting interaction and collaboration. Additionally, regularly scheduled farmers' markets within the integrated facility can showcase and sell produce grown on-site, drawing visitors and creating a lively, market-like atmosphere. This visibility and community engagement make the data centre an active, integral part of the urban environment.

Research Question

How can we generate a scalable data centre typology in the urban fabric of London that defines a public interface by utilizing excess heat for cultivation purposes?

In London, data centres account for a significant share of the city's electricity consumption. London's data centres, including major clusters in the Docklands and nearby Slough, are part of the UK's broader data centre infrastructure, which in total consumes approximately 12 TWh of electricity annually.35 Most of the energy consumed by data centres is converted into heat; current cooling technologies, both air- and liquid-based, remove this heat, which is then often released into the atmosphere, representing a potential waste of energy.
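For a rough sense of scale, the annual figure above can be converted into an average continuous load. The sketch below assumes the consumption is spread evenly over the 8,760 hours of a year, which ignores the daily peaks discussed later; it is an illustrative calculation, not a figure taken from the cited source.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 h; leap years ignored


def average_power_gw(annual_energy_twh: float) -> float:
    """Convert annual energy use (TWh per year) into an average continuous load (GW)."""
    # 1 TWh = 1,000 GWh, and GWh divided by hours gives GW
    return annual_energy_twh * 1000 / HOURS_PER_YEAR


uk_average_load = average_power_gw(12.0)
print(f"Average UK data centre load: {uk_average_load:.2f} GW")
```

Under that even-spread assumption, 12 TWh per year corresponds to roughly 1.37 GW of continuous demand, most of which ends up as low-grade heat.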

Heat exchangers and heat pumps are commonly used technologies for capturing and repurposing waste heat from data centres. These systems can transfer heat from the data centre to a secondary use, such as heating buildings or greenhouses. Effective heat recovery can significantly reduce the carbon footprint of data centres.

Both data centres and farms require backup power systems to maintain operations during primary power failures. Data centres need effective airflow management and temperature control to ensure servers function efficiently, while farms need these systems to keep plants healthy. Security measures are crucial for both, with cannabis facilities potentially requiring security levels comparable to data centres. Historically, early indoor farms utilized data centre equipment due to the lack of specialized farming equipment. Net Zero Agriculture’s systems, inspired by IT rack designs, are used in shipping containers. Companies like ABB, Air2O, and Schneider, which specialize in UPS and HVAC systems, serve both sectors36.

Integrating urban farms with data centres can transform these typically secluded, inaccessible spaces into visible and functional parts of the community, effectively breaking the notion of data centres as “hidden entities.” By allocating space for community gardens, residents can engage directly with the facility, growing their own produce and fostering a connection to the site. These gardens serve as social hubs, promoting interaction and collaboration37 . Additionally, regularly scheduled farmers’ markets within the integrated facility can showcase and sell produce grown on-site, drawing visitors and creating a lively, market-like atmosphere. This visibility and community engagement make the data centre an active, integral part of the urban environment.

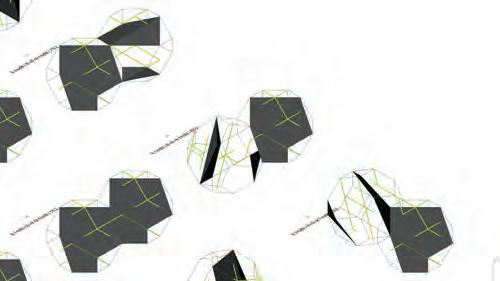

The site selection method in this study uses data sampling by overlaying multiple maps to identify optimal locations for a data-processing typology that hybridizes a cultivation function. In this way, relevant maps are collected from various sources and evaluated based on their legend information.

A grid of sampling points across Central London is generated, and the input data from each map are extracted and juxtaposed. Each sampling point is attributed with the corresponding extracted values. The maps, serving as source criteria, are weighted according to their relevance to the designated goals, and the weighted values are summed into a total score for each sample point. These goals aim to ensure that the chosen locations enhance operational efficiency, community integration, and environmental benefits. The highest-scoring points are then used as centre points around which potential sites are identified.
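The weighted overlay can be sketched as follows, assuming each map has already been sampled at the same grid of points. The min-max normalization, the criterion names, the values and the weights below are hypothetical placeholders; the dissertation's actual maps and weights are not reproduced here.

```python
import numpy as np


def score_sample_points(layers, weights):
    """Weighted sum of criterion maps sampled on the same grid of points.

    layers:  criterion name -> 2D array of raw values read from that map's legend
    weights: criterion name -> relevance weight of that criterion
    """
    total = np.zeros(next(iter(layers.values())).shape, dtype=float)
    for name, raw in layers.items():
        values = raw.astype(float)
        span = values.max() - values.min()
        # Min-max normalization puts maps with different legends on a common 0..1 scale
        normalized = (values - values.min()) / span if span > 0 else np.zeros_like(values)
        total += weights[name] * normalized
    return total


def top_sample_points(scores, k):
    """Grid indices (row, col) of the k highest-scoring sample points."""
    order = np.argsort(scores, axis=None)[::-1][:k]
    return [tuple(int(i) for i in np.unravel_index(idx, scores.shape)) for idx in order]


# Hypothetical 3 x 3 sample grid with two illustrative criteria
layers = {
    "population_density": np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]]),
    "allotment_shortfall": np.array([[0, 0, 0], [0, 1, 0], [0, 0, 2]]),
}
weights = {"population_density": 0.7, "allotment_shortfall": 0.3}

scores = score_sample_points(layers, weights)
print(top_sample_points(scores, 3))
```

The returned indices would then serve as the centre points around which candidate sites are drawn.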

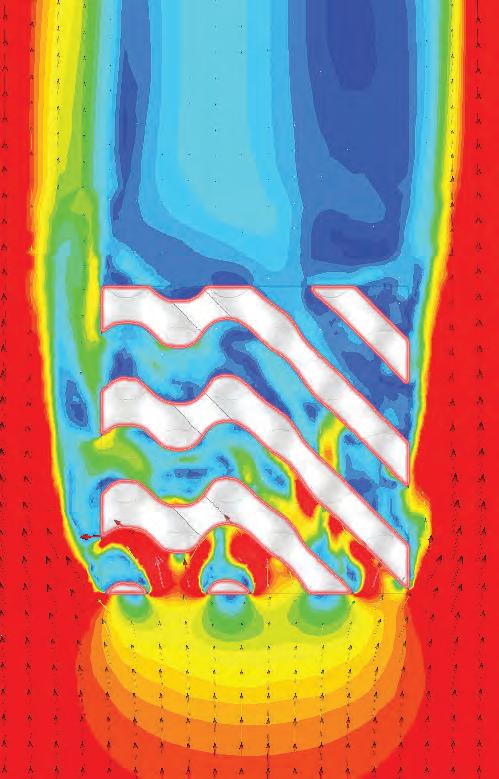

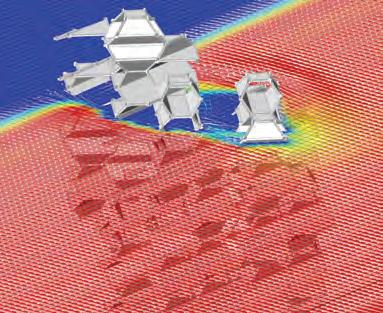

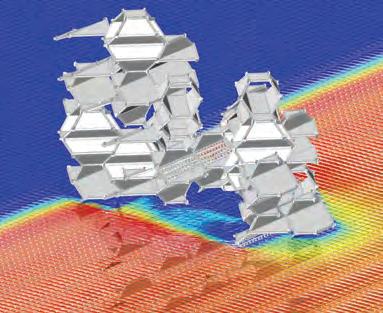

Computational Fluid Dynamics (CFD) analysis uses numerical models to predict how an input geometry responds to fluid flow and heat transfer. It examines several fluid flow properties, including temperature, pressure, velocity, and density.38

This methodology is implemented in different scales to support different stages of the design development. The implementation scale varies from the urban context level of London to the local assembly level of the proposed material system.

When needed, Fast Fluid Dynamics (FFD) methods were also used to efficiently predict, compare and contrast the performance of the input geometry while using less computing power.

Heat transfer mechanisms describe how thermal energy moves within a medium and from one medium to another, following the principles of thermodynamics. This research primarily relies on the second law of thermodynamics, under which heat flows spontaneously from the hotter medium to the colder one during thermal contact, and the exchange continues until thermal equilibrium is reached.39
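A minimal worked example of reaching thermal equilibrium: for bodies in contact within an isolated system, the heat lost by the hotter bodies equals the heat gained by the colder ones, so the final temperature is the heat-capacity-weighted mean of the initial temperatures. The sketch assumes no heat losses and no phase change (the PCMs discussed later break the second assumption); the masses and temperatures are hypothetical.

```python
def equilibrium_temperature(bodies):
    """Final common temperature of bodies in thermal contact.

    bodies: iterable of (mass_g, specific_heat_J_per_gK, initial_temperature_C).
    Assumes an isolated system (no losses to the surroundings) and no phase change.
    """
    bodies = list(bodies)
    heat_capacity = sum(m * c for m, c, _ in bodies)  # total m*c, J/K
    weighted = sum(m * c * t for m, c, t in bodies)
    # Energy balance: sum(m*c*(T_eq - T_i)) = 0  ->  T_eq = sum(m*c*T_i) / sum(m*c)
    return weighted / heat_capacity


C_WATER = 4.186  # J/(g·K), approximate value for liquid water

# Hypothetical example: 2 kg of water at 50 °C in contact with 1 kg of water at 20 °C
t_eq = equilibrium_temperature([(2000, C_WATER, 50.0), (1000, C_WATER, 20.0)])
print(f"{t_eq:.1f} °C")
```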

Computational workflows incorporating specialized equations are used for a quick and accurate understanding of these mechanisms, enabling a performance-driven design process.

The design criteria for the proposed material system depended heavily on how thermal energy behaves within it. Design optimizations to facilitate this movement were implemented based on the outcomes of this analytical study.

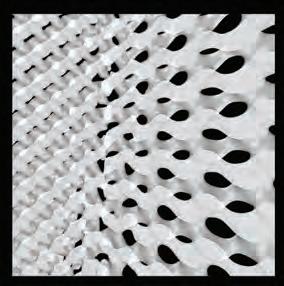

This exploration included additive manufacturing of complex geometries such as minimal surfaces, lattice structures based on triply periodic minimal surfaces (TPMS), and their compositions. Minimal surfaces locally minimize their area, having zero mean curvature; TPMS repeat this property periodically in three dimensions, which poses considerable fabrication challenges for conventional methods.40 Lattice structures, made up of repeating unit cells, offer a combination of lightweight properties and high strength.
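A common way to generate TPMS geometry for fabrication is to sample an implicit (level-set) function on a voxel grid. The sketch below uses the widely used trigonometric approximation of the Schoen gyroid and thickens it into a sheet by keeping voxels where |f| falls below a threshold; the resolution and thickness values are hypothetical, and this is not the authors' modelling workflow.

```python
import numpy as np


def gyroid(x, y, z):
    """Trigonometric level-set approximation of the Schoen gyroid (surface where value == 0)."""
    return np.sin(x) * np.cos(y) + np.sin(y) * np.cos(z) + np.sin(z) * np.cos(x)


def sheet_gyroid_voxels(cells=1, resolution=48, thickness=0.3):
    """Boolean voxel grid of a gyroid sheet lattice.

    One unit cell spans 2*pi along each axis; a voxel is solid where |f| <= thickness.
    """
    axis = np.linspace(0.0, 2 * np.pi * cells, resolution * cells, endpoint=False)
    x, y, z = np.meshgrid(axis, axis, axis, indexing="ij")
    return np.abs(gyroid(x, y, z)) <= thickness


voxels = sheet_gyroid_voxels()
print(f"solid volume fraction: {voxels.mean():.2f}")
```

Raising `thickness` increases the solid fraction, which is one simple lever for trading weight against strength in such lattices.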

The intricacy of these geometries makes additive manufacturing essential, as it eliminates the need for numerous unique formworks or jigs, increasing stability and performance while reducing fabrication time and material waste.

To facilitate hybridized cultivation, this study explored the potential integration of phase-changing materials (PCMs). The selection of suitable PCMs within specific temperature ranges involved a series of material experiments, guided by the heat characteristics obtained from relevant datasheets.

Additionally, the latent heat capacity, cycle stability, and volumetric changes during phase transitions of the selected materials are examined through material experiments using multiple data channels, including continuous logging thermometers and thermal imaging methods.
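The logged temperature curves can be read for the phase-change window, since a PCM holds a nearly constant temperature while it melts or solidifies. The following is a minimal sketch, assuming evenly logged (time, temperature) samples; the function name, the 0.01 °C/s flatness threshold and the synthetic heating curve are all hypothetical and are not the authors' measurements or procedure.

```python
import numpy as np


def longest_plateau(time_s, temp_c, max_rate=0.01):
    """Indices (start, end) of the longest stretch where |dT/dt| <= max_rate (°C/s).

    During melting or solidification a PCM holds a nearly constant temperature,
    so the longest flat stretch of a logged curve approximates the phase change window.
    """
    rate = np.abs(np.diff(temp_c) / np.diff(time_s))  # rate[i] spans samples i..i+1
    flat = rate <= max_rate
    best_start, best_end = 0, 0
    run_start = None
    for i, is_flat in enumerate(flat):
        if is_flat and run_start is None:
            run_start = i
        elif not is_flat and run_start is not None:
            if i - run_start > best_end - best_start:
                best_start, best_end = run_start, i
            run_start = None
    if run_start is not None and len(flat) - run_start > best_end - best_start:
        best_start, best_end = run_start, len(flat)
    return best_start, best_end


# Synthetic heating log (not measured data): ramp, 240 s plateau at 29 °C, ramp
t = np.arange(600.0)
temp = np.interp(t, [0, 180, 420, 600], [20.0, 29.0, 29.0, 38.0])
start, end = longest_plateau(t, temp)
print(f"plateau from {t[start]:.0f} s to {t[end]:.0f} s at about {temp[start]:.1f} °C")
```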

Evolutionary multi-objective optimization involves multi-criteria decision-making. Using principles of genetic evolution and an evolutionary multi-objective optimization engine, multiple solutions are generated, tested, and evaluated based on their performance against the specified objectives.41

The workflow for the design development phase required an evolutionary multi-objective optimization process to generate, compare, and contrast global assembly options in relation to the established and weighted objectives.

To achieve this, Wallacei, an evolutionary engine plug-in for Grasshopper 3D, was utilized. It also allows users to select, reconstruct, and output any phenotype from the population after the simulation is complete.
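Wallacei's internal engine is not reproduced here, but comparing options against several objectives rests on Pareto dominance: an option is kept only if no other option is at least as good on every objective and strictly better on at least one. The sketch below filters a set of hypothetical assembly options down to that non-dominated front; the option names and objective values are illustrative only.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(population):
    """Names of the non-dominated solutions in a {name: objective_vector} mapping."""
    return [
        name
        for name, objectives in population.items()
        if not any(dominates(other, objectives) for other in population.values())
    ]


# Hypothetical assembly options scored on two objectives to minimize,
# e.g. (heat loss, average travel distance); values are illustrative only
population = {
    "A": (3.0, 5.0),
    "B": (2.0, 6.0),
    "C": (4.0, 4.0),
    "D": (4.0, 6.0),  # dominated by A
    "E": (3.5, 5.5),  # dominated by A
}
print(pareto_front(population))  # ['A', 'B', 'C']
```

None of the front members is better on every objective, which is why a final choice among them still needs the weighting described above.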

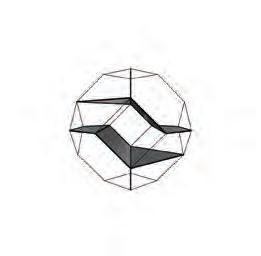

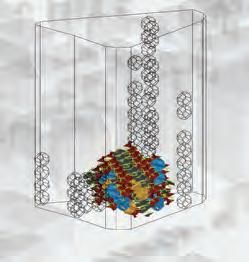

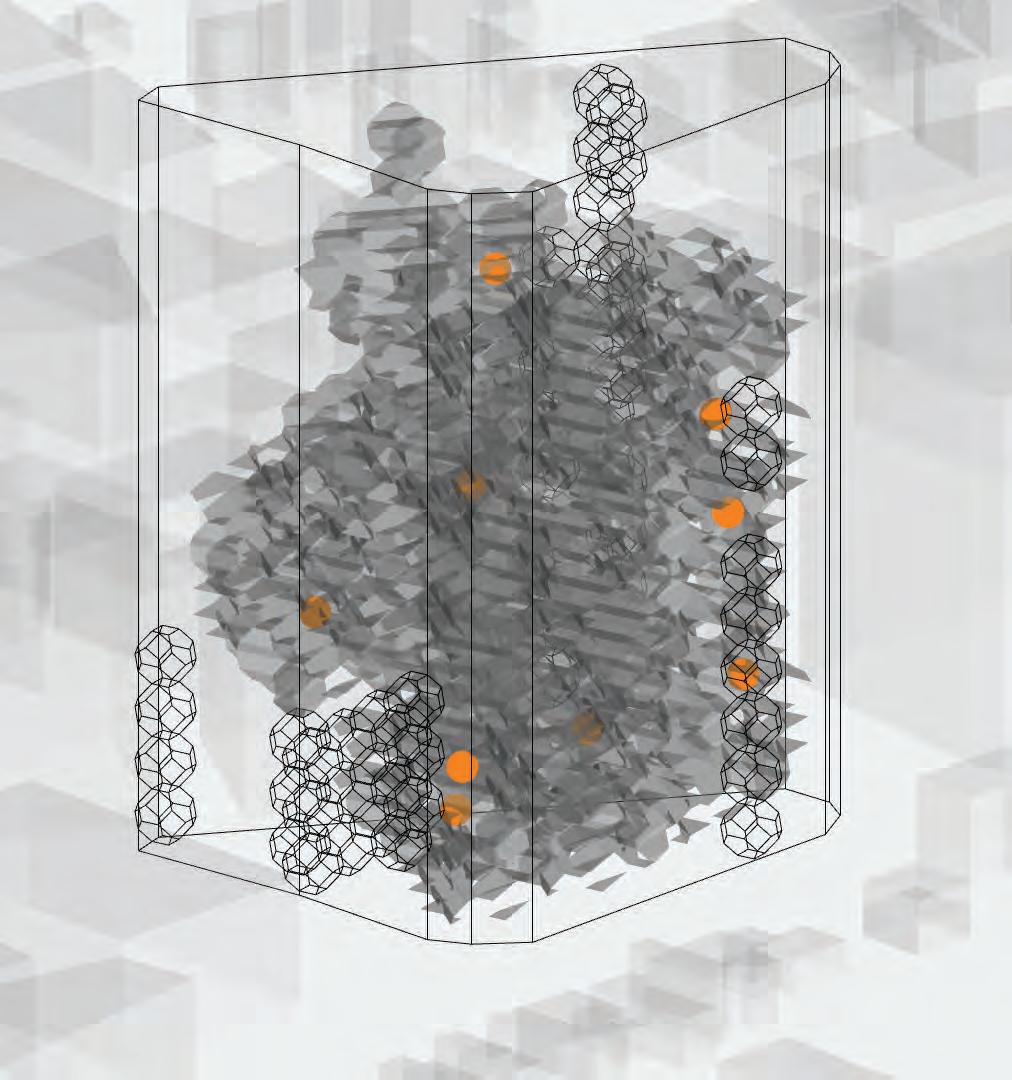

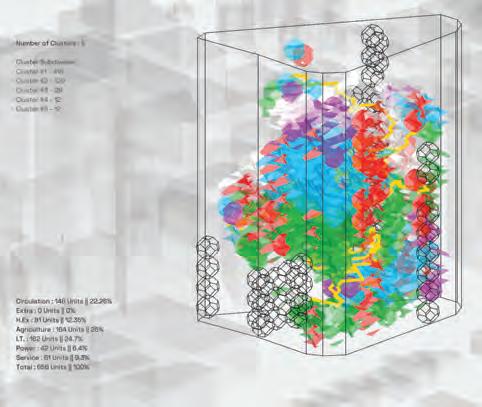

This method explored the simultaneous mapping of environmental conditions for the volumetric analysis of the selected site and its impact on early-stage design. The methodology is based on deconstructing the urban site into a volumetric grid of points. For each of these points, various physical properties, such as solar radiation, airflow, and visibility, are computed. Subsequently, interactive visualization techniques allow for the observation of the site at a volumetric, directional, and dynamic level, revealing information that is typically invisible.42

The research uses this analysis to identify potential areas for the deployment of built structures by examining the field within the volume and assessing the site's future potential for growth. This analysis is then layered with multiple objectives, providing a potential deployment field defined by a weighting system.
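As a sketch of how the volumetric analysis could be layered with weighted objectives, the code below scores every point of a 3D grid and keeps the points above a threshold as the deployment field. It assumes the per-point metrics have already been computed and normalized to 0..1; the grid size, the two metrics, their weights, the threshold and the "occupied" edge are all hypothetical placeholders rather than the project's simulation results.

```python
import numpy as np


def deployment_field(metrics, weights, occupied, threshold):
    """Boolean mask of grid points suitable for new built volume.

    metrics:  name -> 3D array of per-point values, already normalized to 0..1
    weights:  name -> weight of that objective
    occupied: 3D boolean array, True where existing buildings already sit
    """
    score = sum(weights[name] * metrics[name] for name in weights)
    score = np.where(occupied, 0.0, score)  # existing volume cannot host new units
    return score >= threshold


# Hypothetical 4 x 4 x 3 volumetric grid (x, y, z index)
x, y, z = np.meshgrid(np.arange(4), np.arange(4), np.arange(3), indexing="ij")
metrics = {
    "solar_exposure": z / 2,  # illustrative: exposure grows with height
    "airflow": x / 3,         # illustrative: airflow grows towards one edge
}
weights = {"solar_exposure": 0.6, "airflow": 0.4}
occupied = x == 0  # illustrative: an existing building along one edge

field = deployment_field(metrics, weights, occupied, threshold=0.75)
print(f"{int(field.sum())} of {field.size} points fall inside the deployment field")
```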

To assess the topological conditions created by the assembly of components, Space Syntax and graph theory methodologies have been utilized at the assembly scale. The shortest walk refers to the distance cost between two line segments, weighted by three key factors: metric (least length), topological (fewest turns), and geometrical (least angle change).43

In this context, a further analysis of the clustering and access conditions was conducted using the "ShortestWalk" plugin44 within the Grasshopper environment of Rhino3D. This analysis aims to ensure overall accessibility to the main units by examining the relationships between the space-making units.
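The weighting of the three cost types can be illustrated with a small segment graph. The sketch below is not the ShortestWalk plugin's implementation; it is a generic Dijkstra search, assuming segments are nodes and each link between touching segments is costed by a weighted mix of half-lengths (metric), a turn count (topological) and the angle between segments (geometrical). The layout and weights are hypothetical.

```python
import heapq
import math


def seg_length(seg):
    (x1, y1), (x2, y2) = seg
    return math.hypot(x2 - x1, y2 - y1)


def seg_angle(a, b):
    """Smallest angle in degrees between two undirected line segments (0..90)."""
    (x1, y1), (x2, y2) = a
    (x3, y3), (x4, y4) = b
    diff = abs(math.degrees(math.atan2(y2 - y1, x2 - x1) - math.atan2(y4 - y3, x4 - x3))) % 180
    return min(diff, 180 - diff)


def shortest_walk(segments, links, start, goal, w_metric=1.0, w_turn=0.0, w_angle=0.0):
    """Dijkstra search over a graph whose nodes are line segments.

    Each link between two connected segments costs
    w_metric * (half of each length) + w_turn * (1 if direction changes) + w_angle * angle.
    Returns (list of segment indices, total cost), or (None, inf) if unreachable.
    """
    graph = {i: [] for i in range(len(segments))}
    for i, j in links:
        metric = (seg_length(segments[i]) + seg_length(segments[j])) / 2
        angle = seg_angle(segments[i], segments[j])
        turn = 1.0 if angle > 1e-9 else 0.0
        cost = w_metric * metric + w_turn * turn + w_angle * angle
        graph[i].append((j, cost))
        graph[j].append((i, cost))

    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            break
        if cost > best[node]:
            continue  # stale queue entry
        for nxt, step in graph[node]:
            new_cost = cost + step
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                previous[nxt] = node
                heapq.heappush(queue, (new_cost, nxt))

    if goal not in best:
        return None, math.inf
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    return path[::-1], best[goal]


# Hypothetical layout: a diagonal shortcut versus an L-shaped corridor
segments = [
    ((0, 0), (1, 0)),  # 0: start
    ((1, 0), (4, 3)),  # 1: diagonal shortcut
    ((1, 0), (4, 0)),  # 2: straight corridor
    ((4, 0), (4, 3)),  # 3: corridor after a 90° turn
    ((4, 3), (4, 4)),  # 4: goal
]
links = [(0, 1), (0, 2), (1, 2), (1, 3), (1, 4), (2, 3), (3, 4)]

print(shortest_walk(segments, links, 0, 4, w_metric=1.0))              # least length
print(shortest_walk(segments, links, 0, 4, w_metric=0.0, w_turn=1.0))  # fewest turns
```

With the metric weight only, the search takes the diagonal shortcut [0, 1, 4]; with only the turn weight, it prefers the L-shaped corridor [0, 2, 3, 4], which needs a single turn.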

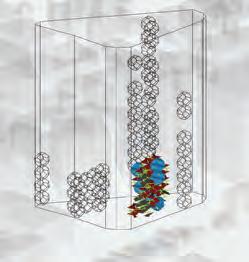

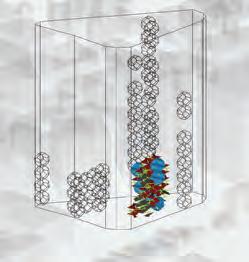

As “mission-critical” typologies, data centres revealed one key requirement across the case studies: interdependent sub-clusters that enhance performance efficiency and regulate accessibility within the facilities. Within this typological context, a shape grammar approach is proposed to define a set of mutual rules while generating an adaptable configuration of these “local-scale” units, which gather into sub-clusters at the “regional scale” and are ultimately assembled at the “global scale”.

Shape grammars, being non-deterministic, provide users with various choices of rules and application methods at each iteration.45 This enables multiple potential outcomes as the process proceeds. The growing set of relationships and their complex interdependencies make manual application laborious and error-prone. Accordingly, a shape grammar interpreter that automates the process is required.

In this context, the “Assembler” plug-in46 is utilised within the Grasshopper environment of Rhino3D. The plug-in's aim is defined as “distributing granular decision in an open-ended process,” automating the task of determining “Which rule to apply” or, as stated, “Where do I add the next object?”.
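The kind of decision that Assembler automates can be pictured with a much simpler grid analogy. The sketch below neither uses nor imitates the plug-in's API: units occupy grid cells, a hypothetical rule set states which unit type may attach next to which, and at each step one of the allowed moves is picked at random (seeded for reproducibility), answering "where do I add the next object?". The unit types HX, IT and FARM and the rules themselves are illustrative assumptions.

```python
import random

NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

# Hypothetical rule set, read as "a <new> unit may be attached next to an <existing> unit"
RULES = [
    ("HX", "IT"),    # immersion IT tanks attach to a heat exchanger
    ("HX", "FARM"),  # cultivation units attach to a heat exchanger to receive heat
    ("IT", "HX"),    # a further heat exchanger may extend from an IT unit
]


def candidate_moves(assembly, rules):
    """Every (free cell, unit type) pair that some rule allows at the current step."""
    moves = set()
    for (x, y), kind in assembly.items():
        for dx, dy in NEIGHBOURS:
            cell = (x + dx, y + dy)
            if cell in assembly:
                continue
            for existing, new in rules:
                if existing == kind:
                    moves.add((cell, new))
    return sorted(moves)  # sorted so a given seed always reproduces the same growth


def grow(steps, rules=RULES, seed=0):
    """Non-deterministic aggregation: pick one allowed move per step at random."""
    rng = random.Random(seed)
    assembly = {(0, 0): "HX"}  # seed unit
    for _ in range(steps):
        moves = candidate_moves(assembly, rules)
        if not moves:
            break
        cell, kind = rng.choice(moves)  # "where do I add the next object?"
        assembly[cell] = kind
    return assembly


layout = grow(12, seed=3)
for cell, kind in sorted(layout.items()):
    print(cell, kind)
```

Changing the seed yields a different but equally rule-compliant layout, which is the non-deterministic behaviour described above.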

[Fig. 64] De-constructing a data centre (generated by the authors)

Based on the conducted case studies, the fundamental components that comprise the computational capabilities of a data centre were identified through their respective system details. These details are crucial in defining the spatial and functional requirements of the units, as well as the envelopes around and between them. The following components were studied:

- I.T.E. or I.T. : Information Technology Equipment

- Heat Exchange Configurations

- Auxiliary Service Units

[Fig. 65] Traditional Air Cooled DC Diagrams (retrieved and edited from https://journal.uptimeinstitute.com/alook-at-data-center-cooling-technologies/ )

Traditional air-cooled Information Technology (IT) systems remove the excess heat from the computers by circulating treated (filtered and temperature-controlled) air around the room.

The electricity consumed by the computing hardware dissipates as heat due to resistances in the circuits. The resultant heat must be dissipated to ensure the required thermal conditions for the information technology equipment (ITE) to operate properly.

Focusing on the core IT stacks in data centres, a wide range of cooling technologies are utilised, employing air, water, or engineered fluids. In the context of this research, an overview of multiple solutions was conducted to determine the most convenient option that offers both the required flexibility in scaling and the highest possible heat-reuse capacity.

As the power density of chips has increased over time, air has become a less viable cooling medium for projected demands.

Within the scope of the research, various liquid cooling solutions were examined in terms of their scalability, additional spatial and infrastructural requirements, and heat removal procedure.

Although many variations of these cooling solutions exist, the selected ones differ distinctly from each other and set the basis for their respective typologies. From widespread air-cooling solutions to the latest immersion cooling options, multiple solutions were compared on scalability and modularity, spatial and infrastructural requirements, characteristics of the dissipated heat, and required integrated systems.

Immersion cooling is a type of liquid cooling in which the server units are submerged in a cooling fluid held in specially designed tanks. Water-cooled server racks, on the other hand, resemble conventional rack-mount servers but are networked with water blocks and fluid-circulating tubing to aid heat dissipation. Because the liquid is brought as close as possible to the heat sources, both approaches take full advantage of the liquid's higher thermal conductivity compared with air.

[Fig. 66] Heat Load per ITE solution chart (retrieved from https://www.akcp.com/blog/a-look-at-data-centercooling-technologies/)

[Fig. 67] Immersion Cooling Principle Diagram (retrieved from https://www.asperitas.com/what-isimmersion-cooling#how-it-works)

Immersion-cooling solutions in data centres achieve PUE (Power Usage Effectiveness) values of 1.02 to 1.04, indicating that they use up to 50% less energy than their traditional air-cooled counterparts while handling the same computational load.47
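PUE is the ratio of total facility energy to the energy used by the IT equipment, so the total energy for a fixed IT load scales directly with PUE. The sketch below compares the cited immersion-cooling PUE with an air-cooled baseline; the baseline PUE of 2.0 and the 1,000 MWh IT load are assumptions chosen for illustration, and the size of the saving depends entirely on that baseline.

```python
def facility_energy_mwh(it_energy_mwh, pue):
    """PUE = total facility energy / IT equipment energy, so total = IT energy * PUE."""
    return it_energy_mwh * pue


def relative_saving(pue_new, pue_baseline):
    """Fraction of total facility energy saved for the same IT load."""
    return 1 - pue_new / pue_baseline


IT_LOAD_MWH = 1000.0    # hypothetical annual IT energy
PUE_IMMERSION = 1.03    # midpoint of the 1.02 to 1.04 range cited above
PUE_AIR_BASELINE = 2.0  # assumed legacy air-cooled facility, for illustration only

print(facility_energy_mwh(IT_LOAD_MWH, PUE_IMMERSION))
print(facility_energy_mwh(IT_LOAD_MWH, PUE_AIR_BASELINE))
print(f"saving: {relative_saving(PUE_IMMERSION, PUE_AIR_BASELINE):.1%}")
```

With the assumed baseline of 2.0 the saving is about 48.5%; against a more efficient air-cooled baseline of 1.5 it falls to roughly 31%. The PUE ratio also leaves out the server fans that immersion cooling removes, which lowers the IT energy itself.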

Additionally, immersion-cooling solutions provide 5 times more power density per rack than traditional air-cooled solutions, and therefore fit more computational capacity into a smaller volume much more efficiently.48

[Fig. 68] Immersion Cooling Principle Diagram (retrieved from https://www.asperitas.com/what-is-immersioncooling#how-it-works)

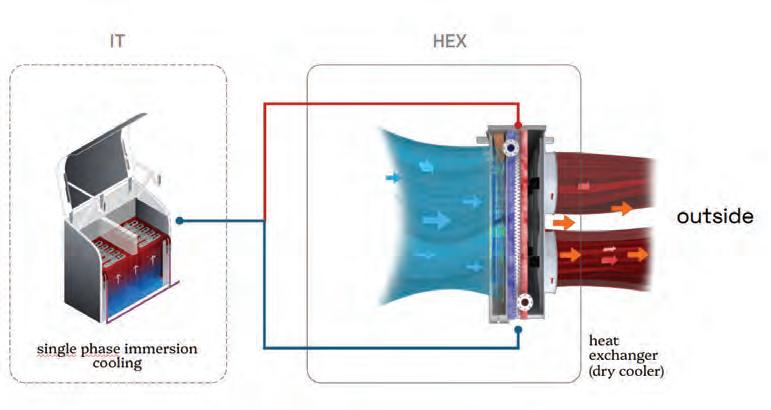

Within the scope of this research, the single-phase immersion cooling solution has been identified as the most feasible option due to its flexibility through modularity and its effective heat transfer capabilities to the submerged fluid. By circulating the heated fluid through a heat exchanger, the resulting heat is efficiently transferred and can be directed to where it is needed through additional material interventions.

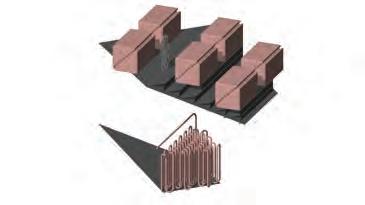

A heat exchanger is a mechanical device designed to efficiently transfer thermal energy between two or more media at different temperatures without mixing them. It operates on the principles of conduction and convection, transferring heat through solid walls and by fluid motion.

In data centres, heat exchangers are vital for maintaining the optimal operating temperatures of ITE Spaces, enhancing energy efficiency, and supporting reliable thermal management.

[Fig. 69] Working principle of a Liquid-to-Air heat exchanger (retrieved from https://www.altexinc.com/case-studies/air-cooler-recirculation-winterization/).

The input and output parameters of the heat exchanger are determined by the operational requirements of both the ITE and the Cultivation Spaces.

Considering the use of Immersion Tank ITE Spaces, where high-performance computing, AI, and supercomputers consume substantial energy, liquid cooling systems have demonstrated superior efficiency in managing the thermal loads of high-density data racks.

In the Cultivation Units, the water supply is critical, as it delivers nutrients and maintains the specific oxygen and pH levels necessary for plant growth. This necessitates a separate closed system for water supply and circulation. In addition, the air supplied to the plants must be kept within a specific temperature range to optimize growth. This can be achieved by drawing in external air and warming it to the required temperature through a heat exchanger that uses hot liquid from the ITE Spaces.

Liquid-to-air heat exchangers are considered best practice among liquid-based systems. Dry coolers, a type of liquid-to-air heat exchanger, transfer heat from the liquid to the surrounding air. In this system, warm liquid flows through a network of coils or tubes, while large fans blow ambient air over these coils. As the air absorbs heat from the liquid, the liquid is cooled before returning to the system. Typically, dry coolers dissipate heat directly into the air, but this heat can also be repurposed, for example to heat cultivation spaces.

[Fig. 70] Immersion cooling infrastructure example without heat reuse (retrieved from https://pictures.2cr. si/Images_site_web_Odoo/Partners/Submer/2CRSi_ Submer_Immersion%20cooling%20EN_April_2023.pdf).

- Comparison

In standard practice, dry coolers release heat directly into the air without being reused for other purposes, resulting in a significant amount of energy being lost to the environment. Given the scale of energy consumption involved, this process leads to considerable heat waste, which is simply dispersed into the atmosphere.

However, this heat can be repurposed in various ways. Instead of releasing it into the atmosphere, the heat can be redirected to programs such as cultivation spaces, where crops and vegetation require specific temperatures to thrive. By using this excess heat to support agricultural processes, the system becomes more energy-efficient and sustainable.

[Fig. 72] Required temperatures for the IT and cultivation spaces (generated by the authors).

For the development of the overall system, the inlet and outlet temperatures of the fluids in the dry cooling heat exchangers will be crucial. Since the project involves ITE Spaces, which consist of immersion cooling tanks, and Cultivation Units designed to accommodate crops and vegetation, accurately determining these temperatures is essential for advancing the design and conducting detailed physical experiments.

Immersion Cooling Inlet-Outlet Temperatures:

- Inlet Temperature: around 40°C

- Outlet Temperature: 50°C (10°C higher than the inlet temperature)

Farming Unit Inlet-Outlet Temperatures:

- Inlet Temperature: typically between 18°C and 24°C

- Outlet Temperature: should stay within 2°C of the inlet temperature to ensure a stable growing environment.

The immersion cooling loop requires the liquid to be brought back down from 50°C to 40°C, while the cultivation space must stay between 18°C and 24°C. Using a dry cooling heat exchanger, this temperature regulation can be effectively achieved.

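$$q = m \, c \, \Delta T$$

where:

q = Heat (J; cal if c is given in cal/(g·K))

m = Mass (g)

c = Specific heat (J/(g·K))

ΔT = Change in temperature (K, equivalent to °C for a temperature difference)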
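To give a sense of the flow rates implied by the temperatures above, the relation can be rearranged per second into a mass flow, ṁ = q̇ / (c ΔT). The sketch below is illustrative only: the 100 kW heat load and the coolant's specific heat of 2.0 J/(g·K) are hypothetical placeholders, not values from this project; the air value is the usual figure near room temperature, and the 10°C and 2°C differences come from the inlet/outlet temperatures listed above.

```python
def heat_j(mass_g, c_j_per_gk, delta_t_k):
    """q = m * c * ΔT, in the units defined above (g, J/(g·K), K)."""
    return mass_g * c_j_per_gk * delta_t_k


def mass_flow_g_per_s(heat_load_w, c_j_per_gk, delta_t_k):
    """Per second, q = m * c * ΔT rearranges to m_dot = q_dot / (c * ΔT)."""
    return heat_load_w / (c_j_per_gk * delta_t_k)


IT_HEAT_LOAD_W = 100_000  # hypothetical 100 kW of immersion tanks
C_COOLANT = 2.0           # assumed specific heat of the dielectric coolant, J/(g·K)
C_AIR = 1.005             # specific heat of air near room temperature, J/(g·K)

coolant_flow = mass_flow_g_per_s(IT_HEAT_LOAD_W, C_COOLANT, 50 - 40)  # 50 °C -> 40 °C
air_flow = mass_flow_g_per_s(IT_HEAT_LOAD_W, C_AIR, 2)  # 2 °C rise across a Cultivation Unit
print(f"coolant: {coolant_flow / 1000:.1f} kg/s, air: {air_flow / 1000:.1f} kg/s")
```

Because the air side is allowed only a 2°C rise, it must move roughly ten times the mass of coolant to carry the same heat, which is one reason the sizing of the heat exchanger matters for the design.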

The temperature range required for the Cultivation Space is fixed, but maintaining it depends on the stability of the heat generated in the IT Space. Fluctuations in the number of active servers and their utilization can change the total heat produced, potentially supplying less heat to the Cultivation Area than needed.

Data centre activity fluctuates throughout the day, with usage peaking during working hours. Thus, the data centre’s capacity must be designed to meet peak demand during these times. At night, data production and consumption decline as the number of users decreases, leading to only partial use of the available capacity. This usage difference can reach up to 40%. As the heat from the IT Space fluctuates, it impacts the heat supplied to the Cultivation Area, potentially affecting plant growth. Therefore, it is crucial to regulate and maintain consistent heat levels in the Cultivation Area.
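The effect of this daily fluctuation on the heat supply can be estimated with a simple hourly balance. The sketch below assumes a hypothetical 100 kW peak output during working hours (08:00 to 18:00), the 40% drop mentioned above outside those hours, and a steady cultivation demand equal to the daily mean; under those assumptions it computes how much storage would be needed to level the supply.

```python
from itertools import accumulate


def hourly_heat_kw(peak_kw, night_drop=0.4, working_hours=range(8, 18)):
    """Hypothetical 24-hour heat profile: full output during working hours, reduced otherwise."""
    return [peak_kw if h in working_hours else peak_kw * (1 - night_drop) for h in range(24)]


def storage_needed_kwh(supply_kw, demand_kw):
    """Smallest store that lets a fluctuating hourly supply meet the demand (1 h time steps).

    Surplus hours charge the store and deficit hours discharge it; the required
    capacity is the spread between the highest and lowest cumulative charge level.
    """
    balance = [s - d for s, d in zip(supply_kw, demand_kw)]  # kWh per 1 h step
    level = list(accumulate(balance, initial=0.0))
    return max(level) - min(level)


supply = hourly_heat_kw(peak_kw=100.0)  # hypothetical 100 kW peak, 40% night drop
demand = [sum(supply) / 24] * 24        # assumed steady demand equal to the daily mean
print(f"daily mean heat: {demand[0]:.1f} kW")
print(f"storage needed: {storage_needed_kwh(supply, demand):.1f} kWh")
```

With these placeholder values, the mean heat is about 76.7 kW and roughly 233 kWh of storage would be needed to carry the working-hour surplus into the night, which motivates the thermal storage discussed next.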

Thermal energy storage methods can be divided into two classes: sensible heat storage, in which the material's temperature changes with the amount of energy accumulated, and latent heat storage, which involves the storage or release of energy during a phase change.49

As Al-Yasiri and Szabó discuss, typical thermal energy storage uses physical materials such as "brick" and "concrete." However, relying on materials with high thermal mass for sensible thermal energy storage has a drawback: their low energy density.50

On the other hand, PCMs store thermal energy in the form of "latent" heat. Because the material undergoes its phase change at an approximately steady temperature, it can absorb or release a large amount of thermal energy while keeping its temperature nearly constant.51
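The difference in energy density can be made concrete per kilogram of material. The sketch below compares sensible storage in brick over a narrow 4 K swing with the latent heat of a salt hydrate PCM; the brick specific heat is a typical textbook value, while the latent heat of 190 kJ/kg and the 4 K band are assumptions for illustration and should be checked against the selected material's datasheet.

```python
def sensible_heat_kj(mass_kg, c_kj_per_kgk, delta_t_k):
    """Sensible storage: Q = m * c * ΔT."""
    return mass_kg * c_kj_per_kgk * delta_t_k


def latent_heat_kj(mass_kg, latent_kj_per_kg):
    """Latent storage during a complete phase change: Q = m * L."""
    return mass_kg * latent_kj_per_kg


TEMPERATURE_SWING_K = 4.0  # assumed narrow band around the target temperature
C_BRICK = 0.84             # kJ/(kg·K), typical textbook value for brick
L_SALT_HYDRATE = 190.0     # kJ/kg, approximate value assumed for a salt hydrate PCM

brick = sensible_heat_kj(1.0, C_BRICK, TEMPERATURE_SWING_K)
pcm = latent_heat_kj(1.0, L_SALT_HYDRATE)  # the PCM's own sensible heat is ignored here
print(f"brick: {brick:.2f} kJ/kg, PCM: {pcm:.0f} kJ/kg, ratio ~{pcm / brick:.0f}x")
```

Under these assumptions one kilogram of PCM stores over fifty times more heat than one kilogram of brick within the same narrow band, which is why latent storage suits a space whose temperature must stay nearly constant.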

[Fig. 73] Intervention point diagram (PCM Phase Change Energy x Temperature diagram retrieved and edited from https://thermtest.com/phase-change-material-pcm)

In this manner, it is projected that incorporating PCM into an enveloping element can ultimately enhance the thermal comfort of the related space. The charging and discharging (heat gain & loss) cycles can be repeated numerous times. Therefore, PCM can be effectively categorized as a thermal storage medium.

Although not common in the construction industry, PCMs are preferred over other methodologies for several reasons, including their high heat of fusion, wide availability, non-toxicity, cost-effectiveness, and comparatively minimal environmental impact during installation and maintenance. Additionally, PCMs are highly engineerable and available for the desired temperature ranges.

[Fig. 74] PCM selection chart (the base graph retrieved from https://thermalds.com/phase-change-materials/)

[Fig. 75] Filtered PCM options (generated by the authors)

A PCM's performance is determined predominantly by its material properties, such as density, thermal conductivity, latent heat of fusion and phase change temperature. Other factors frequently considered are cycling stability, toxicity and flammability, recyclability, and cost-effectiveness.52

PCMs are mainly categorized into "organic", "inorganic", and "eutectic" according to their respective properties. Each class has a distinct range of thermochemical characteristics and operating temperatures, making some more suitable for specific applications than others.

The surveyed graph shows the melting temperature ranges of various PCM types. Using the chart, "salt hydrates", "paraffins" and "fatty acids" were found to overlap with the temperature range between the heat exchanger's input and output values.
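The chart-based filtering step amounts to an interval-overlap test between each PCM class's melting range and the heat exchanger's operating window. The sketch below is a minimal version of that test. The melting ranges and the 25-35°C window in the usage lines are hypothetical placeholders, not values read from Fig. 74 or from the heat exchanger data sheets.

```python
def overlap_c(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Width (degC) of the overlap between two closed temperature ranges."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


def filter_pcm_classes(classes: dict[str, tuple[float, float]],
                       window: tuple[float, float]) -> list[str]:
    """Names of PCM classes whose melting range overlaps the operating
    window, sorted by overlap width (widest first)."""
    widths = {name: overlap_c(rng, window) for name, rng in classes.items()}
    return sorted((n for n, w in widths.items() if w > 0),
                  key=lambda n: -widths[n])


if __name__ == "__main__":
    # PLACEHOLDER melting ranges for illustration only, not taken from Fig. 74.
    classes = {
        "salt hydrates": (10.0, 90.0),
        "paraffins": (20.0, 70.0),
        "fatty acids": (15.0, 60.0),
        "sugar alcohols": (90.0, 200.0),
    }
    window = (25.0, 35.0)  # hypothetical heat exchanger output/input window
    print(filter_pcm_classes(classes, window))
```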

Among these, salt hydrates, a class of inorganic PCMs, were preferred for their ease of maintenance, wide range of customization options, low volume change between phases, and non-flammability.

Within the "salt hydrates" class, Calcium Chloride Hexahydrate (CaCl₂·6H₂O) was selected as the PCM due to its ready availability and overall fit to the expected criteria.

Selected PCM phase change temperature graph (retrieved from the article Thermophysical parameters and enthalpy-temperature curve of phase change material(...) 53

There are two mainstream encapsulation techniques for PCMs. Micro-capsules are characterized as capsules with a diameter of less than 1 cm, while macroencapsulation refers to a broader range of applications, typically with a diameter of more than 1 cm.54

Accordingly, macro-encapsulation helps the PCM system to:

1) prevent significant phase separation;

2) accelerate heat transfer;

3) give the PCM infill a self-supporting structure.

In addition to the intrinsic properties of PCMs, energy-saving capacity also depends heavily on the design of the structure, and on the thickness and position of the PCM layer relative to the space it encloses.55

Several studies indicate that the PCM layer should be positioned near the heat source.56 For cooling performance, the PCM layer should be applied to the outer side of the building element; for heating performance, it should be placed closer to the interior.57

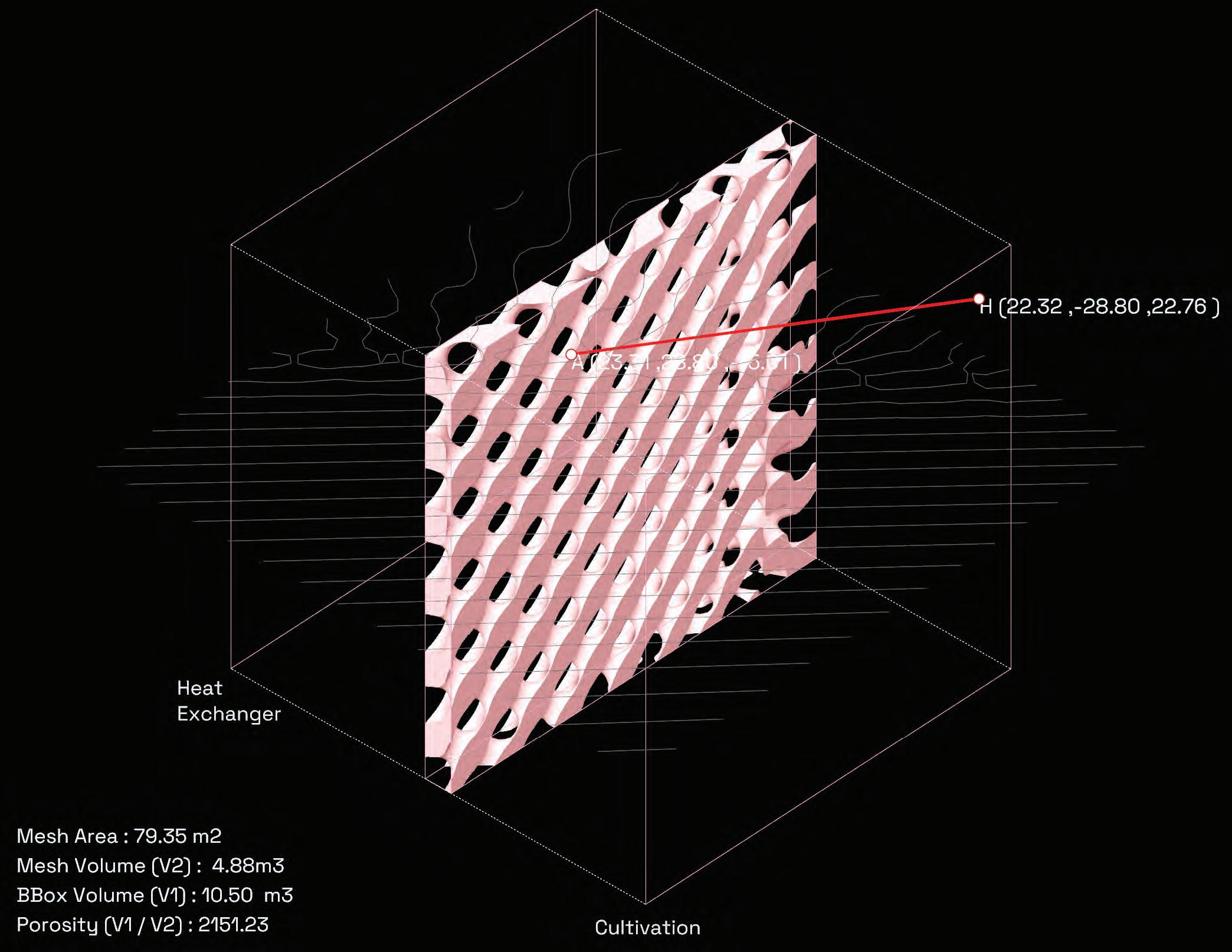

Accordingly, the PCM layer, which is intended to harness the excess heat from the heat exchangers, is envisioned as a porous panel system that allows hot air to circulate around the PCM infill.

[Fig. 79] Ability of a TPMS surface to subdivide a volume into two equal parts (retrieved from https://blog.fastwayengineering.com/3d-printed-gyroid-heat-exchanger-cfd)

The encapsulation method and its material must meet particular criteria to be compatible with both the building materials and the PCM it encapsulates:58

1) formation of a shell around the PCM;

2) prevention of PCM leakage when molten;

3) expected performance under mechanical and thermal loads.

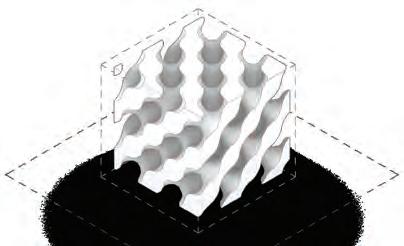

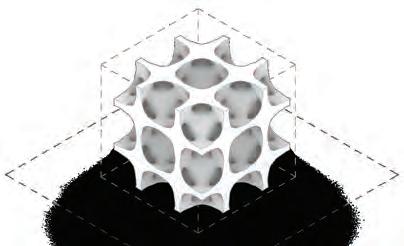

Considering these criteria, additive manufacturing was identified as a suitable way to produce a macro-encapsulating shell. The required porosity and surface characteristics directed the focus towards experimenting with Triply Periodic Minimal Surfaces, with the attributes described in the figure above.

Triply Periodic Minimal Surfaces (TPMS) provide effective, passive means of improving heat transfer performance.59 Common TPMS types, including Schwarz-P, Diamond, Neovius and Gyroid, offer a high surface-area-to-volume ratio and comparatively complex geometries. Because of these characteristics, TPMS are suitable for high-temperature and high-pressure settings. In terms of thermo-hydraulic performance, TPMS also outperform conventional systems in heat transfer efficiency and pressure drop reduction.

Using these surface definitions, various shell geometries were tested for their ability to efficiently subdivide the volume into PCM infill and void spaces for hot air circulation. The resulting shells are referred to as TPMS-based porous cellular structures.


[Fig. 80] Selected TPMS types (generated by authors)

Gyroid: $\sin x \cos y + \sin y \cos z + \sin z \cos x = 0$

Schwarz-P: $\cos x + \cos y + \cos z = 0$

Diamond: $\sin x \sin y \sin z + \sin x \cos y \cos z + \cos x \sin y \cos z + \cos x \cos y \sin z = 0$

Neovius: $3(\cos x + \cos y + \cos z) + 4 \cos x \cos y \cos z = 0$
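Each TPMS above is the zero level set of a 2π-periodic function, so the two sides of the surface ($f < 0$ and $f > 0$) form the two interpenetrating sub-volumes. The minimal sketch below evaluates the four functions on one unit cell and estimates the share of the cell on the $f < 0$ side. As a hypothetical convention, that side is taken as the PCM infill and the other as the air channels. Wall thickness is ignored. At level 0 each split comes out at about one half, consistent with Fig. 79.

```python
import math


# Implicit TPMS functions on one 2*pi-periodic unit cell.
def gyroid(x, y, z):
    return math.sin(x) * math.cos(y) + math.sin(y) * math.cos(z) + math.sin(z) * math.cos(x)


def schwarz_p(x, y, z):
    return math.cos(x) + math.cos(y) + math.cos(z)


def diamond(x, y, z):
    sx, sy, sz = math.sin(x), math.sin(y), math.sin(z)
    cx, cy, cz = math.cos(x), math.cos(y), math.cos(z)
    return sx * sy * sz + sx * cy * cz + cx * sy * cz + cx * cy * sz


def neovius(x, y, z):
    cx, cy, cz = math.cos(x), math.cos(y), math.cos(z)
    return 3.0 * (cx + cy + cz) + 4.0 * cx * cy * cz


def infill_fraction(f, level=0.0, n=32):
    """Share of one unit cell where f < level, sampled at n**3 cell centres
    (hypothetically, the PCM side; the rest is the air channels)."""
    h = 2.0 * math.pi / n
    coords = [(i + 0.5) * h for i in range(n)]
    inside = sum(1 for x in coords for y in coords for z in coords
                 if f(x, y, z) < level)
    return inside / n ** 3


if __name__ == "__main__":
    surfaces = [("Gyroid", gyroid), ("Schwarz-P", schwarz_p),
                ("Diamond", diamond), ("Neovius", neovius)]
    for name, f in surfaces:
        print(f"{name}: {infill_fraction(f):.3f}")
```

Raising `level` above zero enlarges the $f < \text{level}$ side. This is one way the PCM-to-air ratio could be tuned.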

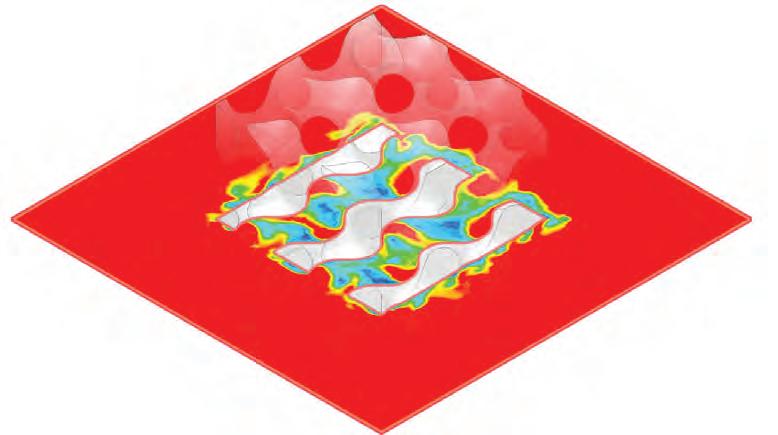

A series of computational fluid dynamics (CFD) simulations was conducted to analyse how the different surface types respond to the characteristics of the air output by the heat exchanger. The hot-air input values were taken from the data sheets of the respective heat exchanger modules.

It was observed that the Diamond and Gyroid configurations perform better at redirecting the hot air, whereas the pockets formed within the other two (Schwarz-P and Neovius) act as potential heat traps.

[Fig. 84] Blended TPMS based cellular structure (generated by authors)

The resulting mathematical model and generative shell pipeline allow further mathematical modifications, such as blending multiple TPMS types and grading their parameters, so that the advantages of several types can be combined.
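Blending and grading can both be expressed on the implicit functions themselves. Blending interpolates between two TPMS fields with a spatial weight. Grading varies the iso-level across the panel, which shifts the balance between the two sub-volumes. The sketch below is a hypothetical illustration, not the authors' pipeline. The linear Gyroid-to-Diamond weight along x and the linear level ramp along z are assumptions chosen for clarity.

```python
import math


def gyroid(x, y, z):
    return math.sin(x) * math.cos(y) + math.sin(y) * math.cos(z) + math.sin(z) * math.cos(x)


def diamond(x, y, z):
    sx, sy, sz = math.sin(x), math.sin(y), math.sin(z)
    cx, cy, cz = math.cos(x), math.cos(y), math.cos(z)
    return sx * sy * sz + sx * cy * cz + cx * sy * cz + cx * cy * sz


def blended(x, y, z, length):
    """Hypothetical linear blend: pure Gyroid at x = 0, pure Diamond at x = length."""
    w = min(max(x / length, 0.0), 1.0)
    return (1.0 - w) * gyroid(x, y, z) + w * diamond(x, y, z)


def graded_level(z, height, level_bottom, level_top):
    """Hypothetical grading: iso-level varies linearly with height z."""
    return level_bottom + (level_top - level_bottom) * z / height


def infill_fraction(field, level_fn, size, n=24):
    """Share of a size**3 box where field(x, y, z) < level_fn(z),
    sampled at n**3 cell centres."""
    h = size / n
    coords = [(i + 0.5) * h for i in range(n)]
    inside = sum(1 for x in coords for y in coords for z in coords
                 if field(x, y, z) < level_fn(z))
    return inside / n ** 3


if __name__ == "__main__":
    size = 2.0 * math.pi  # one unit cell per panel edge (hypothetical)

    def field(x, y, z):
        return blended(x, y, z, size)

    flat = infill_fraction(field, lambda z: 0.0, size)
    graded = infill_fraction(field, lambda z: graded_level(z, size, -0.3, 0.3), size)
    print(f"flat level: {flat:.3f}, graded level: {graded:.3f}")
```

A linear weight is the simplest choice. Smoother ramps, such as a sigmoid, are common when an abrupt transition between cell types is undesirable.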

How can the proposed material system be further customized in response to the space it inhabits?

Building on these capabilities, the material system development then addressed how the morphology of the shell could be customized in relation to the space it inhabits.

By combining a matrix of space × energy inputs with the related equations, an adaptive panel configurator pipeline that uses the blending and grading capabilities was developed.

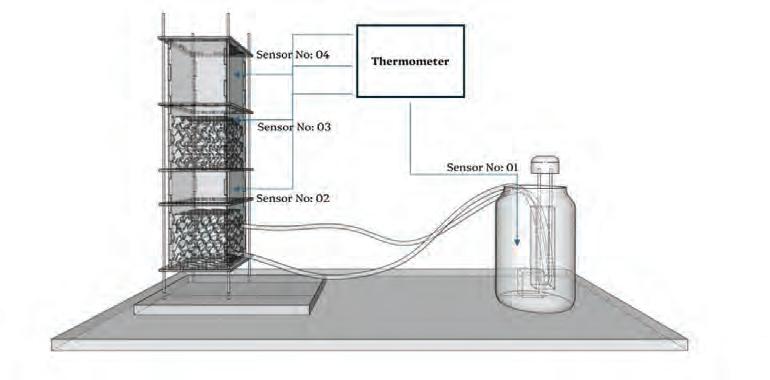

The experimental setup was employed as a proof of concept for investigating the impact of Phase Change Materials (PCM) on temperature changes within spaces. This experiment was conducted in two stages to generate comparable data.

The setup comprises several components: the ITE Tank, Heat Exchanger, Fan, TPMS Regulator Volume and Cultivation Space. These names reflect our conceptualization of architectural spaces. To avoid ambiguity, the Cultivation Space is referred to here as the Void Space, and the ITE Tank as the Water Tank. In this configuration, hot water circulates between the Heat Exchanger and the Water Tank. As the Heat Exchanger’s temperature rises due to the circulating hot water, a fan blows the heated air from the Heat Exchanger onto the bottom surface of the TPMS Regulator Volume, from where it circulates into the Void Space.

The only independent variable in this experiment is the TPMS Regulator Volume, which transfers heat from the Heat Exchanger to the Void Space. In addition to the Water Tank temperature (Sensor 01), temperatures were measured at the Fan (Sensor 02), the TPMS Regulator Volume (Sensor 03) and the Void Space (Sensor 04); Sensors 02, 03 and 04 are treated as dependent variables. Control variables include fan speed, water velocity, the upper threshold of water temperature, and the conditions of the heater, pipes, cables and surrounding environment.

In the first stage of the experiment, a gyroid-type TPMS with a void subvolume was used. In the second stage, a gyroid-type TPMS with a sub-volume filled with PCM (Calcium Chloride Hexahydrate) was utilized. The phase change temperature of Calcium Chloride Hexahydrate is 30°C.

The primary objective of this experiment was to assess the role of the TPMS gyroid surface in regulating the transfer of hot air from the heat exchanger to the Void Space (also referred to as the Cultivation Space). The focus of the study was on comparing the time required for the Void Space, when equipped with an empty gyroid surface versus a PCM-filled gyroid surface, to heat up and cool down. This comparison aimed to evaluate the impact of the PCM material on thermal regulation within the space.

In the experiment, multiple recording devices were employed for real-time data collection. Temperature variations were continuously monitored using thermal cameras positioned at two distinct locations, while key areas of the experimental setup were measured using four temperature sensors connected to a digital thermometer. Sensor data was recorded at 5-second intervals, creating tabular datasets for analysis. These values were subsequently plotted on a two-axis graph for further examination. Additionally, timelapse photography captured the physical changes every 15 seconds, ensuring comprehensive documentation of the experiment.
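Comparing heating and cooling times from the 5-second sensor logs reduces to finding when a series first crosses a threshold temperature. The minimal sketch below interpolates linearly between samples, which gives a finer estimate than the 5-second step alone. The function name and the synthetic ramps in the usage lines are hypothetical and are not the recorded data.

```python
def crossing_time_s(temps_c, threshold_c, interval_s=5.0, rising=True):
    """Time (s) at which a sampled temperature series first crosses
    threshold_c, linearly interpolated between samples taken every
    interval_s seconds. Returns None if the threshold is never reached."""
    if not temps_c:
        return None
    first = temps_c[0]
    if (first >= threshold_c) if rising else (first <= threshold_c):
        return 0.0
    for i in range(1, len(temps_c)):
        prev, cur = temps_c[i - 1], temps_c[i]
        if rising:
            crossed = prev < threshold_c <= cur
        else:
            crossed = prev > threshold_c >= cur
        if crossed:
            frac = (threshold_c - prev) / (cur - prev)
            return (i - 1 + frac) * interval_s
    return None


if __name__ == "__main__":
    # Synthetic linear ramps for illustration only (not measured data).
    fast = [20.0 + 0.5 * k for k in range(40)]
    slow = [20.0 + 0.25 * k for k in range(40)]
    for label, series in [("fast ramp", fast), ("slow ramp", slow)]:
        print(label, crossing_time_s(series, 25.0))
```

Using `rising=False` on the cooling logs gives the corresponding time for the Void Space temperature to fall below a threshold.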

[Fig. 99] Experiment result plotted on a graph.

For the experiment, the target temperature range for the cultivation area was set between 25 and 28.5°C, slightly higher than the 18-24°C range required for the Cultivation Space. This is because the Phase Change Material (PCM) used, Calcium Chloride Hexahydrate, has a phase change temperature of 30°C. As it was the PCM with the lowest phase change temperature readily available on the market, the experimental temperatures were consequently higher. However, the 18-24°C range could be achieved with PCMs that have lower phase change temperatures, which can be commercially available. Moreover, the experiment served as a proof of concept rather than a direct application.

When comparing the temperatures during the heating experiments, it was observed that the setup with the PCM-containing surface took longer to heat up the Void Space than the setup without PCM. This indicates that the PCM absorbs heat during the process, slowing the temperature rise in the Void Space and thereby dampening the fluctuation.

In the cooling experiments, the collected data indicate that the PCM retained the heat it had absorbed. This heat retention helped regulate the temperature change in the Void Space.

The presence of PCM thus demonstrates its effectiveness in maintaining more stable temperature conditions within the Void Space.

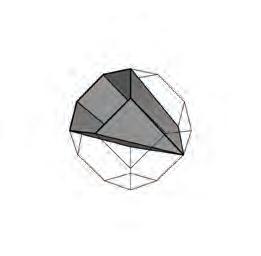

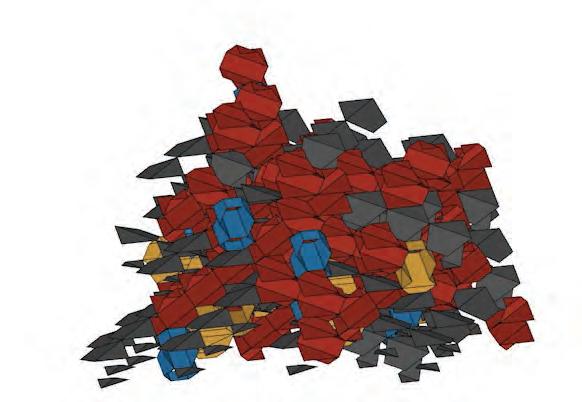

[Fig. 100] Generating Archimedean solids.

In addressing the emerging space-filling (packing) problem, the process of truncation is considered crucial. Beyond the use of Platonic solids (regular polyhedra), Archimedean solids (semi-regular polyhedra) are generated by symmetrically slicing away the corners.

Through truncation, where the corners or edges are cut off, more faces are created, providing additional potential connection points. However, a balance must be struck: the increase in connections has to be weighed against volume efficiency to arrive at the most effective packing option that still meets the spatial requirements.
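The truncation step can be checked numerically. This sketch is illustrative and is not the authors' pipeline. It slices the six corners off a regular octahedron at one third of every edge, which yields the truncated octahedron. It then counts vertices, edges and faces (6 squares and 8 hexagons, 14 in total). Finally, it compares the solid's volume with the primitive cell of a body-centred cubic lattice, the lattice on which truncated octahedra tile space. The coordinate scaling (octahedron vertices at distance 3) is an assumption chosen so that all points are integers. Equal volumes are a consistency check, not a proof of space-filling.

```python
from itertools import combinations, product


def octahedron_vertices(r=3):
    """Regular octahedron vertices: (+-r, 0, 0), (0, +-r, 0), (0, 0, +-r)."""
    verts = []
    for axis in range(3):
        for sign in (1, -1):
            v = [0, 0, 0]
            v[axis] = sign * r
            verts.append(tuple(v))
    return verts


def dist_sq(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b))


def truncate_at_thirds(verts, edge_sq):
    """Cut every corner at one third of each edge: edge (a, b) yields
    a + (b - a)/3 and a + 2(b - a)/3 (exact integers when r % 3 == 0)."""
    cut = set()
    for a, b in combinations(verts, 2):
        if dist_sq(a, b) != edge_sq:
            continue  # opposite vertices are not joined by an edge
        cut.add(tuple((2 * p + q) // 3 for p, q in zip(a, b)))
        cut.add(tuple((p + 2 * q) // 3 for p, q in zip(a, b)))
    return sorted(cut)


def count_edges(verts, edge_sq=2):
    return sum(1 for a, b in combinations(verts, 2) if dist_sq(a, b) == edge_sq)


def count_faces(verts):
    """Squares lie on x, y, z = +-2; hexagons on +-x +-y +-z = 3 (this scaling)."""
    squares = sum(1 for axis in range(3) for s in (2, -2)
                  if sum(1 for v in verts if v[axis] == s) == 4)
    hexagons = sum(1 for signs in product((1, -1), repeat=3)
                   if sum(1 for v in verts
                          if sum(c * w for c, w in zip(signs, v)) == 3) == 6)
    return squares, hexagons


def truncated_volume(r=3):
    # Octahedron (4/3 r^3) minus six corner pyramids, each a 1/3-scale
    # copy of half the octahedron (2/3 r^3 scaled by 1/27).
    return (4 * r ** 3) / 3 - 6 * (2 * r ** 3) / (3 * 27)


def bcc_cell_volume(a=4):
    # |det| of primitive BCC vectors a/2 * (-1,1,1), (1,-1,1), (1,1,-1).
    h = a / 2
    m = [(-h, h, h), (h, -h, h), (h, h, -h)]
    det = (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
           - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
           + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    return abs(det)


if __name__ == "__main__":
    to = truncate_at_thirds(octahedron_vertices(3), edge_sq=18)
    v, e = len(to), count_edges(to)
    sq, hx = count_faces(to)
    print(v, e, sq + hx, sq, hx, v - e + sq + hx)  # Euler: V - E + F
    print(truncated_volume(3), bcc_cell_volume(4))
```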

Fig.1 Sectioning stacked cubes perpendicularly to any edge produces the regular (4⁴). Sectioning perpendicularly to a space diagonal produces the regular (3⁶) and the quasiregular (3.6.3.6), if the plane contains the edges' midpoints

Fig.2 Sectioning stacked hexagonal prisms perpendicularly to the sixfold symmetry axis produces the regular (6³)

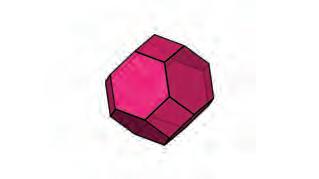

The Bisymmetric Hendecahedron, Sphenoid Hendecahedron and Gyrobifastigium fulfilled the purpose of space-filling. From the perspective of architecturally feasible space, however, they contained several acute angles between adjacent faces, resulting in volumes with many 'corner-like' spaces. By contrast, the Rhombic Dodecahedron, Elongated Dodecahedron and Truncated Octahedron had only right or obtuse angles between adjacent faces, making them better options for space-filling in the context of architectural space-making.

Fig.3 Sectioning stacks of rhombic dodecahedra produces the regular (4⁴), if the plane is perpendicular to a fourfold symmetry axis

Total Faces : 12

Quadrilateral Faces : 12

Polygonal Faces : 0

Fig.4 Sectioning stacks of elongated dodecahedra produces the regular (4⁴), if the plane is perpendicular to a fourfold symmetry axis

Fig.5 Sectioning stacks of truncated octahedra produces the regular (4⁴), if the plane is perpendicular to a fourfold symmetry axis and contains a long diagonal of the hexagonal faces


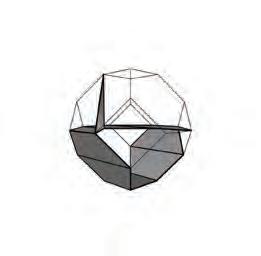

Compared with the two dodecahedra, the truncated octahedron had more polygonal faces, all with the same proportions. This not only increased its potential for creating spatial variations within the same volume but also allowed more permutations and combinations when orienting one face to another (either polygon to polygon or quadrilateral to quadrilateral).

Fig.6 There are no regular-faced tessellations producible by sectioning stacks of Bilinski's rhombic dodecahedra. The tessellation of irregular hexagons contains midpoints of some edges


Total Faces : 12

Quadrilateral Faces : 8

Polygonal Faces : 4


This process of 'assembling' helped expand the spatial quality of the volume, which in turn depended on the selected face and its orientation relative to the second selected face.
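One rough way to quantify why the truncated octahedron offers more assembly options is to count raw face-to-face pairings. This is a hypothetical sketch under simplifying assumptions. A face can only join a face of the same type. Each join can take as many alignments as the face has rotational symmetries: 4 for a square, 6 for a regular hexagon, and 2 for the rhombi and the elongated hexagons of the dodecahedra. The count ignores pairings that are equivalent under each solid's own symmetry, as well as configurations in which the two solids would clash.

```python
# Hypothetical inputs: (face type, number of faces, rotational order of the face).
FACES = {
    "rhombic dodecahedron": [("rhombus", 12, 2)],
    "elongated dodecahedron": [("rhombus", 8, 2), ("hexagon", 4, 2)],
    "truncated octahedron": [("square", 6, 4), ("hexagon", 8, 6)],
}


def raw_pairings(faces):
    """Upper bound on face-to-face joins between two copies of a solid:
    pick a face on each copy (same type) and one of its rotations."""
    return sum(count * count * order for _, count, order in faces)


if __name__ == "__main__":
    for solid, faces in FACES.items():
        print(f"{solid}: {raw_pairings(faces)}")
```

Under these assumptions, the truncated octahedron allows 528 raw pairings, against 288 for the rhombic dodecahedron and 160 for the elongated dodecahedron.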

Total Faces : 14

Quadrilateral Faces : 6

Polygonal Faces : 8

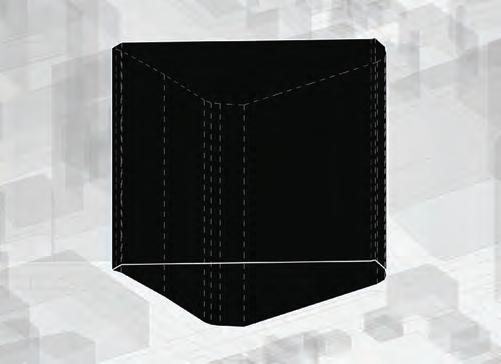

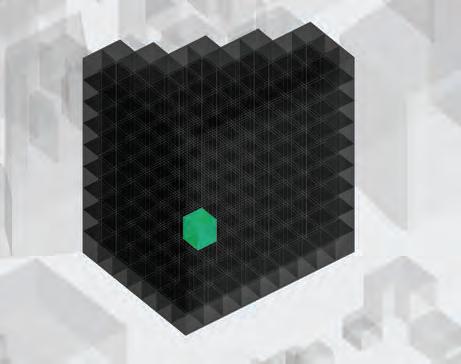

[Fig. 104] Single-surface exploration within the truncated octahedron.

After selecting the truncated octahedron as the space-filling object, different combinations of its vertices were joined to generate surfaces, marking the transition from space-filling to space-making objects.

[Fig. 103] Multi-surface exploration within the truncated octahedron.