After the avalanche of novelties at the NAB Show, calm returns to the market and we prepare for the next show, just around the corner. IBC will be held in just three months; it is an event that, unlike NAB (which focuses mainly on the American market), attracts a global audience. Many things caught our attention at the Convention Center in Las Vegas, and this is reflected in an article in this issue that presents what, in our opinion, was most relevant in this edition of NAB.

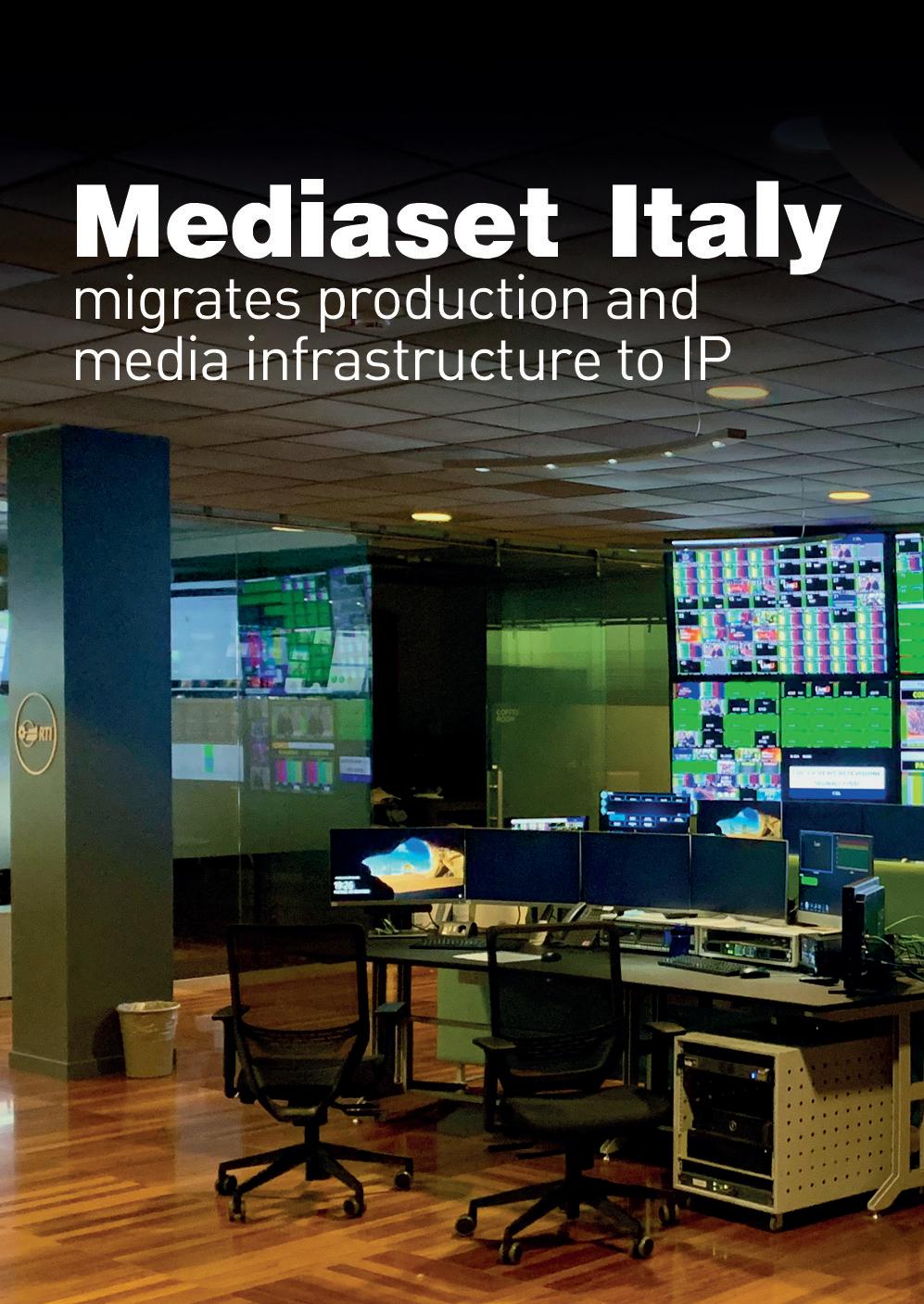

The broadcast world moves fast, always striving for innovation, and proof of this is the large projects being carried out by a good number of broadcasters. Mediaset Italy has just completed the first stage of its ambitious challenge: migrating all of its production and infrastructure to IP.

Marco Di Concetto (Head of Broadcast Systems & Infrastructures at Reti Televisive Italiane S.p.A.) and Pietro Osmetti (Head of Broadcast Control Rooms / Studio Engineering) explain the keys to such a massive renovation and the challenges they had to overcome to undertake it.

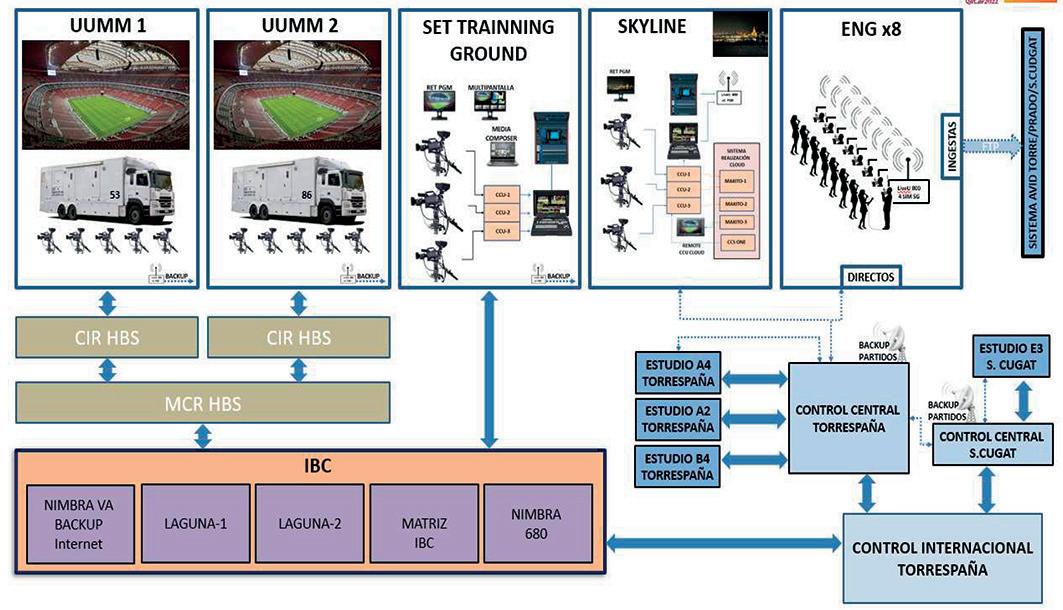

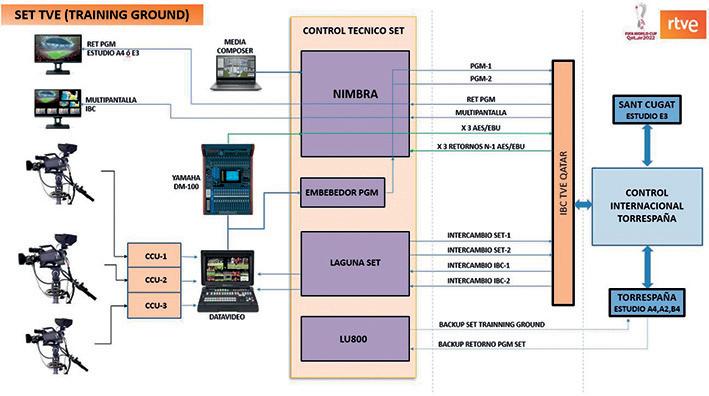

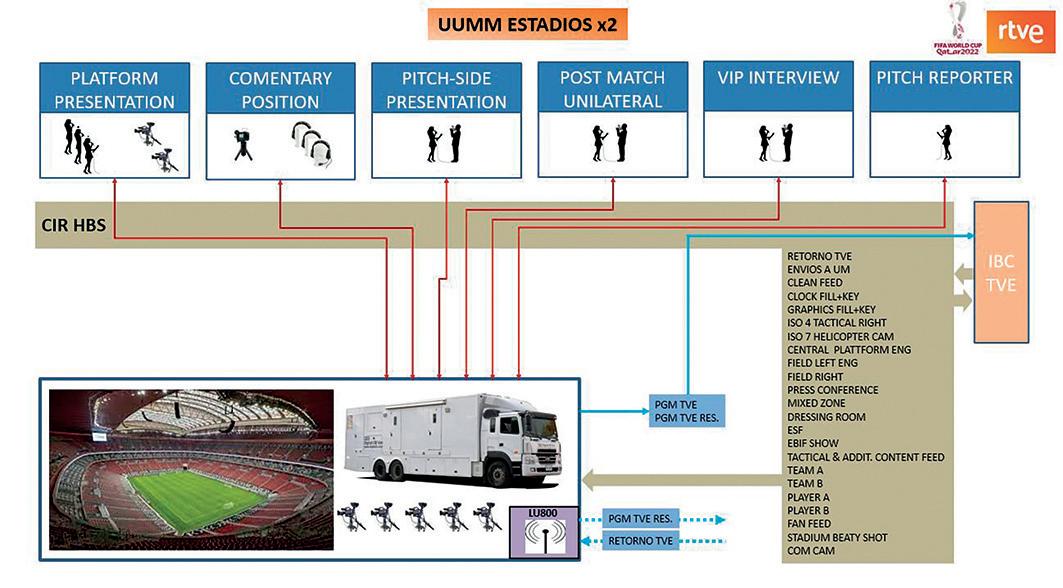

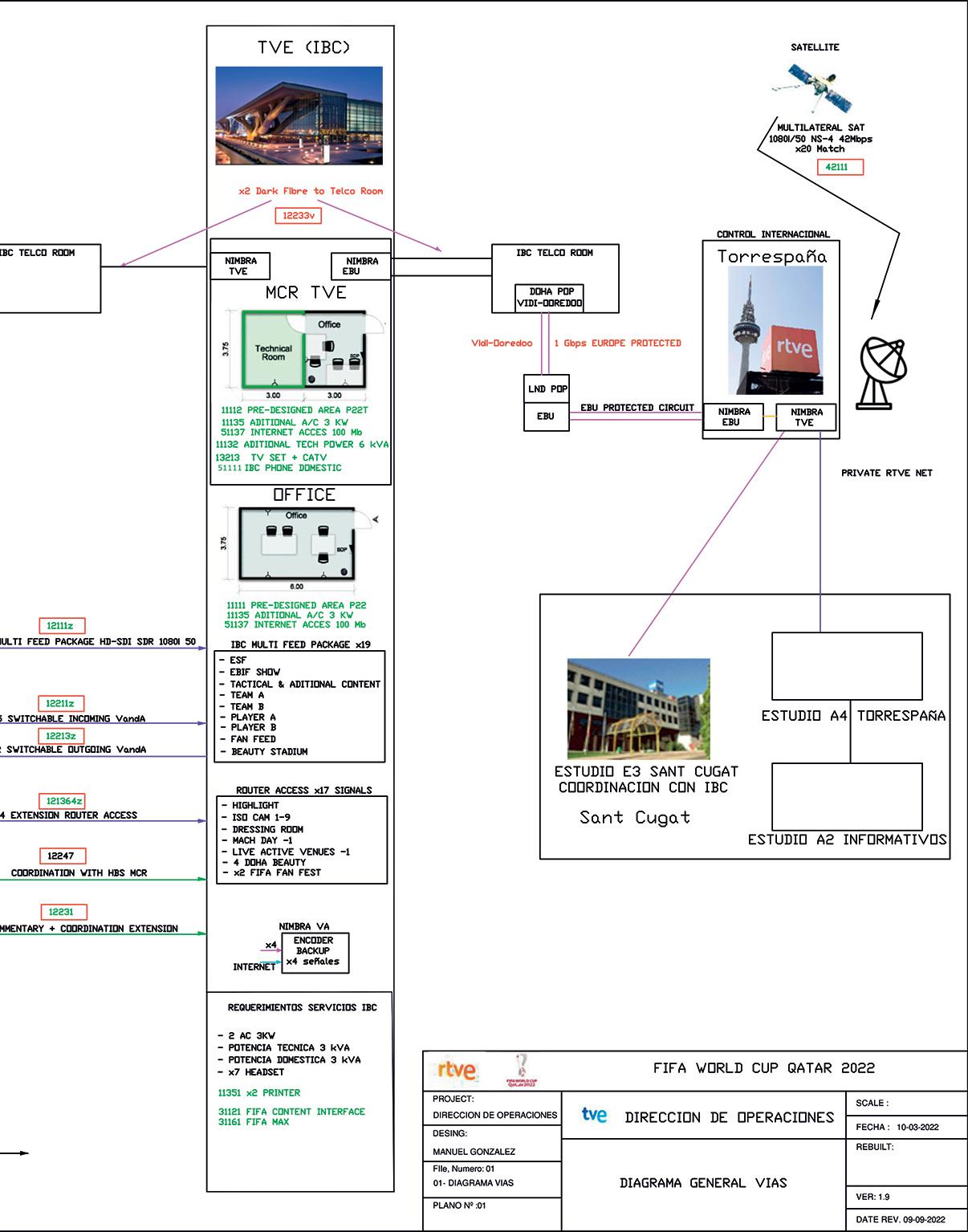

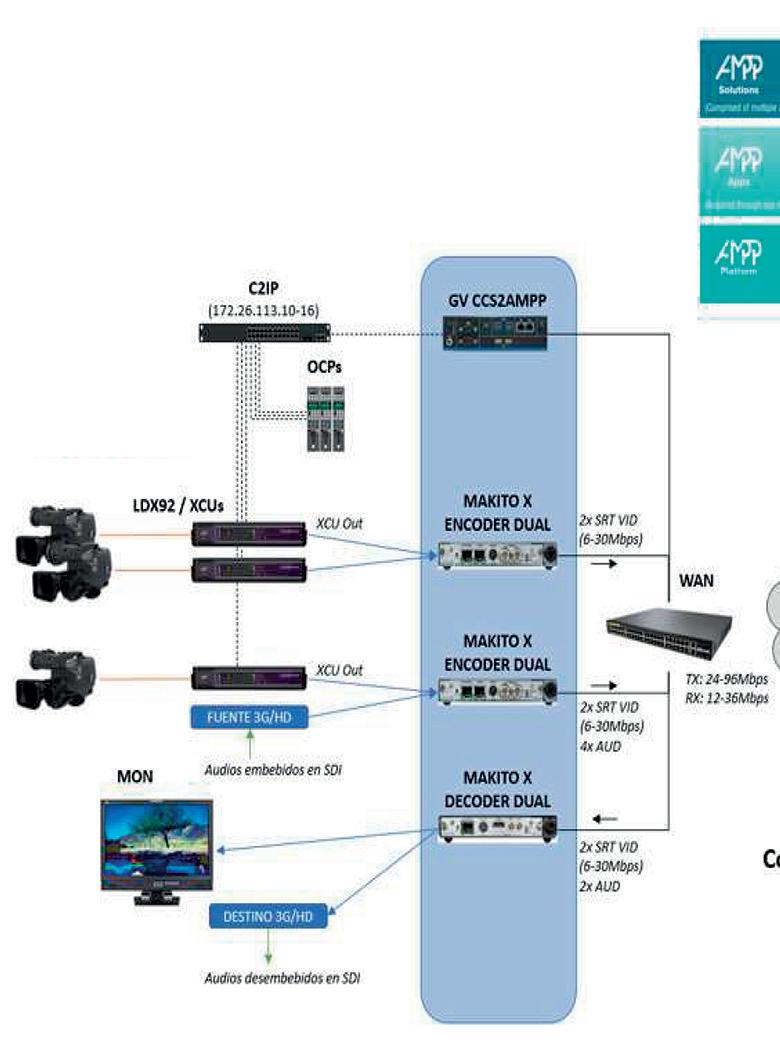

Equally massive was the operation RTVE deployed for the FIFA World Cup Qatar 2022. In this issue, readers will find a detailed explanation of how the broadcaster handled the technical production of its coverage of the event.

We also interviewed one of the big host broadcasters: HBS (Host Broadcast Services), which specializes in sports broadcasting and has been closely linked to FIFA for years. Its CEO, Dan Miodownik, tells us about HBS's path to success and the projects it plans to deliver in the near future.

And finally, we talk about virtual production with Plateau Virtuel Studio, and about the successful HBO series “The Last of Us” with its director of photography, Eben Bolter.

Starting in early 2020, just before COVID-19 hit, Italian broadcaster Mediaset began a project to migrate its production and media infrastructure to IP. After three years of overcoming obstacles and carefully adapting teams, workflows, structures and devices to the new system, Marco Di Concetto (Head of Broadcast Systems & Infrastructures at Reti Televisive Italiane S.p.A.) and Pietro Osmetti (Head of Broadcast Control Rooms / Studio Engineering) told us what can be expected from this new stage in Mediaset's production.

Eben Bolter spoke with us about new tools and approaches in filmmaking, how he sees the future of the industry, and which filming and production technologies he is most keen on.

TSL, a company specialising in audio monitoring, broadcast control systems and power management tools, is showcasing its new TM1-Tally device at the Media Production & Technology Show 2023 (MPTS), which runs from today, May 10th, until tomorrow, May 11th.

The TSL TallyMan product family serves as the central control system for fast-paced media workflows, simplifying the management of the multiple hub-and-spoke systems required to produce and distribute sports, news, concerts, house-of-worship services and other live broadcast events.

Highlights of the TM1-Tally MPTS showcase include:

– A full-fledged tally system that works with all manufacturers for basic to medium tally systems.

– Expandable from six devices to 12, the TM1-Tally accommodates more devices as requirements change.

– Route tally and media as well as perform other control functions such as protocol translation, device control, and system macros.

– Control challenges in smaller systems or fly-packs can be addressed and reconfigured as production demands change.

– Compatible with TSL hardware and virtual control panels.

TM1-Tally ensures the correct tally functions are managed no matter how many switchers or routers are in the signal chain. It supports industry-standard broadcast solutions and protocols, and can connect to a range of switchers, routers and multi-viewers, utilising the built-in GPIO. The TM1-Tally can also connect up to 64 cameras.
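Following tally through several switchers and routers amounts, in principle, to tracing a program output back through each crosspoint until a camera is reached. The sketch below is a minimal, hypothetical Python model of that idea: the `SignalChain` class, its methods and node names such as `RTR1.out5` are our own illustrations, not TSL's TallyMan API, and a real system would learn crosspoint state from the devices themselves (over their control protocols or GPIO) rather than from manual `connect()` calls.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SignalChain:
    # Maps each destination (router output, switcher input, program bus)
    # to the node currently feeding it. All names are illustrative.
    feeds: dict[str, str] = field(default_factory=dict)
    cameras: set[str] = field(default_factory=set)

    def connect(self, source: str, destination: str) -> None:
        """Record that `source` currently feeds `destination` (a crosspoint or a cable)."""
        self.feeds[destination] = source

    def trace_to_camera(self, node: str, max_hops: int = 32) -> str | None:
        """Walk upstream from `node` until a camera is found; None if nothing is routed."""
        for _ in range(max_hops):
            if node in self.cameras:
                return node
            if node not in self.feeds:
                return None  # dead end: nothing routed to this point
            node = self.feeds[node]
        return None  # hop limit reached: treat as a routing loop


def on_air_cameras(chain: SignalChain, program_outputs: list[str]) -> set[str]:
    """Cameras whose signal reaches any program output, i.e. the ones to light red."""
    lit = set()
    for output in program_outputs:
        camera = chain.trace_to_camera(output)
        if camera is not None:
            lit.add(camera)
    return lit


chain = SignalChain(cameras={"CAM1", "CAM2", "CAM3"})
chain.connect("CAM1", "RTR1.in1")
chain.connect("CAM2", "RTR1.in2")
chain.connect("RTR1.in2", "RTR1.out5")  # router crosspoint: input 2 -> output 5
chain.connect("RTR1.out5", "SW1.in3")   # cable from router output 5 to switcher input 3
chain.connect("SW1.in3", "SW1.pgm")     # switcher has input 3 on program
print(on_air_cameras(chain, ["SW1.pgm"]))  # {'CAM2'}
```

Re-routing the crosspoint (for example `chain.connect("RTR1.in1", "RTR1.out5")`) moves the tally to `CAM1` without any change on the switcher, which is the behaviour the paragraph above describes. The hop limit is a simple guard against accidental routing loops.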

With an option to upgrade to larger systems and expand its control panels, TM1-Tally offers flexibility and aims to save time and money while improving productivity. The newest addition to TSL's range of products, TM1-Tally provides a low-cost entry point to TSL's professional control product family in a space-saving form factor that is well suited to OB truck environments and crowded broadcast facilities.

TSL introduces its new TM1-Tally, a broadcast control solution to simplify operations and save space

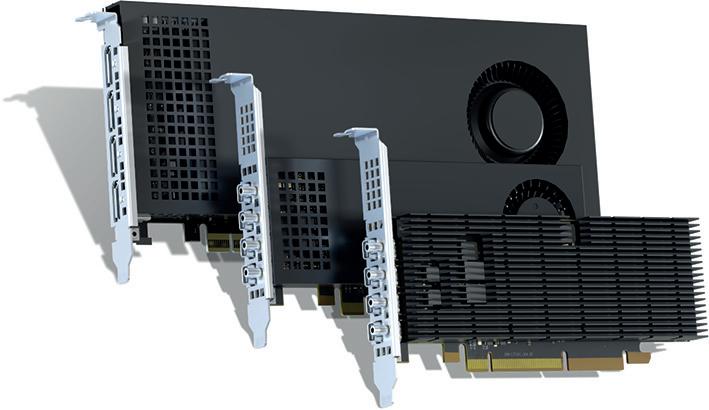

Matrox Video has recently announced its new Matrox LUMA series of graphics cards with Intel Arc GPUs, aimed at high-reliability and embedded PC applications in the medical, digital signage, control room, video wall and industrial markets.

The series comprises three single-slot cards: the LUMA A310, a low-profile fanless card; the LUMA A310F, a low-profile fanned card; and the LUMA A380, a full-sized fanned card.

Matrox Video designed the LUMA range to meet the demands of the mainstream graphics market for driving multiple screens: a balance between size, performance for different applications, and reliability.

The LUMA A310 card is a low-profile fanless card which offers quiet operation and eliminates a point of failure (the fan), thereby increasing reliability and extending the card’s life. The LUMA A310 is the perfect choice for anyone needing a small card that fits in a small-form-factor system. Examples include industrial systems that sit on a table or behind a monitor, or surgical displays in an operating room, where there are stringent requirements for reliability.

All three LUMA cards have four outputs and can drive four 5K60 monitors; all three can also drive 8K60 or 5K120 displays, but are limited to two outputs when doing so. They support the latest graphics capabilities, including DirectX 12 Ultimate, OpenGL 4.6, Vulkan 1.3 and OpenCL 3.0, as well as Intel's oneAPI for compute tasks and the Intel Distribution of OpenVINO toolkit for AI development. The cards also have class-leading codec engines that can both encode and decode H.264, H.265, VP9 and AV1.

Close collaboration with Intel made it possible for Matrox Video to customize certain features of the LUMA cards to address specific market needs and offer several qualities that are in high demand but aren’t available elsewhere:

– The A310 is the only fanless board of its class on the market.

– All LUMA cards support DisplayPort 2.1 and can output up to 8K60 HDR.

One of the most important and busiest venues in the heart of the French capital, the Palais des Congrès de Paris hosts all kinds of events, from medical conventions to the Tour de France presentation. As a result, the convention center needs a powerful yet flexible AV infrastructure to meet the needs of its many users at once. At the heart of this system is an RTS intercom backbone, which has been modernized to work with the OMNEO IP networking architecture.

At over 40,000 m³, the Palais des Congrès is a vast complex boasting meeting spaces of all sizes. The largest of its 29 conference rooms, Le Grand Amphitheatre, seats 3,800 participants, while the smallest caters to 90 people. Various spaces within the complex are in daily use, with the in-house AV team ensuring that the technical infrastructure for every event functions correctly and with the utmost efficiency. In step with the evolving day-to-day demands of a complex of this size, the Palais des Congrès recently updated its AV infrastructure to become fully IP-networked.

For the intercom side of the upgrade, the venue turned to local RTS partner Pilote Films. The team at Pilote Films highlighted that OMNEO would enable the congress center to get the most out of its IP architecture, as it allows RTS equipment to integrate into existing network infrastructure while benefiting from a range of built-in device control and audio technologies, such as Dante™ digital audio. Equally, the backwards-compatible nature of RTS equipment allows seamless integration with legacy RTS systems.

The fully IP-based system now features an Odin 96-port digital matrix interconnected with an Odin 48-port matrix alongside 40 KP-Series keypanels and several PH88 headsets. Completing the solution are 13 Roameo AP-1800 access points and 24 Roameo TR-1800 beltpacks, with six of the access points and eight beltpacks reserved exclusively for Le Grand Amphitheatre.

The event, which will take place in the Italian city on November 28th and 29th, has opened registration, and early-bird offers with reduced rates will be available until July 20, 2023.

Naples, Italy, will host the 11th HbbTV Symposium and Awards on November 28-29, 2023, the HbbTV Association announced recently. The association is a global initiative that aims to provide open standards for the delivery of advanced interactive TV services through broadcast and broadband networks to connected TV sets and set-top boxes.

The annual key summit of the connected TV industry, targeting platform operators, broadcasters, advertisers and adtechs, standards organisations and technology companies, will be co-hosted with Comunicare Digitale, an Italian association for the development of digital television.

For the first time, the HbbTV Symposium conference will be split into two formats: a traditional first day with keynotes, presentations and round tables, and a second day hosting an unconference in which participants decide the agenda, topics and discussions, actively shaping and driving the conference sessions and conclusions.

“We are excited about being back in Italy, hosting a new conference format. While delegates told us that they liked the previous concept, they also encouraged us to create more opportunities for interactivity between speakers and the audience, enabling more exchange among the participants. We are confident that the new structure will create an extraordinary event providing visitors with valuable insights on how to successfully develop their business in the changing market conditions,” said Vincent Grivet, Chair of the HbbTV Association.

The HbbTV Symposium will take place at the Naples Maritime Station, a modern congress and exhibition centre located in the port area of Naples on the Mediterranean Sea. The event will also host the 6th edition of the HbbTV Awards, featuring a wide range of categories designed to acclaim best practice and excellence in the HbbTV community.

Targeting top-level executives of the connected TV industry, the HbbTV Symposium will provide a platform for commercial and promotional activities.

Journalists can easily insert audio clips into their stories as the channel boosts its radio output, thanks to tight integration with the iNEWS® NRCS

Sky News Arabia covers everything that happens in the Middle East and North Africa region, and almost everything that happens internationally, across its TV, radio and digital platforms, 24/7. To boost its radio offering, the broadcaster selected ENCO's DAD automation system to enable intuitive audio workflows with its existing Avid iNEWS® newsroom computer system (NRCS).

This project was developed in line with the network's aim to consistently enhance its services. As Sky News Arabia was already using the iNEWS system for its TV broadcasts, it also wanted to integrate its TV and radio operations through the same NRCS, keeping the workflow streamlined and ensuring ease of use for its journalists and producers.

ENCO's Interface plug-in enables Media Object Server (MOS) protocol integration between iNEWS and the DAD automation system, allowing journalists and news producers to access DAD audio asset libraries directly within the iNEWS client interface. Users can search the ENCO library, preview clips, trim media as needed, and bring the results into their stories by simply dragging and dropping the desired items. iNEWS rundowns are automatically synchronized as playlists on the DAD automation system to keep everything effortlessly updated for subsequent playout.
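The idea of a rundown that is automatically mirrored as a playout list can be pictured with a minimal, hypothetical Python sketch. It does not implement the MOS protocol or ENCO's plug-in; the `Story` type, the clip IDs and the `sync` function are illustrative assumptions that only show how a playout order can be derived from the rundown and how changes made by producers can be detected.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Story:
    slug: str
    clip_ids: list[str] = field(default_factory=list)  # audio clips dropped into the story


def rundown_to_playlist(rundown: list[Story]) -> list[str]:
    """Flatten the rundown, in story order, into the playout order of its clips."""
    return [clip for story in rundown for clip in story.clip_ids]


def sync(playlist: list[str], rundown: list[Story]) -> tuple[list[str], set[str], set[str]]:
    """Rebuild the playlist from the rundown and report which clips were added or dropped."""
    new_playlist = rundown_to_playlist(rundown)
    added = set(new_playlist) - set(playlist)
    removed = set(playlist) - set(new_playlist)
    return new_playlist, added, removed


rundown = [Story("HEADLINES", ["clip_101"]), Story("WEATHER", ["clip_205", "clip_206"])]
playlist, _, _ = sync([], rundown)
rundown.insert(1, Story("BREAKING", ["clip_300"]))  # producer adds a story
rundown[2].clip_ids.remove("clip_206")              # and trims the weather package
playlist, added, removed = sync(playlist, rundown)
print(playlist)  # ['clip_101', 'clip_300', 'clip_205']
```

Rebuilding the whole playlist from the rundown on every change keeps the rundown as the single source of truth; the added/removed sets are what an automation system would act on, for example to pre-load new clips.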

Audio clips can be recorded into Sky News Arabia's DAD system in the studio, or imported as files either manually or through watch folders using the DAD Dropbox utility. Journalists interact with the DAD system primarily through the iNEWS plug-in, while studio personnel take advantage of the intuitive DAD Presenter interface for managing playout.

Suresh Kumar, Director of Technology at Sky News Arabia, commented: “The process of deploying DAD radio automation and integrating it with our iNEWS newsroom system was done in line with our aim to constantly enhance our technical efficiencies. This seamless integration will enhance our ability to deliver content quickly and efficiently to our audiences through our radio channel, and we were pleased to partner with ENCO who acted as an extension of our own team throughout this project.”

TV 2, Denmark's national broadcaster, a state-owned company with public service obligations, has installed two SSL System T S500 consoles, of various configurations, and three System T S300 consoles at its Odense headquarters and in two OB trucks. Two more identically configured S500 consoles are scheduled for installation at TV 2's headquarters, plus three at a second location in the country's capital, Copenhagen, which will eventually bring TV 2's complement of SSL System T consoles to 10 in total.

“The main thing we were looking for was a solution where everything is within a single ecosystem,” says Jens Christensen, Broadcast Engineer, Media Technology, Production & Facility. “We were not interested in buying a lot of outboard gear; we wanted everything inside the console.” When TV 2 first went shopping for a replacement console a couple of years ago, he says, “SSL was pretty much the only company who provided that.” The installed SSL System T consoles have replaced older broadcast audio desks from another manufacturer that no longer services or supports them, he reports.

Over the past two years, TV 2 has installed a System T S500m three-bay, 32-fader console configured for mobile applications in the company's 20-camera OB4 truck, the largest remote production vehicle in Denmark, and an S300-32 32-fader desk in a smaller, 10-camera truck, DNK 47. A compact 16-fader S300-16 surface is integrated into OB6 Flypack, a standard 40-foot shipping container that can be moved around the world for events and, when not on location, is based at the Odense facility, where it is used as a gallery [control room] primarily for eSports. Additionally, a 32-fader System T S300-32 has been installed in Control Room D at TV 2's Odense headquarters, and an S500-32 is currently being prepared for integration into another gallery.

Networking is central to TV 2's plans going forward. “Here at TV 2, we have quite a big audio-over-IP network between our two main locations in Odense and Copenhagen,” Christensen continues. “At the moment, we are building a new gallery in Copenhagen, which will be Control Room F. That is also going to be connected to the other galleries over the network, so we can remote-control the console from wherever we are in our facility.”

TV 2 plans to make the most of the System T's networking and remote control abilities. “We are in the process of separating our studios from our galleries so we can connect any studio to any gallery,” he says. Each of the main studio galleries at the Odense facility will house an identically configured System T S500-32 console, providing familiarity when moving between galleries. “Because of how the facilities were built we couldn't do this before. But the IP infrastructure will make it much more flexible and easier to do that,” he says.

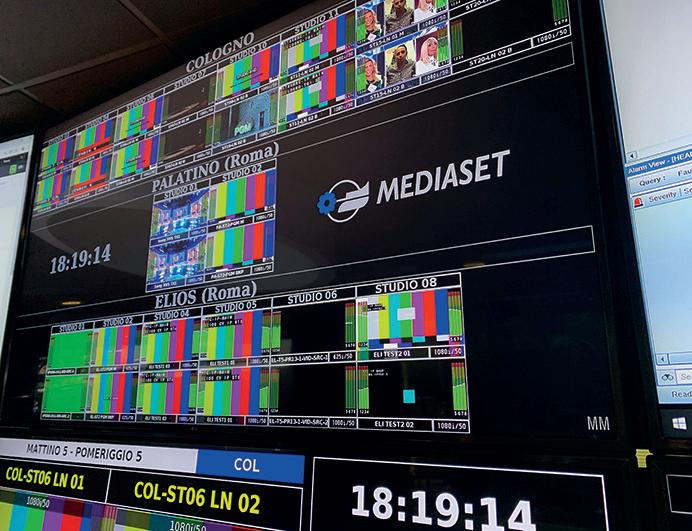

«We were the first in Italy to do these things inside a single studio»: Pietro Osmetti and Marco Di Concetto explain how the migration to IP worked at one of Europe's leading broadcasters


The first question for you is “why?” In other words, what objectives led your company, MEDIASET Italy, to undertake this renovation to IP? What were the main pain points, and what are the main opportunities you see in this transition to IP?

MdC: We are at a stage where the media sector is looking to renovate or replace obsolete technologies in studios and control rooms. At the same time, we wanted to in-source activities that had previously been handled by a sister company.

I am talking about video distribution and the routing of video signals between our different production centers; as you probably know, we have two main production centers, in Milan and Rome, but also playout centers and regional sites. This meant we had to decide whether to renovate based on traditional HD/SDI technology or to move everything to IP.

In the end, we opted for a full IP renovation.

PO: Until three years ago, inter-campus signal routing between our production centers in Rome and Milan was handled by a sister company. With this renovation and the migration to SMPTE-2110, we have brought the whole service in-house across our production centers, so in Rome and Milan it is now entirely within our own structure. After renovating the IP routing, we also changed the technology in some new studios and moved to fully SMPTE-2110 devices inside them. Our audio renovation was based on Dante technology and was carried out in 2017.

To sum up, in Marco Di Concetto's words:

In the last 3 years, Mediaset has launched several important projects as part of an overall IP transformation roadmap, in 3 different, complementary areas:

1. Video distribution and routing between and across the company's production centers in Milan and Rome

2. Studios and related Control Rooms technology

3. Playout and Head-End (DTT, SAT, OTT)

Migration from SDI (SD/HD) to the SMPTE-2110 standard (with NMOS 0.5) has been driven by the following main objectives:

• Support inter-campus and intra-campus transfer of 1.5G or 3G (in the future possibly UHD 12G) video signals over fiber connections between Mediaset production centers in Milan and Rome, using the new IP-based infrastructure

• Integration of Studios and Control Rooms based upon the SMPTE-2110 standard for Video and Dante for Audio with the backbone infrastructure above, to increase efficiency through the possibility to more easily share Control Rooms with different Studios and allow remote production models

• Complete the full-IP overall infrastructure (to avoid gateways, intermediate processing, etc.) with a new IP based Playout and Head-End
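To give a sense of the bandwidth behind the 1.5G, 3G and 12G figures above, the active-picture payload of an uncompressed video stream (as carried by SMPTE ST 2110-20) can be estimated as width × height × bits per pixel × frames per second. The Python sketch below is a back-of-the-envelope calculation under stated assumptions: 10-bit 4:2:2 sampling (20 bits per pixel on average), 50 Hz formats, and no allowance for RTP/UDP/IP overhead, blanking, audio or ancillary data. The format list is our own illustration, not Mediaset's configuration.

```python
# Rough payload estimate for uncompressed video, active picture only.
# Assumption: 10-bit 4:2:2 sampling -> 10 bits of luma plus 10 bits of
# (shared) chroma per pixel = 20 bits per pixel. Packet overhead is ignored.
BITS_PER_PIXEL_422_10BIT = 20


def active_video_gbps(width: int, height: int, frames_per_second: float,
                      bits_per_pixel: int = BITS_PER_PIXEL_422_10BIT) -> float:
    """Active-picture bitrate in Gb/s (decimal giga)."""
    return width * height * bits_per_pixel * frames_per_second / 1e9


formats = {
    "1080i50 (HD, carried on 1.5G SDI)": (1920, 1080, 25),  # 50 fields = 25 full frames/s
    "1080p50 (carried on 3G SDI)": (1920, 1080, 50),
    "2160p50 (UHD, carried on 12G SDI)": (3840, 2160, 50),
}

for name, (w, h, fps) in formats.items():
    print(f"{name}: {active_video_gbps(w, h, fps):.2f} Gb/s")
```

This prints 1.04, 2.07 and 8.29 Gb/s respectively, each below the nominal SDI rate of its class (1.485, 2.97 and 11.88 Gb/s), which is why a UHD signal needs roughly four times the network capacity of a 1080p50 one.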

We (MEDIASET) were the first in Italy to do these things inside a single studio, so it was not easy, but not so complicated either.

MdC: However, I'd like to make a small clarification: at the moment, not all devices are SMPTE-2110, due to the vast number of data ports and the monolithic routing. For example, the studio cameras, at 1.5 Gb/s, are still SDI, and we placed a gateway between each device and the IP routing to convert the SDI signal to IP.

In what stages was this transition carried out, and what stage are you at right now? Is the transition complete or only partially done, or are you planning additional steps in the move to IP of your main production centers?

MdC: As for the distribution of signals between the Rome and Milan production centers, the project was completed and launched at the beginning of January 2022, last year. So, apart from some external contributions made with backpacks, contributions from football stadiums, or other events coming from outside, whether from Italy or abroad, all internal distribution of audio and video signals has been completed and is fully supported by this infrastructure.

MdC: As Pietro anticipated, it's monolithic in the sense that we do not have a distributed IP infrastructure based on…

PO: Primary live technology.

MdC: ...exactly. Instead, we are based on Evertz EXE routers, which support a kind of monolithic approach. All the signals within each production center are interfaced directly to these very big routers.

Actually, the transition, which had been planned as a deployment over approximately 6 years, started in 2020. It began with the replacement of legacy SDI-based video switchers with new, large IP-based core video routers installed in each production center and communicating with each other over a geographic fiber-optic backbone.

In parallel with this installation, 2 initial Control Rooms and Studios, mainly featuring Sony IP video switchers and SSL audio mixers, were launched, one in the Rome production center and one in Milan.

At present, 3 Control Rooms in Milan and 2 in Rome (sharing a larger number of studios in a time-division strategy) are already operational, along with an additional IP-based OB van (named “IPVAN”); a third Control Room and Studio in Rome is planned for summer 2023.

In the next 3 years, the remaining studios and control rooms will be migrated to IP. Meanwhile, a new playout system and the head-ends will also be migrated to IP and integrated with the core infrastructure over the next 2 years.

So did you carry out all this renovation by yourselves, or were you supported by an integration company? If the latter, which company developed the plan, and what is its relationship with MEDIASET Italy?

MdC: The main integrator supporting us in this transition to IP, which was made possible mainly through Evertz core IP technology, Sony (plus 1 Panasonic Kairos) video switchers, Solid State Logic (SSL) audio mixers (plus Yamaha backup ones) and RTS intercom systems, is the Italian company Professional Show, which has been one of Mediaset's leading broadcast suppliers for years.

PO: It's a mixed team. We began by thinking about our requirements, our needs. We install, and we decide how to interconnect all the devices. But the first configuration was done by Evertz and Professional Show, with our support.

It was certainly a very big challenge for us when we started at the beginning of 2020, during the COVID period. We built that structure in our Rome production center and have also started doing so in our Milan production center. But the most important thing is that during this migration, not a single signal or frame was lost in transmission.

I'd like to stress this, because building from scratch may seem complicated, but it can be easier than integrating everything into studios that work 24/7, 365 days a year. Content production cannot be stopped, especially in the News department: news production never stops.

So, as we said, the migration and the implementation of the new system were a great challenge for us, because we renewed not only the inter-campus routing but also the scheduling system. As a result, the workflow was a little different after the migration than before.

In addition, some tasks that our partner used to do manually are now managed by us; we also ran the dry run in parallel on the old and new systems. It wasn't easy, but we did it and reached the target. Of course, Professional Show engineers cooperate with Mediaset engineers to tune the overall design and its integration with Mediaset technical resources (e.g. the network), and they offer L2 support services to the Mediaset Operations Team.

So, when you decided on this “six-hands” integration, let's say, was the design done jointly by the three parties, or did it come from you and was then executed by the integration team? Did the design of the renovation depend on the integrator, or was it developed internally by Mediaset Italia?

MdC: Well, actually, yes (it came from us and was executed by the integration team). The high-level design was conceived by us and, of course, the integration of the audio, video and SMPTE-2110 networks with the management network (because all these devices obviously have to be managed with real care) relies on our internal production network.

So let's say that the high-level design was done jointly.

The design of the renovation was developed internally by Mediaset. The choice of technologies (and, accordingly, of the integrator) was the result of a 2-year selection process, PoCs and liaisons with other international broadcasters.

Due to the architectural details of these big routers and also of the processing boards (because it's not pure routing: sometimes there is also audio processing, video processing and so on), we sometimes had to rely directly on Evertz, the manufacturer, and the same applies to other devices, as I already mentioned. For example, for the Sony video mixers and the Solid State Logic audio mixers, we sometimes relied directly on the manufacturer's resources or on specific system integrators in Italy.

That makes sense. Going into the details of the technology, and starting with connectivity: has everything migrated to IP, or do you still coexist with legacy technology? If so, how do you ensure communication between equipment? What equipment have you integrated?

MdC: The picture is quite complex. As for audio, different technologies have been chosen for microphones and other sound equipment (e.g. Wisycom, Sony, etc.). As for graphics, most of Mediaset's soft and hard news productions rely on Dalet Cube graphics systems, plus some Chyron ones. Video recording and playout for these systems are also based on Dalet Brio video servers.

Entertainment productions also feature different graphics systems rented directly from service providers, while multicamera recording, playout, slow motion, etc. are handled with Evertz DreamCatcher video servers. We have also recently deployed graphics and Virtual Studio technology from ClassX to support lean, cost-effective productions in smaller studios.

Intercom is fully Dante based and features RTS technology.

Finally, we have 2 asset archive systems: the main one, at enterprise level, based on Dalet Galaxy, and “light” departmental archives managed through Italian-based Mediapower Arkki systems.

Until all studios and control rooms have been migrated to IP and the new playout system is completed, legacy systems are being interfaced via SDI-to-SMPTE-2110 gateways (from Evertz) and, for audio, Dante gateways (Evertz or Ferrofish).

One of the big challenges Pietro mentioned in the migration from the old to the new system was synchronizing the scheduling system. This is essentially a booking system for the signals that production staff request: a bunch of audiovisual signals, including contributions from outside with their associated voice communications, so all these production requests have to be settled in the scheduling system.

Originally this was based on a scheduling program, but honestly it was more of a database: a place to enter information, that is, orders that staff at the associated company then had to execute manually.

Now, when the production operator enters this information (which, of course, has to be entered correctly so that it can be automatically interpreted and parsed by the native Evertz IRM, Intelligent Resource Manager), it is turned directly into signal routing.

So management is now mainly in the hands of the control room operators, and everything else is management by exception: if for some reason routing cannot happen in time or correctly, or there is some other problem, operators from Evertz intervene.

Otherwise, everything is done automatically.
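The shift described above, from a booking database whose orders were executed by hand to bookings that are parsed and turned straight into routes, with people stepping in only by exception, can be illustrated with a minimal, hypothetical Python sketch. The entry format, the endpoint names and the `parse_booking`/`schedule` functions are our own inventions for illustration; they are not the Evertz IRM data model or API.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Booking:
    source: str       # e.g. an external contribution feed (illustrative names)
    destination: str  # e.g. a control-room input
    start: datetime
    end: datetime


def parse_booking(entry: str) -> Booking:
    """Parse a hypothetical 'SOURCE -> DEST @ START / END' entry (ISO 8601 times)."""
    route, _, window = entry.partition("@")
    source, _, destination = route.partition("->")
    start_text, _, end_text = window.partition("/")
    # fromisoformat() raises ValueError on empty or malformed timestamps.
    booking = Booking(source.strip(), destination.strip(),
                      datetime.fromisoformat(start_text.strip()),
                      datetime.fromisoformat(end_text.strip()))
    if not booking.source or not booking.destination or booking.end <= booking.start:
        raise ValueError(f"malformed booking: {entry!r}")
    return booking


def schedule(entries: list[str], known_endpoints: set[str]) -> tuple[list[Booking], list[str]]:
    """Turn valid bookings into routes; queue everything else for a human (management by exception)."""
    routes: list[Booking] = []
    exceptions: list[str] = []
    for entry in entries:
        try:
            booking = parse_booking(entry)
        except ValueError as err:
            exceptions.append(str(err))
            continue
        if not {booking.source, booking.destination} <= known_endpoints:
            exceptions.append(f"unknown endpoint: {entry!r}")
            continue
        # Two bookings clash if they share a destination and their time windows overlap.
        if any(r.destination == booking.destination
               and r.start < booking.end and booking.start < r.end for r in routes):
            exceptions.append(f"destination busy: {entry!r}")
            continue
        routes.append(booking)  # a real system would issue the crosspoint command here
    return routes, exceptions


endpoints = {"STADIUM_FEED_1", "MILAN_STUDIO_3", "ROME_CR2_IN4"}
routes, exceptions = schedule([
    "STADIUM_FEED_1 -> ROME_CR2_IN4 @ 2023-05-10T20:30 / 2023-05-10T23:00",
    "MILAN_STUDIO_3 -> ROME_CR2_IN4 @ 2023-05-10T22:00 / 2023-05-10T23:30",  # overlaps
    "STADIUM_FEED_9 -> ROME_CR2_IN4 @ 2023-05-11T10:00 / 2023-05-11T11:00",  # unknown source
], endpoints)
print(len(routes), len(exceptions))  # 1 2
```

The design point is that a booking which parses cleanly and does not clash never needs a person; only the entries in `exceptions` reach an operator, which mirrors the "management by exception" model described in the interview.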

The challenge was to migrate from the old main system to the new one in just a day, because every time you move a production from one way of operating to the next, you move it from an old legacy switcher to the new one. Of course, you had to do this in stages, but you needed to do it in less than a month, because some of these productions were working together and… I mean, the big challenge was changing the structure while everything kept working properly.

And some productions run uninterruptedly: New Year celebrations, news reporting…

One thing you didn't mention is that this new IP infrastructure is, as we know, much more capable. Do you foresee, or are you looking at, moving to new formats such as UHD or HDR for production, or for the moment are you producing only in HD, with these formats coming once viewers or content demand them? How do you see this issue?

PO: This question is very hard to answer… because, in my opinion, it is not only a production issue. For production, it is certainly possible to go beyond HD; but you have to consider that we broadcast over DTT, so the available bandwidth is limited. And having the capacity does not mean the whole infrastructure will automatically support Ultra HD, because it also raises problems for several other systems: not just production, but in some cases post-production, and archiving too.

Typically, we archive all our content at XF 50 Mb/s, and all our other archive systems are based on this format. If we had to move to Ultra HD, costs would be multiplied by 4.
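A back-of-the-envelope Python sketch makes the archive figure concrete. It assumes, as a simplification, that storage cost scales linearly with bitrate, so the fourfold increase quoted for Ultra HD is modelled as a 200 Mb/s archive format; it counts video essence only, uses decimal units, and the 10,000-hour archive size is a purely illustrative number, not a Mediaset figure.

```python
# Storage needed per hour of archived material at a constant bitrate.
# Assumptions: video essence only (no audio, wrapper or metadata overhead),
# decimal units (1 GB = 10**9 bytes, 1 TB = 1000 GB).


def gigabytes_per_hour(bitrate_mbps: float) -> float:
    bits = bitrate_mbps * 1e6 * 3600  # bits recorded in one hour
    return bits / 8 / 1e9             # bits -> bytes -> GB


hd = gigabytes_per_hour(50)       # the 50 Mb/s archive format quoted above
uhd = gigabytes_per_hour(50 * 4)  # "multiply by 4", modelled as 4x the bitrate
archive_hours = 10_000            # hypothetical archive size, for illustration only

print(f"HD : {hd:.1f} GB/hour")
print(f"UHD: {uhd:.1f} GB/hour")
print(f"Extra storage for {archive_hours:,} hours: {(uhd - hd) * archive_hours / 1000:.0f} TB")
```

At 50 Mb/s, one hour takes 22.5 GB; at four times the bitrate it takes 90 GB, so every 10,000 archived hours would need about 675 TB more storage, before counting backups or copies.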

And at the moment we have only some content (as Pietro mentioned) that comes in Ultra HD from the major studios we have agreements with, such as movies and fiction, but we deliver it to end users only through our over-the-top offering.

Obviously, having the capacity is one thing and migrating to this new format is another, absolutely.

PO: And you have to change. We have to change a lot of things, not only the infrastructure. It’s not only related to this.

One of the things that came to mind regarding the move from SDI to IT is cybersecurity itself. Did you address this cybersecurity issue in any way? Did you isolate the network? Obviously, you cannot give us many details for security reasons, but what measures did you adopt in your production network that differ from those in the office network?

MdC: Security has always been, and is increasingly becoming, a very critical and complicated issue. At the moment, our SMPTE 2110 video and Dante audio networks are completely "closed" to the outside and private. The relevant management networks are carefully segregated and not exposed to the Internet. We are implementing strict, continuously updated policies managed by an internal Security department, directed by our Chief Security Officer and operated by the Mediaset Security Operation Center (SOC).

Different measures are being adopted at different levels, with policies covering intrusion prevention, data leakage and GDPR, which apply both to internal users and departments and to external hardware and software suppliers.

So, as you can see, we decided from the very beginning to completely separate the video and Dante audio networks from the rest of our network.

PO: In addition, the SMPTE 2110 video network works on direct point-to-point connections between the different Evertz core IP routers, with nothing in between. Of course, the only network that might be at potential risk is the management network. But the management network is completely segregated from the outside, from the public network, and it is carefully managed according to all the security policies and directives issued by our internal security department.

MdC: Some of our services, for example the over-the-top services, have been exposed to Internet attacks, especially DDoS attacks. Several years ago this raised awareness of the importance of keeping everything under control with web application servers, in order to protect us from the outside.

But, I insist, this specific broadcast network is completely segregated, with no exposure to the outside. Of course, we run vulnerability tests regularly, because some potential threats can also come from the inside.

PO: In terms of security, we might sometimes be considered a little too protective, and this leads us to the question of cloud services. I would say that 95%, if not 99%, of our audio and video infrastructure is on-premises.

MdC: As far as broadcast-quality video production and playout are concerned, we are considering cloud services only for some AI-based metadata enrichment of production content. This means that low-quality content is sent outside, processed, and the metadata is returned. This is one of the occasional cloud usages we have.

The other use is the distribution of our over-the-top services. In this case we rely on cloud services and external content delivery networks. Typically we have our own VPC, a cloud service in an external supplier's domain, but it is the system integrator who implements the services directly on our VPC domain.

These are the only uses of cloud services we make to date. In any case, we have a direct link to Amazon to avoid any kind of threat or problem.

OK, you have just answered my last question, which was about the implementation of cloud technology and, beyond that, the virtualization of systems for remote work. Is there anything more you want to do there, or what is the plan…?

MdC: At present, the majority of our broadcast TV production and playout systems are on-premises. Only our MAM and Dalet newsroom systems use supporting AI services for metadata enrichment in the cloud (on a Mediaset VPC domain).

Cloud services are mostly used for office IT and, more recently, for Mediaset OTTV distribution services (Mediaset Infinity).

Virtualization of systems for remote work (in particular remote editing and post-production) is under evaluation, with some PoCs due to start soon.

Our plans include completing the IP migration of the whole content acquisition and distribution chain, as I outlined above.

Virtualization of production systems (especially for pop-up productions) could be one of the areas of future exploitation.

We certainly have to consider it, because at the moment our production is based on on-premises systems, post-production and in-house storage devices… so we have to at least evaluate this, and next month we will start some PoCs to evaluate all these questions. At the moment it is all still in our heads, but it is mandatory to consider it, particularly at this moment.

…but at least you are trying some proofs of concept and so on, in order to evaluate what you have.

PO: We have carried out some proofs of concept related in particular to editing and post-production systems.

Yes, that is where everybody starts; it makes sense.

As you know, it is not only a problem of technology or of devices; it is an issue of the way we work and of workflow…

…and, yes, you also need to educate your crew.

PO: Not only educate. We have to involve a lot of departments, not only the technical or operating ones, because this also changes the way we work. Today our post-production rooms are inside the production center, so editors go directly to their room, check the content, check the teams, and are able to change anything.

If we think of post-production in a remote setting, we certainly have to involve the whole organization: production too, not only the technical or operating departments.

It is a challenge. The IP migration involved the whole structure, because even when only a few things changed, they also changed in other systems, and so across the whole company. In the same way, this certainly has to be managed together. Nobody can arrive and say "from tomorrow we work this way"; that is not going to happen, and it would not be efficient.

MdC: For example, during COVID we had to implement a kind of remote-desktop-based architecture, especially for journalists to access our newsroom system and data. It was reasonably hard, not only in terms of bandwidth but also in terms of system responsiveness.

Indeed, a large number of people were working from home, so it was urgent during that specific period. We somehow got through it, but we also realized that remote editing and post-production infrastructures have to be designed very carefully to avoid interruptions and delays. Editing and post-production are very critical and very demanding.

The second thing we take into account is cost. During COVID it was a necessity, but now we have spaces, rooms equipped with everything, with monitors for people to work with. So I wouldn't say the interest has disappeared, because we still have to consider it, but it is not as urgent as it seemed.

Finally, would you like to share any other plans regarding IP, for example to work with other or external production companies that can connect to your production centers through IP? Do you have any plans to extend this IP technology beyond your two production centers in Rome and Milan?

MdC: In a sense, yes. First, we have to separate file-based contributions from live ones. File-based contribution, yes, we have been doing over IP for several years. I don't remember in which answer I mentioned it, but we also have specific workflow managers that accelerate geographically distributed contribution on links where you cannot rely on pure TCP/IP and have to use alternative systems.

So, as far as file-based contribution is concerned, apart from some services that still contribute on tape, everything is based on IP.

Regarding live, we are receiving some external contributions, but not directly in 2110. We are not planning to receive them directly in 2110 either: the receivers output pure SDI and are interfaced to our SDI-to-2110 IP gateways. This is the only case we have.

As I mentioned, some external live contributions, including those from crews moving in the field, arrive via backpacks.

As you know, these use the cellular network, or in some cases satellite. We have receivers that take these contributions and convert them to SDI, and from there into our contribution and distribution network.

So, do we have plans to move this to IP in the future? I don't know. I'd say not right now, both for security reasons and because at the moment, apart from these backpacks, we are not using any other IP-based contribution system.

Marco, Pietro, is there anything else you would like to add before we close?

I would just add one concern, which Pietro mentioned earlier. We still have to weigh the benefit of connecting IP devices directly to our infrastructure against the granularity of the interfaces. As long as we move signals at 1.5 Gb/s and the interfaces run at 25 Gb/s or 100 Gb/s, it is sometimes more convenient to go through SDI when interfacing these kinds of signals.

It is different if you have to interface a mixer. Sony mixers, for example, implement 2110 and already carry several video signals over each 2110 interface.

When it comes to interfacing cameras directly, it might become convenient if we move to Ultra HD at 12 Gb/s in the future. So far, though, with 25G interfaces on our IP cores, it is more convenient to aggregate signals into gateways via SDI and then convert them into IP. This is the only rationale behind some legacy choices that we are still…
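The interface-granularity argument can be checked with simple arithmetic. The Python sketch below is illustrative only. It uses the 1.5 Gb/s and 12 Gb/s per-signal figures quoted in the interview and the 25G and 100G port speeds mentioned there. It deliberately ignores protocol overhead and switch headroom, which a real SMPTE ST 2110 bandwidth plan would have to budget for.

```python
import math

# Illustrative sketch of the "interface granularity" argument.
# Assumptions: the 1.5 Gb/s and 12 Gb/s per-signal figures quoted in the
# interview, and no allowance for protocol overhead or switch headroom,
# which a real SMPTE ST 2110 bandwidth plan would need to include.

PORT_SPEEDS_GBPS = {"25G": 25.0, "100G": 100.0}
SIGNALS_GBPS = {"HD (1.5 Gb/s)": 1.5, "UHD (12 Gb/s)": 12.0}


def streams_per_port(port_gbps: float, signal_gbps: float) -> int:
    """Maximum number of whole signals that fit on one port."""
    return math.floor(port_gbps / signal_gbps)


def single_signal_utilisation(port_gbps: float, signal_gbps: float) -> float:
    """Fraction of a port used when only one signal is connected to it."""
    return signal_gbps / port_gbps


for port, port_bw in PORT_SPEEDS_GBPS.items():
    for signal, signal_bw in SIGNALS_GBPS.items():
        n = streams_per_port(port_bw, signal_bw)
        used = single_signal_utilisation(port_bw, signal_bw)
        print(f"{port} port, {signal}: up to {n} signals; "
              f"a single signal uses {used:.1%} of the port")
```

Under these assumptions, a 25G port could carry up to 16 HD signals. A single camera connected on its own would therefore leave about 94% of the port idle, which is the waste of bandwidth and ports described in the interview. A 12 Gb/s UHD signal, by contrast, would fill almost half of a 25G port.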

PO: Or maybe we could also connect the cameras, but we would have to put some aggregation in front of them. Today the aggregation is done in SDI; now it may also be possible to aggregate in IP. But certainly not by connecting them directly to the monolithic core. Otherwise you get the benefit, OK, but the waste of bandwidth and data ports is not worth it in the end.

You also have to consider that we started building the IP infrastructure in 2020, when not many devices were compatible or affordable. Now we are perhaps more confident with the system, and it is a little easier for us, but not by much.

It’s a continuous challenge.

Host Broadcast Services (HBS) is a company that specialises in TV production and broadcasting for large sports events. Since its foundation in 1999, it hasn’t stopped growing and widening its range of services; today, HBS has several offices worldwide and employs almost 120 people. Dan Miodownik, CEO, tells us about HBS’ way to success and which projects they are planning to deliver in the near future.

Host Broadcast Services (HBS) was founded in 1999. The team who had worked with FIFA on the successful delivery of the host broadcast of the 1998 FIFA World Cup™ in France, led by Francis Tellier, were approached in a consultancy role for the following edition in 2002. That soon led to the incorporation of HBS as a company with the single purpose of delivering the 2002 FIFA World Cup Korea/Japan™.

The basics of the host broadcast project (the multifeed concept, directors selected from numerous territories hand-picking their teams, sourcing of equipment, building long-standing relationships with suppliers, etc.) were invented then. This successful model is the cornerstone of any HB operation, evolving with the industry but staying close to those initial principles.

Over the years HBS has grown in experience, staff and global locations, and as a result has evolved into a multi-project company. We specialise in project management of major events, with a large focus on content production (live and non-live) and broadcast engineering, but also with expertise in the construction and delivery of broadcast sites, venue management and broadcaster relations, as well as the internal solutions required, such as logistics, IT and human resources. Our strength is the ability to offer all of these skills to clients as a turnkey package or as component elements, based on what they require for their project.

While our main longstanding partnerships are with FIFA, UEFA, the Ligue de Football Professionnel, the Fédération Française de Tennis, Concacaf and World Rugby, we have over the years worked on nearly all major sports and events, on all five habitable continents and in over 100 countries.

The company was founded in 1999. What has been the evolution of your technology during this time? What technological change has made the biggest impact in your workflows?

The industry has evolved substantially over the course of HBS’ existence, with many technological changes brought on by work we have done in partnership with our clients on major events.

Purely on the standard of world feed signals we have gone from SD in 2002 to UHD HDR in 2018 and 2022, with several other innovations tried and tested over the years.

The coverage of major events today is leaps and bounds from where we were with our first events. For instance, the number of cameras in a standard camera plan, taking audiences closer to the action, has grown, in some cases doubling, which means that the storytelling options for directors have hugely evolved, as has the quantity of exclusive content we can offer to rights holders.

Another major change we have seen develop in recent years is the move from satellite distribution to cloud-based IP contribution and distribution. Whether hybrid or full OTT, the cloud distribution method is here to stay, and the choice between this approach and satellite will continue to allow greater flexibility.

In terms of changing the way we work, the emergence of remote production has been a game changer. The ability to deliver without the requirement of bringing crew and equipment on site to event locations has obvious financial and environmental advantages, which is beneficial to smaller events that can now be covered in the optimum manner. We will see further advances in remote and centralised production on events in the coming years.

What resources does HBS have and to what productions does it allocate them?

As a project management company our key resource is our staff and their expertise. Over the years we have retained our experts and their accumulated knowledge of delivering broadcast services and solutions for major events and this is what forms the strong foundation of HBS’ reputation and track record of flawless delivery.

The company has invested in a core of technical equipment, stored in an off-site warehouse, which is redeployed from event to event and updated when advances require. Similarly, some technical solutions developed over the years have become a staple of our operations.

As an example, the Equipment Room Container (ERC) is an approach to setting up technical installations at a venue that we developed to optimise implementation time on site. The full system is assembled and tested at an off-site warehouse before being sealed in the container and shipped to the host country of the event, allowing for immediate plug and play.

Which manufacturers do you work with?

Over our 20+ years as a system integrator in the broadcast industry we have worked with practically all major suppliers of equipment, technology solutions and broadcast services. We have good relationships with all of them and are not tied to any manufacturer.

Part of our corporate ethos and values is strong budget management and transparency with our clients. As such, each project’s requirements are analysed individually, and we tailor our response and our selection of suppliers to the client’s needs and goals.

We strive to find the most cost-efficient solution that delivers to the standards we demand at the level that is required. Through our network of suppliers around the world we are able to base our decisions on good analysis and a fair selection process. We are known for a pioneering role in technology, but only when the solution demands it.

Who are the most important customers for your company?

Each project and client has its own objectives and we deliver on those goals independently for each project.

As a multi-project company, we work with many clients on large and small projects, sometimes delivering an all-in-one host broadcast operation and sometimes just an element or component of a bigger mission.

The key thing is ensuring that each project is delivered to the highest standard for our client, whether it is the project management of a full season or standalone tournament, or the provision of one service as part of a bigger project being led by a federation or organiser.

What protocol does HBS use in order to guarantee security and reliability during transmission?

Planning for each event always includes a backup solution and a crisis scenario methodology. It is rarely required, but it is essential to have it in place, with a team that understands the procedure and can respond calmly and efficiently when implementing any switchover required.


Procedures look to ensure that you are never reliant on one technology. If there is an IP solution, it is doubled with a satellite backup. For an event with an International Broadcast Centre (IBC), there is always a solution on standby for a situation where the IBC becomes inoperable. At the Rugby World Cup 2019 in Japan this scenario came into play when a typhoon passed through Tokyo. It was necessary to shut down the IBC facility for 24 hours and switch operations to a remote site in the UK. The IBC came back online once the inclement weather had passed and the impact on site in Japan had been evaluated. While remote production is becoming more prevalent, with the cost and environmental benefits that brings, the main World Feed is still managed on site at the stadium, which is a way of managing risk.
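The "never reliant on one technology" principle amounts to an ordered list of transmission paths, in which the first healthy path carries the feed. The Python sketch below models only that selection logic. The path names and health flags are hypothetical. A real operation would add monitoring, hysteresis and the human crisis procedure described above.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical model of a "never rely on one technology" policy: paths are
# listed in priority order and the first healthy one carries the feed.
# Names and health flags are illustrative, not HBS systems.


@dataclass
class TransmissionPath:
    name: str
    healthy: bool


def select_path(paths: List[TransmissionPath]) -> Optional[TransmissionPath]:
    """Return the highest-priority healthy path, or None if every path is down."""
    for path in paths:
        if path.healthy:
            return path
    return None


paths = [
    TransmissionPath("IP contribution", healthy=False),   # primary has failed
    TransmissionPath("Satellite backup", healthy=True),   # standby takes over
]
active = select_path(paths)
print(active.name if active else "No path available: invoke crisis procedure")
```

Because the list is ordered by priority, the primary IP path is used again automatically as soon as it is marked healthy.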

Could you tell us about the biggest projects HBS has been involved in?

Every event is big. For our clients, their event is the most important date in their calendar, so for us, as a service provider, the mindset is the same: we are there to make each and every client shine. Different types of projects have their own scale and challenges to overcome. An event like a FIFA World Cup™ can of course be measured as the biggest because of factors such as its global audience or its length.

For multi-sport games you can potentially have over 40 different active venues, each with a different set of installations and camera plan, each covering a different sport with its own guidelines and style of coverage. Events occur simultaneously, and medal-winning moments and medal ceremonies can overlap, yet the output must remain consistent, and the director of the world feed must make sure all stories are covered with a coherent narrative.

Meanwhile, a season-long event requires planning over a much longer stretch, without necessarily the same crew working every weekend or every match, which again can bring its own complications in terms of logistics, project management and consistency.

How would you define the audience of a FIFA World Cup on the opening day? And how does your company translate this knowledge of audiences in terms of production?

The size of the audience does not change the production: technically, it makes no difference to operations if it is the first match of an event or the last. The plans are in place, the testing has been done.

The human factor is the one element that can be different for an opening match of a FIFA World Cup™. For the staff who have been working on planning events, sometimes for up to four years, there is that sense of anticipation, that adrenaline to finally see all the hard work and organisation come to fruition with live images beamed out from the event.

Which type of event have you found the most difficult?

Each event and sport brings its own set of challenges, but in terms of a specific type of event, the hardest is anything which is not in a controlled environment, for example a winter sports event or a marathon. Within a stadium or an arena there is a certain level of control. When an event leaves that situation and takes place on a mountain or along a route around a city, there are potentially many more uncontrollable factors (topography, weather, or the proximity of fans to the competitors).

The weather can have a real impact on a winter sports event. A sudden snowfall, for example, could cover cameras positioned on the course or make them harder for technicians and operators to reach. Or the opposite can happen, if snow has not fallen heavily enough in advance of an event. No matter how much technical preparation has gone in, if the conditions are not right it becomes a very different operation.

The technology is used where it is relevant to the project. What it brings to broadcast, especially for federations and event organisers, is the opportunity to better manage and control how their content is distributed.

Through the broadcast rights system, the federation organises the event and the sale of rights, but distribution lies with the broadcasters, the providers and their manufacturers, working within the parameters of their rights agreements. With cloud solutions, an event organiser can look more closely at an OTT solution via an owned platform, removing the third-party element and allowing them to control the distribution of coverage if they so wish.

It gives them the chance to scale their solution to the event. For a large-scale event they can remain with a traditional model, but for smaller or regional events they can choose, if they wish, a solution based on cloud contribution and distribution for all or part of that project.

The whole ecosystem of cloud-based distribution offers the benefits of scale and flexibility to the client and allows on-site resources to be optimised, which is why it is valuable for us to investigate and understand the opportunities that it presents.

What technological breakthroughs is HBS considering incorporating in the near future? And, in terms of company structure or appointments, does HBS plan any moves?

As a company, HBS continues to look at where our skills and expertise can be applied, potentially even beyond sports.

We have recently opened a branch in the U.S. Following our extended contract with Concacaf, it became necessary to have a permanent presence there. As a project management company, we can offer top-to-bottom solutions, or elements of them, which is where our strength lies. We see an opportunity in the North American territory to apply the philosophy we have learned over the years on the events, projects and sports that match our experience.

And, of course, a key factor for all companies in the industry is understanding how we can continue to improve the way we operate in a sustainable, socially responsible and environmentally conscious manner. It is important that we actively take steps and are seen to be leading the change within the industry on these matters.

In terms of technology, remote production is clearly the direction the industry is heading, and we are, of course, looking at how to adapt our services to match that approach while keeping the client’s needs at the centre of that strategy.

With that in mind, agnostic platforms that offer a catch-all service for areas such as distribution, virtual commentary or archiving are interesting to explore. Having one tool that can be tailored to all of a client’s requirements will allow remote solutions to be applied more frequently.

There are great advantages in 5G, and even though the technology, according to recognised professionals, is ready to go, it has not yet become standard. In your opinion, what would it take to get to this point?

The key factor is coverage and the consistency of that coverage. If 5G coverage cannot be completely relied upon then the question would have to be why risk a 5G solution over a cabled approach? Its usage depends on the event and the requirements, and then applying it as a solution when it makes sense rather than as a go-to solution. There are different factors in play when making that decision. Is it a public or private network? If it is public and you have a lot of spectators around the event will that saturate the network? Such factors are clearly going to have an impact.

TM Broadcast has had the pleasure of chatting with the Director of Photography of HBO’s latest hit show, “The Last of Us”. Eben Bolter spoke with us about new tools and ways of filmmaking, how he foresees the future of the industry and which filming and production technologies he is keenest on.

Who is Eben Bolter and how did he start in the cinematography world?

I’m a cinematographer and I got my start making short films with friends on DSLR cameras. Rather than going to film school I committed to shooting as many shorts as I could, and over the years I’ve shot over 100 shorts now. It’s a great way to learn by getting your hands dirty across all areas of the camera and lighting departments.

What has been your progression and how did you get involved in a production like “The Last of Us”?

Doing so many short films allowed me to build a show reel which led to getting an agent, shooting feature films and then TV series. I’ve been lucky to work with HBO several times before, so when “The Last of Us” was announced my agent was able to get me an interview and somehow I got the job.

To develop these chapters of “The Last of Us”, what technical and human teams were involved?

There are 9 episodes in season 1 of the show, 3 of which I DPed. We ran 3 cameras for most of the show with an incredible crew of Canadian camera technicians, and I was fortunate to be able to bring my A-camera operator from LA, Neal Bryant. Lighting-wise, Paul Slatter headed up a massive lighting department, and Spike Taschereau ran a very efficient grip department.

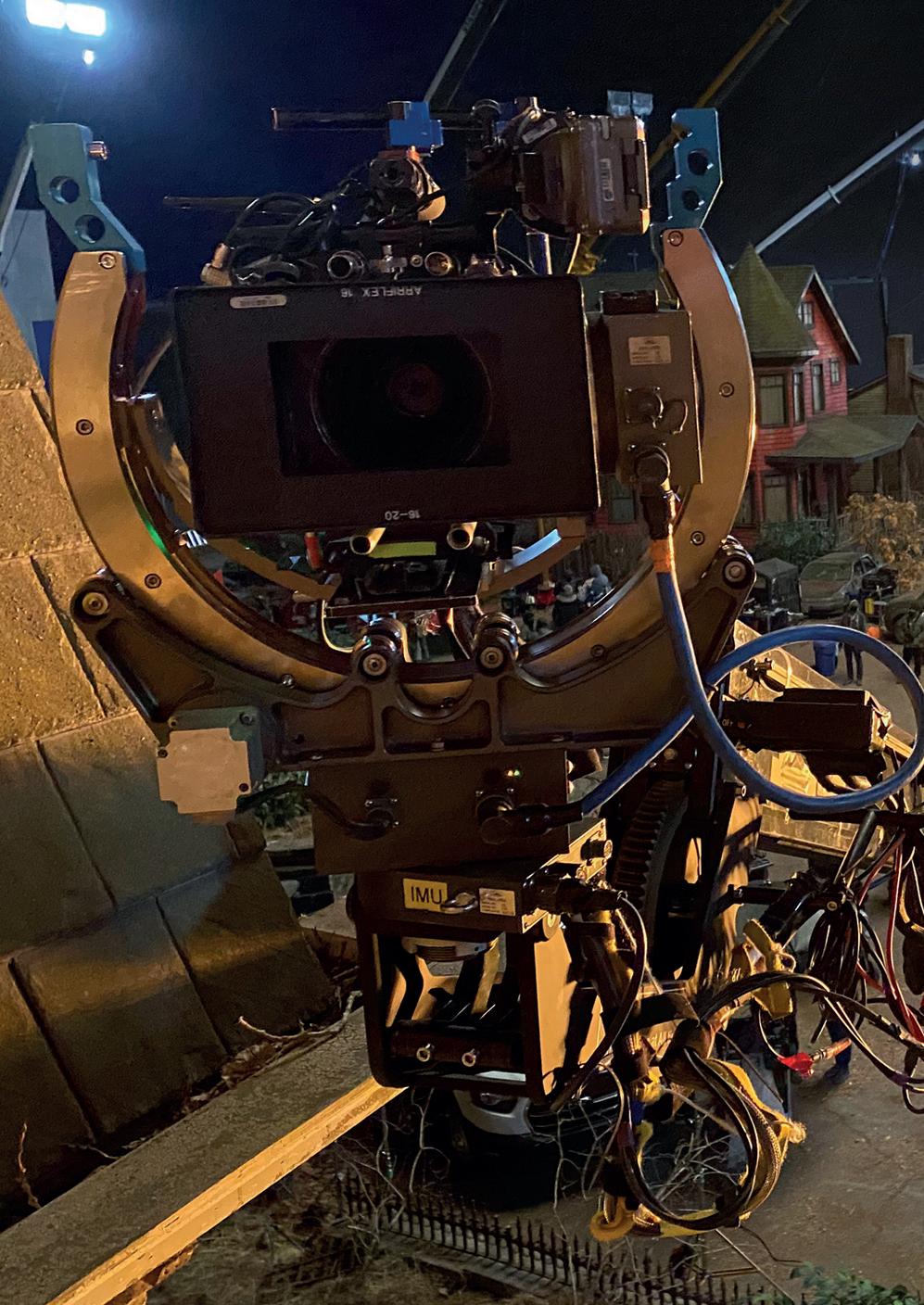

What technology has been used to develop the chapters?

Technology-wise, most of the innovation in the show was in production design and VFX, with designer John Paino and VFX head Alex Wang coordinating brilliantly to bring the world of “The Last of Us” to life on camera. Our usual approach was that if the actors could touch it, it needed to be real; if it was in the far distance, out of reach above them, we would use VFX extensions.

What is the biggest technical challenge you have faced? How did you solve it? Now that it is finished, which chapter do you consider the most rewarding in terms of the work you have done?

In episode 5 we had a cul-de-sac sequence that required building the street and houses on a backlot outside our studio in central Calgary. The scene called for a middle-of-nowhere moonlight feel that would seem organic and naturalistic, yet allow the audience to see the story beats clearly. The added complication was that winds in Calgary can reach 100 mph, so we had to create a large-scale soft-light solution without using textiles. We settled on a lighting ‘net’ approach, with 400 individual bi-colour LED tubes evenly spaced and suspended from four construction cranes. This gave us soft, controllable light while letting the wind pass through, so we could keep shooting.

What is the biggest technological innovation you have introduced in the filming of this season of “The Last of Us”?

It wasn’t really a show where we were looking to innovate with technology, more just to tell the story in the most authentic way possible. I’d say the most interesting new piece of technology was the ZeeGee camera rig. It’s a device that emulates handheld operation but is attached to a Steadicam vest and arm. This takes away some of the shakiness of handheld operating and allows the operator to create an authentic handheld look from any height, not just the arbitrary height of their shoulder.

Chapter three, “Long, Long Time”, had a great impact and was highly acclaimed by the audience, with scenes like Bill and Frank’s conversation at the piano or their last supper. In terms of lighting and photography, how do you create the mood for an episode like this?

For Peter Hoar, the director, and for me, this was very much a story where we wanted to use the gift of a relationship told over 20 years to its full potential, so we very carefully plotted out how the seasons, weather and time of day could best be used to reflect the mood of the scenes and of their relationship overall.

As for the fifth chapter, how was the lighting approach in the sniper scene and the battle against the infected?

Again, this was about reflecting the truth of the situation. Our characters are in the middle of nowhere in the dead of night, with no electricity, and they can’t use their torches if they want to stay hidden. So I had to motivate visibility via simulated moonlight until the armada arrives and the headlights and fire take over. The difficulty was in committing to the authenticity of our world whilst doing large-scale, flexible moonlight, which is inherently fake.

HDR is becoming a standard now that HDR monitoring solutions are available, have you ever had the opportunity to shoot in HDR? Has it become the norm, and does it change the work of a DoP? What do you think of this technique?

Yes, I’m actually a big fan of the opportunities which HDR gives us as film-makers. I also believe it’s the new reality of how most people will view our work. Any TV bought over the past 5 years is going to have HDR, and with the way films and, of course, high-end TV dramas are released now, you need to shoot for how the audience will see the work. On the Netflix movie “Night Teeth”, Netflix supported my request to have 4K HDR monitoring on set at DIT, and this was a revelation for me. We shot and lit for HDR, then graded the HDR first with Jill Bogdanovic at Co3. For me, the trick is to think of the HDR range as an expanded toolbox. Not every scene and shot has to use the full range. On “Night Teeth” we usually capped our highlights at around 70% brightness, but when bright light was used as a storytelling tool we had the ability to open that up to 100% and literally make our audience squint.

Another of the great revolutions that this industry is experiencing is Virtual Production. Have you had the opportunity to shoot with this technology? What do you think about Virtual Production? Is it going to change the way we all produce content?

I was fortunate to be asked to DP a short film for a company to demonstrate their Virtual Production screens and was thrilled to get an early look at the technology. It’s incredibly impressive and offers up some fascinating opportunities for interactive lighting and in-camera VFX capture. There are still a lot of quirks to work out and it doesn’t work for every type of shoot or scene, but for certain productions and scenes it’s a wonderfully exciting new tool.

What will be the next big revolution regarding the work of the Director of Photography?

«I’m actually a big fan of the opportunities which HDR gives us as film-makers. I also believe it’s the new reality of how most people will view our work.»

I think HDR will continue to be normalised, particularly as cinemas start to fit giant OLED screens instead of projectors. And if Apple release a mainstream virtual reality headset as rumoured, it will be interesting to see how filmmakers come up with ways to provide content for that user experience. I’m a tech-head and I love to keep up to date on the opportunities out there; I was very impressed with the PSVR2, so if Apple get their version right I can really see it taking off in the mainstream.

In which upcoming projects will we be able to see Eben Bolter?

My next release is Marvel’s “Secret Invasion” which I had the pleasure of DPing the additional photography for. I had a great time working with Marvel and I’m looking forward to fans watching this one.

A date marked in red on the calendar of every broadcast professional: the NAB. We missed two editions for obvious reasons and the previous one was somewhat subdued, but this year, 2023, it made a strong comeback, with good reason to remain the reference event worldwide.

Beyond all the new products and services that the large suppliers showed us on the show floor during the three and a half days of the event, the full programme of conferences, round tables and informative talks rounded out an offering that was really hard to pass up. Let’s explain a little more about what NAB 2023 has meant and which trends we find ourselves immersed in.

By Yeray Alfageme, Business Development Manager at Optiva Media, an EPAM company

In this world, partly inherited from the not-so-distant times in which remote won out over face-to-face, it is understandable that doubts arise about whether a face-to-face event such as a trade fair is still worthwhile. Of course, to learn about new hardware, equipment or materials for our productions, seeing, hearing and touching are more than necessary. In the end we are individuals, and we like to touch things: when it comes to a new camera, a new screen or a new teleprompter, touching it with our hands has a definite added value.

Our industry has long been immersed, if it has not already completed the journey, in a shift from systems and equipment to software and services, and it is in this second area where doubts arise about whether it is essential to visit a fair to learn what is new. Manufacturers are increasingly not waiting for big events to break their news, and when we talk about services, it is very easy and convenient to show them in an online event that reaches a larger audience at a lower cost.

I remember the years when both customers and suppliers waited for the fairs to hold project meetings or sign new agreements, to avoid unnecessary travel costs. All the meetings were concentrated into a single week, and with one trip to Las Vegas we all met everyone. Thanks to our friends Teams, Zoom and Meet, and the cultural change they brought about, this is no longer the case. Why wait, if we can act more immediately and simply launch a press release? That’s what many of us think...

However, there is one last aspect that makes holding NAB and similar events very valuable, in addition to NAB being the world reference event: training and dissemination. And the organizers have realized this. The range of talks, informative events, round tables and initiatives to promote innovation and entrepreneurship of a few years ago simply cannot be compared with what is offered today. The fair is a meeting point, a place to learn and discover new things, not only from suppliers and manufacturers but also from industry associations, and the training and dissemination agenda has gained a lot of weight at trade fairs. Undoubtedly, this is one of the reasons why NAB is a must-go event.

«A failure in a broadcast, production or event has a very large impact, even at corporate level. That is why we look at new technologies with avid interest as well as with suspicion.»

As in many other industries, and thanks to the quantum leap in computing power that has allowed AI to burst onto the scene, literally, the impact this technology has had on broadcasting is no minor one. Even more so if we consider how close we are to the IT industry, and we are going to get even closer to it: the native territory where AI emerged and where it reigns supreme.

It is rare nowadays for programmers not to use AI on a daily basis, and this adoption has taken not years but perhaps just months; if you don’t keep up with this pace, you are left behind. Something similar, albeit at a different pace, is happening in broadcast. Even taking all this into account, ours is a very traditional industry. We are reluctant to change things that work well, even when we know obvious improvements are available, because the risk we take is really huge. A failure in a broadcast, production or event has a very large impact, even at corporate level. That is why we look at new technologies with avid interest as well as with suspicion.

But the impact that AI has had and will have cannot be denied, at least if we want to remain at the forefront. One example is the application of this technology to archiving systems. Many manufacturers offer, with great success at times, systems that allow you to categorize and tag large amounts of content in a fast, easy and very accurate way, even more accurately than if done manually. Beware, this is not about replacing people with machines, but about letting machines (AI) do what they do better than we do, so that we can add value where we really can. No machine will ever be able to create the feelings, emotions or stories that allow us to convey what we feel. But we will all agree that tagging 100 years of a television archive is not a task in which a documentary filmmaker feels very accomplished, although there is a place for everything and for everyone.

Another area in which we have seen great, real applications of AI is the fast editing of content. Let me dwell on the word ‘real’.

We sometimes label as AI the application of certain algorithms to certain ‘complex’ tasks, or we apply AI to tasks or workflows where, honestly, it is not necessary. That is why I have chosen use cases such as archiving or fast editing (news, for example) to illustrate this concept.

In times when speed prevails over quality (always above an acceptable minimum threshold), automating processes with complex algorithms that go beyond simple editing, understanding the content and knowing how to build a story from it, always under our guidelines, has great added value, which in turn allows us to create more and better. Naturally, the ideal environment is news editing: fast but high-quality editing is essential to get there before anyone else and report everything that is going on.

Another technology that is not new at all, but that really broke through at this NAB with a strong presence, is cloud computing or, more appropriately, cloud production. And not only for remote production or lower costs, which until now have in many cases been the two main reasons for adopting it, but because producing in the cloud, with the amount of resources and possibilities it gives us, changes and improves the way we produce.

That said, there are systems working 24/7 for which remote access is not essential, even if it is occasionally needed, and therefore deploying them in the cloud brings little, if any, added value and only increases costs. At the end of the day, in the cloud we pay for redundancy, security, scalability and maintenance, which for a permanent system it is more convenient and economical to handle ourselves.
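The trade-off between a permanent 24/7 system and occasional productions comes down to a break-even calculation: pay-per-use cloud costs grow with hours of use, while owning costs roughly the same whether the system is busy or idle. The Python sketch below illustrates the idea only; the annual on-premises figure and the hourly cloud rate are made-up placeholders, not prices from any supplier, and a real comparison would also weigh staffing, connectivity and redundancy.

```python
HOURS_PER_YEAR = 24 * 365  # a system that never stops runs 8760 hours a year


def break_even_hours(annual_on_prem_cost: float, cloud_rate_per_hour: float) -> float:
    """Hours of use per year at which pay-per-use cloud costs the same as owning."""
    return annual_on_prem_cost / cloud_rate_per_hour


def cheaper_option(hours_used: float, annual_on_prem_cost: float,
                   cloud_rate_per_hour: float) -> str:
    """Return which model is cheaper for a given yearly usage (simplified model)."""
    cloud_cost = hours_used * cloud_rate_per_hour
    return "cloud" if cloud_cost < annual_on_prem_cost else "on-prem"


# Hypothetical figures for illustration only, not real prices.
ON_PREM_PER_YEAR = 120_000.0  # amortised hardware plus maintenance
CLOUD_PER_HOUR = 40.0         # pay-per-use subscription rate

print("break-even:", break_even_hours(ON_PREM_PER_YEAR, CLOUD_PER_HOUR), "hours/year")
print("24/7 continuity:", cheaper_option(HOURS_PER_YEAR, ON_PREM_PER_YEAR, CLOUD_PER_HOUR))
print("occasional event:", cheaper_option(200, ON_PREM_PER_YEAR, CLOUD_PER_HOUR))
```

With these placeholder numbers, anything used for more than about 3,000 hours a year is cheaper to own, so a 24/7 system lands on the on-premises side, while a one-off production of a few hundred hours lands on the cloud side.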

It is for one-off events or productions, or situations where our production needs exceed our own capacity, that the cloud makes all the sense in the world. Many, if not all, manufacturers of production systems, almost regardless of their field of specialization, offer cloud production environments in exchange for a pay-per-use subscription. And here, I think, is the rub.

Our colleagues in finance are going to love having guaranteed recurring revenue rather than a multi-million-dollar sale that has to be spread over time, given the long shelf life of what customers acquire. It is much easier to grow steadily than in spurts. But manufacturers should not push their customers to adopt systems that suit the manufacturer for reasons of its own. There are uses and occasions when cloud production is ideal and subscriptions are best for everyone, but the fact that we have made a huge investment in technical development to build a scalable cloud platform does not mean that such a system is right for everything.

«There are many manufacturers that offer, with great success at times, systems that allow you to categorize and tag large amounts of content in a fast, easy and very accurate way, even more accurately than if done manually.»

A concept that is more novel, not only for broadcast but for technology in general, is Edge computing. What does this mean? Unlike the traditional cloud, with large data centers in which customers share resources, making them more efficient and capable, the Edge offers that same scalability and capacity close to the customer.

Let’s picture ourselves in a football stadium in Malaga, several hundred kilometers from a data center that one of the best-known suppliers operates in Saragossa. The latency our video streams will experience if we want to produce this football match remotely in that data center can reach up to 50 ms, depending on the transmission conditions of an unmanaged network such as the Internet. In these cases it becomes necessary to have the processing performed close to where the information is generated, i.e. close to the stadium.
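To put the 50 ms figure in perspective, it helps to separate the delay caused purely by distance from the delay added by the network path. The Python sketch below computes only the round-trip propagation time of light in optical fibre, assuming a typical refractive index of about 1.47; the 700 km and 10 km route lengths are hypothetical stand-ins for "a distant data center" and "a nearby telecom tower", not measured routes. Under these assumptions, distance alone accounts for only a few milliseconds, which suggests that much of the quoted figure would come from routing and queuing over an unmanaged network, something a nearby edge site avoids along with the distance.

```python
# Speed of light in vacuum, expressed in kilometres per millisecond.
C_KM_PER_MS = 299_792.458 / 1000


def fibre_rtt_ms(route_km: float, refractive_index: float = 1.47) -> float:
    """Round-trip propagation delay over optical fibre, in milliseconds.

    Only the time light spends travelling through the glass is counted;
    routing, queuing, encoding and processing delays are ignored.
    """
    speed_in_fibre = C_KM_PER_MS / refractive_index  # roughly 204 km per ms
    return 2 * route_km / speed_in_fibre


QUOTED_LATENCY_MS = 50.0  # upper figure quoted in the article

# Hypothetical route lengths, for illustration only.
ROUTES = {
    "distant data center": 700.0,
    "nearby edge node": 10.0,
}

for label, km in ROUTES.items():
    rtt = fibre_rtt_ms(km)
    share = 100 * rtt / QUOTED_LATENCY_MS
    print(f"{label}: {rtt:.2f} ms round trip in fibre ({share:.0f}% of 50 ms)")
```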

And where at the Edge can we place these ‘mini’ data centers for processing? Well, in the communication towers of telecom operators. Does that bring any other concept to mind? Right: 5G. In remote production environments where our video sources contribute to our production through a 5G network, with the lower latency and higher bandwidth that this implies, it makes little sense to then go over the Internet to a data center hundreds of kilometers away for processing, right? That’s where Edge makes sense.

If we combine very-low-latency contribution networks for our signals, such as 5G, with large processing capacity and very short execution times, such as edge computing, and with advanced AI systems including machine learning, we get an environment of innovation, higher quality and new possibilities that we are only just beginning to explore. And all of this was on show at NAB.

However, I must say that this type of environment is not for everyone. Just as the transition from SDI to IP is being made step by step, manufacturers should not push their customers to adopt novel systems for the manufacturer’s convenience rather than out of necessity. Give us time.

There are many opportunities, capabilities and possibilities in new production environments, but let’s not rush. A regional or national channel that broadcasts on DTT and on its own OTT platform does not need to move its continuity to the cloud tomorrow, with an AI deciding which content and which advertising to broadcast. Time will tell.

And here I have to stand up for some manufacturers that offer an easy, gradual model for expansion, migration or even experimentation in these new environments. That is where we will all meet. But let’s not force anyone to adopt new systems and move to SaaS subscription models by ceasing to support, evolve and update more traditional systems, because it is by doing things that we figure out where we want to go.

NAB Show 2023 presented a wide variety of novelties and advances in the world of technology and audiovisual production. One of the most prominent trends at the event was the consolidation of artificial intelligence and machine learning as key tools in the industry. In this regard, various solutions were showcased for data processing and analysis, process automation and improved production efficiency.

In addition, NAB Show 2023 showed the continuous growth of 5G technology, which is becoming a crucial element in the real-time transmission and distribution of content. New solutions and devices were introduced to improve connectivity and network capacity, enabling the creation and distribution of higher-quality real-time content.

Another trend worth highlighting was the development of virtual and augmented reality, which are gaining ground in content production and in the creation of immersive experiences. In this regard, devices and tools were presented that allow greater interaction with and customization of virtual and augmented experiences, which in turn promises greater user immersion and participation.

In short, NAB Show 2023 was a very enriching event for the AV and technology industry, showcasing a wide range of novelties and advances. Artificial intelligence, 5G, and virtual and augmented reality emerged strengthened as the main trends, promising improved quality and efficiency in the production and distribution of content, as well as greater interaction and customization of user experiences.