By the time summer 2024 ends, sports broadcasting worldwide will have seen one of its most innovative and technologically exciting seasons in recent years. In this issue of TM Broadcast International we take a full tour of the latest advances that will define remote production and live sports broadcasting in the coming years.

The coming months will provide an exciting selection of top-tier sports events, including UEFA Euro 2024 in Germany, the Tour de France and the culmination of all sporting competitions, the Paris 2024 Olympic Games. These tentpole events will prove ideal staging grounds for new broadcast technologies and remote production innovations.

Against this backdrop, our focus on remote production in this issue couldn’t come at a better time. We are delighted to provide our readers with unique insights from pioneers in the space such as Riedel, EMG and Gravity Media, who are defining new ways of working for distributed workflows. There is also a thought-provoking conversation with Tom Peel, who runs remote production for SailGP. He provides insight into what it’s like when images are everything in high-octane sailing action.

Editor in chief

Javier de Martín editor@tmbroadcast.com

Key account manager

Patricia Pérez ppt@tmbroadcast.com

Editorial staff press@tmbroadcast.com

But there’s more: in a contribution from Grass Valley, ‘Embracing the Future of Remote Production in Motorsports’, we look at how the motorsports sector is leading the charge when it comes to remote production. The article explores the unique intricacies of a high-speed, high-intensity industry and how modern methods are rising to meet these challenges.

In sports broadcasting, both ultra-high-definition (UHD) and High Dynamic Range (HDR) are pushing the quality bar even higher. The events this summer will feature the newest innovations in these technologies, giving viewers a more immersive window to the action with enhanced clarity and color depth.

At the end of this issue, as part of the ongoing series from our lab, we go hands-on with one of the latest PTZ cameras developed by Canon: the 4K UHD 60P, 10-bit 4:2:2 CR-N700.

To sum up, these transformative technologies arrive just in time for this summer’s sporting spectacles. They will drive a new golden age of live sports coverage, one in which broadcasters and production teams step up to the challenge; you can bet they will do some phenomenal things over this period and draw more viewers from around the world.

Creative Direction

Mercedes González mercedes.gonzalez@tmbroadcast.com

Administration

Laura de Diego administration@tmbroadcast.com

Published in Spain ISSN: 2659-5966

TM Broadcast International #131 July 2024

TM Broadcast International is a magazine published by Daró Media Group SL Centro Empresarial Tartessos Calle Pollensa 2, oficina 14 28290 Las Rozas (Madrid), Spain Phone +34 91 640 46 43

STORIES OF SUCCESS

BUSINESS & PEOPLE

REMOTE PRODUCTION

Remote production in sports is conquering the audiovisual and broadcasting industry. For this reason, we wanted to create unique content in TM Broadcast International magazine, covering the best and most innovative initiatives in the industry. Here is the result for our readers.

• From Sea to Screen: LiveLine transforms SailGP with real-time data and graphics, revolutionizing its broadcasting and content delivery systems.

Interview: TM Broadcast International magazine and Tom Peel, Director of LiveLine at SailGP

• SNFCC invests in Riedel to support REMI workflows at its View Master Events

• Embracing the Future of Remote Production in Motorsports

By Jens Envall, DMC

• EMG / Gravity Media Revolutionises Netball Coverage with REMI Workflows

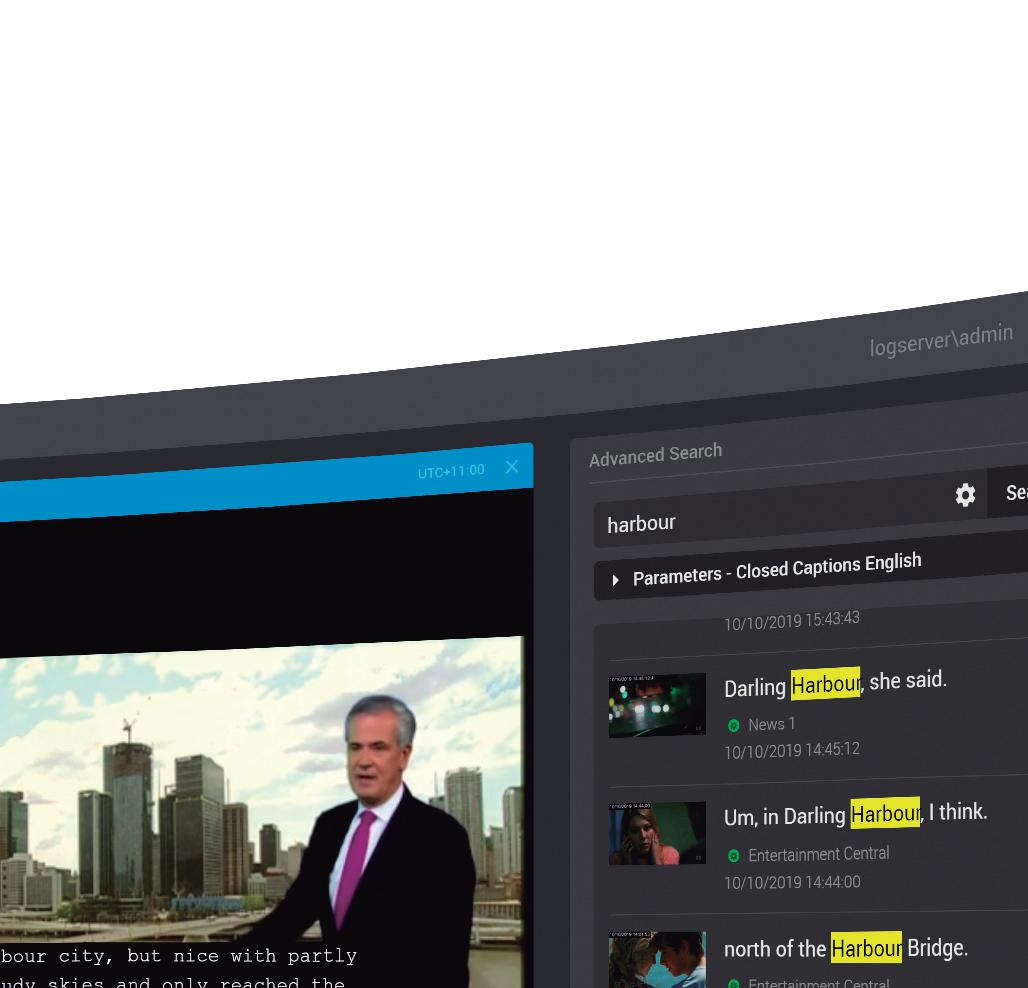

Viewers are used to the best possible picture and sound quality on both traditional TV channels and streaming platforms. This is putting ever-greater demands on the technology and facilities used to monitor and analyze broadcast and OTT services.

But as Erik Otto, Chief Executive of Mediaproxy, explains, the technology continues to evolve.

TEST ZONE | CANON CR-N700

A high-performance PTZ camera: 4K UHD 60P - 10-bit 4:2:2 CR-N700

Laboratory test conducted by Carlos Medina Expert and Advisor in Audiovisual Technology

The success of PTZ cameras has been one of the most relevant highlights in the audiovisual sector and in the field of live cameras in the past four years. Many professionals had real reluctance to use this type of camera because for them it was more identifiable with webcams, video calls or simply for security issues.

Ikegami is set to unveil its latest addition to the broadcast-quality production equipment lineup, the HDK-X500 HD portable camera system, at IBC 2024 in Amsterdam RAI convention center. This versatile camera system will be showcased at stand 12.A31 from Friday, September 13 to Monday, September 16.

Gisbert Hochguertel, Ikegami Europe Product Specialist, highlights the versatility of the HDK-X500: “This camera is designed to excel in a wide range of applications. It can easily transition from studio setups on pedestals, to sports coverage on tripods, to location shoots on shoulders. At its core are three state-of-the-art 2/3-inch CMOS sensors with global shutter architecture, ensuring natural image capture even in challenging environments. It eliminates geometric distortion during still-frame replay of fast-moving objects and avoids flash bands when capturing strobe-lit stages or flash photography.”

“The HDK-X500 incorporates our advanced AXII digital processor, delivering superior picture quality, reliability, lower power consumption, and enhanced functionality. It features automatic optical vignetting correction across OVC-compatible B4 bayonet mount zoom lenses and remote back focus adjustment.”

“To manage ambient lighting effectively, the camera is equipped with a dual neutral-density and colour-compensation filter system, similar to our high-end models. The colour-compensation filter wheel includes space for custom effect filters or an additional optical low-pass filter, reducing aliasing in challenging conditions, particularly useful for shooting LED walls.”

“The HDK-X500 also includes a 16-axis colour corrector for precise scene matching or colour effects. Chroma and hue levels can be adjusted individually within these axes in real time, supporting both standard programme production and colour matching across multiple cameras.”

“Like other models in the Ikegami UNICAM-XE family, the HDK-X500 adheres to ITU-R BT.2020 specifications, offering a wider colour space than conventional HD. Combined with the CMOS sensors’ wide dynamic range, it supports brilliant HDR imaging using the HLG opto-electronic transfer function per ITU-R BT.2100, ensuring detailed reproduction from bright highlights to deep shadows. Optional OETFs such as PQ and S-LOG are also available.”

“Technical specifications include a horizontal resolution of 1,000 TVL (typical centre resolution at 5% modulation), a typical signal-to-noise ratio of 62 dB, and F12 sensitivity (1080/50p). The HDK-X500 supports high-frame-rate capture at 2x speed (1080i at 100 Hz) or low-frame-rate capture (1080p/23.98, 25, 29.97), available via software licenses.”

“Accessories for the HDK-X500 include a range of monocular and studio viewfinders, remote camera controllers (OCP-100/OCP-300/OCP-500), a 4K-UHD upscaler, and an ST2110 media-over-IP interface for the BSX-100 base station.”

EVS has officially launched VIA MAP® Version 1.0, an advanced Media Asset Platform tailored for the evolving needs of modern media organizations, as the company indicated. Following its debut at IBC2023, VIA MAP is now available to EVS customers, designed to provide a unified environment that supports the entire content lifecycle—from live acquisition and story creation to production, post-production, distribution, and monetization.

Mike Shore, EVS Senior Vice President of Asset Management Solutions, emphasized the platform’s unique positioning:

“As the initial point of contact for content, we have developed an end-to-end solution with VIA MAP. This platform enables our customers to seamlessly integrate our solutions, optimizing workforce efficiency to meet the time-sensitive demands of today’s media landscape.”

Accessible through an intuitive HTML5 web interface, VIA MAP offers Core and Creative applications finely tuned for specific functions such as baseband ingest, file import, advanced editing, and content metadata management. This integration enhances the connectivity of EVS solutions, facilitating increased operational efficiencies over time.

VIA MAP integrates with EVS’s MediaHub® for seamless content exchange and distribution, bridging live production with distribution and monetization strategies. This integration empowers content owners to maximize value by providing self-service access to an on-demand content portal, leveraging metadata for real-time search and delivery capabilities across distributed and diverse logging operations.

In collaboration with LiveCeption®, VIA MAP facilitates real-time collaboration between live production and post-production teams, enabling swift and efficient content utilization. Operators and editors can concurrently contribute to productions, browse and edit content in real-time, and externalize segments as needed. This continuous enrichment accelerates content preparation and delivery, fostering collaboration among production teams regardless of location.

Harnessing EVS’s flexible MediaInfra solutions, VIA MAP leverages IP-based infrastructures for live video operations, enhancing routing, monitoring, orchestration, and media processing. Integration with Cerebrum enables router control and automation capabilities, reducing delays and enhancing operational efficiency.

Mike Shore concluded, “The launch of VIA MAP® underscores EVS’s commitment to a unified live ecosystem where our solutions converge seamlessly, integrate with best-of-breed third-party technologies, and maximize content value.”

EVS will showcase VIA MAP at IBC2024, booth 5.G08 in hall 5, from September 13th to September 16th.

Meptik has introduced Meptik Studio Pro, which the company describes as a turnkey solution designed to accelerate the installation of virtual production studios. This all-encompassing package includes a pre-configured Disguise studio control stack, ROE LEDs, camera tracking technology, comprehensive creative and technical support, as well as training. Meptik Studio Pro aims to simplify the setup process for creative teams across various industries, enabling them to elevate their production capabilities effectively and efficiently.

The Meptik Studio Pro is available in four configurations tailored to different production needs:

• Small: Ideal for presentations, keynotes, or single-talent setups.

• Medium: Suited for commercial shoots, advertisements, online courses, and teaching.

• Large: Designed for high-budget film and episodic projects with multiple actors and elaborate sets.

• XR: Geared towards augmented reality projects that blend virtual and physical spaces.

At its core, Meptik Studio Pro features a pre-configured rack equipped with Disguise’s technology, including VX and RX servers, and Disguise Designer software for visual control. This setup supports a range of cinematic content formats, from 2D and 2.5D to real-time 3D environments like Unreal Engine or Volinga for NeRFs. Additionally, customers can select from various ROE LED display sizes tailored to their specific application needs.

Telos Alliance® has recently announced the immediate availability of its Omnia® Forza FM virtual audio processing software.

Debuted at the 2024 NAB Show, Forza FM represents the latest addition to the Omnia software lineup. Building upon the success of Forza HDS for HD, DAB, and streaming audio, Forza FM features optimized wideband and multiband processing tailored specifically for FM radio. It incorporates the renowned “Silvio” clipper designed by Frank Foti, the same clipper used in Omnia.11, feeding a stereo generator with dual μMPX outputs. Additionally, Forza FM includes processing capabilities for HD-1 with an integrated diversity delay.

Forza FM is delivered as a container suitable for deployment on an on-premises COTS server or cloud-hosted platforms like AWS. Alternatively, broadcasters can license up to two instances of Forza FM processing with μMPX on the Telos Alliance AP-3000 hardware platform.

The software maintains the user-friendly, single-page HTML5 UI introduced with Forza HDS, enhancing usability with “smart controls” that simplify adjustments across multiple parameters. This approach ensures that novice users can achieve exceptional on-air sound quality, while seasoned professionals retain the granular control necessary for fine-tuning audio processing.

Frank Foti, Omnia Founder and Chairman of the Board at Telos Alliance, expressed enthusiasm about the reception of Forza FM: “Since unveiling Omnia Forza FM in 2023 and formally introducing it just prior to the 2024 NAB Show, the excitement and positive feedback from the industry have been overwhelming. We are delighted to announce the availability of Forza FM for immediate shipment.”

Omnia Forza FM is now accessible through Telos Alliance’s global network of Omnia channel partners, ensuring broadcasters worldwide can enhance their FM radio operations with advanced audio processing capabilities.

Avid has unveiled Avid Huddle, a new Software-as-a-Service (SaaS) solution designed to enhance collaboration for post-production teams by integrating real-time editing sessions with Microsoft Teams, irrespective of location

Avid® has recently announced the launch of Avid Huddle™, a cutting-edge SaaS platform aimed at empowering post-production teams to streamline content review and approval processes. In an era marked by hybrid work environments, editors using Media Composer | Ultimate™, Media Composer | Enterprise™, or Avid | Edit On Demand™ can seamlessly share high-fidelity media within live Microsoft Teams meetings. Remote reviewers are now able to collectively view, discuss, and annotate content in real-time, fostering collaboration as if they were physically together.

Tanya O’Connor, Vice President of Market Solutions at Avid, highlighted the significance of overcoming geographical barriers in collaborative post-production workflows: “Collaborating on video content during post-production poses challenges when creative teams are geographically dispersed. Avid Huddle breaks down these barriers, enabling seamless distributed work. Now, remote, over-the-shoulder content review with any production stakeholder can be achieved effortlessly using one of the world’s most widely used collaboration apps.”

Editors can initiate Teams meetings directly from Media Composer using Avid Huddle, ensuring the secure sharing of high-quality video content. This integration guarantees that all content remains safeguarded throughout the review process. Avid Huddle enhances efficiency by enabling producers, clients, and other stakeholders to provide immediate feedback through time-stamped comments and annotations during live sessions.

Simon Crownshaw, Worldwide Media & Entertainment Strategy Director at Microsoft, emphasized the transformative impact of Avid Huddle in content review and approval processes: “With the robust and widely adopted Microsoft Teams platform, Avid Huddle represents a significant paradigm shift in how TV shows, movies, commercials, and other content can expedite the review and approval process with enhanced efficiency. Most importantly, we are enabling an unparalleled video and audio streaming experience akin to observing an editor at work within Media Composer.”

Avid Huddle simplifies the traditionally cumbersome task of distributing content to multiple stakeholders and consolidating their feedback separately. By simulating an in-person content review experience, editing teams can significantly accelerate project timelines, thereby reducing costs and enhancing productivity. Avid Huddle will be available through flexible monthly or annual subscriptions, allowing users to scale their usage as needed—paying only for what is required, when it is required. Notably, only the host requires an Avid Huddle subscription, while participants can seamlessly join sessions via Microsoft Teams.

TikTok is gearing up to elevate the Esports World Cup (EWC) experience with the introduction of a dedicated EWC Hub within its app

The leading platform for short-form mobile video, TikTok, has teamed up with the Esports World Cup Foundation (“EWCF”) to announce a new partnership ahead of the inaugural Esports World Cup in Riyadh, Saudi Arabia this summer. This collaboration aims to leverage TikTok’s innovative platform and expansive global audience to revolutionize engagement and diversify content offerings for esports enthusiasts worldwide.

The EWC Hub on TikTok will serve as a central hub showcasing content from the EWC, official broadcasters, teams, and players, ensuring comprehensive and immersive coverage throughout the tournament. According to the company’s statements, exclusive content tailored for TikTok will be produced by EWC, fostering collaborations with popular TikTok creators to further enrich the fan experience.

In addition to the hub, TikTok will introduce interactive activities designed to enhance community engagement during the EWC. A weekly live show produced by TikTok will provide in-depth coverage and behind-the-scenes insights, keeping fans informed and entertained throughout the event. TikTok LIVE will feature diverse creators broadcasting live from the event, offering unique perspectives and experiences. Custom icons and features exclusive to TikTok LIVE for the EWC will further enrich the viewer experience with dynamic content.

Mohammed Alnimer, Sales Director of the Esports World Cup Foundation, expressed enthusiasm about the partnership, stating, “We are thrilled to collaborate with TikTok to redefine esports entertainment for a global audience. TikTok offers a unique platform to authentically engage with esports communities worldwide, allowing more fans to connect with and support EWC athletes and teams. Content creation is integral to our strategy of expanding the global esports ecosystem, and TikTok is an ideal partner to help us achieve these goals.”

Mohamed Harb, Head of Partnerships at TikTok MENA, highlighted TikTok’s commitment to enhancing the fan experience and promoting cross-cultural connections within the esports community. “Our innovative capabilities enable content creators to bring fresh and exciting perspectives to esports, enhancing real-time interactions and offering exclusive content. By continuously evolving to meet the needs of the esports community, we are shaping the future of gaming content and fan engagement.”

As both companies indicated, the synergy between TikTok’s robust platform and EWC’s premier events is poised to captivate a diverse audience.

RTVC, Colombia’s national broadcaster, has recently upgraded its studios in Barranquilla, located in the Atlántico region, with advanced DHD SX2 audio production consoles. This initiative is part of RTVC’s broader strategy to decentralize operations and enhance accessibility across Colombia via a secure digital audio network.

The project, overseen by Bogota-based broadcast systems specialist ASPA Andina, involved integrating DHD SX2 consoles across three key studios. These consoles utilize Dante audio-over-IP technology, enabling seamless sharing of digital media and production resources between RTVC’s staff and Radiónica, its state-owned music radio network.

The main studio, dedicated to Radio Nacional de Colombia, is equipped with an SX2 mixer featuring 16 motorized faders. A second studio serves as a multipurpose facility for Radiónica and shared production needs, outfitted with an SX2 mixer boasting 10 motorized faders. Additionally, a third studio designed for routine recording and production tasks includes a four-fader SX2 mixer. The installation also incorporates DHD’s Assist App, facilitating remote technical support, and independent level control jacks for hearing-impaired operators.

In support of these consoles, two DHD XC3 cores were deployed—one for the main studio and another shared between the second and third studios. These cores provide essential processing power, enhancing operational efficiency and flexibility across the studios.

Juan Pablo León, Technical Director, Commercial at ASPA Andina, highlighted the significance of this collaboration:

“This new system aligns with RTVC’s decentralization policy, enabling enhanced accessibility and operational efficiency across their Atlántico region facility.”

Marc Herrmann, Managing Director of DHD audio, noted the project’s focus on inclusivity: “RTVC’s commitment to hearing-impaired inclusivity is a particularly pleasing aspect of this project.”

The DHD SX2 mixers offer expandability with additional fader modules, ensuring flexibility to meet evolving production needs. Each console incorporates a 10.1-inch multi-touch display for real-time monitoring of connectivity and signal levels, alongside motorized faders for precise control. The XC3 IP core, designed specifically for broadcast environments, supports a wide range of audio processing capabilities, making it ideal for RTVC’s demanding production requirements.

Ateme has been chosen by Drei TV to power its advanced OTT streaming service, just in time for a significant European Football Tournament.

The partnership sees the company specialized in broadcast technology providing its state-of-the-art video compression and management technologies to support Drei TV’s delivery of over 60 channels through their OTT platform. This collaboration aims to elevate service quality and enrich the user experience for Drei TV customers across Austria.

Ateme’s solutions enable Drei TV to enhance its video processing capabilities, streamline operations, and offer premium video quality. This strategic deployment underscores the Austrian operator’s commitment to innovation and customer satisfaction in the competitive TV service landscape.

Günter Lischka, Chief Commercial Officer at Drei TV, expressed enthusiasm about the partnership: “We are thrilled to collaborate with Ateme, whose expertise and innovative solutions perfectly align with our needs. Their swift response and professionalism have surpassed our expectations. At Drei TV, our goal is to deliver the utmost reliability and quality to our customers, and we look forward to enhancing their viewing experience.”

Johannes Schlieszus, Sales Director for Germany & Austria at Ateme, highlighted the importance of leveraging cutting-edge technology in the dynamic TV service industry: “In a rapidly evolving market, TV operators must stay ahead with the latest technology. Ateme is proud to support Drei TV in delivering high-quality and reliable service, particularly during major sporting events, where user experience is paramount.”

According to both companies, this collaboration between Ateme and Drei TV marks a significant milestone in enhancing OTT streaming capabilities, ensuring viewers enjoy superior video quality and a seamless viewing experience.

Antenna Hungária Zrt, a leading telecommunications company in Hungary, has unveiled its revamped OB11 OB van, equipped with advanced IP-based solutions from Lawo. This upgrade includes audio and video broadcast equipment selected from Lawo, a global company specialized in innovative live media production workflows. Lawo’s renowned broadcast control system, Virtual Studio Manager (VSM), has been implemented to oversee overall control. The integration was managed by Lawo partner RexFilm Broadcast and Communication Systems, ensuring seamless implementation and optimal operation.

For its new OB 11 and other modern large-production trucks, Antenna Hungária has integrated Lawo’s VSM as the central control system for the entire broadcast workflow. VSM’s vendor-agnostic design allows seamless integration with a wide range of broadcast equipment, facilitating customized workflows and intuitive control over thousands of I/O and processing resources. This includes IP edge devices, network infrastructures, traditional video routers, SDI video switchers, audio routers, audio consoles, multiviewers, intercoms, and modular equipment—all accessible through a highly automated and intuitive user interface.

In addition to touchscreen operation, Antenna Hungária has installed several hardware operating panels to enhance operational flexibility.

Vilmos Váradi, Head of Broadcast Production at Antenna Hungária, emphasized, “This upgrade underscores our commitment to delivering cutting-edge broadcast experiences. Lawo’s state-of-the-art solutions play a crucial role in achieving our goals.”

Central to the audio infrastructure upgrade is Lawo’s 48-channel mc²56 MkIII audio production console, equipped with two A__UHD Core audio engines in a redundant design, each offering 1,024 mc²-quality DSP channels. Optimized for modern IP-based production environments, the mc²56 supports SMPTE 2110, AES67/Ravenna, Dante (via a Power Core gateway), MADI, and Ember+. Local I/Os include 16 MIC/line inputs, 16 line outputs, eight AES3 inputs and outputs, eight GPI/O, and a local MADI port (SFP).

The audio infrastructure also features A__mic8 and A__madi6 units, alongside three A__stage64 Audio over IP StageBoxes supporting SMPTE ST2110-30/31 and AES67/Ravenna standards. Additionally, an A__digital64 node supports 32 AES inputs with Sample Rate Conversion and 32 AES outputs in a compact 3RU footprint. Redundant MADI ports and Dual Media streaming ports with WAN-compatible ST 2022-7 Class C seamless protection switching enhance audio I/O connectivity. With ST2110-10 compliant PTP clocking support, additional wordclock I/O, GPIO, and a dedicated management port, the A__digital64 seamlessly integrates into hybrid and IP-centric broadcast installations.

With Lawo consoles and stageboxes across all twelve OB trucks in its fleet, Antenna Hungária ensures compatibility and operational consistency across different productions, providing flexibility and system safety for its operators, as the company stated.

StreamGuys has enabled Dick Broadcasting, which operates 19 radio stations spanning three southeastern US states, to increase its monthly advertising revenue fivefold since adopting StreamGuys’ ad insertion services. The partnership has leveraged StreamGuys’ programmatic ad capabilities to automatically fill unsold ad slots during live streams and podcasts, significantly boosting revenue generation for Dick Broadcasting.

A pivotal aspect of the collaboration has been the integration of midroll placements into Dick Broadcasting’s advertising strategy. This shift required a strategic overhaul in how ads were distributed across their streams.

Tyler Huggins, Director of Advertising at StreamGuys, highlighted the initial challenge: “Nielsen’s requirement for Dick Broadcasting to simulcast their over-the-air streams for FM ratings in their markets limited them to preroll ads. While effective, this approach constrained revenue potential. By segmenting their streams into in-market and out-of-market categories, we were able to introduce midroll placements specifically for the latter.”

StreamGuys employs a “waterfall system” that prioritizes direct sold campaigns followed by programmatic ad placements from their advertiser network. If no suitable ad impressions are available, the company facilitates the placement of unsold promos, managing the entire process from server-side ad insertion to revenue distribution.
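The priority order described above can be sketched in a few lines of Python. This is only an illustration of the general waterfall idea, not StreamGuys’ implementation: the `Ad` class, the `pick_ad` function and the three input lists are hypothetical names introduced here, and real ad servers also weigh targeting, pacing and pricing.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Ad:
    """Hypothetical ad record; 'source' is 'direct', 'programmatic' or 'promo'."""
    source: str
    name: str


def pick_ad(direct: Sequence[Ad],
            programmatic: Sequence[Ad],
            promos: Sequence[Ad]) -> Optional[Ad]:
    """Walk the tiers in priority order and return the first available ad.

    Assumed order (from the description above): direct sold campaigns,
    then programmatic placements, then unsold promos as the fallback.
    """
    for tier in (direct, programmatic, promos):
        if tier:
            return tier[0]
    return None  # nothing to insert; the slot stays empty


# Example: no direct campaign is available, so the programmatic ad is chosen.
chosen = pick_ad([], [Ad("programmatic", "Network spot")], [Ad("promo", "Station promo")])
print(chosen)
```

The point of the ordering is that a lower tier is only consulted when every tier above it has nothing to offer for that slot.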

Huggins elaborated on their role: “We handle all campaign management, advertiser relationships, and invoicing for Dick Broadcasting. Collaborating closely with their app developers and web team, we utilize precise geographic data to determine listener locations. This granular insight enables us to differentiate between in-market and out-of-market listeners, optimizing ad delivery accordingly.”
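As a rough sketch of the in-market versus out-of-market split Huggins describes, the rule could look like the following. All names here (`allowed_placements`, the market strings) are hypothetical, and the assumption that out-of-market listeners still receive preroll in addition to midroll is ours; the article only states that in-market streams are limited to preroll and that midroll was introduced for out-of-market streams.

```python
from typing import List


def allowed_placements(listener_market: str, station_market: str) -> List[str]:
    """Return the ad placement types permitted for one listener.

    In-market listeners hear the simulcast used for FM ratings, so only
    preroll is allowed. Out-of-market listeners may also receive midroll
    (assumption: preroll stays enabled for them as well).
    """
    in_market = listener_market.strip().lower() == station_market.strip().lower()
    if in_market:
        return ["preroll"]
    return ["preroll", "midroll"]


# Example: a listener located outside the station's home market.
print(allowed_placements("Atlanta", "Savannah"))
```

In practice the listener’s market would come from the geographic data gathered through the apps and websites, rather than from a plain string comparison.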

Additionally, Dick Broadcasting utilizes StreamGuys’ SGrecast service to repurpose live radio shows into podcasts and side streams, further monetizing these assets through automated ad insertions. StreamGuys also manages hosting and delivery of all streaming content via their enterprise CDN, ensuring seamless distribution across websites, apps, and smart speakers.

Taylor Dick, Vice President of Finance and Strategic Analysis at Dick Broadcasting Company, emphasized the impact of the partnership: “We’ve significantly reduced streaming infrastructure costs while enhancing ad relevance for our diverse audience. StreamGuys has enabled us to not only manage and monetize our streaming business more effectively but also achieve substantial revenue growth without added operational burdens.”

Through StreamGuys’ programmatic ad services, Dick Broadcasting has optimized its streaming operations, delivering a superior listening experience while maximizing revenue potential across their digital platforms, as the company stated.

Ooona announced recently that its flagship platform, Ooona Integrated, has been selected to empower Adapt, a startup dedicated to pioneering workflows in media preparation, localization, and distribution. This strategic collaboration aims to harness advanced technologies in enhancing the capabilities of artists and industry experts involved in localization processes.

Adapt aims to empower artists and media professionals by integrating cutting-edge AI tools, delivering enhanced quality and cost-effectiveness to its clientele. The company plans to build on existing, proven solutions to expedite the delivery of value to its customers.

“In the realm of AI-driven media, localization stands at the forefront,” stated Justin Beaudin, Founder and CEO of Adapt. “To succeed, we must utilize top-tier localization tools to offer competitive solutions to our team and clients. Platforms like Ooona Integrated enable us to achieve this efficiently, allowing us to concentrate on refining our personnel and processes.”

Ooona Integrated combines Ooona’s translation management system with a comprehensive suite of tools, all hosted on AWS and rigorously tested. The platform boasts TPN Gold and ISO certifications, leveraging advanced cloud technologies, Single Sign-On (SSO), and robust security measures to ensure scalability, stability, and data protection.

“At Adapt, sustainability is fundamental,” explained Erica Neiges, Senior Technology Consultant and Sustainability Lead. “Ooona’s cloud-based solution not only supports our fluctuating compute needs but also aligns with environmental responsibility, making it a sound business choice.”

“Ooona’s role in our long-term strategy is pivotal,” emphasized Justin Beaudin. “We are forward-thinking, and Ooona’s adherence to MovieLabs’ 2030 Vision principles aligns perfectly with our goals.”

“We are excited to commence this partnership,” added Wayne Garb, Co-founder and CEO of Ooona. “Ooona’s user-centric approach mirrors Adapt’s vision of leveraging software and cloud infrastructure to enhance creative endeavors.”

This collaboration marks a significant step forward in leveraging technology to redefine media localization processes and underscores Ooona and Adapt’s commitment to innovation in the industry.

ARRI Rental has announced the opening of a new facility in Vienna, Austria, aimed at enhancing its service offerings by providing lighting and grip equipment alongside logistics support for productions of all scales. This new 750 square meter facility complements the existing ARRI Rental Vienna office, which continues to specialize in camera equipment packages.

Situated at 7 Haidequerstraße 6, 1110 Wien, the new ARRI Rental facility is strategically located within the hq7 studios complex, set to launch this summer as a premier European production center capable of hosting major international film and TV projects. Its proximity to the airport, central station, and city center ensures convenient access for productions.

The Vienna facility will stock state-of-the-art lighting and grip equipment, featuring the latest ARRI lighting fixtures like the SkyPanel X and Orbiter. Exclusive offerings from ARRI Rental include the versatile BrikLok LED lights, the rugged Hexatron crane vehicle for all-terrain shoots, and the adaptable Hover Dolly with Delta Tracks.

Heading the new facility’s operations, Andreas Buchschachner will oversee both the new facility and the existing office, supported by lighting expert Ingo Gaertner. Buchschachner remarked, “Expanding in Vienna underscores our confidence in the city as a key European production hub. With our longstanding presence and strong local ties, we’re committed to bolstering the growth of the industry here.”

The ARRI Rental Vienna facility for Lighting and Grip, alongside Logistics, is now fully operational and open for business.

Vincent Grivet, representing Eutelsat, has been re-elected as Chairman of the HbbTV Association for another two-year term. The decision was made at the recent meeting of the industry association’s Steering Group (SG) on June 21, 2024. Jon Piesing from TP Vision was also re-elected as Vice-Chairman, while Xavi Redon from Cellnex Telecom continues as Treasurer.

“I am grateful to all HbbTV members for their active participation and support for the Association. It is a great honor to continue serving as Chair during this dynamic period,” stated Vincent Grivet. “Television remains a central medium for entertainment, culture, and information consumption, and the open HbbTV standards provide opportunities for a wide array of rich services in our rapidly evolving digital landscape.”

As the Association indicated, during the upcoming term, HbbTV will focus on updating its core standards to align with market demands and emphasize interoperability to ensure that receiving devices comply with the specifications.

EVS Broadcast Equipment has announced significant changes to its Leadership Team aimed at driving profitable and sustainable growth. These internal promotions are part of EVS’s strategic efforts to bolster its commercial success within the live video production industry.

Quentin Grutman has been appointed Chief Strategic Accounts Officer, transitioning from his previous role as Chief Customer Officer. He will now lead a newly formed team dedicated to strategic customers. Quentin’s leadership since 2020 has been pivotal in revitalizing EVS’s growth trajectory, emphasizing customer satisfaction to foster deeper relationships with key clients.

Nicolas Bourdon, formerly Chief Marketing Officer, has assumed the role of Chief Commercial Officer. Nicolas, who has been instrumental in enhancing EVS’s brand presence and guiding the company’s return to growth, will now oversee global sales and marketing efforts, leveraging his innovative approach to drive further expansion.

Oscar Teran, previously Senior Vice President of SaaS Offering & Digital Channels, now serves as Executive Vice President Markets & Solutions.

Oscar played a crucial role in EVS’s digital transformation and market expansion. In his new capacity, he will manage the global product portfolio, market intelligence, business alliances, and global learning initiatives, ensuring EVS remains at the forefront of industry innovation.

“These strategic appointments underscore our commitment to nurturing internal talent and leveraging their expertise to achieve our growth objectives,” stated Serge Van Herck, CEO of EVS Broadcast Equipment. “Quentin, Nicolas, and Oscar bring extensive industry knowledge and leadership experience, which will be invaluable as we continue to innovate and expand our global footprint.”

The strengthened Leadership Team is poised to enhance EVS’s capability to deliver cutting-edge solutions and exceptional service to its global customer base. By prioritizing strategic customer relationships, driving global sales and marketing efforts, and advancing product innovation, EVS is well-positioned to realize its growth ambitions and strengthen its leadership in the live video production industry.

The 2024 EBU News Report delves into the profound impact of artificial intelligence (AI) on journalism and the news industry, raising crucial questions about the present and future of news reporting and the role of human involvement in this evolving landscape.

“Trusted Journalism in the Age of Generative AI” synthesizes insights from in-depth interviews with 40 global media leaders and academic experts. It serves as a guidebook, navigating the opportunities and challenges brought by AI to journalism while stressing the imperative of upholding integrity within an increasingly intricate information ecosystem. The report offers practical case studies, checklists, and firsthand perspectives on the daily complexities of integrating generative AI within news organizations.

The report arrives at a pivotal juncture as the transformation of news media through generative AI is not speculative but actively unfolding, ushering in new dependencies on Big Tech that media leaders must navigate adeptly.

Dr. Alexandra Borchardt, Lead Author of the report, underscores the urgency of the current moment: “We are at a crossroads where decisions regarding AI’s integration into journalism will shape whether we strengthen journalism’s bonds with audiences or undermine the credibility and trust upon which its legitimacy stands. This report aims to guide these critical decisions that will influence how news is produced, delivered, and consumed for years to come.”

The EBU News Report advocates for independent journalism as pivotal in informing citizens and upholding democratic values. It acknowledges that while generative AI promises to reshape news production by enhancing diversity and quality, human oversight remains indispensable for ensuring accuracy and accountability. The integration of AI into journalism, the report contends, holds promise for enriching both the production and consumption of news.

According to the EBU, the key insights highlighted in the report include:

• Accuracy and storytelling: AI must complement but not replace accurate, fact-based storytelling.

• Accountability: AI tools can assist in holding power accountable, yet human oversight is paramount.

• Trust and relationships: Building and maintaining trust in a fragmented digital landscape are crucial.

• Editorial judgement: Journalists will continue to play a pivotal role in deciding resource allocation and content priorities.

• Community and real-world reporting: Human-driven, on-the-ground reporting remains essential.

The report also poses critical questions about managing the influence of Big Tech, audience reception of AI-generated content, combating misinformation, and ensuring sustainable business models in an AI-driven market.

Vizrt has announced a significant partnership with Timbre Broadcast Systems, marking a strategic move to enhance live production capabilities across the Sub-Saharan African region. The addition of Timbre Broadcast Systems to Vizrt’s global network of channel partners underscores a commitment to providing scalable solutions and support for live production technologies. This partnership aims to empower broadcasters and content creators of all sizes in Sub-Saharan Africa with access to award-winning products and reliable service from Vizrt.

“Audiences worldwide expect top-notch viewing experiences, regardless of where or who they are watching. Whether for independent producers or professional teams, Vizrt is dedicated to equipping our partners with the tools they need to craft compelling visual narratives,” stated Marco Kraak, General Manager of Channel Sales at Vizrt.

Timbre Broadcast Systems, headquartered in South Africa, brings 44 years of expertise in serving the professional video industry across the Sub-Saharan African region. Positioned as both a supplier and Systems Integrator, Timbre Broadcast Systems is renowned for its commitment to delivering cutting-edge technology solutions tailored to diverse application needs.

“In live production, the TriCaster range sets the standard for technology and service excellence. The versatility of the TriCaster line ensures solutions that seamlessly integrate with various setups and workflows, meeting the specific requirements of content creators in our region,” commented Armand Claassens, CEO of Timbre Broadcast Systems.

Vizrt’s extensive partner network includes hundreds of certified partners worldwide, collaborating closely with users of Vizrt technology. Continued investment in its partner program remains a core focus for Vizrt, bolstering capabilities through expanded product offerings, solutions, certifications, and training to support the growth of partner businesses in the evolving video technology market.

Remote production in sports is conquering the audiovisual and broadcasting industry. For this reason, we wanted to put together a special feature in TM Broadcast International magazine, gathering some of the best and most innovative initiatives in the industry. Here is the result for our readers.

German communications software and systems company Riedel Communications has provided View Master Events with state-of-the-art equipment to conduct live, programmed remote production at the Stavros Niarchos Foundation Cultural Center (SNFCC) in Athens. The UHD5 OB van integrates Riedel’s award-winning MicroN UHD, Artist-1024 matrix intercom node, SmartPanel control panel and Bolero wireless intercom, showing how a decentralized infrastructure can be combined with optimized remote production workflows to streamline signal distribution and enable greater flexibility in production setups across a rich variety of venues.

England Netball has also benefited from the same game-changing approach, applied by EMG / Gravity Media to its netball coverage. Using an advanced remote production workflow, they have produced high-quality broadcasts with minimal on-site resources. Haivision encoders and Vizrt TriCaster ensure seamless signal transfer while reducing replay workload, proving that REMI can deliver premium production standards in a cost-effective way that is sustainable over time.

The 2024 RallyX championship has established an innovative remote production model to radically reduce operational cost and environmental waste in motorsports. Using Grass Valley AMPP servers and LDX 98 series cameras, DMC Norway has proven agile and scalable in creating full-scale broadcasts from anywhere.

We also sit down with Tom Peel, Director of LiveLine at SailGP, to talk about the evolution and impact of their innovative LiveLine technology. The interview highlights the broad potential of remote production in sports broadcasting, integrating real-time data and AR graphics on top of the live race feed using just two feeds sent from the racetrack alongside our San Francisco-based studios. SailGP is redefining live sports coverage with an unprecedented level of centralization and advanced AI/ML techniques directly from the track.

Collectively, these case studies showcase how remote production has evolved at pace, and how its adoption could vastly improve the viewer experience as well as help drive the sustainability of sports broadcasting in an operational sense.

Interview: TM Broadcast International magazine and Tom Peel, Director of LiveLine at SailGP

In the world of sports broadcasting, few challenges match the complexity of capturing and presenting the high-speed action of sail racing. SailGP, the global sailing league, has transformed the viewer experience with its LiveLine technology. At the helm of this innovation is Tom Peel, Director of LiveLine at SailGP, whose team has developed a cutting-edge remote production system that brings the excitement of F50 catamaran racing to screens worldwide. With just a week to go until the Grand Final in San Francisco, where the ultimate winner of Season 4 will be crowned, the creator of this innovative solution talks to us about how it was conceived, how it was developed, and how it is now being adapted to cover different sports.

In this exclusive interview, Peel explains the details that go into making LiveLine, a system which handles over 1.15 billion data requests per hour for every competing boat at America’s Cup events. The technology overlays augmented reality graphics on live race footage from Bermuda, giving fans on-screen information about boat speeds, course boundaries and leaderboard positions in real time. Peel also provides insight into the benefits of remote production, how Oracle Cloud is assisting with their graphics packages, and SailGP’s plans to scale this technology across sports as a whole, providing a look at what lies ahead for sports broadcasting.

Can you explain what LiveLine is and how it enhances the viewer experience for SailGP races?

LiveLine is “the patented broadcast graphics package that tells the story of SailGP racing.” The system is intended to make the very technical sport of sail racing accessible to viewers. In essence, it layers augmented reality graphics on top of live race footage. We overlay virtual boundaries on the racetrack, draw ladder lines to indicate which team is leading and how far back the others are, and show boat speeds. All of this enables non-sailing viewers to get a quick, easy grasp of where the race stands.

The system handles more than 1 billion data requests per hour from each F50 catamaran. These data points are converted into visually appealing graphics that enrich the broadcast. LiveLine has the key advantage of being produced remotely, from our London studio. This approach, centralized for all our international broadcasts, also minimizes the impact on the environment.

This tool has effectively removed the most challenging elements of following sail racing and delivered compelling visual cues that have helped broaden SailGP’s viewership while making the sport easy to understand even from an armchair. It is not just a matter of displaying data; it’s about using that data to tell a compelling story that keeps viewers engaged throughout the race.
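As a rough illustration of the ladder-line idea, the gap between boats can be estimated by projecting each boat’s position onto the axis of the current leg. The sketch below is a simplification and not a description of SailGP’s implementation: it assumes a flat local grid in metres and a straight leg, and the boat names, coordinates and function names are hypothetical.

```python
import math


def progress_along_course(position, course_origin, course_bearing_deg):
    """Distance in metres travelled along the leg axis, measured from course_origin.

    position and course_origin are (east, north) pairs in metres on a local grid;
    course_bearing_deg is the compass bearing of the leg (0 = north, 90 = east).
    """
    bearing = math.radians(course_bearing_deg)
    axis_east, axis_north = math.sin(bearing), math.cos(bearing)  # unit vector
    d_east = position[0] - course_origin[0]
    d_north = position[1] - course_origin[1]
    return d_east * axis_east + d_north * axis_north


def gaps_to_leader(boats, course_origin, course_bearing_deg):
    """Return how far (in metres along the leg) each boat is behind the leader."""
    progress = {
        name: progress_along_course(pos, course_origin, course_bearing_deg)
        for name, pos in boats.items()
    }
    lead = max(progress.values())
    return {name: lead - value for name, value in progress.items()}


# Hypothetical positions on a leg heading due north from the origin.
boats = {"A": (0.0, 500.0), "B": (120.0, 460.0), "C": (-80.0, 380.0)}
gaps = gaps_to_leader(boats, course_origin=(0.0, 0.0), course_bearing_deg=0.0)
for name, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{name}: {gap:.0f} m behind the leader")
```

In this toy case boat A leads, with B and C trailing by 40 m and 120 m along the leg, regardless of how far they sit to either side of it.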

What inspired the development of LiveLine, and how long did it take to bring the concept to fruition?

Sailing is by its nature a very complex sport that is hard to televise; even for sailors it can be complicated to follow. The genesis of LiveLine can be traced back to 2011, when thoughts turned towards the America’s Cup in San Francisco. Stan Honey had the idea of pitching Larry Ellison on a new visual tool to improve the sailing broadcast. The aim was to help audiences follow the sport better, as well as to make it more saleable, with live graphics overlaying visual context and race data.

To accomplish this, our project team was tasked with creating a unique system integrating live racecourse video with high-accuracy GPS data and real-time performance statistics. That called for new technology: the scale of data generated by F50 catamarans requires specialist handling, for which we use Oracle Cloud. The network handles more than 100 million data requests per hour over a one-megabyte link from each boat, converting this input into engaging graphical elements which add to the viewer experience.

It took many years from the first concept until it finally came to life, with ongoing learning and iteration throughout that journey. Its first use was in the America’s Cup, and it has now been developed further for SailGP as well as other sports.

Development of LiveLine began two years before the system was first used on air. That work included making the initial prototype smaller and faster, and introducing features that present information according to where the camera is located.

How does LiveLine integrate real-time data from the F50 catamarans into the broadcast graphics?

LiveLine fuses real-time telemetry from F50 catamarans racing at more than 30 knots with the latest graphics and visualization technology. It combines cutting-edge GPS tracking data with thousands of data points collected per second by sensors on and around each boat, all processed via cloud computing. Here’s how it works:

1. Sensors: 125 sensors placed strategically on each F50 catamaran gather information about its speed, heading, environmental conditions and sea current. This data is sent in real time, with the system handling 1.15 billion requests per hour for each boat.

2. Data Transmission: The data is transmitted from the boats to the shore, and then sent over various private fiber networks to the Oracle Data Center in London. This allows the data to be processed in real time with low latency and high bandwidth.

3. Data Processing: The data is processed in the Oracle Data Center with Oracle Cloud technology. This consists of filtering out unneeded records and producing additional metrics for broadcast. Real-time performance information is then derived from this processed data.

4. Graphics Overlay: Data is linked to the broadcast graphics via LiveLine. During race broadcasts, this includes overlaying augmented reality (AR) graphics onto live footage that shows virtual boundaries, ladder lines and even real-time boat speeds. In large part due to the system’s use of inertial RTK GPS, it can accurately track boats within two centimeters.

5. Remote Production: The production is centralized and managed remotely from SailGP’s broadcast control room in London. This centralized model provides both consistency across broadcasts and eco-sustainability by reducing the number of on-site production teams.

LiveLine uses these technologies to bring the viewer an unprecedented view of sailing, ensuring they have a clear and instant visual context that makes this complex sport much more accessible and engaging for audiences around the world.
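To put the figures quoted above in perspective, a back-of-the-envelope calculation converts the hourly request rate into per-second rates. The even split across sensors is an assumption made purely for illustration; the interview does not say how requests are distributed.

```python
REQUESTS_PER_HOUR_PER_BOAT = 1_150_000_000  # figure quoted in the interview
SENSORS_PER_BOAT = 125                      # figure quoted in the interview
SECONDS_PER_HOUR = 3600

requests_per_second = REQUESTS_PER_HOUR_PER_BOAT / SECONDS_PER_HOUR
# Assumption: requests are spread evenly across all sensors.
requests_per_sensor_per_second = requests_per_second / SENSORS_PER_BOAT

print(f"{requests_per_second:,.0f} requests/s per boat")
print(f"{requests_per_sensor_per_second:,.0f} requests/s per sensor")
```

That works out to roughly 319,000 requests per second per boat, or about 2,600 per sensor per second under the even-split assumption, which is in line with the “thousands of data points collected per second” mentioned earlier.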

What were the biggest technical challenges in developing a system that could process 1.15 billion data requests per hour?

The creation of a system to execute 1.15 billion data requests hourly was not an easy task, as it posed several important technical problems. The major roadblocks that we encountered were:

• Data Volume & Filtering: The amount of data produced by the F50 catamarans is staggering. With 125 sensors on each boat, the data arrives continuously. The biggest problem we faced was not only handling this data but also filtering out the noise and focusing on only those metrics which were relevant for a broadcast. We had to create algorithms that worked together to parse and filter the data in real time, only pulling pertinent information forward for graphics.

• Low Latency Transmission: Low-latency data transmission was essential. The data from the boats had to be sent 7000 kilometers to the Oracle Data Center in London and rendered as broadcast graphics within a delay that we could live with. This necessitated not just a resilient, high-speed network, but one underpinned by multiple private fiber networks to keep bandwidth up and latency low.

• Real Time Data Processing: Processing this data in real time posed another important challenge. To combine all the information, including deriving two new metrics and transforming raw data into visual elements that make sense for our users, we used Oracle Cloud technology. The task needed an enterprise-grade cloud computing solution able to process real-time data at scale.

• Accuracy and Precision: High positional accuracy was essential for tracking the boats well, which is critical for producing high-quality broadcast graphics. We were able to track the boats with 2 cm accuracy because we used high-precision RTK GPS systems. It was extremely difficult to incorporate this level of precision into the graphics system, which demanded sophisticated calibration and synchronization techniques.

• Remote Production: Managing the whole production process remotely added another level of challenge. We had to centralize all our operations in our London studio so that data and video feeds from different global locations could be fed directly into virtual environments where needed during the live broadcast. This remote arrangement necessitated not only dependable delivery of data, but also strong systems to remotely monitor and control the installation.

• Scalability and Reliability: Ensuring the system could scale without issue, particularly when running multiple events at once in different locations, was an ongoing headache. We needed to create emergency and triple backup systems so that possible errors could not disrupt the service during a live broadcast.

Overcoming these obstacles allowed us to create a system that processes huge data volumes and presents them graphically in a viewer-friendly way, replacing traditional broadcast graphics with real-time, accurate animated equivalents.
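The latency constraint mentioned above can be illustrated with a simple lower-bound estimate. This is only a sketch: the refractive index is an assumed typical value for silica fiber, the 7000 km is treated as the cable length (real routes are longer), and switching and processing delays are ignored.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.468     # assumption: typical value for silica fiber
DISTANCE_KM = 7_000                # distance quoted in the interview
BOAT_SPEED_KNOTS = 30              # lower bound on boat speed quoted in the interview
KNOT_IN_M_S = 1852 / 3600          # one knot is 1852 metres per hour

# Light travels more slowly in glass than in vacuum by the refractive index.
one_way_s = DISTANCE_KM * FIBER_REFRACTIVE_INDEX / SPEED_OF_LIGHT_KM_S
one_way_ms = one_way_s * 1000

# Distance a boat covers while its data is still in transit.
drift_m = BOAT_SPEED_KNOTS * KNOT_IN_M_S * one_way_s

print(f"One-way propagation delay: {one_way_ms:.1f} ms")
print(f"Boat movement during that delay: {drift_m:.2f} m")
```

Even this best case gives a one-way delay of roughly 34 ms, during which a boat at 30 knots moves about half a metre. That is far more than the 2 cm positioning accuracy, which suggests why keeping data and video tightly synchronized matters as much as raw speed.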

How does the remote production workflow for LiveLine operate, and what advantages does it offer over traditional on-site broadcasting?

LiveLine’s remote production uses an entirely centralized workflow in which the whole event is run remotely from SailGP’s broadcast studio in London rather than at the race locations. Let me walk through how this workflow operates and why it is a better solution than conventional on-site broadcasting:

Remote Production Workflow

Data Collection & Transmission: Every F50 catamaran is packed with 125 sensors that gather live data on a wide range of variables, such as boat speed and direction, weather conditions and sea currents. The data is sent from the boats to shore and then through diverse private fiber networks to the Oracle Data Center in London. This provides the low-latency, high-bandwidth link on which real-time processing depends.

Data Processing: The data is then processed using Oracle Cloud technology at the Oracle Data Center. This includes filtering out everything that is not needed, as well as creating extra metrics appropriate for broadcast. The resulting feed is used to construct real-time visualizations of performance data.

Broadcast and Graphics Overlay: LiveLine embeds the processed data into the broadcast graphics. This involves displaying augmented reality (AR) graphics over live footage of the race, including virtual boundaries, ladder lines and boat speeds.

The entire production, from graphics generation to video feed management, is orchestrated remotely from the studio in London.
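The filter-and-derive step in this workflow can be pictured with a small sketch. It is illustrative only: the field names, the sample values and the choice of derived metric (speed in km/h) are assumptions, since the actual telemetry schema is not described in the interview.

```python
KNOT_TO_KMH = 1.852  # one knot is exactly 1.852 km/h

# Hypothetical field names chosen for illustration.
BROADCAST_FIELDS = {"boat", "speed_kn", "heading_deg", "wind_speed_kn"}


def to_broadcast_record(raw_sample):
    """Keep only broadcast-relevant fields and add one derived metric."""
    record = {
        key: value for key, value in raw_sample.items() if key in BROADCAST_FIELDS
    }
    if "speed_kn" in record:
        # Derived metric: boat speed converted for audiences who think in km/h.
        record["speed_kmh"] = round(record["speed_kn"] * KNOT_TO_KMH, 1)
    return record


# A made-up raw sample mixing broadcast-relevant and engineering-only readings.
raw_sample = {
    "boat": "GBR",
    "speed_kn": 48.2,
    "heading_deg": 212.0,
    "wind_speed_kn": 17.5,
    "hydraulic_pressure_bar": 312.4,
    "battery_temp_c": 31.0,
}

record = to_broadcast_record(raw_sample)
print(record)
```

The engineering-only readings are dropped before anything reaches the graphics layer, which keeps the downstream feed small and focused on what viewers will actually see.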

Advantages Over Traditional On-Site Broadcasting

• Stability and Consistency: A centralized setup keeps the system stable and consistent across all events. It saves the resources required to set up and take down the installation at every event, reducing the chances of equipment failure and resulting in a reliable broadcast output.

• Sustainability: By dramatically decreasing the carbon footprint, remote production is a clear win for sustainability. Rather than flying numerous people and large amounts of equipment to each race venue, a smaller crew on the ground takes care of the necessary functions while much of the production is done from an offsite location.

• Cost Efficiency: The resulting financial savings are enormous, as remote production eliminates the usual expenses associated with moving and setting up equipment at each location. It also removes the logistical complexities and potential delays of transporting equipment.

• Scalability: A remote setup makes it easy to scale. The production team can control multiple feeds and outputs without increasing their on-site presence, which is very useful when handling several international transmissions at once.

• Enhanced Viewer Experience: With a centralized system, a large volume of high-precision data can be incorporated into live broadcasts in real time, giving viewers comprehensive visual context. This enhances the experience for viewers and helps to explain a sport as complex as sailing, which is often difficult to follow for people unfamiliar with this type of regatta racing.

Can you describe the role of Oracle Cloud in powering LiveLine and enabling real-time data processing?

LiveLine draws on Oracle Cloud for the real-time data processing behind our broadcast graphics. As a bridging platform, it aggregates all the data from our F50 catamarans and other sources across the racecourse.

The 125 data sensors mounted on each F50 catamaran capture boat speed, direction and wind, race and sea states, providing a wealth of information. The boats transmit this data to shore, from where it is sent over diverse private fiber networks to the Oracle Data Center in London. As an example, we can easily process about 1.15 billion data requests per hour from every boat. This is a lot of information, but with the Oracle Cloud infrastructure it does not overload our systems in any way, something that was simply impossible to achieve even when using Google Cloud platform for computing alone.

Through Oracle Cloud, we filter, process and analyse the data in real time. To bring all the data together as quickly as possible, we turn to Oracle’s managed software solutions and FastConnect product. This is what helps us not only generate insights but also convert the raw data into the visually appealing graphics that you see in our broadcasts.

Scalability and performance in Oracle Cloud are important for our remote production workflow. They enable us to take in large volumes of data from many different parts of the world and centralize the processing here in London. This method not only keeps all our shows consistent but also makes a massive difference environmentally by cutting down the number of on-the-ground production teams we would otherwise need.

In addition, Oracle Cloud offers the computing capabilities required to power our augmented reality graphics (the digital sideline markers, ladder lines and real-time stats). With the cloud infrastructure we can keep latency to a minimum, which is vital for synchronizing our graphics with live race footage.

Oracle Cloud has been at the very heart of LiveLine, making it possible for us to bring sailing alive with an experience that’s rich in data and easy-to-consume for global audiences.

How does LiveLine achieve its high level of accuracy in tracking boats and overlaying graphics?

LiveLine’s accuracy comes from a purpose-designed solution that takes advantage of leading-edge technologies and very precise data integration. It is centered on the RTK GPS system installed on every F50 catamaran, which provides sub-two-centimetre positioning. That level of precision is vital for the high-speed, close-quarters racing that characterizes SailGP circuits.

To this we add data from up to 125 sensors on each boat, measuring parameters such as speed, course, wind and current conditions.

We pass this data in real time to our Oracle Cloud processing systems, which handle more than 1.15 billion queries per hour per boat. We also filter the processed output at scale using cloud services, integrating information from our IoT connect layer with OCR, surface radar and drone image and video metadata (type AI Boolean Decision Validation).

The data is then processed and overlaid onto live-streamed video footage from a helicopter-mounted camera. Steady footage is a must for precise graphic overlays, and we achieve this using gyro-stabilized camera gimbals. Our software integrates the GPS data with the video footage to draw virtual graphics on top of the racecourse.

Having control of our boats and all the data we produce is one core advantage. This has given us access to what is essentially every piece of information, allowing us to perfect our systems for tracking and graphics. With Siebel turned off, we have developed solutions for both GPS positioning and data processing custom-tailored to the nuances of sail racing.

The aerial-based solution enables boats to be tracked to within two centimetres, so that overlaid graphics (like the yellow line on TV) hold their position irrespective of camera movements or changing conditions. This accuracy is what truly differentiates LiveLine and enables us to provide all those who watch live sailing around the world with an exciting visual experience that illustrates where every sailor sits in each race.
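The step from a GPS position to a graphic locked onto the picture can be sketched with a basic pinhole camera projection. This is a deliberately simplified illustration, not LiveLine’s method: it assumes a camera looking straight down from a known altitude, with the image’s x axis pointing east and its y axis pointing south, whereas a real system would have to handle arbitrary camera poses reported by the gimbal. All names and values are hypothetical.

```python
def project_to_pixel(point_en, camera_en, altitude_m, focal_px, image_size):
    """Project a sea-level (east, north) point into pixel coordinates.

    Assumes a nadir (straight-down) pinhole camera at altitude_m above the
    water, with image x pointing east and image y pointing south.
    """
    width, height = image_size
    centre_x, centre_y = width / 2, height / 2
    east_offset = point_en[0] - camera_en[0]
    north_offset = point_en[1] - camera_en[1]
    u = centre_x + focal_px * east_offset / altitude_m
    v = centre_y - focal_px * north_offset / altitude_m  # image y grows downwards
    return u, v


# Hypothetical setup: camera 300 m up, 1500 px focal length, 1920x1080 image.
altitude_m, focal_px, image_size = 300.0, 1500.0, (1920, 1080)

# A boat 50 m east and 20 m north of the point directly below the camera.
u, v = project_to_pixel((50.0, 20.0), (0.0, 0.0), altitude_m, focal_px, image_size)
print(f"Boat appears at pixel ({u:.0f}, {v:.0f})")

# Ground distance covered by one pixel, and what a 2 cm position error means.
metres_per_pixel = altitude_m / focal_px
error_px = 0.02 / metres_per_pixel
print(f"{metres_per_pixel:.2f} m per pixel; a 2 cm error is about {error_px:.1f} px")
```

Under these assumed numbers one pixel covers 20 cm of water, so a 2 cm positioning error shifts a graphic by only a fraction of a pixel. In such a setup the stability of the camera pose, rather than the GPS fix, would be the limiting factor, which is consistent with the emphasis on gyro-stabilized gimbals.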

Is SailGP planning to standardize LiveLine for broader adoption across different sports and broadcasting networks?

Yes, of course. While SailGP is the focus of this conversation today, LiveLine was developed with a cross-sport approach and as such would be available to other broadcasters if they so desired (although much of LiveLine remains proprietary). The system, developed initially for the America’s Cup and now further adapted to SailGP, is credited with increasing viewer engagement: overlaid graphic analytics add clarity, with vectors above each competitor providing insight in a broadcast-friendly format despite being derived from live telemetry only about three seconds old. We see the promise for this solution to become a game-changing part of the broadcast enhancement landscape in myriad other sports.

The solution offers advantages for other sports, such as:

Improved Viewer Experience: LiveLine overlays a clear, live visual context that makes complex sports more understandable and exciting for audiences.

Commercial: The technology offers virtual advertising capabilities, enabling dynamic and powerful branding real estate with no need for physical infrastructure.

Sustainability: The entire ecosystem reduces the need for on-site production teams and infrastructure, which is a key pillar in sustainable broadcasting.

To sum up, with this system a single tool allows us to serve a great many sports. Drawing on our experience with this technology, we want to enhance sports broadcasts around the world by telling more of a story and unlocking untapped commercial potential.

What steps are being taken to adapt LiveLine for use in other sports beyond sailing?

To expand the capabilities of LiveLine into other sports beyond sailing we are taking several steps:

Modular design: We are considering more modularity in our Sports Logic components, to make it easier for us, and for others, to integrate with our data or with other existing sports systems.

General input normalization: We are normalizing the inputs we accept so that we can ingest, cleanly and without overhead, the different data systems that we will face across sports.

Customizable graphics packages: We are working on template-based graphic toolkits that can be used for various sports, each with the same base functionality that is at the heart of LiveLine. Although our remote production is already well executed, we’re continuing to optimize it so that it can be used effectively at different major events and locations.

AI and machine learning: We are also in early research on how we can incorporate more advanced AI and machine-learning functionality to drive better tracking and data analytics across myriad sports.

Scalability improvements: We are looking to make the system more scalable so that we can serve various sports with different data needs, ranging from individual competitions up to mass-participation races.

Collaborative partnerships: Partnering with other leagues has built strong relationships focused on their needs and challenges, which have been taken into account in developing LiveLine.

Cross-sport testing: We are also running proof-of-concept tests with different sports to ensure we can effectively address any sport-specific challenges in bringing our solution to additional broadcasts.

Mobile integration: We are now working to optimize the new graphics for mobile devices through apps that we’re developing, such as our forthcoming LiveLineVR phone app, which is designed so that viewers can take in all of these cutting-edge graphical enhancements across a broad range of sports.
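As an illustration of the input normalization step mentioned above, here is a minimal Python sketch of how feeds from different sports might be mapped onto one common record. It is not LiveLine’s actual design: the `TrackedSample` schema, the adapter functions and every field name in the raw feeds (`boat`, `sog_kn`, `rider`, `kmh` and so on) are hypothetical, chosen only to show the idea of converting units and layouts at the edge so that downstream graphics logic sees a single format.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

KNOT_IN_MPS = 1852.0 / 3600.0  # one knot is 1852 metres per hour


@dataclass(frozen=True)
class TrackedSample:
    """Sport-agnostic telemetry record (hypothetical schema)."""
    competitor_id: str
    timestamp_s: float
    lat_deg: float
    lon_deg: float
    speed_mps: float


def from_sailing(raw: Dict[str, Any]) -> TrackedSample:
    # Assumed sailing feed: speed in knots, time in milliseconds.
    return TrackedSample(
        competitor_id=str(raw["boat"]),
        timestamp_s=raw["t_ms"] / 1000.0,
        lat_deg=float(raw["lat"]),
        lon_deg=float(raw["lon"]),
        speed_mps=raw["sog_kn"] * KNOT_IN_MPS,
    )


def from_cycling(raw: Dict[str, Any]) -> TrackedSample:
    # Assumed cycling feed: speed in km/h, time already in seconds.
    return TrackedSample(
        competitor_id=str(raw["rider"]),
        timestamp_s=float(raw["time_s"]),
        lat_deg=float(raw["position"][0]),
        lon_deg=float(raw["position"][1]),
        speed_mps=raw["kmh"] / 3.6,
    )


# One adapter per data source; supporting a new sport means adding an entry.
ADAPTERS: Dict[str, Callable[[Dict[str, Any]], TrackedSample]] = {
    "sailing": from_sailing,
    "cycling": from_cycling,
}


def normalize(source: str, raw: Dict[str, Any]) -> TrackedSample:
    """Convert a raw, source-specific message into the common record."""
    adapter = ADAPTERS.get(source)
    if adapter is None:
        raise ValueError(f"no adapter registered for source {source!r}")
    return adapter(raw)
```

With this shape, anything downstream of `normalize` only ever handles `TrackedSample` values, which is one plausible way to keep sport-specific quirks out of the shared graphics code.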

Sustainability remains central as we continue not only to spotlight the sport but also to show how it can be done responsibly in a post-2020 world. Our adaptations are carefully designed so that SailGP produces 90 percent of what you see on TV from home, reducing or removing any need to ship large-scale infrastructure from venue to venue.

We hope to provide many more sports with the storytelling scope and commercial benefits that a system such as ADM LiveLine can offer, taking fan engagement up another notch across all aspects of delivering quality content, while opening doors to new partnerships.

How does the system handle varying weather conditions and their impact on data collection and transmission?

The weather doesn’t really affect broadcast or transmissions; it’s actually a problem for the shooting team and cameras. We use a range of gyro-stabilized gimbals, such as Cineflex and Shotover, to ensure steady and clear footage regardless of weather conditions. These systems are robust and capable of handling the movements caused by wind and other environmental factors.

Fog and visibility: Fog can be a challenge for camera visibility, but it does not affect our ability to track the boats. Our system can draw graphics through the fog, ensuring that viewers can still see the racecourse and boat positions accurately.

Rain and wind: We have conducted events in various weather conditions, including rain and high winds. Our helicopter-mounted cameras are gyro-stabilized, allowing them to operate effectively in windy conditions. Additionally, the data transmission and processing systems are designed to function reliably in adverse weather.

What kind of feedback have you received from viewers and broadcasting partners regarding LiveLine, and how has this shaped its development?

The response from viewers and broadcasters to LiveLine has been outstanding. Our technology has helped us connect with and engage a larger audience for sailing, which is reflected in viewership growth. We’ve had 14m unique broadcast viewers tuning in on average per event, and our audience has grown by more than 300% year-on-year [9].

Our broadcasting partners, in turn, love how it provides a clear, up-to-date visual context of exactly where each race stands.

Of course, the more knowledgeable part of our fan base is also grateful that LiveLine is shining a light on some of the black arts associated with sail racing.

While LiveLine has raised some concerns about its use, it allows us to tell stories from a totally different perspective that wasn’t possible before. With real-time data and graphics overlaid on the live feed, we can craft a stronger narrative with more interesting visual elements for fans watching at home. This has been especially useful in showing viewers the tactics and strategies of teams during races.

LiveLine’s virtual advertising options were praised by our commercial partners. Dynamic, context-aware brand placement within the broadcast, without physical infrastructure, was also very well received.

Finally, this feedback has significantly shaped the direction of LiveLine’s development. We have continually improved our graphics and how we communicate data to make them more user-friendly. We have also continued to grow our capabilities with a wider range of advertising solutions that can be tailored and implemented by clients.

An added bonus is the great response from those who have seen the technology at work, live or on television; it has inspired us to think about offering [applications for LiveLineFX] beyond sailing. We are also currently in discussions with other sports properties about how they can use our technology to grow their fan bases, especially sports that take place outside stadiums, where providing context to viewers is quite a task.

The feedback we have received overall has supported our development and will help push the boundaries of what can be achieved with LiveLine, not only for SailGP but hopefully throughout sports broadcasting.

Looking ahead, what do you see as the next big innovation in sports broadcasting technology, and how is SailGP positioning itself to lead in this area?

Looking toward the future, I truly think that one area of sports broadcasting innovation to watch will be deeper integration of AI and machine learning, which helps process live data more quickly and so enables more real-time storytelling for fans. With systems such as our LiveLineFX, we are beginning to see the effects of AI, and some changes have already been made (for example, with athlete tracking) or are under way.

SailGP seeks to be the leader in this space, and for our part we will continue working to improve our AI. One area is advanced predictive analytics that can forecast the results of races and highlight important moments for viewers in real time. We will then be able to convey even richer stories on our broadcasts.

We’re also investing in expanding AR experiences to new areas. We’re creating a LiveLineVR phone app that will go live from our New York event and offer viewers an entirely new way to experience the race. AR’s role in enhancing the experience of watching broadcast sports, both at home and on-site, is only just beginning.

We are also looking at ways to adapt our technology better for different sports and make it more scalable. By modularizing our Sports Logic components, we are enabling additional applications for LiveLine across a broader base of events and disciplines.

Sustainability is also of utmost importance for us. With each of our innovations we continually try to reduce our environmental impact. Remote production is a great example of this, reducing our reliance on on-site infrastructure whilst striving to deliver the highest broadcast quality.

At the end of it all, we are looking to rock up [to these venues] and open people’s eyes to how far you can take sports broadcasting. We are not just keeping up with the new innovations in this space but leading how these consumer experiences come to life through a blend of amazing tech at our disposal and unique insights on what makes sports stories so compelling.

ATHENS, Greece - Riedel Communications’ almost indestructible technology is now part of how View Master Events enables new levels of creativity in its productions at the Stavros Niarchos Foundation Cultural Center (SNFCC). View Master Events is a live online production company in Athens, run by experienced businessman Dimitris Grakas and CEO/Owner Konstadinos Roditis, that transmits professional audiovisual broadcasts over the web to remote audiences worldwide. This collaborative effort highlights the power of Remote Integration (REMI) workflows in modern broadcast environments.

Leading this technological effort is the UHD5 OB van, recently launched by View Master Events & Live Productions. Using Riedel’s cutting-edge technology, which includes MicroN UHD devices and an Artist-1024 node in combination with SmartPanels and Bolero, the UHD5 van, integrated through MediorNet Compact stage boxes, provides seamless connections to SNFCC’s infrastructure.

The UHD5 van’s integration with SNFCC’s distributed MediorNet system allows fast and easy REMI production throughout different parts of the venue. This setup allows for:

Seamless distribution of video, audio, data, and communications signals

Operation in six-wire mode reduces cabling and increases system stability

Flexible signal routing, easy scalability

John Zarganis, Production and Technical Director of View Master Events & Live Productions, said: “Our new UHD HDR OB truck gives us production flexibility that would have been unimaginable a few years ago, letting us cover anything from small-scale single broadcast events right through to large multi-sport field units.”

With MicroN UHD devices from Riedel aboard the new UHD5 van, View Master Events can accept 4K/Ultra HD signals in addition to a wide array of video formats. One of the most practical aspects of this versatility is that it simplifies workflows and reduces the need for a wide range of additional equipment, both essential in streamlining productions designed as REMI events, where efficiency reigns.

Riedel SmartPanels provide an intuitive user interface for managing various areas of the production.

This integration allows highly efficient signal routing, processing and monitoring for live events across SNFCC’s array of venues: the opera house; multiple outdoor stages, including a custom-built stage in its architecturally striking park that hosts large-scale festivals during the summer months; spaces for local residents’ gatherings, such as the basketball courts and ping pong tables; conference halls; and park areas.

Going forward, Zarganis said that there are plans to extend their MediorNet network with Riedel HorizoN as well, taking key steps towards IP workflows and future-proofing the system. This forward-looking approach will allow View Master Events to provide the same top-notch REMI productions even as technology changes.

The alliance between View Master Events, Riedel Communications & the Stavros Niarchos Foundation Cultural Center is a perfect example of what today’s latest REMI productions can do. With the Riedel technology, View Master Events is able to produce top-notch content at different venues but also continually offer its customers new applications and greater flexibility within ever shorter timeframes.

By Jens Envall, DMC

The 2024 RallyX Motorsports Championship, now in its tenth year, has become a beacon of innovation in the realm of live event coverage. By adopting a remote production model, the championship is setting new standards for efficiency, sustainability, and broadcast quality.

Historically, live event production required substantial on-site infrastructure, including large trucks, extensive camera setups, and a significant crew presence. This conventional approach, while effective, imposed considerable logistical challenges and environmental costs. The advent of remote production has revolutionized this landscape.

Mats Berggren, COO of DMC Norway, describes this shift as “a small revolution and a totally new workflow approach.” The primary innovation lies in the ability to deliver high-quality TV coverage using regular internet lines, provided there’s sufficient bandwidth for the program output, multiview feed, and control signals. This technological leap reduces the reliance on massive bandwidth traditionally required for remote production.


The move to remote production for RallyX significantly reduces both operational costs and carbon emissions. In previous years, DMC deployed two large trucks to various venues across Finland, Sweden, Norway, and Denmark. This year, they have transitioned to using small vans equipped with Grass Valley AMPP servers, substantially cutting down travel and accommodation expenses while supporting RallyX’s sustainability goals.

Peter Hellman Strand, head of broadcast and media for RallyX, emphasizes the environmental benefits: “This advancement enables us to drastically reduce our on-site presence, aligning with our commitment to an eco-friendly production.” By minimizing the need for extensive travel and on-site personnel, RallyX is contributing to a more sustainable future for sports broadcasting.

The flexibility of AMPP has enabled DMC to adapt to the unique challenges of covering RallyX events, which often take place in remote locations with varying internet connectivity. For example, venues like Nysum in Denmark and the Tierp Arena in Sweden are situated far from urban centers, making permanent broadcast connections impractical. Instead, DMC relies on existing internet infrastructure, requiring around 100 Mb/s to ensure smooth operations.

To achieve this, the production utilizes LDX 98 series cameras with a range of lenses, including super slow-motion capabilities and wireless links. The entire production, managed remotely from DMC’s broadcast centers in Norway and Sweden, is streamed in 1080p 50 to the RallyX YouTube channel. This setup allows the production team, including the director and key technical staff, to work from centralized locations, further reducing the logistical footprint.

Sweden, highlights the efficiency gains: “We can set up 8, 16, or 24 cameras using the same bandwidth. It means we can scale up and down without additional investment.” This scalability is crucial for maintaining high production values while adapting to different event requirements.
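To illustrate why the camera count need not change the uplink requirement, here is a minimal Python sketch of a bandwidth budget. It assumes, following the description above, that only the program output, the multiview feed and the control signals leave the venue, while camera sources are handled on the servers in the van. The bitrates used are illustrative placeholders, not DMC’s figures; only the roughly 100 Mb/s link size comes from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UplinkBudget:
    """Streams assumed to leave the venue (all bitrates are hypothetical)."""
    program_mbps: float    # encoded program output
    multiview_mbps: float  # encoded multiview feed for the remote crew
    control_mbps: float    # control signals

    def total_mbps(self) -> float:
        return self.program_mbps + self.multiview_mbps + self.control_mbps


def required_uplink_mbps(budget: UplinkBudget, camera_count: int) -> float:
    """Uplink needed for a production with `camera_count` cameras.

    Under the stated assumption, camera sources never cross the internet
    link, so the result does not depend on the camera count.
    """
    if camera_count < 1:
        raise ValueError("camera_count must be at least 1")
    return budget.total_mbps()


def fits_link(budget: UplinkBudget, link_mbps: float, headroom: float = 0.25) -> bool:
    """Check the budget against a link, keeping a safety margin."""
    return budget.total_mbps() * (1.0 + headroom) <= link_mbps


# Illustrative numbers only.
budget = UplinkBudget(program_mbps=20.0, multiview_mbps=10.0, control_mbps=2.0)
for cameras in (8, 16, 24):
    print(cameras, required_uplink_mbps(budget, cameras), fits_link(budget, 100.0))
```

In this model, adding cameras means a busier multiview and more on-site processing, but the link requirement stays fixed, which matches the scaling behaviour described in the quote.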

RallyX’s successful implementation of remote production is a compelling case study for the broader broadcasting industry. It demonstrates how cutting-edge technology can address long-standing challenges such as high bandwidth requirements and the need for large on-site crews.