Welcome to the October issue of TM Broadcast International!

As always, we bring you the innovators and game-changers who are defining what is possible in broadcast and audiovisual production. In this issue, we bring our readers some of the industry’s leading lights as they share their experiences using next-generation technology to deliver results for broadcasters and audiences.

Among our cover stories and within the world of public broadcasting, TM Broadcast International talks to Adde Granberg, CTO of Sweden’s SVT, about its recent adoption of the Agile Live OTT management platform and its plans for the future. Readers are then treated to a one-on-one with Lucien Peron, CTO of PhotoCineLive, about the company’s recent acquisition of an OB van, integrated by OBTech in partnership with Sony, to take OB to distances never before dreamed of.

Editor in chief

Javier de Martín editor@tmbroadcast.com

Key account manager

Patricia Pérez ppt@tmbroadcast.com

Editorial staff press@tmbroadcast.com

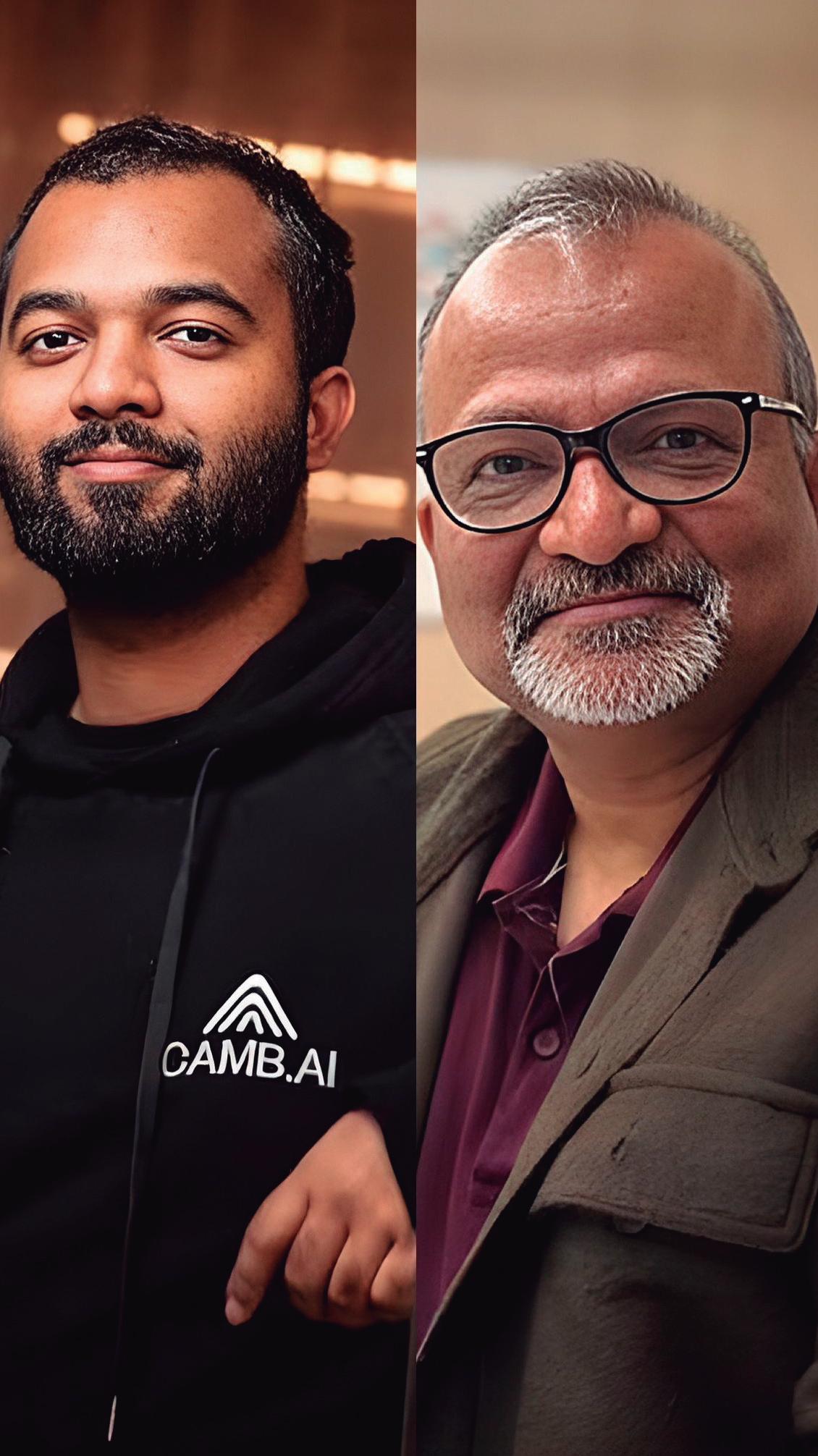

Keeping up with the latest developments, our sports broadcast feature looks at CAMB.AI’s groundbreaking real-time subtitling and translation platform.

Founder and CTO Akshat Prakash explains how the technology won over viewers when it was used by Eurovisionsports.tv for its coverage of the World Athletics Championships. Continuing the trend, the October issue features a close-up with AI technology expert Rubén Nicolás-Sans, who analyzes the role of artificial intelligence in sports production, focusing on the recent Olympic Games in Paris.

Finally, we meet Stuart Campbell, a commercial DoP at heart, who delivers high-impact work for successful series such as ‘Special Ops: Lioness’ and ‘Mayor of Kingstown’, as well as the recently awarded film ‘Drive Back Home’.

This issue looks into the heart of an ever-changing and evolving business to give our readers both the hottest current trends and a glimpse of where we’re all going next.

Creative Direction

Mercedes González mercedes.gonzalez@tmbroadcast.com

Administration

Laura de Diego administration@tmbroadcast.com

Published in Spain ISSN: 2659-5966

TM Broadcast International #134 October 2024

TM Broadcast International is a magazine published by Daró Media Group SL, Centro Empresarial Tartessos, Calle Pollensa 2, oficina 14, 28290 Las Rozas (Madrid), Spain. Phone: +34 91 640 46 43

Sveriges Television (SVT), Sweden’s public broadcaster founded in 1956, has always pushed the boundaries of innovation without abandoning its public service vocation. At the forefront of this technological transformation is Adde Granberg, SVT’s Chief Technology Officer, who brings more than three decades of broadcast technology experience to his role.

PhotoCineLive’s new OB: big capabilities in a compact package

PhotoCineLive, a leading production company known for its cinematic approach to live events, has recently unveiled its latest technological marvel: a compact 4K Ultra HD OB van.

From automated cameras to personalized content: How AI is transforming sports broadcasting

Artificial Intelligence is revolutionizing sports broadcasting, offering unprecedented opportunities for content creation, audience engagement, and operational efficiency.

In an era where global reach is paramount, CAMB.AI emerges as a game-changer in the world of sports broadcasting. Co-founded by Akshat Prakash and his father, this innovative company is pushing the boundaries of real-time, multilingual content delivery.

From ad man to award-winning cinematographer

Despite his youth, Stuart Campbell is already an accomplished Director of Photography who has made significant contributions to the world of cinematography through his work on notable projects such as Special Ops: Lioness, The Handmaid’s Tale, and Mayor of Kingstown.

Vizrt has introduced two new models to its renowned TriCaster family: the TriCaster Vizion and the TriCaster Mini S. According to the company’s statements, these launches cater to both advanced production teams and smaller content creators.

TriCaster Vizion: The future of live production

At the forefront of this release is the TriCaster Vizion, a new flagship model that combines IP connectivity, configurable SDI I/O, and powerful audio mixing with industry-leading graphics. Built with the future of broadcast and live event production in mind, TriCaster Vizion features advanced graphics powered by Viz Flowics and integrates AI-driven automation to simplify complex tasks, freeing production teams to focus on creative execution.

“TriCaster Vizion is the hub of a complete digital media production ecosystem,” said Ulrich Voigt, Global Head of Product Management at Vizrt. “It’s designed to meet the ever-evolving needs of modern media production, empowering users to push creative boundaries, streamline workflows, and deliver the content they envision.”

The flexibility of TriCaster Vizion extends to its licensing options, offering both perpetual and subscription models. Users can also choose between two hardware platforms supported by HP, making it adaptable for different production environments, from broadcast studios to enterprise media networks. With its combination of AI, IP connectivity, and configurable hardware, TriCaster Vizion is engineered for broadcasters, sports networks, and live event producers looking for high-end, versatile production tools.

For smaller production teams and content creators, Vizrt has introduced the TriCaster Mini S, an entry-level, software-only solution that brings professional-grade production capabilities within reach. With support for IP connectivity, 4Kp60 streaming, and built-in TriCaster Graphics powered by Viz Flowics, the Mini S offers everything needed for top-tier video production in a more accessible package.

“TriCaster Mini S is equipped to bring you everything needed to start your live production journey,” Voigt added. “It puts the choice in your hands—select your hardware, and you’re ready to create, produce, and stream your stories, your way.”

The TriCaster Mini S eliminates barriers related to budget and experience, offering users the flexibility to choose their own hardware while benefiting from the reliability of the TriCaster brand. Whether it’s live switching, virtual sets, social media publishing, or web streaming, the Mini S ensures that creators at any level can achieve high-quality results with a simple software download.

Since its introduction in 2005, the TriCaster line has continuously evolved, providing users with more flexibility and options. The release of TriCaster Vizion and TriCaster Mini S marks a significant expansion of this legacy, offering both top-tier and entry-level solutions without compromising on quality. These models are designed to adapt to the fast-changing media landscape, accommodating both existing and emerging technologies.

Both the TriCaster Vizion and TriCaster Mini S are now available for order, offering production teams more choices and enhanced capabilities to meet the demands of today’s media environment.

Krotos has launched Krotos Studio 2.0.3, introducing a groundbreaking AI Ambience Generator. This new tool offers content creators across film, TV, gaming, and beyond the ability to design custom soundscapes with a simple text-based prompt. By leveraging Krotos’ extensive library of professionally recorded sounds, users can now generate high-quality ambiences tailored specifically to their projects, providing enhanced flexibility and creative control.

With the AI Ambience Generator, users of both Krotos Studio and Krotos Studio Pro can create immersive sound environments by merely describing a scene. Whether it’s the serene sounds of a “forest at dawn” or the bustling atmosphere of an “urban city night,” the tool taps into Krotos’ ever-expanding library of sounds, generating fully customizable presets in real time. Unlike static audio files, the presets created by the AI Ambience Generator are fully editable, enabling users to fine-tune soundscapes according to the unique needs of their projects. The generated soundscapes can then be exported directly to popular DAWs and NLEs such as Adobe Premiere and DaVinci Resolve, ensuring a seamless integration into any production workflow.

The introduction of the AI Ambience Generator drastically simplifies the traditionally time-consuming process of sound design. Rather than manually searching through vast sound libraries, creators can now describe their desired ambience and receive precise, high-fidelity results in seconds. This powerful tool allows sound designers, filmmakers, and game developers to focus on their creative vision rather than the technicalities of sound selection.

George Dean, Lead Software Developer at Krotos Studio, highlighted the significance of this new feature: “The AI Ambience Generator is proving to be one of our most popular features. It unlocks a seamless workflow where users can create high-quality, customizable soundscapes in seconds, using only their imagination and a description. Instead of spending time searching through libraries, they can focus on their creative vision and move faster through their projects.”

According to the developers, the key benefits of the new tool are:

› Precision: Users can go beyond traditional sound libraries to generate the exact background ambience they need in seconds.

› Customization: Easily adjust elements of the soundscape with a few simple controls, creating ambiences of any desired length.

› Quality: The tool uses pristine, high-fidelity audio, recorded by top-tier sound engineers, ensuring the final output is free from synthetic artifacts.

This new AI-driven feature enhances the efficiency of the creative process, allowing users to remain fully immersed in their work without the need to comb through endless audio files. By offering both speed and precision, the AI Ambience Generator represents a major leap forward in sound design, empowering creators to deliver exceptional audio experiences more efficiently than ever before.

Krotos’ new AI Ambience Generator sets a new standard in customizable sound design, unlocking greater potential for content creators across the media landscape.

The portable Pixellot Air NXT introduces enhanced video quality, sound, and AI-powered features, tailored for soccer, basketball, hockey, lacrosse, and football

Pixellot has unveiled its latest innovation: the Pixellot Air NXT, a next-generation portable camera designed to revolutionize multisport broadcasting. Approved by FIBA, this camera is packed with features aimed at delivering superior video coverage for a wide range of sports, including soccer, basketball, ice hockey, football, lacrosse, and field hockey.

The Pixellot Air NXT sets new standards for AI-powered sports streaming with notable improvements in video clarity, sound, and integration into Pixellot’s comprehensive coaching, analytics, and OTT ecosystem. The camera now features digital stereo microphones, smooth NVIDIA-powered video processing, and up to four times the storage capacity, ensuring every moment of action is captured with precision.

Designed for all levels of sports, from grassroots to elite

The Pixellot Air NXT is versatile enough to cater to sports families, local teams, and elite organizations. Its unique Tournament Mode simplifies the live production of consecutive games, making it ideal for streaming large events or back-to-back matches. With a storage capacity of up to 512GB and a battery life of five hours, the Air NXT offers robust performance for long recording sessions. Moreover, the battery charges 33% faster than previous models, ensuring minimal downtime between events.

The camera’s dual-stream functionality is a standout feature. Users can choose between a TV-style dynamic video stream, which automatically zooms in on the ball and key action, or a full-field panoramic view, offering a bird’s-eye perspective of the entire game. This flexibility appeals to both fans and coaches, giving them the option to watch the game from either perspective.

Pixellot has also emphasized ease of integration with other platforms. Its open architecture allows seamless connectivity with third-party services such as analytics tools, scouting platforms, advanced graphics solutions, and remote commentary systems. In addition, sports organizations can stream directly to YouTube, social media, third-party OTT platforms, or use Pixellot’s white-label streaming solution tailored for professional and amateur sports leagues.

At the heart of the Pixellot Air NXT is a newly developed AI architecture that sets a new benchmark in sports broadcasting. This AI system is powered by deep learning algorithms trained on over five million games from various sports over the past eight years, offering unparalleled precision in ball tracking and game-state recognition.

A key innovation in the Air NXT is its automated highlight generation, capable of capturing both game and individual player highlights. Whether it’s a last-minute equalizer or a game-winning three-pointer, users can easily share clips on social media with just a few clicks. The system also supports manual highlight creation, offering greater flexibility for broadcasters and teams.

The Pixellot Air NXT has been officially approved by FIBA through its Equipment & Venue Centre’s Approved Software program, validating its suitability for professional basketball competitions. This endorsement underscores Pixellot’s commitment to delivering top-tier sports video solutions trusted by teams and venues worldwide.

Westdeutscher Rundfunk (WDR), the German regional public broadcaster, has partnered with Riedel Communications to bolster its communications and signal distribution infrastructure for the broadcast of UEFA Euro 2024. This collaboration, facilitated by Broadcast Solutions and Riedel’s Managed Technology Division, enabled WDR to conduct efficient remote production for linear TV, radio, online, and social media platforms from its Broadcast Center Cologne (BCC).

Opting for a centralized approach from Cologne, WDR successfully minimized travel and operational costs while ensuring comprehensive coverage of UEFA Euro 2024. The broadcast feeds were consolidated at WDR’s control room in Mainz, decoded, and transmitted via fiber to Cologne. Additionally, signals from up to six unilateral cameras with embedded audio were transmitted from each stadium to Cologne, where they were integrated into production workflows across various platforms.

Felix Demandt, Project Manager at Riedel Communications, highlighted the strategic advantages of this setup: “ARD aimed to combine on-location presence with centralized operations. Previously, large OB vans were required at stadiums, but now, with centralized control from Cologne supported by a lean on-site team, production efficiency has significantly improved. This setup allowed for agile responses to dynamic production demands throughout Euro 2024.”

To accommodate the influx of signals at BCC, WDR expanded its infrastructure with 17 MediorNet MicroN UHD nodes. Ten nodes featured the Standard App to synchronize UEFA feeds with the house clock and flexibly distribute video and audio signals, while the remaining seven nodes used the MultiViewer App for scalable multiviewing capabilities. Control and configuration of this infrastructure were managed via the hi human interface from Broadcast Solutions.

Humphrey Hoch, Product Manager at Broadcast Solutions, emphasized the seamless integration: “WDR, familiar with MediorNet and hi human interface from their Ü3 OB van, appreciated the user-friendly, scalable, and reliable interaction between these technologies. With 29 hardware panels and software licenses, WDR had flexible access to the hi system across their operational hubs.”

In addition to enhancing the existing intercom system, WDR deployed a rented Riedel Artist Node with MADI cards to connect commentator stations in stadiums, alongside additional intercom panels to accommodate expanded operations during Euro 2024.

The integration of Euro 2024 into WDR’s ongoing operations required meticulous planning to ensure uninterrupted regular broadcasts. For instance, the Euro 2024 control room was isolated from the main WDR control room to focus exclusively on championship content. Close collaboration from planning to execution ensured that the system met WDR’s stringent requirements and operated seamlessly throughout the event.

“WDR delivered outstanding coverage of Euro 2024 through advanced and efficient remote production,” concluded Demandt. “We are proud to have contributed to this success story with our cutting-edge services and technologies.”

Slovak Telekom, a subsidiary of Deutsche Telekom, has partnered with MediaKind to overhaul its TV distribution headend with the deployment of MediaKind’s Aquila Broadcast solution, marking a major step towards a cloud-ready, next-generation infrastructure. This strategic transformation aims to enhance video quality and optimize operational efficiency, ensuring Slovak Telekom remains at the forefront of broadcast technology.

The multi-year agreement reinforces the strong partnership between Slovak Telekom and MediaKind, focusing on a seamless migration to modern technologies and the future-proofing of content delivery. The deployment will initially be on-premises, leveraging Slovak Telekom’s existing infrastructure, but with the flexibility to transition into hybrid cloud environments. MediaKind’s advanced AI-driven compression technology is central to the upgrade, offering significant improvements in both video quality and bandwidth efficiency.

Juraj Matejka, Technology Tribe Lead TV & Entertainment at Slovak Telekom and T-Mobile Czech Republic, highlighted the importance of this move: “Aquila Broadcast represents the next chapter in our longstanding partnership with MediaKind, ensuring that our viewers receive the highest quality content, delivered with the efficiency and reliability they expect. This migration not only preserves the investments we’ve made in our current headend but also positions us to meet the growing demands of our customers by embracing cloud-native solutions that are both scalable and adaptable.”

One of the key components of this deployment is MediaKind’s AI Compression Technology (ACT), which dynamically optimizes video quality in real-time by adjusting codecs based on the content being processed. This allows Slovak Telekom to deliver exceptional video experiences across various platforms while minimizing bandwidth usage—essential when supporting multiple codecs and resolutions, including MPEG-2, MPEG-4 AVC, and HEVC.

According to Boris Felts, Chief Product Officer at MediaKind, this project exemplifies how MediaKind’s technology can bridge the gap between traditional broadcast systems and modern cloud-enabled infrastructures:

“Our partnership with Slovak Telekom is a prime example of how MediaKind’s solutions can provide a smooth transition from legacy systems to modern, cloud-ready architectures. Aquila Broadcast enhances operational efficiency and offers a clear path for future upgrades, enabling Slovak Telekom to tailor the viewing experience to meet the demands of tomorrow’s viewers.”

This deployment showcases the scalability and adaptability of MediaKind’s solutions, allowing telecom operators like Slovak Telekom to navigate the rapidly evolving media landscape while maintaining service continuity and high-quality content delivery. With AI-powered compression and the ability to extend into hybrid cloud environments, Slovak Telekom is well-positioned to meet future broadcast challenges and expectations.

Broadcasting Center Europe (BCE), a key media services provider supporting over 400 clients worldwide, has completed a significant expansion of its Network Operation Centre (NOC) at its Luxembourg headquarters. The project involved extending the control desk and monitor wall setup, all sourced from Custom Consoles, which has been BCE’s partner since the construction of RTL City in 2017.

Rosa Lopez Sanchez, Project Engineer at BCE, explains the decision to continue working with Custom Consoles: “We initially chose Custom Consoles’ Module-R and EditOne desks, along with MediaWall and MediaPost monitor display supports, for their flexibility and unified visual style. Sourcing all the desks and display supports from a single supplier ensured consistency in equipment installation and wiring, and it made future maintenance easier.”

The recent upgrade took full advantage of the modular nature of the Module-R system, which was designed for easy expansion and reconfiguration. BCE added eight new 19-inch sections, equipped with 3U equipment pods and monitor support arms, to the existing control desk.

The MediaWall was also expanded, growing from three sections supporting 12 large display screens to seven sections accommodating a total of 28 displays. “The ease of extending both the Module-R desk and MediaWall was a major benefit. The new components fit seamlessly, and we’re extremely satisfied with the outcome,” added Lopez Sanchez.

The expanded control desk retains its wrap-around, inverted-U design, with increased width to accommodate three or more operators simultaneously. The new layout consists of a 14-bay central section, flanked by two-bay-width corner sections and three-bay-width wings on both sides. Each bay is outfitted with 3U-high angled equipment pods, with additional pods placed in the corner sections. Desk-mounted 24-inch monitors are positioned along the central, corner, and wing sections, providing an optimal working environment for operators.

Custom Consoles’ Module-R system is built for flexibility, allowing users to configure their desks in various sizes and layouts to suit specific operational needs. Unlike fixed furniture, Module-R desks can be expanded or reconfigured throughout their lifecycle. The design supports broadcast industry-standard 19-inch rack-mounting equipment, which can be added at the time of installation or later. Furthermore, individual desk components such as desktops or pods can be replaced or modified to meet evolving requirements.

The MediaWall system, a key feature of BCE’s expanded NOC, enables the construction of large flat-screen monitor displays with its customizable horizontal and vertical support elements. MediaWall can be either fully self-supporting or attached to the studio wall, and it allows screens to be positioned edge-to-edge for a continuous display. All cabling is concealed within the structure, ensuring a clean and efficient installation. MediaWall is available in a variety of configurations, tailored to the specific needs of the control room.

This latest expansion at BCE highlights the importance of adaptable and scalable solutions in broadcast environments. The ability to easily upgrade both the desk and monitor systems allows for future growth and ensures that BCE remains at the forefront of media operations, supporting its global client base with cutting-edge technology and efficient control room setups.

Zattoo, a provider of TV streaming and TV-as-a-Service solutions in Europe, has announced that Sky Switzerland, an OTT pay-TV and VOD provider in the Swiss market, is now an early adopter of Zattoo’s newly launched Stream API product suite. This partnership highlights Sky Switzerland’s role as a lighthouse customer, integrating Zattoo’s advanced streaming services into its IPTV platform, powering applications, frontends, and devices.

The Stream API suite, which debuted at the IBC 2024 show in Amsterdam, is designed to offer operators cutting-edge tools for next-generation streaming. Among its standout features are the Advertising API, Playback SDK, and Playback Telemetry, which are crafted to meet the complex needs of today’s streaming environment. These tools are built on Zattoo’s carrier-grade, fully managed head-end video platform, supported by one of Europe’s largest and most reliable content distribution networks. With over 20 years of technological innovation, Zattoo’s platform is the largest and fastest-growing unicast content delivery solution in Europe, ensuring superior streaming quality.

EVS has officially completed its acquisition of MOG Technologies, marking a significant step in expanding its solutions portfolio and broadening its market reach. This move enhances EVS’s ability to deliver innovative end-to-end solutions tailored to the media and broadcast industry, integrating MOG’s expertise in software-defined video solutions.

Since the initial agreement in August, both companies have made strides in merging their operations, with teams from EVS and MOG working together to ensure a smooth transition. The process has been highly collaborative, and MOG’s team is now fully integrated into EVS.

Serge Van Herck, CEO of EVS, emphasized the importance of this acquisition, stating, “The completion of this acquisition is a pivotal moment for us. We are thrilled to welcome the talented team at MOG Technologies and to integrate their state-of-the-art software-defined video solutions into our portfolio. Together, we are better positioned to deliver innovative, end-to-end solutions for the media and broadcast industry, ensuring that our customers continue to receive the best technology and support available.”

This integration is set to bolster EVS’s MediaCeption® solution by incorporating MOG’s ingest and transcoding technologies, enhancing the company’s live production ecosystem. The alignment of the two companies’ products was showcased at the IBC trade show in Amsterdam, where the integration was met with positive customer feedback. A major customer project stemming from this partnership is expected to be announced soon, underscoring the benefits of the acquisition.

Luis Miguel Sampaio, former CEO of MOG Technologies, will continue to play a key role in guiding the integration process. He expressed his optimism about the future, stating, “Since the signing of the agreement, we have seen tremendous collaboration between our teams, and the integration is progressing smoothly. The potential of our combined expertise is already becoming evident, and I am excited about the future we are building together.”

This partnership unlocks new avenues for innovation, particularly in the realm of software-defined ingest solutions and cloud-based content management. With MOG now part of EVS, the combined companies are poised to offer an even broader range of solutions designed to streamline workflows from ingest to distribution.

Strengthened innovation and customer support

The acquisition brings with it key strategic benefits, including the expansion of EVS’s R&D capabilities. With the addition of a new R&D center from MOG, EVS now operates six centers globally, accelerating the company’s innovation pipeline and attracting new talent. The collaboration will also enhance customer support by leveraging the expertise and combined portfolios of both companies, ensuring clients receive best-in-class service and solutions.

As MOG’s team officially joins EVS, both companies describe the acquisition as a pivotal moment that strengthens their commitment to technological excellence and customer success.

At the NAB Show the company will have two systems on display at:

> Booth #321 The Studio-B&H

> Booth #421 FUJINON Lenses

ARRI's Francois Gauthier and Peter Crithary will be on hand to answer any questions and guide you through the groundbreaking innovations that the ALEXA 35 Live can bring to your next live production, including the outstanding cinematic ARRI Look.

Blackmagic PYXIS 6K is a high-end digital film camera that produces precise skin tones and rich organic colors. It features a versatile design that lets you build the perfect camera rig for your production! You get a full frame 36 x 24mm 6K sensor with wide dynamic range and a built-in optical low pass filter that's been designed to perfectly match the sensor. Plus there are 3 models available, in EF, PL or L-Mount.

With multiple mounting points and accessory side plates, it’s easy to configure Blackmagic PYXIS into the camera you need it to be! PYXIS’ compact body is made from precision CNC machined aerospace aluminum, which means it is lightweight yet very strong. You can easily mount it on a range of camera rigs such as cranes, gimbals or drones! In addition to the multiple 1/4″ and 3/8″ thread mounts on the top and bottom of the body, Blackmagic PYXIS has a range of side plates that further extend your ability to mount accessories such as handles, microphones or even SSDs. All this means you can build the perfect camera for any production that’s both rugged and reliable.

Visitors can expect to see innovations in Virtual Production, Real-Time 3D Graphics, Newsroom Workflows, and Immersive Presentations that are poised to transform the industry.

Partnering with ATX Event Systems and collaborating with Ikan, ProCyc, and Zeiss, Brainstorm will showcase Suite 6.1, featuring the most recent updates to InfinitySet, Aston, and eStudio. These tools are designed to revolutionize broadcast workflows, virtual production, and XR/AR experiences, delivering high-quality virtual content and elevating the art of storytelling.

Key Features of Suite 6.1

Seamless Unreal Engine Integration:

Suite 6.1 boasts deep integration with Unreal Engine 5.4, allowing InfinitySet to control Unreal Engine (UE5) directly from its interface. This tight integration empowers users to manage and edit UE blueprints, objects, and properties, blending Unreal Engine’s rendering power with Brainstorm’s proprietary eStudio render engine. Users can easily decide which engine renders specific parts of the scene, and the enhanced Unreal Compositor simplifies the blending of real video feeds, such as keyed characters, within UE scenes. This innovation combines the flexibility of Unreal Engine with Brainstorm’s unique TrackFree technology, expanding creative possibilities.

High-Performance Virtual Production on One Workstation:

Powered by the new NVIDIA RTX 6000 Ada Generation GPU, Suite 6 enables high-performance virtual production from a single workstation, reinforcing Brainstorm’s commitment to sustainability. This efficiency reduces hardware demands, which helps clients minimize power consumption, lower costs, and reduce their carbon footprint.

Dual-GPU Support for InfinitySet:

InfinitySet now supports Dual-GPU workstations, splitting rendering tasks across two GPUs to improve performance and visual quality, particularly in XR environments. The inclusion of tools like XR Config and CalibMate simplifies the calibration process with mathematical precision, ensuring a faster setup and reducing potential human error.

Aston’s Next-Level Motion Graphics

For broadcasters, Aston delivers enhanced motion graphics capabilities with updates designed to streamline collaboration. The new Graphic Instances feature simplifies the workflow for design teams by allowing lead designers to create templates that can be modified by other team members without compromising consistency or design integrity. Aston’s StormLogic also introduces advanced transitions and interactions to amplify the impact of on-screen graphics, while new tools for touchscreen interactivity enable designers to create rich, dynamic experiences using multi-touch gestures.

Edison Ecosystem Expands with New Offerings

Brainstorm will also showcase exciting updates to its Edison Ecosystem, which caters to the corporate and educational sectors. New additions include:

> EdisonGO: A mobile capture solution utilizing an iPad for video and tracking, perfect for on-the-go content creation.

> Edison OnDemand: A browser-based tool for delivering immersive presentations, ideal for remote learning or corporate training.

> Edison OnCloud: A cloud-based, subscription-based solution that brings Edison’s powerful features to users wherever they are, without requiring significant hardware investment. This flexible platform is perfect for large institutions with diverse needs, offering tailored functionality based on user roles.

Industry Expertise

“As virtual production becomes increasingly prevalent in both broadcast and film, we’re thrilled to demonstrate how Brainstorm’s solutions can empower creators of all sizes to produce top-tier virtual content,” says Ruben Ruiz, Brainstorm’s EVP and Country Manager for the US and Canada.

“With our decades of experience, we’re not only pushing the boundaries in traditional media but also expanding our reach into the Corporate and Education markets.”

Join Us at NAB New York 2024

Don’t miss the opportunity to witness Brainstorm’s latest innovations firsthand. Visit booth #801 at NAB New York 2024 to discover how we continue to drive the future of real-time 3D graphics and virtual production.

Canon U.S.A. announces its participation in the 2024 NAB Conference, where it will showcase its latest developments and solutions, including a new next-gen broadcast lens. Throughout its expanded booth (#C3825), Canon will offer attendees new opportunities to explore the future of production and the latest Canon gear, including its new broadcast lens, the CJ27e×7.3B, Canon’s first 2/3” portable lens with a class-leading 27x optical zoom (among portable lenses for 2/3-inch 4K cameras with ENG-style design). The theme of Canon’s NAB booth this year is “Connect, Create, Collaborate.”

“Canon's exhibit at NAB 2024 showcases our cutting-edge technology and forward-thinking innovations,” said Brian Mahar, senior vice president and general manager, Imaging Technologies & Communications Group.

“We welcome any and all visitors to see all that Canon has to offer in the industry.”

The booth will feature Canon’s line of outstanding RF cinema prime lenses and flex zoom lenses, as well as the versatile RF24-105mm F2.8 L IS USM Z lens for hybrid shooters. Canon will also showcase the improved PTZ tracking system, the EOS VR System featuring the Canon RF 5.2mm f/2.8 L Dual Fisheye lens, and the enhanced functionalities of the EOS C500 Mark II, which now supports three new Cinema RAW Light formats through a new firmware update. These displays demonstrate Canon's continuous innovation across broadcast, house of worship, cinema, VR, and social capture solutions.

An LED Virtual Production set will be at the front of the booth, and toward the back Canon will have a display of its low-light SPAD (Single Photon Avalanche Diode) sensor technology. Education is also fundamental to Canon's mission, evident in interactive engagements at every counter and all product demos. Professionals across all levels can explore Canon's complete product lineup and gain insights into Canon’s latest advancements in imaging technology through the lens displays and PTZ control room area. Canon will also showcase how its volumetric video technology, which is currently being used to capture live sports, can also be used in a virtual production studio. This technology can help unlock fresh realms of creative and technical exploration for professional production companies, advertising agencies, filmmakers, and beyond.

Clear-Com will spotlight the latest version of its EHX configuration software, EHX v14, which now includes fully integrated SIP support, a unique feature in the market. With this capability, Clear-Com is the only intercom provider offering direct SIP integration into the system, making it easier for users to connect with VoIP systems and external communication networks without the need for additional interfaces or third-party hardware. EHX v14 enhances system scalability and flexibility, allowing seamless communication across traditional and IP-based infrastructures. The latest update ensures that production teams benefit from the most advanced communication capabilities available today, making it ideal for broadcasters, live event producers, and other complex operations.

Visitors to the Clear-Com booths will have the opportunity to connect with product experts and explore how Clear-Com’s solutions can meet specific audio and broadcast challenges. Clear-Com emphasises a professional and engaging booth environment, offering attendees meaningful insights into the latest communication technologies.

The platform is designed to be very robust while still maintaining the compact form factor of the previous OX-1 platform. Combined with DTV Innovations’ current modular software, it will serve as a base for multiple new products that DTV Innovations will launch soon.

The OX Platform has ample built-in capability to support advanced IP workflows, the latest processor with an internal GPU for video encoding/decoding, and a wide range of hardware options to support baseband video, compressed-domain video, and multi-standard terrestrial and satellite modulation/demodulation.

Key uses of the platform include, but are not limited to, secure video streaming over the internet, baseband video to IP encoding/decoding, PSIP and EPG generator/rebrander, terrestrial edge transcoding, secure video backhauls for confidence monitoring, ATSC 3.0 receiver/translator/capture, and ATSC 3.0 lab signal generator. It can also serve as an integrated satellite receiver-decoder complete with support for multiple IP streaming and terrestrial RF inputs. The OX Platform’s adaptability allows for the development of affordable and easily implementable solutions tailored to specific broadcast needs, making it an ideal choice for broadcasters seeking to enhance and streamline their operations.

“We’ve been working on this platform for a while. We would like to have a compact yet versatile platform which can be used in various applications,” said Benitius Handjojo, Chief Executive Officer of DTV Innovations. “The new OX Platform is designed to be very flexible and can be used for various applications. Not only it is very compact, I believe it will come at a very attractive price point. Combined with other offerings from DTV Innovations we can provide the customer with a complete broadcast workflow.”

Attendees will have a chance to explore the latest advancements to the Cirkus scheduling and project management platform (booth 647), which is designed to enhance collaboration, optimize resource scheduling and automate project management. Key updates include streamlined workflows that prevent missed communications and ensure deadlines are met; automation tools that free up teams to focus on more meaningful work by handling repetitive tasks; and a new public requests feature that simplifies project and booking requests, even for users without a Cirkus login.

“Cirkus is evolving to meet the diverse needs of media professionals,” explains Stephen Elliott, CEO of Farmerswife. “We’re excited to unveil these new feature updates to our valued customers and industry colleagues at NAB New York. These updates reflect our ongoing commitment to improving collaboration and workflow efficiency in the media sector.”

In addition to the Cirkus updates, Farmerswife also plans to showcase the newly-released version of its software, Farmerswife 7.1. Released just last month, this version introduces over 100 new features, including advanced equipment tracking, scheduling enhancements and improved budgeting capabilities. The company will also reveal its AI-enhanced product plan, anticipating the growing role of AI in the media industry. Additionally, Farmerswife is developing new features to help clients monitor carbon emissions, aligning with sustainability goals.

“Continuing our legacy of success from previous years, where we welcomed esteemed clients like PBS Fort Wayne, Boutwell Studios and more, we remain committed to delivering cutting-edge solutions that streamline workflows and drive our customers towards success,” notes Jodi Clifford, managing director, USA, at Farmerswife. “With the introduction of Farmerswife 7.1 and the latest updates to Cirkus, we are confident in our ability to provide unparalleled efficiency and productivity for media operations. In line with our continued efforts, we will be unveiling our newest AI enhancement product plans.”

At this year’s NAB NY (Booth 1013), Lawo will unveil HOME Downstream Keyer, a sophisticated and powerful HOME App for video. Its feature set is closer to what a vision mixer provides than to what operators expect from a downstream keying function of the past.

“HOME Downstream Keyer allows operators to simultaneously and independently transition up to three key layers over an A/B background mix,” comments John Carter, Lawo’s Senior Product Manager, Media Infrastructure. “As Lawo’s first mixing processing app, it comes with eight ST2110-20 receivers and two ST2110-20 transmitters. Each of the three Keyers can perform Luma, Linear or Self keying to provide the desired processing for high-end broadcast graphics designed with transparency and drop shadows.”

The HOME Downstream Keyer app provides comprehensive keying and mixing capabilities to meet production needs and enable additional channel branding within a state-of-the-art, agile infrastructure, based on triggers issued via the VSM control system where desired.
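As a rough illustration of what layering three linear keys over an A/B background mix involves, the per-pixel arithmetic can be sketched as follows. This is a generic compositing sketch, not Lawo's implementation: the function names, the normalized 0.0-1.0 signal range, and the per-layer `level` fader are all assumptions made for the example.

```python
# Hypothetical sketch of an A/B mix with stacked linear key layers.
# All names and the 0.0-1.0 value range are assumptions for illustration.

def mix(a, b, position):
    """Crossfade between background sources A and B (position 0.0 = A, 1.0 = B)."""
    return a * (1.0 - position) + b * position


def linear_key(background, fill, key):
    """Blend fill over background; key is the transparency signal (0.0-1.0)."""
    return background * (1.0 - key) + fill * key


def composite(a, b, mix_position, layers):
    """Apply each (fill, key, level) layer in order over the A/B mix.

    `level` models an independent transition fader for that layer.
    """
    out = mix(a, b, mix_position)
    for fill, key, level in layers:
        out = linear_key(out, fill, key * level)
    return out


# One pixel value: halfway through the A/B mix, one graphic layer at 50% key.
pixel = composite(0.2, 0.8, 0.5, [(1.0, 0.5, 1.0)])
```

Because each layer carries its own `level`, fading one layer in or out leaves the other layers and the background mix untouched, which is the sense in which the transitions are independent.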

As part of the HOME Apps suite, HOME Downstream Keyer can be deployed in both SDR and HDR workflows. It supports SMPTE ST2110 (incl. JPEG XS), NDI, SRT, and Dante AV* transport as required. Like all HOME Apps, and a growing number of other Lawo solutions, HOME Downstream Keyer can either be licensed perpetually or used on an ad-hoc basis through Lawo Flex Subscription credits.

“With HOME Downstream Keyer, Lawo’s HOME Apps have reached a new level of sophistication,” adds Jeremy Courtney, Senior Director, CTO Office. “Architecting the container-based HOME Apps platform from scratch for bare-metal compute has allowed Lawo’s software developers to avoid the pitfalls and limitations of lifting and shifting existing technology to a different platform. The advantages of this clean-slate microservice approach are now becoming apparent.”

(*) Available in a future release of HOME Apps, licensable.

Marshall Electronics will highlight a range of its cutting-edge cameras at NAB New York 2024 (Booth 1352). Marshall offers a wide selection of PTZ and POV cameras, including three new PTZs, the CV612 camera, CV620 camera and CV625 camera.

The CV612 PTZ camera, available in black (CV612-TBI) and white models (CV612-TWI), features the ability to automatically track, follow and frame presenters using AI facial learning for accurate and smooth self-adjusting maneuvers. With advanced AI tracking, the PTZ camera “learns” who is the prime subject and won’t “lose” the presenter when other persons or objects enter the shot. Equipped with a 12X optical and a 15X digital zoom, the CV612 offers a 4.1mm-49.2mm focal length range (6.6-70.3 degree field of view). It is built around a professional-grade 2-Megapixel 1/2.8-inch, high-quality HD CMOS sensor, which provides format resolutions from 1920x1080 and 1280x720 down to 640x480. With a maximum range of 18 m (almost 60 feet), it is an ideal solution for smaller venues, interviews, web production, live presentations, classrooms and HOW, to name a few.

Marshall will also showcase its new CV620 20x camera, which is the perfect fit for a range of midsized rooms, including corporate communications and teleconferencing, as well as traditional broadcast applications. With 3G-SDI, HDMI, USB 3.0 and IP outputs and 20x zoom, the new camera has a 57-degree field of view and supports up to 1080p/60. The CV620 features a high-quality Sony sensor and is also available in black (CV620-B12) and white models (CV620-W12) to fit varying aesthetics. It also features Mic and Line-in audio options as well as a range of IP protocols, including RTSP, RTMP, RTMPS, SRT and MPEG-TS.

The CV625 camera will also be on display at NAB NY and features a 25x zoom that is ideal for large venue auto tracking, broadcast events, show stages, educational lecture halls and large format houses of worship. Available in black (CV625-TB) and white models (CV625-TW), the camera features a high performance 8-Megapixel 1/1.8-inch sensor to capture crisp high-quality UHD video. The camera delivers up to 3840 x 2160 resolution at 60fps with an 83-degree horizontal angle-of-view. The CV625 provides versatility for various applications with its range of outputs including HDMI, 3G-SDI, Ethernet, RTSP streaming and USB 3.0.

Mediaproxy is to highlight its recently acquired A3SA certification on booth 1243. The company will demonstrate its full range of compliance monitoring and multiviewer systems, which now provide decryption for ATSC 3.0-based broadcasts being used in the US for NextGen TV.

Mediaproxy announced during August that it is now certified by the ATSC (Advanced Television Systems Committee) 3.0 Security Authority (A3SA) to decrypt ATSC 3.0 streams as part of the LogServer and Monwall product ranges. This gives broadcasters a comprehensive set of software-based tools to monitor and analyze ATSC 3.0 streams without the need for bespoke hardware devices.

The introduction of A3SA security protocols into the LogServer logging and monitoring engine provides broadcasters with a cost-effective option allowing them to monitor both encrypted to-air and off-air signals. For to-air and hand-off applications, an on-premises LogServer system is able to simply take the encrypted STLTP (Studio to Transmitter Link Transport Protocol) output of the packager directly from the stream. This guarantees confidence in what is sent to the transmitter straight from the local IP network.

In off-air monitoring situations it is possible to use inexpensive and familiar integrated receiver/decoders (IRDs) that do not provide decryption but do have outputs of the encrypted IP streams through DASH/ROUTE (Dynamic Adaptive Streaming over HTTP/Real-time Object delivery over Unidirectional Transport) or the recently approved ALP (A/330, Link-Layer Protocol). This enables ATSC 3.0 compliance on IRDs for streaming platforms such as HDHomeRun, with LogServer handling the DRM aspects for all channel sources.

The new ATSC 3.0 security feature is also available on Mediaproxy's ever-expanding Monwall multiviewer, which accommodates low-latency monitoring of both encrypted outgoing and return signals. Alongside the features on Monwall and LogServer, Mediaproxy has developed an extended toolset for advanced IP packet and table analysis of live broadcast streams or PCAP (packet) captures, which can be accessed via easy-to-use user interfaces.

"We are excited to be debuting LogServer and Monwall with the recently acquired A3SA certification at NAB Show New York," comments Mediaproxy chief executive Erik Otto. "Recent figures show that NextGen TV is now reaching 75 percent of US television households so broadcasters need to be aware of ways they can efficiently and cost-effectively monitor and decrypt ATSC 3.0 streams. This is an important advance for Mediaproxy and NAB Show New York is the ideal place to showcase it."

The livestreaming market is projected to grow 23 percent from 2024 to 2030. Production teams need flexible and scalable AV tools to streamline operations in fast-paced and challenging environments to keep up with this growth. At NAB New York (booth #611), Panasonic Connect North America will showcase its latest AV technology to create powerful workflows for the broadcast and live event markets. This includes its new auto framing capabilities, available as a built-in Auto Framing Feature for the AW-UE160 PTZ Camera and an Advanced Auto Framing plug-in for Panasonic’s Media Production Suite. These new capabilities will help operators create on-site efficiencies and streamline the delivery of high-quality content.

Automating the production workflow

Panasonic’s new auto framing feature incorporates auto tracking, image recognition and auto framing technologies to deliver precise, user-defined camera framing for high quality, natural content. Framing presets can be combined, including multi-subject group shots, while advanced human body detection allows consistent subject headroom.

The built-in Auto Framing Feature for Panasonic’s AW-UE160 PTZ camera will be available from CY2025 Q1 via firmware updates, and the Advanced Auto Framing plug-in for Panasonic's Media Production Suite software platform will be available from CY2025 Q2. The Advanced Auto Framing plug-in will enable auto framing for various PTZs across the Panasonic lineup and will support multi-camera setup for auto framing to enhance subject-detection accuracy and operability, while facial recognition technology enables optimal framing for specified individuals.

Capturing cinema-quality, versatile points of view

Panasonic’s new AW-UB50 and AW-UB10 box-style 4K multi-purpose cameras bring cinema-quality shooting to a compact, versatile form factor ideal for remote scenarios across live events, corporate, and higher education. The AW-UB50 and AW-UB10, available in early 2025, are equipped with large-format sensors and deliver exceptional image quality with expressive detail. The AW-UB50 uses a full-size MOS sensor with 24.2M effective pixels while the AW-UB10 features a 4/3 type 10.3M effective pixel MOS sensor to give production teams the ability to document multiple subjects in a single frame. With Panasonic’s remote system, these cameras can remotely facilitate video production workflows.

Similarly, the recently launched AW-UE30 PTZ camera boasts a compact design and quiet operation to ensure that in-person experiences across corporate and higher education environments go uninterrupted by technology. Its enhanced video streaming with 4K/30p images makes remote participants feel as if they are in-person, while its horizontal wide-angle coverage of up to 74.1° and a maximum 20x optical zoom allow teams to capture more points of view, even in challenging room layouts.

Navigating complex environments with easy-to-use solutions

LED lights and display walls bring shifting lighting conditions and color accuracy challenges to live event venues. The AK-UCX100 4K Studio Camera addresses these challenges with its new imager featuring color science to account for the specific colors generated by LED lighting and display walls, as well as increased resolution and dynamic range that easily adapts to different environments. The new imager is less prone to moiré and contains an optical filter wheel setting to assist in managing these artifacts. Venues can create the same visual experiences for viewers regardless of location. Creators can operate the AK-UCX100 with other Panasonic studio cameras utilizing the same accessories, including the UCU700 Camera Control Unit, or they can operate the AK-UCX100 as a standalone device.

The new KAIROS Emulator lets creators pre-program a show without requiring a physical hardware connection, ensuring production teams always have access to adjust shows. Creators can also use it as a training tool to familiarize themselves with the KAIROS feature set. The new smart routing feature maximizes input capacity by switching sources between active and idle states, enabling more inputs than the KAIROS Core can handle alone. Additionally, built-in cinematic effects such as film look and glow enhance a production's creativity, eliminating the need for external processing.

QuickLink is launching the new QuickLink StudioPro™ Controller control panel at NAB New York (Booth 1145). Also, at NAB New York, QuickLink will be demonstrating StudioEdge™, QuickLink’s one stop video conferencing solution, fresh off its triumphant success at IBC 2024.

Complementing the award-winning QuickLink StudioPro multicamera production suite, a Best of Show winner at IBC 2024, the StudioPro Controller is the ultimate control panel for small, medium, and large productions, boasting 134 fully customizable LCD buttons for scene switching, project configuration, macros, audio control, and more.

“The StudioPro Controller revolutionizes video production switching by placing LCD screens underneath all 134 tactile push buttons on the device,” said Richard Rees, CEO of QuickLink. “These keys can display virtually anything users choose including text, images, animation, and video. Users can display motion graphics, video sources – even remote guests whose images appear beneath the button – making it easier to find and switch to the exact item needed without having to memorize placement.”

StudioPro Controller can natively control over 600 devices, including DMX lights, PTZ cameras, and many other studio elements, offering fingertip control of every aspect of a production. A single StudioPro Controller unit can control a production program and four additional mix outputs with complete flexibility and endless possibilities for productions in conjunction with StudioPro.

Rees continued, “StudioPro Controller is built from the ground up with forward-thinking simplicity in mind aimed at eliminating the complexity and antiquation of legacy systems. For all of its incredibly powerful and advanced features, StudioPro Controller is easy for anyone to use, including absolute beginners to multicamera production while still providing creative power on par with far more expensive and complex systems.”

Built into StudioPro, or offered as a standalone solution, StudioEdge is a simple, elegant, and easy to use solution for introducing high-quality remote guests from virtually anywhere. StudioEdge incorporates every major video conferencing platform into production starting with 4K broadcast-quality QuickLink StudioCall and also including Microsoft Teams, Zoom, Skype, and more.

TAG Video Systems is presenting its Realtime Media Performance platform at NAB SHOW New York 2024 enhanced with numerous award-winning technologies. The platform will be demonstrated with capabilities designed to increase user confidence, maximize data utilization, simplify troubleshooting, and improve efficiency. These highlights and more can be seen at TAG’s Booth #659.

NAB SHOW New York marks the introduction of TAG’s QC Elements (Quality Control Elements) to the North American broadcast community. Designed to enrich visualization and simplify troubleshooting, QC Elements enables a vast array of elements to be arranged on a multiviewer tile for a comprehensive view of critical technical data, bringing engineers a built-in quality control station within the display. Engineers can tailor each tile with details on codecs, frame rates, resolutions, closed captions, audio channels, transport streams, and more. This powerful tool expedites the identification and analysis of technical issues, helping users achieve a high level of IP workflow stability, ensuring seamless quality of service at scale and a flawless viewer experience.

TAG will also present its award-winning Operator Console, an intuitive touch panel console. Operator Console provides a live, interactive view of the multiviewer output on any touch-controlled device bringing a simplified, focused, and intuitive approach to manage TAG multiviewers at any scale. Operators can effortlessly switch between layouts, modify tile content, and isolate audio sources for verification. Additionally, they gain instant access to pre-defined and customizable configurations, simplifying complex workflows, even in the most demanding operational environments, allowing operators to focus on creativity and workflow integrity.

Language Detection, another multi-award-winning feature, is a revolutionary innovation that transforms how operators ensure quality and compliance across large scale operations with multiple closed captions and language subtitles. This innovative technology not only identifies the subtitles’ language but also calculates and analyzes quality based on language-specific dictionaries. Language Detection’s cutting-edge automation eliminates the manual labor traditionally associated with language identification, minimizing the risk of human error, and freeing up operators to focus on more strategic tasks.

TAG will show its Realtime Media Performance platform integrated with the Structural Similarity Index Measure (SSIM) and advanced Quality of Service (QoS) tools to provide a comprehensive, real-time video quality assessment. This award-winning combination ensures that objective quality assessments and technical performance metrics are continuously monitored, guaranteeing an exceptional viewer experience. By leveraging TAG’s Content Matching technology, the SSIM and QoS features work together to detect, alarm, and report quality issues in real-time, ensuring seamless video delivery.
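For readers unfamiliar with SSIM, the sketch below computes the standard single-window form of the index for two short runs of luma samples. It illustrates the published formula only and makes no claim about how TAG computes or applies the metric. The function name, the 8-bit dynamic range, and the sample values are assumptions, and practical implementations average the index over many local windows rather than using one global window.

```python
# Hypothetical sketch: single-window SSIM on two equal-length sample lists.
# Standard formula with the usual constants K1 = 0.01 and K2 = 0.03.

def global_ssim(x, y, dynamic_range=255.0):
    """Return the SSIM index for reference samples x and test samples y."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and the same length")

    n = len(x)
    c1 = (0.01 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizes the contrast/structure term

    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n

    numerator = (2 * mean_x * mean_y + c1) * (2 * cov_xy + c2)
    denominator = (mean_x ** 2 + mean_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


reference = [10, 20, 30, 40]   # made-up luma samples from the source feed
degraded = [12, 18, 33, 37]    # the same samples after a lossy hop

identical_score = global_ssim(reference, reference)
degraded_score = global_ssim(reference, degraded)
```

An index of 1.0 means the two inputs are identical, and the score falls as the test signal diverges from the reference, which is why a monitoring system can alarm when it drops below a chosen threshold.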

TAG’s new advanced user management (RBAC – Role Based Access Control) functionality defines and assigns roles within the organization for strengthened security and reduced human error. Knowing that access is limited by specific outputs, sources, or networks brings an additional measure of confidence to those managing multiple networks under the same TAG Media Control System (MCS).

New York broadcasters will get a preview of Group Lens, a functionality designed to simplify the monitoring and troubleshooting of massive media networks. Group Lens is an interactive visual tool that provides an at-a-glance overview of the entire network's status on a single screen. The tile-based display uses intuitive color coding to instantly highlight potential issues, enabling quick root cause analysis and efficient troubleshooting so that minor glitches are resolved before they escalate into major disruptions.

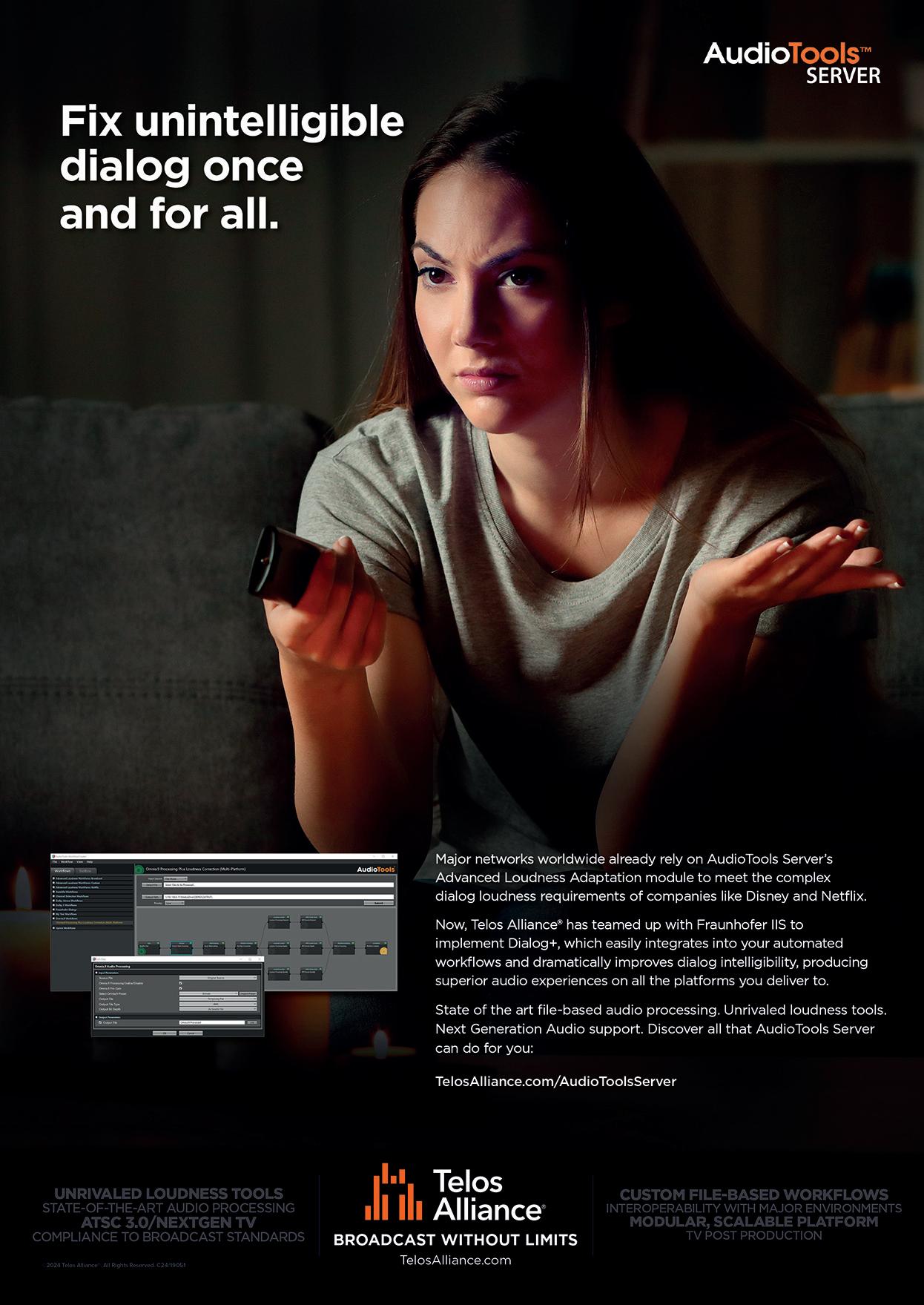

“In today’s media environment, settling for ‘good enough’ audio processing is definitely not good enough to retain your audience,” says Telos Alliance M&E Strategy Advisor Costa Nikols. “The audio requirement for today’s TV broadcaster isn’t just meeting standards, it’s about surpassing viewers’ expectations to deliver exciting, compelling audio with every program.”

“Telos Alliance is focused on helping broadcasters deliver the most engaging content possible. Today’s viewers are demanding better loudness control, better language options, and immersive audio,” Nikols notes. “We’re highlighting our new Media Solutions Initiative at NAB New York to let broadcasters know that the tools they need to achieve these goals are available from a single, trusted source.”

Visitors to the Telos Alliance booth at NAB New York will be able to meet Costa Nikols, Sharon Quigley, and other key members of the Media Solutions team, and see how automated file-based Minnetonka Audio® AudioTools® Server software seamlessly integrates into a larger Next Generation Audio (NGA) processing workflow.

Booth visitors will also experience the seamless accessibility of the new Telos Infinity® VIP Virtual Commentator Panel (VCP), which replicates physical commentator panels in the virtual domain, making full-featured commentary and intercom features available using any computer, tablet, or smartphone.

In addition, Telos Alliance will also be introducing the new family of Linear Acoustic® AERO-series DTV audio processors, the new Axia® StudioCore console engine, StudioEdge high-density I/O endpoint device, Telos VX® Duo broadcast VoIP telephone system, Telos® Zephyr Connect multi-codec gateway software, Omnia® Forza FM audio processing software, and Axia Quasar AoIP mixing consoles.

› Gran Canaria Studios supports the island’s initiative to become one of Europe’s audiovisual production hotspots

› The project comprised lead design, structural engineering, and LED wall installation, all designed and delivered by ARRI Solutions, Video Cine Import (VCI), and ROE Visual

› Flexible facility design maximizes stage utilization and efficiency

The initial phase of Gran Canaria Studios, one of Europe’s largest virtual production stages to date, has been completed, offering a cutting-edge environment for international content producers looking to leverage Gran Canaria’s film location incentives.

Located on the 1,200 sqm stage 1 of the Gran Canaria Platos studio lot, the virtual production infrastructure has been designed and delivered by ARRI Solutions, integrator Video Cine Import (VCI), and ROE Visual, a manufacturer of premium LED products.

The Economic Promotion Agency of Gran Canaria (SPEGC) commissioned the studio to accommodate both independent and high-end national and international productions. It will strengthen the island’s reputation as a thriving film production hotspot.

“The film industry is one of the region’s economic pillars, we are very committed to the development of this industry on the island and Gran Canaria is spearheading efforts to become a leading hub for TV and film,” states Cosme García Falcón, Managing Director of the SPEGC. “Creating this virtual stage offers new services to content companies from across the world, with the best state-of-the-art studio and premium technical and operational services created by companies of recognized prestige such as ARRI, VCI, and ROE Visual. Gran Canaria Studios will help production companies develop their stories and position the island of Gran Canaria, the region, and Spain as one of the best prepared and most profitable audiovisual production hubs in the market.”

In close cooperation with ROE Visual and VCI’s specialist teams, ARRI Solutions’ experts delivered comprehensive consultancy, system design, supervision, and commissioning for the project. The team prescribed a unique fixed curved space with highly flexible, adjustable elements to accommodate a wide range of productions, maximizing stage utilization.

The volume comprises a fixed 40 m x 8 m main horseshoe-shaped wall and two adjustable ceilings (90 sqm and 45 sqm) suspended from variable speed motors, allowing for height and tilt changes. To optimize the studio space, the whole volume is suspended from an innovative internal steel structure designed to hold the volume and prepared for holding lighting.

Further configurations can be delivered through two supplementary 3 m x 5 m mobile LED walls or “side wings,” providing continuity with the main wall or as part of the physical scene set.

The whole volume uses ROE Visual Black Pearl BP2V2 LED panels, known for their exceptional image quality and reliability. These panels offer high-resolution visuals and provide filmmakers with high-contrast, detailed in-camera visuals and the flexibility and precision needed for cutting-edge virtual production and XR applications.

“We are very pleased that ARRI Solutions had the chance to lead the technical realization of this flagship project. We would like to thank every partner involved for the excellent collaboration and the SPEGC for the extremely positive feedback on the result,” emphasizes Kevin Schwutke, Senior Vice President Business Unit Solutions ARRI Group. “Gran Canaria already has a rich heritage of attracting major features, commercials, and independent productions. With this new studio, production companies now have access to next-generation technologies within a flexible, efficient facility. We are sure that the Gran Canaria Studios will become a key asset in enhancing the production infrastructure in the region.”

“We are thrilled to have contributed to the development of the Gran Canaria Studios,” says Olaf Sperwer, Business Development Manager for ROE Visual. “This project underscores our commitment to delivering top-tier LED solutions that meet the highest industry standards. Collaboration with key partners has been instrumental. Leading the integration, ARRI Solutions delivered seamless and efficient implementation. ROE Visual is proud to have completed this advanced virtual production studio, enabling European and broader international film- and TV-producing communities with the best virtual production technology available. Raising the bar in virtual production will help put locations like the Gran Canaria Studios on the map for premium international film productions. We thank the SPEGC and our partners VCI and ARRI for the successful teamwork in this unique project.”

“We are excited to announce the launch of the largest, most advanced virtual production studio in Spain, which is also among Europe’s most significant, cutting-edge virtual production studios. This landmark project, coordinated by VCI, was made possible through our collaboration with ARRI’s cutting-edge engineering and ROE’s state-of-the-art LED wall technology. We’re pleased to have worked with SPEGC, whose commitment and foresight were essential in making the project a reality. This studio sets a new benchmark for virtual production, advancing the development of a comprehensive digital ecosystem, including skilled professionals in this emerging field,” comments Fernando Baselga, CEO of Video Cine Import.

https://youtu.be/Ougpl7_a0Ag?si=Gs7ifFNgeLytYNJh

Sveriges Television (SVT), Sweden’s public broadcaster founded in 1956, has always pushed the boundaries of innovation without abandoning its public service vocation. At the forefront of this technological transformation is Adde Granberg, SVT’s Chief Technology Officer, who brings more than three decades of broadcast technology experience to his role.

In a recent interview with TM Broadcast International, Granberg shared insights into SVT’s ongoing journey towards a more efficient, flexible and viewer-centric broadcasting model. From implementing cloud-based production solutions to developing in-house content delivery networks, SVT is embracing cutting-edge technologies to improve its operations and viewer experience.

Granberg’s vision for SVT’s future is clear: moving from hardware-centric to software-based solutions, prioritizing end-user quality, and leveraging artificial intelligence to democratize content creation.

This conversation provides insight into the challenges and opportunities facing public broadcasters in an era of rapid technological change, and how SVT is positioning itself to remain relevant and influential in the evolving media landscape.

You have wide experience in broadcast technology, including outside broadcast vans. Can you tell us a little about the changes you’ve lived through during your professional life?

It’s not that simple, I guess. My journey in broadcast technology has been quite extensive. It began in 1992 when we launched YouthTunnel in Sweden, which was intended to be the Swedish equivalent of MTV. I started as a sound engineer and later transitioned to video editing. Over the years, I’ve been involved with various production companies, including commercial ventures, event organizations, and OB truck operations where I was instrumental in building outside broadcast vehicles.

Throughout this period, spanning from 1992 to the present, the industry has largely maintained its traditional methods of operation. However, we’re now at a critical juncture. The advent of smartphones in the early 2000s initially didn’t significantly impact our industry, but that’s rapidly changing.

After more than three decades in this field, I believe we’re on the cusp of a monumental shift. The democratization of content creation, fueled by AI and smartphone technology, has revolutionized what’s possible. Tasks that were once the exclusive domain of professionals can now be accomplished by anyone with a smartphone. These devices have become all-in-one production studios, capable of filming, editing, graphics creation, translation, publishing, and even broadcasting from home.

This technological leap represents both a challenge and an opportunity for our industry. As professionals, we must acknowledge and adapt to this new reality. From my perspective, the broadcast production industry needs to evolve rapidly to remain relevant. We must embrace these changes and find ways to leverage new technologies to enhance our offerings. The alternative is to risk becoming obsolete in an increasingly dynamic and democratized media landscape.

SVT recently partnered with Agile Content to develop Agile Live. How do you see this cloud-based production solution changing SVT’s workflow in the coming years?

It’s a journey we have just started. Our partnership with Agile Content to implement Agile Live marks the beginning of a significant transformation in our production workflow. The primary focus of this cloud-based solution is to enhance the quality of experience for our end users. We believe that our viewers should be the ones setting the standard for production quality, not our internal production teams.

This shift represents a fundamental change in our approach. We’re moving away from a hardware-centric industry towards a software-based one. This transition allows us, for the first time, to truly prioritize the end user’s needs and expectations.

The beauty of this software-based approach is that it eliminates the need for specialized hardware to process video data. Instead, we can now use software running on standard hardware to achieve the same results, if not better. This not only provides us with more flexibility but also opens up new possibilities for innovation and cost-efficiency.

In addition, this cloud-based solution offers substantial benefits in terms of environmental impact and operational costs. Our initial deployment for the Royal Rally of Scandinavia demonstrated significant reductions in both production costs and carbon footprint.

I really anticipate that this technology will revolutionize our entire production process. It will enable us to be more agile, responsive to viewer preferences, and environmentally responsible. We’re at the dawn of a new era in media production, and SVT is committed to leading this change, always keeping our audience at the forefront of our innovations.

The Royal Rally of Scandinavia broadcast using Agile Live showed significant cost and environmental savings. Can you elaborate on the specific challenges and successes you encountered during this implementation?

The implementation of Agile Live for the Royal Rally of Scandinavia broadcast was a significant milestone in our ongoing journey towards more efficient and sustainable production methods. We’ve been pioneering remote and home production since 2012, and this latest project represents a culmination of our efforts and learnings.

The success we achieved with Agile Live builds upon our long-standing commitment to remote production. This approach has allowed us to significantly reduce our environmental impact, optimize our investments, and streamline our workflows. As a public broadcaster, these cost savings translate directly into our ability to produce more content for our audience, which is crucial to our mission.

One of the key challenges we faced was adapting our existing infrastructure and workflows to this new cloud-based system. However, the flexibility it offers is remarkable. For instance, during the pandemic, we realized the full potential of this technology. If a host or technician fell ill, we could seamlessly bring in replacements from different locations without any travel requirements.

The Royal Rally broadcast demonstrated that we could take this concept even further. With a stable network connection, we can now potentially conduct productions from anywhere, even from our homes. This level of flexibility and redundancy is a game-changer for our industry.

Moreover, this shift towards software-based production aligns perfectly with the future we envision for broadcasting. It’s not just about cost savings or environmental benefits - though these are significant. It’s about reimagining how we create and deliver content in a world where technology is rapidly evolving and audience expectations are constantly changing.

The success of this implementation has reinforced our belief that cloud-based, software-driven production is the way forward. It’s allowing us to be more agile, more responsive to our audience’s needs, and more sustainable in our operations. As we continue to refine and expand this approach, we’re excited about the possibilities it opens up for SVT and the broader broadcasting industry.

How does SVT plan to balance the adoption of innovative technologies like Agile Live with maintaining traditional broadcast infrastructure?

Balancing the adoption of innovative technologies like Agile Live with our traditional broadcast infrastructure is a complex but necessary process. The good news is that the new infrastructure is generally more cost-effective, which allows us to gradually build it up and transition over time.

However, this transition presents significant challenges. It requires a complete shift in mindset, new support structures, and an entirely different business model. Moving from hardware to software-based solutions raises new questions about system stability, upgrade processes, and overall management.

This transition is particularly tricky because we need to maintain our 24/7, 365-day operations while simultaneously undertaking this transformative journey. Based on our current projections, I estimate it will take about three to five years to complete this transformation. This timeline accounts for not only the technical implementation but also the crucial aspects of staff education and adaptation to the new systems.

A significant part of this process involves training our crew and allowing them time to become comfortable with the new technologies. We also need to ensure the new systems are stable and reliable before fully integrating them into our critical operations.

I don’t see any alternative to this path forward. Embracing these changes is essential for SVT to remain competitive and efficient in the rapidly evolving media landscape. If I were to resist this transition, I’d be acting like an outdated, resistant-to-change CTO, which is certainly not my approach. Our industry is at a pivotal point, and it’s our responsibility to lead the way in adopting these innovative technologies while ensuring a smooth transition for our team and our viewers.

With the development of the Elastic Frame Protocol (EFP), how do you envision this open-source technology impacting the broader broadcast industry?

The development of the Elastic Frame Protocol (EFP) represents a significant shift in our industry’s approach to content delivery. While I can’t definitively claim it’s the future protocol, I believe its underlying principles are crucial for the evolution of broadcasting.

The key advantage of EFP lies in its focus on end-user quality and efficiency. Traditional protocols often introduce unnecessary overhead, but EFP is designed to minimize waste in the system. This aligns with our goal at SVT to prioritize the viewer experience above all else.

Furthermore, EFP embodies a broader trend in our industry: the move from hardware-centric to software-based solutions. This transition allows for greater flexibility and scalability, which is essential in today’s rapidly changing media landscape. However, it’s important to note that adopting new technologies like EFP isn’t just about the technical aspects. It requires a fundamental shift in mindset throughout the organization. We need to ensure that our teams, especially those managing these technologies, are equipped to handle this new approach.

Protocols like EFP are tools that enable us to better serve our audience. They allow us to focus on what truly matters: delivering high-quality content to our viewers in the most efficient way possible. As we continue to explore and implement these technologies, we always keep the end-user experience at the forefront of our decisions.

SVT has been transitioning to IP-based infrastructure. Can you update us on the progress of this transition and any unexpected challenges or benefits you’ve encountered?

Our transition to IP-based infrastructure at SVT is progressing, but it’s important to clarify that we’re focusing on IP in general, not specifically on SMPTE 2110. This distinction is crucial because SMPTE 2110 is a production industry standard that isn’t necessarily optimized for end-user needs, which is a key priority for us.

One of the main challenges we’re facing is the shift from hardware-centric to software-based production. This requires a significant transformation in our workforce and infrastructure. Our network and hosting personnel need to develop a deep understanding of broadcast demands, which is a new requirement for many of them. We’re also building a support organization capable of managing software-based systems rather than traditional hardware.

The biggest hurdle in this transition is the mindset change required at all levels of our organization. For instance, we’re reimagining user interfaces for production tools. Traditional vision mixers have numerous buttons and functions that aren’t always necessary for modern production workflows. We’re working on creating more intuitive, user-friendly interfaces that anyone can use, not just specialized engineers.

This simplification is challenging because we have many skilled professionals who are experts with traditional equipment. Now, we need to develop interfaces that are accessible to a broader range of users, potentially including myself. The goal is to make production tools as user-friendly as smartphones - you shouldn’t need to be an engineer to operate them effectively.

Ultimately, we’re striving to bring the ease-of-use principles of consumer technology into our broadcast industry. This is a difficult but necessary evolution to ensure we remain efficient and relevant in the changing media landscape.

With the move towards cloud-based solutions, how is SVT addressing potential concerns about data security and sovereignty?

At SVT, we’re taking a multi-faceted approach to address data security and sovereignty concerns as we move towards cloud-based solutions. First and foremost, we’re developing our own on-premises cloud infrastructure. This allows us to maintain direct control over our most sensitive data and critical operations, ensuring we can manage security in the best possible manner.

Simultaneously, we’re investing significantly in building a robust IT security department. This is a new and crucial initiative for us, as we recognize the evolving nature of digital threats in the cloud environment. While it’s certainly a challenge, we’re not starting