We all know that, in the broadcast world, sport is the most demanding genre to cover. Broadcasters, integrators and manufacturers devote a great deal of resources to continuously improving their work and products. That is why at TM Broadcast International we always pay close attention to every technological development, as well as to the most challenging projects in progress. It is therefore time to look at how the challenges posed by the Paris Olympic Games were met and to find out in which areas the expected success became a reality. With this in mind, we offer our readers an exclusive interview with Isidoro Moreno (Chief Engineer at OBS), in which he analyzes how the challenges faced in this edition of the Games were overcome.

Editor in chief

Javier de Martín editor@tmbroadcast.com

Key account manager

Patricia Pérez ppt@tmbroadcast.com

Editorial staff press@tmbroadcast.com

In this issue we also cover remote production. Megahertz tells us how the new OB truck has been implemented for BBC Northern Ireland, and we also explore how the virtual OBs used at Paris 2024 performed, a type of unit that is set to become standard as it transforms the way live sporting events are covered.

This issue is crammed with a wealth of other content, such as an in-depth laboratory test on the Blackmagic Design Ultimatte 12 equipment and Ikegami’s opinion piece on Integrating SDI and IP into an Efficient Broadcast Production System. Come in and discover it all!

Creative Direction

Mercedes González mercedes.gonzalez@tmbroadcast.com

Administration

Laura de Diego administration@tmbroadcast.com

Published in Spain

ISSN: 2659-5966

TM Broadcast International #135 November 2024

TM Broadcast International is a magazine published by Daró Media Group SL Centro Empresarial Tartessos Calle Pollensa 2, oficina 14 28290 Las Rozas (Madrid), Spain Phone +34 91 640 46 43

NEWS | PRODUCTS


The BBC Northern Ireland has taken a major leap forward in its broadcast capabilities with the integration of a cutting-edge IP-based outside broadcast truck, designed and delivered by Megahertz.

OBS and the 2024 Paris Olympics: challenges solved

Now that the Paris 2024 Olympic Games are over, it is fitting to analyse their outcome from the points of view of technology and television production.

OPINION | SDI&IP

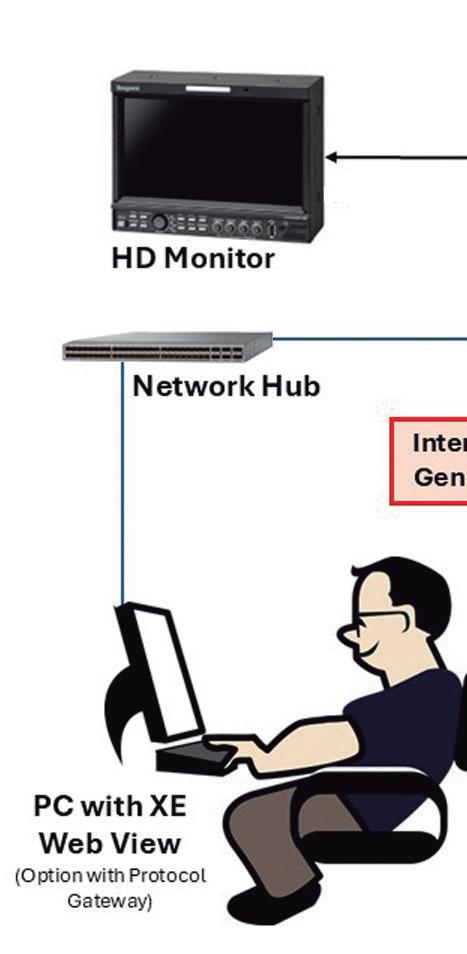

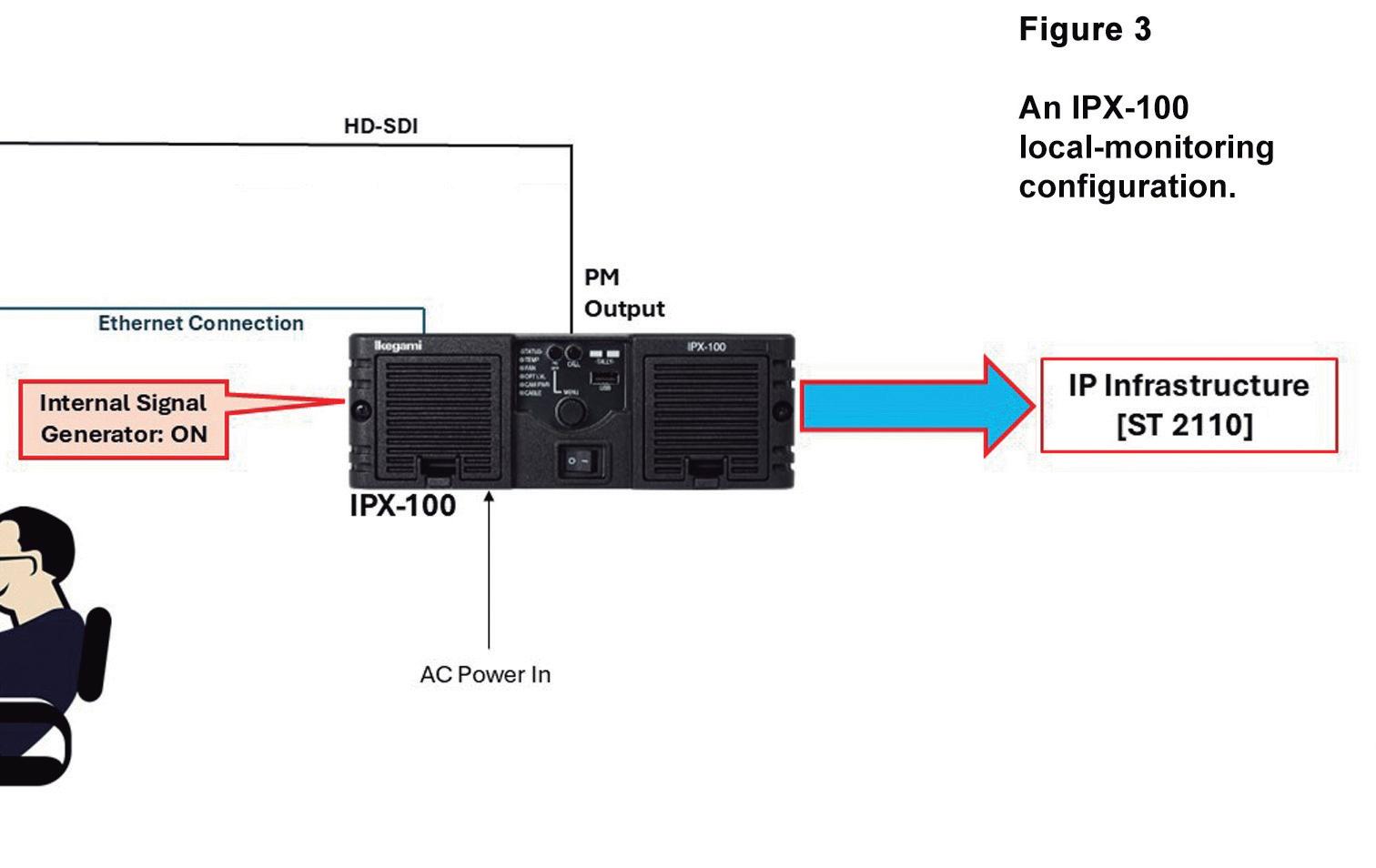

Integrating SDI and IP into an Efficient Broadcast Production System, by Gisbert Hochguertel, Ikegami Europe

SDI (Serial Digital Interface) was one of the television industry’s great success stories, formalised from 1989 onward as a set of SMPTE standards which expanded over the following decades to embrace SD and HD, 4K-UHD and 8K-UHD.

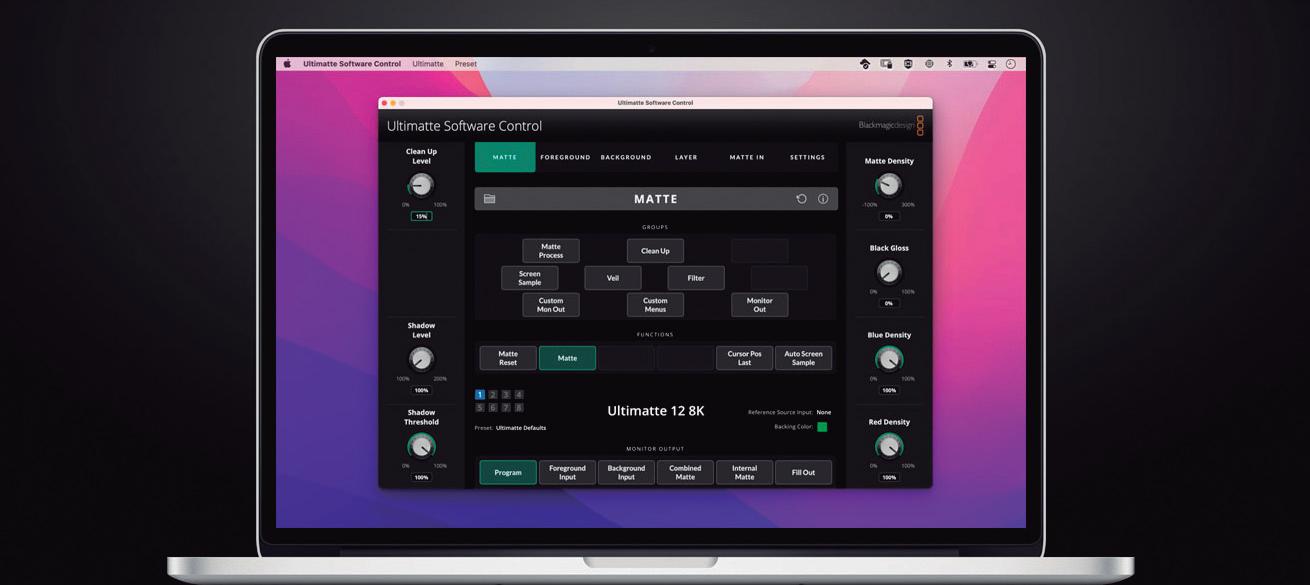

Chroma Key for everyone from Blackmagic Design

Considering the large number of mixers and software applications that nowadays produce chroma key effects with good results, does dedicated hardware still make sense just for chroma keying? The unequivocal answer is a wholehearted YES!

Comcast Technology Solutions (CTS) has unveiled its new Cloud TV platform, designed to support broadcasters and video service providers worldwide in managing and delivering a unified broadcast and OTT experience. With a cloud-based, fully managed approach, CTS aims to help media companies centralize operations across live linear TV, FAST channels, SVOD, AVOD, and TVOD, offering a flexible framework that allows content providers to optimize video management, distribution, and monetization within a single system, as the company stated.

Bart Spriester, Senior Vice President and General Manager of Streaming, Broadcast & Advertising at CTS, highlighted the platform’s potential for the industry: “With Cloud TV, our customers gain access to a next-gen premium platform that lets them manage and monetize their content seamlessly across global streaming, broadcast, and advertising audiences—reliably, securely, and at scale. Cloud TV unites the best of our SaaS services and cloud-based linear playout technology to deliver a fully-featured, modular, and flexible TV solution.”

As the company stated, the platform is designed for the complex needs of today’s Tier 1 broadcasters and content owners, addressing key challenges such as cost management, workflow efficiency, and content monetization. According to specifications released by the company, the platform enables companies to centralize processes such as ingest, transcoding, content protection, server-side and contextual advertising, commerce and subscription management, OTT distribution, and advanced analytics within a single system.

Cloud TV is also engineered to provide robust capabilities in content recommendations, audience insights, and VideoAI™ applications, all of which support dynamic customization of user experiences and enhanced viewer engagement. This integration allows broadcasters to effectively mix distribution strategies and monetization models, from ad-supported VOD to subscription and transactional services.

Maria Rua Aguete, Senior Research Director at Omdia, commented on the platform’s relevance: “Efficiently blending distribution and monetization across subscription packages, FAST, and VOD is crucial to reaching viewers where they are. The nearly one trillion-dollar media and entertainment market shows that pay TV remains resilient, and ad-based online video is where we expect the most growth.”

Matrox Video has announced its critical role in powering Vizrt’s newly launched TriCaster Vizion, underscoring its strong partnership with Vizrt and solidifying its position as a go-to OEM for live production technology. Vizrt’s TriCaster Vizion is equipped with Matrox Video’s DSX LE5 SDI I/O cards, designed to boost SDI connectivity and enhance compatibility with today’s high-demand production workflows. This integration represents a noteworthy expansion of Vizrt’s reliance on Matrox’s solutions, reinforcing their collaborative growth in delivering high-performance live production technology.

The TriCaster Vizion aims to meet the needs of broadcasters, sports networks, and live event producers who require a versatile and cost-effective solution for large-scale video productions.

With the Matrox DSX LE5 SDI I/O cards, the system enables robust SDI configurations, supporting inputs and outputs up to 12G-SDI, which gives producers the flexibility to adapt quickly to varying production needs and environments.

“Our longstanding relationship with Vizrt is built on mutual trust and shared success over two decades,” said Francesco Scartozzi, VP of Sales and Business Development at Matrox Video. “Vizrt’s continued trust reflects our commitment to delivering high-quality, tailored solutions that meet their exacting standards. The DSX LE5 SDI I/O cards in the TriCaster Vizion enable reliable, high-performance functionality for users operating in fast-paced environments. We’re excited to continue pushing the boundaries of live production through future innovations alongside Vizrt.”

This collaboration further illustrates Vizrt’s dedication to integrating Matrox’s advanced technology across its product lineup. As Vizrt looks to expand its offerings in the live production sector, Matrox Video’s role has become pivotal, ensuring Vizrt’s solutions are equipped with the high-quality video processing and connectivity their users demand.

“Partnering with Matrox for the TriCaster Vizion reflects our dedication to providing optimal solutions for live production professionals,” commented Chris McLendon, Senior Product Manager at Vizrt. “The integration of Matrox’s DSX LE5 SDI I/O cards allows TriCaster Vizion to deliver unmatched flexibility and performance, enhancing our users’ production capabilities. We look forward to our continued partnership with Matrox as we push innovation and expand our offerings.”

As the successor to the xNode, xNode2 carries on as the ultimate “universal translator” between external analog, microphone, and AES/EBU devices and Livewire+ AES67 AoIP networks.

xNode2 retains the important features of its predecessor, while its redesigned internal hardware provides a foundation for meeting ever-evolving industry technical requirements. Among these are dual Gigabit (1000 Mb/s) network interfaces for compliance with SMPTE ST 2022-7 (Seamless Protection Switching of RTP Datagrams), allowing streams to be sent over separate network branches for audio redundancy, with automatic failover to whichever RTP stream is available.
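To illustrate the principle behind SMPTE ST 2022-7, here is a minimal sketch, assuming a receiver that gets identical RTP packets over two network legs: it forwards the first copy of each 16-bit sequence number, whichever leg delivers it, and drops the later duplicate, so a packet lost on one path is covered by the other. The names `RtpPacket`, `SeamlessMerger` and `merge`, and the 1,024-packet memory window, are hypothetical and are not taken from Telos Alliance firmware or from the text of the standard; a real receiver also buffers and reorders packets within a time window.

```python
from collections import deque
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class RtpPacket:
    seq: int          # 16-bit RTP sequence number (0..65535)
    payload: bytes


class SeamlessMerger:
    """Hypothetical merger: forward the first copy of each sequence number,
    from whichever leg delivers it first, and drop the duplicate."""

    def __init__(self, window: int = 1024) -> None:
        # Only the most recent `window` sequence numbers are remembered, so a
        # number can be accepted again after the 16-bit counter wraps around.
        self.window = window
        self._seen: set = set()
        self._order: deque = deque()

    def accept(self, pkt: RtpPacket) -> bool:
        if pkt.seq in self._seen:
            return False  # duplicate already delivered via the other leg
        self._seen.add(pkt.seq)
        self._order.append(pkt.seq)
        if len(self._order) > self.window:
            # Forget the oldest remembered sequence number.
            self._seen.discard(self._order.popleft())
        return True


def merge(arrivals: Iterable[Tuple[str, RtpPacket]]) -> List[int]:
    """Return the sequence numbers forwarded downstream, in arrival order."""
    merger = SeamlessMerger()
    return [pkt.seq for _leg, pkt in arrivals if merger.accept(pkt)]


if __name__ == "__main__":
    # Leg "A" loses packet 3; leg "B" still carries it, so nothing is lost.
    arrivals = [
        ("A", RtpPacket(1, b"a")), ("B", RtpPacket(1, b"a")),
        ("A", RtpPacket(2, b"b")), ("B", RtpPacket(2, b"b")),
        ("B", RtpPacket(3, b"c")),
        ("A", RtpPacket(4, b"d")), ("B", RtpPacket(4, b"d")),
    ]
    print(merge(arrivals))  # [1, 2, 3, 4]
```

Bounding the memory to a recent window keeps lookups constant-time and lets sequence numbers be reused once the RTP counter wraps, at the cost of assuming that the two legs never drift apart by more than the window.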

“Since its introduction at the 2012 NAB Show, customers have welcomed over 37,000 xNodes into their facilities around the world, making it one of the most ubiquitous pieces of gear in modern broadcast history,” noted Milos Nemcik, Telos Alliance Program Manager. “xNode2 carries forward the reliability, ease-of-use, and compact form factor that our customers love, while providing us with the platform we need to build an AoIP endpoint that will serve their needs for many years to come.”

xNode2 features a high-resolution, multi-function color LCD front-panel display and an improved user interface. A combination of AC and PoE+ (IEEE 802.3at) provides redundant power. Like the original xNode, xNode2 will be offered in five variants: Analog, AES/EBU, Microphone, Mixed-Signal, and GPIO.

In a major development for the Southeast Asian film industry, a new virtual production facility, Figment Studio, has been launched. The studio is the result of a collaboration between three industry players —Supreme Studio, The Studio Park, and StageFor— and aims to position Thailand as a key hub for virtual production, not only in the region but across Asia. Powered by ROE Visual LED panels, the studio has attracted an investment of 200 million baht (approximately $6 million) and aspires to rank among the top five production studios in Asia.

Strategic partnerships and technological innovation

Figment Studio brings together the combined expertise of its founding partners. The Studio Park, which houses Thailand’s largest sound stage on its 216-rai complex in Samut Prakan, is a familiar name in Hollywood circles, having hosted major international productions. StageFor Co., Ltd., a specialist in XR and virtual production, adds its technical proficiency, while Supreme Studio Co., Ltd. has pioneered virtual production technology in Thailand and Southeast Asia.

The studio’s main stage measures 28 meters by 5 meters and is equipped with ROE Visual’s Diamond DM2.6 LED panels. The ceiling, measuring 6 meters by 3 meters, features ROE Carbon CB3.75 panels, delivering high-quality visuals designed to meet the demanding needs of modern film production. The facility also integrates advanced systems, including Brompton Technology’s Tessera LED processing, Unreal Engine, Stype, Redspy tracking, and OptiTrack motion capture. Together, these technologies create a seamless environment for both 2D and 3D workflows, setting a new benchmark for immersive visual production in the region.

Driving Thailand’s film industry forward

Ms. Nattha Neleptchenko, CEO of Supreme Studio Co., Ltd., emphasized the significance of this collaboration for Thailand’s film industry:

“With the combined strengths of the three companies involved in this partnership, we are confident that Figment Studio will be ranked as the top virtual production facility in Thailand and will soon become one of the top five in Asia.”

The studio has already made its mark with high-profile projects, including commercials, the feature film *The Greatest Beer Run Ever* and the series *Thai Cave Rescue*. Its success highlights the growing demand for virtual production, a trend reshaping the global film and media industry.

LiveU is advancing its next-generation cloud production technology with a tailored solution that meets the localization needs of Skweek, a streaming platform dedicated to basketball and lifestyle content. Skweek, which holds exclusive rights to broadcast EuroLeague Basketball in France and Monaco, has integrated LiveU Studio to offer fans a more personalized and immersive viewing experience, according to the information released by the company.

Skweek now uses LiveU Studio to stream EuroLeague games with local French commentary for teams such as LDLC ASVEL, AS Monaco, JL Bourg-en-Bresse, and Paris Basketball. Skweek receives the international game feed directly from IMG and ingests it into LiveU Studio, where French-language commentary and customized branding are added. Commentators connect remotely to LiveU Studio as “guests” and deliver their analysis live from various locations.

Skweek streams up to 12 live games each evening via its pay-per-view platform and also occasionally broadcasts live commentary to YouTube and social media, reaching a broader audience with minimal additional overhead. By leveraging LiveU’s ability to simultaneously publish across multiple destinations, Skweek efficiently manages both its subscription VOD service and free streams, ensuring content is accessible across various platforms.

One advantage for Skweek has been LiveU’s flexible payment model, which lets it scale production according to demand, an approach well suited to high-volume events such as the EuroLeague season, both companies noted. Cyril Méjane, Managing Director of Skweek Studios, said that LiveU’s tools allowed the team to implement a streamlined, flexible workflow without incurring unnecessary costs. “The Studio toolbox enables us to do complex production easily while only paying for what we use. Delivering live commentary to the cloud is a different way of working for our commentators, but they quickly adapted to the change and have found it to be a more streamlined process,” Méjane explained.

Philippe Gaudion, Regional Sales Manager at LiveU, noted the speed and efficiency of Skweek’s adoption of LiveU Studio: “Very soon after a LiveU Studio demonstration and in-house testing at a smaller event, Skweek was utilizing the solution for Turkish Airlines EuroLeague Basketball. LiveU Studio provides a rich toolbox that enables complex cloud production in a simple way, as well as access to additional intelligent features.”

Gaudion highlighted how Skweek’s autonomy with remote cloud commentary and distribution has already translated into significant cost savings and production control.

Aiming to reach audiences across Ethiopia, IGADO has launched a new streaming service, IGADO+, built on MwareTV’s cloud-based platform. Partnering with Ethio Telecom, the national telecommunications provider, IGADO+ offers a diverse range of content accessible via broadband and mobile networks. With 125 live channels and thousands of hours of video-on-demand (VoD), the service presents a wide selection of programming across entertainment, education, religion, children’s shows, and sports.

To cater to Ethiopian audiences, a significant portion of the content, including local sports coverage and English Premier League matches featuring Arsenal, is dubbed into Amharic and Afaan Oromoo. Initially available on mobile devices, the platform plans to extend support to smart TVs, further improving its accessibility in the Ethiopian market. Ethio Telecom is promoting the service as an affordable subscription option, with a monthly rate of 199 Birr (about US$1.65), available through Ethio Telecom’s USSD subscription portal.

IGADO+ relies on MwareTV’s cloud-based TV Management System (TVMS) to operate its streaming services. The system includes all necessary technology for content management and service planning, integrated directly with Ethio Telecom’s subscription management tools. Through an intuitive, drag-and-drop interface, the platform enables operators to efficiently manage content delivery.

To ensure ease of use for Ethiopian consumers, MwareTV provides a suite of customizable, white-label user interfaces for mobile and tablet access, simplifying app creation across devices without requiring additional coding. This flexibility allows IGADO+ to maintain a consistent and accessible experience for users across platforms.

MwareTV CEO Sander Kerstens highlighted the platform’s hands-off approach to technology management, stating, “Our cloud-based system allows operators like IGADO to focus on building audience engagement without getting bogged down by technical complexities. By automating content delivery through Ethio Telecom’s network, IGADO can focus on expanding its reach through quality programming and strategic offers, confident that our platform will deliver consistent results.”

Kerstens emphasized the low entry cost and subscriber-based model of the MwareTV system, which make it a feasible option for localized content platforms such as IGADO+ that aim to provide high-value services at affordable rates in emerging markets.

Limecraft has entered into a partnership with ITV Studios Daytime, one of the UK’s leading daytime television production facilities, to revamp and streamline its post-production processes. This collaboration is focused on optimizing media management, transcription, and collaborative workflows for ITV Studios Daytime, which is located at London’s Television Centre and produces popular shows like Good Morning Britain, Lorraine, This Morning, and Loose Women.

ITV Studios Daytime processes approximately 500 hours of raw content every month, necessitating an efficient system for managing and reviewing media assets.

With Limecraft’s support, ITV aims to modernize its approach to ingest and file sharing, integrating the solution directly with its existing Avid MediaCentral platform.

Limecraft’s tools are specifically designed to address challenges in post-production workflows, enabling ITV to automate previously manual processes like transcription and media logging. This has allowed the studio to improve turnaround times for file sharing and media review, which are central to a fast-paced broadcast environment.

According to John Barling, Head of Technology at ITV Studios Daytime, “We were massive users of WeTransfer before, but file sharing from Avid was a clunky process. Limecraft has transformed that. Now, our teams can easily find content, clip it, and share it with peers, streamlining our workflow and improving collaboration in ways we hadn’t seen before.”

Key features and benefits, according to the company:

> Direct Avid integration: Limecraft’s integration with Avid MediaCentral eliminates manual steps previously needed for media logging and file transfers, facilitating smoother collaborative work across teams.

> Automated transcription: With Limecraft’s transcription capabilities, ITV’s media content is transcribed in real time, reducing transcription time from hours to minutes and enabling more rapid content review.

> Real-time collaboration: Post-production teams can now review, annotate, and share media files instantaneously, allowing for real-time feedback and collaboration across different departments.

Leveraging AR for spectacular broadcast production, reaching a record 4 million viewers

In a groundbreaking collaboration, Vizrt, a specialist in real-time graphics and live production solutions, teamed up with Champion Data to deliver a high-tech spectacle for the Australian Football League (AFL) Grand Final pre-show featuring pop icon Katy Perry. By merging broadcast with Augmented Reality (AR) graphics, the partnership created a viral broadcast experience that captivated audiences on both television and social media worldwide.

The AFL Grand Final, aired by Seven Network, drew a record-breaking audience of 4 million viewers, who witnessed a unique fusion of Katy Perry’s energetic performance and dynamic, galaxy-inspired AR graphics. This enhanced production showcased the potential of AR graphics in a live broadcast environment, offering fans at home a visually immersive experience and sparking a social media frenzy.

“Despite the AFL Final being an elaborate, live show with multiple camera inputs and extensive graphics overlays, the Vizrt tools very much enabled us to ‘set and forget’ when it came to production programming,” commented Andrew Mott from Champion Data. “We had such confidence in delivering Katy Perry’s production knowing that Viz Virtual Studio and Viz Engine formed the technical backbone. We didn’t need to tweak too much – whether it be for her performance or our standard game day graphics – it all came together perfectly.”

Central to the broadcast’s success was Viz Engine 5, which seamlessly integrated 4K Unreal Engine graphics from Seven Network’s broadcast team into the live feed. These pre-made graphics, designed by Katy Perry’s production partner Silent Partners Studio, added a stunning visual depth to the performance.

This project also exemplified how Champion Data pushed its existing AFL production infrastructure beyond the traditional Match Day graphics, using Vizrt’s hybrid AR production capabilities to overlay AR graphics onto Perry’s performance without needing a full technical overhaul. The AR-enabled broadcast was managed via Champion Data’s established Vizrt infrastructure, including Viz Engine, Tracking Hub, and Studio Manager. This setup collected data from three cameras (two equipped with mechanical tracking) to calibrate and render Mushroom Graphics’ Unreal-based visuals in real time, enhancing the on-screen experience for audiences.

HuskerVision, the audiovisual backbone behind the University of Nebraska’s sports productions, has taken a leap in audio production quality and operational efficiency thanks to a strategic collaboration with Lawo, as the company explains. The implementation, completed in August 2023, has brought transformative changes to HuskerVision’s workflow, showcasing Lawo’s prowess in live media production technology and setting a new standard for university sports broadcasting.

Operating out of the Michael Grace Production Studio, HuskerVision manages all audiovisual and broadcast production needs for University of Nebraska sporting events, including live game entertainment, non-sporting events, and a roster of television shows broadcast nationally on the Big Ten Network. Staffed by a team of 30 to 50 student operators, HuskerVision produces over 100 fully developed television programs annually, offering Nebraska athletes and sports a prominent national profile.

Garrett Hill, Broadcast/Systems Engineer at HuskerVision, shared his appreciation for the system, noting, “after working with our Lawo installation for more than one year, we have been very happy with its performance and the quality of audio we’re getting out of it.” The installation includes a lineup of Lawo’s hardware and software: the HOME management platform for media infrastructures, two A__UHD Core audio engines configured as primary and backup, Power Core units equipped with Dante cards for enhanced integration with legacy equipment, and two mc²56 audio production consoles. These consoles, complemented by several A__stage64 and A__digital stageboxes, form the core of HuskerVision’s enhanced audio setup.

Lawo’s solutions have seamlessly integrated into HuskerVision’s workflow, bringing flexibility and simplifying complex signal management tasks. Hill highlighted the efficiency gains from Lawo’s system architecture in managing IP audio streams, particularly in coordinating IP and baseband audio with third-party equipment, a setup that has streamlined operations.

“This was our first experience with IP-based audio that closely resembles environments based on ST 2110,” Hill commented. “Our broadcast setup remains baseband for video, but audio is now a hybrid IP and baseband system. With the latest equipment, we’ve not only improved audio quality but also optimized our workflow.” He further explained, “The A__stage and A__digital boxes’ capacity to natively output and subscribe to any AES67 streams, alongside robust internal routing support, has simplified the management of audio signals across all venues. The HOME platform’s integration also allows direct management of streams from Q-Sys and Dante equipment, facilitating complex routing and multiple event needs simultaneously.”

In an ambitious move for the UK media landscape, fivefold studios has launched a groundbreaking virtual production facility at Dragon Studios, South Wales, setting a new standard for film and television production in the region. Positioned as an innovative creative hub, fivefold studios combines cutting-edge technology with advanced production capabilities to empower Wales as a leader in virtual production and a dynamic force in the global media sector.

The new studio, developed to bolster film and television infrastructure in Wales, brings virtual production directly into the heart of the region’s creative industry. Offering a range of comprehensive production services, fivefold employs the latest in In-Camera Visual Effects (ICVFX), performance capture, and AI-driven production tools.

Spanning over 12,000 square feet, fivefold studios is well-equipped to accommodate projects of all scales, from independent productions to large-scale blockbusters. At its core is a state-of-the-art LED volume, which enables real-time capture of visual effects and empowers filmmakers to explore new creative possibilities while streamlining workflows. Additionally, the facility boasts Europe’s largest permanent green screen cove, covering 5,000 square feet with a height of 33 feet, providing flexible, cost-effective solutions for high-end productions.

Dedicated to the development of industry talent, fivefold studios will also offer training programs in collaboration with Media Cymru. This initiative underscores fivefold’s commitment to equipping the next generation of media professionals and securing Wales’ position as a globally recognized hub for advanced media production.

David Levy, Managing Director of fivefold studios, remarked, “Here at fivefold studios, we are proud to be establishing a sustainable, technology-led enterprise that champions the values shaping the future of Media & Entertainment. Our in-house team, pioneers in the virtual production arena, is dedicated to building a Welsh center of excellence in media production. Our industry-experienced professionals have delivered projects for Netflix, Apple, Paramount, and Amazon MGM Studios, and we’re ready to provide best-in-class virtual production solutions.”

As one of 23 partners in Media Cymru’s consortium, fivefold studios is part of a collective initiative dedicated to transforming Wales’ media sector into a global innovation hub with an emphasis on sustainable, inclusive economic growth.

In what seems to be a strategic move to consolidate its presence in the UK media landscape, Landsec has acquired the remaining 25% interest in MediaCity from Peel Group, including full ownership of the renowned television facility dock10 and an adjacent 218-bed hotel. This acquisition, finalized last November, positions Landsec to take full control over the iconic MediaCity estate and spearhead future development in the area.

The transaction was completed for a cash payment of £22 million, alongside the assumption of £61 million in secured debt, bringing the total consideration to £83 million. This valuation represents a discount to the latest book value of Landsec’s existing 75% share in MediaCity, primarily due to the terms of the lease agreements. As part of the acquisition, Landsec agreed to waive contracted future income from “wrapper leases” to Peel, aligning the value of the transaction with the latest book valuation of MediaCity’s assets. In the immediate term, this acquisition is expected to be earnings-neutral.

However, Landsec sees long-term growth potential in MediaCity, with plans to expand residential capacity on land adjacent to the estate. This addition could bring significant new housing to the area, reinforcing MediaCity’s role as a vibrant hub for media, entertainment, and community life.

Mike Hood, CEO of Landsec U+I, emphasized the strategic importance of this acquisition: “MediaCity has huge potential. Through our increased ownership, we can curate a place that continues to attract cutting-edge businesses and investment to the area, and where people choose to come to work, live and enjoy their lives. We look forward to sharing more about our plans in the near future.”

About dock10 and MediaCity

Dock10, the leading television production facility at MediaCity, has been central to the estate’s success, attracting high-profile productions from the BBC, ITV, and other major broadcasters. Known for its advanced technical infrastructure, dock10 has positioned MediaCity as a leading media production hub, drawing both media and tech innovators to Greater Manchester.

According to the company, this acquisition gives Landsec a robust foundation to expand its influence in the media and broadcast sectors, combining an iconic location, premier production facilities, and strategic residential expansion to support the needs of this dynamic industry.

This announcement comes after the EBU secured the rights to broadcast the FIFA World Cup 2026™ & FIFA World Cup 2030™

Alan Fagan, a seasoned executive with over 25 years of commercial experience, has been appointed Managing Director of the European Broadcasting Union’s (EBU) new streaming platform, EurovisionSport.com. Formerly Vice-President of ESPN Sports Media EMEA, Fagan brings extensive expertise in managing media rights, partnerships, direct-to-consumer services, and advertising sales. His previous roles include overseeing Disney’s multi-brand advertising and partnership operations in the UK and ESPN’s reach across the EMEA region, where he was part of Disney’s UK Leadership Team during its transformative period, including the Disney+ launch.

In his new role, Fagan will take on strategic, editorial, and commercial leadership at Eurovision Sport, reporting directly to Glen Killane, Executive Director of EBU Sport. His responsibilities include advancing the platform’s growth and overseeing an upcoming team expansion, expected to create several new roles in the coming months.

Reflecting on Fagan’s appointment, Killane stated: “The leadership team at the EBU, along with our Eurovision Sport partner Nagra, were impressed by Alan’s depth of experience and leadership skills. He will now play a pivotal role in driving Eurovision Sport forward, helping us continue to innovate and deliver exceptional sport experiences that complement our Members’ extensive efforts across Europe and beyond.” Killane also acknowledged the contributions of Jean-Baptiste Casta, the outgoing managing director, who is transitioning to a senior industry role elsewhere.

Fagan commented on Eurovision Sport’s potential, noting that in its first six months, the platform has streamed over 100 events across more than 30 sports, entirely free for viewers. Highlighting the collective scale of 50-plus broadcasters involved, Fagan added, “With a potential audience of over one billion, I am thrilled to join Glen and the team at the EBU, to grow audiences, content, and distribution, and deliver on the commitment to being the first gender-balanced streaming platform in sport.”

A new partnership between CaptionHub and Signapse is set to boost video accessibility, allowing enterprises to deliver content that truly serves Deaf audiences through AI-driven sign language integration.

As part of its latest feature rollout, CaptionHub, a company specializing in multimedia localization and captioning, will offer seamless integration with Signapse’s pioneering sign language technology. Through this collaboration, CaptionHub clients can now enhance their videos with automatic British Sign Language (BSL) or American Sign Language (ASL) interpretation alongside captions, which the firm describes as a major advancement in making media inclusive and accessible to all.

Tom Bridges, CEO of CaptionHub, sees this partnership as a transformative step: “We are thrilled to join forces with Signapse to bring AI-based sign language capabilities to our platform. This collaboration aligns perfectly with our mission to make video content localised and accessible for our clients. By incorporating Signapse’s innovative technology, we’re not just adding a feature; we’re opening up content to millions of people who wouldn’t previously have had access but also making this step easy for our enterprise client base.”

According to the company, this new integration comes at a time when accessibility in media is more vital than ever. Deaf and hard-of-hearing audiences represent a significant portion of global viewers, with over 87,000 people in the UK alone using BSL as their primary language. Many members of the Deaf community have difficulties with text, which makes captioning alone insufficient for their needs. Both companies claim that this partnership will close that gap by translating speech directly into visual sign language within videos, creating an inclusive experience.

Will Berry, CRO of Signapse, emphasizes the impact of this development on accessible content: “Many Deaf people struggle with text – 87,000 people in the UK use British Sign Language (BSL) as their first language. By seamlessly integrating our sign language translation with existing CaptionHub features, we are opening up more video content for the Deaf community. This partnership represents a significant leap forward in bridging the communication gap and ensuring that everyone can enjoy and benefit from video content equally.”

For the drafting of this article we have relied on the information included in the document “Olympic Games Paris 2024 Media Guide” by OBS and comments by Isidoro Moreno, Chief Engineer at OBS.

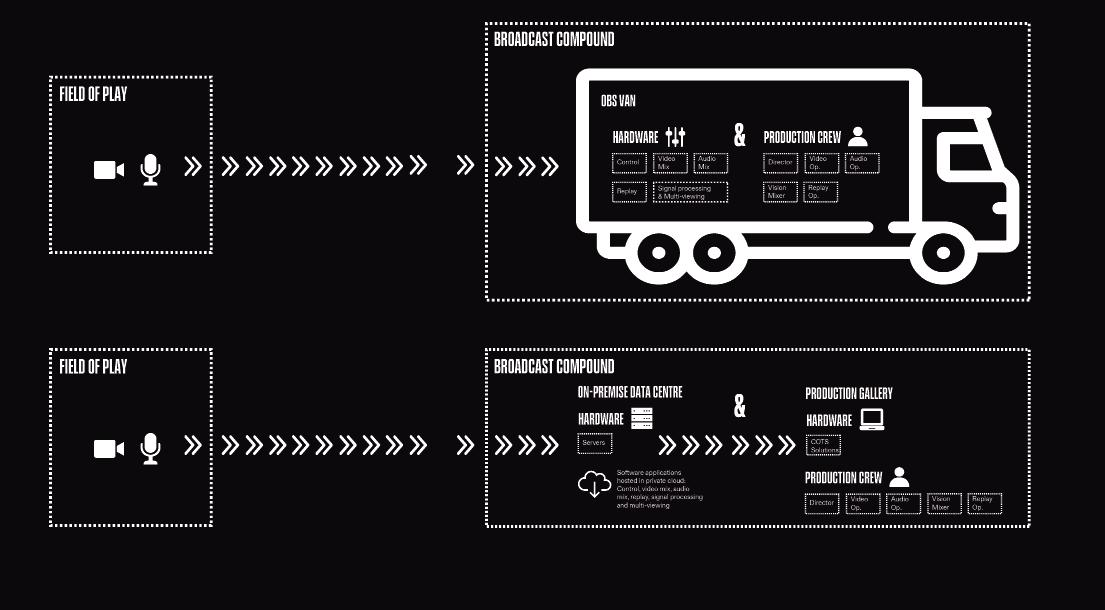

OBS and the revolution of INTEL’s modular, virtualized production

By Luis Sanz, Audiovisual Consultant

As the host broadcaster of the Olympic Games, OBS has faced a specific set of challenges when planning the mobile outside broadcast units (OB vans) or mobile systems that are essential for live event coverage. Key factors include the space required in the broadcast compound at venues, the internal space needed to accommodate both equipment and production staff, and ensuring that all hardware devices are configured and up to date to deliver top-notch production quality. Logistics is equally crucial, as it encompasses procuring production units, transporting them around the world, shipping specialized equipment, staffing technical and production teams, and setting up at the venue. In addition, the environmental impact of the carbon footprint must be considered: this means avoiding the unnecessary transfer of large amounts of hardware, especially servers, from the other side of the world, and choosing local solutions whenever possible. Because this is COTS (Commercial Off-The-Shelf) hardware, it can be sourced locally, which reduces the need to mobilize specific equipment and contributes to more sustainable logistics.

To address these challenges, OBS has partnered with global sponsor INTEL in order to explore more flexible and modular production environments.

This innovative strategy has aimed to reduce logistical and operational complexity, increase flexibility and reduce the total broadcast space at the venues. Through this collaboration, OBS and INTEL are opening a new era in broadcast technology, creating scalable and adaptable solutions that meet the demands of Olympic broadcasting while setting new benchmarks for efficiency and sustainability in the industry.

The move towards virtualized OBs will continue as technology advances and broadcasters look for more efficient, flexible and sustainable production methods. With the progress seen in cloud computing, artificial intelligence (AI) and real-time communication, virtualized OBs will likely become a standard in sports broadcasting, offering unprecedented capabilities and transforming the way live events are covered.

A traditional OB is a mobile production unit equipped with all the technology needed to produce live broadcasts, including cameras, audio equipment, mixers, routing matrices and control panels. A virtualized OB decentralizes much of this equipment, using a software-based, cloud-hosted architecture that replicates the functions of a traditional OB, together with commercial off-the-shelf (COTS) solutions that handle many production tasks. This transformation offers several advantages:

• Efficiency: A traditional OB has numerous elements that provide maximum flexibility, but many of them may be unnecessary for a specific operation. In contrast, a virtualized unit includes only the elements of the TV production chain that a particular event needs, thus optimizing resources and reducing operational complexity without compromising the quality of the broadcast.

• Reduced footprint: Virtualized production configurations are designed to significantly reduce the technical, logistical, physical, financial, and ecological footprint. Less equipment is needed on site, resulting in smaller and more mobile units, and less space and resources required at sites.

• Scalability: Cloud resources can be scaled up or down depending on the requirements of the event, making this a cost-effective solution.

• Remote production capabilities: Production equipment can operate from anywhere, offering greater flexibility and resiliency.

Main features

• Cloud-based infrastructure: Core production tasks such as video switching, audio mixing and replay generation are performed on cloud servers. This configuration reduces dependency on on-site hardware.

• Remote operations: Directors, producers and technicians can collaborate and control the broadcast remotely, allowing for more efficient use of staff and resources.

• Resiliency and redundancy: Cloud solutions provide built-in redundancy and failover capabilities, thus ensuring uninterrupted transmission even when facing hardware failures.

Benefits

• Cost-effectiveness: By reducing the need for large equipment and staff on site, a virtual OB reduces production costs.

• Flexibility: It can be quickly adapted to different types and scales of events.

• Improved quality: Advanced cloud technology enables higher-quality production capabilities on demand.

• Sustainability: A smaller physical footprint (and potentially less travel for production teams in a fully remote setup) contributes to a lower environmental impact.

• Future-proof: As streaming technology continues to evolve, a virtualized OB can easily integrate new innovations without significant overhauls of the physical infrastructure.

Challenges

• Bandwidth and latency: Reliable, high-speed cloud connections are crucial to the smooth operation of a virtualized OB. Latency can be a major issue for live broadcasts.

• Training and adoption: Production teams should be trained to adapt to the new workflows and technologies associated with a virtual OB.

• Cybersecurity: Ensuring the security of cloud-based operations is vital to protect against cyber threats and unauthorized access.

January 2021: Development starts

February 2022: Proof of Concept (POC) at Beijing 2022

The virtualized OB was deployed at the curling venue, in parallel with the main production setup. The purpose of this test was to check functionality and interoperability, as well as the ingestion and processing of the 1080p50 SDR video streams from the 18 cameras covering one of the curling sheets, along with the audio streams. An on-premises data center replicated the cloud-based architecture. In addition, four native IP cameras dedicated to the virtualized OB project were connected, eliminating the need for camera control units. The performance of the virtualized OB exceeded all expectations.

March 2022 to December 2023: Development period

To ensure that the virtualized OBs met OBS’s strict production standards, multiple POCs and test events were carried out in close collaboration with partners and suppliers. Collaboration with OBS host producers played a key role in ensuring that the technology was ready for live use, demonstrating its practicality and robustness in real-world scenarios. This rigorous process included production teams verifying quality standards, ensuring that the virtualized OBs delivered exceptional performance and reliability. Significant progress has been made in virtualized broadcast solutions over the last two years, bringing them closer to matching the capabilities of traditional broadcast equipment.

January 2024: Implementation of live production. Stage 1 at Gangwon 2024

The virtualized OB was initially deployed at the curling venue in week 1 and then reinstalled at the ice hockey venue in week 2, strictly adhering to the technical setup and specifications planned for Paris 2024. This application demonstrated the feasibility and efficiency of the solution as an alternative to traditional broadcast equipment in a live coverage environment and helped fine-tune preparations for Paris 2024.

July 2024: Implementation of live production. Stage 2 at Paris 2024

At Paris 2024, OBS deployed virtualized OBs at three competition venues to produce 14 multilateral broadcasts across five sports: Roland Garros (for the outside tennis courts), Champ-de-Mars Arena (for judo and wrestling) and Châteauroux Shooting Centre (for shooting).

Virtualized OBs were used on seven tennis courts. On the four main tennis courts, neither traditional nor virtualized OBs were used; instead, the camera signals were routed within the Roland Garros facilities to cabins housing the SMPTE 2110 hardware racks needed to produce the final broadcast signal.

The virtualized OBs for tennis worked with the cloud through edge server installations: a data center, or “outpost” (local data center infrastructure at the OBS headquarters), was also installed at Roland Garros with the collaboration of sponsor INTEL.

Signal production was carried out with software applications installed as cloud services. Having the data center so close to the cameras made it convenient to make copies of the camera feeds locally; copies of more than 80% of the cameras used at the Games were handed over to the committees and broadcasters. Furthermore, thanks to this proximity, latency was practically nonexistent.

The deployment of virtualized OBs marks a significant leap in broadcasting technology, spearheaded by OBS. These state-of-the-art units are set to revolutionize the way live Olympic events are broadcast, particularly in large-scale environments such as the Paris 2024 Olympic Games.

Configuring a virtualized OB

Virtualized OBs are powered by a suite of sophisticated VOB software, comprising five key pillars.

Each pillar is made up of top-tier manufacturers offering virtualized solutions, selected after careful research and development.

The implementation of a virtualized OB mirrors the configuration of a transportable hardware flypack system. The local data center comprises racks of COTS hardware (Commercial Off-The-Shelf: mass-produced products available to the general public), to which sources such as cameras and microphones are connected. Alongside these are the production galleries, designed to look very much like those of traditional production units. This design requirement ensures minimal visible and operational impact on production teams.

Configuration times are progressively reduced as the integration of various software components becomes more agile by means of sophisticated IT-based mechanisms. This trend promises even faster and more efficient setups in the future.

OBS employs a simple multilateral approach with minimal video layers in the video mixer, which typically uses a GFX signal and avoids complex picture-in-picture configurations. This simplicity allows for optimal use of available resources, resulting in improved efficiency and performance.

One of the main challenges in cloud-based broadcasting is managing latency, particularly for the on-site delivery of the multilateral program, which must reach commentator positions, large screens and other local uses with minimal delay. To address this, OBS opted for private on-premises data centers (edge computing), avoiding the need to route signals back and forth to the cloud. This setup kept latency within the 100-150 millisecond range.

Theoretical configurations suggest that these private data centers could be centralized in the International Broadcasting Center (IBC) or in a nearby commercial data center, as long as the physical distance does not exceed certain limits (considering the speed of light, approximately 5 milliseconds per 1,000 kilometers of fiber).
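To make the distance limit concrete, the Python sketch below applies the rule of thumb quoted above (about 5 ms per 1,000 km of fiber). It counts fiber propagation only; switching, encoding and processing delays come on top. The distances and the 10 ms share of the latency budget reserved for transit are illustrative assumptions, not OBS figures.

```python
# Fiber propagation delay from the rule of thumb quoted in the article:
# roughly 5 ms per 1,000 km of fiber (light travels at about 200,000 km/s in glass).
FIBER_MS_PER_KM = 5.0 / 1000.0


def propagation_ms(distance_km: float, round_trip: bool = False) -> float:
    """Delay added by the fiber alone (switching, encoding and processing excluded)."""
    one_way = distance_km * FIBER_MS_PER_KM
    return 2 * one_way if round_trip else one_way


def max_distance_km(delay_budget_ms: float, round_trip: bool = True) -> float:
    """Longest fiber run whose propagation delay fits within delay_budget_ms."""
    ms_per_km = FIBER_MS_PER_KM * (2 if round_trip else 1)
    return delay_budget_ms / ms_per_km


# Hypothetical placements of the data center relative to a venue
for label, km in [("on-site edge", 0.5), ("city-wide IBC", 20), ("regional data center", 500)]:
    print(f"{label:>20}: {propagation_ms(km, round_trip=True):.3f} ms round trip")

# Assumption: 10 ms of the end-to-end budget is reserved for fiber transit
print(f"max distance for a 10 ms round-trip share: {max_distance_km(10):.0f} km")
```

The round trip is used because signals travel from the venue to the data center and the finished program travels back to commentators and screens. At city scale the fiber adds only fractions of a millisecond, which suggests that processing, rather than distance, accounts for most of the 100-150 ms figure.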

In addition, latency issues are exacerbated by the encoding and decoding of IP transmissions. OBS mitigates this by using uncompressed signals (SMPTE ST 2110-20 for video and ST 2110-30 for audio) or, in exceptional cases, ultra-low-latency compression such as JPEG XS per SMPTE ST 2110-22. Although uncompressed signals are large and resource-intensive, this approach minimizes latency significantly. Future developments may focus on improving video switching capabilities at the network edge.
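To give a sense of how large uncompressed signals are, the sketch estimates the active-video bit rate of an ST 2110-20 stream, assuming 4:2:2 sampling at 10 bits (20 bits per pixel on average). Packet headers are not counted, and the 10:1 JPEG XS ratio is an illustrative assumption rather than a figure from OBS.

```python
# Approximate active-video bit rate of an uncompressed ST 2110-20 stream.
# 4:2:2 at 10 bits: every two pixels carry 2 luma + 1 Cb + 1 Cr samples = 40 bits,
# i.e. 20 bits per pixel. RTP/UDP/IP and Ethernet overhead add more on the wire.


def active_video_gbps(width: int, height: int, fps: float, bits_per_pixel: int = 20) -> float:
    return width * height * bits_per_pixel * fps / 1e9


def compressed_gbps(uncompressed_gbps: float, ratio: float) -> float:
    """Bit rate after a mezzanine codec (e.g. JPEG XS) with the given compression ratio."""
    return uncompressed_gbps / ratio


hd = active_video_gbps(1920, 1080, 50)   # 1080p50, the format used in the Beijing 2022 POC
uhd = active_video_gbps(3840, 2160, 50)  # 2160p50 for comparison
print(f"1080p50 uncompressed: {hd:.2f} Gb/s")
print(f"2160p50 uncompressed: {uhd:.2f} Gb/s")
print(f"1080p50 at an assumed 10:1 JPEG XS ratio: {compressed_gbps(hd, 10):.2f} Gb/s")
```

Roughly 2 Gb/s per HD camera explains why uncompressed workflows suit an on-site edge data center better than a long-haul link to the public cloud.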

With a clear vision on the future and strategic goals aligned with technological advances, the shift towards virtualized systems represents a great leap forward for OBS and broadcasting of the Olympics.

The journey of virtualized OBs has been remarkable, starting with smaller-scale deployments and culminating in full integration at three key venues for Paris 2024. Looking ahead, OBS is preparing for the Milan-Cortina d’Ampezzo 2026 Winter Games, where a scaled-up implementation of these systems is planned. A central hub in Cortina will be critical, as it will serve numerous venues simultaneously, demonstrating the versatility and operational efficiency of these innovative systems.

OBS envisions a large-scale deployment of virtualized OB systems for the Los Angeles 2028 Olympic Games, optimized through the co-location and centralization of the core hardware. Rapid advances in COTS technologies, coupled with improved processing capabilities, are expected to open up new possibilities, enabling a more effective and far-reaching broadcast experience.

Virtualized OBs make a significant contribution to sustainability and cost-effectiveness. By minimizing physical space and optimizing resource allocation, these systems align with the sustainability goals of the industry at large, positioning virtualized OBs as a forward-thinking solution, especially in terms of scalability.

The next five to ten years will see a radical change in broadcast technology. As cloud capacity expands, more broadcasting processes will migrate to the public cloud. The current one-to-one relationship between software and hardware, dictated by high processing demands and vendor specifications, will evolve, paving the way for applications from different manufacturers to run on a single piece of hardware and offering greater flexibility in software allocation and hardware usage.

The manufacturers’ operation dashboards, which are currently tied to specific software, will become independent of the underlying software. Protocols will be aligned, allowing users to choose their preferred operator panels, whether button-based or touchscreen, regardless of the connected software.
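One way to picture panels that are independent of the software behind them is a shared control interface that every product implements. The sketch uses a Python `Protocol` for that role; the interface, the two “vendor” classes and the command format are hypothetical and do not represent any existing protocol or product.

```python
from typing import Protocol


class SwitcherControl(Protocol):
    """Hypothetical vendor-neutral control interface (not an existing standard)."""

    def cut(self, me: int, source: int) -> None: ...


class VendorASwitcher:
    """Hypothetical product that keeps its program state in a dictionary."""

    def __init__(self) -> None:
        self.program: dict[int, int] = {}  # M/E bus -> source on air

    def cut(self, me: int, source: int) -> None:
        self.program[me] = source


class VendorBSwitcher:
    """Hypothetical product that expresses the same action as text commands."""

    def __init__(self) -> None:
        self.log: list[str] = []

    def cut(self, me: int, source: int) -> None:
        self.log.append(f"ME{me}:PGM={source}")


class ButtonPanel:
    """Operator panel that only knows the shared interface, not the software behind it."""

    def __init__(self, target: SwitcherControl, button_map: dict[int, int]) -> None:
        self.target = target
        self.button_map = button_map  # panel button -> source number

    def press(self, button: int, me: int = 1) -> None:
        self.target.cut(me, self.button_map[button])


a, b = VendorASwitcher(), VendorBSwitcher()
buttons = {1: 3, 2: 7}
ButtonPanel(a, buttons).press(2)
ButtonPanel(b, buttons).press(1)
print(a.program, b.log)  # {1: 7} ['ME1:PGM=3']
```

The same panel class drives both products unchanged; only the adapter behind the interface differs, which is the decoupling the paragraph anticipates.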

The horizon for virtualized OBs is a promising one, with numerous improvements in the works. OBS is working closely with vendors to automate and streamline software deployment on available hardware. In the near future, operators will be able to quickly configure control rooms by selecting various parameters, such as the number of playback channels, mixer inputs, audio DSPs and other settings, with applications and configurations automatically deployed in seconds. Customization and template implementation are also areas of focus. OBS is exploring the use of planning documents and artificial intelligence to automate resource allocation, reducing the need to recreate configurations for every single event.
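A template-driven setup of the kind described above might look like the following sketch: a control-room template holds the parameters the article lists (playback channels, mixer inputs, audio DSPs) and is expanded into the software instances to launch. All names and numbers are hypothetical; the source does not describe OBS’s actual tooling.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GalleryTemplate:
    """Hypothetical control-room template built from the parameters named in the text."""
    name: str
    playback_channels: int
    mixer_inputs: int
    audio_dsps: int


def deployment_plan(t: GalleryTemplate) -> list[str]:
    """Expand a template into the (hypothetical) software instances to deploy."""
    plan = [f"replay-{i + 1}" for i in range(t.playback_channels)]
    plan.append(f"vision-mixer[{t.mixer_inputs} inputs]")
    plan += [f"audio-dsp-{i + 1}" for i in range(t.audio_dsps)]
    return plan


side_court = GalleryTemplate("side-court-tennis", playback_channels=2, mixer_inputs=12, audio_dsps=1)
# Reuse the template for another event instead of rebuilding the configuration from scratch
judo_mat = replace(side_court, name="judo-mat", mixer_inputs=16)

print(deployment_plan(side_court))
print(deployment_plan(judo_mat))
```

Deriving a new event from an existing template with `dataclasses.replace` mirrors the goal of not recreating configurations for every event.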

Future improvements in cloud technology, such as greater processing power, increased cloud performance, and greater abstraction from the underlying hardware, will further enhance the capabilities of virtualized OBs. These advances promise to deliver more sophisticated, efficient, and flexible broadcast solutions, setting new standards for the industry.

The Olympic virtualized OB project is set to reshape the future of sports broadcasting. With clear strategic goals, a commitment towards technological innovation, and a focus on sustainability and profitability, OBS is paving the way for a new era in broadcasting. As OBS prepares for the upcoming Olympic Games in Milan, Cortina d’Ampezzo and Los Angeles, the potential of virtualized OBs is enormous. This innovative approach promises a future where flexibility, efficiency and cutting-edge technology converge to deliver unparalleled live coverage.

BBC Northern Ireland has taken a major leap forward in its broadcast capabilities with the integration of a cutting-edge IP-based outside broadcast truck, designed and delivered by Megahertz. In an exclusive interview, Nigel Smith, Operations Manager at Megahertz, explains to TM Broadcast International’s readers that this next-generation vehicle represents a strategic shift in outside broadcasting technology while maintaining crucial compatibility with existing infrastructure.

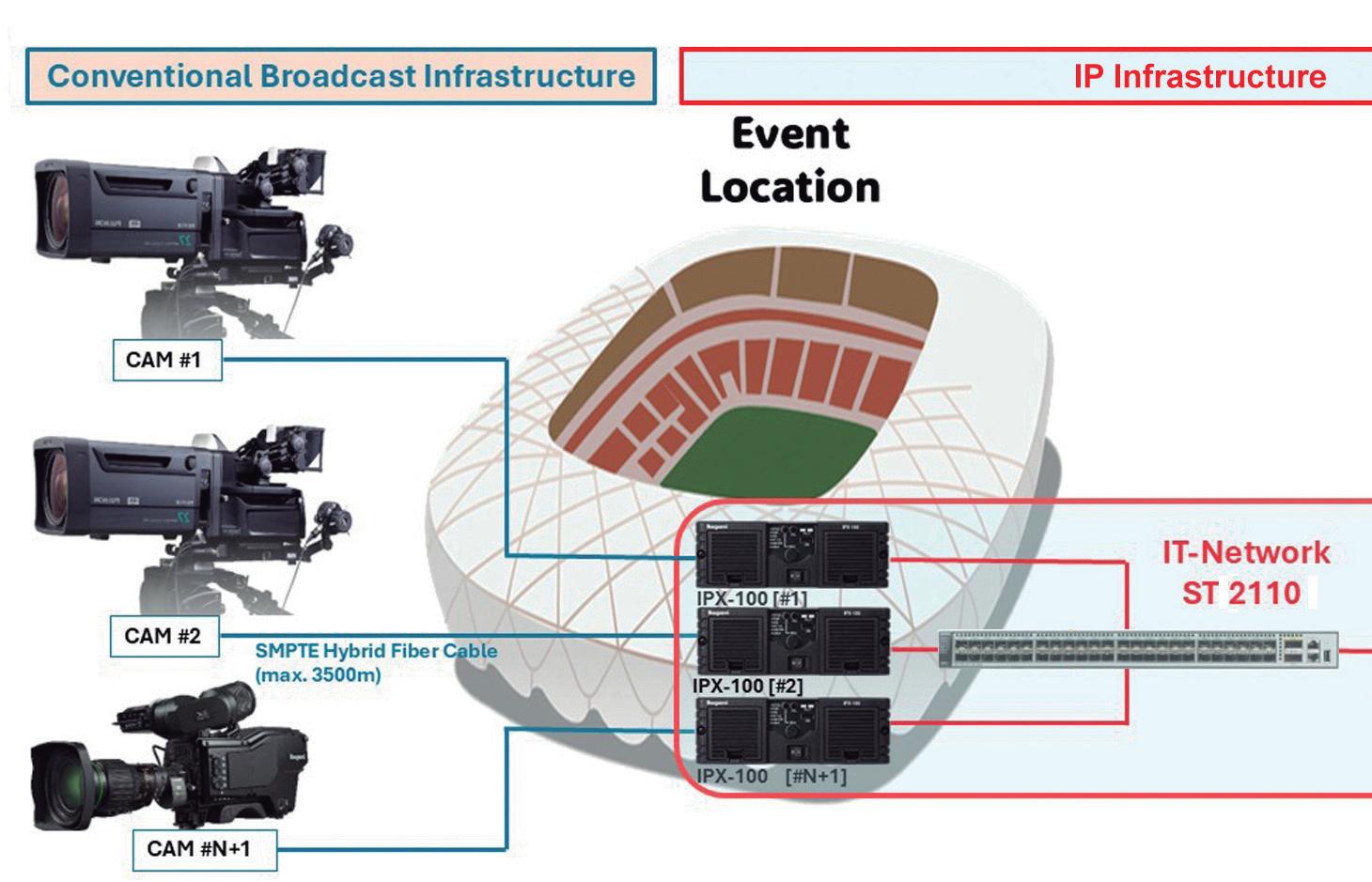

There is literally nothing conventional about the IP-based machine that Megahertz designed, built and integrated for BBC Northern Ireland. It is the first mobile unit to be constructed with a comprehensive SMPTE ST 2110 infrastructure, SDI interfaces and Arista networking for media transport. At its core, the system employs Sony’s VideoiPath Orchestration and Broadcast Control system; Smith details these elements and their significance throughout this interview.

During this conversation, the readers will learn that Smith is certain that the industry will continue to move in one direction: toward more compact and flexible IP-based systems that can integrate with cloud production tools. He acknowledges the growing demand for 4K and HDR in sports and live events but asserts that traditional SDI still maintains relevance, particularly in remote production scenarios where 12G solutions continue to play a vital role.

This cutting-edge OB truck not only boosts BBC Northern Ireland’s production capabilities but also serves as an invaluable training ground for staff in IP-based broadcasting. It puts the organization at the forefront of the industry’s technological evolution. Let’s see how it works.

What were the primary specifications requested by BBC Northern Ireland for this new OB truck?

The request was to provide costs for the design, build, integration and commissioning of a new general-purpose outside broadcast solution, including any free-issue equipment. The specification was for digital and IP-enabled equipment, based on the following outlines:

• Proven 24/7 operational system with high levels of resilience and redundancy

• Provide video over IP infrastructure and be multi-format in nature

• Provide audio over IP, MADI, AES and analog inputs and outputs

• Be modular and scalable to provide flexible working environments

• Provide a roadmap to emerging technologies and processes in content acquisition and production

• Consider industry technology roadmaps to ensure future integration of the design solution with other facilities, both fixed and mobile

• Robust construction for an expected minimum 10-year service life

• Designed for ease of use

• Enable remote production

• Provision for internet services such as Skype and Zoom

• Provide compatibility with legacy infrastructures such as HD-SDI

How was working with Sony whilst developing the OB?

The project required additional software to be developed by the Sony Nevion team, who delivered on time and to our exact specifications. They were a pleasure to work with. On the hardware side, we incorporated the MLS mixer, and the Sony team provided excellent commissioning and support services to ensure a seamless integration.

How does this truck differ from traditional SDI-based OB vehicles?

The media flow is entirely IP, based on SMPTE ST 2110, with SDI interfaces. All media is transported over Arista networking.

How did you address the interoperability requirements between IP and existing SDI infrastructure?

From the outset, we identified and tested numerous aspects of the system design’s interoperability through vendor workshops, including those held at Calrec HQ. We also conducted several development workshops at Sony’s offices at Pinewood Studios, west of London.

What specific Sony Networked Live solutions are integrated into the truck, and why were they chosen?

The VideoiPath Orchestration and Broadcast Control system from Sony-Nevion was selected for its unparalleled flexibility and comprehensive capabilities.

What considerations were made to ensure the truck could serve as both a mobile unit and an additional studio gallery?

The rear termination panel offers a multitude of interconnections, providing a vast array of options for connecting to external premises. The network is designed to offer an extensive number of media interfaces, ensuring seamless connectivity to external production control rooms.

How does the spine-leaf network configuration benefit the truck’s operations?

The chosen spine-leaf topology provides better scalability and flexibility for the BBC’s future needs, in addition to higher performance. Additional spine switches can be added, each connected to every leaf, to increase capacity.
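In a spine-leaf fabric every leaf switch has an uplink to every spine, so each spine added raises the uplink capacity of every leaf at once. The sketch below shows that arithmetic and the resulting oversubscription ratio; the port counts and link speeds are hypothetical and are not the configuration of the BBC Northern Ireland truck.

```python
def leaf_uplink_gbps(spines: int, uplink_gbps: float, links_per_spine: int = 1) -> float:
    """Each leaf connects to every spine, so its uplink capacity grows with the spine count."""
    return spines * links_per_spine * uplink_gbps


def oversubscription(downlink_ports: int, downlink_gbps: float, uplink_total_gbps: float) -> float:
    """Device-facing bandwidth divided by uplink bandwidth; 1.0 or less means non-blocking."""
    return downlink_ports * downlink_gbps / uplink_total_gbps


# Hypothetical leaf: 48 x 25 GbE ports towards devices, 100 GbE uplinks to each spine
for spines in (2, 4, 12):
    up = leaf_uplink_gbps(spines, 100)
    print(f"{spines} spines: {up} Gb/s uplink, ratio {oversubscription(48, 25, up):.1f}")
```

Uncompressed ST 2110 media is usually run close to non-blocking, which is why adding spines, rather than replacing leaves, is the natural way to grow such a network.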

What measures were taken to ensure redundancy and reliability in the IP-based system?

We selected the network design and vendor equipment to support SMPTE ST 2022-7. Redundant Precision Time Protocol (PTP) Grandmaster clocks from Telestream provide a redundant reference source locked to GPS. In addition, we used dual network paths to guarantee uninterrupted service: SMPTE ST 2022-7 provides seamless protection switching, so if one path fails, the other takes over without interrupting the service.
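SMPTE ST 2022-7 seamless protection sends identical RTP streams over two independent paths and lets the receiver rebuild a single stream from whichever copy of each packet arrives. The sketch is a deliberately simplified illustration: it merges two captured packet lists by RTP sequence number and ignores the 16-bit sequence wraparound and the timing and buffer constraints that a real ST 2022-7 receiver must respect. The function and variable names are hypothetical.

```python
from typing import Iterable


def merge_2022_7(path_a: Iterable[tuple[int, bytes]],
                 path_b: Iterable[tuple[int, bytes]]) -> list[bytes]:
    """Rebuild one packet stream from two redundant paths, keyed by RTP sequence number.

    Simplifications (assumptions): sequence numbers never wrap, and the paths are read
    one after the other, so path A's copy is kept when both delivered a packet.
    """
    received: dict[int, bytes] = {}
    for stream in (path_a, path_b):
        for seq, payload in stream:
            received.setdefault(seq, payload)  # the duplicate from the other path is dropped
    return [received[s] for s in sorted(received)]


# Path A loses packet 2 and path B loses packet 4: the merged output is still complete.
a = [(1, b"p1"), (3, b"p3"), (4, b"p4")]
b = [(1, b"p1"), (2, b"p2"), (3, b"p3")]
print(merge_2022_7(a, b))  # [b'p1', b'p2', b'p3', b'p4']
```

A packet is lost only if both paths drop the same sequence number, which is why the two paths must be genuinely independent.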

How scalable is this OB truck for future technological advancements?

The Arista network equipment has been carefully selected and configured to support the high data rates required for video and audio streams, providing future-proofing for higher resolutions, including UHD. The Selenio Network Processors from Imagine, along with the Sony vision mixer and cameras, can select UHD as the production format.

How does this IP-based OB truck contribute to BBC Northern Ireland’s overall transition to IP technology?

The BBC’s live production environment is an invaluable training ground for staff, thanks to the IP ecosystem. It allows us to connect future elements of the broadcast chain at BBC premises in Northern Ireland.

Can you describe the truck’s capabilities for quick turnaround times between productions?

Future versions of the VideoiPath system will include the ability to easily save and recall salvos and snapshots of setups, eliminating the need for engineering teams to set up multiple pieces of equipment.
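The snapshot-and-salvo idea can be illustrated with a small sketch: a setup is stored as a destination-to-source routing table, and recalling it yields only the route changes that are actually needed, to be applied together as one salvo. This is a hypothetical model in plain Python, not the VideoiPath API, and the signal names are invented for the example.

```python
import json
import tempfile
from pathlib import Path


def save_snapshot(routes: dict[str, str], path: Path) -> None:
    """Store a routing setup (destination -> source) on disk."""
    path.write_text(json.dumps(routes, indent=2, sort_keys=True))


def recall_snapshot(path: Path) -> dict[str, str]:
    """Load a previously saved routing setup."""
    return json.loads(path.read_text())


def salvo(current: dict[str, str], target: dict[str, str]) -> list[tuple[str, str]]:
    """Route changes needed to go from the current setup to the target one,
    so they can be applied together as a single salvo."""
    return [(dst, src) for dst, src in sorted(target.items()) if current.get(dst) != src]


with tempfile.TemporaryDirectory() as tmp:
    snap = Path(tmp) / "football_match.json"  # hypothetical snapshot name
    save_snapshot({"MON-1": "CAM-1", "MIX-IN-3": "CAM-3"}, snap)
    changes = salvo({"MON-1": "CAM-1", "MIX-IN-3": "CAM-5"}, recall_snapshot(snap))
    print(changes)  # [('MIX-IN-3', 'CAM-3')]
```

Sending only the differences keeps a recall fast and avoids briefly re-taking routes that are already correct.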

Besides the BBC Northern Ireland OB truck, what other major projects is Megahertz currently working on or planning for the near future?

We are currently delivering a significant project: a studio and audience viewing space to the University of Bristol. This has been an exciting project delivered into a new building with demanding specifications. The studio is designed as a flexible space for virtual production.

In the smart cinema, we will research how sounds and images affect people. We will use eye-tracking cameras and wearable technologies to look at how heart rate and other biometrics respond.

What do you consider to be the most challenging aspect of system integration in today’s rapidly evolving broadcast technology landscape?

Technology has always evolved, and it has always done so rapidly. Since its inception, Megahertz has been at the forefront of new media technologies, devices and production workflows. The key is staying connected to standards development, keeping your staff trained, and regularly gaining experience on real-world productions. Our engineering staff includes members of SMPTE, the IET and other professional bodies.

Looking at your portfolio of projects, which integration do you find most satisfying from a technical and creative standpoint, and why?

We excel at helping our clients resolve urgent business issues. We think for ourselves, respond quickly, and work together. This is why our customers keep coming back to us.

What trends do you see emerging in OB truck design and integration that broadcasters and production companies should be aware of?

The shift to IP-based systems is a game-changer. These scalable and flexible production platforms integrate seamlessly with cloud production tools and contribution services. Trucks must become more compact and flexible so they can be easily reconfigured for different productions. Production units must be able to work with multiple output formats. As always, 4K and HDR must be delivered with the utmost quality on these new platforms, especially for sports and live events. However, SDI still has a place at the remote end, and we are seeing many requests for 12G solutions that are being integrated into the ecosystem.

Now that the Paris 2024 Olympic Games are over, it is fitting to analyse their outcome from the points of view of technology and television production. Last April, TM Broadcast presented, in an extensive article, the technical and production approaches that the entity in charge, OBS, had set for the staging of the Games in Paris.

By Luis Sanz, Audiovisual Consultant

In a good number of cases, these approaches entailed challenges at different levels. As we will see below, practically all of these challenges have been satisfactorily resolved. For the drafting of this article we have relied on the information included in the document “Olympic Games Paris 2024 Media Guide” by OBS and comments by Isidoro Moreno, Chief Engineer at OBS.

The first challenge to overcome was the coverage of the opening ceremony, which, breaking with all Olympic precedent, was staged entirely outdoors along a route of more than 6 km on the Seine River, and used the largest number of cameras (105) and special equipment ever deployed for a single event. Four custom-made stabilized boats were prepared, equipped with cameras on gyro-stabilized mounts, which made it possible to capture the parade of the athletes’ boats.

Below, we review the fulfillment of the goals set by OBS in the staging of the Paris 2024 Olympic Games.

OBS AND THE MRHs (MEDIA RIGHTS HOLDERS)

OBS is at the service of the Olympics broadcast rights holders (MRHs). While OBS is responsible for producing the multilateral coverage of the Games, the MRHs customize the programming for their respective audiences.

Thirty-five MRH organizations reached agreements with the International Olympic Committee and acquired the rights to broadcast the Paris 2024 Olympic Games in their territories; together with their sublicensees, they distributed the Games to 176 countries.

Some services offered by OBS to MRHs for their customized productions:

› ON-FIELD REPORTER POSITIONS FOR BASKETBALL, FOOTBALL, HOCKEY, VOLLEYBALL

OBS has offered exclusive spaces for MRHs, strategically located at field-of-play (FOP) level and within the athletes’ tunnel, to comment on live events as they unfolded.

› HALFTIME INTERVIEW POSITIONS FOR BASKETBALL, FOOTBALL, HOCKEY

The MRHs have had a unique opportunity to conduct brief interviews during the half-time period of the matches. These real-time interviews have provided a valuable window into athletes’ or coaches’ strategies, emotions, and perspectives at crucial moments in competitions.

› INFLUENCER POSITIONS

With the introduction of Influencer Booths at all competition venues, OBS has offered MRHs a unique opportunity to position their social media equipment at the heart of Olympic venues. These strategically selected spaces provide the perfect setting to capture and share the most captivating moments of the Games.

In the midst of the COVID-19 pandemic, during the Tokyo Olympics, innovative solutions emerged to engage with the supporters of the Games, and OBS had to be particularly creative to ensure that athletes, families and fans could connect with the emotions of Olympic victories despite health restrictions.

The Athlete Moment initiative embodied OBS’s imagination and determination to enhance the Olympic viewing experience while providing comfort for athletes. The magic of the Athlete Moment happens at strategically placed stations as athletes leave the field of play, giving them the opportunity to interact with their loved ones immediately after competing. It is rightly described as the “most public and private moment.” Family members or friends who are unable to attend in person due to distance or physical limitations can still share in this unique experience.

In Paris, this opportunity was not limited to families and close friends: athletes were also able to connect with their sports clubs and share the excitement while receiving remote support. The Athlete Moment was used for 35 sports and disciplines in 26 venues, and more than 80 percent of athletes took part in it. In total, OBS produced more than 200 moments at these Games, totalling around 600 hours of recording.

Since the PyeongChang 2018 Winter Olympics, OBS has explored the potential of 5G networks, pioneering the use of state-of-the-art mobile technology to support broadcast operations at the Olympic Games. Building on this, OBS continued to push the boundaries of what was possible with 5G technology during Tokyo 2020, and at Beijing 2022 it implemented a robust network architecture to broadcast live content via 5G at all competition venues.

Now at Paris 2024, through advanced 5G mobile technology, OBS has offered immersive coverage of events such as the unique Opening Ceremony on the Seine River or the exciting sailing competitions, capturing the magic and spirit of the Olympic Games from every imaginable angle.

Although 5G technology is commercially available, it is very difficult to guarantee broadcast capacity without a private network. At the Games, around 450,000 people were simultaneously sending photos, making videos and posting on social media. The public network therefore becomes saturated and, even though it was reinforced, there was a risk that at some point during the broadcast the systems would be unable to connect. To avoid this and ensure stable 5G coverage, OBS decided to implement six private 5G networks, which it managed both for its own use and for that of broadcasters.

For the implementation of these networks, OBS relied on the services provided by Orange, the technology partner for the Paris 2024 Olympic Games. These networks ensured high-quality, reliable images as well as secure signal transmission to the IBC, covering key areas along the Seine and enabling signals to be transmitted smoothly.

For the opening ceremony, OBS and Samsung have partnered to bring fans closer to the Olympic Games than ever before.

OBS and Samsung installed more than 200 Galaxy S24 Ultra smartphones on the bow and sides of each of the athletes’ 85 boats. Images captured and shared with Samsung smartphones provided an intimate view of athletes’ reactions during this historic event.

To ensure sufficient bandwidth, the 5G network transmitted signals from the boats to nearby receivers. In addition, more than ten 5G antennas were strategically placed along the river, establishing France's first independent 5G network.

OBS extended the use of 5G-connected cameras to the sailing competitions in Marseille. There, OBS and Samsung deployed the same technology used for the Opening Ceremony, installing a Galaxy S24 Ultra smartphone on selected competition boats so that fans could experience the thrill of the race up close and truly immerse themselves in the action.

In another discipline, kitesurfing (in which athletes ride a board over the waves, pulled by a wind-driven kite attached to them), athletes wore helmets fitted with mobile phones equipped with panoramic sensors, broadcasting live action images directly from the sailing course. Given the dynamic and often turbulent nature of sailing environments, the mobile phones used featured advanced image stabilization technology.

Augmented reality (AR) studios are at the forefront of sports coverage and are redefining the way content is presented and consumed by audiences around the world.

By integrating cutting-edge technologies, these studios deliver immersive, interactive and visually captivating broadcasts that elevate storytelling and viewer engagement to new heights.

In a revolutionary move for Olympic broadcasting, OBS, in collaboration with INTEL, installed an AR studio in the Olympic Village for the Paris 2024 Olympics. This setup generated AR content featuring athletes and enabled MRHs to conduct live remote interviews with them. As a result, broadcasters gained greater access to athletes, while viewers could experience interviews in a more engaging and intimate way.

The studio also offered 3D AR for live broadcasts. Athletes were captured in 3D, allowing their holographic representations to be broadcast live. Not only did this add depth and realism to the images, it also provided multiple angles and perspectives that were previously unattainable.

In addition to live interviews, AR was used to create short-form content. These clips, containing 20 to 90 seconds of original content, are ideal for sharing on social media platforms and reaching a wider audience. Using AR in these clips adds an extra layer of emotion, making the content more visually appealing and easier to share.

At Paris 2024, OBS unveiled an innovative platform for delivering the cutting-edge AR content generated in the Olympic Village's AR studio: the 3D AR Space Video Library of Olympic Athletes. For the first time ever, MRHs had access to high-definition 3D AR videos that bring personal stories to life as athletes share their journeys and their knowledge of their sports.

What makes this library one of a kind is its continued expansion. Continuous capture of new content, before and during the Games, ensures that MRHs always have access to the most recent and dynamic representations of their favourite athletes. This unprecedented resource not only enriches storytelling and coverage, but also forges a closer connection between fans and the spirit of the Olympic Games.

The new Augmented Reality Studio at the Olympic Village aims to redefine the way viewers experience the Olympic Games, bringing athletes' stories to life as never before. It is not only revolutionizing storytelling but also empowering the remote operations of MRHs, granting them unprecedented access to athletes and their sports.

The introduction of film (cinema-style) cameras in live sports broadcasts marks a significant change in the way Olympic sports are presented to the audience and is expected to vastly improve storytelling.

By using these camera systems for the first time, the 2024 Olympic Games have combined the art of cinema with the excitement of live sports, offering viewers an unprecedented immersive experience.

One of the standout features of film cameras is their shallower depth of field compared with traditional broadcast cameras. This allows a more pronounced separation between the subject (the athletes) and the background, creating visually striking, sharply focused images against a blurred background. Film cameras are great for conveying emotion, capturing everything from an athlete's euphoria after a win to the tension of a crucial match point.

OBS integrated film-camera coverage into every sport wherever it was editorially relevant. During key moments, such as athletes in the tunnel before the match, scenes of jubilation or anguish, and moments of intense concentration, these cameras were used to create more intimate shots.

Camera manufacturers offered OBS cameras well suited to its needs. When recording in HDR-UHD, these cameras used the OBS conversion tables (LUTs), which have become a standard used all over the world.

The entire production was in HDR. All the cameras worked in HDR-UHD, except for some that worked in HDR 1080p because of space or performance limitations; this was the case with some RF cameras, whose latency could have been inadequate for broadcasting.

As AI continues to revolutionize various industries, its impact on sports broadcasting has been nothing short of transformative.

AI applications, tested during the Gangwon 2024 Youth Olympic Games, have completely changed the way broadcasters cover the Games. Together with its core partners, OBS has leveraged cutting-edge AI technologies to push the boundaries of Olympic broadcasting.

Throughout its years of experimentation with AI, one of OBS's primary goals has been to ensure that AI output is a faithful, unbiased representation of reality. This commitment upholds OBS's guiding principle of providing neutral coverage and avoiding favoritism towards any country. The Olympic AI Agenda recognizes these concerns, addressing the myriad risks and associated ethical issues, including data privacy, security, accountability, equity, job displacement and environmental impact.

In Paris, OBS produced more than 11,000 hours of content, 15 percent more than it delivered for Tokyo 2020, and AI technology played a key role both in this huge effort and in tailoring content to the needs of MRHs.

› AUTOMATIC VIDEO PRODUCTION

Managing and curating highlights from the wide range of content produced during the Olympic Games poses a significant challenge. However, advances in AI have opened up new possibilities for efficiently generating clips of key action moments in real time. Working with sponsor INTEL, whose AI is trained on the INTEL® Geti platform, OBS used this technology to create highlight videos automatically in various formats and languages. This capability allows broadcasters to access and customize content, focusing specifically on the highlights of athletes from their own National Olympic Committee (NOC), thereby enhancing their ability to deliver coverage tailored to their viewers' preferences.
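The final step described above, narrowing an automatically generated pool of highlights down to one NOC's athletes, can be pictured with a minimal sketch. The `Clip` record, its fields, the 0 to 1 score scale and the `highlights_for_noc` helper are all hypothetical; the article does not describe the data model or selection logic behind the INTEL Geti-trained system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """Hypothetical highlight record emitted by an upstream AI detector."""
    clip_id: str
    sport: str
    noc: str           # three-letter NOC code of the featured athlete
    score: float       # detector confidence, 0.0 to 1.0 (assumed scale)
    duration_s: float  # clip length in seconds


def highlights_for_noc(clips, noc, max_total_s=60.0, min_score=0.5):
    """Return the best-scoring clips for one NOC that fit in max_total_s."""
    candidates = sorted(
        (c for c in clips if c.noc == noc and c.score >= min_score),
        key=lambda c: c.score,
        reverse=True,
    )
    reel, total = [], 0.0
    for clip in candidates:
        # Greedy fill: skip any clip that would overrun the reel length.
        if total + clip.duration_s <= max_total_s:
            reel.append(clip)
            total += clip.duration_s
    return reel


if __name__ == "__main__":
    pool = [
        Clip("a", "judo", "FRA", 0.90, 20.0),
        Clip("b", "judo", "JPN", 0.95, 15.0),
        Clip("c", "swimming", "FRA", 0.70, 30.0),
        Clip("d", "swimming", "FRA", 0.60, 25.0),
    ]
    print([c.clip_id for c in highlights_for_noc(pool, "FRA")])  # ['a', 'c']
```

The greedy fill keeps the logic readable; a production system would more likely weigh editorial rules, sport variety and language versions as well.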

The proof of concept of this AI-powered approach was successfully demonstrated in ice hockey at the Gangwon 2024 Winter Youth Olympic Games. Building on this experience, Paris 2024 expanded the scope to 14 sports and disciplines, demonstrating AI's ability to optimize content production.

› CAPTURING EVERY ANGLE WITH MULTI-CAMERA PLAYBACK SYSTEMS

Paris 2024 offered full 360-degree views of the athletes' performances. Thanks to innovative multi-camera playback systems developed in collaboration with main sponsor Alibaba, viewers enjoyed slow-motion replays with freeze frames from multiple angles. Using cloud-based AI and deep learning, these systems created 3D models and texture mappings from images captured by up to 45 cameras. Seventeen systems were deployed across 14 venues and 21 disciplines. The freeze-frame effect, applied to live sports production, offers an unprecedented perspective on athletic performance.

› TRANSFORMING HOURS OF COMMENTARY AND INTERVIEWS INTO AUTOMATED TRANSCRIPTIONS

As with Tokyo 2020 and Beijing 2022, OBS used AI at Paris 2024 to transcribe live commentary and interviews across all sports and disciplines. By converting the audio streams into text with cloud-based speech-to-text models, OBS allowed broadcasters to access the transcripts easily in the form of captions.
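To make the "transcripts in the form of captions" step concrete, here is a small sketch that turns timestamped transcript segments into SubRip (SRT) caption text. The article does not say which caption format OBS delivers; SRT is chosen purely as a familiar example, and the `(start_s, end_s, text)` segment shape is an assumed output of some upstream speech-to-text service.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    ms = round(seconds * 1000)
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments):
    """segments: iterable of (start_s, end_s, text) from a speech-to-text step."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text.strip()}\n"
        )
    # SRT separates caption blocks with a blank line.
    return "\n".join(blocks)


if __name__ == "__main__":
    print(to_srt([(0.0, 2.5, "And she takes gold!"), (2.5, 4.0, "What a finish.")]))
```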

› INNOVATIONS IN TRACKING TECHNOLOGY FOR DYNAMIC SPORTS

In collaboration with title sponsor Omega, OBS launched an innovative AI application called 'Athlete and Object Tracking' at the Paris 2024 Games, which was used in seven disciplines. This innovation addresses a common challenge for spectators: identifying and following athletes or objects in dynamic sports environments. For instance, telling sailing boats apart or following rowers from afar can be confusing. The new AI technology simplifies this by using advanced pixel detection algorithms to recognize and track objects such as sailboats and athletes. The system creates patterns from the captured images and integrates tracking data, ensuring accurate identification and continuous tracking.
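The "continuous tracking" half of the problem, keeping the same identity on a boat from one frame to the next, can be illustrated with a textbook greedy nearest-neighbour tracker. This is a swapped-in generic technique, not the Omega/OBS algorithm, which the article does not describe; the detections are assumed to arrive as `(x, y)` centres from some upstream detector, and `track` and `max_dist` are hypothetical names.

```python
import math
from itertools import count


def track(frames, max_dist=50.0):
    """Greedy nearest-neighbour tracker.

    frames: list of per-frame detection lists, each detection an (x, y) centre.
    Returns one {track_id: (x, y)} dict per frame.
    """
    new_id = count()
    previous = {}  # track_id -> position in the previous frame
    history = []
    for detections in frames:
        unmatched = dict(previous)
        current = {}
        for det in detections:
            # Closest still-unmatched track from the previous frame, if any.
            best = min(unmatched, key=lambda t: math.dist(unmatched[t], det), default=None)
            if best is not None and math.dist(unmatched[best], det) <= max_dist:
                current[best] = det
                del unmatched[best]
            else:
                current[next(new_id)] = det  # too far from every track: start a new one
        previous = current  # tracks not seen in this frame are dropped
        history.append(current)
    return history
```

Real trackers usually add motion prediction (for example a Kalman filter) and a globally optimal assignment such as the Hungarian algorithm, so that identities survive occlusions and close crossings.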

› REAL-TIME TRAJECTORY ANALYSIS WITH AI FOR ARCHERY

Real-time trajectory analysis with AI in archery is changing the way television viewers experience the sport.

Using high-speed cameras that meticulously record the trajectory of each arrow from the moment it is released until it hits the target, the system let viewers watch detailed 3D representations of arrows in flight in real time.
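Once an arrow's 3D position has been sampled over time, simple flight metrics follow directly. The sketch below assumes the positions (in metres) have already been reconstructed, for instance by triangulating synchronized high-speed cameras; neither that step nor the `flight_stats` helper comes from the article.

```python
import math


def flight_stats(samples):
    """samples: time-ordered list of (t_s, (x, y, z)) arrow positions in metres."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    # Path length: sum of straight segments between consecutive samples.
    path_m = sum(math.dist(p0, p1) for (_, p0), (_, p1) in zip(samples, samples[1:]))
    flight_time_s = samples[-1][0] - samples[0][0]
    (t0, p0), (t1, p1) = samples[0], samples[1]
    return {
        "flight_time_s": flight_time_s,
        "path_m": path_m,
        "mean_speed_mps": path_m / flight_time_s,
        "release_speed_mps": math.dist(p0, p1) / (t1 - t0),  # first segment only
    }
```

Denser sampling (a higher camera frame rate) makes the segment-by-segment path length a closer approximation of the arrow's true curved flight.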

› UNDERSTANDING ATHLETIC EXCELLENCE THROUGH INTELLIGENT STROBE ANALYSIS

By using advanced AI, OBS and Omega have introduced strobe analysis at the Paris 2024 Olympic Games, which has enhanced the viewing experience of diving, athletics, and artistic gymnastics, and redefined the viewer’s understanding and appreciation of athletic performance. This technology captures movement, frames it, and applies intelligent background management in near real-time, allowing viewers to study the successive movements and biomechanical positions of athletes at critical points during their performances.
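The basic idea behind a strobe image (estimate the static background, then paste the moving athlete from several frames onto it) can be shown with a deliberately simple sketch. It assumes a locked-off camera, greyscale frames stored as equal-sized 2-D lists, and a per-pixel median as the background model; the "intelligent background management" OBS and Omega describe is certainly far more sophisticated, and `strobe_composite` and `threshold` are hypothetical names.

```python
from statistics import median


def strobe_composite(frames, threshold=30):
    """frames: list of equally sized 2-D lists of grey levels (0-255)."""
    h, w = len(frames[0]), len(frames[0][0])
    # Background estimate: per-pixel median over all frames (static camera assumed).
    background = [[median(f[y][x] for f in frames) for x in range(w)] for y in range(h)]
    out = [row[:] for row in background]
    for f in frames:  # later frames are drawn on top of earlier ones
        for y in range(h):
            for x in range(w):
                # Pixels that differ strongly from the background belong to the athlete.
                if abs(f[y][x] - background[y][x]) > threshold:
                    out[y][x] = f[y][x]
    return out
```

The median works as a background model only if each pixel shows the background in most frames, which is why strobe sequences are typically built from a moving athlete against a fixed camera.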

In artistic gymnastics, strobe analysis was applied in the floor, horizontal bar, uneven bars and vault competitions. The technology is designed to deconstruct each routine into its most elemental parts, offering a detailed examination of every twist, turn and aerial maneuver.

By freezing key moments in a routine with unprecedented clarity and flexibility, AI-powered strobe analysis allows viewers to appreciate the sheer athleticism and artistry that define Olympic gymnastics. Intelligent strobe analysis integrates seamlessly into live broadcasts, so viewers not only watch the sport unfold in real time but also gain immediate insight into the nuances of an athlete's execution, which enhances the overall viewing experience.

› IMPROVED DATA GRAPHS FOR DIVING

One of the most exciting developments in Paris has been OBS’s ability to use AI and data to give viewers a clearer and more enlightening view of diving.

Omega and OBS have pioneered an AI application called “Enhanced Data Graphs” specifically for diving. This technology uses AI to detect and display an athlete's body position in real time. Detailed metrics, such as the diver's height above the board at different stages of the dive, rotation angles and entry into the water, were shown on screen. By viewing these data alongside the dive, the audience can better understand the difficulty and execution of each dive.
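Two of the metrics mentioned, height above the board and rotation, can be derived from per-frame body keypoints. The sketch assumes an upstream pose estimator supplies hip and shoulder positions in image coordinates and that a metres-per-pixel scale is known from camera calibration; these inputs and the function names are hypothetical, not part of the Omega/OBS system as described.

```python
import math


def height_above_board_m(point_y_px, board_y_px, metres_per_px):
    """Image y grows downwards, so a point above the board has a smaller y."""
    return (board_y_px - point_y_px) * metres_per_px


def cumulative_rotation_deg(poses):
    """poses: per-frame ((hip_x, hip_y), (shoulder_x, shoulder_y)) in image coords."""
    total = 0.0
    prev = None
    for (hx, hy), (sx, sy) in poses:
        angle = math.atan2(sy - hy, sx - hx)  # orientation of the hip-to-shoulder axis
        if prev is not None:
            # Unwrap: map each frame-to-frame step into [-pi, pi) so that
            # crossing the +/-180 degree boundary is not read as a full turn.
            total += (angle - prev + math.pi) % (2 * math.pi) - math.pi
        prev = angle
    return math.degrees(total)
```

The unwrapping step assumes the diver turns less than half a revolution between consecutive frames, which a sufficiently high frame rate guarantees.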

› SERVICE REACTION TIME IN TENNIS

As a novelty in Paris, OBS and Omega have introduced the AI app “Service Reaction Time”, which uses intelligent motion detection.

This innovative system detects and analyzes the player's movements, generating data to accurately measure their reaction time when receiving serve. Using dedicated cameras, the intelligent motion detection system identifies key elements such as the start of the serve, shoulder position, rotation and the exact moment of reaction based on the trajectory of the ball.
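In its simplest form, a reaction time is the interval between the server's ball contact and the first frame in which the receiver clearly starts to move. The sketch below uses plain frame differencing as the motion signal and assumes the contact frame is already known; it is a generic illustration, not the "Service Reaction Time" implementation, and `motion_scores`, `reaction_time_s` and the threshold are hypothetical.

```python
def motion_scores(frames):
    """Mean absolute difference between consecutive greyscale frames (2-D lists),
    e.g. crops around the receiving player. The first frame scores 0."""
    scores = [0.0]
    for a, b in zip(frames, frames[1:]):
        n = len(a) * len(a[0])
        scores.append(sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / n)
    return scores


def reaction_time_s(motion, contact_frame, fps, threshold):
    """Seconds from ball contact to the first frame whose motion exceeds threshold."""
    for i in range(contact_frame + 1, len(motion)):
        if motion[i] > threshold:
            return (i - contact_frame) / fps
    return None  # no reaction detected in the clip
```

Frame rate bounds the precision: at 100 frames per second the measurement resolves steps of 10 ms.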

› SPIN DETECTION THROUGH AI FOR TABLE TENNIS

Table tennis, or ping-pong, a sport known for its speed and precision, benefits significantly from recent advances in AI, particularly in ball spin detection. This innovation provides live data, giving both Olympic viewers and commentators information that was previously difficult to measure with traditional methods.

The AI-powered spin detection system used for table tennis combines sophisticated algorithms with high-speed cameras. These cameras capture detailed information about the trajectory and speed of the ball. Thanks to extensive training on large datasets of video footage, the AI algorithm can accurately identify and quantify the various types of spin applied to the ball, such as backspin, and determine the ball's rotational speed in a fraction of a second, thus providing near real-time metrics for broadcasters and viewers alike.
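The arithmetic behind a rotational-speed figure is simple once the orientation of a visible marking on the ball has been measured in consecutive high-speed frames: spin rate equals the average angle turned per frame, times the frame rate, divided by one full turn (2π). The sketch assumes those per-frame angles are already available; how the AI system actually estimates spin is not described in the article, and `spin_rate_rps` is a hypothetical name.

```python
import math


def spin_rate_rps(angles_rad, fps):
    """angles_rad: orientation of a tracked ball marking in consecutive frames.
    Returns revolutions per second."""
    if len(angles_rad) < 2:
        raise ValueError("need at least two frames")
    steps = [
        # Shortest signed step between frames, mapped into [-pi, pi).
        (a1 - a0 + math.pi) % (2 * math.pi) - math.pi
        for a0, a1 in zip(angles_rad, angles_rad[1:])
    ]
    mean_step = sum(steps) / len(steps)
    return abs(mean_step) * fps / (2 * math.pi)
```

Because each step is taken as the shortest turn, the camera must see the ball rotate less than half a revolution between frames; faster spin would alias and be under-reported, which is one reason such systems rely on high-speed cameras.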

› OBS CLOUD 3.0

The launch of OBS Cloud 3.0, in partnership with global title sponsor Alibaba, has transformed the way we experience the Games.

OBS Cloud 3.0 represents a significant leap forward in broadcast technology, revolutionizing workflows and delivery methods for the Olympic Games. By utilizing cloud capabilities, OBS Cloud 3.0 minimizes the need for extensive equipment and resources, dramatically reduces setup times, and offers unparalleled flexibility.

At the Paris 2024 Games, this cloud-based infrastructure supported several key features, ensuring a seamless and enhanced viewing experience for audiences around the world.

One of the standout features of OBS Cloud 3.0 is its ability to deliver a wide range of content to MRHs with no interruptions, either through Live Cloud or the Content+ portal.