A year full of news and advances in broadcast comes to an end. 2024 has been a challenge for our sector, with numerous important sporting, political and social events to cover along with demanding technical requirements. Far from being an obstacle, this is an incentive for our industry to continue improving and innovating.

Speaking of event coverage, IP is already present in all phases of audiovisual production, from workflows to remote production and distribution. LiveU, Lawo and Blackmagic Design tell us about their experiences with this technology.

In the meantime, UHD continues to take firm steps. Proof of this is the article in this issue describing how the Spanish public broadcaster, RTVE, launched Europe’s first UHD channel a few months ago, on the occasion of the Paris Olympics. It was an important achievement full of challenges, and its workings are described in detail in this report by the broadcaster’s technical team.

Editor in chief

Javier de Martín editor@tmbroadcast.com

Key account manager

Patricia Pérez ppt@tmbroadcast.com

Editorial staff press@tmbroadcast.com

And finally, let’s talk about artificial intelligence, without a doubt a topic that has been the subject of many conferences, debates and articles this year. Much is being done and more is to come, and no one today can say where the limits of this technology will lie. The lab analysis featured in this issue reveals, among other things, how artificial intelligence is fast becoming a standard feature of PTZ cameras.

We bid farewell to 2024 and look forward to 2025, wishing everyone a successful year.

See you all in January!

Creative Direction

Mercedes González mercedes.gonzalez@tmbroadcast.com

Administration

Laura de Diego administration@tmbroadcast.com

Published in Spain

ISSN: 2659-5966

TM Broadcast International #136 December 2024

The moderator, Luis Sanz, opened the conference by stating: “Radiotelevisión Española, the first Spanish broadcaster, is once again a pioneer in an essential technological action to improve services for viewers with the launch of the first-ever Ultra High Definition channel in Europe.”

By Ronen Artman, VP Marketing, LiveU

TECHNOLOGY | LED

A new way for audiovisual production IMMERSIVE LED

The world of cinema and television has always turned to innovative techniques and technologies to bring new images and sounds to life.

INTERVIEW | ZERO DENSITY

Improving virtual production with Zero Density

Interview with Ralf van Vegten

TEST ZONE | SONY BRC-AM7

Sony BRC-AM7

Artificial Intelligence comes standard

The integration of AI-controlled functions, together with some of its technical features, makes this PTZ a different kind of camera. Combined, they represent a significant leap forward in the workflows of this type of equipment.

ARRI introduces ALEXA 265, a new-generation 65 mm camera that responds to feedback from users of the ALEXA 65, its predecessor. ALEXA 265 combines a small form factor with a revised 65 mm sensor, delivering higher image quality through 15 stops of dynamic range and enhanced low-light performance. Featuring the same LogC4 workflow, REVEAL Color Science, and accessories as ARRI’s state-of-the-art ALEXA 35, plus a new filter system, ALEXA 265 makes 65 mm as easy to use as any other format.

The ALEXA 265 camera body is based on the compact ALEXA 35 and despite containing a sensor three times as large, is only 4 mm longer and 11 mm wider. Using this body design means ALEXA 265 is less than one-third the ALEXA 65’s weight (3.3 kg vs. 10.5 kg) and takes advantage of ARRI’s latest cooling and power management technologies. While the camera’s small size and weight allow it to be used in ways never imagined for 65 mm—from drones and stabilizers to the most space-constrained locations—its efficiencies make it faster to work with on set. Boot-up time and power draw have been improved, and compatibility with the ALEXA 35 accessory set opens vastly more rigging options.

Feedback from ALEXA 65 users over the last decade made the dramatic reduction in form factor a design priority for ALEXA 265, but also determined the approach to image quality.

Filmmakers wanted to retain the 6.5K resolution and large pixel pitch, but were interested in higher dynamic range and improved low-light performance. A brand-new and comprehensive revision of the 65 mm sensor was therefore developed for ALEXA 265, increasing the dynamic range from 14 to 15 stops and the sensitivity from 3200 to 6400 EI (ISO/ASA), with crisper blacks, greater contrast, and a lower noise floor.
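To put those figures in perspective, the short Python sketch below works through the arithmetic behind a "stop". It relies only on the standard photographic convention that each stop is a doubling of light; the function names are illustrative, and the results are idealized ratios rather than measured camera performance.

```python
import math


def stops_to_contrast_ratio(stops: float) -> float:
    """Each stop doubles the light level, so N stops span a 2**N : 1 range."""
    return 2.0 ** stops


def stops_between(ei_low: float, ei_high: float) -> float:
    """Number of stops separating two exposure index (EI) values."""
    return math.log2(ei_high / ei_low)


# Figures quoted in the article: 14 -> 15 stops, 3200 -> 6400 EI.
previous_range = stops_to_contrast_ratio(14)
revised_range = stops_to_contrast_ratio(15)
ei_gain = stops_between(3200, 6400)

print(f"14 stops is roughly {previous_range:,.0f}:1")
print(f"15 stops is roughly {revised_range:,.0f}:1")
print(f"3200 -> 6400 EI is a gain of {ei_gain:.0f} stop")
```

In other words, the one extra stop of dynamic range doubles the idealized scene contrast the sensor can hold, and the change in quoted EI is likewise exactly one stop.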

Delivering this higher image quality is a simple and efficient workflow that utilizes ARRI’s latest developments. The new-generation LogC4 workflow and 3D LUTs introduced for ALEXA 35 are now shared with ALEXA 265, which records ARRIRAW in-camera to the Codex Compact Drives used in all current ARRI cameras. Standard drive readers and docks can be used, as can Codex HDE (High Density Encoding), reducing file sizes by up to 40% without diminishing image quality. On-set monitors can be set up in HD or UHD, displaying SDR or HDR, or both. ARRI is updating its SDK to ensure that ALEXA 265 images are compatible with all major third-party software tools.

A unique feature of the ALEXA 265 is its filter cartridge system, which allows special filter trays, encased in a protective cartridge, to slide in front of the sensor. ARRI FSND filters from zero to ND2.7 in single-stop increments will be available with ALEXA 265 at the time of launch, and many more creative filter options are in the works. An encoded chip on the filter tray conveys information about whatever filter has been inserted; this information is available in the user interface and is also recorded in camera metadata for use on set and in post.

ALEXA 265 images are processed in-camera using ARRI REVEAL Color Science, introduced with the ALEXA 35 and also compatible with ARRIRAW images from the ALEXA Mini LF. REVEAL is a suite of image processing steps that collectively help the camera to capture more accurate colors, with subtler tonal variations. Skin tones are rendered in a flattering, natural way, while highly saturated colors and challenging colors such as pastel shades are displayed with incredible realism. All ALEXA 265 and ALEXA 35 cameras are super color-matched to each other, simplifying color grading, and the ALEXA 265’s advanced LED calibration streamlines virtual production and LED volume work.

The list of ALEXA 65 films and filmmakers over the last 10 years is a roll call of the industry’s most visionary projects and people. While 65 mm may only be accessible to relatively few productions, this historic format inspires many and represents the pinnacle of mainstream image acquisition. Now, with the launch of ALEXA 265, a new era of 65 mm begins—one that will redefine the format’s creative possibilities. ALEXA 265 will be available to productions from early 2025.

OpenDrives has unveiled Atlas 2.9, the latest version of its software-defined data storage solution, along with the introduction of the Atlas Professional feature bundle. The new mid-tier offering targets fast-growing, mid-sized media organizations, providing a balance of enterprise-grade performance, cost predictability, and scalable data management.

The Atlas Professional bundle fits between the entry-level Atlas Essentials and the high-end Atlas Comprehensive bundles, both launched earlier in 2024 with Atlas 2.8. According to OpenDrives, Atlas Professional is designed to meet the needs of organizations with moderately complex workflows that require robust performance while maintaining cost efficiency. It delivers features like Active/Passive High Availability (HA), Containerization, NFS over RDMA (NFSoRDMA), and a Single Pane of Glass (SPOG) administrative view, all on OpenDrives’ Ultra hardware platform.

Targeting mid-sized media workflows

Atlas Professional aims to support creative teams in mid-sized media organizations, providing scalable storage and advanced data management features necessary for both planned and unplanned projects. It is particularly suited for companies seeking both performance and cost predictability in a streamlined package. The platform’s ability to simplify the management of dispersed teams is key for organizations looking to stay agile in a competitive market.

Lawo introduces software version 10.12 for its latest mc² audio production consoles, featuring significant improvements in channel management, Waves integration, user experience, and system security. Designed for professionals in demanding audio environments, this update enhances efficiency, flexibility, and production reliability.

One of the key updates in version 10.12 is the new Strip Assign page, which streamlines channel management. The new page offers a modern, intuitive interface that makes assigning channels to fader strips quick and easy. Users can assign single or multiple channels in one step and reassign, duplicate, or swap channels between strips effortlessly. The page also allows for the direct customization of channel labels and fader strip colors, making it simple to tailor the console layout to the user’s preferences. A clear, visual representation of the console banks ensures that the number of sections matches the physical bays, offering an organized view that supports fast navigation. This update saves valuable setup time and ensures that operators can navigate their systems more efficiently during both live and studio operations.

Version 10.12 also brings substantial improvements to the integration between mc² consoles and Waves systems through the new ProLink protocol. This update replaces the older RFC protocol and enhances communication between mc² consoles and Waves SuperRack 14 systems. Label synchronization is now automatic, reducing manual entry errors. Additionally, the new access channel linking feature allows engineers to open a connected Waves Rack with a single button press on the mc² console. This provides quick access to plug-in adjustments and streamlines the workflow, making it easier for engineers to focus on delivering high-quality sound without navigating between systems.

The user interface and workflow have also been improved with version 10.12. The Signal List now includes new visual indicators that clearly show the status of Aliases, Waves, and Loopback connections, simplifying the management of signal paths. These updates enhance the clarity of signal health and connectivity, providing immediate feedback on the status of each signal. The new icons and updates to the signal list help operators manage complex, multi-console setups with greater ease.

Finally, version 10.12 strengthens system security with advanced features aligned with EBU R.143 cybersecurity guidelines. It introduces new password policies, including enforced expiration, complexity requirements, and the elimination of default passwords, ensuring a higher level of protection. Private/public key authentication is now available for secure access, minimizing risks associated with password management. The update also includes simple, guided procedures for password resets, making the process straightforward while maintaining robust security measures for the system.
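As a rough illustration of what such a policy involves, the Python sketch below checks a password against the three rules mentioned above: no default passwords, a complexity requirement, and enforced expiration. It is not Lawo's implementation; the thresholds, the list of default passwords and the function name are all hypothetical values chosen for the example.

```python
from datetime import date, timedelta

# Hypothetical policy values, chosen only for illustration.
MAX_AGE = timedelta(days=90)
MIN_LENGTH = 12
MIN_CHARACTER_CLASSES = 3
DEFAULT_PASSWORDS = {"admin", "password", "changeme"}


def policy_violations(password: str, last_changed: date, today: date) -> list[str]:
    """Return the list of policy rules that the given password breaks."""
    problems = []

    # Rule 1: factory or well-known default passwords are never accepted.
    if password.lower() in DEFAULT_PASSWORDS:
        problems.append("default password")

    # Rule 2: complexity, expressed as a minimum length plus a minimum
    # number of character classes (lower, upper, digit, symbol).
    if len(password) < MIN_LENGTH:
        problems.append("too short")
    classes = [
        any(c.islower() for c in password),
        any(c.isupper() for c in password),
        any(c.isdigit() for c in password),
        any(not c.isalnum() for c in password),
    ]
    if sum(classes) < MIN_CHARACTER_CLASSES:
        problems.append("not complex enough")

    # Rule 3: enforced expiration after a fixed maximum age.
    if today - last_changed > MAX_AGE:
        problems.append("expired")

    return problems


today = date(2024, 12, 1)
print(policy_violations("admin", date(2024, 1, 1), today))
print(policy_violations("Str0ng-Passphrase!", date(2024, 11, 1), today))
```

A real system would also store only salted hashes and would handle key-based authentication separately; the sketch covers the rule checks alone.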

Software version 10.12 is available now for all compatible mc² audio systems, offering audio professionals enhanced control, security, and operational efficiency.

Matrox® Video has officially entered the IP video gateway market with the introduction of Matrox Vion, a high-density IP video gateway, and a new update for the Matrox ConvertIP DSH SMPTE ST 2110 and IPMX transmitter/receiver. Together, these innovations help address the growing complexity of IP video workflows in ProAV and broadcast environments.

Vion is a compact, IT-inspired gateway designed to excel in both local and cloud-based IP video gateway workflows. Vion supports real-time encoding, decoding, transcoding, and conversion across multiple compressed and baseband formats, including H.264/HEVC, JPEG XS, ST 2110, IPMX, and NDI, addressing the challenges of interoperability between diverse applications across broadcast and ProAV workflows.

Vion is available in two versions, EX and NX, with the EX model offering additional SDI and HDMI inputs. Key benefits include:

> Efficiently convert, transcode, transmux, transrate, and transceive compressed IP signals over IP networks to ensure seamless media distribution.

> Take advantage of the HEVC 4:4:4 color codec to deliver superior desktop content, making it ideal for color-sensitive applications.

> Ensure compatibility across devices and future-proof your infrastructure with support for ST 2110-22, IPMX, and JPEG XS.

> Facilitate NDI media and protocol conversion, including seamless conversion between NDI and SRT, as well as NDI and IPMX.

> Instantly preview content locally with HDMI and audio outputs.

> Deliver multiple concurrent and bi-directional streams with advanced multi-channel encoding, decoding, transcoding, and cross-conversion.

The upcoming update for ConvertIP DSH introduces an IP-to-IP bridging feature, enabling conversion between uncompressed and compressed formats such as ST 2110-20, ST 2110-22, and IPMX. With this update, ConvertIP DSH becomes an even more versatile solution for IP video conversion, enhancing workflow flexibility.

“Vion and the new ConvertIP update represent strategic advancements in AV and broadcast technology,” said Spiro Plagakis, Vice President of Product Management at Matrox Video. “By supporting open standards, offering comprehensive protocol conversion, and delivering high-performance encoding and bridging options, these devices empower users to seamlessly adapt their IP workflows, whether on-premises or in cloud environments.”

With the launch of Vion and the ConvertIP DSH update, Matrox Video expands its IP technology portfolio, empowering users to unlock new levels of efficiency, interoperability, and flexibility in their IP workflows.

Vislink Technologies announces the launch of DragonFly V 5G, a groundbreaking bonded cellular miniature transmitter that combines 5G connectivity with ultra-lightweight, high-performance video streaming capabilities. This new transmitter is available for purchase alongside the previously announced DragonFly V COFDM model. With DragonFly V 5G, Vislink builds on its reputation for providing industry-leading solutions for live broadcast production, delivering seamless HD video streaming in a small and versatile device.

Key features include:

> 5G Bonding Connectivity: Compatible with public and private 5G networks ensuring greater bandwidth and reliable, high-quality video streaming.

> Compact and Lightweight Design: Weighing just 82 grams, DragonFly V 5G is ideal for use on drones, body-worn cameras, and other portable applications.

> High-Definition Video Support: Captures and streams video in formats up to 1080p 50/60 HDR.

> Versatile Inputs: Supports HDMI and SDI connections for seamless integration into production workflows.

> Low Power Consumption: Optimized for extended field use without compromising performance.

> Unmatched Reliability: Engineered to maintain robust performance in challenging conditions, such as crowded live events and remote locations.

Versatile Applications Across Industries

DragonFly V 5G transforms the way live video is captured and shared, offering endless possibilities:

> Sports Production: Capture dynamic angles using drones or on-field body-worn cameras for immersive coverage.

> News Broadcasting: Stream live footage from breaking news scenes with minimal setup time.

> Entertainment: Deliver stunning live event coverage with real-time streaming that ensures flawless viewer experiences.

> Public Safety: Provide live situational awareness for surveillance and first responder coordination.

Complementing the new DragonFly V 5G is DragonFly V COFDM, a miniature transmitter designed to deliver reliable, high-definition video in environments where signal integrity is crucial. Utilizing advanced COFDM technology, this device ensures resilient video links even under challenging conditions. Weighing just 73 grams, DragonFly V COFDM integrates seamlessly with drones and body-worn cameras, enabling dynamic, on-the-move live video capture for broadcasters. Its compact and durable design also makes it ideal for defense and public safety applications, providing real-time surveillance and situational awareness for critical missions.

LucidLink has unveiled a major update to its cloud storage and collaboration platform, designed to enhance real-time creative workflows across desktop, browser, and mobile devices. The new release aims to provide creative teams with instant, secure access to their data, no matter where they are working.

The latest version of LucidLink introduces a streamlined, collaboration-focused user interface, intended to unify the user experience across all platforms. The update is scalable, making it suitable for teams of all sizes, from individual freelancers to large enterprises. LucidLink’s flexible pricing model and infrastructure support a wide range of collaborative needs, ensuring users can work without technical or geographic limitations.

Peter Thompson, Co-Founder and CEO of LucidLink, highlighted the significance of the release:

“This is both an evolution of everything we’ve built and a revolution in how teams collaborate globally. For the first time, teams can collaborate instantly on projects of any size from desktop, browser, or mobile, all while ensuring their data is secure.”

While the initial update focuses on improving the desktop and web experiences, future versions will expand to include mobile apps, providing even greater flexibility for remote collaboration. This release also positions LucidLink as a more comprehensive platform, with expanded integrations, business intelligence capabilities, and advanced pricing options to meet the needs of diverse teams around the world.

According to the company, the key features of the new LucidLink release are:

> Real-time collaboration across platforms: The new release introduces a refreshed experience for both desktop and web users, built on a new architecture that ensures smoother collaboration. Future updates will extend this functionality to mobile devices, giving teams more options for accessing and working on files remotely.

> New desktop and web applications: LucidLink now offers an upgraded user experience, allowing teams to collaborate in real-time across workflows that span home, on-premise, and cloud environments, without the need for data downloads, syncs, or transfers. This update simplifies administrative tasks for both desktop and web platforms.

> Global user model: Users can now access multiple filespaces from a single account, seamlessly switching between desktop, web, and mobile devices. This feature is especially useful for freelancers and the organizations that work with them.

> Streamlined macOS installation: The update provides a faster, more straightforward installation process for macOS users, eliminating the need for system reboots or security changes, allowing users to get started more quickly.

> Simplified onboarding: New team members can now be onboarded quickly using a simple link, reducing friction during setup.

> Scalable infrastructure: LucidLink offers users the option to choose from its bundled high-performance, egress-free storage solutions powered by AWS, or to bring their own cloud storage provider.

NOA GmbH has announced the completion of a digitization system delivery to TV 2 Denmark to preserve the broadcaster’s extensive video archive containing approximately 60,000 Betacam cassettes.

TV 2 Denmark continues to invest in cutting-edge technology, content development, and digital transformation strategies that enhance viewer engagement and maintain its competitive edge in an increasingly complex media environment.

In alignment with the agreed terms, NOA delivered a comprehensive preservation solution at TV 2’s main facility in Odense on standard COTS hardware, including the jobDB archive workflow management system along with the specialized ingestLINE and actLINE tools. Initiated in early November, the project was designed to digitize and preserve the broadcaster’s historical video content with rigorous, professional-grade quality control measures.

TV 2 represents the third Scandinavian client for NOA, with the system strategically designed as a short-term service-based solution. Recognizing the temporary nature of the implementation, both parties have agreed to a service-oriented approach that aligns with the anticipated few-year operational timeframe.

According to Josephine Beck Høpner, project manager at TV 2 Denmark, the organization sought a streamlined on-prem digitization solution to safeguard its vast media heritage and thereby avoid shipping any material. “We are thrilled to implement an efficient digitization platform that will preserve our 60,000 Betacam and DigiBeta carriers,” Beck Høpner stated. “NOA’s professional solution integrates seamlessly with our existing IT infrastructure, enabling us to leverage our internal resources for a high-quality, error-free digital conversion.”

The implementation ensures a smooth transition, allowing the organization to systematically digitize and protect its valuable archival media collection with precision and reliability.

Manuel Corn, sales director for NOA, commented on the strategic expansion: “We are proud to expand our footprint in the Scandinavian region, building on successful digitization projects with Swedish (SRF) and Finnish (YLE) broadcasters. Our robust framework of jobDB, ingestLINE, and actLINE enables rapid deployment within days, leveraging our extensive experience in archive technology. With a proven track record of digitizing over 5 million hours of audiovisual material across global digitization centers, we are uniquely positioned to deliver comprehensive archival solutions.”

TV 2 Denmark, a state-owned public service broadcaster founded in 1988, operates multiple channels and Denmark’s most popular streaming service, TV 2 Play. The broadcaster employs approximately 1,500 people across Odense and Copenhagen and reaches millions of viewers nationwide.

TV Markíza, an independent broadcaster in Slovakia, has completed a significant studio upgrade, enhancing its broadcasting capabilities with new LED video wall technology from Leyard. The broadcaster installed a Leyard TVF video wall in its News studio and Leyard CarbonLight CLI and CLI Flex displays in its Morning Show studio ahead of a busy summer season of news and sports coverage.

The Leyard TVF Series video wall, known for its fine pixel pitch and modular design, provides TV Markíza with increased flexibility across its multi-purpose, 24-hour studios. The new displays allow seamless transitions between different content formats, from news and weather to talk shows, with minimal disruption. The upgrade is part of a long-term collaboration between Leyard and the broadcaster, which began in 2017.

TV Markíza had previously installed Leyard TVH series video walls in its News studio in 2017.

Following a studio expansion in 2019, the broadcaster once again turned to Leyard for the 2020 completion of the Good Morning studio, though the project was delayed due to the COVID-19 pandemic.

In September 2022, discussions for renovating the News studio began, with TV Markíza seeking a partner who could provide a high-quality, flexible display solution. “Leyard’s European manufacturing facility and headquarters in Slovakia were key factors in our decision,” says Jaroslav Holota, the architect responsible for the project. “This provided us with the flexibility of local support and eliminated language barriers, which was a massive benefit.” The proximity of Leyard’s factory also allowed for cost-effective testing of the displays, ensuring they met the broadcaster’s exacting standards.

The installation of the new TVF video wall, originally planned for later in the year, was expedited to accommodate TV Markíza’s busy programming schedule, which included two national elections and other live events. As a result, the installation was completed in the summer, taking advantage of a quieter period in the studio calendar.

The Leyard TVF Series is designed specifically for broadcast environments. Its fine pixel pitch, front-serviceability, and stackable, modular structure make it ideal for dynamic, multi-use studio spaces. The modular design eliminates the need for cabinet-to-cabinet cabling, reduces the required installation space, and enhances overall system efficiency. Additionally, the system’s low heat output creates a more comfortable environment for presenters and crew.

From a performance standpoint, the manufacturer says the Leyard TVF Series offers low latency and high refresh rates, both essential for live broadcasting. With redundant power supplies and backup control systems, the TVF Series is optimized for content-critical applications, ensuring reliability during high-stakes broadcasts. The new installation also features a curved design in the News studio, achieved through the stackable frame modules, providing an aesthetically versatile backdrop for a range of programming formats. The screens offer improved brightness and visual clarity on camera, further enhancing TV Markíza’s broadcast quality.

Zixi has announced that SPOTV, a linear and digital sports service in Southeast Asia, has adopted Zixi technology to streamline and enhance content distribution across the region.

SPOTV, a South Korean provider of linear and digital sports content, has expanded into Southeast Asia. By deploying Zixi, SPOTV gains complete control over its content distribution, enabling a transition from traditional satellite and fiber to a modern, cloud-based IP infrastructure.

Utilizing Zixi-as-a-Service (ZaaS), SPOTV has seamlessly adopted a flexible, cost-effective, and robust IP delivery workflow, overcoming issues common in fiber networks, like packet loss and signal drops.

A key component of this transformation is the Zixi Broadcaster, bridging SPOTV’s on-premises sources to the cloud where the SDVP efficiently processes and distributes content to affiliates, with ZEN Master serving as the control plane to orchestrate operations and provide end-to-end visibility. By transitioning to IP-based distribution with Zixi, SPOTV can onboard new affiliates faster, enhance operational agility and reduce costs. With the elimination of costly fiber networks and satellite infrastructure in favor of streamlined IP and cloud-based networks, SPOTV can reach new markets and explore additional revenue streams without significant upfront investments.

Zixi-as-a-Service (ZaaS) offers broadcast infrastructure as a service, allowing SPOTV to seamlessly ingest and distribute live video over any IP network with ultra-low latency. It delivers the full capabilities of the SDVP while ensuring a fully redundant video-over-IP pipeline, protecting against all potential failure scenarios. Zixi’s protocol optimization, including higher throughput and lossless compression, along with the ZEN Master cloud control interface, further enhances cost-efficiency and ease of management. With reduced egress costs and lower computing power requirements, SPOTV benefits from a lower total cost of ownership while gaining the tools to monitor and manage signals across the network.

“One significant discovery was the positive impact of the Zixi protocol on our network performance, and how Zixi’s adoption has helped resolve issues our affiliates encountered with traditional delivery methods,” said Prakash Maniam, Head of Broadcast Operations and Engineering at SPOTV. “With Zixi’s advanced FEC capabilities, the system effectively recovers lost packets, resulting in error-free delivery across the network.”
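For readers unfamiliar with forward error correction (FEC), the Python sketch below shows the general idea using the simplest possible scheme: one XOR parity packet sent alongside a group of data packets, which lets the receiver rebuild any single lost packet without asking for a retransmission. This is a textbook illustration, not the Zixi protocol, whose internals the article does not describe; the function names and packet contents are made up for the example.

```python
from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: list[bytes]) -> bytes:
    """Sender side: XOR all packets of a group into one parity packet."""
    return reduce(xor_bytes, packets)


def recover(received: list[Optional[bytes]], parity: bytes) -> list[bytes]:
    """Receiver side: rebuild at most one lost packet (marked as None)."""
    missing = [i for i, packet in enumerate(received) if packet is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can rebuild only one lost packet per group")
    repaired = list(received)
    if missing:
        present = [packet for packet in received if packet is not None]
        # XOR of the parity with every surviving packet leaves the lost one.
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


group = [b"AAAA", b"BBBB", b"CCCC"]
parity = make_parity(group)

# Simulate the network dropping the second packet.
damaged = [group[0], None, group[2]]
print(recover(damaged, parity) == group)
```

Production systems typically use stronger codes and combine them with retransmission, trading a little extra bandwidth for resilience to burst losses.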

“The Zixi platform enables SPOTV to fully manage and control our signal delivery, ensuring reliable, end-to-end distribution,” said Lee Chong Khay (CK), CEO of SPOTV. “This partnership with Zixi has empowered us to deliver higher-quality service and meet the growing demands of our audience across Southeast Asia. We look forward to deepening this collaboration as we expand into new phases of growth and innovation with Zixi.”

“With over 15 years of experience in delivering large-scale video workflows, Zixi brings invaluable expertise to our customers,” said Marc Aldrich, CEO, Zixi. He added, “From planning through to execution, our team of regional technical experts supported SPOTV at every step, making the transition to IP a seamless experience.”

New partnership expands global reach and service offerings for media and telecom industries

Ovyo Ltd, a provider of custom OTT and media software services, has announced its merger with Portuguese technology services firm The Loop Co. According to both companies, the merger combines Ovyo’s experience in delivering software solutions to the video, telecom, and satellite industries with The Loop Co.’s nearshore capabilities and expertise in cloud software engineering and embedded systems.

The combined entity aims to offer a comprehensive portfolio of services for OTT streamers, broadcasters, telecom operators, industry vendors, and other media businesses, with a strengthened presence across Europe, North America, and Asia.

Ovyo has expanded its service offerings with the addition of The Loop Co.’s nearshore capabilities in Portugal. This enhances Ovyo’s established offshore presence in India and the UK, providing increased access to global talent and expanding the company’s cloud and data engineering capabilities. The merger allows Ovyo to deliver a broader range of expertise, helping clients access niche skills more efficiently.

“We’re excited to move forward with The Loop Co.,” said Pav Kudlac, CEO of Ovyo. “The addition of nearshore resources provides our customers with faster access to specialized teams across two continents, offering deeper technical expertise in key areas like cloud and data engineering. This is a significant step forward as we continue to expand our global footprint, particularly in the context of media teams’ growing need for high-quality, on-demand services.”

Both Ovyo and The Loop Co. have grown rapidly as software services businesses, sharing a commitment to customer success and employee empowerment. The merger brings together a combined workforce of approximately 250 people, strengthening the teams in Europe, North America, and Asia while ensuring continuity in client-facing operations. The companies have retained all management and project teams to maintain expertise and provide ongoing stability for clients.

João Bernardo Parreira, CEO of The Loop Co., commented, “We are excited to join forces with Ovyo. Their global delivery model and deep experience in custom software development will enable us to offer even greater value to our customers as we expand our capabilities.”

This merger marks a significant strategic move for both companies as they continue to grow their presence in the fast-evolving media, telecom, and software services sectors. The combined expertise in OTT and video software services, along with enhanced cloud engineering resources, is expected to deliver added value for clients across multiple industries.

Ateme, a provider of video delivery solutions, has announced a partnership with Lingopal.ai, an AI-based language translation platform, to deliver an integrated solution aimed at simplifying content localization for broadcasters and Direct-to-Consumer (D2C) services.

The collaboration combines Ateme’s expertise in video compression and delivery with Lingopal.ai’s AI-powered translation technology, allowing content creators to efficiently localize video content for diverse, global audiences, both companies stated. According to them, this enables broadcasters and streaming services to deliver content in multiple languages, thereby reaching new markets and better serving existing ones.

Rémi Beaudouin, Chief Strategy Officer at Ateme, commented: “This partnership marks an important step in helping our customers reach global audiences more efficiently. By integrating Lingopal.ai’s AI-powered speech-to-speech capabilities with our video compression and delivery technologies, we’re enabling content providers to do more with less, while breaking down language barriers and enhancing viewer experiences worldwide.”

The collaboration aims to streamline the localization process, cutting operational complexity and reducing the total cost of ownership (TCO) for content providers. By combining AI-based video compression with speech-to-speech translation, the solution simplifies workflows and enhances efficiency, enabling broadcasters to scale their content globally without significant increases in cost or operational burden, the companies stated.

Chase Levitt, Vice President of Sales at Lingopal.ai, added: “We are thrilled to partner with Ateme to deliver a truly comprehensive solution. With our AI translation technology, we can help broadcasters and content creators localize content faster and more effectively than ever before. Combined with Ateme’s advanced video delivery solutions, this integration will allow companies to scale their content globally while significantly improving operational efficiency.”

The integrated solution is designed to assist content creators, broadcasters, and D2C providers in delivering localized, feature-rich content that resonates with global audiences. This approach reduces both costs and operational complexity, providing a more efficient way to handle content localization at scale.

This announcement comes shortly after Lingopal.ai’s success at the Sportel Conference in France, where the company won first place in the pitch competition, competing against leading firms in the industry. This recognition highlights Lingopal.ai’s innovation in real-time speech-to-speech translation technology and underscores its potential to influence the future of global content delivery, as the company stated.

Pixotope has announced a new collaboration with 7thSense, a company specializing in media server, pixel processing, and show control solutions. As part of the partnership, 7thSense has been added to Pixotope’s approved hardware vendor network, integrating its high-performance hardware products with Pixotope’s software ecosystem.

The collaboration aims to combine 7thSense’s expertise in media servers and pixel processing with Pixotope’s real-time graphics and tracking software, creating an optimized solution tailored to the demands of virtual production environments.

According to the company, 7thSense’s hardware is already deployed in major entertainment venues, theme parks, and live event attractions, such as the Millennium Falcon: Smuggler’s Run ride at Disney Parks, Sphere Las Vegas, Expo 2020 Dubai, and the Museum of the Future in Dubai.

Certified compatibility for virtual production workflows

As part of the agreement, 7thSense’s R-Series 10 media server hardware has received official certification from Pixotope. The hardware has undergone thorough testing to ensure seamless integration with Pixotope’s tracking and graphics software. Additionally, a new “Pixotope-ready” SKU will be available, offering Pixotope users a certified, high-performance hardware solution specifically designed for virtual production.

“We’re proud to welcome 7thSense into our growing network of approved hardware partners. Their expertise in advanced pixel processing and media server solutions ensures that our users have access to the powerful, high-performance hardware necessary for exceptional virtual production,” said Marcus B. Brodersen, CEO of Pixotope. “This partnership makes it easier for creators to find trusted tools that meet the demanding requirements of virtual production.”

Official certification for 7thSense hardware

Rich Brown, CTO of 7thSense, commented, “We’re thrilled to have our hardware officially certified for use with Pixotope software, after completing the functionality testing of our PC hardware with Pixotope’s tracking and graphics applications. We now offer a special ‘Pixotope-ready’ SKU for users seeking high-performance hardware that can elevate their virtual production setups.”

According to both companies’ statements, the partnership between Pixotope and 7thSense aims to drive advancements in virtual production, enabling more immersive and technically sophisticated media experiences across various industries, including film, television, live events, and themed entertainment.

Amagi has announced the acquisition of Argoid AI, a cutting-edge AI company specializing in recommendation engines and programming automation for OTT platforms. This acquisition strengthens Amagi’s mission to empower media companies with intelligent content planning, distribution, and monetization solutions.

Argoid AI, known for its state-of-the-art artificial intelligence capabilities, has developed innovative AI products that enhance content recommendations and enable real-time programming decisions. Its solutions have been pivotal in increasing viewer engagement and optimizing channel operations for customers in the streaming media space. By integrating Argoid AI’s advanced algorithms into Amagi’s existing platform, this acquisition will significantly boost the functionality of Amagi’s product suite, particularly the Amagi NOW and Amagi CLOUDPORT offerings, enabling media companies to make faster, smarter, and more personalized content scheduling decisions at scale. It will also allow Amagi to deepen its AI-powered content programming, metadata enrichment, and recommendation engine services, which are crucial for the transition to personalized streaming as part of the FAST 2.0 innovation.

“Amagi has been investing in AI/ML over the last couple of years. We strongly believe in AI/ML’s pivotal role in transforming the media and entertainment industry, creating efficiencies, enhancing monetization, and providing an engaging viewer experience,” said Baskar Subramanian, Co-founder and CEO of Amagi. “With this acquisition, Amagi will integrate Argoid’s AI components into its award-winning cloud solutions, significantly enhancing value for our customers. The combined tech expertise of both companies will address key challenges in the streaming industry, such as content discoverability, viewer retention, and intelligent programming.”

Acquiring Argoid AI brings advanced AI capabilities as well as talented engineers and data scientists to Amagi. Argoid’s founders Gokul Muralidharan, Soundararajan Velu, and Chackaravarthy E will join the Amagi team, contributing to the future roadmap and further integrating AI into Amagi’s offerings.

“We are thrilled to join forces with Amagi, a true leader in media technology,” said Muralidharan. “This partnership allows us to scale our AI-driven solutions, delivering even greater customer value. Together, we will revolutionize how content is programmed and distributed in the digital era.”

New SMPTE ST 2067-70 standard streamlines VC-3 codec integration for broadcast distribution

The Society of Motion Picture and Television Engineers (SMPTE) and Avid have announced the publication of SMPTE ST 2067-70, a new standard for the VC-3 codec within the Interoperable Master Format (IMF) framework. This collaboration aims to improve the efficiency and flexibility of audiovisual content distribution, particularly for broadcasters and media organizations involved in large-scale production and delivery.

The VC-3 codec, provided by Avid under its DNx brand, is a widely used production codec for various broadcast operations, including capture, editing, rendering, and long-term archiving. The new standard specifies how VC-3 should be integrated into IMF, building on the existing SMPTE ST 2019-1 standard.

Shailendra Mathur, Avid’s VP of Technology, explained, “For many of Avid’s customers, the DNx family of codecs, specifically VC-3, is used as a mezzanine format to maintain high-quality footage and optimal editing performance. The new SMPTE ST 2067-70 standard will ensure that this same quality can be carried through the IMF mastering stage, simplifying the process for distribution.”

IMF is a key international standard designed to simplify the management and distribution of content. It consolidates all audiovisual assets needed for different versions of a program into a single, interchangeable package. This framework supports content fulfillment and versioning across multiple territories and platforms, making it a critical tool for modern media supply chains.

The adoption of VC-3 within IMF aims to maintain high-quality video for broadcast, distribution, and archiving, ensuring that the original creative intent is preserved throughout the production and delivery pipeline. The new standard also offers broadcasters the option to use a constant bit rate (CBR) profile for VC-3, which improves predictability for storage and network transport, further optimizing workflows and reducing the potential for quality loss due to transcoding.

The Digital Production Partnership (DPP), an organization representing the media supply chain, played a key role in supporting the development of this standard. DPP Technology Strategist David Thompson noted, “The collaboration between Avid and SMPTE has addressed a clear need for broadcasters who rely on VC-3 as their primary production codec and are looking to adopt IMF for distribution and archiving. The standard offers significant workflow advantages, including faster turnaround times and easier integration of changes before creating a final IMF deliverable.”

Kevin Riley, Avid’s Chief Technology Officer, added, “We are committed to improving the DNx family of codecs, including VC-3, to meet the needs of our customers and partners. This new standard aligns with our goal of making media processing more efficient, while delivering time and cost savings. It allows broadcasters to maintain existing media workflows and deliver content using the same codec from production through to distribution and archiving.”

The ST 2067-70 standard is expected to streamline workflows for broadcasters and media organizations, offering a more efficient path from production to delivery without the need for additional transcoding or format conversions. This approach will not only help preserve quality but also simplify the storage and transport of large media files, offering significant operational advantages for high-scale content distribution.

Since its inception, Zixi has been the trusted leader in live IP video delivery and orchestration technology. The patented, Emmy-winning Zixi Protocol was a groundbreaking innovation that addressed the unique challenges of live video streaming over IP. It delivers the levels of security, scalability, efficiency and quality that are mandatory for media companies whose business depends on pristine broadcast of high-value, revenue-generating content such as live sports.

Much more than the protocol, Zixi now offers the comprehensive Software Defined Video Platform (SDVP®), a seamlessly integrated suite of tools mandatory for the flawless delivery and orchestration of mission critical content, including capabilities for configuration, automation, monitoring and reporting, advanced AI and ML based analytics, video switching and processing, all designed to meet the demands of modern complex workflows. The platform's ability to deliver broadcast-quality live video over any IP network, cloud platform or edge device has transformed the media and entertainment industry. Known for its scalability, reach, and cost-effectiveness, the SDVP is a preferred choice for broadcasters, OTT providers, service providers and sports organizations such as Amazon Prime Video, Bloomberg, MLB, NFL, Roku, Sky, Warner Media, and YouTube TV.

Recognizing the expanding requirements of the media industry, Zixi has developed the complete SDVP, a modular platform that provides all the tools required for the end-to-end broadcast quality delivery and orchestration of live video. It enables broadcasters to quickly and easily deploy digital first, or transition from legacy satellite, to flexible, IP-based cloud workflows.

The Zixi Broadcaster is the backbone of live video distribution. Supporting 17 protocols, it acts as a Universal Gateway, aggregating and normalizing content from diverse sources for seamless distribution while offering advanced features such as multiplexing, OTT packaging, PID normalization, bonding and hitless failover.

ZEN Master serves as the SDVP’s orchestration and management layer, enabling oversight of large-scale deployments with end-to-end visibility, real-time monitoring and reporting. Its Live Events Manager supports autonomous workflows, allowing users to build and visualize event schedules, validate infrastructure, manage multiple live events simultaneously, and transition smoothly to post-event feeds. With ZEN Master, media companies efficiently manage their entire video ecosystem and maintain quality across networks to ensure a flawless viewer experience.

The Intelligent Data Platform (IDP) leverages AI/ML algorithms and advanced analytics to optimize network paths and proactively resolve potential issues. Broadcasters can reduce reliance on manual monitoring and minimize operational costs while maintaining the highest quality of service.

Zixi Edge Compute (ZEC) is installed at the network edge, enabling high-performance connectivity to Zixi Broadcasters, optimizing bandwidth and ensuring ultra-high-density throughput. Support for ARM-based processors enhances efficiency for edge-based deployments.

Zixi Live Transcoding consolidates transcoding and distribution workflows, reduces costs and simplifies distribution. GPU and VPU acceleration allow OTT providers to customize content and scale flexibly.

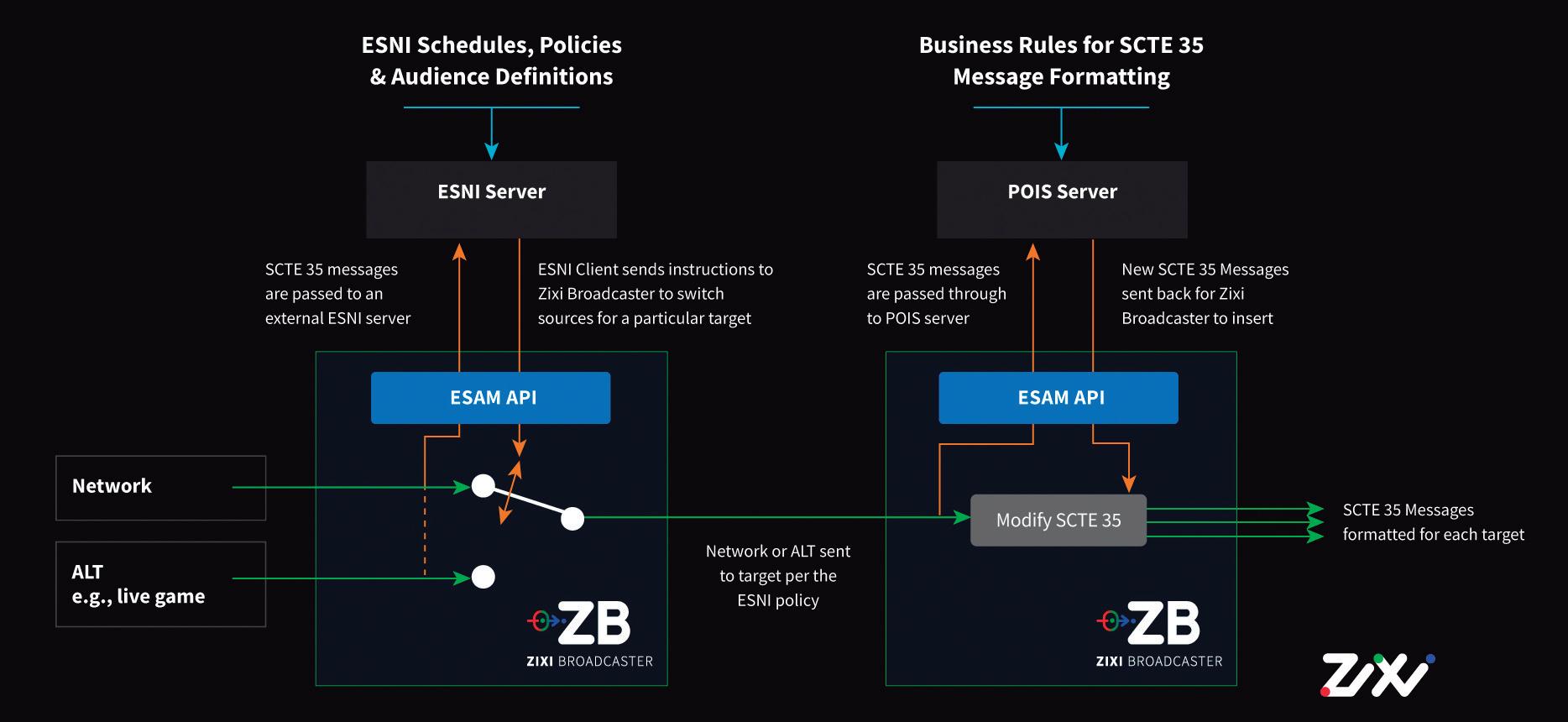

Zixi Market Switching (ZMS) replicates traditional satellite workflows over IP using ESNI schedules and POIS policies for precise stream switching. This enables broadcasters to enforce content rights and optimize monetization, supporting modern advertising insertion workflows.

The SDVP offers unparalleled deployment flexibility, operating on-premises, in private or public clouds (AWS, GCP, Azure), or via Zixi-as-a-Service (ZaaS). This pay-as-you-go model allows broadcasters to scale resources dynamically and minimize costs during peak periods.

Zixi also delivers significant cost efficiencies, with innovative, video-aware algorithms that compress null packets during transmission, cutting unnecessary bandwidth for dramatic efficiency gains and 30-60% lower egress charges. Zixi support for ARM-based processors such as Graviton enables companies to maximize cloud resources and reduce compute costs by up to 90%, significantly reducing the TCO.
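As a rough illustration of why removing null packets saves bandwidth, the sketch below drops MPEG transport stream stuffing packets (PID 0x1FFF) from a buffer of 188-byte packets. It is not Zixi's algorithm, whose details are not described here; the function names and the synthetic packets are assumptions made purely for illustration.

```python
TS_PACKET_SIZE = 188   # bytes per MPEG-TS packet
SYNC_BYTE = 0x47       # first byte of every TS packet
NULL_PID = 0x1FFF      # PID reserved for null (stuffing) packets


def packet_pid(packet: bytes) -> int:
    """Return the 13-bit PID carried in a 188-byte transport stream packet."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("not a valid TS packet")
    return ((packet[1] & 0x1F) << 8) | packet[2]


def strip_null_packets(stream: bytes) -> bytes:
    """Drop every null packet; all other packets are kept in order."""
    packets = (
        stream[i:i + TS_PACKET_SIZE]
        for i in range(0, len(stream), TS_PACKET_SIZE)
    )
    return b"".join(p for p in packets if packet_pid(p) != NULL_PID)


def make_packet(pid: int) -> bytes:
    """Hypothetical helper: build an empty-payload packet for a given PID."""
    header = bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10])
    return header + bytes(TS_PACKET_SIZE - len(header))


if __name__ == "__main__":
    # Synthetic stream: 6 video packets and 4 stuffing packets.
    stream = b"".join([make_packet(0x100)] * 6 + [make_packet(NULL_PID)] * 4)
    stripped = strip_null_packets(stream)
    saving = 1 - len(stripped) / len(stream)
    print(f"bytes before: {len(stream)}, after: {len(stripped)}, saving: {saving:.0%}")
```

A receiver that needs a constant-bit-rate stream would have to re-insert the stuffing at the far end, which this sketch does not attempt.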

The SDVP powers the Zixi Enabled Network, a global ecosystem of 1400+ customers and 400+ integrated technology partners, including cameras, encoders, playout systems and cloud providers who enable seamless end-to-end video workflow interoperability with media organizations.

One of North America’s largest networks, FOX deploys Zixi to distribute all 190 regional and affiliate feeds to digital MVPDs, leveraging the cloud and public IP networks. FOX affiliates use Zixi to transmit the streams to Zixi Broadcasters hosted in AWS to transcode streams into the appropriate format before egress to the MVPDs. ZEN Master is utilized for end-to-end orchestration and visibility as part of a quickly scalable solution that was up and running in a matter of months, only possible with IP and the cloud.

The SDVP is an innovative leap in modern live IP video technology. Zixi has evolved from its protocol origins, into a comprehensive platform that supports ultra-low latency, video processing, orchestration, and analytics. Broadcasters, OTT providers, service providers and sports organizations are now able to quickly deploy complex workflows, while still minimizing costs with the lowest TCO in the industry.

In a modern media environment defined by increasingly rapid change and high expectations, the SDVP offers a flexibly deployed solution for high-value, secure and reliable video distribution. Zixi continues to evolve with the industry and to provide future-proofed solutions that enable the delivery and orchestration of live IP video with unmatched efficiency and scalability.

The moderator, Luis Sanz, opened the conference by stating that: “Radiotelevisión Española, the first Spanish broadcaster, is once again a pioneer in an essential technological action to improve services for viewers with the launch of the first Ultra High Definition channel in Europe ever.

And it has done so within the structure of Digital Terrestrial Television –DTT– in such a way that any citizen having a TV set capable of receiving Ultra High Definition will be able to watch all the UHD broadcasts made by the Spanish public broadcaster, Radiotelevisión Española, at no cost.

This milestone has allowed Spain to be the first European country to offer, in Ultra High Definition and at no cost to viewers, the complete broadcast of the Olympics held in Paris last summer.

But setting up a permanent UHD channel requires, in addition to a great professional commitment, the development of the technical infrastructures that make it possible. Therefore, in this conference, we will take a look at the key elements implemented by RTVE for the new channel: the continuity of broadcasting in UHD, a UHD OB truck, routing and distribution, etc.

Finally, the coverage that RTVE has made of the Paris Olympic Games in Ultra High Definition will be adequately reviewed.”

Jesús García Romero, Director of the Technical Area for Television, commented on the journey of UHD in TVE.

UHD has been an adventure that we have experienced at Televisión Española as an authentic technological triathlon.

It was an Olympic year and our performance in recent times had been impacted by a simultaneous switch-off and switch-on: the switch-off of standard definition on Digital Terrestrial Television and the kindling of the Olympic flame. Three goals had to be achieved. First of all, we had to complete the transformation of all territorial centers that were operating in SD (Standard Definition). It had been a long road of almost three years that ended just before SD was turned off.

Secondly, we had to mount a continuity scheme in Ultra High Definition to use the multiplex that remained available on DTT after the shutdown of SD. And, finally, facing the Olympic Games, since OBS (Olympic Broadcasting Services), the company in charge of television production for the Games, was going to produce completely in Ultra High Definition, we at Televisión Española had to be up to the challenge of delivering the signal in Ultra High Definition.

For this, we needed to have at least one UHD OB truck that would have to be in operation before the start of the Olympic Games, including the training of technicians and operators, running of technical tests, etc.

And there you have the results. It has been quite an achievement, not only by our technical team, but also by integrators, installers and manufacturers, each of them with their individual collaboration and contribution. It has indeed been a collective success by the industry.

UHD has always been part of the technological roadmap for Televisión Española. Long ago we started installing editing booths in the Torrespaña and Prado del Rey studios in order to be able to carry out the coloring and grading for the small UHD productions that we were beginning to undertake.

We had also long been leasing a UHD camera to record, as a public service, important events in Spain’s history. We have archival images already recorded in 4K, even though we did not have the production infrastructure in place back then, but at least we have documented pieces of important events recorded.

This was a long-term vision. We started in 2014 with the Television Chair at Universidad Politécnica de Madrid by doing a pilot project at the Prado Museum. We also tested a live broadcast in Ultra High Definition of the Parsifal opera from Teatro Real. And in 2016, we made a production of the Changing of the Guard at the Royal Palace in Madrid, with collaboration and loan of equipment from manufacturers.

We have also collaborated in the UHD Spain partnership and in all the technical tests that have been carried out prior to launching a DTT UHD channel. There was a test channel in Televisión Española, on which Radio 3 concerts could be watched in UHD every night, Monday to Thursday, at 01:20 a.m.

We are also stressing the value of this UHD production, not only from the point of view of its technical features, but also with new production formats, specifically with cinema lenses, in a filmmaking look with compact single-sensor cameras and the result, in the opinion of the filmmakers, is spectacular.

Víctor Sánchez García, Director of Media and Television Operations, explained Radiotelevisión Española’s strategy regarding technical initiatives and above all, UHD.

We at Televisión Española in general see ourselves as the technological driver for part of the sector. It is the mission the Spanish Radio & Television Law has entrusted to us, and once the SD channels were turned off, the window of UHD technology was opened for us.

But not only is UHD technology what I wanted to talk about, I wanted to delve somewhat in the more global strategy and also discuss a little bit what movements are being seen in this sector.

There are two or three disruptive technologies, some of which are appreciated by viewers, others that are not perceptible by the audiences and the ones that will change the way we produce.

What viewers probably don’t notice is the IP technology. We made very significant progress in Spain with IP technology when we started its implementation at the Sant Cugat center, which we hope to complete by next year. Introducing IT technology into broadcast production systems has been hard work and a matter of several years, but sufficient reliability is being achieved to meet the requirements for television. For example, at the Olympics, OBS has also used this technology as a backup for distribution. IP technology is setting a trend in the industry. At the user-experience level it is not noticeable; it stays behind the scenes.

The technology that viewers do appreciate is UHD, which has an impact on user experience and comprises two elements, both important: one is video quality, and the other, which we are also introducing, is audio quality. TVE works with Dolby Atmos, which allows for a better user experience. Therefore, with UHD, viewers will see and hear better.

TVE is a public service and has introduced UHD in the DTT infrastructure with broadcast technology accessible to all viewers at no cost. This is important, because in order to reach UHD bandwidths and achieve massive access for spectators, OTT platforms are either not ready or the cost of doing so would be huge.

Recently, RTVE has become a member of the 5G MAG association, which promotes the use of 5G broadcasting for transmission, which can be supplemented by the unicast transmission of OTT platforms.

And finally, there is artificial intelligence (AI), a technology that will change the way we produce, both in the capture and editing of content, as well as, most likely, in broadcasting.

For this reason, and in relation to the latter technology, Televisión Española recently approved internal regulations for the use of AI. We have an internal AI committee within the strategy and implementation group and I think this technology will impact all of us, the entire industry. We are starting several projects, some of them having to do with automatic promotion, some with assistance issues, understanding by means of simple language.

Jesús García Romero commented on the elements that Radio Televisión Española has actually implemented with UHD, both at local facilities and for the Ultra High Definition OB truck.

The UHD continuity was already tested, assembled, when we were preparing the Games in IP 2110 technology, “channel in a box” type. Everything is already working. Now we have a second step ahead, to provide a live recorder and our own branding in UHD.

We had planned for the Ultra High Definition OB truck, but it arrived late because, ever since the pandemic, delivery of the trucks themselves, which are the basic OB infrastructure, has been very complicated and takes a long time. We did well to release the tender for the truck first and decide afterwards what to have on board, because otherwise we would not have made it on time. While the truck project was being dealt with, we started the engineering design and in the end we succeeded, as always at the very last minute, with enough time left to prepare the technicians.

The OB truck is built for Ultra High Definition, with up to 12 cameras, and features 5.1.4 immersive audio. We chose to continue the line of work that we had been using for OBs, a distributed matrix based on Riedel systems, which allows us to interconnect more than one OB. That is, with this OB truck working alongside another one, we can make a production with many more than 12 cameras and also have two different productions, the international signal itself and a customization from the other OB truck.

We opted for 12G-SDI technology, not IP, first because it is not true that IP is cheaper: in a large installation the higher cost is offset by the possibility of sharing resources, but that is not the case with an OB truck. At that time, 12G also seemed easier and more reliable for the technicians in charge of these OBs, since identifying issues is easier with 12G technology.

OBs, in addition to the matrix, have the whole set of ancillary elements that we need: mapping, up-conversion and down-conversion, because the OB truck may have to deliver signals to third parties in HD.

There is, of course, dedicated image control for HDR conditions, with grade 1 monitors of the highest quality, etc.

Manuel González Molinero, Director of TV Technical Media, said that this is what Radio Televisión Española has done at the Olympic Games in Paris.

One of the first doubts that arose was what format to choose for production and broadcast of such an important sporting event as the Olympics. It was solved for us, as OBS decided that the native format of all competitions would be UHD 2160p50, HDR with the HLG curve, with 5.1.4 immersive audio.

We had a UHD broadcast channel in DTT already in operation and, on the other hand, we had the delivery of an OB truck for production in UHD, and we also had state-of-the-art UHD cameras so we only needed additional equipment to complete the jigsaw.

As Jesús said, another doubt at the beginning was whether we should address the project in video over IP ST-2110 or in baseband, that is, 12G SDI. But taking into account the short notice and available means, we decided to go for baseband since by doing so we removed a point of difficulty.

The project was based on three main pillars: production in Paris, signal conveyance, and local production in Torrespaña (Madrid).

RTVE installed an MCR (Signal Control) at the IBC in Paris. This control served as a central node to receive and send all the necessary signals. In this place we received the signal package for all competitions and multilateral signals. In total, we received 49 UHD signals through fiber optics that we converted to 12G signals by means of electric-optical transceivers.

Additionally, we had 5 pre-selected signals from the OBS matrix with up to 80 available signals and we also had 4 exchange video lines connected with the OBS output matrix to exchange customized signals, mixed zones, flash interviews, commentator positions etc. with the competition venues.

Eight audio lines were used to receive the audio from the commentator positions and to give them the N-1 returns and instructions. In that same RTVE control room at the IBC, the final mix of our commentators’ audio with the international immersive audio signal was made, so that the signal left the IBC finalized for broadcast. We also relied on two commentator booths, where competitions that could not be attended in person were narrated.

From the IBC we would send a total of 6 UHD signals and their corresponding multicast in HD to Spain. As return channels, 6 HD program return signals would be sent. For audio exchange, 12 AES-EBU signals were set up in each direction.

RTVE also had a representation studio and iconic views of the city of Paris on Trocadero Square overlooking the Eiffel Tower. This studio was equipped with four UHD cameras, one of them on a light crane, and as a mixer we used a light one from the Datavideo brand.

The connection of this studio with the IBC was made through dedicated 1+1 Gbps fiber provided by OBS, over which we transmitted signals by means of Nimbra equipment, including video, audio, intercom, communications and corporate services. We always hire redundant services because throughout our experience we have had many issues of all kinds. In these events anything can happen and we have had to let some events go on many occasions.

Víctor Sánchez

“We usually duplicate and triplicate in many instances, meaning that we had two fibers on different paths to the television input, plus a path via the Internet, since we managed to transmit a UHD signal over the Internet. It is a guaranteed Internet connection: we had more than 50 megabytes of Internet upload assured and with these three paths the viewers never had a problem.”

Manuel González

This studio at the Trocadero was used as continuity between competitions, a spot for live cast, as well as to provide information services for La 1 and Teledeporte and Canal 24h.

As an additional resource, we sent 8 ENG photojournalists with their editors to Paris in order to offer viewers different aspects of the daily activity in the Games, as well as a different view of Paris.

To solve the problem of changing light conditions, it took us a lot of effort to install a RoscoView system, a system of polarized filters that are placed on the window panes and on the camera lenses and can be easily adjusted as the light shifts throughout the day.

To interconnect Paris and Madrid (Torrespaña) in such a way that it was possible to exchange signals, RTVE contracted international circuits through PoPs (Points of Presence) in Paris and Frankfurt. Based on our experience, we always use two different paths so that interconnection is guaranteed in the event that one of them becomes unavailable.

During the Games there were acts of vandalism in France that managed to cut connections for three days and there were broadcasters that lost their connectivity during that time.

For the connection between Paris and Madrid, we estimate a capacity of 2 Gbps for conveyance of all the signals, and for transport of the POP signals to Madrid EBU was hired to deliver the signal to the Madrid International Control through the FINE network.

For signal exchange, connections were set up by means of Nimbra systems and services were opened for the necessary video, audio, communications and corporate services. Low-delay Sapec Sivac One encoders were chosen for UHD video signal encoding. The codec used was H265 at 125 Mbps, with a 150 ms delay; and for HD signals, Nimbra MAM cards in JPEG2000 format were used. Finally, we used SRT transmission equipment over the dedicated internet as backup signals for the studio and the IBC.
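As a rough check of these figures, the UHD load on the circuit can be worked out as follows. This is a sketch under stated assumptions: it supposes that all six UHD feeds mentioned in this report travel at 125 Mbps on the same 2 Gbps circuit, and the `overhead` parameter is a hypothetical allowance for transport overhead, not a value given by RTVE.

```python
LINK_CAPACITY_MBPS = 2000   # estimated Paris-Madrid capacity (2 Gbps)
UHD_FEEDS = 6               # UHD signals sent from Paris
UHD_BITRATE_MBPS = 125      # H265 bitrate per UHD signal


def uhd_load_mbps(feeds, bitrate_mbps, overhead=0.0):
    """Total bitrate of the UHD feeds, with an optional overhead fraction."""
    return feeds * bitrate_mbps * (1.0 + overhead)


def headroom_mbps(capacity_mbps, load_mbps):
    """Capacity left for HD video, audio, intercom and corporate services."""
    return capacity_mbps - load_mbps


load = uhd_load_mbps(UHD_FEEDS, UHD_BITRATE_MBPS)
print(f"UHD load: {load:.0f} Mbps")
print(f"Headroom: {headroom_mbps(LINK_CAPACITY_MBPS, load):.0f} Mbps")
```

Under these assumptions the UHD feeds take up well under half of the circuit, which leaves room for the JPEG2000 HD signals and the remaining services.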

Víctor Sánchez

“It is worth mentioning that, thanks to the encoding system, the commentator could be inserted live in the audio loop, that is, we sent the signal from Paris to Madrid and over the same signal -which in turn we distributed- the commentator’s audio would be included. It was done successfully because of the system’s low latency, and the slight delay was not seen in the TV broadcast.

We also took a risk with the audio returns and settings, which we did through the intercom and generated the N-1 returns with the latter rather than with the sound console. The return management was done with the intercom and this was an advantage at production level, since the presenters could talk to the commentator, all of it live. It was solved in a simple way with Riedel conferencing. Once done, it went pretty well.”
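For readers unfamiliar with N-1 returns (also called mix-minus), the idea is that each participant hears everyone except themselves. The sketch below shows the principle on plain sample lists; it is an illustration only, with hypothetical source names, and does not represent how the Riedel intercom is configured.

```python
def mix_minus(sources):
    """Build an N-1 return for every source.

    `sources` maps a source name to a list of audio samples; all lists
    are assumed to have the same length. Each return is the sum of all
    sources except the one it is sent back to.
    """
    if not sources:
        return {}
    length = len(next(iter(sources.values())))
    full_mix = [sum(samples[i] for samples in sources.values())
                for i in range(length)]
    return {
        name: [full_mix[i] - samples[i] for i in range(length)]
        for name, samples in sources.items()
    }


# Hypothetical sources with two samples each.
returns = mix_minus({
    "presenter": [1, 2],
    "commentator": [10, 20],
    "programme": [100, 200],
})
print(returns["commentator"])  # presenter + programme, without the commentator
```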

Manuel González

As we have mentioned, the 6 UHD signals from Paris were received at the International Control, which was in charge of delivering them by fiber optic to the UHD H01 OB truck. This OB truck was used as production control and for producing part of the continuity of the La 1 UHD channel.

In addition, this OB truck was responsible for the production on a set (Studio A4 in Torrespaña) with 5 UHD cameras, which made it possible to produce summary programs and to move from one sport to another. On this set, one of the cameras was sensorized to offer augmented reality.

The remaining HD signals were delivered from the International Control to San Cugat over the RTVE contribution network, via Nimbra, to produce the continuity of Teledeporte. The ingest or recording of the signals, whether for preparing summaries, archiving or preparing pieces for information services, was carried out in HD.

The H01 OB truck was responsible for recording UHD signals on EVS recorders, managing up to 6 UHD signals for recording for summaries and replays. The OB truck’s output supplied two program signals: one in UHD and one for simulcast on the La 1 HD channel. To achieve this, it was necessary to resort to downmapping, i.e. lowering the resolution and converting from HDR to SDR, including the change of color space. This conversion was done automatically by conversion LUTs and, in this instance, we used the OBS parameters for sports.
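The principle of a conversion LUT can be sketched with a one-dimensional lookup table and linear interpolation. This is a deliberately simplified illustration: production HDR-to-SDR conversion normally relies on 3D LUTs applied to full color triplets, and the five-point curve below is a made-up example, not the OBS LUT.

```python
def apply_lut_1d(value, lut):
    """Map a normalized value (0.0 to 1.0) through a 1D LUT.

    Values between two LUT entries are linearly interpolated;
    out-of-range inputs are clamped.
    """
    value = min(max(value, 0.0), 1.0)
    position = value * (len(lut) - 1)
    lower = int(position)
    upper = min(lower + 1, len(lut) - 1)
    fraction = position - lower
    return lut[lower] * (1.0 - fraction) + lut[upper] * fraction


# Hypothetical curve that lifts mid-tones and compresses highlights.
TOY_LUT = [0.0, 0.4, 0.7, 0.9, 1.0]

for level in (0.0, 0.125, 0.5, 1.0):
    print(f"{level:.3f} -> {apply_lut_1d(level, TOY_LUT):.3f}")
```

Because the table is computed in advance, the conversion is applied automatically to every pixel with no operator intervention, which is what makes a simultaneous UHD and HD output practical.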

For the Olympic Games, 8 additional booths were set up between Torrespaña and San Cugat combined. Whenever the broadcast was on La 1 UHD, the mixing of the voice-over audio was carried out at the IBC in Paris, so all the audio from the booths was sent via Nimbra with an ultra-low delay. As Víctor said, we devised ultra-low latency audio circuits so that the entire product was finished at the IBC and the workload on the H01 OB truck was reduced as much as possible.

Finally, at the A4 studio we mounted a system of screens powered from the OB truck and graphics systems in UHD and augmented reality that complemented the viewer experience.

Regarding the most relevant operational challenges we faced, one of them was changing the way of working in capture, lighting, makeup, and production for camera controls in different color spaces. Working in HDR (High Dynamic Range) and expanding the color space means that the lighting and CCU settings handle different parameters, but since in all cases it was necessary to offer UHD and HD simulcast broadcasting while the camera controls were adjusted only in HD, the figure of the HDR supervisor emerged, in charge of ensuring that the UHD broadcast was correct.

In this operation, another of the most important challenges was to make narrations of the same broadcast from two different points, which forced us to constantly control the relevant delays. Regarding 5.1.4 immersive audio, it was necessary to expand the sound console to handle many more channels, coupled with the added difficulty of mixing.

Another of the novelties that we introduced this year at the Paris Olympic Games was the use of IPVandA Flex as a backup for multilateral signals. This is a service for transporting signals in the cloud, delivered by SRT over the internet. The service, provided by OBS, allowed up to 10 simultaneous signals to be pre-selected from 80 available channels of multilateral and additional content provided by OBS. In our experience, the service has worked very well and we believe that it is a very good alternative to the traditional satellite service.

Ángel Parra, as a director, contributed his more artistic perspective on the practical work of producing with UHD technology.

It has been possible to bring a UHD signal to viewers during all the production processes, sports broadcasting, presentations at the studios and in connections with the different live points with hardly any kind of failure, either in communications or in stability/quality of the signal.

Signal production for the UHD channel has been compatible with the broadcast on the HD channel, with the complexity that this entails in terms of adjustments: in video, making SDR compatible with HDR, as well as in color space; and in sound, making 5.1/Atmos immersive sound compatible with the final mix in stereo.

The HFR (High Frame Rate) broadcast is noteworthy. The use of the progressive signal has meant a significant leap in quality in sports broadcasts, avoiding moiré and the loss of definition in pans.

In relation to the use of UHD film cameras, I think that in order to convey to viewers the full potential offered by these cameras, it is necessary to adapt the use of film cameras with fixed lenses to the type of content you want to show. A documentary is not the same as a live broadcast or a sports broadcast. In fact, when this type of device is mixed with a signal produced with cameras from an OB truck, the effect achieved is strange, to say the least, and too flashy for viewers.

What I would highlight, in terms of the OB truck, is that being able to work in multi-camera UHD was unthinkable not long ago. UHD, or the more film-like 4K, was designed precisely to have that cinema look for working, recording and coproducing, and then to achieve a broadcast with that look. What I regard as very important here is the fact that this type of live cinema signal can be achieved in an OB truck, which a few years ago was just fiction. I think this is where technology has advanced the most and the thing we can be most proud of.

Jesús García Romero closed with an important reflection

We are very satisfied with this technical achievement, but I also wanted to give a slightly more far-reaching message. In addition to our technical pride –as a public service medium– and in general, for all of us who have worked on the Games, we have been able to convey values to society.

I can recall the example of Elena, the Paralympic athlete who broke the rules to help her guide, who had fainted, which cost her the medal. Or all the effort made in covering the consequences of the DANA storm, to bring the images closer to viewers, with the use of helicopters, ENGs, etc. I think we all have to be very satisfied with the role we play in the broadcast world: conveying values; making these situations known so that communities can mobilize their very best; and finally, it must be said, the technicians and the operators are the first ones to arrive whenever the Olympics are held and the last ones to leave. They are there long before journalists and athletes arrive and have never received an Olympic medal, so let’s give ourselves that medal, because I believe that all the media and all the technical staff at these events are the ones who make it possible for all this to be seen and enjoyed. So, an Olympic medal for the entire sector!

By Ronen Artman, VP Marketing, LiveU

2024 has been packed full of major global news and sports events, from the Euros, the Summer Games and the European Athletics Championships to elections in more than 70 countries. With more content being delivered to more platforms than ever before, broadcasters and other organizations are transitioning to end-to-end IP workflows.

Eighteen years ago, we invented IP bonding with LRT™ (LiveU Reliable Transport), which is now deployed globally. As an industry, we’re seeing the growing use of IP technology for producing live content, enabling dynamic, robust and flexible workflows. As technology evolves at pace, alternative versions of IP-video bonded connectivity solutions are being tested in real-world environments, including private 5G, network slicing and the use of LEO (Low Earth Orbit) satellites, such as Starlink. They are being tested for reliable performance in various use cases, including news and sports events.

Network slicing gives telcos the ability to create a dedicated bandwidth “slice”, guaranteeing a certain amount of upstream and downstream bandwidth for a given period of time, such as the length of a major event. This provides huge potential when combined with IP-bonding, creating a powerful combination across contribution workflows that overcomes previous network congestion issues.
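The general idea of combining a guaranteed slice with other links can be sketched as a proportional split of the video bitrate across the available connections. This is a generic illustration with hypothetical link names and capacities; it is not a description of how LRT™ works internally.

```python
def allocate_bitrate(target_kbps, link_capacity_kbps):
    """Split a target bitrate across links in proportion to their capacity.

    `link_capacity_kbps` maps a link name to its currently available
    uplink capacity. If the target exceeds the total capacity, the
    allocation is capped at what the links can carry.
    """
    total = sum(link_capacity_kbps.values())
    if total <= 0:
        raise ValueError("no usable capacity on any link")
    sendable = min(target_kbps, total)
    return {
        name: sendable * capacity / total
        for name, capacity in link_capacity_kbps.items()
    }


# Hypothetical example: a guaranteed 5G slice plus a public LTE link.
shares = allocate_bitrate(6000, {"5g_slice": 8000, "public_lte": 4000})
for name, kbps in shares.items():
    print(f"{name}: {kbps:.0f} kbps")
```

In this sketch the guaranteed slice contributes a stable capacity figure, while the figures for public links would need to be re-measured continuously as congestion changes.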

We’re seeing telcos make greater investments in 5G now, especially around major venues. For example, Orange has a private 5G Lab at the Stade Vélodrome (also known as Orange Vélodrome) in Marseille, France, which operates as a 5G Standalone (5G SA) network.

Private 5G is also likely to play an important role, especially for sports events in remote areas and major news events. Further benefits of utilising private cellular networks are that they provide an additional layer of service for customers including network segmentation, prioritization and greater security, while delivering additional capacity for expanded remote production technologies and employee communications.

At this summer’s Republican National Convention (RNC) and the Democratic National Convention (DNC), LiveU, in collaboration with Pente Networks, provided customers with the option of adding LiveU Private Connectivity. This gave major news broadcasters access to private network connectivity and allowed the world to participate more closely in two of the largest political events of the year. This option, which provides uncontested connectivity via a dedicated Private 5G connection, is also being made available for the inauguration in January 2025.

There are also use cases for Starlink connectivity in conjunction with LiveU, which are varied and extensive. This includes using Starlink alone, bonding two Starlink terminals, bonding Starlink with cellular networks, and bonding Starlink with other forms of connectivity like WiFi or a local venue LAN. Users have also used Starlink as bandwidth to remotely locate receivers.

5G certainly has a huge role to play in live sport. We know this from the multiple trials in which we’ve been involved, such as with Sky Deutschland for the Special Olympics, where we highlighted the efficiency and ease of use of 5G with IP-bonding. We’re now testing use cases spanning on- and off-site remote production, cloud-based production, mobile production, and edge-cloud production, as part of FIDAL’s ‘Field Trials Beyond 5G’ Research Project. We will also test Beyond 5G (B5G) technologies, such as network slicing, edge computing, standalone (SA) private network/SNPN, and network exposure.

With the increased deployment of 5G, we see many opportunities for live sports production, replacing traditional transmission methods with cost-effective IP technology. It also makes remote production even more viable, facilitating more sustainable live productions with reduced travel and transport of heavy equipment.

IP is also changing the face of video distribution, removing the need for dedicated infrastructure.

At this summer’s European Athletics Championships, live production expert Actua Sport used, alongside our field units, LiveU Matrix for cloud IP distribution to the 35 takers, complementing traditional satellite or fiber connectivity. Actua Sport had to produce field events with multiple galleries. It had a total of 12 feeds available on-site and remotely through LiveU Matrix, which distributed the production feeds in LRT™ or SRT to takers and a couple of cloud-based platforms. Takers could access the feeds and record them as standalone feeds, use them for post-production purposes, live stream them or feed them to other platforms. A couple of takers used those feeds to recreate their own main feed.

Reliable and cost-effective, cloud IP distribution enables sharing and receiving of high-quality, low-latency live feeds with, and from, broadcasters and other stakeholders around the globe both inside and outside their organisations.

As we continue to meet the industry’s growing demand for IP workflows with cloud IP solutions, in combination with remote production, organisations can reduce production costs and increase flexibility and efficiency. Sustainability is also a key factor, with remote IP workflows helping to reduce the number of people on-site as well as the costs. As this article’s headline suggests, IP workflows are shifting from a trend to a necessity, providing unlimited potential for the industry as we head into a new age of broadcast innovation.