Salifyanji Namwila '24

Meta Learning: A Step Closer to Real Intelligence

BY SALIFYANJI NAMWILA '24

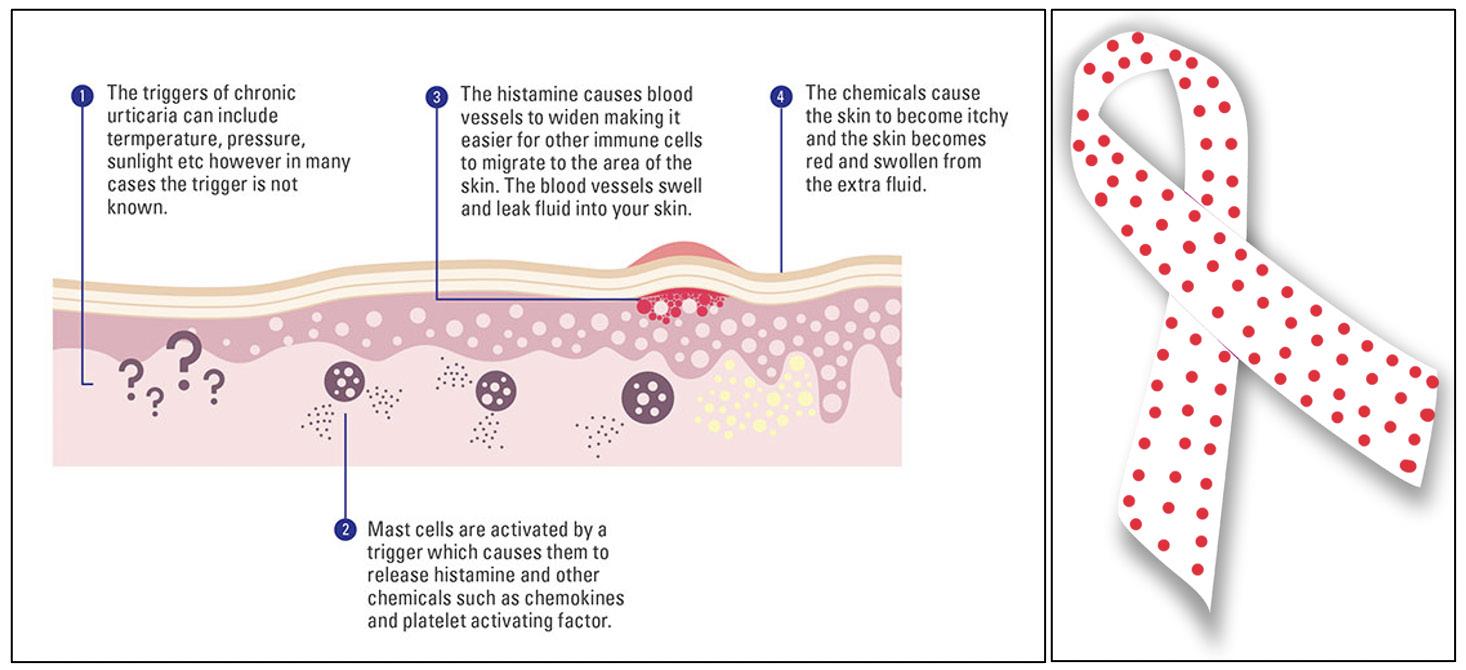

Cover Image: A Deep Neural Network. Deep learning, a technique in machine learning, has become crucial in the domain of modern machine interaction, search engines and mobile applications, revolutionizing modern technology by mimicking the human brain and enabling machines to reason independently. Even though the concept of deep learning extends to various industries, certain Machine Learning (ML) approaches like supervised learning that employ deep learning require huge amounts of input data, which is ultimately expensive to create, especially for specific domains of tasks. The challenge then is for data scientists and ML engineers to create actionable real-world algorithms that are effective for given tasks even with a limited input data set. This is where meta learning pitches in. Image Source: Wikimedia Commons

A Brief Introduction to Machine Learning, Deep Learning and Neural Networks

Machine learning, deep learning, and neural networks are all subfields of Artificial Intelligence (AI) and Computer Science. Machine learning is a branch of AI that focuses on the use of data and algorithms to imitate the way that humans learn; it allows software applications to become more accurate at predicting outcomes without being explicitly programmed to do so (Burns, 2021). Deep learning is a subfield of machine learning, and neural networks are a subfield of deep learning. Deep learning and classical machine learning differ in how each algorithm learns. Deep learning eliminates some of the manual human intervention required in the process and enables the use of larger datasets by automating much of the feature extraction process. Feature extraction is a process that aims to reduce the number of features in a dataset by creating new features from the existing ones, summarizing most of the information contained in the original set of features (Ippolito, 2019). Classical machine learning, on the other hand, is more dependent on human intervention. Human experts determine the set of features used to understand the differences between data inputs, which usually requires more structured data to learn from (IBM Cloud Education, 2020). Neural networks, or artificial neural networks (ANNs), are composed of node layers: an input layer, one or more hidden layers, and an output layer. Each node, or artificial neuron, connects to another and has an associated weight and threshold. If the output of any individual node is above the specified threshold value, that node is activated, sending data to the next layer of the network (You et al., 2020). The ‘deep’ in deep learning refers to the depth of layers in a neural network. A neural network that consists of more than three layers, counting the input and output layers, can be considered a deep learning algorithm or a deep neural network (IBM Cloud Education, 2020).
A neural network that only has two or three layers is just a basic neural network. Some methods used in supervised learning include neural networks, logistic regression, support vector machines (SVM), random forests, and more.
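To make the weight-and-threshold description above concrete, here is a minimal sketch in plain Python. The function names and the hand-picked weights are hypothetical, chosen only for illustration: a small network with one hidden layer in which each node fires when its weighted input exceeds its threshold, wired so that the output reproduces the XOR function.

```python
def step_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of the inputs exceeds the threshold."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


def forward(inputs, layers):
    """Pass inputs through each layer; a layer is a list of (weights, threshold) nodes."""
    activations = list(inputs)
    for layer in layers:
        activations = [step_neuron(activations, w, t) for w, t in layer]
    return activations


# Hypothetical hand-picked weights (not learned): the hidden layer computes
# OR and AND of the two inputs; the output node fires for "OR but not AND".
xor_network = [
    [([1.0, 1.0], 0.5), ([1.0, 1.0], 1.5)],  # hidden layer: OR node, AND node
    [([1.0, -1.0], 0.5)],                    # output layer: OR minus AND
]

outputs = [forward([a, b], xor_network)[0] for a in (0, 1) for b in (0, 1)]
print(outputs)  # one output per input pair (0,0), (0,1), (1,0), (1,1)
```

In a trained network the weights and thresholds would be adjusted from data rather than set by hand; the sketch only shows how activation propagates from layer to layer.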

Some Types of Machine Learning

Machine learning classifiers fall into three primary categories: supervised machine learning, unsupervised machine learning, and semi-supervised learning. Supervised learning is defined by its use of labeled datasets to train algorithms to classify data or predict outcomes accurately. Labeled data are pieces of data that have been tagged with one or more labels identifying certain properties, characteristics, classifications, or contained objects (Wikipedia: Labeled data in machine learning); unlabeled data, on the other hand, carry no such tags. As input data is fed into the model, the model adjusts its weights until it has been fitted appropriately. This is part of the cross-validation process, a statistical technique for testing the performance of a machine learning model across the whole dataset, which helps ensure that the model avoids overfitting (when a model learns “noise,” or irrelevant information within a dataset, making its performance on unseen data inaccurate) and underfitting (when a model is too simple to establish the dominant trend within the data, resulting in training errors and poor performance). Unsupervised learning uses machine learning algorithms to analyze and cluster unlabeled datasets. These algorithms discover hidden patterns or data groupings without the need for human intervention. Algorithms used in unsupervised learning include neural networks, naïve Bayes probabilistic methods, k-means clustering, and more. Semi-supervised learning, on the other hand, is a comfortable medium between supervised and unsupervised learning. During training, it uses a smaller labeled data set to guide classification and feature extraction from a larger, unlabeled data set. This way, semi-supervised learning can solve the problem of not having enough labeled data to train a supervised learning algorithm (IBM Cloud Education, 2020).
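The cross-validation idea mentioned above can be sketched in a few lines of plain Python. Everything here is an illustrative assumption rather than a method from the cited sources: the synthetic data, the one-parameter model y = w * x, and the helper names. The data is split into k folds, and each fold in turn is held out to measure error on data the model did not see during fitting.

```python
import random


def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds of near-equal size."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def fit_slope(xs, ys):
    """Least-squares slope for the one-parameter model y = w * x."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def mean_squared_error(w, xs, ys):
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def cross_validate(xs, ys, k=5):
    """Return the held-out error of each fold."""
    errors = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        w = fit_slope([xs[i] for i in train], [ys[i] for i in train])
        errors.append(mean_squared_error(
            w, [xs[i] for i in held_out], [ys[i] for i in held_out]))
    return errors


# Synthetic data (an assumption for the demo): y = 2x plus small Gaussian noise.
rng = random.Random(0)
xs = [i / 10 for i in range(1, 51)]
ys = [2.0 * x + rng.gauss(0, 0.1) for x in xs]

fold_errors = cross_validate(xs, ys, k=5)
average_error = sum(fold_errors) / len(fold_errors)
print(fold_errors, average_error)
```

A large gap between the training error and these held-out errors would point to overfitting, while high error on both would point to underfitting.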

Machine learning technology has many applications that make our lives easier, including speech recognition, online customer service chatbots, recommendation engines, and computer vision. However, machine learning algorithms face some challenges, such as the need for large datasets for training, high operational costs due to the many trials during the training phase, and the long time those trials take to find the model that performs best for a certain dataset. Enter meta learning, another subset of machine learning that tackles these challenges by optimizing learning algorithms and finding better performing ones.

Introduction to Meta Learning

Meta learning in simple terms means learning to learn. In machine learning, it refers to learning algorithms (called meta machine learning algorithms or meta learners) that learn from the output of other machine learning algorithms that in turn learn from data (Triantafillou et al., 2019). Accordingly, meta learning requires the presence of other learning algorithms that have already been trained on data. These meta learners make predictions by taking the output from existing machine learning algorithms as input and predicting a number or class label.
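One simple way to picture a learner that takes the outputs of other trained models as its inputs is stacking. The sketch below is an assumption-laden toy, not a method from the cited sources: two deliberately weak base models (hypothetical names `fit_mean_model` and `fit_slope_model`) are trained on the data, and a meta learner then fits a weighted combination of their predictions by gradient descent.

```python
def fit_mean_model(xs, ys):
    """Base model 1: always predicts the mean of the training targets."""
    mean = sum(ys) / len(ys)
    return lambda x: mean


def fit_slope_model(xs, ys):
    """Base model 2: least-squares line through the origin, y = w * x."""
    w = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return lambda x: w * x


def mse(predictions, ys):
    return sum((p - y) ** 2 for p, y in zip(predictions, ys)) / len(ys)


def fit_meta_learner(base_outputs, ys, learning_rate=0.01, steps=2000):
    """Learn one weight per base model by gradient descent on squared error."""
    weights = [0.0] * len(base_outputs[0])
    n = len(ys)
    for _ in range(steps):
        grads = [0.0] * len(weights)
        for outs, y in zip(base_outputs, ys):
            err = sum(w * o for w, o in zip(weights, outs)) - y
            for j, o in enumerate(outs):
                grads[j] += 2.0 * err * o / n
        weights = [w - learning_rate * g for w, g in zip(weights, grads)]
    return weights


# Toy data (an assumption for the demo): y = 2x + 1, which neither base model fits alone.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

base_models = [fit_mean_model(xs, ys), fit_slope_model(xs, ys)]
# The meta learner never sees x directly, only the base models' outputs.
base_outputs = [[model(x) for model in base_models] for x in xs]
meta_weights = fit_meta_learner(base_outputs, ys)

meta_predictions = [sum(w * o for w, o in zip(meta_weights, outs))
                    for outs in base_outputs]
base_errors = [mse([model(x) for x in xs], ys) for model in base_models]
meta_error = mse(meta_predictions, ys)
print(base_errors, meta_error)
```

For brevity the meta learner is fitted on the same data as the base models; a more careful setup would feed it predictions made on held-out data.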

Traditional machine learning uses a large training dataset exclusive to a given task to train a model and fails abruptly when very few data points are available. This is a very involved process. It contrasts with how humans take in new information and learn new skills. Human beings do not need a large pool of examples to learn a skill. We learn very quickly and efficiently from a handful of examples. Drawing inspiration from how humans learn, meta learning attempts to address these traditional machine learning challenges automatically. It seeks to apply machine learning to learn the most suitable parameters and algorithms for a given task. Meta learning produces a versatile AI model that can learn to perform various tasks without having to be trained from scratch for each one. Even though many researchers and scientists have only recently come to believe that meta learning could get us closer to achieving artificial general intelligence, meta learning research dates as far back as the late 20th century.

Image 1: An Artificial Neural Network. The depth of the hidden layer determines whether it is a deep artificial neural network or not. W1…Wn are weights applied to the output of an individual node before data is sent to the next layer. Image Source: Wikimedia Commons

Research History of Meta Learning

With the advancement of artificial intelligence technology, meta learning has experienced three stages of development. The beginning of the first development stage of meta learning can be traced back to the early 1980s, when Donald B. Maudsley put forward the concept of “meta learning” for the first time. Maudsley regarded meta learning as the synthesis of hypothesis, structure, change, process, and development. He described it as “the process by which learners become aware of and begin to control their already internalized perception, research, learning, and growth habits” (Maudsley, 1980). In 1985, John Biggs used meta learning to describe the specialized application of metacognition (the study of memory-monitoring and self-regulation, meta-reasoning, awareness, and self-awareness) in student learning, and believed that meta learning is a subprocess of metacognition. In 1988, Philip Adey and Michael Shayer combined meta learning with physics and proposed a new method of teaching physics based on meta learning ideas (Adey et al., 1988).

In the early 1990s, the development of meta learning entered the second stage: the concept slowly penetrated the field of machine learning, and many researchers contributed to the early work. One open issue at the time was algorithm selection, that is, identifying which algorithm from a set of complementary algorithms performs best in each scenario and so improves overall performance on a given task. Some researchers recognized that this issue is itself a learning task; as a result, it gradually developed within the machine learning discipline, and a brand-new field, the “meta learning field,” gradually formed (Giraud-Carrier et al., 2004). In 1990, Rendell and Cho proposed a method to characterize classification problems using the meta learning idea and conducted experiments to verify that these classification features have a positive impact on machine learning algorithm behavior such as accuracy and speed (Rendell et al., 1990). However, this idea was mostly used in psychology at that time. In 1992, David Aha further expanded the meta learning idea: for a given dataset with characteristics, say C1, C2, …, Cn, the rule for preferring one algorithm, say A1, over another, say A2, is obtained by a rule-based learning algorithm. With this work, the idea was first applied to the field of machine learning (Aha, 1992). In 1993, Chan and Stolfo proposed that meta learning is a general technique that can combine multiple learning algorithms, and used meta learning strategies to combine classifiers (machine learning algorithms that assign a class label to a data input) computed by different algorithms. Experiments showed that meta learning strategies and algorithms are more effective than other experimental strategies and algorithms (Chan et al., 1993).
In 1994, with the European development of STATLOG (comparative testing of statistical and logical learning), a large-scale project for comparing classification algorithms, the meta learning idea gradually attracted the attention of many scholars (King et al., 1995).

In the 21st century, more and more researchers in the field of machine learning have paid attention to the application of meta learning ideas for algorithm selection, and the development of meta learning has entered the third stage. In 2002, Vilalta and Drissi proposed that meta learning and basic learning differ in the scope of their adaptation levels; meta learning studies how to dynamically select the correct bias by accumulating metaknowledge (that is, knowledge about knowledge), while the bias in basic learning is fixed a priori. In 2017, Finn et al. proposed the Model-Agnostic Meta Learning (MAML) algorithm, which is compatible with any model trained with gradient descent (an optimization algorithm used to find the parameter values of a function that minimize a cost function, best used when the parameters cannot be calculated analytically), and is suitable for a variety of different learning problems, including classification and regression, among others. In 2020, Raghu et al. proposed the Almost No Inner Loop (ANIL) algorithm based on MAML. This algorithm has better computational performance than ordinary MAML on standard image benchmarks. Many other researchers have since proposed meta learning models that employ more advanced machine learning approaches like reinforcement learning, a technique in which a learning agent is able to perceive and interpret its environment, take actions, and learn through trial and error.

Some Approaches to Meta-learning Algorithms

Meta learning is not so different from basic machine learning. In any machine learning task, data samples are given in the form of input-output pairs, say (X, Y), from some underlying distribution, and the goal is to arrive at a function which can predict, say, some Y values for some unseen data X coming from the same distribution. In meta learning, the given data consists of different tasks, which are regarded as samples from some underlying task distribution, and the goal is to come up with a function which can perform ‘well’ on some unseen tasks coming from the same distribution. Like in machine learning, the solution in meta learning is to “learn it.”

Each task is a pair of training data (coming from the same distribution) and a loss function. A loss function measures how far an estimated value is from its true value. It is computed from the output of a machine learning algorithm, which is the input in meta learning. Performing well on a task means having a low loss on unseen data that comes from the same distribution as the training data for that task. An unseen task also has its own training data, which can be used to improve performance on that task. In meta learning, one way of solving a task is to use the given data to learn some parameters of a prediction function that solves that task (Lahoti, 2018). Hence the objective is to come up with some initial set of parameters for a function which, when updated with training data from an unseen task, performs well on that task.
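In symbols, the objective described above can be written roughly as follows. The notation is an assumption introduced here for illustration and is not taken from the cited sources: p(T) is the task distribution, each task T_i comes with training data, held-out data, and a loss L_i, and U stands for whatever update rule adapts the initial parameters θ to a task.

```latex
\theta^{*} \;=\; \arg\min_{\theta} \;
\mathbb{E}_{\mathcal{T}_i \sim p(\mathcal{T})}
\left[ \mathcal{L}_i\!\left( U\!\left(\theta,\, D_i^{\mathrm{train}}\right);\; D_i^{\mathrm{test}} \right) \right]
```

Read from the inside out: adapt θ using a task's training data, measure the loss of the adapted parameters on that task's unseen data, and choose the initial θ that makes this loss small on average over tasks.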

Several approaches are used to learn these parameters, including Optimized Meta Learning and Model-Agnostic Meta learning (MAML).

Optimized Meta Learning

Several learning models have many hyperparameters that are optimizable. A hyperparameter is a parameter that is defined before the start of the learning process and is used to control the learning process. Hyperparameters have a direct impact on the quality of the training process (Wikipedia: Hyperparameter-Machine Learning, 2021). This means that the process of choosing or tuning hyperparameters dramatically affects how well the algorithm learns.

With the ever-increasing complexity of models, however, and of neural networks in particular, a challenge arises: the models become increasingly difficult to configure. Consider a neural network. Human engineers can optimize a few parameters for configuration. This is done through experimentation. Yet, deep neural networks have hundreds of hyperparameters. Such a system is too complicated for humans to optimize fully. There are many ways to optimize hyperparameters, including grid search and random search.

The grid search method uses a manually predetermined set of candidate values for each hyperparameter. Every possible combination of these values is tried, and the combination that performs best is selected. However, this method is referred to as traditional since it is very time consuming and inefficient (Stalfort, 2019).
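A minimal sketch of grid search in plain Python follows. The `validation_loss` function is a hypothetical stand-in for "train a model with these hyperparameters and score it": it runs gradient descent on the toy function f(w) = (w - 3)^2 and returns the final loss. The grid values are likewise assumptions for the demo.

```python
from itertools import product


def validation_loss(learning_rate, num_steps):
    """Hypothetical stand-in for training and scoring a model:
    minimize f(w) = (w - 3)^2 by gradient descent and return the final loss."""
    w = 0.0
    for _ in range(num_steps):
        w -= learning_rate * 2.0 * (w - 3.0)
    return (w - 3.0) ** 2


def grid_search(grid):
    """Try every combination of the candidate values and keep the best one."""
    names = list(grid)
    best_params, best_loss = None, float("inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


# Manually predetermined candidate values (assumed for illustration).
grid = {"learning_rate": [0.01, 0.1, 0.5, 1.1], "num_steps": [5, 20]}
best_params, best_loss = grid_search(grid)
print(best_params, best_loss)
```

The number of evaluations is the product of the list lengths (here 4 x 2 = 8), which is why the cost of grid search grows so quickly as more hyperparameters are added.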

Random search replaces the exhaustive enumeration of grid search, which tries all possible combinations of values, with random sampling. The model is fitted with randomly chosen combinations of hyperparameter values and tested for accuracy (Stalfort, 2019). Since the search is random, there is a possibility that it may miss a few potentially optimal combinations. On the upside, it takes much less time than grid search and often gives near-ideal solutions. Random search can outperform grid search provided that only a few of the hyperparameters matter for the algorithm's performance.
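Random search can be sketched the same way. As before, `validation_loss` is a hypothetical stand-in for training and scoring a model, and the sampling ranges are assumptions chosen for the demo; the only change from an exhaustive search is that combinations are drawn at random for a fixed budget of trials.

```python
import random


def validation_loss(learning_rate, num_steps):
    """Hypothetical stand-in for training and scoring a model:
    minimize f(w) = (w - 3)^2 by gradient descent and return the final loss."""
    w = 0.0
    for _ in range(num_steps):
        w -= learning_rate * 2.0 * (w - 3.0)
    return (w - 3.0) ** 2


def random_search(num_trials, seed=0):
    """Sample hyperparameter combinations at random and keep the best one."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(num_trials):
        params = {
            # Log-uniform sample between 0.001 and 1 (assumed range).
            "learning_rate": 10 ** rng.uniform(-3, 0),
            # Uniform integer between 5 and 50 inclusive (assumed range).
            "num_steps": rng.randint(5, 50),
        }
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best_params, best_loss = random_search(num_trials=20)
print(best_params, best_loss)
```

The budget (`num_trials`) is set independently of how many hyperparameters there are, which is where the time saving over grid search comes from; the price is that the best combination may simply never be drawn.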

Model-Agnostic Meta learning (MAML)

Even though random search generally performs better than grid search, we can do better at arriving at the initialization parameters for a representation function that works well on unseen data. Model-Agnostic Meta Learning (MAML) achieves this goal and is far more effective than grid search or random search approaches. MAML uses gradient descent to look for the initialization parameters that work best for task samples from the task distribution (Finn et al., 2017). Gradient descent minimizes a given function by iteratively moving in the direction of steepest descent. It is used to update the parameters of the model (Andrychowicz et al., 2016). The meta learner seeks to find an initialization that is not only useful for adapting to various tasks, but can also be adapted quickly (in a small number of steps) and efficiently (using only a few examples). Image 2 shows a visualization.

Image 2: Diagram of the model-agnostic meta learning algorithm (MAML), which optimizes for a representation θ that can quickly adapt to new tasks. Image Source: Wikimedia Commons

Image 3: Qualitative changes during the adaptation phase of learning under the mass-voltage task. Image Source: Wikimedia Commons

Suppose we are seeking to find a set of parameters θ that are highly adaptable. During the course of meta learning (the bold line), MAML optimizes for the set of parameters such that when a gradient step is taken with respect to the loss Li of a particular task i (the gray lines), the parameters are close to the optimal parameters θi* for that task (Finn et al., 2017).
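The two nested updates described above can be sketched on a deliberately tiny problem. This is a toy under stated assumptions, not the implementation from Finn et al.: the parameter θ is a single number, each task i has the quadratic loss (θ - target_i)^2, adaptation is one gradient step, and every meta step uses all tasks rather than a sampled batch. The function names and learning rates are hypothetical.

```python
def task_loss(theta, target):
    """Loss of one task: squared distance from that task's optimum."""
    return (theta - target) ** 2


def task_grad(theta, target):
    """Derivative of task_loss with respect to theta."""
    return 2.0 * (theta - target)


def adapt(theta, target, inner_lr):
    """Inner loop: one gradient step on a single task."""
    return theta - inner_lr * task_grad(theta, target)


def maml_train(task_targets, inner_lr=0.1, outer_lr=0.1, meta_steps=200):
    """Outer loop: move the initialization so that the loss AFTER adaptation is low."""
    theta = 0.0
    for _ in range(meta_steps):
        meta_grad = 0.0
        for target in task_targets:
            adapted = adapt(theta, target, inner_lr)
            # Chain rule through the inner step: d(adapted)/d(theta) equals
            # 1 - inner_lr * (second derivative of the loss), and that second
            # derivative is 2 for this quadratic.
            meta_grad += task_grad(adapted, target) * (1.0 - 2.0 * inner_lr)
        theta -= outer_lr * meta_grad / len(task_targets)
    return theta


# Three hypothetical tasks whose optimal parameters are 1, 2 and 6.
task_targets = [1.0, 2.0, 6.0]
theta_star = maml_train(task_targets)

# Adapting to one task from the learned initialization with a single step.
adapted_theta = adapt(theta_star, 6.0, inner_lr=0.1)
print(theta_star, adapted_theta)
```

In this one-dimensional quadratic case the learned initialization ends up at the average of the task optima, which makes the sketch easy to check by hand; with neural networks the derivative through the inner step is obtained by automatic differentiation instead of a closed form.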

This approach is quite simple and has several advantages. It does not make any assumptions about the form of the model. It is quite efficient: there are no additional parameters introduced for meta learning, and the learner’s strategy uses a known optimization process (gradient descent), rather than having to come up with one from scratch (Weng L., 2018). Lastly, it can be easily applied to several domains, including classification and regression (Rahul B., 2021).

Meta Learning in the Field of Robot Learning

In the rapidly developing field of robotic learning (Azizi, 2020), the application prospects of meta learning are very broad. In robot operation skills especially, meta learning, as a ‘learning to learn’ method, has made good progress (Peters, …). The diagram in Image 3 demonstrates the effectiveness of MAML in training robot learners with only a few episodes (i.e., batches of tasks) of adaptation.

For the mass-voltage task, the initial meta-policy steered the robot significantly to the right due to the extra mass and voltage change, which caused an imbalance in the robot’s body and leg motors. However, after 30 episodes of adaptation using the evolutionary strategy MAML method, the robot straightens its walking pose, and after 50 episodes, the robot can balance its body completely and is able to walk longer distances (Xingyou S. et al., 2020). These observations further reinforce how valuable MAML is, especially in robot training.

With the development of robot technology in the fields of home, factory, national defense, and outer-space exploration (Tan et al., 2013), the autonomous operation ability of robots has attracted more attention from the public, and it is expected that robots can replace humans in more complex multi-domain tasks. Therefore, there is a need to develop more advanced methods for robots to learn operating skills. However, improving the ability of robots to learn operating skills autonomously and quickly remains a major issue in the field of robot learning (Ji et al., 2021). In addressing this challenge, Finn et al. proposed a Meta-Imitation Learning (MIL) method by extending MAML to imitation learning (Finn et al., 2017). This method allows robots to master new skills from only one demonstration, which improves robot learning efficiency. Its ability to learn new tasks has been verified both in simulation and in visual demonstration experiments on a real robot platform, where it was reported to be far superior to the latest imitation learning methods of the time.

Still, new robust meta learning approaches in robotics keep being developed. For instance, Yu et al. proposed a Domain-Adaptive Meta Learning (DAML) method that allows the learning of cross-domain correspondences, so that robot learners can visually recognize and manipulate new objects after observing only a single video demonstration of a human user, achieving the effect of one-time learning (Yu et al., 2018). This way, meta learning can not only help robots with imitation learning, but also cultivate their ability to learn to learn.

Conclusion

Meta-learning opportunities present themselves in many ways and can be embraced using a wide spectrum of learning techniques. Every time we try to learn a certain task, whether successful or not, we gain useful experience that we can leverage to learn new tasks. We should never have to start entirely from scratch. Instead, we should systematically collect our ‘learning exhaust’ and learn from it to build auto-machine learning systems that continuously improve over time, helping us tackle new learning problems ever more efficiently. The more new tasks we encounter, and the more similar those new tasks are, the more we can tap into prior experience, to the point that most of the required learning has already been done beforehand. The ability of computer systems to store virtually infinite amounts of prior learning experiences (in the form of metadata) opens a wide range of opportunities to use that experience in completely new ways, and we are only starting to learn how to learn from prior experience effectively. Yet, this is a worthy goal: learning how to learn any task empowers us far beyond knowing how to learn specific tasks.

Meta learning not only addresses problems encountered by conventional artificial intelligence but also addresses the problems of machine learning’s prediction accuracy and efficiency in data science. Consequently, the role of meta learning in artificial intelligence is indispensable. With traditional machine learning techniques paving the way to meta learning, researchers are now able to use meta learning models across tasks instead of training new models on new data from scratch for each different task (Huisman, 2021). At the same time, since the birth of artificial intelligence in the 1950s, people have always wanted to build machines that learn and think about big data like humans (Lake, 2019). The meta learning algorithm, based on the idea that “it is better to teach them how to fish than to give them fish” and dedicated to helping artificial intelligence learn how to learn from big data, is the ideal solution to realize this goal, and it is also a new generation force in the development of artificial intelligence. In the future, researchers will also make breakthroughs in more challenging directions. Perhaps the million-dollar question remains the same: How can we use knowledge about learning (i.e., metaknowledge) to improve the performance of learning algorithms? The answer to this question is the key to progress in this field and will continue to be the subject of in-depth research.

References

Adey, P., et al. (1988) Strategies for Meta-Learning in Physics. Physics Education. Ref: https://iopscience.iop.org/

Image 4: Meta Imitation Learning and Domain-Adaptive Meta Learning on a robot learner. The left image shows the robot learner being taught the task. Note the bowl in which the peach is being delivered. The image on the right shows how the robot learner imitates the action perfectly even after the positions of the receiver plates are shuffled randomly. Image Source: Wikimedia Commons

Andrychowicz, M., et al. (2016). Learning to Learn by Gradient Descent. e1606.04474. https://arxiv.org/abs/1606.04474

Aha, D. W. (1992). Generalizing from Case Studies: A Case Study. Proceedings of the 9th International Workshop on Machine Learning. Aberdeen, UK. https://www.sciencedirect.com/science/article/pii/B9781558602472500061

Azizi, A. (2020). Applications of Artificial Intelligence Techniques to Enhance Sustainability of Industry 4.0: Design of an Artificial Neural Network Model as Dynamic Behavior Optimizer of Robotic Arms. Complexity, 2020, e8564140. https://www.hindawi.com/journals/complexity/2020/8564140/

Biggs, J. B. (1985). The Role of Meta-Learning in Study Processes. British Journal of Educational Psychology. https://bpspsychub.onlinelibrary.wiley.com/doi/abs/10.1111/j.2044-8279.1985.tb02625.x

Burns, E. (2021). In-depth Guide to Machine Learning in the Enterprise. https://searchenterpriseai.techtarget.com/In-depthguide-to-machine-learning-in-the-enterprise

Finn, C., et al. (2017). Model-agnostic Meta-Learning for Fast Adaptation of Deep Networks. Proceedings of the 2017 International Conference on Machine Learning. Sydney, Australia. http://proceedings.mlr.press/v70/finn17a

Giraud-Carrier, C., et al. (2004). Introduction to the Special Issue on Meta-Learning. Machine Learning. https://link.springer.com/article/10.1023/B:MACH.0000015878.60765.42

Hartman, T. (2019). Meta-Modelling Meta Learning. https://medium.com/datathings/metamodelling-meta-learning-34734cd7451b

Huisman, M., et al. (2021). A Survey of Deep Meta-Learning. Artificial Intelligence. https://scholar.google.com/scholar_lookup?title=A%20survey%20of%20deep%20meta-learning&author=M.%20Huisman&author=J.%20N.%20van%20Rijn&author=&author=A.%20Plaat&publication_year=2021

Lahoti, S. (2018). What is Meta Learning? https://hub.packtpub.com/what-is-metalearning/

Madhava, S. (2017). Deep Learning Architectures. https://developer.ibm.com/articles/cc-machine-learning-deep-learningarchitectures/

Raghu, A., et al. (2019). Rapid Learning or Feature Reuse? Towards Understanding the Effectiveness of MAML. https://arxiv.org/abs/1909.09157

Rahul, B. (2021). How to Run Model-Agnostic Meta Learning (MAML) Algorithm. https://towardsdatascience.com/how-torun-model-agnostic-meta-learning-mamlalgorithm-c73040069810

Rendell, L., et al. (1990). Empirical Learning as a Function of Concept Character. Machine Learning. https://link.springer.com/article/10.1007/BF00117106

Rozantsev, A., et al. (2018). Beyond Sharing Weights for Deep Domain Adaptation. IEEE Transactions on Pattern Analysis and Machine Intelligence. https://scholar.google.com/scholar_lookup?title=Beyond%20sharing%20weights%20for%20deep%20domain%20adaptation&author=A.%20Rozantsev&author=M.%20Salzmann&author=&author=P.%20Fua&publication_year=2018

Santoro, A. (2016). Google DeepMind. Meta Learning with Augmented Neural Networks. http://proceedings.mlr.press/v48/santoro16.pdf

Stalfort, J. (2019). Hyperparameter Tuning Using Grid Search and Random Search: A Conceptual Guide. https://medium.com/@jackstalfort/hyperparameter-tuning-using-grid-search-andrandom-search-f8750a464b35

Triantafillou, E., et al. (2019). Meta-Dataset: A Dataset of Datasets for Learning to Learn from a Few Examples. https://arxiv.org/abs/1903.03096

You, J., et al. (2020). Graph Structure of Neural Networks. In International Conference on Machine Learning (pp. 10881-10891). http://proceedings.mlr.press/v119/you20b.html

Vilalta R., et al. (2002). A Perspective View and Survey of Meta-Learning. Artificial Intelligence Review. https://link.springer.com/article/10.1023/A:1019956318069

Weng L. (2018). Meta Learning: Learning to Learn Fast. https://lilianweng.github.io/lillog/2018/11/30/meta-learning.html

Xingyou, S., et al. (2020). Google AI Blog. Exploring Evolutionary Meta Learning in Robotics. https://ai.googleblog.com/2020/04/exploring-evolutionary-meta-learning-in.html

Xu, Z., et al. (2019). Meta-learning via Weighted Gradient Update. IEEE Access. 2019. https://ieeexplore.ieee.org/abstract/document/8792107