SPECIAL TOPIC

SPECIAL TOPIC

EAGE NEWS Association reveals short courses for Annual INDUSTRY NEWS UK’s first CCUS projects move ahead

TECHNICAL ARTICLE Volumetric assessment of resources in Brazil’s Parnaíba Basin

CHAIR EDITORIAL BOARD

Clément Kostov (cvkostov@icloud.com)

EDITOR

Damian Arnold (arnolddamian@googlemail.com)

MEMBERS, EDITORIAL BOARD

• Lodve Berre, Norwegian University of Science and Technology (lodve.berre@ntnu.no)

Philippe Caprioli, SLB (caprioli0@slb.com) Satinder Chopra, SamiGeo (satinder.chopra@samigeo.com)

• Anthony Day, TGS (anthony.day@tgs.com)

• Peter Dromgoole, Retired Geophysicist (peterdromgoole@gmail.com)

• Kara English, University College Dublin (kara.english@ucd.ie)

• Stephen Hallinan, Viridien (Stephen.Hallinan@viridiengroup.com)

• Hamidreza Hamdi, University of Calgary (hhamdi@ucalgary.ca)

Fabio Marco Miotti, Baker Hughes (fabiomarco.miotti@bakerhughes.com)

Susanne Rentsch-Smith, Shearwater (srentsch@shearwatergeo.com)

• Martin Riviere, Retired Geophysicist (martinriviere@btinternet.com)

• Angelika-Maria Wulff, Consultant (gp.awulff@gmail.com)

EAGE EDITOR EMERITUS Andrew McBarnet (andrew@andrewmcbarnet.com)

PUBLICATIONS MANAGER Hang Pham (publications@eage.org)

MEDIA PRODUCTION

Saskia Nota (firstbreakproduction@eage.org) Ivana Geurts (firstbreakproduction@eage.org)

ADVERTISING INQUIRIES corporaterelations@eage.org

EAGE EUROPE OFFICE

Kosterijland 48 3981 AJ Bunnik

The Netherlands

• +31 88 995 5055

• eage@eage.org

• www.eage.org

EAGE MIDDLE EAST OFFICE

EAGE Middle East FZ-LLC

Dubai Knowledge Village PO Box 501711

Dubai, United Arab Emirates

• +971 4 369 3897

• middle_east@eage.org www.eage.org

EAGE ASIA PACIFIC OFFICE

EAGE Asia Pacific Sdn. Bhd.

UOA Centre Office Suite 19-15-3A No. 19, Jalan Pinang

50450 Kuala Lumpur

Malaysia +60 3 272 201 40

• asiapacific@eage.org

• www.eage.org

EAGE LATIN AMERICA OFFICE

EAGE Americas SAS Av. 19 #114-65 - Office 205 Bogotá, Colombia

• +57 310 8610709

• americas@eage.org

• www.eage.org

EAGE MEMBERS’ CHANGE OF ADDRESS

Update via your MyEAGE account, or contact the EAGE Membership Dept at membership@eage.org

FIRST BREAK ON THE WEB www.firstbreak.org

ISSN 0263-5046 (print) / ISSN 1365-2397 (online)

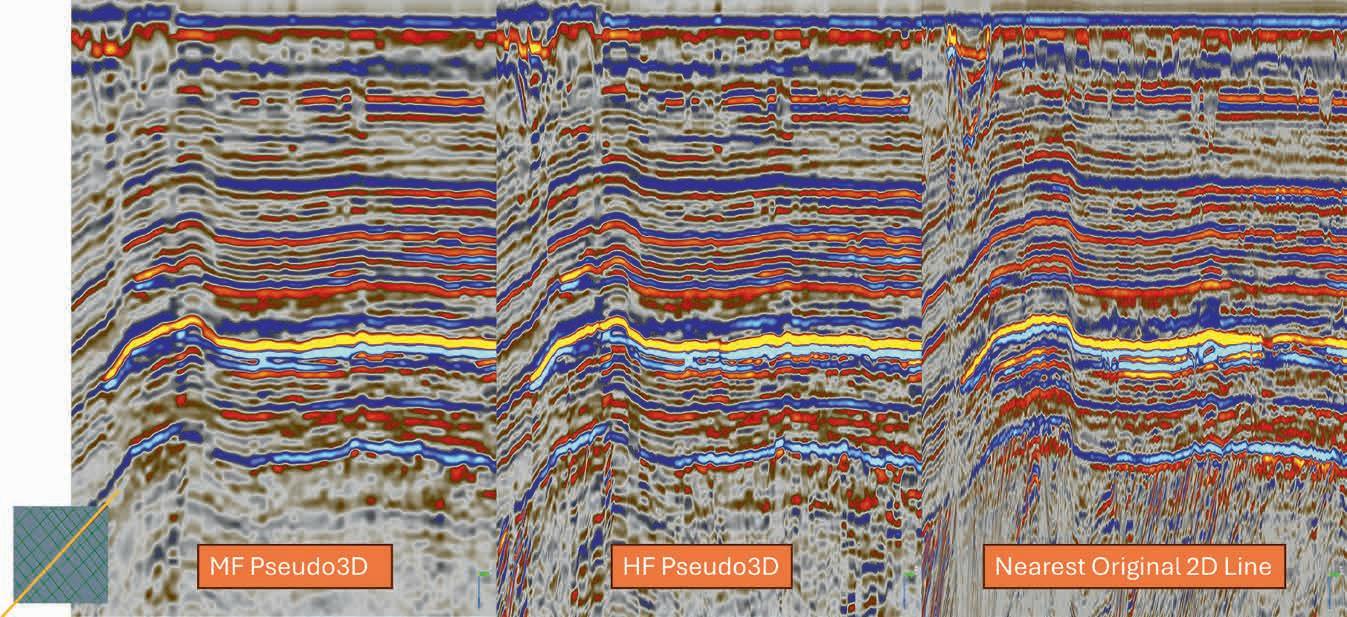

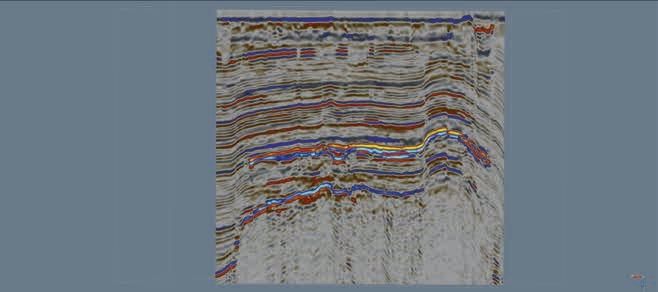

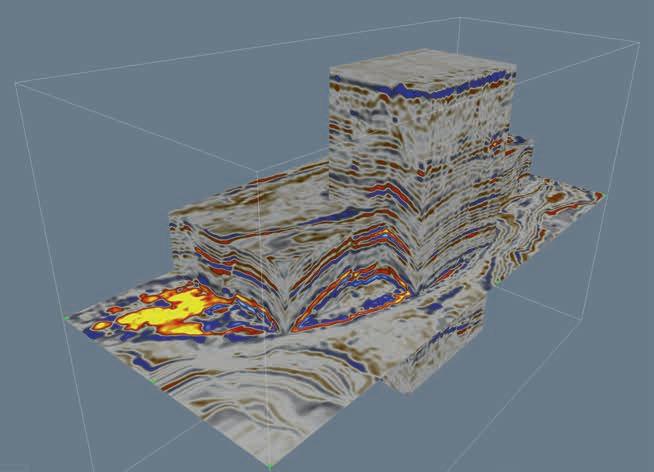

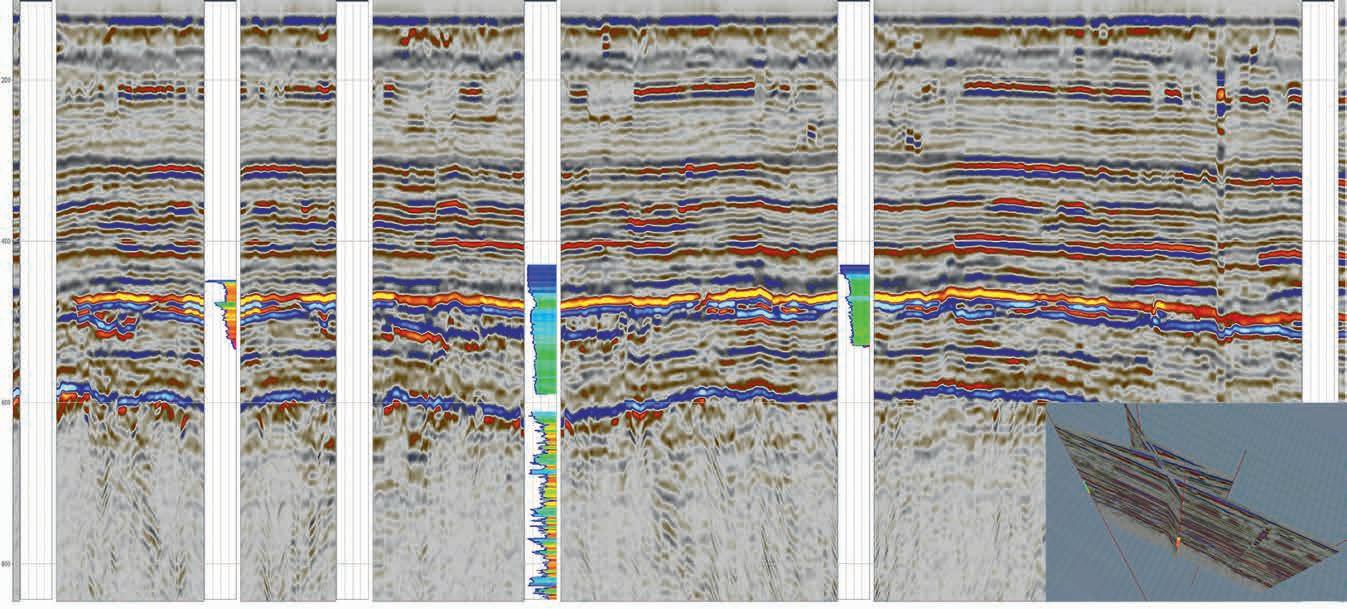

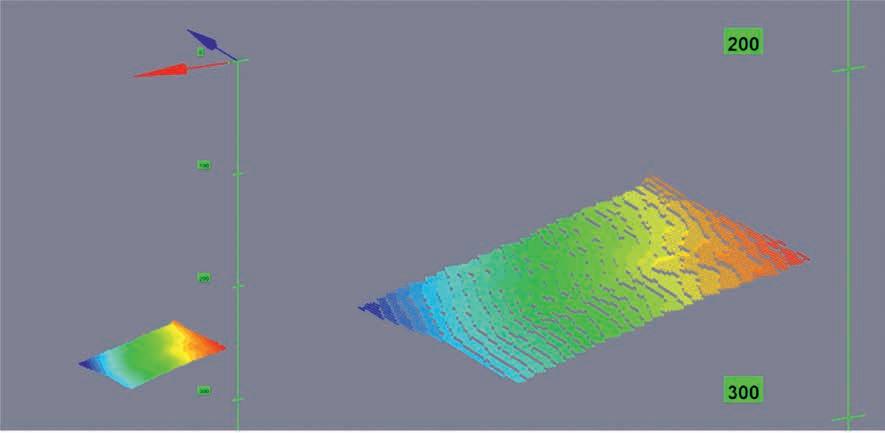

‘Pseudo3D’,

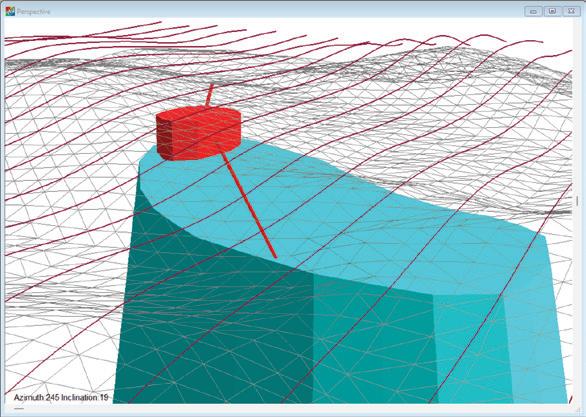

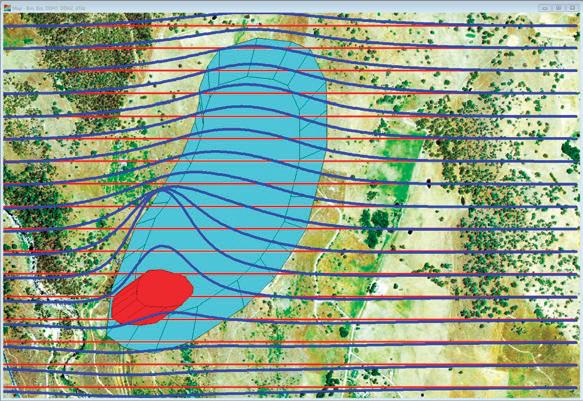

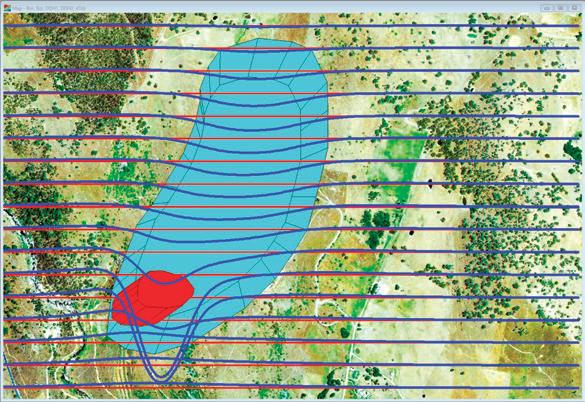

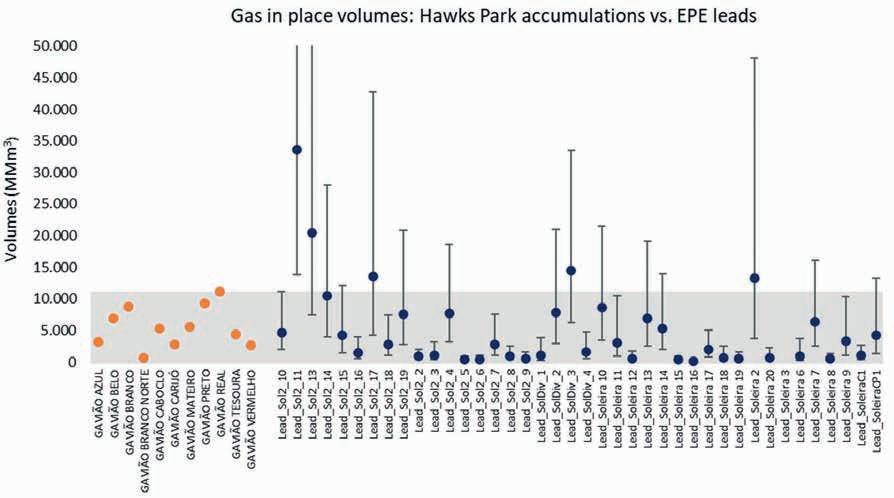

27 Volumetric potential assessment of prospective resources of Devonian and Carboniferous plays in the Parnaíba Basin

C.M. Carvalho, K.S. d’Almeida and P.V. Zalán

35 Legacy insights to modern CCS evaluation: An integrated approach to optimising subsurface suitability analysis

Mike Powney, Jeniffer Masi, Theresia Citraningtyas, Behzad Alaei, Sharon Cornelius, Felix Dias and Pete Emmet

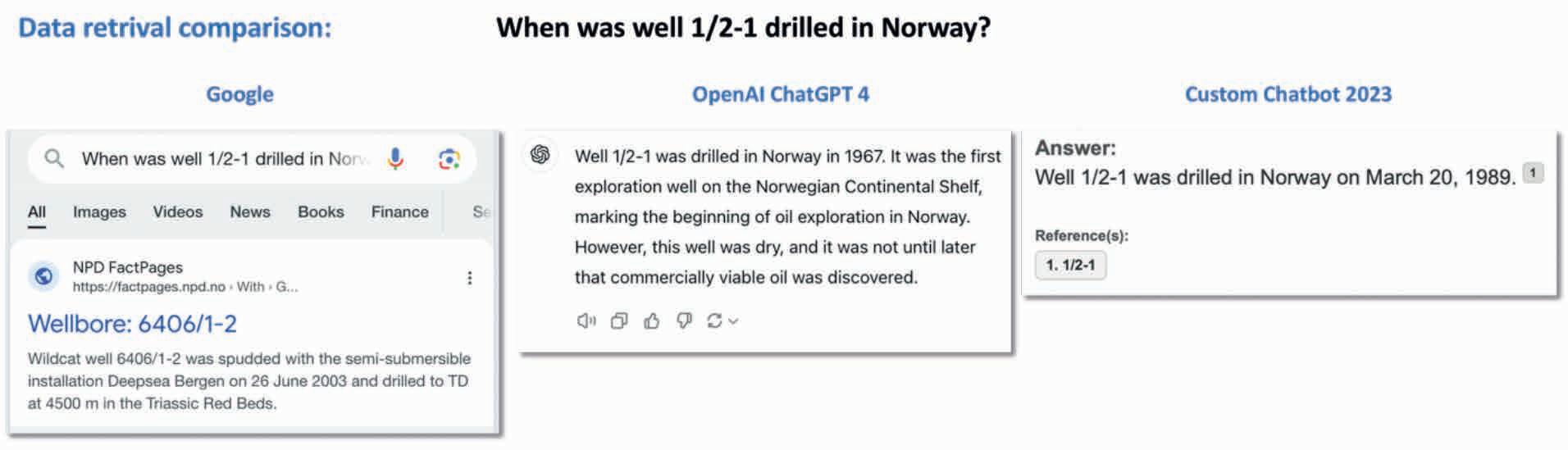

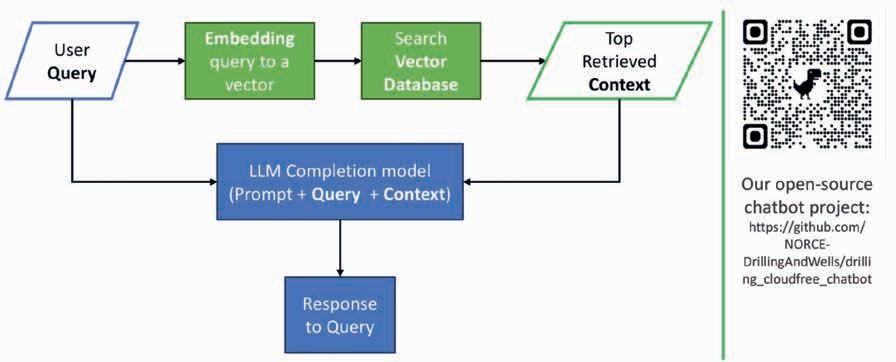

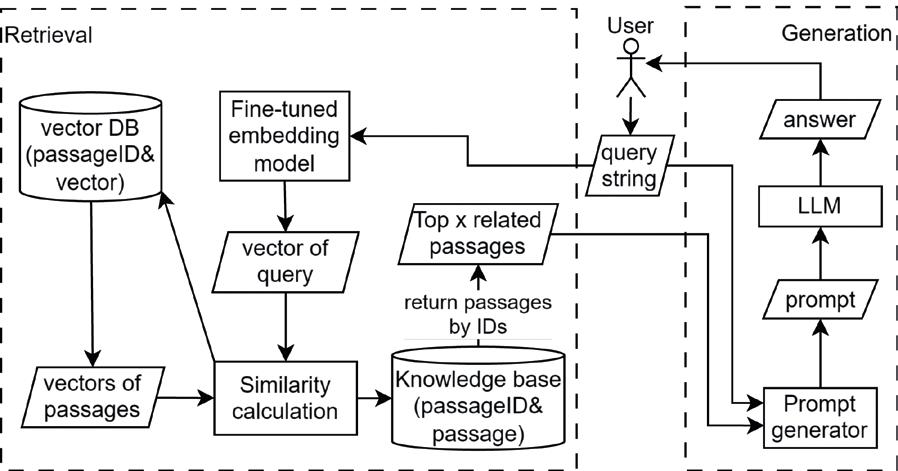

43 Cloud-free question answering from internal knowledge bases: Building an AI for drilling applications

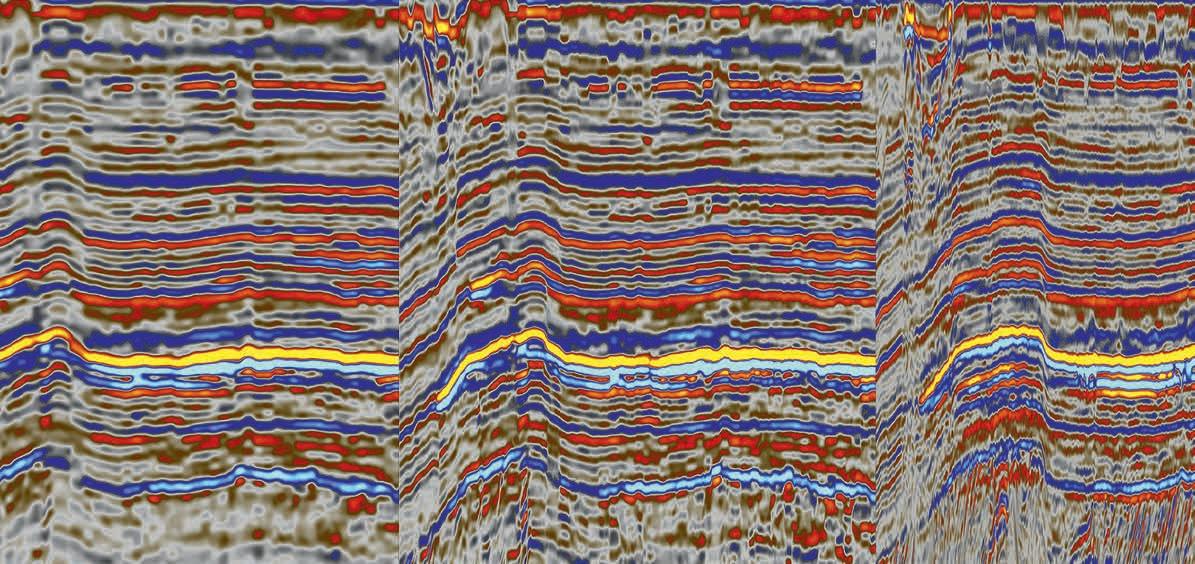

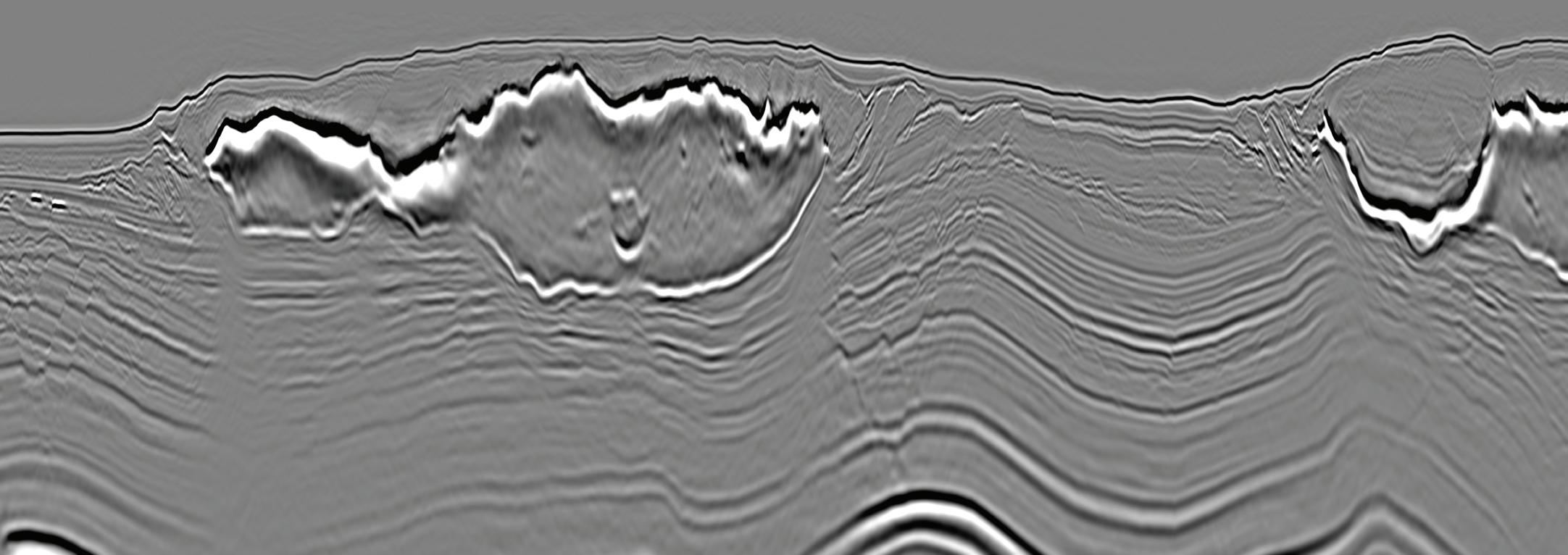

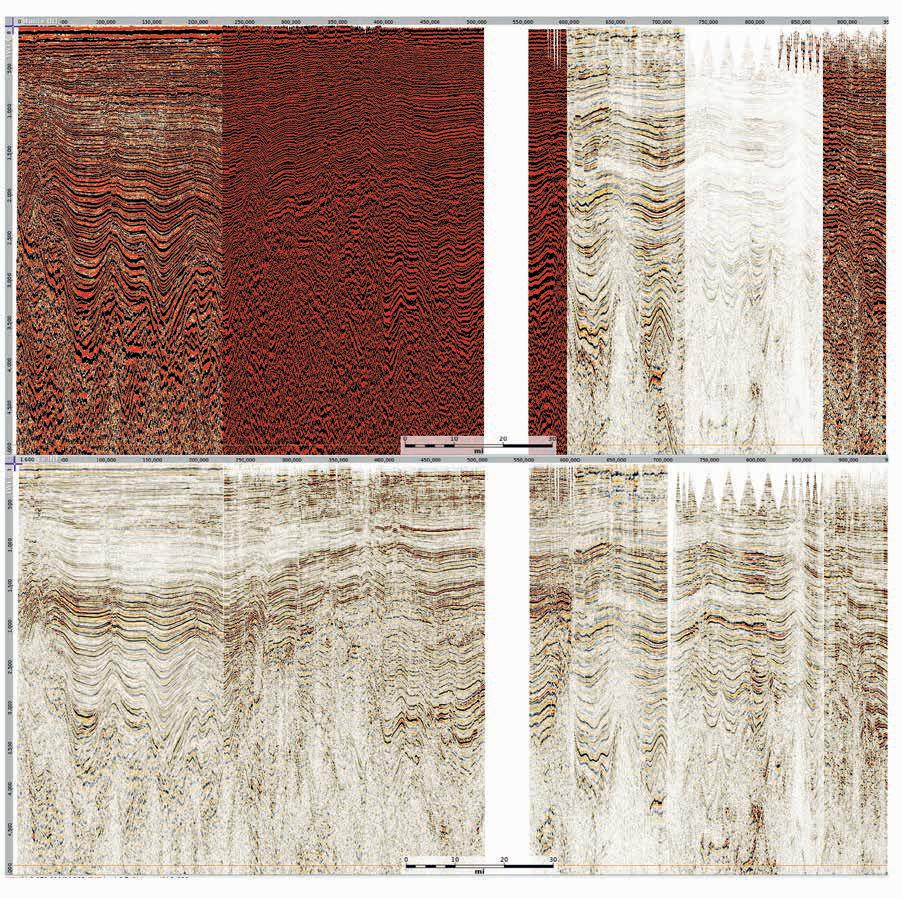

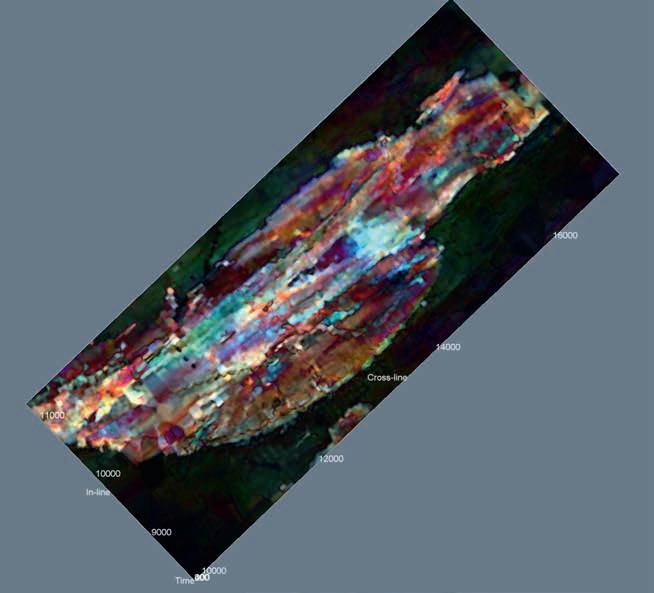

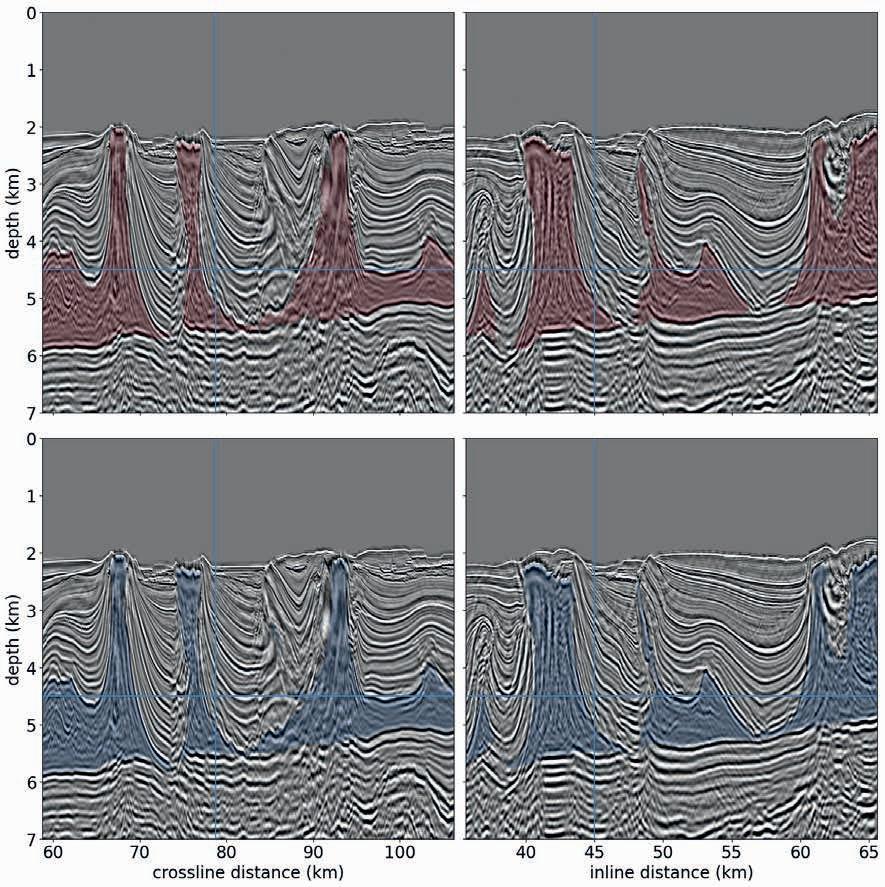

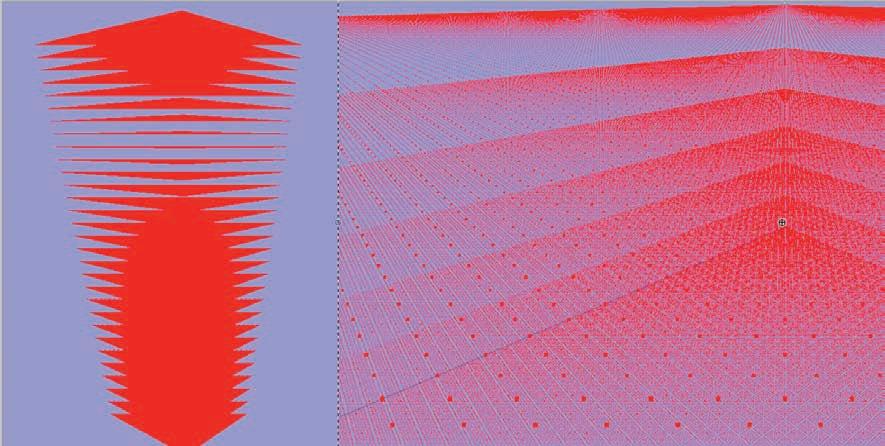

Sergey Alyaev, Liang Zhang Felix James Pacis and Tomasz Wiktorski

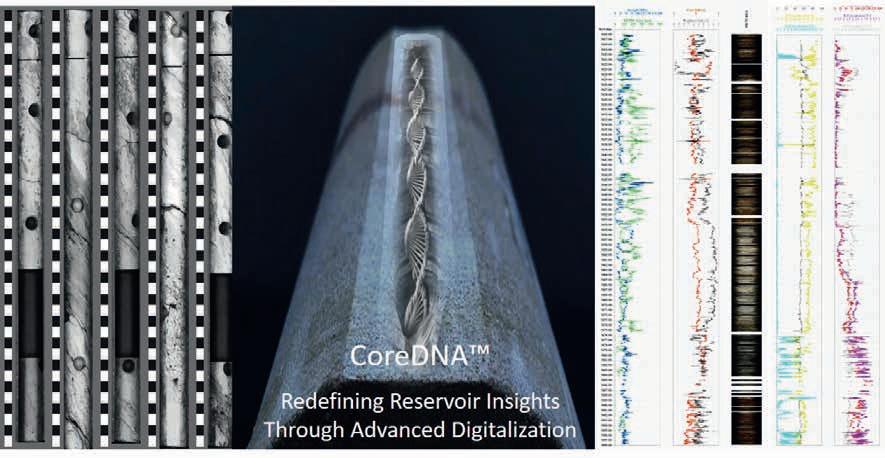

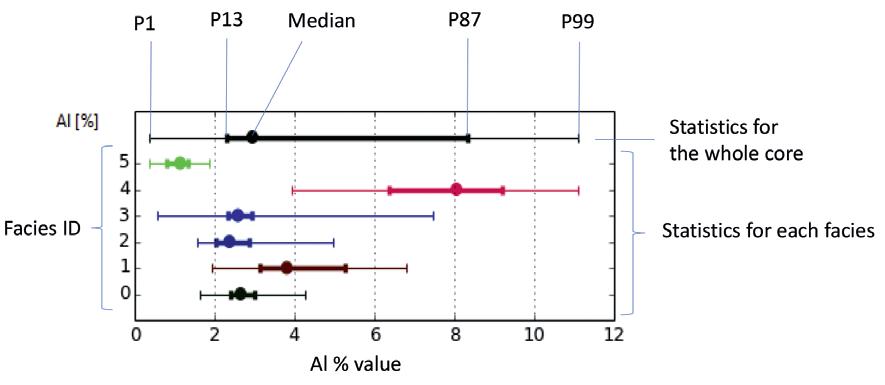

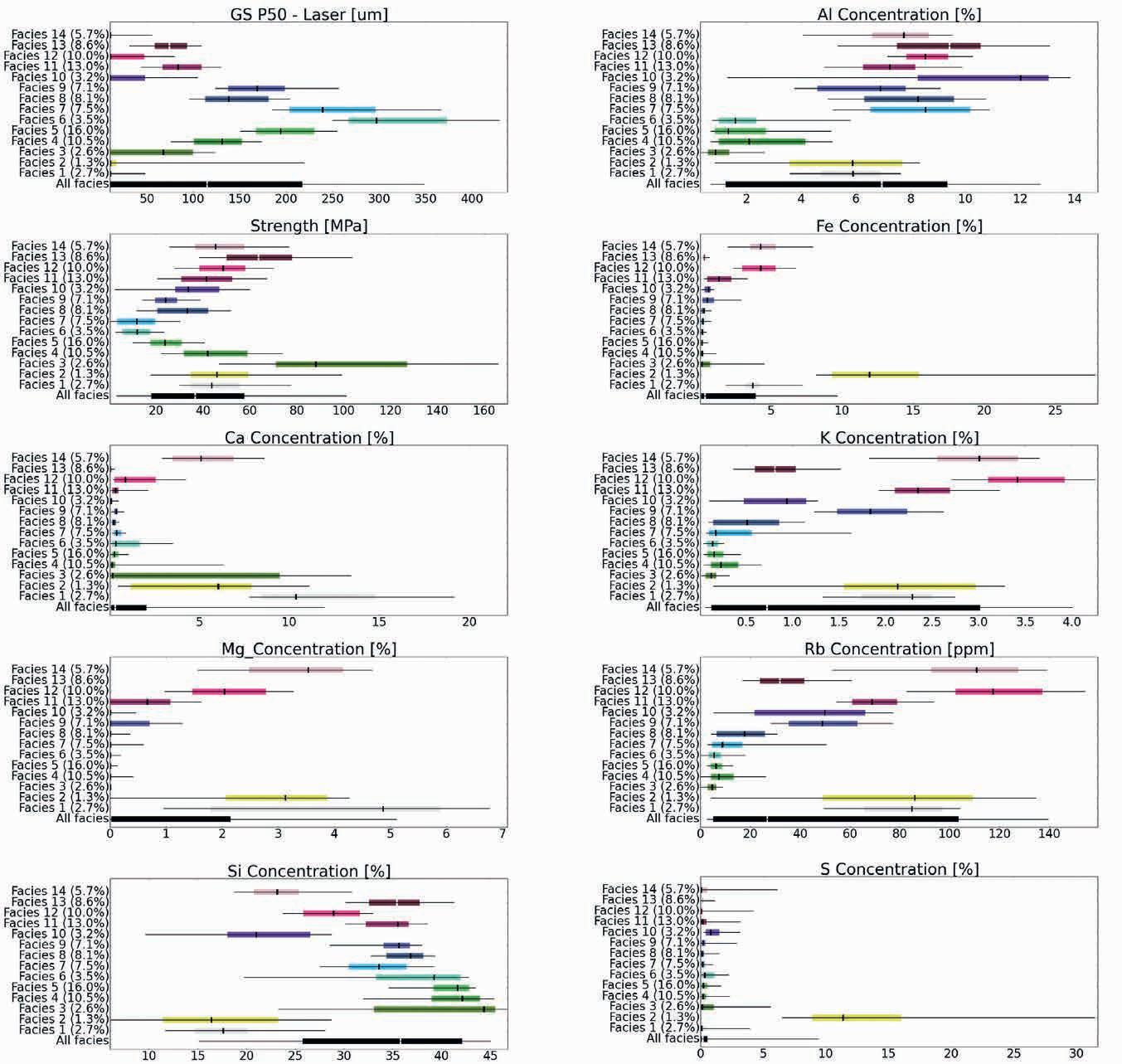

51 Revolutionising subsurface evaluation with advanced core digitalisation

Christophe Germay, Tanguy Lhomme and Jenny Omma

59 ‘Pseudo3D’, a post-stack approach to transforming 2D seismic into 3D D. Markus, K. Rimaila, P. de Groot, and R. Muammar

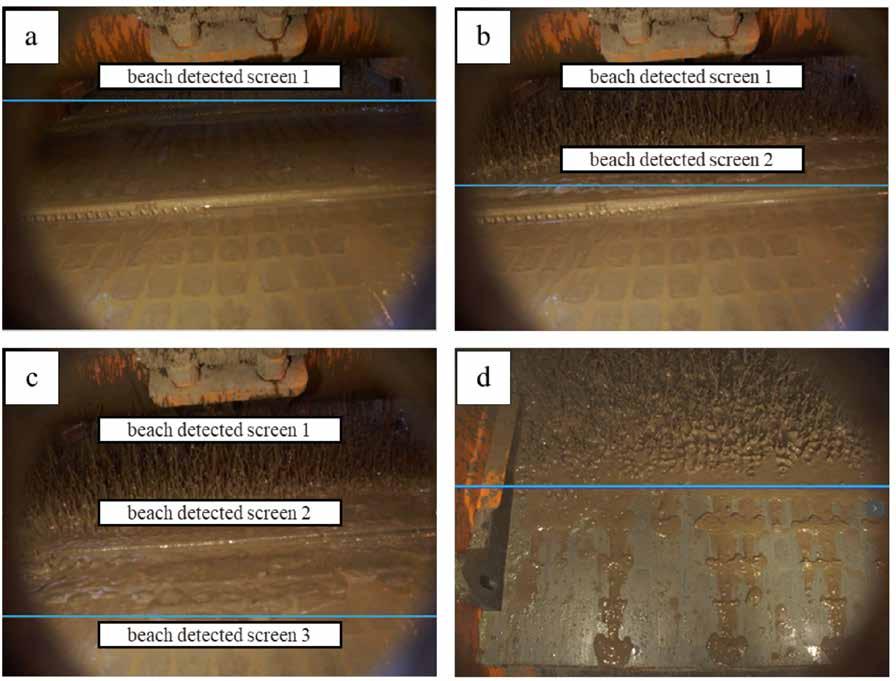

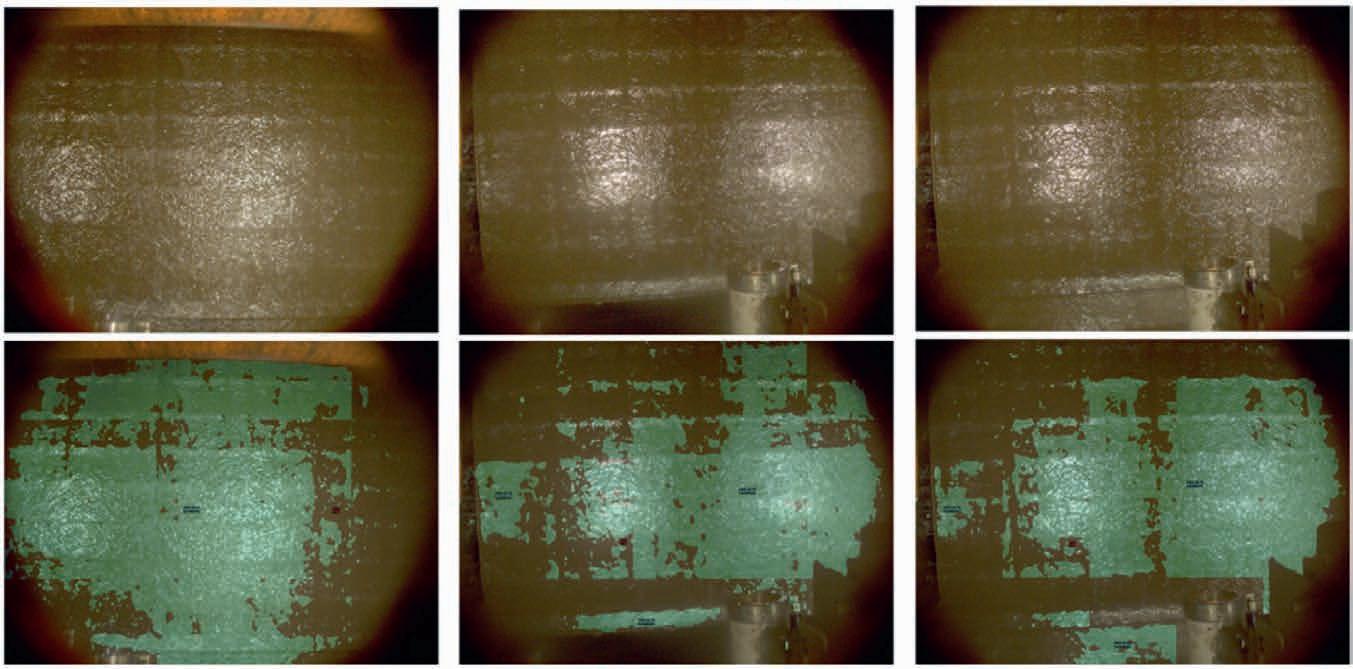

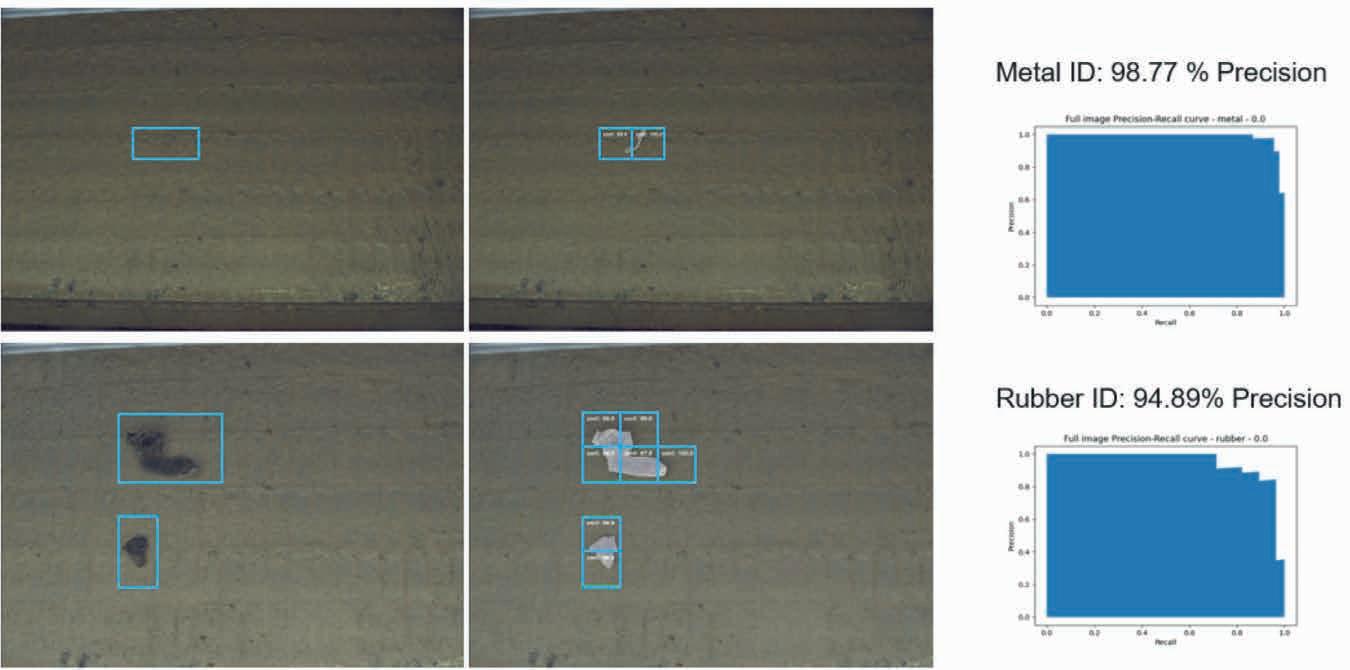

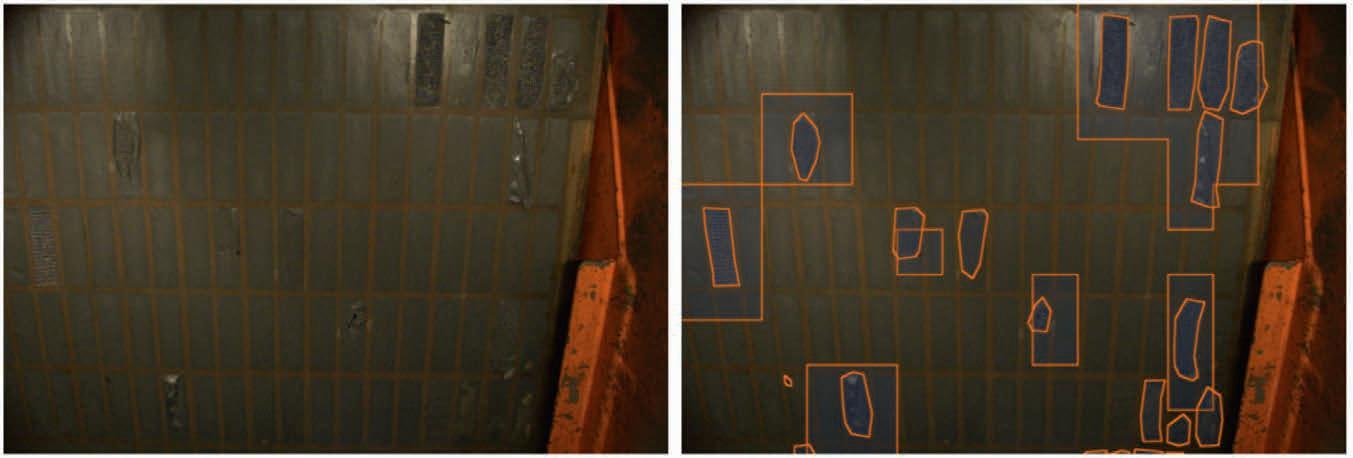

63 Advancing drilling safety and efficiency: Automated shale shaker and borehole instability monitoring with AI and computer vision

Mario Ruggiero and Ivo Colombo

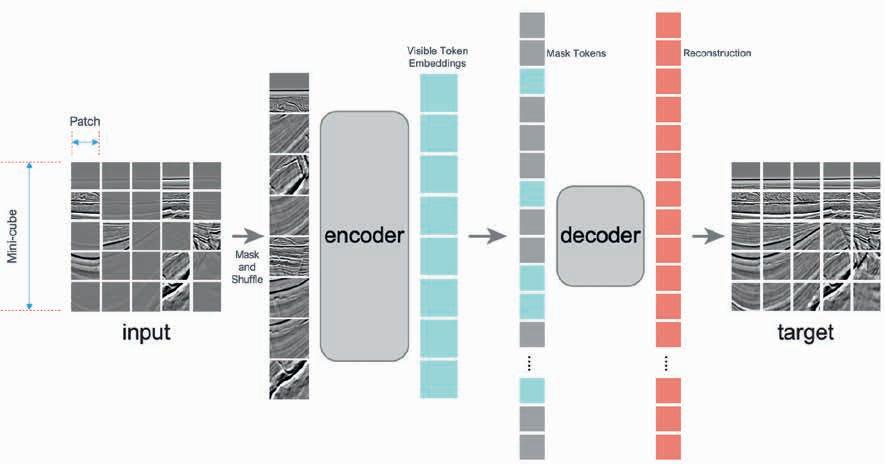

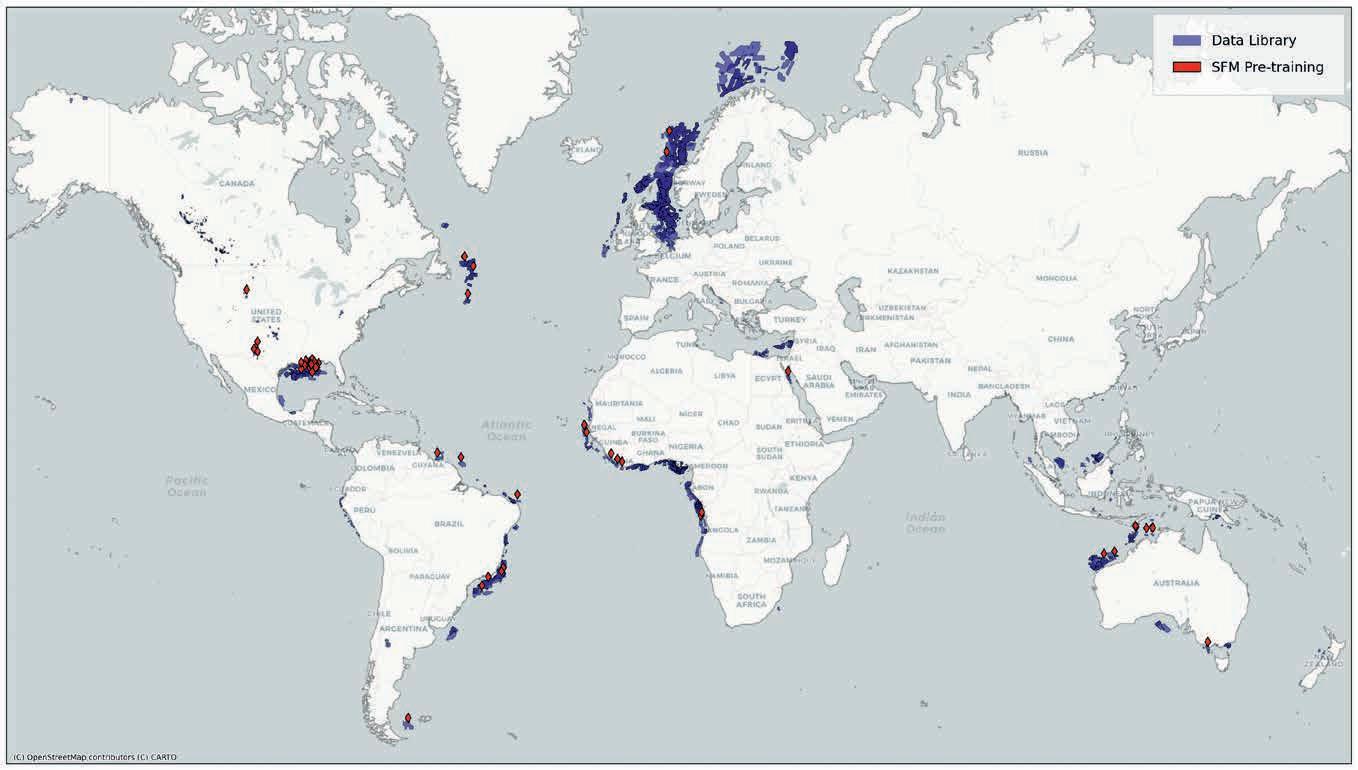

69 Scaling seismic foundation models

Altay Sansal, Ben Lasscock and Alejandro Valenciano

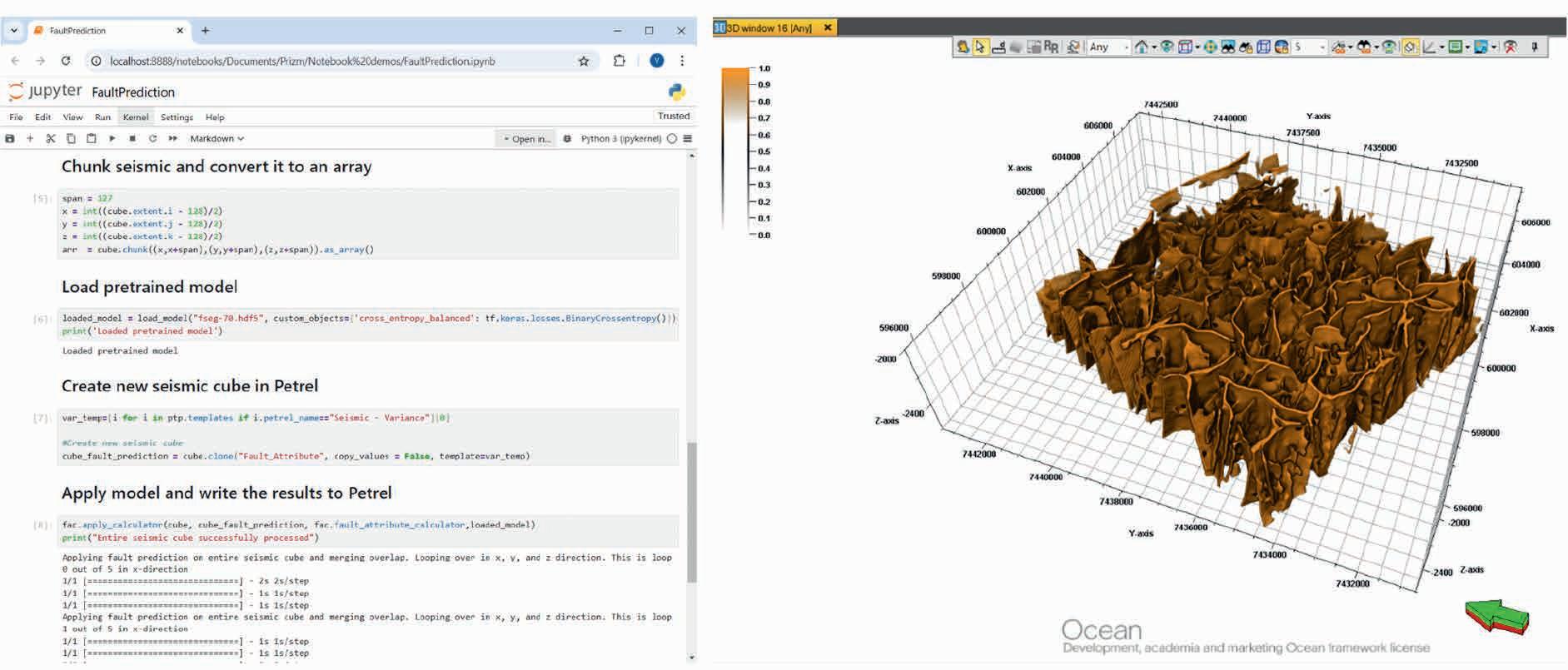

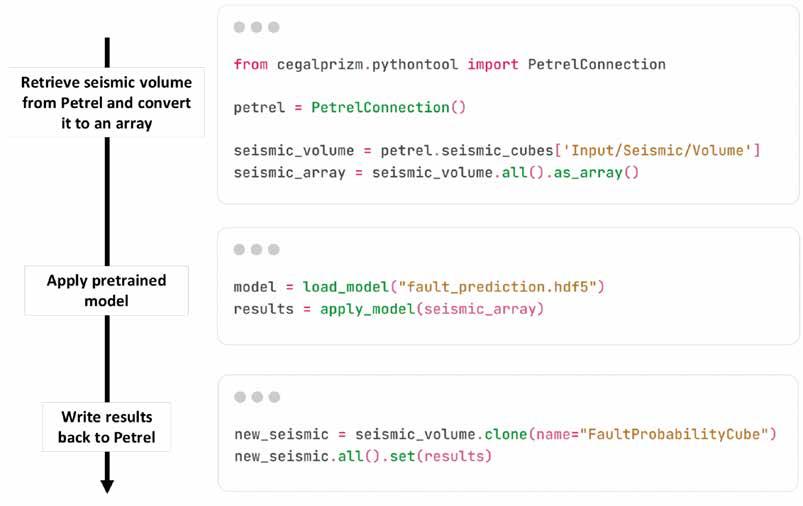

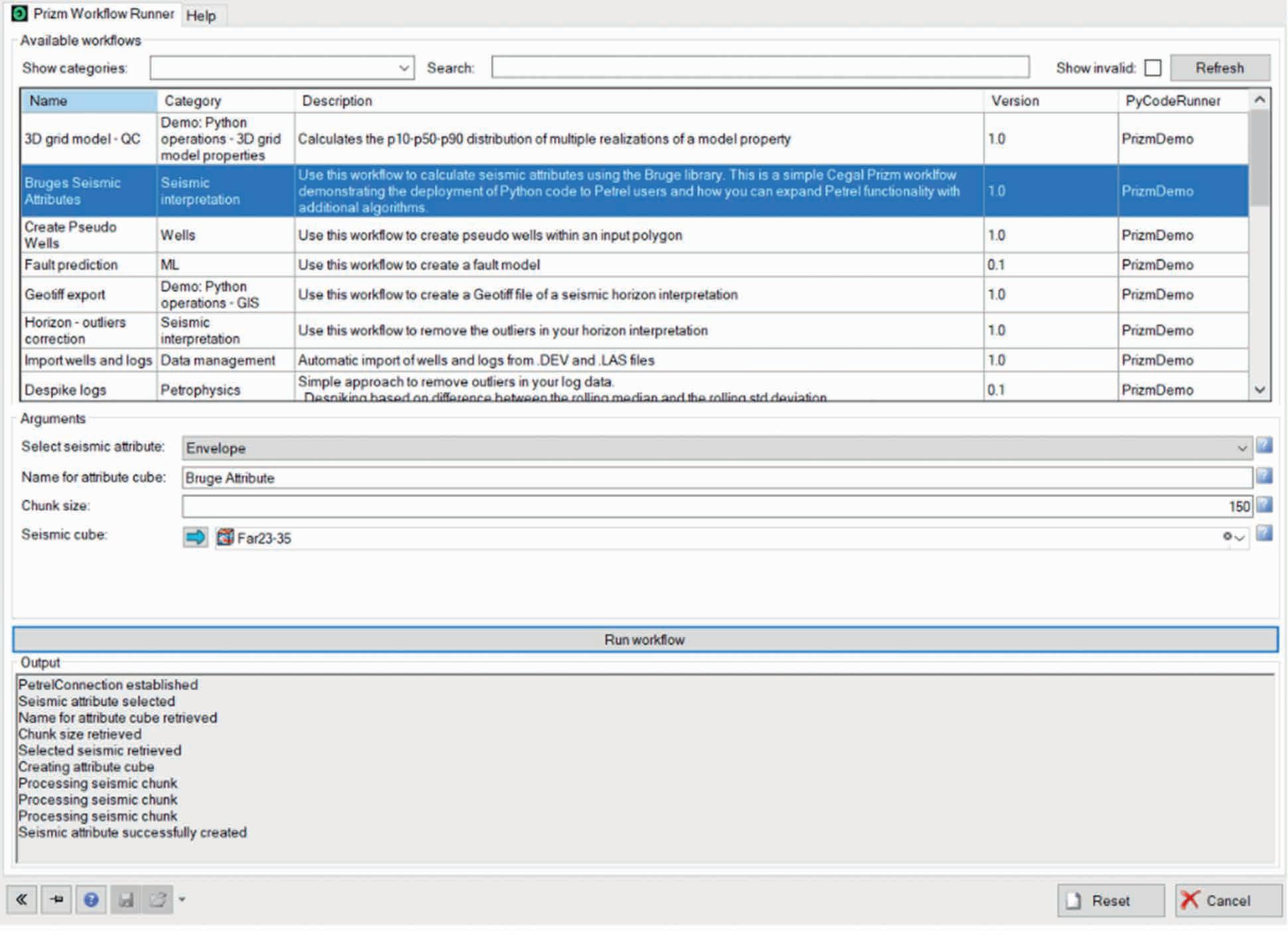

75 Empowering subsurface experts: Seamless integration of research and data into Petrel workflows with advanced Python tools

Julie Vonnet, Vlad Rotar and James Goldwater

81 Fully automated seismic horizons and surfaces detection

Norman Mark

Features

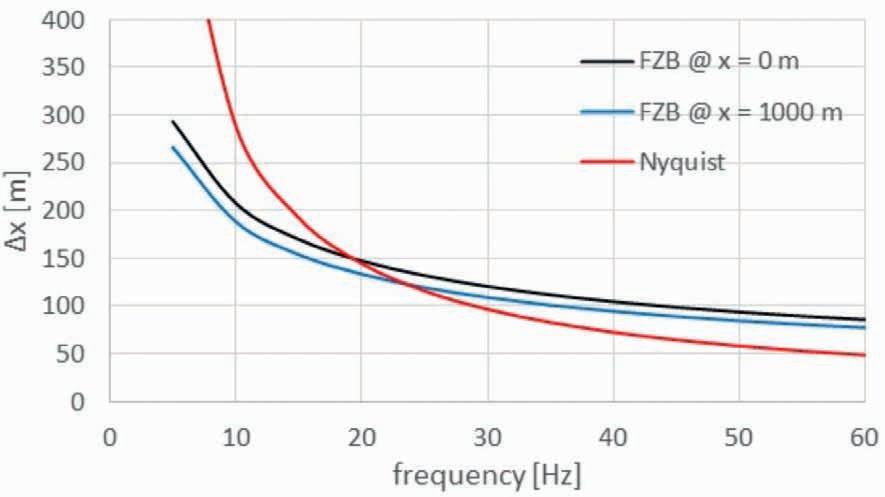

87 Discussion of paper on the theory of wavefield sampling by Goodway et al. (2024) Gijs J.O. Vermeer

92 The authors of Land 3D acquisition design: Theory of wavefield sampling. Part 1 and 2. Andrea Crook, Bill Goodway, Mostafa Naghizadeh, Michael Hons and Cameron Crook

94 Calendar

cover: Ikon Science’s artist’s impression of the digitally enhanced geoscientist. This month we explore innovations in machine learning and artificial intelligence.

Andreas Aspmo Pfaffhuber Chair

Florina Tuluca Vice-Chair

Esther Bloem Immediate Past Chair

Micki Allen Contact Officer EEGS/North America

Hongzhu Cai Liaison China

Deyan Draganov Technical Programme Officer

Eduardo Rodrigues Liaison First Break

Hamdan Ali Hamdan Liaison Middle East

Vladimir Ignatev Liaison CIS / North America

Musa Manzi Liaison Africa

Myrto Papadopoulou Young Professional Liaison

Catherine Truffert Industry Liaison

Mark Vardy Editor-in-Chief Near Surface Geophysics

Yohaney Gomez Galarza Chair

Johannes Wendebourg Vice-Chair

Lucy Slater Immediate Past Chair

Wiebke Athmer Member

Alireza Malehmir Editor-in-Chief Geophysical Prospecting

Adeline Parent Member

Jonathan Redfern Editor-in-Chief Petroleum Geoscience

Xavier Troussaut EAGE Observer at SPE-OGRC

Robert Tugume Member

Timothy Tylor-Jones Committee Member

Anke Wendt Member

Martin Widmaier Technical Programme Officer

Carla Martín-Clavé Chair

Giovanni Sosio Vice-Chair

SUBSCRIPTIONS

First Break is published monthly. It is free to EAGE members. The membership fee of EAGE is € 80.00 a year including First Break, EarthDoc (EAGE’s geoscience database), Learning Geoscience (EAGE’s Education website) and online access to a scientific journal.

Companies can subscribe to First Break via an institutional subscription. Every subscription includes a monthly hard copy and online access to the full First Break archive for the requested number of online users.

Orders for current subscriptions and back issues should be sent to First Break B.V., Journal Subscriptions, Kosterijland 48, 3981 AJ Bunnik, The Netherlands. Tel: +31 (0)88 9955055, E-mail: subscriptions@eage.org, www.firstbreak.org.

First Break is published by First Break B.V., The Netherlands. However, responsibility for the opinions given and the statements made rests with the authors.

COPYRIGHT & PHOTOCOPYING © 2025 EAGE

All rights reserved. First Break or any part thereof may not be reproduced, stored in a retrieval system, or transcribed in any form or by any means, electronically or mechanically, including photocopying and recording, without the prior written permission of the publisher.

The publisher’s policy is to use acid-free permanent paper (TCF), to the draft standard ISO/DIS/9706, made from sustainable forests using chlorine-free pulp (Nordic-Swan standard).

The EAGE Annual 2025 is set to deliver an exceptional lineup of short courses designed to equip geoscientists and energy professionals with the latest insights and practical skills. The courses cover a diverse range of topics, from seismic processing to hydrogen storage and public engagement, offering participants the opportunity to learn from world-renowned experts.

Compressive sensing, explained and challenged — Sunday, 1 June 2025

Jan de Bruin (JdB VitalSeis) takes an objective look at the promises and limitations of compressive sensing (CS). Participants will gain a grounded understanding of what works, what doesn’t, and how to make informed decisions about implementing CS in practical scenarios. Attendees will earn five CPD points.

Reservoir engineering for hydrogen storage in subsurface porous media — Sunday, 1 June 2025

Gang Wang (Heriot-Watt University) focuses on hydrogen storage in subsurface porous media, exploring the hydrogen value chain, policy, and economic considerations, equipping attendees with insights into one of the key pillars of the energy

transition. Participants will earn five CPD points.

A guided tour of seismic processing of multiples; concepts, applications, trends — Monday, 2 June 2025

Clément Kostov provides an in-depth overview of seismic data processing related to multiples, including adaptive subtraction methods, case studies, and emerging trends. Attendees will earn five CPD points.

Geoscience communication and public engagement — Monday, 2 June 2025

Iain Stewart (University of Plymouth/ Royal Scientific Society of Jordan) offers hands-on experience in crafting impactful messages to bridge the gap between

science and public understanding. Participants will earn five CPD points.

State of the art in full waveform inversion (FWI) — Friday, 6 June 2025

Ian F. Jones explores the principles, methodologies, and applications of FWI, including model estimation, reflectivity generation, and pre-stack attribute analysis, enriched with real-world data examples. Attendees will earn five CPD points.

Don’t miss the chance to enhance your expertise, gain valuable CPD points, and connect with industry leaders. Register now and be part of the future of geoscience and engineering at EAGE Annual 2025!

Time to prepare for the Fifth EAGE Digitalization Conference and Exhibition next month on 24-26 March 2025, in Edinburgh, Scotland featuring a major strategic programme of panel discussions exploring three critical themes: the accuracy and value of reliable predictions, the dynamics of innovation cycles in response to shifting priorities, and the integral role of data, technology, and standardisation in boosting predictive capabilities and enhancing investment decisions. Here’s what each day has in store:

Has digital technology made our business more reliable and predictable?

Exploration of how digital advancements are shaping predictions in the oil and gas sector, assessing the impact on investment decisions and forecasting accuracy.

How does the industry demonstrate value from their digital tools?

A review of the past decade’s increase in digital investment, exploring how it has tangibly benefited business efficiency, revenue, and overall success.

How does the energy sector invest wisely in the digital technology stack?

Resource allocation across the technological spectrum will be discussed.

AI value creation: Where are we today and where are we heading?

Current achievements and the future potential of AI in the oil and gas sector will be topic highlight.

What are we learning as we go through the GenAI hype cycle?

Analysis of the evolution of GenAI within the industry, its practical challenges, and the potential for new business models driven by AI.

AI accelerating exploration

Focus on the integration of AI in exploration processes, evaluating its successes, limitations, and areas of most significant potential.

Keeping good ideas moving:

Managing a digital vision through the good times, and the bad

Strategies for maintaining a long-term digital vision, managing through economic cycles and technological changes.

Digital for new energies (faster and cheaper)

How digital innovations are making renewable energy more efficient and reliable, discussing impacts on sustainability and business models.

How can industry realise the benefits of OSDU, and is it guaranteed to succeed?

The benefits and challenges of adopting OSDU standards to enhance data interoperability and analytics in the industry.

Balancing exciting possibilities vs realistic implementations

Use of open source and low-code platforms, focusing on balancing innovation with compliance, security, and business alignment.

How to generate the pull?

Increasing readiness for the next technology

Strategies for improving user engagement with new digital tools and methods, and managing the transition effectively.

The dilemma of the technology manager — how do we balance cloud, on-premise solutions given the different perspectives?

Strategic decisions between using cloud services versus on-premise solutions, tailored to enhance organisational efficiency and cost-effectiveness.

Each session in the strategic programme is designed to prompt in-depth discussion and exchange among experts, aiming to enrich understanding of how digitalization influences the future of energy.

Should you wish to engage in these crucial conversations, reach out to us at europe@eage.org for opportunities to speak. To enjoy discounted registration rates, make sure to sign up at www.eagedigital.org by 10 February.

DUG Elastic MP-FWI Imaging solves for reflectivity, Vp, Vs, P-impedance, S-impedance and density. It delivers not only another step change in imaging quality, but also elastic rock properties for quantitative interpretation and prestack amplitude analysis — directly from field-data input. A complete replacement for traditional processing and imaging workflows - no longer a stretch!

info@dug.com | dug.com/fwi

13-14

EAGE London Local Chapter community met in September for a lecture given by Kunpeng (KP) Liao from Viridien, an expert in the emerging technology of distributed acoustic sensing (DAS) technology.

He showcased how fibre optic cables have the potential to provide 4D reservoir monitoring and overburden

cessing and interpretation has attracted significant attention in recent years for understanding well conditions and for continuous monitoring. Application of DAS for time-lapse until now lacked robust field testing, did not have confirmation with conventional seismic data or was based only on synthetic testing or modelling.

by the Ocean Bottom Node (OBN) seismic data acquired in the area by permanent reservoir monitoring (PRM) system and conveniently used in this case for benchmarking.

Liao outlined the capability of 3D DAS data for extensive overburden imaging using the down-going wavefield, supported with high quali-

imaging, and with clear learnings about future affordable monitoring technology for Carbon Capture and Storage (CCS) for the energy transition.

Acquiring low-cost seismic data using DAS, coupled with data streaming solutions and advanced imaging algorithms allowing for real-time pro-

In his lecture, Liao showed probably the first proven example of real 4D signals recorded by DAS field data. He explained to the audience the nature of DAS-VSP 4D acquisition, the 4D processing challenges and ultimately showed a comparison of the DAS 4D signals with those obtained

ty data examples. This is another key element for monitoring in CCS.

The presentation was followed by an animated Q&A session exchanging further ideas and expertise in the utilisation of DAS and future developments in time-lapse monitoring.

DIEGO ROVETTA

EAGE Membership & Cooperation Officer

We are incredibly proud of the outreach carried out by our Local and Student Chapters worldwide, connecting new members every day. To further energize their work, we have decided to challenge them into a competition called EAGE Champions . Running through March, this friendly contest encourages Chapters to grow by engaging the community in their area, and I am looking forward to congratulating the four champions”. Your network. Your strength. Being part of EAGE means access to a global community that supports your growth and success. BE PART OF SOMETHING BIGGER STUDENTS CAN APPLY FOR THEIR FIRST YEAR OF MEMBERSHIP FOR FREE (RE)ACTIVATE YOUR MEMBERSHIP TODAY!

LOCAL CHAPTERS 29 STUDENT CHAPTERS 45

EAGE’s Symposium and Exhibition on Geosciences for New Energies in America was held at Centro Historico in Mexico City on 3-5 December, 2024 addressing geothermal energy, CCS, water management (with a focus on water footprint), and the pivotal role of mineral exploration in driving the energy transition. These are some impressions from a student perspective.

This was an exceptional gathering of industry leaders, professionals, researchers and companies at the forefront of near surface geoscience and mineral exploration in Latin America. It provided an unparalleled opportunity to engage directly with the latest advancements and applications in the rapidly evolving fields relevant to energy transition along with reflections on water resource management.

The participation of renowned companies such as Geotem, GEODEVICE, STRYDE, and Mujeres WIM, along with organisations like the Asociación Geotermica Mexicana and AGEOCOL, highlighted the critical role that industry plays in advancing these sectors.

Some key takeaways

Located in the Pacific Ring of Fire, Mexico possesses significant geothermal resources, including notable fields such as Los Azufres, Los Humeros, and Cerro Prieto. However, the development of geothermal energy has been slow, primarily due to the absence of a specific legal framework. Although the 2014 Geothermal Energy Law was a step forward, it remains insufficient in fully addressing the sector’s needs. As a result, geothermal projects are often subject to broader regulations meant for mining or hydrocarbons, leading to bureaucratic challenges that hinder investment. The symposium highlighted the necessity of creating a tailored legal framework and introducing financial incentives to foster the growth of the geothermal sector in Mexico.

Discussions covered detecting geological structures in the geothermal fields of the region, geothermal assessment of the Mexican central intraplate volcanism province using neural networks, and an on-going project to create a preliminary heat generation map of Mexico.

Recognising the challenges facing geothermal implementation, solutions like vertical seismic profiling for reducing risk in large-scale geothermal urban applications and different exploration practices to promote social acceptance of geothermal projects in Mexico were discussed. The environmental impact implications of converting abandoned oil wells in Southeast Mexico was mentioned along with potential lithium extraction in the Bolivian salt flats. approaches to achieve more precise and effective results.

It was useful to discuss geothermal applications beyond electricity generation, such as direct heating and industrial uses,

broadening its scope as a strategic energy resource. Regarding mineral exploration, geophysics applies to submarine environments opening new frontiers in resource utilisation in hard-to-reach areas. This approach highlighted the importance of technological innovation and the development of specific methodologies for these complex settings.

Collaboration between the oil and mining industries also emerged as an essential component to enhance exploration and the development of strategic minerals. This point provided inspiration to explore how these areas can align with our academic and professional goals, reinforcing the importance of geosciences in transitioning to a more sustainable future.

The symposium offered a unique platform to foster collaboration between students, industry leaders, and organisations like EAGE serving as a bridge between academic knowledge with realworld applications, ensuring that the next generation of geoscientists is well prepared to address current and future challenges.

With the support of the EAGE Student Fund, 11 students from the EAGE Student Chapter UNAM and IPN Mexico had the opportunity to attend this event. They engaged directly with industry leaders and explored the latest advancements, research, and initiatives in the field.

Basin Research (BR) is an international journal which aims to publish original, high impact research papers on sedimentary basin systems. A new edition (Volume 37, Issue 1) will be published in February.

Geophysical Prospecting (GP) publishes primary research on the science of geophysics as it applies to the exploration, evaluation and extraction of earth resources. Drawing heavily on contributions from researchers in the oil and mineral exploration industries, the journal has a very practical slant. A new edition (Volume 73, Issue 2) will be published in February.

Near Surface Geophysics (NSG) is an international journal for the publication of research and developments in geophysics applied to the near surface. The emphasis lies on shallow land and marine geophysical investigations addressing challenges in various geoscientific fields. A new edition (Volume 23, Issue 1) will be published in February, featuring 6 articles.

Petroleum Geoscience (PG) publishes a balanced mix of articles covering exploration, exploitation, appraisal, development and enhancement of sub-surface hydrocarbon resources and carbon repositories. A new edition (Volume 31, Issue 1) will be published in February.

Geoenergy (GE) Geoenergy is committed to publishing impactful research in subsurface geoscience, driving innovation and progress in the sustainable energy transition. A new edition (Volume 3, Issue 1) will be published in February.

As members of the Brazilian Universidade Federal Fluminense (UFF) Student Chapter, we are thrilled to share the positive impact we have received since introducing our custom designed polo shirts thanks to generous support from the Association. They have already given our Chapter and EAGE greater visibility, unity, and professional engagement.

The design reflects both our values and the global significance of the EAGE brand. Wearing these shirts, we believe, will strengthen our sense of belonging and deepen our connection to the broader geoscience community, inspiring pride among our members. So, to EAGE we offer our grateful thanks.

Below is a photo of members wearing our T-shirts. Maybe it can inspire other student chapters to work on their image!

Listen up all EAGE Student Chapters. It is time to renew your memberships and be sure to enter a team for our Online GeoQuiz Challenge 2025! Renewing your Student Chapter for 2025 means you can strengthen your Chapter’s presence within EAGE’s global community, but have the chance to compete in the Online GeoQuiz, our popular knowledge-based competition that tests your geoscience and engineer expertise and teamwork.

And here’s the exciting part: the top three winning Student Chapters with the highest scores in the Online GeoQuiz will receive three complimentary registrations for three members to attend the EAGE Annual Conference & Exhibition, taking place in Toulouse, France, from 2-5 June

2025. This is more than just a competition, winning is an opportunity to represent your Chapter on a global stage and gain invaluable exposure to the heart of the geoscience industry.

All you have to do is renew your Student Chapter for 2025, ensure your membership is active, and gear up to participate in the Online GeoQuiz. For more information on Chapter renewals and the Online GeoQuiz, please visit https://eage.org/students/establishyour-student-chapter/ or reach out to students@eage.org.

For more information

The undervalued process involved in surface logging technologies will be the subject of a workshop in Paris later this year for which there is a call for papers.

The Surface Logging Workshop: Advancing well construction in the energy transition takes place in the French capital on 12-14 November 2025 and is intended to gather energy professionals to discuss how subsurface logging is increasingly recognised as digitalisation, cross-disciplinary collaboration, and sustainability targets reshape the energy industry.

By providing real-time data that boosts operational safety, efficiency, and overall decision-making, surface logging plays a pivotal role in modern well construction. The workshop will demonstrate how integrating geological, petrophysical, and drilling data optimises well placement, reservoir and overburden evaluation, and hazard detection. Emphasis will also be placed on surface logging’s evolving relevance in unconventional resources, geothermal wells, and new gas exploration such as hydrogen and helium.

Participants will be able to explore how recent advances in surface logging tools and techniques have unlocked new applications, accelerating the technology’s broader adoption. Through presentations of cutting-edge innovations, solutions will be discussed that can improve subsurface understanding and well performance while mitigating operational risks.

Designed for geoscientists, drilling engineers, mud loggers, reservoir engineers, production engineers, and other well-planning professionals, the workshop should offer a rich blend of theory and practical skill-building.

For those interested in submitting a paper, an abstract showcasing your latest research or field experience in surface logging will be welcome. Please send in your submission by 15 June to be considered for a presentation slot at this innovative workshop.

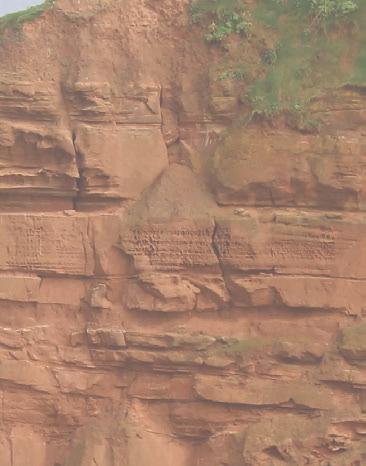

This year there is a chance to experience the extraordinary geology of the breathtaking Argana Valley, nestled in the western High Atlas, just 100 km southwest of Marrakech. The adventure will take you to the heart of the Triassic-Early Jurassic period, offering a rare opportunity to study one of the most exceptional geological wonders in Northwest Africa: the Argana Basin.

This is all possible for those joining the First EAGE Atlantic Geoscience Resource Exploration and Development Symposium Atlantic Conjugate Margins and Their Global Significance In Our Energy Future, on 5-7 May in Marrakech, Morocco

Renowned for its remarkable outcrops, the Argana Basin presents a continuous and complete section of rock formations, unveiling the first tectonic and sedimentary developments of the Central Atlantic Margin. Stretching over 85 km with a width of up to 25 km, this vast rift basin tells a story of ancient transitions — from non-marine to marine environments — etched into its layered rock formations.

Triassic rocks in the basin feature up to 2500 m of coarse to fine-grained red-brown clastic deposits, representing a rich tapestry of geological history. Recent studies have revealed the intricate interplay between tectonic activity and sedimentation, showcasing how shifting depositional conditions shaped the basin over millions of years.

The valley’s unique layout, divided into four sub-basins, makes it an unrivalled natural laboratory for understanding and modelling buried continental rift systems. From base to top, every formation unveils a piece of the puzzle, demonstrating how tectonic reactivations influenced facies distribution and evolution over time.

You could call the Argana Basin a geological masterpiece where history is written in stone. With unparalleled access to its formations, the field trip associated with the conference is perfect for geologists, researchers, and enthusiasts eager to deepen their understanding of rift basin development and the fascinating history of the Central Atlantic Margin.

For more information see Events at www.eage.org.

Our Advanced Seismic Solutions for Complex Reservoir Challenges workshop in Kuala Lumpur on 29-30 April 2025 is being organised to explore the implications of the shift in seismic applications from almost exclusively focusing on fossil fuel exploration to a broader base, including renewable energy and advanced subsurface characterisation for carbon capture and storage.

Addressing the complex challenges in reservoir environments, the technical committee of the EAGE Workshop invites geoscientists, engineers, and industry professionals to come together and explore

these new trends in seismic technology. It is hope that latest and potential future practices in seismic data acquisition and processing will be presented to highlight both legacy and new seismic data developments in land and marine environments.

This year’s workshop promises a comprehensive programme designed to address the latest trends and technologies in seismic solutions. Topics include innovations such as compressive sensing, simultaneous source acquisition, distributed acoustic sensors (DAS), cable-free nodal systems, and marine vibrators as well as machine

learning applications for automated seismic data analysis.

Register now to take the opportunity to connect with leading industry professionals from PETRONAS, PTTEP, SLB, Shearwater GeoServices, TGS, ConocoPhillips, ExxonMobil, Aramco, Shell, and others. Visit the event website using the QR code for more details and secure your spot today.

For more information

The EAGE Student Fund supports student activities that help students bridge the gap between university and professional environments. This is only possible with the support from the EAGE community. If you want to support the next generation of geoscientists and engineers, go to donate.eagestudentfund.org or simply scan the QR code. Many thanks for your donation in advance!

Bjarte Bruheim , best known as a founder of Petroleum GeoServices, is a rare serial entrepreneur in geoscience oil and gas business, not to mention shale. He has been an investor or somehow involved in virtually every significant marine seismic and related technology over four decades, starting operating two seismic vessels with Geco, then PGS 3D data harvesting with Ramform vessels, offshore electromagnetics (EMGS), and ocean bottom nodes (AGS). His first business was a blues/rock band.

Upbringing

I grew up in Foerde, rapidly growing small town on the west coast of Norway. My father was heading up the largest retail business in town and my classmates were from all parts of Norway. In my early teens I was into soccer and track and field, but then music took over. Aged 16, I started my first business, a rock and roll band inspired by American and British music. My first assets were a Gibson SG Standard guitar and a Fender Quad Rewerb amplifier. Playing lead guitar in three different bands, three days a week all summer paid for most of my education.

Academic route

My interest in electronic instruments made my early career choice easy and I obtained my first degree in electronics from Gjoevik engineering school. During those study years, I kind of had a full-time job in the band. For the other band members music was their main income, so we travelled a lot and played 3-5 days a week all summer. I had to plan ahead and be very disciplined when it came to my studies. This paid off and I got the best grades ever and was accepted at NTNU in Trondheim. I took electronics, computer science and physics. My diploma was in fibre-optic sensors. Before starting at NTNU I had to my obligatory military duty in the Norwegian Air Force. I was trained as a radar operator and spent most of the time close to the Russian border.

My first five years at Geco were spent as operations manager for Europe, Africa and

Far East and the next five as VP of operations for North and South America. The professional leadership training I received has helped me a lot during my career. The CEO Anders Farestveit was a hands-on, dynamic leader energising us all. I have absolutely learned what you can and cannot do. During my time the first dual streamer operation was introduced by Geco and paved the way for commercialisation of 3D seismic.

I left Geco after 10 years following the takeover by Schlumberger. For me, the company had become top heavy and slow. I had been arguing strongly for development of technology to expand the multi-client concept with more than two streamers (MC3D). After a year of politics and endless meetings I left and started Precision Seismic with a clear MC 3D strategy. We merged with the 2D MC company Nopec and the navigation company Geoteam, and Petroleum GeoServices (PGS) was born. The team I put together had the right spirit and an endless drive for efficiency and performance. After five years we were building the Ramform purpose-built seismic vessels, six during my time. PGS was to become the first Norwegian technology company to be listed on Nasdaq and NYSE.

As a part of PGS multi-client strategy, we exchanged 3D library data for equity in start-up oil and gas companies. Our success with investment in Houston-based Spinnaker Exploration drilling wells based

on 3D seismic led me to be challenged to find a direct hydrocarbon indicator. Fast forward some years later, I teamed up with Warburg Pincus (who had been PGS financial partner in Spinnaker) to buy EMGS, the pioneering offshore EM company from Statoil (as was). I worked as executive chairman for ten years. Successful in derisking some drilling targets, the company was hampered in the early days by over-optimism, commercial competition and patent disputes.

At PGS I gained my first experience with OBN cables and the potential for the 4D seismic market. In 2016 I was involved in founding an ‘asset light’ seabed seismic services company Axxis Geo Solutions (AGS) and in 2019-2020 we acquired one of the most advanced seismic data sets ever in the North Sea. We used 8000 nodes on the seafloor and shooting data every two seconds for eight months using six sources acquiring a data set of 2.5 petabytes. Unfortunately, the company’s acquisition services closed down because of the Covid pandemic.

We have started a new company Velocitas to operate and continue improve technology, efficiency and lower cost of 3D/4D data sets. We did our first MC proof of concept survey last summer.

I enjoy living and working out of Houston, but spend some time during the summer in Norway to get away from the heat.

BY ANDREW M c BARNET

Mark Zuckberger, CEO of Meta and founder of Facebook, was no doubt sucking up to incoming President Trump when announcing the end of most fact-checking of his organisation’s social media content. In future it will be moderated by everyday users through so-called Community Notes, a system popularised on Elon Musk’s X (previously Twitter).

The decision has generated plenty of hand-wringing in certain quarters because it is regarded as opening the floodgates to more misinformation. That really is a moot point given how many different uncensored social media platforms, messaging apps, podcasts, influencers, etc are out there. You could argue that Zuckerburg is upholding the principle of free speech in a democracy, however disconcerting not to mention threatening this may be.

way suspend disbelief, that news is factual. But that of course begs the question of what constitutes a fact. What is History by E.H Carr (1961) provides the classic takedown of relying on historical facts as the whole truth. Rather they are the arbitrary selection by historians influenced by the period they lived in.

‘Breakdown of the traditional way information is mediated’

What we are really witnessing is the breakdown of the traditional way information is mediated for community consumption. The implications reach into every corner of society, including the siloed worlds of energy and geoscience. It boils down to a question of trust. We may feel comfortable that sufficient knowledge about the activities involving the EAGE is accessible and an accurate reflection of what is going on. However, as we all know, the public perception of the oil and gas industry is increasingly unfavourable as concerns over climate change escalate. Uninformed social media chatter, lies, conspiracy theories, etc simply add fuel to the fire, so to speak. This makes it particularly challenging for professional societies like EAGE to promote understanding of its mission to promote a society founded on sustainable energy deploying the best of science and technology.

Although not often acknowledged enough, everyone has to base their opinions, world view, etc on partial information, even scientific knowledge only represents the best evidence available and is always open to further research.

It is public discourse around our consumption of everyday news which is especially problematic. We assume, or in some

So it is with news. There are countless academic studies describing how news is a social construction. For just how obvious this is, go no further than the observation by comedian/actor Jerry Seinfeld: ‘It’s amazing that the amount of news that happens in the world every day always just exactly fits the newspaper.’ It gets more sinister. One of the first press magnates William Randolph Hearst (model for Orson Welles in the classic movie Citizen Kane) said ‘News is something somebody doesn’t want printed; all else is advertising’. But most damning is the shadow cast over any thought of ethical reporting by Rupert Murdoch, founder of News Corporation and Fox News. ‘Journalism’, he has said with stunning hypocrisy, ‘is a public trust, a responsibility to report the truth with accuracy and fairness. Journalism is also a business, and like any business, it has to attract customers.’

The business imperative is not the only factor distorting news coverage in traditional media (TV, radio and print) although there is no getting away from it being a ‘manufactured’ product. If you start with what makes news, you soon get the idea of the limitations. A popular summary adapted from Shoemaker et al., 1987 recognises these mainly self-explanatory factors in choice of news, e.g., timeliness, proximity (closer means more relevance), importance, impact, or consequence, human interest, negativity (conflict/ controversy sells better), prominence (focus on public figures), and novelty, oddity or the unusual.

The type of media matters. For example, TV news is incredibly limited in the number of items it can cover in a news cast compared with radio and especially print. An overriding criteria is having film. With the exception of live events, such

as Californian wildfires, almost all TV coverage is old news, post happening. There is also a bias towards how accessible any potential item is for camera teams, usually based around big cities. How many times do you hear TV anchors introduce some hapless correspondent reporting ‘live’on location’, hours after the actual event simply to show some film. In passing, this is why local TV media love having animal/pet stories, entertaining and easily planned for a photo shoot/interview.

The decision-making process is of course another whole issue in which journalists are only one consideration. Not just news but all editorial content in conventional media is constrained. Articles have to meet the varying interests of the owner/ stakeholders, advertisers, and editors, thereby immediately introducing bias into the coverage which journalists have to go along with often requiring an element of self-censorship.

‘Rise of social media may provide an unexpected opportunity’

As a product, media coverage is also subject to business principles. Traditional newspapers are in serious if not terminal decline through lack of advertising and falling circulations, and the support for television programming is ebbing in favour of streaming. This affects the depth of news reporting more than many probably realise, and draws attention to one of the major vunerabilities of all news presentation that is rarely addressed, namely the source of news.

You can definitely forget the deep-throat style reporting of the Watergate scandal by Woodward and Bernstein. Media investigations these days are extremely rare: they are costly in resources and staff time, also vulnerable to litigation once published making such exercises an unwarranted risk. A much cheaper option is just to wait and see what comes in, and then have journalists follow up. And this is where reader beware should kick in: just ask yourself how news organisations can start the day with a news agenda already, i.e., anticipating what’s going to happen and planning how it can be reported, no crystal ball needed.

The answer is that much of the material generated to fill news quotients, be it TV, radio or print, comes in without any inquiry needed. If you take news routinely reported such as activity in government, business/financial, entertainment and indeed science/ technology worlds, you can be confident that the first approach to the media was via an annoucement, press release, briefing, direct contact with a journalist, etc. The common denominator is that the source has provided the information and arguably has control of the ‘facts’. Of course the obvious exception should be reporting on an ongoing big event such as a war. But in fact we only get the filtered information that the warring participants allow. In any sensitive incident such as industrial accidents, oil spills, etc, an immediate response for companies involved is to set a PR crisis-handling operation. Furthermore, when reporting journalists tend to provide the view from the top, as more authoritative, i.e., confirmation from a CEO is valued over any old employee, unless it is a rare whistleblower!

This is a topic where Crosstalk can input some personal academic research to verify how sources can influence the media in a manner that has certainly not changed.. A key finding from a study published in the Scottish Journal of Sociology (Nov 1978) entitled ‘The North Sea Oil Story: Government, the oil industry and the press’ by Andrew McBarnet (based on research funded by the UK Social Science Research Council) was that in 1976 at the height of the excitement over North Sea oil, ‘only 14 items received anything like comprehensive coverage in the UK quality and popular national papers … The most striking point about these news columns is that they are all stories which depend on oil industry or government sources making announcements to the press. The press has investigated nothing. In a whole year less than 30 stories were even covered by the four quality papers and of those reports it is difficult to find one which was not prompted by a formal announcement, press conference or some other pre-arranged highly formal, controlled event with the source of newsmaking literally in command of the facts.’

For any organisation such as the EAGE with a credible story to tell, for example, about the value of geoscience in the energy transition era, this media landscape has become even more daunting. It is not a story easy to package to meet conventional media’s news criteria as described here. Plying specialist publications and oil industry news aggregators with information still presents an option. But this is not the audience that the Association would ideally like to tap if it is to accomplish the task of attracting new generations into the geosciences.

Ironically the rise of social media may provide an unexpected opportunity. Pew Research Centre reporting in 2023 showed that in the period 14 April to 4 May 2023, 95% of teens reported using YouTube, 67% TikTok, 62% Instagram 58% Snapchat and only 32% Facebook. The figures in themselves may well have changed and reflect only the US population, but it is known that 59% of EU individuals use social networks, and that in India and China the vast majority of the population engages in some way with social media.

Given this trend, the potential audience of a younger generation may be there for EAGE and others to reach. There are no serious protocols to follow, and no entrenched gatekeepers. Optimising use of social media to get a message across obviously needs research and resources to maintain, and outreach has not in the past been a priority for EAGE or other professional societies. One can imagine the major challenges will be how to stand out from the bewildering number of social media offerings on so many platforms. The biggest followings for podcasts, for example, depend on charismatic individuals. For EAGE to seriously compete outside the cocoon of the geoscience network may require the emergence of an as yet unidentified Mr Geoscience figure!

Views expressed in Crosstalk are solely those of the author, who can be contacted at andrew@andrewmcbarnet.com.

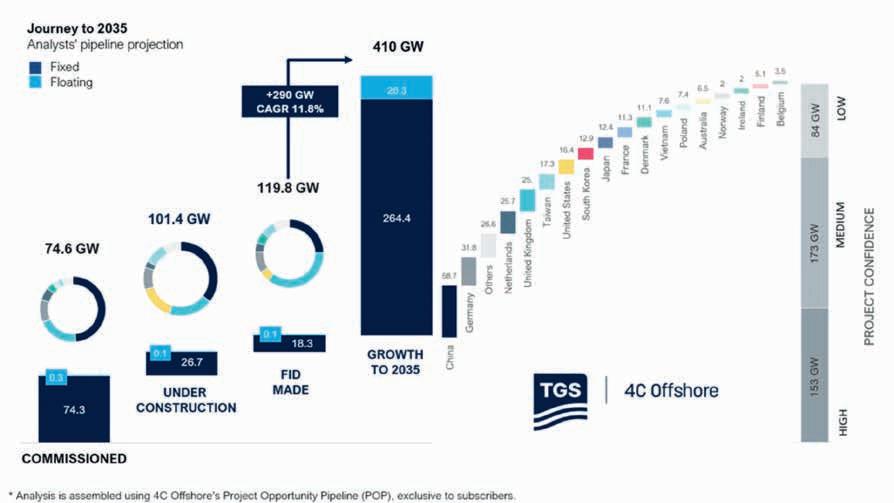

The global offshore wind energy sector set new records for permitting and securing offtake contracts in 2024, underscoring its resilience and adaptability, says TGS’ Q4 2024 Global Market Overview. The report claimed that in 2025 even higher levels of capacity will reach financial close and be secured under offtake agreements.

The fourth-quarter 2024 report highlights how consequential elections and auctions in 2024 have triggered significant changes, with government support gradually catching up with industry cost pressures. Governments globally aimed to lease about 72 GW of new sites this year, with 65.2 GW awarded. However, only 16.6 GW of approximately 30 GW of targeted offtake capacity was secured.

‘While recent auctions have seen mixed success — particularly in Denmark, where an auction received no bids — global growth prospects remain promising, said TGS.

New global key forecasts show that 410 GW of offshore wind projects will start construction by the end of 2035, with the Asia-Pacific market share set to expand significantly, from 7% today to about 16%. Retaining its leadership position, Europe’s share is expected to grow from 44% now to 49% by 2035, leveraging its long development experience to weather supply chain disruptions.

Additionally, China’s role remains dominant, with 26% of global capacity starting

offshore construction by the middle of the next decade.

With this longer-term vision, the UK and US are predicted to be outside the top three markets globally — a consequence of supply chain and policy uncertainty. By contrast, emerging markets outside the top 10 are projected to hold more than 17% of global offshore wind capacity by 2035, a significant increase from 1.1% today.

In the fourth quarter of 2024, Romania and Turkey each published an offshore wind roadmap, Canada took a crucial legislative step with Bill C-49, and Brazil and Colombia advanced their regulatory frameworks. ‘This rapid evolution signals a more diverse and geographically dis-

persed market that extends well beyond traditional industry hubs,’ said TGS.

Overall, developments in 2024 provided a positive long-term direction, said TGS. ‘Governments are raising strike prices, developers are refining strategies to manage risks, and the global market is evolving into a more diverse, geographically dispersed ecosystem. The result is a comprehensive view of an industry at a pivotal juncture, poised to adapt and grow as it heads toward 2035,’ said TGS.

Jamie Bernthal-Hooker, research team lead for 4C Offshore market intelligence at TGS, said: ‘Recent news from around the world, such as Brazil progressing its regulatory framework, shows that offshore

wind still has momentum, even in emerging markets, despite hurdles and uncertainties in the global market. Our forecast reflects an industry that has faced several challenges but with rapid and significant advancements to look forward to in the short and long terms.’

The Q4 2024 Global Market Overview Report covers more than 30 markets

worldwide, while TGS has extended its global outlook for offshore wind to 2035.

Meanwhile, TGS has launched a CO2 Storage Assessment package for the Appalachian Basin in the US, covering 85 million acres. The study provides insights into geological structures, storage capacity and overall suitability of carbon capture and storage (CCS) projects in the region.

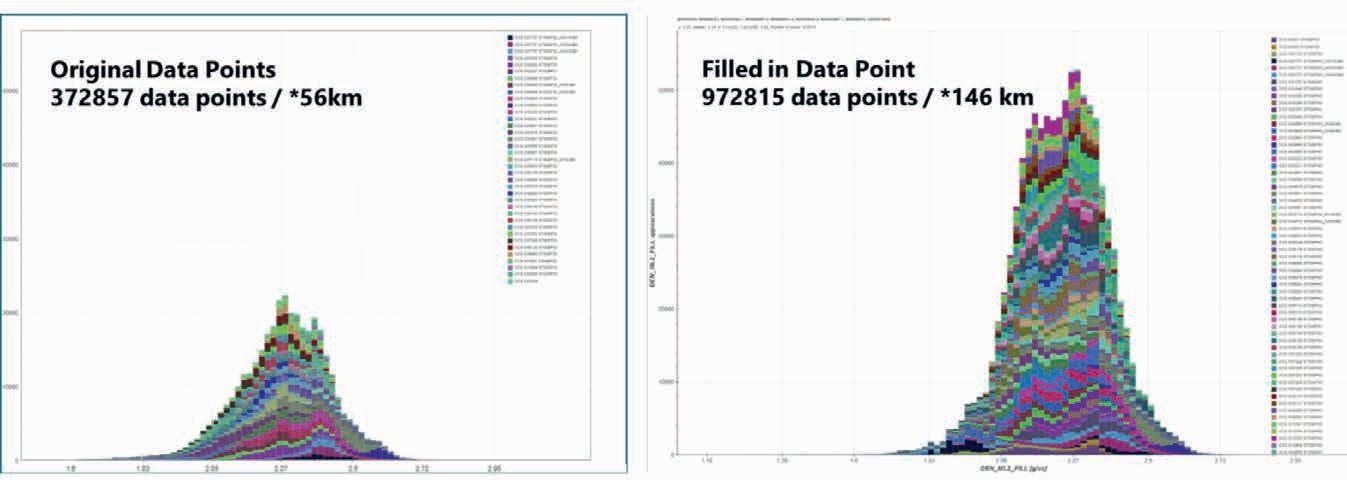

Utilising data from 3400 wells and 29 stratigraphic surfaces, the assessment evaluates reservoir quality, sealing integrity and other geological factors critical to the success of CCS projects. Advanced methodologies such as stratigraphic framework, core-calibrated petrophysical evaluations and log curve interpretations were applied to generate these findings.

Shearwater Geoservices has started the second season of acquisition for the Pelotas Basin multi-client 3D seismic survey offshore Brazil, conducted in partnership with Searcher Seismic.

The sixth multi-client 3D project for the joint venture will utilise the vessel SW Empress to expand the Pelotas survey area beyond 10,000 km2. ‘By acquiring detailed 3D seismic data, the project will provide explorers with critical insights into the geological structure of the Pelotas Basin, helping to de-risk exploration activities, enable faster decision-making, and pave the way for successful hydrocarbon discoveries,’ said Shearwater in a statement.

‘The Pelotas Basin shares a conjugate margin pairing with the Orange Basin

which is increasingly recognised as an emerging super basin of global significance and is a basin where Searcher and Shearwater lead the way with a significant data library,’ said Searcher.

The survey covers large areas of open acreage expected to be available in the 5th cycle of the Brazilian Open Acreage Release, and acreage awarded in the 4th cycle last year. ‘The survey area holds significant promise due to its geological connection with the Orange Basin in Namibia and South Africa, a globally emerging super basin of great importance. This relationship between the Pelotas Basin and the Orange Basin offers unique opportunities to leverage knowledge and discoveries across the Atlantic Conjugate Margin, enhancing exploration strategies

and maximising resource potential,’ said Shearwater.

Irene Waage Basili, CEO of Shearwater, added: ‘Shearwater has already acquired over 26,000 km2 of multi-client data, further establishing our position in the multi-client space. Shearwater and Searcher Seismic have built a highly successful partnership in one of the world’s most prosperous frontier areas and the new season’s work is a great opportunity to extend this success.’

Shearwater has also received a work order from Petrobras to start one of the two 4D projects in Brazil covering the Jubarte and Tartaruga Verde fields in the Campos Basin area. The eight-month com mitment will utilise Shearwater’s vessel Oceanic Vega.

PetroStrat has completed acquisition of Viridien’s Geology Group, formerly known as Robertson Research.

As part of the deal, 69 people and laboratory facilities will be transferred from Viridien to PetroStrat’s headquarters in Conwy, UK.

PetroStrat specialises in biostratigraphy, reservoir geology, integrated multi-client reports and laboratory services and will now add petrophysics, geochemistry, chemostratigraphy, petroleum systems analysis, lead and prospect generation, seismic interpretation and structural geology to its offer.

This extended range of services and geological expertise will enable PetroStrat to serve both traditional oil and gas projects and energy transition initiatives, such as carbon capture and sequestration (CCS) and geothermal energy, said Paul Cornick, co-founder and managing director at PetroStrat. He added: ‘The opportunity to blend the joint capabilities of two of the leading providers of geological services in the energy sector, creating a business of nearly 200 employees and with a truly global market reach is extremely exciting.’

John Gregory, co-founder and business development director at PetroStrat

said: ‘Other benefits of this acquisition will include new offices in Abu Dhabi and Crawley. PetroStrat’s main markets of Northwest Europe, North America and Gulf of Mexico, Africa, Middle East and Asia will be further enhanced with additional significant contracts covering the Middle East and Africa.’

Peter Whiting, EVP, Geoscience, Viridien, said: ‘It fits well with our strategic direction, and PetroStrat, with its recognised strength worldwide in geological services, provides an excellent home for the people and the best platform for the further growth of the business.’

PXGEO has signed a deal with Aker BP to deploy its MantaRay ocean bottom node technology on the Norwegian Continental Shelf.

Aker BP hailed the seismic tool as ‘an innovative seismic acquisition technology that will enhance the quality and efficiency of its subsurface exploration and development activities’. It added that recent results in seismic imaging and seismic velocity model-building obtained from OBN data on the Norwegian Continental Shelf

demonstrated a quality potential superior to other seismic acquisition methods.

PXGEO operates MantaRay, a hovering autonomous underwater vehicle engineered by Manta to deploy and recover ocean bottom nodes with minimal impact to the ocean floor. ‘The MantaRay technology has the potential to reduce a seismic survey operation time significantly,’ said Aker BP in a statement. ‘This will enable Aker BP to capture more detailed and accurate images of the subsurface, reduce operational risks and environmental footprint, and optimise its exploration and development activities in the Norwegian Continental Shelf (NCS).’

Per Øyvind Seljebotn, senior vice-president exploration and reservoir development at Aker BP, said: ‘We are very excited to partner with PXGEO to deploy this innovative seismic acquisition technology on the NCS. The MantaRay technology will enable us to acquire high-quality seismic data in a cost-effective and environmentally responsible way, and support our ambition to deliver profitable and sustainable growth on the NCS.’

Sercel has signed a contract with a marine geophysical company for the deployment of its Tuned Pulse Source (TPS), a low-frequency broadband marine seismic source. The geophysical company is deploying the TPS solution to acquire an ultralong-offset ocean-bottom node (OBN) survey in the Gulf of Mexico, which started in November.

‘TPS is Sercel’s response to the industry’s need for an enhanced low-frequency marine seismic source that produces a broader bandwidth and has a reduced environmental impact,’ said Sercel. ‘This solution delivers exceptionally low-frequency content and the highest amplitude signals at frequencies below 3 Hz. These are crucial for building accurate velocity models with the latest elastic full-waveform

technology, when targeting complex geologies and deep targets with long offsets.’

Jerome Denigot, CEO, Sercel said: ‘During its deployment on multiple OBN projects over the last two years, TPS has clearly demonstrated greater operational efficiency and reduced environmental impact on marine life compared to other low-frequency sources, while delivering the most accurate data.’

The US Bureau of Ocean Energy Management (BOEM) has published a call for information and nominations for wind energy leasing off the coast of the US Pacific Territory of Guam that will help the Pacific island generate 50% of its electricity from renewable energy sources by 2035 and 100% by 2045.

Equinor’s Empire Wind 1 project in the US has secured more than $3 billion financing. Empire Wind 1 will power 500,000 New York homes and is expected to reach its commercial operation date in 2027.

The UK government has signed the first three hydrogen production contracts under Hydrogen Allocation Round 1 (HAR-1). The West Wales Hydrogen project is at a former oil refinery site in Milford Haven. The Cromarty project is near Invergordon in northeast Scotland and Whitelee is outside Glasgow.

Harbour Energy (40%), INEOS (40%) and Nordsfondon (20%) have have made a final investment decision for the Greensand Future carbon capture and storage (CCS) project in Denmark. The project will store carbon dioxide from Danish emitters in a depleted oil field under the Danish North Sea. The project, which aims to store CO2 in the INEOS-operated Nini field, will become the EU’s first operational CO2 storage facility. Greensand Future aims to capture and will initially store 400,000 tons of CO2 each year.

TotalEnergies and OQ Alternative Energy (OQAE) have signed agreements to develop 300 MW of renewable energy projects in Oman. The JV will deliver the North Solar, a 100 MW solar project in Saih Nihaydah in northern Oman; and Riyah-1 and Riyah-2, two 100 MW wind projects, in Amin and West Nimr fields in southern Oman. Construction will start in early 2025, and electricity production in late 2026. The solar and wind projects will generate more than 1.4 TWh of renewable electricity annually.

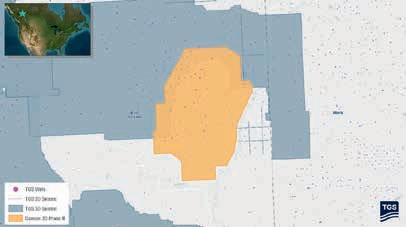

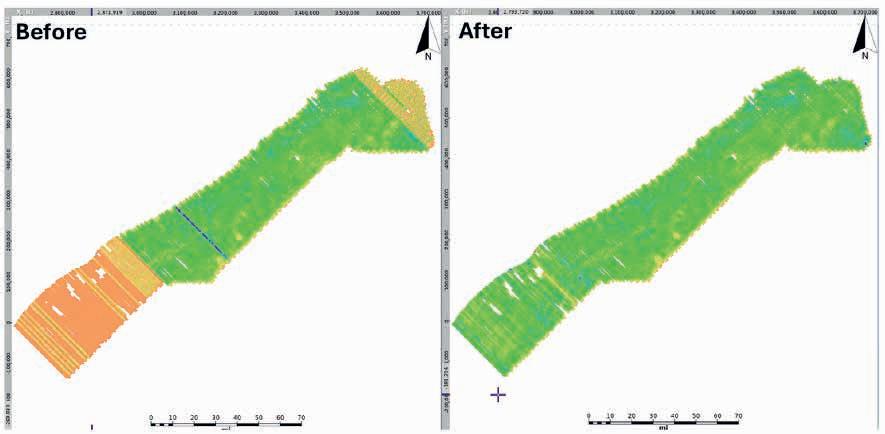

TGS has launched the Dawson Phase III 3D multi-client seismic survey in the Western Canadian Sedimentary Basin, spanning 141 km2

Field recording has just completed, with fast-track data expected in the first quarter of 2025 and final delivery in the second quarter of 2025. The project uses advanced imaging technologies, including multi-survey merging, harmonic noise reduction for slip sweep acquisition and OVT 5D interpretation, which enables precise subsurface imaging and valuable insights for operators developing Montney resources.

‘The Dawson Phase III 3D survey marks an important step as our first new multi-client seismic project in British Columbia since 2019,’ said Kristian Johansen, CEO of TGS. ‘Through the application of modern seismic imaging techniques and close collaboration with First Nations to minimise environmental impact, we continue our commitment to delivering data that supports informed

Map showing selected TGS subsurface data coverage in the British Columbia, Canada area, including the Dawson III 3D seismic survey and surrounding well data.

decision-making in one of Canada’s most active formations.’

The new survey will be merged with 121 km2 of seismic data from the existing Dawson Phase II 3D project. The Dawson Phase III 3D seismic survey will also be integrated with existing TGS’ data to offer an expanded dataset for enhanced subsurface understanding in the region. The project incorporates 291 wells and 191 LAS logs.

Rasmussengruppen has increased its ownership stake in Shearwater Geoservices to 88.8% of the shares outstanding. It has converted the interest-bearing convertible loan provided to Shearwater in 2021, which has accrued interest of $110 million and has been converted into 22,203 shares.

Rasmussengruppen has also acquired 30.850 shares in Shearwater from SLB. SLB has been a shareholder since 2018 when Shearwater acquired the marine seismic acquisition assets and operations

of SLB. After this transaction, SLB will hold a 2.5% equity share in Shearwater.

‘Both transactions demonstrate strong support of Shearwater and our strategy by the main owner. The conversion of debt to equity further contributes to strengthening our balance sheet and liquidity,’ said Andreas Hveding Aubert, the CFO of Shearwater.

Meanwhile, Shearwater has placed $300 million of bonds on the Euronext Oslo Børs.

Equinor has taken a final investment decision on two of the UK’s first carbon capture and storage (CCS) projects in Teesside, the Northern Endurance Partnership (NEP) and Net Zero Teesside Power (NZT Power) off the north-east coast of England.

‘This demonstrates how the industry, alongside the UK Government, has progressed a business model for new power supply and carbon capture, transport and storage services to decarbonise the most carbon intensive region in the UK,’ said Irene Rummelhoff, executive vice-president of marketing, midstream and processing at Equinor.

The project expects to commence construction from mid2025 with start-up in 2028. It includes a CO2 gathering network and onshore compression facilities as well as a 145 km offshore pipeline and subsea injection and monitoring facilities for the Endurance saline aquifer around 1000 m below the seabed. It could transport and store up to 4 million tonnes of captured carbon dioxide emissions per year for 25 years from three Teesside projects initially, rising to an average of up to 23 million tonnes by 2035 with future expansion of the East Coast Cluster.

The UK North Sea Transition Authority recently awarded its first ever carbon capture and storage permit to the Northern Endurance Partnership. The UK government has also awarded an economic licence to Net Zero North Sea Gas Storage, enabling

the joint venture of bp, Equinor and TotalEnergies to start installing infrastructure for the project that will include Teesside-based carbon capture projects NZT Power, H2Teesside and Teesside Hydrogen CO2 Capture.

The UK government recently announced £21.7 billion investment into carbon capture and storage projects. It also recently launched a Green Industrial Partnership with Norway. The two countries will identify gaps and challenges to the development of the North Sea as a hub for carbon storage and develop a bilateral agreement on cross-border transport of CO2 under the London Protocol.

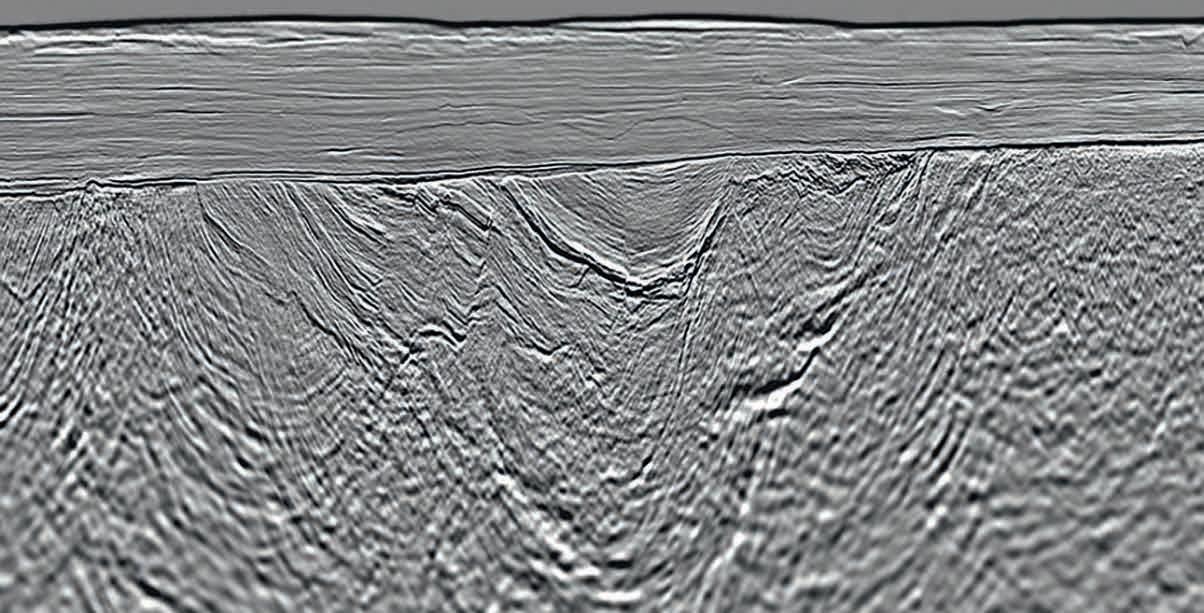

Viridien has completed the seismic imaging for the Selat Melaka multi-client 2D seismic programme, covering the offshore area of the Langkasuka Basin, Malaysia. The newly available final seismic dataset clearly indicates

the presence of a previously unseen Pre-Tertiary fold and thrust belt, extending offshore across the unexplored area, said Viridien.

The high-resolution long-offset dataset provides extensive seismic

coverage and significantly enhanced imaging over this promising area, said Viridien, whose subsurface imaging experts have applied the company’s latest imaging technologies, including full-waveform inversion and Q-tomography, for the first time in the Langkasuka Basin.

Dechun Lin, EVP, Earth Data, Viridien, said: ‘Our first multi-client project offshore Malaysia leverages over 40 years of experience processing seismic data from one of the country’s major basins. We are confident that this ultramodern data set will support the efforts of Petronas in promoting open acreages in Selat Melaka and spur exploration in this frontier area located near proven large discoveries.’

TGS and Viridien have delivered final processed data from the multi-client Sleipner ocean bottom node (OBN) project in the North Sea.

The Sleipner OBN survey, the second of two dense multi-client OBN surveys acquired in the North Sea in 2023, covers 1201 km2 in a mature region that includes the Sleipner East, Sleipner West, Gina Krog, Volve and Utgard fields, along with surrounding near-field exploration acreage – offering potential tiebacks to existing infrastructure.

Conducted between June and September 2023, the survey employed up to three node-handling vessels, three triple-source vessels and additional support vessels. The project recorded approx. 2.8 million shot points into a total of 80,769 node positions.

Viridien applied its advanced imaging technology, including its latest OBN processing techniques and time-lag full-waveform inversion (TL-FWI), to capture subsurface detail at all depths.

The imaged data shows substantial improvement in resolution and structural clarity over previous datasets, offering crucial insights into the region’s complex geology and reservoirs, said Viridien and TGS.

Halliburton and Coterra Energy have launched autonomous hydraulic fracturing technology in North America with the Octiv Auto Frac service, which is part of Halliburton’s ZEUS platform.

‘The Octiv Auto Frac service adds new capabilities to Halliburton’s Zeus intelligent fracturing platform and its leading electric pumping units and Sensori fracuture monitoring service,’ said Halliburton, which added that automation would improve consistency and control. ‘Before this service, fracture decisions were managed man-

ually while pumping. Coterra can now configure the Octiv Auto Frac service to execute designs to their specifications and automate the entire fracture process.’

The initial rollout of the service has led to a 17% increase in stage efficiency, Halliburton said. Based on these results, Coterra deployed the Octiv Auto Frac service to its remaining completion programs that Halliburton executes in the Permian Basin.

Shawn Stasiuk, Halliburton’s vice-president of production enhance-

ment, said. ‘The service ensures that automation delivers consistent fracture execution at every stage while giving our customers the control they demand over their assets.’

Tom Jorden, CEO of Coterra, said:

‘The deployment of intelligent automation for hydraulic fracturing helps us execute stages consistently and provides us with more autonomy and control over the completion process.’

Coterra is the first operator to fully automate and control their hydraulic fracturing design and execution.

Galp (80%, operator), NAMCOR and Custos (10% each), have discovered a column of gas-condensate and another of light oil at the Mopane-2A well (Well #4) in PEL83 offshore Namibia. The drillship is now moving to the Mopane-3X exploration well location (Well #5), targeting two stacked prospects AVO10 and AVO-13.

Operator Harbour Energy and Ithaca Energy have found hydrocarbons at the Jocelyn South prospect (P032) in Block 30/07a in the Central North Sea of the UK Continental Shelf. The top of the Joanne Reservoir was encountered in the well at the depth of 12,620ft TVDSSm with a provisional net pay thickness in the well of 434ft MD.

Arrow Exploration has reached target depth on the Alberta Llanos-1 well on the Tapir Block in the Llanos Basin of Colombia. The well targeted a large, three-way fault-bounded structure with multiple high-quality reservoir objectives. The well, which was drilled to a measured depth of 9960 feet, encountered four main hydrocarbon-bearing reservoirs with a total true vertical depth value of 121 feet, including the C7, Gacheta, Guadalupe, and Ubaque.

Repsol has resumed its exploration activities in the Murzuq Basin in Libya after more than a decade.

The company will test the Memonyat Formation, with the final depth of the well to reach 6050 feet. The well is located 800 km from the capital, Tripoli, and 12 km from the Sharara oil field.

Vår Energi and Equinor have proven oil in appraisal well 7122/8-2 S in the Barents Sea. The well was drilled to delineate the ‘Countach’ discovery near the Goliat field. The discovery is estimated to between 1.6 and 8.3 million Sm3 of recoverable oil equivalent. The objective of the well was to delineate the 7122/8-1 S (Countach) discovery in Lower Jurassic and Middle Triassic reservoir rocks in the Realgrunnen Subgroup and the Kobbe Formation. Well 7122/8-2 S encountered a 35-m oil column in the upper part of the Kobbe Formation in sandstone layers totalling 19 m with good reservoir quality. Sandstone layers totalling 27 m in the middle and lower part of the Kobbe Formation were aquiferous. The reservoir in the Realgrunnen Subgroup had very good to good reservoir quality and a total thickness of 5 m, but was waterfilled. An oil column totalling 217 m was also proven in the Klappmyss Formation, in thin sandstone layers with moderate-to-poor reservoir quality.

Wintershall Dea has proven gas in appraisal well 6507/4-5 S in the

Norwegian Sea, 270 km north of Kristiansund. The discovery in the Lange Formation (‘Sabina’) is estimated between 2.7 and 6.2 Sm3 of recoverable oil equivalent. In the Lysing Formation (‘Adriana’), the well confirms 4-7 million Sm3 of recoverable oil equivalent. In the primary exploration target, the well encountered a 41-m gas column in sandstone rocks totalling 17 m. The well encountered two sandstone layers in the Middle and Lower Lange Formation of around 4 and 21 m, with poor reservoir quality but with traces of hydrocarbons. In the secondary exploration target, the well encountered a 30-m gas column in sandstone rocks totalling 23 m with good-to-very-good reservoir quality.

Equinor and its partners have discovered oil and gas in wildcat well 31/1-4 (‘Ringand’) in the North Sea. Preliminary estimates indicate the size of the discovery is between 0.3 and 2 Sm 3 of recoverable oil equivalent. Well 31/1-4 encountered a 112-m gas column in the Ness, Etive and Oseberg formations, as well as a 16-m oil column in the Oseberg Formation. In addition, a 13-m gas column was encountered in sandstone with moderate reservoir quality in the Drake Formation. The well encountered a 6-m aquiferous sandstone layer.

Iraya Energies is collaborating with Petroleum Sarawak Berhad (PETROS) to implement advanced digital solutions aimed at optimising data-asset management. The joint venture is deploying the innovative ElasticDocs Intuitive Knowledge Container, which integrates technologies such as optical character recognition (OCR), auto-image recognition, and natural language processing (NLP). This

solution is set to enhance PETROS’ documentation and data life-cycle management capabilities.

‘The ElasticDocs platform enables the seamless extraction, organisation, and management of unstructured data from various sources,’ said Iraya. ‘Its advanced OCR technology ensures precise digitisation of energy-related documents, while the auto-image recognition feature

processes and geolocates visual data, integrating it with satellite imagery to improve asset tracking.’

PETROS said: ‘By partnering with Iraya, PETROS gains a robust digital toolset to audit and manage new asset data more efficiently, allowing for streamlined evaluation and improved information sharing across its technical and management teams.’

BP and XRG have reached financial close and completed formation testing of their joint venture and international natural gas platform, Arcius Energy, to focus on the development of gas projects in Egypt. Announced in February 2024, Arcius Energy is 51% owned by bp and 49% by XRG, ADNOC’s investment company.

EMGS has won a contract to shoot a CSEM survey offshore India with a contract value of $10 million. The vessel Atlantic Guardian will commence acquisition after completing another survey offshore India.

Shell has made a final investment decision on Bonga North, a deep-water project offshore Nigeria to start up 16 wells. Bonga North currently has an estimated recoverable resource volume of more than 300 million barrels of oil equivalent (boe) and will reach production of 110,000 barrels of oil a day, with first oil anticipated by the end of the decade.

Shell has made a final investment decision on the Phase 3 Silvertip project, comprising two wells at the Shell-operated Perdido spar in the US Gulf of Mexico. The wells in the Silvertip Frio reservoir Shell – 40%, operator; Chevron 60%), are expected to produce 6000 boe a day (boe/d). First production is expected in 2026.

GVERSE GeoGraphix’s 2024.1 TRITON release now integrates directly with TGS’ well data library, providing geoscientists with streamlined access to well header data, deviation surveys, digital logs and raster logs.

The International Chamber of Commerce (ICC) has found that Tullow is not liable for $320 million Branch Profit Remittance Tax (BPRT) in regard to its operations in the Jubilee and TEN fields offshore Ghana. The tribunal found that BPRT is not applicable under the Deepwater Tano and West Cape Three Points Petroleum Agreements. Tullow is fighting two more disputed tax claims..

Norway’s natural gas output set a new record in 2024 with 124 billion Sm3 sold, compared to the previous record of 122.8 billion Sm3 sold in 2022, said the Norwegian Offshore Directorate.

‘The high production in 2024 was caused by high regularity on the fields and increased capacity following upgrades in 2023,’ said NOD.

The Troll field in the Norwegian North Sea produced a record 42.5 billion Sm3 of gas last year, equivalent to about three times Norway’s annual hydropower production/ according to the majority stateowned company.

Equinor, which operates the field, said, ‘This year’s record is the result of high regularity, a year without turnarounds, as well as upgrades that have increased efficiency.’

Troll holds remaining reserves of 624.2 million Sm3 of oil equivalent, from the original recoverable reserves of approximately 1.77 billion Sm3 of oil equivalent. Gas accounts for 606 million Sm3 of oil equivalent of the remaining reserves while natural gas liquids comprise 15.3 million and oil 2.9 million.

Norway’s total oil and gas production last year was the highest since 2009, reaching 240 million Sm3 of oil equivalent. The Troll and Johan Sverdrup fields in the North Sea contribute about 37% of hydrocarbon production on the Norwegian Continental Shelf (NCS), the NOD said. Gas accounts for over half of production in Norwegian waters, it said, adding most of the gas is exported to Europe.

In the third quarter of 2024 Norway continued to be the European Union’s top pipeline gas supplier with a share of 47%, according to the European Commission’s latest quarterly gas market report.

‘Production on the shelf is expected to remain at a stable, high level over the next two to three years, and will then gradually decline towards the end of the 2020s,’ the NOD added.

At the end of last year 94 fields were operating on the Norwegian shelf, according to the directorate. In 2024 the Hanz and Tyrving fields in the North Sea came onstream, while no fields shut down, it added.

The directorate expects the Castberg field on Norway’s portion of the Barents Sea to start production in the first quarter of 2025. ‘This will be important for oil production and further development of the Barents Sea as a petroleum province”, it said.

‘Several new fields are expected to come on stream over the next few years, but many will also shut down. Some previously shut-down fields are now being considered for redevelopment with a simpler development solution.

‘One important reason why production remains at such high levels is that the fields are producing for longer than originally planned. New and improved technology has allowed us to continuously improve our understanding of the subsurface. This has enabled the industry to further develop the fields. New development projects, more production wells and exploration in the surrounding area have helped extend the lifetimes of most fields.’

This year the directorate expects $22.99 billion in investments in Norway’s offshore oil and gas sector. ‘We expect exploration activity and exploration costs to remain about the same as in 2024,’ the directorate added.

‘Measures to reduce emissions and discharges from petroleum activities on the NCS account for a substantial share of the investments leading up to 2030. Despite the high level of activity in the industry, new investment decisions will be necessary to maintain activity in the future.’

Norway is now the top gas supplier for Europe having overtaken Russia. The country holds about 7.1 billion Sm3 of oil equivalent remaining resources in its continental shelf. The figure includes 3.5 billion Sm3 of oil equivalent undiscovered resources.

Baker Hughes and the University of California, Berkeley have announced a long-term research partnership to establish the Baker Hughes Institute for Decarbonisation Materials at UC Berkeley’s College of Chemistry.

The institute will connect academic research with commercial innovation to accelerate the deployment and scaling of cost-effective climate technology solutions that drive sustainable energy development.

As part of the agreement, Baker Hughes will fund collaborative research to develop next-generation materials for a range of energy and industrial applications, including carbon capture, utilisation and storage (CCUS), hydrogen, and clean power generation. Baker Hughes will be closely involved from the earliest stages of research to shape the programs based on evolving market and customer needs, as any discoveries may potentially be scaled across the company’s portfolio of climate technology solutions.

The institute will be led by C. Judson King Distinguished Professor and UC Berkeley Professor of Chemistry Jeffrey Long, a globally recognised material’s expert who pioneered the use of metal-organic frameworks (MOFs) for adsorbing carbon dioxide and other molecules from industrial emissions streams.

Baker Hughes’ funding will support Berkeley researchers, with expertise in materials development and discovery, computational chemistry, advanced characterisation, process engineering and techno economics.

Initial research projects will focus on advanced material design, including creating and testing new chemical structures like MOFs, as well as developing gas separation and chemical conversion systems. Additionally, the projects will leverage AI and machine learning to accelerate the discovery and development of improved materials and new technology solutions.

‘Our aim is to make materials that not only adsorb gases more efficiently, but also without high energy requirements,’ said Professor Long, the institute’s executive director. ‘As chemists, we know how to adjust materials at the atomic level, but we need partners like Baker Hughes who can scale and industrialise the technology.’

Viridien is expected to announce full year revenues of more than $1.1 billion with EBITDA of $430 million.

Net cash generation of of $50 million has led to a reduction of net debt to $930 million, exceeding the net cash generation target of $30 million due to earlier than expected client collection. The company’s credit rating from Standard & Poor’s has been updated to B. Viridien has also announced a $60 million of bond buy-back. Its revolving credit facility has been extended until October 2026 as part of preparation for the refinancing of the 2027 bonds.

The company cited ‘strong activity’ in Geoscience, a ‘strong pipeline of projects’ and termination of contractual fees from vessel commitments.

Sophie Zurquiyah, CEO of Viridien, said: ‘We achieved EBITDA growth and

net cash flow generation close to $50 million, exceeding our initial target of $30 million. Additionally, we repurchased $60 million of our own bonds, doubling our $30 million commitment. Our credit rating improved from S&P, and we extended our revolving credit facility in preparation for refinancing our debt.

‘These results were driven by the Data, Digital & Energy Transition (DDE) segment, with strong growth in Geoscience (GEO) activities and the launch of the significant Laconia project. This project enhances the value of the seismic data library of Earth Data (EDA) activities in the Gulf of Mexico.

‘The performance of DDE and the successful execution of the transformation plan for the Sensing & Monitoring (SMO) segment enable us to confidently reaffirm our target of generating

around $100 million in net cash flow in 2025.’

Meanwhile, Viridien has announced that as of 30 April it will temporarily combine the roles of chairman and chief executive officer under the leadership of Sophie Zurquiyah. She will replace Philippe Salle, who will take on the role of lead director and vice-chairman. Salle has chaired the company since 2018, while Zurquiyah has been chief executive since 2018.

Colette Lewiner, chair of the Appointment, Remuneration and Governance Committee, said: ‘Philippe’s continued presence on our board as lead director will ensure continuity and provide a balance of power within a unified governance structure. The Board is already engaged in discussions to restore a separate governance structure in 2026.’